The Point Cloud Reduction Algorithm Based on the Feature Extraction of a Neighborhood Normal Vector and Fuzzy-c Means Clustering †

Abstract

1. Introduction

2. Method

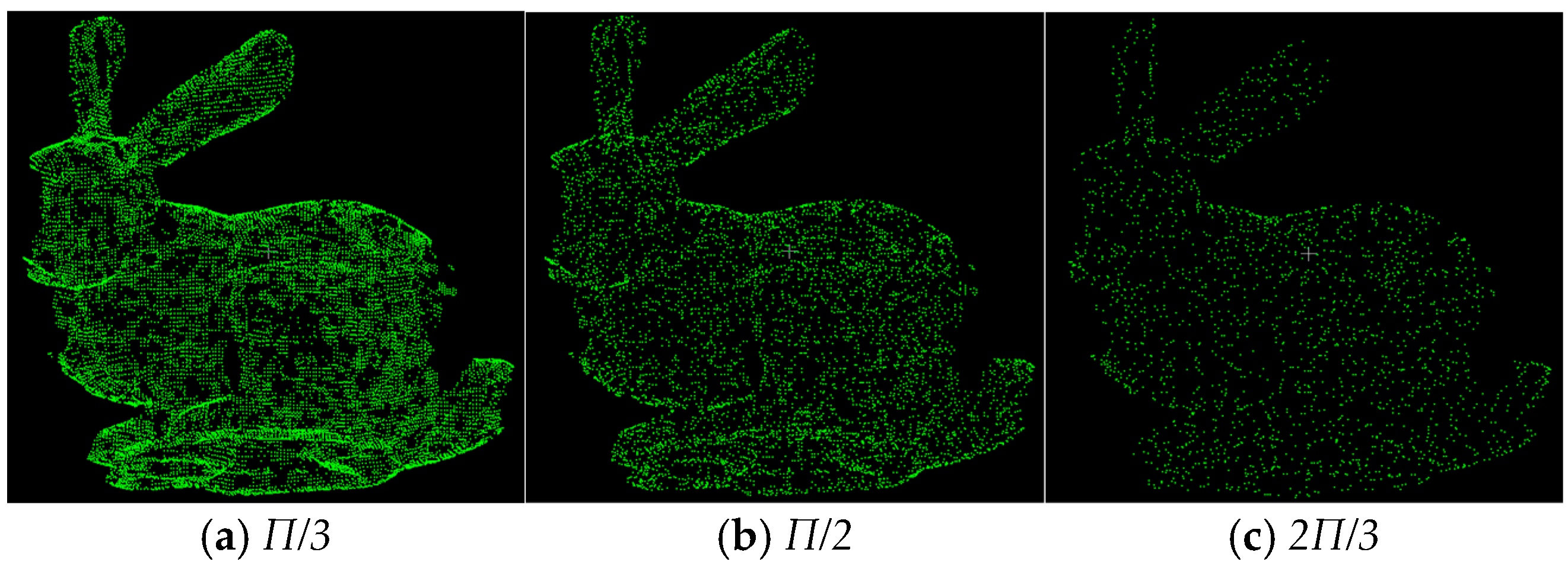

2.1. Global Feature Point Extraction Based on Domain Normal Vector

2.2. Local Feature Point Extraction Based on FCM Clustering Algorithm

3. Result

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chang, K.T.; Chang, J.R.; Liu, J.K. Detection of pavement distresses using 3D laser scanning technology. Comput. Civ. Eng. 2005, 2005, 1–11. [Google Scholar]

- Mouragnon, E.; Lhuillier, M.; Dhome, M.; Dekeyser, F.; Sayd, P. Real time localization and 3d reconstruction. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; IEEE: Piscataway, NJ, USA, 2006; Volume 1, pp. 363–370. [Google Scholar]

- Huang, Y. Research and application of object recognition technology in sweeping robots. Sci. Technol. Innov. 2023, 19, 171–172+175. [Google Scholar]

- Ji, Y.; Li, S.; Peng, C.; Xu, H.; Cao, R.; Zhang, M. Obstacle detection and recognition in farmland based on fusion point cloud data. Comput. Electron. Agric. 2021, 189, 106409. [Google Scholar] [CrossRef]

- Wang, X.; Mizukami, Y.; Tada, M.; Matsuno, F. Navigation of a mobile robot in a dynamic environment using a point cloud map. Artif. Life Robot. 2021, 26, 10–20. [Google Scholar] [CrossRef]

- Mousavian, A.; Anguelov, D.; Flynn, J.; Kosecka, J. 3d bounding box estimation using deep learning and geometry. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7074–7082. [Google Scholar]

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Learning semantic segmentation of large-scale point clouds with random sampling. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8338–8354. [Google Scholar] [CrossRef]

- Kim, S.J.; Kim, C.H.; Levin, D. Surface reduction using a discrete curvature norm. Comput. Graph. 2002, 26, 657–663. [Google Scholar] [CrossRef]

- Eldar, Y.; Lindenbaum, M.; Porat, M.; Zeevi, Y.Y. The farthest point strategy for progressive image sampling. IEEE Trans. Image Process. 1997, 6, 1305–1315. [Google Scholar] [CrossRef] [PubMed]

- Shi, B.Q.; Liang, J.; Liu, Q. Adaptive reduction of point cloud using k-means clustering. Comput.-Aided Des. 2011, 43, 910–922. [Google Scholar] [CrossRef]

- Wang, Z.; Yang, H. Local entropy-based feature-preserving reduction and evaluation for large field point cloud. Vis. Comput. 2023, 40, 6705–6721. [Google Scholar] [CrossRef]

- Li, P.; Cui, F. Research on curvature graded point cloud data reduction optimization algorithm based on binary K-means clustering. Electron. Meas. Technol. 2022, 45, 66–71. [Google Scholar]

- Li, R.; Yang, M.; Liu, Y.; Zhang, H. A uniform reduction algorithm for scattered point clouds. Acta Opt. Sin. 2017, 37, 89–97. [Google Scholar]

- Hu, Z.; Cao, L.; Pei, D.; Mei, Z. Adaptive simplified point cloud improved preprocessing optimization 3D reconstruction algorithm. Laser Optoelectron. Prog. 2023, 60, 219–224. [Google Scholar]

- Leal, N.; Leal, E.; German, S.T. A linear programming approach for 3D point cloud reduction. IAEN G Int. J. Comput. Sci. 2017, 44, 60–67. [Google Scholar]

- Martin, R.R.; Stroud, I.A.; Marshall, A.D. Data reduction for reverse engineering. In Proceedings of the 7th Conference on Information Geometers, Maui, HI, USA, 7–10 January 1997; pp. 85–100. [Google Scholar]

- Yang, X.; Tian, Y.L. Super normal vector for activity recognition using depth sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 804–811. [Google Scholar]

- Guo, Y. KD-TREE spatial indexing technology. Comput. Prod. Circ. 2020, 6, 168. [Google Scholar]

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459. [Google Scholar] [CrossRef]

- Rass, S.; König, S.; Ahmad, S.; Goman, M. Metricizing the Euclidean space towards desired distance relations in point clouds. IEEE Trans. Inf. Forensics Secur. 2024, 19, 7304–7319. [Google Scholar] [CrossRef]

| Method | Reduction Rate/% | Reconstruction Time/ms |

|---|---|---|

| algorithm in this paper | 64.47 | 727 |

| uniform grid method | 59.23 | 834 |

| random sampling method | 61.52 | 751 |

| curvature sampling method | 57.18 | 848 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, H.; Jiao, D.; Li, W. The Point Cloud Reduction Algorithm Based on the Feature Extraction of a Neighborhood Normal Vector and Fuzzy-c Means Clustering. Proceedings 2024, 110, 13. https://doi.org/10.3390/proceedings2024110013

Xu H, Jiao D, Li W. The Point Cloud Reduction Algorithm Based on the Feature Extraction of a Neighborhood Normal Vector and Fuzzy-c Means Clustering. Proceedings. 2024; 110(1):13. https://doi.org/10.3390/proceedings2024110013

Chicago/Turabian StyleXu, Hongxiao, Donglai Jiao, and Wenmei Li. 2024. "The Point Cloud Reduction Algorithm Based on the Feature Extraction of a Neighborhood Normal Vector and Fuzzy-c Means Clustering" Proceedings 110, no. 1: 13. https://doi.org/10.3390/proceedings2024110013

APA StyleXu, H., Jiao, D., & Li, W. (2024). The Point Cloud Reduction Algorithm Based on the Feature Extraction of a Neighborhood Normal Vector and Fuzzy-c Means Clustering. Proceedings, 110(1), 13. https://doi.org/10.3390/proceedings2024110013