Advantages, Critics and Paradoxes of Virtual Reality Applied to Digital Systems of Architectural Prefiguration, the Phenomenon of Virtual Migration

Abstract

1. Introduction

2. The Third Digital Revolution: Evolution and Development of Virtuality Applied to Representation

3. Towards a Non-Physical Migration: The Paradox of Living Reality through Virtual Instruments

4. Fluid Workflow Experimental Application Methodology for Software Management of Interactive and Explorable Digital Scenarios: Results and Criticism

4.1. Using the Epic Games Unreal Engine Platform

4.2. Using the EyeCad VR Platform

4.3. Comparison between the Two Analyzed Software Platforms

5. Conclusions

Supplementary Materials

Conflicts of Interest

References and Notes

- Sacchi, L. POST SCRIPT. Città virtuale come fuga dal reale? In Atlante dell'Abitare Virtuale; Gangemi Editore: Roma, Italy, 2014; pp. 35–37. ISBN 9788849228298.

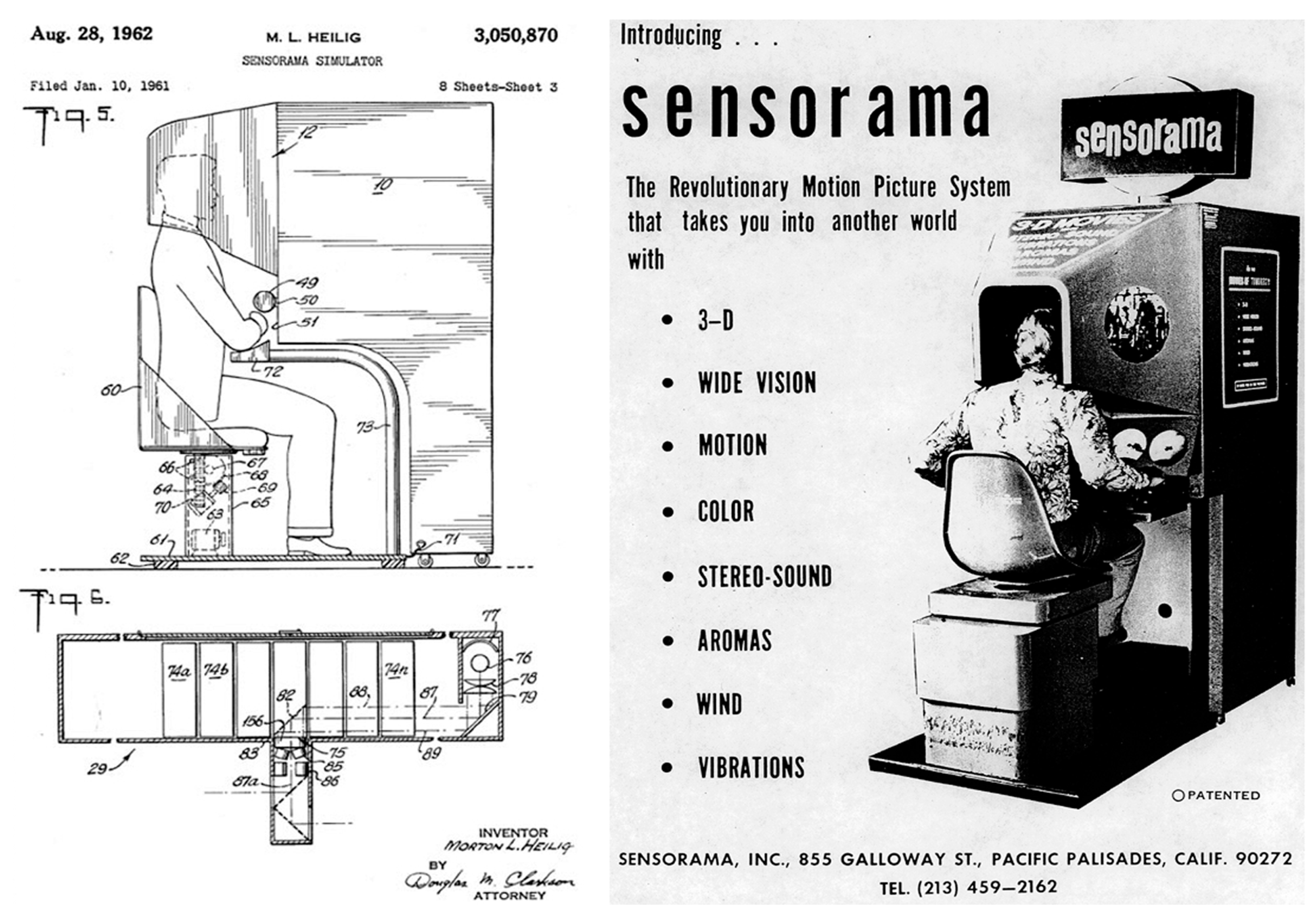

- The Sensorama, consisting of a seat, a stereoscopic TV screen developed in 1957 (STAIU) and interactive handlebars, was essentially a virtual driving simulator. By sliding their head into the screen and steering with a joystick, the user could have "an impression" of driving through the streets of Manhattan, even perceiving the smell of car exhaust and the wind on their face.

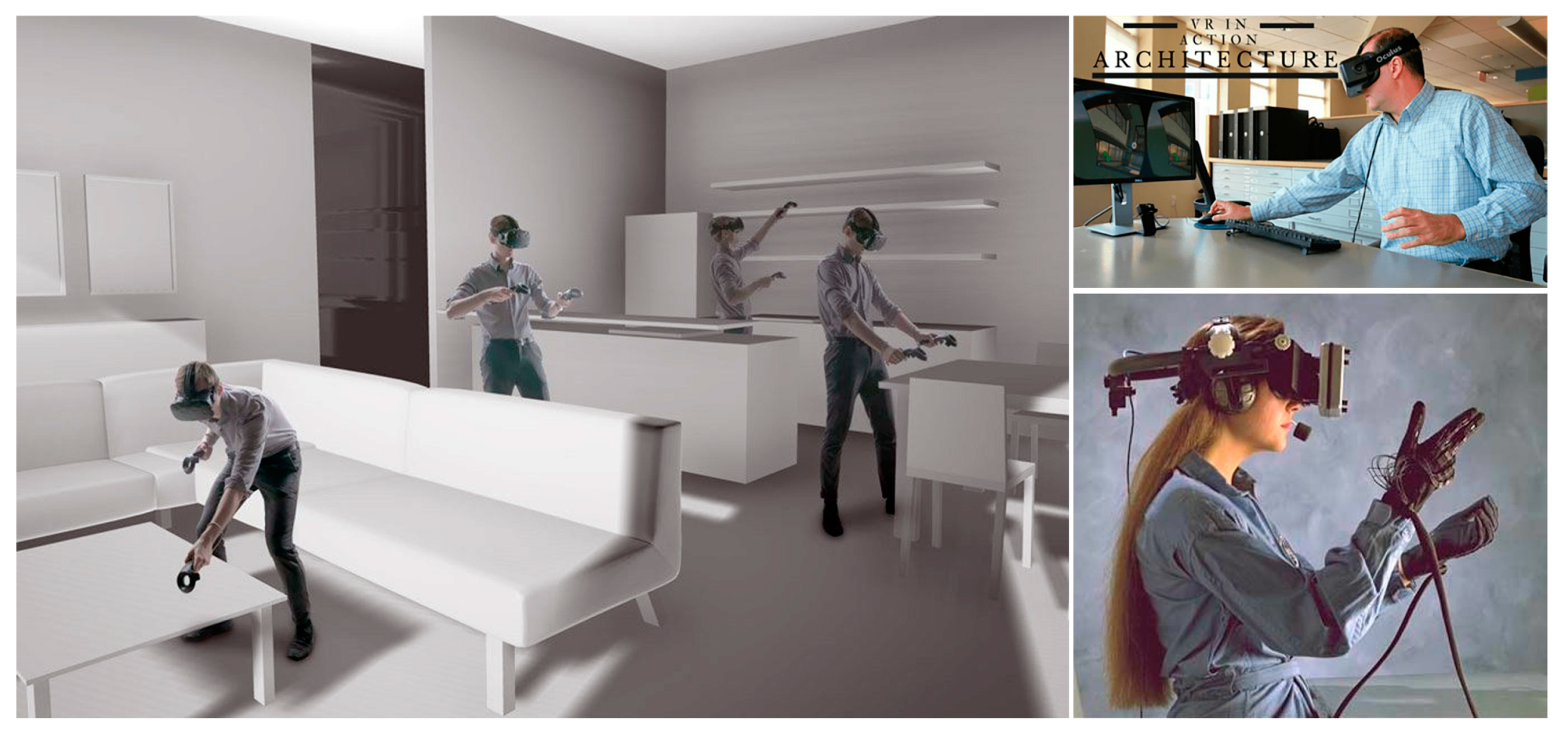

- In 1982, during scientific research on haptic interaction, Thomas Zimmerman and L. Young Harvill created the interactive Data Glove for playing a virtual guitar. The instrument incorporated ultrasonic and magnetic hand-position tracking technology.

- Intervening interactively, directly in the design editing phases, lets you focus more quickly on the geometric issues of the architecture displayed in 3D. Thanks to the interactive nature of computer technology, the model can now be viewed on screen from various angles and with striking graphic effects, which simplifies both the editing phases and the subsequent visualization steps.

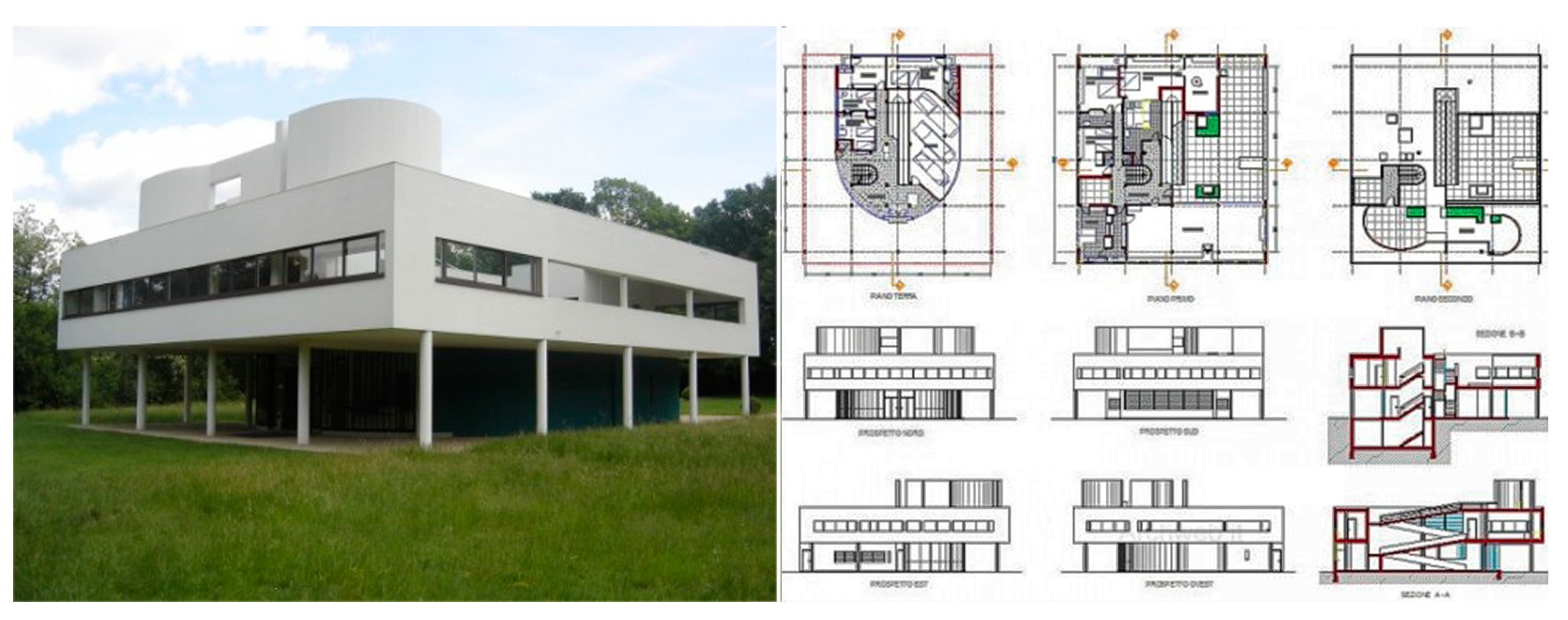

- Available online: http://www.archiga.it/progetti-architetti-famosi-villa-savoye-di-le-corbusier-piante-prospetti-e-sezioni/ (accessed on 10 August 2017).

- A lightmap is a data structure used in lightmapping, a form of surface caching in which the brightness of surfaces in a virtual scene is pre-calculated and stored in texture maps for later use. Lightmaps are most commonly applied to static objects in real-time 3D graphics applications, such as video games, to provide lighting effects such as global illumination at a relatively low computational cost.
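The precompute-then-look-up idea behind lightmapping can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Unreal Engine or any other platform implements it. The floor size, texel resolution, light position and intensity are hypothetical values. Only direct Lambertian light with inverse-square falloff is baked; production lightmappers also bake shadows and indirect bounces (global illumination).

```python
import math

# Hypothetical scene (illustrative values only): a 10 m x 10 m static floor
# on the plane y = 0, lit by a single point light.
FLOOR_SIZE = 10.0          # metres
LIGHTMAP_RES = 16          # texels per side of the lightmap
LIGHT_POS = (5.0, 3.0, 5.0)
LIGHT_INTENSITY = 30.0     # arbitrary units


def bake_lightmap(res=LIGHTMAP_RES):
    """Offline step: pre-compute the brightness of every floor texel.

    Assumption: only direct diffuse (Lambertian) light with inverse-square
    falloff is stored; no shadows and no indirect bounces.
    """
    normal = (0.0, 1.0, 0.0)  # the floor faces up
    lightmap = []
    for j in range(res):
        row = []
        for i in range(res):
            # World position of the texel centre (UV mapped onto the XZ plane).
            x = (i + 0.5) / res * FLOOR_SIZE
            z = (j + 0.5) / res * FLOOR_SIZE
            dx, dy, dz = LIGHT_POS[0] - x, LIGHT_POS[1], LIGHT_POS[2] - z
            dist2 = dx * dx + dy * dy + dz * dz
            dist = math.sqrt(dist2)
            # Cosine between the surface normal and the direction to the light.
            cos_theta = max(0.0, (dx * normal[0] + dy * normal[1] + dz * normal[2]) / dist)
            row.append(LIGHT_INTENSITY * cos_theta / dist2)
        lightmap.append(row)
    return lightmap


def shade(lightmap, u, v, albedo):
    """Runtime step: a nearest-texel lookup replaces the lighting computation."""
    res = len(lightmap)
    i = min(int(u * res), res - 1)
    j = min(int(v * res), res - 1)
    return albedo * lightmap[j][i]


lm = bake_lightmap()  # done once, e.g. when the scene is built
print(shade(lm, 0.5, 0.5, 0.8))
```

Because `bake_lightmap` runs only once for static geometry, each runtime call to `shade` costs a single array access and a multiplication. The trade-off is that the baked lighting no longer updates if the light or the geometry moves. This is why lightmaps suit static objects.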

- Introduction to Ray Tracing: A Simple Method for Creating 3D Images. Available online: https://www.scratchapixel.com/lessons/3d-basic-rendering/introduction-to-ray-tracing/raytracing-algorithm-in-a-nutshell (accessed on 13 August 2017).

- Official Site of EyeCad VR Software. Available online: http://www.eyecadvr.com/mondo-eyecadvr.html (accessed on 14 August 2017).

- Official Site of CryEngine Software. Available online: http://www.crytek.com/cryengine (accessed on 12 August 2017).

- Official Site of Unity Software. Available online: https://unity3d.com/ (accessed on 10 August 2017).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Basso, Alessandro. Advantages, Critics and Paradoxes of Virtual Reality Applied to Digital Systems of Architectural Prefiguration, the Phenomenon of Virtual Migration. Proceedings 2017, 1(9), 915. https://doi.org/10.3390/proceedings1090915