1. Introduction

A widespread opinion places the origins of digital image and drawing processing between the 1980s and the 1990s. This is when advanced technology spread on a mass scale with the advent of the personal computer, and new information media reached a wide public. A careful investigation, however, moves this period a few decades earlier, to the 1950s and 1960s, when research was carried out in a few laboratories of research centers in the USA.

This consideration allows us to define some main steps:

- the first use of the scanner for images;
- the use of line drawings for 2D and 3D representation (Computer-Aided Design);
- the origins of the term “pixel”, a contraction of “picture element”;
- the use of the computer to produce artistic images;
- finally, the use of compression algorithms to optimize processing.

2. On the Origins of Digital Image

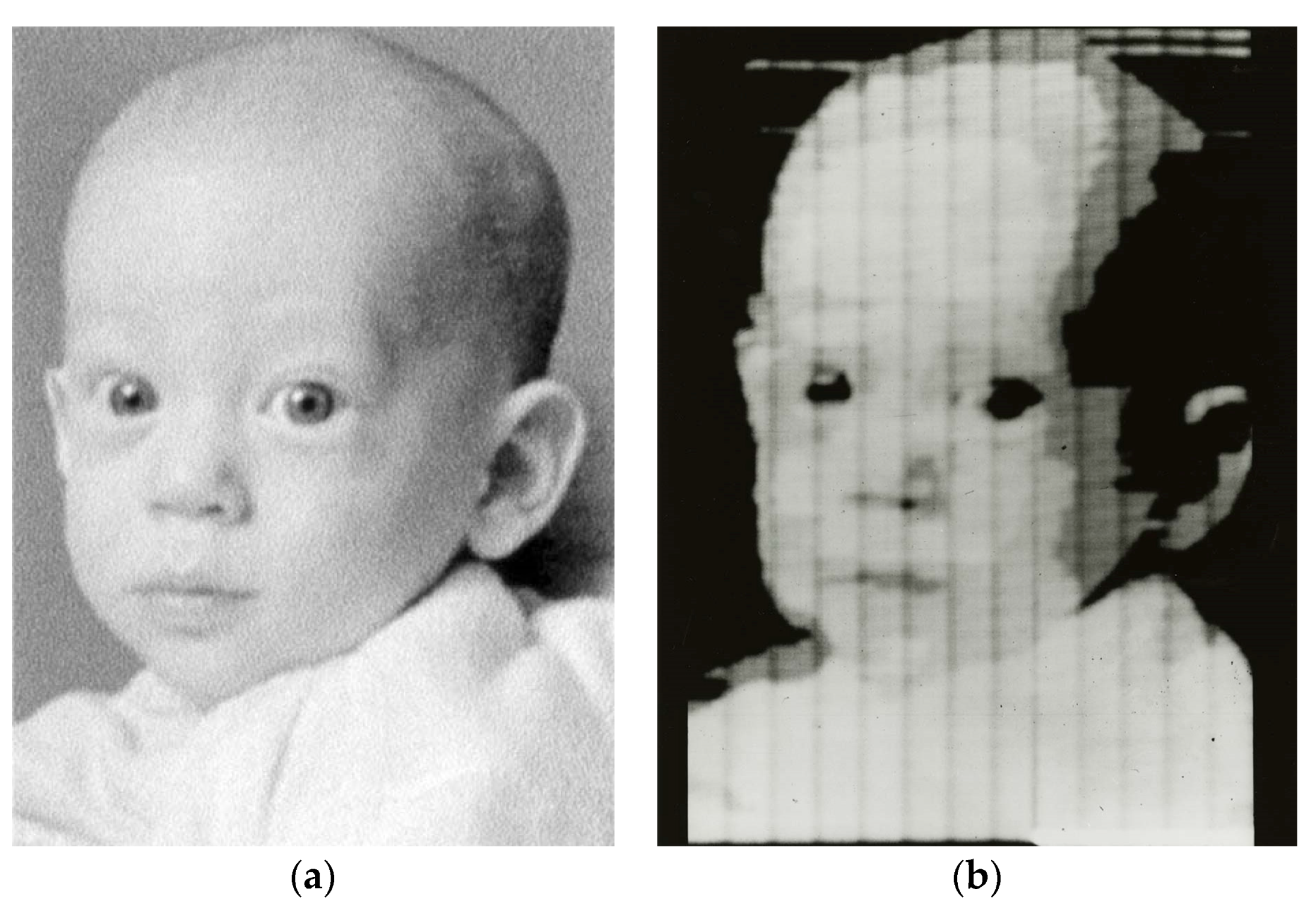

At the end of the 1950s, in the laboratories of the National Bureau of Standards, a team led by Russell A. Kirsch developed the first digital system for scanning images. With this acquisition system it became possible to obtain the first scans of analog images in numeric format. To identify exactly what these first images depicted, we can rely on the documents produced by the research team. The system was presented at the Eastern Joint Computer Conference held in Washington in December 1957, dedicated to the topic “Computers with Deadlines to Meet”. Its proceedings [1] contain a detailed description of the procedure and also the scanned reproduction of Kirsch’s own face. This square image, 44 mm on a side, differed greatly in both quality and quantity from what a flatbed scanner (or a digital camera) can acquire today. It was a composition of binary points in black and white (bitmap format), which simulated gray shades through their proximity. We use the generic term point because the neologism pixel would only be coined in 1965, as we will see later. The pattern consisted of 176 × 176 points, giving a resolution of about 100 dpi, and the acquisition time was about 25 s. This resolution, although considerably lower than current standards, was enough to recognize the figure. As described in the paper, it also made it possible to perform some pattern recognition tasks, mainly to speed up the acquisition and processing of paper documents. The paper showed the photograph of Kirsch’s face in both its original and its digital form, to emphasize the quality of the reproduction (Figure 1).
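The resolution figure can be checked with simple arithmetic: 176 points across a 44 mm side (44 / 25.4 ≈ 1.73 in) gives 176 × 25.4 / 44 = 101.6 points per inch. The sketch below repeats that calculation. It also shows how a purely black-and-white bitmap can suggest gray through the density of its points, using ordered dithering with a 2 × 2 Bayer threshold matrix. Dithering is an assumption chosen for illustration: it is not the procedure used on Kirsch’s scanner, and the function names are hypothetical.

```python
# Illustrative sketch only: resolution arithmetic plus ordered dithering.
# Ordered dithering is a generic technique, NOT the method used by Kirsch's team.

MM_PER_INCH = 25.4

def dots_per_inch(points: int, side_mm: float) -> float:
    """Points along one side divided by the side length in inches."""
    return points / (side_mm / MM_PER_INCH)

# 2x2 Bayer matrix; thresholds (value + 0.5) / 4 are 0.125, 0.625 / 0.875, 0.375
BAYER_2X2 = [[0, 2],
             [3, 1]]

def ordered_dither(gray: list[list[float]]) -> list[list[int]]:
    """Map gray levels in [0, 1] (0 = black, 1 = white) to binary points.

    A point becomes white (1) when its gray level exceeds a threshold that
    depends on its position, so a uniform mid-gray area turns into a mix of
    black and white points whose density suggests the original tone.
    """
    out = []
    for y, row in enumerate(gray):
        out_row = []
        for x, value in enumerate(row):
            threshold = (BAYER_2X2[y % 2][x % 2] + 0.5) / 4
            out_row.append(1 if value > threshold else 0)
        out.append(out_row)
    return out

if __name__ == "__main__":
    print(round(dots_per_inch(176, 44.0), 1))  # 101.6
    mid_gray = [[0.5] * 4 for _ in range(4)]
    for row in ordered_dither(mid_gray):       # checkerboard: half the points white
        print(row)
```

A uniform 50% gray becomes a checkerboard in which half the points are white. This is the same visual principle as simulating gray through the proximity of black and white points.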

However, the image classified as the first digital scan in the history of numerical iconography is a different one. It is the one that Kirsch delivered to the archives of the Portland Art Museum in Oregon, the first American museum on the Pacific coast devoted to art. This is the photograph of Russell’s newborn son Walden (archive code 2003.54.1), measuring 9 × 7 inches, with the same resolution as the image mentioned above. Numerous scans were presumably made with the same instrument during that year. The wish to present the child’s image as the first of the series, even though it was never officially presented at a conference, may reflect a desire to hand the son, as well as the father, over to the history of computer science. Indeed, the image of the “sampled” child would become far better known, perhaps thanks to the direct association between the birth of the new scanning machine and the birth of the baby (Figure 2). About forty years later, in 1999, Walden himself would in turn contribute a photograph of his own daughter, also digitized, though by then with ordinary scanning techniques.

The two series of images, sampled and original, inevitably recall an extraordinary instrument built about a century earlier. It produced similar images using technology that was advanced for its time, although it cannot be classified as a numeric system.

This instrument, known by the extravagant name of “Pantelegraph”, made it possible to scan an image for remote transmission. Its inventor was Abbot Giovanni Caselli (1815–1891), who developed the procedure out of his scientific interest in the field [2,3,4]. The Pantelegraph sent images remotely using two identical pendulum-driven instruments, one for transmission and one for reception. The drawing was made with ordinary ink on a tin base, which a platinum tip scanned serially. Only the signal corresponding to the inked areas was transmitted, since the receiving machine lifted its tip wherever the tin base was bare. The result, called by the neologism “caselligram”, approximated the original drawing. As noted above, it resembled the images produced by the first digital scanner. In use from 1856, the system had some success in France, England, Italy and Russia, until it was definitively abandoned around 1871. By its very nature it is usually considered the progenitor of the fax machine. Given the visual similarity of the images, however, it can certainly be included among the devices that make up an archaeology of digital iconography (Figure 3).

Point-based digital processing developed in two directions. On the one hand, it moved toward the identification of characteristic elements (as mentioned above). This led to Optical Character Recognition (OCR) and to the shape identification that would produce vectorization algorithms, which transform a series of contiguous points into vectors. On the other hand, research on drawing with lines would play a central role in the numerical representation of images.

3. Images of Lines: From 2D to 3D Representation

In this respect, the work carried out in some Massachusetts Institute of Technology research laboratories from the late 1950s onward was significant. These experiments would lead to the three-dimensional modeling software used today.

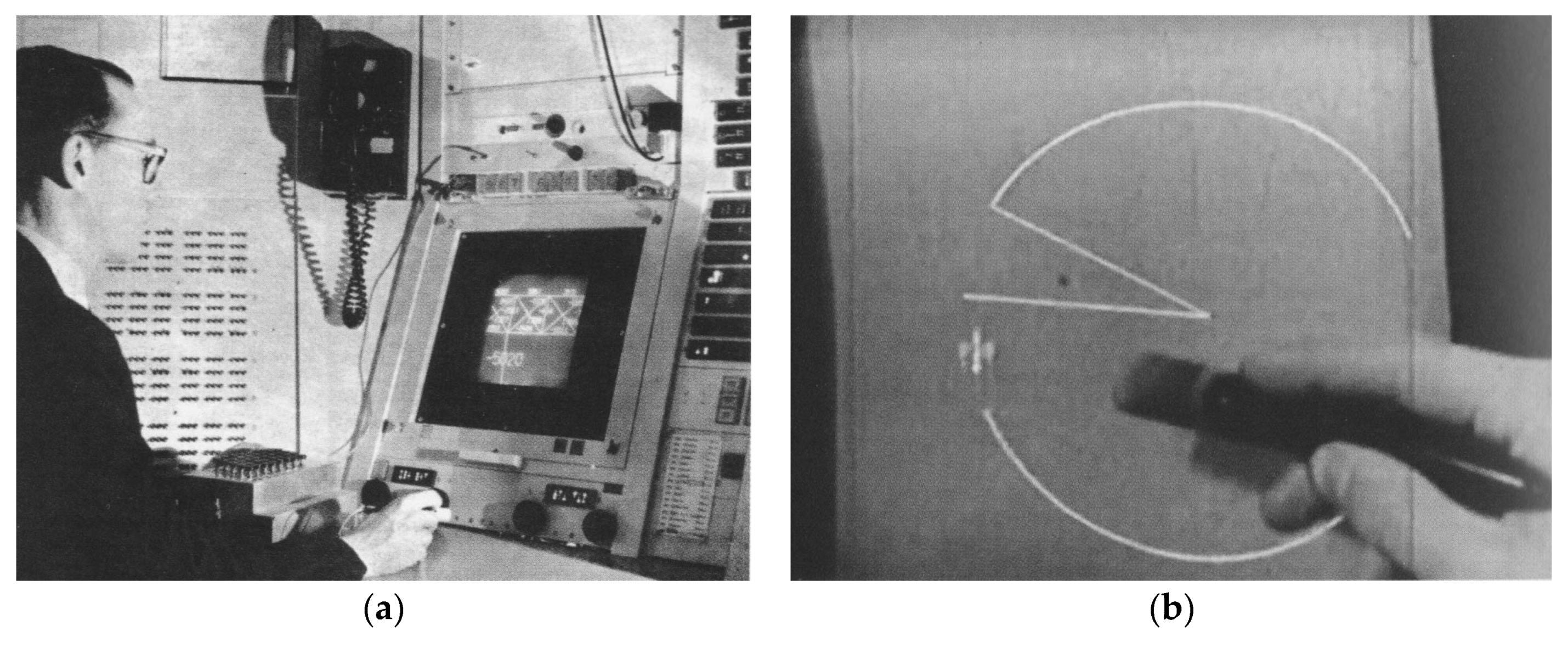

From a theoretical point of view, the contribution of Steven Anson Coons is crucial, since he took part in defining the computer-aided graphics processing system from the very beginning [5]. A series of research reports stored in the MIT archives documents the slow process of setting up a computerized design system, starting with the definition of the term Computer-Aided Design (CAD) [6]. From a practical point of view, however, credit goes to Ivan E. Sutherland for presenting the first instrument able to trace lines on a monitor. He did so in his PhD thesis, submitted in January 1963 under the title Sketchpad, A Man-Machine Graphical Communication System [7,8,9]. Sketchpad was conceived as a means of communication between a human and a computer (Figure 4).

In Sketchpad, straight or curved line segments were displayed as luminous vectors on a cathode ray tube and traced with a light pen. The pen restored the use of the designer’s hand, albeit in a digital translation. The video image was therefore composed of lines, even though these were rendered on the screen as bright points. Free-hand sketching was not possible, since the open or closed polygon and the circular arc were the only two allowed entities. Even so, this work launched research into digital representation and opened a new experimental field of great interest for both academic research and private applications. It is worth re-reading Sutherland’s thesis, because in some passages it clarifies the differences the young researcher had identified with respect to traditional drawing. “An idea that was difficult for the author to grasp was that there is no state of the system that can be called ‘drawing’. Conventionally, of course, drawing is an active process that leaves a trail of carbon on the paper. With a computer sketch, however, any line segments are straight and can be relocated by moving one or both of its end points” [7] (p. 102). Drawing on a computer behaves so differently from traditional drawing that using the same term becomes difficult. The term image raises no such questions, probably because images could already be produced with a mechanical tool such as the camera.
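Sutherland’s remark that a segment “can be relocated by moving one or both of its end points” suggests a data structure in which segments hold references to shared point objects rather than copies of coordinates. The Python sketch below illustrates that idea under this simplifying assumption: two segments share a point, and moving the point changes both. The class and method names are hypothetical. Sketchpad itself ran on the TX-2 computer and used a more elaborate ring data structure, which is not reproduced here.

```python
from dataclasses import dataclass

# Hypothetical minimal model: segments reference shared Point objects,
# so relocating one end point updates every segment attached to it.

@dataclass
class Point:
    x: float
    y: float

    def move_to(self, x: float, y: float) -> None:
        self.x, self.y = x, y

@dataclass
class Segment:
    start: Point  # stored by reference, not copied
    end: Point

    def length(self) -> float:
        dx = self.end.x - self.start.x
        dy = self.end.y - self.start.y
        return (dx ** 2 + dy ** 2) ** 0.5

if __name__ == "__main__":
    a, b, c = Point(0, 0), Point(3, 0), Point(3, 4)
    ab, bc = Segment(a, b), Segment(b, c)  # b is shared by both segments
    b.move_to(0, 4)                        # drag the shared end point
    print(ab.length(), bc.length())        # 4.0 3.0
```

Because the segments store no coordinates of their own, there is no separate state that could be called “the drawing”. The picture is simply whatever the current points and their connections describe.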

In dealing with digital images, we cannot overlook virtual processing in the form of realistic images, or rendering. Such a digital simulation can only be achieved through a three-dimensional digital model associated with entities and properties such as a light source, color and surface textures. Timothy Johnson developed a 3D version of Sketchpad at MIT in the same period, called Sketchpad III [9,10,11], which defined algorithms for working with a three-dimensional digital model (Figure 5). Starting from such a model, research into advanced visualization through rendering procedures could begin.

The first rendering algorithms were developed at the end of the 1960s. The main problem to solve was that of hidden lines, that is, how to represent the front edges of a figure and how to hide the receding ones. There were many attempts to optimize the system of representation. Among them, those of R. Galimberti and U. Montanari [12], of P. Loutrel [13], and of P.G. Comba [14] deserve particular mention. Once these conditions were defined, it was necessary to work out how surfaces could be shaded according to the position of a virtual light source. The calculation procedure was in fact already well known, having been published in various treatises and manuals on the theory of shadows and chiaroscuro. Johann Heinrich Lambert described it scientifically in his Photometria of 1760 [15], tying the tone of a surface to its orientation through the cosine law. According to this law, the illumination a surface receives is directly proportional to the cosine of the angle between the direction of the incident light and the surface normal. The first algorithms therefore took this law into account, including the contributions of J.E. Warnock [16], G.W. Romney [17], and W.J. Bouknight and K. Kelley [18].
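Both problems above depend on the orientation of a surface, expressed by its normal vector. The following is a minimal sketch under stated assumptions: a convex polyhedron, a viewer looking along the negative z axis, and faces whose vertices are listed counterclockwise as seen from outside. Under these assumptions, a face whose normal points away from the viewer is receding. An edge is hidden when both faces sharing it recede. This simple back-face test solves hidden lines only for convex objects and is not the method of the authors cited. For a visible face, Lambert’s cosine law gives the tone as max(0, n·l), where n is the unit normal and l the unit direction toward the light. All function names are illustrative.

```python
import math

Vec = tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(v: Vec) -> Vec:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)

def face_normal(p0: Vec, p1: Vec, p2: Vec) -> Vec:
    """Unit outward normal of a planar face from three counterclockwise vertices."""
    return normalize(cross(sub(p1, p0), sub(p2, p0)))

def is_front_facing(normal: Vec, view_dir: Vec = (0.0, 0.0, -1.0)) -> bool:
    """The viewer looks along view_dir; a visible face points back toward the viewer."""
    return dot(normal, view_dir) < 0.0

def edge_is_hidden(normal_a: Vec, normal_b: Vec, view_dir: Vec = (0.0, 0.0, -1.0)) -> bool:
    """Convex polyhedra only: an edge is hidden when both adjacent faces recede."""
    return not is_front_facing(normal_a, view_dir) and not is_front_facing(normal_b, view_dir)

def lambert(normal: Vec, to_light: Vec, intensity: float = 1.0) -> float:
    """Cosine law: brightness proportional to cos(angle between normal and light)."""
    return intensity * max(0.0, dot(normal, normalize(to_light)))

if __name__ == "__main__":
    front = face_normal((0, 0, 1), (1, 0, 1), (0, 1, 1))  # normal +z, toward viewer
    back = face_normal((0, 0, 0), (0, 1, 0), (1, 0, 0))   # normal -z, away from viewer
    print(is_front_facing(front), is_front_facing(back))  # True False
    print(round(lambert(front, (math.sqrt(3), 0, 1)), 3)) # 0.5 (light at 60 degrees)
```

With light arriving at 60 degrees from the normal, the face receives half the intensity of frontal light, since cos 60° = 0.5.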

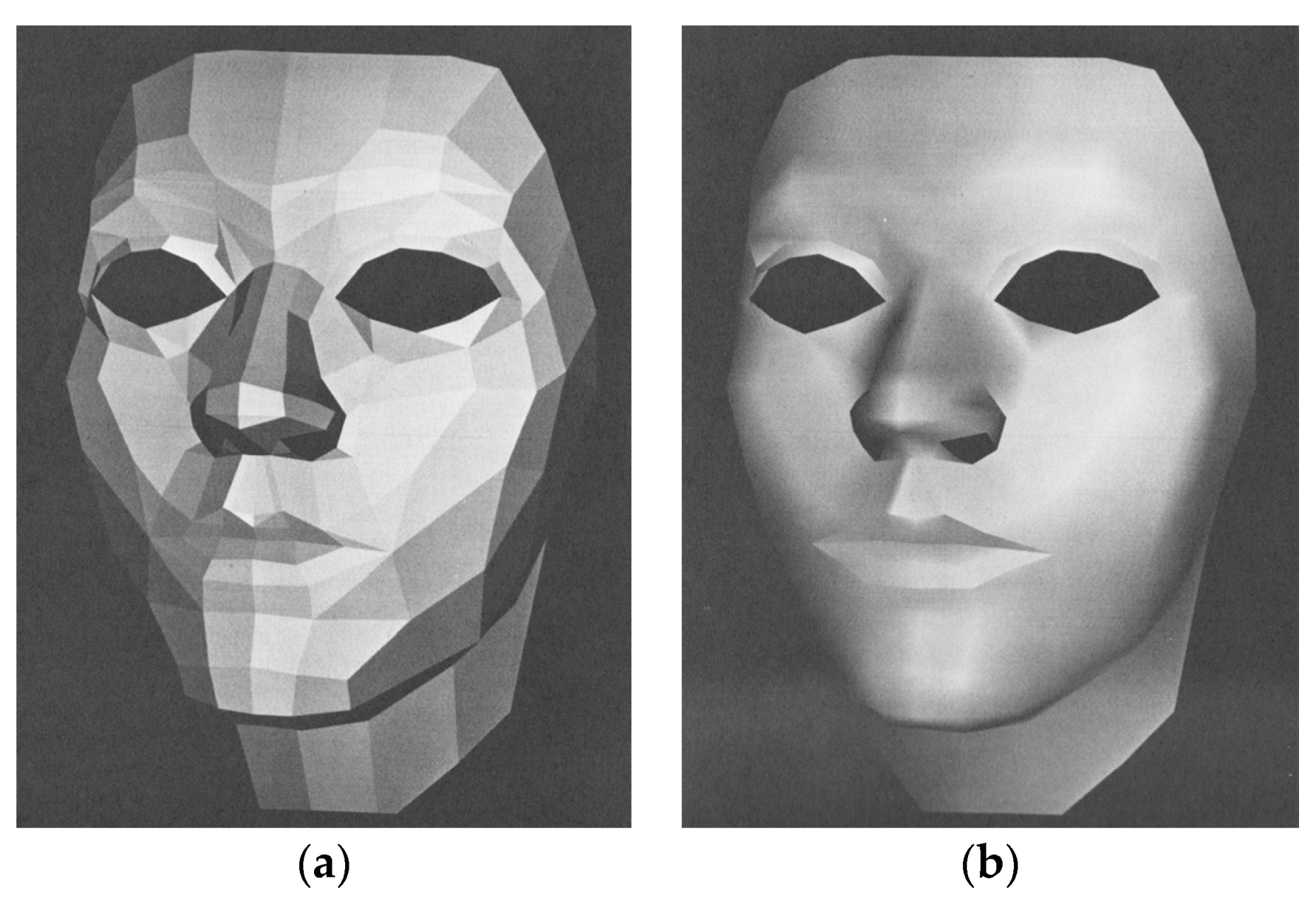

Henri Gouraud’s PhD thesis, defended at the Department of Electrical Engineering of the University of Utah in June 1971 [19], analyzes this aspect and also addresses the further problem of displaying curved surfaces. Gouraud’s work, supervised by Sutherland himself, is considered a significant result. Digital images were no longer just scans acquired with optical instruments, like Kirsch’s, but could be created independently of any analog equivalent. Between the end of the 1960s and the early 1970s, the digital image therefore became a powerful tool for simulating reality, one that would eventually deceive even the most attentive viewers. Gouraud’s thesis also contains another important contribution related to the image: the transposition of a human face into a digital 3D model. The method extracts homologous points from two photographs of a woman’s face on which construction lines had been drawn. The homologous points thus correspond directly to the intersections of these lines. The face of Sylvie, Gouraud’s wife, who volunteered for the experiment, thus became one of the first three-dimensional models derived from photographs. It anticipated the experiments in Image-Based Modeling developed at the end of the 20th century. The surfaces making up the face were smoothed by Gouraud’s algorithm to bring them closer to the woman’s real appearance. Thanks to this algorithmic device, the cold image of a polyhedral mask resembling an automaton took on human features, although it remained far from a lifelike representation (Figure 6).
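The smoothing attributed to Gouraud’s algorithm can be illustrated as follows. Intensities are computed only at the vertices of the polygonal mesh, for example with the cosine law applied to a normal averaged from the adjacent faces. They are then linearly interpolated, first along the polygon edges and then along each scanline, so the facets blend into one another. The Python sketch below shows the vertex normal and the interpolation along a single scanline. It is a simplified illustration with hypothetical function names, not a transcription of Gouraud’s thesis.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def vertex_normal(face_normals):
    """Average the unit normals of the faces sharing a vertex."""
    summed = tuple(sum(n[i] for n in face_normals) for i in range(3))
    return normalize(summed)

def lambert(normal, to_light):
    """Cosine-law intensity at a vertex (light intensity taken as 1)."""
    return max(0.0, sum(a * b for a, b in zip(normal, normalize(to_light))))

def lerp(a, b, t):
    return a + (b - a) * t

def shade_scanline(x_left, i_left, x_right, i_right):
    """Linearly interpolate intensity for each integer x from x_left to x_right inclusive.

    i_left and i_right would themselves come from interpolating vertex
    intensities along the two polygon edges crossed by the scanline.
    """
    if x_right == x_left:
        return [i_left]
    return [lerp(i_left, i_right, (x - x_left) / (x_right - x_left))
            for x in range(x_left, x_right + 1)]

if __name__ == "__main__":
    # Two faces meeting at 90 degrees: the shared vertex gets a 45-degree normal.
    n = vertex_normal([(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)])
    print(round(lambert(n, (0.0, 0.0, 1.0)), 3))  # 0.707
    print(shade_scanline(0, 0.0, 4, 1.0))         # [0.0, 0.25, 0.5, 0.75, 1.0]
```

Averaging normals at shared vertices is what hides the crease between facets. Neighboring polygons receive the same intensity along their common edge, so the tone changes gradually instead of jumping from face to face.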

Subsequent studies, most notably those of Bui Tuong Phong [20] and James F. Blinn [21], gave the digital image greater realism. Phong refined the distribution of light in the computer’s virtual space by adding a specular component of reflected light to the diffuse one. Blinn opened up experimentation in the vast field of applying textures to a digital model. Both of their PhD theses stand out for the significant way in which they tackled these issues.

4. The Origin of the Term Pixel

We cannot overlook one of the most important terms of the digital image, the one that identifies its unit of measurement: the pixel. We avoided this term when discussing the first image scans because it was coined only in the following decade. It was first used in 1965, at two conferences of the International Society for Optics and Photonics (SPIE), in two papers by Fred C. Billingsley [22,23]. Billingsley, a researcher at the Image Processing Laboratory of the Jet Propulsion Laboratory, used it as a contracted form of picture element. The first of these papers was published the same year under the title Digital Video Processing at JPL. It describes the objectives and results of ongoing research, especially the lunar missions Ranger VII, VIII and IX, which aimed to transmit close-up images of the Moon’s surface before the spacecraft crashed on impact. One objective was to improve image quality and reduce background noise. Another, in the author’s words, was “to operate on the pictures themselves to produce other useful products” [22] (p. 1). To clarify this point, the author adds: “we have developed a computer program for mosaicking several pictures together and are beginning to program to extract information from the picture in digital form” [22] (p. 1). Two pages later, the term pixel appears for the first time: “We have chosen to sample at 500 KC rate and we define each of these samples as a picture element or a pixel” [22] (p. 3). William F. Schreiber of the Massachusetts Institute of Technology proposed an alternative term, pel, at an IEEE conference in 1967 [24]. The two terms coexisted for some years to designate the same concept. From 1970 onward, however, pixel became the more common name for the smallest element of the digital image. It is not clear whether the two researchers coined these terms or found them already in use at their institutions and adopted them to describe an established concept. Richard F. Lyon wrote a detailed study of the history of the term, covering more than these specific cases, and presented it at a conference of the International Society for Optics and Photonics about forty years after 1965 [25].

5. From Scientific Research to Art Applications

Scientific application seemed to be the only viable outcome of this experimentation, partly because of the high cost of the equipment. Artists’ curiosity, however, opened the door to a different application of the research, one that seemed to have nothing to do with science.

The image thus took on other connotations, more closely tied to the field of art. It must be stressed, however, that in many cases the artistic object amounted to a mere display of technology, so that the form became the content. Those years offer a rich repertoire of cases, but a detailed history is difficult to write. Unlike papers at a scientific conference, whose proceedings are available, these works were often not officially presented. Instead, they were shown in temporary exhibitions, often set up at trade fairs and sponsored by hardware and software manufacturers.

It can certainly be hard to call these people “artists”. Their knowledge was almost always tied to the world of computer technology, and they often lacked the cultural and artistic training expected of someone working in that field. Some cases are emblematic. Consider Efi Arazi, an MIT engineer who produced a complex image generated by an algorithm, published on the cover of the January 1963 issue of the journal “Computers and Automation” [26]. This very image convinced the journal’s editor, Edmund Berkeley, to coin the term computer art and to launch an annual competition for the best computer artwork, the Computer Art Contest.

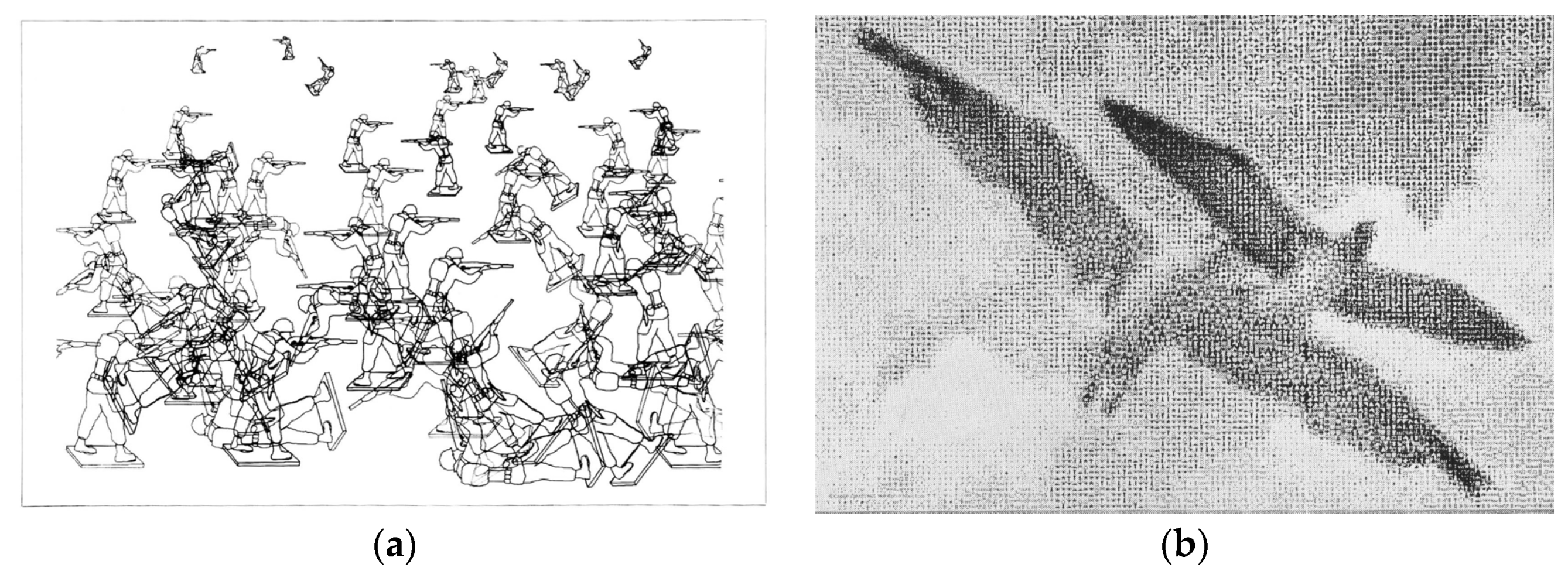

The Cybernetic Serendipity exhibition, curated by Jasia Reichardt at the Institute of Contemporary Arts in London in October 1968 [27], is among the most significant exhibitions of digital artwork. As assistant director, Reichardt gathered a series of electronic experiments related to the world of art. The exhibition included works of music, poetry and dance, as well as painting, drawing and images in general, all of them produced with digital technologies. Among the participants were artist-programmers such as C. Csuri, J. Shaffer, K.C. Knowlton and L.D. Harmon. Charles Csuri deserves particular mention as one of the first digital artists, whose research concerned the manipulation of computer-generated images. Arthur Efland’s long interview with the artist, published in the exhibition catalog [28], sheds light on how Csuri produced his digital works. Although Reichardt was an art critic, interest in these works came mainly from people working in graphics and communication rather than from the world of criticism (Figure 7).

Among the famous artists who used the digital medium in their work, Andy Warhol and Wim Wenders stand out. In the last years of his life, from 1985 onward, Warhol used a computer capable of editing images, a technology that was by then available to everyone. With it he made several digital works, recently recovered from the electronic archives of the Andy Warhol Museum in Pittsburgh [29]. Wenders dealt with digital technology while writing his well-known film Until the End of the World. In the film, digital images are shown as the result of recordings of brain activity, made with an unusual electronic sampling machine, so that images can be transmitted to a blind person. These chromatically altered, false-color images, made in 1991, were also presented by Wenders himself at the 45th Venice Biennale in 1993 as Electronic Paintings [30]. The director’s own account of how they were conceived is revealing: “All our images were transferred to high definition tape, and then processed digitally, some of them for more than a hundred different effects and generations. Sean Naughton and I sent those images through every possible manipulation, via paint-box, matte-box, color correctors and every trick in the book” [30] (p. 25).

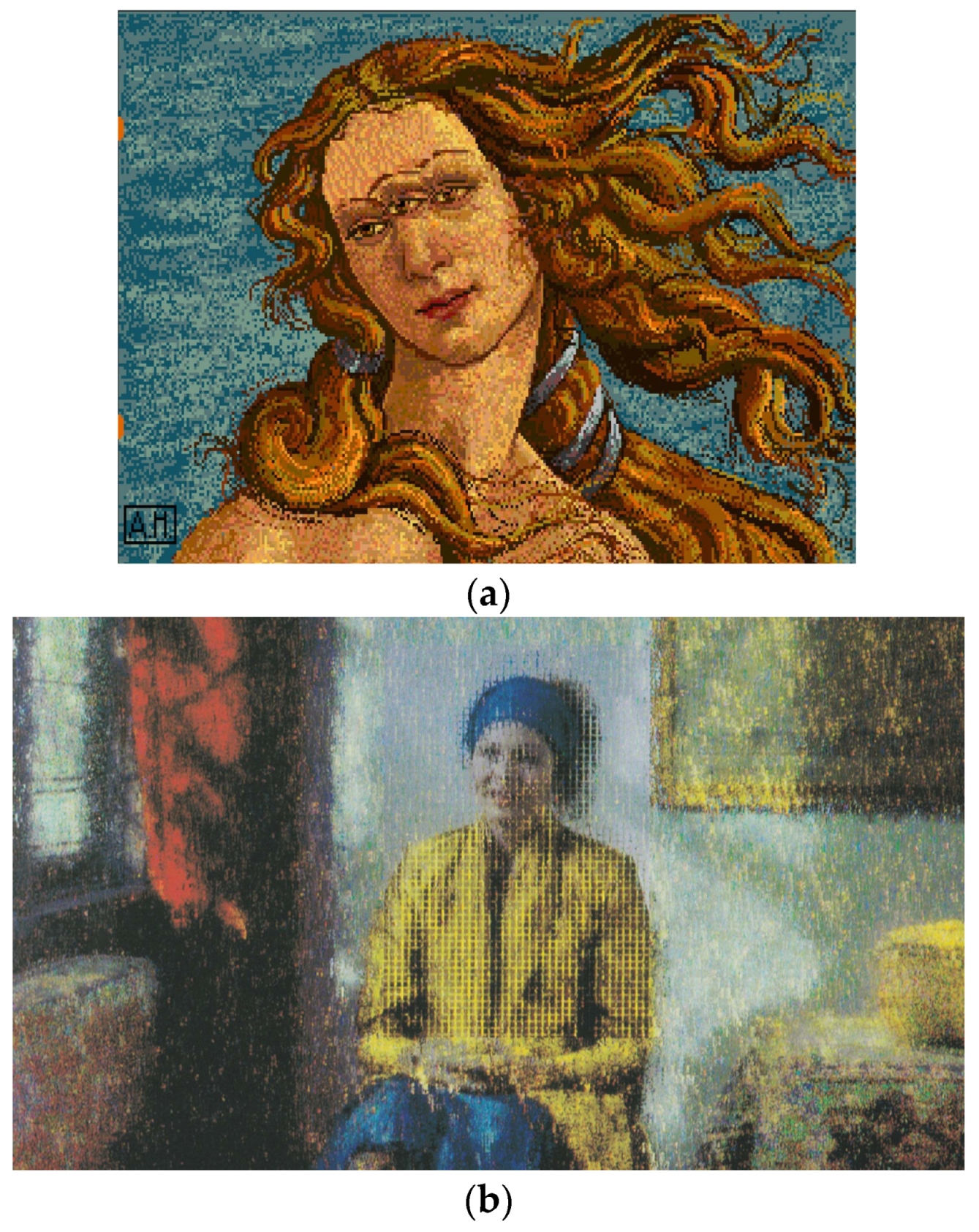

Warhol and Wenders both illustrate a singular path taken by traditional artists working with new technologies (Figure 8).

6. The Compression Algorithm

Returning to the origins of digital image processing, we cannot overlook another aspect of image manipulation, aimed at optimizing content. The exponential growth of computing power now makes it possible to process information-rich images in a short time. In the 1960s, however, those early processors could not work in real time. The response to each operation was very slow, and the storage media that had to hold the information had limited capacity. It was therefore necessary to filter the source file while preserving the readability of the data. The problem became one of finding data-encoding strategies that reduce file size while leaving the visual information unaltered.
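Transform coding is one family of techniques that meets this requirement, and the later JPEG standard is built on it. A block of samples is converted into frequency coefficients, the small high-frequency coefficients are discarded or coarsely quantized, and the block is reconstructed approximately from what remains. The Python sketch below applies an orthonormal 8-point discrete cosine transform (DCT-II) to one row of pixel values and keeps only the first few coefficients. It is a simplified illustration under stated assumptions: it omits quantization tables, zigzag ordering and entropy coding. It is neither the SIPI researchers’ code nor the JPEG specification, and the sample row is hypothetical.

```python
import math

N = 8  # JPEG works on 8 x 8 blocks; this sketch transforms a single row of 8 values

def _scale(k):
    """Orthonormal scaling factor for coefficient k."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def dct(x):
    """Orthonormal DCT-II of a length-N sequence."""
    return [_scale(k) * sum(x[n] * math.cos(math.pi * (n + 0.5) * k / N) for n in range(N))
            for k in range(N)]

def idct(c):
    """Inverse of dct() (orthonormal DCT-III)."""
    return [sum(_scale(k) * c[k] * math.cos(math.pi * (n + 0.5) * k / N) for k in range(N))
            for n in range(N)]

def compress_row(row, keep):
    """Keep the first `keep` (lowest-frequency) coefficients, zero the rest, reconstruct."""
    coeffs = dct(row)
    truncated = coeffs[:keep] + [0.0] * (N - keep)
    return idct(truncated)

if __name__ == "__main__":
    row = [52, 55, 61, 66, 70, 61, 64, 73]  # hypothetical gray levels of one image row
    approx = compress_row(row, keep=3)      # store 3 numbers instead of 8
    print([round(v) for v in approx])
    print(max(abs(a - b) for a, b in zip(row, approx)))
```

Keeping all eight coefficients reconstructs the row exactly. Keeping only the first one yields the row’s average gray level. Intermediate choices trade file size against fidelity, which is the compromise described in the paragraph above.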

One of the most significant experiences was that of researchers at the Signal and Image Processing Institute (SIPI) of the University of Southern California, who addressed the topic in the early 1970s. They developed color, tonal comparison and chromatic filtering algorithms to optimize the files being processed. This work contributed, for example, to standards such as JPEG [31], now familiar to everyone. The algorithms were fairly complex and required strict procedural control by the researchers. They therefore thought of counterbalancing the rigidity of numerical manipulation with the content of the image. As the subject on which to apply the alterations needed to improve the image, they chose a female face taken from a widely circulated magazine. The choice of the famous adult magazine Playboy came about almost by accident, perhaps because of its popularity among the researchers. In the November 1972 issue [32], which sold a particularly large number of copies, the central double page was traditionally devoted to a scantily clad young woman. This time she was Swedish-born, and without her knowledge she became the subject of scientific experimentation in those research laboratories. The young Lenna Sjööblom (whose real name is Lena Söderberg) was then filtered and manipulated for scientific purposes. The photograph’s content made it especially suitable for this. Besides the partially nude figure, it included furnishings, lighting gradations and particular color tones, which made the image particularly noteworthy. Although the image was carefully cropped, Lenna’s picture aroused great interest. It soon became a true icon of the digital image and appeared, as a digital artifact, at many conferences related to this research (Figure 9).

Much later, interest in the person herself grew, with requests for interviews about her personal life in those years, which of course has nothing to do with image experimentation. In 1997 she was guest of honor at the fiftieth annual conference of the Society for Imaging Science and Technology in Cambridge. In 1991 the image appeared on the cover of the scientific journal Optical Engineering [33]. Playboy then launched a lawsuit against the Signal and Image Processing Institute for having used the image without permission.

The most unusual tribute to the young pin-up, however, came from an admirer, Thomas Colthurst, an eclectic software engineer employed at Google at the time of writing. He dedicated a sonnet to her, whose most significant lines are: “O dear Lena, your beauty is so vast/It is hard sometimes to describe it fast/I thought the entire world I would impress/If only your portrait I could compress. […] And for your lips, sensual and tactual/Thirteen Crays found not the proper fractal. […] But when filters took sparkle from your eyes/I said, ‘Heck with it. I’ll just digitize’” [34]. It is an admirable way to remember her and to express the subtle boundary between scientific research and divertissement.

7. Conclusions

This analysis of the origins of digital image processing clarifies the close relationship between the instruments and procedures used and the terminology and content of the works produced. The main historical examples were identified, together with the relevant bibliographic references in the related fields. The results help us understand the difficulties the researchers faced and the paths that led to today’s achievements. In particular, the study examined the relations between scientific activity and applications in figurative art. Its aim was to start a research strategy for rediscovering historical methods overlooked in technological evolution, which could be recovered to enrich future experimentation.