Intelligent Robot Guidance in Fixed External Camera Network for Navigation in Crowded and Narrow Passages

Abstract

1. Introduction

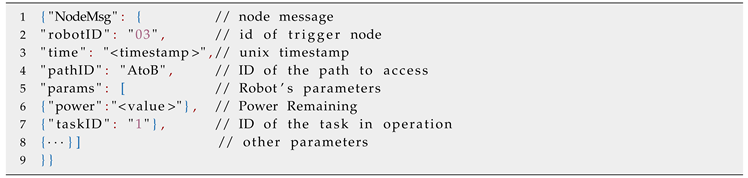

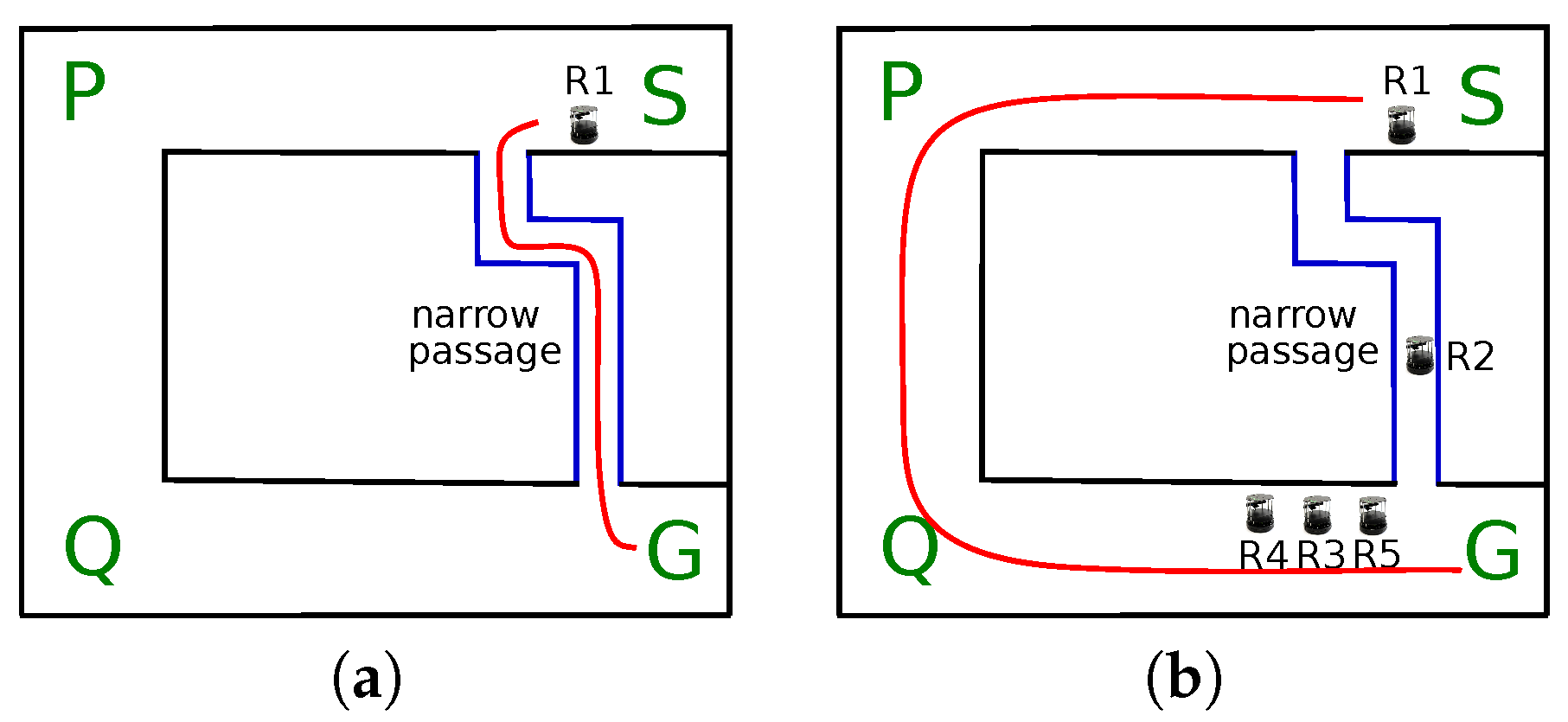

- Example 1: Service robots often carry sensors such as cameras to perceive the external world and perform tasks like collision avoidance. Most of the time, these sensors are ‘forward’-facing. As shown in Figure 1a, this leaves the robot unaware of people or other robots moving behind it. The sensors are also mounted at a low height, so the robot cannot see distant obstacles, particularly when they are occluded, as Figure 1a also shows. Yet the mobile robot must adjust its trajectory to people approaching it from behind. Likewise, the robot would be in a better position to plan its trajectory if it also had information about distant entities in the environment.

- Example 2: To maximize area utilization, most passages in warehouses, libraries, etc. are very narrow. While people can flexibly squeeze past one another in such narrow passages, most mobile robots are too rigid for such a crossover to succeed. As shown in Figure 1b, robots R1 and R2 are deadlocked, and one of them has to retreat to make way for the other. The problem becomes more complex when the movement of multiple robots and people is considered. In Figure 1b, robots R3, R4, or R5, as well as a person, might also try to access the narrow passage. Without a policy to resolve such conflicts, the robots might retreat perpetually, which is inefficient. The policy must first avoid conflicts through careful planning and, if conflicts do occur, resolve them intelligently by taking into account important factors such as the robots' available power and the priority of the tasks they are performing.
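The conflict-resolution idea in Example 2 can be illustrated with a small sketch. It is not the authors' policy: the `Robot` fields (`task_priority`, `battery_level`, `retreat_distance`) and the ranking order are hypothetical assumptions chosen only to show how task priority and available power might decide which robot retreats. Because the rule is a ranking key, it applies equally to the two-robot deadlock of R1 and R2 and to a larger group that includes R3, R4, or R5.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Robot:
    name: str
    task_priority: int       # larger value = more important task (assumption)
    battery_level: float     # remaining power as a fraction in [0, 1] (assumption)
    retreat_distance: float  # metres the robot would need to back up (assumption)


def choose_robot_to_yield(robots: Sequence[Robot]) -> Robot:
    """Pick the robot that retreats from a narrow passage (hypothetical rule).

    Ranking, applied in order:
      1. the robot with the lowest task priority yields;
      2. among equal priorities, the robot with the MOST remaining power yields,
         so a low-battery robot does not waste energy backing up;
      3. among equal power levels, the robot with the shortest retreat yields.
    """
    return min(robots, key=lambda r: (r.task_priority, -r.battery_level, r.retreat_distance))


# Usage: R1 and R2 deadlocked in a corridor, then R3 also contends for it.
r1 = Robot("R1", task_priority=8, battery_level=0.40, retreat_distance=2.0)
r2 = Robot("R2", task_priority=8, battery_level=0.75, retreat_distance=3.5)
r3 = Robot("R3", task_priority=3, battery_level=0.10, retreat_distance=1.0)
print(choose_robot_to_yield([r1, r2]).name)      # R2: same priority, more power
print(choose_robot_to_yield([r1, r2, r3]).name)  # R3: lowest task priority
```

Using a single sort key keeps the policy easy to audit: reordering or adding factors only means changing the tuple.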

1.1. Main Idea of This Paper

2. Graph Representation of Environment

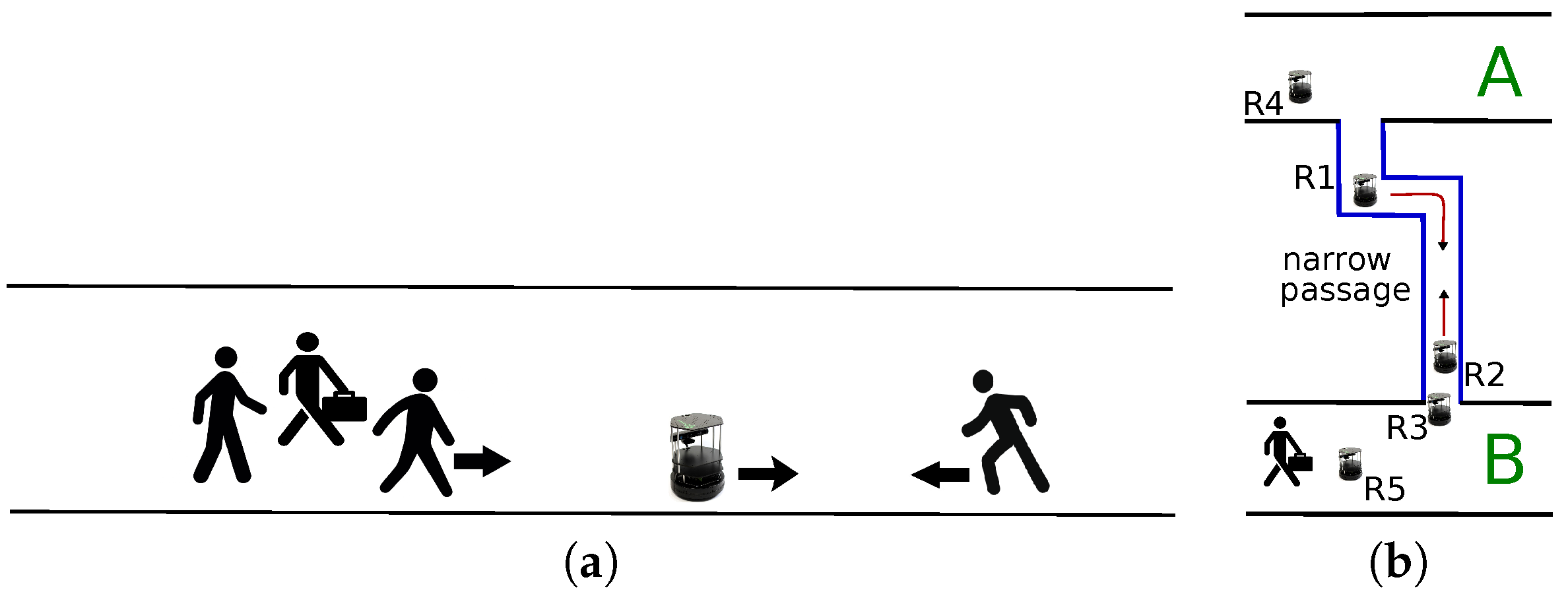

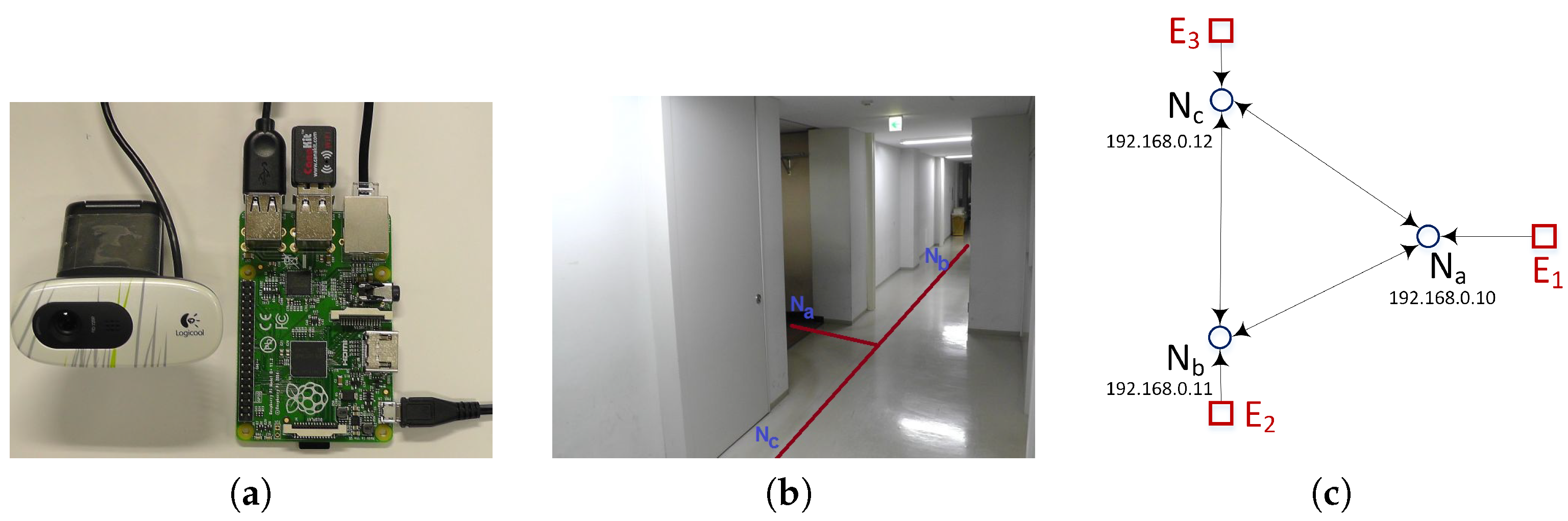

- Node: A node comprises a processing board with a camera attached to it. Depending on the processing capability of the board, multiple cameras can be attached to the same board.

- Links: A link connects two nodes and represents the spatial path between two processing boards (or nodes). Links can be directed, representing not only the direction of traffic flow but also the source and destination nodes in node-to-node communication.
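The node and link definitions above map naturally onto an adjacency-list graph. The following is a minimal sketch under stated assumptions: the class and method names (`CameraNetworkGraph`, `add_link`, `shortest_path`) are hypothetical, a one-way link stands in for a directed link, and breadth-first search (fewest hops) is used only for illustration. It is not presented as the authors' path-planning method.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Node:
    node_id: str
    # One processing board may host several cameras.
    cameras: List[str] = field(default_factory=list)


class CameraNetworkGraph:
    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.links: Dict[str, Set[str]] = {}  # adjacency: node -> reachable neighbours

    def add_node(self, node_id: str, cameras: Optional[List[str]] = None) -> None:
        self.nodes[node_id] = Node(node_id, list(cameras or []))
        self.links.setdefault(node_id, set())

    def add_link(self, src: str, dst: str, bidirectional: bool = True) -> None:
        # A one-way link models a directed corridor (traffic only src -> dst).
        self.links[src].add(dst)
        if bidirectional:
            self.links[dst].add(src)

    def shortest_path(self, start: str, goal: str) -> Optional[List[str]]:
        """Fewest-hop node sequence from start to goal (BFS), or None."""
        parents: Dict[str, Optional[str]] = {start: None}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            if current == goal:
                path: List[str] = []
                node: Optional[str] = current
                while node is not None:  # walk parent pointers back to start
                    path.append(node)
                    node = parents[node]
                return path[::-1]
            for neighbour in sorted(self.links[current]):  # sorted for determinism
                if neighbour not in parents:
                    parents[neighbour] = current
                    queue.append(neighbour)
        return None


# Usage: four boards along a corridor; the last segment is one-way.
g = CameraNetworkGraph()
for nid in ["N1", "N2", "N3", "N4"]:
    g.add_node(nid, cameras=[f"{nid}-cam0"])
g.add_link("N1", "N2")
g.add_link("N2", "N3")
g.add_link("N3", "N4", bidirectional=False)
print(g.shortest_path("N1", "N4"))  # ['N1', 'N2', 'N3', 'N4']
print(g.shortest_path("N4", "N1"))  # None: N4 has no outgoing link
```

In a real deployment the links would more likely carry weights (corridor length, congestion), in which case Dijkstra's algorithm would replace the plain BFS.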

3. Modified Priority Queue

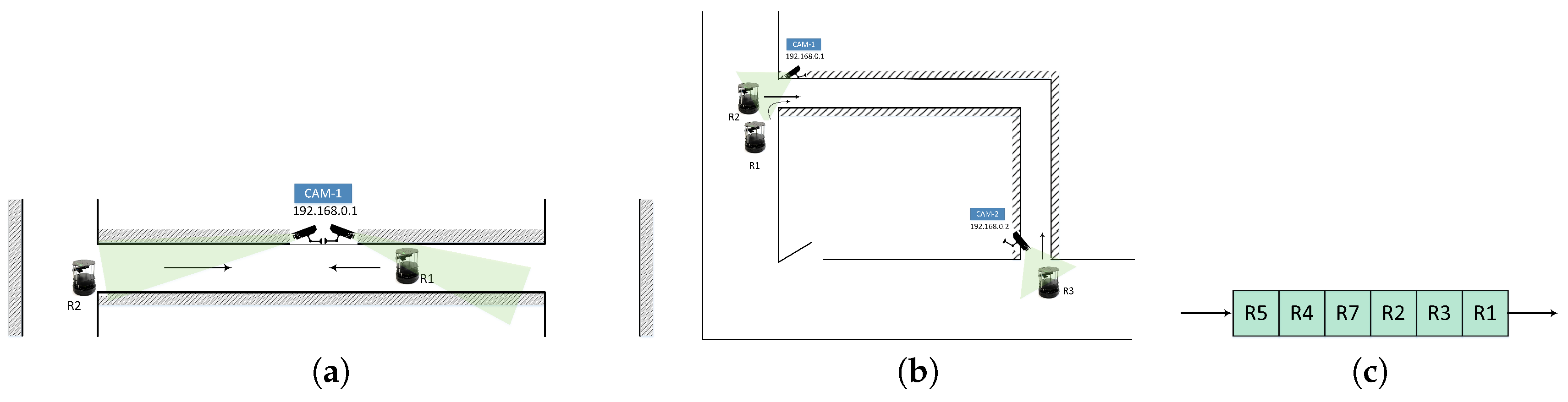

Listing 1: An example of a robot's request in JSON format.

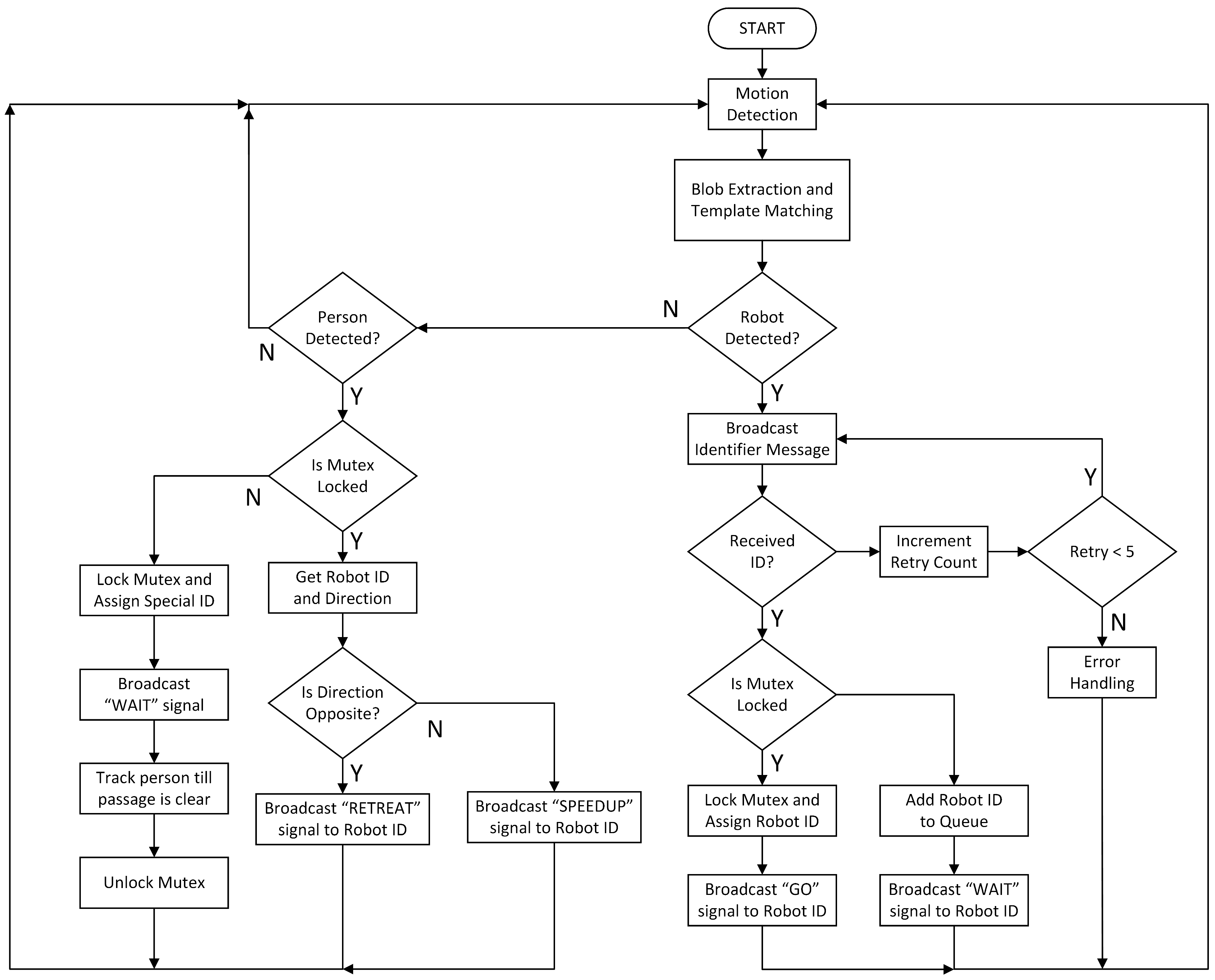
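To illustrate how a priority queue might order robot requests, here is a hypothetical sketch built on Python's `heapq`. The ordering rule (higher task priority first, then lower remaining power, then arrival order) and the JSON field names (`robot_id`, `task_priority`, `battery_level`, `goal_node`) are assumptions for illustration only; they do not reproduce Listing 1 or the authors' modified priority queue.

```python
import heapq
import itertools
import json
from typing import Any, Dict, List, Tuple


class PathRequestQueue:
    """Priority queue of robot path requests (hypothetical ordering rule).

    Served first: higher task priority; then lower remaining power;
    remaining ties are served in arrival order (FIFO).
    """

    def __init__(self) -> None:
        self._heap: List[Tuple[Tuple[float, float, int], Dict[str, Any]]] = []
        self._arrival = itertools.count()  # monotonically increasing tie-breaker

    def push(self, request: Dict[str, Any]) -> None:
        # heapq is a min-heap, so the priority is negated to pop the largest first.
        # The unique arrival counter guarantees the dicts themselves are never compared.
        key = (-request["task_priority"], request["battery_level"], next(self._arrival))
        heapq.heappush(self._heap, (key, request))

    def pop(self) -> Dict[str, Any]:
        _, request = heapq.heappop(self._heap)
        return request

    def __len__(self) -> int:
        return len(self._heap)


# Usage: requests arrive as JSON strings; the field names are hypothetical.
messages = [
    '{"robot_id": "R3", "task_priority": 2, "battery_level": 0.9, "goal_node": "N4"}',
    '{"robot_id": "R4", "task_priority": 9, "battery_level": 0.6, "goal_node": "N2"}',
    '{"robot_id": "R5", "task_priority": 9, "battery_level": 0.2, "goal_node": "N2"}',
]
queue = PathRequestQueue()
for message in messages:
    queue.push(json.loads(message))
order = [queue.pop()["robot_id"] for _ in range(len(queue))]
print(order)  # ['R5', 'R4', 'R3']
```

The FIFO counter is a common `heapq` idiom: it keeps ordering stable for equal keys and avoids comparing unorderable payloads.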

4. Path Allocation Process

5. Experimental Results

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Task | Task ID | Task Priority | Required Power |
|---|---|---|---|
| Surveillance | 10 | | |
| Delivery | 3 | | |
| Patrolling | 8 | | |
| Cleaning | 9 | | |
| ⋮ | ⋮ | ⋮ | ⋮ |
| Others | 7 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2016 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ravankar, A.; Ravankar, A.; Kobayashi, Y.; Emaru, T. Intelligent Robot Guidance in Fixed External Camera Network for Navigation in Crowded and Narrow Passages. Proceedings 2017, 1, 37. https://doi.org/10.3390/ecsa-3-D008
