Finite-Time Cluster Synchronization of Fractional-Order Complex-Valued Neural Networks Based on Memristor with Optimized Control Parameters

Abstract

1. Introduction

- ① This paper studies fractional-order CNNs with a time delay. The network includes memristive elements and complex-valued states, which makes the model more complex than those studied in [31,32] and better suited to simulating real-world scenarios. Unlike the conventional decomposition approach [20,21,34], this study employs a non-decomposition method. Although the decomposition method simplifies the theoretical analysis, it often falls short in practical applications. The non-decomposition approach, by contrast, gives a more realistic representation of complex systems and aligns more closely with real-world dynamics.

- ② This paper focuses on FTCS, which goes beyond conventional CS [35,36] and FTS [37]: convergence is faster, and the synchronization time can be calculated in advance. To achieve FTCS, we design a simple controller based on the CVSF and derive synchronization criteria that provide a range of control parameters. The settling time for FTCS can be readily determined through the Mittag–Leffler function. Although FTCS has been explored in previous studies [19,31,32], the networks investigated in those works differ significantly from the one considered here. Notably, this is the first study to address FTCS for FOCV-coupled neural networks with memristors using a non-decomposition method.

- ③ An optimization model solved by PSO is proposed to select the most cost-effective control parameters. This optimization guides the choice of control parameters within the broad range that satisfies the synchronization conditions. Although previous studies [31,32] designed similar controllers, they only provided a range for the parameters and did not address actual control requirements.
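The second contribution above refers to the Mittag–Leffler function. As a rough illustration only, the sketch below evaluates the standard two-parameter series $E_{\alpha,\beta}(z)=\sum_{k\ge 0} z^{k}/\Gamma(\alpha k+\beta)$ by truncation. The function name `mittag_leffler`, the truncation length, and the cutoff on the Gamma argument are assumptions made for this example; it does not reproduce the paper's settling-time formula, and it is only suitable for moderate $|z|$.

```python
import math


def mittag_leffler(z: float, alpha: float, beta: float = 1.0, terms: int = 100) -> float:
    """Truncated series for E_{alpha,beta}(z) = sum_k z**k / Gamma(alpha*k + beta).

    Illustrative only: a plain partial sum, adequate for moderate |z|.
    """
    total = 0.0
    for k in range(terms):
        arg = alpha * k + beta
        if arg > 170.0:
            # math.gamma overflows for arguments a little above 171,
            # and terms this far out are negligible for moderate |z|.
            break
        total += z ** k / math.gamma(arg)
    return total


if __name__ == "__main__":
    # Sanity checks against known special cases:
    # E_{1,1}(z) = exp(z) and E_{2,1}(-x**2) = cos(x).
    print(mittag_leffler(1.0, 1.0), math.e)
    print(mittag_leffler(-1.0, 2.0), math.cos(1.0))
```

The two special cases printed at the end are a convenient way to check an implementation before using it with a fractional order between 0 and 1.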

| Item | [19] | [32] | [20] | [31] | [34] | [21] | [37] | This Paper |
|---|---|---|---|---|---|---|---|---|
| Fractional-order | | ✓ | | ✓ | | ✓ | ✓ | ✓ |
| Complex-valued | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Memristor | | | ✓ | | ✓ | ✓ | | ✓ |
| FTS | ✓ | ✓ | | ✓ | ✓ | | ✓ | ✓ |
| CS | ✓ | ✓ | | ✓ | | | | ✓ |
| Non-decomposition | | | | ✓ | | | ✓ | ✓ |
| Parameter optimization | | | | | | | | ✓ |

2. Preliminaries and Model Description

2.1. Preliminaries

- ①

- [32] .

- ②

- There exists such that , when , . , where .

2.2. Model Description

- ①

- ②

- where , , , , , .

- ①

- ②

3. Main Results

4. Optimization of Control Parameters

4.1. The Optimization Model

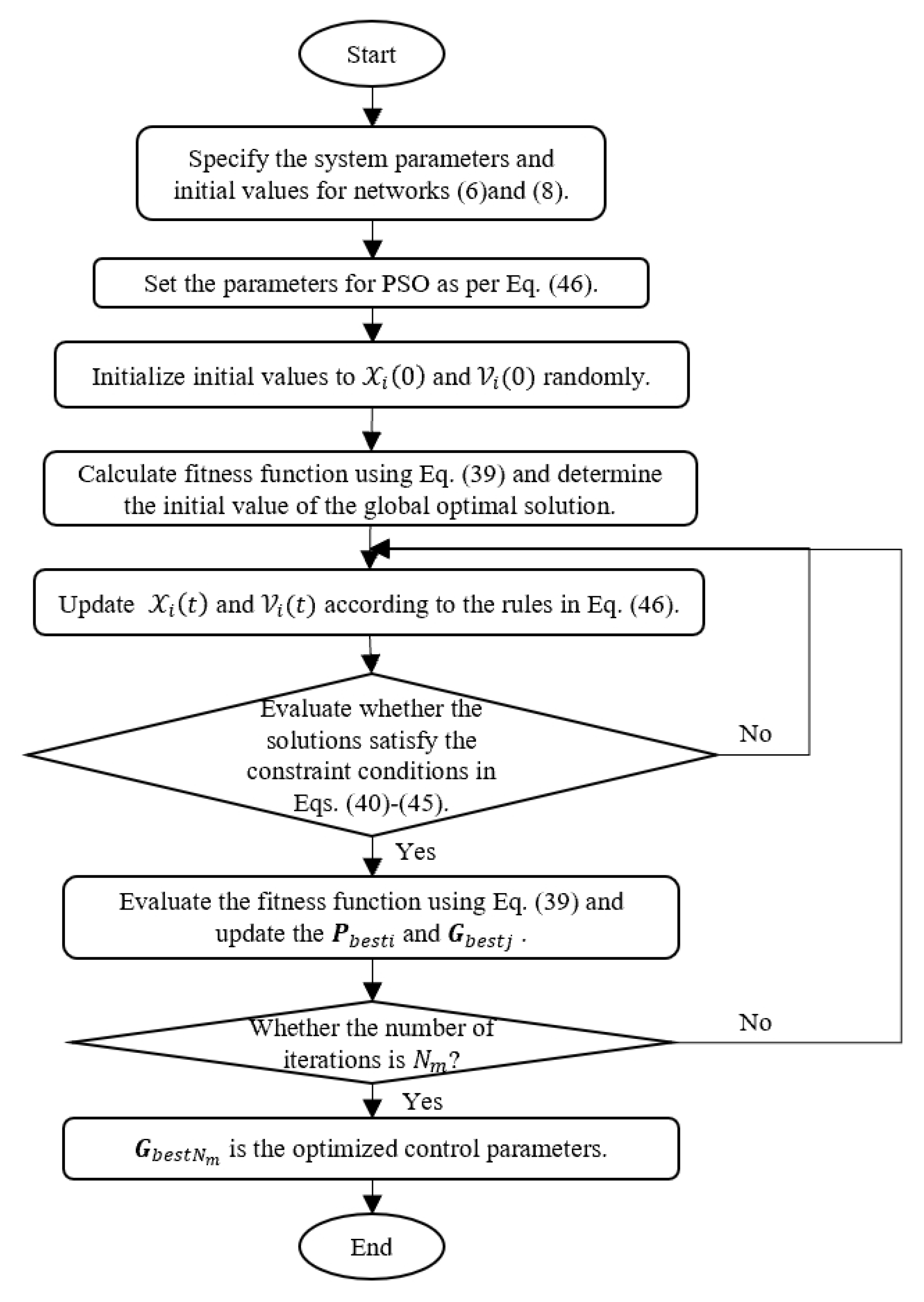

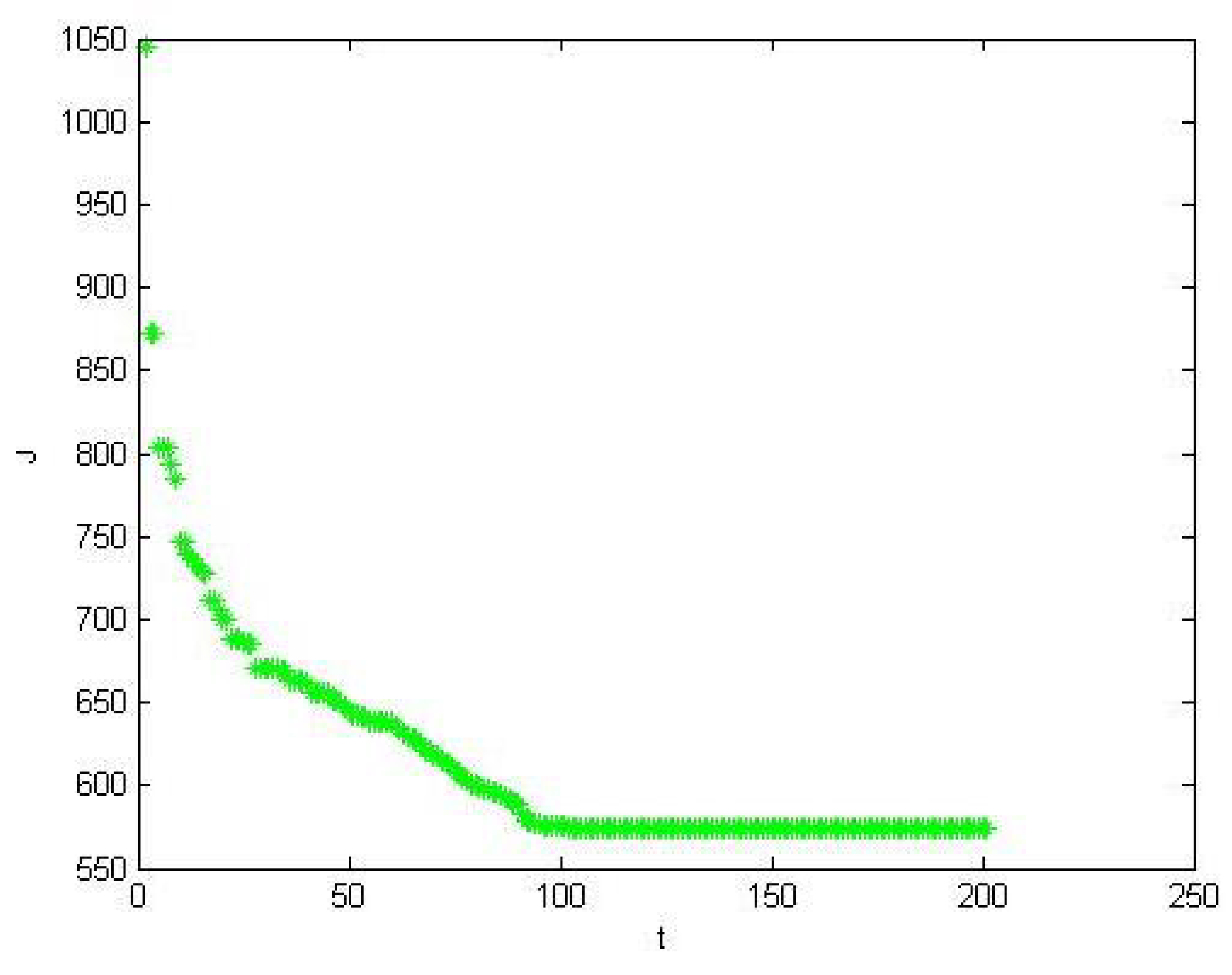

4.2. An Algorithm with PSO
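As a rough illustration of the kind of search a PSO-based parameter selection performs, the following is a minimal, generic particle swarm sketch in plain Python. It is not the authors' algorithm. The function names `pso_minimize` and `example_cost`, the swarm settings, the quadratic "control energy" cost, and the constraint `k1 + k2 >= 10` (a stand-in for a synchronization condition, enforced by a penalty term) are all hypothetical choices for this example.

```python
import random


def pso_minimize(cost, lower, upper, n_particles=30, n_iter=200,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost(x) over the box [lower, upper] with a basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(lower)
    # Random initial positions inside the box, zero initial velocities.
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]           # personal best positions
    best_val = [cost(p) for p in pos]        # personal best costs
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]   # global best so far

    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Move, then clamp to the admissible parameter range.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = cost(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def example_cost(k):
    """Hypothetical cost: quadratic control energy plus a penalty
    when the stand-in feasibility condition k1 + k2 >= 10 is violated."""
    energy = sum(x * x for x in k)
    violation = max(0.0, 10.0 - sum(k))
    return energy + 1e3 * violation


if __name__ == "__main__":
    best_k, best_j = pso_minimize(example_cost, [0.0, 0.0], [20.0, 20.0])
    print("gains:", best_k, "cost:", best_j)
```

Handling the feasibility condition through a penalty keeps the search loop unchanged; an actual synchronization criterion would replace the `violation` term.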

5. Simulation

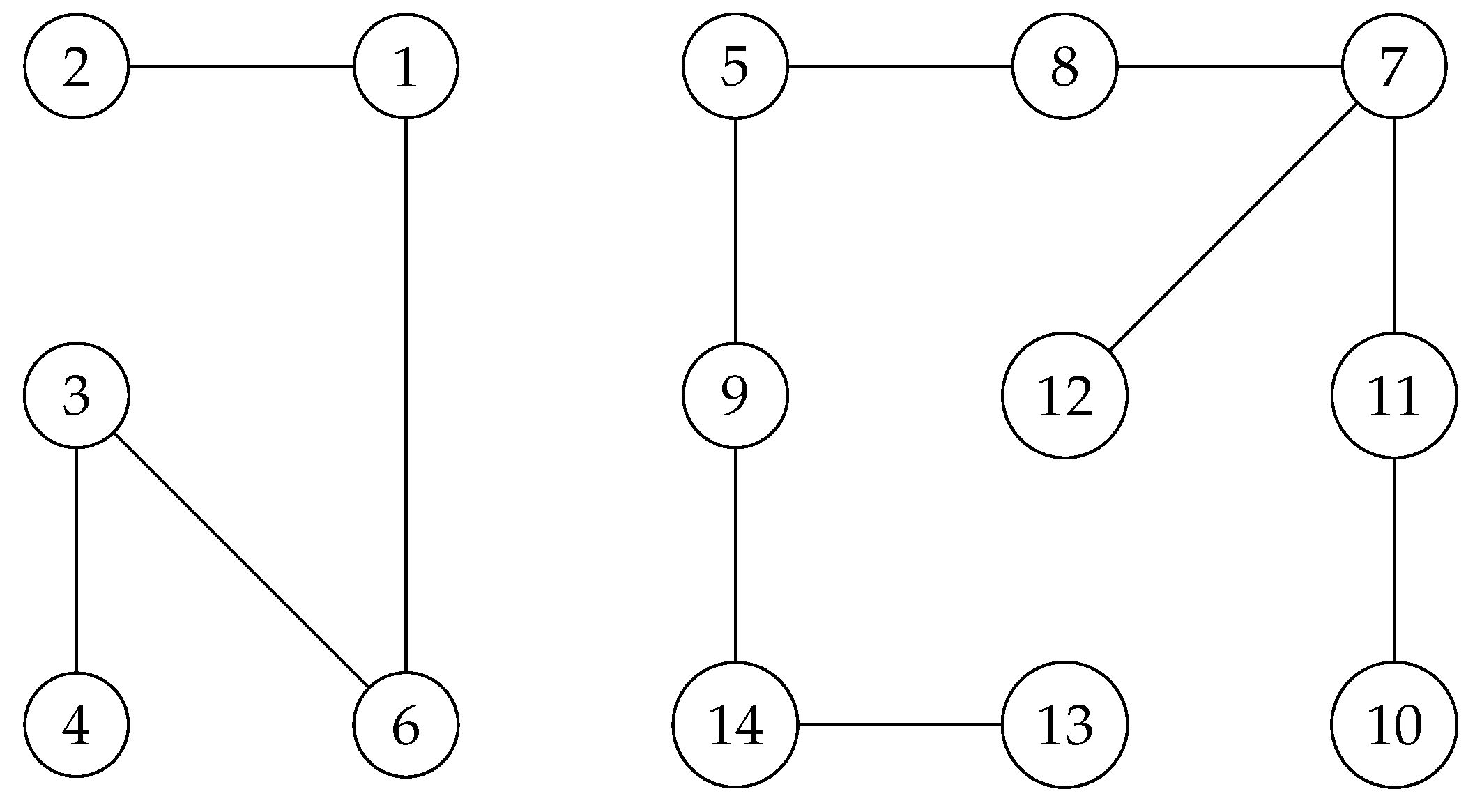

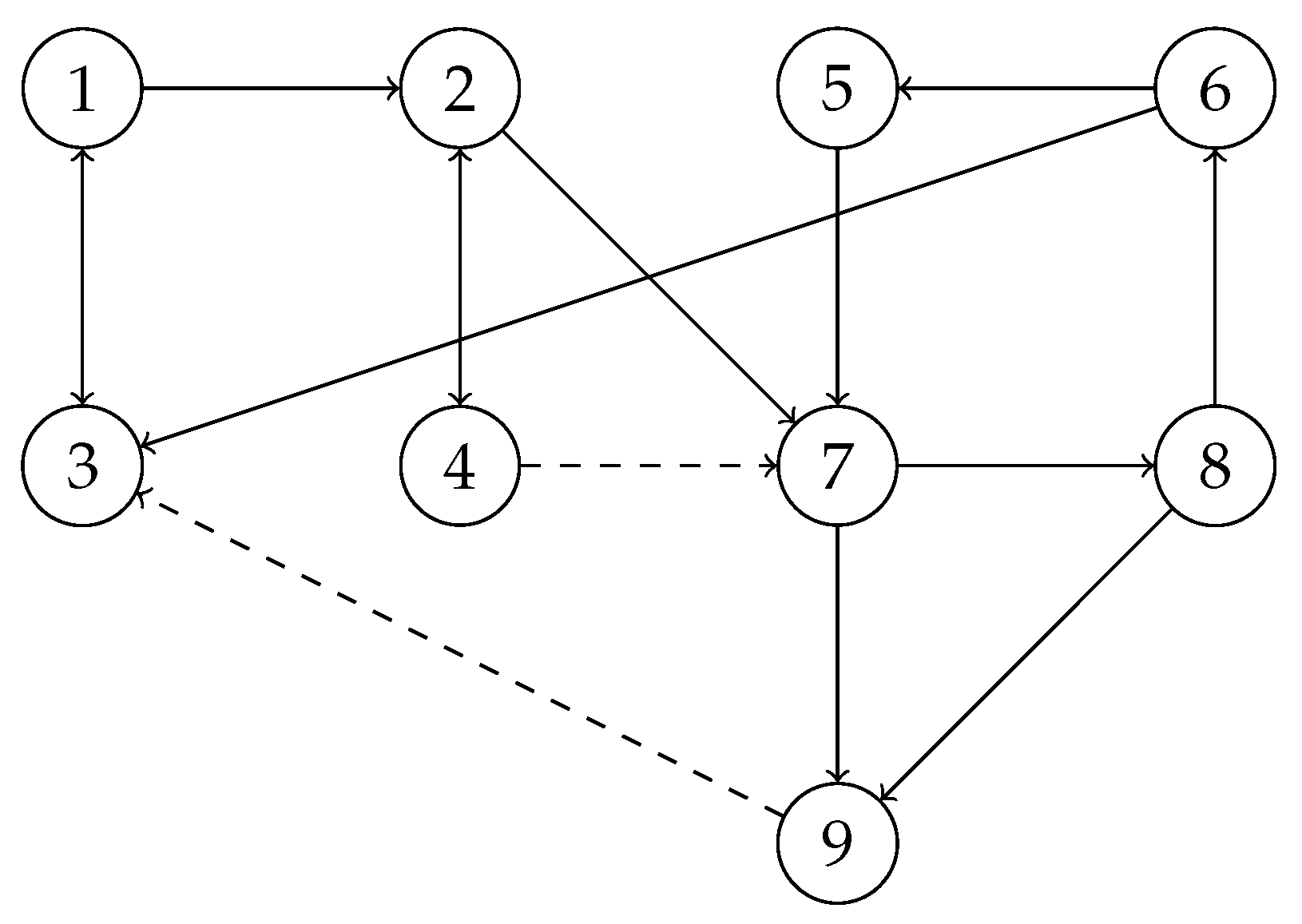

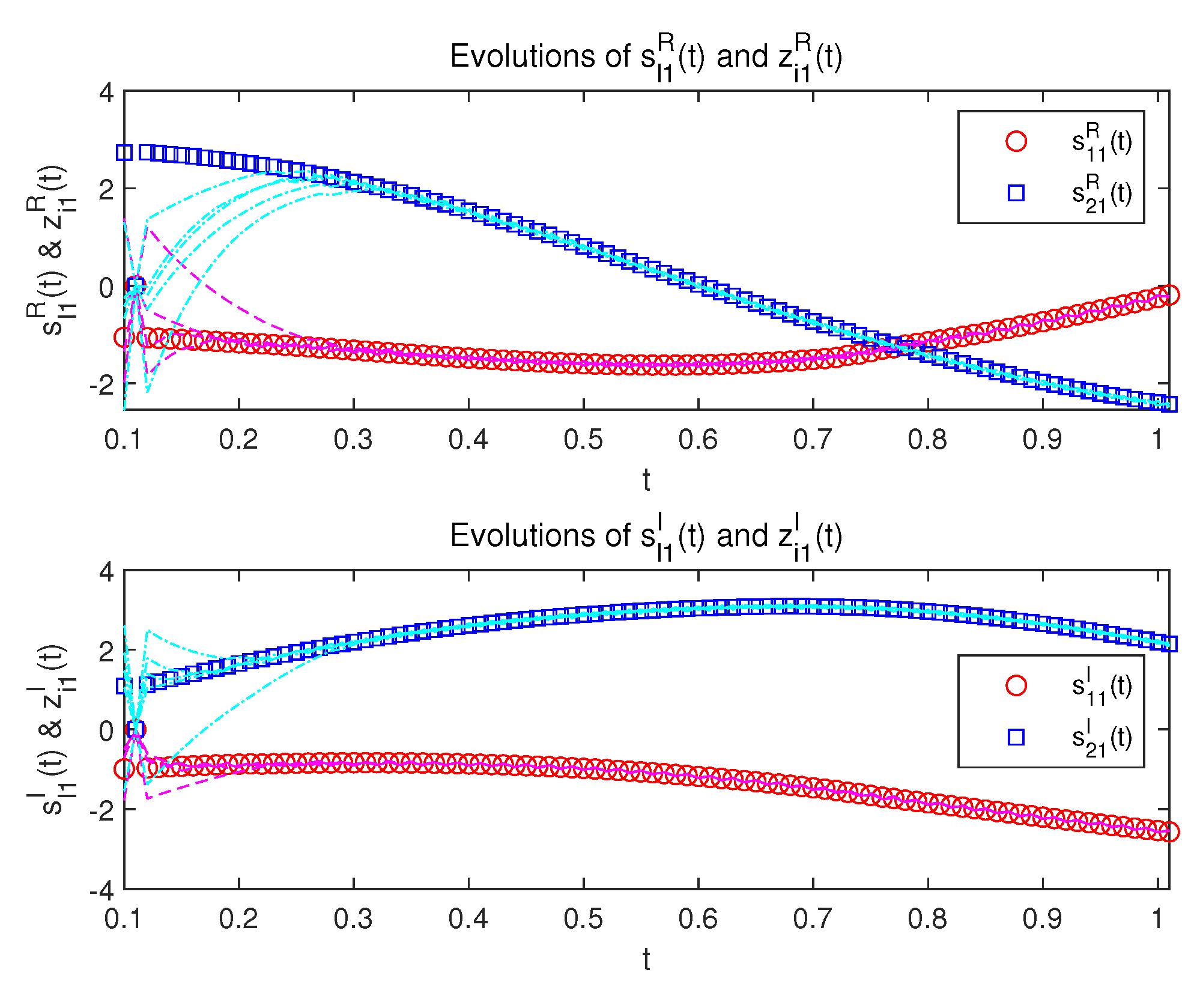

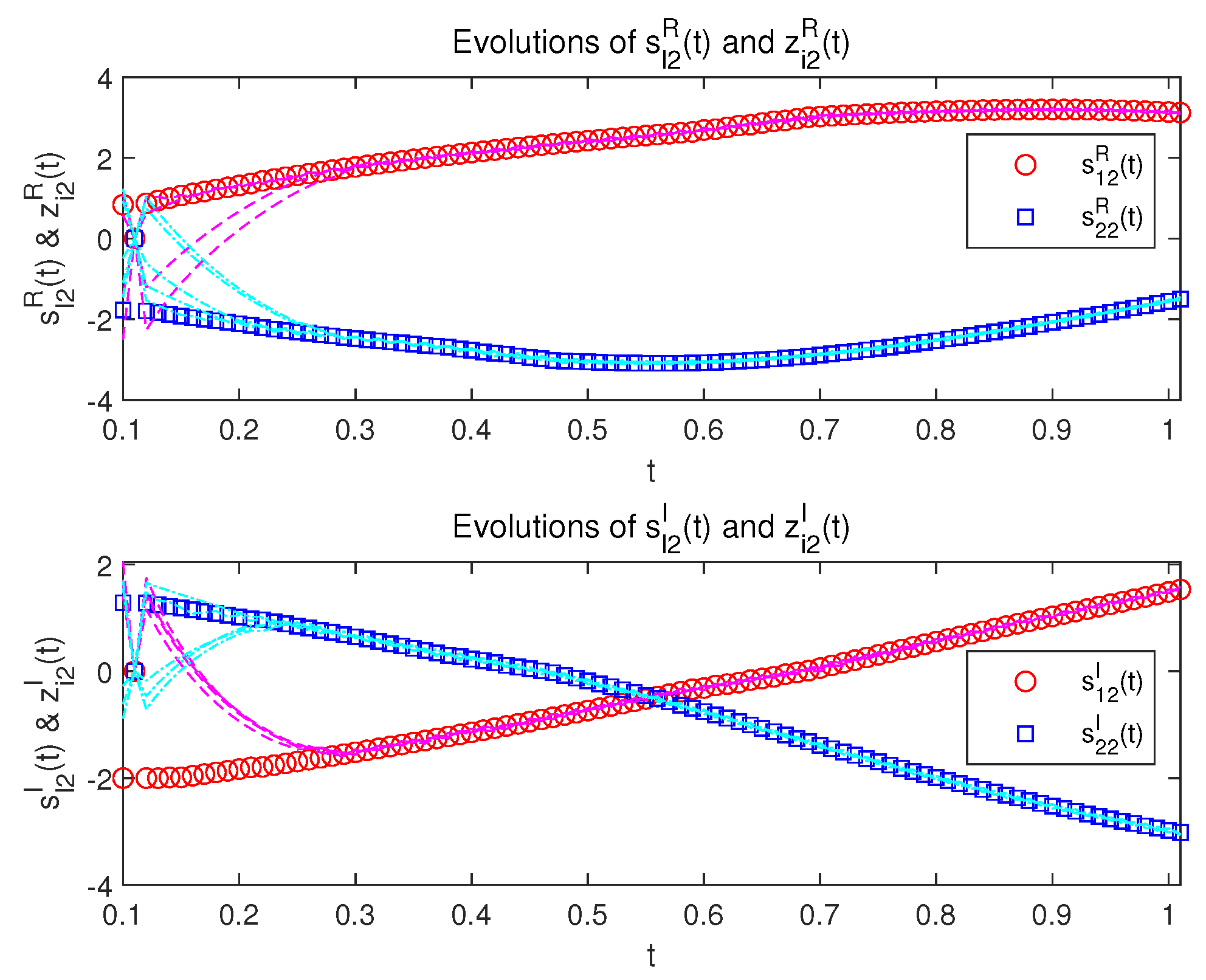

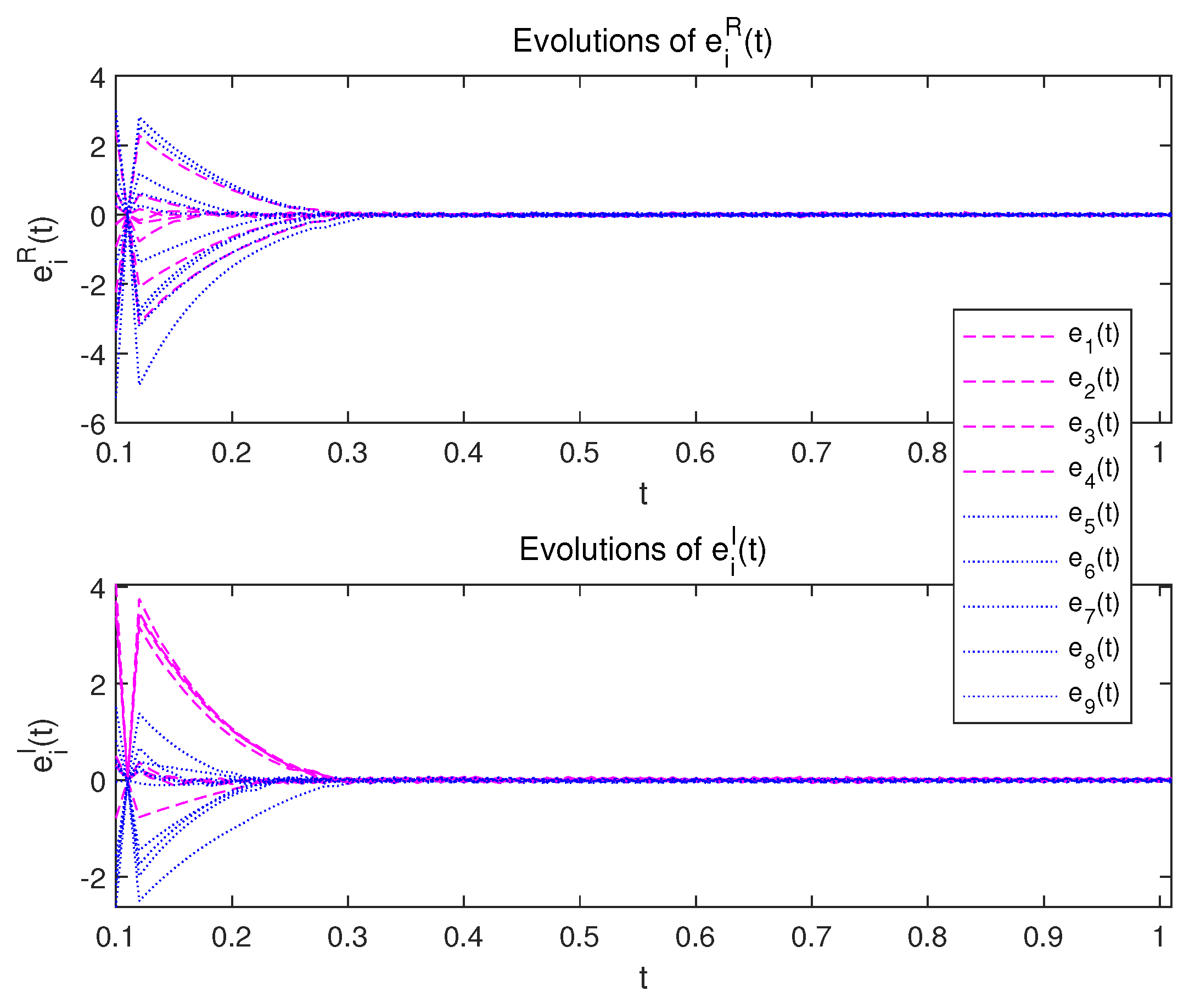

5.1. Example 1

5.2. Example 2

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Alghamdi, F.A.; Almanaseer, H.; Jaradat, G.; Jaradat, A.; Alsmadi, M.K.; Jawarneh, S.; Almurayh, A.S.; Alqurni, J.; Alfagham, H. Multilayer perceptron neural network with arithmetic optimization algorithm-based feature selection for cardiovascular disease prediction. Mach. Learn. Knowl. Extr. 2024, 6, 987–1008.

- Luo, N.; Xu, D.; Xing, B.; Yang, X.; Sun, C. Principles and applications of convolutional neural network for spectral analysis in food quality evaluation: A review. J. Food Compos. Anal. 2024, 128, 105996.

- Zhao, B.; Cao, X.; Zhang, W.; Liu, X.; Miao, Q.; Li, Y. CompNET: Boosting image recognition and writer identification via complementary neural network post-processing. Pattern Recognit. 2025, 157, 110880.

- Park, J.H. Recent Advances in Control Problems of Dynamical Systems and Networks; Springer-Nature: Cham, Switzerland, 2021.

- Lai, Q.; Yang, L.; Hu, G.; Guan, Z.-H.; Iu, H.H.-C. Constructing multiscroll memristive neural network with local activity memristor and application in image encryption. IEEE Trans. Cybern. 2024, 54, 4039–4048.

- Liu, H.; Cheng, J.; Cao, J.; Katib, I. Preassigned-time synchronization for complex-valued memristive neural networks with reaction–diffusion terms and Markov parameters. Neural Netw. 2024, 169, 520–531.

- Zhang, Y.; Zhou, L. State estimation for proportional delayed complex-valued memristive neural networks. Inf. Sci. 2024, 680, 121150.

- Chang, Q.; Park, J.H.; Yang, Y. The optimization of control parameters: Finite-time bipartite synchronization of memristive neural networks with multiple time delays via saturation function. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 7861–7872.

- Aguirre, F.; Sebastian, A.; Le Gallo, M.; Song, W.; Wang, T.; Yang, J.J.; Lu, W.; Chang, M.-F.; Ielmini, D.; Yang, Y.; et al. Hardware implementation of memristor-based artificial neural networks. Nat. Commun. 2024, 15, 1974.

- Zhang, G.; Wen, S. New approximate results of fixed-time stabilization for delayed inertial memristive neural networks. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 3428–3432.

- Deng, Q.; Wang, C.; Lin, H. Memristive Hopfield neural network dynamics with heterogeneous activation functions and its application. Chaos Solitons Fractals 2024, 178, 114387.

- Li, Y.; Chen, Y.; Podlubny, I. Mittag–Leffler stability of fractional order nonlinear dynamic systems. Automatica 2009, 45, 1965–1969.

- Panda, S.K.; Nagy, A.; Vijayakumar, V.; Hazarika, B. Stability analysis for complex-valued neural networks with fractional order. Chaos Solitons Fractals 2023, 175, 114045.

- Feng, L.; Yu, J.; Hu, C.; Yang, C.; Jiang, H. Nonseparation method-based finite/fixed-time synchronization of fully complex-valued discontinuous neural networks. IEEE Trans. Cybern. 2020, 51, 3212–3223.

- Chang, Q.; Park, J.H.; Yang, Y.; Wang, F. Finite-time multiparty synchronization of T–S fuzzy coupled memristive neural networks with optimal event-triggered control. IEEE Trans. Fuzzy Syst. 2022, 31, 2545–2555.

- Sorrentino, F.; Pecora, L.M.; Hagerstrom, A.M.; Murphy, T.E.; Roy, R. Complete characterization of the stability of cluster synchronization in complex dynamical networks. Sci. Adv. 2016, 2, e1501737.

- Rossa, F.D.; Pecora, L.; Blaha, K.; Shirin, A.; Klickstein, I.; Sorrentino, F. Symmetries and cluster synchronization in multilayer networks. Nat. Commun. 2020, 11, 3179.

- Zhou, L.; Tan, F.; Yu, F.; Liu, W. Cluster synchronization of two-layer nonlinearly coupled multiplex networks with multi-links and time-delays. Neurocomputing 2019, 359, 264–275.

- Tang, Z.; Park, J.H.; Shen, H. Finite-time cluster synchronization of Lur'e networks: A nonsmooth approach. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1213–1224.

- Zhang, Z.; Liu, X.; Zhou, D.; Lin, C.; Chen, J.; Wang, H. Finite-time stabilizability and instabilizability for complex-valued memristive neural networks with time delays. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 2371–2382.

- Chen, J.; Chen, B.; Zeng, Z. Global asymptotic stability and adaptive ultimate Mittag-Leffler synchronization for a fractional-order complex-valued memristive neural networks with delays. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 2519–2535.

- Li, X.; Wu, H.; Cao, J. Synchronization in finite time for variable-order fractional complex dynamic networks with multi-weights and discontinuous nodes based on sliding mode control strategy. Neural Netw. 2021, 139, 335–347.

- Duan, L.; Shi, M.; Huang, C.; Fang, X. Synchronization in finite-/fixed-time of delayed diffusive complex-valued neural networks with discontinuous activations. Chaos Solitons Fractals 2021, 142, 110386.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Tan, Y.; Yuan, Y.; Xie, X.; Tian, E.; Liu, J. Observer-based event-triggered control for interval type-2 fuzzy networked system with network attacks. IEEE Trans. Fuzzy Syst. 2023, 31, 2788–2798.

- Liu, B.; Liu, T.; Xiao, P. Dynamic event-triggered intermittent control for stabilization of delayed dynamical systems. Automatica 2023, 149, 110847.

- Li, X.; Liu, W.; Gorbachev, S.; Cao, J. Event-triggered impulsive control for input-to-state stabilization of nonlinear time-delay systems. IEEE Trans. Cybern. 2023, 54, 2536–2544.

- Liu, Z.; Gao, H.; Yu, X.; Lin, W.; Qiu, J.; Rodríguez-Andina, J.J.; Qu, D. B-spline wavelet neural-network-based adaptive control for linear-motor-driven systems via a novel gradient descent algorithm. IEEE Trans. Ind. Electron. 2023, 71, 1896–1905.

- Wang, J.; Ru, T.; Shen, H.; Cao, J.; Park, J.H. Finite-time L2−L∞ synchronization for semi-Markov jump inertial neural networks using sampled data. IEEE Trans. Netw. Sci. Eng. 2021, 8, 163–173.

- Park, J.H.; Lee, T.H.; Liu, Y.; Chen, J. Dynamic Systems with Time Delays: Stability and Control; Springer-Nature: Singapore, 2019.

- Yang, S.; Hu, C.; Yu, J.; Jiang, H. Finite-time cluster synchronization in complex-variable networks with fractional-order and nonlinear coupling. Neural Netw. 2021, 135, 212–224.

- Liu, P.; Zeng, Z.; Wang, J. Asymptotic and finite-time cluster synchronization of coupled fractional-order neural networks with time delay. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4956–4967.

- Wang, L.; Song, Q.; Liu, Y.; Zhao, Z.; Alsaadi, F.E. Finite-time stability analysis of fractional-order complex-valued memristor-based neural networks with both leakage and time-varying delays. Neurocomputing 2017, 245, 86–101.

- Yu, T.; Cao, J.; Rutkowski, L.; Luo, Y.-P. Finite-time synchronization of complex-valued memristive-based neural networks via hybrid control. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 3938–3947.

- Zhu, X.; Tang, Z.; Feng, J.; Park, J.H. Aperiodically intermittent event-triggered pinning control on cluster synchronization of directed complex networks. ISA Trans. 2023, 138, 281–290.

- Zhang, J.; Ma, Z.; Li, X.; Qiu, J. Cluster synchronization in delayed networks with adaptive coupling strength via pinning control. Front. Phys. 2020, 8, 235.

- Hou, T.; Yu, J.; Hu, C.; Jiang, H. Finite-time synchronization of fractional-order complex-variable dynamic networks. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 4297–4307.

- Podlubny, I. Fractional Differential Equations; Academic Press: London, UK, 1999.

| Method | Control parameters | | | J |
|---|---|---|---|---|
| Normal | 9.87 | 10.17 | 10.47 | 691.2507 |
| | 9.87 | 9.87 | 9.87 | |
| | 10.47 | 9.87 | 10.17 | |
| | 4.84 | 4.6 | | |
| Optimization | 10.6886 | 8.6932 | | 573.7228 |
| | 8.2978 | 9.6064 | 8.4519 | |
| | 0.0408 | 10.643 | 10.355 | |
| | 5.6879 | 3.7145 | | |

| Method | | | | | J |
|---|---|---|---|---|---|
| [31] | 9 | 8 | 5 | 5 | 7.6398 |
| Optimization | 13.8477 | 13.8515 | 2.5530 | 2.5525 | 7.5698 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chang, Q.; Wang, R.; Yang, Y. Finite-Time Cluster Synchronization of Fractional-Order Complex-Valued Neural Networks Based on Memristor with Optimized Control Parameters. Fractal Fract. 2025, 9, 39. https://doi.org/10.3390/fractalfract9010039