In this paper, we evaluate the performance of the efficient MLE and of deep-learning models at two resolutions: 0.0909 (1/11) and 0.0476 (1/21). For the efficient MLE, we estimate two kinds of data: fractional Brownian surfaces (FBSs), which are the true numerical data, and fractional Brownian images (FBIs), which are obtained by saving FBSs as gray-level images and therefore lose some finer details, especially for larger image sizes. We also calculate the corresponding MSEs for comparison. For the deep-learning models (our proposed 25-layer model, GoogleNet, Xception, ResNet18, MobileNetV2, and SqueezeNet), we first classify the FBIs, then convert the predicted classes into Hurst exponents using the mapping from classes to Hurst exponents, and finally compute the corresponding MSEs.
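Saving an FBS as a gray-level image rounds every value to one of 256 levels. The sketch below illustrates that loss under an assumption: min-max scaling to 8-bit gray levels (the exact conversion used for the FBIs is not stated here, so `surface_to_gray` and `gray_to_surface` are hypothetical helpers). It uses a cumulative-sum surface as a stand-in rather than a true FBS.

```python
import numpy as np


def surface_to_gray(surface: np.ndarray) -> np.ndarray:
    """Min-max scale a real-valued surface to [0, 255] and round to uint8 (assumed conversion)."""
    lo, hi = surface.min(), surface.max()
    scaled = (surface - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)


def gray_to_surface(gray: np.ndarray, lo: float, hi: float) -> np.ndarray:
    """Map gray levels back to the original value range of the surface."""
    return gray.astype(np.float64) / 255.0 * (hi - lo) + lo


rng = np.random.default_rng(1)
# Stand-in surface: 2D cumulative sum of Gaussian noise (NOT an exact FBS).
surface = rng.standard_normal((32, 32)).cumsum(axis=0).cumsum(axis=1)
gray = surface_to_gray(surface)
restored = gray_to_surface(gray, surface.min(), surface.max())

step = (surface.max() - surface.min()) / 255.0   # value range covered by one gray level
max_err = np.abs(restored - surface).max()       # worst-case round-trip error
print(f"gray-level step {step:.4f}, max round-trip error {max_err:.4f}")
```

Under this assumption, the round-trip error never exceeds half a gray-level step, so any variation finer than that step cannot be recovered from the image.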

3.1. Experimental Settings

To investigate the effectiveness of these estimators, two class settings are considered: 11 Hurst exponents and 21 Hurst exponents. For 11 classes, the Hurst exponents are H = 1/22, 3/22, …, 21/22; for 21 classes, they are H = 1/42, 3/42, …, 41/42. Hence, the resolution (the spacing between neighboring classes) is 0.0909 (1/11) for 11 classes and 0.0476 (1/21) for 21 classes.
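The class values above follow H_k = (2k − 1)/(2n) for k = 1, …, n, i.e., the midpoints of n equal bins on [0, 1], so neighboring classes are 1/n apart. A short sketch with exact fractions (the helper name `hurst_classes` is illustrative) reproduces the values and resolutions:

```python
from fractions import Fraction


def hurst_classes(n_classes: int) -> list[Fraction]:
    """Class-centre Hurst exponents H_k = (2k - 1) / (2n), k = 1..n."""
    return [Fraction(2 * k - 1, 2 * n_classes) for k in range(1, n_classes + 1)]


for n in (11, 21):
    hs = hurst_classes(n)
    spacing = hs[1] - hs[0]  # resolution between neighboring classes
    print(n, hs[0], hs[-1], spacing, round(float(spacing), 4))
# 11 1/22 21/22 1/11 0.0909
# 21 1/42 41/42 1/21 0.0476
```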

Similar to Hoefer et al. [28], we generated 1000 realizations (observations) of 2D FBM for each Hurst exponent or class, using seeds 1–1000; we call this dataset Set 1. Each realization was generated in five sizes: 8 × 8 × 1, 16 × 16 × 1, 32 × 32 × 1, 64 × 64 × 1, and 128 × 128 × 1, and was saved both as an image (FBI) and as numerical data (FBS, used by the efficient MLE) for comparison. In addition, we generated another 1000 realizations (seeds 1001–2000, called Set 2) as a comparison set. Deep-learning models trained on Set 1 never see either the realizations or the images of Set 2. Under five-fold cross-validation on Set 1, the models do not see the validation images themselves; however, because images of different Hurst exponents share the same seeds, the underlying realizations of those images are partially seen during training.

Each 2D FBM was generated by the following procedure. First, we calculated the covariance matrix according to Equation (4). Second, we decomposed the covariance matrix by Cholesky factorization to obtain its lower triangular factor. Third, we produced a realization of standard white Gaussian noise. Finally, we multiplied the lower triangular factor by the white noise vector to obtain a realization of 2D FBM. Hence, for each size with 1000 observations per class, we produced 11,000 images and surfaces in total for 11 Hurst exponents and 21,000 for 21 Hurst exponents. It is worth repeating that FBIs are close to FBSs but lose some information, in particular finer details.
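The four generation steps can be sketched in Python/NumPy. Equation (4) is not reproduced in this section, so the sketch assumes the standard isotropic covariance of 2D fractional Brownian motion, Cov(B_H(u), B_H(v)) = ½(‖u‖^{2H} + ‖v‖^{2H} − ‖u − v‖^{2H}), evaluated on grid points (i, j) with i, j = 1, …, N (the origin is excluded because its variance is zero). The function name `fbm2d_cholesky` is hypothetical, and the authors' MATLAB implementation may differ in scaling and grid placement.

```python
import numpy as np


def fbm2d_cholesky(size: int, hurst: float, seed: int) -> np.ndarray:
    """Return one size x size realization of 2D FBM via Cholesky factorization.

    Assumed covariance (isotropic 2D FBM on grid points (i, j), i, j = 1..size):
        C(u, v) = 0.5 * (|u|^{2H} + |v|^{2H} - |u - v|^{2H})
    """
    idx = np.arange(1, size + 1, dtype=float)
    xx, yy = np.meshgrid(idx, idx, indexing="ij")
    pts = np.column_stack([xx.ravel(), yy.ravel()])          # (size*size, 2) grid points
    norms = np.linalg.norm(pts, axis=1) ** (2 * hurst)         # |u|^{2H}
    dists = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=2) ** (2 * hurst)
    cov = 0.5 * (norms[:, None] + norms[None, :] - dists)      # step 1: covariance matrix
    lower = np.linalg.cholesky(cov)                            # step 2: cov = L @ L.T
    noise = np.random.default_rng(seed).standard_normal(size * size)  # step 3: white noise
    return (lower @ noise).reshape(size, size)                 # step 4: correlated field


# Same seed, different H: identical white noise, hence a shared hidden realization.
low = fbm2d_cholesky(8, 1 / 22, seed=1)
high = fbm2d_cholesky(8, 21 / 22, seed=1)
print(low.shape, high.shape)  # (8, 8) (8, 8)
```

Reusing one seed across Hurst exponents is what lets several FBIs share a single hidden realization. The sketch is meant for small sizes: at 128 × 128 the covariance matrix is 16,384 × 16,384 (2 GiB in float64), and the pairwise-difference array above would be twice that.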

For a fairer performance comparison between the MLE and the deep-learning models, we also generated FBIs of 32 equally spaced classes (Hurst exponents) from Set 1 to train comparison models; that is, FBIs of 11 classes from Set 2 were evaluated on three of the six models after these had been trained on 32 classes from Set 1.

This approach serves two purposes. Any practical estimator incurs some error, so the first purpose is to avoid artificial zero errors. If the MSEs are obtained indirectly by mapping the predicted classes to Hurst exponents, every correctly classified observation contributes zero error, which is unfair when comparing with the MLE.

When FBIs of 11 classes are evaluated on models trained on 32 classes, no class can yield zero error. For example, the first of the 11 Hurst exponents is 1/22; if the trained models are sufficiently good, these images will likely be classified into one of the first few of the 32 classes: 1/64 (squared error 8.8980 × 10⁻⁴), 3/64 (squared error 2.0177 × 10⁻⁶), or 5/64 (squared error 0.0011). The best estimate is the second class, whose squared error is 2.0177 × 10⁻⁶, not zero.
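The squared errors above can be checked with exact fractions. The sketch below (the helper name `best_case_error` is illustrative) finds the nearest of the 32 class values (2k − 1)/64 for each of the 11 class values (2k − 1)/22. Since 32(2k − 1) is even and 11(2m − 1) is odd, the two grids never coincide, so no best-case error is zero.

```python
from fractions import Fraction

h11 = [Fraction(2 * k - 1, 22) for k in range(1, 12)]  # 1/22, 3/22, ..., 21/22
h32 = [Fraction(2 * k - 1, 64) for k in range(1, 33)]  # 1/64, 3/64, ..., 63/64


def best_case_error(h_true: Fraction) -> tuple[Fraction, Fraction]:
    """Nearest 32-class Hurst exponent and its squared error."""
    nearest = min(h32, key=lambda c: abs(c - h_true))
    return nearest, (nearest - h_true) ** 2


for h in h11[:3]:
    nearest, sq = best_case_error(h)
    print(h, "->", nearest, f"{float(sq):.4e}")
# first line printed: 1/22 -> 3/64 2.0177e-06
```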

The second purpose is to determine whether deep-learning models can learn the hidden realizations through five-fold cross-validation. Under five-fold cross-validation, the models do not see the validation (test) images, so the procedure is, in principle, fair. However, during training, the models have already partially seen the hidden realizations of those images through images of other Hurst exponents generated with the same seeds.
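The gap between not seeing an image and not seeing its realization can be quantified with a small simulation. The sketch assumes images are assigned to folds uniformly at random without regard to seeds (the exact MATLAB partitioning is not reproduced), and contrasts this with a seed-grouped split, a common remedy that is not the procedure used here. `seen_seed_fraction` is a hypothetical helper.

```python
import numpy as np


def seen_seed_fraction(n_classes=11, n_seeds=1000, n_folds=5,
                       group_by_seed=False, rng_seed=0):
    """Fraction of validation images whose noise seed also occurs in the training folds."""
    rng = np.random.default_rng(rng_seed)
    seeds = np.tile(np.arange(n_seeds), n_classes)      # seed of each image (one per class)
    if group_by_seed:
        folds = seeds % n_folds                          # all images of a seed share one fold
    else:
        folds = rng.permutation(seeds.size) % n_folds    # balanced random split of images
    fractions = []
    for f in range(n_folds):
        train_seeds = np.unique(seeds[folds != f])
        val_seeds = seeds[folds == f]
        fractions.append(np.isin(val_seeds, train_seeds).mean())
    return float(np.mean(fractions))


print(seen_seed_fraction())                    # close to 1.0
print(seen_seed_fraction(group_by_seed=True))  # 0.0
```

With 11 images per seed, a validation image's seed is absent from training only if all ten sibling images land in the same validation fold, which happens with probability of roughly (1/5)^10. Grouping by seed removes this overlap, which is also what the between-set evaluation on Set 2 achieves.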

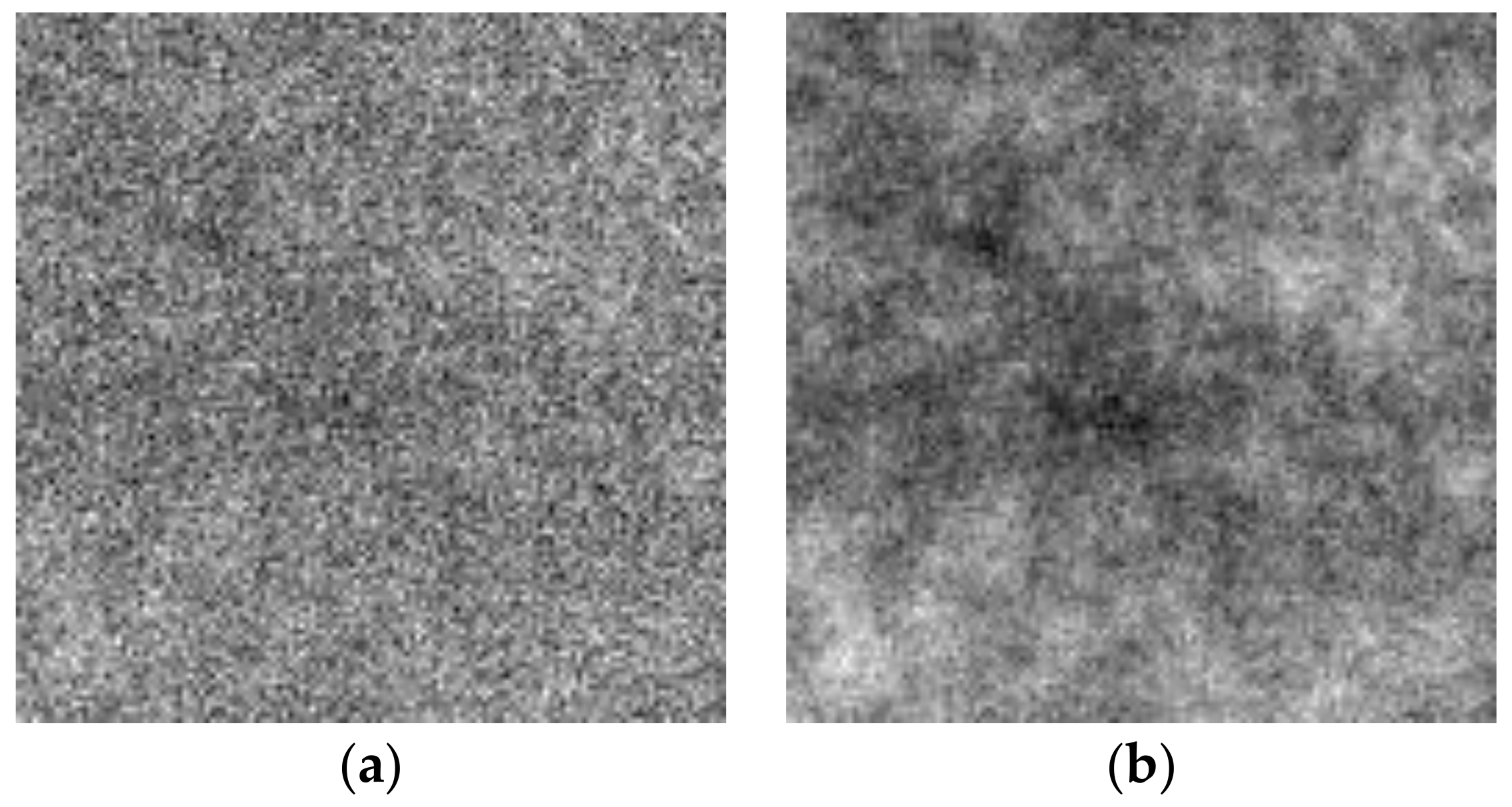

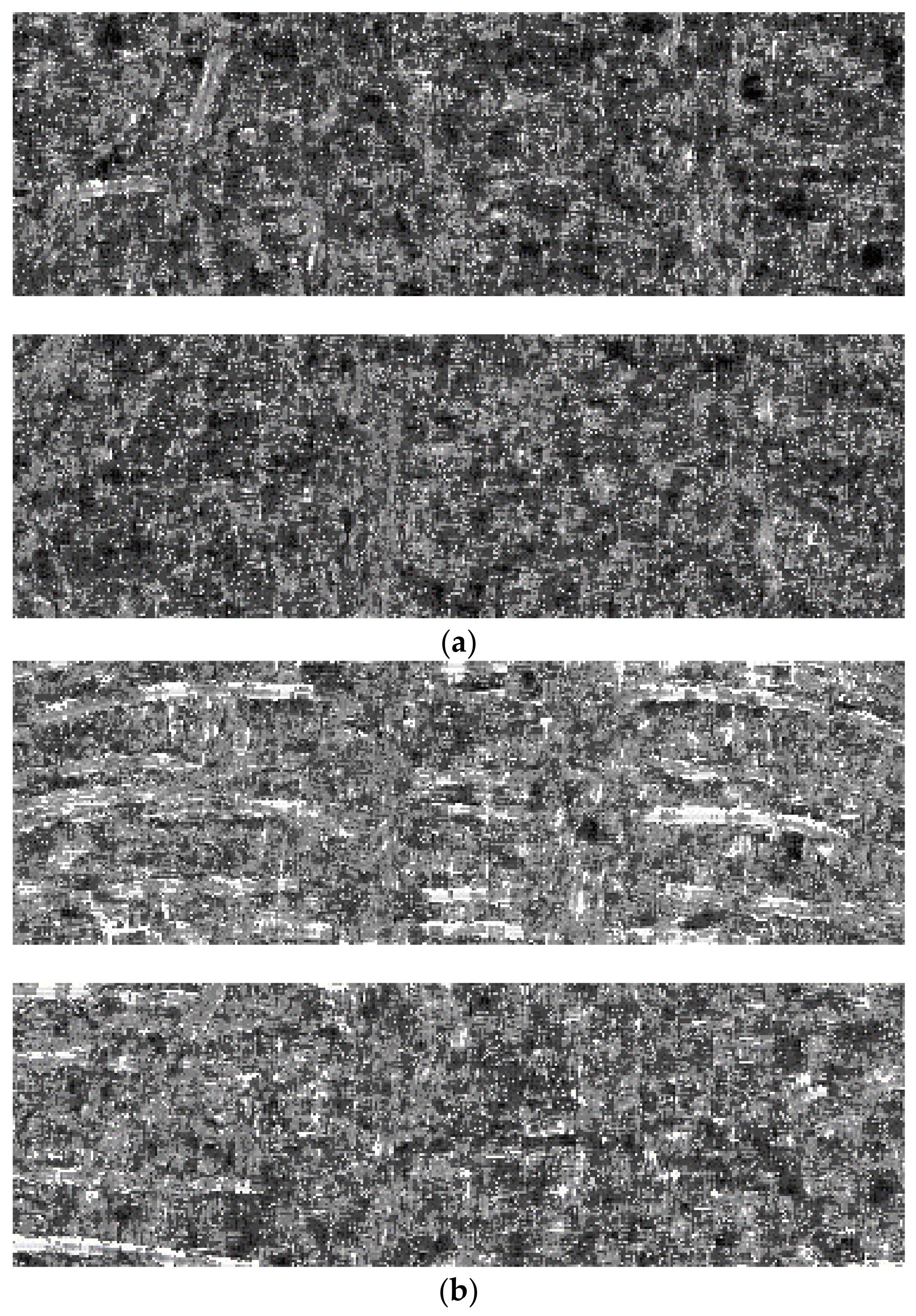

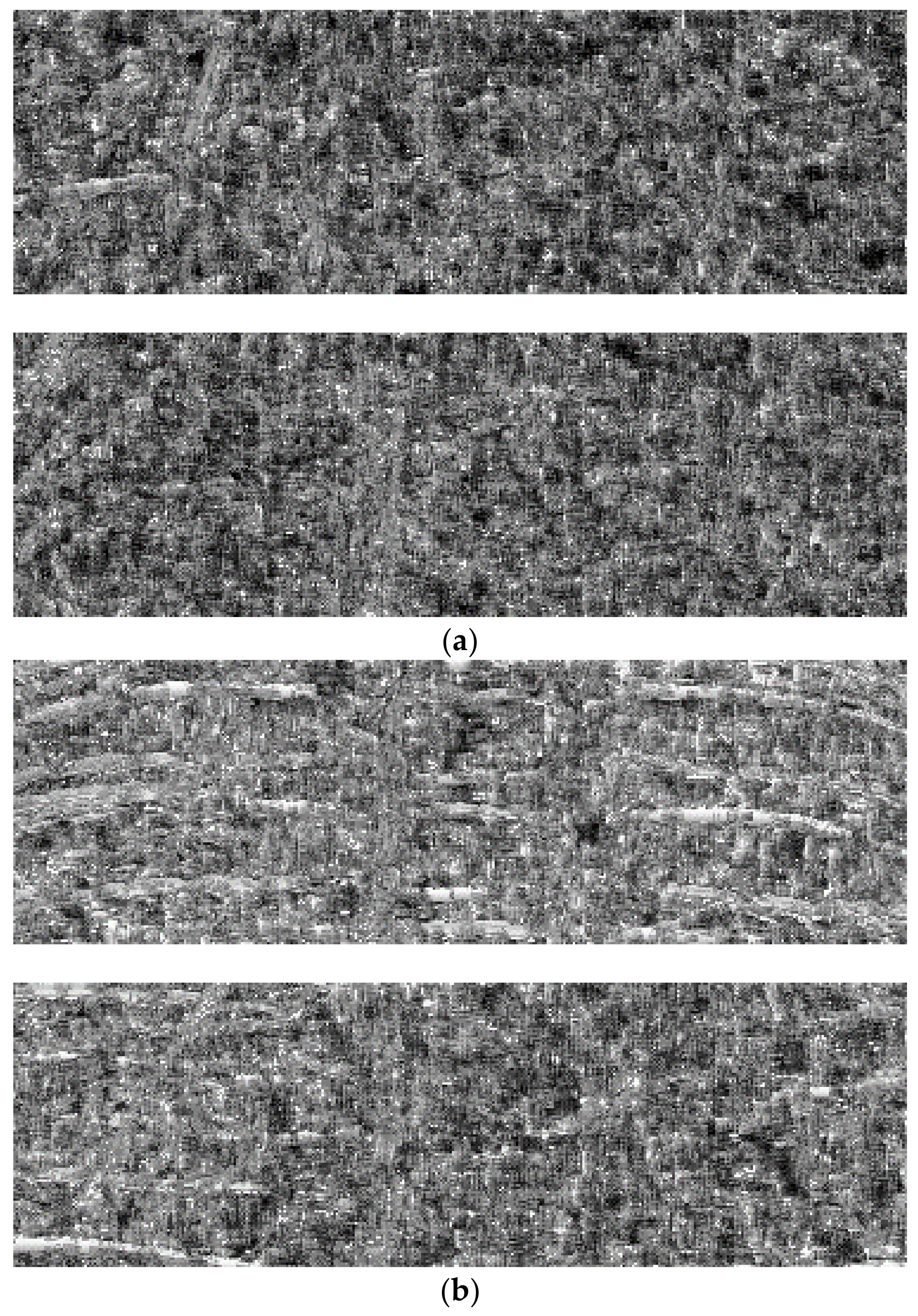

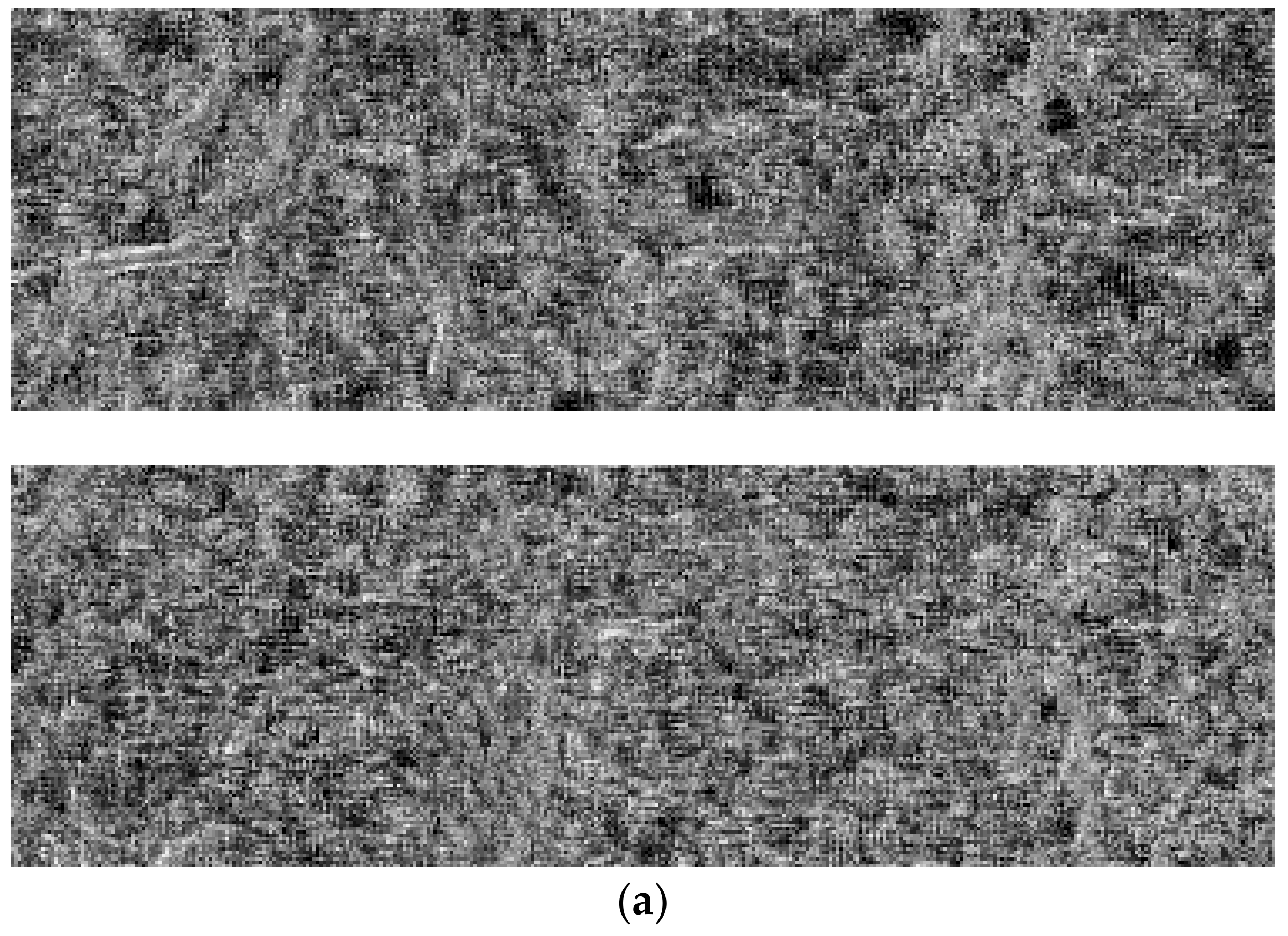

To illustrate the appearance of FBIs with different Hurst exponents, Figure 1 shows two FBIs with H = 1/64 and H = 9/64 of size 128 × 128 × 1;

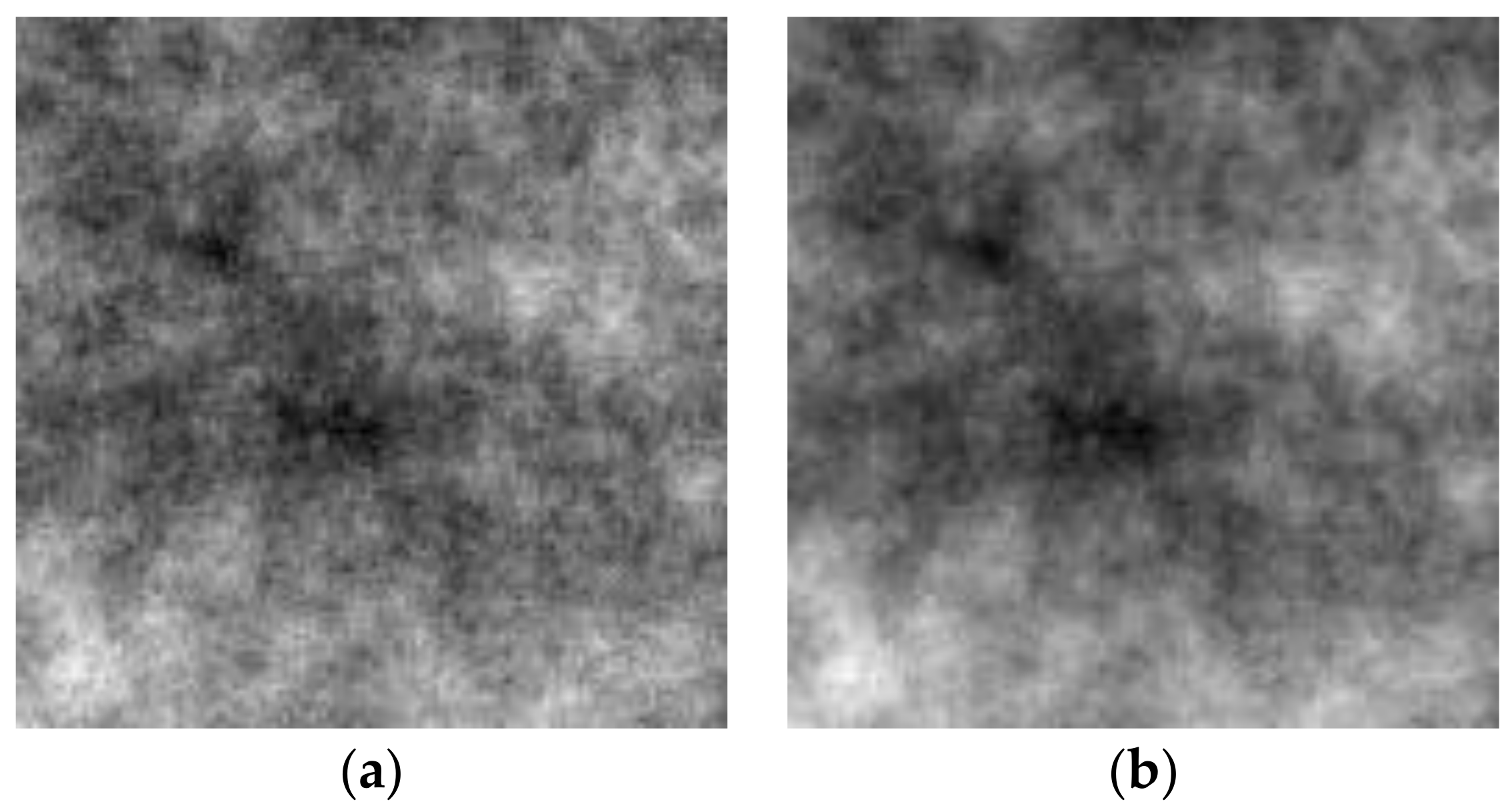

Figure 2 shows two FBIs with H = 17/64 and H = 25/64 of size 128 × 128 × 1;

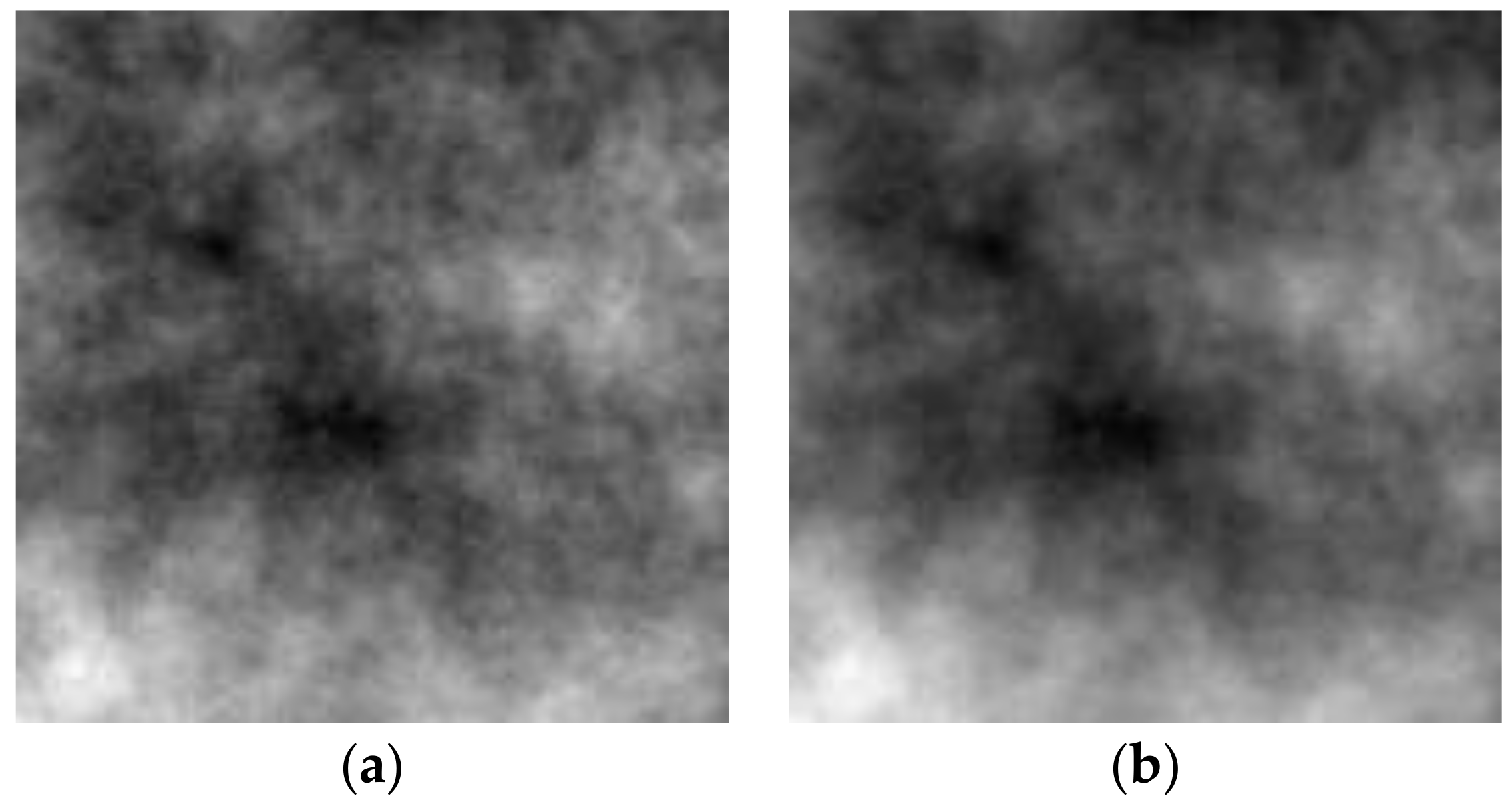

Figure 3 shows two FBIs with H = 33/64 and H = 41/64 of size 128 × 128 × 1;

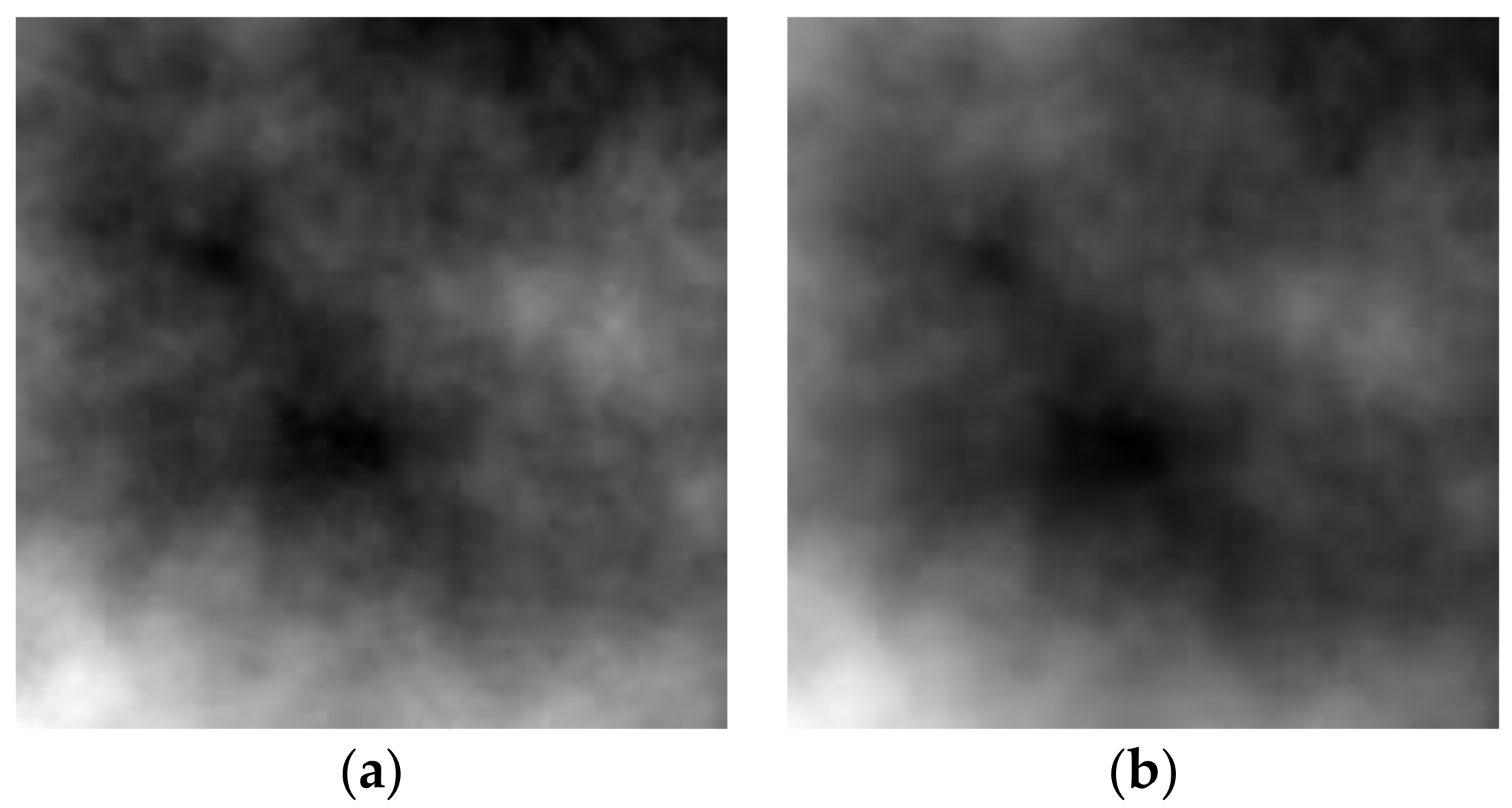

and Figure 4 shows two FBIs with H = 49/64 and H = 57/64 of size 128 × 128 × 1. All eight FBIs were generated from the same realization (seed).

In Figures 1–4, the images of two neighboring Hurst exponents are clearly difficult to discriminate with the naked eye, especially for higher Hurst exponents.

For a fair comparison, all six deep-learning models were run in the same operating environment: (1) a computer with an Intel® Xeon(R) W-2235 CPU and an NVIDIA RTX A4000 GPU; (2) MATLAB R2022a for programming the models; and (3) three solvers (optimizers) [35,41] for training the models, namely stochastic gradient descent with momentum (sgdm), adaptive moment estimation (adam), and root mean square propagation (rmsprop), with an initial learning rate of 0.001, a mini-batch size of 128, a piecewise learning rate schedule with a drop period of 20 epochs and a drop factor of 0.1, shuffling at every epoch, 30 epochs, and a validation frequency of 30 iterations. The efficient MLE was run on the same hardware and software as in points (1) and (2).
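The training options above translate into a simple schedule. The sketch below, in Python rather than MATLAB, computes the learning rate per epoch and the iteration counts for one cross-validation fold with 11 classes. It assumes that an incomplete final mini-batch is discarded each epoch, that the drop period counts epochs, and that the validation frequency counts iterations. In MATLAB, these settings correspond to `trainingOptions` parameters such as `InitialLearnRate`, `LearnRateSchedule`, `LearnRateDropPeriod`, `LearnRateDropFactor`, and `ValidationFrequency`.

```python
INITIAL_LR = 1e-3     # initial learning rate
DROP_PERIOD = 20      # epochs between learning rate drops
DROP_FACTOR = 0.1     # multiplicative drop factor
MAX_EPOCHS = 30
MINI_BATCH = 128
VALIDATION_FREQ = 30  # iterations between validation checks


def learning_rate(epoch: int) -> float:
    """Piecewise-constant learning rate for a 1-based epoch index."""
    return INITIAL_LR * DROP_FACTOR ** ((epoch - 1) // DROP_PERIOD)


def iterations_per_epoch(n_train: int) -> int:
    """Assumes the incomplete final mini-batch of each epoch is discarded."""
    return n_train // MINI_BATCH


n_train = 11_000 * 4 // 5  # 11 classes x 1000 images, 4 of 5 folds used for training
iters = iterations_per_epoch(n_train)
total = iters * MAX_EPOCHS
print(n_train, iters, total, total // VALIDATION_FREQ)  # 8800 68 2040 68
print([f"{learning_rate(e):.0e}" for e in (1, 20, 21, 30)])  # ['1e-03', '1e-03', '1e-04', '1e-04']
```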

3.3. Results of Deep-Learning Models

The MLE is the best estimator for 2D FBM: it has the lowest MSE and is unbiased. The efficient MLE for 2D FBM is the fastest among the MLEs. Nevertheless, its computational cost is still extremely high, especially for larger image sizes. As hardware for deep learning becomes faster and deep-learning models become more advanced and reliable, it is natural to ask how these models can help reduce this computational cost.

In a previous pilot study [26], using only size 32 × 32 × 1, three deep-learning models (one simple 29-layer model and two pre-trained models, AlexNet and GoogleNet), and the sgdm solver, we showed experimentally that deep-learning models are indeed feasible for this task.

In this paper, we design a 25-layer deep-learning model, which is simpler than our previously designed models, and choose five pre-trained deep-learning models for a more comprehensive MSE comparison between the efficient MLE and deep-learning models, covering five image sizes and three solvers (sgdm, adam, and rmsprop). We also want to know whether deep-learning models can learn the hidden realizations: under five-fold cross-validation, the models do not see the validation observations (from different Hurst exponents), but they may see parts of the underlying realizations, because white noise generated with the same seed is seen during training.

First, we develop a simple network model of only 25 layers, consisting of four groups. The first group has one input layer; the second group is composed of four layers (a convolutional layer, a batch normalization layer, a ReLU layer, and a maximum pooling layer); the third group is composed of three layers (the same as the second group without the maximum pooling layer); and the fourth group is composed of three layers (a fully connected layer, a softmax layer, and a classification layer).

Based on the special structures of FBIs, we designed a simple model with regularly configured filters of increasing size. The input layer accepts five image sizes (8 × 8 × 1, 16 × 16 × 1, 32 × 32 × 1, 64 × 64 × 1, and 128 × 128 × 1). From the first to the sixth convolutional layer, each layer has 128 filters, with filter sizes of 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, and 13 × 13, respectively. In future work, we will try other combinations of filter numbers and sizes, add other layers such as dropout layers, and fine-tune the hyperparameters.

All maximum pooling layers have a pool size of 2 × 2 and a stride of 2. Depending on the number of classes, the output size is 11 or 21. Table 2 shows the detailed architecture of our proposed model.
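A layer-count check for this design: if a of the six convolutional blocks carry a max-pooling layer (four layers each) and the remaining 6 − a do not (three layers each), then 1 + 4a + 3(6 − a) + 3 = 25 gives a = 3. The sketch below assumes, for illustration only, that the three pooled blocks come first and that the convolutions use "same" padding. Table 2 remains the authoritative description, and `build_layers` is a hypothetical helper.

```python
KERNELS = [3, 5, 7, 9, 11, 13]  # filter sizes of the six convolutional layers
N_FILTERS = 128                 # filters per convolutional layer
N_POOLED = 3                    # derived above: blocks that end in max pooling


def build_layers(n_classes: int) -> list[str]:
    """Hypothetical layer list: pooled blocks first, then unpooled blocks."""
    layers = ["input"]
    for i, k in enumerate(KERNELS):
        layers += [f"conv{k}x{k}x{N_FILTERS}", "batchnorm", "relu"]
        if i < N_POOLED:
            layers.append("maxpool2x2-stride2")
    layers += [f"fc{n_classes}", "softmax", "classification"]
    return layers


def final_spatial_size(input_size: int) -> int:
    """Spatial size after the pooling layers, assuming 'same' convolution padding."""
    size = input_size
    for _ in range(N_POOLED):
        size //= 2  # 2 x 2 pooling with stride 2 halves each dimension
    return size


print(len(build_layers(11)))  # 25
print({s: final_spatial_size(s) for s in (8, 16, 32, 64, 128)})
# {8: 1, 16: 2, 32: 4, 64: 8, 128: 16}
```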

Using our proposed 25-layer model, we ran five-fold cross-validation on FBIs of the five image sizes, with 1000 realizations (seeds 1–1000, Set 1) for each Hurst exponent. Table 3 and Table 4 show the classification rates of the five folds, together with their mean and standard deviation, for 11 classes and 21 classes, respectively; Table 5 and Table 6 provide the corresponding MSEs of the five folds with their mean and standard deviation. To compute the MSEs, we first convert the predicted classes to their corresponding Hurst exponents and then calculate the MSEs between the estimated and true Hurst exponents.
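The conversion from predicted classes to an MSE can be sketched as follows, assuming 1-based class labels k mapped to H_k = (2k − 1)/(2n). The helper names and the labels in the example are made up for illustration.

```python
import numpy as np


def class_to_hurst(labels, n_classes):
    """Map 1-based class labels k to Hurst exponents H_k = (2k - 1) / (2n)."""
    labels = np.asarray(labels)
    return (2 * labels - 1) / (2 * n_classes)


def accuracy_and_mse(true_labels, pred_labels, n_classes):
    """Classification rate and MSE between estimated and true Hurst exponents."""
    h_true = class_to_hurst(true_labels, n_classes)
    h_pred = class_to_hurst(pred_labels, n_classes)
    acc = np.mean(np.asarray(true_labels) == np.asarray(pred_labels))
    mse = np.mean((h_pred - h_true) ** 2)
    return float(acc), float(mse)


# Hypothetical labels: 4 of 5 correct, one off by a single class.
acc, mse = accuracy_and_mse([1, 2, 3, 4, 5], [1, 2, 3, 4, 6], n_classes=11)
print(acc, mse)  # 0.8 and (1/11)**2 / 5, about 1.65e-03
```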

Table 3 and Table 4 show that, for every solver, the best accuracy occurs at size 16 × 16 × 1; that is, the proposed model is best suited to size 16 × 16 × 1. The simple design is clearly less suited to larger image sizes, such as 64 × 64 × 1 and 128 × 128 × 1, especially when the resolution is finer (21 Hurst exponents). As with pre-trained models, no single model fits all datasets. Therefore, different layer architectures can be designed for other image sizes in terms of accuracy, MSE, or other metrics.

Since the Hurst exponent takes continuous values from 0 to 1, the classes are ordinal rather than nominal: neighboring classes are closer to each other than distant classes. For ordinal classes, the misclassifications of a good model should fall in or near the classes neighboring the correct class. The classification rate is therefore only one reference indicator, and the most practical metric is the MSE. Accordingly, the corresponding MSEs for 11 and 21 classes, obtained by mapping classes to Hurst exponents, are listed for comparison in Table 5 and Table 6.
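A toy illustration of why accuracy alone is insufficient for ordinal classes: two hypothetical sets of predictions with the same accuracy, differing only in whether the single error lands in a neighboring or a remote class.

```python
import numpy as np


def mse_from_labels(true_labels, pred_labels, n_classes):
    """MSE after mapping 1-based classes k to H_k = (2k - 1) / (2n)."""
    def to_h(k):
        return (2 * np.asarray(k) - 1) / (2 * n_classes)
    return float(np.mean((to_h(pred_labels) - to_h(true_labels)) ** 2))


true = np.repeat(np.arange(1, 12), 10)  # 10 images per class, 11 classes
near = true.copy()
remote = true.copy()
near[0] = 2     # one class-1 image mistaken for class 2 (neighbor)
remote[0] = 11  # the same image mistaken for class 11 (remote)

print(np.mean(near == true), np.mean(remote == true))  # identical accuracies
mse_near = mse_from_labels(true, near, 11)
mse_remote = mse_from_labels(true, remote, 11)
print(mse_remote / mse_near)  # about 100: same accuracy, very different MSE
```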

For a concise comparison, the results of our proposed model are further summarized. Table 7 shows the average accuracies for 11 and 21 classes over the three solvers (sgdm, adam, and rmsprop), and Table 8 shows the corresponding average MSEs (AMSEs, simply called MSEs).

Table 7 and Table 8 show that the lowest MSE corresponds to the highest accuracy. For ordinal classes, the relationship between accuracy and MSE is often used as an effective evaluation indicator for deep-learning models: the more consistent this relationship, the better designed the model. On the whole, the proposed model is well designed and performs well.

In addition, the best MSEs occur at 16 × 16 × 1, which indicates that our simple deep-learning model is better suited to smaller sizes. Other sizes require more complex designs or ready-made, pre-trained models.

Moreover, although the accuracies for 11 classes are all higher than those for 21 classes, the MSEs for 21 classes are all lower than those for 11 classes. For a well-designed model, this is reasonable, because misclassifications occur only in neighboring classes rather than in remote classes, and the spacing between neighboring classes is finer for 21 Hurst exponents than for 11 Hurst exponents. Accordingly, the MSEs decrease. It can reasonably be expected that the MSEs of reliable deep-learning models will decrease as the resolution increases (i.e., as the spacing between neighboring classes decreases), whereas for unqualified deep-learning models the MSEs may increase, because these models converge prematurely or fail to converge during training. These experiments will be implemented and discussed in future work.
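The argument can be made quantitative under an idealized assumption, which is not a measured property of any model here: suppose a fraction p_n of the observations is misclassified and every error lands in a neighboring class, whose spacing is Δ_n = 1/n. Then

```latex
\mathrm{MSE}_n = p_n \,\Delta_n^{2} = \frac{p_n}{n^{2}},
\qquad
\frac{\mathrm{MSE}_{21}}{\mathrm{MSE}_{11}}
  = \frac{p_{21}}{p_{11}} \left(\frac{11}{21}\right)^{2}
  \approx 0.274\,\frac{p_{21}}{p_{11}} .
```

Under this idealization, the 21-class MSE stays below the 11-class MSE unless p_21 exceeds (21/11)² ≈ 3.64 times p_11, so a lower accuracy can coexist with a lower MSE.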

In terms of MSE, our proposed simple 25-layer model performs much better than the MLE. This may have two causes: first, correctly classified observations contribute zero error; second, our model may learn the hidden realizations, because it sees part of the realizations (seeds) through five-fold cross-validation during training. Therefore, further evaluation is necessary for a fair comparison.

If the second cause holds, the experiments would show that even a simple deep-learning model is capable of learning the hidden realizations, let alone the more advanced pre-trained models.

After proposing this simple deep-learning model, we further experimented with three pre-trained models that could be run directly (without augmentation) on sizes from 8 × 8 × 1 to 128 × 128 × 1, and two pre-trained models that could be run directly on sizes from 32 × 32 × 1 to 128 × 128 × 1. The first group included Xception (71 layers deep, 170 layers in total), ResNet18 (18 layers deep, 71 layers in total), and MobileNetV2 (53 layers deep, 154 layers in total); the second group included GoogleNet (22 layers deep, 144 layers in total) and SqueezeNet (18 layers deep, 68 layers in total).

Since GoogleNet and SqueezeNet contain pooling layers that progressively reduce the spatial size, they are not suitable for sizes 8 × 8 × 1 and 16 × 16 × 1. Therefore, in Table 9, Table 10, Table 11 and Table 12, an x indicates that no experiment was performed for the corresponding cell. All five pre-trained models were run under five-fold cross-validation on the same FBIs: Xception, ResNet18, and MobileNetV2 on sizes 8 × 8 × 1, 16 × 16 × 1, 32 × 32 × 1, 64 × 64 × 1, and 128 × 128 × 1, and GoogleNet and SqueezeNet on sizes 32 × 32 × 1, 64 × 64 × 1, and 128 × 128 × 1. For a clear comparison, Table 9, Table 10, Table 11 and Table 12 contain all six deep-learning models, i.e., our proposed model plus the five pre-trained models.
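To see why repeated downsampling rules out the smallest inputs, consider a deliberately simplified stack of four unpadded 3 × 3 layers with stride 2 and floor rounding. This is an illustrative assumption, not the actual GoogleNet or SqueezeNet layer graphs, whose padding and rounding differ; the helpers `output_size` and `survives` are hypothetical.

```python
def output_size(size: int, kernel: int = 3, stride: int = 2) -> int:
    """Spatial size after an unpadded kernel x kernel layer (floor rounding)."""
    return (size - kernel) // stride + 1 if size >= kernel else 0


def survives(size: int, n_layers: int) -> bool:
    """True if the feature map is still at least 1 x 1 after n_layers such layers."""
    for _ in range(n_layers):
        size = output_size(size)
        if size < 1:
            return False
    return True


# Hypothetical stack of four stride-2 3 x 3 layers.
print({s: survives(s, 4) for s in (8, 16, 32, 64, 128)})
# {8: False, 16: False, 32: True, 64: True, 128: True}
```

In this simplified model, each layer roughly halves the map, so the smallest input that survives four such layers is 31 × 31, which is consistent with running the two pooling-heavy models only from 32 × 32 × 1 upward.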

All five pre-trained models were run with 11 classes and 21 classes. Their mean classification rates, together with those of our proposed model, are shown in Table 9 for 11 classes and in Table 10 for 21 classes. The corresponding MSEs are shown in Table 11 for 11 classes and in Table 12 for 21 classes. The detailed five-fold results of all five pre-trained models are given in Tables A1–A20 in Appendix A.

In the case of 11 classes, as mentioned previously, our simple 25-layer model is better suited to smaller sizes, such as 8 × 8 × 1 and 16 × 16 × 1, and to 32 × 32 × 1 only with the sgdm solver. MobileNetV2 (53 layers deep, 154 layers in total) exhibits a classification rate that increases with image size, and Xception (71 layers deep, 170 layers in total) shows a similar trend, except at sizes 8 × 8 × 1 (9.10%) and 16 × 16 × 1 (9.09%) with the sgdm solver, which are at chance level for 11 classes (1/11 ≈ 9.09%). This suggests that deeper models are beneficial for larger image sizes but detrimental for smaller ones. In particular, on our images, the sgdm solver is highly unsuitable for Xception.

ResNet18 (18 layers deep, 71 layers in total) also exhibits an increasing classification rate as the size increases when the solver is sgdm. When the solver adopted is adam, the best classification rate (99.01%) occurs at 64 × 64 × 1; when the solver adopted is rmsprop, the best classification rate (98.29%) actually occurs at 32 × 32 × 1.

GoogleNet (22 layers deep, 144 layers in total) and SqueezeNet (18 layers deep, 68 layers in total), which were run only on sizes 32 × 32 × 1, 64 × 64 × 1, and 128 × 128 × 1, also exhibit classification rates that increase with image size, and their corresponding MSEs reflect the same trend. Notably, SqueezeNet with the rmsprop solver at 32 × 32 × 1 gives an unstable result, with a mean of 49.75% and a standard deviation of 20.50%; its five-fold classification rates are 18.09%, 77.50%, 55.50%, 60.95%, and 36.73% (see Table A5 in Appendix A).

In the case of 21 classes, their performance and trends are similar to those of 11 classes. ResNet18 also exhibits an increasing classification rate as the size increases when the solver is sgdm. When adam and rmsprop are chosen as solvers, their best classification rates of 96.87% and 96.29% both occur at 64 × 64 × 1.

With the sgdm solver, GoogleNet exhibits a classification rate that increases with image size, reaching a best rate of 95.67%. However, the best classification rate for the adam solver (97.28%) occurs at 64 × 64 × 1, while that for the rmsprop solver (91.90%) occurs at 32 × 32 × 1.

In addition, SqueezeNet with the rmsprop solver at 32 × 32 × 1 again gives an unstable result, with a mean of 28.64% and a standard deviation of 23.20%; its five-fold classification rates are 4.76%, 57.14%, 4.76%, 21.64%, and 54.88%, two of which are at chance level for 21 classes (1/21 ≈ 4.76%) (see Table A10 in Appendix A).
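The reported means and standard deviations of the two unstable SqueezeNet cases can be reproduced from the listed five-fold rates. The check below indicates that they match the population standard deviation (dividing by 5); the sample version (dividing by 4) would instead give 22.92% for the 11-class case.

```python
import numpy as np

folds = {
    "SqueezeNet, rmsprop, 32x32, 11 classes (Table A5)": [18.09, 77.50, 55.50, 60.95, 36.73],
    "SqueezeNet, rmsprop, 32x32, 21 classes (Table A10)": [4.76, 57.14, 4.76, 21.64, 54.88],
}
for name, rates in folds.items():
    rates = np.array(rates)
    # ddof=0 gives the population standard deviation, which matches the reported values.
    print(f"{name}: mean {rates.mean():.2f}%, std {rates.std(ddof=0):.2f}%")
# ... 11 classes (Table A5): mean 49.75%, std 20.50%
# ... 21 classes (Table A10): mean 28.64%, std 23.20%
```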

Xception (71 layers deep, 170 layers in total) also exhibits an increasing trend as the image size increases, except at sizes 8 × 8 × 1 (4.77%) and 16 × 16 × 1 (4.75%) with the sgdm solver, which are again at about chance level (1/21 ≈ 4.76%). This again suggests that deeper models are beneficial for larger image sizes but detrimental for smaller ones, and that the sgdm solver is highly unsuitable for Xception on our images. By comparison, the performance of MobileNetV2 (53 layers deep, 154 layers in total) increases steadily with image size.

A closer look at Xception with the rmsprop solver at size 64 × 64 × 1 in Table 10 and Table 12 reveals that it has the highest classification rate (98.13%), yet its corresponding MSE, 1.63 × 10⁻³, is the largest among the six models and much higher than those of the other models. This indicates that Xception with the rmsprop solver at size 64 × 64 × 1 is not reliable, or even unqualified, for the ordinal classes in our image dataset.

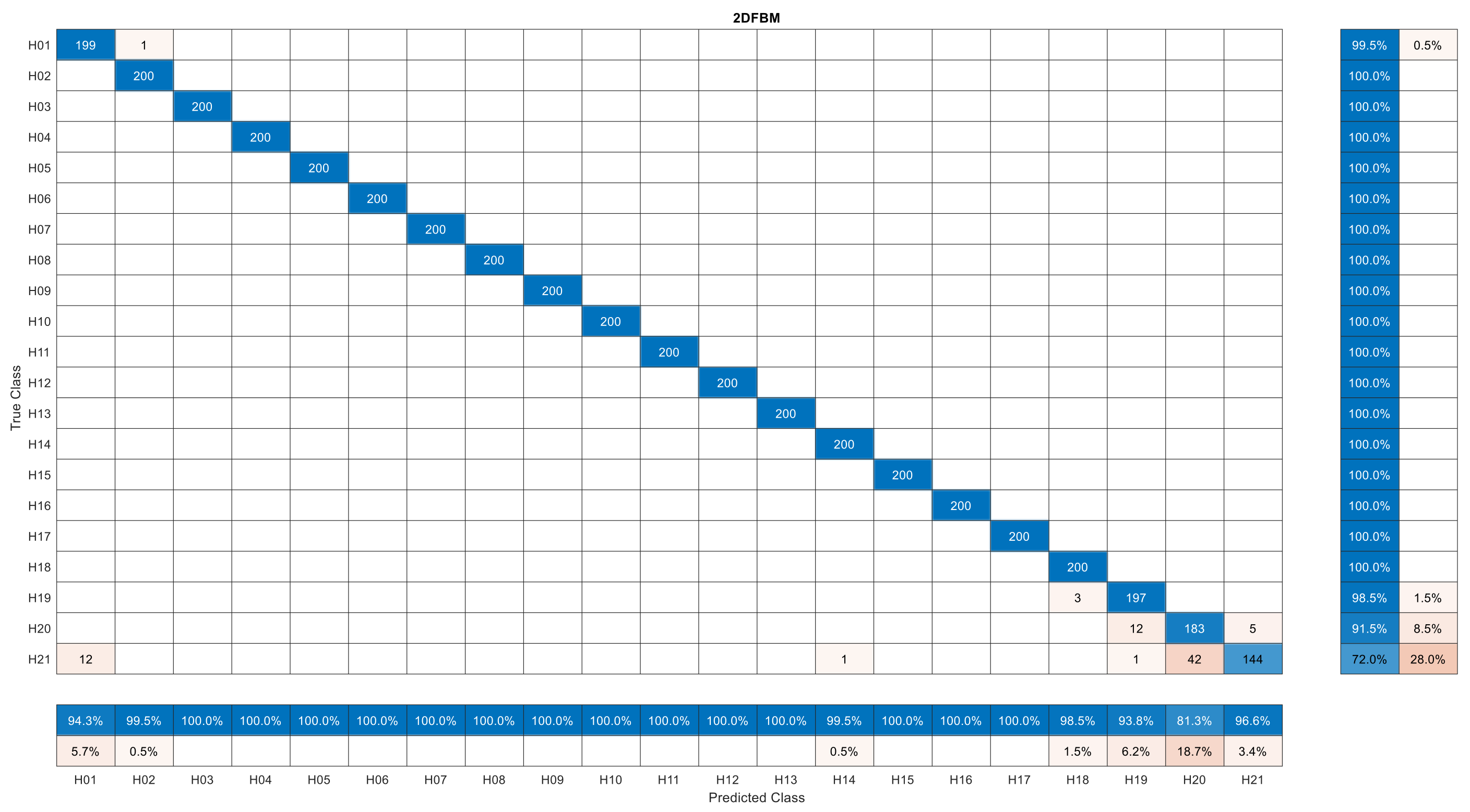

Figure 5 shows the corresponding confusion matrix from Fold 5. Blue cells show the numbers (diagonal) or percentages (vertical or horizontal) of correct classifications, and non-blue cells show those of incorrect classifications; the darker the color, the higher the value. The sharp increase in MSE is mainly caused by 12 class-21 images (H = 0.9762) being classified as class 1 (H = 0.0238). This is a severe mistake for our ordinal classes. It also indicates that a complex model under default settings might not be suitable for our image dataset. For further applications, such complex models should be modified by fine-tuning their hyperparameters to achieve higher generalization ability.
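The cost of such a remote error can be compared with that of a neighboring error using exact fractions. The sketch assumes 21 classes with H_k = (2k − 1)/42 and, for the fold-level figure, 4200 validation images per fold (21,000 images split into five folds). It estimates only the contribution of these 12 errors to Fold 5's MSE, not the five-fold mean reported in Table 12; `hurst21` is a hypothetical helper.

```python
from fractions import Fraction


def hurst21(k: int) -> Fraction:
    """Class-centre Hurst exponent for class k of 21 classes."""
    return Fraction(2 * k - 1, 42)


remote_sq = (hurst21(21) - hurst21(1)) ** 2      # class 21 predicted as class 1
neighbour_sq = (hurst21(21) - hurst21(20)) ** 2  # class 21 predicted as class 20
print(remote_sq / neighbour_sq)                  # 400

n_val = 21 * 1000 // 5                           # assumed validation images per fold
print(f"{float(12 * remote_sq / n_val):.2e}")    # 2.59e-03 added to that fold's MSE
```

One remote error therefore costs as much as 400 neighboring errors, which is why a handful of such misclassifications can dominate the MSE despite a high classification rate.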

As the Xception case shows, some solvers work better than others. To avoid potential sensitivity or stability problems with the solvers of deep-learning models, we consider the average accuracies and average MSEs (AMSEs, simply called MSEs) over the three solvers (sgdm, adam, and rmsprop). Table 13 and Table 14 summarize the average accuracies of the six models for 11 and 21 classes, respectively, and Table 15 and Table 16 summarize the corresponding average MSEs.

Comparing Table 13 with Table 15, we find that, for each image size, the model with the highest classification rate in Table 13 also has the smallest MSE in Table 15. Likewise, the highest classification rates in Table 14 correspond almost exactly to the smallest MSEs in Table 16, except at image size 32 × 32 × 1, where ResNet18 achieves the highest classification rate and GoogleNet the second highest, but GoogleNet achieves the smallest MSE, our proposed model the second smallest, and ResNet18 only the fifth smallest.

If a deep-learning model is stable on our image dataset, its MSE for more classes (21 classes) should be smaller than that for fewer classes (11 classes). However, Table 15 and Table 16 show five unstable cases: Xception at 64 × 64 × 1, ResNet18 at 32 × 32 × 1, and SqueezeNet at 32 × 32 × 1, 64 × 64 × 1, and 128 × 128 × 1. Stability is a very important indicator of which model to adopt.

Compared with Table 1, except for some unstable cases (Xception at 8 × 8 × 1 and 16 × 16 × 1, and SqueezeNet at 32 × 32 × 1), our five chosen pre-trained models almost always outperform the true and best estimator (the MLE) in terms of MSE. The results seem promising. However, computing MSEs by mapping classes to Hurst exponents is potentially unfair: correctly classified observations are counted as zero errors here, whereas in real-world applications every estimate contains some error. Accordingly, a further comparison is necessary.

To ascertain whether deep-learning models can learn the hidden realizations through different appearances (images with different Hurst exponents) generated from the same realizations, we evaluated Set 2 (seeds 1001–2000) on the models trained on Set 1 (seeds 1–1000) with 11 classes. Table 17 shows the resulting accuracies, and Table 18 shows the differences between Table 17 (between-set evaluation) and Table 13 (within-set evaluation). For example, the first elements of the first rows of Table 17 and Table 13 are 37.83% and 92.70%, respectively, so their difference is approximately −54.86%.

Table 18 shows that the accuracies of the between-set evaluation are lower than those of the within-set evaluation in almost all cases, the exception being our proposed model at size 128 × 128. Table 17 and Table 18 reveal that deep-learning models for smaller sizes learn more of the hidden realizations from the images, and hence obtain higher within-set accuracies even though they did not see the validation images in advance, only some of their realizations through five-fold cross-validation during training. Conversely, deep-learning models generalize better for larger sizes, because they can learn more detailed structures from the images even without having seen the realizations.

Since deep-learning models can learn hidden realizations, for a fair and reasonable comparison, we additionally trained three promising models (our proposed model, ResNet18, and MobileNetV2) on Set 1 (seeds 1–1000) with 32 classes, each with five folds, giving five trained models per deep-learning model, and then used Set 2 (seeds 1001–2000) as the test set. The trained models therefore never saw either the images or the hidden realizations of the test set (Set 2).

As mentioned before, the more classes a qualified model has, the lower its MSEs. Table 19 lists the average MSEs of the efficient MLE over 11,000 observations (except for size 128 × 128) and of the three deep-learning models over 165,000 (3 × 5 × 11,000) observations (three solvers, five folds, and 11,000 observations per trained model). Table 20 lists the average computational time per observation of the efficient MLE and of the three deep-learning models. Because the efficient MLE takes much longer for size 128 × 128 (6.47 × 10² s per observation, i.e., at least 82 days for all 11,000 observations), only the first 10 observations of each class were estimated at this size.

Table 19 shows that the MSEs of the deep-learning models are lower than those of the efficient MLE for FBIs in almost all cases, except for size 32 × 32. Comparing with Table 1, we also find that the MSEs of these three deep-learning models for size 8 × 8 are lower than those of the efficient MLE for FBSs (the true data). For size 16 × 16, our proposed model also yields a lower MSE than the efficient MLE for FBSs, with MobileNetV2 presenting a slightly lower value.

In addition, the MSE of the MLE for FBIs increases with size beyond 32 × 32, because FBIs lose finer details when FBSs are stored as images. Although the deep-learning models cannot outperform the MLE on FBSs of larger sizes, real-world data are generally images rather than two-dimensional numerical data. The MSE of our proposed simple model decreases almost monotonically as the size increases, except at size 128 × 128, because our model is relatively simple (a limitation that future modifications of the model structure may overcome). Nevertheless, it is still much better than the MLE for FBIs. For size 128 × 128, MobileNetV2 is the best choice in terms of MSE.

As mentioned before, our main purpose is to find an alternative to the Hurst exponent estimated by the efficient MLE, because the latter takes a long time for larger sizes. Computational time is therefore a key indicator in our study. Table 20 shows that the computational time of the efficient MLE increases with size, whereas that of the three models is approximately the same for all sizes. Consequently, the models are faster than the efficient MLE for all sizes except the smaller sizes 8 × 8 and 16 × 16. Our proposed model takes the least time among the three models.

In particular, the average computational time per observation of the efficient MLE for size 128 × 128 is 6.46 × 10² s, whereas that of our proposed model is only 4.14 × 10⁻³ s. The time ratio of the efficient MLE to our proposed model is approximately 156,248, which is extremely high. Even for size 64 × 64, this time ratio is approximately 3090, which is still very high.
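The timing figures can be cross-checked with simple arithmetic. The sketch uses the rounded per-observation times reported in this section for size 128 × 128 (6.47 × 10² s for the efficient MLE and 4.14 × 10⁻³ s for our model). Because of this rounding, the ratio comes out near, but not exactly at, the reported 156,248.

```python
mle_seconds = 6.47e2      # efficient MLE, 128 x 128, per observation (rounded, as reported)
model_seconds = 4.14e-3   # proposed model, 128 x 128, per observation (rounded, as reported)
n_obs = 11_000            # observations per size for 11 classes

days = mle_seconds * n_obs / 86_400  # seconds per day
ratio = mle_seconds / model_seconds
print(f"{days:.1f} days for all observations")  # 82.4 days for all observations
print(f"time ratio about {ratio:,.0f}")
```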

In terms of both computational time and MSE, our proposed simple deep-learning model is recommended for indirectly estimating the Hurst exponent. In the future, we may design slightly more complex models for sizes larger than 64 × 64 or choose pre-trained models to achieve better MSEs within an acceptable time.