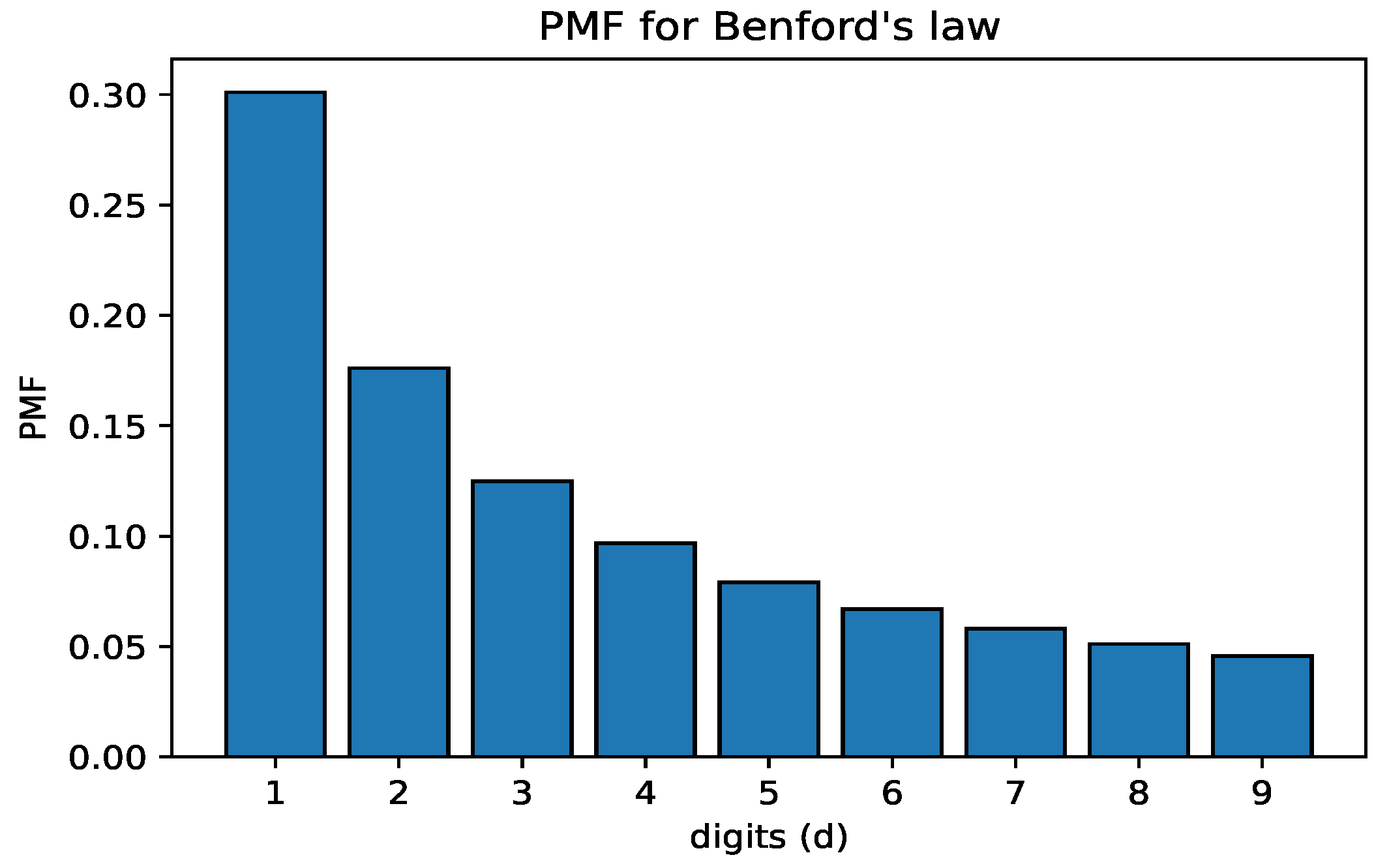

Frank Benford’s 1938 paper, “The Law of Anomalous Numbers” [6], illustrated a profound result: in general, the first digits of numbers in a given data set are not uniformly distributed. Benford applied statistical analysis to a variety of well-behaved but uncorrelated data sets, such as the areas of rivers, financial returns, and lists of physical constants. In the overwhelming majority of the data, 1 appeared as the leading digit around 30% of the time, and each higher digit was successively less likely [6, 7]. He then outlined the derivation of a statistical distribution which maintains that, for such data sets, the probability of observing a leading digit $d$ in a given base $b$ is [6, 7]

$$P(d) = \log_b\left(1 + \frac{1}{d}\right).$$

This logarithmic relation is referred to as Benford’s Law, and the resulting probability measure for base 10 is shown in Figure 2. Benford’s Law has been the subject of intensive research over the past several decades and arises in numerous fields; see [7] for an introduction to the general theory and many applications.
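As a quick numerical check of the formula, the following Python sketch evaluates $\log_b(1 + 1/d)$ for every possible leading digit. The function name `benford_probabilities` is ours and is not taken from the authors' notebooks.

```python
import math


def benford_probabilities(base=10):
    """Return {d: P(d)} with P(d) = log_base(1 + 1/d) for leading digits d = 1, ..., base - 1."""
    return {d: math.log(1 + 1 / d, base) for d in range(1, base)}


probs = benford_probabilities(10)
for d, p in probs.items():
    print(f"{d}: {p:.4f}")  # 1: 0.3010, 2: 0.1761, ..., 9: 0.0458
print(f"total: {sum(probs.values()):.6f}")  # the terms telescope to log_b(b) = 1
```

Because $\sum_{d=1}^{b-1} \log_b\frac{d+1}{d} = \log_b b = 1$, the probabilities always sum to one, and they decrease monotonically in $d$.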

Benford’s law arises in purely mathematical constructions such as geometric series, recurrence relations, and geometric Brownian motion. Its ubiquity across many disciplines makes it one of the most interesting objects in modern mathematics, so it is worthwhile to consider non-traditional data sets where the law may appear, such as fractal sets. Basic fractals such as the Cantor set and the Sierpinski triangle are obtained as limits of iterated constructions, and as a result the measures of their component pieces form a geometric sequence, which is Benford in most bases. Building on these results, it is plausible that more complicated fractals obey this distribution as well. We studied the Riemann mapping of the exterior of the Mandelbrot set to the complement of the unit disk, along with its reciprocal. These mappings are given by a Taylor series and a Laurent series, respectively, and we studied their coefficients to assess how well they fit Benford’s law. These coefficients are of interest because their asymptotic convergence is intimately related to the conjectured local connectivity of the Mandelbrot set, an important open problem in complex dynamics.

4.1. Statistical Testing for Benford’s Law

A common statistical methodology for evaluating whether a data set is distributed according to Benford’s law is the standard $\chi^2$ goodness-of-fit test. As we are investigating Benford’s law in base 10, we use 8 degrees of freedom for our $\chi^2$ testing. (There are nine possible first digits, but once we know the proportion of the data with first digits 1 through 8, the proportion starting with a 9 is forced, and thus we lose one degree of freedom.) If there are $N$ observations, letting $p_d = \log_{10}(1 + 1/d)$ be the Benford probability of having a first digit of $d$, we expect $N p_d$ values to have a first digit of $d$. If we let $O_d$ be the observed number with a first digit of $d$, the $\chi^2$ value is

$$\chi^2 = \sum_{d=1}^{9} \frac{(O_d - N p_d)^2}{N p_d}.$$
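The statistic above can be computed directly. The Python sketch below assumes the coefficients are available as Python `int`s or `fractions.Fraction`s (the coefficients here are 2-adic rationals); `leading_digit` and `benford_chi_square` are hypothetical helper names. The leading digit is extracted with exact rational arithmetic, so very large numerators and denominators never have to be converted to floating point.

```python
import math
from collections import Counter
from fractions import Fraction


def leading_digit(x):
    """First significant decimal digit of a non-zero int, float, or Fraction (computed exactly)."""
    q = abs(Fraction(x))
    if q == 0:
        raise ValueError("zero has no leading digit")
    # Rough power-of-ten estimate from bit lengths; avoids str() on huge integers.
    k = int((q.numerator.bit_length() - q.denominator.bit_length()) * math.log10(2))
    scaled = q / Fraction(10) ** k
    # Correct the estimate so that 1 <= scaled < 10.
    while scaled >= 10:
        scaled /= 10
    while scaled < 1:
        scaled *= 10
    return int(scaled)


def benford_chi_square(values):
    """Chi-squared statistic of the leading digits against Benford's law (8 degrees of freedom)."""
    digits = [leading_digit(v) for v in values if v != 0]  # zeros are excluded
    n = len(digits)
    if n == 0:
        raise ValueError("need at least one non-zero value")
    observed = Counter(digits)
    stat = 0.0
    for d in range(1, 10):
        expected = n * math.log10(1 + 1 / d)  # N * p_d
        stat += (observed[d] - expected) ** 2 / expected
    return stat


# Example: the first 1000 powers of 2, a classical Benford sequence.
print(benford_chi_square([2 ** n for n in range(1000)]))
```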

If the data are Benford, with 8 degrees of freedom, then 95% of the time the $\chi^2$ value will be at most 15.5073; this corresponds to a significance level of $\alpha = 0.05$. We perform multiple testing by computing the $\chi^2$ statistic of the first $m$ values in the data set for each $m$, which gives the distribution of $\chi^2$ values as a function of the sample size. This is standard practice when studying Benford sequences, and we do this to account for periodicity in the $\chi^2$ values, which is typical of certain Benford data sets such as the integer powers of 2. To account for this multiplicity, we also incorporate the standard Bonferroni correction, so that the overall testing is conducted at the same level of significance while each individual test receives equal weight. We do this by conducting each of the $n$ individual tests at a significance level of $\alpha/n$. In particular, we perform 10,045 tests for the $a_m$ coefficients and 10,046 tests for the $b_m$ coefficients, as we compute the statistic each time we add a new non-zero coefficient to our data set. This brings our final corrected threshold values to 38.9706 and 38.9708, respectively, which corresponds to $\alpha/n = 0.05/10{,}045 = 4.978 \times 10^{-6}$ for each individual hypothesis in the $a_m$ data set and $\alpha/n = 0.05/10{,}046 = 4.977 \times 10^{-6}$ for each individual hypothesis in the $b_m$ data set.
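Recomputing the statistic every time a new non-zero coefficient is added can be sketched with running digit counts, so that each new prefix costs a constant amount of work instead of a full recount. This is an illustrative sketch: `running_chi_square` is a hypothetical name, and it assumes its input is the sequence of leading digits (1 to 9) of the non-zero coefficients, in order.

```python
import math

# Benford probabilities p_d, indexed by digit (index 0 unused).
P = [0.0] + [math.log10(1 + 1 / d) for d in range(1, 10)]


def running_chi_square(digits):
    """Return [chi-squared of the first m digits for m = 1, ..., len(digits)]."""
    counts = [0] * 10
    stats = []
    for m, d in enumerate(digits, start=1):
        counts[d] += 1  # only one count changes per step
        stats.append(sum((counts[k] - m * P[k]) ** 2 / (m * P[k]) for k in range(1, 10)))
    return stats


# Example: leading digits of 2^0, 2^1, ..., 2^499.
digits = [int(str(2 ** n)[0]) for n in range(500)]
curve = running_chi_square(digits)
print(len(curve), curve[-1])
```

Plotting `curve` against $m$ gives the kind of $\chi^2$-versus-sample-size plot described above, in which periodic structure in the data shows up as oscillations.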

The data points are not independent: the $\chi^2$ values are computed from a running total of the data, so each point builds on the previous one. This results in a high correlation between the values, and the Bonferroni correction likely overcompensates for the increase in type I error from our multiple testing. Still, it is a reasonable way to deal with the multiplicity, since it is one of the simplest and most conservative corrections, and a value above the Bonferroni threshold provides strong evidence that the data are not Benford. Computing each statistic on independent increments of the data would remove the dependence, but we do not recommend it, since periodic behavior can be missed if the increments are chosen poorly relative to the frequency in the data, which could lead to erroneous conclusions.

Since the data set is built iteratively, we also provide a curve representing the Bonferroni-corrected threshold for $m$ tests. The rationale is to keep the significance level of the overall test constant with respect to the number of tests performed: as the total number of tests increases, the correction should increase accordingly. It therefore does not make sense to compare every $\chi^2$ value on our plots against the same threshold, and this curve is provided as a reference for the tests performed on subsets of our data.
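The threshold curve can be sketched as the upper $\alpha/m$ quantile of the $\chi^2$ distribution with 8 degrees of freedom. To stay dependency-free, this sketch uses the closed form of the survival function for an even number of degrees of freedom, $P(\chi^2_{2k} > x) = e^{-x/2}\sum_{i=0}^{k-1} (x/2)^i/i!$, and inverts it by bisection; in practice one would more likely call a library quantile function such as SciPy's `scipy.stats.chi2.isf`. All function names here are hypothetical.

```python
import math


def chi2_sf_even(x, df):
    """P(X > x) for X ~ chi-squared(df), df even, via the closed-form Poisson sum."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form needs a positive even df")
    z = x / 2.0
    term = math.exp(-z)  # i = 0 term: e^{-z} z^0 / 0!
    total = 0.0
    for i in range(df // 2):
        total += term
        term *= z / (i + 1)
    return total


def chi2_isf_even(p, df, hi=1000.0):
    """The x with P(X > x) = p, found by bisection (the survival function is decreasing)."""
    lo = 0.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if chi2_sf_even(mid, df) > p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def bonferroni_threshold(m, alpha=0.05, df=8):
    """Critical chi-squared value when the overall level alpha is split across m tests."""
    return chi2_isf_even(alpha / m, df)


for m in (1, 10, 100, 1000, 10_045):
    print(m, round(bonferroni_threshold(m), 4))
```

At $m = 1$ the curve starts at the uncorrected threshold of about 15.5073, and at $m = 10{,}045$ it reaches approximately the corrected value 38.9706 quoted above.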

We considered the distribution of the $\chi^2$ values to account for random fluctuations and periodic effects; the distribution’s limiting behavior provides our benchmark for the fit to Benford’s law. In addition, we provide the p-value of our computed $\chi^2$ statistic to quantify the type I error rate for our conclusions, and we compute the powers of the $\chi^2$ tests relative to our null hypothesis that the data are Benford by using the noncentral chi-squared distribution [15]. We also conducted simulations to estimate the sampling error relative to our null hypothesis, that is, how likely it is that a random sample falls in the rejection region of our test. Based on our significance level of $\alpha = 0.05$, we expect the sampling error to be roughly 5% if the data are Benford. We randomly sample 1000 coefficients from each data set and calculate the $\chi^2$ value for this sample. We repeat this simulation 1000 times and estimate the sampling error as the fraction of the resulting $\chi^2$ values that fall in the rejection region.
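The sampling-error simulation can be sketched as follows. Several details are assumptions not stated in the text: samples are drawn without replacement (`random.sample`), the rejection region is the uncorrected 8-degree-of-freedom threshold 15.5073, and the input is the list of leading digits of the non-zero coefficients. The function names and the fixed seed are ours.

```python
import math
import random
from collections import Counter

# Benford probabilities p_d for d = 1, ..., 9.
BENFORD = {d: math.log10(1 + 1 / d) for d in range(1, 10)}
CRITICAL_8DF = 15.5073  # uncorrected alpha = 0.05 threshold for 8 degrees of freedom (from the text)


def chi_square_from_digits(digits):
    """Benford chi-squared statistic of a list of leading digits."""
    n = len(digits)
    counts = Counter(digits)
    return sum((counts[d] - n * p) ** 2 / (n * p) for d, p in BENFORD.items())


def sampling_error(digits, sample_size=1000, reps=1000, seed=0):
    """Fraction of random subsamples whose chi-squared statistic lands in the rejection region."""
    rng = random.Random(seed)  # fixed seed for reproducibility (our choice)
    rejections = 0
    for _ in range(reps):
        sample = rng.sample(digits, sample_size)  # without replacement (an assumption)
        if chi_square_from_digits(sample) > CRITICAL_8DF:
            rejections += 1
    return rejections / reps


# Example population: leading digits of 2^1, ..., 2^5000 (close to Benford).
population = [int(str(2 ** n)[0]) for n in range(1, 5001)]
print(sampling_error(population, reps=200))
```

For a pool of data that closely follows Benford's law, the returned fraction should be near, or somewhat below, 0.05; sampling without replacement from a finite pool slightly shrinks the statistic.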

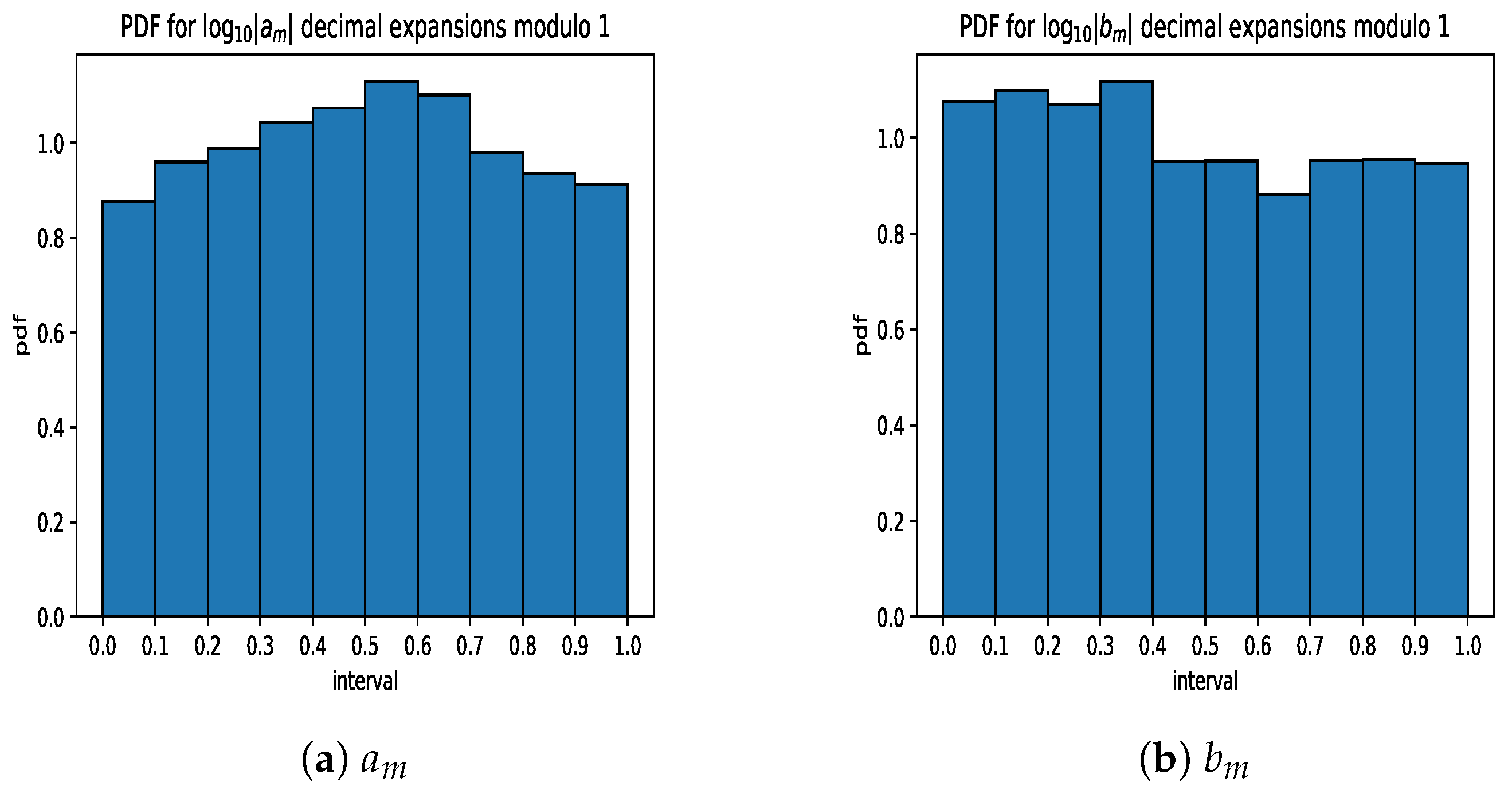

An equivalent test is to consider the distribution of the base 10 logarithms of the absolute values of the data, taken modulo 1: a data set is Benford if and only if this sequence converges to the uniform distribution on $[0, 1)$ [16]. To quantify the uniformity of this distribution, we again use the standard $\chi^2$ test. We perform only a single test on the full data set, so we do not need to account for multiplicity. Specifically, we split the interval $[0, 1)$ into 10 equal bins. If the data are uniform, we expect each bin to contain $1/10$ of the total data; therefore, for each bin in the $a_m$ data and in the $b_m$ data we expect $N/10$ values, where $N$ is the number of non-zero coefficients in that data set. Since there are 10 bins, we have 9 degrees of freedom: if the proportion of the data in the first nine bins is known, then the proportion in the last bin is forced, and we lose one degree of freedom. We again take $\alpha = 0.05$, and for nine degrees of freedom this corresponds to a critical $\chi^2$ value of 16.919. These $\chi^2$ results are generated by cells 16, 17, and 18 of the Jupyter notebooks amLogData.ipynb and bmLogData.ipynb, respectively, which may be found under the Data Analysis folder. We also provide the associated p-values and the powers of the $\chi^2$ statistic relative to the null hypothesis that these data are uniform.
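The uniformity check can be sketched as follows. The helper names are hypothetical, and taking logarithms of the numerator and denominator separately is our implementation choice, made because the numerators and denominators here can be far too large to convert to floating point. Bins are assigned by flooring $10 \cdot (\log_{10}|x| \bmod 1)$.

```python
import math
from fractions import Fraction


def log10_mod1(x):
    """Fractional part of log10|x| for a non-zero int, float, or Fraction."""
    q = abs(Fraction(x))
    if q == 0:
        raise ValueError("log10(0) is undefined")
    # Logs of numerator and denominator separately, so huge integers never become floats.
    return (math.log10(q.numerator) - math.log10(q.denominator)) % 1.0


def uniformity_chi_square(values, bins=10):
    """Chi-squared statistic of log10|x| mod 1 against Uniform[0, 1) with equal-width bins (df = bins - 1)."""
    counts = [0] * bins
    for v in values:
        if v == 0:
            continue  # zeros are excluded, as in the text
        # min() guards against a fractional part that rounds up to exactly 1.0
        counts[min(int(log10_mod1(v) * bins), bins - 1)] += 1
    n = sum(counts)
    expected = n / bins  # N/10 per bin for 10 bins
    return sum((c - expected) ** 2 / expected for c in counts)


CRITICAL_9DF = 16.919  # alpha = 0.05, 9 degrees of freedom (value quoted in the text)
stat = uniformity_chi_square([2 ** n for n in range(1, 1001)])
print(stat, stat > CRITICAL_9DF)
```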

The coefficients we studied are 2-adic rational numbers, so we considered the distributions of the numerators, denominators, and decimal expansions separately. We considered only the non-zero coefficients, since zero has no leading digit and so is not covered by our probability measure, and certain theorems and conjectures outlined by Shiamuchi in [4] already describe the distribution of the zeros among the coefficients. Our goal is to identify, through statistical testing, which components of these coefficients are a good fit for Benford’s law, and we hope that this will motivate a formal proof.
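Splitting the coefficients into the three components studied here can be sketched with Python's `fractions.Fraction`, which keeps numerators and denominators exact. The function name and the sample values below are illustrative only; the values are made up and are not taken from Table 1.

```python
from fractions import Fraction


def split_coefficients(coeffs):
    """Split non-zero 2-adic rationals into (numerators, denominators, values).

    Zero coefficients are skipped, mirroring the restriction to non-zero coefficients.
    """
    numerators, denominators, values = [], [], []
    for c in coeffs:
        q = Fraction(c)
        if q == 0:
            continue
        den = q.denominator
        if den & (den - 1):  # a power of two has exactly one bit set
            raise ValueError(f"{q} is not a 2-adic rational")
        numerators.append(q.numerator)
        denominators.append(den)
        values.append(q)  # exact value; its decimal expansion terminates
    return numerators, denominators, values


# Made-up 2-adic rationals for illustration only (not actual a_m or b_m values).
sample = [Fraction(-1, 2), Fraction(3, 8), 0, Fraction(-45, 1024)]
nums, dens, vals = split_coefficients(sample)
print(nums, dens, [float(v) for v in vals])  # [-1, 3, -45] [2, 8, 1024] [-0.5, 0.375, -0.0439453125]
```

Because every denominator is a power of two, $2^k$, each value $n/2^k = n \cdot 5^k/10^k$ has a terminating decimal expansion with at most $k$ digits after the decimal point, so the exact decimal expansions are well defined.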

Table 1 provides examples of the computed coefficients. When a coefficient is 0, its numerator is set to 0 and its denominator to “-” for readability. We then use the numerators and denominators to compute the exact decimal expansions of $a_m$ and $b_m$.

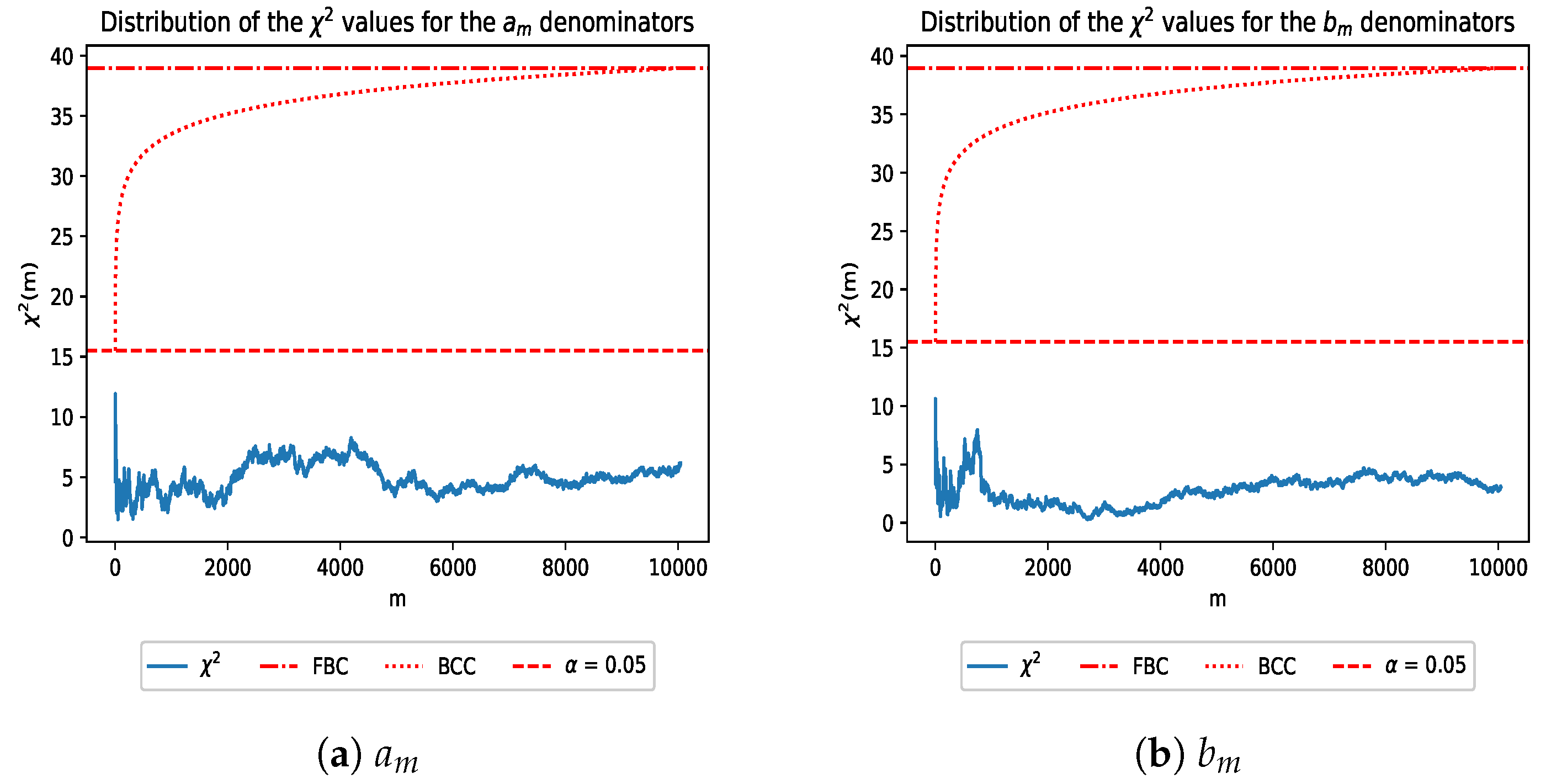

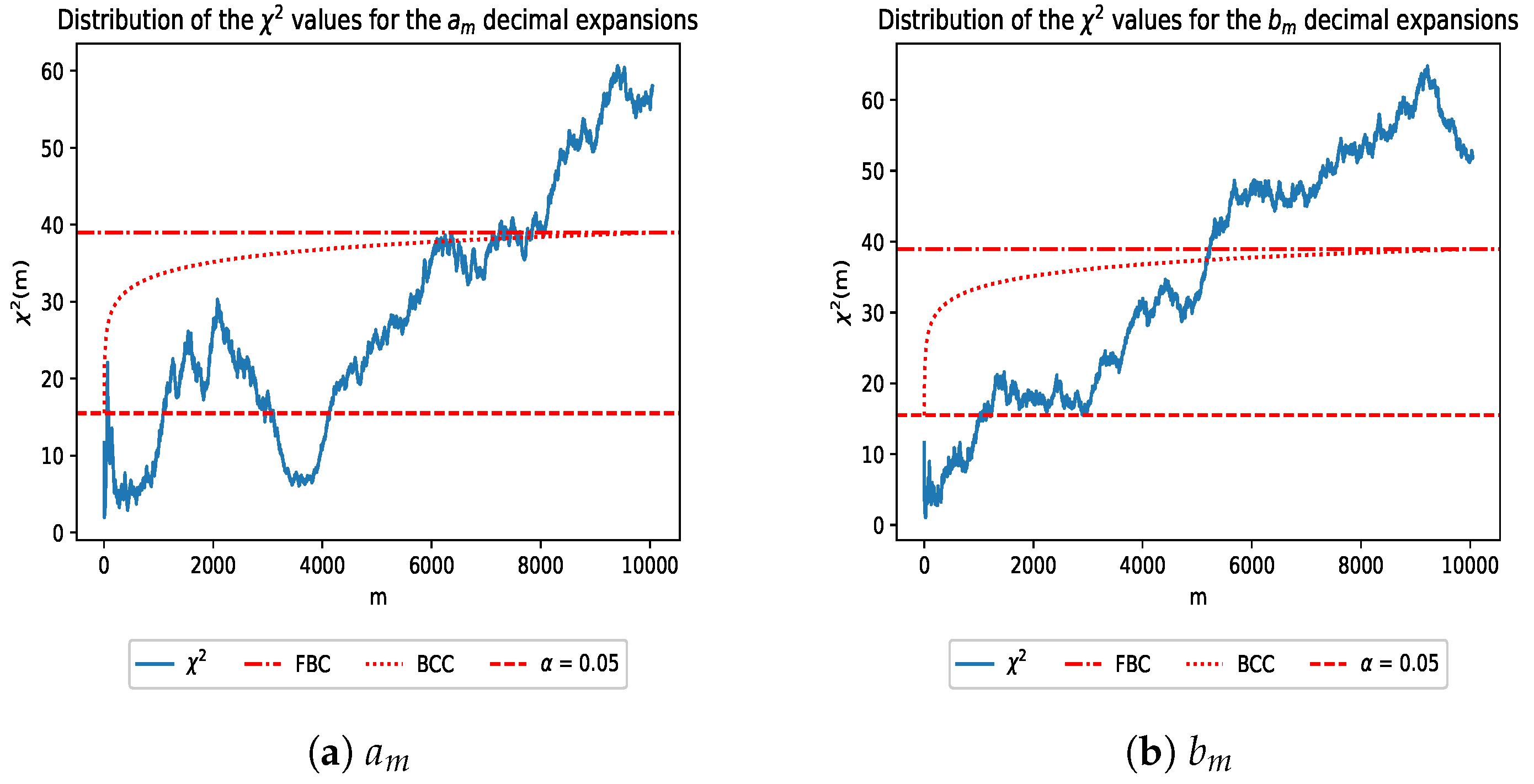

4.2. Benfordness of the Taylor and Laurent Coefficients

We examine the distribution of the first digits of the $a_m$ and $b_m$ coefficients. As mentioned earlier, we restrict our discussion to the non-zero coefficients. We evaluate the data with the $\chi^2$ test and with the distribution of the base 10 logarithms modulo 1. The plots of the $\chi^2$ values are shown in Figure 3, Figure 4, and Figure 5; these figures were generated by cells 16, 17, and 18 in the Jupyter notebooks amChiSquareData.ipynb and bmChiSquareData.ipynb, respectively, which may be found under the Data Analysis folder. The plots of the logarithms modulo 1 are provided in Figure 6, Figure 7, and Figure 8; these figures were generated by cells 10, 11, and 12 in the Jupyter notebooks amLogData.ipynb and bmLogData.ipynb, respectively, which may also be found under the Data Analysis folder. Simulation results can be reproduced using the sampling_simulation.ipynb notebook in the same folder.

On the plots of the $\chi^2$ values, the numerators stay below the uncorrected threshold for statistical significance. The $a_m$ coefficients seem to settle towards the end of the distribution, while the $b_m$ coefficients end during a period of relative inflation with many fluctuations. Bearing this in mind, the results for the $b_m$ numerators are slightly worse than those for the $a_m$ numerators because of the behavior of the distribution at the point where we stopped collecting data. The final $\chi^2$ values for the $a_m$ and $b_m$ numerators are 5.968 and 12.785, respectively. The type I error rates (p-values) for the final values are 0.651 and 0.119, both of which are greater than our critical values of $\alpha/n \approx 4.98 \times 10^{-6}$ for the individual hypotheses and $\alpha = 0.05$ for the overall test. The sampling errors we obtain from our simulations for $a_m$ and $b_m$ are 0.043 and 0.058. The powers relative to the null hypothesis for $a_m$ and $b_m$ are 0.355 and 0.718, respectively. As a result, there is not sufficient evidence to reject our null hypothesis that the data are Benford.
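The powers above come from the noncentral chi-squared distribution. The text does not state how the noncentrality parameter was chosen; the sketch below assumes the common convention of plugging in the observed $\chi^2$ statistic, so that the power is $P(\chi^2_8(\lambda) > 15.5073)$ with $\lambda$ equal to the observed value. Under that assumption it should land close to the reported 0.355 and 0.718, but it is illustrative rather than the authors' code. SciPy's `scipy.stats.ncx2.sf` computes the same tail probability; here, because 8 is even, the noncentral distribution is written as a Poisson($\lambda/2$) mixture of central $\chi^2$ distributions with even degrees of freedom, each of which has a closed-form tail. The function names are hypothetical.

```python
import math


def chi2_sf_even(x, df):
    """P(X > x) for X ~ chi-squared(df) with even df (closed-form Poisson sum)."""
    z = x / 2.0
    term, total = math.exp(-z), 0.0
    for i in range(df // 2):
        total += term
        term *= z / (i + 1)
    return total


def ncx2_sf_even(x, df, nc, terms=500):
    """P(X > x) for noncentral chi-squared(df, nc) as a Poisson(nc/2) mixture of central ones."""
    lam = nc / 2.0
    weight = math.exp(-lam)  # Poisson probability of j = 0
    total = 0.0
    for j in range(terms):
        total += weight * chi2_sf_even(x, df + 2 * j)
        weight *= lam / (j + 1)
    return total


def chi2_power(observed_stat, critical=15.5073, df=8):
    """Power of the test, ASSUMING the noncentrality parameter equals the observed statistic."""
    return ncx2_sf_even(critical, df, observed_stat)


for stat in (5.968, 12.785, 6.148, 3.093):
    print(stat, round(chi2_power(stat), 3))
```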

The denominators stay well below the uncorrected threshold. This is expected, as they consist of a random sampling of a geometric series, which is known to be Benford in most bases [16]. The final $\chi^2$ values for the $a_m$ and $b_m$ denominators are 6.148 and 3.093, respectively. The type I error rates (p-values) for the final $\chi^2$ values are 0.631 and 0.928, both of which are greater than our critical values of $\alpha/n \approx 4.98 \times 10^{-6}$ for the individual hypotheses and $\alpha = 0.05$ for the overall test. The sampling errors we obtain from our simulations for $a_m$ and $b_m$ are 0.047 and 0.028. The powers relative to the null hypothesis for $a_m$ and $b_m$ are 0.366 and 0.187, respectively. As a result, there is not sufficient evidence, based on this testing, to reject our null hypothesis that the data are Benford.

The $\chi^2$ values for the decimal expansions are the most interesting. The $\chi^2$ values for the $a_m$ coefficients seem to increase in general, although there are erratic periods where the values fall. The sequence ends above the final corrected threshold for significance, and it is plausible that, with more data, the values would continue to increase faster than the correction. The $b_m$ sequence, meanwhile, surpasses the corrected threshold, which is strong evidence that these data are not Benford; its $\chi^2$ values do decrease towards the end of the distribution, but they remain well above the threshold for significance. The final $\chi^2$ values for the $a_m$ and $b_m$ data are 58.054 and 51.934, respectively. The type I error rates (p-values) for these final values are on the order of $10^{-9}$ and $10^{-8}$, respectively, both of which are less than our critical values of $\alpha/n \approx 4.98 \times 10^{-6}$ for the individual hypotheses and $\alpha = 0.05$ for the overall test. The sampling errors we obtain from our simulations for $a_m$ and $b_m$ are 0.235 and 0.243. The powers relative to the null hypothesis for $a_m$ and $b_m$ are 0.999992 and 0.999956, respectively. As a result, there is sufficient evidence, based on this testing, to reject our null hypothesis that the data are Benford.

As for the distribution of the base 10 logarithms modulo 1, the logarithms of the numerators are skewed towards zero. There is a pattern in the

coefficients that explains this behavior: we have observed that when

,

. This result seems to generalize for

, such that when

,

= 1/m, which can be observed in the tables provided by Shiamuchi in [

4], and we have not found a counterexample in our computations. There is regularity in the

numerators as discussed by Bielefeld, Fisher, and von Haeseler in [

11], and it is likely that similar regularities are present in the

coefficients as well. It seems that these patterns become less frequent as the sample size increases. The extra regularity in the

coefficients also helps explain why their distribution is more skewed towards 0 than the distribution for the

coefficients. Still, for a finite number of iterations we do not expect perfect agreement, and convergence is likely with larger samples. The $\chi^2$ values for the $a_m$ and $b_m$ numerator data are 8.482 and 10.203, respectively. These correspond to p-values of 0.486 and 0.334, and the powers relative to the null hypothesis are 0.482 and 0.574. There is not sufficient evidence to reject the null hypothesis that the data are uniform; in turn, this provides further evidence that the data are Benford.

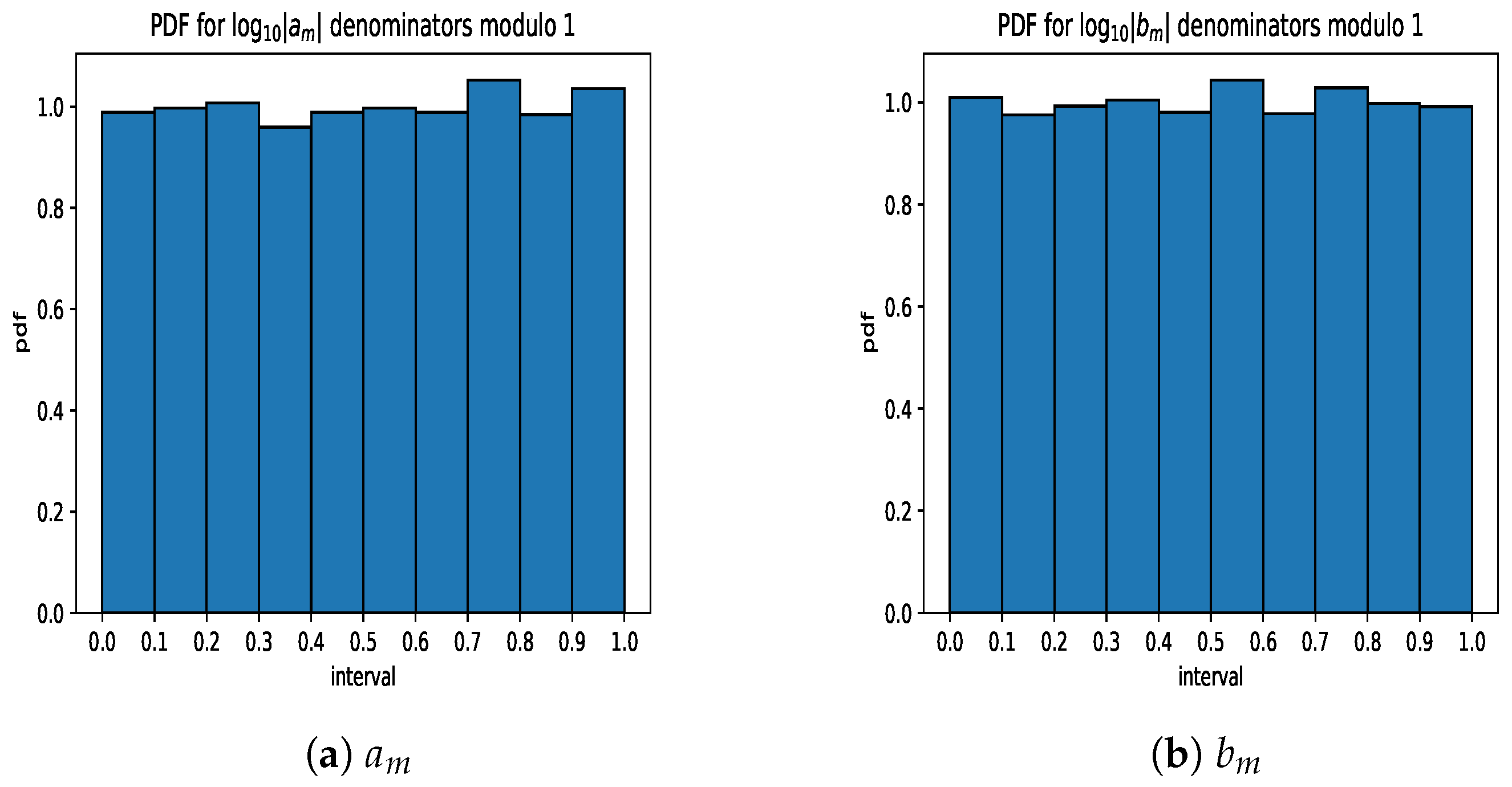

The denominators consist of a sampling of integer powers of 2. Since $\log_{10} 2$ is irrational, the sequence $(2^n)$ is Benford in base 10, and $\log_{10} 2^n = n \log_{10} 2 \pmod 1$ converges to the uniform distribution on $[0, 1)$ [16]. Since the denominators span many orders of magnitude, we expect them to converge in distribution similarly. The $\chi^2$ values for the $a_m$ and $b_m$ denominator data are 6.334 and 4.416, respectively. These correspond to p-values of 0.706 and 0.882, and the powers relative to the null hypothesis are 0.358 and 0.248. There is not sufficient evidence to reject the null hypothesis that the data are uniform; in turn, this provides further evidence that the data are Benford.
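The step from the irrationality of $\log_{10} 2$ to Benfordness is the standard equidistribution argument. We sketch it here for the full sequence $2^n$ as a worked derivation; on its own it does not cover an arbitrary subsequence such as the sampled denominators. Writing

$$2^n = 10^{\,n\log_{10}2} = 10^{\lfloor n\log_{10}2\rfloor}\cdot 10^{\{n\log_{10}2\}},$$

the leading digit of $2^n$ is $d$ exactly when

$$\log_{10} d \le \{n\log_{10}2\} < \log_{10}(d+1).$$

Because $\log_{10}2$ is irrational, Weyl's equidistribution theorem says that $\{n\log_{10}2\}$ is uniformly distributed on $[0,1)$, so the limiting proportion of $n$ for which $2^n$ has leading digit $d$ is

$$\log_{10}(d+1) - \log_{10} d = \log_{10}\left(1 + \frac{1}{d}\right).$$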

The $\chi^2$ values for the $a_m$ and $b_m$ decimal expansions are 64.261 and 60.757, respectively. Both correspond to p-values below $10^{-8}$, and the powers relative to the null hypothesis are 0.99998 and 0.99994. There is sufficient evidence to reject the null hypothesis that the data are uniform, which provides further evidence that the data are not Benford. It is worth noting that one of these two distributions is skewed towards the second half of the interval, while the other is skewed towards the first half. This asymmetry may be related to the fact that the two series represent coefficients of reciprocal functions, and to how they may be computed from each other.

We may also investigate how many orders of magnitude a data set spans on average by computing the arithmetic mean and standard deviation of $\log_{10}|x|$ over the data set, which tell us where the data set is centered and how spread out it is. A data set that spans many orders of magnitude is often, but not necessarily, Benford (see Chapter 2 of [7, 17] for an analysis showing that a sufficiently large spread is not enough to ensure Benfordness). Our findings for the Taylor and Laurent coefficients are summarized in Table 2. The data are generated by cell 8 of each of the Jupyter notebooks amLogData.ipynb and bmLogData.ipynb, which may be found under the Data Analysis folder.
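The summary statistics behind Table 2 can be sketched as follows, using the sample standard deviation from Python's `statistics` module. Whether the notebooks use the sample or the population standard deviation is not stated, so that choice is an assumption, as is the helper name `log10_spread`.

```python
import math
import statistics
from fractions import Fraction


def log10_spread(values):
    """Mean and sample standard deviation of log10|x| over the non-zero values."""
    logs = []
    for v in values:
        q = abs(Fraction(v))
        if q == 0:
            continue  # log10(0) is undefined
        # Numerator and denominator separately, so very large integers stay exact until the log.
        logs.append(math.log10(q.numerator) - math.log10(q.denominator))
    return statistics.mean(logs), statistics.stdev(logs)


mean, sd = log10_spread([10, 100, 1000, 0])
print(mean, sd)
```

A negative mean signals values that are mostly smaller than 1 in magnitude, and a small standard deviation signals that the values cluster within a few orders of magnitude.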

The numerators and denominators span many orders of magnitude, but the decimal expansions cluster. The negative mean for the decimal expansions indicates that, on average, the denominators are larger in magnitude than the numerators. The ratio between the growth rates of the numerators and denominators likely has some regularity as well, which would account for the small standard deviation, but more analysis is needed to determine the exact relationship. These observations are consistent with the previously discussed conjectured relation for all $m$, and it is plausible that a similar relation holds for the other family of coefficients as well. Ultimately, this provides insight into the growth of the coefficients and the shape of the data, and it motivates our conclusion that the numerators and denominators appear to converge to a Benford distribution, while there is no strong evidence for convergence in the decimal expansions.