1. Introduction

Pandemics have changed our daily lives in many respects and, in the healthcare and clinical domain, have highlighted the need to manage what we term Knowledge-, Decision- and Data-Intensive (KDDI) processes (e.g., [1,2,3,4,5,6,7,8]). We introduce this definition to model and describe an approach designed to handle complex and evolving settings, such as healthcare systems impacted by pandemics. Such an approach uses large amounts of heterogeneous data (from sources like surveillance systems, IoT devices, and electronic health records), integrates expert knowledge (such as clinical guidelines), and supports difficult decisions (under uncertainty or changing conditions). Modeling, managing, and mining the processes of healthcare systems enables real-time updates, predictive analytics, and adaptive responses that support pandemic prevention and control efforts. In our vision, KDDI processes are directly involved both in individual-level patient care (for example, we focus on managing diagnostic and treatment workflows for swab-positive individuals) and in population-level public health strategies, such as the formulation and enforcement of policies aimed at mitigating disease transmission. In this way, KDDI supports the dual objectives of preventing new outbreaks and controlling ongoing pandemic spread.

Beyond immediate, short-term interventions, KDDI methodologies also play a critical role in long-term strategic planning and resilience building. The integration of Information and Communication Technologies (ICT) and Artificial Intelligence (AI) within KDDI frameworks enables robust mechanisms for data collection, analysis, storage, sharing, and visualization. These tools are instrumental in enhancing transparency and coordination among healthcare stakeholders.

Moreover, the recent global experience with COVID-19 has highlighted the necessity for sustained research and innovation in KDDI systems. This includes the development of new paradigms to better support clinical and organizational decision-making, with a focus on long-term capabilities for disease surveillance, outbreak prediction, resource optimization, and patient-centered care planning. Such efforts are pivotal in preparing healthcare systems to monitor, mitigate, and ultimately prevent future pandemic events.

In summary, integrating KDDI processes into healthcare systems is not only a reactive measure against pandemic crises but also a proactive strategy for transforming how health information is leveraged to inform policy, practice, and preparedness. In this context, process modeling, management, and mining play a leading role in effectively supporting pandemic control policies at large, with special emphasis on integrating these methodologies with the emerging big data trend. This yields the notion of KDDI process modeling, management, and mining for pandemic scenarios, in line with recent COVID-19-related studies (e.g., [3,4,9,10,11]).

While existing paradigms such as Knowledge-Intensive BPM (KI-BPM) [12] and Data-Intensive BPM [13] have addressed subsets of these challenges, they typically emphasize either knowledge modeling or data management in isolation. In contrast, we define KDDI processes as an explicit and systematic integration of three complementary perspectives: (i) data-intensity, through the ingestion and processing of heterogeneous real-time information such as Electronic Health Records (EHRs), IoT streams and epidemiological data; (ii) knowledge-intensity, through the encoding and use of clinical guidelines, care pathways, and domain expertise; and (iii) decision-intensity, through the continuous support of adaptive policy-making under uncertainty. By formalizing this triadic combination, KDDI processes move beyond existing single-focus paradigms and provide a unifying framework for pandemic management, where all three dimensions are simultaneously critical. Thus, KDDI does not propose an entirely new “type of process”, but rather a consolidated view that operationalizes the interplay of knowledge, decision, and data for resilience in pandemic response.
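To make the triadic definition concrete, the following minimal Python sketch models a process as a list of activities, each of which may consume data sources, reference encoded knowledge, and carry a decision rule; under this sketch, a process counts as KDDI only if all three perspectives appear. All class, field, and activity names, as well as the incidence threshold, are hypothetical illustrations and not part of PROTECTION itself.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Set

# Hypothetical decision rule type: maps observed values to a chosen action.
Decision = Callable[[Dict[str, Any]], str]


@dataclass
class Activity:
    """One activity of a (hypothetical) KDDI process model."""
    name: str
    data_sources: List[str] = field(default_factory=list)  # data-intensity
    knowledge: List[str] = field(default_factory=list)     # knowledge-intensity
    decision: Optional[Decision] = None                    # decision-intensity

    def perspectives(self) -> Set[str]:
        """Return which of the three KDDI perspectives this activity covers."""
        covered: Set[str] = set()
        if self.data_sources:
            covered.add("data")
        if self.knowledge:
            covered.add("knowledge")
        if self.decision is not None:
            covered.add("decision")
        return covered


def is_kddi(process: List[Activity]) -> bool:
    """A process counts as KDDI here only if all three perspectives appear."""
    covered: Set[str] = set()
    for activity in process:
        covered |= activity.perspectives()
    return covered == {"data", "knowledge", "decision"}


# Illustrative process; the threshold of 100 weekly cases is a placeholder value.
surveillance = Activity("collect_surveillance_data", data_sources=["EHR", "IoT", "lab_reports"])
assessment = Activity(
    "assess_policy",
    knowledge=["regional_preparedness_plan"],
    decision=lambda obs: "tighten_measures" if obs["weekly_incidence"] > 100 else "keep_measures",
)
process = [surveillance, assessment]

print(is_kddi(process))                                # True
print(assessment.decision({"weekly_incidence": 150}))  # tighten_measures
```

Keeping the three perspectives as separate fields makes it easy to check, activity by activity, which dimension a process fragment is missing.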

Following this long-term perspective, we propose PROTECTION, a framework for supporting data-centric process modeling, management and mining for pandemic prevention and control. PROTECTION is based on Business Process Model and Notation (BPMN), a widely adopted standard for modeling organizational processes in a formal, yet accessible way. BPMN is particularly effective for capturing the structure and dynamics of KDDI processes, as it provides a notation that supports both human understanding and machine interpretability. Integrating BPMN into our framework therefore contributes to the transparency, adaptability, and interoperability of process definitions.
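Machine interpretability here means that a BPMN 2.0 model serialized as XML can be read by software. The sketch below parses a hypothetical, heavily simplified swab-testing fragment (no diagram interchange section) with Python's standard `xml.etree.ElementTree` and extracts its nodes and sequence flows; the process, element identifiers, and labels are illustrative assumptions, not PROTECTION models.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

# Namespace URI defined by the BPMN 2.0 specification for model elements.
BPMN_NS = {"bpmn": "http://www.omg.org/spec/BPMN/20100524/MODEL"}

# Hypothetical, heavily simplified BPMN fragment (no BPMNDI diagram section).
BPMN_XML = """<bpmn:definitions xmlns:bpmn="http://www.omg.org/spec/BPMN/20100524/MODEL" id="defs">
  <bpmn:process id="swab_pathway" isExecutable="false">
    <bpmn:startEvent id="start"/>
    <bpmn:task id="swab" name="Perform swab test"/>
    <bpmn:exclusiveGateway id="result" name="Positive?"/>
    <bpmn:task id="isolate" name="Home isolation and follow-up"/>
    <bpmn:endEvent id="end"/>
    <bpmn:sequenceFlow id="f1" sourceRef="start" targetRef="swab"/>
    <bpmn:sequenceFlow id="f2" sourceRef="swab" targetRef="result"/>
    <bpmn:sequenceFlow id="f3" sourceRef="result" targetRef="isolate" name="yes"/>
    <bpmn:sequenceFlow id="f4" sourceRef="result" targetRef="end" name="no"/>
    <bpmn:sequenceFlow id="f5" sourceRef="isolate" targetRef="end"/>
  </bpmn:process>
</bpmn:definitions>"""

NODE_TAGS = ("startEvent", "task", "exclusiveGateway", "endEvent")


def load_process(xml_text: str) -> Tuple[Dict[str, Tuple[str, str]], List[Tuple[str, str]]]:
    """Return node id -> (element type, label) and the list of (source, target) flows."""
    root = ET.fromstring(xml_text)
    process = root.find("bpmn:process", BPMN_NS)
    if process is None:
        raise ValueError("no bpmn:process element found")
    nodes: Dict[str, Tuple[str, str]] = {}
    for tag in NODE_TAGS:
        for el in process.findall(f"bpmn:{tag}", BPMN_NS):
            # Unnamed elements fall back to their id as a label.
            nodes[el.get("id")] = (tag, el.get("name", el.get("id")))
    flows = [
        (f.get("sourceRef"), f.get("targetRef"))
        for f in process.findall("bpmn:sequenceFlow", BPMN_NS)
    ]
    return nodes, flows


nodes, flows = load_process(BPMN_XML)
for source, target in flows:
    print(f"{nodes[source][1]} -> {nodes[target][1]}")
```

Only a handful of element types are handled; a full BPMN model would also contain lanes, data objects, and further gateway and event types.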

PROTECTION builds upon these foundations and focuses on methodological issues in modeling, managing and mining healthcare/clinical KDDI processes for the management of worldwide pandemics. In more detail, the long-term aims of our proposed framework are to provide:

- (1) clinical stakeholders with a set of methodologies/tools to manage KDDI processes for the prevention and management of worldwide pandemics;

- (2) healthcare decision-makers with methodologies/tools for monitoring KDDI processes and resource consumption in their organizations, to control the care quality and the social impact of such pandemic-related processes;

- (3) software designers with a set of building-blocks and methodologies to support the efficient development of KDDI process systems devoted to the management of worldwide pandemics.

In terms of its conceptual and software structure, PROTECTION is articulated into the following research assets/components:

- (1) clinical and healthcare KDDI process modeling and management, to represent knowledge of the target application scenario, plus its conceptual interconnections;

- (2) clinical and healthcare KDDI process mining, to both discover implicit processes (or process fragments) and to perform an “a posteriori” comparison between designed and actual processes;

- (3) specific software architecture, for: (i) modeling, managing and evaluating healthcare and clinical KDDI processes for preventing and managing pandemic events, and (ii) continuous KDDI process mining, to monitor actual processes and obtain useful feedback for improvement.
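Discovering implicit processes from execution data often starts from a directly-follows graph, which counts how often one activity immediately follows another within the same case. The sketch below computes such a graph from a small hypothetical event log and applies a simple frequency filter; the log, activity names, and `min_count` threshold are assumptions for illustration, and dedicated process mining libraries (e.g., PM4Py) provide complete discovery algorithms.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical event log: case identifier -> activities in timestamp order.
EVENT_LOG: Dict[str, List[str]] = {
    "case1": ["triage", "swab", "positive", "isolation", "negative_swab", "discharge"],
    "case2": ["triage", "swab", "negative", "discharge"],
    "case3": ["triage", "swab", "positive", "hospitalization", "negative_swab", "discharge"],
}


def directly_follows(log: Dict[str, List[str]]) -> Counter:
    """Count how often activity a is immediately followed by activity b across all cases."""
    dfg: Counter = Counter()
    for trace in log.values():
        for a, b in zip(trace, trace[1:]):
            dfg[(a, b)] += 1
    return dfg


def frequent_edges(dfg: Counter, min_count: int) -> List[Tuple[str, str]]:
    """Keep only edges observed at least min_count times (simple noise filtering)."""
    return sorted(edge for edge, n in dfg.items() if n >= min_count)


dfg = directly_follows(EVENT_LOG)
print(frequent_edges(dfg, min_count=2))
```

Filtering by frequency keeps the mainstream behavior and hides rare paths, which is a trade-off: in clinical settings, rare paths may be exactly the deviations worth inspecting.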

In addition to these long-term objectives, we emphasize the importance of adaptive learning mechanisms within PROTECTION. These mechanisms facilitate dynamic updates to healthcare and clinical processes based on real-time data and evolving pandemic conditions, supporting a proactive and flexible response. By leveraging advanced machine learning techniques and predictive analytics (e.g., [14,15]), our system can identify emerging trends, potential hotspots, and bottlenecks in healthcare resource management. This enables early intervention, which can reduce the spread of infectious diseases and optimize resource allocation.
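As a deliberately simple stand-in for the predictive analytics mentioned above, the sketch below flags potential hotspots by comparing each region's cases in the last seven days with the seven days before. The week-over-week ratio, the 1.5 threshold, and the synthetic region data are all assumptions; this is a screening heuristic, not a forecasting model.

```python
from typing import Dict, List


def weekly_growth(daily_cases: List[int]) -> float:
    """Ratio between cases in the last 7 days and in the 7 days before."""
    if len(daily_cases) < 14:
        raise ValueError("at least 14 days of data are required")
    last_week = sum(daily_cases[-7:])
    previous_week = sum(daily_cases[-14:-7])
    if previous_week == 0:
        # No previous cases: any new case is unbounded growth; none means no change.
        return float("inf") if last_week > 0 else 1.0
    return last_week / previous_week


def flag_hotspots(series: Dict[str, List[int]], threshold: float = 1.5) -> List[str]:
    """Return regions whose week-over-week growth ratio reaches the threshold."""
    return sorted(region for region, cases in series.items() if weekly_growth(cases) >= threshold)


# Synthetic daily counts for two hypothetical regions.
series = {
    "region_a": [10] * 7 + [20] * 7,  # doubling week over week
    "region_b": [10] * 14,            # stable
}
print(flag_hotspots(series))  # ['region_a']
```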

This adaptive approach empowers clinical stakeholders not only to respond to immediate healthcare needs but also to forecast and prepare for future challenges, so that preparedness strategies evolve in parallel with the progression of a pandemic. Furthermore, the integration of continuous monitoring, data aggregation, and real-time feedback loops provides high flexibility and responsiveness in decision-making processes (e.g., [16,17]).

The resulting dynamic, data-driven adjustment capability supports healthcare system resilience, adaptability, and efficiency in managing current and future pandemic threats. By fostering continuous adaptation and learning, the system enhances the overall preparedness of healthcare infrastructures and improves outcomes during critical health crises.

1.1. Research Questions

This paper aims to answer the following research questions:

A data-centric approach allows healthcare decision-makers to dynamically track pandemic evolution, patient care pathways, and resource consumption patterns. By integrating real-time data sources, such as Electronic Health Records, epidemiological surveillance systems and IoT-enabled medical devices, PROTECTION ensures a continuous flow of critical healthcare information.

Process mining techniques are crucial for analyzing, optimizing, and enhancing healthcare processes during pandemics. The PROTECTION framework integrates process discovery, conformance checking and enhancement methods to monitor and refine pandemic-related healthcare operations.

Traditional pandemic response systems often rely on static healthcare guidelines that do not adapt quickly to evolving epidemiological patterns. Adaptive learning mechanisms, integrated within the PROTECTION framework, enable healthcare systems to dynamically update response strategies in real time.

1.2. Paper Key Contributions

Following this main vision, this paper introduces and discusses in depth the PROTECTION framework. Rather than positioning PROTECTION as a completely new methodological framework, we emphasize its novelty as a synthesis and application framework that integrates and adapts existing concepts into a coherent, data-driven process for pandemic prevention and control. Specifically, we highlight how PROTECTION unifies knowledge discovery, decision-making, and data analytics perspectives into a single actionable platform, demonstrated through real-life case studies. In more detail, we describe the anatomy and main functionalities of PROTECTION, along with several case studies showing how the proposed framework can effectively deal with the complex domain of pandemic control and prevention. In particular, our case studies comprise several experimental parts where we show how PROTECTION, by using multidimensional big data analytics methodologies, can support actionable knowledge discovery and analytics, thus becoming an effective platform in the hands of healthcare decision-makers for pandemic prevention and control. This vision reinforces the well-understood impact of big data analytics technologies in healthcare, as indicated by several recent studies in the area (e.g., [18,19]). Overall, in this paper we provide the following contributions:

- presenting PROTECTION as a novel synthesis and application framework, grounded in KDDI processes, to enhance pandemic prevention and control;

- providing a formalization of KDDI processes as a unifying paradigm that explicitly combines knowledge-intensive, decision-intensive, and data-intensive perspectives into a single methodological framework for complex healthcare scenarios;

- integrating adaptive learning mechanisms, big data analytics, and AI-driven predictive models to dynamically update healthcare processes based on real-time data and evolving pandemic conditions;

- employing process mining techniques to extract insights, compare designed and actual processes, and continuously improve healthcare operations for better pandemic response;

- validating PROTECTION through real-life applications, in order to demonstrate its effectiveness in managing pandemics by leveraging multidimensional big data analytics for decision-making and resource optimization.

1.3. Paper Organization

The remainder of this paper is organized as follows. Section 2 reviews related work relevant to our research. In Section 3, we present the reference architecture of PROTECTION. Section 4 describes in detail the anatomy and main functionalities of PROTECTION. After that, in Section 5, we provide several case studies demonstrating how PROTECTION addresses the complexities of pandemic control and prevention. Section 6 provides a vertical scenario representative of the platform. Finally, Section 7 concludes the paper and outlines future research directions for further expanding the PROTECTION framework.

2. Related Work

In this Section, we analyze important recent research proposals related to our work. In particular, we identify three research areas that are relevant to and influence our work, namely: (i) pandemic data source modeling; (ii) clinical guideline and care pathway representation and management formalisms; (iii) process modeling and mining.

2.1. Pandemic Data Source Modeling

How should pandemic data sources be modeled? This challenging question can be investigated by looking carefully at the recent COVID-19 pandemic outbreak. This critical event has attracted a great deal of research in many intertwined fields, from healthcare and medicine to bioinformatics, from data science to artificial intelligence, from risk analysis to multi-parameter optimization, and so forth. Consequently, the worldwide scientific community has devoted great effort to modeling COVID-19-related data and information and making them publicly available (e.g., [20,21,22]). Among these emerging data sources, which offer directions for modeling pandemic data with specific reference to COVID-19, we can identify the following ones.

First, the European Centre for Disease Prevention and Control, an agency of the European Union, provides a large number of open healthcare data repositories describing the worldwide history of this pandemic [23]. One of the main sources on the evolution of the pandemic is the COVID-19 Data Repository at Johns Hopkins University [24]. Another example of a repository of multiple datasets related to healthcare and social COVID-related issues is [25]. For the Italian context, the Istituto Superiore di Sanità provides information and historical data about the COVID-19 healthcare situation [22]. Second, open clinical data repositories are relevant to the scope of PROTECTION as well. Indeed, although clinical datasets related to COVID-19 are complex to build and share for scientific purposes, some attempts have been made to allow scientists to analyze such data (e.g., [26,27,28]).
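Repositories such as the Johns Hopkins one [24] have distributed some of their time series as wide CSV tables of cumulative counts, so a typical first modeling step is converting them into daily increments. The sketch below assumes a layout of that kind (four location columns followed by one column per date); the regions and values are synthetic, and clamping negative differences caused by retroactive corrections to zero is one simplifying choice among several.

```python
import csv
import io
from typing import Dict, List

# Synthetic data in an assumed wide layout: 4 location columns, then one
# column per date holding cumulative confirmed cases.
SAMPLE_CSV = """Province/State,Country/Region,Lat,Long,3/1/20,3/2/20,3/3/20,3/4/20
,Region A,0.0,0.0,10,15,15,30
North,Region B,0.0,0.0,5,8,7,12
"""


def daily_new_cases(csv_text: str) -> Dict[str, List[int]]:
    """Convert cumulative counts into daily increments, one list per location."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    n_dates = len(header) - 4
    result: Dict[str, List[int]] = {}
    for row in reader:
        if not row:
            continue
        province, country = row[0], row[1]
        location = f"{country}/{province}" if province else country
        cumulative = [int(v) for v in row[4:4 + n_dates]]
        # Negative differences (retroactive corrections) are clamped to zero.
        result[location] = [max(0, b - a) for a, b in zip(cumulative, cumulative[1:])]
    return result


print(daily_new_cases(SAMPLE_CSV))
```

Each returned list is one element shorter than the number of dates, because the first cumulative value has no previous day to difference against.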

Further, since the treatment and prevention of COVID-19 received attention from healthcare institutions worldwide, which issued continuously evolving recommendations, these recommendations can be interpreted as authoritative clinical and healthcare guidelines and constitute an invaluable source of novel research trends and conceptual blueprints for future research efforts.

These guidelines typically take the form of procedures or technical guidance for different social, healthcare and clinical contexts (e.g., [29,30,31]). Finally, bibliographic repositories are also important sources of knowledge and information. Indeed, different publishers and health organizations launched initiatives to make the most recent scientific articles about COVID-19 available (e.g., [21]), thus fostering research exchange and cooperation that ultimately enhance innovation in this research area.

2.2. Clinical Guidelines and Care Pathways

Clinical guidelines (GLs) consist of therapeutic and diagnostic recommendations encoding the “best practice” to care for specific patient categories. GLs are defined as “systematically developed statements to assist practitioner and patient decisions about appropriate health care in specific clinical circumstances”.

Care pathways (CPs) are instead defined as “structured multidisciplinary care plans which detail essential steps in the care of patients with a specific clinical problem” [32]. CPs are often the concrete application of GLs, where it is necessary to explicitly identify decision-based activities and all the complex clinical knowledge and data needed to suitably perform the planned activities. Both GLs and CPs are very relevant in PROTECTION, as they support knowledge modeling in the form of clinical and healthcare processes.
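The decision-based activities of a CP can be made explicit by pairing each step with a rule that selects the next step from patient data. The sketch below encodes a hypothetical, simplified pathway for swab-positive individuals in this way; the step names, the risk score, and `RISK_THRESHOLD` are placeholders chosen for illustration and are not clinical recommendations or part of any cited guideline.

```python
from typing import Any, Callable, Dict, List, Optional

Observation = Dict[str, Any]

# Hypothetical, simplified care pathway for swab-positive individuals.
# Each step maps to a decision rule choosing the next step (None = pathway ends).
# RISK_THRESHOLD is a placeholder, NOT a clinical recommendation.
RISK_THRESHOLD = 0.7

PATHWAY: Dict[str, Callable[[Observation], Optional[str]]] = {
    "assessment": lambda o: "hospital_care" if o["risk_score"] > RISK_THRESHOLD else "home_monitoring",
    "home_monitoring": lambda o: "hospital_care" if o["risk_score"] > RISK_THRESHOLD else "retest",
    "hospital_care": lambda o: "retest" if o["stable"] else "hospital_care",
    "retest": lambda o: "discharge" if o["swab_negative"] else "home_monitoring",
    "discharge": lambda o: None,
}


def run_pathway(observations: List[Observation], start: str = "assessment") -> List[str]:
    """Apply one decision per observation and return the sequence of visited steps."""
    visited = [start]
    step: Optional[str] = start
    for obs in observations:
        step = PATHWAY[step](obs)
        if step is None:
            break
        visited.append(step)
    return visited


patient = [
    {"risk_score": 0.2, "stable": True, "swab_negative": False},
    {"risk_score": 0.3, "stable": True, "swab_negative": False},
    {"risk_score": 0.1, "stable": True, "swab_negative": True},
]
print(run_pathway(patient))  # ['assessment', 'home_monitoring', 'retest', 'discharge']
```

Separating the pathway structure (the dictionary) from its execution (`run_pathway`) mirrors the distinction between a designed CP and its instantiation for a specific patient.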

Several formalisms and tools have been proposed to represent, execute and verify GLs, often integrating formalized medical knowledge with data and workflow aspects, and supporting the monitoring of GLs over time (e.g., [33]). Reviews of the state of the art of these models for Decision Support Systems (DSS) are given in [34,35]. When GLs are instantiated into a CP, their execution by various actors needs to be coordinated, which may be performed by both computerized guideline systems and Business Process Management (BPM) systems (e.g., [36,37]).

2.3. Process Modeling and Mining

Clinical process management may also benefit from establishing a relationship with BPM [38,39], which can rely on growing general interest and on many proprietary and open-source tools. A wealth of data and information is generated during the execution of clinical processes, which fosters the adoption of BPM-like approaches to model and verify the observed behavior. The intrinsic complexity of the health field calls for models that adapt to change and are able to deal with incomplete information, i.e., models that enjoy flexibility. At the same time, the involved entities are expected to behave in agreement with the specific medical/healthcare knowledge, regulations, norms, business rules, protocols and temporal constraints (e.g., [40]). Such GL systems (either BPM-based or not) require medical knowledge formalization, often relying on ontologies. Ontologies have been used extensively in the medical domain for many years, but still deserve research efforts, in particular focusing on process-aware knowledge representation and on data-intensive process models (e.g., [41,42,43,44]).

Data from already-executed CPs enable the discovery of “actual” processes, as well as of their emerging correlations with healthcare and clinical data. Comparing designed and “actual” processes may help discover either errors in following a clinical guideline or new, partially unknown, best practices that could be suitably integrated into clinical guidelines/pathways. Recent approaches to complex processes try to take advantage of distributed architectures, tackling the mining of new processes (e.g., [45]), complex multidimensional process mining (e.g., [46]), and the monitoring of compliance of process executions (e.g., [47,48]).
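Comparing designed and actual processes can be illustrated with a crude transition-based check: the designed pathway is reduced to its allowed direct transitions, and each recorded case is scanned for transitions the design does not permit. The model, the log, and the "share of allowed transitions" score are hypothetical simplifications; established conformance checking techniques (e.g., token-based replay or alignments) are considerably more precise.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical designed pathway, expressed as its allowed direct transitions.
DESIGNED: Set[Edge] = {
    ("triage", "swab"), ("swab", "positive"), ("swab", "negative"),
    ("positive", "isolation"), ("positive", "hospitalization"),
    ("isolation", "negative_swab"), ("hospitalization", "negative_swab"),
    ("negative_swab", "discharge"), ("negative", "discharge"),
}


def deviations(trace: List[str], model: Set[Edge]) -> List[Edge]:
    """Transitions observed in the trace that the designed model does not allow."""
    return [(a, b) for a, b in zip(trace, trace[1:]) if (a, b) not in model]


def conformance(log: Dict[str, List[str]], model: Set[Edge]) -> Dict[str, Tuple[float, List[Edge]]]:
    """Per case: share of allowed transitions (a crude fitness score) and the deviations."""
    report = {}
    for case, trace in log.items():
        dev = deviations(trace, model)
        n_transitions = max(len(trace) - 1, 1)
        report[case] = (1 - len(dev) / n_transitions, dev)
    return report


actual_log = {
    "case1": ["triage", "swab", "positive", "isolation", "negative_swab", "discharge"],
    "case4": ["triage", "swab", "positive", "discharge"],  # skips the follow-up swab
}
for case, (fitness, dev) in conformance(actual_log, DESIGNED).items():
    print(case, round(fitness, 2), dev)
```

A deviation such as `("positive", "discharge")` may indicate either an error in following the guideline or an emerging practice worth reviewing, which is exactly the ambiguity discussed above.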

Furthermore, researchers have devoted considerable attention to integrating diverse data sources and methodologies to enhance the modeling of healthcare processes. Recent works (e.g., [49,50,51]) have emphasized the importance of synthesizing data from clinical records, real-time patient monitoring systems, social determinants of health, and external environmental factors to create more comprehensive and dynamic process models. This integrated approach not only improves the accuracy of pandemic predictions but also enables more effective resource allocation, optimized treatment strategies, and personalized care.

In addition, the incorporation of advanced artificial intelligence techniques, such as Deep Learning (DL) (e.g., [52,53]), Natural Language Processing (NLP) (e.g., [54,55]) and Reinforcement Learning (RL) (e.g., [56,57]), has shown promise in analyzing unstructured healthcare data, including medical notes, clinical research articles, and even patient-reported outcomes, potentially leading to more individualized treatment plans. Additionally, the integration of real-time data from wearable devices and IoT systems offers a valuable layer of monitoring, enabling early detection of deteriorating health conditions or the emergence of new health threats.

By employing these technologies, healthcare systems can better understand complex patterns in patient care and outcomes.

Such intersection of AI, data integration, and healthcare process modeling holds significant potential for improving decision-making speed and accuracy, ultimately resulting in more proactive and effective pandemic responses. As the healthcare landscape continues to evolve, fostering cross-disciplinary collaboration and continuously refining these integrated models will be essential in building resilient, adaptable systems capable of effectively addressing both current and future global health challenges.

Table 1 summarizes the key existing approaches in pandemic management, comparing them based on their methodology. This comparison highlights the limitations of current systems and shows how PROTECTION addresses these gaps, as follows.

(i) Overcoming High Computational Resource Demands. One of the primary limitations identified in the table for “Big Data Analytics for Pandemic Management” is the high computational resources required to analyze large datasets. The PROTECTION framework mitigates this issue by leveraging cloud-based infrastructures and edge-computing architectures, which allow for distributed processing and more efficient resource utilization. This decentralization reduces the burden on central servers and facilitates real-time data processing, even in resource-constrained settings. Additionally, the use of lightweight machine learning algorithms within the framework reduces the computational intensity of predictive models, enabling faster processing at lower computational cost.

(ii) Addressing Data Privacy Concerns. Data privacy and security are critical concerns in healthcare applications. While the PROTECTION framework does not focus on data privacy as its primary research area, it has been designed to accommodate privacy-preserving technologies such as federated learning and differential privacy. These technologies enable the analysis of healthcare data without exposing sensitive patient information, which is particularly important during global health crises like pandemics. With federated learning, data can remain localized on patient devices, and only aggregated insights are shared, which supports compliance with privacy regulations (e.g., GDPR, HIPAA). Moreover, the framework integrates Secure Multi-Party Computation (SMPC) protocols to protect data from potential breaches while still allowing meaningful analysis of healthcare trends.

(iii) Real-Time Integration of Multidimensional Data. PROTECTION addresses a limitation of existing approaches, such as those found in process mining (e.g., [45,46,47,48]), by incorporating real-time, multidimensional data streams. Unlike retrospective process mining methods that primarily focus on historical data, PROTECTION enables dynamic process monitoring and adaptation. By continuously integrating real-time patient monitoring data, clinical records, and external environmental factors (e.g., weather patterns, social determinants of health), PROTECTION aims to generate accurate and up-to-date process models. This allows healthcare systems to better anticipate and respond to rapidly changing pandemic conditions.

(iv) Scalability and Adaptivity. The framework is designed to be scalable and adaptive, which is critical in the face of evolving pandemic scenarios. For instance, PROTECTION incorporates adaptive decision-support mechanisms that can dynamically adjust to changes in the healthcare environment, such as the appearance of new virus variants, changes in government policies, or fluctuations in healthcare resources. This adaptability addresses a limitation of many existing pandemic management tools, which struggle to respond to rapidly evolving circumstances.

(v) Incorporation of AI and Real-Time Decision-Making. While existing systems (e.g., [49,50,51,52,53,54,55,56,57]) do not fully integrate AI-driven insights for decision-making, PROTECTION uses advanced AI techniques such as DL, NLP, and RL for real-time predictions and personalized treatment plans. These AI models are trained on large datasets, including patient history, clinical guidelines, and environmental data, allowing healthcare systems to quickly adapt to patient needs, optimize resource allocation, and make informed decisions during a pandemic.
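As an illustration of the federated learning mentioned in point (ii), the sketch below implements the server-side aggregation step of the FedAvg algorithm: each site trains locally and sends only its parameter vector, which the aggregator averages with weights proportional to local sample counts. The site names, parameter values, and sample counts are hypothetical, and this is not PROTECTION's implementation; parameter averaging alone gives no formal privacy guarantee, which is why it is usually combined with techniques such as differential privacy or SMPC.

```python
from typing import List, Sequence


def fed_avg(local_params: Sequence[Sequence[float]], n_samples: Sequence[int]) -> List[float]:
    """Aggregate per-site model parameters, weighting each site by its number of samples.

    Only parameter vectors reach the aggregator; raw patient records stay at each site.
    """
    if not local_params or len(local_params) != len(n_samples):
        raise ValueError("need one sample count per parameter vector")
    dim = len(local_params[0])
    if any(len(p) != dim for p in local_params):
        raise ValueError("all parameter vectors must have the same length")
    total = sum(n_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    return [
        sum(p[i] * n for p, n in zip(local_params, n_samples)) / total
        for i in range(dim)
    ]


# Two hypothetical hospitals with locally trained linear-model weights.
hospital_a = [1.0, 2.0]  # trained on 100 local records
hospital_b = [3.0, 6.0]  # trained on 300 local records
print(fed_avg([hospital_a, hospital_b], [100, 300]))  # [2.5, 5.0]
```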

Table 1 lists the approach-related drawbacks of the different pandemic management strategies. To address these shortcomings, the PROTECTION framework integrates a data-centric, knowledge-driven, and decision-supportive approach based on KDDI processes. In contrast to many big data analytics solutions, which are limited to retrospective analysis and lack real-time flexibility, PROTECTION integrates adaptive learning processes and real-time process monitoring, enabling dynamic modifications to healthcare workflows based on real-time data. Furthermore, PROTECTION bridges the gap between healthcare guidelines and responsive clinical operations by combining process mining and decision automation.

Unlike conventional BPM systems, which frequently lack semantic depth and contextual awareness, PROTECTION improves process models by exploiting knowledge-intensive structures such as care pathways and ontologies. We recognize that privacy and computational resource limitations, which are well-known problems in big data systems, are important concerns. Although they fall outside the main scope of PROTECTION at this time, our conclusions explicitly identify them as crucial areas for further investigation. Overall, PROTECTION provides an extensible and integrated architecture that addresses the main issues of earlier pandemic prevention and control research.

3. The PROTECTION Reference Architecture

In this Section, we present the reference architecture of PROTECTION, whose final goal is to capture the many facets of pandemic prevention and control, as demonstrated by the recent worldwide COVID-19 pandemic. The proposed architecture is modular and captures the complex interaction of process modeling, management methods, and data mining approaches in the context of countering such virulent viruses.

PROTECTION looks at pandemic events through a scientific lens, and it aims at uncovering the fundamental processes that regulate their transmission patterns, the efficacy of various intervention strategies, and the critical role of data-driven methods in shaping public health policy. Through rigorous analysis, this study not only elucidates the complexity inherent in pandemic management, but also emphasizes the importance of adaptable methods based on strong analytical frameworks.

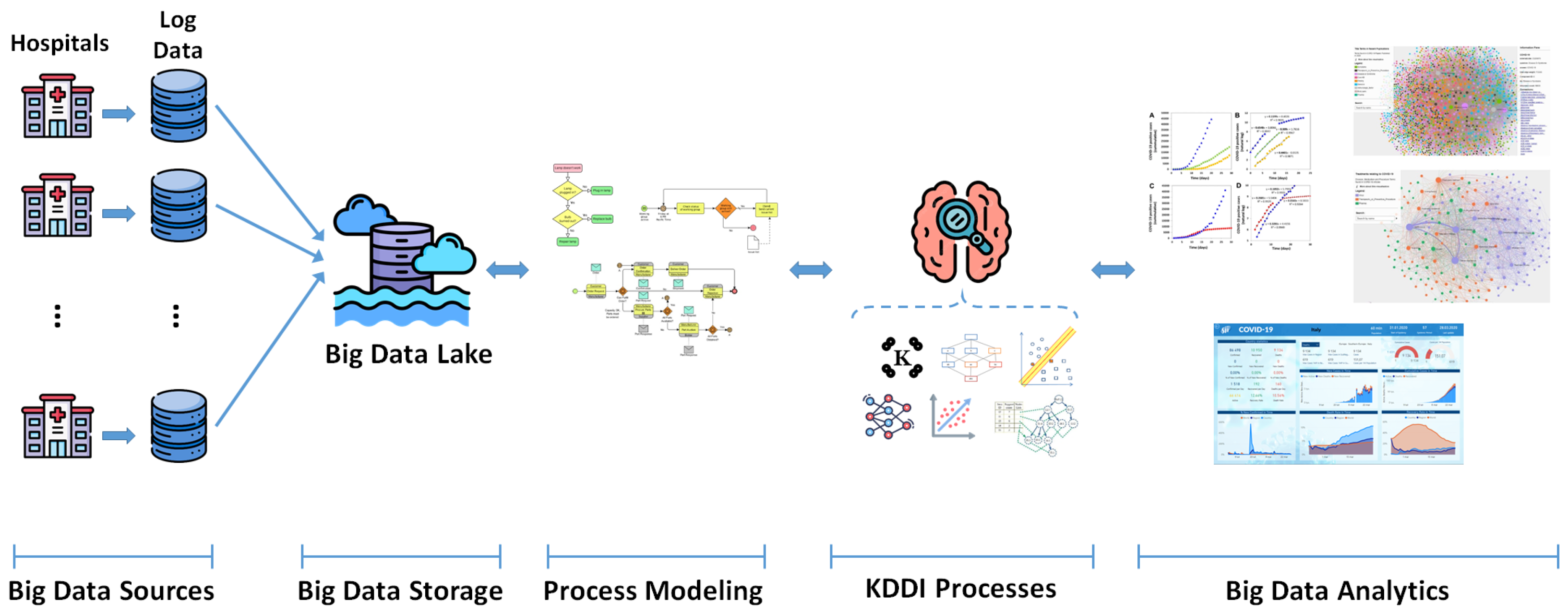

Figure 1 shows the reference architecture of our proposed framework PROTECTION. Our reference architecture consists of several component layers, namely (i) Big Data Sources layer; (ii) Big Data Storage layer; (iii) Process Modeling layer; (iv) KDDI Processes layer; (v) Big Data Analytics layer. In the following, we describe these layers in detail.

Big Data Sources Layer. Healthcare data logs include valuable information such as patient demographics, clinical symptoms, laboratory findings, treatment procedures, and utilization trends. Using such comprehensive data, we can build complex models that reflect the spatio-temporal development of the pandemic, evaluate the success of containment methods, and improve resource allocation in healthcare settings. Furthermore, using healthcare data logs allows for the inclusion of real-time information, permitting dynamic modeling techniques that react to changing epidemiological patterns and healthcare demands. The rigorous examination of these massive data sources provides useful insights for optimizing pandemic response efforts and improving public health preparedness measures.

Big Data Storage Layer. Cloud data lakes provide scalable and cost-effective storage for pandemic-management data sources such as epidemiological surveillance, genomic sequences, healthcare records, and social media sentiment analysis. Using the flexibility and accessibility of Cloud infrastructures, we can seamlessly combine diverse information, allowing for holistic modeling techniques that reflect the complicated interaction of numerous factors influencing disease transmission and response tactics. By implementing Cloud data lakes, we aim to demonstrate the usefulness of such storage solutions in enabling data-driven insights, thereby contributing to the refinement of our process modeling framework and the optimization of pandemic mitigation efforts.

Process Modeling Layer. By taking advantage of advanced approaches such as machine learning, statistical modeling, and NLP, we can extract valuable insights from the various datasets stored in big data repositories. These datasets include epidemiological records, clinical data, genetic sequences, movement patterns, social media sentiment analysis, and other information, allowing for a more thorough understanding of virus behavior and its implications for public health systems. This strategy not only allows for real-time monitoring and prediction of disease patterns, but also enables evidence-based decision-making for successful pandemic management strategies.

KDDI Processes Layer. Incorporating knowledge-driven decision-making processes within data-intensive techniques applied to extensive Cloud data lakes is an effective approach that can be adopted in this layer of PROTECTION. By leveraging methodologies such as machine learning algorithms, statistical modeling, and NLP, valuable insights can be extracted from the diverse and voluminous datasets stored within these repositories, which encompass epidemiological records, clinical data, genomic sequences, socio-economic indicators, and mobility patterns. By harnessing big data analytics tools and techniques (e.g., [58,59,60]), coupled with knowledge-driven decision-making processes (e.g., [61]), we can also gain a comprehensive understanding of pandemic dynamics (e.g., [62,63]). This facilitates informed decision-making in public health policy formulation, resource allocation, and intervention strategies aimed at mitigating the spread of pandemics and minimizing their impact on society.

Big Data Analytics Layer. We can manage and analyze massive amounts of heterogeneous big data stored in PROTECTION repositories by employing approaches such as distributed computing frameworks (e.g., Hadoop, Spark, Hive), scalable data processing engines, and Cloud-native analytics services. Machine learning algorithms, DL models, and statistical techniques enable the extraction of significant insights from a wide range of datasets, including genomic sequences, clinical data, mobility patterns, sentiment analysis from social media platforms, and epidemiological records. These analytics tools and methodologies enable us to uncover hidden patterns, correlations, and helpful insights, which are crucial for driving evidence-based decision-making and establishing successful public health initiatives in response to virulent epidemics. In particular, the strategy of PROTECTION consists in exploiting recent multidimensional big data analytics methodologies, given their proven effectiveness in several application scenarios, including healthcare analytics. In summary, these methodologies apply knowledge discovery techniques to multidimensionally shaped big datasets, in order to obtain the full benefits of powerful multidimensional modeling paradigms.
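One common reading of "multidimensionally shaped" data is the OLAP view: facts carry a measure (here, new cases) plus several dimensions, and analysis rolls the measure up over any subset of those dimensions. The sketch below computes such roll-ups and the full cube over three hypothetical dimensions in plain Python; the dimensions and synthetic rows are assumptions, and at scale the same operation is typically delegated to engines such as Spark (`DataFrame.cube`, `DataFrame.rollup`) or Hive (`GROUP BY ... WITH CUBE`).

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

DIMENSIONS = ("region", "week", "age_group")

# Synthetic fact rows: (region, week, age_group, new_cases).
FACTS: List[Tuple[str, str, str, int]] = [
    ("north", "W1", "0-39", 120),
    ("north", "W1", "40+", 80),
    ("north", "W2", "0-39", 150),
    ("south", "W1", "40+", 60),
    ("south", "W2", "40+", 90),
]


def roll_up(facts, keep: Sequence[str]) -> Dict[Tuple[str, ...], int]:
    """Sum the measure over every dimension not listed in `keep`."""
    positions = [DIMENSIONS.index(d) for d in keep]
    totals: Dict[Tuple[str, ...], int] = defaultdict(int)
    for row in facts:
        totals[tuple(row[i] for i in positions)] += row[-1]
    return dict(totals)


def cube(facts) -> Dict[Tuple[str, ...], Dict[Tuple[str, ...], int]]:
    """Compute the roll-up for every subset of dimensions (2^3 = 8 cuboids)."""
    return {
        dims: roll_up(facts, dims)
        for k in range(len(DIMENSIONS) + 1)
        for dims in combinations(DIMENSIONS, k)
    }


print(roll_up(FACTS, ("region",)))  # {('north',): 350, ('south',): 150}
print(roll_up(FACTS, ("week",)))    # {('W1',): 260, ('W2',): 240}
print(len(cube(FACTS)))             # 8
```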

Building upon the detailed architecture of PROTECTION, we highlight the integration of real-time feedback mechanisms throughout each layer. This continuous feedback loop allows for iterative refinement of the pandemic management strategies in response to evolving data and circumstances. As new epidemiological data becomes available, it feeds directly into the Big Data Sources Layer, thus informing updated models and simulations within the Process Modeling Layer and KDDI Processes Layer. The described real-time data flow not only enhances the responsiveness of the system but also facilitates the rapid identification of emerging trends, potential risks, and areas requiring intervention.

By incorporating adaptive learning mechanisms (e.g., [64,65]) that are continuously updated with the latest available data, the system can support proactive rather than reactive decision-making, leading to more effective containment strategies and resource allocation. Furthermore, this dynamic system enables the real-time evaluation of intervention measures, providing immediate insights into their effectiveness and allowing for prompt adjustments. This flexibility, in turn, strengthens the role of adaptive feedback mechanisms within the PROTECTION framework.

Specifically, integrating adaptive learning mechanisms can provide several key advantages to PROTECTION, such as:

Real-Time Process Optimization: continuously refines healthcare workflows by analyzing real-time data and adjusting pandemic response strategies accordingly;

Enhanced Predictive Capabilities: leverages big data analytics to forecast emerging outbreaks, resource shortages, and potential healthcare bottlenecks;

Automated Decision Support: assists healthcare decision-makers by dynamically updating guidelines and response measures based on evolving epidemiological trends;

Increased System Resilience: enhances the adaptability of healthcare systems, ensuring they can respond effectively to new variants, changing public health conditions, and unforeseen crises.
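As a minimal illustration of a learning mechanism that updates with every new observation, the sketch below applies simple exponential smoothing to a daily indicator and flags days whose value exceeds the current forecast by a configurable ratio. The class name, the smoothing factor, the alert ratio, and the data are assumptions chosen for illustration; the adaptive learning models actually used in PROTECTION (e.g., [64,65]) may differ.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class AdaptiveForecaster:
    """Online forecaster for one daily indicator (e.g., ICU admissions).

    Simple exponential smoothing: the level is updated with every new
    observation, so the one-step-ahead forecast tracks the latest data.
    """
    alpha: float = 0.5        # smoothing factor in (0, 1]; assumed value
    alert_ratio: float = 1.5  # flag values 50% above the forecast; assumed value
    level: Optional[float] = None
    alerts: List[int] = field(default_factory=list)

    def forecast(self) -> Optional[float]:
        """One-step-ahead forecast (None until the first observation)."""
        return self.level

    def update(self, day: int, value: float) -> float:
        # Compare the new observation with the forecast made before seeing it.
        if self.level is not None and value > self.alert_ratio * self.level:
            self.alerts.append(day)
        # Exponential smoothing: blend the new value into the current level.
        if self.level is None:
            self.level = float(value)
        else:
            self.level = self.alpha * value + (1 - self.alpha) * self.level
        return self.level

model = AdaptiveForecaster()
for day, value in enumerate([10, 12, 11, 30, 28]):  # hypothetical daily counts
    model.update(day, value)
print(model.alerts, model.forecast())  # [3] 24.25
```

A larger `alpha` reacts faster to new data at the cost of noisier forecasts; in practice such parameters would themselves be tuned on historical data.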

4. The Emerging PROTECTION Methodology

The proposed framework PROTECTION is part of a long-term computer science and artificial intelligence project focusing on theoretical, methodological, and application-oriented aspects for the development of KDDI process systems able to deal with the complex domain of pandemic control and prevention. In this Section, we describe some important aspects of the emerging methodology induced by the overall PROTECTION proposal.

To address the methodological issues of process modeling, management, and mining, our methodology supports pandemic control policies at large, with a special emphasis on integrating these methodologies with the emerging big data trend, thus leading to the innovative notion of data-centric process modeling, management, and mining for pandemic scenarios. As a proof of concept, PROTECTION targets the management of pandemics.

While considerable attention has been devoted to healthcare and clinical data analysis and mining for pandemic management, little attention has been paid so far to longer-term perspectives, mainly those focusing on the KDDI processes that use and generate such data. The main goal of PROTECTION is to propose a methodological approach, and related software tools, to face future pandemics (and the continuation of the current one) by considering the healthcare and clinical processes enacted (and to be enacted) to fight pandemics. In summary, we shift the focus from data alone to KDDI processes, which must be suitably designed and executed to bring such critical pandemics under control through a seamless integration of knowledge-, decision-, and data-related aspects.

The content of our proposed framework is drawn from open-access repositories and from specific clinical and healthcare resources. This content also serves as a reference for the synthetic datasets. In addition, we have used technical guidelines issued during pandemics for patients in the USA, in Europe, and by the World Health Organization (WHO) [23,29,30]. Historical datasets from Johns Hopkins University [24] are also considered. Moreover, we considered specific healthcare datasets related to the pharmacological monitoring of patients receiving monoclonal antibody therapies and to the upcoming pharmacovigilance activities linked to pandemic-related vaccines. For clinical datasets, we relied on clinical data repositories from the pandemic research database [28], which include electronic medical records of predominantly ambulatory patients.

For the objectives of our research project, we present a technique based on the generation of synthetic datasets. The synthetic datasets consist of multidimensional healthcare and epidemiological data, synthesized from the schemas and attribute types observed in various public sources such as EHR systems, pandemic surveillance systems, and process execution logs (e.g., [24,28,30]). Each record in the synthetic datasets is modeled according to attributes such as (e.g., [31,35,37]): (i) specific healthcare actions performed; (ii) classification of events (e.g., diagnosis, treatment, intervention); (iii) entities performing the action, such as healthcare providers; (iv) execution times; (v) anonymized patient identifiers; (vi) COVID-19 test type; (vii) prescribed treatments; and (viii) recovery statuses or further medical actions required. This provides a realistic and privacy-preserving alternative for research in pandemic control.
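The record structure listed above can be sketched as follows. This is a minimal, hypothetical generator: the value domains, the seed, and the salted-hash pseudonymization of patient identifiers are our assumptions, not the procedure used to build the PROTECTION datasets. Note that a salted hash pseudonymizes identifiers; it does not by itself guarantee anonymity.

```python
import hashlib
import random
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class SyntheticRecord:
    action: str             # (i) healthcare action performed
    event_class: str        # (ii) diagnosis / treatment / intervention
    performer: str          # (iii) entity performing the action
    executed_at: datetime   # (iv) execution time
    patient_id: str         # (v) pseudonymized patient identifier
    test_type: str          # (vi) COVID-19 test type
    treatment: str          # (vii) prescribed treatment
    outcome: str            # (viii) recovery status or further action

# Hypothetical value domains; a real generator would derive them from the
# schemas and attribute types observed in EHRs, surveillance systems and logs.
ACTIONS = {
    "diagnosis": ["Swab test", "Chest CT"],
    "treatment": ["Administer antiviral", "Administer monoclonal antibody"],
    "intervention": ["Admit to ward", "Refer to ICU"],
}
PERFORMERS = ["GP", "Nurse", "Pulmonologist"]
TEST_TYPES = ["PCR", "Rapid antigen"]
TREATMENTS = ["None", "Antiviral", "Monoclonal antibody"]
OUTCOMES = ["Recovered", "Follow-up visit", "Hospitalized"]

def pseudonymize(raw_id, salt="demo-salt"):
    """Replace an identifier with a truncated salted SHA-256 digest."""
    return hashlib.sha256((salt + raw_id).encode()).hexdigest()[:12]

def generate(n, seed=42, start=datetime(2021, 1, 1)):
    """Generate n records; the fixed seed makes the output reproducible."""
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        event_class = rng.choice(list(ACTIONS))
        records.append(SyntheticRecord(
            action=rng.choice(ACTIONS[event_class]),
            event_class=event_class,
            performer=rng.choice(PERFORMERS),
            executed_at=start + timedelta(minutes=rng.randint(0, 60 * 24 * 30)),
            patient_id=pseudonymize(f"patient-{rng.randint(1, n)}"),
            test_type=rng.choice(TEST_TYPES),
            treatment=rng.choice(TREATMENTS),
            outcome=rng.choice(OUTCOMES),
        ))
    return records

sample = generate(5)
```

Drawing the action from the domain of the chosen event class keeps attributes (i) and (ii) mutually consistent, which is the kind of constraint a realistic generator must preserve.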

In addition, real-time data streams from IoT-enabled medical devices are integrated into the datasets, facilitating adaptive learning mechanisms within our framework. These streams enable continuous monitoring and dynamic updates to pandemic control strategies, supporting the development of predictive models (e.g., [42,45,61]). These structured synthetic datasets support process mining, pattern discovery, and predictive analytics, offering a data-driven method for supporting pandemic prevention and control efforts.

Furthermore, we have made an extract of our synthetic datasets and the associated multidimensional models publicly available in an online open repository [66]; project constraints limited the release to this extract. This will allow the scientific community to reproduce our study and adapt the datasets to other clinical or epidemiological use cases, thus contributing to broader research efforts in healthcare and pandemic management.

In summary, the main pillars of the proposed PROTECTION framework are the following.

- Modeling and Analyzing Healthcare KDDI Processes Dealing with the Management of Pandemics. Such processes need to be designed and changed according to the possibly exponential diffusion of pandemics. They are characterized by many decision- and knowledge-intensive tasks, for which integration with data (e.g., medical records and healthcare population data) and temporal constraints must be considered. Simulation of such processes is also needed to estimate feasibility, resource allocation, and so on. Several technical questions must be addressed in this direction: How can the medical knowledge in pandemic-related clinical guidelines be represented? How can healthcare and clinical guidelines for pandemic prevention and patient management be merged and evaluated? How should healthcare processes change as a pandemic evolves? Can healthcare pandemic control processes be specialized according to data coming from vaccine pharmacovigilance?

- Pandemic-Related Process Mining, in order to Discover Process Models from Logs. Whenever log files suitable for mining process models are not available, the main idea is to treat medical and healthcare records as an indirect kind of log, where therapeutic actions and specialized exams represent actions, main diagnoses represent (possibly intermediate) states of patients, and decisions among the allowed therapies, interventions, or pathways represent knowledge-intensive decisional tasks. Typical questions are: Can we discover recurrent patterns of therapeutic actions or decisions not considered in the guidelines? Do the tasks recorded in medical records confirm the main indications of clinical and healthcare guidelines? Are some guideline recommendations never reflected in the medical records? Can we suggest improvements to guidelines based on the task patterns discovered from medical records? Can we discover recurring care patterns for specific high-risk patients undergoing monoclonal antibody therapies?

In more detail, the main objective of our research is not to propose a novel methodology or framework but rather to leverage an established approach, Business Process Management (BPM) (e.g., [36,37]), within the KDDI process paradigm and apply it to the domain of pandemic management. Our work focuses on demonstrating how BPM principles can be effectively integrated within KDDI processes to enhance real-time decision-making, process optimization, and adaptive healthcare responses in real-life application scenarios (i.e., pandemic scenarios). Rather than defining entirely new process models or architectures, the manuscript illustrates how existing BPM techniques can be applied to real-life pandemic prevention and control scenarios, facilitating structured process modeling, management, and mining.

Reaching such goals would bring significant advantages to the National Healthcare System (NHS) in promptly managing and preventing pandemic events. The progressive adoption of ICT techniques can play a strategic role in the ongoing rationalization process aimed at guaranteeing high-quality services while reducing costs, even during a pandemic, when management and prevention must be enacted and monitored quickly and dynamically in order to react promptly to diseases spreading exponentially. This motivates the growing attention towards clinical and healthcare process definition and analysis.

PROTECTION pursues such goals through several advanced and innovative research activities. In particular, process management in the clinical and healthcare domains is a significant topic, and we aim to address new challenges in the following research areas: ontological tools; languages based on different kinds of logics; data models and design tools for capturing events and temporal constraints; temporal extensions of GLs and CPs representation formalisms; constraint-based temporal reasoning; design-time and run-time GL verification; multidimensional analysis of healthcare processes; and declarative and incremental process mining methods.

Specifically, PROTECTION introduces innovation in the areas of representation logic and ontological tools by structuring behavioral data in a multidimensional and semantically enriched manner. In terms of representation logic, PROTECTION incorporates hierarchical relationships, temporal dependencies, and attribute-based pivoting, enabling a flexible and expressive representation of behavioral responses to pandemic measures. This approach enhances the ability to reason over complex, evolving datasets and identify patterns that traditional flat representations might overlook.

It should be noted that, even though the above-mentioned aspects are closely related, they have so far been considered in isolation and have not yet been applied jointly to the specific issue of managing worldwide pandemics. Starting from this limitation, PROTECTION aims to provide a set of methodologies and prototype software tools for the process-oriented prevention and management of worldwide pandemics.

The PROTECTION framework aims to integrate knowledge-driven decision-making processes into pandemic management by focusing on dynamic healthcare process modeling, management, and mining. The methodology thus outlined emphasizes data-centric process modeling, which integrates real-time healthcare data, clinical guidelines, and decision-making, allowing for adaptive and timely responses to evolving pandemic conditions. By incorporating big data techniques such as predictive analytics and machine learning, the framework can detect emerging trends, predict resource needs, and provide early warnings.

Additionally, process mining techniques uncover inefficiencies or inconsistencies in the application of clinical guidelines, offering opportunities for process optimization. PROTECTION also promotes interdisciplinary collaboration, ensuring that solutions are robust, adaptable, and scalable across various pandemic scenarios.

Privacy and security issues are of critical importance, especially in the context of healthcare systems and private Cloud infrastructures. However, our research focuses specifically on process modeling, management, and mining within the BPM-KDDI framework for pandemic prevention and control. Our primary objective is to demonstrate how Business Process Management can be effectively leveraged in real-life pandemic scenarios; security and privacy concerns, computational resources, real-time integration of multidimensional data, scalability and adaptivity, and the incorporation of AI and real-time decision-making are relevant research domains in their own right. Nevertheless, we recognize that these aspects are necessary for real-life deployment and have explicitly integrated them as core components and mechanisms within our proposed framework for secure Cloud architectures, which further enhances the practical applicability of our approach.

Similarly, semantic aspects of data, such as clinical data labeling, are crucial in the clinical domain, especially when dealing with large volumes of unstructured medical records and unlabeled databases. However, our research focuses on process modeling, management, and mining within the BPM-KDDI framework, rather than on semantic data processing techniques. We acknowledge that clinical data labeling and semantic interoperability play a vital role in healthcare analytics. Nevertheless, incorporating these aspects requires specialized methodologies, such as NLP and ontology-based approaches (e.g., [67,68]), which differ in nature from the core objectives of our research. Therefore, while these aspects are highly relevant, their detailed investigation falls outside the scope of this paper and is left as future work.

Finally, the framework seeks to improve both the immediate response to health crises and the long-term management of healthcare processes, enhancing overall preparedness and resilience, which contributes to the broader advancement of healthcare process management in the face of future global health challenges.

We have also integrated adaptive learning mechanisms and real-time updates into the BPMN process modeling within the PROTECTION framework. This integration addresses the need for dynamic updates to pandemic management strategies based on real-time data. In the following, we describe the specific adaptive learning mechanisms, as well as the time scale and scope of process modifications.

Adaptive Learning Mechanisms. To improve the flexibility and responsiveness of pandemic management, we integrate adaptive learning mechanisms into our BPMN-based process modeling, driven by real-time data analytics and continuous feedback from epidemiological trends. Specifically, adaptive learning uses real-time event data, such as infection rates, hospital capacity, and emerging virus variants, to inform and adjust healthcare processes as conditions evolve. The adaptive learning mechanism continuously processes incoming data streams, allowing the system to recommend and apply modifications to BPMN models dynamically. The result is an up-to-date representation of healthcare workflows that adapts rapidly to the changing demands of pandemic control.

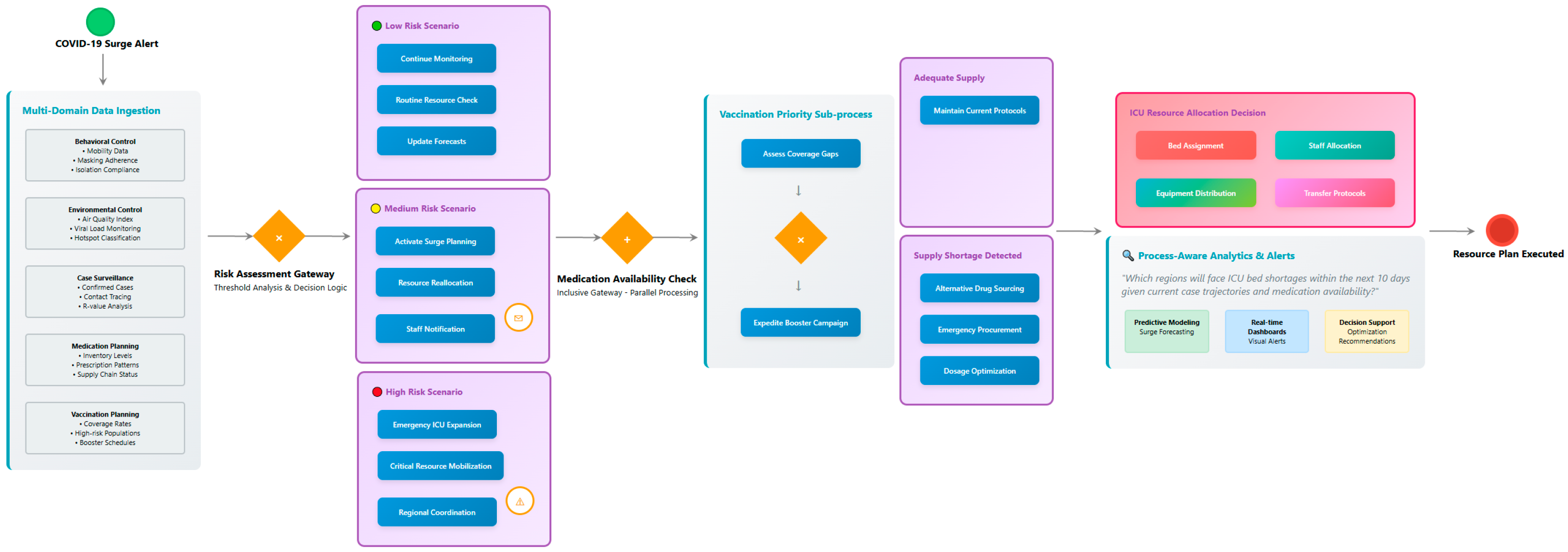

Real-Time Updates to BPMN Models. To assess the feasibility of real-time updates, we provide a concrete example of how such updates occur within the framework. For instance, if a sudden surge in COVID-19 cases is detected in a region, the patient triage model could be dynamically updated to prioritize high-risk individuals or to adjust treatment protocols in response to updated clinical guidelines. Such an update is enabled by combining process mining algorithms (e.g., Alpha Miner and Inductive Miner), which discover the workflows actually executed so that deviations from the predefined BPMN process model can be detected, with adaptive learning algorithms, which recommend modifications to the process flow based on new data inputs.
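The triage example can be sketched as a policy switch: a simple surge rule decides whether patients are served first come, first served or by descending risk. The surge rule (today's count above 1.5 times the mean of the previous three days), the risk scores, and both helper functions are assumptions for illustration, not the PROTECTION triage model.

```python
import heapq

# Hypothetical waiting patients: (id, risk score in [0, 1], arrival order).
PATIENTS = [("a", 0.2, 0), ("b", 0.9, 1), ("c", 0.5, 2), ("d", 0.95, 3)]

def surge_detected(daily_cases, window=3, factor=1.5):
    """Assumed rule: today's count exceeds `factor` x the mean of the previous `window` days."""
    if len(daily_cases) <= window:
        return False
    baseline = sum(daily_cases[-window - 1:-1]) / window
    return daily_cases[-1] > factor * baseline

def triage_order(patients, surge):
    """Order in which patients are seen under the current triage policy.

    Normal mode: first come, first served.
    Surge mode: highest risk first, ties broken by arrival order.
    """
    heap = []
    for pid, risk, arrival in patients:
        key = (-risk, arrival) if surge else (arrival,)
        heapq.heappush(heap, (key, pid))
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]

cases = [100, 110, 105, 200]  # hypothetical daily cases in one region
print(triage_order(PATIENTS, surge=surge_detected(cases)))  # ['d', 'b', 'c', 'a']
```

Keeping the policy as data (the sort key) rather than hard-coded flow makes it easy to swap in a revised prioritization when clinical guidelines change.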

Types of Modifications and Scope of Updates. The types of modifications to the BPMN models are not restricted to simple rearrangements of tasks. Rather, the framework supports the addition of new roles, tasks, or decision points as necessary to address evolving healthcare requirements. For example, should a new patient care protocol become essential due to the emergence of a new variant of the virus, the framework allows for the integration of additional process steps or the introduction of specialized healthcare teams. Furthermore, process roles can be reassigned dynamically to match resource availability or evolving expertise requirements. This dynamic ability to modify the process models ensures that the healthcare system can respond quickly and efficiently to unforeseen changes in the pandemic landscape.
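The kinds of modification described above (adding tasks with new roles and reassigning existing roles) can be sketched on a deliberately simplified model. The linear task list is an assumption: real BPMN models are graphs with gateways and lanes. All task and role names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ProcessModel:
    """Toy stand-in for a BPMN model: an ordered task list plus a role per task."""
    tasks: List[str] = field(default_factory=list)
    roles: Dict[str, str] = field(default_factory=dict)

    def insert_after(self, anchor: str, task: str, role: str) -> None:
        """Add a new task (and its performing role) right after `anchor`."""
        idx = self.tasks.index(anchor)  # raises ValueError if anchor is missing
        self.tasks.insert(idx + 1, task)
        self.roles[task] = role

    def reassign(self, task: str, role: str) -> None:
        """Dynamically change the role responsible for an existing task."""
        if task not in self.roles:
            raise KeyError(task)
        self.roles[task] = role

triage = ProcessModel(
    tasks=["Register patient", "Swab test", "Assign ward"],
    roles={"Register patient": "Clerk", "Swab test": "Nurse", "Assign ward": "Physician"},
)
# Hypothetical variant-driven change: add sequencing by a new specialized team,
# and move ward assignment to a bed-management unit to match resource availability.
triage.insert_after("Swab test", "Variant sequencing", "Genomics team")
triage.reassign("Assign ward", "Bed management unit")
print(triage.tasks)
```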

Triggering Events and Process Mining Algorithms. In this context, triggering events are essential for initiating updates to the process model. Events such as real-time infection rates, hospital occupancy, and patient outcomes serve as key signals for triggering updates to the healthcare processes. These events are processed by the process mining algorithms integrated into the framework, which include Alpha Miner and Inductive Miner. These algorithms identify deviations from expected workflows, detect bottlenecks, and uncover inefficiencies in the process flow. When such deviations are detected, the system initiates a review of the process model and proposes modifications based on the updated data and current operational needs.
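Bottleneck detection, one of the signals mentioned above, can be sketched as a performance analysis over an event log: for each pair of consecutive activities in a case, the waiting time is measured, averaged across cases, and compared against a review threshold. The log, the activity names, and the 120-minute threshold are assumptions; this is a simple performance check, not the Alpha Miner or Inductive Miner algorithms themselves.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical event log: (case id, activity, ISO timestamp).
LOG = [
    ("c1", "Triage", "2021-11-02T08:00"),
    ("c1", "Swab test", "2021-11-02T08:20"),
    ("c1", "Admission", "2021-11-02T12:20"),
    ("c2", "Triage", "2021-11-02T09:00"),
    ("c2", "Swab test", "2021-11-02T09:10"),
    ("c2", "Admission", "2021-11-02T15:10"),
]

def mean_waits(log):
    """Mean waiting time (minutes) for each directly-follows activity pair."""
    cases = defaultdict(list)
    for case, activity, ts in log:
        cases[case].append((datetime.fromisoformat(ts), activity))
    waits = defaultdict(list)
    for events in cases.values():
        events.sort()  # order each case chronologically
        for (t1, a1), (t2, a2) in zip(events, events[1:]):
            waits[(a1, a2)].append((t2 - t1).total_seconds() / 60)
    return {pair: sum(w) / len(w) for pair, w in waits.items()}

def bottlenecks(log, threshold_minutes=120):
    """Pairs whose mean wait exceeds an (assumed) review threshold."""
    return {p: m for p, m in mean_waits(log).items() if m > threshold_minutes}

print(bottlenecks(LOG))  # {('Swab test', 'Admission'): 300.0}
```

A non-empty result would act as a triggering event that initiates the review of the process model described above.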

Automatic Process Modifications and Clinical Guidelines Update. We further elaborate on the capability of the PROTECTION framework to propose updates to clinical guidelines autonomously based on real-time data analysis. For example, if incoming data suggest a change in treatment protocols or prioritization, the system can autonomously propose updates to clinical guidelines that reflect the latest available information. Although process modifications can be automated, critical decisions, particularly those concerning patient care, always involve some level of human oversight or validation, so that the system remains aligned with medical standards and ethical guidelines. This hybrid approach, in which machine-based recommendations are complemented by human decision-making, keeps process optimizations grounded in clinical expertise.

Time Scales of Updates. We now clarify the typical time frames involved in adaptive learning and real-time process updates. The frequency of process adjustments typically ranges from minutes to hours, depending on the nature and frequency of incoming data. For instance, when epidemiological models predict a sudden shift in transmission rates, corresponding updates to healthcare workflows (such as patient triage or resource allocation) can occur within a short time frame. This allows healthcare providers to rapidly adjust response strategies, thereby enhancing the effectiveness of pandemic control efforts.

Finally, the integration of adaptive learning mechanisms and the capability for real-time updates within the PROTECTION framework provides a significant advancement in pandemic management. These innovations enable dynamic, data-driven decision-making that can be promptly adjusted in response to emerging epidemiological trends, thus supporting the overall goal of improving pandemic preparedness and response strategies.

5. Pandemic Management and Control Measures Modeling

In this Section, we provide a few examples of processes for pandemic management and control measures, along with their BPMN diagrams. These process models can be applied to various critical aspects of pandemic management, such as (i) behavioral infection control and (ii) environmental pandemic control. The strategic business objectives monitored by the platform include minimizing ICU saturation, optimizing vaccination rollout, and tracking the emergence of new variants, all of which play a key role in pandemic response. These objectives guide the design of the process models and analytics tools, ensuring that the data produced are actionable and aligned with overarching goals.

In addition to the BPMN diagrams, for each process model we provide: (i) example datasets of data produced by process executions, which conform to the dataset modeling described in Section 4; and (ii) multidimensional big data analytics tools developed on top of these datasets, designed to support data-driven decision-making and to optimize the implemented control measures. Specifically, indicators such as R0 (basic reproduction number), test positivity rate, ICU occupancy, vaccination coverage by demographic group, and average patient care delay are carefully monitored, as they are critical for assessing the effectiveness of intervention strategies. However, due to project data privacy agreements and non-disclosure constraints, parts of the specific datasets and some process models used in this study are not publicly available. These measures ensure compliance with privacy regulations and protect sensitive information related to healthcare systems, patient data, and pandemic response activities. Consequently, while the methodology, process models, and analytics tools are detailed in this paper, the actual data and some process-specific models remain confidential.
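Most of the indicators listed above are simple ratios or averages over facts, as the following sketch shows with hypothetical numbers. R0 is deliberately omitted: estimating it requires an epidemic model and is beyond a short example. The function names are illustrative assumptions.

```python
from statistics import mean

def positivity_rate(positive_tests, total_tests):
    """Share of tests that are positive, as a percentage."""
    return 100.0 * positive_tests / total_tests if total_tests else 0.0

def icu_occupancy(occupied_beds, available_beds):
    """Percentage of ICU beds currently occupied."""
    return 100.0 * occupied_beds / available_beds

def coverage_by_group(vaccinated, population):
    """Vaccination coverage (%) per demographic group."""
    return {g: 100.0 * vaccinated.get(g, 0) / n for g, n in population.items()}

def average_care_delay(delays_hours):
    """Mean delay between a care request and its delivery, in hours."""
    return mean(delays_hours)

# Hypothetical daily snapshot.
print(positivity_rate(50, 1000))  # 5.0
print(icu_occupancy(45, 60))      # 75.0
print(coverage_by_group({"18-39": 300, "60+": 450}, {"18-39": 1000, "60+": 500}))
print(average_care_delay([2, 4, 6]))
```

In a multidimensional setting, these functions would be evaluated per cell (e.g., per region and week) rather than on a single global snapshot.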

The business processes modeled include diagnostic workflows, treatment administration, contact tracing, vaccination campaigns, and patient discharge planning. Each of these processes represents a core area of pandemic response, and the BPMN diagrams are designed to clearly illustrate the flow of activities, decision points, and the interactions between different stakeholders. Such models are particularly useful for optimizing resource allocation and streamlining operations, especially when facing large-scale pandemics.

To support the data modeling efforts, we use a multidimensional approach where facts and dimensions are defined clearly. Facts include daily case counts, hospitalization events, and intervention outcomes, providing the quantitative data necessary to evaluate the impact of various strategies. Dimensions, on the other hand, include factors such as time, location, age group, healthcare facility, and intervention type. These dimensions enable detailed analysis, allowing stakeholders to examine trends and patterns that are specific to different subsets of the population or geographies.

In this paper, we present a technique based on the integration of these facts and dimensions within a comprehensive data model. This method supports the creation of multidimensional data cubes that facilitate complex analytics, such as trend analysis, predictive modeling, and scenario simulation. Furthermore, these analytics tools can be tailored to support specific business objectives, such as optimizing vaccination rollout or minimizing delays in patient care, thereby enhancing decision-making capabilities.
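A data cube over facts and dimensions can be sketched as the CUBE operator: every subset of the dimensions defines a group-by, and the measure is summed within each group. The dimensions, rows, and the hospitalizations measure are hypothetical; an OLAP engine or SQL `GROUP BY CUBE` would compute the same cuboids at scale.

```python
from collections import defaultdict
from itertools import combinations

DIMENSIONS = ("week", "region", "age_group")

# Hypothetical fact rows: dimension values plus a hospitalizations measure.
FACTS = [
    {"week": "W1", "region": "North", "age_group": "60+", "hospitalizations": 8},
    {"week": "W1", "region": "South", "age_group": "18-59", "hospitalizations": 3},
    {"week": "W2", "region": "North", "age_group": "60+", "hospitalizations": 11},
    {"week": "W2", "region": "North", "age_group": "18-59", "hospitalizations": 2},
]

def cube(facts, dims, measure):
    """Compute every group-by of `dims` (2**len(dims) cuboids).

    Returns {grouped dimensions: {dimension values: summed measure}}.
    """
    result = {}
    for k in range(len(dims) + 1):
        for group in combinations(dims, k):
            cells = defaultdict(int)
            for row in facts:
                cells[tuple(row[d] for d in group)] += row[measure]
            result[group] = dict(cells)
    return result

cuboids = cube(FACTS, DIMENSIONS, "hospitalizations")
print(cuboids[("region",)])  # {('North',): 21, ('South',): 3}
```

The empty group-by `()` holds the grand total, while finer cuboids such as `("week", "age_group")` support the trend and subgroup analyses described above.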

The facts and dimensions used in our data modeling represent the building blocks for creating rich datasets that can be leveraged for advanced analytics. By interpreting these data in the context of business process models, the platform enables real-time monitoring of key indicators and provides actionable insights that can inform decision-making. As in many other cases, the integration of these models with big data tools is essential for adapting to the dynamic and unpredictable nature of pandemics, allowing for continuous updates to control strategies based on the most recent data.

Our approach also makes available a framework for ongoing learning, where adaptive strategies can be implemented as new information becomes available. The ability to track variant emergence and changes in epidemiological trends is particularly important in the context of a rapidly evolving pandemic. Therefore, by ensuring that the business processes are continuously updated and informed by the most recent data, the platform represents a powerful tool in the fight against pandemics.

However, it is important to note that the main challenge in developing such systems lies in balancing modeling complexity against the practical need for real-time insights. From these considerations, it follows that a methodical approach to process modeling and data analytics is preferable to relying solely on real-time data collection without a structured framework. Such an approach allows for better preparedness and responsiveness, especially when addressing unexpected surges in cases or the appearance of new variants. By combining business process modeling with powerful analytics tools, the platform supports a dynamic and flexible approach to pandemic management.

5.1. Behavioral Infection Control

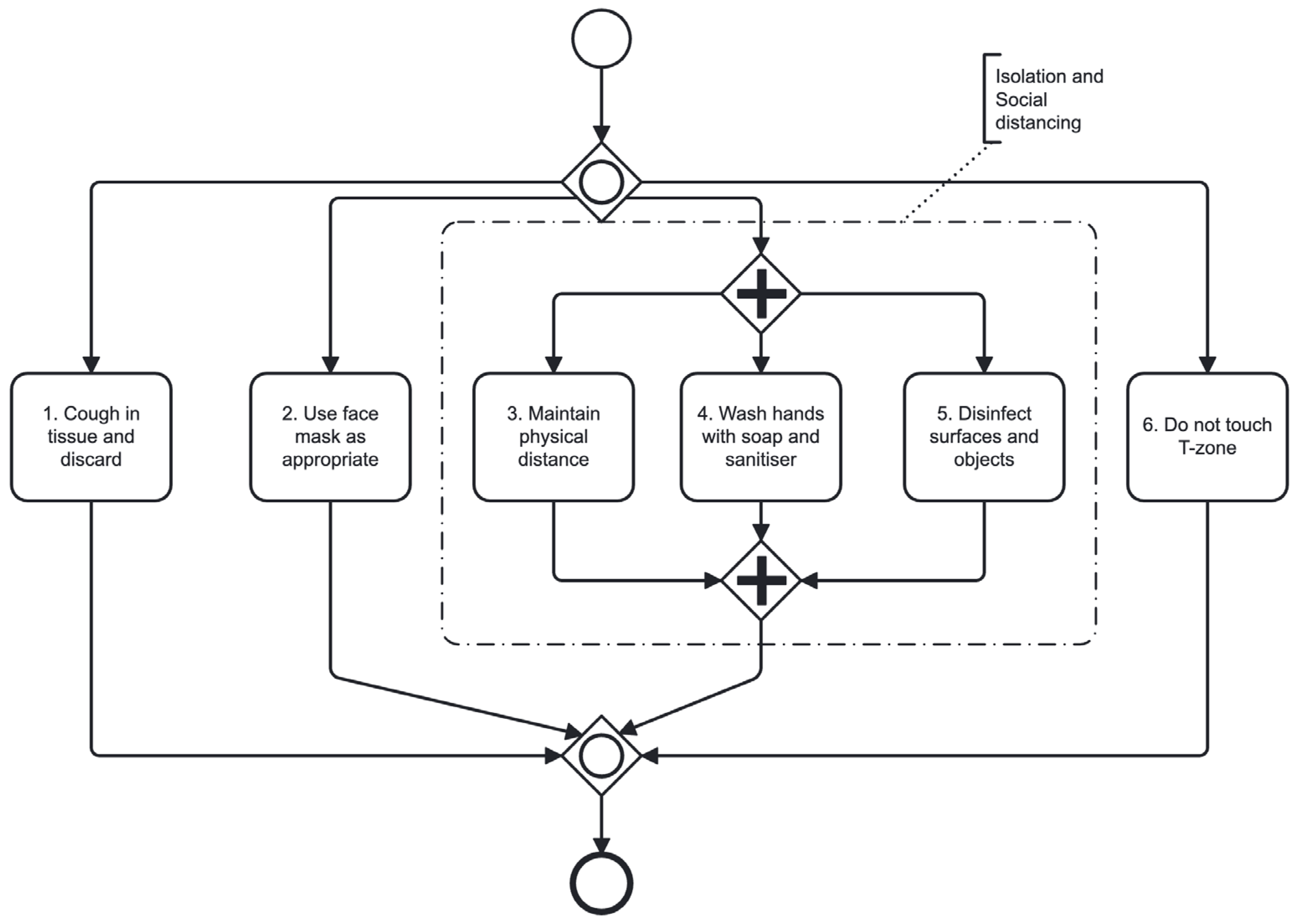

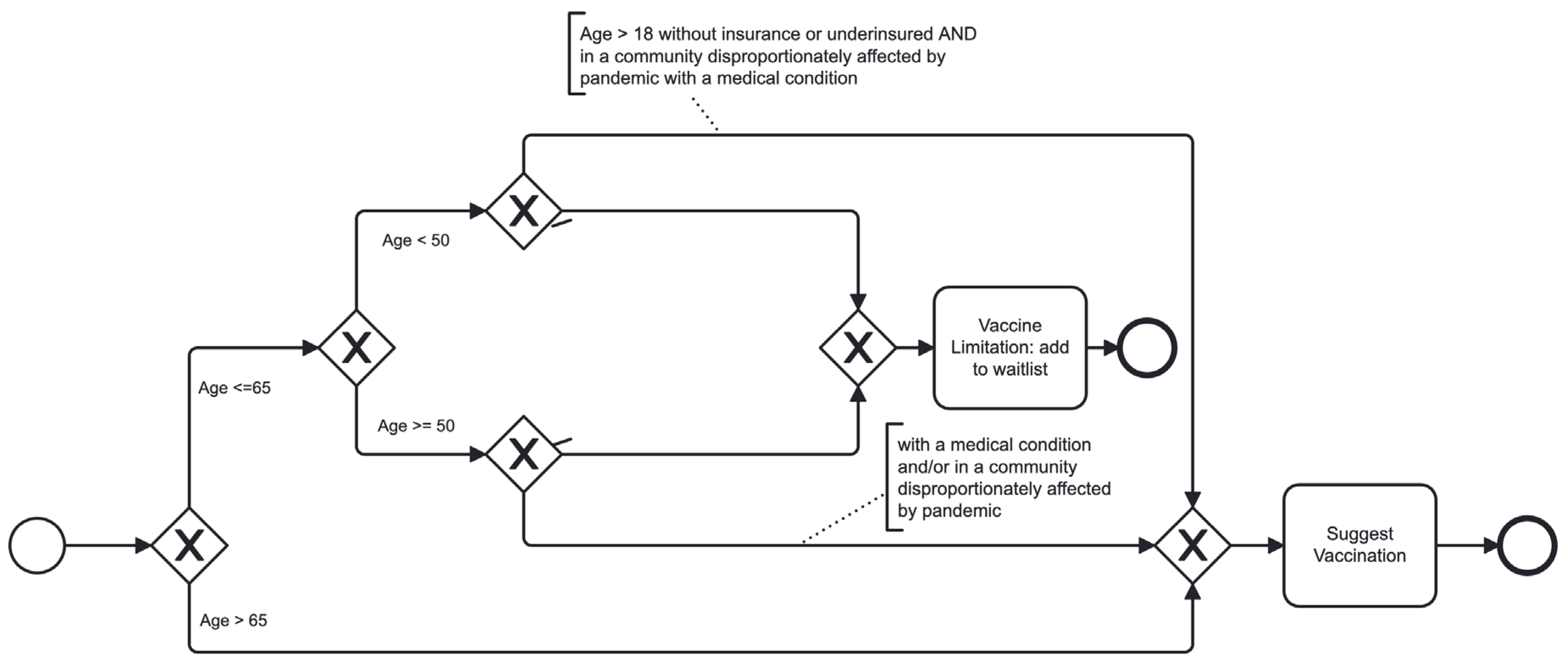

The process model Behavioral Infection Control is depicted in Figure 2, which illustrates the potential pathways of pandemic transmission in community settings and the corresponding behaviors capable of mitigating transmission. Isolation and social distancing measures, represented by the separation lane in the BPMN diagram, effectively block transmission by physically separating infected individuals from others. However, the significant societal costs incurred, including economic, educational, and mental health impacts, highlight the complex challenges associated with adherence to these measures. Moreover, in the absence of a viable vaccine, relaxing these measures may precipitate a resurgence of infections. Thus, widespread adherence to the personal protective behaviors outlined in the model, such as proper cough etiquette, appropriate face mask usage, physical distancing, hand hygiene, disinfection of surfaces, and avoiding touching the face, is imperative. The effectiveness of these behaviors, in turn, depends on comprehensive guidance, training, and support to ensure their consistent implementation and thereby mitigate transmission effectively.

In this paper, we present a technique based on the extraction and modeling of a multidimensional dataset derived from log data, aimed at mining behavioral patterns related to pandemic preventive measures. A sample of the dataset for this running case study, named Behavioral Infection Control Dataset, is shown in Figure 3. This dataset encompasses the attributes needed to analyze human responses to infection control protocols within healthcare institutions. The most common fields are Task_Name, detailing the specific activity undertaken; Event_Type, describing the nature of the action; Originator, identifying the individual initiating the action; Timestamp, providing temporal context; Environment, specifying the location or setting of the activity; Surface, describing the contact surface involved; T-Zone, indicating interactions with critical facial areas; and ProtectiveDevice, documenting the use of protective equipment.

From these considerations, it follows that the data structure used here is particularly suitable for modeling exposure dynamics and evaluating compliance. However, certain column combinations may appear arbitrary, so the relationships between columns need to be clarified. Fields such as Surface, T-Zone, and ProtectiveDevice are not assigned arbitrarily but according to domain-driven association rules. For example, the task “Disinfect surfaces and objects” is not literally applied to the Nose; rather, the T-Zone field denotes the area of potential contamination or risk that may arise from neglecting this task.
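The association rules just described can be encoded as a lookup table that both annotates new log rows and flags rows inconsistent with the rules. The task “Disinfect surfaces and objects” and its Nose T-Zone come from the text; every other task-to-context mapping, and the `annotate` and `violates_rules` helpers, are hypothetical.

```python
# Hypothetical domain-driven association rules: each task maps to its risk context.
# T-Zone is the facial area put at risk if the task is neglected,
# not the area the task is applied to.
RULES = {
    "Disinfect surfaces and objects": {"Surface": "Door handle", "T-Zone": "Nose", "ProtectiveDevice": "Gloves"},
    "Wear face mask": {"Surface": None, "T-Zone": "Mouth", "ProtectiveDevice": "Surgical mask"},
    "Avoid touching face": {"Surface": None, "T-Zone": "Eyes", "ProtectiveDevice": None},
}

def annotate(task_name):
    """Return a log row enriched with the rule-derived risk attributes."""
    if task_name not in RULES:
        raise KeyError(f"no association rule for task: {task_name}")
    return {"Task_Name": task_name, **RULES[task_name]}

def violates_rules(row):
    """True if a logged row disagrees with the rule for its task (or has none)."""
    expected = RULES.get(row["Task_Name"])
    if expected is None:
        return True
    return any(row.get(k) != v for k, v in expected.items())

print(annotate("Disinfect surfaces and objects"))
```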

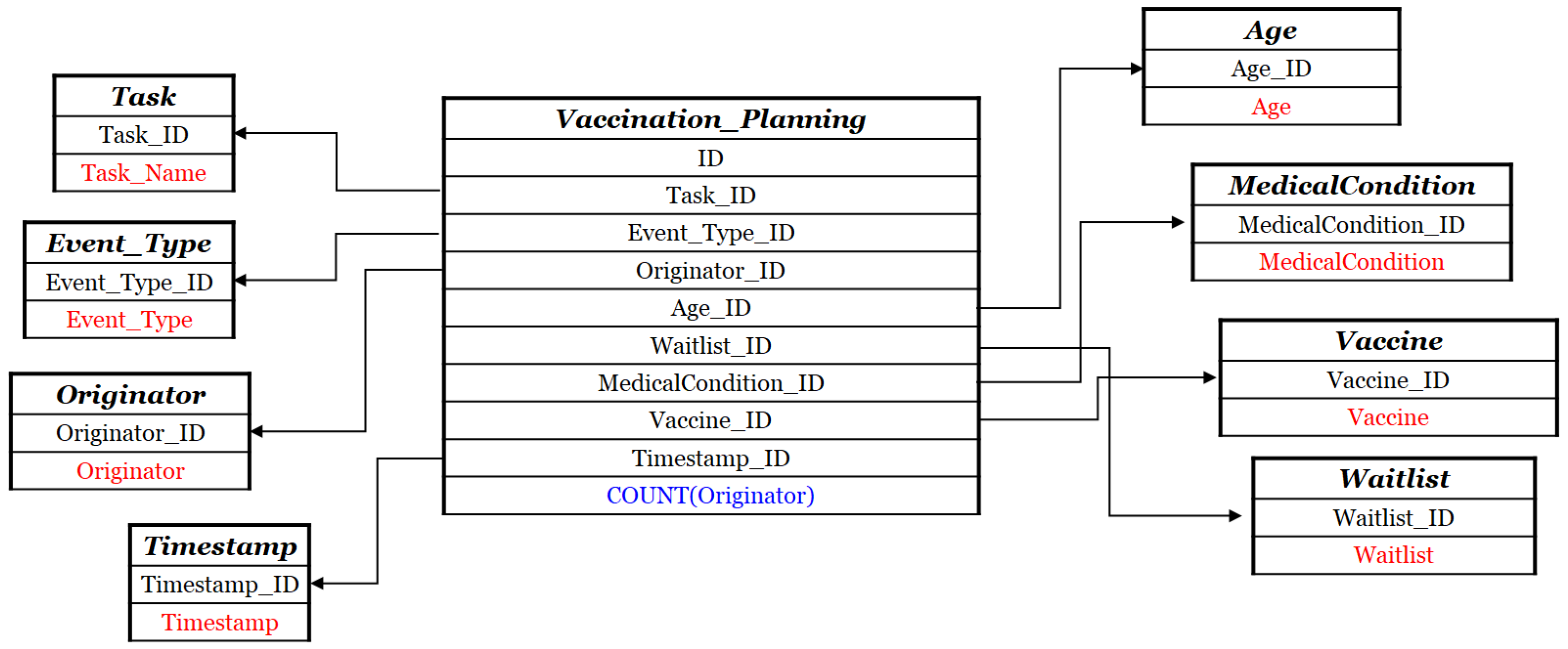

To support our multidimensional big data analytics tasks, we designed and created a suitable multidimensional model. Figure 4 presents the Dimensional Fact Model (DFM) of the Behavioral Infection Control dataset. The dimensions (red-colored in Figure 4) identified in this schema are: (i) Task_Name; (ii) Event_Type; (iii) Originator; (iv) Environment; (v) Surface; and (vi) ProtectiveDevice. The selected measures (blue-colored in Figure 4) are the counts of distinct Originator and ProtectiveDevice values, respectively, which provide a robust quantitative basis for behavioral analysis. This multidimensional representation was generated by integrating behavioral event logs with predefined risk contexts (e.g., surface contacts and protective equipment usage), enabling the systematic association of tasks with exposure-relevant attributes. As a result, the dataset serves as a reliable analytical benchmark, facilitating both epidemiological assessments and behavioral pattern mining in infection control scenarios.
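The DFM described above can be sketched as a pair of tuples (dimensions and count-distinct measures) plus a generic aggregator that groups by any one dimension. The log rows are hypothetical (only the task names “Cough in tissue”, “Maintain physical distance”, and “Disinfect surfaces and objects” appear in the text), and the `count_distinct` helper is our illustration, not PROTECTION code.

```python
from collections import defaultdict

# Dimensions and measures of the DFM in Figure 4.
DIMENSIONS = ("Task_Name", "Event_Type", "Originator", "Environment", "Surface", "ProtectiveDevice")
MEASURES = ("Originator", "ProtectiveDevice")  # each aggregated as COUNT(DISTINCT ...)

# Hypothetical log rows, one value per dimension (None = not applicable).
RAW = [
    ("Cough in tissue", "complete", "Nurse_01", "Ward", None, "Tissue"),
    ("Maintain physical distance", "complete", "Nurse_02", "Ward", None, None),
    ("Disinfect surfaces and objects", "complete", "Nurse_01", "ICU", "Bed rail", "Gloves"),
    ("Disinfect surfaces and objects", "complete", "Cleaner_07", "ICU", "Door handle", "Gloves"),
    ("Wear face mask", "start", "Doctor_03", "ICU", None, "FFP2 mask"),
]
ROWS = [dict(zip(DIMENSIONS, r)) for r in RAW]

def count_distinct(rows, by):
    """COUNT(DISTINCT m) for every measure m, grouped by dimension `by`."""
    if by not in DIMENSIONS:
        raise ValueError(f"{by} is not a dimension of the fact schema")
    seen = defaultdict(lambda: {m: set() for m in MEASURES})
    for row in rows:
        for m in MEASURES:
            if row[m] is not None:  # ignore missing values, as SQL COUNT(DISTINCT) does
                seen[row[by]][m].add(row[m])
    return {key: {m: len(vals) for m, vals in sets.items()} for key, sets in seen.items()}

print(count_distinct(ROWS, "Environment"))
```

Grouping by `"Task_Name"` instead yields, in its `"Originator"` entries, the kind of per-measure staff counts shown in the Figure 5 bar plot.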

To enhance transparency, we provide a fully annotated example: a clear, logically structured instance that explicitly traces how each value in the row is derived. Interpreting each attribute in relation to its contextual and procedural logic makes the mapping coherent and accessible. These annotations aim to support more robust analytical interpretations of protective behavior in healthcare environments, since such behavior strongly affects the efficacy of the implemented measures.

The actions performed by an Originator (e.g., a healthcare worker or patient), such as “Cough in tissue” or “Maintain physical distance”, are recorded based on log data collected from multiple sources within the healthcare environment. The collected data provide insights into compliance with protective measures, behavioral trends, and intervention effectiveness. In our experimental setup, data collection follows a batch processing approach (e.g., [69,70]), where behavioral logs are aggregated over specific time intervals and later analyzed using our multidimensional framework. However, depending on the technological infrastructure, real-time recording can also be integrated when automated detection systems are available.
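The batch step can be sketched as tumbling (fixed, non-overlapping) time windows: each logged action falls into exactly one window, and a compliance percentage is computed per window for later multidimensional analysis. The 12-hour window, the boolean compliance flag, and the log rows are assumptions for illustration.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical behavioral log rows: (ISO timestamp, originator, task, compliant?).
LOG = [
    ("2021-03-01T08:15", "Nurse_01", "Wear face mask", True),
    ("2021-03-01T09:40", "Nurse_02", "Hand hygiene", False),
    ("2021-03-01T13:05", "Nurse_01", "Hand hygiene", True),
    ("2021-03-02T07:55", "Doctor_03", "Wear face mask", True),
]

def batch_compliance(log, hours=12):
    """Group events into tumbling windows and compute compliance (%) per window.

    Windows start at midnight, so `hours` should divide 24 (assumption).
    """
    batches = defaultdict(Counter)
    for ts, _originator, _task, compliant in log:
        t = datetime.fromisoformat(ts)
        start = t.replace(hour=t.hour - t.hour % hours, minute=0, second=0, microsecond=0)
        batches[start]["total"] += 1
        batches[start]["compliant"] += int(compliant)
    return {start: 100.0 * c["compliant"] / c["total"] for start, c in sorted(batches.items())}

for window, pct in batch_compliance(LOG).items():
    print(window, pct)
```

Switching to real-time recording would replace these windows with an incremental update per incoming event, but the per-window aggregates remain the same.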

The dataset presented in Figure 3 has been extracted from log data recorded within a healthcare institution during the application of pandemic preventive measures. This dataset encompasses various relevant attributes and metrics for studying human reactions to protective protocols in actual healthcare environments. The data collection process relies on institutional monitoring systems, digital task management platforms, and automated logs of protective actions, ensuring accurate and continuous data recording. The dataset includes records of personnel activities, resource utilization, intervention tasks, and compliance behaviors, which provide insights into how individuals and teams adhere to pandemic control measures, optimize resource distribution, and respond to institutional protocols. The automatic logging of these activities enhances data reliability and reduces manual entry errors.

Beyond pandemic scenarios, such datasets can be used to monitor healthcare compliance, improve operational efficiency, and support future preparedness strategies. By analyzing behavioral patterns and intervention effectiveness over time, the framework remains sustainable and applicable even outside of pandemic periods, offering long-term benefits for healthcare management and policy-making.

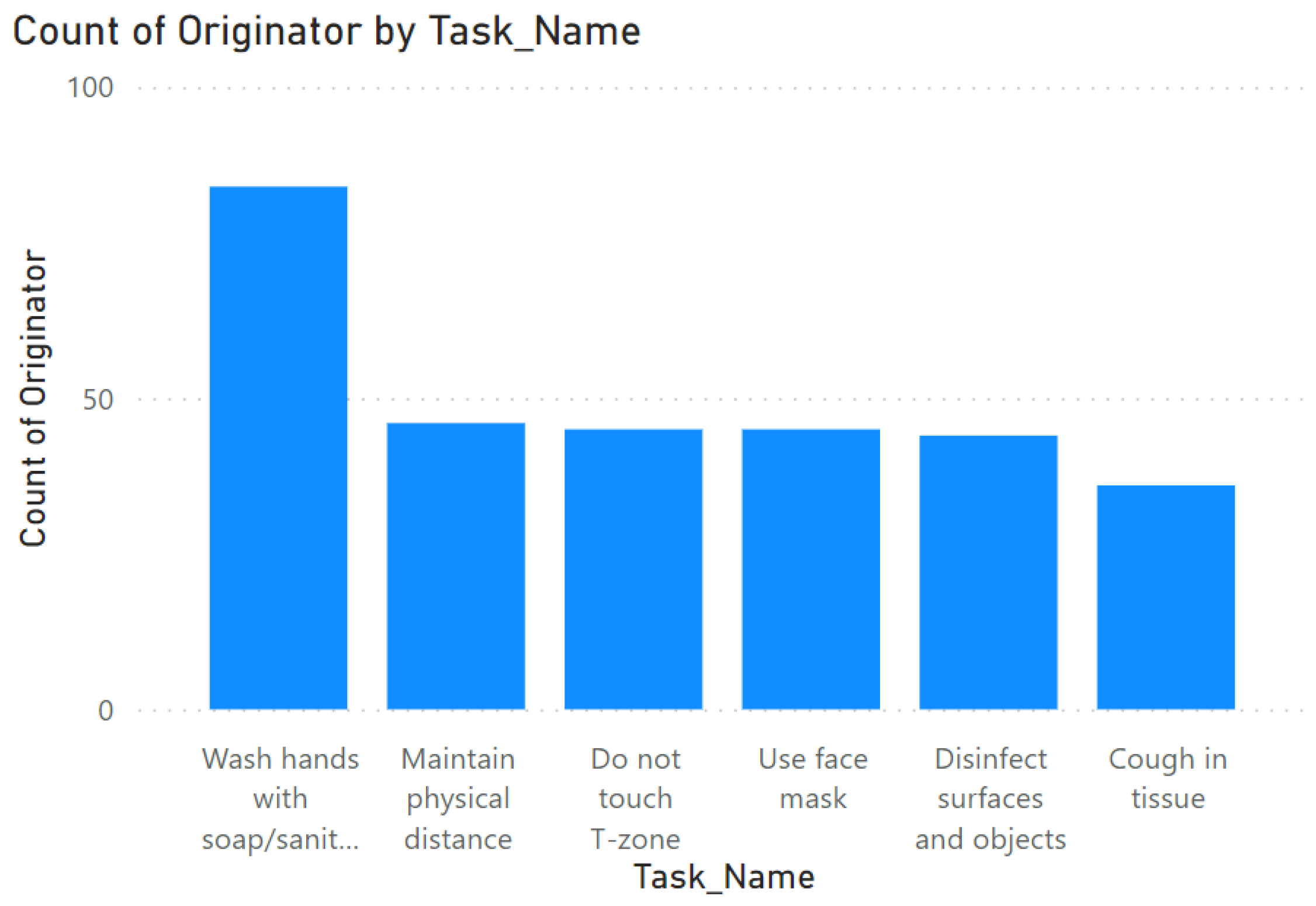

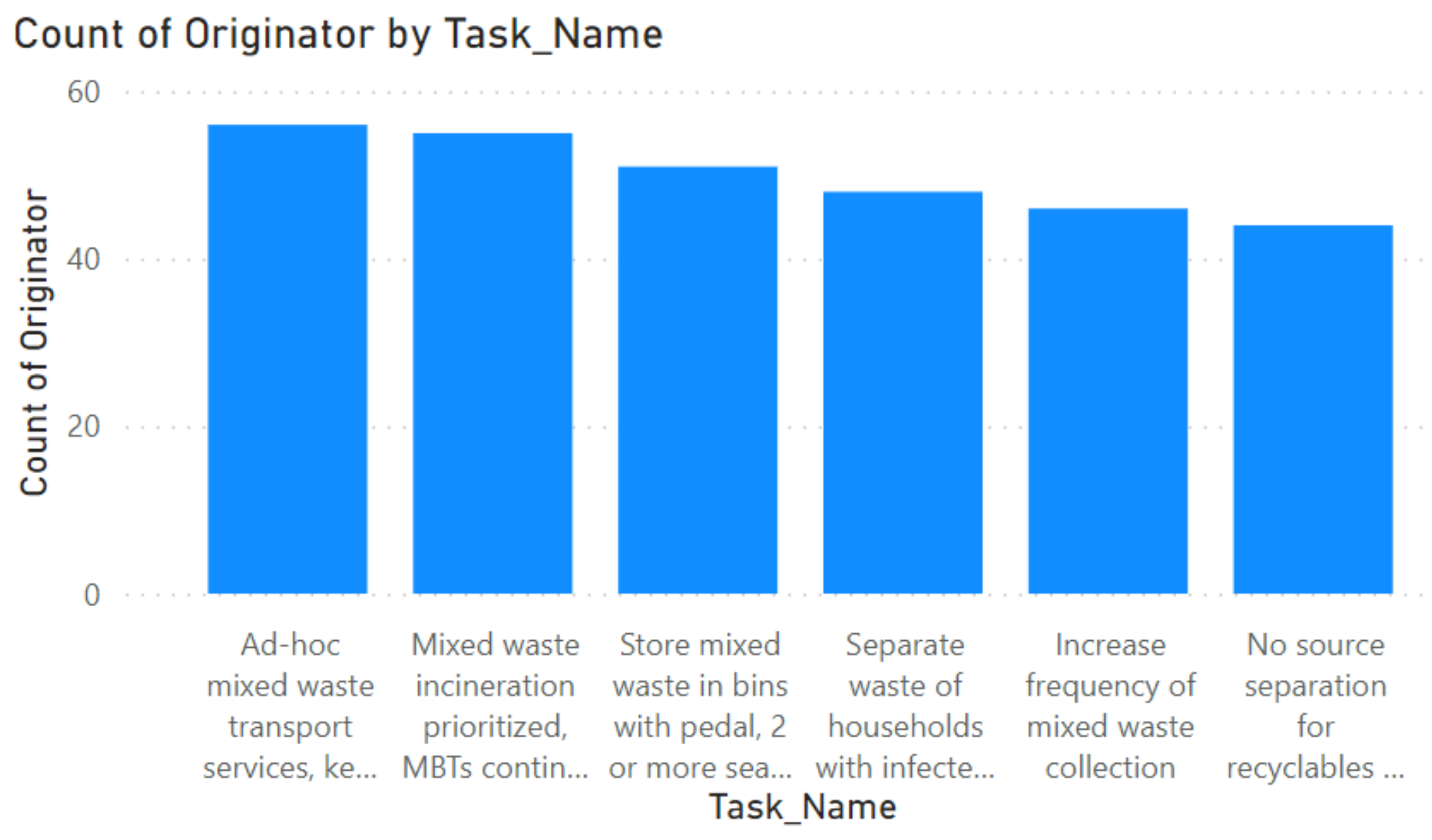

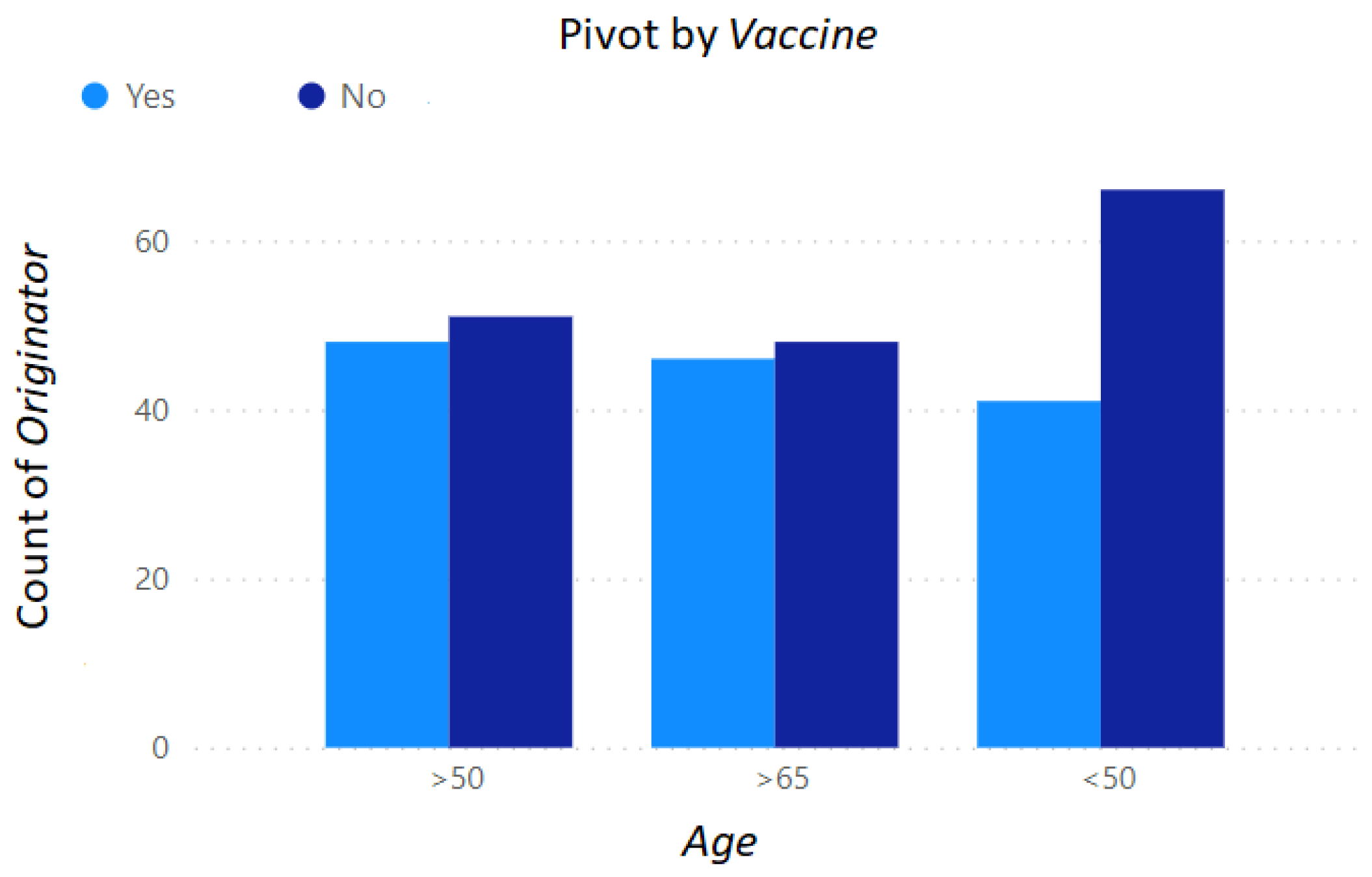

Figure 5 shows an aggregation analysis bar plot representing the count of the healthcare institution staff members (attribute Originator) who have performed each type of prevention measure (attribute Task_Name).
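The aggregation behind Figure 5 can be sketched in a few lines. This is a minimal illustration, assuming each log record is a dictionary with Task_Name and Originator keys and that "count of staff members" means distinct Originator values; the rows and the helper name staff_per_task are hypothetical, not taken from Figure 3.

```python
from collections import defaultdict

# Hypothetical log rows; keys follow the attribute names used in the text.
log = [
    {"Task_Name": "Cough in tissue", "Originator": "nurse_01"},
    {"Task_Name": "Cough in tissue", "Originator": "nurse_02"},
    {"Task_Name": "Cough in tissue", "Originator": "nurse_01"},  # same person, repeated action
    {"Task_Name": "Maintain physical distance", "Originator": "doctor_07"},
]

def staff_per_task(rows):
    """Count distinct Originator values for each Task_Name (one bar per task)."""
    originators = defaultdict(set)
    for row in rows:
        originators[row["Task_Name"]].add(row["Originator"])
    return {task: len(people) for task, people in originators.items()}

print(staff_per_task(log))  # {'Cough in tissue': 2, 'Maintain physical distance': 1}
```

Counting distinct people rather than raw rows keeps a single staff member who repeats a measure from inflating its bar.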

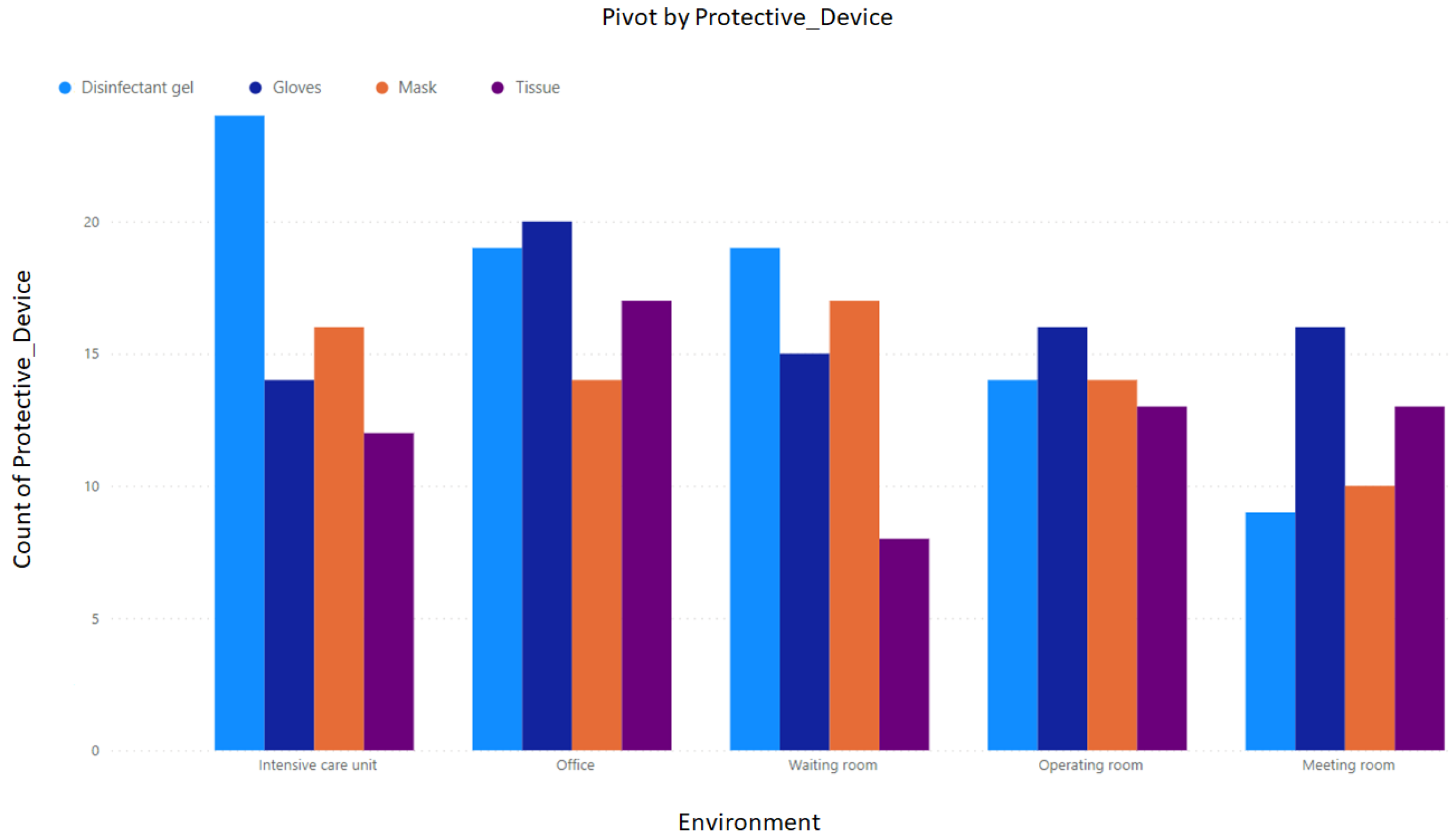

In Figure 6, we show a pivoting analysis as a multi-attribute stacked column bar plot of the count of protective devices (attribute Protected_Device) used in each specific environment (attribute Environment) inside the healthcare institution.
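A pivot such as the one in Figure 6 amounts to a cross-tabulation of two attributes. The sketch below is illustrative only: pivot_counts is a hypothetical helper, the rows are invented, and filling missing combinations with zero is our assumption about how empty stack segments are treated.

```python
from collections import Counter

# Hypothetical rows; values are placeholders, not institutional data.
rows = [
    {"Environment": "ICU", "Protected_Device": "FFP2 mask"},
    {"Environment": "ICU", "Protected_Device": "Gloves"},
    {"Environment": "ICU", "Protected_Device": "FFP2 mask"},
    {"Environment": "Waiting room", "Protected_Device": "Surgical mask"},
]

def pivot_counts(rows, index, columns):
    """Cross-tabulate: one entry per `index` value, holding a count per `columns` value."""
    counts = Counter((r[index], r[columns]) for r in rows)
    col_values = sorted({r[columns] for r in rows})
    # Counter returns 0 for unseen pairs, i.e. an empty segment in the stacked bar.
    return {
        idx: {col: counts[(idx, col)] for col in col_values}
        for idx in sorted({r[index] for r in rows})
    }

table = pivot_counts(rows, index="Environment", columns="Protected_Device")
print(table["ICU"])  # {'FFP2 mask': 2, 'Gloves': 1, 'Surgical mask': 0}
```

Each outer key becomes one column of the plot and each inner count one stacked segment.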

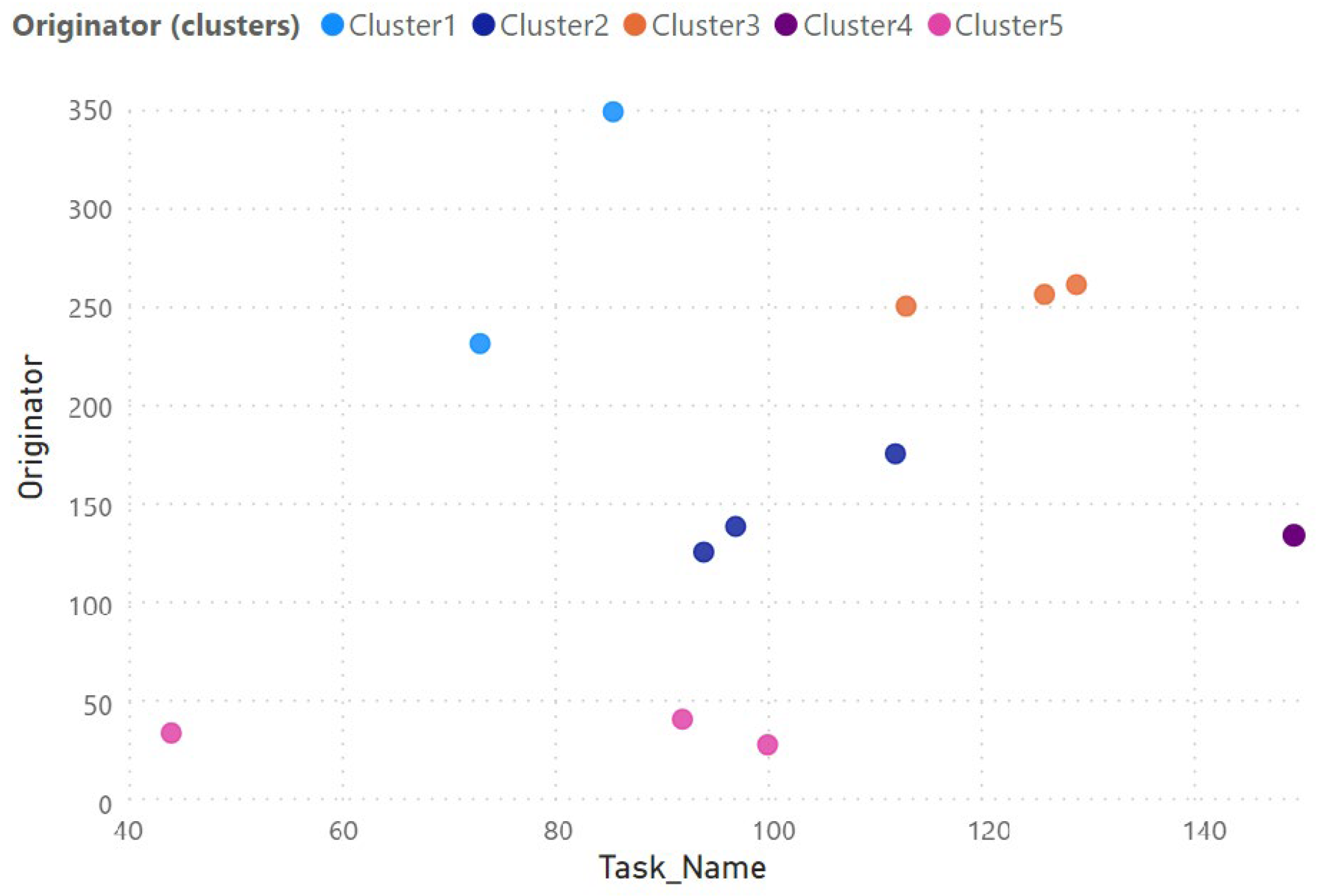

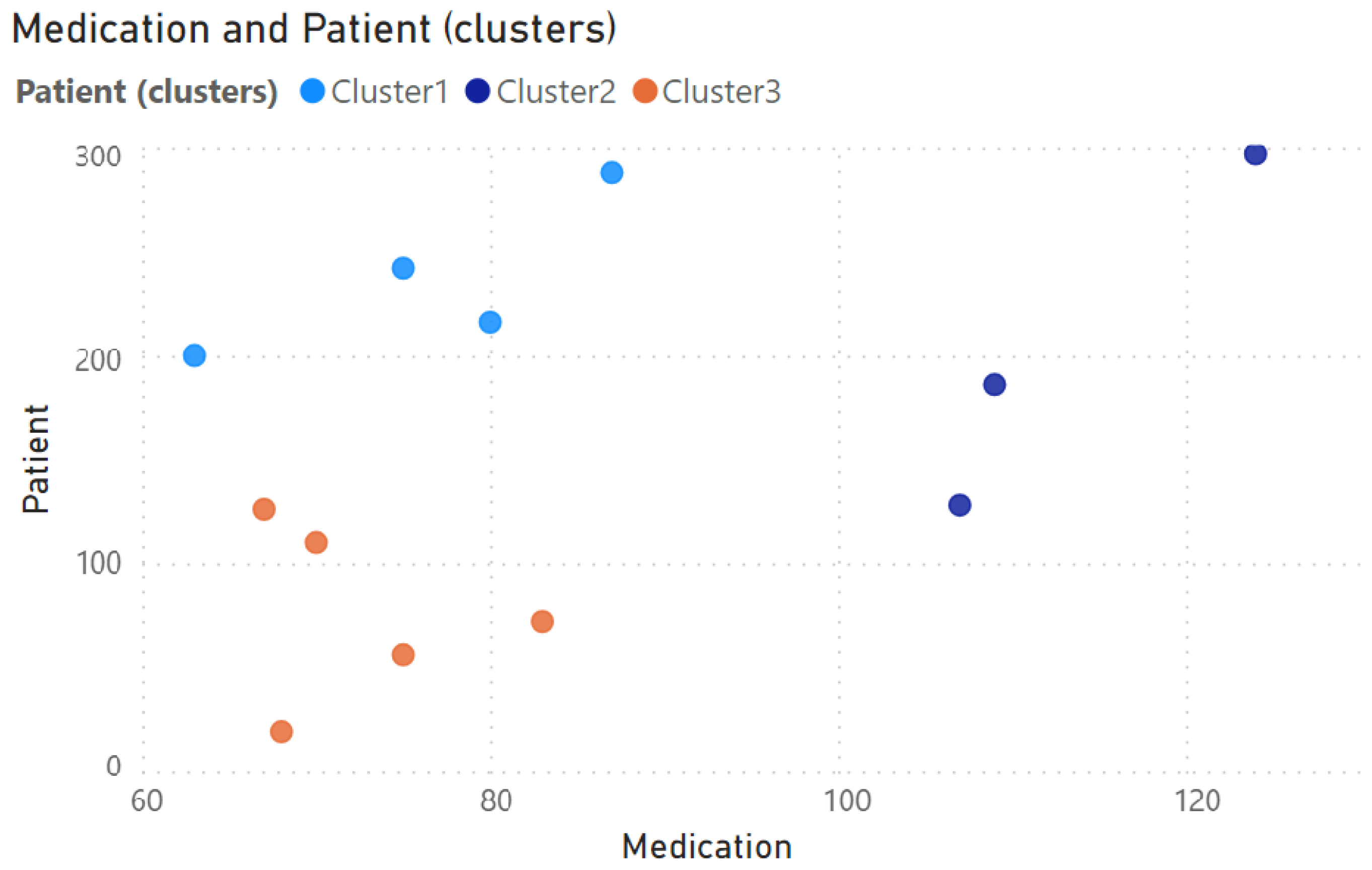

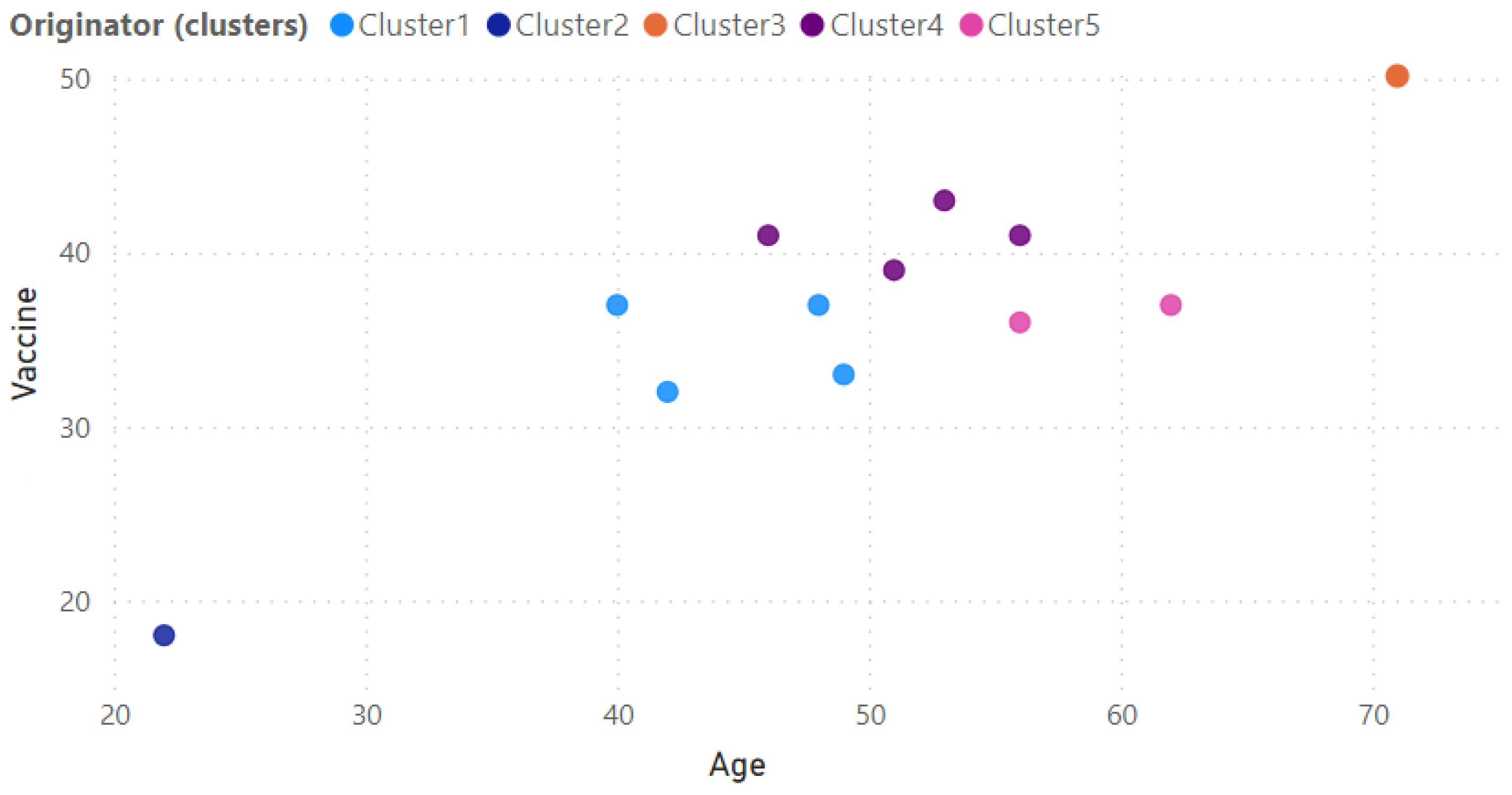

Figure 7 shows the result of applying the clustering algorithm to the Behavioral Infection Control dataset presented in Figure 3. The clustering groups the prevention measures conducted by the healthcare institution staff members (attribute Task_Name) according to the staff member who conducted each measure (attribute Originator).
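The clustering algorithm used for Figure 7 is not specified in this section, so the following is only one plausible reading, sketched with plain k-means (our choice, not necessarily the authors'): each Originator is represented by how many times they performed each Task_Name, and similar profiles are grouped together. The data, TASKS, and helper names are hypothetical.

```python
from collections import Counter

TASKS = ["Cough in tissue", "Maintain physical distance"]

# Hypothetical log: two staff members mostly perform one measure, two the other.
rows = (
    [{"Originator": "nurse_01", "Task_Name": "Cough in tissue"}] * 3
    + [{"Originator": "nurse_02", "Task_Name": "Cough in tissue"}] * 2
    + [{"Originator": "doctor_07", "Task_Name": "Maintain physical distance"}] * 3
    + [{"Originator": "doctor_08", "Task_Name": "Maintain physical distance"}] * 2
)

def task_profiles(rows, tasks):
    """Map each Originator to a vector of counts, one entry per Task_Name."""
    per_person = {}
    for r in rows:
        per_person.setdefault(r["Originator"], Counter())[r["Task_Name"]] += 1
    return {p: [c[t] for t in tasks] for p, c in per_person.items()}

def kmeans(points, k, iterations=10):
    """Plain k-means with squared Euclidean distance; centroids start at the first k points."""
    names = list(points)
    centroids = [list(points[n]) for n in names[:k]]
    labels = {}
    for _ in range(iterations):
        # Assignment step: attach each Originator to its nearest centroid.
        for n in names:
            dists = [sum((a - b) ** 2 for a, b in zip(points[n], c)) for c in centroids]
            labels[n] = dists.index(min(dists))
        # Update step: move each non-empty centroid to the mean of its members.
        for j in range(k):
            members = [points[n] for n in names if labels[n] == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels

labels = kmeans(task_profiles(rows, TASKS), k=2)
print(labels)  # {'nurse_01': 0, 'nurse_02': 0, 'doctor_07': 1, 'doctor_08': 1}
```

Deterministic initialization keeps the toy run reproducible; on real logs, several random restarts or a library implementation would be the safer choice.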

From this first experimental campaign, conducted on the Behavioral Infection Control Process Model (see Figure 2) and its corresponding dataset depicted in Figure 3, it follows that employing data sampling, aggregation analysis, pivoting analysis, and clustering allows us to obtain significant insights into the dynamics of behavioral infection control during pandemics. In particular, the dataset provides essential multidimensional attributes that facilitate the understanding of waste management and medical interventions, contributing to more effective and timely decision-making processes.

The adoption of data sampling, aggregation analysis, pivoting analysis, and clustering techniques within our proposed framework offers several advantages. Aggregation analysis allows the synthesis of detailed behavioral data into high-level indicators that facilitate the identification of key patterns and trends related to pandemic management. Pivoting analysis enables flexible exploration of the multidimensional dataset, revealing correlations and dependencies between behavioral attributes such as time, tasks, personnel, and resource utilization. Finally, clustering uncovers hidden patterns and groups of behaviors, supporting the identification of critical situations and the anticipation of future needs. The integration of these techniques into the proposed framework provides decision-makers with actionable insights and contributes to more effective and timely pandemic response strategies.

The results of this first experiment highlight the effectiveness of the proposed framework in analyzing behavioral infection control measures during pandemics. Aggregation analysis quantifies critical behaviors such as task execution and personnel actions, helping to identify trends and inefficiencies in pandemic-related waste management and medical interventions. Pivoting analysis provides a multidimensional view of the relationships between different attributes (e.g., time, personnel, and resource allocation), allowing for targeted decision-making. Clustering reveals hidden patterns in resource usage and task execution over time, offering valuable insights for anticipating future preventive actions. Overall, these findings indicate that our framework supports effective behavioral data analysis and data-driven decision-making for pandemic response management.

These analytical tools are essential not only for Behavioral Infection Control but also for improving broader pandemic control efforts, such as Environmental Pandemic Control, Pandemic Medication Planning, Pandemic Case Surveillance, and Pandemic Vaccination Planning, where precise insights guide more effective planning and intervention strategies. These processes are described in detail in the following sections.

5.2. Environmental Pandemic Control

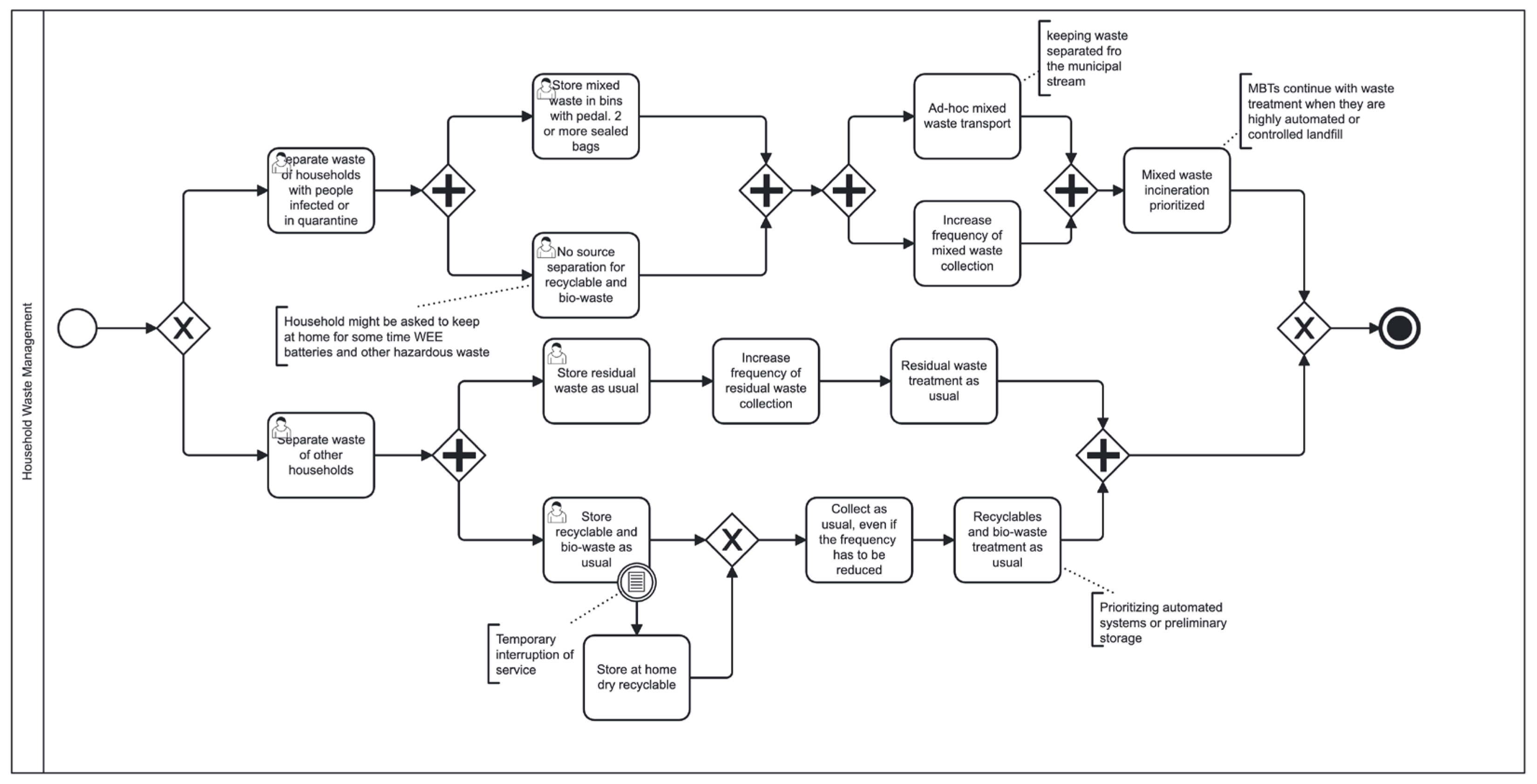

The business process model Environmental Pandemic Control for household waste management, depicted in Figure 8, delineates three distinct phases (storage, transport, and treatment) and accommodates two categories of waste: waste from people infected with the pandemic virus and waste from susceptible people. For infected individuals, the storage phase involves specific tasks, including the segregation of waste from households with infected individuals or individuals in mandatory quarantine, the use of bins with pedal-operated lids for mixed waste containment, and the temporary suspension of source separation for recyclables and bio-waste. The transport phase then entails ad hoc mixed waste transport services with segregated containers and increased collection frequency. Treatment prioritizes mixed waste incineration or, alternatively, conventional methods such as Mechanical-Biological Treatment (MBT) and controlled landfill. Conversely, for susceptible individuals, the storage phase maintains conventional waste containment practices with increased emphasis on proper bag sealing. Transport procedures involve a higher collection frequency for residual waste, and treatment strategies focus on automated systems or preliminary storage for both recyclables and bio-waste. This model provides a structured approach to household waste management tailored to different scenarios, thereby contributing to efficient resource utilization and public health protection under pandemic conditions.
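The branching structure of Figure 8 can be made explicit as a small lookup table keyed by waste category. This is a hypothetical encoding that paraphrases the tasks listed above; the dictionary layout, the short task labels, and the route helper are our assumptions, not part of the published model.

```python
# Hypothetical encoding of the Figure 8 model: category -> phase -> tasks.
PHASES = ("storage", "transport", "treatment")

WASTE_PROCESS = {
    "infected": {
        "storage": [
            "Segregate waste of infected or quarantined households",
            "Use pedal-operated bins for mixed waste",
            "Suspend source separation of recyclables and bio-waste",
        ],
        "transport": [
            "Ad hoc mixed-waste collection with segregated containers",
            "Increase collection frequency",
        ],
        "treatment": ["Incineration (preferred)", "MBT", "Controlled landfill"],
    },
    "susceptible": {
        "storage": ["Conventional containment", "Seal bags properly"],
        "transport": ["Increase collection frequency for residual waste"],
        "treatment": ["Automated systems or preliminary storage (recyclables, bio-waste)"],
    },
}

def route(category):
    """Return the ordered (phase, tasks) sequence for one waste category."""
    if category not in WASTE_PROCESS:
        raise ValueError(f"unknown waste category: {category!r}")
    return [(phase, WASTE_PROCESS[category][phase]) for phase in PHASES]

for phase, tasks in route("infected"):
    print(phase, "->", "; ".join(tasks))
```

Keeping the phase order in a single tuple guarantees that both categories traverse storage, transport, and treatment in the same sequence, as in the model.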

For the behavioral pandemic-fighting and preventive measures pertaining to municipal waste management, the extracted dataset encompasses multidimensional attributes and measures essential for understanding waste-handling dynamics during health crises.

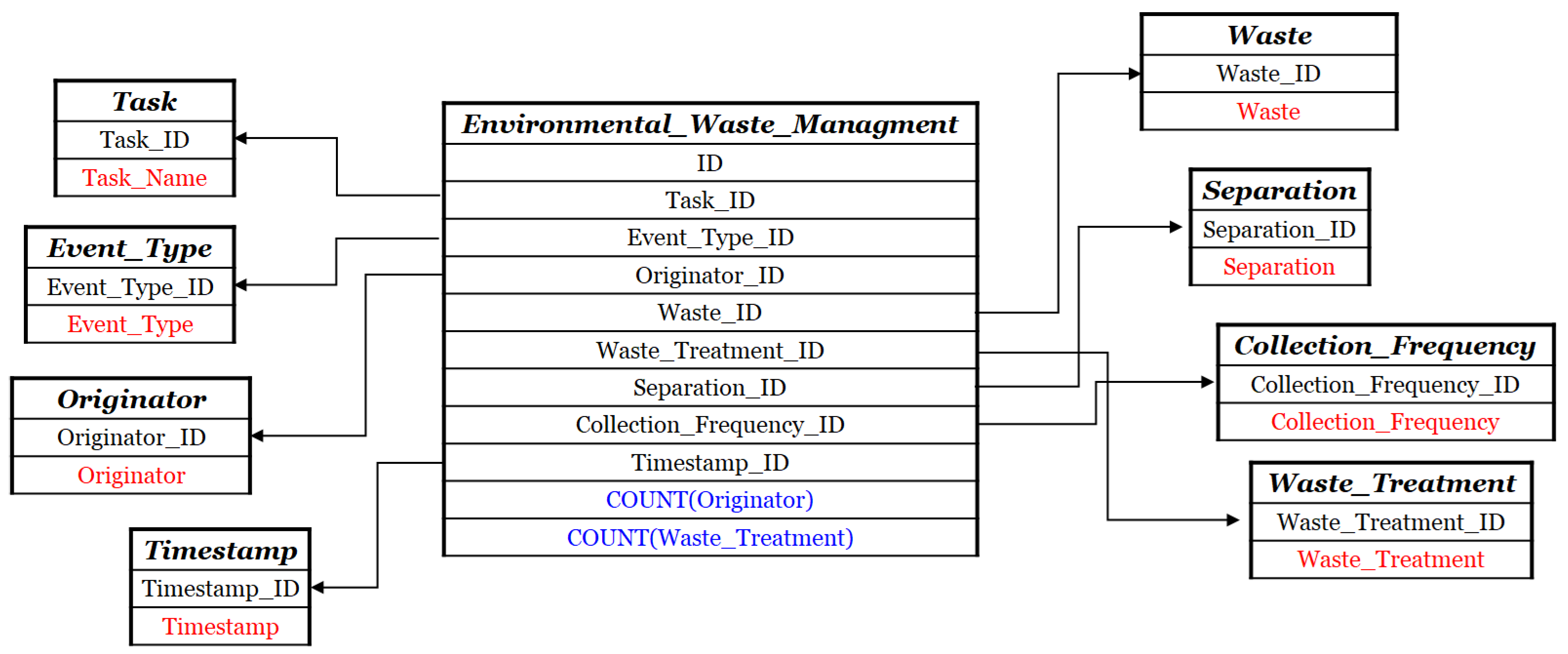

Figure 9 presents the Dimensional Fact Model of the Environmental Pandemic Control dataset. This diagram delineates how the dataset was constructed by linking recorded task events with environmental control variables related to waste management. Specifically, the model comprises the following key dimensions (red-colored in Figure 9): (i) Task_Name; (ii) Event_Type; (iii) Originator; (iv) Waste and Separation; (v) Collection_Frequency; (vi) Waste_Treatment. The selected measures are the count of distinct Originator values and the count of distinct Waste_Treatment values (blue-colored in Figure 9). Each record captures a unique procedural instance, identified by the ID and Timestamp fields. By formalizing the relationships among these variables, the schema clarifies the underlying data structure and supports a more systematic interpretation of environmental interventions. This structured representation enhances the dataset's utility for downstream analyses, such as assessing adherence to waste handling protocols and identifying patterns in environmental control practices during pandemic conditions.

These attributes include

Task_Name, delineating specific waste management activities;

Event_Type, specifying the final state of each task execution (i.e., event);

Originator, identifying the entity initiating the action;

Timestamp, providing temporal context for each event;

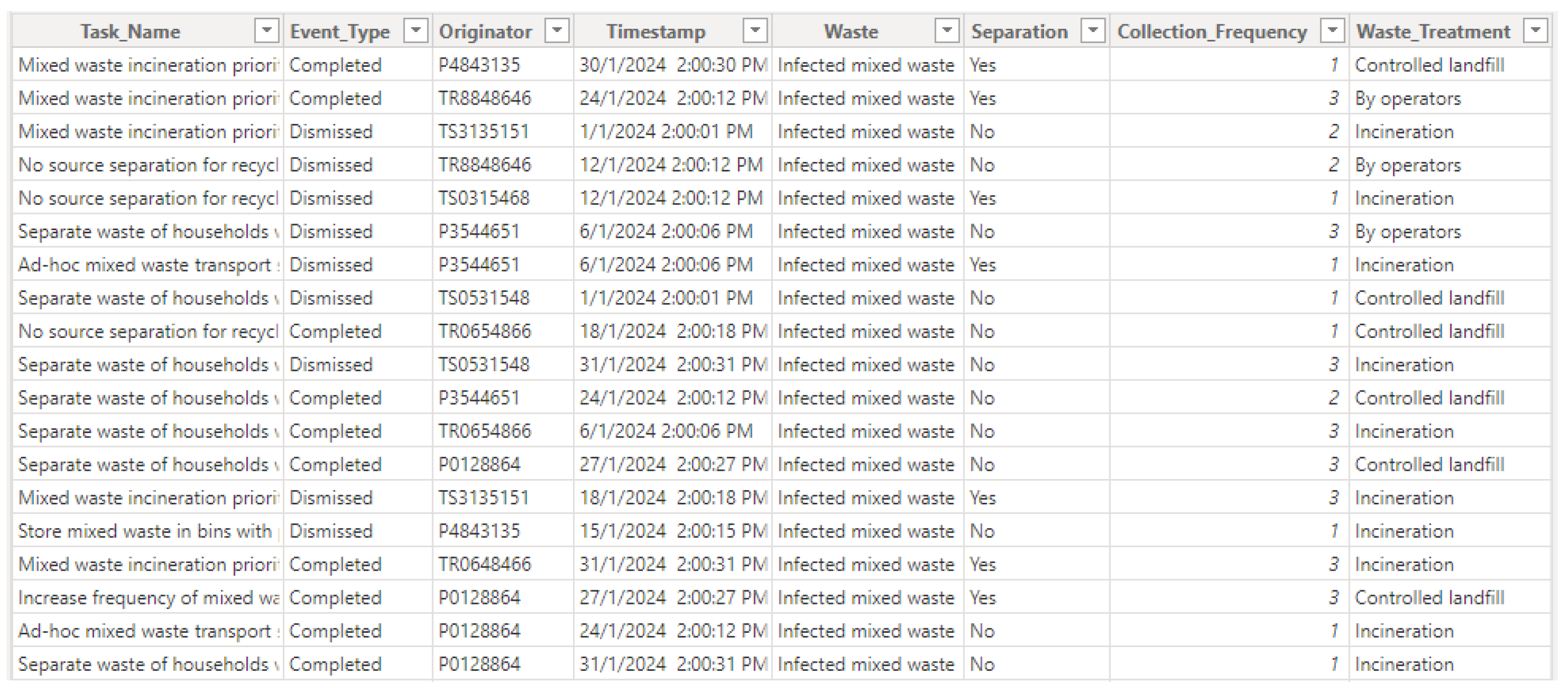

Waste, categorizing the type of waste involved;

Separation, indicating the segregation process undertaken;

Collection_Frequency, detailing the regularity of waste collection activities; and

Waste_Treatment, elucidating the method employed for waste disposal or treatment. Such a dataset facilitates rigorous analysis of behavioral patterns in municipal waste management practices during pandemics, fostering insights into the effectiveness of preventive measures and their impact on waste handling strategies. In

Figure 10, we provide a sample of

Environmental Pandemic Control dataset that we have just described.
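The two measures of the Figure 9 schema can be computed by rolling facts up to any single dimension. The sketch below is a minimal illustration with invented rows; roll_up is a hypothetical helper, and only a subset of the schema's fields is populated.

```python
from collections import defaultdict

# Hypothetical fact rows (not taken from Figure 10); keys follow the Figure 9 schema.
facts = [
    {"ID": 1, "Timestamp": "2021-03-01T07:00", "Task_Name": "Transport mixed waste",
     "Originator": "op_A", "Waste_Treatment": "Incineration"},
    {"ID": 2, "Timestamp": "2021-03-01T07:40", "Task_Name": "Transport mixed waste",
     "Originator": "op_B", "Waste_Treatment": "Incineration"},
    {"ID": 3, "Timestamp": "2021-03-01T09:10", "Task_Name": "Transport mixed waste",
     "Originator": "op_A", "Waste_Treatment": "MBT"},
    {"ID": 4, "Timestamp": "2021-03-01T10:00", "Task_Name": "Collect residual waste",
     "Originator": "op_C", "Waste_Treatment": "Controlled landfill"},
]

def roll_up(facts, dimension):
    """Group facts by one dimension and compute both distinct-count measures."""
    groups = defaultdict(lambda: (set(), set()))
    for f in facts:
        originators, treatments = groups[f[dimension]]
        originators.add(f["Originator"])
        treatments.add(f["Waste_Treatment"])
    return {
        key: {"distinct_originators": len(o), "distinct_treatments": len(t)}
        for key, (o, t) in groups.items()
    }

print(roll_up(facts, "Task_Name"))
print(roll_up(facts, "Originator"))
```

Because both measures are distinct counts, they are not additive: totals at a coarser level must be recomputed from the facts rather than summed from finer groups.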

Figure 11 shows an aggregation analysis bar plot representing the count of municipal waste management personnel (attribute Originator) who have performed each type of pandemic-fighting and prevention measure (attribute Task_Name).

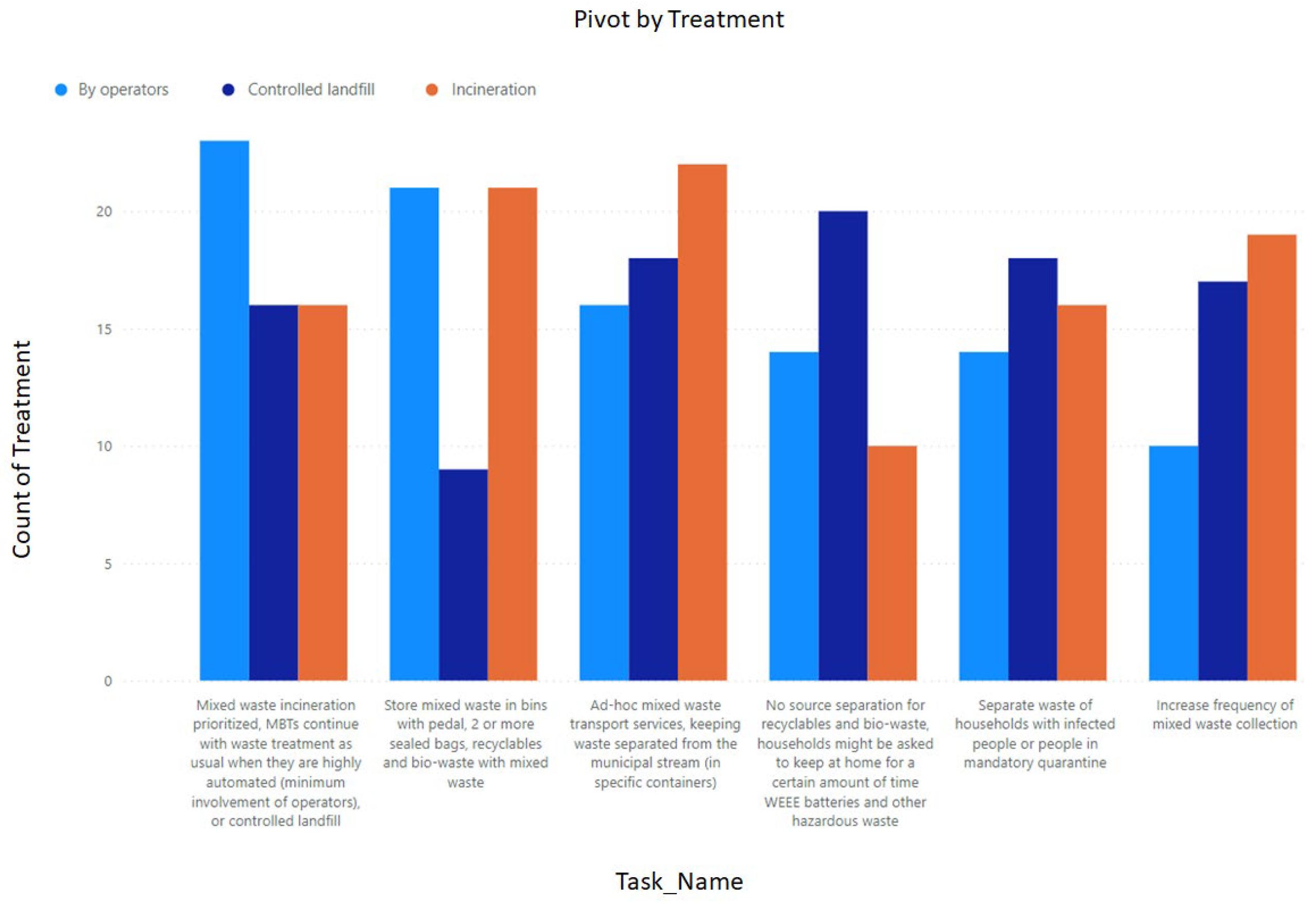

In Figure 12, we show a pivoting analysis as a multi-attribute stacked column bar plot of the count of municipal waste treatments (attribute Waste_Treatment) performed for each specific preventive measure (attribute Task_Name) during the waste management process. As in the other cases, these analytics methodologies allow us to gain knowledge insights that form the basis for improved KDDI processes.

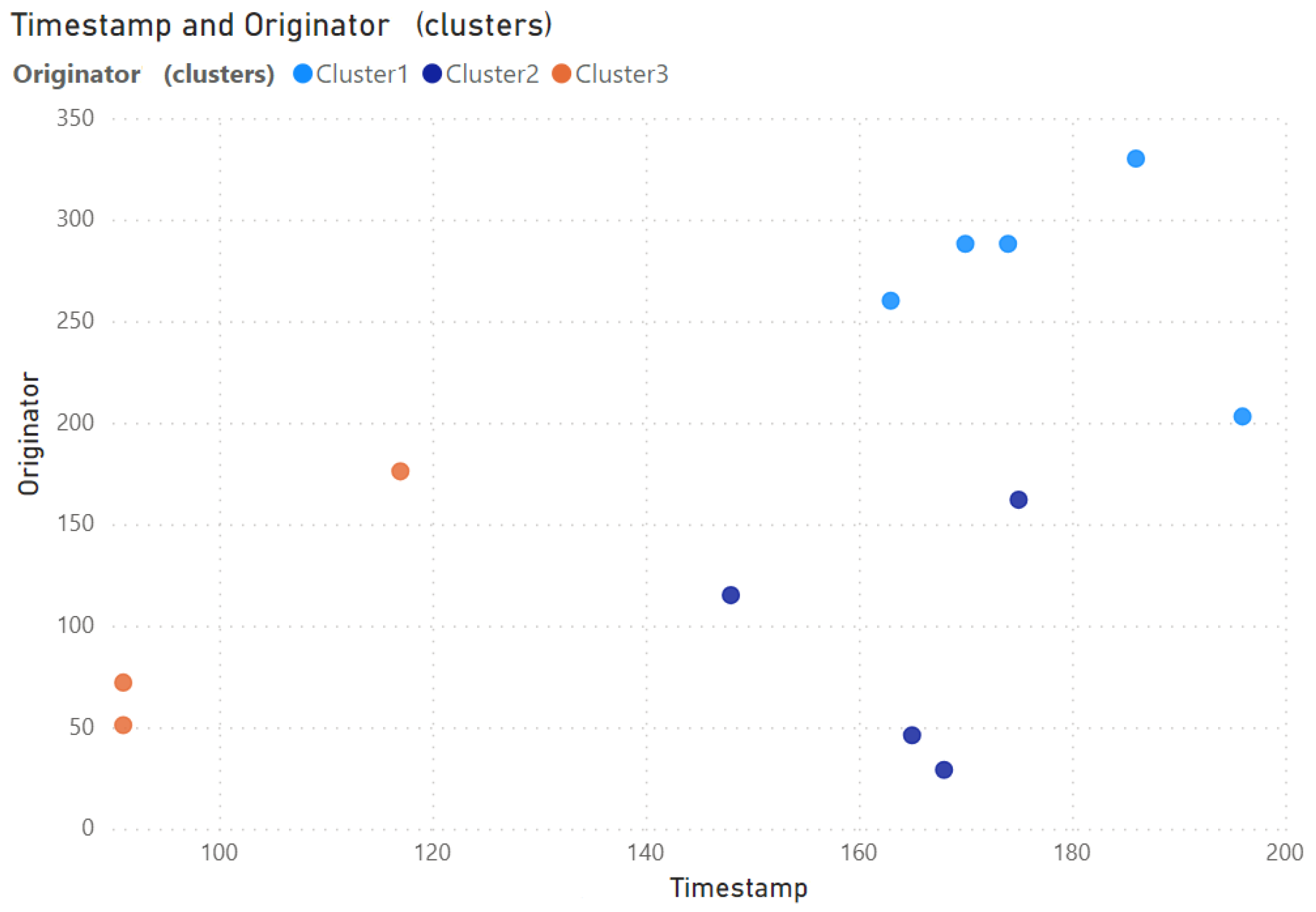

Figure 13 shows the result of applying the clustering algorithm to the Environmental Pandemic Control dataset presented in Figure 10. The clustering groups the execution times of actions (attribute Timestamp) by the member of the municipal waste management personnel who executed them (attribute Originator).
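Since the clustering method for Figure 13 is not stated here, the following sketch uses one simple option for a single time axis: for each Originator, sort the timestamps and start a new cluster whenever two consecutive actions are more than max_gap apart. In one dimension this coincides with single-linkage clustering cut at that distance. The two-hour threshold, the rows, and cluster_times are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical action log for two waste management operators.
rows = [
    {"Originator": "op_A", "Timestamp": "2021-03-01T06:00"},
    {"Originator": "op_A", "Timestamp": "2021-03-01T06:30"},
    {"Originator": "op_A", "Timestamp": "2021-03-01T07:15"},
    {"Originator": "op_A", "Timestamp": "2021-03-01T14:00"},
    {"Originator": "op_A", "Timestamp": "2021-03-01T14:20"},
    {"Originator": "op_B", "Timestamp": "2021-03-01T22:00"},
]

def cluster_times(rows, max_gap=timedelta(hours=2)):
    """Per Originator, split sorted timestamps wherever consecutive gaps exceed max_gap."""
    times = defaultdict(list)
    for r in rows:
        times[r["Originator"]].append(datetime.fromisoformat(r["Timestamp"]))
    clusters = {}
    for person, ts in times.items():
        ts.sort()
        groups = [[ts[0]]]
        for prev, cur in zip(ts, ts[1:]):
            if cur - prev > max_gap:
                groups.append([])  # gap too large: open a new cluster
            groups[-1].append(cur)
        clusters[person] = groups
    return clusters

clusters = cluster_times(rows)
for person, groups in clusters.items():
    print(person, [len(g) for g in groups])  # op_A [3, 2] then op_B [1]
```

The threshold plays the role of the number of clusters in k-means: a smaller max_gap splits work into more, shorter sessions.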

Clustering analysis performed in this experimental campaign provides key insights into behavioral patterns in pandemic-related activities. The results reveal distinct groupings of task execution, personnel involvement, and resource utilization, allowing for the identification of patterns and anomalies in pandemic response strategies. This analysis highlights variations in workload distribution, enabling the detection of potential bottlenecks in medical interventions and waste management processes. Furthermore, clustering helps in predicting future trends by identifying recurring behavioral patterns over time, supporting proactive decision-making.

5.3. Pandemic Medication Planning

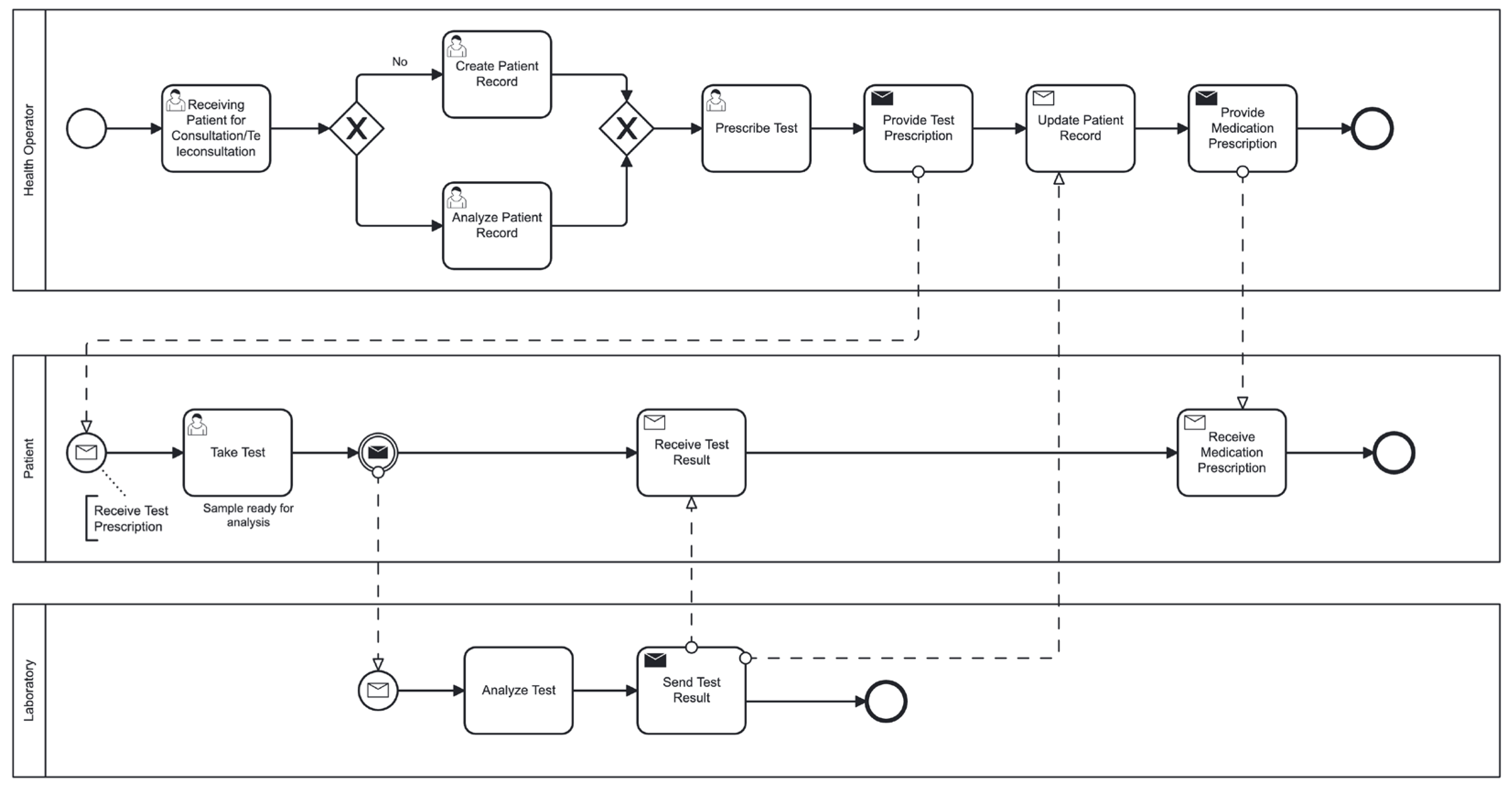

The Pandemic Medication Planning process model, depicted in Figure 14, represents the process we propose for identifying patients who test positive for a particular pandemic virus. It is initiated when a patient presenting symptoms is received, either in an on-site consultation or via teleconsultation. A doctor or health operator receives the patient and creates a new patient file if the patient is not already registered on the platform; otherwise, the operator analyzes the patient's request.

The health operator then sends the form to the patient to keep and, depending on the symptoms presented, prescribes an RT-PCR (Reverse Transcriptase Polymerase Chain Reaction) test. The patient must take the RT-PCR test in a laboratory, which must return the results within 48 h. Figure 14 shows the business process just described.
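Two decision points of this process, the register-or-analyze branch and the 48 h laboratory turnaround, can be sketched as follows. The registry structure and function names are hypothetical, and the decision to prescribe the test is left to the operator rather than encoded, since the process does not specify symptom criteria.

```python
from datetime import datetime, timedelta

RESULT_DEADLINE = timedelta(hours=48)  # laboratory turnaround stated in the process

def receive_patient(registry, patient_id):
    """Open a patient file if none exists; return True if the patient was already registered."""
    already_registered = patient_id in registry
    if not already_registered:
        registry[patient_id] = {"id": patient_id, "tests": []}
    return already_registered

def result_within_deadline(test_taken, result_returned):
    """True if the laboratory returned the RT-PCR result within 48 h of the test."""
    if result_returned < test_taken:
        raise ValueError("result cannot precede the test")
    return result_returned - test_taken <= RESULT_DEADLINE

registry = {}
print(receive_patient(registry, "P001"))  # False: new file created
print(receive_patient(registry, "P001"))  # True: existing file, request analyzed
taken = datetime(2021, 3, 1, 9, 0)
print(result_within_deadline(taken, taken + timedelta(hours=47)))  # True
print(result_within_deadline(taken, taken + timedelta(hours=49)))  # False
```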

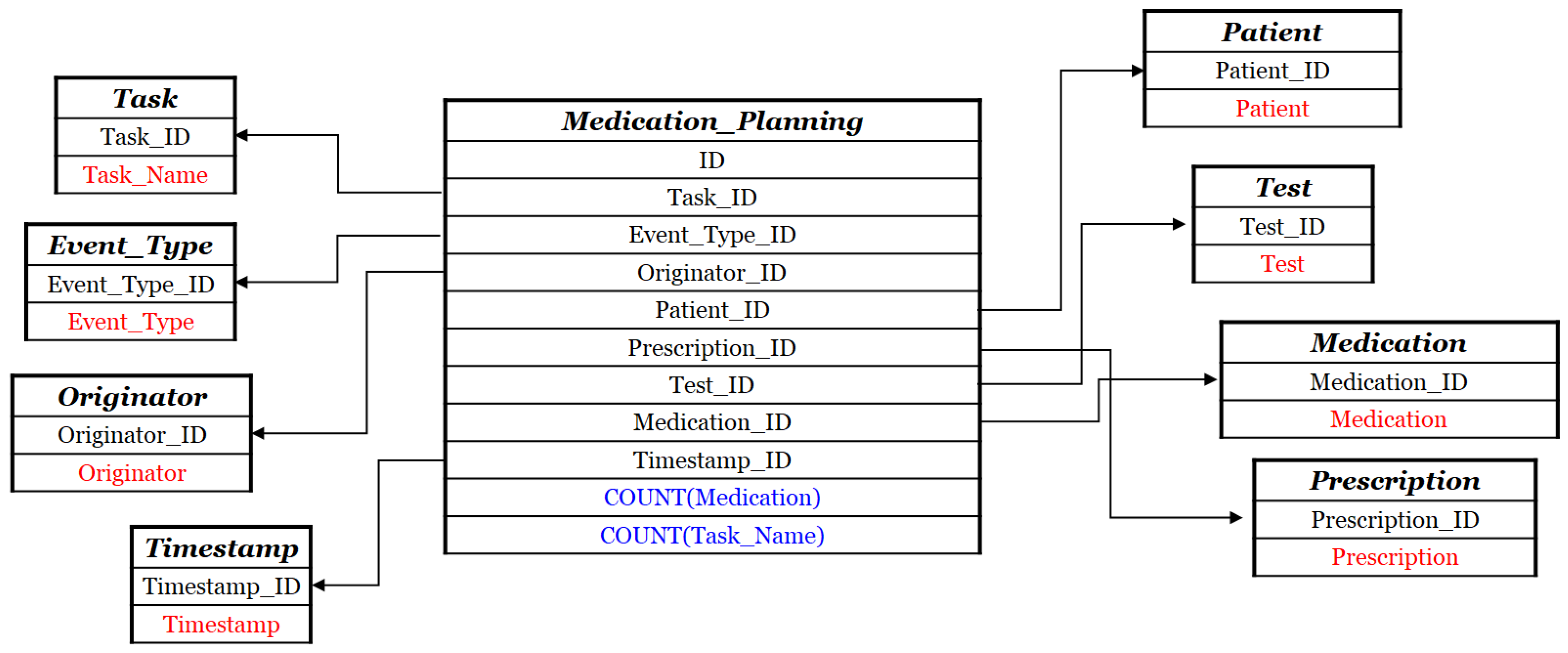

Figure 15 presents the Dimensional Fact Model of the Pandemic Medication Planning dataset. This diagram outlines the structure of the dataset, which integrates task-level events with medical planning and prescription-related information. The dataset includes the following key dimensions (red-colored in Figure 15): (i) Task_Name; (ii) Event_Type; (iii) Originator; (iv) Patient; (v) Test; (vi) Medication; (vii) Prescription, with each record uniquely identified by an ID and timestamped via the Timestamp field. The selected measures are the count of distinct Medication values and the count of distinct Task_Name values (blue-colored in Figure 15). The schema reflects the linkage between diagnostic procedures, patient-specific data, and therapeutic decisions, thereby enabling a coherent understanding of the medication planning process during pandemic response scenarios. By formalizing these relationships, the conceptual schema clarifies the dataset structure and enhances its applicability for evaluating clinical workflows, prescription compliance, and treatment decision-making dynamics in emergency health contexts.
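A fact record of this schema, together with a slice operation that fixes some dimensions before computing the two measures, might look like the sketch below. The dataclass mirrors the dimension names of Figure 15 (hence the capitalized field names rather than PEP 8 style); the values and the measures helper are hypothetical placeholders.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class MedicationFact:
    """One fact of the Pandemic Medication Planning log; field names mirror Figure 15."""
    ID: int
    Timestamp: datetime
    Task_Name: str
    Event_Type: str
    Originator: str
    Patient: str
    Test: str
    Medication: str
    Prescription: str

def measures(facts, **slice_):
    """Slice the facts on dimension=value pairs, then compute both distinct-count measures."""
    selected = [f for f in facts if all(getattr(f, d) == v for d, v in slice_.items())]
    return {
        "distinct_medications": len({f.Medication for f in selected}),
        "distinct_tasks": len({f.Task_Name for f in selected}),
    }

# Hypothetical facts; all values are placeholders.
facts = [
    MedicationFact(1, datetime(2021, 3, 1, 9, 0), "Prescribe test", "complete",
                   "dr_X", "P001", "RT-PCR", "Med_A", "RX-1"),
    MedicationFact(2, datetime(2021, 3, 3, 10, 0), "Prescribe medication", "complete",
                   "dr_X", "P001", "RT-PCR", "Med_B", "RX-2"),
    MedicationFact(3, datetime(2021, 3, 4, 11, 0), "Prescribe medication", "complete",
                   "dr_Y", "P002", "RT-PCR", "Med_A", "RX-3"),
]

print(measures(facts, Patient="P001"))    # {'distinct_medications': 2, 'distinct_tasks': 2}
print(measures(facts, Originator="dr_Y"))  # {'distinct_medications': 1, 'distinct_tasks': 1}
```

Calling measures with no keyword arguments evaluates the measures over the whole fact table, which corresponds to the fully rolled-up view.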

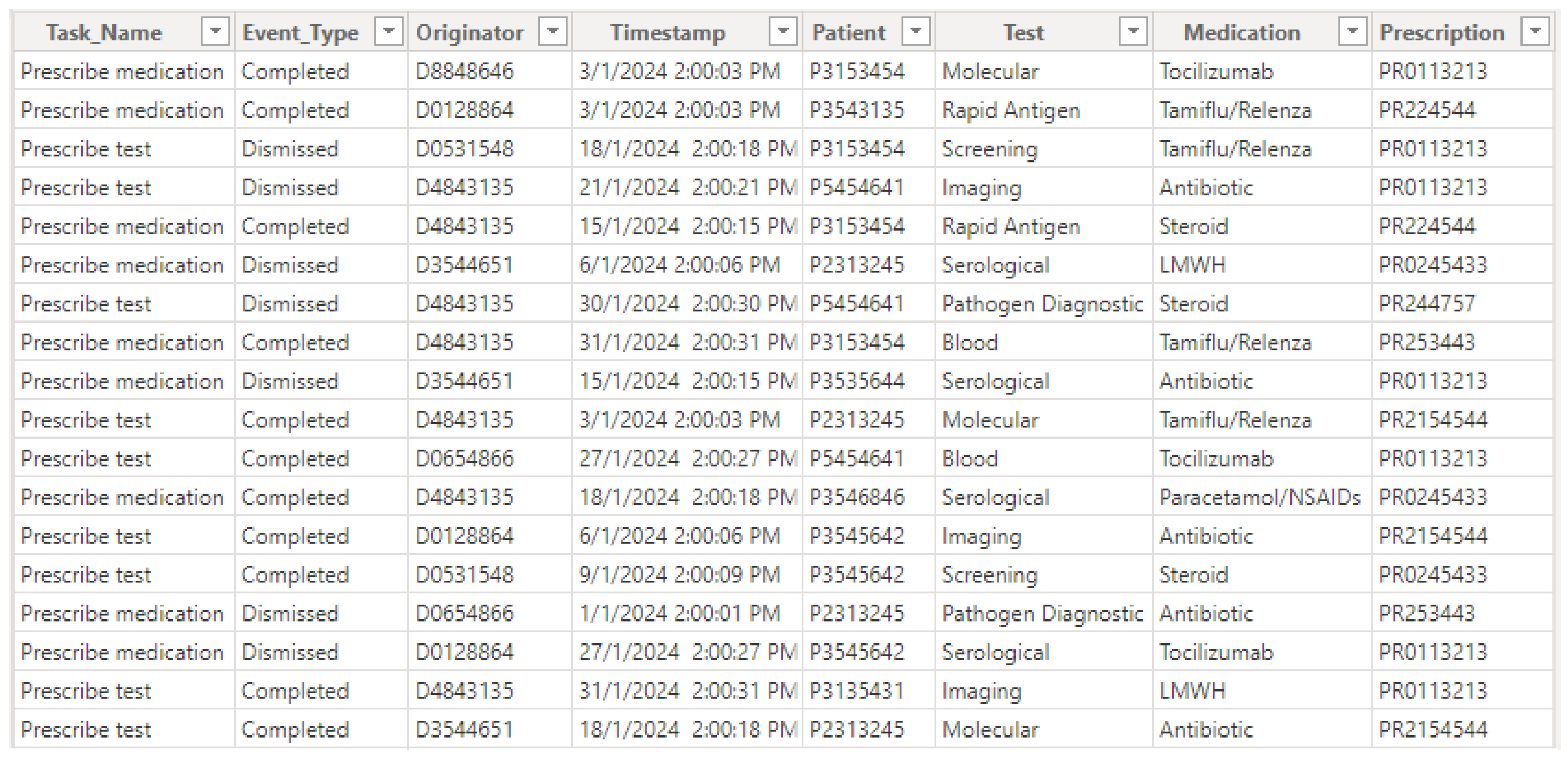

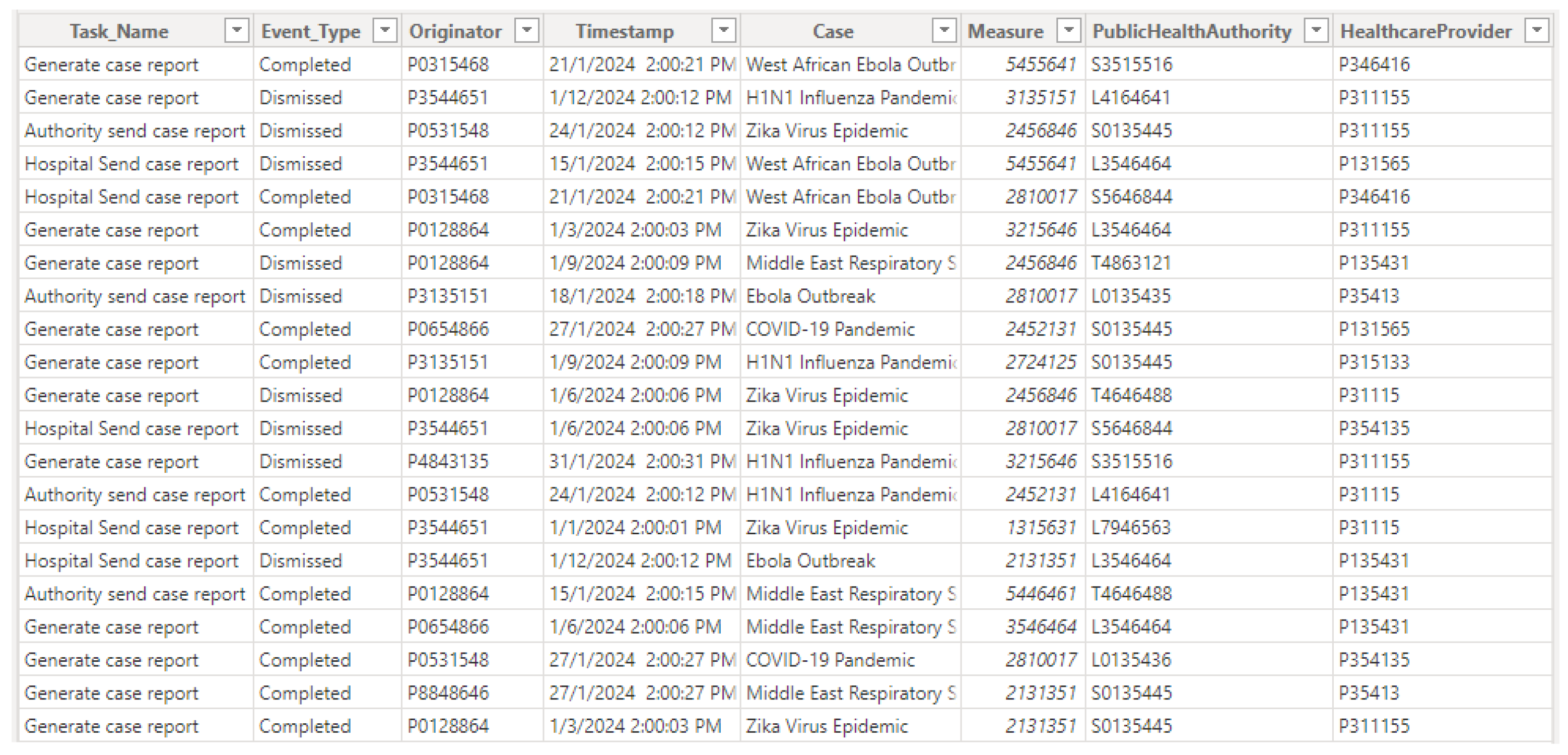

Finally, for medical pandemic-fighting and prevention measures focusing on medical test and medication prescription management, the extracted dataset comprises key multidimensional attributes and measures such as Task_Name, specifying the nature of the medical procedures undertaken; Event_Type, delineating the type of event recorded; Originator, identifying the initiator of each action; Timestamp, providing temporal context for events; Patient, identifying the individuals undergoing medical interventions; Test, detailing the diagnostic examinations conducted; Medication, specifying the pharmaceutical interventions administered; and Prescription, documenting the prescribed treatments. Such a dataset enables rigorous analysis of medical intervention patterns during pandemics, facilitating insights into the effectiveness of preventive measures and the optimization of medical resource allocation.

Figure 16 shows a sample of the Pandemic Medication Planning dataset.

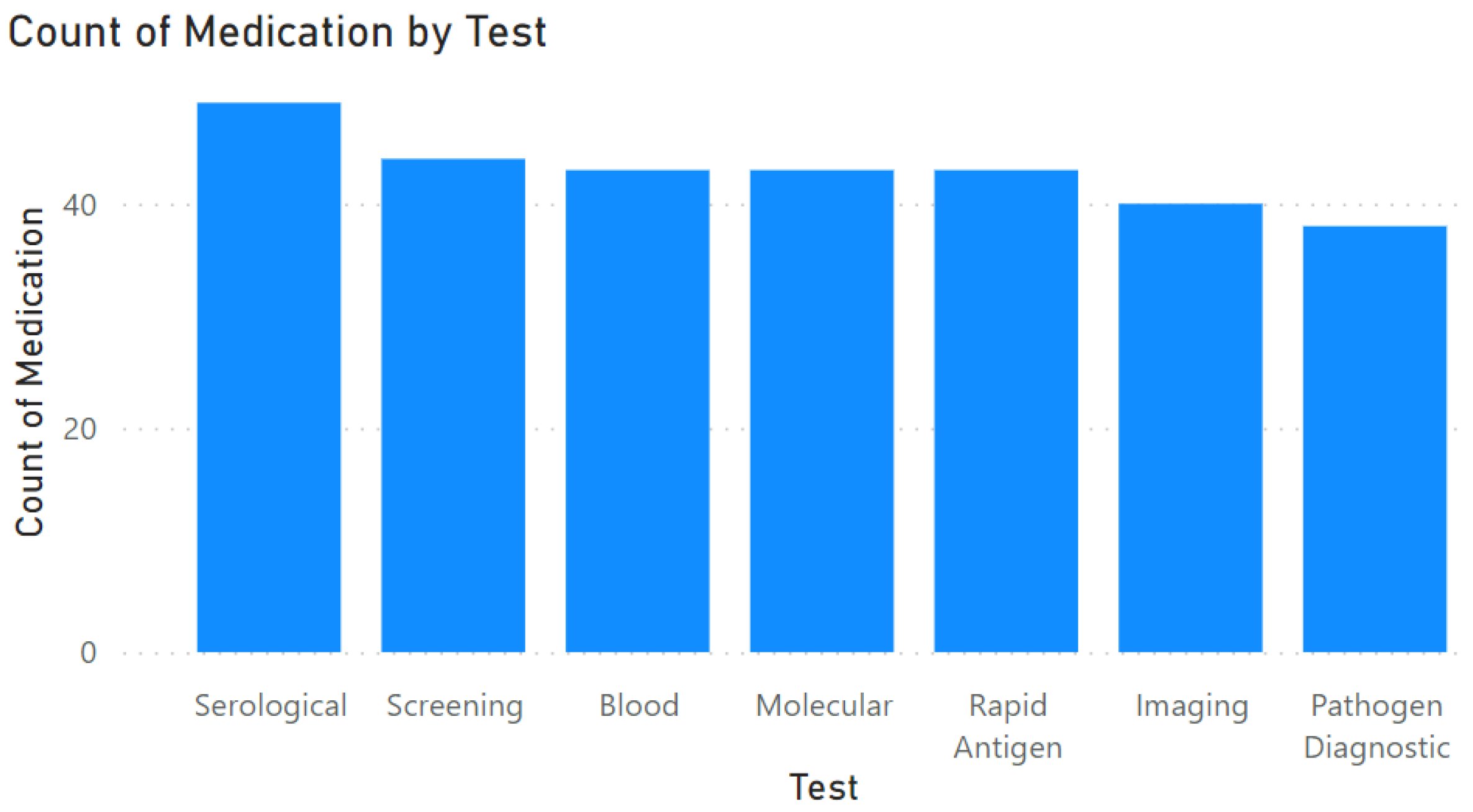

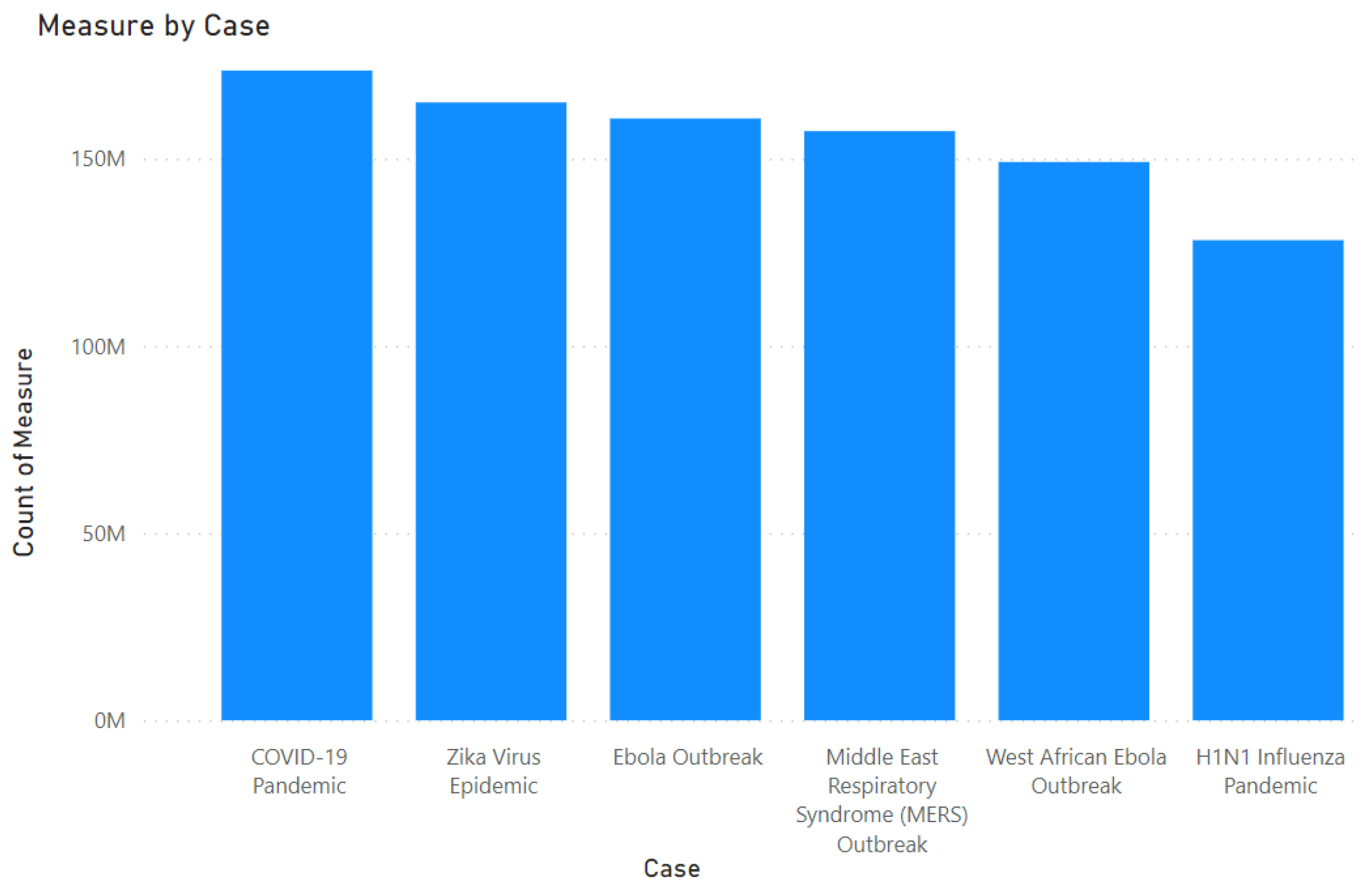

Figure 17 shows an aggregation analysis bar plot representing the count of medications (attribute Medication) that have been prescribed to patients after taking each type of medical test (attribute Test).

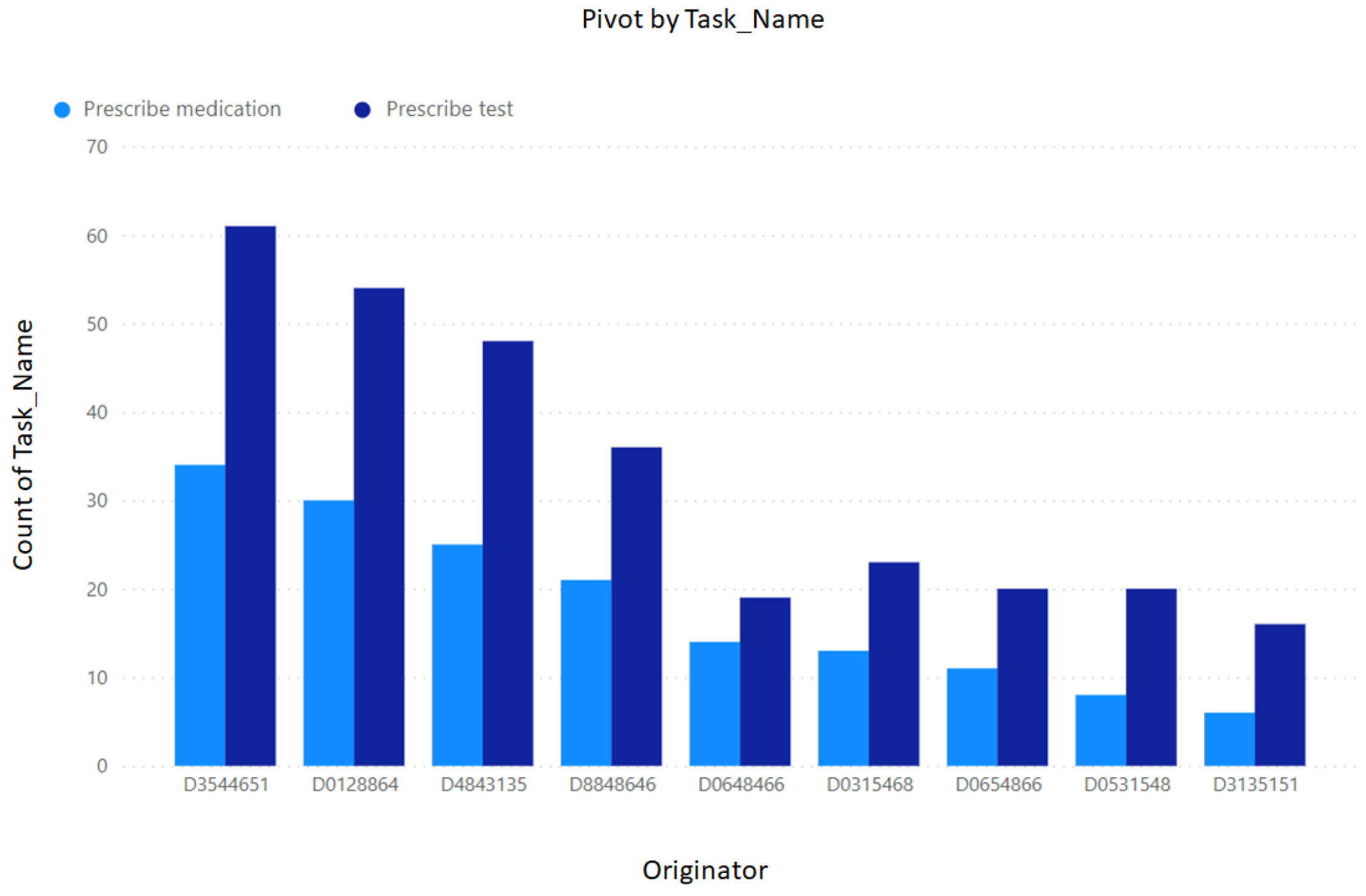

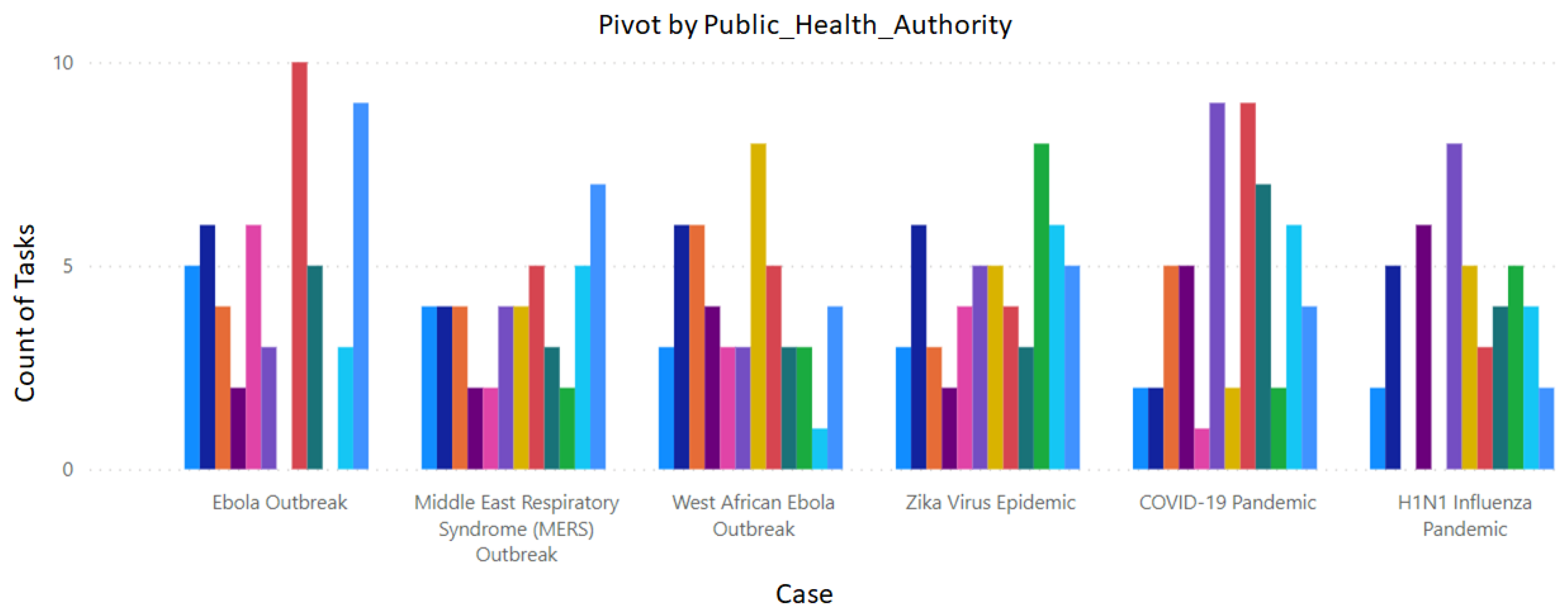

Figure 18 shows a pivoting analysis as a multi-attribute bar plot representing the count of medications (attribute