1. Introduction

Scientific documents, such as research articles, are rich sources of data for Information Retrieval (IR) and Natural Language Processing (NLP) tasks, allowing specialized knowledge to be extracted from, and analyzed across, extensive bodies of scholarly work [1]. In this framework, extraction can be conducted either at the document level or at the section level, depending on the aim of the task.

Extraction at the level of the entire document is pertinent to tasks such as overall summarization, topic modeling, or full-text classification. Conversely, focusing on specific sections within a document makes it possible to leverage the distinct content typically found in each section of a scientific paper. In this way, information about the objectives of a study, the methods used, or the key findings can be extracted using Text Mining (TM) techniques for further analysis [2].

Along these lines, abstracts of scientific articles can be a valuable source of information for understanding the scope of a study. Although abstract formats vary with field and journal guidelines, abstracts currently tend to follow a semi-structured format that includes key elements such as background, objectives, methods, results, and conclusions.

According to the latest American Psychological Association (APA) publication manual [3], abstracts of empirical articles should adhere to specific content guidelines. Among other elements, these guidelines require essential methodological details, such as data-gathering procedures, sample characteristics, research design, and materials used. While adherence to these guidelines does not in itself ensure methodological rigor or replicability, it supports transparency and encourages robust reporting practices, which were not always standard in the field.

In 2011, the publication of Bem’s paper [4], which claimed to provide experimental evidence for precognition, sparked considerable controversy in the scientific community. His research was later found to have serious methodological flaws, and his findings, which ultimately could not be replicated, raised critical questions about the methods and standards generally employed in psychological research. Notably, six years before Bem’s publication, Ioannidis had already voiced his concerns [5], showing that methodological issues in psychology did not emerge suddenly but were long-standing problems. This controversy marked the inception of the replication crisis in the field.

In 2015, the Reproducibility Project culminated in a landmark study published in Science, which succeeded in replicating only 36% of the 100 studies analyzed from leading psychology journals [6]. Other large-scale replication initiatives, such as Many Labs 1 and 2 [7], further corroborated these findings, indicating that a substantial number of psychological studies, particularly in social psychology, exhibited low replicability.

The concepts of publication bias, p-hacking, and inadequate methodological reporting became central to the discourse surrounding the replication crisis and were regarded as factors intertwined with it [8]. Publication bias refers to the tendency to publish only positive or statistically significant findings, while p-hacking describes the manipulation of data analysis or selective reporting to produce significant results. In response to these challenges, psychology journals and research institutions have initiated reforms aimed at improving research integrity. For instance, the APA reporting guidelines mentioned above exemplify efforts to enhance methodological transparency and rigor in psychological research.

Fourteen years after the publication in which he first raised these concerns, Ioannidis reiterated the importance of vigilance against selection biases that skew reported p-values and contribute to the broader misuse of statistical inference [9]. Improving the quality of psychological research depends on robust methodological practices and, crucially, on a commitment to methodological transparency. This transparency can be fostered through the systematic adoption of practices such as registered reports, data sharing, and pre-registration, all of which promote comprehensive method reporting and thereby support robust replication testing [8].

More than a decade after Bem’s publication, advances in Machine Learning (ML) and the availability of large-scale data allow for broader assessments of reliability in psychological research. Since research methods are closely linked to replication rates across psychology subfields [10], leveraging these advances to promote greater methodological transparency in abstract reporting can help strengthen research integrity and foster more robust replication efforts. This growing attention to methodological transparency and publication bias is reflected in recent work examining trends in positive result reporting in psychotherapy research [11], which illustrates the field’s increasing reliance on large-scale, data-driven approaches to assessing scientific reliability. Although methodologically similar to that work, the present study adopts a broader perspective, investigating both a wider range of methodological terminology and a broader empirical domain beyond psychotherapy research.

Research Overview and Objectives

This study applies NLP, TM, and Data Mining methods to analyze methodological reporting in psychology article abstracts over the last 30 years. Specifically, it uses a predefined, curated glossary to extract method-related keywords from the retrieved abstracts. The extracted keywords are then vectorized using SciBERT, and the resulting vectors are fed into both an unsupervised and a weighted clustering model based on k-means. Additionally, frequency and trend analyses are performed on the keywords associated with the resulting clusters, as well as on the keywords extracted from the entire dataset, irrespective of clustering.

The objectives of the study can be summarized as follows:

The targeted extraction of method-related terminology from a large corpus of psychology research abstracts, grounded in a curated, domain-specific dictionary.

The use of context-aware embeddings to examine how the extracted methodological terms are contextualized within psychological discourse.

The application of a scalable methodological pipeline that enables the exploration of thematic groupings underlying methodological terminology in psychological research via dual-mode clustering and contextual embeddings.

The results of trend analysis indicate that there is an increasing presence of method-related keywords in the examined abstracts over the years. With respect to the cluster analyses, generic keywords dominate the cluster formation, even with the weighted approach in which higher weights are assigned to keywords with theoretically high discriminative value.

Overall, this study addresses a research gap by examining temporal method groupings, as well as the prevalence of method-related keywords, in psychology abstracts over the last 30 years. By investigating these topics, it sheds light on research and method trends, contributing not only to greater transparency in scientific communication but also to potential improvements in the clarity and organization of method reporting in research papers. Notably, the application of contextual embeddings to the study of methodological language in psychology remains relatively underexplored, which further underscores the novelty and relevance of this work.

The remainder of this paper is structured as follows: Section 2 discusses previous research relevant to this work; Section 3 presents the materials and methods; Section 4 outlines the results; and Section 5 provides a discussion informed by the findings. Finally, Section 6 summarizes the conclusions and offers suggestions for future research.

2. Related Work

Over the years, the rapid growth in scientific publications has resulted in a vast corpus of information, presenting opportunities for extracting meaningful insights. Given the large volume of text, Data Mining, ML, and NLP techniques have become essential for analyzing these publications and uncovering trends embedded within them.

In ref. [12], Bittermann and Fischer applied LDA-based topic modeling to a corpus of 314,573 psychological publications from the PSYNDEX database to identify hot topics in psychology. As input for topic modeling, they used a controlled vocabulary of standardized terms assigned by the PSYNDEX editorial staff, based on a relevant APA-published thesaurus. The study further extended trend analysis by incorporating nonlinearity, employing Multilayer Perceptrons (MLPs) to model topic probabilities as nonlinear functions of publication year. By comparing the fit of nonlinear and linear models ($R^2_{\mathrm{MLP}}$ vs. $R^2_{\mathrm{linear}}$), they identified hot topics with complex, non-monotonic trends that traditional linear regression could not capture. Out of 500 topics, 128 exhibited a significant increasing linear trend, while 135 showed a significant decline. The hot topics identified included online therapy, neuropsychology and genetics, traumatization, and human migration, whereas human-factors engineering, incarceration, psychosomatic disorders, and experimental methodology were found to be fading. Moreover, assigning documents to a single PSYNDEX subject classification proved challenging, as many contained elements from multiple categories.

While Bittermann and Fischer also analyzed psychology publications using a curated vocabulary, their focus was on identifying topical trends through LDA-based topic modeling, rather than investigating methodological terminology. In contrast, the present study applies clustering techniques on contextualized embeddings to explore the semantic structure of method-related language specifically, offering a complementary perspective on research trends in psychology.

In a systematic review conducted in 2019, Park and Park applied TM techniques to a corpus of 5600 journal articles published between 2008 and 2018 and retrieved from Web of Science (WoS) to examine trends in mHealth research [13]. Specifically, they analyzed the titles and abstracts of the retrieved studies using KH Coder 3, an open-source software package for quantitative content analysis and TM. They extracted terms related to medical conditions, types of interventions, and study populations, and evaluated them using Document Frequency (DF) and a co-occurrence network. Jaccard’s coefficient was used to measure the similarity between paired terms, and betweenness centrality to assess the centrality of terms within the network. The analysis identified 48 medical terms, with mood disorders (9.7%), diabetes (9.4%), and infections (8.5%) being the most prevalent. Regarding interventions, 30 unique terms were identified, with cell phone being the most frequently mentioned intervention (DF = 18.7%). A notable shift was observed after 2012, with the use of mobile application interventions surpassing cell phone-, SMS-, and internet-based interventions. Among study populations, the term “female” appeared most frequently (DF = 20%). Furthermore, the strongest association between paired terms was observed between female populations and pregnancy issues, with a Jaccard value of 0.22. Additionally, cell phones emerged as the central node in the co-occurrence network. As expected, this node was linked to other intervention types, such as SMS and mobile applications, as well as to a range of medical conditions, including mood disorders, anxiety, and diabetes.

While Park and Park also explored trends in the scientific literature through co-occurrence analysis and term similarity based on Jaccard’s coefficient, their focus was on mHealth topics and document frequency. In contrast, the present study incorporates TF-IDF weighting and contextual embeddings to investigate the presence of methodological terminology across psychology research.

In ref. [14], Weißer et al. employed an NLP pipeline and a k-means clustering algorithm to automatically categorize large article corpora into distinct topical groups, aiming to refine and objectify the systematic literature review process. The titles, keywords, and abstracts of the documents were preprocessed and vectorized using TF-IDF before being fed into the clustering algorithm. Singular Value Decomposition (SVD) was applied to reduce the resulting word vectors to their principal components. Through this process, the initial search string was iteratively refined using the top terms from clusters of interest until the identified research community, i.e., the research clusters, was sufficiently narrowed. The optimal number of clusters was determined using the silhouette score and the elbow method. Notably, the authors tested their methodology using the generic initial search string “(Production OR Manufacturing) AND (Artificial Intelligence OR Machine Learning)”, which left room for refinement. However, the effectiveness of their approach was not validated on smaller initial corpora. Additionally, their findings suggested that abstracts may be less effective than titles and indexed keywords, unless the information is substantially reduced through SVD with an explained variance below 30%.

Weißer et al. applied a similar methodological approach, combining NLP and k-means clustering to group scientific documents based on topical similarity, with the aim of improving the systematic literature review workflow. However, the present study clusters method-related terms rather than documents, using contextual embeddings (SciBERT) instead of TF-IDF, and Uniform Manifold Approximation and Projection (UMAP) rather than SVD, to uncover semantic groupings in methodological language within psychology abstracts.

In a study published in 2021 [15], three years after [12], Wieczorek et al. also sought to map research trends in psychology, this time analyzing 528,488 abstracts retrieved from WoS. Their preprocessing steps included stop-word removal, tokenization, and lemmatization. Additionally, bigrams appearing more than 50 times were concatenated to identify common phrases. For topic identification, they applied Structural Topic Models (STMs), using semantic coherence and exclusivity to determine the optimal number of topics. They identified a range of k between 90 and 100 by locating a plateau where coherence was no longer decreasing and exclusivity showed no further improvement. As the final step of topic labeling, five scholars carried out a qualitative assessment. Linear regression applied for trend identification further revealed that psychology is increasingly evolving into an application-oriented, clinical discipline, with a growing emphasis on brain imaging techniques, such as fMRI, and on methodologies closely linked to neuroscience and cognitive science. The study also found a decreasing alignment between psychology and the humanities, as well as a decline in the coverage of psychoanalysis.

While Wieczorek et al. used STM to model topical trends in psychology abstracts, the present study shifts the analytical focus to method-related terminology, clustering semantically similar terms to explore how methodological language is reported.

In ref. [16], Weng et al. explored key topics at the intersection of AI, ML, and urban planning by analyzing 593 abstracts from Scopus. As an alternative iterative approach to that of [14], they leveraged GPT-3, the Stanford CoreNLP toolkit, the Maximal Marginal Relevance (MMR) algorithm, and the HDBSCAN clustering method. Candidate keywords were identified using syntactic patterns (Stanford CoreNLP) and refined via MMR. The authors generated embeddings for both abstracts and keywords using GPT-3 and then clustered them with HDBSCAN. For the grouping of abstracts, outliers were iteratively treated as separate clusters until all clusters contained fewer than 50 abstracts. Abstracts were assigned to a topic category based on the cosine similarity between their embeddings and the keyword embeddings. This process yielded 24 clusters, each containing between 10 and 46 abstracts, distributed across three well-defined bands. Seventeen of these clusters achieved good Silhouette scores (>0.5). As for keyword grouping, a total of 2965 keywords were identified and organized into 98 keyword groups. On average, each cluster contained three keyword groups, and every cluster had at least one keyword group with good coverage (>50%).

While Weng et al. used GPT-3 embeddings and HDBSCAN clustering to analyze a small corpus of abstracts at the intersection of AI, ML, and urban planning, the present study differs in both scale and scope, employing SciBERT and k-means to cluster methodological terms across a large body of psychology research.

In ref. [17], Shin et al. analyzed research trends in AI and healthcare technology by examining 15,260 studies, also retrieved from Scopus (1863–2018). The researchers preprocessed abstracts using OpenNLP, extracting 3949 key terms based on TF-IDF and occurrence thresholds. These terms were used in an LDA-based topic modeling process that identified seven research topics, three of them major: AI for Clinical Decision Support Systems (CDSS), AI for Medical Imaging, and Internet of Healthcare Things (IoHT). Further analysis included two-mode network analysis to assess topic overlap, word cloud analysis of terms with the top allocation probabilities, and ego network analysis of key terms. In AI for CDSS, “Medical Doctor” had the highest centrality, linking to terms such as “Medical Prescription” and “Decision Making”. AI for Medical Imaging focused on terms such as “Medical Image”, “Brain”, and “Computed Tomography (CT)”, reflecting augmented reality applications. IoHT highlighted terms such as “Sensor”, “Body Area Network (BAN)”, and “Real-Time”, indicating trends in personalized healthcare.

While Shin et al. used TF-IDF and LDA to model research topics in AI and healthcare based on abstracts, the present study diverges in both domain and method, using clustering on contextual embeddings to explore methodological language in psychology research.

Pertaining again to the field of psychology and the use of publication metadata to identify trends and hot topics, Sokolova et al. [18] analyzed emerging trends specifically in clinical psychology and psychotherapy, using metadata from Microsoft Academic Graph (2000–2019). They employed a TM system incorporating NLP and ML techniques via Gensim, following a two-step approach: identifying key terms and clustering them into thematic groups to create trend maps. Term identification combined n-gram and skip-gram extraction with a Word2Vec-based 200-dimensional vector space, refined through expert evaluation. Terms with a cosine similarity >0.5 to a psychotherapy vector were retained, resulting in 234 key terms after filtering based on significance and dynamics values (reflecting term importance and growth trends). Seven thematic clusters were identified, with some terms overlapping multiple clusters. Key findings included gambling disorder as the top term in mental disorders, moral injury in negative mental factors, mindfulness therapy in psychotherapy, and computational psychiatry in pharmacology and neurobiology.

While Sokolova et al. used Word2Vec-based term vectors and cosine similarity to map emerging trends in clinical psychology and psychotherapy, the present study differs both in scope and approach, focusing on methodological terminology across empirical psychology more broadly, and leveraging contextual embeddings and clustering to explore semantic groupings.

Finally, study [11] examined positive result reporting in psychotherapy studies by evaluating the classification performance of a fine-tuned SciBERT model and a pre-trained Random Forest model. Using 1978 in-domain and 300 out-of-domain abstracts, the authors compared these models against rule-based benchmarks relying on statistical phrases (e.g., p < 0.05). Abstracts were classified as “positive results only” or “mixed/negative results”, with any negative result placing an abstract in the latter category. A logistic regression model was used as an additional benchmark to control for abstract length bias. SciBERT achieved the highest accuracy (0.86 in-domain, 0.85–0.88 out-of-domain), outperforming Random Forest (0.80 and 0.79–0.83, respectively). After validation, SciBERT was applied to 20,212 PubMed randomized controlled trial abstracts, revealing an increase in positive result reporting from the early 1990s that peaked in the early 2010s, followed by a modest decline and a resurgence in the early 2020s. Moreover, linear regression analysis over two periods (1990–2005 and 2005–2022) identified a significant shift around 2011.

While this study similarly leveraged SciBERT embeddings to examine abstract-level trends, it focused narrowly on positive result reporting within psychotherapy studies using classification models. In contrast, the present study investigates methodological terminology across a broader range of empirical psychology research, applying unsupervised clustering to uncover semantic patterns beyond outcome reporting.

Overall, the present study adopts a broader perspective by examining general methodological terminology across diverse empirical domains within psychology, regardless of subfield. By applying contextualized SciBERT embeddings, still relatively underused in this area, to a large and diverse corpus of over 85,000 abstracts from reputable sources, it offers a scalable and semantically rich approach to understanding trends in method reporting.

3. Materials and Methods

Our study aims to provide a comprehensive overview of the most prevalent methods in psychology publications over the last 30 years, based on how they are represented in a corpus of psychology abstracts.

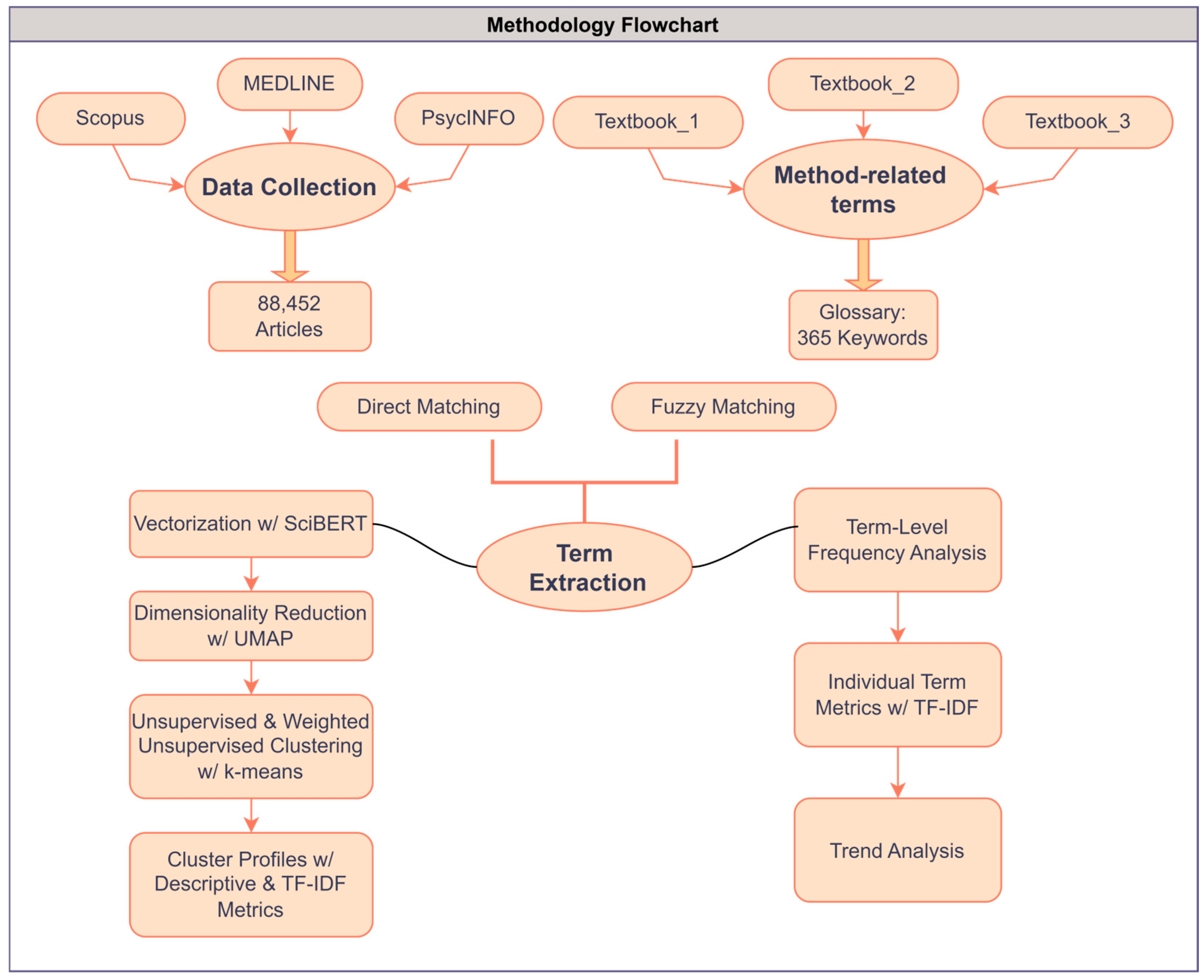

Figure 1 provides a schematic representation of the employed methodology, with the following subsections detailing each presented component.

In the initial stage of the process, we collected the data and curated the glossary. Before proceeding with downstream analyses, we extracted method-related keywords from the retrieved abstracts, using both direct and fuzzy matching. These keywords were then used in two complementary analytical pipelines. In the first, the extracted keywords were vectorized using SciBERT to obtain context-aware embeddings. Dimensionality reduction was performed using UMAP, and the resulting vectors were fed into both unsupervised and weighted (semi-supervised) clustering models based on k-means.

This pipeline concluded with a detailed examination of the resulting clusters, using descriptive statistics, TF-IDF scores, and Jaccard similarity measures. In the second pipeline, the extracted keywords were analyzed directly, without vectorization, using TF-IDF scoring, frequency counts, and trend analysis across the full dataset to provide a broader overview of their weighted distribution and temporal dynamics.
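The cluster-level TF-IDF and Jaccard computations can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the study's implementation: each cluster's extracted terms are treated as one "document", the smoothed IDF formula is the one scikit-learn uses by default, and the function names `tfidf_by_cluster` and `jaccard` are hypothetical.

```python
import math
from collections import Counter


def tfidf_by_cluster(cluster_terms):
    """Score each term within each cluster, treating a cluster's terms as one document.

    cluster_terms: dict mapping a cluster id to the list of extracted terms
    (repeated occurrences included) of the abstracts assigned to it.
    """
    n_docs = len(cluster_terms)
    # Document frequency: the number of clusters in which each term occurs.
    df = Counter(t for terms in cluster_terms.values() for t in set(terms))
    scores = {}
    for cid, terms in cluster_terms.items():
        counts = Counter(terms)
        total = sum(counts.values())
        scores[cid] = {
            # Relative term frequency times smoothed IDF: ln((1 + n) / (1 + df)) + 1,
            # the same IDF formula scikit-learn uses with smooth_idf=True.
            t: (c / total) * (math.log((1 + n_docs) / (1 + df[t])) + 1)
            for t, c in counts.items()
        }
    return scores


def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| between two collections of terms."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


clusters = {0: ["regression", "sample", "sample"], 1: ["sample", "interview"]}
scores = tfidf_by_cluster(clusters)
print(jaccard(clusters[0], clusters[1]))  # 1 shared term out of 3 distinct terms
```

Treating clusters as documents means a term that occurs in every cluster receives the minimum IDF of 1, so generic terms are down-weighted relative to terms that distinguish one cluster from the others.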

3.1. Data Collection

Data were collected from three highly regarded databases in the fields of health, psychology, and behavioral sciences: Elsevier’s Scopus, PubMed’s MEDLINE, and Ovid’s PsycINFO. For the purposes of the current study, the abstracts and other basic metadata of psychology articles indexed in these databases and published between 1995 and 2024 were retrieved. Basic metadata comprised “Title”, “Journal”, “Publication_Year”, “Authors”, “Abstract”, “DOI”, “ISSN”, “Volume”, “Issue”, “Pages”, “Keywords”, “Publication_Type”, and “MeSH_Terms”. The time frame from 1995 to 2024 was deliberately chosen to provide a balanced view of methodological terminology usage in psychology, covering roughly 15 years before and after the publication of Bem’s paper, a pivotal event that intensified scrutiny of replicability in the field.

For retrieving articles from PubMed [19], its publicly available API, Entrez Programming Utilities (E-utilities), was employed [20]. The search term applied was ‘(Psychology [MeSH Major Topic]) AND (“1995/01/01”[Date - Publication]: “2024/08/15”[Date - Publication]) AND (English[Language]) AND (Clinical Trial[pt] OR Randomized Controlled Trial[pt] OR Observational Study[pt] OR Case Report[pt] OR Comparative Study[pt] OR Meta-Analysis[pt])’. This search initially yielded 1578 results.
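As an illustration of how such a query could be submitted through E-utilities, the following sketch builds an ESearch request URL using only the standard library. The function names and the paging default (`retmax=500`) are hypothetical, and the study's own retrieval script is the one in the repository [24]; the date tag is written as `[Date - Publication]`, PubMed's name for the publication-date field.

```python
from urllib.parse import urlencode

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

PUBLICATION_TYPES = [
    "Clinical Trial", "Randomized Controlled Trial", "Observational Study",
    "Case Report", "Comparative Study", "Meta-Analysis",
]


def build_pubmed_query(start="1995/01/01", end="2024/08/15"):
    """Rebuild the Boolean PubMed query reported in the text."""
    types = " OR ".join(f"{pt}[pt]" for pt in PUBLICATION_TYPES)
    return (
        "(Psychology[MeSH Major Topic]) "
        f'AND ("{start}"[Date - Publication] : "{end}"[Date - Publication]) '
        "AND (English[Language]) "
        f"AND ({types})"
    )


def esearch_url(query, retstart=0, retmax=500, api_key=None):
    """URL for one page of matching PMIDs from ESearch, returned as JSON."""
    params = {
        "db": "pubmed",
        "term": query,
        "retstart": retstart,  # offset used for paging through results
        "retmax": retmax,      # PMIDs per page
        "retmode": "json",
    }
    if api_key:  # optional NCBI key; raises the request-rate limit
        params["api_key"] = api_key
    return f"{ESEARCH_URL}?{urlencode(params)}"


url = esearch_url(build_pubmed_query())
# urllib.request.urlopen(url) would return JSON whose "esearchresult"
# holds "count" and "idlist"; abstracts are then fetched with EFetch.
```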

For Elsevier’s Scopus [21], the non-commercial Scopus Search API was used [22]. The query targeted specific psychology journals: ‘((EXACTSRCTITLE (“Psychological Medicine”) OR EXACTSRCTITLE (“Clinical Psychology Review”) OR EXACTSRCTITLE (“Cognitive Therapy and Research”) OR EXACTSRCTITLE (“Psychological Science”) OR EXACTSRCTITLE (“Journal of Clinical Psychology in Medical Settings”) OR EXACTSRCTITLE (“Behavior Research and Therapy”) AND DOCTYPE (ar))’. This query initially returned 30,483 results.

For Ovid’s PsycINFO [23], the Advanced Search feature was employed. ‘Psychology’ was set as a major keyword, and additional filters were applied, including ‘Articles with Abstracts’, ‘APA PsycArticles’, and ‘Original Articles’. The initial query returned 195,192 results.

Moreover, the date range was specified as a separate parameter in the Scopus retrieval script and applied as an additional limit in the PsycINFO search. The selection of Scopus journals and the application of the ‘APA PsycArticles’ and ‘Original Articles’ limits in PsycINFO are aligned with the study’s objectives: the chosen journals are known for publishing empirical studies, and the ‘Original Articles’ limit ensured the exclusion of publication types such as editorials, book reviews, corrections, and reports. For the complete retrieval scripts for PubMed and Scopus, refer to the provided GitHub repository [24].
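The Scopus retrieval, with the date range passed as its own parameter rather than inside the query string, might be prepared as below. This is a hedged standard-library sketch: it assumes the Scopus Search API's `query`, `date`, `start`, and `count` parameters and the `X-ELS-APIKey` header, the key is a placeholder, the helper names are hypothetical, and the explicit parentheses around the journal clauses are added for readability.

```python
from urllib.parse import urlencode
from urllib.request import Request

SCOPUS_SEARCH_URL = "https://api.elsevier.com/content/search/scopus"

JOURNALS = [
    "Psychological Medicine", "Clinical Psychology Review",
    "Cognitive Therapy and Research", "Psychological Science",
    "Journal of Clinical Psychology in Medical Settings",
    # As in the reported query; the journal's own title is spelled "Behaviour".
    "Behavior Research and Therapy",
]


def build_scopus_query(journals=JOURNALS):
    """Journal-restricted query limited to articles (DOCTYPE(ar))."""
    titles = " OR ".join(f'EXACTSRCTITLE("{j}")' for j in journals)
    return f"({titles}) AND DOCTYPE(ar)"


def scopus_request(query, api_key, start=0, count=25, date="1995-2024"):
    """Prepare (but do not send) one page of a Scopus Search API call."""
    params = {
        "query": query,
        "date": date,    # year range passed separately from the query string
        "start": start,  # result offset for paging
        "count": count,  # results per page
    }
    headers = {"X-ELS-APIKey": api_key, "Accept": "application/json"}
    return Request(f"{SCOPUS_SEARCH_URL}?{urlencode(params)}", headers=headers)


req = scopus_request(build_scopus_query(), api_key="YOUR-KEY")
# urllib.request.urlopen(req) would send it; "YOUR-KEY" is a placeholder.
```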

3.2. Data Cleaning and Preprocessing

The initial corpus consisted of a total of 227,253 records. After removing duplicates, as well as records without available abstracts, and outliers, such as editorials and commentaries, the final dataset amounted to 85,452 articles. Due to the qualitative and contextual nature of the data, substituting missing values with generic placeholders or statistical measures would not preserve the integrity of the information. Therefore, instead of attempting imputation, missing values were consistently labeled as “n/a” to indicate the absence of a value without distorting the analysis. Moreover, to further ensure data integrity and consistency, redundant information such as author ranking numbers and boilerplate texts was removed, and data were coded in a consistent format for each feature. Additionally, publication years were validated to confirm they fell within the targeted date range.
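The cleaning rules described above can be sketched in plain Python. The sketch assumes records are dictionaries keyed by the metadata field names listed in the data collection step, deduplicates on DOI (falling back to a whitespace-normalized title), and uses hypothetical publication-type labels to recognize editorials and commentaries; the study's actual duplicate and outlier criteria may differ.

```python
EXCLUDED_TYPES = ("editorial", "comment")  # hypothetical publication-type labels


def _normalize(value):
    """Strip strings and label empty or missing values as "n/a"."""
    if isinstance(value, str):
        value = value.strip()
    return "n/a" if value is None or value == "" else value


def clean_records(records, start_year=1995, end_year=2024):
    """Drop records without abstracts, non-research items, duplicates, and
    out-of-range years; label remaining missing fields as "n/a"."""
    seen, cleaned = set(), []
    for rec in records:
        if not (rec.get("Abstract") or "").strip():
            continue  # no abstract: dropped rather than imputed
        pub_type = (rec.get("Publication_Type") or "").lower()
        if any(t in pub_type for t in EXCLUDED_TYPES):
            continue
        # Duplicate key: DOI if present, otherwise the whitespace-normalized title.
        key = (rec.get("DOI") or "").strip().lower() or " ".join(
            (rec.get("Title") or "").lower().split())
        if key and key in seen:
            continue
        seen.add(key)
        try:
            year = int(rec.get("Publication_Year"))
        except (TypeError, ValueError):
            continue  # unparseable year: fails validation
        if not start_year <= year <= end_year:
            continue
        out = {k: _normalize(v) for k, v in rec.items()}
        out["Publication_Year"] = year
        cleaned.append(out)
    return cleaned
```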

For keyword extraction, three glossaries were drawn from relevant, publicly available psychology textbooks [25,26,27]. Each glossary includes method-related and statistical single- and multi-word entries (hereafter jointly referred to as ‘terms’) commonly encountered in psychological research. These terms were curated to reflect foundational concepts and tools frequently used in psychological methodology and data analysis. By focusing on terms emphasized in educational resources, the glossary aims to capture language that is both central to psychological research and widely recognized within the discipline.

Before the glossaries were merged, duplicates were removed, along with terms referring to ethical guidelines, such as “anonymity” and “APA ethics code”, and generic methodological concepts, such as “heuristics” and “deductive reasoning”. The final glossary consisted of 365 terms, serving as the gold-standard collection for the extraction process. For the complete glossary, refer to the provided GitHub repository [24].
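Merging the three glossaries while discarding duplicates and the excluded categories can be sketched as follows. The case- and whitespace-insensitive comparison is an assumption, the function names are hypothetical, and the input lists are toy examples rather than the study's glossaries.

```python
def normalize(term):
    """Lowercase a term and collapse internal whitespace."""
    return " ".join(term.lower().split())


def merge_glossaries(glossaries, excluded):
    """Merge term lists, dropping duplicates and excluded terms (first-seen order kept)."""
    excluded = {normalize(t) for t in excluded}
    merged, seen = [], set()
    for glossary in glossaries:
        for term in map(normalize, glossary):
            if term in seen or term in excluded:
                continue
            seen.add(term)
            merged.append(term)
    return merged


glossary = merge_glossaries(
    [["Regression", "anonymity"], ["regression", "Effect  size"]],
    excluded=["anonymity", "APA ethics code", "heuristics", "deductive reasoning"],
)
print(glossary)  # ['regression', 'effect size']
```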

After the glossary was finalized, the first preprocessing step converted all glossary terms and the corpus of abstracts to lowercase. Multiword glossary terms appearing in the abstracts were hyphenated using Python (version 3.8.10) and its regular expressions module (re) to preserve their integrity during tokenization. Additionally, the inflect library was used to handle both singular and plural forms of the terms.
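A sketch of the lowercasing and hyphenation step, using only the re module, is given below. As a clearly labeled simplification, plural forms are approximated with an optional "s"/"es" suffix on the final word instead of the inflect library, so irregular plurals (e.g., "case studies") are not covered. Terms are processed longest first so that a longer term such as "repeated measures anova" is not broken up by a shorter term it contains. The function name is hypothetical.

```python
import re


def hyphenate_terms(text, glossary):
    """Lowercase text and join the words of multiword glossary terms with hyphens."""
    text = text.lower()
    multiword = sorted((t.lower() for t in glossary if " " in t), key=len, reverse=True)
    for term in multiword:
        words = term.split()
        # Any run of whitespace between words; optional regular plural on the last word
        # (a simplification: the study handled plurals with the inflect library).
        pattern = r"\b" + r"\s+".join(map(re.escape, words)) + r"(e?s)?\b"
        text = re.sub(pattern, lambda m: "-".join(words) + (m.group(1) or ""), text)
    return text


print(hyphenate_terms(
    "Effect sizes from a Repeated  Measures ANOVA",
    ["effect size", "repeated measures anova", "repeated measures"],
))  # effect-sizes from a repeated-measures-anova
```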

The next preprocessing steps involved stop-word removal and tokenization. The previously hyphenated terms were temporarily replaced by placeholders to ensure that they were treated as single units during tokenization, and were restored to their hyphenated form once tokenization was complete. Moreover, numbers, decimals, and percentages embedded in the text were processed using custom regular expressions, ensuring that numeric values (e.g., 75%, 0.8, =3.14) were correctly identified, extracted, and handled separately from other tokens. The preprocessing steps were implemented with NLTK (version 3.9.1).
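The placeholder-based tokenization and numeric extraction might look like the sketch below. Assumptions, stated plainly: a regular-expression tokenizer and a small stop-word subset stand in for the NLTK components the study used, every hyphenated token (not only glossary terms) is protected, and the numeric pattern is one plausible reading of the custom expressions described above.

```python
import re

# Illustrative stop-word subset; the study used NLTK's resources.
STOP_WORDS = {"a", "an", "and", "in", "of", "on", "or", "the", "to", "was", "were", "with"}

# Integers, decimals, percentages, and "=value" forms such as "=3.14",
# not preceded by a letter, digit, underscore, or dot.
NUMBER_RE = re.compile(r"(?<![\w.])=?\d*\.?\d+%?")
HYPHENATED_RE = re.compile(r"\b\w+(?:-\w+)+\b")


def tokenize(text):
    """Return (tokens, numbers) for lowercased text with hyphenated terms."""
    # 1. Extract numeric values so they are handled apart from word tokens.
    numbers = NUMBER_RE.findall(text)
    text = NUMBER_RE.sub(" ", text)

    # 2. Swap every hyphenated token for a placeholder so tokenization cannot split it.
    protected = {}

    def protect(match):
        key = f"__term{len(protected)}__"
        protected[key] = match.group(0)
        return key

    text = HYPHENATED_RE.sub(protect, text)

    # 3. Word tokenization (a regex stand-in for NLTK's word_tokenize),
    #    then restore hyphenated terms and drop stop words.
    tokens = [protected.get(t, t) for t in re.findall(r"\w+", text)]
    return [t for t in tokens if t not in STOP_WORDS], numbers


print(tokenize("the effect-sizes were large (75%) and p = .05"))
# (['effect-sizes', 'large', 'p'], ['75%', '.05'])
```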

3.3. Term Extraction

For the extraction of the terms from the abstracts, two approaches were combined on the preprocessed text. Specifically, two variants of the final glossary were created, one with hyphenated terms and another with spaced terms. Direct string matching was first applied using the hyphenated glossary, aiming to retrieve terms that had been previously hyphenated. For terms that were not directly matched, fuzzy string matching was applied using the spaced version of the glossary. To account for minor variations, the threshold for fuzzy matching was set at 90%.
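The two-stage matching can be sketched with the standard library's difflib. The text does not name the fuzzy-matching library, so `SequenceMatcher.ratio()` (on a 0 to 1 scale) with a 0.90 cut-off is a stand-in for the 90% threshold, and its scores will not coincide exactly with those of other libraries. Comparing each term with word windows of equal length is likewise an assumption, and `extract_terms` is a hypothetical name.

```python
from difflib import SequenceMatcher


def extract_terms(tokens, glossary, threshold=0.90):
    """Return glossary terms found in a token list.

    tokens: preprocessed tokens in which multiword terms are hyphenated.
    glossary: the spaced variant of the glossary, e.g. "effect size".
    """
    token_set = set(tokens)
    # Word sequence for fuzzy matching, with hyphens expanded back to spaces.
    words = " ".join(t.replace("-", " ") for t in tokens).split()
    found = []
    for term in glossary:
        # 1. Direct match using the hyphenated variant of the term.
        if "-".join(term.split()) in token_set:
            found.append(term)
            continue
        # 2. Fuzzy match against every window with the same number of words.
        n = len(term.split())
        for i in range(len(words) - n + 1):
            candidate = " ".join(words[i:i + n])
            if SequenceMatcher(None, term, candidate).ratio() >= threshold:
                found.append(term)
                break
    return found


tokens = ["effect-sizes", "regresion", "analysis", "large"]
print(extract_terms(tokens, ["effect size", "regression analysis", "anova"]))
# ['effect size', 'regression analysis']
```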

3.4. Term Vectorization

The terms extracted from the abstracts were vectorized before being used in downstream analysis. For this purpose, the SciBERT model was employed. SciBERT is a pre-trained language model designed specifically for scientific text, making it a suitable choice, given the nature of the dataset in the current study. The CLS token is a special token added at the beginning of each input sequence in transformer-based models like SciBERT. The model generates an embedding for this token, called the CLS embedding, that captures a summary representation of the entire input sequence.

CLS embeddings were generated for the extracted terms within the context of their corresponding abstracts. The CLS token is used to represent the entire input sequence (the abstract plus the key term). The embedding corresponding to this token is then used as the representation of the key term within the context of the abstract. For terms that appear multiple times within the same abstract, the average of their embeddings is computed, yielding a single, context-informed representation that captures the term’s overall usage within that abstract. Additionally, to obtain a single embedding for each abstract, the embeddings of all the terms within that abstract are aggregated using mean-pooling. This approach ensures that each abstract is represented by a single, semantically meaningful vector, suitable for downstream clustering and comparative analysis.
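The embedding and aggregation steps might be sketched as follows with the Hugging Face transformers library. Assumptions: the `allenai/scibert_scivocab_uncased` checkpoint, encoding the abstract and the term as a sentence pair with only the abstract truncated to 512 tokens, and the hypothetical helper names. The heavy imports are kept inside `make_scibert_encoder` so that the aggregation logic can be exercised with any stand-in encoder.

```python
from collections import defaultdict

MODEL_NAME = "allenai/scibert_scivocab_uncased"  # assumed SciBERT checkpoint


def make_scibert_encoder(model_name=MODEL_NAME):
    """Return encode(abstract, term) -> CLS embedding as a list of 768 floats."""
    import torch  # local imports: only needed when a real model is used
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name).eval()

    def encode(abstract, term):
        # Input: [CLS] abstract [SEP] term [SEP]; only the abstract is truncated.
        inputs = tokenizer(abstract, term, truncation="only_first",
                           max_length=512, return_tensors="pt")
        with torch.no_grad():
            hidden = model(**inputs).last_hidden_state
        return hidden[0, 0].tolist()  # position 0 holds the CLS token

    return encode


def mean(vectors):
    """Element-wise mean of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def embed_abstract(abstract, term_occurrences, encode):
    """Average each term over its occurrences, then mean-pool across terms."""
    per_term = defaultdict(list)
    for term in term_occurrences:
        per_term[term].append(encode(abstract, term))
    term_vectors = {t: mean(vs) for t, vs in per_term.items()}
    return term_vectors, mean(list(term_vectors.values()))


# Usage with the real model (downloads weights on first call):
# encode = make_scibert_encoder()
# term_vecs, abstract_vec = embed_abstract(text, ["t-test", "anova", "anova"], encode)
```

With this pair encoding, repeated occurrences of a term in one abstract produce identical vectors, so the per-term average only changes the result if occurrences are encoded differently (for example, with a local context window around each occurrence); the sketch keeps the averaging step to mirror the description.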

3.5. Dimensionality Reduction

Averaging does not reduce dimensionality: each SciBERT embedding, whether for a single occurrence or averaged, has 768 dimensions. To address this, UMAP was applied. UMAP not only reduces the dimensionality of the embeddings but also largely preserves the local, and to some extent the global, structure of the data. Additionally, UMAP visualizations provide an initial exploration of the data, which can guide the clustering analysis more efficiently.

UMAP offers a set of parameters that influence clustering results. Before settling on a final UMAP configuration, several parameter settings were examined: specifically, a range of values for the number of neighbors, the minimum distance, the distance metric, and the number of components.

Table 1 summarizes the different tested parameters. The selection of UMAP parameters was based on a heuristic approach that combined qualitative assessment through visual exploration and quantitative evaluation using silhouette scores after clustering.
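The quantitative half of this heuristic amounts to a grid search. The sketch below is illustrative only: the candidate values are the ones that appear in Sections 3.6.2, 4.1, and 4.2 (the full grid is given in Table 1), and `score_fn` is a hypothetical callable that would fit UMAP with a configuration, cluster the reduced embeddings, and return the Silhouette score. The visual-inspection part of the selection is not captured.

```python
from itertools import product
from typing import Any, Callable, Dict, List, Tuple

Config = Dict[str, Any]


def umap_grid(n_neighbors, min_dist, metric, n_components) -> List[Config]:
    """Enumerate every combination of the candidate UMAP settings."""
    return [
        {"n_neighbors": nn, "min_dist": md, "metric": m, "n_components": nc}
        for nn, md, m, nc in product(n_neighbors, min_dist, metric, n_components)
    ]


def best_config(configs: List[Config],
                score_fn: Callable[[Config], float]) -> Tuple[float, Config]:
    """Return (score, config) with the highest score; ties keep the first config."""
    return max(((score_fn(cfg), cfg) for cfg in configs), key=lambda pair: pair[0])


grid = umap_grid([10, 30, 50, 100], [0.1, 0.5], ["cosine"], [10, 50])
print(len(grid))  # 16
```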

3.6. Clustering

To explore latent methodological structures within the abstracts, the context-aware embeddings, reduced in dimensionality via UMAP, were used as input for both an unsupervised and a weighted clustering model based on k-means. In the final phase, a detailed analysis of cluster composition was conducted using descriptive statistics, weighted keyword frequencies, and Jaccard similarity scores.

3.6.1. Unsupervised Clustering Methodology

Among the clustering algorithms considered, k-means was selected for further experimentation due to its simplicity, computational efficiency, and compatibility with embedding-based representations [28]. As with UMAP, its parameterization can significantly impact clustering performance; therefore, several parameters were tested, including the number of clusters, the initialization method, and the maximum number of iterations. These parameters are presented in Table 2.

For the evaluation of clustering performance, the Silhouette score was used as a quantitative metric. Furthermore, the t-distributed Stochastic Neighbor Embedding (t-SNE) technique was employed for visual inspection of the clustering results in high-dimensional data. t-SNE includes parameters that affect visualization quality but do not influence the clustering performance itself. To optimize interpretability, t-SNE settings were tested only on the clustering models with the highest Silhouette scores.

Table 3 presents the tested t-SNE settings.
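For reference, the Silhouette score used throughout averages, over all points, s(i) = (b − a) / max(a, b), where a is the mean distance to the other members of the point's own cluster and b is the smallest mean distance to the members of any other cluster. The plain-Python sketch below computes this quantity with Euclidean distance by default; on real data, scikit-learn's `sklearn.metrics.silhouette_score` computes the same mean and accepts a `metric` argument. The distance used in this study is not stated here.

```python
import math
from typing import Callable, Dict, Hashable, List, Sequence

Point = Sequence[float]


def silhouette_score(points: List[Point], labels: List[Hashable],
                     dist: Callable[[Point, Point], float] = math.dist) -> float:
    """Mean silhouette coefficient; requires at least two clusters.

    Points in singleton clusters get s(i) = 0, as in scikit-learn.
    """
    clusters: Dict[Hashable, List[Point]] = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    scores = []
    for i, (p, lab) in enumerate(zip(points, labels)):
        own = [dist(p, q) for j, (q, other) in enumerate(zip(points, labels))
               if other == lab and j != i]
        if not own:
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # cohesion
        b = min(sum(dist(p, q) for q in members) / len(members)  # nearest other cluster
                for other, members in clusters.items() if other != lab)
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


print(round(silhouette_score([(0, 0), (0, 1), (10, 0), (10, 1)], [0, 0, 1, 1]), 3))  # 0.9
```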

Additionally, outliers were identified for the chosen clustering model, based on two specific criteria: a Silhouette score lower than 0.2 or a distance greater than 2.0 from the cluster centroid. Since the number of outliers (115) was small relative to the dataset size, and no discernible pattern emerged upon manual inspection of their corresponding abstracts, no further corrective measures were applied.
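The two outlier criteria can be expressed as a simple filter. In this sketch, the per-point Silhouette values are assumed to be precomputed (for example with scikit-learn's `sklearn.metrics.silhouette_samples`), and Euclidean distance to the centroid is an assumption, since the section does not name the distance used.

```python
import math
from typing import Dict, Hashable, List, Sequence


def flag_outliers(points: List[Sequence[float]], labels: List[Hashable],
                  centroids: Dict[Hashable, Sequence[float]], silhouettes: List[float],
                  min_silhouette: float = 0.2, max_distance: float = 2.0) -> List[int]:
    """Indices of points with Silhouette < 0.2 or centroid distance > 2.0."""
    flagged = []
    for i, (p, lab, s) in enumerate(zip(points, labels, silhouettes)):
        distance = math.dist(p, centroids[lab])  # Euclidean distance (assumed)
        if s < min_silhouette or distance > max_distance:
            flagged.append(i)
    return flagged
```

Note that both thresholds are strict, so a point lying exactly 2.0 from its centroid with an adequate Silhouette value is not flagged.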

3.6.2. Weighted Unsupervised Clustering Methodology

In addition to the unsupervised clustering approach utilized in this study, a weighted technique was explored to potentially enhance the clustering outcomes. For this purpose, a weighting scheme was developed, assigning varying weights to the glossary terms. The goal of this weighting approach was to guide the clustering algorithm in emphasizing key concepts with high theoretical discriminative value.

Figure A1 in Appendix A depicts the weighting scheme employed for the emphasized terms. The remaining glossary terms were not assigned any additional weight.

The weights were applied at the embedding level. As a first step, averaged term embeddings were calculated. Because SciBERT produces contextual embeddings, the same term initially had a different embedding in each abstract in which it appeared. These embeddings were therefore averaged, i.e., summed and divided by the term's total number of occurrences. After the averaged embedding had been calculated for each term found in an abstract, the weights were applied according to the weighting scheme, and the abstract embeddings were updated based on the weighted, averaged term embeddings.
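The steps above can be sketched as follows, under two assumptions that the text does not fix: the abstract embedding is the mean of the weighted vectors of the distinct terms it contains, and unemphasized terms keep a neutral weight of 1.0. The weight values in the usage example are hypothetical; the actual scheme is the one in Figure A1.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Vector = List[float]


def corpus_term_embeddings(abstracts: List[List[Tuple[str, Vector]]]) -> Dict[str, Vector]:
    """Average every occurrence of a term across the corpus (sum / count)."""
    sums: Dict[str, Vector] = {}
    counts: Dict[str, int] = defaultdict(int)
    for occurrences in abstracts:
        for term, vec in occurrences:
            if term not in sums:
                sums[term] = [0.0] * len(vec)
            sums[term] = [s + x for s, x in zip(sums[term], vec)]
            counts[term] += 1
    return {t: [s / counts[t] for s in sums[t]] for t in sums}


def weighted_abstract_embedding(terms: List[str], term_vectors: Dict[str, Vector],
                                weights: Dict[str, float]) -> Vector:
    """Mean of the weighted, averaged vectors of the distinct terms in one abstract.

    Terms absent from `weights` keep a neutral weight of 1.0 (assumption).
    """
    distinct = list(dict.fromkeys(terms))  # de-duplicate, keep order
    scaled = [[weights.get(t, 1.0) * x for x in term_vectors[t]] for t in distinct]
    return [sum(col) / len(scaled) for col in zip(*scaled)]


corpus = [[("sample", [1.0, 0.0]), ("variable", [0.0, 2.0])], [("sample", [3.0, 0.0])]]
vectors = corpus_term_embeddings(corpus)
print(weighted_abstract_embedding(["sample", "variable"], vectors, {"variable": 2.0}))  # [1.0, 2.0]
```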

The clustering method closely followed the unsupervised approach, with some modifications to the tested parameters. UMAP was again used to reduce embedding dimensionality, but the values n_neighbors = 50 and 100 and n_components = 50 were excluded, and cosine was the only distance metric examined.

Regarding the clustering algorithm, the same parameter values were tested as in the unweighted approach, and the Silhouette score and t-SNE were used for evaluation in the same manner. The decision to test only a subset of the parameters was based on the results of the unsupervised approach.

To further analyze the composition of the clusters, descriptive statistics were calculated. Specifically, the following metrics were computed to gain insights into cluster characteristics: Silhouette coefficients, homogeneity indices, cluster size, total terms, and unique terms per cluster. Unique terms refer to the number of distinct glossary terms found in a cluster.
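Three of these descriptive statistics (cluster size, total terms, and unique terms) can be sketched as below. The input format is an assumption: one list of extracted glossary-term occurrences per abstract, aligned with the cluster labels. Silhouette coefficients and homogeneity indices are not covered.

```python
from typing import Dict, Hashable, List


def cluster_composition(abstract_terms: List[List[str]],
                        labels: List[Hashable]) -> Dict[Hashable, Dict[str, int]]:
    """Size, total term count, and unique glossary-term count per cluster."""
    acc: Dict[Hashable, dict] = {}
    for terms, lab in zip(abstract_terms, labels):
        entry = acc.setdefault(lab, {"size": 0, "total_terms": 0, "unique": set()})
        entry["size"] += 1                  # abstracts in the cluster
        entry["total_terms"] += len(terms)  # every extracted occurrence
        entry["unique"].update(terms)       # distinct glossary terms
    return {
        lab: {"size": e["size"], "total_terms": e["total_terms"],
              "unique_terms": len(e["unique"])}
        for lab, e in acc.items()
    }
```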

Additionally, to assess the importance of each cluster’s terms, TF-IDF scores were computed. A key difference from typical TF-IDF calculations is the use of binary counts instead of word frequencies for the TF component. This means that the TF value is 1 if a term is present in an abstract, and 0 if it is absent. The frequency of term appearance is not considered, so the focus is on whether a term is “present” or “absent” rather than how often it appears. Furthermore, Jaccard similarity scores were calculated, based on the TF-IDF term scores, to measure pairwise similarity between clusters.
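A sketch of the binary TF-IDF and the pairwise cluster similarity follows. Two choices here are assumptions rather than details given in the text: a cluster's score for a term is the mean binary TF-IDF over the cluster's abstracts (IDF = log(N / df) over the whole corpus), and "Jaccard similarity based on TF-IDF scores" is read as the weighted Jaccard index (sum of element-wise minima over sum of element-wise maxima). A set-based Jaccard over each cluster's top-scoring terms would be another plausible reading.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, List

Scores = Dict[str, float]


def binary_idf(abstract_terms: List[List[str]]) -> Scores:
    """IDF = log(N / df), where df counts abstracts containing the term."""
    n = len(abstract_terms)
    df = Counter(t for terms in abstract_terms for t in set(terms))
    return {t: math.log(n / c) for t, c in df.items()}


def cluster_tfidf(abstract_terms: List[List[str]], labels: List[Hashable],
                  idf: Scores) -> Dict[Hashable, Scores]:
    """Mean binary TF-IDF per term within each cluster.

    TF is 1 if the term occurs in an abstract and 0 otherwise, so the mean TF
    over a cluster is the share of its abstracts that mention the term.
    """
    members: Dict[Hashable, List[set]] = defaultdict(list)
    for terms, lab in zip(abstract_terms, labels):
        members[lab].append(set(terms))
    result = {}
    for lab, sets in members.items():
        presence = Counter(t for s in sets for t in s)
        result[lab] = {t: (c / len(sets)) * idf[t] for t, c in presence.items()}
    return result


def weighted_jaccard(a: Scores, b: Scores) -> float:
    """Sum of element-wise minima over sum of element-wise maxima."""
    keys = set(a) | set(b)
    num = sum(min(a.get(k, 0.0), b.get(k, 0.0)) for k in keys)
    den = sum(max(a.get(k, 0.0), b.get(k, 0.0)) for k in keys)
    return num / den if den > 0 else 0.0
```

One consequence of this formulation is worth noting: a term present in every abstract has an IDF of zero and therefore contributes nothing to the similarity between clusters.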

3.7. Terms Frequency and Trend Analyses

To gain an overall perspective of the dataset regarding term occurrences, the corpus of abstracts was analyzed independently of cluster formation. More specifically, we calculated descriptive statistics to summarize the presence and distribution of extracted terms across the abstracts, including the proportion of abstracts containing terms, the average number of terms per abstract, the median, and the standard deviation.
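These summary statistics can be reproduced from a list of per-abstract term counts. The sketch assumes that abstracts without any terms are included (as zeros) in the mean, median, and SD, and uses the sample standard deviation; the section does not specify either choice.

```python
import statistics
from typing import Dict, List


def term_count_summary(term_counts: List[int]) -> Dict[str, float]:
    """Summaries of per-abstract glossary-term counts (zeros included)."""
    with_terms = sum(1 for c in term_counts if c > 0)
    return {
        "share_with_terms": with_terms / len(term_counts),
        "mean": statistics.mean(term_counts),
        "median": statistics.median(term_counts),
        "sd": statistics.stdev(term_counts),  # sample SD (n - 1 denominator)
    }
```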

For the assessment of the overall importance of the retrieved terms and their evolution over time, frequency and trend analyses were conducted. This analysis included calculating the frequencies of individual terms and term pairs, as well as their respective TF-IDF scores. The TF-IDF calculation follows the same binary logic as described for the clusters above; however, the TF term is adjusted based on the total number of abstracts per year.
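One way to read the year-adjusted TF is as the share of a year's abstracts that mention a term, multiplied by a corpus-wide IDF. The sketch below implements that reading; both the normalization and the use of a single corpus-wide IDF are assumptions, not details confirmed by the text.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def yearly_binary_tfidf(records: List[Tuple[int, List[str]]]) -> Dict[int, Dict[str, float]]:
    """records: one (publication_year, extracted_terms) pair per abstract."""
    n_total = len(records)
    df = Counter(t for _, terms in records for t in set(terms))
    idf = {t: math.log(n_total / c) for t, c in df.items()}  # corpus-wide IDF (assumed)
    abstracts_per_year = Counter(year for year, _ in records)
    presence: Dict[int, Counter] = defaultdict(Counter)
    for year, terms in records:
        presence[year].update(set(terms))  # binary: at most 1 per abstract
    return {
        year: {t: (c / abstracts_per_year[year]) * idf[t] for t, c in counts.items()}
        for year, counts in presence.items()
    }
```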

Finally, a trend analysis was conducted to explore temporal variations in term occurrences. Specifically, we analyzed the proportion of abstracts without term presence for each year, calculated as the annual number of abstracts without terms divided by the total number of abstracts for that year.
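The annual proportion defined above is a simple ratio per year. In this minimal sketch, the (year, terms) record format is an assumption made for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def share_without_terms(records: List[Tuple[int, List[str]]]) -> Dict[int, float]:
    """Per year: abstracts with no glossary term / all abstracts of that year."""
    totals: Counter = Counter()
    empty: Counter = Counter()
    for year, terms in records:
        totals[year] += 1
        if not terms:
            empty[year] += 1
    return {year: empty[year] / totals[year] for year in sorted(totals)}


print(share_without_terms([(2010, []), (2010, ["sample"]), (2011, ["survey"])]))  # {2010: 0.5, 2011: 0.0}
```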

4. Results

This section presents the results of our research. For the clustering analysis, we report the tested UMAP configurations, descriptive statistics of the formed clusters, and evaluation metrics, including Silhouette scores and t-SNE visualizations, for both the unsupervised and weighted unsupervised approaches. Regarding the frequency and trend analyses, we provide descriptive statistics on term occurrences, irrespective of cluster formation, along with the corresponding TF-IDF scores. Additionally, we examine temporal trends, including the annual proportion of abstracts without any terms.

4.1. Unsupervised Clustering

The UMAP configuration that yielded the best clustering results, based on the Silhouette score and the inspected UMAP and t-SNE visualizations, had the following parameter settings: 10 neighbors (n_neighbors), 0.1 minimum distance (min_dist), cosine distance metric, and 50 components (n_components).

The best clustering model was based on k-means, which produced six clusters. The parameter settings were random_state set to 42, max_iter at 300, and init_method set to k-means++.

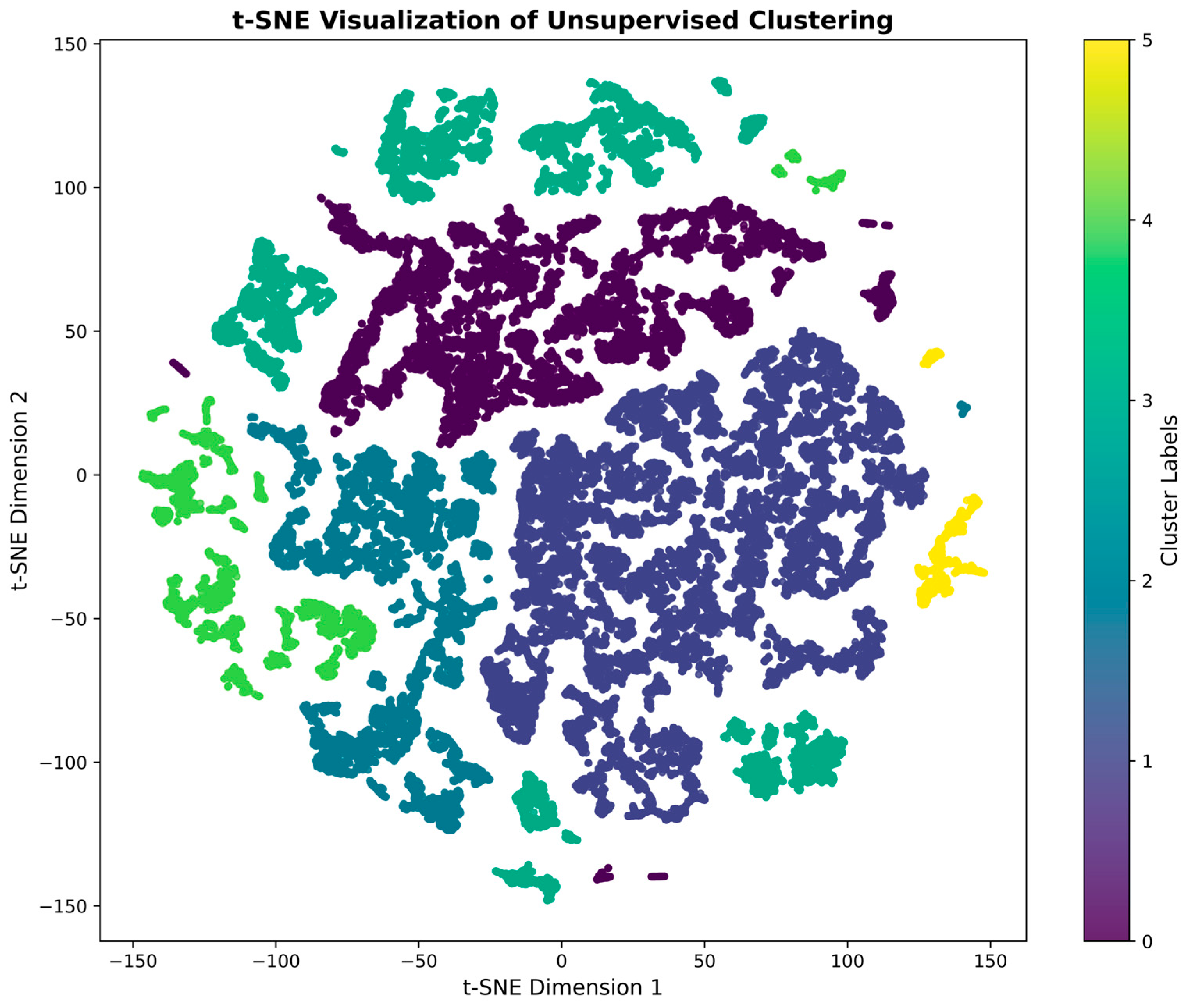

For t-SNE, random_state was again set to 42, perplexity to 40, learning_rate to 300, n_iter to 2000, and early_exaggeration to 18, with cosine as the metric. The selected clustering configuration achieved a Silhouette score of 0.765.

Figure 2 depicts the respective t-SNE visualization. As observed, the resulting clusters varied in size. The number of records in each cluster is presented in Table 4.

Cluster 1 comprises more than one-third of the dataset (37.3%), whereas Clusters 0, 2, and 3 are more evenly distributed in size. In contrast, Clusters 4 and 5 are notably smaller, accounting for just 7.1% and 1.6% of the dataset, respectively. The respective Silhouette coefficients, mean, and Standard Deviation (SD) for each cluster can be found in

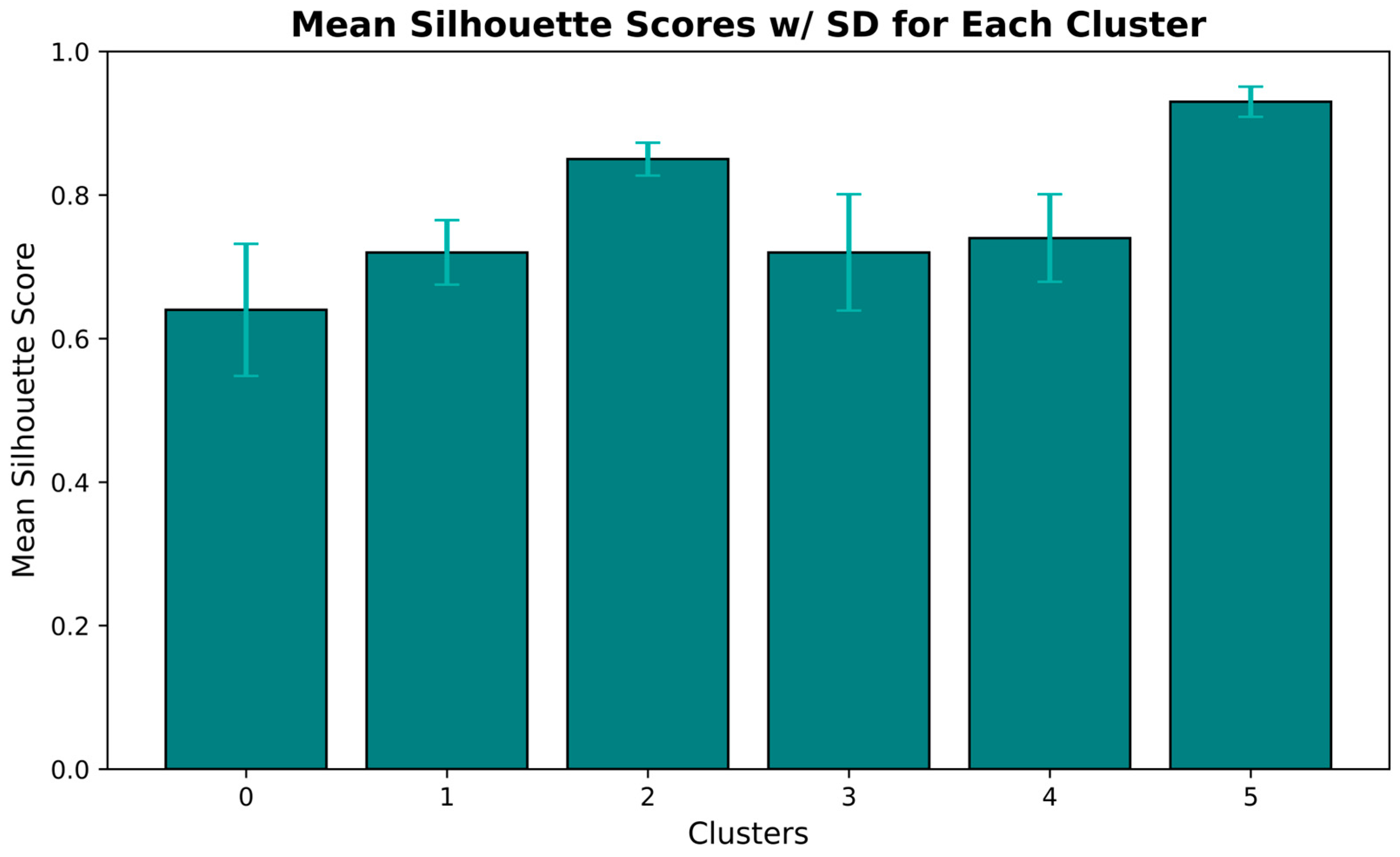

Figure 3.

All clusters have a Silhouette score above 0.6, with cluster 0 having the lowest (0.64), and cluster 5 having the highest (0.93). Furthermore, SD, represented by the respective error bars, is low for all clusters, with cluster 0 having the highest (0.09).

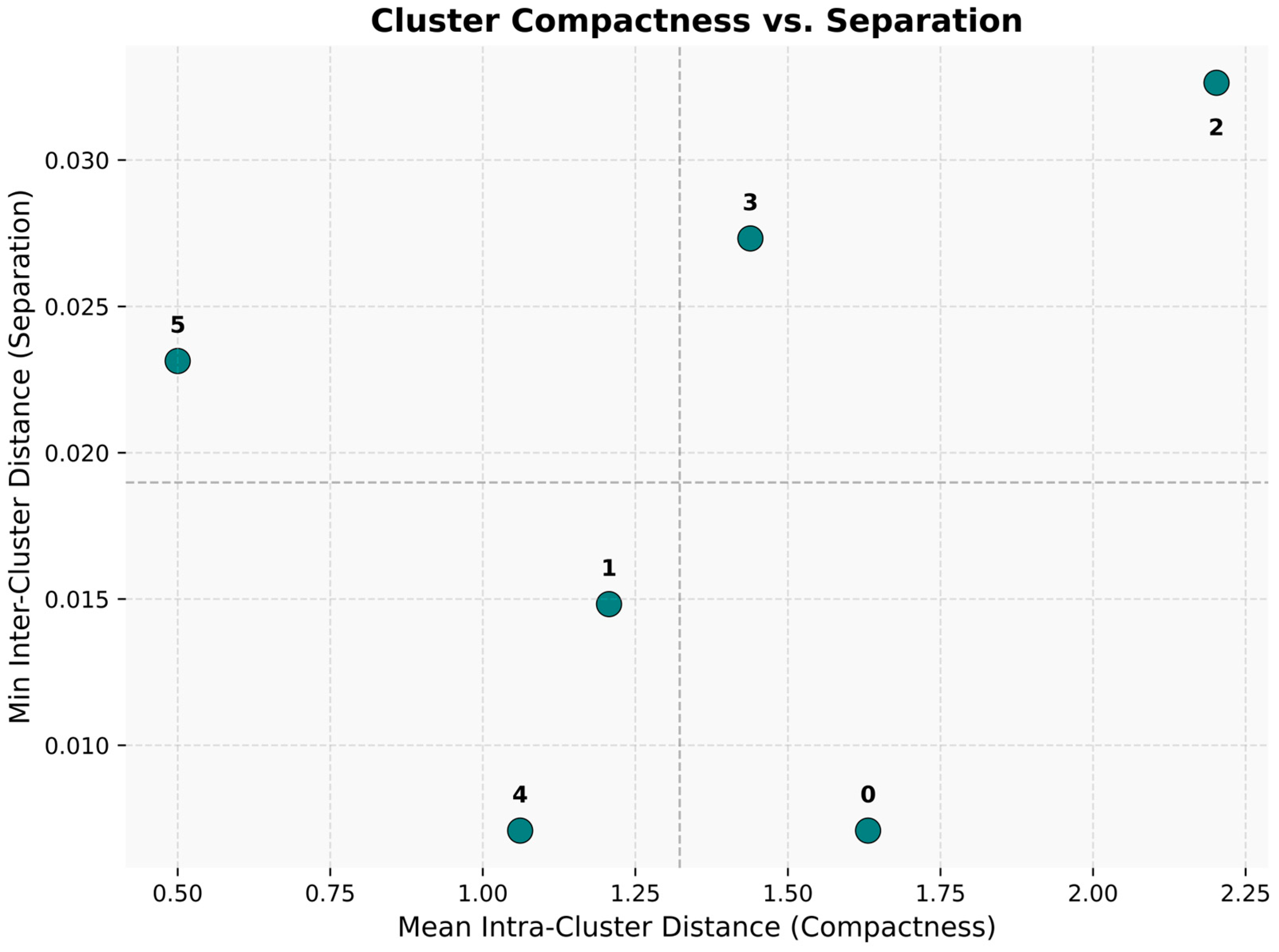

Figure 4 depicts the compactness of each cluster in relation to its separation from other clusters. Compactness is represented by the average distance of points in a cluster to their centroid, while separation is the smallest distance between the centroid of a cluster and any other cluster centroid. Higher values on the x-axis indicate that points are more loosely grouped, while higher values on the y-axis suggest that the cluster is well separated from others.
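The two quantities plotted in Figure 4 follow directly from these definitions. The sketch below assumes Euclidean distances and centroids computed as coordinate means; it needs at least two clusters for separation to be defined.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def compactness_separation(points: List[Sequence[float]],
                           labels: List[Hashable]) -> Dict[Hashable, Tuple[float, float]]:
    """(compactness, separation) per cluster; needs at least two clusters."""
    clusters: Dict[Hashable, List[Sequence[float]]] = defaultdict(list)
    for p, lab in zip(points, labels):
        clusters[lab].append(p)
    centroids = {lab: [sum(col) / len(pts) for col in zip(*pts)]
                 for lab, pts in clusters.items()}
    result = {}
    for lab, pts in clusters.items():
        # Compactness: mean distance of the cluster's points to its centroid.
        compact = sum(math.dist(p, centroids[lab]) for p in pts) / len(pts)
        # Separation: distance from this centroid to the nearest other centroid.
        separation = min(math.dist(centroids[lab], c)
                         for other, c in centroids.items() if other != lab)
        result[lab] = (compact, separation)
    return result
```

In this formulation, a cluster in the lower-right of such a plot would be loose yet isolated, whereas one in the upper-left would be tight and well separated.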

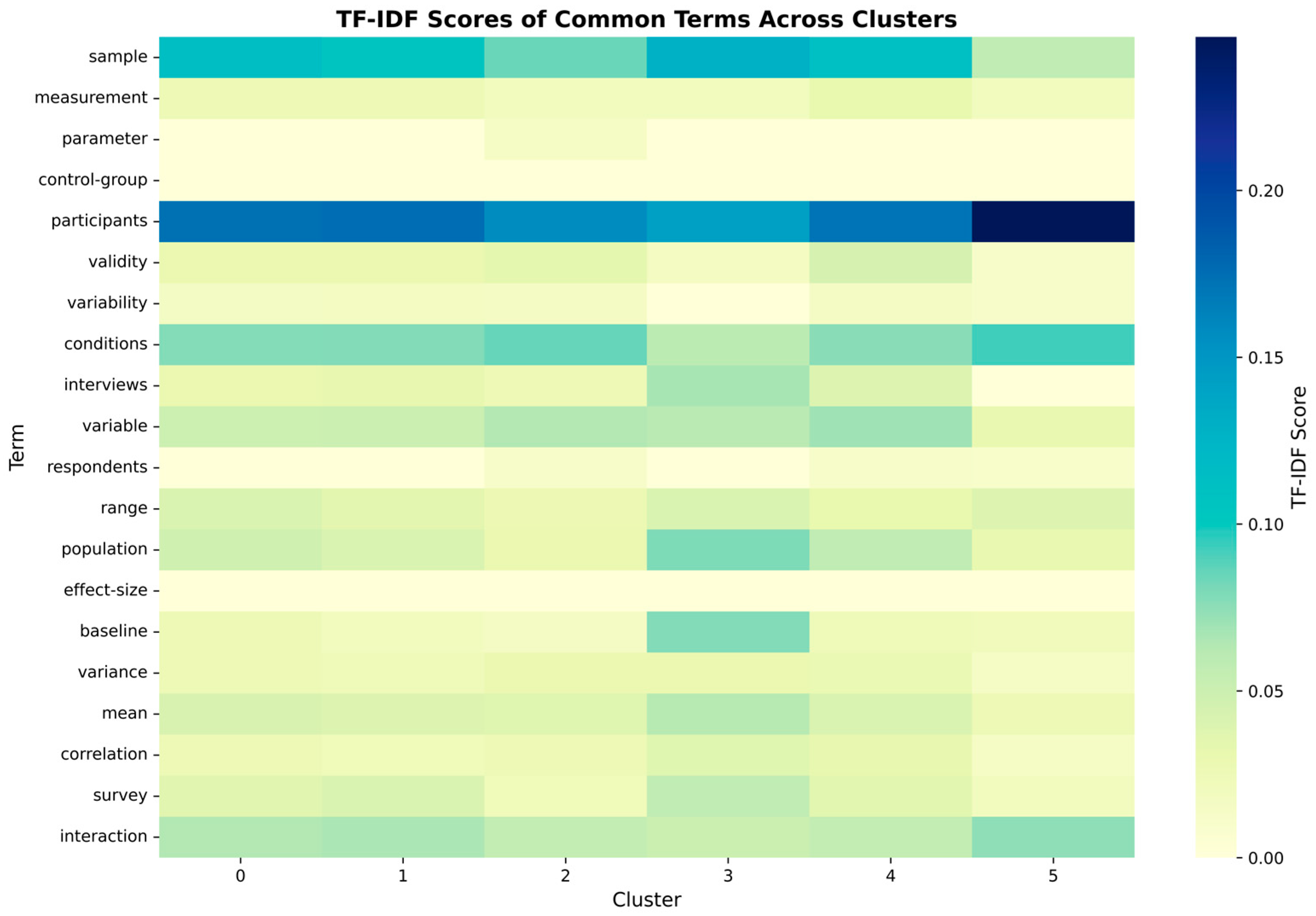

To illustrate the composition of terms within the clusters, Table 5 presents the total number of terms in each cluster, along with the number of unique terms, out of the 365 glossary terms, identified in each one. Despite the variation in total term counts, each cluster draws on only part of the glossary, with the number of unique terms ranging from 102 to 223 (roughly 28% to 61% of the 365 terms). This suggests that, although each cluster contains many term occurrences, the clusters draw on overlapping portions of the glossary, reflecting consistent thematic features across them.

Certain terms are prevalent across all clusters. Figure 5 visualizes the terms that are shared across the top 30 terms of each cluster. Notably, “participants”, “sample”, “conditions”, “interaction”, and “variable” consistently rank among the top terms in every cluster. In support of this, a heatmap was generated to illustrate the overlap of terms among clusters, using their respective Jaccard similarity scores. Figure 6 displays this heatmap.

All cluster pairs not involving cluster 5 exhibit strong similarity, with Jaccard scores ≥ 0.67. The highest similarity score (0.79) was observed between clusters 1 and 0, which is expected given that these two clusters contain the highest numbers of total terms (56,118 and 34,697, respectively) and unique terms (223 and 215, respectively). Conversely, cluster 5 shows the least similarity with all other clusters, which is also expected given its small size and its low numbers of total and unique terms (1927 and 102, respectively).

4.2. Weighted Unsupervised Clustering

The UMAP configuration that yielded the best clustering results, based on the Silhouette score, had the following parameter settings: 30 n_neighbors, 0.5 min_dist, and 10 n_components. The resulting Silhouette score was 0.4598.

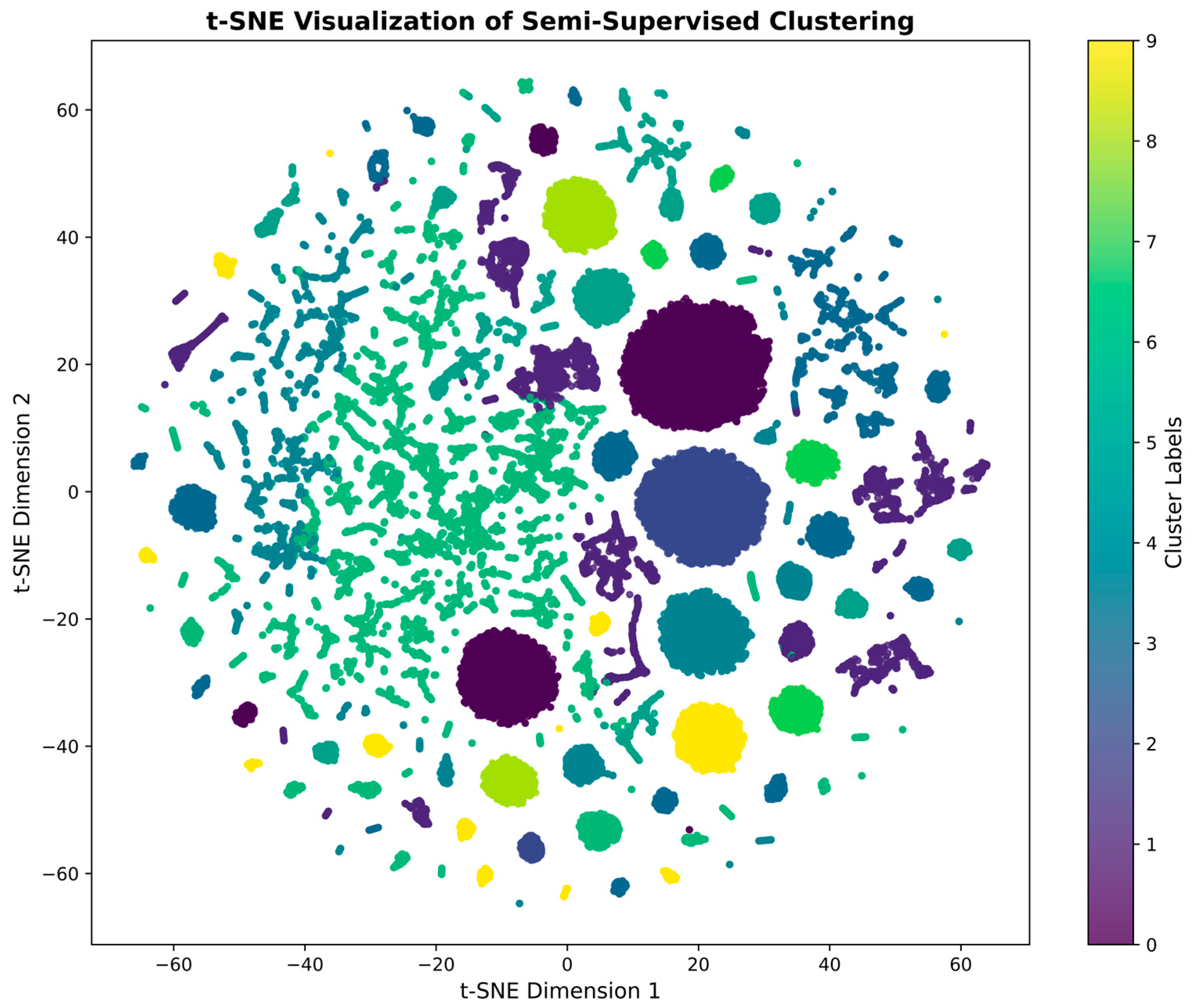

Figure 7 depicts the respective t-SNE visualization.

Ten clusters were identified, varying in size. The number of records in each cluster is presented in Table 6.

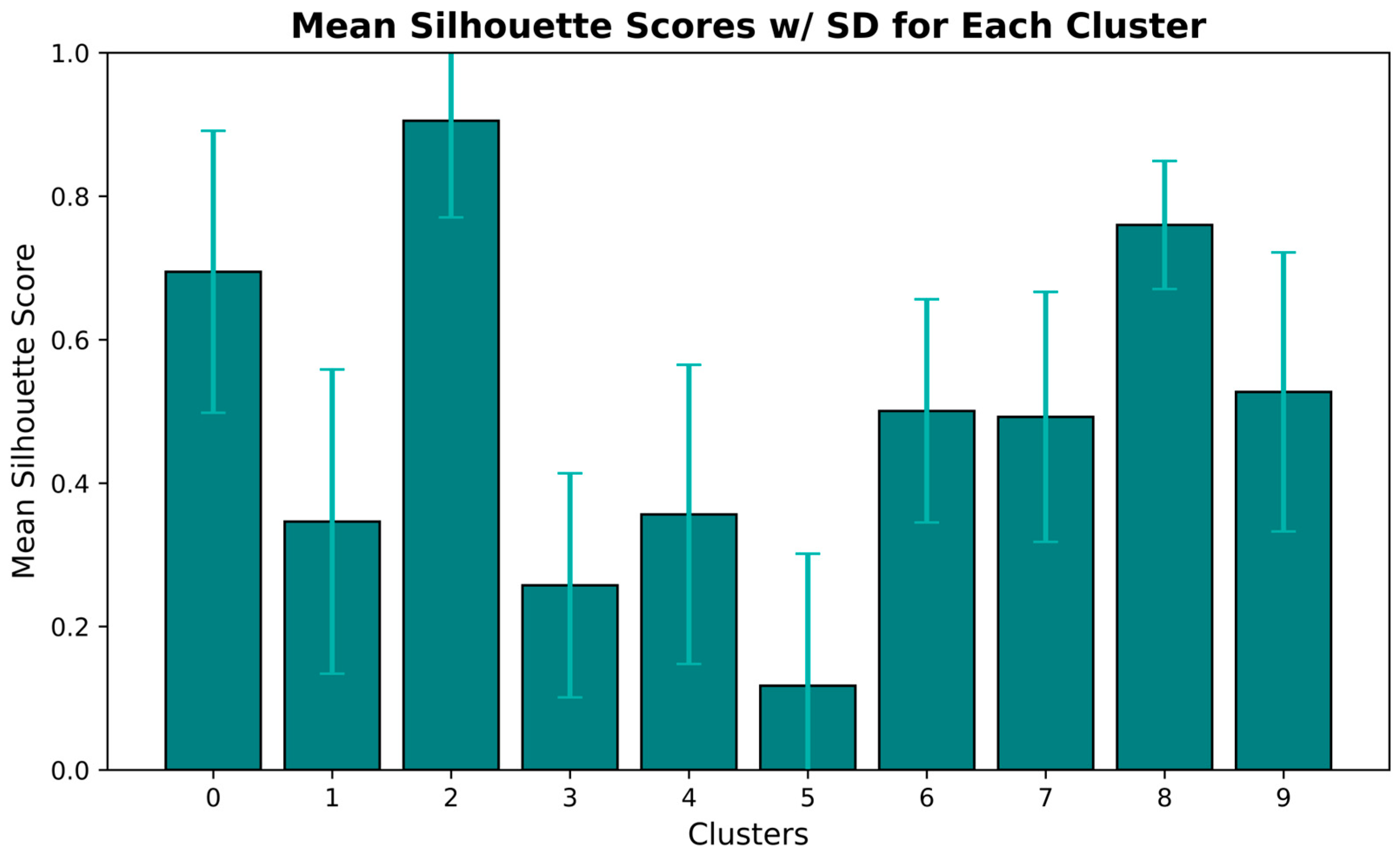

Six of the ten clusters, namely clusters 0 to 5, are comparable in size, each comprising between approximately 6% and 14% of the dataset. Moreover, more than one-quarter of the abstracts (27.1%) are placed in cluster 6. The Silhouette coefficients for each cluster, with their mean and SD, can be found in Figure 8.

Clusters 0, 2, and 8 show adequate Silhouette scores; however, only cluster 8 also has a small SD. To illustrate the composition of terms within the clusters, Table 7 presents the total number of terms in each cluster, along with the unique terms identified in each one.

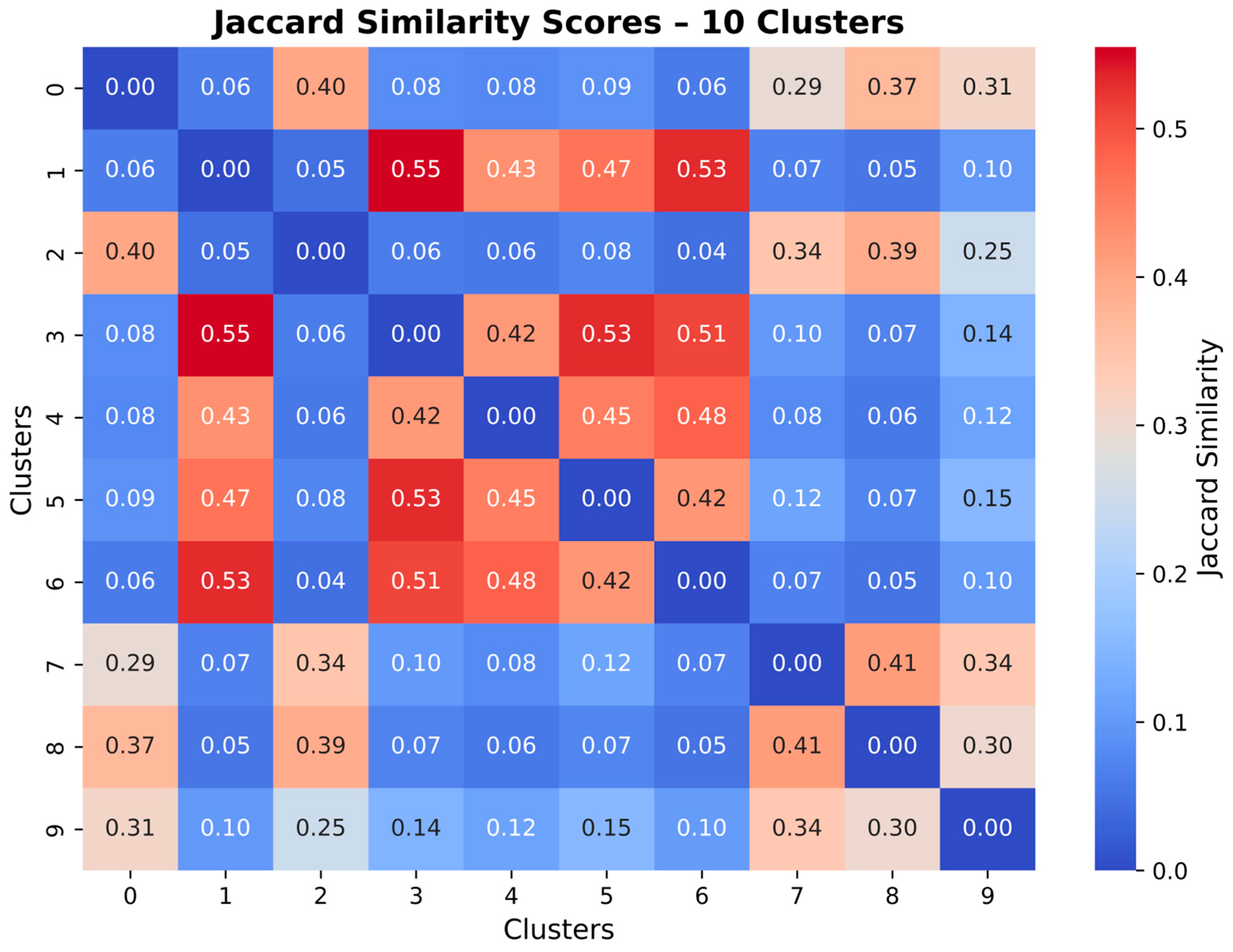

As can be observed, clusters 0, 2, 7, 8, and 9 contain only a small fraction of the glossary terms. In addition, a heatmap was generated to illustrate the term overlap between clusters, using their respective Jaccard similarity scores.

Figure 9 displays this heatmap.

In contrast to the corresponding unweighted results, several clusters exhibit low inter-cluster similarity, with some pairs falling below a similarity score of 0.10. None of the cluster pairs exceeds a similarity score of 0.6, a level commonly exceeded in the unweighted clustering outcomes. Notably, clusters 1, 3, 4, 5, and 6 demonstrate comparatively higher mutual similarity, with scores ≥ 0.42. Cluster 3 stands out as the only cluster with three similarity scores ≥ 0.51, specifically with clusters 1, 5, and 6. On the other hand, clusters 0 and 8 show limited overlap with the rest, each having five pairwise similarity scores ≤ 0.09.

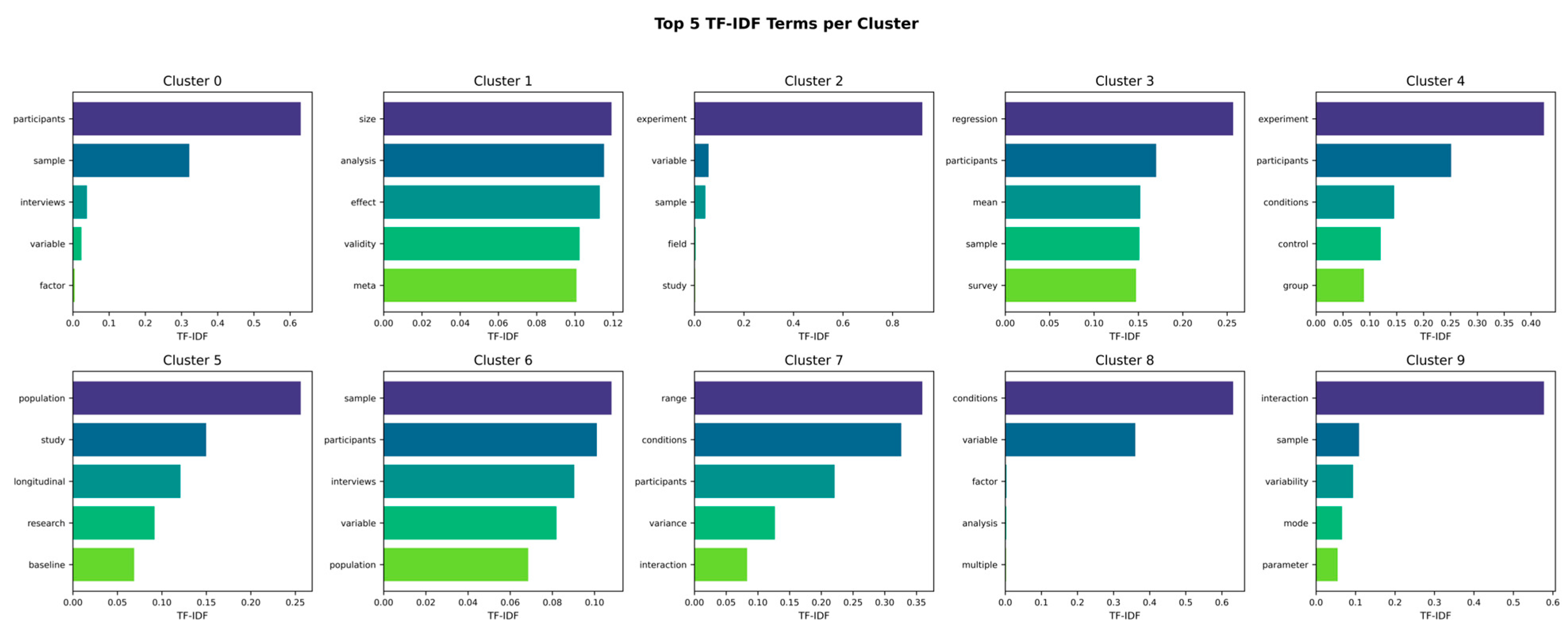

Figure 10 presents the top five terms per cluster.

Notably, clusters 1, 3, and 6 contain terms that are consistently prominent across most abstracts, whereas clusters 0, 2, and 8 feature only a few terms with a significant presence in their respective abstracts.

4.3. Frequency and Trend Analyses

Out of 85,452 abstracts, 66,792 (78.16%) contained glossary terms. On average, each abstract had 1.82 terms, with a median of 1 term per abstract and an SD of 1.62.

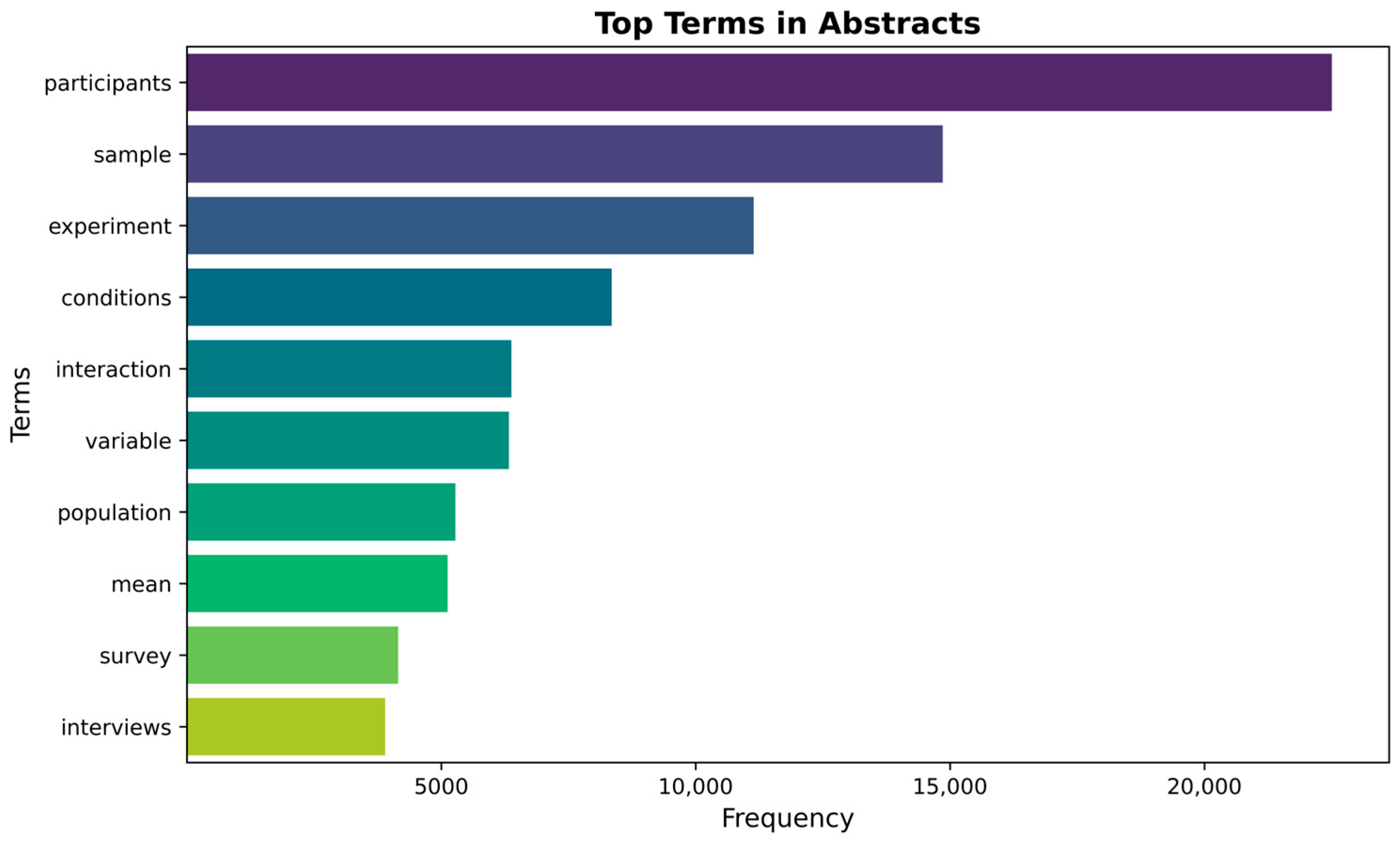

Figure 11 presents the top 10 detected terms. “Sample” and “experiment” were found in more than 10,000 abstracts, and “participants” in more than 20,000. Collectively, the top 10 terms largely reflect foundational aspects of research design and analysis, such as references to participants, variables, and commonly used procedures like surveys or interviews, that frequently appear in quantitative studies.

The calculation of the TF-IDF scores corroborated what was apparent from the term frequencies.

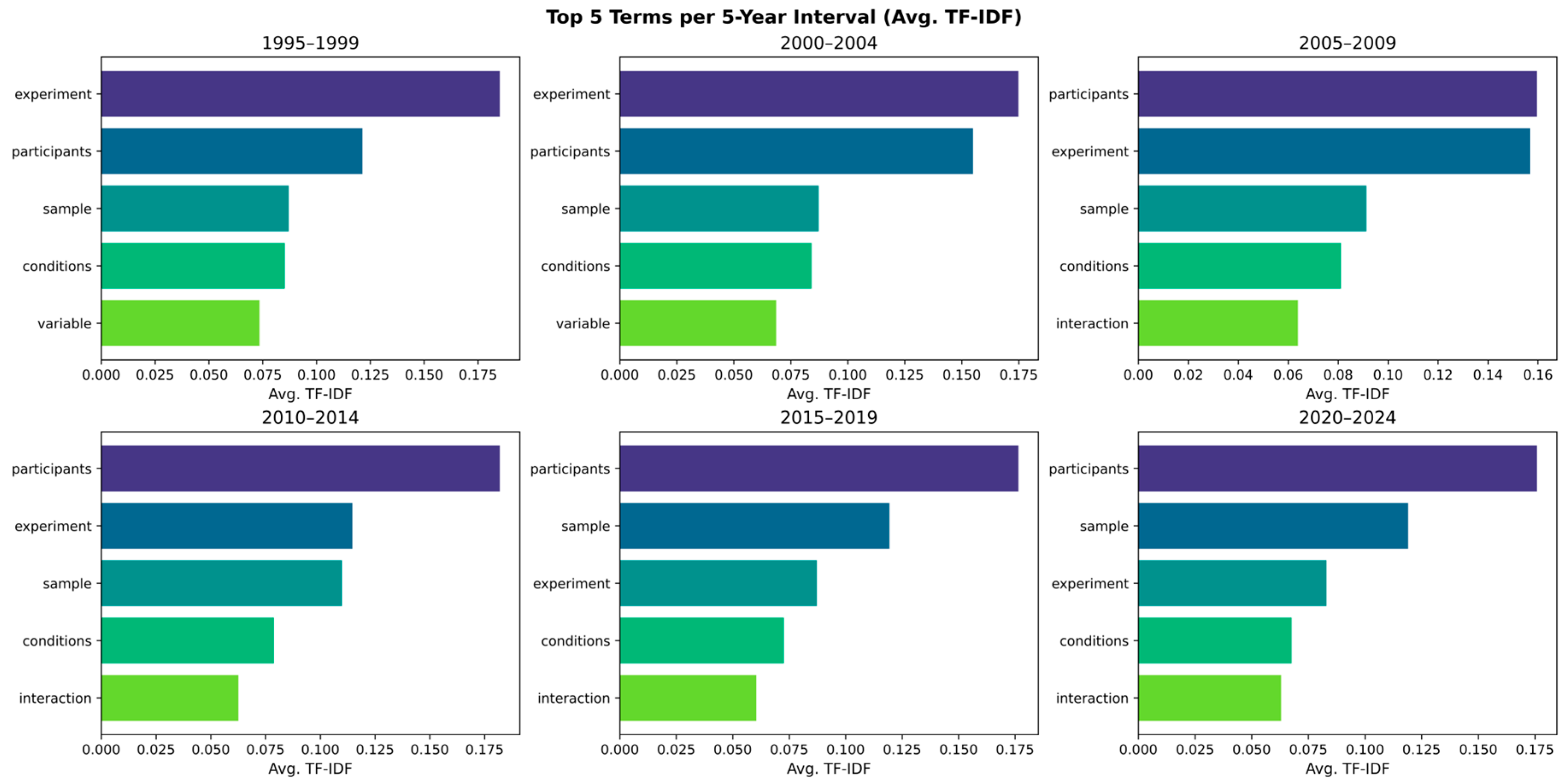

Figure 12 depicts the top five terms per 5-year window. TF-IDF scores have been averaged for each term within each respective time period.

As can be observed, the same five terms are the most prevalent ones across the span of 30 years. This pattern suggests that, while emphasis varies at the margins, the central concepts represented by these terms have maintained a consistent presence in the literature.
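The windowing behind Figure 12 can be sketched as follows. The first year of the analysis is left as a parameter because it is not restated here, and averaging each term only over the years of a window in which it has a score is an assumption; treating missing years as zero would be an equally plausible alternative.

```python
from collections import defaultdict
from typing import Dict, List


def window_averages(yearly: Dict[int, Dict[str, float]], start: int,
                    width: int = 5) -> Dict[int, Dict[str, float]]:
    """Average each term's yearly scores within consecutive `width`-year windows.

    Windows are keyed by their first year; a term is averaged over the years
    of the window in which it has a score (assumption).
    """
    buckets: Dict[int, Dict[str, List[float]]] = defaultdict(lambda: defaultdict(list))
    for year, scores in yearly.items():
        window = start + ((year - start) // width) * width
        for term, score in scores.items():
            buckets[window][term].append(score)
    return {w: {t: sum(v) / len(v) for t, v in terms.items()} for w, terms in buckets.items()}


def top_terms(scores: Dict[str, float], k: int = 5) -> List[str]:
    """The k highest-scoring terms, best first."""
    return sorted(scores, key=scores.get, reverse=True)[:k]
```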

Finally,

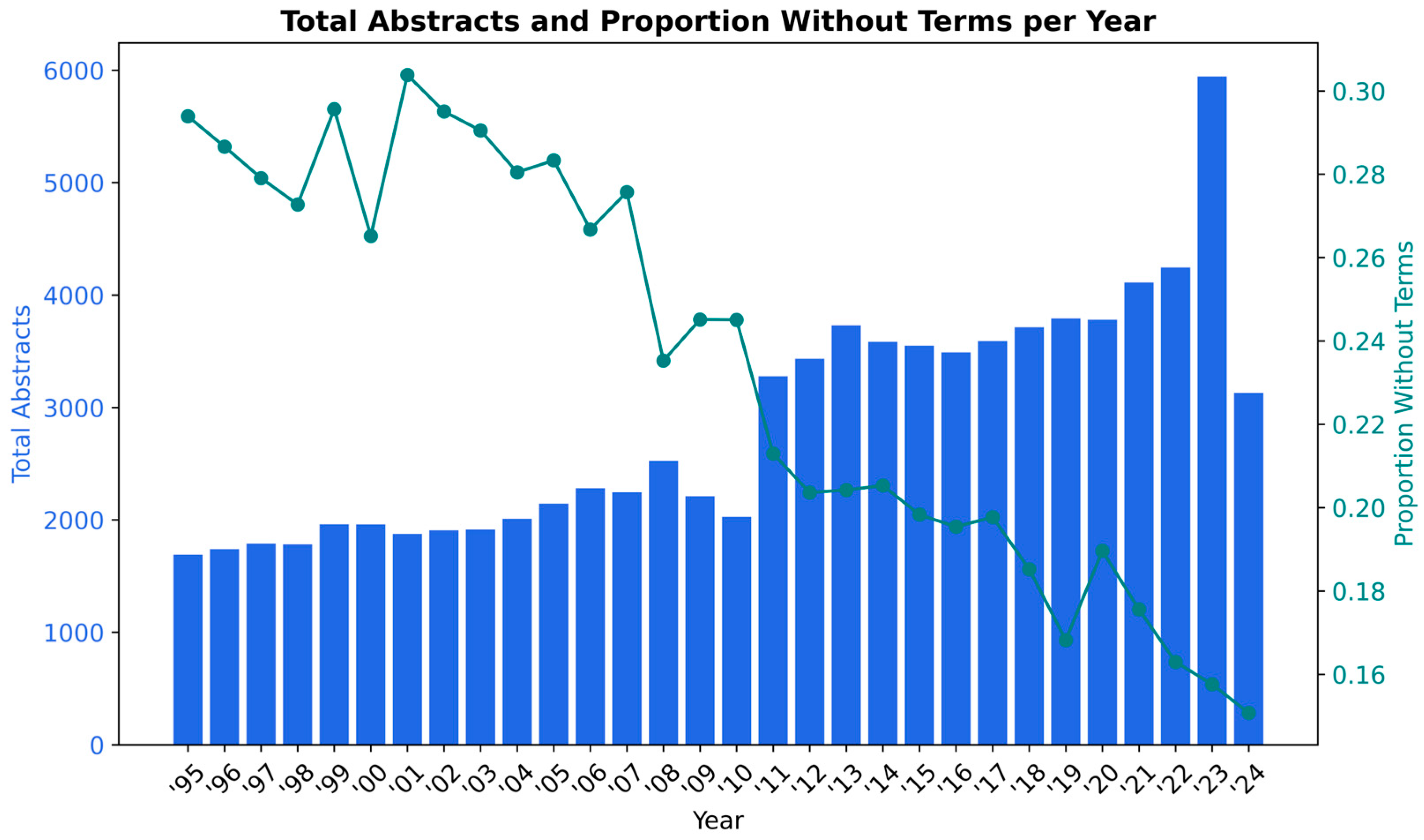

Figure 13 presents the normalized proportion of abstracts per year that contain no detected glossary terms. A clear downward trend is evident, suggesting that over time, methodological terminology has become increasingly embedded in the language of psychology abstracts. Notably, even in 2024, despite the data covering only part of the year, the proportion of abstracts containing terms already exceeds levels seen in years prior to 2011. This pattern points to a growing emphasis on methodological transparency or standardization in psychological reporting practices over the past decade.

5. Discussion

This study investigated methodological terminology in psychology research by analyzing a large corpus of over 85,000 abstracts published over the past 30 years. We applied a comprehensive NLP and clustering pipeline to examine the evolving frequency of method-related terms, which represent established methodological and statistical concepts commonly recognized in the field, and to explore potential latent methodological groupings based on term usage patterns.

A clear trend was observed: terms from the curated methodological glossary have appeared with increasing frequency over time. While this upward trend suggests a growing prominence of methodological discourse in psychological research, frequency alone does not reveal how these terms function in relation to one another. It remains unclear whether this increase reflects more diverse methodological approaches, tighter standardization, or shifts in reporting conventions.

To investigate the underlying structure of this language, we applied clustering techniques to examine whether method-related terms exhibit coherent semantic groupings when analyzed in a high-dimensional embedding space. Using both unsupervised and weighted unsupervised approaches with SciBERT-based term embeddings, we aimed to identify latent methodological structures. However, the resulting clusters showed substantial overlap, with high-frequency, generic terms appearing across multiple groups. Even when weighting strategies were applied to suppress the influence of generic terms, the clusters remained difficult to interpret thematically. While this outcome limits interpretability in terms of distinct methodological categories, it nonetheless reveals an important insight: the methodological language used in psychology abstracts is often diffuse, with shared terminology cutting across various research designs and analytic strategies.

This linguistic entanglement highlights a fundamental challenge in capturing methodological specificity from abstracts alone, while also demonstrating the potential of embedding-based approaches to reveal such diffuse patterns. Notably, our approach diverges from prior topic modeling and trend analyses in psychology and related domains in both scope and design. To the best of our knowledge, no prior studies have applied this combination of techniques to examine methodological language in abstracts across the full breadth of psychology research. As such, direct methodological performance comparisons with previous work are not straightforward, given the different research aims, input representations, and clustering objectives.

Compared to prior studies that focused on narrower subfields, such as clinical psychology [18] or positive reporting [11], and employed dynamic keyword extraction from the corpus itself, this study relied on a curated glossary as a fixed reference point. This design choice enabled a targeted examination of widely used method-related terms across psychology research, providing a stable framework to track their presence and evolution over time. At the same time, the reliance on a predefined lexicon may have limited the detection of emerging or context-specific terminology better captured by adaptive term-mining techniques. These findings suggest a potential need in the field to develop more comprehensive, curated glossaries that balance expert knowledge with data-driven discovery. Additionally, unlike prior work where expert filtering occurred after corpus-based term extraction, this study front-loaded expert input via the gold-standard glossary, which may have influenced the semantic landscape used in clustering.

Another possible factor underlying the indistinct cluster formation is the nature of psychological abstracts themselves. While the frequency analysis showed an overall increase in method-related terminology, many abstracts still lack the density or specificity of methodological detail needed to enable fine-grained semantic differentiation. For example, terms that would clearly signal a longitudinal design, such as “multiple time points”, “repeated measures ANOVA”, or “attrition”, appear only sporadically, limiting the ability of embeddings to form coherent methodological profiles. These reporting limitations further complicate the semantic modeling of methodological terminology, motivating the exploration of advanced embedding techniques.

The use of contextualized embeddings generated by SciBERT to represent method-related terms across abstracts constitutes, to our knowledge, a novel application within psychological text analysis. By leveraging SciBERT’s scientific domain pre-training, the approach aimed to capture nuanced meanings of methodological terminology in context, moving beyond traditional frequency-based or static embeddings. This application illustrates the potential of leveraging advanced language models, like SciBERT, to capture semantic properties of methodological terms in scientific text, laying groundwork for further exploration in the automated analysis of research language.

Taken together, this work contributes to the growing field of meta-research by demonstrating how NLP techniques can be used not only to track trends in methodological language, but also to assess the clarity and structure of its communication. By focusing on abstracts, widely available yet often inconsistently detailed summaries, this study highlights both the potential and current limitations of relying on such texts for automated analysis of research practices. These limitations include the sparsity and variability of methodological detail in abstracts, which challenge the ability of embeddings to capture fine-grained semantic distinctions. Nonetheless, our use of contextualized embeddings highlights how such techniques can surface underlying patterns in language use, even when explicit methodological information is inconsistently reported.

The methodological pipeline developed here, particularly the clustering of averaged contextual embeddings, offers a foundation for scalable, discipline-agnostic tools that support research synthesis and knowledge discovery. While rooted in psychology, the approach may be adapted across domains to improve how methodological information is surfaced and organized, reinforcing the broader push for transparency, standardization, and machine-readable scientific communication. By combining expert-curated glossaries with SciBERT-based contextual embeddings and dual-mode clustering, the process followed goes beyond standard keyword-based approaches, offering a more flexible and semantically sensitive alternative. Moreover, by substituting the curated glossary with domain-specific vocabularies, this approach could be readily adapted to other fields such as medicine or economics, enabling similar analyses of methodological language and reporting practices in those disciplines. For instance, in biomedical research, dynamic tracking of terms related to clinical trial design or statistical rigor could inform real-time assessments of reporting standards. Likewise, in economics, examining shifts in methodological emphasis (e.g., experimental vs. observational methods) over time could support systematic reviews of research trends. More broadly, the pipeline could serve as a foundation for automated meta-analyses by identifying semantically coherent groupings of studies based on method usage, or as part of real-time manuscript screening tools to monitor methodological transparency and rigor during peer review.

Limitations

As with all scientific research, this study has certain limitations that merit acknowledgment. First, the use of a fixed, curated glossary, while intentionally designed to capture widely used methodological terms in psychology, may have limited the discovery of emerging or more nuanced terminology that might be identified through adaptive, data-driven term extraction methods. Second, the selection of parameter values for UMAP and k-means was not exhaustive; choices were guided by empirical testing and visual inspection but did not involve a full-scale hyperparameter optimization. Additionally, the inclusion of other metadata could potentially enhance cluster interpretability and help refine the identification of thematic groupings. Finally, while this study focused on specific statistical, clustering, and dimensionality reduction techniques, alternative topic modeling and clustering methods, such as LDA, hierarchical clustering, or density-based approaches, could provide complementary insights. Exploring such avenues is encouraged in future research.

6. Conclusions and Future Work

This study sheds light on the evolving presence and semantic landscape of methodological terminology in psychology research, offering an updated perspective on how method-related language is employed in scientific abstracts. Using a curated glossary and contextual embeddings from a scientific language model, we generated average representations for terms based on their usage across a large corpus. These averaged embeddings were analyzed through both unsupervised and weighted clustering approaches to explore potential semantic groupings.

While the observed increase in method-related terminology over time, evidenced by 78.16% of the examined abstracts containing glossary terms and an observable rise after 2011, reflects a growing emphasis on transparency and standardization, the clustering outcomes revealed limited semantic coherence, underscoring the challenges of modeling diffuse, domain-specific language. These findings also highlight the dispersed and variable nature of methodological language in psychology and the difficulty that current clustering methods face in capturing its underlying structure.

Future studies could build on this work by expanding the curated glossary through a hybrid approach that blends expert input with data-driven term extraction. Methods like embedding-based similarity searches and syntactic pattern recognition could support dynamic term mining, helping to surface emerging or context-specific methodological terms. These additions could also contribute to ontology enrichment, broadening structured vocabularies in psychology and enhancing the depth of subsequent analyses.

Improvements could also come from fine-tuning or domain-adapting pre-trained models like SciBERT on psychology-specific corpora, allowing embeddings to better capture the nuances of methodological language. On the clustering side, exploring alternatives such as hierarchical models, graph-based clustering, or co-occurrence networks may lead to more interpretable and thematically coherent groupings. Finally, comparing results against established topic modeling techniques, such as LDA or pLSA, could offer valuable benchmarks and further validate the effectiveness of the current approach.

Nevertheless, this flexible pipeline, including the aggregation of contextual embeddings to capture term-level meaning, lays a strong foundation for future meta-research. Overall, the proposed pipeline offers a generalizable approach for semantic analysis of scientific reporting, with potential applications in meta-research, automated quality assessment, and domain-specific guideline development.