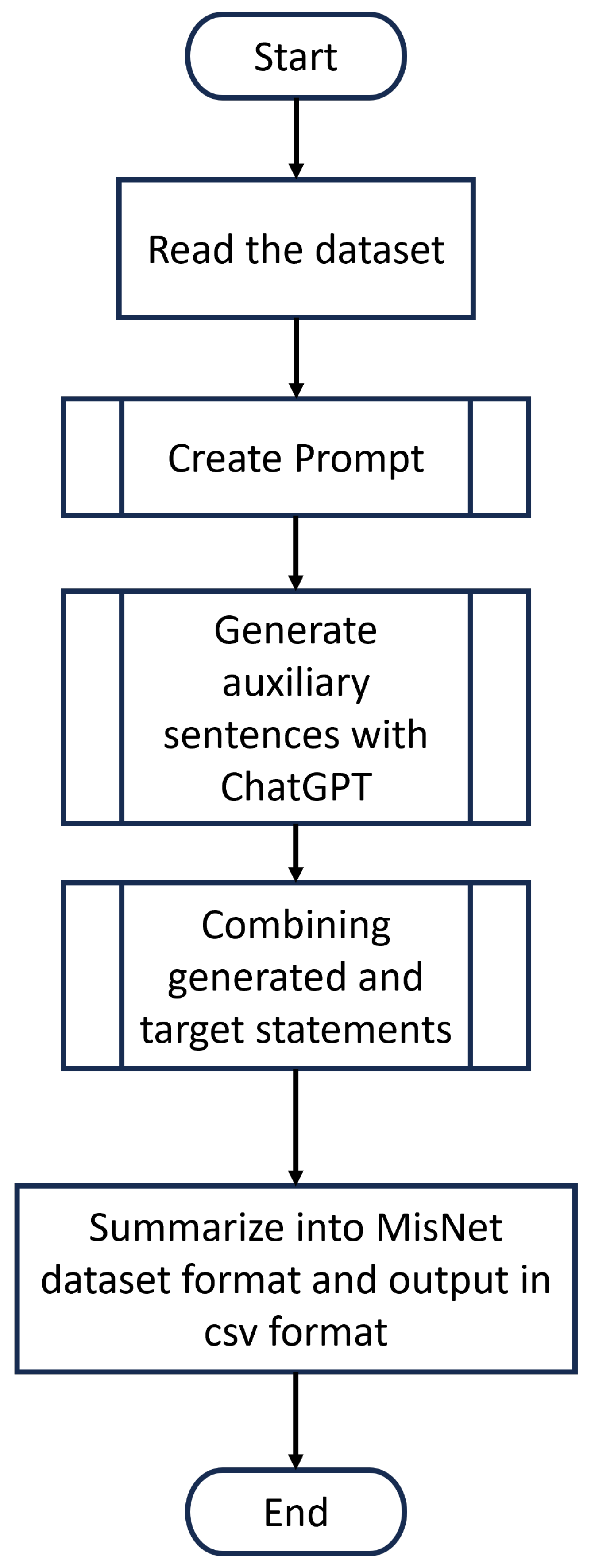

Figure 1.

Flowchart for Creating a Context-Enriched Dataset.

Figure 2.

Flowcharts for two auxiliary context strategies: (a) prior context and (b) following context.

Figure 3.

Flowchart: Auxiliary Context (Prior + Following).
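The three strategies differ only in where the generated sentence is placed relative to the target sentence. Below is a minimal sketch, assuming the auxiliary sentence is simply joined to the target with a single space. `build_enriched_text` is a hypothetical helper, not part of MisNet, and how MisNet actually consumes the extra text through its `use_context` option is not specified here. Because prepending a prior sentence shifts a word-level target index, the helper also returns the number of tokens placed before the target.

```python
from typing import Optional, Tuple


def build_enriched_text(target: str,
                        prior: Optional[str] = None,
                        following: Optional[str] = None) -> Tuple[str, int]:
    """Join generated auxiliary sentences around the target sentence.

    prior only -> prior context; following only -> following context;
    both -> prior + following. Also returns how many whitespace tokens
    were placed before the target, so a word-level target index can be
    shifted by that amount.
    """
    before = prior.strip() if prior else ""
    after = following.strip() if following else ""
    pieces = [p for p in (before, target.strip(), after) if p]
    return " ".join(pieces), len(before.split())


text, shift = build_enriched_text("His words were sharp.",
                                  prior="Their debate sliced like blades.")
print(text)   # Their debate sliced like blades. His words were sharp.
print(shift)  # 5
```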

Figure 4.

Example dataset from MisNet (from VUA_All).

Table 1.

Computational environment used for auxiliary sentence generation.

| Item | Value |

|---|---|

| CPU | Intel Core i7-10510U @1.80–2.30 GHz |

| RAM | 8 GB (7.77 GB usable) |

| GPU | Intel UHD Graphics (unused) |

| OS | Windows 11 Home 22H2 |

| Python | 3.8.8 |

| OpenAI SDK | 1.2.3 |

| pandas | 2.0.1 |

| Date(s) run | 2025-01-15 to 2025-01-20 JST |

Table 2.

API configuration used for auxiliary sentence generation.

| Parameter | Value |

|---|---|

| Model | gpt-3.5-turbo |

| Temperature | 1.0 |

| Top-p | 1.0 |

| Top-k | Not applicable (OpenAI API) |

| Max tokens (response) | 64 (sufficient for 10-word outputs) |

| Stop sequences | Newline, EOS token |

| Seed | Not fixed (non-deterministic) |

| API library | openai v1.2.3 (Python) |

| Date range of generation | 2025-01-15 to 2025-01-20 JST |

| Hardware | See Table 1 |
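A minimal sketch of one request under the settings above, assuming the v1 interface of the `openai` Python SDK (`OpenAI().chat.completions.create`) and a single user message per prompt. The helper names `build_request` and `generate_auxiliary_sentence` are hypothetical. Keeping parameter construction separate from the network call lets the configuration be checked offline.

```python
from typing import Any, Dict

# Table 2 settings. No seed is passed, matching the non-deterministic setup;
# top-k is not exposed by this API, so it does not appear.
GENERATION_PARAMS: Dict[str, Any] = {
    "model": "gpt-3.5-turbo",
    "temperature": 1.0,
    "top_p": 1.0,
    "max_tokens": 64,
    "stop": ["\n"],  # the model's own end-of-sequence token needs no entry
}


def build_request(prompt: str) -> Dict[str, Any]:
    """Keyword arguments for one Chat Completions call (single user turn)."""
    return {**GENERATION_PARAMS,
            "messages": [{"role": "user", "content": prompt}]}


def generate_auxiliary_sentence(prompt: str, client: Any = None) -> str:
    """Send one prompt and return the reply text with whitespace stripped."""
    if client is None:
        from openai import OpenAI  # lazy import; reads OPENAI_API_KEY from the env
        client = OpenAI()
    response = client.chat.completions.create(**build_request(prompt))
    return (response.choices[0].message.content or "").strip()
```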

Table 3.

Complete prompt templates and instantiations used to elicit auxiliary context from ChatGPT. Variables: N (target word budget), pos ∈ {precede, follow}, s (target sentence), w (target word), m ∈ {ε, not} encodes the metaphor label, where ε denotes the empty string; Ex1 and Ex2 are the in-context example sentences used in Prompt 2. Examples are shown for both metaphorical and literal cases. Line breaks are added here for readability; actual API calls used single strings.

| Prompt 1 Template | Prompt 2 Template |

|---|---|

| Generate a N-word sentence that pos “s” and in which ‘w’ in “s” is m used as a metaphor. | ‘w’ in “Ex1” is used as a metaphor. ‘w’ in “Ex2” is not used as a metaphor. Generate a N-word sentence that pos “s” and in which ‘w’ in “s” is m used as a metaphor. |

| Metaphor Case Example (N = 5, pos = precede, w = sharp, s = His words were sharp.) |

| Generate a 5-word sentence that precedes “His words were sharp.” and in which ‘sharp’ in “His words were sharp.” is used as a metaphor. | ‘sharp’ in “Her criticism cut deep.” is used as a metaphor. ‘sharp’ in “The knife is sharp.” is not used as a metaphor. Generate a 5-word sentence that precedes “His words were sharp.” and in which ‘sharp’ in “His words were sharp.” is used as a metaphor. |

| Sample output: Their debate sliced like blades. |

| Literal Case Example (N = 5, pos = follow, w = absorb, s = He will absorb water.) |

| Generate a 5-word sentence that follows “He will absorb water.” and in which ‘absorb’ in “He will absorb water.” is not used as a metaphor. | ‘absorb’ in “The sponge absorbed juice.” is not used as a metaphor. ‘absorb’ in “She absorbed the culture.” is used as a metaphor. Generate a 5-word sentence that follows “He will absorb water.” and in which ‘absorb’ in “He will absorb water.” is not used as a metaphor. |

| Sample output: The towel soaked it quickly. |
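The templates can be instantiated mechanically. The sketch below reproduces the instantiations above, assuming the Prompt 2 examples are passed as (sentence, is_metaphor) pairs so that either ordering of the labelled examples is possible (the literal case lists the literal example first). The function names are hypothetical.

```python
from typing import Sequence, Tuple


def label_clause(w: str, sentence: str, is_metaphor: bool) -> str:
    """Build the clause stating whether w is (not) used as a metaphor."""
    m = "" if is_metaphor else "not "  # m = ε for metaphor, "not" for literal
    return f"‘{w}’ in “{sentence}” is {m}used as a metaphor."


def prompt1(n: int, pos: str, s: str, w: str, is_metaphor: bool) -> str:
    """Prompt 1: request an N-word sentence that precedes/follows s."""
    if pos not in ("precede", "follow"):
        raise ValueError("pos must be 'precede' or 'follow'")
    # pos is conjugated ("precedes"/"follows") as in the instantiations
    return (f"Generate a {n}-word sentence that {pos}s “{s}” and in which "
            + label_clause(w, s, is_metaphor))


def prompt2(n: int, pos: str, s: str, w: str, is_metaphor: bool,
            examples: Sequence[Tuple[str, bool]]) -> str:
    """Prompt 2: labelled (sentence, is_metaphor) examples, then Prompt 1."""
    shots = " ".join(label_clause(w, ex, lab) for ex, lab in examples)
    return f"{shots} {prompt1(n, pos, s, w, is_metaphor)}"


print(prompt1(5, "precede", "His words were sharp.", "sharp", True))
```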

Table 4.

Training/evaluation environment.

| Item | Value |

|---|---|

| CPU | AMD Ryzen Threadripper PRO 3955WX (16C/32T) |

| RAM | 256 GB DDR4-3200 |

| GPU | NVIDIA RTX A6000 (48 GB) |

| OS | Ubuntu 20.04 LTS |

| Python | 3.11.3 |

| PyTorch | 2.0.1 |

| Transformers | 4.29.2 |

| scikit-learn | 1.2.2 |

Table 5.

Fine-tuning hyperparameters for MisNet across different datasets.

| Hyperparameter | MOH-X | VUA_All | VUA_Verb |

|---|---|---|---|

| Learning rate (lr) | 3 × | 3 × | 3 × |

| Training epochs | 15 | 15 | 15 |

| Warm-up epochs | 2 | 2 | 2 |

| Batch size (train) | 16 | 64 | 64 |

| Batch size (validation) | 32 | 64 | 32 |

| Class weight | 1 | 5 | 4 |

| first_last_avg | False | True | True |

| use_pos | False | True | False |

| max_left_len | 25 | 135 | 140 |

| max_right_len | 70 | 90 | 60 |

| Dropout rate | 0.2 | 0.2 | 0.2 |

| Embedding dimension | 768 | 768 | 768 |

| Number of classes | 2 | 2 | 2 |

| Number of attention heads | 12 | 12 | 12 |

| PLM | roberta-base | roberta-base | roberta-base |

| use_context | True | True | True |

| use_eg_sent | True | True | True |

| cat_method | cat_abs_dot | cat_abs_dot | cat_abs_dot |
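Most settings are shared across the three datasets. A sketch that encodes the table as a shared base plus per-dataset overrides and lists which hyperparameters actually vary. The key names are illustrative and not necessarily MisNet's own configuration keys; the learning rate is omitted because its exponent is not legible in the table.

```python
from typing import Dict, List

# Settings shared by all three datasets (learning rate omitted, see above).
BASE: Dict[str, object] = {
    "epochs": 15, "warmup_epochs": 2, "dropout": 0.2,
    "embedding_dim": 768, "num_classes": 2, "num_heads": 12,
    "plm": "roberta-base", "use_context": True, "use_eg_sent": True,
    "cat_method": "cat_abs_dot",
}

OVERRIDES: Dict[str, Dict[str, object]] = {
    "MOH-X": dict(batch_size_train=16, batch_size_val=32, class_weight=1,
                  first_last_avg=False, use_pos=False,
                  max_left_len=25, max_right_len=70),
    "VUA_All": dict(batch_size_train=64, batch_size_val=64, class_weight=5,
                    first_last_avg=True, use_pos=True,
                    max_left_len=135, max_right_len=90),
    "VUA_Verb": dict(batch_size_train=64, batch_size_val=32, class_weight=4,
                     first_last_avg=True, use_pos=False,
                     max_left_len=140, max_right_len=60),
}


def config_for(dataset: str) -> Dict[str, object]:
    """Merge the shared settings with one dataset's overrides."""
    return {**BASE, **OVERRIDES[dataset]}


def varying_keys(datasets: List[str]) -> List[str]:
    """Hyperparameters whose values differ across the given datasets."""
    configs = [config_for(d) for d in datasets]
    keys = sorted(set().union(*configs))
    # repr() keeps values such as True and 1 distinct when compared in a set
    return [k for k in keys if len({repr(c.get(k)) for c in configs}) > 1]


print(varying_keys(["MOH-X", "VUA_All", "VUA_Verb"]))
```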

Table 6.

Dataset Statistics (adapted from [2]). #Sent. = number of sentences, #Target = number of target words, %Met. = proportion of metaphors, Avg. Len = average sentence length.

| Dataset | #Sent. | #Target | %Met. | Avg. Len |

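|---|---|---|---|---|

| VUA_All (train) | 6323 | 116,622 | 11.19 | 18.4 |

| VUA_All (validation) | 1550 | 38,628 | 11.62 | 24.9 |

| VUA_All (test) | 2694 | 50,175 | 12.44 | 18.6 |

| VUA_Verb (train) | 7479 | 15,516 | 27.90 | 20.2 |

| VUA_Verb (validation) | 1541 | 1724 | 26.91 | 25.0 |

| VUA_Verb (test) | 2694 | 5873 | 29.98 | 18.6 |

| MOH-X | 647 | 647 | 48.69 | 8.0 |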
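Since %Met. is a percentage of target words, multiplying it by #Target gives the approximate number of metaphorical targets and makes the class imbalance explicit: roughly eight literal targets per metaphorical one in the VUA_All training split, versus a near-even split in MOH-X. A small sketch (the helper name is hypothetical):

```python
# (#Target, %Met.) for a few rows of the dataset statistics table
SPLITS = {
    "VUA_All (train)": (116_622, 11.19),
    "VUA_Verb (train)": (15_516, 27.90),
    "MOH-X": (647, 48.69),
}


def approx_metaphor_targets(n_targets: int, pct_metaphor: float) -> int:
    """Approximate number of metaphor-labelled targets from a percentage."""
    return round(n_targets * pct_metaphor / 100)


for name, (n, pct) in SPLITS.items():
    met = approx_metaphor_targets(n, pct)
    print(f"{name}: ~{met} metaphorical vs ~{n - met} literal targets")
```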

| Label | Meaning | Examples |

|---|---|---|

| ADJ | Adjective | big, green, incomprehensible |

| ADP | Adposition | in, to, during |

| ADV | Adverb | very, well, exactly |

| CCONJ | Coordinating Conjunction | and, or, but |

| DET | Determiner | the, a, an, my, your, one, ten |

| INTJ | Interjection | ouch, bravo, hello |

| NOUN | Noun | girl, air, beauty |

| NUM | Numeral | 0, 3.14, one, MMXIV |

| PART | Particle | ’s, not |

| PRON | Pronoun | I, you, they, who, everybody |

| PROPN | Proper noun | Mary, NATO, HBO |

| PUNCT | Punctuation | ., (), : |

| SYM | Symbol | %, ©, +, = |

| VERB | Verb | run, ate, eating |

| X | Other | Out-of-vocabulary words |

Table 8.

Evaluation Metrics on MOH-X (Prior Context). Underlined values indicate the best performance in each metric.

| MOH-X | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| Original | 0.8286 | 0.8190 | 0.8445 | 0.8275 |

| P1_5words | 0.8372 | 0.8302 | 0.8393 | 0.8310 |

| P1_10words | 0.8423 | 0.8405 | 0.8378 | 0.8359 |

| P2_5words | 0.8547 | 0.8619 | 0.8392 | 0.8487 |

| P2_10words | 0.8500 | 0.8432 | 0.8653 | 0.8498 |
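The four metrics treat the metaphor label as the positive class. In the MOH-X rows, F1 is not the harmonic mean of the listed precision and recall (for Original, 2 × 0.8190 × 0.8445 / (0.8190 + 0.8445) ≈ 0.8316, not 0.8275), which is consistent with averaging per-fold scores rather than recomputing F1 from averaged precision and recall. The sketch below assumes that fold-averaging scheme; it is not confirmed by the tables. It computes the metrics in plain Python; `sklearn.metrics.precision_recall_fscore_support(..., average="binary")` gives the same per-run precision, recall, and F1.

```python
from statistics import mean
from typing import Dict, List, Sequence


def run_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Acc/Prec/Rec/F1 for one run, with label 1 (metaphor) as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    prec = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
    return {"Acc": correct / len(pairs), "Prec": prec, "Rec": rec, "F1": f1}


def fold_average(folds: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each metric across folds; F1 is averaged, not recomputed."""
    return {name: mean(f[name] for f in folds) for name in folds[0]}


# Two toy folds: averaged P and R are both 0.75, yet averaged F1 is ~0.667.
fold_a = run_metrics([1, 1], [1, 0])  # P = 1.0, R = 0.5
fold_b = run_metrics([1, 0], [1, 1])  # P = 0.5, R = 1.0
print(fold_average([fold_a, fold_b]))
```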

Table 9.

Evaluation Metrics on VUA_All (Validation and Test, Prior Context). Underlined values indicate the best performance in each metric.

| VUA_All | Val Acc | Val Prec | Val Rec | Val F1 | Test Acc | Test Prec | Test Rec | Test F1 |

|---|---|---|---|---|---|---|---|---|

| Original | 0.9417 | 0.7438 | 0.7755 | 0.7571 | 0.9409 | 0.7719 | 0.7551 | 0.7613 |

| P1_5words | 0.9411 | 0.7444 | 0.7763 | 0.7572 | 0.9399 | 0.7680 | 0.7547 | 0.7592 |

| P1_10words | 0.9409 | 0.7384 | 0.7823 | 0.7571 | 0.9403 | 0.7680 | 0.7603 | 0.7615 |

| P2_5words | 0.9419 | 0.7439 | 0.7810 | 0.7596 | 0.9401 | 0.7668 | 0.7591 | 0.7605 |

| P2_10words | 0.9413 | 0.7451 | 0.7808 | 0.7595 | 0.9401 | 0.7695 | 0.7568 | 0.7606 |

Table 10.

Evaluation Metrics on VUA_Verb (Validation and Test, Prior Context). Underlined values indicate the best performance in each metric.

| VUA_Verb | Val Acc | Val Prec | Val Rec | Val F1 | Test Acc | Test Prec | Test Rec | Test F1 |

|---|---|---|---|---|---|---|---|---|

| Original | 0.8112 | 0.6533 | 0.7601 | 0.6932 | 0.8021 | 0.6799 | 0.7374 | 0.6972 |

| P1_5words | 0.8138 | 0.6643 | 0.7523 | 0.6945 | 0.8004 | 0.6832 | 0.7275 | 0.6924 |

| P1_10words | 0.8077 | 0.6587 | 0.7423 | 0.6849 | 0.7957 | 0.6785 | 0.7210 | 0.6859 |

| P2_5words | 0.8103 | 0.6509 | 0.7595 | 0.6917 | 0.8004 | 0.6735 | 0.7426 | 0.6965 |

| P2_10words | 0.8133 | 0.6667 | 0.7479 | 0.6929 | 0.8001 | 0.6887 | 0.7157 | 0.6885 |

Table 11.

Evaluation Metrics on MOH-X (Following Context). Underlined values indicate the best performance in each metric.

| MOH-X | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| Original | 0.8286 | 0.8190 | 0.8445 | 0.8275 |

| P1_5words | 0.8469 | 0.8455 | 0.8508 | 0.8429 |

| P1_10words | 0.8315 | 0.8052 | 0.8716 | 0.8335 |

| P2_5words | 0.8376 | 0.8314 | 0.8453 | 0.8340 |

| P2_10words | 0.8333 | 0.8254 | 0.8487 | 0.8333 |

Table 12.

Evaluation Metrics on VUA_All (Validation and Test, Following Context). Underlined values indicate the best performance in each metric.

| VUA_All | Val Acc | Val Prec | Val Rec | Val F1 | Test Acc | Test Prec | Test Rec | Test F1 |

|---|---|---|---|---|---|---|---|---|

| Original | 0.9417 | 0.7438 | 0.7755 | 0.7571 | 0.9409 | 0.7719 | 0.7551 | 0.7613 |

| P1_5words | 0.9511 | 0.8123 | 0.7531 | 0.7815 | 0.9480 | 0.8271 | 0.7357 | 0.7787 |

| P1_10words | 0.9522 | 0.8134 | 0.7638 | 0.7878 | 0.9476 | 0.8231 | 0.7370 | 0.7777 |

| P2_5words | 0.9511 | 0.8060 | 0.7629 | 0.7838 | 0.9474 | 0.8225 | 0.7357 | 0.7767 |

| P2_10words | 0.9511 | 0.8097 | 0.7575 | 0.7827 | 0.9480 | 0.8288 | 0.7338 | 0.7784 |
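The underlining mentioned in the captions does not survive in this plain-text rendering, but the best value in each column can be recovered mechanically. A small sketch with a hypothetical helper `best_per_metric`, applied to the test-set columns of the VUA_All following-context table; ties are reported rather than broken arbitrarily.

```python
from typing import Dict, List

# Test-set columns (Acc, Prec, Rec, F1) of the VUA_All following-context table
VUA_ALL_FOLLOWING_TEST: Dict[str, List[float]] = {
    "Original":   [0.9409, 0.7719, 0.7551, 0.7613],
    "P1_5words":  [0.9480, 0.8271, 0.7357, 0.7787],
    "P1_10words": [0.9476, 0.8231, 0.7370, 0.7777],
    "P2_5words":  [0.9474, 0.8225, 0.7357, 0.7767],
    "P2_10words": [0.9480, 0.8288, 0.7338, 0.7784],
}


def best_per_metric(rows: Dict[str, List[float]],
                    metrics: List[str]) -> Dict[str, List[str]]:
    """For each metric column, list every row that attains the maximum."""
    best: Dict[str, List[str]] = {}
    for j, metric in enumerate(metrics):
        top = max(values[j] for values in rows.values())
        best[metric] = [name for name, values in rows.items() if values[j] == top]
    return best


print(best_per_metric(VUA_ALL_FOLLOWING_TEST, ["Acc", "Prec", "Rec", "F1"]))
```

Here the Original row keeps the highest test recall, so the gains from following context on VUA_All come through precision.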

Table 13.

Evaluation Metrics on VUA_Verb (Validation and Test, Following Context). Underlined values indicate the best performance in each metric.

| VUA_Verb | Val Acc | Val Prec | Val Rec | Val F1 | Test Acc | Test Prec | Test Rec | Test F1 |

|---|---|---|---|---|---|---|---|---|

| Original | 0.8112 | 0.6533 | 0.7601 | 0.6932 | 0.8021 | 0.6799 | 0.7374 | 0.6972 |

| P1_5words | 0.8648 | 0.7705 | 0.7091 | 0.7385 | 0.8442 | 0.7677 | 0.6888 | 0.7261 |

| P1_10words | 0.8718 | 0.7730 | 0.7414 | 0.7569 | 0.8359 | 0.7332 | 0.7115 | 0.7222 |

| P2_5words | 0.8561 | 0.7288 | 0.7414 | 0.7350 | 0.8561 | 0.7288 | 0.7414 | 0.7350 |

| P2_10words | 0.8683 | 0.7926 | 0.6918 | 0.7388 | 0.8423 | 0.8077 | 0.6224 | 0.7030 |

Table 14.

Evaluation Metrics on MOH-X (Prior and Following Context). Underlined values indicate the best performance in each metric.

| MOH-X | Acc | Prec | Rec | F1 |

|---|---|---|---|---|

| Original | 0.8286 | 0.8190 | 0.8445 | 0.8275 |

| P1_5words | 0.8511 | 0.8549 | 0.8398 | 0.8444 |

| P1_10words | 0.8453 | 0.8205 | 0.8870 | 0.8493 |

| P2_5words | 0.8529 | 0.8601 | 0.8357 | 0.8460 |

| P2_10words | 0.8386 | 0.8248 | 0.8586 | 0.8385 |

Table 15.

Evaluation Metrics on VUA_All (Validation and Test, Prior and Following Context). Underlined values indicate the best performance in each metric.

| VUA_All | Val Acc | Val Prec | Val Rec | Val F1 | Test Acc | Test Prec | Test Rec | Test F1 |

|---|---|---|---|---|---|---|---|---|

| Original | 0.9417 | 0.7438 | 0.7755 | 0.7571 | 0.9409 | 0.7719 | 0.7551 | 0.7613 |

| P1_5words | 0.9517 | 0.8069 | 0.7682 | 0.7871 | 0.9489 | 0.8265 | 0.7455 | 0.7839 |

| P1_10words | 0.9511 | 0.8122 | 0.7537 | 0.7819 | 0.9477 | 0.8271 | 0.7327 | 0.7770 |

| P2_5words | 0.9507 | 0.7998 | 0.7682 | 0.7837 | 0.9479 | 0.8219 | 0.7423 | 0.7801 |

| P2_10words | 0.9512 | 0.8115 | 0.7553 | 0.7824 | 0.9485 | 0.8304 | 0.7370 | 0.7809 |

Table 16.

Evaluation Metrics on VUA_Verb (Validation and Test, Prior and Following Context). Underlined values indicate the best performance in each metric.

| VUA_Verb | Val Acc | Val Prec | Val Rec | Val F1 | Test Acc | Test Prec | Test Rec | Test F1 |

|---|---|---|---|---|---|---|---|---|

| Original | 0.8112 | 0.6533 | 0.7601 | 0.6932 | 0.8021 | 0.6799 | 0.7374 | 0.6972 |

| P1_5words | 0.8666 | 0.7500 | 0.7565 | 0.7532 | 0.8401 | 0.7485 | 0.7030 | 0.7250 |

| P1_10words | 0.8759 | 0.7841 | 0.7435 | 0.7633 | 0.8445 | 0.7657 | 0.6939 | 0.7280 |

| P2_5words | 0.8672 | 0.7484 | 0.7629 | 0.7556 | 0.8418 | 0.7405 | 0.7274 | 0.7339 |

| P2_10words | 0.8625 | 0.7506 | 0.7328 | 0.7415 | 0.8369 | 0.7394 | 0.7041 | 0.7213 |

Table 18.

Two-tailed t-test results (p-values) for prior context on MOH-X. Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| MOH-X | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.8696 | 0.0687 † | 0.2485 | 0.0613 † |

| P1_5words | – | 0.7731 | 0.6477 | 0.6748 |

| P1_10words | – | – | 0.2173 | 0.7683 |

| P2_5words | – | – | – | 0.8696 |
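Each cell is a p-value from a two-tailed t-test between two configurations. A sketch of how one cell could be produced, assuming an unpaired test over per-run scores and the marker thresholds † p < 0.10, ‡ p < 0.05, § p < 0.01 (inferred from the reported values). `scipy.stats.ttest_ind` is two-sided by default; `scipy.stats.ttest_rel` would be the paired alternative if runs share seeds. The helper names are hypothetical.

```python
from typing import Sequence


def significance_marker(p: float) -> str:
    """Map a p-value to the table's markers (thresholds assumed)."""
    if p < 0.01:
        return "§"
    if p < 0.05:
        return "‡"
    if p < 0.10:
        return "†"
    return ""


def ttest_cell(scores_a: Sequence[float], scores_b: Sequence[float]) -> str:
    """Format one table cell: two-tailed p-value followed by its marker."""
    from scipy.stats import ttest_ind  # lazy import keeps the marker helper dependency-free
    p = float(ttest_ind(scores_a, scores_b).pvalue)  # two-sided by default
    return f"{p:.4f} {significance_marker(p)}".rstrip()


for p in (0.0687, 0.0386, 0.0071, 0.2485):
    print(p, significance_marker(p) or "(n.s.)")
```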

Table 19.

Two-tailed t-test results (p-values) for prior context on VUA_All (Validation Set). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_All (Validation) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | § | § | § |

| P1_5words | – | 0.0386 ‡ | 1.0000 | 0.7897 |

| P1_10words | – | – | 0.0378 ‡ | 0.0700 † |

| P2_5words | – | – | – | 0.7879 |

Table 20.

Two-tailed t-test results (p-values) for prior context on VUA_All (Test Set). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_All (Test) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | § | § | § |

| P1_5words | – | 0.0071 § | 0.2083 | 0.4253 |

| P1_10words | – | – | 0.1779 | 0.0643 † |

| P2_5words | – | – | – | 0.6544 |

Table 21.

Two-tailed t-test results (p-values) for prior context on VUA_Verb (Validation Set). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_Verb (Validation) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.00091 § | 0.0272 ‡ | 0.1980 | 0.0136 ‡ |

| P1_5words | – | 0.2637 | 0.0416 ‡ | 0.4424 |

| P1_10words | – | – | 0.3456 | 0.7631 |

| P2_5words | – | – | – | 0.1798 |

Table 22.

Two-tailed t-test results (p-values) for prior context on VUA_Verb (Test Set). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_Verb (Test) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | 0.2173 | 0.1036 | 0.00045 § |

| P1_5words | – | 0.0048 § | 0.0136 ‡ | 0.6896 |

| P1_10words | – | – | 0.7273 | 0.0213 ‡ |

| P2_5words | – | – | – | 0.0395 ‡ |

Table 23.

Two-tailed t-test results (p-values) on MOH-X (Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| MOH-X | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.1318 | 0.3549 | 0.3177 | 0.0768 † |

| P1_5words | – | 0.5168 | 0.6398 | 0.7633 |

| P1_10words | – | – | 0.8789 | 0.3177 |

| P2_5words | – | – | – | 0.4565 |

Table 24.

Two-tailed t-test results (p-values) on VUA_All Validation Set (Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_All (Validation) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | § | § | § |

| P1_5words | – | 0.0386 ‡ | 1.0000 | 0.7897 |

| P1_10words | – | – | 0.0378 ‡ | 0.0700 † |

| P2_5words | – | – | – | 0.7879 |

Table 25.

Two-tailed t-test results (p-values) on VUA_All Test Set (Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_All (Test) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | § | § | § |

| P1_5words | – | 0.0071 § | 0.2083 | 0.4253 |

| P1_10words | – | – | 0.1779 | 0.0643 † |

| P2_5words | – | – | – | 0.6544 |

Table 26.

Two-tailed t-test results (p-values) on VUA_Verb Validation Set (Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_Verb (Validation) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.00091 § | 0.0272 ‡ | 0.1980 | 0.0136 ‡ |

| P1_5words | – | 0.2637 | 0.0416 ‡ | 0.4424 |

| P1_10words | – | – | 0.3456 | 0.7631 |

| P2_5words | – | – | – | 0.1798 |

Table 27.

Two-tailed t-test results (p-values) on VUA_Verb Test Set (Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_Verb (Test) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | 0.2173 | 0.1036 | 0.00045 § |

| P1_5words | – | 0.0048 § | 0.0136 ‡ | 0.6896 |

| P1_10words | – | – | 0.7273 | 0.0213 ‡ |

| P2_5words | – | – | – | 0.0395 ‡ |

Table 28.

Two-tailed t-test results (p-values) on MOH-X (Prior and Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| MOH-X | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.2766 | 0.0567 † | 0.2485 | 0.4146 |

| P1_5words | – | 0.3308 | 0.8733 | 0.7240 |

| P1_10words | – | – | 0.2892 | 0.2061 |

| P2_5words | – | – | – | 0.7521 |

Table 29.

Two-tailed t-test results (p-values) on VUA_All Validation Set (Prior and Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_All (Validation) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | § | § | § |

| P1_5words | – | 0.2650 | 0.1155 | 0.4032 |

| P1_10words | – | – | 0.6795 | 0.0509 † |

| P2_5words | – | – | – | 0.0209 ‡ |

Table 30.

Two-tailed t-test results (p-values) on VUA_All Test Set (Prior and Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_All (Test) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | § | § | § | § |

| P1_5words | – | 0.0504 † | 0.1953 | 0.0098 § |

| P1_10words | – | – | 0.0010 § | § |

| P2_5words | – | – | – | 0.1828 |

Table 31.

Two-tailed t-test results (p-values) on VUA_Verb Validation Set (Prior and Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_Verb (Validation) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.4128 | 0.3589 | 0.1176 | 0.0483 ‡ |

| P1_5words | – | 0.0750 † | 0.0153 ‡ | 0.4369 |

| P1_10words | – | – | 0.4915 | 0.3321 |

| P2_5words | – | – | – | 0.0990 † |

Table 32.

Two-tailed t-test results (p-values) on VUA_Verb Test Set (Prior and Following Context). Significance levels: † p < 0.10, ‡ p < 0.05, § p < 0.01. A dash (–) indicates that symmetric comparisons were omitted.

| VUA_Verb (Test) | P1_5w | P1_10w | P2_5w | P2_10w |

|---|---|---|---|---|

| Original_data | 0.3920 | 0.0003 § | § | 0.6121 |

| P1_5words | – | 0.0050 § | § | 0.1630 |

| P1_10words | – | – | 0.1603 | § |

| P2_5words | – | – | – | § |

Table 33.

Representative failure modes observed in ChatGPT-generated auxiliary context. Manual counts per dataset, reported as percentages.

| Error Type | MOH-X | VUA_All | VUA_Verb | Illustration |

|---|---|---|---|---|

| Semantic drift (topic mismatch) | 12.0% | 18.0% | 15.0% | Original: He swallowed his words. Aux: Despite his certainty, doubt engulfed him and made him silent. |

| Polarity flip (label mismatch) | 4.0% | 6.0% | 5.0% | Original (literal): Acknowledge the deed. Aux: She refused to acknowledge the deed of kindness done for her. |

| Redundancy/near-duplicate | 9.0% | 3.0% | 5.0% | Original: Just trying to. Aux: Just trying to learn something new. |

| Pronoun coreference failure | 0.0% | 11.0% | 8.0% | Original: Come on sweetheart! Aux: Whispered winds beckon, come on sweetheart! |

| Over-long named entities | 2.0% | 5.0% | 4.0% | Original: The vessel was shipwrecked. Aux: The storm caused extensive damage, leaving the vessel stranded. |

Table 34.

Semantic fidelity of generated context across metaphor labels (VUA_All train + validation).

| Label | Instances | Faithful | Fidelity Rate |

|---|---|---|---|

| Literal | 6469 | 6096 | 94.23% |

| Metaphor | 1831 | 1538 | 84.00% |

| Total | 8300 | 7634 | 91.98% |
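The fidelity rate is the faithful count divided by the number of instances, so it can be recomputed directly from the counts as a check on the column. A small sketch (the helper name is hypothetical):

```python
from typing import Dict, Tuple

# (faithful, instances) per label, from the semantic-fidelity table
COUNTS: Dict[str, Tuple[int, int]] = {"Literal": (6096, 6469),
                                      "Metaphor": (1538, 1831)}


def fidelity_rate(faithful: int, instances: int) -> float:
    """Share of generated contexts judged faithful, in percent (2 d.p.)."""
    return round(100 * faithful / instances, 2)


# Column-wise sums of the per-label counts give the Total row: (7634, 8300)
total = tuple(map(sum, zip(*COUNTS.values())))

for label, (ok, n) in {**COUNTS, "Total": total}.items():
    print(f"{label}: {fidelity_rate(ok, n):.2f}%")
```

Rounded to two decimals, the counts give 94.23%, 84.00%, and 91.98%.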

Table 35.

Token volume, cost, and processing time for each dataset. Instances are not summed in the Total row due to dataset partitioning.

| Dataset | Instances | Mean Tokens/Request | Total Tokens | Estimated Cost (USD) | Wall-Clock (h) |

|---|---|---|---|---|---|

| MOH-X | 647 | 42 | 27,174 | 0.54 | 0.1 |

| VUA_All | 205,425 | 38 | | 155.8 | 11.2 |

| VUA_Verb | 23,113 | 39 | | 18.0 | 1.6 |

| Total | 229,185 | – | | 174.3 | 12.9 |
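The columns are linked by simple arithmetic: total tokens ≈ instances × mean tokens per request, and the Total row sums cost and wall-clock time. A sketch that reproduces the MOH-X token count and the Total row's cost and time. It assumes the per-request mean is exact, which holds for MOH-X; for the larger datasets the mean is rounded, so the product would only be an estimate.

```python
from typing import Dict, Tuple

# (instances, mean tokens/request, estimated cost in USD, wall-clock hours)
ROWS: Dict[str, Tuple[int, int, float, float]] = {
    "MOH-X": (647, 42, 0.54, 0.1),
    "VUA_All": (205_425, 38, 155.8, 11.2),
    "VUA_Verb": (23_113, 39, 18.0, 1.6),
}


def estimated_tokens(instances: int, mean_tokens: float) -> int:
    """Total tokens implied by a per-request mean (exact only if the mean is)."""
    return round(instances * mean_tokens)


def total_row(rows: Dict[str, Tuple[int, int, float, float]]) -> Tuple[int, float, float]:
    """Sum instances, cost, and wall-clock time across datasets."""
    instances = sum(r[0] for r in rows.values())
    cost = round(sum(r[2] for r in rows.values()), 1)
    hours = round(sum(r[3] for r in rows.values()), 1)
    return instances, cost, hours


print(estimated_tokens(*ROWS["MOH-X"][:2]))  # 27174
print(total_row(ROWS))                        # (229185, 174.3, 12.9)
```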

Table 36.

Latency statistics (seconds) per prompt type on the VUA_All training set.

| Metric | 1p | 1p-5b | 1p-10b | 2p5b | 2p10b | 1p-5f5b | 1p-10f10b | 2p5f5b | 2p10f10b |

|---|---|---|---|---|---|---|---|---|---|

| Mean | 0.789 | 0.520 | 1.566 | 0.509 | 0.566 | 0.505 | 0.612 | 0.469 | 0.535 |

| Std Dev | 0.507 | 0.183 | 2.009 | 0.136 | 0.214 | 0.168 | 0.253 | 0.132 | 0.174 |

| Min | 0.329 | 0.314 | 0.398 | 0.365 | 0.385 | 0.362 | 0.388 | 0.356 | 0.375 |

| Max | 4.960 | 1.224 | 16.123 | 1.174 | 1.290 | 1.154 | 1.537 | 1.234 | 1.499 |

Table 37.

Latency statistics (seconds) per prompt type on the VUA_All validation set.

| Metric | 1p | 1p-5b | 1p-10b | 2p5b | 2p10b | 1p-5f5b | 1p-10f10b | 2p5f5b | 2p10f10b |

|---|---|---|---|---|---|---|---|---|---|

| Mean | 0.671 | 0.502 | 0.591 | 0.558 | 0.622 | 0.510 | 0.555 | 0.581 | 0.513 |

| Std Dev | 0.379 | 0.361 | 0.156 | 0.228 | 0.417 | 0.321 | 0.378 | 0.395 | 0.143 |

| Min | 0.387 | 0.291 | 0.412 | 0.364 | 0.422 | 0.352 | 0.340 | 0.308 | 0.330 |

| Max | 2.293 | 0.911 | 1.414 | 1.104 | 1.335 | 1.141 | 1.391 | 1.373 | 1.456 |
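Each column summarises per-request latency for one prompt type. The sketch below shows how such numbers could be collected and summarised. It assumes latency is wall-clock time measured around each API call and that the standard deviation is the sample (n − 1) version; `timed_call` and `latency_summary` are hypothetical helpers. With pandas, `df.groupby("prompt_type")["latency"].agg(["mean", "std", "min", "max"])` yields the same statistics, since `Series.std` also defaults to `ddof=1`.

```python
import time
from statistics import mean, stdev
from typing import Callable, Dict, List


def timed_call(fn: Callable[[], object]) -> float:
    """Wall-clock latency of one call, in seconds."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def latency_summary(samples: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Mean / sample std dev / min / max per prompt type, in seconds."""
    return {
        kind: {"Mean": mean(xs),
               "Std Dev": stdev(xs) if len(xs) > 1 else 0.0,
               "Min": min(xs),
               "Max": max(xs)}
        for kind, xs in samples.items()
    }


# Toy latencies for one prompt type; real values would come from timed_call.
print(latency_summary({"1p": [0.5, 0.7, 0.9]}))
```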