1. Introduction

With the advent of the internet, customers have been able to easily share their reviews, opinions, and thoughts on various online platforms. These reviews help organizations and future customers by providing valuable insights into products or services before purchasing. In recent years, customer reviews have seen a substantial increase, serving as a powerful factor in shaping the choices of future buyers. Indeed, when consumers read reviews on social networks and review platforms, they often decide whether to purchase a product or try a service. As a result, consumer reviews have become an essential resource for individuals when making purchasing decisions [1].

From a company’s point of view, online customer reviews are critical indicators of its viability and performance in the competitive market. These reviews provide essential information about customer satisfaction and product or service performance, acting as a barometer for a company’s reputation. Positive reviews help build a business’s credibility, attract new customers, and strengthen relationships with existing ones, resulting in greater sales and profits. In contrast, an increase in negative reviews may point to underlying problems with products or services, warning potential customers, and potentially reducing revenue.

As consumers increasingly rely on online reviews to make purchasing decisions, businesses that regularly monitor and engage with these reviews demonstrate responsiveness and commitment to their customers. This proactive strategy not only addresses consumer concerns but also fosters a positive and influential brand image, which is critical for long-term market sustainability and success [2]. Therefore, the current state of online customer reviews is not only an indicator of present business success but also a key predictor of future viability [3,4,5].

The practice of obtaining fake reviews has been around since the early days of online shopping and review platforms, when businesses attempted to improve their reputation and influence customer decisions. Initially, fake reviews were frequently written manually by people paid to post positive (or negative) reviews. As e-commerce advanced, so did the sophistication of review manipulation strategies, with some businesses using automated bots to generate large numbers of bogus reviews. The emergence of large language models such as the Generative Pre-trained Transformer (GPT) signaled a new era in review production, allowing highly realistic, human-like reviews to be produced at scale. These AI-generated reviews are difficult to distinguish from genuine ones, posing significant challenges for platforms and users in detecting and avoiding fraudulent feedback [6].

Researchers have long recognized the importance of detecting fake reviews, given their impact on customer decisions and market competition. Early detection models focused on linguistic indicators, such as word choice, review length, and emotional consistency, that could signal suspicious content [7,8]. However, the higher-quality and more sophisticated language produced by GPT models has rendered these traditional methods ineffective. This shift has motivated researchers to develop new frameworks that integrate machine learning approaches capable of detecting subtle patterns in AI-generated reviews. These models combine advanced natural language processing techniques with adversarial training to detect fake reviews more effectively [9].

The widespread availability of ChatGPT [10] on free online platforms has heightened the challenge of detecting AI-generated and AI-rephrased reviews. ChatGPT, powered by models such as GPT-3.5 and GPT-4, can closely mimic the tone and texture of authentic human-written reviews, often preserving the sentiment and nuance of the original text. Because these models produce fluent, human-like text, distinguishing AI-generated from human-authored content has become a pressing challenge, particularly in domains where authenticity and trust are crucial, such as online reviews. AI-generated and rephrased reviews are especially prevalent in e-commerce, hospitality, and online service platforms, where consumer trust plays a central role in decision-making. According to [11], approximately 60% of consumers read online product or service reviews at least once a week, and 82% read reviews before making a purchase. The same study reports that 93% of consumers rely on online reviews to guide their purchasing decisions, believing that reviews increase purchase accuracy, reduce the chance of regret, and influence what they buy. This underscores the potential harm and misinformation caused by AI-generated fake reviews, as businesses may exploit such content to manipulate consumer perception, leading to financial losses or misleading product evaluations. ChatGPT's ability to generate coherent, well-structured text therefore raises concerns about its misuse, including the creation of fake or misleading reviews that manipulate public perception [12].

This evolution has exposed vulnerabilities in traditional detection frameworks, which were developed to detect entirely fake reviews rather than subtly rephrased ones. Addressing this challenge requires new detection frameworks that go beyond surface-level linguistic markers, potentially incorporating advanced machine learning approaches to spot the complex rephrasing patterns specific to generative models. These frameworks must evolve rapidly to maintain accuracy as generative models continue to improve their ability to simulate human language [13].

AI is frequently employed in advanced applications; however, its black-box nature makes its results difficult to understand and trust. In many settings, understanding the reasoning behind an AI model's decision is necessary. Explainable AI (XAI) aims to develop human-interpretable models, particularly for sensitive sectors such as the military, banking, and healthcare [14]. Domain specialists require effective problem-solving assistance together with meaningful output in order to build trust. Examining whether outputs are appropriate is therefore important for both domain specialists and researchers, and inappropriate outputs motivate researchers to analyze the models further. AI approaches enable the assessment of current knowledge, the advancement of knowledge, and the development of new theories, and XAI researchers seek explainability that supports justification, control, improvement, and discovery. As a result, demand has emerged for XAI strategies that improve trust in AI models [15].

The main contributions of this manuscript are summarized as follows:

Developing an explainable deep learning model based on a fine-tuned DistilBERT transformer to enhance classification accuracy and to gain insight into the patterns and features of ChatGPT-rephrased fake reviews.

Developing a robust model capable of accurately distinguishing ChatGPT-rephrased fake reviews from real reviews.

Investigating the impact of different pre-processing techniques on model performance.

Providing interpretable explanations of the decisions made by the proposed fake review detection approach, offering transparency into the factors that influence its predictions.

The remainder of this paper is organized as follows. Section 2 reviews existing research on fake review detection. Section 3 describes the proposed explainable deep learning model, including the DistilBERT architecture, the pre-processing techniques used, and the deployment of the LIME and SHAP XAI techniques. Section 4 presents the experimental results obtained by evaluating the proposed model on a benchmark dataset of restaurant reviews. Finally, Section 5 highlights the contributions, summarizes the key findings of the study, and discusses potential future directions.

2. Related Work

Detecting AI-generated and rephrased fake reviews has been widely studied using machine learning and deep learning approaches, ranging from traditional classifiers to advanced transformer-based models. This section presents recent work related to this study.

2.1. Traditional Machine Learning and Hybrid Models

Earlier approaches to fake review detection used machine learning algorithms and deep learning architectures, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), often combined with feature extraction techniques. Although these approaches showed promising results, they required extensive pre-processing and lacked the contextual understanding offered by modern transformer-based models.

Javed et al. [16] proposed a multi-view learning approach for detecting fake reviews. This approach employs three separate models and combines their outputs through an ensemble aggregation mechanism to enhance prediction accuracy. The core idea is to extract features from review text by integrating bag-of-n-grams with parallel CNNs. By using an n-gram embedding layer with moderate kernel sizes, the architecture captures local context while maintaining the computational efficiency required to train deep and complex CNNs. The approach also integrates textual, non-textual, and behavioral features to analyze both review content and reviewer behavior. The dataset used is a publicly available collection from Bing Liu, based on Yelp restaurant and hotel reviews. Because the dataset is imbalanced between fake and real reviews, the authors applied sampling techniques to balance it. The approach achieved an F1-score of 92% in classifying fake reviews, demonstrating its effectiveness in detecting fake content.

Nizar Alsharif [17] proposed a deep learning (DL)-based model for fake review detection on e-commerce platforms, using a recurrent neural network long short-term memory (RNN-LSTM) architecture. To address the challenge of identifying fraudulent opinions, the study used a standard Yelp product review dataset. Before splitting the dataset into training, validation, and testing sets, the author applied several pre-processing steps to enhance data quality, including stop word removal, punctuation removal, elimination of one-word reviews, expansion of contractions, and tokenization. To improve the model's performance, linguistic features extracted from the Linguistic Inquiry and Word Count (LIWC) dictionary were incorporated. These features help distinguish between genuine and fraudulent reviews by analyzing factors such as sincerity, analytical thinking, sentiment polarity (positive and negative terms), and the use of personal pronouns. The RNN-LSTM model achieved an accuracy of 98%, showing its success in differentiating between fraudulent and genuine reviews.

2.2. Transformer-Based and Advanced Deep Learning Models

With the advancement of transformer-based architectures, fake review detection has significantly improved in both accuracy and contextual understanding. Recent research has explored fine-tuning, knowledge distillation, hybrid models, and explainability techniques to enhance both model interpretability and performance.

Salminen et al. [9] developed a specialized model, FakeRoBERTa, by fine-tuning RoBERTa on a dataset containing original Amazon reviews, denoted (OR), and fake reviews generated by a fine-tuned GPT-2 model, denoted (CG). To evaluate the model's performance, they asked crowd workers to label a sample of both genuine and fake reviews and compared the accuracy of the human annotations with machine classification using statistical testing. FakeRoBERTa outperformed the other approaches, with an accuracy of 96.64%, compared with 55.36% for crowd-sourced human judgment, 95.8% for a naive Bayes support vector machine (NBSVM), and 83% for OpenAI models. This demonstrates the superior ability of machine learning, compared with human judgment, to identify fake reviews.

Shu Han et al. proposed a knowledge-integrated fake review detection method based on multimodal features, such as term frequency-inverse document frequency (TF-IDF) and n-grams, along with knowledge-based characteristics such as review sentiment and comment semantics. This method captures both local and global review dependencies, improving the accuracy of fake review detection. In addition, it combines a 1D-CNN, an LSTM, and a residual connection to capture sequence dependencies and enhance the model's robustness. The method was validated on the Cornell University hotel dataset, which includes 400 real and 400 fake reviews, and achieved an F1-score of 91%. Furthermore, the results were explained using LIME to provide a deeper understanding of the review characteristics [18].

Prova et al. [19] investigated the performance of various machine learning methods, including the XGB classifier, support vector machine (SVM), and BERT-based deep learning models, in differentiating AI-generated text from human-generated text. The authors constructed a dataset of 3000 data points, equally divided between human-generated and AI-generated content, and applied pre-processing techniques such as stop word removal, URL removal, word stemming, and lemmatization. BERT outperformed the other methods, achieving an accuracy of 93%, compared with 84% for the XGB classifier and 81% for SVM. The authors also discussed the societal implications of the research, highlighting potential benefits for various industries along with considerations related to sustainability, ethics, and the environment.

Le Quang et al. proposed DenyBERT, a deep learning-based model that enhances the BERT framework using deep and light transformation (DeLighT) and knowledge distillation (KD). The model reduces computational cost while improving accuracy in detecting fake reviews, making it suitable for real-world applications with limited processing power. Using the dataset from [9], the authors applied pre-processing steps such as removing punctuation marks and HTML tags, and maintained data consistency through length normalization by excluding reviews shorter than 100 words. The pre-processed dataset was then tokenized. DenyBERT used only 16.01 million parameters, far fewer than BERT and TinyBERT, yet achieved an accuracy of 96.12% and an F1-score of 96.47% [20].

Mohawesh et al. [21] introduced a semantic- and linguistic-aware approach to fake review detection that leverages an advanced transformer architecture to enhance detection accuracy. The approach combines RoBERTa with an LSTM layer to capture complex patterns in fake reviews, improving both detection and the profiling of authentic behavior. Several pre-processing steps were applied to remove unnecessary information, such as emojis and URLs, so that only essential terms remained, and each review was then tokenized into individual words. The approach achieved accuracies of 96.03% and 93.15% on the OpSpam and Deception datasets, respectively. To increase transparency, Shapley Additive Explanations (SHAP) and attention mechanisms were used to provide clear and reasonable explanations of the model's classification of fake reviews.

Mitrović et al. [13] proposed a transformer-based approach using DistilBERT to classify text as human-generated or ChatGPT-generated, and compared it with a perplexity-based approach that evaluates text predictability and complexity. SHAP was used to provide insight into the reasoning behind the model's predictions. The approach used the uncased version of DistilBERT, a lightweight model, fine-tuned for sequence classification on the training data with Hugging Face Transformers. It was evaluated on the Kaggle sentiment analysis dataset, comprising 1000 human reviews, a set of 395 ChatGPT-generated reviews, and a set of 1000 ChatGPT-rephrased human reviews. In experiment 1, the ML-based approach outperformed the perplexity-based method in detecting ChatGPT-generated text, with accuracies of 98% and 84%, respectively. However, in experiment 2, where rephrasing made real and generated text harder to distinguish because the rephrased reviews retained human-like characteristics, accuracy dropped to 79% for the ML-based approach and 69% for the perplexity-based method.

Despite the growth of research on fake review detection using traditional machine learning, deep learning, and transformer-based models, most existing studies have focused either on detecting human-written deceptive reviews or fully AI-generated reviews. However, the emerging practice of rephrasing genuine reviews using large language models such as ChatGPT introduces a new, more subtle form of review manipulation. These rephrased reviews often retain the original sentiment and intent but differ in tone, structure, and vocabulary, making them harder to detect. Current datasets and models are not specifically designed to address this hybrid form of content. Therefore, this study proposes an explainable deep learning framework that targets the detection of ChatGPT-rephrased reviews using a custom balanced dataset and leverages linguistic pre-processing along with DistilBERT and XAI techniques. This approach fills an important research gap by focusing on the nuanced challenge of distinguishing rephrased reviews from original ones, which is crucial for maintaining the authenticity of user-generated content in the age of generative AI.

Table 1 illustrates a comparison of the previous state-of-the-art fake review detection models, highlighting their unique methodologies and performance indicators.

3. Methodology

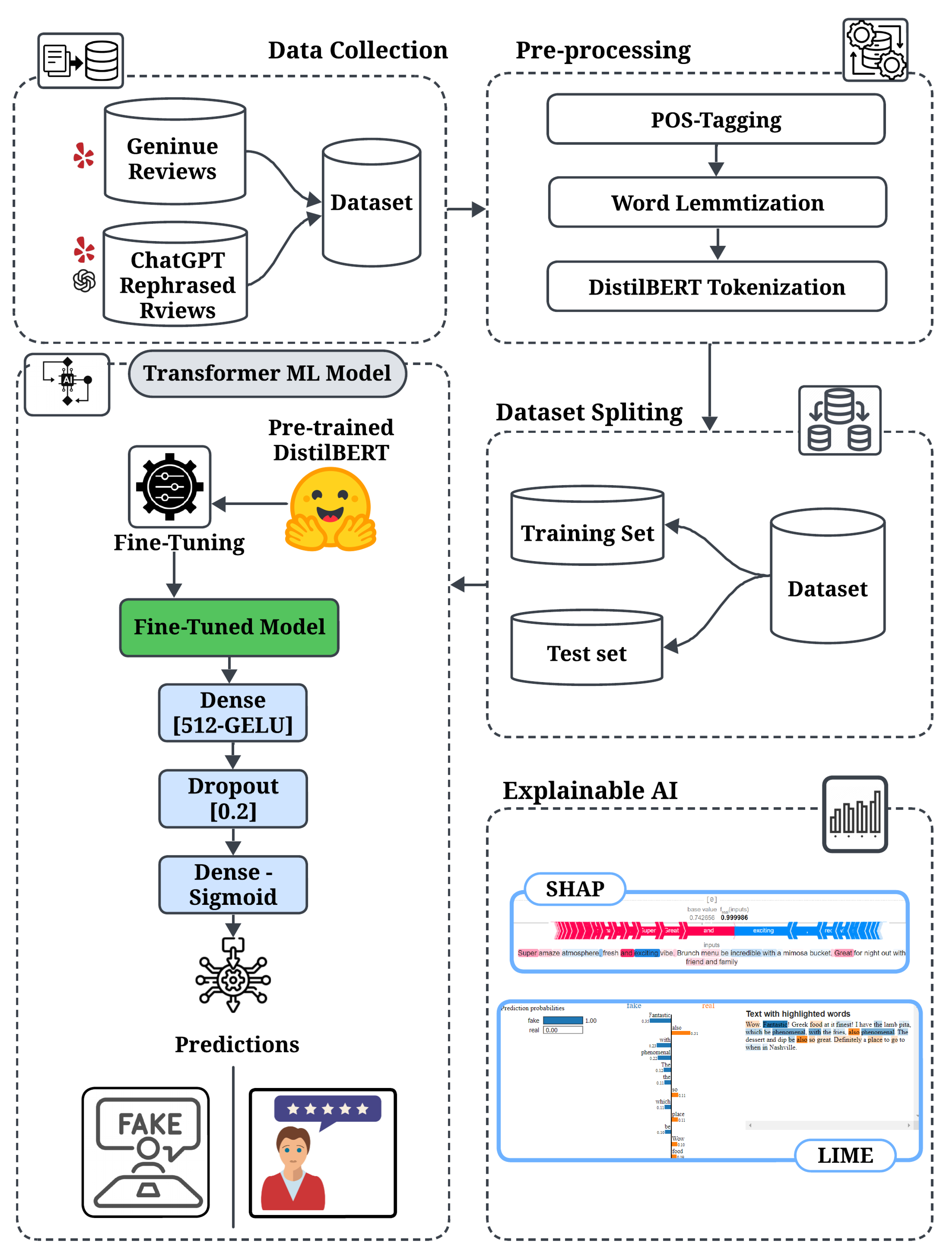

This section describes the proposed XAI-based fake review detection model, designed to distinguish between human-written and ChatGPT-rephrased reviews. As depicted in Figure 1, the proposed approach comprises four key phases: data collection and preparation, pre-processing, fake review identification, and XAI.

3.1. Data Collection and Preparation Phase

Existing datasets for fake review detection primarily focus on either human-written deceptive reviews or fully AI-generated text; they do not cover rephrased versions of real human reviews, which pose a unique challenge due to their hybrid nature. This phase constructs a balanced dataset containing an equal number of real reviews, labeled 1, and ChatGPT-rephrased reviews, labeled 0 and treated as fake. This balance is crucial for developing a classification model that can reliably identify the similarities and differences between real and rephrased reviews, and for drawing accurate conclusions about the characteristics of ChatGPT-rephrased reviews and their significance for sentiment analysis and fake review detection. The dataset comprises two main sets:

Human Reviews: Consists of 1000 reviews sourced from the Yelp Restaurants dataset, representing real customer reviews, manually annotated as a real review (“1”).

ChatGPT-Rephrased Reviews: Consists of 1000 reviews generated with ChatGPT by rephrasing a subset of the human reviews as well as reviews not included in the human set; these represent fake customer reviews and are manually annotated as fake (“0”).

Table 2 presents sample real reviews alongside their rephrased versions.

The constructed dataset has the following characteristics:

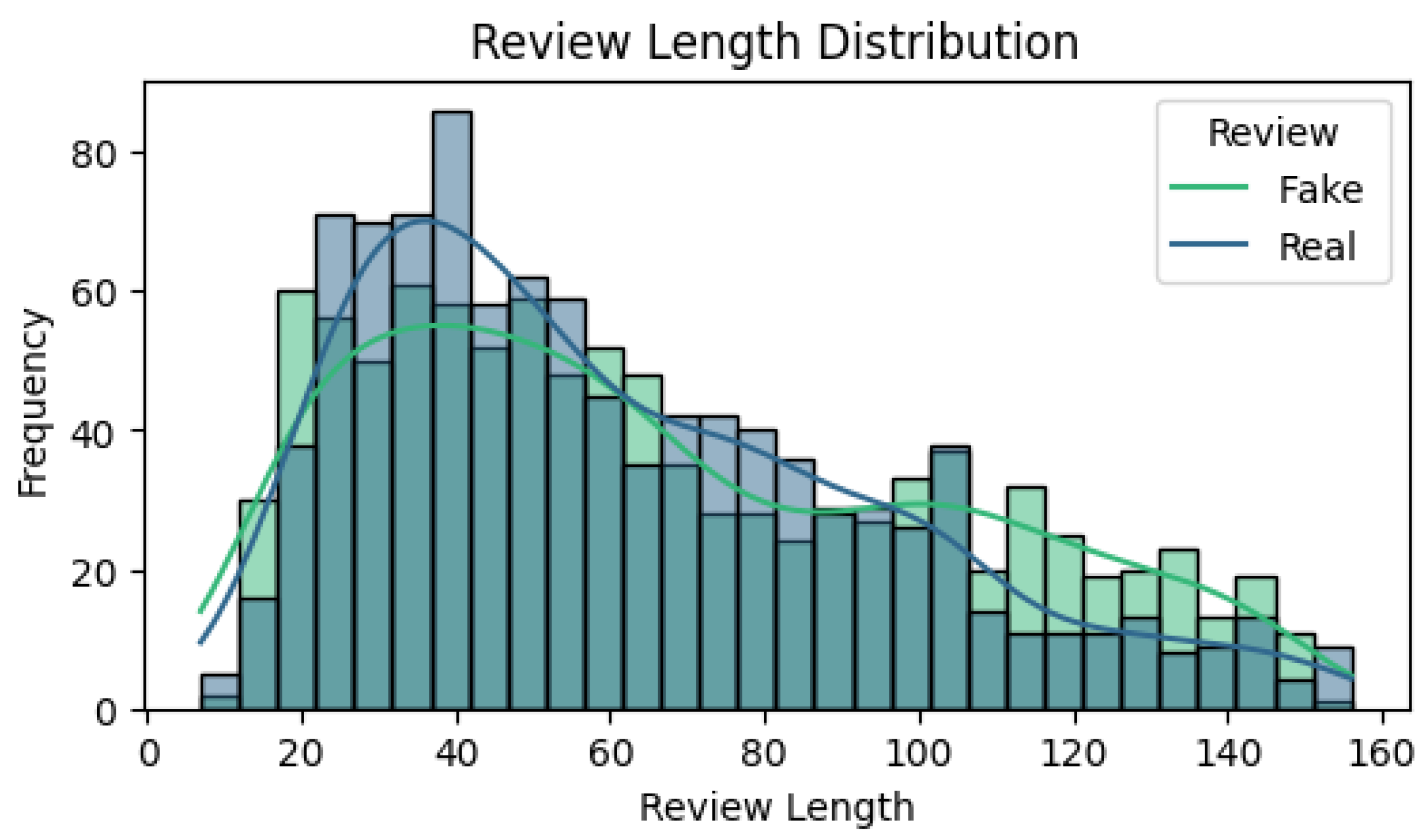

Review Diversity: The dataset contains both human reviews and ChatGPT-rephrased reviews of varying lengths, ranging from 10 to 150 words to cover both short and long reviews. This variation captures diverse language structures, expressions, and levels of detail. Including reviews of different lengths enhances the model’s ability to generalize to real-world data.

Figure 2 illustrates the distribution of review length over the dataset.

Dataset Composition: The dataset is extracted from the Yelp restaurant reviews dataset, focusing on reviews from the last five years. This ensures that recent and relevant customer reviews are captured, reflecting modern language usage and current restaurant trends. Reviews are selected to capture a wide variation in length and content, representing diverse customer sentiments and experiences. Limiting the dataset to recent reviews supports the model’s generalization to recent review-writing patterns, making it more effective for a real-world application.
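The selection criteria above (recent reviews, 10 to 150 words, equal numbers of real reviews labeled 1 and ChatGPT-rephrased reviews labeled 0) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the record fields `text` and `date` and the helper names are hypothetical, and the rephrased texts are assumed to have already been obtained from ChatGPT.

```python
import random
from datetime import date

# Hypothetical record layout: each Yelp review is a dict with "text" and "date" keys.
MIN_WORDS, MAX_WORDS = 10, 150   # length range stated in the paper
YEARS_BACK = 5                   # "last five years"


def five_years_before(today):
    """Return the cutoff date YEARS_BACK years before `today`."""
    try:
        return today.replace(year=today.year - YEARS_BACK)
    except ValueError:  # 29 February mapped onto a non-leap year
        return today.replace(year=today.year - YEARS_BACK, day=28)


def is_eligible(review, today):
    """Keep reviews of 10-150 words written within the last five years."""
    n_words = len(review["text"].split())
    return MIN_WORDS <= n_words <= MAX_WORDS and review["date"] >= five_years_before(today)


def build_balanced_dataset(human_texts, rephrased_texts, seed=42):
    """Combine real (label 1) and ChatGPT-rephrased (label 0) texts in equal numbers."""
    n = min(len(human_texts), len(rephrased_texts))
    rows = [(t, 1) for t in human_texts[:n]] + [(t, 0) for t in rephrased_texts[:n]]
    random.Random(seed).shuffle(rows)  # avoid label-ordered batches
    return rows
```

Truncating both lists to the smaller size keeps the classes exactly balanced, as in the 1000/1000 split described above, and the fixed seed keeps the shuffled order reproducible.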

3.2. Pre-Processing Phase

Data pre-processing serves as a crucial preliminary phase in the proposed approach, having a significant impact on model performance. The steps included in the pre-processing phase are as follows:

POS Rule-Based Tagging: In this step, POS tagging is utilized to identify the grammatical roles of terms in reviews. Understanding phrase structures helps uncover key patterns that differentiate real reviews from rephrased ones. This tagging is essential for highlighting linguistic traits unique to AI-generated text.

Word Lemmatization: After POS tagging, word lemmatization is applied to reduce words to their base forms. For example, the verbs “running” and “ran” are both reduced to “run” after lemmatization. This normalization step enables the model to recognize different inflected forms of a word as a single base concept, which is essential for effective sentiment analysis. Similarly, when “better” is tagged as an adjective, it is lemmatized to “good,” allowing the model to focus on core sentiments rather than surface differences, as shown in Table 3.

Tokenization: Finally, the POS-tagged and lemmatized text is tokenized with the DistilBERT tokenizer, so that the tokens reflect the normalized word forms. This improves the model's ability to understand word patterns and contextual meaning, which leads to higher classification accuracy.

To ensure reliable linguistic analysis, Part-of-Speech (POS) tagging and lemmatization are applied before model-specific tokenization. Tokenizing first, particularly with subword-based models such as DistilBERT, can fragment words or obscure their base form, disrupting grammatical interpretation. For example, in the review ‘We are going there tonight, and the ambiance will be great,’ early tokenization might split ‘going’ into [‘go’, ‘##ing’]. This fragmentation requires the model to implicitly piece together the full word and its grammatical function. Performing POS tagging on the original sentence, which identifies ‘going’ as a present participle (VBG), followed by lemmatization to ‘go,’ ensures that the DistilBERT tokenizer receives the normalized form and produces [‘go’]. This process helps retain syntactic clarity and semantic consistency, ensuring better alignment with the model vocabulary.
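The ordering argument above (tag first, then lemmatize, then hand the normalized text to the subword tokenizer) can be illustrated with a deliberately tiny, pure-Python sketch. The coarse tag set, the contextual rule for present participles, and the irregular-form tables below are hypothetical stand-ins chosen for illustration only; the paper does not specify its tagger or lemmatizer, and a real pipeline would rely on a full library such as NLTK or spaCy.

```python
import re

# Toy lexicons (hypothetical, tiny): irregular forms mapped to their lemmas.
IRREGULAR_VERBS = {"am": "be", "is": "be", "are": "be", "was": "be",
                   "were": "be", "ran": "run", "went": "go"}
IRREGULAR_ADJS = {"better": "good", "best": "good"}
BE_FORMS = {"am", "is", "are", "was", "were", "be", "been"}


def tokenize_words(text):
    """Split lowercased text into word and punctuation tokens."""
    return re.findall(r"[a-z']+|[^\sa-z']", text.lower())


def rule_based_pos(tokens):
    """Assign coarse tags using simple lexical and contextual rules."""
    tags = []
    for i, tok in enumerate(tokens):
        prev = tokens[i - 1] if i > 0 else None
        if tok in IRREGULAR_VERBS:
            tags.append("VERB")
        elif tok.endswith("ing") and prev in BE_FORMS:
            tags.append("VBG")   # present participle following a form of "be"
        elif tok in IRREGULAR_ADJS:
            tags.append("ADJ")
        else:
            tags.append("X")     # everything else is left untouched
    return tags


def lemmatize(token, tag):
    """Reduce a token to its base form using its POS tag."""
    if tag == "VERB":
        return IRREGULAR_VERBS[token]
    if tag == "ADJ":
        return IRREGULAR_ADJS[token]
    if tag == "VBG":
        stem = token[:-3]
        # Undo consonant doubling: "running" -> "runn" -> "run".
        if len(stem) >= 3 and stem[-1] == stem[-2] and stem[-1] not in "lsz":
            stem = stem[:-1]
        return stem              # toy rule: misses silent-e verbs such as "tasting"
    return token


def preprocess(text):
    """POS-tag, then lemmatize; the result is what the subword tokenizer would receive."""
    tokens = tokenize_words(text)
    tags = rule_based_pos(tokens)
    return " ".join(lemmatize(t, g) for t, g in zip(tokens, tags))
```

For the example review, `preprocess` turns "are going" into "be go", so the subword tokenizer sees whole base forms. In a full pipeline the returned string would then be passed to the DistilBERT tokenizer (for instance, one loaded with `AutoTokenizer.from_pretrained("distilbert-base-uncased")` in Hugging Face Transformers).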

3.3. Fake Review Identification Phase

Various approaches are employed for text classification and feature extraction. The proposed model uses a transformer-based architecture, a family of models that has recently gained popularity for its ability to capture deep semantic relationships. Specifically, it uses the uncased version of DistilBERT, a compressed variant of the original BERT model trained within a teacher–student (knowledge distillation) framework, with BERT acting as the teacher.

This structure makes DistilBERT more efficient while preserving much of BERT’s rich contextual understanding. DistilBERT also performs well on the General Language Understanding Evaluation (GLUE) benchmark, demonstrating its robustness and ability to generalize across a wide range of Natural Language Processing (NLP) tasks. The proposed model fine-tunes the pre-trained DistilBERT model using the Hugging Face Transformers library, employing its Trainer class with PyTorch 2.0. This framework provides an intuitive and robust environment for training and evaluating machine learning models, facilitating the implementation of various training techniques and optimization strategies. The default hyperparameters are retained, so the fine-tuning phase establishes a baseline performance level while the model learns the dataset’s complex structure, with the aim of limiting the risk of overfitting. The training dataset, consisting of an equal number of genuine and ChatGPT-rephrased reviews, is pivotal for exposing the model to diverse linguistic patterns and styles.

DistilBERT’s ability to capture intricate features, such as linguistic structures, semantic meanings, and contextual elements, significantly enhances classification. Its advanced language understanding improves classification accuracy by identifying subtle variations in writing style between human-written and ChatGPT-rephrased reviews. Furthermore, DistilBERT’s streamlined design speeds up both processing and inference, making it well-suited for real-time analytic applications. After fine-tuning, the model proceeds to the inference step, where the Hugging Face Transformers text-classification pipeline applies the trained model to new data, enabling rapid classification of reviews by origin.
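The fine-tuning and inference steps described above can be sketched with Hugging Face Transformers and Datasets. This is a minimal sketch under assumptions, not the authors' script: the checkpoint name `distilbert-base-uncased`, the output directory, and the helper names are illustrative, and hyperparameters are left at the `TrainingArguments` library defaults, in line with the statement that default hyperparameters were kept. The heavy imports sit inside the functions so the module loads even where those packages are not installed.

```python
# Label convention from the dataset description: 1 = real, 0 = ChatGPT-rephrased (fake).
LABEL2ID = {"fake": 0, "real": 1}
ID2LABEL = {i: name for name, i in LABEL2ID.items()}
MODEL_NAME = "distilbert-base-uncased"       # assumed uncased checkpoint
OUTPUT_DIR = "distilbert-rephrase-detector"  # hypothetical output path


def fine_tune_distilbert(train_texts, train_labels, eval_texts, eval_labels,
                         output_dir=OUTPUT_DIR):
    """Fine-tune DistilBERT for binary classification with default hyperparameters."""
    from datasets import Dataset
    from transformers import (AutoModelForSequenceClassification, AutoTokenizer,
                              DataCollatorWithPadding, Trainer, TrainingArguments)

    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForSequenceClassification.from_pretrained(
        MODEL_NAME, num_labels=2, id2label=ID2LABEL, label2id=LABEL2ID)

    def tokenize(batch):
        # Inputs are assumed to be the already POS-tagged and lemmatized strings.
        return tokenizer(batch["text"], truncation=True)

    train_ds = Dataset.from_dict({"text": train_texts, "label": train_labels}).map(tokenize, batched=True)
    eval_ds = Dataset.from_dict({"text": eval_texts, "label": eval_labels}).map(tokenize, batched=True)

    trainer = Trainer(
        model=model,
        args=TrainingArguments(output_dir=output_dir),     # library-default hyperparameters
        train_dataset=train_ds,
        eval_dataset=eval_ds,
        data_collator=DataCollatorWithPadding(tokenizer),  # pads each batch dynamically
    )
    trainer.train()
    metrics = trainer.evaluate()   # evaluation loss on the held-out split
    trainer.save_model(output_dir)
    tokenizer.save_pretrained(output_dir)
    return trainer, metrics


def classify_reviews(texts, model_dir=OUTPUT_DIR):
    """Label new reviews as "real" or "fake" with a text-classification pipeline."""
    from transformers import pipeline
    clf = pipeline("text-classification", model=model_dir)
    return clf(list(texts))        # one {"label", "score"} dict per review
```

Passing `id2label` and `label2id` to the model configuration makes the pipeline report the readable labels "real" and "fake" instead of generic `LABEL_0`/`LABEL_1` names.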

The integration of DistilBERT’s contextual understanding, the structured training process, and the efficiency of the Hugging Face framework aims to develop a robust classification framework that not only distinguishes between human-written and AI-rephrased reviews but also provides valuable insights into the underlying linguistic features that define each category. Finally, this comprehensive method leverages NLP techniques to produce accurate and relevant classification findings, paving the way for future research into the complexities of language formation and its impact in the digital era.

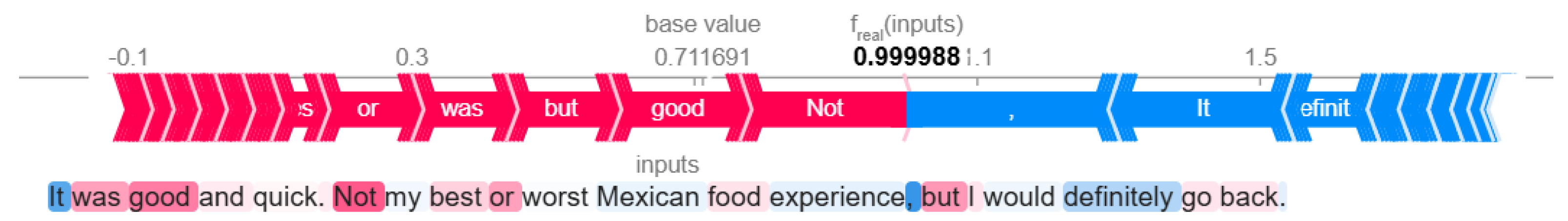

3.4. Explainable AI (XAI)

AI models often operate as black boxes, which makes their decision-making processes difficult to understand and complicates both the interpretation of outcomes and the evaluation of fairness. As a result, the need for XAI techniques that improve trust in AI models has grown. XAI has expanded significantly in recent years, evolving from an isolated research topic within AI into a highly active field that attracts numerous theoretical contributions, empirical investigations, and reviews every year. To better understand the model’s predictions and the reasoning behind its classification decisions, two XAI techniques are employed: Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP). These techniques were chosen for their strong capabilities in enhancing the interpretability of machine learning models, particularly complex deep learning architectures, and for their suitability to the challenges of text analysis. SHAP offers a consistent approach, grounded in cooperative game theory, for attributing a prediction to individual features, ensuring a fair distribution of importance and comprehensive model interpretability, while LIME provides model-agnostic local interpretation that clarifies which features drive individual predictions. Other XAI techniques, such as Grad-CAM (better suited to visual models) and DeepLIFT (computationally expensive on large datasets), were considered less effective because of their limitations in handling text data.

SHAP values provide consistent and understandable explanations of the model’s predictions. SHAP assigns an importance value to each feature for a given prediction, giving a comprehensive view of that feature’s contribution. This not only clarifies which language and sentiment features the model considers important but also helps validate the model’s judgments by checking that they are consistent with human expectations.

LIME interprets the model’s predictions by approximating the model locally around a given prediction with a simple, interpretable surrogate. By perturbing the input text and observing the changes in output, LIME reveals which features most influence the model’s decision for each review. This local interpretability helps to understand how the model distinguishes between real and rephrased reviews. In text classification, both SHAP and LIME can identify the key words or phrases that drive a prediction, and identifying these elements can guide improvements to the model’s accuracy while increasing its interpretability.
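Both explanation techniques can be applied to a fine-tuned text-classification pipeline. The sketch below is illustrative and assumes a hypothetical model directory whose configuration uses the labels "fake" and "real"; it uses `LimeTextExplainer.explain_instance` from the `lime` package and `shap.Explainer`, which can wrap a Transformers pipeline directly. Because a pipeline created with `top_k=None` returns every class score sorted by score rather than by label, the small helper `to_proba_rows` reorders the scores into the fixed column order that LIME expects.

```python
LABELS = ["fake", "real"]                   # column order: index 0 = fake, index 1 = real
MODEL_DIR = "distilbert-rephrase-detector"  # hypothetical fine-tuned model path


def to_proba_rows(pipeline_output, labels=LABELS):
    """Reorder per-text score dicts (sorted by score) into a fixed label order."""
    rows = []
    for scores in pipeline_output:
        by_label = {d["label"]: d["score"] for d in scores}
        rows.append([by_label[name] for name in labels])
    return rows


def explain_with_lime(text, model_dir=MODEL_DIR, num_features=10):
    """Return LIME (word, weight) pairs for one review."""
    import numpy as np
    from lime.lime_text import LimeTextExplainer
    from transformers import pipeline

    clf = pipeline("text-classification", model=model_dir, top_k=None)

    def predict_proba(texts):
        # LIME passes a batch of perturbed strings and expects an (n, n_classes) array.
        return np.array(to_proba_rows(clf(list(texts))))

    explainer = LimeTextExplainer(class_names=LABELS)
    explanation = explainer.explain_instance(text, predict_proba, num_features=num_features)
    return explanation.as_list()


def explain_with_shap(texts, model_dir=MODEL_DIR):
    """Return SHAP values for a list of reviews."""
    import shap
    from transformers import pipeline

    clf = pipeline("text-classification", model=model_dir, top_k=None)
    explainer = shap.Explainer(clf)
    return explainer(list(texts))
```

By default, `explain_instance` explains the class at index 1 (here "real"), so positive weights push a review toward being classified as human-written; the object returned by `explain_with_shap` can be rendered with `shap.plots.text`.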

4. Experimental Results and Discussion

This section discusses the experiments conducted to assess the effectiveness of the proposed model, followed by a detailed analysis of the results. To evaluate the model’s ability to differentiate between human-written and ChatGPT-rephrased reviews, several performance metrics [22,23], including accuracy, precision, recall, and F1-score, are calculated using Equations (1)–(4) to provide insight into the model’s strengths and limitations.
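The four metrics follow their standard confusion-matrix definitions: accuracy = (TP + TN) / (TP + TN + FP + FN), precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). A minimal pure-Python sketch follows; treating the fake class (label 0) as the positive class is an assumption, since the choice of positive class is not stated here, and the function names are illustrative.

```python
def confusion_counts(y_true, y_pred, pos_label=0):
    """Count TP, FP, FN, TN, treating `pos_label` (assumed: 0 = fake) as positive."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == pos_label and t == pos_label:
            tp += 1
        elif p == pos_label:
            fp += 1
        elif t == pos_label:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, pos_label=0):
    """Accuracy, precision, recall, and F1 from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, pos_label)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Guarding each denominator returns 0.0 instead of raising an error when a class is never predicted, which can happen on small or skewed evaluation batches.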

This paper aims to highlight the contributions of the proposed methodology to improving text classification and its implications for understanding the nuances of language generation.

4.1. ChatGPT-Rephrased Reviews Observations

The ChatGPT-rephrased reviews were generated by submitting human-written reviews to the ChatGPT platform, where a “rephrase” prompt was applied, utilizing GPT-3.5 and GPT-4 versions. For example, the prompt was (rephrase “From the incredible food, to the warm atmosphere, to the friendly service, this downtown neighborhood spot does not miss a beat.”), and ChatGPT answered “From the delicious food and inviting atmosphere to the attentive service, this downtown neighborhood gem gets everything right”. The reviews varied in writing style and length to provide a comprehensive understanding of how ChatGPT handles different types of content. Throughout this process, several key aspects of the rephrased reviews were considered, including syntactic structure, tone, and contextual relevance. The objective was to identify distinguishing patterns in the way ChatGPT rephrased the text compared to human-written reviews. As a result of these observations, several key findings were identified:

Due to its training on large datasets, ChatGPT’s rephrased reviews may exhibit predictable language patterns. This regularity can serve as a key distinguishing characteristic when compared to the diverse expressions found in human-written reviews.

ChatGPT frequently generates reviews with a more uniform and polished linguistic structure than human-written reviews, which are more varied and, at times, idiosyncratic. This consistency may signal to a classifier that the text lacks authenticity.

If the given review contains misspelled words, ChatGPT rephrases it without any misspellings.

When rephrasing, ChatGPT tends to use more formal language, with careful attention to grammar and sentence structure.

ChatGPT converts numbers into written words.

ChatGPT tends to rephrase short sentences into longer ones.

When rephrasing positive reviews, ChatGPT tends to use more elaborate vocabulary instead of simpler words.

ChatGPT writes dates in a review with the month spelled out as a word.

For instance, a real review stated: “Went here 3 times one weekend while in town for a baseball tournament! Great food and service every time. From breakfast meals to sandwiches, everyone was pleased with their choices. It’s a good option for groups and files”. The rephrased version by ChatGPT was: “I visited this place three times in one weekend while in town for a baseball tournament, and every visit was fantastic! The food and service were consistently great. Whether it was breakfast dishes or sandwiches, everyone in the group was happy with their choice. It’s definitely a solid option for groups and families”. As seen, ChatGPT expanded the original review into a more elaborate structure with a more polished and formal tone, even though the original human-written review is casual. It also rewrote certain phrases to improve correctness, fluency, and readability; for example, the phrase “groups and files” in the original review became “groups and families,” which is far more contextually appropriate and clear. This change exemplifies the model’s tendency toward a more structured and formal style, differing from the casual tone of human-written reviews.
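Several of the observations above, such as numbers being spelled out and short sentences being expanded, are easy to quantify. The following sketch is an illustrative analysis aid rather than part of the proposed DistilBERT model: it computes three simple surface cues for the review pair quoted above, and the function name and choice of cues are hypothetical.

```python
import re

ORIGINAL = ("Went here 3 times one weekend while in town for a baseball tournament! "
            "Great food and service every time. From breakfast meals to sandwiches, "
            "everyone was pleased with their choices. It’s a good option for groups and files")
REPHRASED = ("I visited this place three times in one weekend while in town for a baseball "
             "tournament, and every visit was fantastic! The food and service were consistently "
             "great. Whether it was breakfast dishes or sandwiches, everyone in the group was "
             "happy with their choice. It’s definitely a solid option for groups and families")


def style_cues(text):
    """Word count, number of tokens containing digits, and mean words per sentence."""
    words = text.split()
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "n_words": len(words),
        "n_digit_tokens": sum(any(ch.isdigit() for ch in w) for w in words),
        "mean_sentence_len": len(words) / len(sentences) if sentences else 0.0,
    }
```

On this pair, the rephrased version contains no digit tokens and has longer sentences on average, consistent with the observations listed above; a single example like this illustrates the patterns but does not establish them.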

4.2. Experimental Analysis

Two experiments are conducted to evaluate the effectiveness of DistilBERT in classifying human-written and ChatGPT-rephrased reviews. This paper investigates how different pre-processing techniques affect the model’s ability to distinguish between genuine and fake (AI-generated) reviews.

DistilBERT with Default Tokenization fine-tunes the DistilBERT model with default hyperparameters, using its built-in tokenization as the only pre-processing step. The purpose of this experiment is to examine DistilBERT’s baseline ability to handle the classification task with minimal additional adjustment, assessing how well the model differentiates between human-written and ChatGPT-rephrased reviews using tokenization alone.

The Proposed Model (Fine-tuned DistilBERT) fine-tunes DistilBERT after an extended pre-processing phase consisting of three stages: first, POS tagging identifies the grammatical structure of the text; next, lemmatization reduces words to their base forms; finally, tokenization converts the text into the input format DistilBERT requires while preserving its linguistic properties. This customized pre-processing phase is intended to improve classification performance, as the additional stages enrich the input data and help DistilBERT better distinguish between human-written and AI-generated text.
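The paper does not name the tagger or lemmatizer it uses; common choices are NLTK (`nltk.pos_tag` together with `WordNetLemmatizer().lemmatize(word, pos)`) or spaCy. To keep the sketch self-contained, the version below replaces them with a hypothetical hand-written lemma table and a few toy tagging rules, so it only illustrates why POS tagging comes before lemmatization: the surface form "saw" lemmatizes to "see" as a verb but stays "saw" as a noun. How the POS tags themselves are passed to DistilBERT is not shown and would be an additional design decision.

```python
import re

DETERMINERS = {"a", "an", "the", "this", "that", "my", "our", "their"}

# Hypothetical toy lemma table keyed by (word, coarse POS tag). A real pipeline
# would use a trained tagger and a lexicon-based lemmatizer instead.
LEMMAS = {
    ("saw", "VERB"): "see",
    ("saw", "NOUN"): "saw",
    ("went", "VERB"): "go",
    ("was", "VERB"): "be",
    ("times", "NOUN"): "time",
    ("meals", "NOUN"): "meal",
    ("sandwiches", "NOUN"): "sandwich",
    ("choices", "NOUN"): "choice",
}


def tokenize(text: str) -> list[str]:
    """Lowercase and split into word, number, and punctuation tokens."""
    return re.findall(r"[a-z']+|\d+|[^\sa-z\d]", text.lower())


def pos_tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Toy rule-based tagger (illustrative only, not a trained model)."""
    tagged, prev = [], None
    for tok in tokens:
        if tok in DETERMINERS:
            tag = "DET"
        elif tok.isdigit():
            tag = "NUM"
        elif not re.search(r"[a-z]", tok):
            tag = "PUNCT"
        elif prev == "DET":
            tag = "NOUN"  # a word right after a determiner is treated as a noun
        elif (tok, "VERB") in LEMMAS:
            tag = "VERB"
        elif (tok, "NOUN") in LEMMAS:
            tag = "NOUN"
        else:
            tag = "OTHER"
        tagged.append((tok, tag))
        prev = tag
    return tagged


def lemmatize(tagged: list[tuple[str, str]]) -> list[str]:
    """Choose the lemma matching each token's POS tag; fall back to the token."""
    return [LEMMAS.get((tok, tag), tok) for tok, tag in tagged]


def preprocess(text: str) -> str:
    """POS tagging, then lemmatization; the result would go to the tokenizer."""
    return " ".join(lemmatize(pos_tag(tokenize(text))))


print(preprocess("I saw the saw."))      # -> i see the saw .
print(preprocess("Went here 3 times"))   # -> go here 3 time
```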

Table 4 presents the results of the two experiments: the DistilBERT classifier with tokenization as its only pre-processing step, and the proposed DistilBERT classifier with the full pre-processing phase.

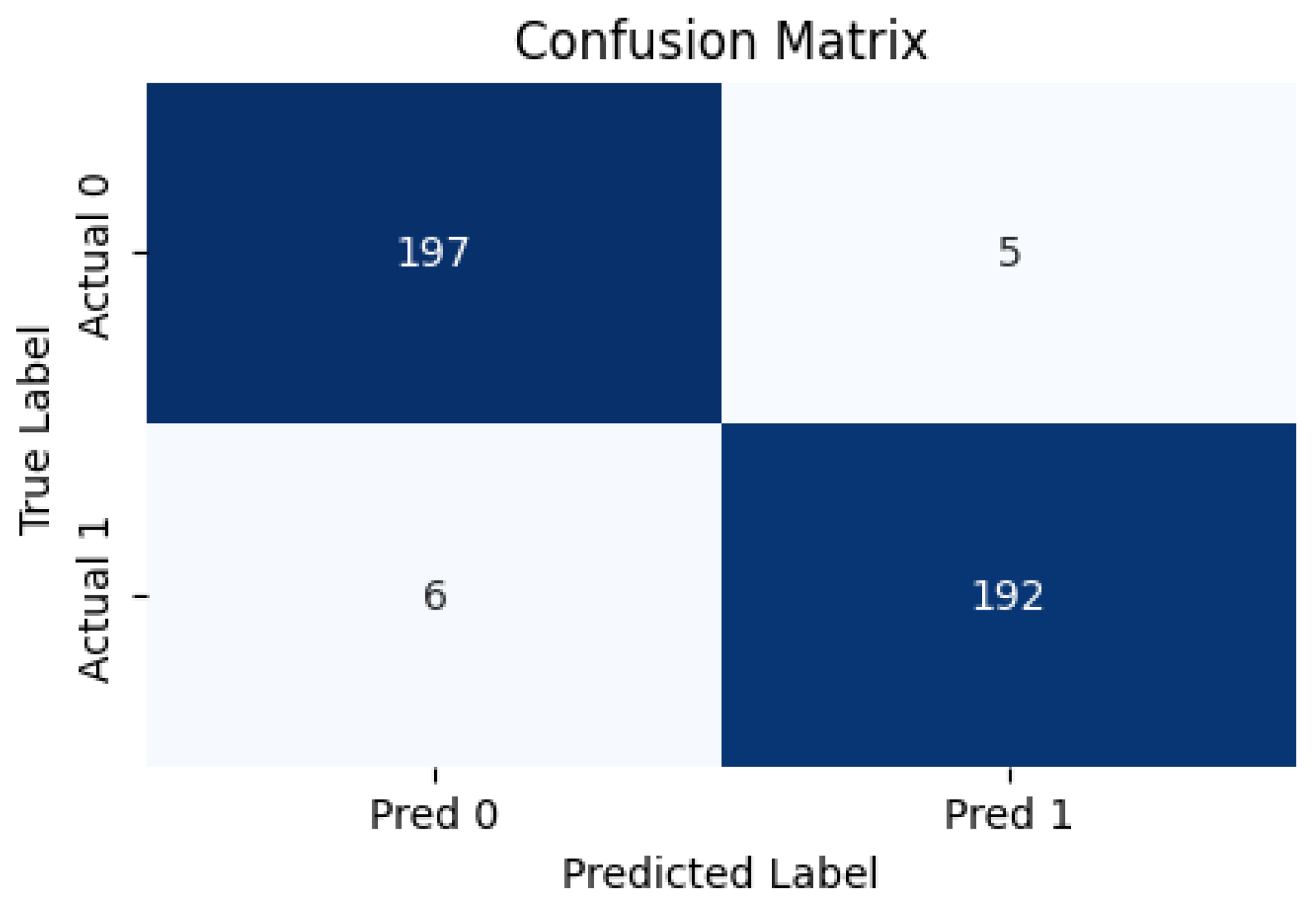

The results show that the DistilBERT model with tokenization only reaches a classification accuracy of 96.8% and 94.25% on the validation and test datasets, respectively. After the full pre-processing phase is applied, accuracy increases to 98.54% and 97.25% on the validation and test datasets, respectively. To further illustrate the performance of the proposed DistilBERT classifier, a confusion matrix is shown in Figure 3.
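The accuracy and F1 figures reported in this section follow the standard confusion-matrix definitions. The sketch below treats "fake" as the positive class (an assumption) and uses hypothetical counts, not the study's actual values behind Figure 3.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, precision, recall, and F1 for the positive class of a
    binary confusion matrix (true/false positives and negatives)."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts, not the study's results.
print(classification_metrics(tp=90, fp=5, fn=10, tn=95))
```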

Moreover, the study evaluates the model on rephrased review samples generated by Gemini and Claude. Specifically, 30 real reviews from Yelp were selected, 15 of which were rephrased by Gemini and 15 by Claude. The model was tested on these samples to assess its robustness in detecting AI-rewritten content, achieving an accuracy of 95% and an F1-score of 95.24%. These findings indicate that the model detects not only ChatGPT-rephrased text but also rephrased content from other AI platforms, supporting its applicability in real-world scenarios.

It is observed that the expanded pre-processing framework significantly improves the DistilBERT model’s accuracy in distinguishing ChatGPT-rephrased reviews from real reviews, while the XAI approaches make its decisions interpretable. The key findings are as follows:

Increased Classification Accuracy: The proposed approach significantly improved accuracy in both the validation and testing phases, indicating that POS tagging and lemmatization improve the model’s understanding of the text, while the XAI techniques reveal which features drive its decisions.

Refined Sentiment Analysis: The model performed better in detecting subtle variations in sentiment, resulting in more accurate classifications. The model analyzes key linguistic features such as syntactic structure, word choice, sentence complexity, and emotional depth. ChatGPT-generated reviews tend to be overly structured, formal, and polished, whereas human-written reviews, even if AI-assisted, often retain inconsistencies, personal tone, and variations in style. This capability is especially relevant for applications that aim to detect and evaluate the authenticity of user-generated text.

Enhanced Robustness: The proposed approach handled language variations effectively, enabling the model to adapt to various expressions of sentiment. The model was tested on outputs from both GPT-3.5 and GPT-4 to evaluate its ability to detect rephrased and AI-generated reviews across different versions. The results demonstrate the model’s adaptability to evolving AI-generated text patterns. The slight improvement with GPT-4 suggests that the pre-processing techniques and fine-tuned DistilBERT model effectively capture the linguistic nuances introduced by more advanced language models. This consistency suggests that the model can remain reliable in identifying AI-rephrased content as generative AI continues to advance. Preserving this adaptability is crucial, as user-generated content frequently shifts in tone and style.

Actionable Insights from XAI: The implementation of LIME and SHAP provided valuable insights into the model’s decision-making process, helping determine which linguistic elements and sentiment cues are the most influential in classifying reviews. This interpretability helps ensure that human-written reviews with minor AI assistance are not misclassified as fully AI-generated content. The transparency provided by LIME and SHAP not only increases confidence in the model but also facilitates future improvements.

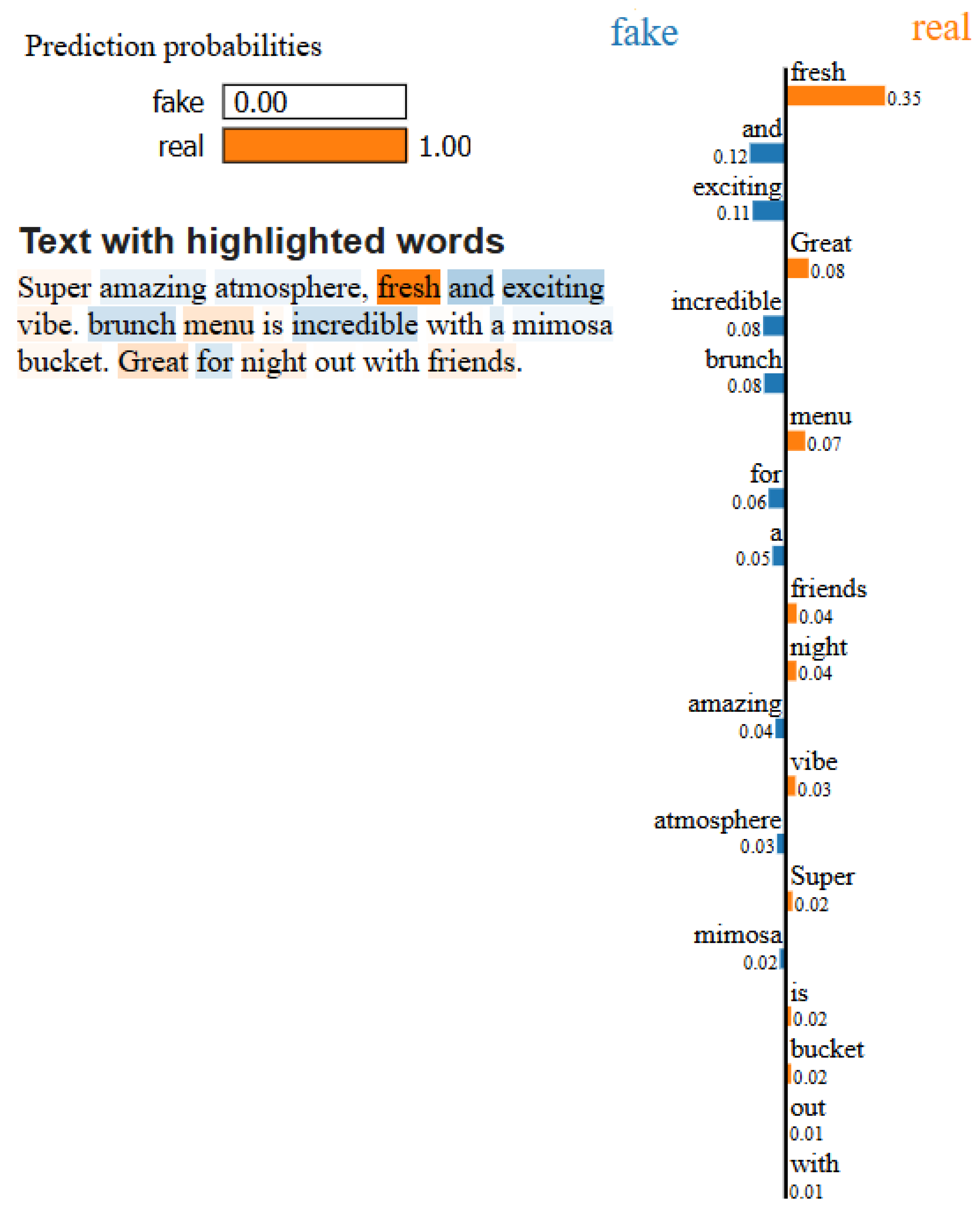

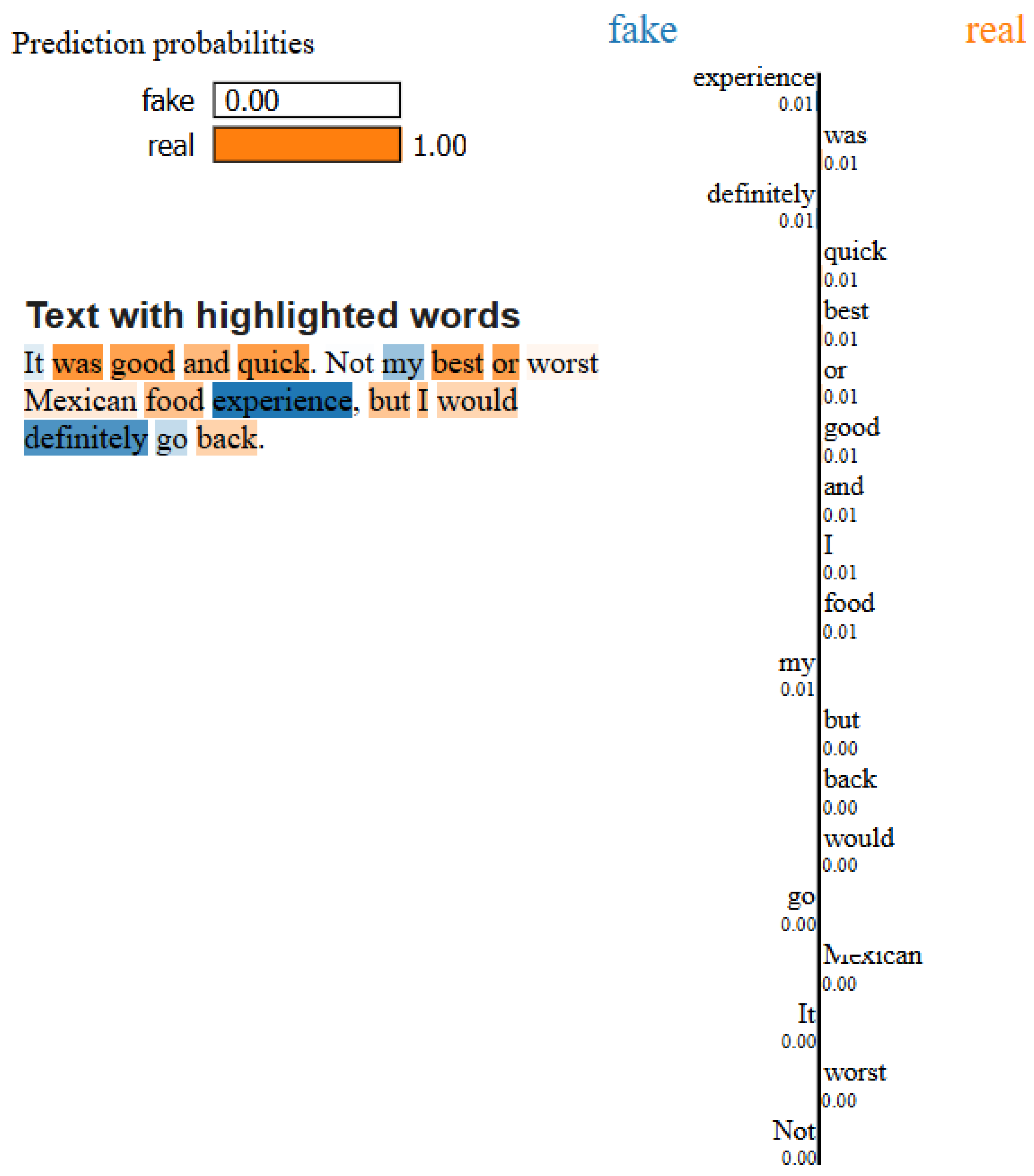

4.3. LIME-Based Model Analysis

To highlight the effectiveness of the proposed approach and assess its performance, the outputs generated by LIME are examined.

Figure 4, Figure 5 and Figure 6 showcase LIME prediction probability graphs, illustrating word importance and providing insights into the model’s decision-making process for ChatGPT-rephrased fake review detection. These three review instances demonstrate how LIME enhances the interpretability of the proposed approach. LIME perturbs a review by removing words, queries the classifier on the perturbed variants, and fits a locally weighted linear model whose coefficients give each word’s contribution toward the fake or real class. The review’s final classification is the class to which the classifier assigns the highest probability; LIME explains that prediction rather than producing it.
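The perturb-and-fit idea can be sketched from scratch in plain Python. This is a minimal illustration under stated assumptions: `toy_fake_prob` is a hypothetical stand-in for the fine-tuned DistilBERT classifier, and the random word-dropping scheme, cosine distance, and kernel width are illustrative choices rather than the `lime` package's defaults (in practice, `lime.lime_text.LimeTextExplainer.explain_instance` would be used).

```python
import math
import random


def toy_fake_prob(words: list[str]) -> float:
    """Hypothetical stand-in classifier: P(fake) rises with a few cue words."""
    cues = {"highly": 1.5, "suggest": 1.0}
    z = -1.0 + sum(cues.get(w.lower(), 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-z))


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b with Gauss-Jordan elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_word_weights(words, predict, n_samples=500, kernel_width=0.25,
                      ridge=1e-3, seed=0):
    """Fit a locally weighted linear surrogate to predict() around `words`."""
    rng = random.Random(seed)
    d = len(words)
    rows, ys, ws = [], [], []
    for i in range(n_samples):
        # First sample is the unperturbed review; the rest drop random words.
        mask = [1] * d if i == 0 else [rng.randint(0, 1) for _ in range(d)]
        kept = sum(mask)
        # Cosine distance between this mask and the all-ones original mask.
        dist = 1.0 - (math.sqrt(kept / d) if kept else 0.0)
        ws.append(math.exp(-dist ** 2 / kernel_width ** 2))
        rows.append([1.0] + [float(v) for v in mask])  # intercept + word bits
        ys.append(predict([w for w, v in zip(words, mask) if v]))
    k = d + 1
    # Weighted ridge regression (X^T W X + ridge*I) beta = X^T W y,
    # leaving the intercept unpenalized.
    xtwx = [[sum(w * r[p] * r[q] for r, w in zip(rows, ws))
             + (ridge if p == q and p > 0 else 0.0) for q in range(k)]
            for p in range(k)]
    xtwy = [sum(w * r[p] * y for r, w, y in zip(rows, ws, ys)) for p in range(k)]
    beta = solve(xtwx, xtwy)
    # Pair each word with its surrogate coefficient, largest magnitude first.
    return sorted(zip(words, beta[1:]), key=lambda t: -abs(t[1]))


review = "I highly suggest trying the crudité appetizer".split()
for word, weight in lime_word_weights(review, toy_fake_prob):
    print(f"{word:>10} {weight:+.3f}")
```

Positive coefficients push the surrogate toward "fake"; words the toy classifier ignores receive coefficients near zero, mirroring how the bar charts separate strong and minor indicators.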

Figure 4 illustrates the prediction probabilities for the target real review: “Super amazing atmosphere, fresh and exciting vibe. Brunch menu is incredible with a mimosa bucket. Great for night out with friends”, and highlights the contribution of each individual word to each class.

As shown, the model assigns this review probabilities of 1 and 0 for the real and fake classes, respectively, so real is the dominant class.

Words like “fresh”, “exciting”, and “Great” contribute significantly to the “real” classification, with scores of 0.35, 0.11, and 0.08, respectively, reflecting the descriptive and enthusiastic tone commonly seen in genuine reviews. Other words such as “incredible”, “brunch”, and “menu”, with contribution scores of 0.08, 0.08, and 0.07, respectively, further strengthen the review’s context, enhancing its credibility and authenticity. These words are identified as key indicators of real reviews, shown as orange bars in Figure 4. Moreover, words like “vibe”, “friends”, and “atmosphere” make modest contributions, with scores of 0.03, 0.04, and 0.03, respectively. While these words are relevant, they are likely neutral because they are commonly found in both real and fake reviews. Generic words such as “Super” and “for”, with scores of 0.02 and 0.06, are widely used in all types of text, which limits their ability to differentiate between real and fake reviews. These words are identified as minor indicators of real reviews. Additionally, the review contains specific references such as “mimosa bucket” and “brunch menu”; such concrete details strengthen its authenticity. This contrasts with AI-rephrased reviews, which tend to rely on vague or overly general praise. Thus, the LIME analysis shows that the proposed approach makes a confident, well-grounded prediction, strongly associating the given text with real reviews.

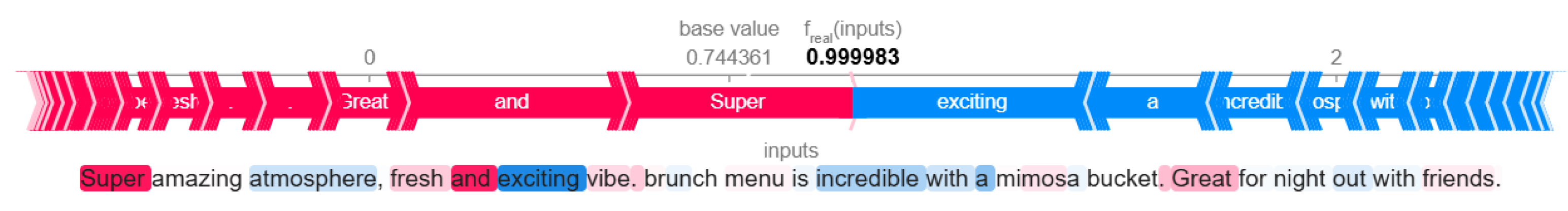

On the other hand, Figure 5 illustrates the prediction probabilities for the target fake review: “The vegetarian options for main courses are outstanding. I highly suggest trying the crudité appetizer. The ambiance is warm and inviting”, and the contribution of each individual word to each class.

As shown in Figure 5, the model assigns this review probabilities of 0 and 1 for the real and fake classes, respectively, so fake is the dominant class. In the bar chart, LIME gives words such as “suggest”, “The”, “highly”, and “outstanding” high contribution scores of 0.24, 0.24, 0.13, and 0.13, respectively. Apart from the determiner “The”, these words are typically associated with subjective language, which may indicate the overstated or overly positive sentiment common in fake reviews. These words are identified as key indicators of fake reviews, shown as blue bars in Figure 5. Moreover, words including “appetizer”, “inviting”, and “ambiance” have contribution scores of 0.09, 0.08, and 0.07, respectively, indicating their influence toward the fake classification, as they are commonly used in descriptive, promotional writing.

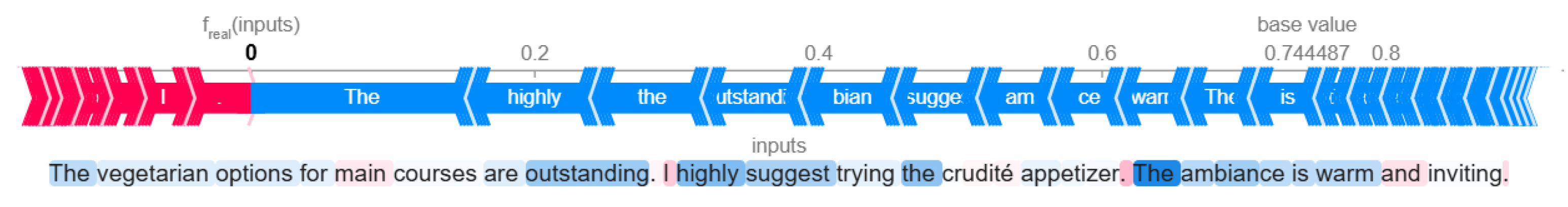

LIME analysis also highlights false positive classifications, as depicted in Figure 6. Words such as “good”, “quick”, “experience”, and “definitely” each contribute only about 0.01 to the classification, indicating that no single word strongly affects it. This is reasonable, as these words are commonly found in both human-written and AI-generated reviews, which explains why the proposed model classified this review as real. However, the review is short, generic, and lacks personal details, indicating a possible false positive. Unlike typical AI-rephrased reviews, these false positives exhibit more human-like variation in style, making them harder to detect. This suggests that AI-generated content can, in some cases, closely mimic human linguistic diversity.

It is observed from LIME analysis that ChatGPT’s reviews often focus on summarizing the overall experience rather than conveying specific emotional reactions. This results in AI-generated reviews feeling impersonal or generalized, lacking the depth of human emotion. Human reviewers, on the other hand, typically write in a way that reflects personal engagement, using repetition or emphasis to reinforce strong feelings (e.g., “LOVE LOVE LOVE that restaurant!”). They provide specific details that make their feedback feel authentic and individualized. Moreover, human writing exhibits considerable variation in tone and style; each person may have a unique way of expressing excitement, disappointment, or satisfaction.

4.4. SHAP-Based Model Analysis

By applying SHAP to interpret model predictions, significant insights were gained into the language features that distinguish ChatGPT-rephrased reviews from real human reviews, further strengthening the LIME findings. SHAP analysis highlighted that specific phrases and structures are commonly associated with machine-generated reviews, particularly those with a polished and formal tone. Words like “super”, “fresh”, and “vibe” were identified as strong indicators of real reviews, with SHAP values of 0.468, 0.106, and 0.078, respectively, as shown in Figure 7.
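SHAP values are Shapley values from cooperative game theory: each word's value is its marginal contribution to the prediction, averaged over all coalitions of the other words. The exact computation below needs 2^n model evaluations, which is why libraries such as `shap` approximate it for real reviews. `toy_real_prob` is a hypothetical stand-in for the fine-tuned classifier, and "masking" a word here simply drops it, which is an assumption of this sketch.

```python
import math
from itertools import combinations


def toy_real_prob(words: list[str]) -> float:
    """Hypothetical stand-in classifier: P(real) given the unmasked words."""
    cues = {"super": 1.2, "fresh": 0.6, "vibe": 0.4}
    z = -0.5 + sum(cues.get(w.lower(), 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-z))


def shapley_values(words: list[str], value) -> list[tuple[str, float]]:
    """Exact Shapley value of each word; value(kept_words) scores a coalition."""
    n = len(words)
    result = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = (math.factorial(size) * math.factorial(n - size - 1)
                      / math.factorial(n))
            for subset in combinations(others, size):
                without_i = [words[j] for j in subset]
                with_i = [words[j] for j in sorted(subset + (i,))]
                phi += weight * (value(with_i) - value(without_i))
        result.append((words[i], phi))
    return result


review = "Super amazing atmosphere, fresh and exciting vibe".replace(",", "").split()
for word, phi in shapley_values(review, toy_real_prob):
    print(f"{word:>10} {phi:+.3f}")
```

By construction, the values sum to the difference between the prediction for the full review and for the fully masked one, so each bar in a SHAP plot can be read as that word's share of the final score; positive values push toward the real class and negative values away from it.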

On the other hand, the words “outstanding” and “highly”, which ChatGPT frequently uses to express positive sentiment that may appear overdone or overly polished, have SHAP values of −0.069 and −0.88, respectively; their negative sign pushes the prediction away from the real class. Stop words show a similar effect, as illustrated in Figure 8.

Furthermore, SHAP highlights that ChatGPT’s reviews usually rely on the repeated use of stop words and punctuation, maintaining a consistent tone across rephrased reviews, as illustrated in Figure 8. SHAP analysis also reveals instances where ChatGPT-rephrased reviews are misclassified as real. As shown in Figure 9, words like “good” and “best” influenced the false positive classification. When AI-generated reviews adopt a human tone and avoid formality, they can mislead the model, and SHAP shows how sentiment and conversational phrasing contribute to such misclassifications. As with the false positives identified by LIME, these samples exhibit more varied writing styles than typical AI-rephrased reviews, suggesting that some ChatGPT outputs can mimic human linguistic diversity.

Another key finding is that ChatGPT’s rephrased reviews have a constant structure regardless of the subject being conveyed, with a balanced, consistent tone. This results in an impression of homogeneity among many AI-generated reviews, as opposed to the diversity of linguistic patterns and structures found in human-authored reviews. When writing reviews, humans employ a variety of expressions, frequently stressing distinctive aspects of their experience through language and tone. This contrast sheds light on how ChatGPT creates language in comparison to human-authored text, as well as providing deeper insights into differentiating patterns between machine-generated and human-generated content.

4.5. Comparative Analysis Against State-of-the-Art Fake Review Detection Approaches

Table 5 summarizes the characteristics of the proposed approach alongside several state-of-the-art approaches for detecting AI-generated and rephrased reviews.

From Table 5, we notice that the proposed fine-tuned DistilBERT model with LIME and SHAP achieved an F1-score of 97.25%, outperforming the models proposed in [13,19,21]. Notably, ref. [13] achieved an F1-score of only 81% using DistilBERT with SHAP on 1000 ChatGPT-rephrased reviews; the proposed model improves on this by 16.25 percentage points. Similarly, ref. [19] used fine-tuned BERT and XGBoost, reporting F1-scores of 93% and 84%, respectively, both below that of the proposed model. RoBERTa + LSTM from [21] achieved high F1-scores of 96.03% and 93.15% on the OpSpam and Deception datasets, respectively, but both remain lower than the 97.25% obtained by the proposed approach. This performance gain is attributed to the advanced pre-processing phase, including POS tagging and lemmatization, which provides a richer linguistic representation than traditional methods such as stop-word removal and lowercasing used in previous studies. Moreover, unlike previous works that primarily rely on SHAP for model interpretability, the proposed model incorporates both LIME and SHAP, offering deeper insight into classification decisions and enhancing transparency.

To evaluate the effectiveness of the proposed model, it was compared with several widely used pre-trained language models on the task of distinguishing ChatGPT-rephrased reviews from human-written ones. Perplexity, a metric that measures how well a language model predicts a sequence of text, was used to generate features from two autoregressive models, namely GPT-2 and TinyLLaMA. Lower perplexity indicates that the text is more predictable to the model, which often aligns with the characteristics of ChatGPT-rephrased content. In contrast, human-written text typically exhibits higher perplexity due to its greater linguistic variability and less predictable structure. For GPT-2 and TinyLLaMA, a perplexity score was computed for each review in the dataset described in Section 3.1. These scores, along with basic textual features such as review length and capitalization, were used to train a simple classifier. In parallel, the pre-trained masked language models BERT, RoBERTa, and DistilBERT were fine-tuned directly on the same dataset for the ChatGPT-rephrased fake review detection task.
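Perplexity is the exponential of the negative mean log-probability that a language model assigns to each token of a text. The sketch below assumes the per-token natural-log probabilities have already been obtained from GPT-2 or TinyLLaMA (that step is not shown) and assembles a feature vector of the kind described above. The study does not specify its exact length and capitalization features, so `review_features` and its capitalization ratio are hypothetical choices.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity = exp(-mean natural-log probability of the tokens)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def review_features(text: str, token_logprobs: list[float]) -> list[float]:
    """Hypothetical feature vector: perplexity, word count, capitalization ratio."""
    letters = [c for c in text if c.isalpha()]
    cap_ratio = sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
    return [perplexity(token_logprobs), float(len(text.split())), cap_ratio]


# Hypothetical log-probabilities: every token assigned probability 1/4,
# so the perplexity is approximately 4.
print(perplexity([math.log(0.25)] * 3))
print(review_features("LOVE LOVE LOVE that restaurant!", [math.log(0.5)] * 6))
```

A lower first feature means the language model found the review more predictable, which, per the reasoning above, tends to indicate ChatGPT-rephrased text.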

Table 6 presents a comparative summary of the performance of pre-trained language models and the proposed model on the task of fake review detection, with the models listed in chronological order of their release.

As illustrated in Table 6, the classifiers built on perplexity scores generated from GPT-2 and TinyLLaMA achieved moderate performance, with F1-scores ranging from 66% to 74%. In contrast, the fine-tuned masked language models demonstrated substantial improvements. RoBERTa and BERT achieved F1-scores of 95% and 94%, respectively, while DistilBERT achieved 94% across all evaluation metrics. The proposed model outperformed all baselines, achieving 97% precision, recall, F1-score, and accuracy. These findings underscore the advantage of incorporating deeper semantic, structural, and lexical features, which go beyond surface-level fluency or perplexity-based cues.

4.6. Limitations

While the proposed approach achieves high accuracy and improved interpretability in detecting ChatGPT-rephrased fake reviews, certain limitations must be acknowledged:

Dependence on ChatGPT-Specific Patterns. The model is fine-tuned on ChatGPT-rephrased reviews, making it effective for detecting this specific manipulation. Although the small test on Gemini- and Claude-rephrased reviews was encouraging, its performance on reviews generated by other models has not been evaluated at scale. Future work should explore cross-model generalization.

Need for Continuous Model Updates. As generative AI evolves, ChatGPT and similar models are improving their ability to mimic human writing. The proposed framework may therefore require periodic retraining on updated AI-generated reviews to maintain detection robustness.

4.7. Real-World Applications and Deployment Feasibility

The proposed model can be integrated into e-commerce platforms, review aggregation websites, and content moderation systems for real-time detection of AI-generated or rephrased reviews. Businesses can implement it as an API-based service that automatically screens reviews for suspicious signals and escalates them for human verification when necessary. The lightweight DistilBERT architecture keeps computational costs low, enabling large-scale deployment with less processing overhead than larger transformer models. However, periodic retraining is necessary to stay aligned with the evolving nature of AI-generated text, and maintaining a balance between automated decisions and human moderation is essential to minimize false positives. For real-world deployment, platforms should adopt a tiered detection approach that flags low-confidence cases for human review to ensure fairness and accuracy in automated content moderation.
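A tiered approach can be expressed as a simple mapping from the classifier's fake-review probability to a moderation action. The function name `route_review` and the thresholds 0.2 and 0.9 are hypothetical and would need calibration on validation data. In keeping with the supplementary role described in the ethical considerations, even the highest tier sends reviews to a person rather than removing them automatically.

```python
def route_review(p_fake: float, low: float = 0.2, high: float = 0.9) -> str:
    """Map P(fake) to a moderation tier (hypothetical thresholds)."""
    if not 0.0 <= p_fake <= 1.0:
        raise ValueError("p_fake must be a probability in [0, 1]")
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low < high <= 1")
    if p_fake < low:
        return "publish"                # confidently human-written
    if p_fake >= high:
        return "priority_human_review"  # strong AI signal, still checked by a person
    return "human_review"               # uncertain: queue for a moderator


for p in (0.05, 0.5, 0.97):
    print(p, route_review(p))
```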

4.8. Ethical Considerations in AI-Driven Fake Review Detection

Privacy and Responsible Use of the Model. Detecting fake reviews involves analyzing textual patterns, which raises important privacy considerations. Unlike some detection models that rely on user metadata (e.g., IP addresses, device information, personal profile, or behavioral tracking), the proposed model strictly operates on linguistic features, ensuring that no personally identifiable information is collected or analyzed. This approach protects user privacy and reduces unintended surveillance risks. Additionally, misuse of the model, such as deploying it to unfairly flag reviews without human supervision, can lead to incorrect penalization of legitimate users. To prevent this, the model is designed to be a supplementary tool rather than a standalone decision-maker. Platforms implementing this system should integrate automated predictions with human moderation to prevent over-reliance on AI-driven decisions.

Addressing Bias and Fairness in Model Predictions. A critical ethical concern in AI-driven review detection is the potential bias against well-structured human-written reviews. Since AI-generated reviews often exhibit coherent sentence structure and formal tone, there is a risk that well-articulated human reviews could be misclassified as AI-generated. To reduce this risk, several actions have been implemented:

Balanced Dataset Curation: The dataset includes a diverse collection of human-written reviews to ensure that the model does not develop a bias toward any specific linguistic style.

Explainability and Transparency: By using LIME and SHAP, the model provides insights into why a review is classified as AI-generated or rephrased, enabling manual verification and reducing arbitrary misclassification.

Adaptive Thresholding: Instead of binary classification (real vs. fake), the model can assign a confidence score, enabling review platforms to flag only the most suspicious cases for further investigation.

5. Conclusions and Future Work

This paper provides a substantial contribution to the field of natural language processing by addressing the fundamental challenge of distinguishing between ChatGPT-rephrased reviews and real reviews. The balanced dataset is an invaluable resource for training and evaluating classification models, and the proposed framework improves the DistilBERT model’s performance using effective pre-processing approaches and XAI methodologies. As AI-generated text becomes more prevalent, the ability to distinguish between genuine and rephrased reviews is critical for preserving trust in online platforms. The findings highlight the importance of robust classification methods capable of detecting AI rephrasing, paving the way for more authentic user experiences. The experimental results showed that the proposed fine-tuned DistilBERT model with the pre-processing phase achieves a classification accuracy of 97.25% on the test set. According to the XAI analysis, ChatGPT’s rephrased reviews are polite, grammatically polished, and rich in elaborate vocabulary, but they lack specific descriptions and details, sound impersonal, and show little emotional expression.

While this study presents a robust approach to detecting fake reviews rephrased with ChatGPT, there are several opportunities for further improvement and exploration. One direction for future research is to expand dataset diversity by incorporating reviews from multiple online platforms (e.g., TripAdvisor and Amazon) to enhance model generalizability. This would enable the model to adapt to different review styles and contextual variations, improving its robustness across applications.

Another direction is to explore hybrid architectures, where DistilBERT is combined with CNNs, LSTMs, or meta-learning techniques to enhance both linguistic feature extraction and interpretability. Ensemble learning techniques could also be investigated to combine multiple model predictions, potentially improving classification accuracy and reducing false positives. This would provide a deeper comparative evaluation and determine the most effective architectures to detect AI-rephrased content. By addressing these future directions, this research can further strengthen the reliability, adaptability, and practical applicability of AI-generated fake review detection systems, ensuring their effectiveness as generative models continue to advance.