Abstract

Large-language-model (LLM) APIs demonstrate impressive reasoning capabilities, but their size, cost, and closed weights limit the deployment of knowledge-aware AI within biomedical research groups. At the other extreme, standard attention-based neural language models (SANLMs), including encoder–decoder architectures such as Transformers, Gated Recurrent Units (GRUs), and Long Short-Term Memory (LSTM) networks, are computationally inexpensive. However, their capacity for semantic reasoning in noisy, open-vocabulary knowledge bases (KBs) remains unquantified. Therefore, we investigate whether compact SANLMs can (i) reason over hybrid OpenIE-derived KBs that integrate commonsense, general-purpose, and non-communicable-disease (NCD) literature; (ii) operate effectively on commodity GPUs; and (iii) exhibit semantic coherence as assessed through manual linguistic inspection. To this end, we constructed four training KBs by integrating ConceptNet (600k triples), a 39k-triple general-purpose OpenIE set, and an 18.6k-triple OpenNCDKB extracted from 1200 PubMed abstracts. Encoder–decoder GRU, LSTM, and Transformer models (1–2 blocks) were trained to predict the object phrase given the subject + predicate. Beyond token-level cross-entropy, we introduced the Meaning-based Selectional-Preference Test (MSPT): for each withheld triple, we masked the object, generated a candidate, and measured its surplus cosine similarity over a random baseline using word embeddings, with significance assessed via a one-sided t-test. Hyperparameter sensitivity (311 GRU/168 LSTM runs) was analyzed, and qualitative frame–role diagnostics completed the evaluation. Our results showed that all SANLMs learned effectively in terms of the cross-entropy loss. In addition, our MSPT provided meaningful semantic insights. For the GRUs (256-dim, 2048-unit, 1-layer), the mean similarity was 0.641 to the ground truth vs. 0.542 to the random baseline (gap 12.1%). For the 1-block Transformer, it was 0.551 vs. 0.511 (gap 4%). While Transformers minimized loss and accuracy variance, GRUs captured finer selectional preferences. Both architectures trained within <24 GB GPU VRAM and produced linguistically acceptable, albeit over-generalized, biomedical assertions. Given their observed performance, LSTM models were designated as baselines for comparison. Therefore, properly tuned SANLMs can achieve statistically robust semantic reasoning over noisy, domain-specific KBs without reliance on massive LLMs. Their interpretability, minimal hardware footprint, and open weights promote equitable AI research, opening new avenues for automated NCD knowledge synthesis, surveillance, and decision support.

1. Introduction

Biomedical knowledge is expanding at a rate that outstrips any human reader. Transforming this deluge of literature into actionable insight therefore requires automated systems that can build, represent, and—critically—reason over large-scale textual data [1]. Two obstacles persist: (i) constructing high-coverage yet affordable knowledge bases (KBs) and (ii) deploying language models that perform robust semantic reasoning under the tight computational budgets typical of clinical and public-health settings.

Conventional relation extraction pipelines and curated knowledge graphs achieve high precision but suffer from low recall and expensive schema design [2,3]. Open Information Extraction (OpenIE) offers a low-cost, high-throughput alternative, yet the resulting noisy triples challenge downstream reasoning tasks [4]. Large language models (LLMs) excel at complex prompting and reasoning strategies (e.g., Chain-of-Thought, Tree-of-Thought, Least-to-Most), yet their compute demands often exceed what most biomedical groups can support [5]. In contrast, Standard Attention-Based Neural Language Models (SANLMs), such as attention-based Gated Recurrent Unit (GRU) networks, Long Short-Term Memory (LSTM) networks, and Transformers, can be trained comfortably on commodity GPUs, but their semantic-reasoning limits remain under-explored.

Consequently, the community lacks a lightweight, end-to-end pipeline that (a) builds domain-specific KBs at scale, (b) equips compact SANLMs with robust reasoning skills, and (c) evaluates semantic coherence without costly expert annotation.

We tackle this gap through three questions: (i) How does combining a commonsense KB with general-purpose and specialized OpenIE KBs affect the reasoning performance of SANLMs in both generic and non-communicable-disease (NCD) domains? (ii) Can a Semantic Textual Similarity (STS)-based metric capture semantic coherence more faithfully than token-level accuracy? (iii) How do attention-based GRUs, LSTMs, and Transformers differ in reasoning accuracy and semantic error patterns?

Contributions

- Framework integration: a pipeline that fuses commonsense knowledge with OpenIE-derived KBs centered on NCD literature, aligning large-scale text mining with domain specificity.

- Comparative evaluation: the first statistically rigorous comparison of GRU, LSTM, and Transformer SANLMs on semantic reasoning over general purpose (e.g., commonsense knowledge) and biomedical NCD KBs.

- New metric: the Meaning-Based Selectional Preference Test (MSPT), which masks the object in an SPO triple and scores the model by how much the embedding-level similarity of its prediction to the gold object phrase exceeds a random-chance baseline, thus capturing semantics beyond exact token matches.

- Qualitative error analysis: a detailed linguistic examination of model errors using semantic frames, roles, and selectional preferences, illuminating domain- and architecture-specific failure modes.

Our results show that attention-based GRU models demonstrated better average generalization according to semantic relatedness measures, while Transformers exhibited superior generalization regarding the average validation loss and accuracy (which were ≈20% vs. ≈53% in the best cases, respectively).

Our MSPT exposes complementary strengths: GRUs generalize semantic relatedness better, achieving a mean similarity of 0.641 with a +0.121 gap (12.1% over a 0.542 random baseline), whereas Transformers reach a mean similarity of 0.551 with a +0.04 gap (4% over a 0.511 random baseline) while being better than GRUs at minimizing and stabilizing loss/accuracy. Although LSTMs show evidence that their generated phrases are not purely random, they still produce senseless sentences that lack contextual relevance to the input subject and object phrases in the NCD domain. Both SANLM architectures operate on commodity hardware (gaming GPUs with up to 24 GB of VRAM) and effectively learn from noisy, end-to-end knowledge bases. Our approach evaluates semantic coherence in both general-purpose (including commonsense) contexts and non-communicable disease (NCD) domains, offering a computationally efficient alternative to resource-intensive, closed models that are often infeasible or unaffordable to deploy.

Our findings establish performance baselines for SANLMs in medical knowledge reasoning (both quantitatively and qualitatively), laying a foundation for future research into resource-efficient Artificial Intelligence (AI) systems. Additionally, this framework potentially simplifies the scaling of knowledge-based AI for impactful applications in resource-constrained environments, particularly for public health research, clinical decision support systems, large-scale NCD surveillance, and other public-health tasks.

Section 2 situates our work within the literature; Section 3 provides our rationale for model and data selection; Section 4 reviews theoretical underpinnings; Section 5 describes data and experimental procedures; Section 6 details the proposed pipeline; Section 7 presents and discusses results; Section 8 contains our position statement on noise propagation due to pseudo-labels; and Section 9 concludes and proposes future directions.

2. Related Work

Regarding systems with IE-based semantic reasoning capabilities, we found common traits shared with the seminal work in Chronic Disease Knowledge Graphs (KGs) [6]. The authors proposed a data model to organize and integrate the knowledge extracted from text into graphs (ontologies, in fact), and a set of rules to perform reasoning via first-order predicate logic over a predefined dictionary of entities and relations [7]. More recently, association rule learning was proposed for relation extraction for KG construction [8] and neural network-based graph embedding for entity clustering from EMRs. In Ref. [9], the authors constructed a KG of gene–disease interactions from the literature on co-morbid diseases. They predicted new interactions using embeddings obtained from a tensor decomposition method. The authors of Ref. [10] proposed a KG of drug combinations used to treat cancer, which was built from OpenIE triples filtered using different thesauri [11,12]. The drug combinations were inferred directly from the co-occurrence of different individual drugs with a fixed predicate and disease. The authors created their resource from the conclusions of clinical trial reports and clinical practice guidelines related to antineoplastic agents.

An EMR-based KG was used as part of a feature selection method for a support vector machine to successfully diagnose chronic obstructive pulmonary disease [13]. In recent work, deep learning has been used to predict heart failure risks [14]. The authors used a medical KG to guide the attentional mechanism of a recurrent neural network trained with event sequences extracted from EMRs. Previously, the authors of Ref. [15] also predicted disease risk, but for a broader spectrum of NCDs, using Convolutional Neural Networks on a KG of EMR events. Medical entity disambiguation is an NLP task aimed at normalizing KG entity nodes, and the authors of Ref. [16] approached this problem as a classification task using a Graph Neural Network. Overall, multiple classical NLP methods have been applied to biomedical KGs, including biomedical KG forecasting from the point of view of link prediction (also known as literature-based discovery) [17].

While relation extraction (RE)-based methods have been widely used to construct KBs [18,19], they often suffer from low recall and limited expressiveness due to their reliance on predefined entity and relation vocabularies [3]. These constraints result in KBs that may not fully capture the richness of biomedical literature. In contrast, OpenIE methods extract relational tuples without the need for predefined schemas, leading to more diverse and comprehensive KBs. For instance, Mesquita et al. [20] demonstrated that OpenIE methods could extract a significantly larger set of relations compared to traditional RE methods. Their study showed that using OpenIE improved the overall recall in the extraction of relational tuples, enhancing the coverage and utility of the resulting KBs for downstream tasks. This expansion of relational data provides machine learning models with more diverse and expressive training material, which is crucial for developing robust semantic reasoning capabilities.

3. Rationale for Model and Data Choices

In this study, we employ Standard Attention-Based Neural Language Models (SANLMs), specifically GRU, LSTM networks, and Standard Transformers, for semantic reasoning over knowledge bases constructed via Open Information Extraction (OpenIE). This choice is driven by the need to explore the capabilities of basic attentional mechanisms in object phrase generation within knowledge base reasoning, a task underexplored with such foundational models. Additionally, our use of OpenIE reflects a commitment to resource-efficient, data-independent research, enabling the study of model performance in realistic, noisy knowledge base scenarios, particularly in the medical domain of non-communicable diseases (NCDs) literature.

3.1. Novelty of Basic Attentional Models for Object Phrase Generation

Attentional mechanisms have revolutionized natural language processing, yet their application in their most basic forms—GRUs with attention and Standard Transformers—remains largely unexamined for generating object phrases in knowledge base reasoning. This task requires predicting semantically coherent object phrases (e.g., “insulin” for the subject–predicate pair “diabetes is treated with”) within subject–predicate–object (SPO) triples derived from open-vocabulary knowledge bases. While attentional models have been applied to related tasks, such as knowledge graph reasoning [21] and commonsense question answering [22], prior work has primarily focused on complex architectures or alternative model types [5], including Recurrent Neural Networks [23,24], LSTMs [25,26], Latent Feature models [27,28], and Graph-based models [29,30].

Notably, COMET [22] leverages large-scale pre-trained language models, such as GPT variants, to generate commonsense knowledge descriptions (e.g., “a chair is used for sitting”). However, COMET’s focus on commonsense knowledge, still constrained by controlled vocabulary (e.g., “it be hot, HasSubevent, you turn on fan”), differs significantly from our task of semantic reasoning over (open) medical knowledge bases derived from NCD literature, which involve complex, domain-specific terminology and noisy, heterogeneous data. While GPT and other LLMs demonstrate improved performance metrics, this enhancement may stem from task memorization rather than robust reasoning [22,31,32]. Moreover, these models pose interpretability challenges when fine-tuned for object phrase generation, as their complex pre-trained weights obscure task-specific reasoning mechanisms. Our study addresses this gap by evaluating the intrinsic reasoning capabilities of basic attentional models, providing clearer insights into their potential and limitations in medical knowledge reasoning.

3.2. Comparison to Alternative Approaches

To contextualize our model selection, we compare GRUs with attention and Standard Transformers to alternative methods for knowledge base reasoning, as summarized in Table 1. These methods are less suited for our task due to the noisy, open-vocabulary nature of OpenIE-derived knowledge bases and the requirement to generate natural language object phrases.

Table 1. Comparison of methods for knowledge base reasoning (key limitations for OpenIE-derived knowledge bases are highlighted in bold).

Symbolic methods struggle with scalability and noise, while embedding-based models like TransE are optimized for structured knowledge graphs. Graph-based methods, such as R-GCNs [29], excel in link prediction but are not tailored for generative tasks involving natural language. COMET, while effective for commonsense knowledge, has significant drawbacks: it requires intensive computational resources, tends to memorize rather than reason about meanings, and exhibits reduced interpretability when fine-tuned for object phrase generation.

In contrast, GRUs with attention and Standard Transformers balance simplicity and power. GRUs excel at modeling sequential dependencies in variable-length SPO phrases, while Transformers’ self-attention captures long-range dependencies, ensuring semantic coherence in object phrase generation. These characteristics make them ideal for handling the contextual richness and noise of OpenIE-derived medical knowledge bases.

3.3. Motivation for Using OpenIE

Our use of OpenIE for knowledge base construction is motivated by the goal of creating a resource-efficient, data-independent research framework. Traditional methods for building knowledge graphs or ontologies require significant time, expertise, and financial investment to define schemas and curate relations [5]. OpenIE, by contrast, enables the rapid extraction of relational triples from unstructured NCD literature without predefined schemas, reducing costs and barriers for researchers. This approach simulates real-world scenarios where computational and data resources are limited, testing model robustness in noisy environments and democratizing knowledge-based AI research.

3.4. Noise Mitigation in Knowledge Base Construction

To address potential noise in OpenIE triples, we employed targeted mitigation strategies during the construction of the OIE-GP and OpenNCDKB knowledge bases (see Section 5 for more details). For the OIE-GP KB, we ensured high-quality data by selecting only triples annotated as factually correct from reliable sources, such as ClausIE and MinIE-C, minimizing non-factual or redundant relations. For the OpenNCDKB, we filtered out triples where the subject or object phrase contained only stop words (e.g., “this”, “that”), as these are often uninformative or erroneous, reducing the initial 22,776 triples to 18,616 valid ones. This limited preprocessing approach balances data quality with resource efficiency, enabling rapid KB construction from unstructured NCD literature while maintaining semantic coherence for reasoning tasks.
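The stop-word filter described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the stop-word list `STOP_WORDS`, the whitespace tokenization, and the helper names are assumptions, since the source does not specify them.

```python
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical, deliberately tiny stop-word list; the list actually used is not given in the text.
STOP_WORDS = {"this", "that", "these", "those", "it", "they", "the", "a", "an", "which"}


def is_only_stop_words(phrase: str) -> bool:
    """Return True when every token is a stop word (an empty phrase also counts)."""
    tokens = phrase.lower().split()
    return all(tok in STOP_WORDS for tok in tokens)


def filter_triples(triples: Iterable[Triple]) -> List[Triple]:
    """Keep triples whose subject and object each contain at least one content word."""
    return [
        (s, p, o)
        for s, p, o in triples
        if not is_only_stop_words(s) and not is_only_stop_words(o)
    ]


raw = [
    ("insulin", "regulates", "blood glucose"),
    ("this", "is associated with", "obesity"),
    ("hypertension", "increases", "that"),
]
kept = filter_triples(raw)  # only the first triple survives
```

By the counts reported above, this kind of filter removed 22,776 − 18,616 = 4,160 triples, roughly 18% of the raw extraction.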

Additionally, we generated 45,032 negative samples using Artificial Semantic Perturbations (like those used in our MSPT evaluation) to evaluate our models’ ability to distinguish valid from invalid triples, which provides insights into the models’ robustness to noise. Relatedly, we used object phrase randomization in our MSPT to measure semantic coherence beyond token-based loss and thereby gain an indication of noise propagation during learning (see Section 4.5 and Section 4.6).
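To make the idea of object phrase randomization concrete, here is a rough sketch of an MSPT-style score. It assumes each phrase has already been mapped to a vector (for example, by averaging word embeddings), that the random baseline is another gold object drawn from the same test set, and that significance is checked with a paired t statistic. All three are illustrative assumptions; the function names `cosine` and `mspt` are hypothetical.

```python
import math
import random
from statistics import mean, stdev
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mspt(pred: List[Vector], gold: List[Vector], seed: int = 0) -> Tuple[float, float, float]:
    """Return (mean similarity to gold, mean similarity to random baseline, paired t statistic)."""
    rng = random.Random(seed)
    n = len(gold)
    sim_gold, sim_rand = [], []
    for i in range(n):
        j = rng.choice([k for k in range(n) if k != i])  # random *other* object (assumed baseline)
        sim_gold.append(cosine(pred[i], gold[i]))
        sim_rand.append(cosine(pred[i], gold[j]))
    diffs = [g - r for g, r in zip(sim_gold, sim_rand)]
    sd = stdev(diffs)
    # The t statistic is undefined when all differences are identical.
    t_stat = mean(diffs) / (sd / math.sqrt(n)) if sd > 0 else float("nan")
    return mean(sim_gold), mean(sim_rand), t_stat


# Toy phrase vectors: each prediction points mostly toward its own gold object.
gold = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
pred = [[1, 0.1, 0, 0], [0, 1, 0.2, 0], [0, 0, 1, 0.3], [0.4, 0, 0, 1]]
m_gold, m_rand, t_stat = mspt(pred, gold)
```

A one-sided p-value would then be read from a Student t distribution with n − 1 degrees of freedom; a statistics package (e.g., `scipy.stats.ttest_rel` with `alternative="greater"`) can do this directly.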

While our noise mitigation strategies are effective for initial evaluations, they represent a minimal preprocessing approach or indirect measurements. Future work could explore advanced techniques, such as triple trustiness estimation [33], which quantifies the reliability of triples based on entity types and descriptions, or canonicalization methods like CESI [34], which reduce redundancy by clustering synonymous phrases. These approaches could further improve model performance in medical knowledge reasoning while maintaining the efficiency of our current methodology.

3.5. Scientific Value and Broader Impact

The scientific value of this work lies in evaluating basic attentional models for object phrase generation in medical knowledge bases, an underexplored area compared to commonsense knowledge tasks. By focusing on GRUs with attention and Standard Transformers, we establish performance baselines that illuminate their reasoning capabilities in handling domain-specific, noisy data. Although the methods we use here are open-domain, this is particularly relevant for NCD literature, where interpretable reasoning is critical for clinical applications. Our use of OpenIE further supports sustainable AI by demonstrating that impactful applications can be developed with cost-effective knowledge resources, with clear performance trade-offs [5]. In addition, our MSPT offers a novel evaluation framework that observes model behavior from the perspective of prediction meaning—beyond mere token matching—and shows promise in assessing semantic coherence. In the future, methods like this may, albeit not entirely, relieve human experts of the burden of validating generated knowledge, thereby facilitating the agile evaluation of knowledge-based systems and expediting their immediate impact in applications for social change or improvement.

4. Theoretical Background

4.1. Open Information Extraction for openKBs

Open Information Extraction (OpenIE) is a paradigm that enables the extraction of relational tuples directly from text without relying on predefined ontologies or relation types. This open-domain and open-vocabulary nature allows OpenIE to capture a wider array of semantic relationships present in biomedical literature, even when classic methods are rule-based [35,36]. The limitations of RE-based methods, such as low recall due to fixed entity and relation sets [2], restrict the expressiveness and contextual richness of the resulting KBs [37]. These constraints can be particularly detrimental when training models for semantic reasoning tasks that require understanding a broad and nuanced range of information.

OpenIE works in such a way that, given the example sentence “habitat loss is recognized as the driving force in biodiversity loss”, it generates semantic relations in the form of SPO triples, e.g., {“habitat loss”, “is recognized as driving”, “biodiversity loss”}. In this triple, in the sense of Dependency Grammars [38], “habitat loss” is the subject phrase, “is recognized as driving” is the predicate phrase, and “biodiversity loss” is the object phrase. In Phrase Structure Grammars (the classic approach), the predicate includes the verb and the object phrase; in Dependency Grammars, by contrast, the verbal form is assumed to be the center (or highest hierarchy) of the sentence, linking the subject and the object. The latter is a convenient approach for building KBs, and therefore for OpenIE. In semantics, the subject is the thing that performs actions on the object, which is another thing affected by the action expressed in the predicate. Predicates therefore relate things (the subject and the object) in a directed way from the former to the latter.

OpenIE takes a sentence as input and outputs different versions of its SPO structure. Normally these versions are “sub-SPO” structures contained in the same sentence, e.g., {“habitat loss”, “is recognized as”, “driving biodiversity”}, and {“habitat loss”, “recognized as”, “driving”}. Notice that the last extraction (triplet) may not be factual at all and, depending on the downstream task this kind of output is used for, it may be considered purposeless.

We use the obtained OpenIE triples to build an openKB that organizes knowledge from NCD-related paper abstracts (Section 5.2). In addition, we used existing extractions to build our OpenIE General Purpose KB (OIE-GP KB) with no specific topics (see Section 5.1). A KB is a special case of a database using a structured schema to store structured and unstructured data. In our case, the structured data constitute the identified elements of semantic triples, i.e., {subject, predicate, object}, while the unstructured part is the open vocabulary text (natural language phrases) of each of these elements.
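As a rough picture of this structure, the sketch below stores each triple with three named fields (the structured part) holding free-text phrases (the unstructured part), and turns it into a source/target pair in which the subject and predicate form the input and the object is the output. The class name `Triple`, the whitespace tokenization, and the `<start>`/`<end>` markers are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    object: str


def to_seq2seq_pair(t: Triple) -> Tuple[List[str], List[str]]:
    """Source = subject tokens followed by predicate tokens; target = object tokens."""
    source = t.subject.split() + t.predicate.split()
    target = ["<start>"] + t.object.split() + ["<end>"]  # assumed boundary markers
    return source, target


# Two of the extractions discussed above for the same sentence.
kb = [
    Triple("habitat loss", "is recognized as driving", "biodiversity loss"),
    Triple("habitat loss", "is recognized as", "driving biodiversity"),
]
pairs = [to_seq2seq_pair(t) for t in kb]
```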

4.2. Neural Semantic Reasoning Modeling

In this paper, we extend the use of SANLMs originally proposed for Neural Machine Translation (NMT) to learning to infer missing open vocabulary items of semantic structures (SPO triplets). In this section, we present the theoretical background behind the involved methods; see Refs. [22,39,40,41] for more details.

Let $(s, p, o) \in \mathcal{K}$ be a semantic triple, where $s$, $p$, and $o$ are subject, predicate, and object phrases, respectively, and $\mathcal{K}$ is the training openKB. The KBC task here serves to predict $o$ given $(s, p)$, which gives place to the conditional probability distribution implemented using a neural network model:

$$P(O = o \mid U = u;\ \mathbf{o}, \mathbf{u}),$$

where $O$ is the random variable (RV) that takes values on the set of object phrases $\mathcal{O}$, and $U$ is the RV that takes values on the set of concatenated subject-predicate phrases, i.e., $u = s \oplus p$. The neural network has learnable parameters $\mathbf{o}, \mathbf{u}$, which can be interpreted as phrase embeddings of $o$ and $u$, respectively. From the point of view of NMT, the probability mass function $P(O \mid U)$ can be used as a sequence prediction model. In this setting, each word $o_i$ of the target sequence (the object phrase) has a temporal dependency on prior words of the same phrase $o_{<i}$, and on the source sequence embedding $\mathbf{u}$ (the concatenated subject-predicate phrases):

$$P(o_i \mid o_{<i}, \mathbf{u}) = g(\mathbf{h}_i, \mathbf{h}_{i-1}, \mathbf{u}), \tag{1}$$

where $g$ is the decoder activation (a softmax function) that computes the probability of decoding the i-th (current) word $o_i$ of the object phrase from both the current hidden state embedding $\mathbf{h}_i$ and the prior state embedding $\mathbf{h}_{i-1}$, as well as from the source embedding $\mathbf{u}$. Notice that, in the case of modeling this sequence prediction problem using Recurrent Neural Networks (RNNs), the i index represents time. In NLP, it is simply the position of a word within a sequence of words.
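Because each object word is conditioned on the previous words and on the source, the probability of a whole object phrase is the product of the per-step probabilities (the chain rule), and the token-level cross-entropy is the average negative log of those factors. The following sketch works this out on made-up numbers; the per-step distributions are hypothetical stand-ins for what a trained decoder might emit for the source “diabetes is treated with”.

```python
import math
from typing import Dict, List

# Hypothetical per-step distributions P(o_i | o_<i, u); the values are invented for illustration.
step_distributions: List[Dict[str, float]] = [
    {"insulin": 0.6, "metformin": 0.3, "<end>": 0.1},
    {"therapy": 0.5, "<end>": 0.4, "insulin": 0.1},
    {"<end>": 0.9, "therapy": 0.1},
]


def sequence_log_prob(target: List[str], dists: List[Dict[str, float]]) -> float:
    """Sum of per-token log-probabilities, i.e., the log of the chain-rule product."""
    return sum(math.log(d[tok]) for tok, d in zip(target, dists))


def token_cross_entropy(target: List[str], dists: List[Dict[str, float]]) -> float:
    """Average negative log-likelihood per target token."""
    return -sequence_log_prob(target, dists) / len(target)


target = ["insulin", "therapy", "<end>"]
log_p = sequence_log_prob(target, step_distributions)   # log(0.6 * 0.5 * 0.9)
loss = token_cross_entropy(target, step_distributions)  # -log(0.27) / 3
```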

4.3. Encoder–Decoder with Attention-Based Recurrent Neural Networks (Attentional Seq2Seq)

Based on important improvements previously reported [40,42], in this work, we first observe the performance of an encoder–decoder architecture (originally called Recurrent Autoencoder Network) with Attention-Based Recurrent Neural Networks (i.e., an Attentional Seq2Seq model) in neural reasoning tasks. Attentional mechanisms are feature extraction layers of neural network models that improve the expressiveness of inner representations (the encoder’s hidden states). This expressiveness encodes the so-called attention weights, which indicate what parts of the input are more important to the prediction task through their inner representations in the network. The result of this inner feature extraction layer is $\tilde{\mathbf{h}}_i$, which is called the attention vector [40]. It is the concatenation, denoted by ⊕, of a context vector $\mathbf{c}_i$ and the hidden state embedding $\mathbf{h}_i$, which are both representations (contextual and temporal, respectively) of $o_i$.

Endowing an RNN with an attention mechanism makes it necessary to replace Equation (1) with

$$P(o_i \mid o_{<i}, \mathbf{u}) = g(\tilde{\mathbf{h}}_i), \qquad \tilde{\mathbf{h}}_i = \mathbf{c}_i \oplus \mathbf{h}_i, \tag{2}$$

where the hidden state embedding of $o_i$ is given by

$$\mathbf{h}_i = f(\mathbf{h}_{i-1}, o_{i-1}), \tag{3}$$

with $f$ being the hidden state activation of the decoder. There are some usual methods to compute the context vector $\mathbf{c}_i$ [40], which encodes the features of the source context in which each word of the output is generated as a target. We used the weighted sum method proposed in Ref. [42]:

$$\mathbf{c}_i = \sum_{j=1}^{|u|} \alpha_{ij}\, \bar{\mathbf{h}}_j, \tag{4}$$

where the attention weights $\alpha_{ij}$ are computed as

$$\alpha_{ij} = \frac{\exp\big(a(\mathbf{h}_i, \bar{\mathbf{h}}_j)\big)}{\sum_{k=1}^{|u|} \exp\big(a(\mathbf{h}_i, \bar{\mathbf{h}}_k)\big)}, \tag{5}$$

and

$$\bar{\mathbf{h}}_j = \bar{f}(\bar{\mathbf{h}}_{j-1}, u_j) \tag{6}$$

is the hidden state of the encoder, so $\bar{f}$ is the corresponding activation. This method, based on an alignment score $a(\cdot, \cdot)$, helps the model learn to pay more attention to a specific pair of embeddings $\bar{\mathbf{h}}_j$ and $\mathbf{h}_i$ when it is expected that their represented words $u_j$ and $o_i$ co-occur in the input (the subject-predicate concatenation) and in the output (the object) at positions $j, i$, respectively. In other words, $\alpha_{ij}$ in Equation (5) measures the alignment probability that $o_i$ will be generated in the next step as a consequence of observing $u_j$ in the current step. Therefore, the weighted sum in Equation (4) measures such a consequential effect over all input positions $j$. In the particular case of our experiments, we used the Attentional Seq2Seq GRU (Gated Recurrent Unit) model proposed by Ref. [40] since GRU-based recurrent models have proven to be effective and better options in computationally constrained environments.
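The weighted-sum attention step described above can be illustrated with a small numerical sketch. It assumes a plain dot product as the alignment score (the model in the text may use a learned score instead) and uses toy two-dimensional states; the function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(decoder_state: Vector, encoder_states: List[Vector]) -> Tuple[List[float], Vector]:
    """Return (attention weights, context vector) for one decoding step."""
    scores = [dot(decoder_state, h) for h in encoder_states]  # alignment scores (assumed dot product)
    weights = softmax(scores)                                 # normalized attention weights
    dim = len(encoder_states[0])
    context = [sum(w * h[k] for w, h in zip(weights, encoder_states)) for k in range(dim)]
    return weights, context


encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one state per source word
decoder_state = [2.0, 0.0]
weights, context = attention_context(decoder_state, encoder_states)
attention_vector = context + decoder_state  # concatenation of context vector and hidden state
```

Here the first and third source positions receive equal, larger weights because their states align best with the decoder state, so the context vector is dominated by them.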

4.4. Transformers

Recurrent models take as input only one element per read. This is the natural way of processing sequences since one element is generated at each time step. However, the more recently proposed Transformer architecture takes advantage of the fact that the data are already generated and stored, so it can process as many elements of a sequence as possible at once.

As first introduced for Attention-Based RNNs [40,42], we use the encoder–decoder Transformer as an SANLM intended to generate object phrases (output) given the concatenated subject–predicate phrases (input) (notice that, in this case, we have sequences of the same length, n, in the input and in the output). The input Transformer encoder block takes as input the n $d$-dimensional (learnable) word embeddings $\mathbf{x}_i \in \mathbb{R}^{d}$ of each item of the input sequence u in parallel. Therefore, such input to the encoder is a matrix $X \in \mathbb{R}^{n \times d}$ (whose rows are word embeddings $\mathbf{x}_i$) accepted by the ℓ-th attention head of the m-headed multi-head self-attention layer [43]:

$$C_\ell = \varphi(A_\ell)\, V_\ell(X),$$

where $C_\ell$ is the context matrix resulting from the ℓ-th attention head, with $\ell = 1, \dots, m$, and $\varphi$ is the element-wise softmax activation. The attention matrix $A_\ell$ is given by

$$A_\ell = \frac{Q_\ell(X)\, K_\ell(X)^{\top}}{\sqrt{d}},$$

where $Q_\ell, K_\ell, V_\ell$ are fully connected layers with linear activations (simple linear transformation layers), and each entry $a_{ij}$ of $A_\ell$ is the attention weight, from $\mathbf{x}_i$ to each other $\mathbf{x}_j$.
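A single self-attention head of the kind described above can be sketched in plain Python. The sketch follows the standard scaled dot-product formulation, in which the softmax normalizes each row of the score matrix and the scores are divided by the square root of the key dimension; both details, along with the identity projection matrices used in the toy call, are assumptions made for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def row_softmax(a: Matrix) -> Matrix:
    """Apply a numerically stable softmax to every row."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def attention_head(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Context matrix of one head: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)  # linear projections of the input
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    return matmul(row_softmax(scores), v)


identity = [[1.0, 0.0], [0.0, 1.0]]        # stand-in for learned projection weights
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three toy 2-dimensional word embeddings
context = attention_head(x, identity, identity, identity)
```

Each row of `context` is a convex combination of the value rows, so every output embedding mixes information from all positions of the input sequence at once.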

The multi-head self-attention layer builds its output by concatenating the m context matrices:

$$C = C_1 \oplus C_2 \oplus \cdots \oplus C_m,$$

where then $C$ is fed to another linear output fully connected layer, i.e., $W_O(C)$, whose output is in turn fed to the normalization layer given by

$$\mathrm{LN}(\mathbf{z}) = \frac{\mathbf{z} - \mu}{\sigma},$$

where $\mathrm{LN}$ is applied both to $W_O(C)$, therefore $Z = \mathrm{LN}(W_O(C))$, and to the output of the block, i.e., $\mathrm{LN}(W_F(Z))$, where $W_F$ is an h-dimensional linear output fully connected layer (h is the dimension of the latent space of the encoder–decoder model, i.e., the number of outputs of the encoder; in most cases, h equals the word-embedding dimension $d$). The user-defined parameters $\mu$ and $\sigma^2$ of $\mathrm{LN}$ are the sample-wise mean and variance, i.e., over each input embedding of the layer.

To build the decoder, a second Transformer block is stacked to an input one just after the first normalization layer of the latter (thus, $W_F$ does not operate for the first block). This way, the output of the first decoder block is taken as the query of the second block, whose key and value are the output of the encoder. As in the case of any encoder–decoder configuration, the decoder takes the target sequence as input and output. The Transformer architecture allows the stacking of multiple blocks (layers), which also extends to the encoder and decoder. In this work, we used 1- and 2-block Transformer encoder–decoder SANLMs.
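The two bookkeeping operations in this block, concatenating the head outputs and normalizing each embedding by its own mean and variance, can be sketched as below. The small constant `eps` and the omission of learnable gain and bias terms are simplifying assumptions, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def concat_heads(heads: List[Matrix]) -> Matrix:
    """Concatenate m context matrices along the feature axis (row i of every head is joined)."""
    return [sum((head[i] for head in heads), []) for i in range(len(heads[0]))]


def layer_norm(vec: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize one embedding by its own (sample-wise) mean and variance."""
    mu = sum(vec) / len(vec)
    var = sum((v - mu) ** 2 for v in vec) / len(vec)
    return [(v - mu) / math.sqrt(var + eps) for v in vec]  # eps avoids division by zero


head_a = [[1.0, 2.0], [0.0, 1.0]]   # toy context matrix of head 1 (2 positions x 2 features)
head_b = [[3.0, 4.0], [2.0, 5.0]]   # toy context matrix of head 2
joined = concat_heads([head_a, head_b])          # 2 positions x 4 features
normalized = [layer_norm(row) for row in joined]
```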

4.5. Selectional Preference Analysis for Utterance Plausibility

This section outlines a simplified framework for analyzing subject–predicate–object (SPO) utterances (sentences), focusing on core semantic principles: Frame Semantics, Semantic (or Thematic) roles, and Selectional Preferences. This approach prioritizes objectivity and efficiency for pure linguistic analysis, which is desirable in the analysis of scientific literature.

4.5.1. Frame Semantics and Semantic Roles

The concept of Frame Semantics, introduced by Charles J. Fillmore, posits that word meanings are understood within the context of conceptual structures called “frames”. These frames represent typical situations, events, or objects, along with their associated participants and roles.

In this framework, the predicate (verb phrase) serves as the primary activator of a relevant semantic frame. The subject and object are then assigned semantic roles (e.g., Agent, Patient, Theme) based on their relationship to the predicate and the activated frame. This process establishes a structural understanding of the utterance (a sentence in our case), defining the fundamental relationships between its constituents (i.e., the building blocks of a sentence: words, phrases, like noun phrases or verb phrases; or even clauses). The relevance of this process in our manual analysis of a model prediction is that this establishes the basic “who did what to whom” of the sentence, which we compare with the ground truth.

For example, some ground truth sentence can be “The child kicked the ball”, from which one can identify its different parts,

- The child kicked the ball;

- DET child PAST.kick DET ball,

where “Kicked” activates the “kicking” frame, from which the following roles are also identified:

- Agent: child (the doer, capable of kicking);

- Patient: ball (the affected entity, capable of being kicked).

Analysis: in the example, the verb “kicked” brings to mind a frame where a person or thing uses a foot to propel something. The subject “The child” is assigned the role of Agent, as it is the entity performing the action. The object “the ball” is assigned the role of Patient, because it is the entity that is being propelled (and is thus affected by the action). Semantic plausibility arises when the assigned thematic roles align with our real-world knowledge and expectations about how events typically unfold. In the particular case of this example, the Agent role implies a capacity for action (child: the doer, is capable of kicking), so an animate entity is typically expected (a human). Similarly, the Patient role implies a capacity for receiving action (ball: the affected entity, is capable of being kicked), so an inanimate entity is expected (an object). In this case, the expectations are fulfilled and the sentence is semantically plausible because children typically kick balls.

In cases where semantic plausibility is not verified, expectations based on real-world knowledge are not fulfilled. For example, in “The table analyzed the data”,

- Agent: table (inanimate, incapable of analysis);

- Patient: data (abstract).

Analysis: The thematic role assignment violates expectations based on real-world knowledge. A table (inanimate ∉ Agent) cannot perform the action of analyzing. This violates our understanding of the capabilities of a table. The thematic role of the Agent requires certain capabilities that a table does not possess. Therefore, the sentence fails in terms of semantic plausibility.

4.5.2. Selectional Preferences

Selectional preferences are constraints on the types of arguments that a verb or other predicate can take. They describe the semantic restrictions that a word imposes on the words that can appear with it. This linguistic analysis framework focuses on the semantic compatibility between a predicate and its arguments. The relationship among the different linguistic theories described in this section is that selectional preferences can be seen as linguistic manifestations of the thematic roles and constraints defined by semantic frames. Therefore, from an end-to-end perspective, frames identified by Frame Semantics analysis provide the basis for preferences. As Resnik explains,

“Although burgundy can be interpreted as either a color or a beverage, only the latter sense is available in the context of Mary drank burgundy, because the verb drink specifies the selection restriction [liquid] for its direct objects” [44].

Testing utterances (sentences in our case) for selectional preferences involves verifying the semantic compatibility between the arguments and their assigned semantic roles [45]. For instance, consider the sentence “The dog ate the bone”:

- Frame: Eating

- Roles:

  - Agent: dog (animate, capable of eating)

  - Patient: bone (edible, capable of being eaten)

- Constraints: dog ∈ animate, and bone ∈ edible (both constraints are satisfied).

Analysis: The verb “eat” imposes preferences for an [animate] Agent and an [edible] Patient. Because “eat” can also be used intransitively, semantic plausibility is preserved even when the Patient is absent. Therefore, the sentence is semantically valid.

Violations of the constraints mean that preferences are not met, indicating potential semantic anomalies. For example, in the sentence “The rock ate the bone”, we have

- Frame: Eating

- Roles:

  - Agent: rock (inanimate)

  - Patient: bone (edible)

- Constraints: rock ∉ [animate] (conflicts with the required [animate] Agent).

Analysis: The verb “eat” imposes preferences for an [animate] Agent and an [edible] Patient. These constraints are only partially met: the Patient (bone) satisfies the [edible] constraint, but the Agent constraint is violated because a rock is inanimate, which conflicts with the required [animate] Agent. Therefore, this sentence is semantically anomalous.
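As an illustration only, the constraint check described in the two “eating” examples can be sketched in a few lines of Python. The feature lexicon `FEATURES`, the frame table `FRAMES`, and the function `check_preferences` are hypothetical names introduced here; they are hand-written toy data, not resources used in this work.

```python
# Toy, hand-written lexicon: these feature sets are illustrative assumptions only.
FEATURES = {
    "dog": {"animate"},
    "child": {"animate"},
    "rock": {"inanimate"},
    "table": {"inanimate"},
    "bone": {"edible", "inanimate"},
    "ball": {"inanimate"},
}

# Hypothetical frame definitions: each role lists the feature the verb prefers.
FRAMES = {
    "eat": {"Agent": "animate", "Patient": "edible"},
}


def check_preferences(verb, roles):
    """Return the list of roles whose filler violates the verb's preference."""
    violations = []
    for role, required in FRAMES[verb].items():
        filler = roles.get(role)
        if filler is None:
            # An absent role (e.g., intransitive use of the verb) is not a violation.
            continue
        if required not in FEATURES.get(filler, set()):
            violations.append(role)
    return violations


print(check_preferences("eat", {"Agent": "dog", "Patient": "bone"}))   # []
print(check_preferences("eat", {"Agent": "rock", "Patient": "bone"}))  # ['Agent']
```

A symbolic check of this kind requires a closed feature inventory, which is precisely what open-vocabulary KBs lack; this motivates replacing the hard constraints with embedding-based similarity.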

4.6. Meaning-Based Selectional Preference Test (MSPT)

When evaluating the reasoning capabilities of language models, traditional performance metrics that rely on exact token matching or token-based statistics may not effectively capture the nuances of natural language semantics and built meanings. This is particularly true given the open vocabulary inherent in natural language and the semantic variability arising from logical inference, synonymy and context. For instance, two phrases can convey the same meaning using different words or structures, rendering exact matching insufficient for assessing semantic reasoning. Moreover, reliance on exact token matching—which drives most current evaluation methods—can lead to the appearance of false positive “hallucinations” [46].

To address these challenges, we introduce the Meaning-Based Selectional Preference Test (MSPT), a probe that hides the object phrase in withheld subject–predicate–object triples, asks the model to supply that object, and then scores the answer by how much its phrase-embedding similarity to the gold object exceeds a random-choice baseline. Higher MSPT gaps therefore reflect stronger, genuinely semantic selectional preferences rather than lucky token matches. This method is designed to measure reasoning quality by assessing semantic relatedness (particularly, meaning similarity) [47,48], specifically focusing on the selectional preferences between subjects, verbs, and objects that drive semantic interpretation [44,49].

As discussed in Section 4.5, selectional preferences are semantic constraints that certain verbs impose on their arguments, determining which types of nouns are appropriate as their subjects or objects. For example, the verb eat typically selects for objects that are edible, whereas the verb attend mostly selects for a place as its object, e.g., school. Capturing these preferences is crucial for understanding and generating coherent and contextually appropriate language.

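In our experiments, we extend the notion of selectional preferences to phrase meaning by means of word embeddings, which in turn build phrase embeddings. Let $\mathbf{e}_a$ and $\mathbf{e}_b$ be the phrase embeddings of two phrases $a$ and $b$, respectively. These embeddings are obtained by summing the word embeddings of the words in each phrase:

$$\mathbf{e}_a = \sum_{w \in a} \mathbf{x}_w, \qquad \mathbf{e}_b = \sum_{w \in b} \mathbf{x}_w,$$

where $\mathbf{x}_w$ is the word embedding of word $w$. The phrase similarity between $a$ and $b$ is measured using the cosine similarity:

$$\cos(\mathbf{e}_a, \mathbf{e}_b) = \frac{\mathbf{e}_a \cdot \mathbf{e}_b}{\lVert \mathbf{e}_a \rVert \, \lVert \mathbf{e}_b \rVert},$$

where $\mathbf{e}_a \cdot \mathbf{e}_b$ denotes the dot product of $\mathbf{e}_a$ and $\mathbf{e}_b$, and $\lVert \mathbf{e} \rVert$ is the Euclidean norm of $\mathbf{e}$. The cosine similarity quantifies the degree of semantic similarity between the two phrase embeddings, with values closer to 1 indicating higher similarity.

To evaluate the average semantic similarity across a set of $m$ phrase pairs, we compute the mean cosine similarity:

$$\bar{s} = \frac{1}{m} \sum_{i=1}^{m} \cos(\mathbf{e}_{a_i}, \mathbf{e}_{b_i}).$$

This mean value reflects the average semantic relatedness between the meanings of the model’s predicted phrases $a_i$ and the meanings of the reference/baseline phrases $b_i$, with $i = 1, \ldots, m$.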
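The summation-based phrase embedding, the cosine similarity, and the mean similarity described above can be sketched as follows. This is a minimal illustration that assumes a toy dictionary of three-dimensional word vectors (`WORD_VECS`); the vectors and all function names are hypothetical, and real experiments would load pretrained embeddings instead.

```python
import math

# Toy 3-dimensional word vectors (made up); not the embeddings used in the paper.
WORD_VECS = {
    "red": [1.0, 0.0, 0.0],
    "wine": [0.0, 1.0, 0.0],
    "burgundy": [0.5, 0.9, 0.0],
    "table": [0.0, 0.0, 1.0],
}


def phrase_embedding(phrase, dim=3):
    """Sum the word vectors of a phrase; unknown words contribute nothing."""
    total = [0.0] * dim
    for word in phrase.lower().split():
        for k, value in enumerate(WORD_VECS.get(word, [0.0] * dim)):
            total[k] += value
    return total


def cosine(u, v):
    """Cosine similarity; defined here as 0.0 when either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean_similarity(pairs):
    """Mean cosine similarity over (predicted, reference) phrase pairs."""
    sims = [cosine(phrase_embedding(a), phrase_embedding(b)) for a, b in pairs]
    return sum(sims) / len(sims)


print(mean_similarity([("burgundy", "red wine"), ("burgundy", "table")]))
```

Returning 0.0 for zero-norm vectors is a design choice of this sketch, made so that phrases containing only out-of-vocabulary words do not raise a division error.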

To assess the reasoning capabilities of the language model, we employ the Student’s t-test to compare the distribution of STS measurements between the predicted object phrases and shuffled ground truth object phrases. The shuffled object phrases serve as a random baseline, simulating a scenario where the correspondence between the subject–predicate pair and the object is disrupted. This randomization effectively perturbs the selectional preferences that are pivotal for semantic coherence.

By disrupting these preferences, we assess whether the model can distinguish between semantically coherent and incoherent phrase tuples. Specifically, we analyze whether the model’s predictions are significantly more semantically similar to the ground truth phrases than to the randomized ones. For example, as noted in Section 4.5.2, the word burgundy can refer to either a color or a beverage. However, in the frame induced by the verb drink, only the beverage sense is appropriate for burgundy due to the selectional preference of the verb drink, which specifies a semantic constraint for its object to be of the type [liquid]. Our random baseline makes the dataset agnostic to these constraints.

Violating selectional restrictions, and thereby creating semantically incoherent subject–predicate ⇒ object triples, enables us to quantitatively evaluate how effectively the language model understands and applies selectional preferences during reasoning. In addition, the use of word embeddings for meaning representation allows us to account for the imprecision of natural language.

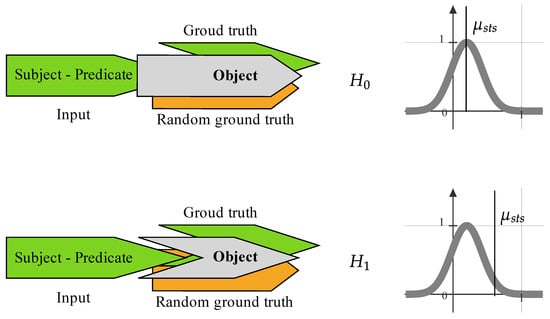

The hypothesis testing framework is as follows (see Figure 1):

Figure 1.

Depiction of our MSPT method.

- Null hypothesis ($H_0$): There is no significant difference in semantic similarity between the model’s predictions and the ground truth phrases versus the shuffled phrases. Under $H_0$, the model’s ability to predict/generate object phrases is equivalent to random chance, indicating a lack of semantic reasoning based on meaning and selectional preferences.

- Alternative hypothesis ($H_1$): The model’s predicted/generated object phrases are significantly more semantically similar to the ground truth phrases than to the shuffled phrases. This suggests that the model effectively captures selectional preferences for meanings and demonstrates genuine semantic reasoning.

By performing the t-test on the STS measurements, we determine whether the observed mean similarity between the model’s predictions and the ground truth is statistically greater than that with the randomized baseline. A significant result in favor of the alternative hypothesis indicates that the model’s reasoning is not merely a product of surface-level associations but reflects an understanding of deeper semantic relationships dictated by selectional preferences.
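A minimal sketch of this comparison is given below. It assumes an independent two-sample Student's t-test with pooled variance; whether the test is paired or unpaired is not stated above, so this choice is an assumption. The function names and the similarity values are made up for illustration and are not results of this work.

```python
import math
import random
import statistics


def shuffled_baseline(gold_objects, seed=0):
    """Shuffle gold objects so they no longer match their subject-predicate pairs."""
    baseline = list(gold_objects)
    random.Random(seed).shuffle(baseline)
    return baseline


def student_t_statistic(sample_a, sample_b):
    """Two-sample Student's t statistic with pooled variance, plus degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    mean_a, mean_b = statistics.fmean(sample_a), statistics.fmean(sample_b)
    var_a, var_b = statistics.variance(sample_a), statistics.variance(sample_b)
    pooled = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    t_stat = (mean_a - mean_b) / math.sqrt(pooled * (1.0 / n_a + 1.0 / n_b))
    return t_stat, n_a + n_b - 2


# Made-up similarity scores: predictions vs. gold objects, and vs. shuffled objects.
sims_gold = [0.70, 0.62, 0.66, 0.58, 0.64]
sims_rand = [0.55, 0.50, 0.57, 0.52, 0.56]
t_stat, dof = student_t_statistic(sims_gold, sims_rand)
print(f"t = {t_stat:.2f}, degrees of freedom = {dof}")
```

A positive statistic points in the direction of the alternative hypothesis. If SciPy is available, the one-sided p-value can be obtained as `scipy.stats.t.sf(t_stat, dof)`.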

This evaluation method emphasizes the model’s ability to generate meanings that are contextually and semantically appropriate, moving beyond mere token-level accuracy. It provides a robust framework for assessing semantic reasoning in language models, especially in open-vocabulary settings where lexical variability and synonymy are prevalent.

By incorporating selectional preferences and STS into our evaluation, we align the assessment more closely with human language understanding, where context and semantic constraints play crucial roles. This approach enhances the interpretability of evaluation results and offers deeper insights into the semantic capabilities of language models.

5. Data

5.1. The OIE-GP Knowledge Base

5.1.1. Dataset Description

In this work, we created a dataset called OpenIE General Purpose KB (OIE-GP) using manually and artificially annotated OpenIE extractions. The main criterion for selecting the sources of the extractions was that they had some human annotation, either for identifying the elements of the structure or for their factual validity. We considered factual validity an important criterion because SANLMs should be trained to reason over factually valid statements.

ClausIE: This dataset was used to generate a large number of triples that were manually annotated according to their factual validity [50] (https://www.mpi-inf.mpg.de/departments/databases-and-information-systems/research/ambiverse-nlu/clausie (accessed on 2 June 2025)). The resulting dataset provides annotations indicating whether the triples are correct or incorrect (i.e., too general or senseless), a scheme that recent datasets have adopted in some form. For example, it is common to find negative samples (incorrect triples) such as {“he”; “states”; “such thing”} or {“he”; “states”; “he”}. From this dataset, we took the 3374 OpenIE triples annotated as correct (positive) samples.

MinIE-C (MinIE-C is the less strict version of the MinIE system, and we selected it because it does not restrict the length of the slots of the triples: https://github.com/uma-pi1/minie (accessed on 2 June 2025)) provided artificially annotated triples that are especially useful for our purposes because they are as natural as possible in the sense of open vocabulary [51]. These are generated by the ClausIE algorithm (as part of MinIE) and are annotated as positive/negative according to the same criterion used by ClausIE. From this dataset, we took the 33,216 OpenIE triples annotated as positive samples.

CaRB is a dataset of triples whose structure has been manually annotated (supervised) with n-ary relations [52]. From this dataset, we took the 2235 triples annotated as positive samples.

WiRe57 contains supervised extractions along with anaphora resolution [53]. The 341 hand-made extractions of the dataset are 100% useful as positive samples because they include anaphora resolution.

5.1.2. Noise Mitigation Strategy

Annotation-based selection: The datasets publicly available already include annotations as factually correct or factually incorrect. Only triples annotated as factually correct from sources like ClausIE (3374 triples), MinIE-C (33,216 triples), CaRB (2235 triples), and WiRe57 (341 triples) were selected. This ensures semantic validity and reduces non-factual or senseless triples.

5.2. The OpenNCDKB

5.2.1. Dataset Description

A noncommunicable disease (NCD) is a medical condition or disease that is considered to be non-infectious. NCDs can refer to chronic diseases, which last long periods of time and progress slowly. We created a dataset of scientific paper abstracts related to nine different NCD domains: breast cancer, lung cancer, prostate cancer, colorectal cancer, gastric cancer, cardiovascular disease, chronic respiratory diseases, type 1 diabetes mellitus, and type 2 diabetes mellitus. These are the most prevalent worldwide NCDs, according to the World Health Organization [54].

The domains from which we built our dataset of scientific paper abstracts were for nine different NCDs:

We used the names of the diseases as search terms to retrieve the k∼150 most relevant abstracts from PubMed (National Library of Medicine, National Center for Biotechnology Information (NCBI): https://pubmed.ncbi.nlm.nih.gov (accessed on 2 June 2025)) (see Table 2). The resulting set of abstracts constituted our NCD dataset. To generate our Open Vocabulary Chronic Disease Knowledge Base (OpenNCDKB), we retrieved a total of 1200 article abstracts that correspond to the NCD-related domains mentioned above.

Table 2.

Different target domains included in the OpenNCDKB and the number of abstracts retrieved from PubMed.

To obtain OpenIE triples (and therefore, an open vocabulary KB), we used the CoreNLP and OpenIE-5 libraries [55,56]. First, we took each abstract from the NCD dataset and split it into sentences using the CoreNLP library. Afterwards, we took each sentence and extracted the corresponding OpenIE triples using the OpenIE-5 library. By doing so, we obtained a total of 22,776 triples.

5.2.2. Noise Mitigation Strategy

The 22,776 triples obtained from OpenIE were filtered to remove those containing only stop words in the subject or object phrases. After this preprocessing, we were left with 18,616 triples that were considered valid for our purposes.
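The stop-word filter described above can be sketched as follows. The stop-word list, the example triples, and the function names are illustrative assumptions; the actual stop-word list used for the OpenNCDKB is not specified here.

```python
# Small illustrative stop-word list (assumption); not the list used in the paper.
STOP_WORDS = {"a", "an", "the", "it", "he", "she", "they", "this", "that",
              "of", "in", "to", "is"}


def only_stop_words(phrase):
    """True when the phrase is empty or every token is a stop word."""
    tokens = phrase.lower().split()
    return all(token in STOP_WORDS for token in tokens)


def filter_triples(triples):
    """Keep triples whose subject and object both contain a non-stop word."""
    return [
        (subj, pred, obj)
        for subj, pred, obj in triples
        if not only_stop_words(subj) and not only_stop_words(obj)
    ]


# Made-up example triples in (subject, predicate, object) form.
triples = [
    ("metformin", "reduces", "blood glucose"),
    ("it", "is associated with", "the risk of stroke"),
    ("smoking", "increases", "this"),
]
print(filter_triples(triples))  # [('metformin', 'reduces', 'blood glucose')]
```

Note that the predicate is deliberately left unchecked in this sketch, since the filter described above only concerns the subject and object phrases.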

In addition to the valid triples, we also generated semantically incorrect negative samples (triples). These were generated using the same methods for artificial semantic perturbations and were preprocessed in the same way as the positive ones, giving 45,032 semantically invalid triples. These negative samples were used for quantitatively evaluating selectional preferences (see Section 4.6) and, in future work, can be used to teach factual validity to the models, in a way that resembles the plausibility score included in the ConceptNet Common Sense Knowledge Base [57].

5.3. Our Semantic Reasoning Tasks

To perform training, we first used a compact version of the ConceptNet KG converted into a KB (the ConceptNet Common Sense Knowledge Base [57]) (https://home.ttic.edu/~kgimpel/commonsense.html (accessed on 2 June 2025)) consisting of 600k triples. We also used our OIE-GP Knowledge Base (Section 5.1) containing 39,166 triples, i.e., only the (semantically) positive samples of the whole data described in Section 5.

From these KBs, we constructed mixed KBs that include the source task vocabulary and include, as much as possible, the missing vocabulary needed to validate the model on the target task related to NCDs. In this way, we obtained our source KBs:

- OpenNCDKB. We split the 18.6k triples of the OpenNCDKB into 70% (13.03k) for training and 30% for testing (5.58k). We included the OpenNCDKB here because, in the context of the source and target task, we simply split the whole KB into train, test, and validation data. Validation data was considered the target task in this case.

- ConceptNet+NCD. By merging the triples collected from the ConceptNet Knowledge Graph and those from OpenNCDKB, we obtained our ConceptNet+NCD KB containing 429.12k training triples and 183.91k test triples.

- OIE-GP+NCD. We obtained our OIE-GP+NCD KB by merging 39.17k OIE-GP and 13.03k NCD triples to obtain a total of 52.20k triples. These were split into 70% (36.54k) for training and 30% (15.66k) for testing.

- ConceptNet+OIE-GP+NCD. We obtained this large KB, which includes ConceptNet, general purpose OpenIE (the OIE-GP KB), and NCD OpenIE triples (the OpenNCDKB), by taking the union of ConceptNet+NCD and OIE-GP+NCD. This source training task comprises 600k + 39.17k + 13.03k = 652.20k total triples. These were split into 70% (456.54k) for training and 30% (195.66k) for testing.
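The split sizes quoted in the list above follow from simple arithmetic, which can be checked with the short sketch below. Sizes are expressed in thousands of triples, as in the text; the helper name `split_70_30` is hypothetical.

```python
def split_70_30(total_k):
    """Return (train, test) sizes in thousands for a 70/30 split, rounded to 2 decimals."""
    return round(0.7 * total_k, 2), round(0.3 * total_k, 2)


# Component sizes in thousands of triples, as stated in the text.
CONCEPTNET_K = 600.0
OIE_GP_K = 39.17
NCD_TRAIN_K = 13.03

mixed_kbs = {
    "ConceptNet+NCD": CONCEPTNET_K + NCD_TRAIN_K,
    "OIE-GP+NCD": OIE_GP_K + NCD_TRAIN_K,
    "ConceptNet+OIE-GP+NCD": CONCEPTNET_K + OIE_GP_K + NCD_TRAIN_K,
}

for name, total_k in mixed_kbs.items():
    train_k, test_k = split_70_30(total_k)
    print(f"{name}: total = {total_k:.2f}k, train = {train_k:.2f}k, test = {test_k:.2f}k")
```

The printed values match the training and test sizes reported for the three mixed KBs.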

All the mentioned quantities consider that we filtered out the triples whose subject or object phrases were only stopwords. The utilization of OpenIE extractions in constructing the OIE-GP and OpenNCDKB Knowledge Bases ensures that our dataset captures a broad spectrum of semantic relations present in the biomedical literature. This comprehensive approach aligns with our objective to overcome the limitations of RE-based KBs and provides a robust foundation for training our semantic reasoning models.

6. Methodology

DL and NLP researchers recently tested Transformer-based [21,22,41] and recurrent neural network-based [57] SANLMs in general purpose CSKR tasks where open vocabulary is considered. In light of this progress, our research uses a methodological approach that can be especially useful for the semantic analysis of documents dealing with arbitrary but specialized topics, such as open domain scientific literature.

Building upon the advantages of OpenIE, our methodology integrates these methods to construct the training KBs for our semantic reasoning models. By extracting relational tuples from biomedical literature using Stanford CoreNLP [55], we generated the OpenNCDKB, which encompasses a wider range of semantic relations from a dataset of paper abstracts retrieved from PubMed. We also integrate into our experiments relations already extracted from general purpose texts with the different state-of-the-art OpenIE methods described in Section 5.1.

By using our KBs, we trained Transformer and recurrent encoder–decoder SANLMs to address combinations of two similar semantic reasoning tasks that involve general purpose and open vocabulary: the ConceptNet common sense KB, and the OIE-GP, an openKB we built from multiple sources that used OpenIE extractions. The train, test, and validation KBs were enriched with specialized domains, i.e., chronic disease literature abstracts induced via the OpenNCDKB.

Although this open vocabulary approach can add complexity to modeling semantic relationships (and therefore to the learning problem), it also adds expressiveness to the resulting KBs. Such expressiveness can improve the contextual information in semantic structures and thus allow the SANLMs to discriminate useful patterns from those that are not useful for the semantic reasoning task [58]. We use Standard Transformer and Attention-Based GRU models because they have been instrumental in the advancement of modern language modeling, still demonstrating state-of-the-art performance in diverse NLP tasks. The Transformer architecture, with its self-attention mechanism and parallelization capabilities, has significantly improved the ability to capture long-range dependencies and contextual relationships. Similarly, attention-based GRUs enhance sequential modeling by dynamically weighting input–output relevant information, making them effective for capturing semantic nuances. Given their strengths in contextual reasoning and semantic representation, we believe these models are well-suited for addressing fundamental reasoning questions at the semantic level. In addition to these models, we include attention-based LSTM networks to provide a baseline comparison.

To show the effectiveness of our approach from multiple points of view, we evaluated and analyzed the results for both the Transformer and the recurrent SANLMs in terms of

- Performance metrics on train and test data. In particular, accuracy measures reasoning quality as an exact match between the model prediction and the ground truth tokens. Cross entropy quantifies the degree of information dissimilarity between the probability distribution predicted by the model and the ground truth one. These metrics are effective at learning time because the inner representations of the SANLM acquire knowledge of which specific words (of the object phrase in our case) are likely to follow the input ones (the subject and predicate). This helps to select models for subsequent evaluations and to establish baseline models.

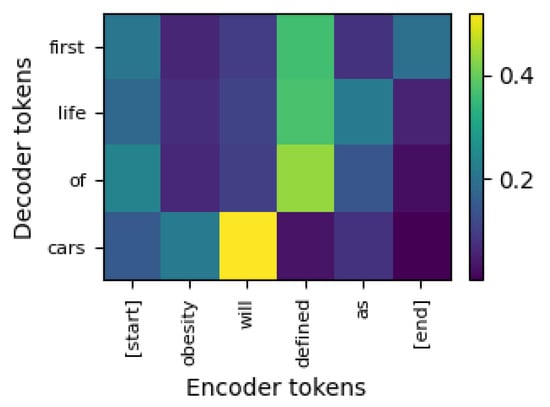

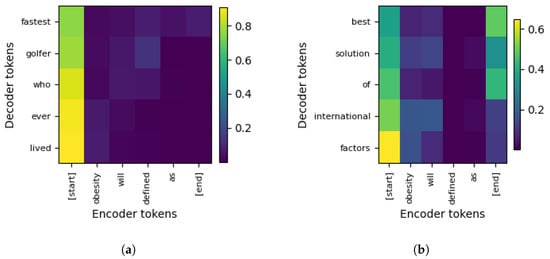

- Attention matrix visualization. In our specific context dealing with semantic reasoning tasks, visualizing the attention matrices using heatmaps would help in understanding how well the models are capturing the semantic nuances and relationships in the data. This provides interesting insights when dealing with complex, domain-specific data like medical literature, where understanding the context and relationships between concepts is crucial. This helps to further select models for subsequent evaluations.

- Meaning-based selectional preference test (MSPT). To evaluate the semantic reasoning quality of model predictions, we conducted hypothesis testing using STS and selectional preference perturbations on test and validation datasets. This approach measures the semantic relatedness between model-generated object phrases, ground truth references, and a random baseline (Section 4.6). Specifically, we designed a three-way comparison framework to assess

- (a) The similarity distribution between predicted and ground truth object phrases;

- (b) The separation of this distribution from that of shuffled ground truth phrases (random baseline), which simulates misalignment of selectional preferences.

STS scores were calculated to quantify meaning alignment, with a focus on whether predictions significantly diverged from misaligned selectional preferences and rather converged toward gold standard references. Statistical analysis was applied to determine the significance of differences between these distributions.

- Manual inspection of the inferences for the test and validation data. To complement quantitative evaluations, we conducted a manual inspection of model inferences across test and validation datasets. This qualitative analysis framework aims to

- (a) Identify semantic patterns captured by SANLMs through their generated outputs;

- (b) Assess the consistency of these patterns across test and validation model predictions.

By systematically comparing inferred outputs against ground truth references, we evaluated how robustly SANLMs encode semantic regularities (e.g., contextual relationships, lexical coherence) and whether these generalize beyond training data.

From the perspective of using the ConceptNet knowledge base (KB) as training data, it is important to note that, while this KB has been largely compiled manually, it was not originally designed for the direct training of models to generate triplets. Regarding OIE-GP and OpenNCDKB, these KBs are built from the extractions of already trained supervised and unsupervised methods, neither of which was designed for direct training of models to generate triplets. Therefore, the semantic reasoning tasks addressed by our SANLMs represent a distantly supervised learning problem: predicting/generating object phrases given the subject and predicate phrases.

7. Results and Discussion

7.1. Experimental Setup

For our experiments, we used previously validated Transformer and attention-based GRU model hyperparameters to evaluate their performance on our multiple semantic reasoning tasks, i.e., predicting/generating object phrases, given the subject and predicate phrases (see Table 3). Because our KBs are smaller than the datasets used for Neural Machine Translation (NMT) by the authors of [39,40] to introduce these models, we decided to use the smallest architectures they reported.

Table 3.

Hyperparameter configurations for model architectures (key contrasting architectural configurations are highlighted in bold).

In the case of the standard Transformer model [39], the values of the source and target sequence lengths, the model dimension $d_{\text{model}}$ (the input and positional word embeddings), the output Feed Forward Layer (FFL) dimension (denoted as $d_{ff}$ in the original paper), the number of attention heads $h$, the attention key and value embedding dimensions $d_k$ and $d_v$, and the number of Transformer blocks $N = 2$ are listed in Table 3. In addition, we considered the possibility that such a “small” model is still too big for our KBs (the largest one has ∼652k triples) compared to the 4.5 million sentence pairs this model consumed in the original paper for NMT tasks. Therefore, we also included an alternative version of the base model using only one Transformer block ($N = 1$) in both the encoder and decoder, while keeping all other hyperparameters of the model. In the case of the decoder, a single Transformer block ($N = 1$) refers to two self-attention layers, whereas $N = 2$ refers to three of these layers (i.e., the decoder’s number of blocks is $N + 1$ with respect to the encoder).

In the case of the attention-based GRU model, we adopted the hyperparameters specified in the corresponding original proposals [40,42] to build baseline recurrent models. In addition, we considered previous work in which additional architecture hyperparameters of Transformers and recurrent models were compared in terms of performance [59]. Namely, we included two contrasting recurrent architectures (for both encoder and decoder). The first one was labeled GRU-W (GRU Wide): sequence lengths of 10, 2048 hidden states (units), each with an embedding dimension of 256, and a training batch size of 128. The second one was labeled GRU-S (GRU Squared): sequence lengths of 30, 1024 hidden states (units), each with an embedding dimension of 1024, and a training batch size of 32.

The models were trained using different KBs constructed from different sources to select the semantic reasoning task and the model that best generalizes to the OpenNCDKB validation set. Using the source KBs and the OpenNCDKB, we obtained the four KBs used for training the models (see Section 5.3): OpenNCDKB, ConceptNet+NCD (CN+NCD, for short), OIE-GP+NCD, and ConceptNet+OIE-GP+NCD (CN+OIE-GP+NCD, for short). Using each of these KBs, we trained eight Transformer models, four models with $N = 1$ (i.e., Transformer 1) and four models with $N = 2$ (i.e., Transformer 2). In the case of the baseline recurrent models, GRU-W and GRU-S, these were trained with the OIE-GP+NCD and CN+OIE-GP+NCD KBs separately. Thus, we trained four baseline models across different KBs, contrasting architectural dimensionalities through two configurations: GRU-W (wide: large hidden units with smaller embeddings) and GRU-S (squared: balanced hidden/embedding dimensions).

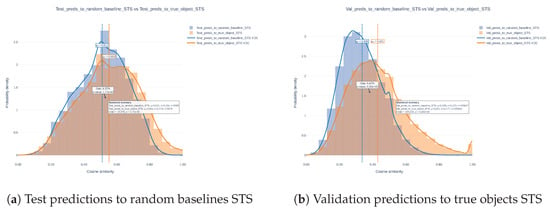

We compared and analyzed the test semantic reasoning tasks using performance metrics (i.e., sparse categorical cross-entropy) of the eight Transformer models and the four GRU models (training and test). We set a maximum of 40 epochs to train and test the models with each KB, with patience = 10 for a minimum improvement of 0.005.
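The stopping rule can be illustrated with the following sketch. It assumes that the monitored quantity is the validation loss and that the minimum improvement is 0.005, the value reported in the caption of Figure 3; the function name and the loss curves are hypothetical and do not reproduce the training runs.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.005, max_epochs=40):
    """Return the 1-based epoch at which training would stop."""
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if best - loss > min_delta:
            # Improvement larger than min_delta: record it and reset the counter.
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    # Patience never exhausted: stop at the last available epoch or at max_epochs.
    return min(len(val_losses), max_epochs)


# Made-up loss curve: improves for three epochs and then stays flat.
flat_after_three = [3.0, 2.5, 2.4] + [2.4] * 20
print(early_stopping_epoch(flat_after_three))  # 13
```

With patience = 10, a run whose validation loss stops improving after epoch 3 ends at epoch 13, well before the 40-epoch limit.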

We also performed an STS-based hypothesis test on all resulting test and validation data (see Section 4.6). The overall outcome of this STS-based hypothesis test was to verify whether the STS measurements with respect to the true object phrases and with respect to random baselines come from different distributions; that is, to decide whether the null hypothesis, i.e.,

Hypothesis 0 (H0)

semantic similarity measurements with respect to true object phrases and with respect to random baselines come from the same distribution,

can be rejected with confidence and whether this holds for both the test and validation datasets.

The neural word embeddings we used for Meaning-Based Selectional Preference Test (MSPT) were trained on the Wikipedia corpus to obtain good coverage of the set difference between the vocabulary of the test and validation data (PubMed paper abstracts contain a simpler vocabulary than the paper itself, while Wikipedia contains a relatively technical vocabulary). The word embedding method used was FastText, which has shown better performance in representing short texts [60]. The phrase embedding method used to represent object phrases was a simple embedding summation; this was because, at the phrase level, even functional words (e.g., prepositions, copulative and auxiliary verbs) can change the meaning of the represented linguistic sample [48].

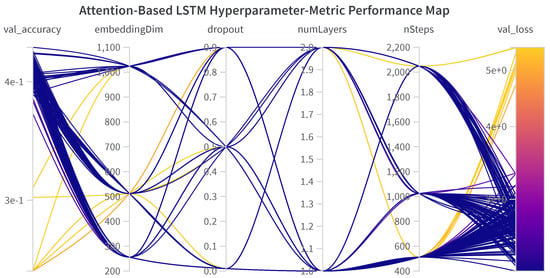

Additionally, we investigate how the demands of semantic reasoning tasks differ from those of neural machine translation (NMT), where GRU hyperparameters have been extensively validated. To this end, we conducted a sensitivity analysis for attention-based GRU and LSTM models trained on our largest combined KB (CN+OIE-GP+NCD) to provide a broader performance evaluation of these standard attentional models, which are less explored in reasoning tasks compared to Transformers. The analysis evaluates the impact of embedding dimensionality, number of hidden units (a.k.a. steps, or states), number of layers, and dropout probability (regularization) on accuracy and loss. It comprises two sensitivity studies: one for the attention-based GRU (311 trials) and the other for the attention-based LSTM (168 trials). All models were trained on the same KB, ran on four RTX-4090 GPUs, and were tuned with Bayesian optimization; a random forest surrogate model supplied the feature importances and the Spearman correlations (for all our hyperparameter search analysis, we used the Weights & Biases (https://shorturl.at/SHw6Y (accessed on 2 June 2025)) Python API).
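To make the correlation part of this analysis concrete, the sketch below computes a Spearman rank correlation between one hyperparameter and a validation loss in plain Python. It is an independent illustration and not the Weights & Biases implementation; the function names and the trial values are made up.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, assigning tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up trials: number of hidden units and the validation loss each one reached.
units = [256, 512, 1024, 2048]
val_loss = [3.4, 3.1, 2.9, 2.7]
print(spearman(units, val_loss))
```

A value close to −1 for these made-up trials would indicate that larger numbers of hidden units are monotonically associated with lower validation loss.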

At the end, we manually inspected and discussed the natural language predictions of the best models using both test and validation data. To do this, we randomly selected five input samples from the test subject–predicate phrases and fed them to the SANLMs that exhibited the highest confidence during our MSPT. We analyzed the predicted object phrases to explore their meaning and the semantic regularities they demonstrated. We then repeated this inspection using validation data from the OpenNCDKB, randomly selecting five subject–predicate inputs not seen during training. This allowed us to verify whether the semantic regularities identified in the test predictions were reproduced in the validation predictions.

7.2. Results

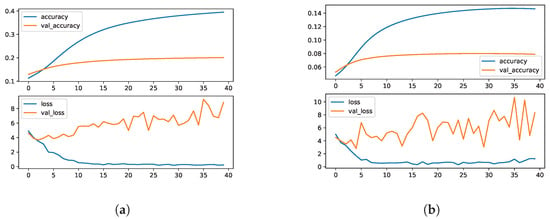

7.2.1. Training- and Validation-Loss Profiles of Attention-Based GRU and Transformer Models

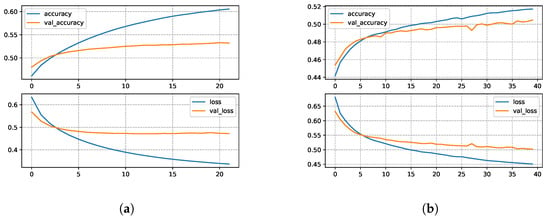

In Figure 2, we start by showing the progress of the performance metrics of the two baseline GRU models (GRU-W and GRU-S) during 40 epochs. Both models were trained and validated on the OIE-GP+NCD KB. Figure 2a shows the accuracy and the loss for the GRU-W. Notice that the difference between its train and validation accuracy (accuracy = 39% and val_accuracy = 20%, respectively) is relatively large, stable, and continues to diverge slowly. The loss function behaved differently (loss = 0.28 bits and val_loss = 8.25 bits): the divergence is even more prominent, and the validation loss (val_loss in the figure) appears much more unstable even though the training loss settles relatively early. This may be a manifestation of overfitting, although this model proved to be relatively stable through the fourteen epochs allowed by the patience hyperparameter. Notice in Figure 2b that a very similar pattern can be seen in the GRU-S model, but it shows a more unstable loss when making predictions (even reaching 10 bits) and much lower accuracy (val_accuracy = 7.8%). Also note that both models were trained on a small KB (36.54k tuples), which we believe is the main cause of this divergent behavior.

Figure 2.

Training and validation (val_) accuracies (upper plots), and training and validation (val_) losses (lower plots) for the GRU models trained with the OIE-GP+NCD KB: (a) GRU-W; seq. len = 10, batch size = 128, embedding dim. = 256, units = 2048, (b) GRU-S; seq. len = 30, batch size = 32, embedding dim. = 1024, units = 1024. For this dataset, we set 40 epochs maximum.

Figure 3 shows the GRU-W and GRU-S models trained on the CN+OIE-GP+NCD KB, which is considerably larger (456.54k training triples). The first improvement attributable to the size of this KB is that the training and validation curves diverge much less, in both accuracy and loss. In addition, the instability of the models when making predictions was markedly reduced. In particular, GRU-W lowered its validation loss to 2.72 bits with the CN+OIE-GP+NCD KB (Figure 3a), compared to 6.19 bits with the OIE-GP+NCD KB, at epoch 15 (the epoch allowed by the patience hyperparameter this time). Furthermore, the difference between the training and validation losses is considerably smaller with the CN+OIE-GP+NCD KB, i.e., 2.72 − 1.13 = 1.59 bits (versus 6.19 − 0.25 = 5.94 bits with the OIE-GP+NCD KB). The training and validation accuracies also differ much less. Overall, the effect of a larger training KB is a substantial reduction in model overfitting in this semantic reasoning task.

Figure 3.

Training and validation (val_) accuracies (upper plots), and training and validation (val_) losses (lower plots) for the GRU models trained with the ConceptNet+OIE-GP+NCD KB: (a) GRU-W; seq. len = 10, batch size = 128, embedding dim. = 256, units = 2048, (b) GRU-S; seq. len = 30, batch size = 32, embedding dim. = 1024, units = 1024. For this KB, we set a maximum of 100 epochs, with patience = 10 and a minimum improvement of 0.005.
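The stopping criterion named in the caption (patience and minimum improvement) can be illustrated with a minimal sketch. It assumes the usual rule in which an epoch counts as an improvement only if it lowers the best validation loss by more than the minimum improvement; the function name `early_stopping_epoch` and the loss values are hypothetical, and the training framework actually used may apply the rule slightly differently.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.005):
    """Return the 1-based epoch at which training would stop.

    An epoch counts as an improvement only if it lowers the best
    validation loss seen so far by more than `min_delta`.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if best - loss > min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)  # patience was never exhausted


# Hypothetical validation-loss curve (in bits) that plateaus after epoch 3.
curve = [3.0, 2.8, 2.75] + [2.749] * 20
print(early_stopping_epoch(curve, patience=10, min_delta=0.005))
```

With this rule, a curve that keeps improving by less than the threshold is treated the same as one that has stopped improving, which is why a plateau ends training well before the epoch maximum.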

Although the training and validation losses of the GRU-S model diverged less (Figure 3b), both actually increase as the model is trained for more epochs. The corresponding validation accuracy is also low and practically constant, showing no improvement. We therefore attribute the contrasting behaviors to the architectural differences between the two recurrent models (wide and squared). GRU-W has a large number of hidden units (2048) with a lower embedding dimensionality (256), whereas GRU-S has half as many hidden units (1024) but four times the dimensionality (1024). Furthermore, GRU-W must predict sequences of 10 words, while GRU-S must predict sequences of 30 words. These experiments with contrasting attention-based recurrent architectures suggest that increasing the number of hidden units while reducing their dimensionality allows an attention-based recurrent model to generalize better in this semantic reasoning task.
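To make the wide-versus-squared contrast concrete, the following rough sketch counts the trainable weights of a single GRU layer using the textbook formulation, in which each of the three weight blocks (update gate, reset gate, candidate state) has an input matrix, a recurrent matrix, and one bias vector. It assumes that the embedding dimension is the layer's input size and it ignores the embedding table, the attention mechanism, and the output projection, so the numbers are illustrative only and are not parameter counts reported for our models. The function name `gru_layer_params` is hypothetical.

```python
def gru_layer_params(input_dim: int, hidden_units: int) -> int:
    """Weights in one GRU layer: 3 blocks x (input + recurrent + bias)."""
    per_block = (
        input_dim * hidden_units        # input-to-hidden matrix
        + hidden_units * hidden_units   # hidden-to-hidden matrix
        + hidden_units                  # single bias vector (assumption)
    )
    return 3 * per_block


# Settings taken from the figure captions: (embedding dim., units).
gru_w = gru_layer_params(input_dim=256, hidden_units=2048)
gru_s = gru_layer_params(input_dim=1024, hidden_units=1024)
print(f"GRU-W recurrent layer: {gru_w:,} weights")
print(f"GRU-S recurrent layer: {gru_s:,} weights")
```

Under these assumptions the recurrent matrix dominates the count, so the wide configuration carries more recurrent capacity despite its smaller embeddings.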

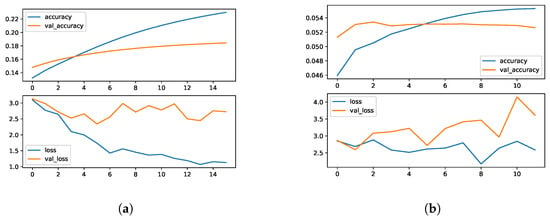

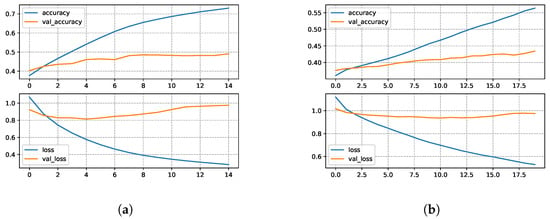

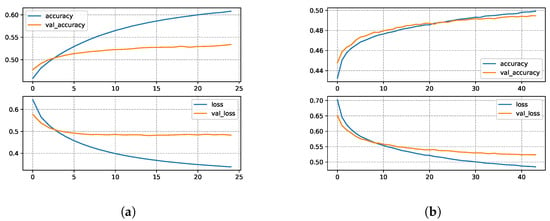

Figure 4 shows the evolution of the performance metrics of the standard Transformer models trained from scratch and validated only on the OpenNCDKB (13k training and 5.6k validation triples). Figure 4a shows the accuracy and the loss for the one-block Transformer (Transformer 1). The gap between training and validation accuracy (accuracy = 80% and val_accuracy = 48%, respectively) is relatively large. The losses behave somewhat differently: a separation of 1.03 bits is unremarkable for two relatively small values (loss = 1.28 bits and val_loss = 0.25 bits), and in the figure both curves are in fact close to 0.85 bits. This can be read as a stable generalization pattern for a relatively small dataset within the forty epochs allowed by the patience hyperparameter.

Figure 4.

Training and validation (val_) accuracies (upper plots), and training and validation (val_) losses (lower plots) for the Transformer trained with the OpenNCDKB: (a) N = 1, (b) N = 2.

As with the one-block model, the accuracy and loss of the two-block Transformer (Transformer 2; Figure 4b) show a divergence between the training and validation accuracies, but with values about 10% lower than those of Transformer 1. This model stopped improving two epochs earlier than the one-block model, also with losses around 0.85 bits and less than 1 bit apart.

The models trained with the CN+NCD KB were given much more data (429.12k training triples) than the models that used only the OpenNCDKB (13k training triples). Their accuracy and loss are shown in Figure 5. Figure 5a shows a clear improvement in the validation loss, which reaches a minimum near 0.48 bits. The training loss was about 0.13 bits away from the validation loss, a smaller gap than that of the two-block Transformer trained only on the OpenNCDKB. The maximum validation accuracy was about 53%. The training and validation curves resemble those obtained with the OpenNCDKB but diverge much less, by around 5% (compared to 30%) over 20 training epochs (60% more training steps).

Figure 5.

Training and validation (val_) accuracies (upper plots), and training and validation (val_) losses (lower plots) for the Transformer trained with the CN+NCD KB: (a) N = 1, (b) N = 2.

For the two-block model (Transformer 2) trained with the CN+NCD KB (Figure 5b), the training and validation losses dropped to around 0.475 bits and evolved over a much larger number of training steps (we set a maximum of 40 epochs) with an even smaller divergence (0.05 bits), indicating much better generalization than the same model trained only on the OpenNCDKB. The same holds for the accuracies, which reached about 53% and this time tended to keep improving with a small divergence (only about 1.6%). This indicates consistency between the training and validation predictions and low variability when the model is exposed to unseen data (generalization). Notably, the model kept improving throughout the 40 epochs we set as the maximum, which suggests that the results could be improved further by increasing the number of epochs.

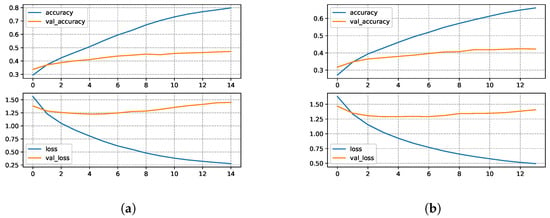

As in the cases analyzed above, the results of the SANLMs trained on our smaller KBs highlight the apparent lack of semantic information in those KBs. Training Transformer 1 and Transformer 2 on the OIE-GP+NCD KB (36.54k training triples) yields behavior similar to training them only on the OpenNCDKB (13k training triples). Although the OIE-GP KB is twice as large as the OpenNCDKB, it is barely one-tenth the size of ConceptNet. The losses and accuracies shown in Figure 6a and Figure 6b, for Transformer 1 and Transformer 2, respectively, indicate no clear improvement from using the OIE-GP KB to enrich the OpenNCDKB. We therefore conclude that 36.54k training triples are still insufficient for our SANLMs to generalize in semantic reasoning tasks.

Figure 6.

Training and validation (val_) accuracies (upper plots), and training and validation (val_) losses (lower plots) for the Transformer trained with the OIE-GP+NCD KB: (a) N = 1, (b) N = 2.

Finally, the SANLMs trained on the ConceptNet+OIE-GP+NCD KB show results similar to those obtained with the ConceptNet+NCD KB (see Figure 7). The Transformer 1 model (Figure 7a) required two fewer epochs than the same architecture trained on the ConceptNet+NCD KB. Training the Transformer 2 model (Figure 7b) on the ConceptNet+OIE-GP+NCD KB slightly decreased performance relative to training it on the ConceptNet+NCD KB, by 0.03 bits in loss and 0.01% in accuracy. Despite this decrease, the model showed greater stability: the training and validation curves were less divergent while performance kept increasing when the ConceptNet+OIE-GP+NCD KB was used.

Figure 7.

Training and validation (val_) accuracies (upper plots), and training and validation (val_) losses (lower plots) for the Transformer trained with the ConceptNet+OIE-GP+NCD KB: (a) N = 1, (b) N = 2.

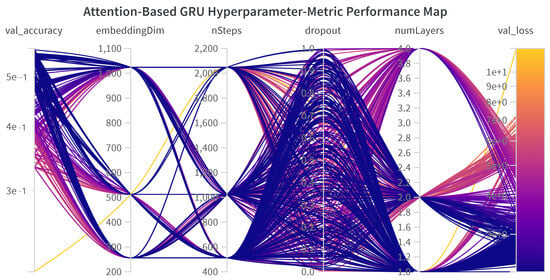

7.2.2. Sensitivity Analysis for Attention-Based GRU Models

To understand the impact of hyperparameters on the performance of our attention-based GRU models, we conducted a sensitivity analysis over a total of 311 experiments. The goal was to evaluate how strongly each hyperparameter influences the loss function, which is minimized through Bayesian optimization of the hyperparameters in order to optimize semantic reasoning for object phrase generation. We assessed which hyperparameters contribute most to changes in the loss using a random forest regression surrogate model, following the approach of Probst et al. [61]. Regarding hardware, we used four Nvidia RTX-4090 GPUs to train the 311 models. We used the Adafactor optimizer, which adjusts the learning rate based on parameter scale. For this analysis, we used our largest KB (the ConceptNet+OIE-GP+NCD KB).

The surrogate model predicts the loss function’s value based on hyperparameter configurations, treating each hyperparameter as a feature. Feature importance scores are computed using Gini importance, which measures the total reduction in node impurity attributed to each hyperparameter across the random forest’s trees. Additionally, we calculated Spearman’s rank correlation coefficient to quantify the monotonic relationship between each hyperparameter’s values and the loss function, providing insight into whether increases in a hyperparameter are associated with higher (worse) or lower (better) loss.
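As an illustration of what "reduction in node impurity" means for a regression surrogate, the sketch below computes the impurity decrease of a single split, taking variance of the loss as the impurity (the usual choice for regression trees). A random forest accumulates such decreases over every split that uses a given hyperparameter, across all trees, and normalizes the totals. The function name `impurity_decrease` and the toy data are hypothetical and are not taken from our experiments.

```python
from statistics import pvariance


def impurity_decrease(feature, losses, threshold):
    """Weighted variance reduction from splitting on feature <= threshold."""
    left = [y for x, y in zip(feature, losses) if x <= threshold]
    right = [y for x, y in zip(feature, losses) if x > threshold]
    if not left or not right:
        return 0.0  # a split that separates nothing reduces nothing
    n = len(losses)
    children = (len(left) * pvariance(left) + len(right) * pvariance(right)) / n
    return pvariance(losses) - children


# Toy runs (hypothetical): loss depends on depth but not on dropout.
num_layers = [1, 1, 2, 2, 3, 3, 4, 4]
dropout = [0.1, 0.9, 0.1, 0.9, 0.1, 0.9, 0.1, 0.9]
val_loss = [2.0, 2.0, 2.0, 2.0, 4.0, 4.0, 4.0, 4.0]

print(impurity_decrease(num_layers, val_loss, threshold=2))  # large decrease
print(impurity_decrease(dropout, val_loss, threshold=0.5))   # no decrease
```

In this toy setting, splitting on the number of layers removes all of the variance in the loss, whereas splitting on dropout removes none, so only the former would accumulate importance.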

Table 4 presents the importance scores and Spearman correlation coefficients for the hyperparameters of our attention-based GRU models, sorted by importance in descending order. The table includes only variable hyperparameters, as batch size (64), number of epochs (5), and sequence length (30) were held constant to ensure stable training conditions. Importance scores range from 0 to 1, with higher values indicating greater influence on the loss function. Spearman correlations range from −1 to 1, where positive values indicate that higher hyperparameter values are associated with higher loss (and thus lower performance), and negative values suggest improved performance with higher values.
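The sign convention for the Spearman coefficient can be checked with a small, dependency-free sketch: the coefficient is the Pearson correlation of the ranks, with tied values sharing their average rank. The helper names and the toy values below are hypothetical and only illustrate how a positive coefficient corresponds to loss rising with the hyperparameter.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation; undefined (division by zero) for constant input."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def spearman(xs, ys):
    """Spearman's rank correlation as Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Toy example (hypothetical): loss rises monotonically with depth.
layers = [1, 2, 3, 4]
loss = [2.1, 2.4, 3.0, 3.9]
print(spearman(layers, loss))        # positive: deeper means higher loss
print(spearman(layers, loss[::-1]))  # negative: deeper means lower loss
```

Because only ranks enter the computation, the coefficient captures monotonic trends regardless of the scale of the hyperparameter or of the loss.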

Table 4.

Hyperparameter Importance and Correlation Analysis (sorted in descending order by importance score).

The results in the table reveal that the number of layers has the highest importance score (0.363), indicating it is the most influential hyperparameter in determining the loss function’s value. Its strong positive Spearman correlation (0.515) suggests that increasing the number of layers (from 1 to 4) is associated with higher loss, implying reduced model performance. This may indicate overfitting in deeper models, as additional layers increase complexity, potentially capturing noise in the OpenIE-derived medical knowledge base rather than generalizable patterns.

This trend is visually confirmed in the performance map (see Figure 8), where purple lines (low val_loss) cluster at lower numLayers values (e.g., 1.2–2.0), while yellow lines (high val_loss) are more frequent at higher values (e.g., 3.0–3.8). The dense packing of lines in these regions underscores the consistency of this pattern across numerous experiments. The pattern is consistent with deeper models being prone to overfitting, which degrades performance on the validation set. For optimal generalization, shallower architectures (e.g., 1 or 2 layers) are likely preferable within the tested range.

Figure 8.

Global view of the experiments with attention-based GRU models, mapping hyperparameters to the loss function. Metrics are in logarithmic scale to ease visualization.

The number of hidden units, with an importance score of 0.248 and a correlation of 0.410, also notably affects the loss: larger hidden sizes (e.g., 2048) are associated with higher loss, possibly because increased model capacity exacerbates overfitting on the noisy dataset. In Figure 8, larger hiddenUnits values may align with yellow lines, though specific clustering is not detailed.

The dropout rate (dropout) is uniformly distributed over the range [0.0, 1.0], covering no regularization (0.0) to full regularization (1.0). Table 4 shows a moderate importance score of 0.288, indicating a notable but secondary influence on val_loss compared to numLayers. Its Spearman correlation is weakly negative (−0.095), suggesting a slight tendency for higher dropout rates to reduce validation loss. The performance map shows dropout values spread uniformly across the full range, with a mix of purple and yellow lines throughout, including near 0.5. There is therefore no clear clustering of low val_loss (purple lines) or high val_loss (yellow lines) around any specific dropout rate, and the effect on model performance is weak and inconsistent. The regularization provided by dropout may mitigate overfitting to some extent as the rate increases, but its impact appears highly dependent on interactions with other hyperparameters, such as the number of layers.

The embedding dimension has the lowest importance (0.1) and a near-zero correlation (0.029), indicating minimal impact on the loss function. This suggests that the model’s performance is relatively insensitive to changes in embedding size within the tested range (256 to 1024), possibly because the semantic reasoning task relies more on structural hyperparameters (e.g., layers, hidden units) than on embedding size.

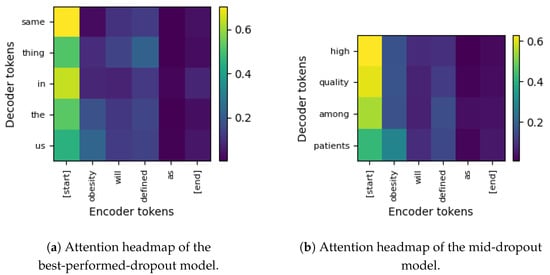

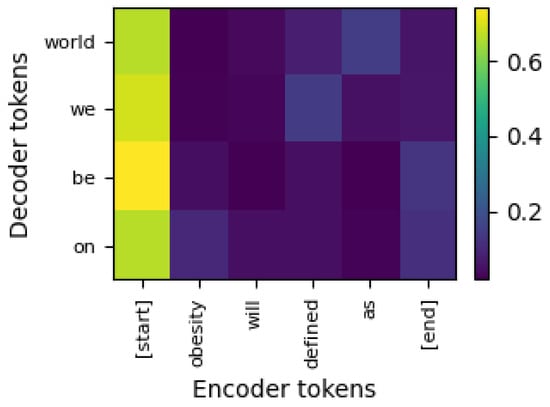

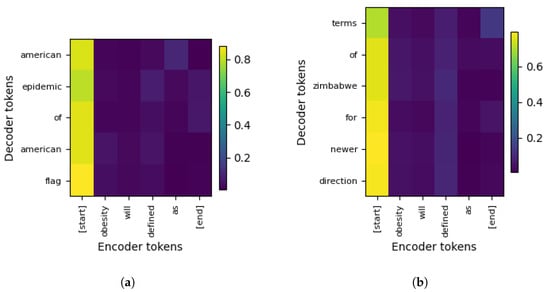

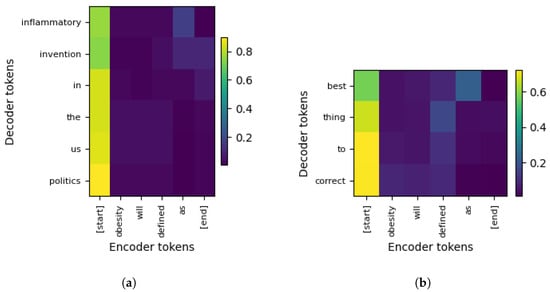

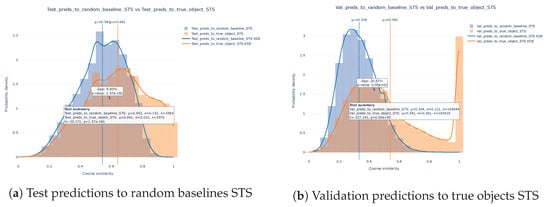

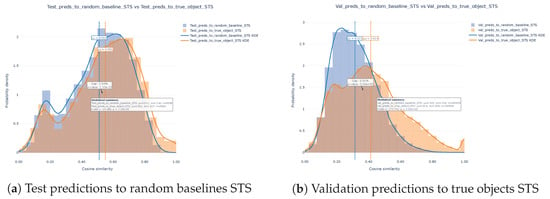
