1. Introduction

Retrieval-Augmented Generation (RAG) architectures have significantly improved the factual grounding of Large Language Models (LLMs) by incorporating external evidence into response generation. However, the integration of retrieved content does not guarantee answer accuracy or faithfulness, as hallucinations and redundant statements still frequently emerge, especially in complex, multi-source environments. While manual evaluations of LLM outputs remain the standard for hallucination detection, they are time-consuming, non-scalable, and often lack granularity. Recent advancements in LLM-based evaluators now offer the potential for scalable, automated, and statement-level assessments of faithfulness, including hallucination, repetition, and source attribution errors.

At the same time, the evolution of agentic RAG architectures, which orchestrate multiple retrieval pipelines (e.g., embeddings, graph traversal, web search), introduces new layers of complexity, and new opportunities, for monitoring the origins and quality of generated responses. In contrast to single-source RAG systems, agentic systems can attribute individual answer components to specific retrieval tools, making it possible to assess hallucination risk and redundancy per pipeline and to target subsequent fine-tuning accordingly. However, the benefits of this tool-specific granularity for automated evaluation have not yet been rigorously studied.

This study develops and validates an LLM-based framework for automated hallucination detection at the statement level, with a particular focus on evaluating the role of attribution and architecture in enhancing interpretability, traceability, and system-level reliability.

In addition, we conduct a detailed performance evaluation of the most common RAG architectures, analyzing their effectiveness across Question Answering (QA) tasks, reasoning depths, and retrieval modes to assess their suitability for structured, policy-relevant AI deployment.

This study addresses two research questions:

RQ1: How effectively and accurately do state-of-the-art LLM evaluators assess content generated by both standard and agentic RAG pipelines?

This question explores the effectiveness and accuracy of state-of-the-art LLM judges for identifying unfaithful or repetitive content, comparing their performance across standard RAG and multi-agent retrieval architectures.

RQ2: To what extent does tool-specific attribution within agentic pipelines support more interpretable and granular monitoring of answer faithfulness, compared to single-source RAG systems, under LLM-based evaluation?

This question investigates whether segmenting generated content by RAG tool (e.g., graph, embedding, web) enables better interpretability and quality of answer generation.

1.1. Aims and Objectives

The central aim of this study is to design, implement, and evaluate an automated framework for statement-level hallucination and redundancy detection in RAG systems, with particular emphasis on comparing agentic and non-agentic architectures. This involves exploring how LLM-based evaluators can provide an overview of unfaithful content, and whether tool-specific attribution in multi-agent systems supports deeper insights into retrieval quality and output trustworthiness.

To achieve this aim, the research first constructs a representative evaluation dataset, generating answers from both traditional single-source RAG systems and modular agentic pipelines. It uses e-governance sources to evaluate three common RAG architectures: knowledge graphs, embeddings, and real-time web content.

Using this e-governance dataset, the study then examines the performance of LLM evaluators in detecting hallucinated claims, redundant statements, and unsupported assertions across both architectures. A key objective is to assess whether segmenting responses by their originating retrieval tools, which is possible in agentic pipelines, improves the interpretability and diagnostic clarity of evaluation outputs.

The study also aims to analyze patterns in hallucination frequency and location across pipelines, enabling insights into retrieval-specific vulnerabilities. Finally, the research seeks to define actionable design recommendations for building faithfulness-aware RAG architectures that are not only robust and informative, but also easier to monitor, explain, and regulate, laying the groundwork for more trustworthy and ethically deployable LLM systems in the public sector and beyond. In addition, we present a detailed performance evaluation of graph creation, demonstrating use cases that support e-government services, public communication, and policy intelligence grounded in survey data.

1.2. Study Significance

As RAG systems continue to evolve into modular, agent-driven frameworks, the need for granular, automated faithfulness evaluation becomes increasingly urgent, particularly in governance, legal, and regulatory contexts. This study addresses a pressing gap in current research by systematically evaluating whether LLM-based hallucination detection methods can meaningfully characterize output quality across diverse retrieval sources. By comparing standard RAG systems to agentic architectures with tool attribution, the study contributes to an understanding of how architectural transparency influences evaluation performance.

Furthermore, our research offers practical tools and reproducible methods for faithfulness auditing, contributing to the development of more trustworthy, traceable, and verifiable LLM systems capable of supporting real-world decision-making in complex and high-stakes domains, such as e-government.

The remainder of this paper is organized as follows: Section 2 reviews related work on RAG architectures and faithfulness evaluation in LLM-based systems. Section 3 presents the proposed modular framework, dataset, and experimental design. Section 4 reports the main results and comparative analyses across RAG pipelines, while Section 5 discusses key findings, limitations, and practical implications. Finally, Section 6 concludes the study and outlines future research directions.

2. Background

As LLMs continue to redefine the boundaries of natural language processing, their integration with RAG frameworks has significantly improved factual grounding in open-domain and task-specific question answering. Despite these advances, LLMs remain prone to hallucination, the generation of fluent but incorrect content, and redundancy, particularly in multi-hop or information-dense contexts. Traditional RAG systems, which rely on flat document retrieval, often fail to capture semantic relationships between entities and provide limited support for attribution.

GraphRAG addresses these limitations by incorporating structured representations, such as knowledge graphs and layout-aware textual graphs. This enables relational reasoning, subgraph extraction, and provenance tracking, making GraphRAG particularly well-suited to knowledge-intensive tasks. However, hallucinations persist even in graph-based systems due to noise propagation and ambiguous entity resolution. At the same time, emerging agentic RAG pipelines, which orchestrate multiple retrieval tools (e.g., symbolic graphs, semantic embeddings, and web search), offer modularity and transparency, but their evaluation frameworks remain underdeveloped.

While early work such as [1] introduced the RAG paradigm by combining parametric and non-parametric memories, subsequent research has increasingly focused on the trustworthiness and faithfulness of RAG outputs. For instance, ref. [2] demonstrates that citation correctness does not guarantee faithfulness, and [3] proposes fine-grained monitoring of unfaithful segments in RAG systems. Recent surveys [4,5] have also begun to explore graph-based RAG architectures and domain-specific applications, highlighting gaps in retrieval quality, source attribution, and evaluation benchmarks. More recently, ref. [6] proposes self-supervised faithfulness optimization, signaling the evolving sophistication of this area. By integrating these contributions with our statement-level evaluation framework, our study extends the literature by comparing simple, graph-based, and agentic RAG pipelines in an e-governance domain, using multi-LLM evaluators and a detailed analysis of hallucination and attribution errors.

This study investigates the intersection of these architectures by focusing on statement-level hallucination detection and tool-specific attribution within both simple and agentic systems. By leveraging LLM-based evaluation frameworks, we aim to improve transparency, faithfulness, and trustworthiness of complex QA pipelines across structured domains.

2.1. Symbolic and Graph-Based RAG Architectures

LLMs have transformed natural language understanding and generation, but remain constrained by their static pretraining, often producing hallucinations, that is, confidently generated yet ungrounded or incorrect information. RAG frameworks were introduced to address this limitation by conditioning generation on retrieved external knowledge. However, traditional RAG systems typically rely on unstructured or flat document retrieval, limiting their interpretability, multi-hop reasoning, and transparency in knowledge attribution. GraphRAG has emerged as a promising evolution of RAG, incorporating structured knowledge representations, such as knowledge graphs (KGs) or textual graphs. By enabling explicit reasoning over entity relationships, subgraph traversal, and chain-of-thought generation, it improves both answer fidelity and interpretability. Studies, such as G-Retriever and Graphusion, have demonstrated the potential of graphs in knowledge-intensive tasks, showing their ability to retrieve semantically coherent subgraphs and generate structured responses grounded in high-quality KGs [7,8].

GraphRAG encodes knowledge in graph-based structures that capture entity relationships, document layouts, and multi-modal associations, enabling nuanced, semantically grounded, and explainable retrievals [9]. Unlike flat, vector-based similarity retrieval, GraphRAG supports multi-hop reasoning, semantic disambiguation, and context-aware retrieval [4]. Recent designs further modularize GraphRAG architectures with dual-level retrieval strategies, combining symbolic graph traversal and embedding-based soft matching to enhance adaptability, domain transferability, and noise reduction [10].

Frameworks such as HuixiangDou2 implement dual-level retrieval (keyword-level and logic-form) with fuzzy matching and multi-stage verification, raising accuracy from 60% to 74.5% with the Qwen2.5-7B-Instruct model on domain-specific datasets and highlighting GraphRAG’s applicability to structured reasoning and hallucination mitigation [11]. SubgraphRAG employs adaptive triple scoring and Directional Distance Encoding (DDE) to retrieve semantically rich subgraphs without fine-tuning, relying on in-context learning to produce answers and reasoning chains. It further encourages abstention when evidence is insufficient, reducing hallucinations and improving robustness across LLM sizes [12].

The GraphRAG pipeline can be formalized into three stages: (1) Graph-Based Indexing (G-Indexing), (2) Graph-Guided Retrieval (G-Retrieval), and (3) Graph-Enhanced Generation (G-Generation). By integrating textual and relational structures, this architecture enhances interpretability and faithfulness, with successful applications in domains such as scientific publishing [13], government transparency [14], and chronic disease management [15].
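The three stages can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not the implementation of any cited system: the triples, the function names (`g_index`, `g_retrieve`, `g_generate_prompt`), the substring-based entity matching, and the two-hop limit are all hypothetical choices made for illustration, and the generation stage only builds a prompt rather than calling an LLM.

```python
from collections import defaultdict, deque

# Toy triples; entities and relations are invented for illustration only.
TRIPLES = [
    ("Ministry of Interior", "operates", "eID portal"),
    ("eID portal", "requires", "national ID card"),
    ("national ID card", "issued_by", "Civil Registry"),
    ("Tax Agency", "operates", "e-filing service"),
]


def g_index(triples):
    """G-Indexing: build an undirected adjacency index over the triples."""
    graph = defaultdict(list)
    for head, rel, tail in triples:
        graph[head].append((rel, tail, (head, rel, tail)))
        graph[tail].append((rel, head, (head, rel, tail)))
    return graph


def g_retrieve(graph, query, max_hops=2):
    """G-Retrieval: seed on entities named in the query, then expand by BFS."""
    q = query.lower()
    seeds = [entity for entity in graph if entity.lower() in q]
    seen = set(seeds)
    frontier = deque((seed, 0) for seed in seeds)
    facts = []
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop budget
        for _rel, neighbor, triple in graph[node]:
            if triple not in facts:
                facts.append(triple)
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return facts


def g_generate_prompt(query, facts):
    """G-Generation: verbalize the subgraph into a grounded prompt for an LLM."""
    lines = [f"- {h} {r.replace('_', ' ')} {t}" for h, r, t in facts]
    return ("Answer using only these facts:\n" + "\n".join(lines)
            + f"\nQuestion: {query}")


graph = g_index(TRIPLES)
facts = g_retrieve(graph, "What does the eID portal require?")
print(g_generate_prompt("What does the eID portal require?", facts))
```

Because retrieval returns explicit triples, each fact in the prompt can be traced back to a graph edge. This traceability is the property that the interpretability claims above rest on.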

Traditional RAG approaches, which focus on semantic similarity-based retrieval from flat corpora, often suffer from fragmented retrieval, contextual drift, and loss of semantic relationships, thereby exacerbating hallucination risks. GraphRAG addresses these shortcomings by employing entity-centric subgraph generation and multi-hop reasoning [13,16]. Nevertheless, vulnerabilities remain; for instance, GraphRAG under Fire illustrates that while graph-based indexing improves robustness against naïve poisoning attacks, shared entity relations can propagate misinformation across multiple queries [17].

Hallucination, defined as the generation of factually incorrect content, remains a critical issue for both standard and graph-based RAG systems. While RAG aims to improve factual accuracy by conditioning on retrieved evidence, models still hallucinate when context is insufficient or misaligned with the query. Traditional evaluation metrics (e.g., BLEU, ROUGE) fail to capture factual consistency, prompting the adoption of LLM-based evaluators that assess hallucination and faithfulness at the statement level by cross-validating claims with retrieved evidence [10].

Structured retrieval variants, such as PathRAG and PolyG, reduce noise via path pruning and adaptive traversal [18,19], while HyperGraphRAG extends coverage to n-ary relational facts for richer domain-specific representations [20]. Recent advances, such as SuperRAG, introduce layout-aware graph modeling (LAGM) to preserve structural hierarchies like section headers, tables, and diagrams, enabling more accurate retrievals in multimodal contexts [21]. PathRAG and PolyG similarly manage graph complexity and retrieval overhead while maintaining relevance [9,22]. Frameworks including GraphRAG-FI, DynaGRAG, and CG-RAG combine symbolic reasoning, dynamic evidence weighting, and hierarchical citation networks to improve retrieval accuracy, semantic richness, and attribution [23,24].

Agentic RAG pipelines extend this paradigm by coordinating multiple retrieval modalities (symbolic graph traversal, dense semantic search, and real-time web retrieval) through a unified orchestration layer. This enables fine-grained, tool-specific attribution, tracing which pipeline component contributed to each segment of a generated response, and thereby improving transparency and faithfulness evaluation. Graphusion advances this concept with fusion-based graph operations, such as entity merging and conflict resolution between expert-annotated and LLM-generated subgraphs, proving particularly effective for scientific knowledge curation from ACL papers and enhancing relation consistency across models like GPT-4 and LLaMA3 [8].

2.2. Embedding, Web Search and Hybrid Retrieval

Parallel efforts in LLM-based evaluation frameworks, such as MLLM-as-a-Judge and GraphEval, investigate how large models can serve as reliable evaluators for generated content, enabling automated hallucination and faithfulness scoring at the statement level. Despite their promise, current evaluation methods often lack fine-grained tool attribution, making it difficult to trace specific factual claims in generated answers back to their retrieval sources, whether symbolic graphs, semantic embeddings, or live web search [25,26]. RAG has become a pivotal strategy to mitigate hallucinations and strengthen the factual grounding of LLMs by enriching prompts with contextually relevant knowledge. Nevertheless, traditional RAG systems typically operate on flat, unstructured corpora and lack visibility into how retrieved information contributes to generated outputs. This opacity poses challenges for diagnosing hallucinations and redundancies, especially in high-fidelity domains, such as medicine, law, and scientific research [27].

A complementary research direction examines the limitations of current LLM-based evaluation methods. State-of-the-art models like GPT-4 and GPT-4o are increasingly employed not only for content generation, but also as evaluators of factuality, redundancy, and consistency. LLM-as-a-Judge (LLM-J) frameworks assess faithfulness at the statement level, providing interpretability aligned with human judgment. However, these evaluations are typically holistic, lacking the granularity to identify which retrieval tool (embedding-based search, web search, or graph traversal) produced each specific output segment [28].

Addressing this gap, recent works incorporate tool-specific attribution into agentic RAG pipelines, where modularized agents specialize in different retrieval modes and annotate generated responses with provenance tags. This segmentation enables statement-level hallucination diagnosis, detection of redundant agent contributions, and dynamic routing of sub-queries to the most suitable tool depending on semantic or structural requirements [27,28].
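To make the idea of provenance tags concrete, the following sketch aggregates statement-level verdicts per retrieval tool. It is an illustrative assumption rather than a method from the cited works: the `Statement` record, the tool names, and the verdict labels (`supported`, `hallucinated`, `redundant`) are hypothetical, and the verdicts are hard-coded here, whereas in practice they would come from an LLM judge.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    text: str
    tool: str     # provenance tag, e.g. "graph", "embedding", "web" (hypothetical)
    verdict: str  # judge label: "supported", "hallucinated", or "redundant"


def per_tool_rates(statements):
    """Return {tool: {verdict: share}} over the statements attributed to each tool."""
    totals = Counter(s.tool for s in statements)
    counts = Counter((s.tool, s.verdict) for s in statements)
    rates = {}
    for (tool, verdict), n in counts.items():
        rates.setdefault(tool, {})[verdict] = n / totals[tool]
    return rates


# Hard-coded example answer; verdicts would normally be produced by an LLM judge.
answer = [
    Statement("The portal requires a national ID card.", "graph", "supported"),
    Statement("ID cards are issued by the Civil Registry.", "graph", "supported"),
    Statement("The portal launched in 2031.", "web", "hallucinated"),
    Statement("Registration is free of charge.", "web", "supported"),
]
print(per_tool_rates(answer))
```

With per-tool shares available, a high hallucination share for one tool points to that retriever as the component to inspect. A single answer-level score cannot reveal this.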

Several retrieval architectures complement these evaluation-focused innovations. SubgraphRAG implements scalable, lightweight retrieval by scoring graph triples and extracting semantically compact subgraphs. This adaptability allows it to accommodate LLM context length constraints and to tailor retrieval strategies for both small and large models, while reducing hallucinations by grounding reasoning in structured subgraphs.

SuperRAG advances multimodal reasoning by transforming text, tables, and diagrams into layout-aware graphs, a capability critical for domains like e-government, where document structure and multi-modal context heavily influence extraction quality. It integrates layout analysis, graph construction, and multi-retriever pipelines (e.g., vector search, graph traversal) to enhance both retrieval relevance and downstream QA performance.

Comparative studies, such as RAG vs. GraphRAG, reveal that GraphRAG surpasses vanilla RAG on multi-hop QA and complex summarization tasks, whereas RAG excels in retrieving fine-grained factoid information. These findings highlight the value of combining both paradigms, particularly in hybrid or agent-based systems designed to minimize hallucinations through fine-grained content tracing and tool-specific attribution [25].

Traditional RAG architectures are typically monolithic, treating retrieval as a single unified process. In contrast, agentic RAG frameworks decompose retrieval into modular, source-specific agents, such as graph retrievers, dense embedding retrievers, and web search modules, enabling more granular attribution of evidence to system components. This modular design enhances scalability, interpretability, and the potential for tool-specific hallucination detection and redundancy mitigation [29].

Similarly, approaches like GRAG and FastRAG adopt hybrid, task-adaptive retrieval pipelines, combining structural, semantic, and symbolic methods to achieve superior factual grounding and reduced hallucinations, particularly in domains involving semi-structured data, healthcare, and scientific reasoning [30,31]. Recent comparative analyses across tasks such as single-hop QA, multi-hop QA, and query-focused summarization confirm that, while RAG remains strong in factoid retrieval and short-form QA, GraphRAG outperforms in multi-hop reasoning, complex summarization, and managing incomplete or distributed knowledge. In scenarios requiring traversal of entity chains or synthesis of contrasting viewpoints (e.g., multi-year, multi-country survey analysis), GraphRAG consistently provides a performance edge.

Moreover, hybrid approaches, either dynamically selecting between RAG and GraphRAG based on query type or integrating their outputs, achieve statistically significant improvements over either method alone [25,32]. These results support the rationale for hybrid architectures that combine symbolic (graph) and dense (vector + web) retrieval within policy intelligence systems.
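The selection variant of such a hybrid can be sketched as a small query router. The keyword heuristic below is a deliberately crude stand-in, since the text does not specify how the cited studies classify queries; the cue list, the function names `route_query` and `answer`, and the two stub retrievers are assumptions made for illustration only.

```python
import re

# Hypothetical cue words suggesting multi-hop or aggregative questions.
MULTI_HOP_CUES = {"compare", "across", "trend", "relationship", "between", "summarize"}


def route_query(query: str) -> str:
    """Pick a retrieval tool: 'graph' for multi-hop cues, otherwise 'vector'."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return "graph" if words & MULTI_HOP_CUES else "vector"


def answer(query, retrievers):
    """Dispatch the query to the selected retriever and keep the tool tag."""
    tool = route_query(query)
    return tool, retrievers[tool](query)


# Stub retrievers standing in for real graph and embedding pipelines.
retrievers = {
    "graph": lambda q: f"[subgraph for: {q}]",
    "vector": lambda q: f"[top-k passages for: {q}]",
}
print(answer("Compare trust in e-services across countries", retrievers))
print(answer("What year was the portal launched?", retrievers))
```

Returning the tool tag alongside the evidence keeps the routing decision visible to a downstream evaluator. A production router would more plausibly use a trained classifier or an LLM prompt than a keyword list.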

2.3. Terminology and Conceptual Definitions

To ensure terminological clarity, the following definitions are adopted throughout this study:

Faithfulness refers to the degree to which a model’s generated content accurately reflects and aligns with the retrieved or provided source evidence. It serves as the overarching criterion for factual reliability in RAG systems.

Factual grounding denotes the process of conditioning a model’s responses on explicit, verifiable information from external sources, such as databases, graphs, or web content. It represents the mechanism through which faithfulness is achieved.

Hallucination describes any generated statement that lacks support in the retrieved sources or contradicts the underlying evidence.

While these concepts are closely related, they serve distinct roles in our evaluation framework: factual grounding is a design property of the RAG pipeline, faithfulness is the measurable outcome of that property, and hallucination represents its primary failure mode. This consistent terminology is applied across all sections of the paper to avoid ambiguity.

2.4. Hallucination and Redundancy Evaluation Frameworks

By systematically evaluating hallucination and redundancy at the statement level and incorporating attribution-aware evaluation, this study advances the goal of trustworthy generation in structured retrieval contexts. The proposed approach evaluates RAG pipelines not only on output quality but also on the transparency, granularity, and reliability of their internal processes. Leveraging LLM-based evaluators that operate at the statement level and embedding tool-specific provenance enables a deeper understanding of how different components (symbolic, semantic, or real-time) contribute to final answers, improving both performance and trustworthiness.

Hallucinations remain a critical limitation of LLMs, particularly when the content generated is unsupported by either pre-trained knowledge or retrieved evidence. While RAG architectures mitigate this risk by grounding responses in retrieved text or structured data, they are not immune to introducing hallucinations. Systems without fine-grained evaluation mechanisms often inject irrelevant or misleading information due to poor retrieval precision or overreliance on extraneous data. In parallel, redundancy, the repeated inclusion of similar or overlapping content, reduces efficiency and interpretability [33].
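As a simple illustration of redundancy at the statement level, the sketch below flags statements whose token sets overlap strongly with an earlier statement. Lexical Jaccard overlap is used here only as a transparent stand-in for the LLM-based or embedding-based judgments discussed in this section; the function names and the 0.5 threshold are hypothetical choices.

```python
import re


def tokens(text):
    """Lowercased alphanumeric token set of a statement."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity of two token sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def flag_redundant(statements, threshold=0.5):
    """Return indices of statements that near-duplicate an earlier kept statement."""
    kept, redundant = [], []
    for i, statement in enumerate(statements):
        t = tokens(statement)
        if any(jaccard(t, k) >= threshold for k in kept):
            redundant.append(i)
        else:
            kept.append(t)
    return redundant


statements = [
    "The portal requires a national ID card.",
    "A national ID card is required by the portal.",
    "Applications are processed within ten days.",
]
print(flag_redundant(statements))
```

Token overlap misses paraphrases that share few words and can over-flag statements that reuse vocabulary but assert different facts, which is one reason semantic evaluators are preferred for this task.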

To address these issues, new evaluation frameworks use LLMs not only as generators but also as judges, assessing hallucination, redundancy, and factual alignment at the statement level. Benchmarks, such as DeepEval, MLLM-as-a-Judge, and SELF-RAG, exemplify this trend, employing attention scores, logits, and context-aware scoring to quantify and explain hallucination sources [33,34].

Structured retrieval approaches further reduce hallucinations by constraining generation to validated domain-grounded facts. For example, GraphRAG for Finance and G-Retriever leverage knowledge graphs to focus reasoning on verified triples, while HybridRAG and FactRAG apply pre-generation filtering to construct and validate subgraphs before passing them to the LLM. Notably, G-Retriever formalizes retrieval as a Prize-Collecting Steiner Tree optimization problem, ensuring that subgraph construction balances relevance, connectivity, and semantic coverage, which enhances both explainability and accuracy [7,10].
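For readers unfamiliar with the Prize-Collecting Steiner Tree problem, its generic net-worth form can be stated as follows. This is the textbook formulation, given here under the assumption that node prizes encode query relevance and edge costs penalize subgraph size; the symbols $p$, $c$, and $S$ are introduced for illustration, and the exact prize and cost definitions used by G-Retriever are not reproduced here.

```latex
% Given a graph G = (V, E), node prizes p(v) >= 0 and edge costs c(e) >= 0,
% select a connected subgraph S = (V_S, E_S) of G that maximizes net worth:
\[
  S^{*} \;=\; \operatorname*{arg\,max}_{\substack{S \subseteq G \\ S \text{ connected}}}
  \;\sum_{v \in V_S} p(v) \;-\; \sum_{e \in E_S} c(e)
\]
```

The connectivity constraint is what distinguishes this from independent top-k selection: a relevant node is included only if the cost of the edges linking it to the rest of the subgraph is outweighed by its prize.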

Recent advancements shift from monolithic RAG architectures toward multi-agent (agentic) systems, where specialized retrieval modules (graph-based, dense embedding, and web-search agents) operate in parallel or in sequence. These agentic frameworks enable tool-specific attribution, allowing evaluators and users to trace each segment of an answer back to its originating source. Such provenance tracking is vital for fine-grained error diagnosis, as demonstrated by CypherBench for structured query evaluation [35] and GraphEval for viewpoint-based content scoring [36]. Moreover, modular architectures can reduce hallucinations through internal redundancy checks and cross-agent verification, particularly when paired with statement-level evaluators. Tools like MLLM-as-a-Judge, SELF-RAG, and GraphEval-GNN exemplify this next generation of automated faithfulness scoring, offering rigorous and scalable validation pipelines [15,36].

The move toward statement-level diagnostics allows LLM evaluators to verify individual claims within generated outputs, improving detection of nuanced hallucinations that may be contextually subtle or structurally embedded in complex answers. Approaches such as IdepRAG and Hypergraph-based GraphRAG increase robustness by encoding multivariate relationships and prioritizing evidence based on statement-specific usefulness scores, reducing the likelihood of superficial or semantically misaligned responses [37]. This capability is especially important in domains requiring structured, interconnected knowledge, such as legislative corpora, citation networks, or scientific datasets, where single-source RAG may overlook critical relational dependencies.

Statement-level evaluation frameworks, when combined with tool-specific attribution, allow researchers to dissect whether hallucinations arise from model bias, retrieval error, or faulty integration.

LLM-based evaluators now present a viable alternative to human-in-the-loop validation, offering the ability to assess hallucinations, redundancy, and factual consistency at the sentence or claim level, capabilities far beyond traditional BLEU-style metrics. For example, GraphEval applies viewpoint decomposition and graph-based label propagation to score originality and correctness, revealing prompt sensitivity and latent biases in LLMs [36]. Systematic comparisons further show that RAG performs well for short, factoid-style QA, while GraphRAG excels in tasks requiring reasoning, aggregation, or document-wide synthesis. Hybrid strategies, whether via query-based routing (selection) or output integration, consistently outperform single-method approaches, reinforcing the value of combining symbolic (graph) and dense (vector + web) retrieval in architectures designed to maximize faithfulness and minimize hallucination.

2.5. Trustworthiness, Verification & Advanced RAG Control

Recent advances in RAG aim to address core limitations of LLMs, such as hallucinations, knowledge cutoffs, and lack of attribution, by dynamically integrating external knowledge sources into the generation process [38]. Beyond conventional pipelines, emerging approaches leverage hybrid retrieval techniques, domain-specific knowledge graphs, and adaptive query strategies to improve factual grounding and reasoning capabilities.

Adaptive and graph-based RAG architectures, such as WeKnow-RAG, combine multi-stage web retrieval with structured knowledge representations to enhance accuracy and trustworthiness in real-world scenarios [39]. In long-form and complex question answering, reinforcement learning frameworks like RioRAG optimize informativeness and factual completeness via nugget-level reward modeling, reducing unsupported content while preserving coherence [40]. These developments align with a broader shift toward faithfulness-aware evaluation and optimization, a focus central to this study’s aim of assessing and improving the reliability of agentic RAG systems in knowledge-intensive applications.

Persistent challenges in RAG pipelines stem from both retrieval and generation stages, including incomplete or inaccurate evidence and inconsistencies between generated outputs and supporting references. The Chain-of-Verification RAG (CoV-RAG) framework addresses these issues through a verification module that scores, judges, and revises retrieved documents and generated responses, enabling iterative refinement via query reformulation and factuality checks [41]. Complementary to such technical advances, recent surveys have stressed the need for trustworthiness in RAG, encompassing reliability, privacy, safety, fairness, explainability, and accountability, especially in high-stakes domains where factual grounding and bias mitigation are critical [42].

Several architectures explicitly focus on improving factual grounding. FaithfulRAG introduces fact-level conflict modeling to detect and mitigate discrepancies between retrieved content and generated text. SFR-RAG integrates semantic filtering and reranking to improve contextual fidelity, while other work distinguishes correctness from faithfulness, noting that accurate answers may still lack evidence support. Domain-specific systems, such as context-aware travel assistants, demonstrate the benefits of hybrid retrieval approaches (e.g., HyDE, GraphRAG) and persona-based augmentation for improving retrieval precision and generative relevance. Collectively, these advances emphasize a dual focus on retrieval optimization and generative verification, aligning closely with this study’s objective of strengthening faithfulness in agentic RAG [43,44].

While many evaluations assess only answer or citation correctness, correctness alone does not ensure grounding in retrieved evidence. The concept of citation faithfulness addresses this by requiring a causal link between cited documents and generated statements, avoiding post-rationalization and misplaced trust [2]. Synchronous Faithfulness Monitoring (SYNCHECK) offers a lightweight, real-time detection mechanism that combines sequence likelihood, uncertainty estimation, context influence, and semantic alignment to identify unfaithful sentences during decoding [45]. These synchronous interventions, combined with faithfulness-oriented decoding, improve the grounding of generated outputs while balancing informativeness and reliability.

For domain personalization, context-aware assistants integrate strategies like HyDE and GraphRAG with persona-driven prompt adaptation to enhance contextual relevance and reduce hallucinations [46]. On the structured knowledge side, FiDeLiS combines deductive-verification beam search with Path-RAG reasoning to anchor inference in verifiable KG steps, lowering hallucination rates while maintaining computational efficiency [47]. Together, these approaches demonstrate the value of coupling retrieval optimization with reasoning verification to achieve faithful, interpretable, and contextually grounded outputs.

Traditional RAG pipelines, which follow a static retrieve-then-generate pattern, often suffer from shallow reasoning, limited adaptability, and retrieval inadequacy in complex tasks. This has led to Synergized RAG–Reasoning systems, where reasoning guides retrieval and evidence iteratively informs reasoning, enabling deeper inference and improved factuality [48]. Trustworthiness efforts in these settings include metrics like TRUST-SCORE, which measures groundedness via refusal accuracy, answer correctness, and citation validity, and alignment methods like TRUST-ALIGN, which train models to refuse when evidence is insufficient and produce verifiable citations [49]. These methods signal a shift toward adaptive, evidence-grounded agentic RAG frameworks.

Retrieval-Free Generation (RFG) offers speed advantages, but is more prone to hallucinations due to reduced explicit grounding. Methods such as RA2FD distill the faithfulness capacity of RAG “teacher” models into efficient RFG “students” using sequence-level distillation and contrastive learning, narrowing the trade-off between accuracy and efficiency [50]. Evaluation frameworks like FRANQ complement these efforts by differentiating faithfulness (alignment with retrieved evidence) from factuality (objective correctness), enabling targeted uncertainty quantification for improved hallucination detection [51]. These works highlight the importance of integrating knowledge infusion, faithfulness-aware evaluation, and efficiency-driven design for developing trustworthy LLM pipelines in both simple and agentic RAG scenarios.

Although large-context models can process entire documents, studies show that well-designed RAG pipelines can match or exceed their performance while reducing computational cost and latency [52]. Research in this area emphasizes the role of retrieval strategies, ranking mechanisms, and prompt engineering in ensuring generated output remains grounded in retrieved evidence, rather than relying on parametric memory [53]. Additional work demonstrates that combining retrieval quality metrics with output verification improves both correctness and contextual grounding, underscoring the need for systematic evaluation frameworks in high-trust settings.

In multimodal contexts, RAG introduces further risks of selection hallucination and context-generation hallucination when retrieved visual or textual elements are irrelevant or incorrectly processed. The RAG-Check framework addresses this with relevance scores (RS) and correctness scores (CS) to quantify retrieval quality and response faithfulness in multimodal RAG [54]. Recent advances also shift toward agentic search, where models autonomously plan, iteratively query, and integrate information from multiple sources. Conventional evaluation frameworks often fail to capture such complexity, focusing narrowly on final answers or overlooking intermediate reasoning and tool usage. RAVine addresses these gaps with fine-grained, attributable nugget-based assessments combined with process-oriented and efficient metrics, enabling more comprehensive analysis of task completeness, faithfulness, and tool effectiveness [55]. Complementary efforts, including RAG-Check and large-context comprehension benchmarks, reinforce the need for robust, interpretable, and cost-efficient evaluation methods that reflect real-world, multi-agent, and multimodal information seeking scenarios [56].

2.6. Necessity and Feasibility of the Proposed Study

Recent advances in LLMs have significantly improved text generation quality; however, the faithfulness and factual reliability of RAG systems remain underexplored, particularly in high-stakes, evidence-dependent domains such as e-Governance. Existing research primarily focuses on domain-general question answering or open-domain retrieval, with limited emphasis on statement-level evaluation and agentic multi-tool reasoning. This gap highlights the necessity for a framework capable of systematically quantifying hallucination, redundancy, and attribution across diverse RAG architectures.

The feasibility of the proposed approach is ensured by the maturity of current LLM APIs (e.g., GPT-4.1, Claude Sonnet-4.0, Gemini 2.5 Pro), which enable consistent, scalable, and reproducible evaluation at the statement level, as well as by the structured nature of publicly available European Commission (EC) press releases, which provide a reliable and policy-relevant testbed. Together, these factors establish both the need and practical viability of the study’s experimental design.

2.7. Main Innovation Points of This Study

Building on the identified research gaps, this study introduces several key innovations that advance the evaluation of RAG systems:

Statement-Level Faithfulness Evaluation: We utilize a fine-grained, LLM-driven framework that evaluates factual alignment at the statement level, capturing nuanced forms of redundancy and conflict often overlooked in prior work.

Cross-Evaluator Consistency Analysis: The framework leverages three distinct LLM families (GPT-4.1, Claude Sonnet-4.0, Gemini 2.5 Pro) to assess inter-model reliability and mitigate evaluator bias.

Application to E-Governance Contexts: We apply the framework to official EC press releases, offering the first large-scale assessment of faithfulness in high-stakes, policy-relevant AI systems.

Open and Reproducible Design: All prompt templates, low-code source code, and evaluation scripts are documented and released for replication and extension.

These innovations contribute to a practical, transparent, and domain-grounded methodology for advancing trustworthy LLM applications in public-sector decision support.

3. Technical Architecture and Solution

The technical architecture presented in this research is modular and designed to rigorously evaluate and improve the factual reliability of RAG systems for e-governance applications, from single-pipeline configurations to complex agent-based orchestration. Using publicly available e-governance data from Press Corner, the EC’s official online platform for media and public communications, it integrates four complementary components.

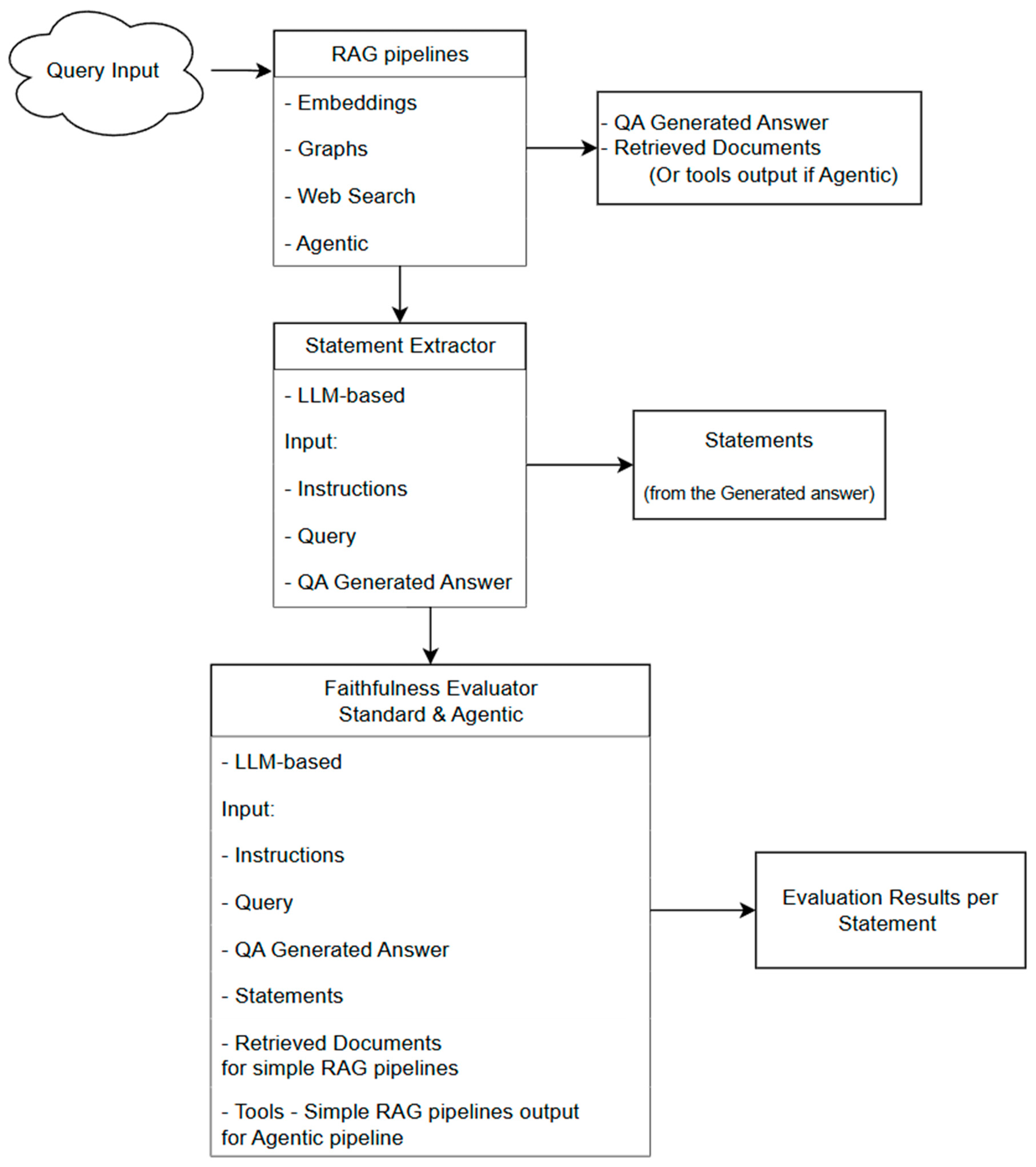

Firstly, a modular RAG orchestration framework, as presented in Figure 1, built on embedding-based, graph-based, and web-based retrieval pipelines. Secondly, a triples evaluation configuration for verifying the accuracy and completeness of knowledge graph construction. Thirdly, a faithfulness assessment configuration for measuring the factual grounding of standard RAG pipelines. Lastly, an agentic RAG faithfulness assessment, enabling fine-grained evaluation of multi-source reasoning, attribution, and conflict handling. Each component is designed to be reproducible, model-agnostic, and traceable, with standardized prompts, deterministic evaluation settings, and structured outputs that facilitate large-scale benchmarking. For the experiments, Google Colab was used (Intel Xeon CPU at 2.20 GHz, 12.67 GiB RAM, NVIDIA Tesla T4 with 14.74 GiB memory, CUDA 12.4 support; operating environment: 64-bit x86_64 architecture with little-endian byte order; Python 3.11.13). The low-code source is openly available at https://github.com/gpapageorgiouedu/Evaluating-Faithfulness-in-Agentic-RAG-Systems-for-e-Governance-applications-LLM-Based (accessed on 9 September 2025).

The faithfulness evaluation process of the proposed framework, as presented in Figure 1, begins with an input query that is passed through three baseline RAG pipelines and an agentic multi-tool pipeline. Each pipeline generates an answer, along with its supporting retrieved documents. The generated answer, together with the original query, is then processed by an LLM-based statement extractor, which identifies and isolates individual factual statements. These statements are subsequently evaluated by LLM-based judges against the retrieved documents and the generated answer to determine factual consistency and potential redundancy; in the agentic case, this judgment is instead grounded in the outputs of the baseline RAG pipelines, which function as its evaluated evidence layer. This modular workflow enables a systematic, pipeline-agnostic comparison of hallucination and redundancy patterns across both traditional and agentic RAG architectures.
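The workflow described above can be sketched as a small, pipeline-agnostic loop. This is only an illustrative sketch: the `Pipeline`, `Extractor`, and `Judge` callables, the `Verdict` record, and the toy stand-ins are hypothetical placeholders for the RAG pipelines and LLM calls, not the released implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical signatures (assumptions, not the released code):
Pipeline = Callable[[str], Tuple[str, List[str]]]   # query -> (answer, retrieved documents)
Extractor = Callable[[str, str], List[str]]         # (query, answer) -> factual statements
Judge = Callable[[str, List[str]], str]             # (statement, evidence) -> label


@dataclass
class Verdict:
    pipeline: str
    statement: str
    label: str


def evaluate_query(query: str, pipelines: Dict[str, Pipeline],
                   extract_statements: Extractor, judge: Judge) -> List[Verdict]:
    """Run every pipeline, split each answer into statements, and judge each one."""
    verdicts = []
    for name, run in pipelines.items():
        answer, documents = run(query)
        for statement in extract_statements(query, answer):
            verdicts.append(Verdict(name, statement, judge(statement, documents)))
    return verdicts


# Toy stand-ins so the sketch runs without any LLM call.
def toy_pipeline(query: str) -> Tuple[str, List[str]]:
    return "A is B. C is D.", ["A is B."]


def toy_extractor(query: str, answer: str) -> List[str]:
    return [part.strip() + "." for part in answer.split(".") if part.strip()]


def toy_judge(statement: str, documents: List[str]) -> str:
    return "supported" if statement in documents else "unsupported"


results = evaluate_query("q", {"embedding": toy_pipeline}, toy_extractor, toy_judge)
```

For the agentic case, the same loop would apply with the baseline pipelines' outputs passed as the evidence argument instead of raw retrieved documents.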

Together, these elements provide a transparent and extensible solution for assessing and improving RAG performance in domains that demand high accuracy, explainability, and evidence-backed reasoning.

3.1. Data Sources

For our chosen use case, centered on the public sector and e-governance, we sourced data from the Press Corner, the EC’s official online platform for media and public communications. To cover a range of subjects, we searched for four key topics: the Clean Industrial Deal, Invest EU, US tariffs, and REPowerEU. For each topic, we selected the ten most recent press releases and statements; four documents overlapped between topics, resulting in a total of 36 unique documents. In addition to the main content, we captured titles and publication dates as supplementary metadata.

We also integrated SerpAPI [57] via Haystack’s [58] live content retrieval components, using it to perform a Google search and extract the five most relevant results. To support modular data orchestration within Haystack, our setup allows for easy inclusion of additional sources in various formats (e.g., PDF, CSV, TXT), including the corresponding converters, enabling users to upload and process their own selected content with minimal adjustments.

3.2. RAG Orchestration

The assessed RAG orchestration is based on three standard RAG pipelines: one based on embeddings, one on graphs, and one on web search. The complete information regarding the architecture can be found in [59]. This work is based on that previous research, using the same architecture, with a slight modification to the RAG backend prompt, which was optimized for conducting a complete LLM-based faithfulness evaluation. Each pipeline has a distinctive indexing process, depending on how its data is handled, and uses the embedding-ada-002 model from OpenAI [60] for embedding calculation, along with GPT-4.1-mini [61] (gpt-4_1-mini-2025-04-14) for text generation, with a temperature of 0 (fully deterministic generation) and top_p of 0 (restricting output to the highest-probability tokens only).

The GraphRAG pipeline is built on two core processes, indexing and retrieval/generation, both designed to be modular and configurable. In the indexing phase, we used GPT-4.1-mini to extract structured factual triples from unstructured documents, which were then stored in a Neo4j graph database. Each triple (subject, relation, object) was linked to its source, ensuring semantic richness and traceability. For the initial X documents, this process yielded X triples, X unique relationship types, and X unique entity types, all captured reliably and without ingestion errors.
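A source-linked triple of this kind can be represented with a small record type. The sketch below is illustrative only: the `Triple` class, the `Entity` label, the `RELATION` relationship type, and the property names in the Cypher string are assumed for the example and are not the schema used in the study; the example values are placeholders.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Triple:
    """A (subject, relation, object) fact with a provenance link to its document."""
    subject: str
    relation: str
    object: str
    source_url: str


# Hypothetical Cypher statement: the relation name is stored as a property
# because Cypher does not allow relationship types to be passed as parameters.
MERGE_QUERY = (
    "MERGE (s:Entity {name: $subject}) "
    "MERGE (o:Entity {name: $object}) "
    "MERGE (s)-[:RELATION {type: $relation, source: $source_url}]->(o)"
)


def to_query_params(triple: Triple) -> dict:
    """Turn a triple into the parameter dictionary expected by MERGE_QUERY."""
    return asdict(triple)


# Placeholder values, not data from the study.
example = Triple("Entity A", "supports", "Entity B", "https://example.org/doc-1")
params = to_query_params(example)
```

Keeping the source URL on the relationship, rather than only on the nodes, is one way to let each individual fact be traced back to the document it came from.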

For retrieval and answer generation, the system leverages the knowledge graph to respond to user queries. Search terms are extracted and used to generate dynamic Cypher queries to find relevant entities and relationships. Retrieved results are re-ranked for relevance using the cross-encoder model intfloat/simlm-msmarco-reranker, which predicts how well each document matches the query. The top documents are then used to build a prompt for GPT-4.1-mini, which generates a markdown-formatted answer strictly grounded in the graph-based knowledge, with inline citations to original sources. This approach enables accurate, transparent, and explainable responses, well-suited for domains that demand high reliability.

The embedding pipeline adopted a modular two-stage process, comprising embedding-based indexing and querying, designed for adaptability across diverse applications. During indexing, JSON documents containing text, titles, and source URLs were cleansed and then embedded using OpenAI’s embedding-ada-002 model to generate semantic vectors, which were stored in Neo4j with cosine similarity enabled for efficient retrieval. The system supported different embedding models as needed, and all document processing was managed to ensure clean, high-quality input for embedding.

For querying, the pipeline used a RAG approach: user queries were embedded and matched against stored document vectors to surface the most relevant content. Results were then reranked for relevance with intfloat/simlm-msmarco-reranker, before being passed to a prompt builder for answer generation, using GPT-4.1-mini. The prompt enforced strict grounding in the retrieved documents and included inline HTML links to the original sources for transparency. This setup maintained high flexibility, allowing integration of various open-source and commercial models, ensuring reliable, context-aware responses grounded in cited evidence.
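The retrieve-then-rerank step shared by the pipelines can be illustrated with a minimal sketch. To keep the example self-contained, the cross-encoder is replaced by a toy token-overlap scorer; `rerank`, `overlap_score`, and the `top_k` parameter are hypothetical names, and the documents are placeholders.

```python
from typing import Callable, List, Tuple


def rerank(query: str, documents: List[str],
           score: Callable[[str, str], float], top_k: int = 5) -> List[Tuple[str, float]]:
    """Score each candidate against the query and keep the top_k highest-scoring ones."""
    scored = [(doc, score(query, doc)) for doc in documents]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def overlap_score(query: str, doc: str) -> float:
    """Toy relevance score (an assumption): share of query tokens found in the document."""
    query_tokens = set(query.lower().split())
    doc_tokens = set(doc.lower().split())
    return len(query_tokens & doc_tokens) / len(query_tokens) if query_tokens else 0.0


candidates = ["tariffs on steel imports", "clean industrial deal funding", "weather report"]
top = rerank("steel tariffs", candidates, overlap_score, top_k=2)
```

In the actual pipelines, the scoring function would be the cross-encoder's relevance prediction for each query-document pair; only the top-ranked documents then reach the prompt builder.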

The web-based RAG pipeline was built for real-time content retrieval and generation, leveraging GPT-4.1-mini for answer synthesis. In this approach, live web searches were performed using SerpAPI, and content was extracted from URLs with LinkContentFetcher, allowing the pipeline to collect up-to-date information in direct response to user queries dynamically. Retrieved web pages were processed to extract clean text, titles, and source URLs, ensuring all content was structured consistently for downstream processing.

To ensure relevance and diversity, the pipeline used intfloat/simlm-msmarco-reranker to refine the top five document snippets before generating a response. The prompt builder then enforced strict grounding in the retrieved content, structuring answers in Markdown and citing sources with inline HTML links for transparency. If no sufficient evidence was found, the system returned an “inconclusive” statement, maintaining clarity and reliability. By combining live web search, RAG methods, and modular components, this pipeline delivered timely, well-sourced, and transparent answers grounded in the latest available information.

The agentic configuration combined the embedding pipeline, the GraphRAG pipeline, and web search as modular tools, allowing each to leverage its unique retrieval method for comprehensive and adaptable responses. Queries were processed through all three specialized pipelines (embedding-based internal document search, graph-based internal document search, and real-time web search), with outputs standardized for seamless multi-tool integration. Each pipeline maintained modularity and processed its respective data sources independently, ensuring scalability and consistency within the agentic framework.

When a query was received, an agent-based RAG approach coordinated the three retrieval tools in parallel, synthesizing structured reasoning using insights from embeddings, the knowledge graph, and live web results. A customizable system prompt guided the agent and used GPT-4.1 [61] as the final answer generator, enforcing step-by-step logic and clear separation between internal and web-derived information. Responses were formatted into defined sections: “thought process”, internal search findings, web insights, and any conflicts identified between sources, with inline HTML links for source attribution. This design supports transparent, robust, and nuanced answers, making it ideal for complex domains such as policy research and e-government.
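Parallel coordination of the three retrieval tools could look like the following minimal sketch. The source does not state how the parallelism is implemented, so the thread pool, the `Tool` signature, and the toy tool functions are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical tool signature: each retrieval tool maps a query to a text result.
Tool = Callable[[str], str]


def run_tools_in_parallel(query: str, tools: Dict[str, Tool]) -> Dict[str, str]:
    """Submit every tool at once and collect the results keyed by tool name."""
    with ThreadPoolExecutor(max_workers=len(tools)) as pool:
        futures = {name: pool.submit(tool, query) for name, tool in tools.items()}
        return {name: future.result() for name, future in futures.items()}


# Toy stand-ins for the embedding, graph, and web tools.
tools = {
    "embedding": lambda q: f"embedding hits for {q}",
    "graph": lambda q: f"graph hits for {q}",
    "web": lambda q: f"web hits for {q}",
}
outputs = run_tools_in_parallel("REPowerEU", tools)
```

Keeping the outputs keyed by tool name preserves the separation between internal and web-derived evidence that the agent's system prompt relies on.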

3.3. Triples Evaluation Configuration

The triples evaluation configuration is designed to provide a rigorous, transparent assessment of the accuracy and completeness of extracted knowledge triples. For each document, the original text and its corresponding set of extracted triples were submitted to an evaluation workflow powered by GPT-4.1, with the prompt configuration in Table A2 in Appendix A. This process systematically reviewed each generated triple, determining whether it was “Correct” (fully supported by the underlying text) or “Hallucinated” (not directly substantiated by the passage). Additionally, the LLM-based evaluator supplied concise, context-sensitive explanations for each classification, offering clear insights into why a triple was considered accurate or inaccurate.

In addition to validating existing triples, the configuration is attentive to recall by instructing the evaluator to identify any factual triples present in the text that were not captured by the extraction process, reporting these as “missed triples.” This dual focus ensured that both over-generation (hallucinations) and under-generation (misses) were captured, providing a more holistic view of extraction performance.

The evaluation methodology emphasizes structure and consistency, requiring the LLM to return all results in a standardized, machine-readable format suitable for further analysis and benchmarking, with the prompt configuration presented in Table A1 in Appendix A. Moreover, detailed metadata, such as processing latency and input token counts, were logged for each evaluation, supporting robust comparisons of extraction faithfulness across large document sets.
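A machine-readable evaluator output of this kind can be tallied with a few lines of code. The JSON layout below is a hypothetical example: the key names (`evaluated_triples`, `missed_triples`, `label`, `explanation`) and all values are assumptions for illustration, not the schema defined in the study's prompts.

```python
import json
from collections import Counter

# Hypothetical evaluator output with placeholder values.
raw_output = """
{
  "evaluated_triples": [
    {"triple": ["Entity A", "relates_to", "Entity B"], "label": "Correct",
     "explanation": "Stated directly in the passage."},
    {"triple": ["Entity A", "funds", "Entity C"], "label": "Hallucinated",
     "explanation": "Not mentioned in the passage."}
  ],
  "missed_triples": [["Entity D", "announced", "Entity E"]]
}
"""


def tally_triple_labels(raw: str) -> Counter:
    """Count Correct and Hallucinated labels, plus the number of missed triples."""
    data = json.loads(raw)
    counts = Counter(item["label"] for item in data["evaluated_triples"])
    counts["Missed"] = len(data["missed_triples"])
    return counts


counts = tally_triple_labels(raw_output)
```

Summing such per-document tallies across the corpus gives the counts needed for corpus-level precision and recall.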

By leveraging LLM-driven evaluation with transparent output requirements and comprehensive coverage of both precision and recall, the LLM-based triples evaluation component delivers a highly explainable and reproducible framework for diagnosing strengths and weaknesses in knowledge graph creation. The approach is designed to be well-suited for complex domains where accuracy, completeness, and traceability are critical.

3.4. Simple RAG Faithfulness Assessment Configuration

To assess the factual reliability of the RAG pipelines, a dedicated faithfulness evaluation component was built and employed. This component is designed as a modular architecture that can interface with different language model providers. It supports multiple evaluation backends, allowing the use of state-of-the-art models from OpenAI (GPT-4.1) [61], Anthropic (Claude Sonnet-4.0) [62], and Google (Gemini-2.5-Pro) [63] to independently assess the grounding of each generated answer. First, for every generated answer, GPT-4.1 was instructed, as presented in Table A1 in Appendix A, to extract factual statements from the generated answer.

For every question, the associated context passages and the list of extracted factual statements are passed to the evaluator. The evaluator then prompts the chosen model with standardized scoring instructions, using the prompt configuration presented in Table A4 (Appendix A), ensuring a consistent assessment regardless of the provider. Each statement is assigned a score based on whether it is explicitly supported by the retrieved evidence, unsupported, or marked as inconclusive. Special handling is applied for inconclusive cases, distinguishing between justified uncertainty, where the context truly lacks sufficient information, and unjustified uncertainty, where a definitive answer was in fact available.

Multiple evaluator models are employed intentionally, with each model independently assessing the faithfulness of every pipeline. This approach leverages the complementary strengths of different providers, reducing the risk of evaluator bias and increasing the robustness of the evaluation. By incorporating diverse evaluation perspectives, it ensures a more reliable and model-agnostic comparison of pipeline performance.

The evaluation results include per-statement scores and justifications, along with an aggregated score representing the overall alignment between the answer and its supporting evidence. This architecture enables the interchangeable use of multiple evaluator models while preserving a unified output format, ensuring comparability across the embedding-based, graph-based, and web-based retrieval pipelines.
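Aggregating per-statement labels into pipeline-level percentages can be sketched as follows. The label names and the `label_shares` helper are hypothetical, and the source does not give the formula for its aggregated score, so a plain percentage per label is assumed here.

```python
from collections import Counter
from typing import Dict, List

# Assumed label vocabulary mirroring the categories described in the text.
LABELS = ("supported", "unsupported", "inconclusive_justified", "inconclusive_unjustified")


def label_shares(labels: List[str]) -> Dict[str, float]:
    """Return the percentage of statements carrying each label."""
    counts = Counter(labels)
    total = len(labels)
    return {label: (100.0 * counts[label] / total if total else 0.0) for label in LABELS}


# Placeholder input: three supported statements and one unsupported statement.
shares = label_shares(["supported"] * 3 + ["unsupported"])
```

Because every evaluator returns the same label vocabulary, the same function can be applied unchanged to the outputs of each evaluator model and each pipeline.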

3.5. Agentic RAG Faithfulness Assessment Configuration

Moreover, we assessed the agentic pipeline by building and using a custom LLM-based evaluator as a Haystack component. The agent faithfulness evaluation configuration was designed to strictly assess the grounding, attribution, and conflict handling of answers produced by the agent-based RAG orchestration. As with the standard RAG pipelines evaluator, this evaluation was conducted using three independent evaluator backends: OpenAI’s GPT-4.1 (gpt-4_1-2025-04-14), Anthropic’s Claude Sonnet-4.0 (claude-sonnet-4-20250514), and Google’s Gemini-2.5-Pro (gemini-2.5-pro). All were configured with temperature 0 (and top_p of 0 for GPT) to produce stable, reproducible scoring outputs across repeated runs, enabling reliable benchmarking of agent performance.

Each evaluation instance was provided with the following inputs: the original query, the predicted answer generated by the agent, the embedding search context, the graph search context, the web search context, and a pre-extracted list of factual statements from the answer. The evaluator was explicitly instructed, as presented in Table A5 (Appendix A), not to extract statements on its own, but to analyze and score only the provided list, ensuring controlled and auditable scoring.

For each statement, the evaluator applied a standardized (configurable) scoring rubric (discrete, not continuous) tailored to multi-source RAG assessment. This rubric covered a broad set of conditions, as follows:

Whether a statement was supported exclusively by the embedding search context, the graph search context, or by both (scored 1, 2, or 3, respectively).

Whether a statement represented a conflict between internal knowledge and web search findings, and if so, whether this conflict was correctly identified and explicitly marked (score 4 for correct conflict identification, score 5 for incorrectly marked conflicts, and score 6–7 for unmarked conflicts).

Whether a statement was unsupported by any context (score 0).

Whether a statement originated from the web context and was properly attributed (score 9 for correct attribution, or −9 if the web attribution was missing).

Whether the answer explicitly indicated the absence of conflict, where none existed (score 8).
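The discrete rubric above maps naturally onto an enumeration. In the sketch below, the member names and the `evidence_group` helper are hypothetical; in particular, the text does not spell out how scores 6 and 7 differ, so they are given neutral names, and the grouping into four families is an assumption made for illustration.

```python
from enum import IntEnum


class AgentScore(IntEnum):
    """Discrete rubric codes as listed in the text; member names are assumptions."""
    UNSUPPORTED = 0
    EMBEDDING_ONLY = 1
    GRAPH_ONLY = 2
    EMBEDDING_AND_GRAPH = 3
    CONFLICT_CORRECTLY_MARKED = 4
    CONFLICT_INCORRECTLY_MARKED = 5
    CONFLICT_UNMARKED_6 = 6   # exact distinction between 6 and 7 is not given here
    CONFLICT_UNMARKED_7 = 7
    NO_CONFLICT_STATED = 8
    WEB_ATTRIBUTED = 9
    WEB_UNATTRIBUTED = -9


def evidence_group(score: AgentScore) -> str:
    """Collapse rubric codes into coarse families (an illustrative grouping)."""
    if score in (AgentScore.EMBEDDING_ONLY, AgentScore.GRAPH_ONLY,
                 AgentScore.EMBEDDING_AND_GRAPH):
        return "internal"
    if score in (AgentScore.WEB_ATTRIBUTED, AgentScore.WEB_UNATTRIBUTED):
        return "web"
    if score is AgentScore.UNSUPPORTED:
        return "unsupported"
    return "conflict_handling"


group = evidence_group(AgentScore(3))
```

Because the codes are categorical rather than ordinal, they should be counted per category rather than averaged.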

Each score was accompanied by a concise justification, referencing the specific context(s) that either supported the statement or demonstrated its conflict. This ensured traceability and a clear link between each evaluation decision and the underlying evidence.

The evaluation prompt was carefully designed to enforce strict JSON output formatting, with one object per statement, maintaining the exact wording of each statement. This structure enabled automated aggregation of evaluation results and further analysis of agent behavior. By combining a deterministic evaluation setup, a detailed conflict-aware scoring rubric, and multi-model redundancy across three evaluator backends, the faithfulness evaluation provided a transparent and reproducible method to measure how well the agent grounded its answers in retrieved evidence and properly attributed its sources.
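A strict output contract of this kind can be checked before aggregation. The following is a minimal sketch under stated assumptions: the key names `statement`, `score`, and `justification` are hypothetical, and the check simply requires one object per provided statement with its wording unchanged.

```python
import json
from typing import List


def validate_evaluator_output(raw: str, statements: List[str]) -> List[dict]:
    """Parse the evaluator's JSON and verify one object per statement, wording intact."""
    items = json.loads(raw)
    if not isinstance(items, list) or len(items) != len(statements):
        raise ValueError("expected exactly one JSON object per provided statement")
    for item, expected in zip(items, statements):
        if not isinstance(item, dict) or item.get("statement") != expected:
            raise ValueError(f"statement wording changed or missing: {expected!r}")
        if not isinstance(item.get("score"), int):
            raise ValueError(f"missing integer score for: {expected!r}")
    return items


# Placeholder evaluator response for a single statement.
raw = json.dumps([{"statement": "s1", "score": 3, "justification": "Both contexts."}])
validated = validate_evaluator_output(raw, ["s1"])
```

Rejecting malformed responses at this point keeps paraphrased or dropped statements from silently distorting the aggregated scores.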

This configuration ensured that evaluation results not only highlighted factual correctness but also exposed weaknesses in conflict handling and source attribution, providing a fine-grained diagnostic framework for improving multi-source RAG agent reliability.

4. Results

The following section presents the results of a comprehensive evaluation of the simple RAG and Agentic pipelines, focusing on factual grounding, retrieval accuracy, and source attribution. Results start with the triple extraction component, which underpins the graph-based retrieval pipeline and was assessed for accuracy and completeness using a precision-recall framework. This is followed by a detailed comparison of the embedding-based, graph-based, and web-based RAG pipelines, evaluated independently by three leading large language models: GPT-4.1, Claude Sonnet-4.0, and Gemini 2.5 Pro. Each model assessed the factual alignment of generated responses with the retrieved context across pipelines. Finally, the section concludes with the evaluation of the agent-based RAG orchestration, which integrates all retrieval modes. This part examines how effectively the agent reasons over multi-source inputs, flags and resolves conflicts, and attributes evidence. The tables and narrative that follow detail the performance of each component, providing a grounded view of reliability and areas for improvement across the system.

4.1. Triples Extraction Evaluation

The triple extraction component was evaluated on a set of 36 documents, resulting in the assessment of 1591 extracted factual triples, of which 1589 were classified as correct and only 2 were identified as hallucinated, reflecting an extremely high precision of 0.999, as presented in Table 1. This indicates that the system almost never generates unsupported triples. Moreover, the evaluation identified 63 missed triples (factual information present in the text that was not captured during extraction), resulting in a recall score of 0.962. While slightly lower than precision, this still represents a strong ability to extract relevant facts comprehensively. High precision ensures reliability by minimizing hallucinations, while high recall is critical when the goal is comprehensive coverage of official documents. Lastly, the combined F1 score of 0.980 demonstrates a high overall performance, balancing accuracy with completeness.
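The reported figures can be reproduced from the counts given above. The sketch assumes the usual definitions, with correct triples as true positives, hallucinated triples as false positives, and missed triples as false negatives; the function name is hypothetical.

```python
from typing import Tuple


def precision_recall_f1(correct: int, hallucinated: int, missed: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 from triple-level counts."""
    precision = correct / (correct + hallucinated)
    recall = correct / (correct + missed)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Counts reported in the text: 1589 correct, 2 hallucinated, 63 missed.
precision, recall, f1 = precision_recall_f1(correct=1589, hallucinated=2, missed=63)
print(f"{precision:.3f} {recall:.3f} {f1:.3f}")  # prints: 0.999 0.962 0.980
```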

These results confirm that the triple extraction pipeline is highly reliable and exhibits minimal hallucination, making it a solid foundation for graph-based retrieval systems in domains like e-governance that demand traceable factual accuracy.

4.2. Simple RAG Pipelines Faithfulness Evaluation

The following subsection presents a comparative assessment of the three RAG pipelines, embedding-based, graph-based, and web-based, based on evaluations conducted by GPT-4.1, Claude Sonnet-4.0, and Gemini 2.5 Pro. Each model independently judged the factual grounding of responses, highlighting the relative reliability of each retrieval approach and revealing subtle differences in how each model interprets support, conflict, and uncertainty. The results below provide a breakdown of performance per model across all three retrieval pipelines.

Regarding GPT-4.1-based faithfulness evaluation (Table 2), all three simple RAG pipelines exhibited strong factual alignment, with consistently high support rates and minimal uncertainty. Specifically, the embedding-based pipeline performed reliably, with 97.7% of statements marked as supported and only 2.2% as unsupported. Moreover, the graph-based pipeline achieved slightly higher accuracy, with 98.4% of statements supported and only 1.5% unsupported, reflecting strong consistency in structured retrieval. Additionally, the web-based pipeline reached 99.9% support and only two unsupported cases, making it the most faithful pipeline in this evaluation. Across all modes, GPT-4.1 detected just four inconclusive cases, all correctly identified, highlighting strong confidence in the quality of evidence used across the pipelines.

Claude Sonnet-4.0 (Table 3) provided a consistent and moderately more conservative evaluation of the RAG pipelines. The embedding pipeline scored 97.0% supported and 2.9% unsupported statements, reflecting strong, but slightly reduced alignment compared to GPT-4.1. Additionally, in the graph pipeline, performance dipped slightly further, with 96.2% supported and 3.7% unsupported. In comparison, the web-based pipeline remained a strong performer under Claude’s assessment, with 99.4% support and just 0.6% unsupported statements. Like GPT-4.1, Claude identified two correct inconclusive cases per pipeline and no incorrect ones. Overall, Claude’s evaluation reinforces the relative strength of the web-based pipeline, while identifying slightly more gaps in internally retrieved content.

Gemini 2.5 Pro (Table 4) exhibited more selective evaluation patterns across the simple RAG pipelines, with lower support rates and higher identification of unsupported statements. Based on the results, the embedding-based pipeline reached 95.9% support, with 4.0% of statements marked as unsupported, while the graph-based pipeline showed a similar trend, with 95.8% support and 4.1% unsupported. Moreover, the web-based pipeline still performed well, achieving 98.4% supported statements and 1.6% unsupported. Gemini identified two correct inconclusive cases in the internal pipelines and none in the web mode, with no incorrect inconclusive cases reported. This suggests that while all pipelines maintained high faithfulness, Gemini applied stricter standards, especially to non-web-based retrievals.

4.3. Agentic RAG Faithfulness Evaluation

The agent-based RAG orchestration was evaluated across multiple evidence types and conflict-handling scenarios by three top-tier language models. Across all evaluators, the results reveal strong grounding fidelity based on individual pipelines’ outputs, robust multi-source integration, and effective conflict handling, with slight variation in sensitivity between models.

Internal evidence use was prominent: the majority of statements were supported by internal sources, either embeddings, graphs, or both. GPT-4.1 rated the highest proportion as supported by both internal sources (36.5%), followed by Claude Sonnet-4.0 (32.8%) and Gemini 2.5 Pro (28.5%). Embedding-only and graph-only supports remained relatively low across all three, confirming balanced multi-source utilization (Table 5).

Web-based support was also frequently recognized. All evaluators flagged around 35–39% of statements as supported by web evidence. GPT-4.1 showed perfect attribution with no unmarked web-supported statements, while Gemini marked a notable 7.3% as web-supported but unmarked, suggesting a potential attribution issue in that context. Claude reported 3.3% unmarked.

Conflict handling was largely accurate, but with some divergence. Claude and Gemini identified more correctly flagged conflicts (1.7% and 2.6%, respectively) than GPT (1.2%), but Gemini also surfaced a small number of unflagged or unacknowledged conflicts, indicating stricter scrutiny. Only Gemini found a case of unacknowledged conflict.

Unsupported statements were rare. GPT flagged only one (0.1%), Claude seven (0.4%), and Gemini forty (2.2%), again suggesting that Gemini applies more rigorous standards in evaluating factual alignment.

5. Discussion

To assess the factual reliability of each RAG pipeline for e-governance use cases, we analyzed the performance of embedding-based, graph-based, web-based, and agentic retrieval strategies using evaluations conducted by three top-tier language models: GPT-4.1, Claude Sonnet-4.0, and Gemini 2.5 Pro. The evaluation focused on question-level fidelity, with particular emphasis on the rate of answers fully supported by evidence. This is the most meaningful metric in contexts demanding high accountability, such as e-government service delivery and policy decision support.

As presented in Table 6, the embedding-based pipeline produced fully supported answers in 85.0% of the 120 evaluated cases, whereas the graph-based pipeline achieved 90.0%. The difference becomes particularly relevant when considering that graph-based indexing is designed for structured, traceable fact storage, and is thus well-suited to governance-related tasks such as benefits eligibility, policy audits, or regulation retrieval, where factual completeness and low hallucination rates are paramount. The same table shows that hallucinations appeared in 13.3% of embedding queries versus only 8.3% in the graph pipeline, supporting the observation that embeddings, while flexible, are more prone to surface semantically relevant yet unsupported material.
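The question-level metric can be derived from statement-level labels, as in the minimal sketch below. It assumes that an answer counts as fully supported only when every one of its extracted statements is labeled supported; this rule, the label names, and the function name are assumptions for illustration. The count of 102 answers is inferred from the reported 85.0% of 120 cases and is not stated in the text.

```python
from typing import List


def fully_supported_rate(per_question_labels: List[List[str]]) -> float:
    """Percentage of questions whose statements are all labeled 'supported'."""
    fully = sum(
        1 for labels in per_question_labels
        if labels and all(label == "supported" for label in labels)
    )
    return 100.0 * fully / len(per_question_labels)


# Illustrative input: 102 fully supported answers and 18 with an unsupported statement.
questions = [["supported", "supported"]] * 102 + [["supported", "unsupported"]] * 18
rate = fully_supported_rate(questions)
```

Under this rule a single unsupported statement disqualifies the whole answer, which explains why question-level rates sit well below the statement-level support rates.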

The same pattern is confirmed in Table 7, where the graph pipeline slightly outperforms embeddings again, producing 80.8% fully supported answers compared to 80.0%. Claude’s more conservative judgment also raises the hallucination rates for both pipelines, but the relative gap remains.

Similarly, Table 8 further supports this finding, showing 79.2% fully supported answers for graph-based queries compared to 75.8% for embeddings. Across all models, then, the graph pipeline demonstrates more consistent grounding, which is especially relevant for applications requiring strict traceability of public-sector knowledge graphs, such as legislation mapping or procurement tracking.

Web-based RAG emerged as the most faithful among standalone pipelines, achieving the highest rate of fully supported answers across all evaluators, 98.3% under GPT-4.1, 96.7% under Claude, and 85.8% under Gemini. These results reflect the benefits of real-time retrieval and effective synthesis for answering queries tied to dynamic public information, such as election updates or policy changes.

However, despite low hallucination rates, the credibility of retrieved web sources remains a critical factor. In contrast to internal documents, which are inherently trustworthy, web content may introduce inaccuracies if not properly sourced. Therefore, even when factual alignment appears strong, the overall accuracy of the answer still depends on the reliability of the underlying sources.

Moreover, it is critical that attribution issues, such as missing web citations or unmarked content conflicts, are carefully examined. While this study did not evaluate the credibility of web domains, it is worth noting that information from non-accredited or unverified publishers can potentially introduce inaccuracies. In comparison, when using internal documents, identifying and addressing potential conflicts within the context retrieved can help mitigate these risks and enhance trust in the final output.

The agentic RAG pipeline, which integrates all three retrieval modes (embedding, graph, and web), achieved faithfulness scores among the highest in the evaluation: the highest under GPT-4.1 and close to the web-only pipeline under Claude and Gemini. However, this result is not directly comparable to the standalone pipelines, because the agent was not grounded in raw documents; instead, it operated on the already-generated outputs of the embedding, graph, and web systems. In practice, this means the agent’s faithfulness reflects a second-layer verification task, assessing how effectively it synthesizes, reconciles, and preserves factual content distilled by the base pipelines, rather than performing first-order retrieval grounding itself.

According to GPT-4.1’s coverage results, 119 out of 120 answers (99.2%) were fully supported, with only one hallucinated case. Claude’s results also show strong reliability, with 95.8% of agentic answers fully supported. Even Gemini’s stricter evaluation confirmed the robustness of the agentic strategy, with 84.2% fully supported answers, marginally below the web-only pipeline (85.8%) and ahead of the embedding and graph-based retrievals. These outcomes are detailed in the agentic column of each evaluator’s results table, reinforcing the benefit of multi-source evidence fusion in complex reasoning tasks.
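The rates quoted in this section can be cross-checked with a few lines of Python. The sketch below is not part of the study's released code; it only re-tabulates the fully supported percentages reported in the text, and the dictionary layout and function names are illustrative assumptions. It converts a count into a rate, averages each pipeline over the three judges, and derives the graph-versus-embedding gap per judge.

```python
from statistics import mean

# Fully supported answer rates (%) as quoted in the text for each evaluator.
# The data layout is an illustrative assumption, not the study's own format.
FULLY_SUPPORTED = {
    "GPT-4.1": {"embedding": 85.0, "graph": 90.0, "web": 98.3, "agentic": 99.2},
    "Claude": {"embedding": 80.0, "graph": 80.8, "web": 96.7, "agentic": 95.8},
    "Gemini": {"embedding": 75.8, "graph": 79.2, "web": 85.8, "agentic": 84.2},
}


def rate_from_counts(supported: int, total: int) -> float:
    """Percentage of fully supported answers, rounded to one decimal."""
    return round(100.0 * supported / total, 1)


def mean_across_judges(pipeline: str) -> float:
    """Average one pipeline's fully supported rate over the three LLM judges."""
    return round(mean(rates[pipeline] for rates in FULLY_SUPPORTED.values()), 1)


def graph_minus_embedding(judge: str) -> float:
    """Percentage-point gap between graph and embedding pipelines for one judge."""
    rates = FULLY_SUPPORTED[judge]
    return round(rates["graph"] - rates["embedding"], 1)


if __name__ == "__main__":
    print("agentic, GPT-4.1:", rate_from_counts(119, 120))
    for pipeline in ("embedding", "graph", "web", "agentic"):
        print(pipeline, mean_across_judges(pipeline))
    for judge in FULLY_SUPPORTED:
        print(judge, "graph - embedding:", graph_minus_embedding(judge))
```

Averaging over judges is only a summary device here; because the three evaluators differ in strictness, the per-judge gaps are the more informative quantity.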

What distinguishes the agentic pipeline is its architectural design, which explicitly separates evidence sources and applies step-by-step logic for synthesis, allowing it to identify and manage conflicts, attribute sources correctly, and maximize contextual alignment. This is especially critical in governance applications involving multi-agency data, evolving legislation, or public transparency initiatives, where conflicting or incomplete information must be overseen responsibly.

Across pipelines, the evaluators operated over markedly different token volumes, which shape how comparative results should be interpreted, as presented in Table 9. The embedding, graph, and web-based pipelines each supplied full retrieved evidence to the LLM judges, averaging 7063, 11,029, and 8628 input tokens per evaluation, respectively.

The agentic evaluation was considerably more compact at 4891 tokens, not due to reduced context depth, but because the agent’s evaluation prompt did not include raw documents; instead, it passed the generated answers produced by the individual pipelines, meaning the evaluator assessed an abstraction layer above the source retrieval. This positions the agentic scoring process as second-order faithfulness verification, where the model is judged on how well it maintains factual integrity when summarizing, merging, and reconciling evidence already distilled by embedding, graph, and web retrieval streams.

Importantly, all three evaluators, GPT-4.1, Claude Sonnet-4.0, and Gemini 2.5 Pro, were presented with the same input prompt per question, ensuring that differences in scoring reflect evaluative behavior, rather than variation in instructions or evidence exposure. Additionally, token estimates for all augmented prompts were computed using the GPT-4.1 tokenizer, meaning absolute values reflect that encoding standard and should be interpreted with this baseline in mind, particularly given that the agentic configuration is assessed at a more distilled knowledge layer rather than directly against full document context.

Lastly, the evaluation results captured across all evaluators confirm that the graph-based pipeline offers a measurable advantage over embeddings in internal document retrieval tasks, particularly in terms of producing fully supported answers and minimizing hallucination. The web-based pipeline is accurate for up-to-date factual coverage and real-world responsiveness, especially when retrieving from accredited domains, while the agentic configuration combines those pipelines, making it a promising candidate for large-scale, high-stakes e-governance deployments where different architectures and sources are combined. These findings underscore the importance of selecting pipeline architectures aligned with the fidelity, traceability, and recency demands of specific public-sector use cases.

Limitations and Threats to Validity

While this study employs three independent LLMs (GPT-4.1, Claude Sonnet-4.0, and Gemini 2.5 Pro) as automated evaluators, it does not yet incorporate a human-annotated gold standard for calibration. Establishing such a baseline would enable quantitative comparison of LLM judgments with human agreement levels and help validate their reliability in detecting hallucinations and redundancy. Although large-scale manual annotation was beyond the practical scope of the current work, we performed spot-checks on a random sample of evaluation outputs to ensure that model-judged labels aligned with human expectations. Nevertheless, future extensions of this framework will include a systematic human validation study using dual independent annotators and agreement measurement, in order to strengthen external validity and provide a reference standard for automated faithfulness evaluation.
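As an illustration of what agreement measurement between two independent annotators could look like, the following minimal sketch computes Cohen's kappa from two label sequences. Cohen's kappa is one common choice and is our assumption here; the study does not name a specific agreement statistic. The label values and the example data are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item set.")
    n = len(labels_a)

    # Observed agreement: share of items given the same label by both annotators.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: product of each annotator's marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical statement-level labels from two annotators.
    annotator_1 = ["supported", "supported", "hallucinated", "supported"]
    annotator_2 = ["supported", "hallucinated", "hallucinated", "supported"]
    print(cohens_kappa(annotator_1, annotator_2))
```

The same function could be applied to compare each LLM judge against a human reference label set, which would give a per-evaluator agreement score.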

The experiments presented in this study are intended primarily to demonstrate the proposed framework rather than to establish a benchmark dataset or comprehensive empirical evaluation. The set of 120 questions used for illustration was selected to cover representative topics within the e-government domain and serves as an example of how the framework can be applied in practice. The main contribution lies in the modular design and open availability of the framework itself; therefore, users can readily reproduce the workflow using their own question sets or domain data.

This study primarily aims to propose and demonstrate a modular framework for faithfulness evaluation in RAG systems, rather than to perform formal statistical hypothesis testing. The reported precision, recall, and fully supported answer rates serve as illustrative metrics to showcase how the framework can support comparative analysis across different RAG architectures. Given the exploratory and methodological focus, the present version does not include formal significance testing or bootstrapped confidence intervals. The dataset size (36 press releases) was chosen to provide representative coverage of the e-government domain sufficient for framework validation. To mitigate evaluator variance, we report aggregated averages across three independent LLM judges, offering a stability check for the framework’s evaluation layer. Future work will extend this framework to larger datasets and include statistical testing (e.g., bootstrapped confidence intervals and paired significance tests) to support more generalizable inferences.
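To make the planned statistical extension concrete, the sketch below shows a percentile bootstrap confidence interval for a fully supported rate. It is not an analysis performed in this study. The per-question outcomes are reconstructed from a reported rate (90.0% of 120 questions, i.e., 108 fully supported answers for the graph pipeline under GPT-4.1), and the function name, resample count, and seed are assumptions chosen for illustration.

```python
import random
from typing import List, Tuple


def bootstrap_ci(
    outcomes: List[int],
    n_resamples: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float]:
    """Percentile bootstrap CI for the mean of binary outcomes (1 = fully supported)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(outcomes)

    # Resample the questions with replacement and record each resample's rate.
    rates = sorted(
        sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples)
    )

    # Take the alpha/2 and 1 - alpha/2 percentiles of the resampled rates.
    lower = rates[int((alpha / 2) * n_resamples)]
    upper = rates[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


if __name__ == "__main__":
    # Reconstructed outcomes: 108 fully supported answers out of 120.
    graph_outcomes = [1] * 108 + [0] * 12
    low, high = bootstrap_ci(graph_outcomes)
    print(f"95% CI for the fully supported rate: [{low:.3f}, {high:.3f}]")
```

With only 120 questions, intervals of this kind are wide relative to the gaps between pipelines, which is one reason the per-question outcomes would be needed for a paired test rather than a comparison of separate intervals.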

A potential limitation of this study arises from evaluator circularity, as LLMs were used both as content generators and as evaluators of faithfulness. For example, different models could apply slightly different interpretive heuristics when judging “support” or “redundancy.” Future work will include human-in-the-loop calibration to empirically assess evaluator bias by comparing human judgments with each LLM family’s scoring trends, thereby quantifying the extent of circularity, and improving interpretive robustness.

6. Conclusions and Future Work

The comparative evaluation of the three standalone RAG pipelines (embedding-based, graph-based, and web-based) on e-governance data demonstrates distinct strengths. Across all evaluators, the graph-based pipeline consistently outperformed embeddings in internal document retrieval, delivering higher rates of fully supported answers and fewer hallucinations. This advantage holds under GPT-4.1 (90.0% vs. 85.0% fully supported), Claude (80.8% vs. 80.0%), and Gemini (79.2% vs. 75.8%), as shown in Table 6, Table 7 and Table 8. The graph pipeline’s advantage derives from its ability to store and retrieve structured, traceable knowledge, making it well-suited for applications where factual completeness and verifiable provenance are essential, such as policy audits, legislative mapping, and regulatory compliance checks.

The embedding-based pipeline, while showing slightly lower factual grounding than the graph approach, offers significant advantages in speed, scalability, and operational cost. Embedding-based indexing and retrieval are computationally lighter, allowing for near-instantaneous query responses and efficient processing of large, diverse document collections. This makes it highly suitable for governance scenarios requiring rapid turnaround times and broad semantic coverage, such as citizen inquiry handling, public information portals, or early-stage policy research where comprehensiveness and timeliness outweigh the need for absolute traceability.

The web-based pipeline emerged as a strong performer, retrieving content from the web in real time and excelling in scenarios that demand live access to changing information. It achieved the highest rates of fully supported answers among the three pipelines, 98.3% under GPT-4.1, 96.7% under Claude, and 85.8% under Gemini, but its reliability depends strongly on the credibility of the retrieved sources. Unlike internal documents, web content can introduce inaccuracies if not drawn from accredited domains or trusted publishers. Even with low hallucination rates, attribution must be transparent, and issues such as missing web citations or unmarked content conflicts should be proactively addressed to sustain trust in outputs.

Moreover, by integrating the structured precision of graph retrieval, the flexibility and speed of embeddings, and the timeliness of web search, the agentic pipeline maintained strong factual support across evaluators, with 99.2% of answers fully supported under GPT-4.1, 95.8% under Claude, and 84.2% under Gemini, and with minimal hallucinations. Its architectural separation of evidence sources, explicit conflict handling, and step-by-step reasoning make it especially resilient for governance applications involving multi-agency datasets, evolving regulations, or situations where conflicting information must be responsibly reconciled. However, the validity of the outputs of the underlying tools (the simple RAG pipelines) remains essential, because the synthesized answer relies on them.

These results also reflect the specific pipeline configurations, indexing strategies, and prompting parameters used in this study. Performance may vary under different implementations, datasets, or evaluation settings, meaning that while the relative patterns are instructive, the absolute scores are tied to the evaluated setup.

Taken together, these results underscore that no single retrieval strategy is universally optimal; instead, pipeline selection should be driven by the faithfulness, traceability, and recency requirements of the target application. Graph-based retrieval offers the most dependable grounding for authoritative internal records. Embedding-based retrieval provides a faster and more cost-efficient option, ideal for high-volume, low-latency use cases, but with a trade-off in precision and traceability. Web-based retrieval delivers unmatched responsiveness for dynamic information needs but demands strict source accreditation and attribution controls.

Agentic orchestration achieved high overall accuracy, reflecting its capacity to integrate and reason over the outputs of multiple specialized RAG pipelines. In this setup, however, its performance cannot be interpreted or compared independently of those pipelines as presented in Table 6, Table 7 and Table 8, since the agent’s grounding is based on their generated answers rather than on the original documents.

Based on the presented results, agentic reliability reflects a second-order assessment, faithfulness to already-processed knowledge, rather than a direct evaluation against source material. This underscores the critical role of well-calibrated and domain-aligned base pipelines in ensuring factual integrity and interpretability within the orchestration process. Therefore, future work should extend evaluation to compare agent outputs against raw source material, rather than only pipeline-generated summaries.

Beyond its accuracy, the Agentic RAG framework offers enhanced source attribution by explicitly linking final responses to their originating pipelines, thereby improving traceability and transparency. Moreover, by operating on condensed, pipeline-level outputs rather than the entire raw retrieval context, it effectively reduces the context window required for reasoning, an advantage for efficiency and scalability in large-scale deployments.

Nevertheless, deploying agentic systems in real-world e-governance scenarios presents notable challenges. Their multi-tool orchestration and iterative reasoning structures demand greater computational resources and longer inference times, while reliance on external APIs introduces potential latency, rate-limit, and data-privacy concerns. Consequently, their adoption must align with infrastructure readiness, governance standards, and ethical safeguards. Future research should focus on optimizing these architectures through techniques such as caching, model distillation, and hybrid offline–online retrieval setups to enhance their efficiency, reliability, and suitability for trustworthy large-scale deployment.

Future Work

Future research will focus on expanding the proposed evaluation framework to additional retrieval modalities and domains beyond the current structured settings. While this study primarily benchmarks hallucination and redundancy detection in simple and agentic GraphRAG systems, future work should explore multi-modal agentic pipelines that integrate textual, tabular, and visual knowledge sources, where attribution and faithfulness assessment become even more challenging. Incorporating temporal and streaming data could also enable dynamic provenance tracking in real-time decision-making scenarios, such as policy monitoring or crisis management, where context evolves continuously.