Enhancing POI Recognition with Micro-Level Tagging and Deep Learning

Abstract

1. Introduction

- Introducing micro-level tagging, a method that systematically generates enriched contextual metadata by identifying and annotating detailed attributes of individual objects, such as colors, within images.

- Developing a specialized Transformer-based deep learning model trained on micro-level contextual metadata, enhancing scene recognition with precise contextual understanding beyond standard classification approaches.

- Evaluating the proposed model within a POI-based recommendation system, showcasing its potential and robustness.

2. Materials and Methods

2.1. Related Work

2.2. Transformer Models

2.3. Proposed Model

- Class Head: A fully connected classification layer responsible for predicting a single label from a predefined set of categories, each representing a type of POI image (e.g., amusement park, airport).

- Tags Head: A multi-label prediction head designed to identify all relevant descriptive tags associated with an image (e.g., crowded, colorful_cars).

- Objects Head: A prediction branch introduced to model object presence information derived from structured annotations (e.g., car, person, truck).
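The following minimal Python sketch decodes raw logits from the three heads. It shows the difference between the single-label Class Head and the multi-label Tags and Objects heads. The function names, the example logits, and the 0.5 decision threshold are illustrative assumptions, not the authors' code.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(v):
    """Logistic sigmoid, written to avoid overflow for large |v|."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def decode_heads(class_logits, tag_logits, obj_logits,
                 class_names, tag_names, obj_names, threshold=0.5):
    """Map raw head outputs to (scene, scene probability, tags, objects)."""
    probs = softmax(class_logits)                  # single-label: one winner
    best = max(range(len(probs)), key=probs.__getitem__)
    # multi-label: every tag/object whose independent sigmoid passes the threshold
    tags = [n for n, z in zip(tag_names, tag_logits) if sigmoid(z) >= threshold]
    objects = [n for n, z in zip(obj_names, obj_logits) if sigmoid(z) >= threshold]
    return class_names[best], probs[best], tags, objects

scene, prob, tags, objects = decode_heads(
    class_logits=[2.0, 0.1, -1.0], tag_logits=[1.5, -2.0], obj_logits=[0.3, -0.7, 2.2],
    class_names=["amusement_park", "airport", "beach"],
    tag_names=["crowded", "colorful_cars"],
    obj_names=["car", "person", "truck"],
)
print(scene, tags, objects)  # amusement_park ['crowded'] ['car', 'truck']
```

The Class Head always returns exactly one label. The other two heads may return zero, one, or several labels for the same image.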

2.3.1. Datasets

2.3.2. Data Pipeline Preparation

- Image: the filename of the processed image;

- Place: the class of the processed image, indicating the scene;

- Tags: contextual tags generated automatically by predefined rules applied to the detected objects;

- Objects: a mapping whose keys are the object classes (e.g., “person”, “truck”) and whose values are their respective counts in the image. Objects are further separated by additional attributes (e.g., color), with each variant counted separately.
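A record with the four fields above could be assembled roughly as follows. This sketch rests on several invented details. The `(label, color)` detection tuples, the hypothetical `color_label` key format for color variants (e.g. `red_car`), and the example filename are all illustrative. Keeping the plain per-class count alongside the color variants is a guess at the schema.

```python
import json
from collections import Counter

def build_record(image, place, detections, tags):
    """Build one record with the Image, Place, Tags and Objects fields.

    detections: list of (label, color) pairs; color may be None.
    """
    objects = Counter()
    for label, color in detections:
        objects[label] += 1                      # per-class count
        if color is not None:
            objects[f"{color}_{label}"] += 1     # per color variant (hypothetical key format)
    return {"Image": image, "Place": place,
            "Tags": sorted(tags), "Objects": dict(objects)}

record = build_record(
    image="beach_0001.jpg",                      # hypothetical filename
    place="beach",
    detections=[("person", None), ("person", None),
                ("car", "red"), ("car", "blue"), ("car", "red")],
    tags={"transportation"},
)
print(json.dumps(record, indent=2))
```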

2.3.3. Training of the Model

- Tags Head: outputs logits for multiple descriptive tags;

- Class Head: predicts a single scene category;

- Objects Head: performs multi-label prediction for object presence.
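The three head outputs might be combined into one training objective as in the minimal sketch below. It assumes softmax cross-entropy for the Class Head and mean binary cross-entropy on logits for the Tags and Objects heads. The weights `alpha`, `beta`, and `gamma` follow the ablation settings of Section 3.4, where α weights Tags, β weights Class, and γ weights Objects. The helper names are hypothetical.

```python
import math

def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample with integer class index `target`."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[target]

def bce_with_logits(logits, targets):
    """Mean binary cross-entropy over independent labels with multi-hot targets."""
    total = 0.0
    for z, y in zip(logits, targets):
        # numerically stable form of -[y*log(sig(z)) + (1-y)*log(1-sig(z))]
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)

def total_loss(class_logits, class_target, tag_logits, tag_targets,
               obj_logits, obj_targets, alpha=1.0, beta=1.0, gamma=1.0):
    """Weighted sum of the Tags (alpha), Class (beta) and Objects (gamma) losses."""
    return (alpha * bce_with_logits(tag_logits, tag_targets)
            + beta * cross_entropy(class_logits, class_target)
            + gamma * bce_with_logits(obj_logits, obj_targets))

full = total_loss([0.0, 0.0], 0, [0.0], [1], [0.0], [0])
no_objects = total_loss([0.0, 0.0], 0, [0.0], [1], [0.0], [0], gamma=0.0)  # A1-style
print(round(full, 4), round(no_objects, 4))  # 2.0794 1.3863
```

Setting one weight to zero removes that head's gradient signal entirely, which is how the ablation variants differ.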

2.4. Recommender Systems

2.4.1. Content-Based Filtering

2.4.2. Collaborative Filtering

- User-based collaborative filtering, which recommends locations based on the preferences of users with similar profiles.

- Item-based collaborative filtering, which suggests locations that share user visit patterns with previously visited POIs.
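The two collaborative filtering variants can be illustrated on a toy binary user-by-POI visit matrix. This textbook-style sketch uses cosine similarity and is not the implementation used in this work. The user-based score is a similarity-weighted average of other users' visits. The item-based score sums the similarities between a candidate POI and the POIs the user has already visited.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def user_based_score(visits, user, poi):
    """Similarity-weighted average of other users' visits to `poi`."""
    num = den = 0.0
    for other, row in enumerate(visits):
        if other == user:
            continue
        sim = cosine(visits[user], row)
        num += sim * row[poi]
        den += abs(sim)
    return num / den if den else 0.0

def item_based_score(visits, user, poi):
    """Sum of similarities between `poi` and the POIs `user` already visited."""
    columns = list(zip(*visits))          # one visit vector per POI
    return sum(cosine(columns[poi], columns[other])
               for other, visited in enumerate(visits[user])
               if visited and other != poi)

visits = [
    [1, 1, 0, 0],  # user 0 visited POIs 0 and 1
    [1, 1, 1, 0],  # user 1
    [0, 0, 1, 1],  # user 2
]
for poi in (2, 3):  # POIs user 0 has not visited yet
    print(poi, round(user_based_score(visits, 0, poi), 3),
          round(item_based_score(visits, 0, poi), 3))
```

Both variants rank POI 2 above POI 3 for user 0, because POI 2 is visited by a user whose history overlaps with user 0's.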

2.4.3. Hybrid Approaches

2.4.4. Integrating WORLDO with Recommender System

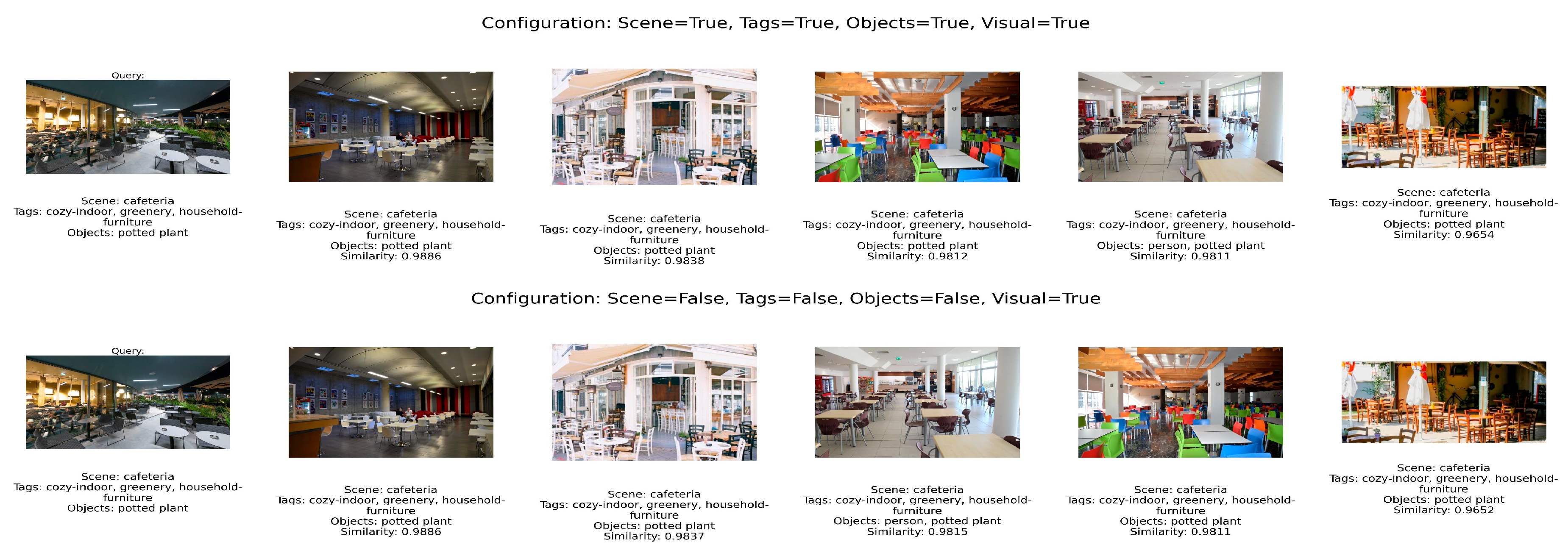

- Visual Embedding: The ViT-based visual features are included in the recommendation process but can be disabled, in which case the system relies only on semantic features (scene, tags, and objects) for the recommendations.

- Scene Classification: The category of the POI (e.g., amusement park, airport, beach). If enabled, the system incorporates the scene class into the feature vector used for similarity calculation; if disabled, the scene category is ignored when comparing POIs.

- Tags: Descriptive labels (e.g., crowded, wildlife, colorful_cars) assigned to POIs. Tags are multi-hot encoded and can be used in the recommendation process; if disabled, the system does not consider tags when comparing POIs.

- Objects: The specific items present in the scene, such as cars, people, or plants. Like tags, objects are multi-hot encoded; if disabled, object-based information is ignored during similarity computation.
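The four toggles can be sketched as follows. The code builds one feature vector per POI from only the enabled groups, then ranks candidates by cosine similarity. Several details are assumptions for illustration: the concatenation scheme, the use of cosine similarity, the equal weighting of feature groups, and the dictionary keys (`embedding`, `scene`, `tags`, `objects`). The toy two-dimensional embeddings stand in for real ViT features.

```python
import math

def build_feature_vector(poi, scene_classes, tag_vocab, obj_vocab,
                         use_visual=True, use_scene=True,
                         use_tags=True, use_objects=True):
    """Concatenate the enabled feature groups of one POI into a flat vector."""
    vec = []
    if use_visual:                      # ViT embedding, used as-is
        vec += list(poi["embedding"])
    if use_scene:                       # one-hot scene class
        vec += [1.0 if c == poi["scene"] else 0.0 for c in scene_classes]
    if use_tags:                        # multi-hot tags
        vec += [1.0 if t in poi["tags"] else 0.0 for t in tag_vocab]
    if use_objects:                     # multi-hot objects
        vec += [1.0 if o in poi["objects"] else 0.0 for o in obj_vocab]
    return vec

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank(query, candidates, vocab, **flags):
    """Rank candidate POIs by cosine similarity to the query POI."""
    q = build_feature_vector(query, *vocab, **flags)
    scored = [(name, cosine(q, build_feature_vector(poi, *vocab, **flags)))
              for name, poi in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)

VOCAB = (["airport", "beach"], ["crowded", "wildlife"], ["person", "bird", "car"])
query = {"embedding": [0.2, 0.8], "scene": "beach",
         "tags": {"crowded"}, "objects": {"person"}}
candidates = {
    "beach_poi": {"embedding": [0.1, 0.9], "scene": "beach",
                  "tags": {"crowded"}, "objects": {"person", "bird"}},
    "airport_poi": {"embedding": [0.9, 0.1], "scene": "airport",
                    "tags": {"crowded"}, "objects": {"person", "car"}},
}
print(rank(query, candidates, VOCAB))
print(rank(query, candidates, VOCAB, use_visual=False))
```

Disabling a group simply drops its dimensions from every vector. The remaining groups then carry more relative weight in the similarity.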

3. Results

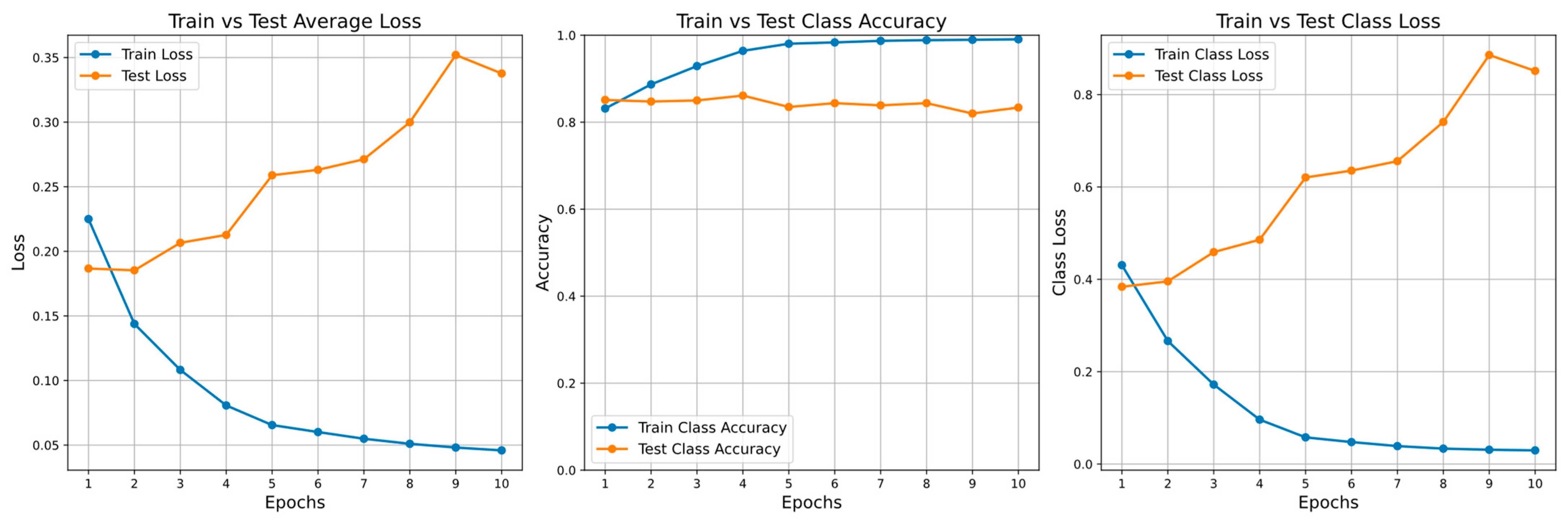

3.1. Classification Performance

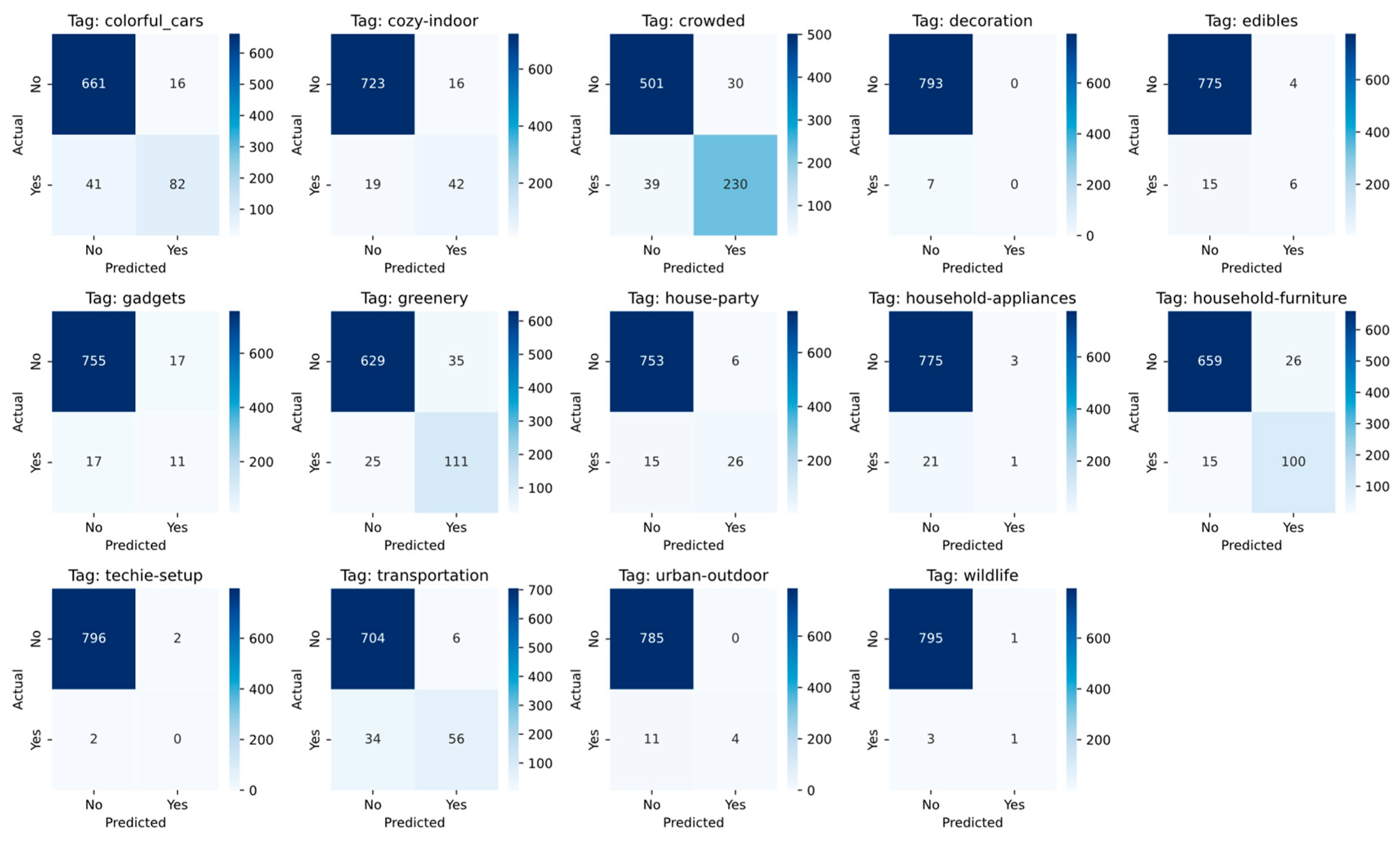

3.2. Multi-Label Performance

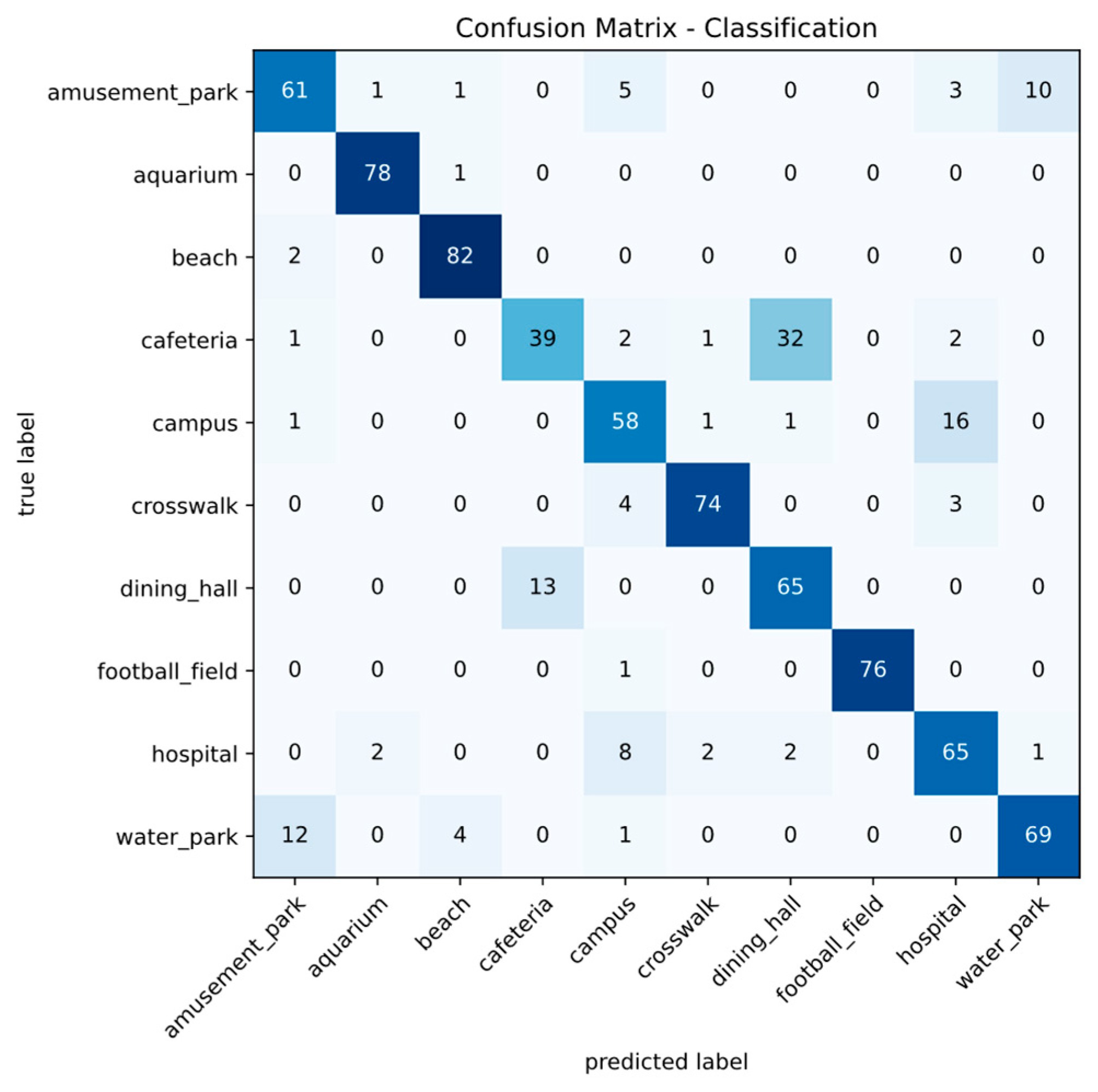

3.3. Error Analysis Using Confusion Matrices

3.4. Model-Level Ablation Study

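- A0 (Full): (α, β, γ) = (1, 1, 1), where α, β, and γ weight the Tags, Class, and Objects losses, respectively

- A1 (-Objects): (α, β, γ) = (1, 1, 0)

- A2 (-Tags): (α, β, γ) = (0, 1, 1)

- A3 (-Class): (α, β, γ) = (1, 0, 1)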

3.5. Comparative Evaluation with CNN Baselines

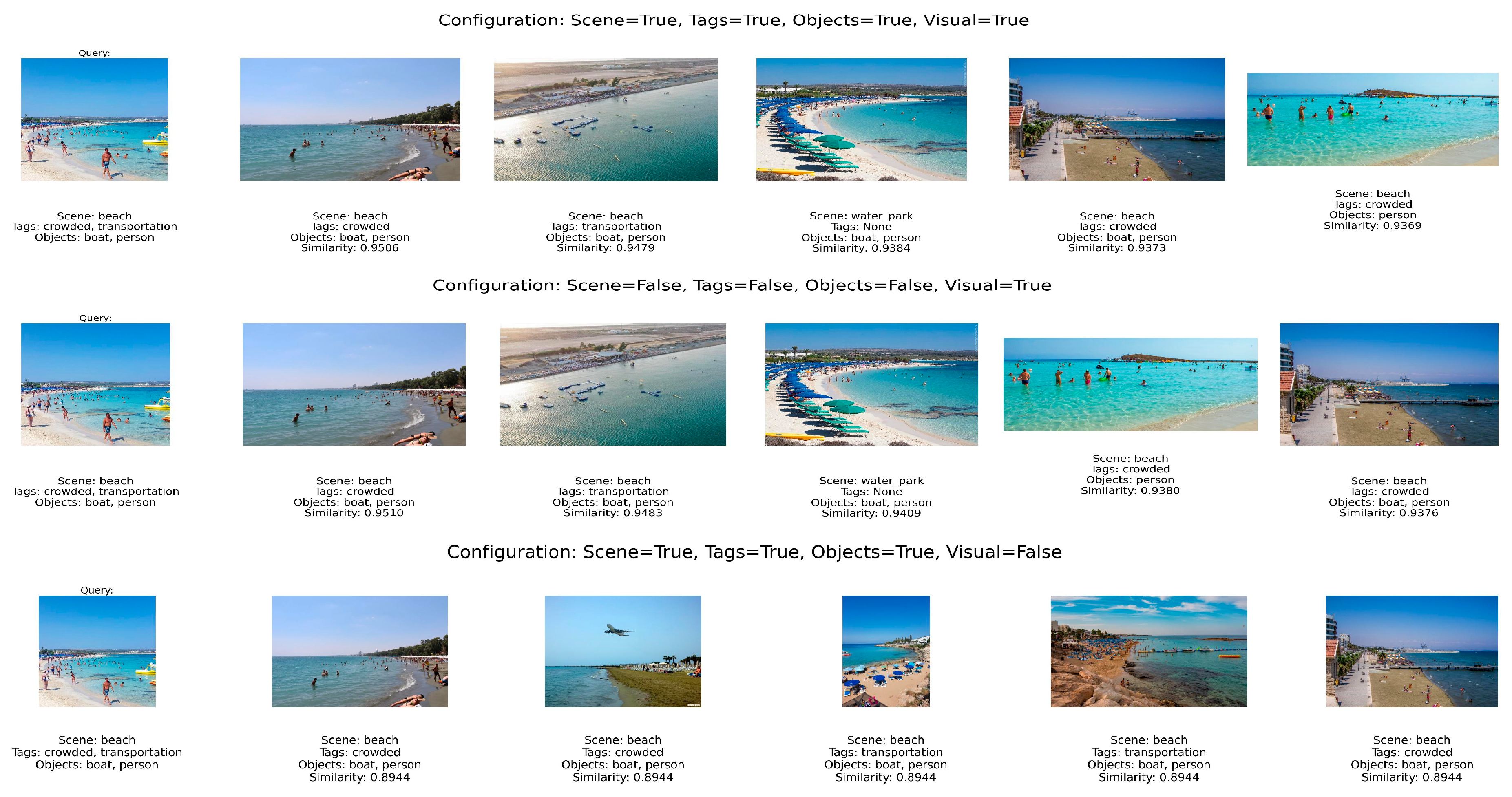

3.6. Recommender System Evaluation

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| POI | Points-of-Interest |
| ViT | Vision Transformer |
| WORLD | Weight Optimization for Representation and Labeling Descriptions |
| WORLDO | WORLD with Objects |
| CNN | Convolutional Neural Network |
| DETR | DEtection TRansformer |
| BoO | Bag-of-Objects |
| BERT | Bidirectional Encoder Representations from Transformers |
| COCO | Common Objects in Context |
| JSON | JavaScript Object Notation |


| Step | Formula | Description | Eq. |
|---|---|---|---|
| ViT Pooler Output | | Extracts visual features from input image | (1) |
| ViT Projection | | Projects ViT output into latent space | (2) |
| Class Prediction | | Predicts scene category | (3) |
| Tags Prediction | | Predicts multi-label tag outputs | (4) |
| Object Prediction | | Predicts multi-label object presence | (5) |
| Total Loss Function | | Weighted sum of task-specific losses | (6) |
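The formula cells of the table above did not survive extraction. As a hedged sketch only, one standard way to write a ViT backbone with a shared projection and three heads is shown below. The activation φ, the linear form of each head, and the choice of cross-entropy and binary cross-entropy losses are assumptions, not the authors' exact equations. The mapping of α, β, γ to the Tags, Class, and Objects losses follows from the ablation settings in Section 3.4. Here x is the input image, σ is the logistic sigmoid, k is the true scene class, and T and O are the numbers of tags and objects.

$$
\begin{aligned}
\mathbf{h} &= \mathrm{ViT}_{\mathrm{pool}}(\mathbf{x}) && (1)\\
\mathbf{z} &= \phi\left(W_p\,\mathbf{h} + \mathbf{b}_p\right) && (2)\\
\hat{\mathbf{y}}_{c} &= \mathrm{softmax}\left(W_c\,\mathbf{z} + \mathbf{b}_c\right) && (3)\\
\hat{\mathbf{y}}_{t} &= \sigma\left(W_t\,\mathbf{z} + \mathbf{b}_t\right) && (4)\\
\hat{\mathbf{y}}_{o} &= \sigma\left(W_o\,\mathbf{z} + \mathbf{b}_o\right) && (5)\\
\mathcal{L} &= \alpha\,\mathcal{L}_{\mathrm{tags}} + \beta\,\mathcal{L}_{\mathrm{class}} + \gamma\,\mathcal{L}_{\mathrm{obj}} && (6)\\[4pt]
\mathcal{L}_{\mathrm{class}} &= -\log \hat{y}_{c,k}\\
\mathcal{L}_{\mathrm{tags}} &= -\frac{1}{T}\sum_{j=1}^{T}\left[y_{t,j}\log\hat{y}_{t,j} + \left(1-y_{t,j}\right)\log\left(1-\hat{y}_{t,j}\right)\right]\\
\mathcal{L}_{\mathrm{obj}} &= -\frac{1}{O}\sum_{j=1}^{O}\left[y_{o,j}\log\hat{y}_{o,j} + \left(1-y_{o,j}\right)\log\left(1-\hat{y}_{o,j}\right)\right]
\end{aligned}
$$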

| Class Name | # of Training Images | # of Testing Images |
|---|---|---|
| amusement_park | 4027 | 81 |
| aquarium | 3998 | 79 |
| beach | 3974 | 84 |
| cafeteria | 4032 | 77 |
| campus | 3936 | 77 |
| crosswalk | 4023 | 81 |
| dining_hall | 3968 | 78 |
| football_field | 4055 | 77 |
| hospital | 4027 | 80 |

| Tags | Objects | Threshold |
|---|---|---|
| crowded | person | 5 |
| wildlife | bird, cat, dog, horse, sheep, cow, elephant, bear, zebra, giraffe | 3 |
| transportation | car, bus, truck, motorcycle, bicycle, train, boat, airplane | 3 |
| edibles | banana, apple, orange, broccoli, carrot, hot dog, pizza, donut, cake, sandwich | 2 |
| greenery | potted plant | 1 |
| household-furniture | chair, couch, bed, dining table, bench, toilet | 2 |
| household-appliances | microwave, oven, toaster, sink, refrigerator | 1 |
| gadgets | tv, laptop, mouse, remote, keyboard, cell phone | 1 |
| decoration | book, clock, vase, teddy bear | 2 |
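The rule table can be read as a simple lookup from detected-object counts to tags. The sketch below assumes a tag is assigned when the summed count of its listed objects is greater than or equal to the threshold. The authors may instead apply the threshold per object, and the comparison may not be inclusive; neither point is stated here. `TAG_RULES` and `assign_tags` are hypothetical names.

```python
# Rule table from above: tag -> (trigger objects, threshold)
TAG_RULES = {
    "crowded": ({"person"}, 5),
    "wildlife": ({"bird", "cat", "dog", "horse", "sheep", "cow",
                  "elephant", "bear", "zebra", "giraffe"}, 3),
    "transportation": ({"car", "bus", "truck", "motorcycle", "bicycle",
                        "train", "boat", "airplane"}, 3),
    "edibles": ({"banana", "apple", "orange", "broccoli", "carrot",
                 "hot dog", "pizza", "donut", "cake", "sandwich"}, 2),
    "greenery": ({"potted plant"}, 1),
    "household-furniture": ({"chair", "couch", "bed", "dining table",
                             "bench", "toilet"}, 2),
    "household-appliances": ({"microwave", "oven", "toaster", "sink",
                              "refrigerator"}, 1),
    "gadgets": ({"tv", "laptop", "mouse", "remote", "keyboard",
                 "cell phone"}, 1),
    "decoration": ({"book", "clock", "vase", "teddy bear"}, 2),
}

def assign_tags(object_counts, rules=TAG_RULES):
    """Return the tags whose listed objects reach the threshold in total (assumed >=)."""
    tags = []
    for tag, (objects, threshold) in rules.items():
        total = sum(object_counts.get(o, 0) for o in objects)
        if total >= threshold:
            tags.append(tag)
    return tags

print(assign_tags({"person": 6, "car": 2, "bus": 1, "potted plant": 1, "dog": 1}))
# ['crowded', 'transportation', 'greenery']
```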

| Epoch | Train Average Loss | Train Class Loss | Train Class Acc | Test Average Loss | Test Class Loss | Test Class Acc |
|---|---|---|---|---|---|---|
| 1 | 0.2250 | 0.4307 | 0.8315 | 0.1867 | 0.3839 | 0.8512 |
| 2 | 0.1438 | 0.2665 | 0.8869 | 0.1853 | 0.3956 | 0.8475 |
| 3 | 0.1082 | 0.1721 | 0.9290 | 0.2066 | 0.4590 | 0.8500 |
| 4 | 0.0807 | 0.0962 | 0.9640 | 0.2127 | 0.4858 | 0.8612 |
| 5 | 0.0655 | 0.0578 | 0.9804 | 0.2589 | 0.6206 | 0.8350 |
| 6 | 0.0601 | 0.0476 | 0.9834 | 0.2631 | 0.6354 | 0.8438 |
| 7 | 0.0549 | 0.0389 | 0.9870 | 0.2713 | 0.6559 | 0.8387 |
| 8 | 0.0510 | 0.0334 | 0.9886 | 0.2999 | 0.7408 | 0.8438 |
| 9 | 0.0481 | 0.0310 | 0.9894 | 0.3520 | 0.8858 | 0.8200 |
| 10 | 0.0459 | 0.0296 | 0.9906 | 0.3377 | 0.8512 | 0.8337 |
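The logged values can be summarized programmatically to locate the epoch with the highest test accuracy and to track the gap between training and test accuracy. The numbers are copied from the table above; only the analysis is new. Training accuracy keeps rising toward 0.99 while test class loss grows from 0.3839 to above 0.85. That pattern is consistent with overfitting after the first few epochs.

```python
# (epoch, train class acc, test class loss, test class acc), copied from the table above
LOG = [
    (1, 0.8315, 0.3839, 0.8512), (2, 0.8869, 0.3956, 0.8475),
    (3, 0.9290, 0.4590, 0.8500), (4, 0.9640, 0.4858, 0.8612),
    (5, 0.9804, 0.6206, 0.8350), (6, 0.9834, 0.6354, 0.8438),
    (7, 0.9870, 0.6559, 0.8387), (8, 0.9886, 0.7408, 0.8438),
    (9, 0.9894, 0.8858, 0.8200), (10, 0.9906, 0.8512, 0.8337),
]

best_acc_epoch = max(LOG, key=lambda row: row[3])[0]
best_loss_epoch = min(LOG, key=lambda row: row[2])[0]
# positive gap = training accuracy exceeds test accuracy
gaps = {epoch: round(train_acc - test_acc, 4) for epoch, train_acc, _, test_acc in LOG}

print("highest test accuracy at epoch", best_acc_epoch)   # 4
print("lowest test class loss at epoch", best_loss_epoch)  # 1
print("train-test accuracy gap:", gaps)
```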

| Variant | α | β | γ | Class Acc | Tag F1 | Obj F1 |
|---|---|---|---|---|---|---|
| A0 (Full) | 1 | 1 | 1 | 0.8488 | 0.6633 | 0.5814 |
| A1 (-Objects) | 1 | 1 | 0 | 0.8375 | 0.6643 | 0.0443 |
| A2 (-Tags) | 0 | 1 | 1 | 0.8263 | 0.1100 | 0.5274 |
| A3 (-Class) | 1 | 0 | 1 | 0.0563 | 0.7242 | 0.6442 |
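The ablation table is easier to read as differences from the full model A0. The snippet below computes those deltas using only values copied from the table. Each variant loses most of the metric tied to the head it disables; for example, Obj F1 drops by 0.5371 when γ = 0. Removing the Class Head, by contrast, raises both Tag F1 and Obj F1.

```python
# (Class Acc, Tag F1, Obj F1) per variant, copied from the table above
ABLATION = {
    "A0 (Full)": (0.8488, 0.6633, 0.5814),
    "A1 (-Objects)": (0.8375, 0.6643, 0.0443),
    "A2 (-Tags)": (0.8263, 0.1100, 0.5274),
    "A3 (-Class)": (0.0563, 0.7242, 0.6442),
}

base = ABLATION["A0 (Full)"]
deltas = {}
for name, metrics in ABLATION.items():
    if name == "A0 (Full)":
        continue
    # change of each metric relative to the full model
    deltas[name] = tuple(round(m - b, 4) for m, b in zip(metrics, base))
    print(name, deltas[name])
```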

| Model | Test Accuracy | Weighted F1 |
|---|---|---|
| VGG16 | 0.7500 | 0.747 |
| EfficientNetV2 | 0.7500 | 0.744 |
| ResNet50 | 0.7688 | 0.760 |
| WORLDO (class-only) | 0.7937 | 0.791 |

| Picture | Full Features | Class Disabled | Tags Disabled | Objects Disabled | Visual Features Disabled |
|---|---|---|---|---|---|
| Beach | 5/5 (0.9422) | 5/5 (0.9424) | 5/5 (0.9432) | 5/5 (0.9432) | 5/5 (0.8944) |
| Dining Hall | 5/5 (0.8651) | 5/5 (0.9335) | 5/5 (0.9338) | 5/5 (0.9343) | 5/5 (0.8651) |
| Coffee Shop | 5/5 (0.9800) | 5/5 (0.9800) | 5/5 (0.9799) | 5/5 (0.9800) | 5/5 (1.0000) |
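The entries in the table above appear to pair a hit count in the top five recommendations with a similarity score. That reading is an assumption, and so is the use of the mean similarity of the top five. Under these assumptions, such entries could be produced as sketched below. `ranked` is a hypothetical list of `(poi_id, similarity)` pairs sorted by decreasing similarity, and the POI identifiers are invented.

```python
def precision_at_k(recommended, relevant, k=5):
    """Fraction of the top-k recommended POIs that are relevant."""
    return sum(1 for poi in recommended[:k] if poi in relevant) / k

def summarize(ranked, relevant, k=5):
    """Format one query's result as 'hits/k (mean similarity of the top k)'."""
    top = ranked[:k]
    hits = sum(1 for poi, _ in top if poi in relevant)
    mean_sim = sum(sim for _, sim in top) / len(top)
    return f"{hits}/{k} ({mean_sim:.4f})"

ranked = [("beach_1", 0.95), ("beach_2", 0.94), ("beach_3", 0.94),
          ("beach_4", 0.93), ("beach_5", 0.92), ("dining_1", 0.50)]
relevant = {"beach_1", "beach_2", "beach_3", "beach_4", "beach_5"}
print(summarize(ranked, relevant))                             # 5/5 (0.9360)
print(precision_at_k([poi for poi, _ in ranked], relevant))    # 1.0
```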

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Messios, P.; Dionysiou, I.; Gjermundrød, H. Enhancing POI Recognition with Micro-Level Tagging and Deep Learning. Big Data Cogn. Comput. 2025, 9, 293. https://doi.org/10.3390/bdcc9110293