Explainable Multi-Hop Question Answering: A Rationale-Based Approach

Abstract

1. Introduction

2. Related Works

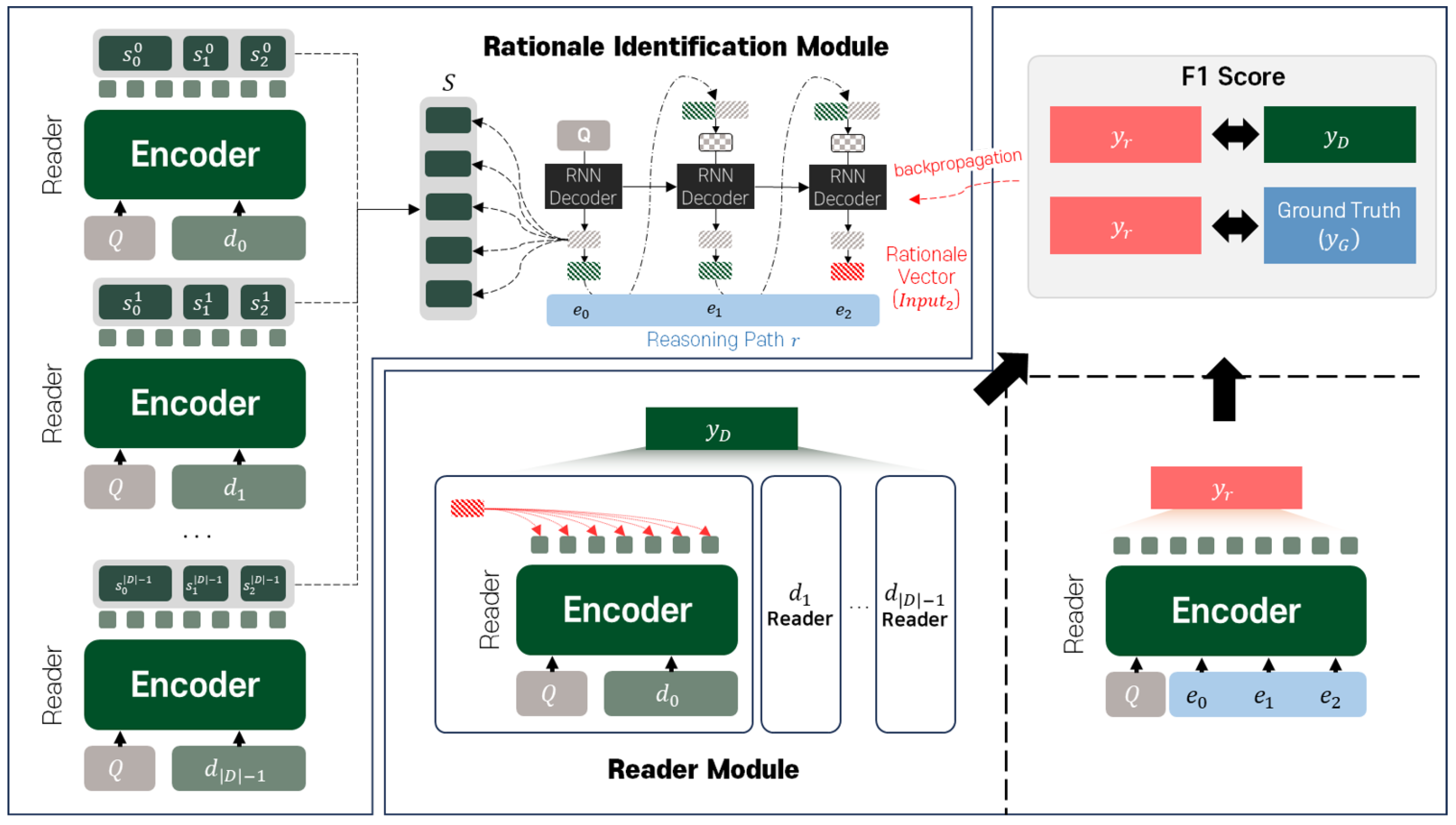

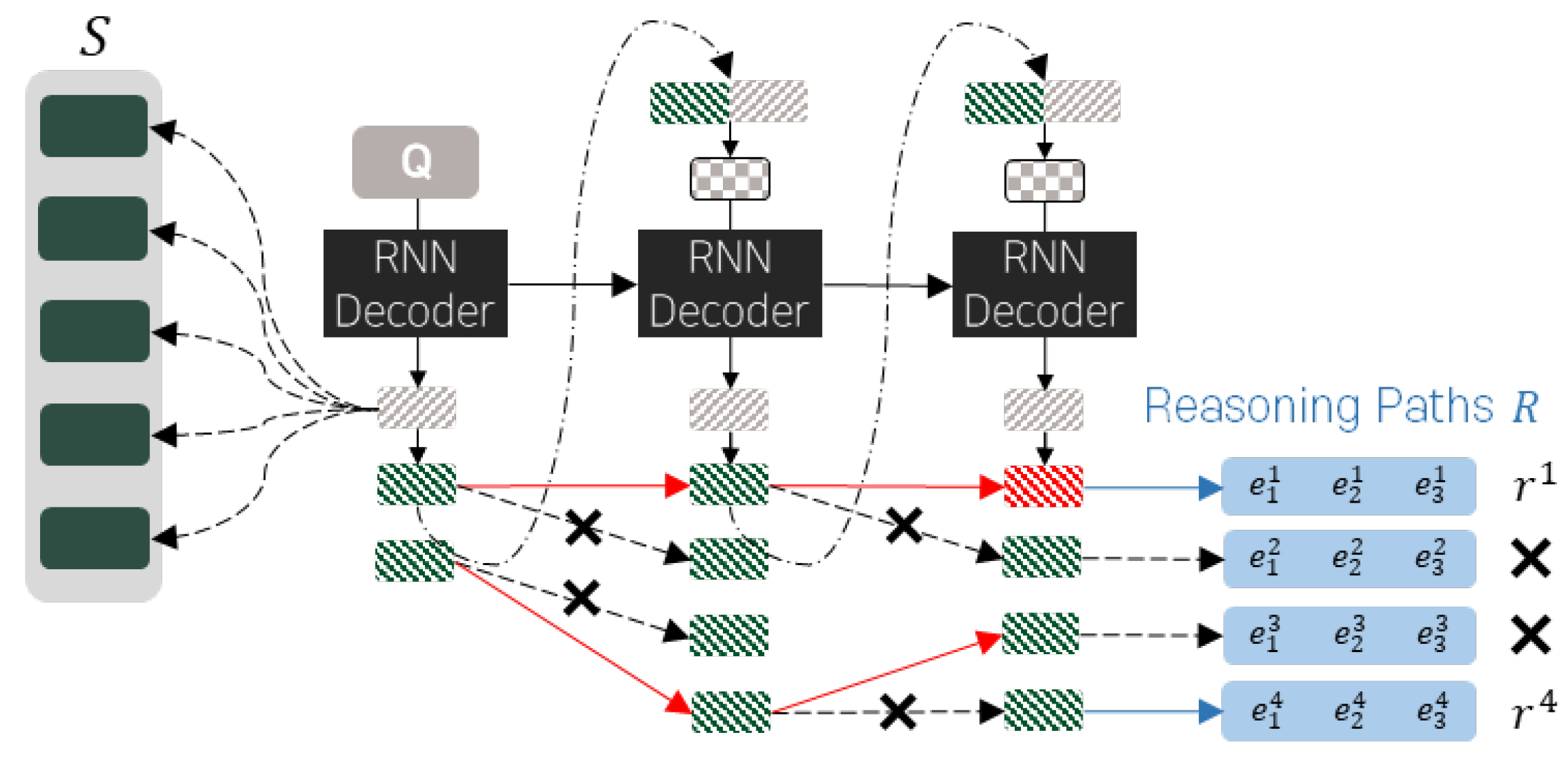

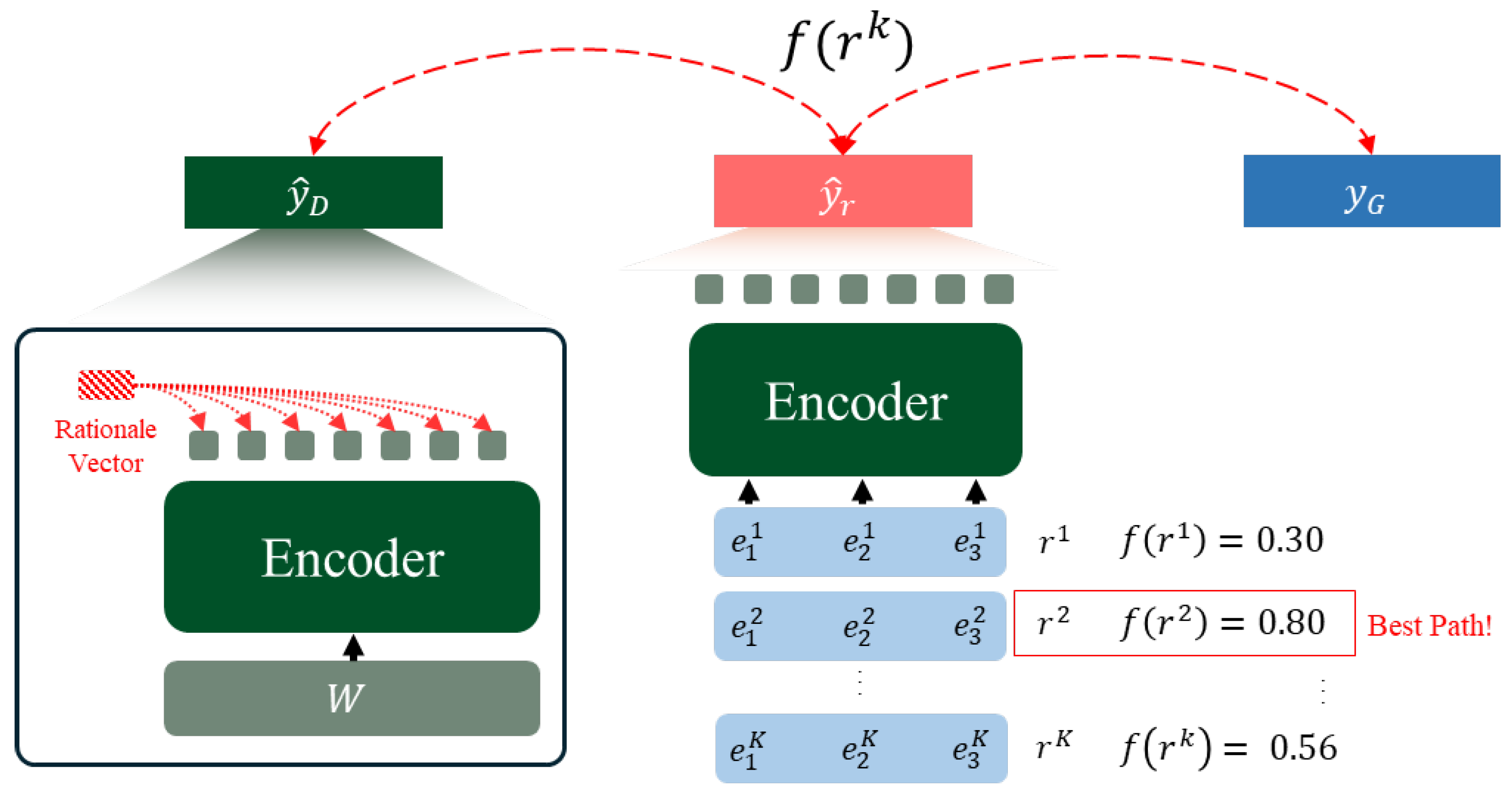

3. Methodology

4. Experiments

4.1. Dataset and Experimental Setup

4.2. Baseline

4.3. Metrics

- 0 points: The provided rationale does not substantiate the model’s prediction at all, or is irrelevant to it.

- 1 point: The provided rationale somewhat supports the model’s prediction but is not decisive; the prediction can be partially inferred from it, but not clearly.

- 2 points: The provided rationale fully substantiates the model’s prediction, and the same answer can be inferred solely based on the given rationale.
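
The rubric above yields one integer per evaluated rationale. A minimal sketch of how such scores could be aggregated into a single percentage is shown below. The normalization by the maximum of 2 points is an assumption made for illustration (this section does not state the aggregation rule), and the function names and example scores are hypothetical.

```python
from collections import Counter

MAX_POINTS = 2  # highest score in the 0/1/2 rubric


def normalized_gpt_score(scores):
    """Mean rubric score as a percentage of the maximum (assumed aggregation)."""
    if not scores:
        raise ValueError("scores must not be empty")
    if any(s not in (0, 1, 2) for s in scores):
        raise ValueError("each score must be 0, 1, or 2")
    return 100.0 * sum(scores) / (MAX_POINTS * len(scores))


def score_histogram(scores):
    """Count how many rationales received each rubric score."""
    counts = Counter(scores)
    return {point: counts.get(point, 0) for point in (0, 1, 2)}


# Made-up scores, for illustration only.
example_scores = [2, 2, 1, 0, 2]
print(normalized_gpt_score(example_scores))
print(score_histogram(example_scores))
```

Reporting the histogram next to the aggregate makes it visible whether a mid-range value comes from many 1-point rationales or from a mix of 0s and 2s.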

Prompt Details for GPT-Based Evaluation

- Task Description: A clear explanation of the evaluation task.

- Predicted Answer: The model’s predicted answer for the given question.

- Rationale Sentences: The evidence sentences inferred by the model, to be evaluated by GPT-4o mini.

4.4. Comparison Models

4.5. Experimental Results

4.5.1. Quantitative Evaluation

4.5.2. GPT Score Evaluation

4.5.3. Ablation Study

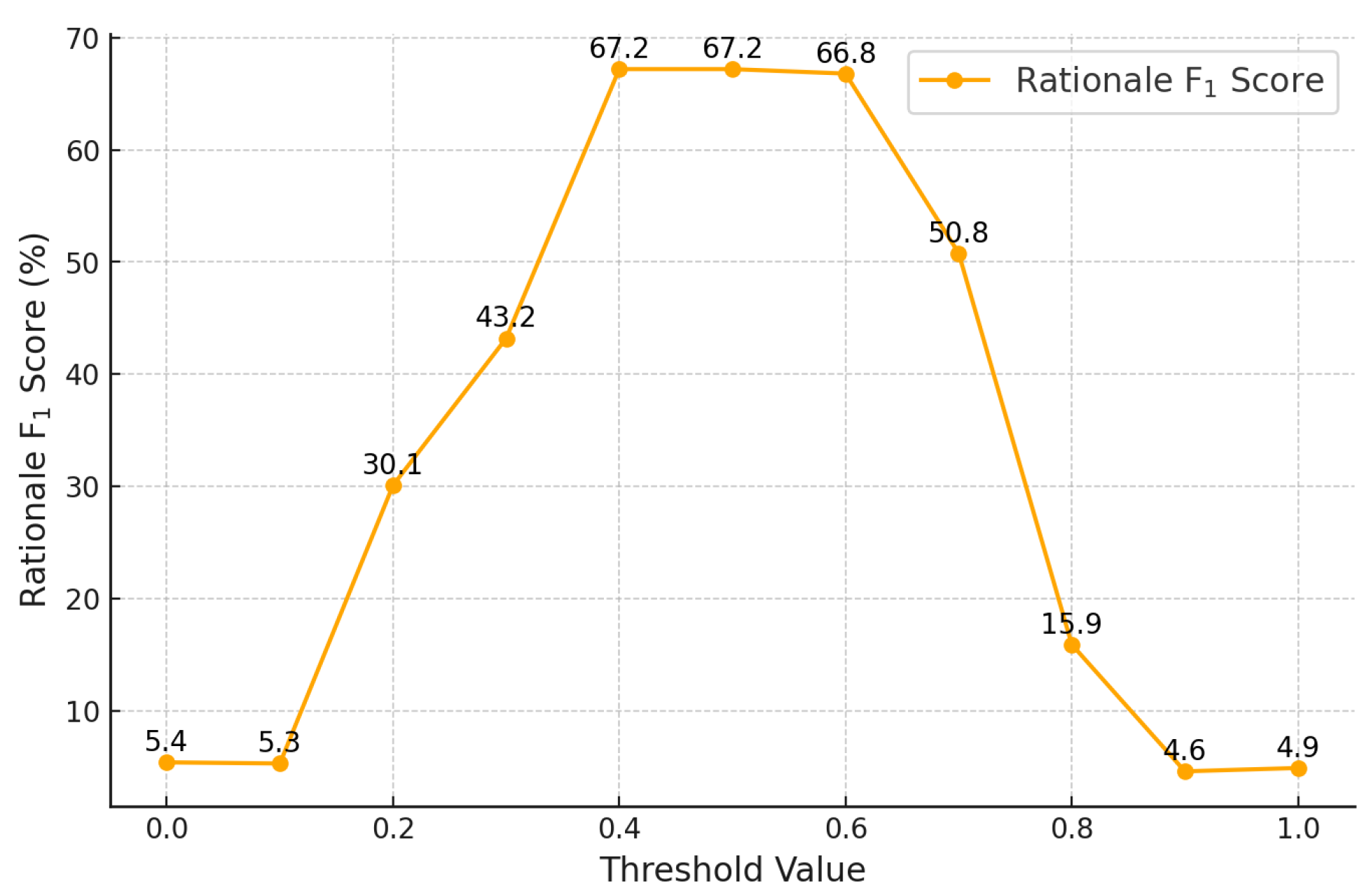

4.5.4. Further Analysis

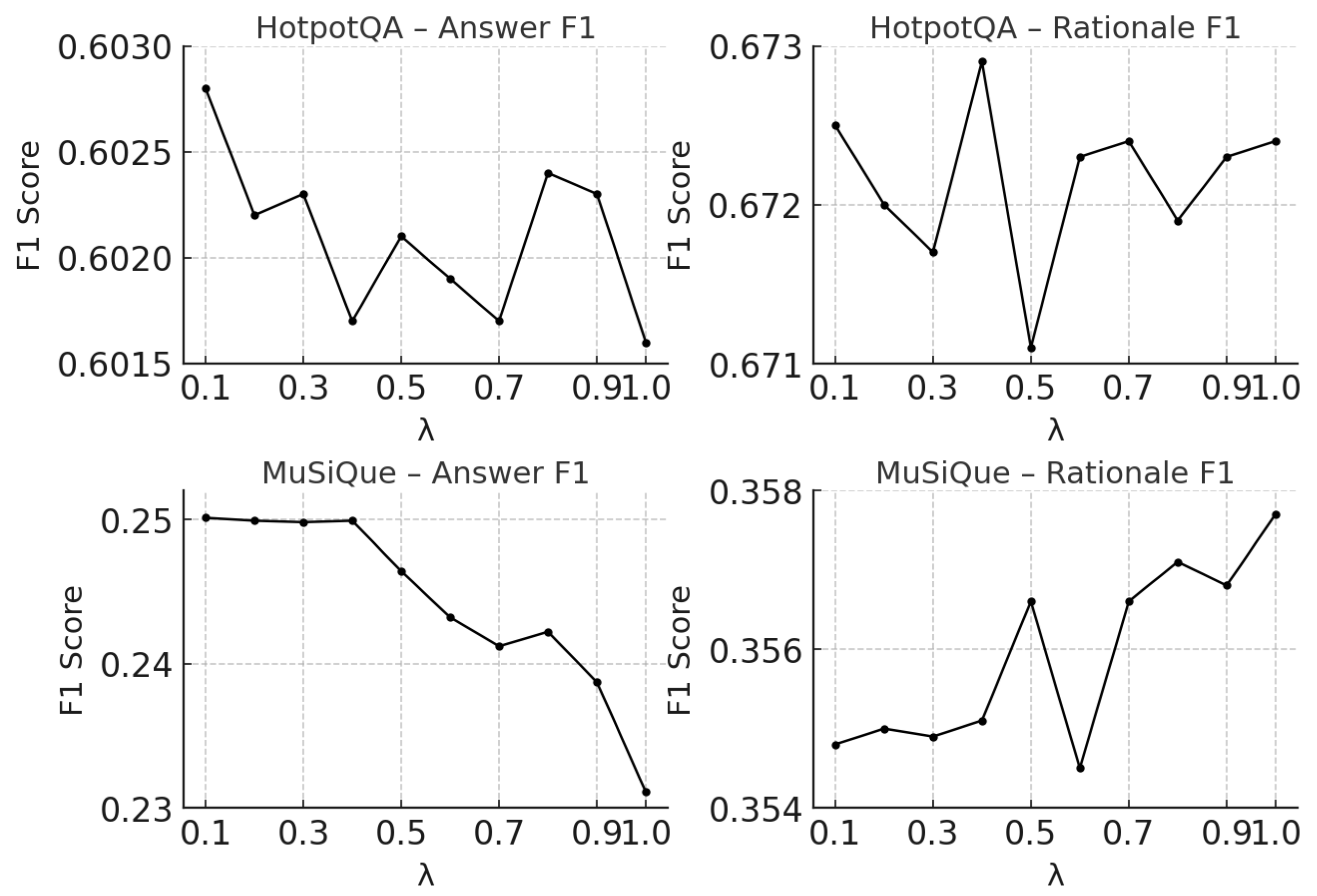

4.5.5. Sensitivity to the Consistency Weight

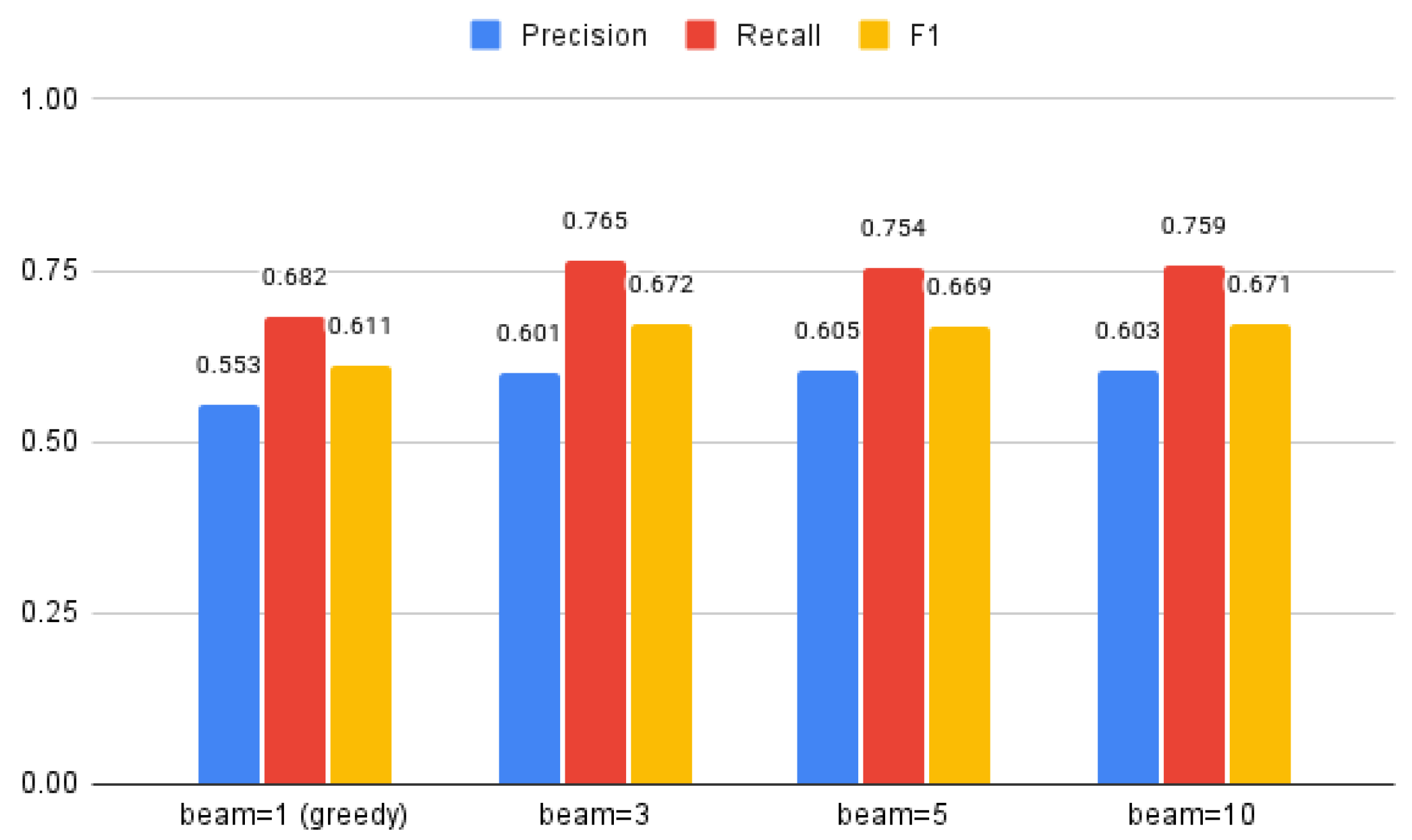

4.5.6. Effect of Beam Width on Performance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Implementation Details

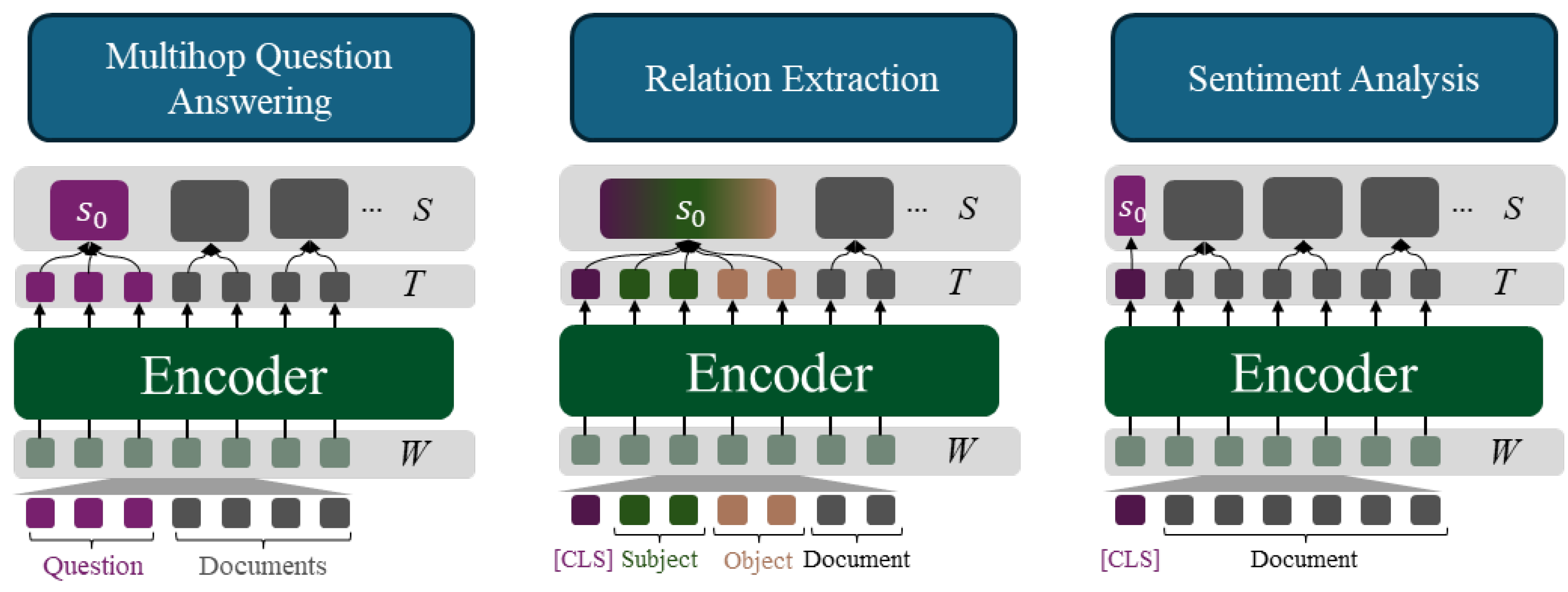

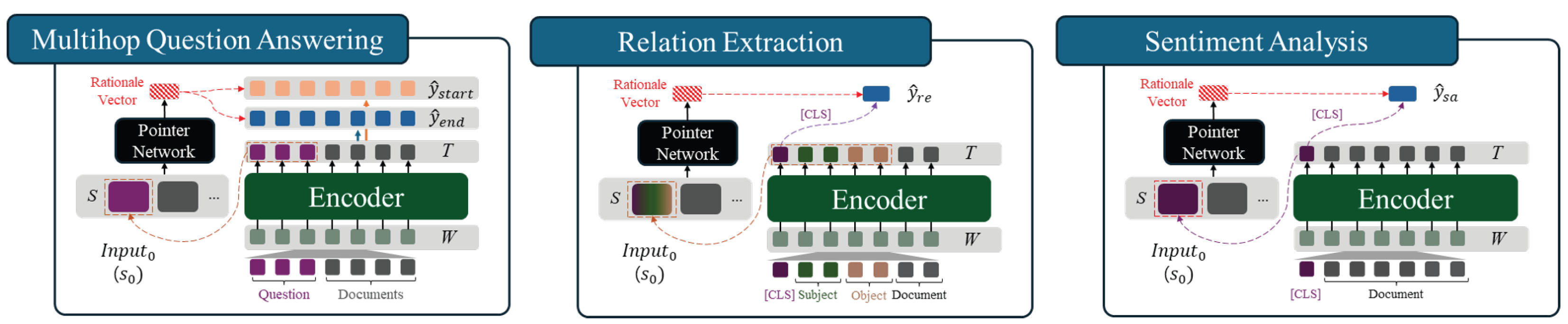

- Multi-Hop Question Answering:
  - The input sequence T follows the format: ‘ Question Document ’. The encoded token representations T are then processed to extract key evidence for reasoning.
  - Since each question is associated with multiple documents, each mini-batch includes multiple document-question pairs for the same question.
- Document-Level Relation Extraction:
  - The input T consists of one or more sentences containing entity mentions, and the relationships between entities are inferred. ELECTRA encodes these token embeddings V, capturing contextual information to analyze entity relationships.
  - In this task, each data sample is processed as an individual document, and each mini-batch includes different documents.
- Binary Sentiment Classification:
  - The input T consists of a review text or a short comment, where the final token representations V are used for sentiment classification.
  - Similar to relation extraction, each sample corresponds to a single document, and the mini-batch contains different texts.

- For detailed implementation equations of multi-hop question answering, refer to Equation (6).

- Relation extraction involves classifying the relationship between a subject and an object within a given sentence or document. To achieve this, the input sequence follows the structure shown in Figure A2. A probability distribution over relation labels is then computed based on , which encodes the contextual information of the input sequence. This process is described in Equation (A1) below.

- Sentiment analysis, as a form of sentence classification, identifies the sentiment within the input text and classifies it as positive, negative, or neutral. The input and output structure for this task is shown in Figure A2. Similar to relation extraction, a probability distribution over sentiment labels is computed according to Equation (A2).
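
The two batching schemes described in this appendix can be sketched as follows. This is an illustrative reading of the text, not the authors' code: the function names and the `(question_id, question, document)` tuple layout are assumptions.

```python
from collections import defaultdict


def group_pairs_by_question(pairs):
    """Multi-hop QA: one mini-batch holds every document-question pair of a question.

    `pairs` is an iterable of (question_id, question, document) tuples.
    """
    batches = defaultdict(list)
    for question_id, question, document in pairs:
        batches[question_id].append((question, document))
    # Dicts preserve insertion order, so batches follow first appearance.
    return list(batches.values())


def chunk_documents(samples, batch_size):
    """Relation extraction / sentiment: each sample is its own document,
    so a mini-batch is simply a slice of different documents."""
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]


qa_pairs = [
    ("q1", "Question 1?", "doc A"),
    ("q1", "Question 1?", "doc B"),
    ("q2", "Question 2?", "doc C"),
]
print(group_pairs_by_question(qa_pairs))
print(chunk_documents(["review 1", "review 2", "review 3"], batch_size=2))
```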

Appendix B. GPT-Based Evaluation Instructions and Inputs for All Tasks

| Instruction |

| Determine how validly the provided <Sentences> support the given <Answer> to the <Question>. Your task is to assess the supporting sentences based on their relevance and strength in relation to the answer, regardless of whether the answer is correct. The focus is on evaluating the validity of the evidence itself. Even if the answer is incorrect, a supporting sentence can still be rated highly if it is relevant and strong. <Score criteria> - 0 : The sentences do not support the answer. They are irrelevant, neutral, or contradict the answer. - 1 : The sentences provide partial or unclear support for the answer. The connection is weak, lacking context, or not directly related to the answer. - 2 : The sentences strongly support the answer, making it clear and directly inferable from them. <Output format> <Score>: <0, 1, or 2> |

| Input |

| <Question> When did the park at which Tivolis Koncertsal is located open? </Question> <Answer> 15 August 1843 </Answer> <Sentences> Tivolis Koncertsal is a 1660-capacity concert hall located at Tivoli Gardens in Copenhagen, Denmark. The building, which was designed by Frits Schlegel and Hans Hansen, was built between 1954 and 1956. The park opened on 15 August 1843 and is the second-oldest operating amusement park in the world, after … </Sentences> <Score>: |

| Instruction |

| Determine whether the relationship between the given <Subject> and <Object> can be inferred solely from the provided <Sentences>. The <Relationship> may not be explicitly stated, and it might even be incorrect. However, your task is to evaluate whether the sentences themselves suggest the given relationship, regardless of its accuracy. <Score criteria> - 0: The sentences do not suggest the relationship at all. The sentences are neutral, irrelevant, or contradict the relationship. - 1: The sentences somewhat suggest the relationship but are not conclusive. The relationship is partially inferred but not clearly established. - 2: The sentences fully suggest the relationship. The relationship can be clearly and directly inferred from the sentences alone. <Output format> Score: <0, 1, or 2> |

| Input |

| <Sentences> The discovery of the signal in the chloroplast genome was announced in 2008 by researchers from the University of Washington. Somehow, chloroplasts from V. orcuttiana, swamp verbena ( V. hastata) or a close relative of these had admixed into the G. bipinnatifida genome. </Sentences> <Subject> mock vervains </Subject> <Object> Verbenaceae </Object> <Relationship> parent taxon: closest of the taxon in question </Relationship> <Score>: |

| Instruction |

| Determine whether the given <Sentiment> can be derived solely from the <Supporting Sentences> for the given <Review>. The given <Sentiment> may not be the correct answer, but evaluate whether the <Supporting Sentences> alone can support it. <Score criteria> - 0: The supporting sentences do not support the sentiment at all. The facts are neutral, irrelevant to the sentiment, or contradict the sentiment. - 1: The supporting sentences somewhat support the sentiment but are not conclusive. The sentiment is partially inferred but not clearly. The facts suggest the sentiment but do not decisively establish it. - 2: The supporting sentences fully support the sentiment. The sentiment can be clearly and directly inferred from the facts alone. <Output format> Score: <0, 1, or 2> |

| Input |

| <Sentiment> positive </Sentiment> <Supporting Sentences> A trite fish-out-of-water story about two friends from the midwest who move to the big city to seek their fortune. They become Playboy bunnies, and nothing particularly surprising happens after that. </Supporting Sentences> <Score>: |
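
The question-answering input layout shown in this appendix, and the `<Score>` line requested in the output format, could be handled with a sketch like the one below. It only assembles and parses strings; no API call is made. The helper names and the tolerant regular expression are assumptions, not part of the original evaluation code.

```python
import re


def build_qa_eval_input(question, answer, sentences):
    """Assemble the <Question>/<Answer>/<Sentences> input used for QA evaluation."""
    joined = " ".join(s.strip() for s in sentences)
    return (
        f"<Question> {question} </Question> "
        f"<Answer> {answer} </Answer> "
        f"<Sentences> {joined} </Sentences> <Score>:"
    )


# Accepts both "<Score>: 2" and "Score: 2", the two output formats in the prompts.
_SCORE_RE = re.compile(r"<?Score>?\s*:\s*<?([012])>?")


def parse_score(reply):
    """Return the rubric score found in a model reply, or None if absent."""
    match = _SCORE_RE.search(reply)
    return int(match.group(1)) if match else None


prompt_input = build_qa_eval_input(
    "When did the park at which Tivolis Koncertsal is located open?",
    "15 August 1843",
    ["Tivolis Koncertsal is a 1660-capacity concert hall located at Tivoli Gardens in Copenhagen, Denmark."],
)
print(prompt_input)
print(parse_score("<Score>: 2"))
```

Returning `None` for unparseable replies keeps malformed outputs from being silently counted as a 0.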

Appendix C. GPT-Based Answer and Supporting Sentence Extraction for All Tasks

| Instruction |

| Answer the given <Question> using only the provided <Reference documents>. Some documents may be irrelevant. Keep the answer concise, extracting only key terms or phrases from the <Reference documents> rather than full sentences. Extract exactly 3 supporting sentences—no more, no less. For each supporting sentence, provide its sentence number as it appears in the reference documents. <Output format> <Answer>: <Generated Answer> <Supporting Sentences>: <Sentence Number 1>, <Sentence Number 2>, <Sentence Number 3> |

| Input |

| <Question> When did the park at which Tivolis Koncertsal is located open? </Question> <Reference documents> Document 1: Tivolis Koncertsal [1] Tivolis Koncertsal is a 1660-capacity concert hall located at Tivoli Gardens in Copenhagen, Denmark. [2] The building, which was designed by Frits Schlegel and Hans Hansen, was built between 1954 and 1956. Document 2: Tivoli Gardens [3] Tivoli Gardens (or simply Tivoli) is a famous amusement park and pleasure garden in Copenhagen, Denmark. [4] The park opened on 15 August 1843 and is the second-oldest operating amusement park in the world, after … Document 3: Takino Suzuran Hillside National Government Park [5] Takino Suzuran Hillside National Government Park is a Japanese national government park located in Sapporo, Hokkaido. [6] It is the only national government park in the northern island of Hokkaido. [7] The park area spreads over 395.7 hectares of hilly country and ranges in altitude between 160 and 320 m above sea level. [8] Currently, 192.3 is accessible to the public. … </Reference documents> <Answer>: <Supporting Sentences>: |

| Instruction |

| Determine the relationship between the given <Subject> and <Object>. The relationship must be selected from the following list: ‘head of government’, ‘country’, ‘place of birth’, ‘place of death’, ‘father’, ‘mother’, ‘spouse’, … After selecting the appropriate relationship, provide two key sentence numbers that best support this relationship. <Output format> <Relationship>: <Extracted Relationship> <Supporting Sentences>: <Sentence Number 1>, <Sentence Number 2> |

| Input |

| <Document> [1] Since the new chloroplast genes replaced the old ones, it may be that the possibly … [2] Glandularia, common name mock vervain or mock verbena, is a genus of annual and perennial herbaceous flowering … [3] They are native to the Americas. [4] Glandularia species are closely related to the true vervains and sometimes still … … </Document> <Subject> mock vervains </Subject> <Object> Verbenaceae </Object> <Relationship>: <Supporting Sentences>: |

| Instruction |

| Classify the sentiment of the given <Sentence> as either ‘positive’ or ‘negative’. After selecting the appropriate sentiment, extract **only two** key sentences that best support this sentiment. <Output format> <Sentiment>: <Extracted Sentiment> <Supporting Sentences>: <Sentence Number 1>, <Sentence Number 2> |

| Input |

| <Document> [1] This movie was awful. [2] The ending was absolutely horrible. [3] There was no plot to the movie whatsoever. [4] The only thing that was decent about the movie was the acting done by Robert … … </Document> <Sentiment>: <Supporting Sentences>: |
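
In the inputs above, sentences carry bracketed numbers that run continuously across documents, and the model is asked to return sentence numbers. A minimal sketch of producing that numbering and reading the numbers back is given below; the function names and the `(title, sentences)` data layout are assumptions for illustration.

```python
import re


def format_reference_documents(documents):
    """Number sentences continuously across documents, e.g. '[1] ... [2] ...'.

    `documents` is a list of (title, [sentence, ...]) pairs.
    Returns the formatted text and a {number: sentence} lookup.
    """
    parts = []
    index = {}
    number = 1
    for doc_id, (title, sentences) in enumerate(documents, start=1):
        parts.append(f"Document {doc_id}: {title}")
        for sentence in sentences:
            parts.append(f"[{number}] {sentence}")
            index[number] = sentence
            number += 1
    return " ".join(parts), index


def parse_supporting_numbers(reply):
    """Extract the sentence numbers listed after '<Supporting Sentences>:'."""
    match = re.search(r"<Supporting Sentences>\s*:\s*(.*)", reply)
    if match is None:
        return []
    return [int(n) for n in re.findall(r"\d+", match.group(1))]


docs = [
    ("Tivolis Koncertsal", ["First sentence.", "Second sentence."]),
    ("Tivoli Gardens", ["Third sentence."]),
]
text, lookup = format_reference_documents(docs)
print(text)
print([lookup[n] for n in parse_supporting_numbers("<Answer>: 15 August 1843 <Supporting Sentences>: 1, 3")])
```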

Appendix D. Qualitative Example of Rationale Selection

| Question | What is the name of the fight song of the university whose main campus is in Lawrence, Kansas and whose branch campuses are in the Kansas City metropolitan area? |
|---|---|
| Answer | Kansas Song |
| Context | North Kansas City, Missouri |
| | North Kansas City is a city in Clay County, Missouri, United States that despite … of the Kansas City metropolitan area. |
| | The population was 4208 at the 2010 census. |
| | … |
| | It was named after the iconic Kansas City Scout Statue that exists in Penn Valley Park, overlooking Downtown Kansas City. |
| | Kansas Song |
| | Kansas Song (We’re From Kansas) is a fight song of the University of Kansas. |
| | … |
| | The University of Kansas, often referred to as KU or Kansas, is a public research university in the U.S. state of Kansas. |
| | The main campus in Lawrence, one of the largest college towns in Kansas, is on Mount Oread, the highest elevation in Lawrence. |
| | Two branch campuses are in the Kansas City metropolitan area: the Edwards Campus … and hospital in Kansas City. |

Appendix E. Runtime and Efficiency Analysis

| Model | Parameters | Training Time/Epoch | Inference Speed (Examples/s) | Relative Efficiency |
|---|---|---|---|---|
| HUG [11] | 340 M | 5.8 h | 65 | 1.0× |
| RAG [14] | 400 M | 6.3 h | 48 | 0.7× |
| Proposed (PointerNet-XAI) | 110 M | 2.4 h | 150 | 2.4× |
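
The table does not state how Relative Efficiency is derived. As a worked illustration only, the sketch below computes two ratios against the HUG baseline from the reported figures: inference throughput and training time per epoch. Both definitions are assumptions, and the dictionary layout and function names are hypothetical.

```python
BASELINE = "HUG"

# Figures copied from the table above.
models = {
    "HUG": {"train_hours": 5.8, "examples_per_s": 65},
    "RAG": {"train_hours": 6.3, "examples_per_s": 48},
    "Proposed": {"train_hours": 2.4, "examples_per_s": 150},
}


def speed_ratio(name):
    """Inference throughput relative to the baseline (higher is faster)."""
    return models[name]["examples_per_s"] / models[BASELINE]["examples_per_s"]


def train_time_ratio(name):
    """Baseline training time divided by this model's time (higher is faster)."""
    return models[BASELINE]["train_hours"] / models[name]["train_hours"]


for name in models:
    print(f"{name}: speed x{speed_ratio(name):.2f}, training x{train_time_ratio(name):.2f}")
```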

Appendix F. Implementation Details

| Component | Description |

|---|---|

| Optimizer | AdamW |

| Learning Rate | 2 × 10⁻⁵ (initial) |

| Learning Rate Scheduler | Linear decay with 10% warmup steps |

| Batch Size | 16 (per GPU) |

| Epochs | 10 |

| Early Stopping | Based on validation loss (patience = 3) |

| Gradient Clipping | 1.0 |

| Weight Decay | 0.01 |

| Dropout | 0.1 |

| Warmup Ratio | 0.1 |

| Random Seeds | 42 (for all runs) |

| Validation Criterion | Lowest validation loss across epochs |

| Implementation Framework | PyTorch 2.2.2 + HuggingFace Transformers |
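
Two rows of the table, "Linear decay with 10% warmup steps" and "Early Stopping (patience = 3)", can be made concrete with a dependency-free sketch. It follows the usual meaning of these terms rather than the authors' actual training script; the function names and the example loss values are hypothetical.

```python
def linear_warmup_decay(step, total_steps, base_lr=2e-5, warmup_ratio=0.1):
    """Learning rate at `step`: linear ramp-up during warmup, then linear decay to 0."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


def should_stop(val_losses, patience=3):
    """True once the best validation loss is at least `patience` epochs old."""
    if not val_losses:
        return False
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__)
    return (len(val_losses) - 1) - best_epoch >= patience


total = 1000
for step in (0, 50, 100, 550, 1000):
    print(step, linear_warmup_decay(step, total))

# Made-up validation losses: the best value is at epoch index 1.
print(should_stop([1.0, 0.8, 0.9, 0.85, 0.95]))
```

With 1000 total steps, the rate peaks at the base value at step 100 and reaches zero at the final step; the checkpoint with the lowest validation loss would then be the one retained, matching the Validation Criterion row.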

References

1. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.
2. Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288.
3. Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223.
4. Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Yang, A.; Fan, A.; et al. The llama 3 herd of models. arXiv 2024, arXiv:2407.21783.
5. Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtually, 3–10 March 2021; pp. 610–623.
6. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258.
7. Rudin, C.; Chen, C.; Chen, Z.; Huang, H.; Semenova, L.; Zhong, C. Interpretable machine learning: Fundamental principles and 10 grand challenges. Stat. Surv. 2022, 16, 1–85.
8. Shwartz, V.; Choi, Y. Do neural language models overcome reporting bias? In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 6863–6870.
9. Chen, J.; Lin, S.t.; Durrett, G. Multi-hop question answering via reasoning chains. arXiv 2019, arXiv:1910.02610.
10. Wu, H.; Chen, W.; Xu, S.; Xu, B. Counterfactual supporting facts extraction for explainable medical record based diagnosis with graph network. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 1942–1955.
11. Zhao, W.; Chiu, J.; Cardie, C.; Rush, A.M. Hop, Union, Generate: Explainable Multi-hop Reasoning without Rationale Supervision. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 16119–16130.
12. Yang, Z.; Qi, P.; Zhang, S.; Bengio, Y.; Cohen, W.; Salakhutdinov, R.; Manning, C.D. HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 2369–2380.
13. Qi, P.; Lin, X.; Mehr, L.; Wang, Z.; Manning, C.D. Answering Complex Open-domain Questions Through Iterative Query Generation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 2590–2602.
14. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.
15. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.
16. Gunning, D.W.; Aha, D. DARPA’s explainable artificial intelligence program. AI Mag. 2019, 40, 44.
17. Jiang, Z.; Xu, F.F.; Araki, J.; Neubig, G. How can we know what language models know? Trans. Assoc. Comput. Linguist. 2020, 8, 423–438.
18. Arras, L.; Montavon, G.; Müller, K.R.; Samek, W. Explaining Recurrent Neural Network Predictions in Sentiment Analysis. In Proceedings of the 8th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis WASSA 2017: Proceedings of the Workshop, Copenhagen, Denmark, 29 August 2017; pp. 159–168.
19. Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.
20. Alvarez-Melis, D.; Jaakkola, T.S. On the Robustness of Interpretability Methods. arXiv 2018, arXiv:1806.08049.
21. Gilpin, L.H.; Bau, D.; Yuan, B.Z.; Bajwa, A.; Specter, M.; Kagal, L. Explaining explanations: An overview of interpretability of machine learning. In Proceedings of the 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), Turin, Italy, 1–3 October 2018; pp. 80–89.
22. Jiang, Z.; Zhang, Y.; Yang, Z.; Zhao, J.; Liu, K. Alignment rationale for natural language inference. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Bangkok, Thailand, 1–6 August 2021; pp. 5372–5387.
23. Jain, S.; Wallace, B.C. Attention is not explanation. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 3–5 June 2019; pp. 3543–3556.
24. Serrano, S.; Smith, N.A. Is attention interpretable? In Proceedings of the ACL 2019, Florence, Italy, 28 July–2 August 2019; pp. 2931–2951.
25. Wiegreffe, S.; Pinter, Y. Attention is not not explanation. In Proceedings of the EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; pp. 11–20.
26. Jacovi, A.; Goldberg, Y. Towards faithfully interpretable NLP systems: How should we define and evaluate faithfulness? In Proceedings of the ACL 2020, Online, 5–10 July 2020; pp. 4198–4205.
27. Lei, T.; Barzilay, R.; Jaakkola, T. Rationalizing neural predictions. In Proceedings of the EMNLP 2016, Austin, TX, USA, 22 October 2016; pp. 107–117.
28. DeYoung, J.; Jain, S.; Rajani, N.F.; Lehman, E.; Xiong, C.; Socher, R.; Wallace, B.C. ERASER: A benchmark to evaluate rationalized NLP models. In Proceedings of the ACL 2020, Online, 5–10 July 2020; pp. 4443–4458.
29. Welbl, J.; Stenetorp, P.; Riedel, S. Constructing datasets for multi-hop reading comprehension across documents. Trans. Assoc. Comput. Linguist. 2018, 6, 287–302.
30. Yu, X.; Min, S.; Zettlemoyer, L.; Hajishirzi, H. CREPE: Open-Domain Question Answering with False Presuppositions. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 10457–10480.
31. Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Du, M. Explainability for Large Language Models: A Survey. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–38.
32. Bilal, A.; Ebert, D.; Lin, B. LLMs for Explainable AI: A Comprehensive Survey. arXiv 2025, arXiv:2504.00125.
33. Huang, S.; Zhou, Y.; Liu, X.; Zhang, J.; Wang, W.; Huang, M. Can Large Language Models Explain Themselves? A Study of LLM-Generated Self-Explanations. arXiv 2023, arXiv:2310.11207.
34. Turpin, M.; Du, Y.; Michael, J.; Wu, Z.; Cotton, C.; Raghu, M.; Uesato, J. Language Models Don’t Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting. Adv. Neural Inf. Process. Syst. 2023, 36, 74952–74965.
35. Atanasova, P.; Simonsen, J.G.; Lioma, C.; Augenstein, I. Diagnostics-guided explanation generation. In Proceedings of the AAAI Conference on Artificial Intelligence 2022, Online, 22 February–1 March 2022; Volume 36, pp. 10445–10453.
36. Glockner, M.; Habernal, I.; Gurevych, I. Why do you think that? Exploring faithful sentence-level rationales without supervision. arXiv 2020, arXiv:2010.03384.
37. Min, S.; Zhong, V.; Zettlemoyer, L.; Hajishirzi, H. Multi-hop Reading Comprehension through Question Decomposition and Rescoring. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 6097–6109.
38. Mao, J.; Jiang, W.; Wang, X.; Liu, H.; Xia, Y.; Lyu, Y.; She, Q. Explainable question answering based on semantic graph by global differentiable learning and dynamic adaptive reasoning. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 5318–5325.
39. Yin, Z.; Wang, Y.; Hu, X.; Wu, Y.; Yan, H.; Zhang, X.; Cao, Z.; Huang, X.; Qiu, X. Rethinking label smoothing on multi-hop question answering. In Proceedings of the China National Conference on Chinese Computational Linguistics, Harbin, China, 3–5 August 2023; pp. 72–87.
40. Tishby, N.; Zaslavsky, N. Deep learning and the information bottleneck. In Proceedings of the 2015 IEEE Information Theory Workshop (ITW) 2015, Jeju Island, Republic of Korea, 11–15 October 2015; pp. 1–5.
41. Clark, K.; Luong, M.T.; Le, Q.V.; Manning, C.D. ELECTRA: Pre-training text encoders as discriminators rather than generators. arXiv 2020, arXiv:2003.10555.
42. Vinyals, O.; Fortunato, M.; Jaitly, N. Pointer networks. Adv. Neural Inf. Process. Syst. 2015, 28.
43. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.
44. Brown, P.F.; Della Pietra, S.A.; Della Pietra, V.J.; Mercer, R.L. The mathematics of statistical machine translation: Parameter estimation. Comput. Linguist. 1993, 19, 263–311.
45. Trivedi, H.; Balasubramanian, N.; Khot, T.; Sabharwal, A. MuSiQue: Multihop Questions via Single-hop Question Composition. Trans. Assoc. Comput. Linguist. 2022, 10, 539–554.
46. Yao, Y.; Ye, D.; Li, P.; Han, X.; Lin, Y.; Liu, Z.; Liu, Z.; Huang, L.; Zhou, J.; Sun, M. DocRED: A Large-Scale Document-Level Relation Extraction Dataset. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 764–777.
47. Maas, A.L.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning Word Vectors for Sentiment Analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–27 June 2011; pp. 142–150.
48. Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.
49. Robertson, S.E.; Walker, S.; Jones, S.; Hancock-Beaulieu, M.M.; Gatford, M. Okapi at TREC-3; British Library Research and Development Department: London, UK, 1995; pp. 109–126.
50. You, H. Multi-grained unsupervised evidence retrieval for question answering. Neural Comput. Appl. 2023, 35, 21247–21257.
51. OpenAI. GPT-4o Mini: Advancing Cost-Efficient Intelligence. 2024. Available online: https://openai.com/index/gpt-4o-mini-advancing-cost-efficient-intelligence/ (accessed on 17 September 2025).

| Dataset | Train Set | Test Set | Sampled Test Set |

|---|---|---|---|

| HotpotQA | 90,564 | 7405 | 1000 |

| MuSiQue | 25,494 | 3911 | 1000 |

| DocRED | 56,195 | 17,803 | 1000 |

| IMDB | 25,000 | - | 1000 |

| Models | Answer | Rationale | | |
|---|---|---|---|---|
| | | Precision | Recall | |
| BM25 | - | - | - | 40.5 |
| RAG-small | 62.8 | - | - | 49.0 |
| Semi-supervised | 66.0 | - | - | 64.5 |
| You (2023) | 50.9 | - | 74.2 | - |
| HUG | 66.8 | - | - | 67.1 |
| Proposed Model | 60.2 ± 0.2 | 60.1 | 76.5 | 67.2 ± 0.3 |
| Upperbound | 61.1 | 82.8 | 80.5 | 80.7 |

| Models | Answer | Rationale |

|---|---|---|

| BM25 | - | 12.9 |

| RAG-small | 24.2 | 32.0 |

| HUG | 25.1 | 34.2 |

| Proposed Model | 25.0 ± 0.4 | 35.4 ± 0.5 |

| Models | Answer | Rationale |

|---|---|---|

| GPTpred | 41.8 | 21.8 |

| Proposed Model | 81.8 ± 0.1 | 54.9 ± 0.2 |

| Models | Dataset | | | | Overall |
|---|---|---|---|---|---|
| | HotpotQA | MuSiQue | DocRED | IMDB | |
| GPTpred | 92.4 | 81.0 | 51.0 | 95.4 | 79.9 |
| GPTgold | 95.5 | 82.6 | 65.0 | - | - |
| Proposed Model | 87.5 | 55.9 | 87.2 | 88.7 | 79.8 |
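
The Overall column in the table above appears consistent, up to rounding, with an unweighted mean of the four per-dataset scores. This is an observation from the reported numbers rather than a definition stated in this section; the sketch below reproduces the arithmetic with a hypothetical helper name.

```python
# Per-dataset scores copied from the table above.
scores = {
    "GPTpred": {"HotpotQA": 92.4, "MuSiQue": 81.0, "DocRED": 51.0, "IMDB": 95.4},
    "Proposed Model": {"HotpotQA": 87.5, "MuSiQue": 55.9, "DocRED": 87.2, "IMDB": 88.7},
}


def unweighted_mean(per_dataset):
    """Average the dataset scores with equal weight (assumed definition of Overall)."""
    return sum(per_dataset.values()) / len(per_dataset)


for model, per_dataset in scores.items():
    print(f"{model}: {unweighted_mean(per_dataset):.3f}")
```

GPTgold has no IMDB entry in the table, which would explain why no overall value is reported for it under this reading.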

| Models | Answer | Evidence | | |
|---|---|---|---|---|
| | | Precision | Recall | |
| Proposed Model | 60.2 | 60.1 | 76.5 | 67.2 |
| - Loss | 60.5 | 47.7 | 61.1 | 52.9 |
| - Beam | 60.8 | 55.3 | 68.2 | 61.1 |
| - Loss & Beam | 60.4 | 47.3 | 60.9 | 52.7 |

| Models | Test Set | Sampled Test Set |

|---|---|---|

| Proposed Model (All Document Input ⇔ Only Rationale Sentences Input) | 84.9 | 93.3 |

| Proposed Model (Ground Truth ⇔ Only Rationale Sentences Input) | 60.2 | 90.3 |

| Proposed Model (All Document Input ⇔ Ground Truth) | 60.2 | 100 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, K.; Jang, Y.; Kim, H. Explainable Multi-Hop Question Answering: A Rationale-Based Approach. Big Data Cogn. Comput. 2025, 9, 273. https://doi.org/10.3390/bdcc9110273
