5.1. Students

The student cohort (N = 578) was predominantly aged 21 years or older (377; 65.2%), with 18–20-year-olds comprising 201 (34.8%) (Table 1). The gender split was 55.0% female (318) and 45.0% male (260). Reported fields of study clustered mainly in Computing/IT/AI (183; 31.7%), with smaller proportions in Education (120; 20.8%), Economics/Business (101; 17.5%), and Engineering (89; 15.4%); the remainder were Other (85; 14.7%). Academic standing spanned Year 1 to Year 5+, with the largest groups in Year 1 (139; 24.0%) and Year 2 (122; 21.1%), followed by Year 3 (115; 19.9%), Year 4 (104; 18.0%), and Year 5+ (98; 17.0%).
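The Table 1 shares can be re-derived from the raw counts as a quick consistency check. A minimal sketch, assuming N = 578 (the sum of the two age groups) is the common denominator for every variable; the dictionary keys are illustrative labels only.

```python
# Recompute the Table 1 shares from the counts reported in the text.
N = 578  # total student respondents (377 + 201)

counts = {
    "Age 21+": 377, "Age 18-20": 201,
    "Female": 318, "Male": 260,
    "Computing/IT/AI": 183, "Education": 120, "Economics/Business": 101,
    "Engineering": 89, "Other": 85,
    "Year 1": 139, "Year 2": 122, "Year 3": 115, "Year 4": 104, "Year 5+": 98,
}

# Each categorical variable should partition the whole cohort.
groups = {
    "age": ["Age 21+", "Age 18-20"],
    "gender": ["Female", "Male"],
    "field": ["Computing/IT/AI", "Education", "Economics/Business", "Engineering", "Other"],
    "year": ["Year 1", "Year 2", "Year 3", "Year 4", "Year 5+"],
}
for name, labels in groups.items():
    assert sum(counts[lab] for lab in labels) == N, f"{name} does not sum to N"

def share(count: int, total: int = N) -> float:
    """Percentage of the cohort, rounded to one decimal place."""
    return round(100 * count / total, 1)

shares = {label: share(c) for label, c in counts.items()}
for label, pct in shares.items():
    print(f"{label}: {pct}%")
```

Every variable sums to 578, and rounding to one decimal gives, for example, 55.0% female and 45.0% male.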

The study explored university students’ perceptions, usage patterns, and expectations regarding Generative AI (GenAI) in academic contexts. Thematic analysis of open-ended survey responses revealed diverse perspectives, with results categorised into key domains: emotional response (excitement and concern), preferred institutional response, actual use cases, and overall sentiment.

5.1.1. Emotional Response Toward GenAI

Among responses addressing emotional reactions to GenAI, 28.81% were classified as neutral or expressing no concerns, while 33.90% were too vague to classify. The remaining responses revealed both enthusiasm and apprehension. Positive themes, such as “efficiency and convenience” (6.78%) and “accessibility of knowledge”, emphasised GenAI’s utility in accelerating tasks like writing, coding, and summarising. However, concerns were more pronounced: 12.71% highlighted overreliance and potential cognitive decline, 9.32% focused on academic integrity risks, and 5.08% feared misinformation. A smaller fraction (3.39%) worried about uniformity and loss of originality.

These results suggest that while some students appreciate GenAI’s academic support capabilities, a substantial segment fears erosion of critical thinking and ethical boundaries. Notably, fewer students emphasised the positive aspects of GenAI compared to those voicing concerns.
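Summing the reported theme shares makes the imbalance behind this observation explicit. The sketch uses only the percentages quoted above; “accessibility of knowledge” is left out because no share is reported for it.

```python
# Theme shares (%) among emotional-response comments, as reported in the text.
concerns = {
    "overreliance / cognitive decline": 12.71,
    "academic integrity risks": 9.32,
    "misinformation": 5.08,
    "uniformity / loss of originality": 3.39,
}
positives = {"efficiency and convenience": 6.78}  # only positive theme with a reported share

concern_total = round(sum(concerns.values()), 2)
positive_total = round(sum(positives.values()), 2)
print(f"concerns: {concern_total}%  positives: {positive_total}%")
print(f"concerns outnumber positives by about {concern_total / positive_total:.1f}x")
```

Concern themes together account for 30.5% of responses, roughly four and a half times the reported positive share.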

5.1.2. Institutional Response Preferences

When asked how universities should respond to GenAI use in learning, the dominant theme (39.32%) again fell under “Other/Unclassified”, indicating a lack of clarity or specificity in student expectations. However, a significant portion (23.93%) supported the integration of GenAI into learning, while 11.11% called for guidance and training on responsible use. A small minority (3.42%) advocated for openness and experimentation, and only 0.85% suggested regulation or control.

These findings suggest a student body that is generally open to GenAI, with a preference for structured support rather than restrictive policies. The near absence of calls for regulation or control (0.85%) highlights the need for balanced governance that fosters responsible experimentation and literacy.

5.1.3. Patterns of GenAI Use

Analysis of usage patterns indicated that students primarily utilise GenAI for idea generation (125 responses) and summarising readings (91), which align with preparatory and cognitively active tasks. Coding assistance (83) and proofreading (58) were also common. However, 66 students admitted to using GenAI for drafting assignments, raising potential concerns about academic integrity if these texts are submitted without proper modification or acknowledgment.

This usage pattern highlights a tension between legitimate academic support and possible misconduct. While most uses fall into acceptable or grey areas, the prevalence of assignment drafting necessitates clearer institutional policies on ethical boundaries.

5.1.4. Sentiment Distribution and Gender Differences

A sentiment analysis of the qualitative data showed a dominance of neutral responses (n = 250), with only 55 negative and 20 positive reactions. The lack of strong sentiment may reflect uncertainty or limited awareness of GenAI’s implications. Gender differences were modest: females contributed more neutral and slightly more negative responses, while both genders offered similarly low levels of positive sentiment. This cautious emotional landscape indicates a need for awareness-building among students to support informed engagement with GenAI.

5.1.5. Synthesis and Educational Implications

Where students expressed a clear preference, they favoured integration, training, and guidance over bans or restrictions. The rarity of prohibition-oriented suggestions (only two respondents) signals an opportunity for universities to lead with constructive, transparent frameworks. At the same time, the large proportion of vague or neutral responses, especially regarding how institutions should act, suggests limited student preparedness for, or engagement with, GenAI policy discourse. Together, these findings suggest that student attitudes toward GenAI are marked by ambivalence, curiosity, and caution, with a clear demand for institutional leadership. Educational strategies should therefore prioritise: (a) developing GenAI literacy and critical usage skills; (b) embedding ethical and creative uses of AI within curricula; and (c) encouraging informed experimentation within academic integrity boundaries.

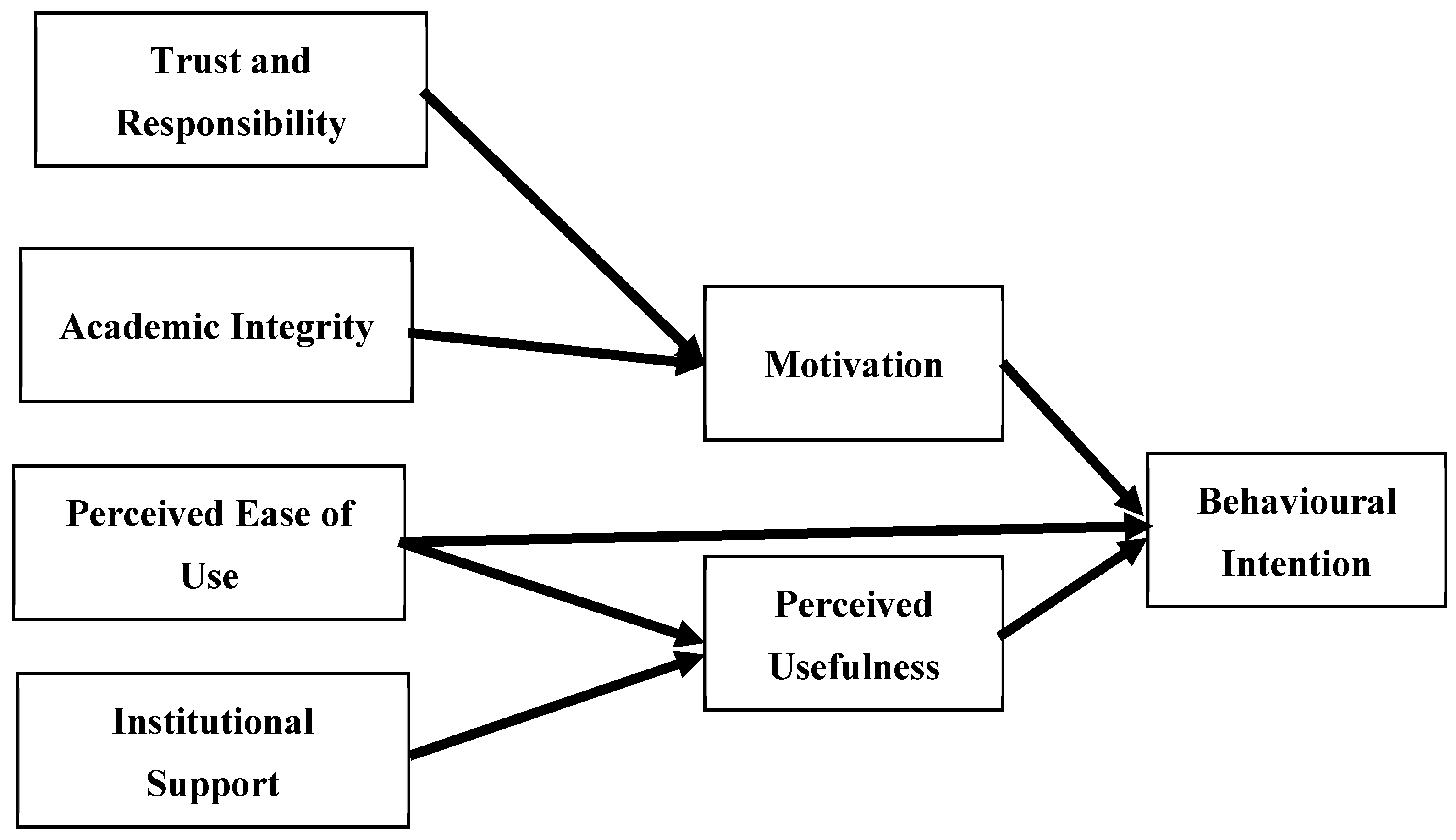

5.1.6. Structural Model Assessment

The structural model was evaluated using SmartPLS, incorporating key TAM-based and contextual constructs: perceived ease of use (EOU), perceived usefulness (PU), motivation (Mov), institutional support (Ins-Sup), academic integrity, trust-responsibility (T-R), and intention to use GenAI. Model performance was assessed using multiple criteria, including path coefficients, R² values, mediation analysis, reliability and validity statistics, multicollinearity diagnostics, and model fit indices.

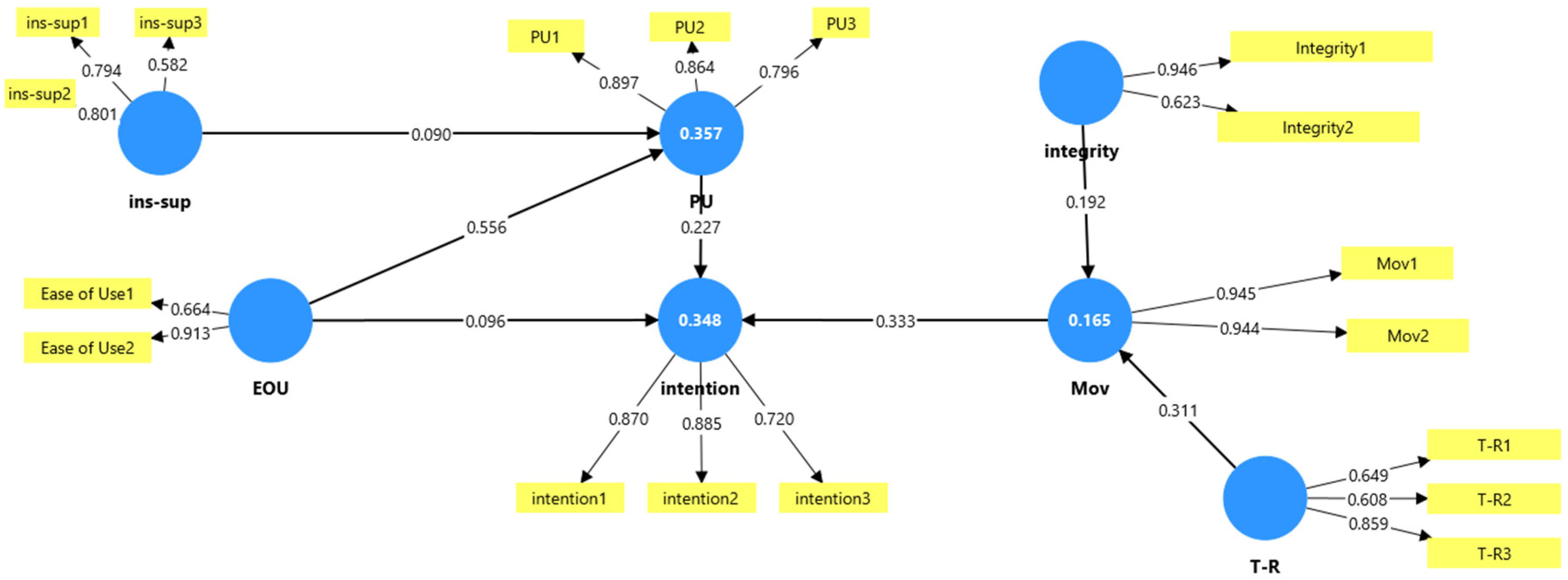

Measurement Model: Factor Loadings and Indicator Validity

For the construct Perceived Ease of Use (EOU), two indicators were used: Ease of Use1 and Ease of Use2, which exhibited factor loadings of 0.664 and 0.913, respectively (Table 2). Although the first item was slightly below the recommended 0.70 threshold, it was retained because the construct’s AVE (0.638) and composite reliability (0.774) were acceptable, indicating adequate convergent validity. Motivation (Mov) was measured using two items (Mov1 and Mov2), both of which showed exceptionally high factor loadings of 0.945 and 0.944, respectively (Table 2). These values reflect excellent indicator reliability and strong representation of the underlying latent construct, further supported by the construct’s AVE of 0.892 and composite reliability of 0.943. For perceived usefulness (PU), three items were included, with loadings of 0.897 (PU1), 0.864 (PU2), and 0.796 (PU3) (Table 2). All values exceeded the recommended minimum threshold, indicating a strong reflective measurement model for this construct. The high internal consistency of PU was further confirmed by a composite reliability of 0.889 and an AVE of 0.728.

Trust-responsibility (T-R) was assessed using three items (T-R1 = 0.649, T-R2 = 0.608, T-R3 = 0.859). Although two of the indicators fell slightly below the 0.70 threshold, the average variance extracted (AVE = 0.509) was still within acceptable limits, suggesting that the construct retains adequate convergent validity. Given the theoretical relevance of this construct and its acceptable internal consistency (composite reliability = 0.753), the items were retained for further analysis. The construct institutional support (Ins-Sup) also included three items: ins-sup1 (0.794), ins-sup2 (0.801), and ins-sup3 (0.582). Two of the three indicators showed high loadings, while one fell slightly below 0.60. Despite this, the composite reliability was acceptable at 0.773 and the AVE was 0.537, suggesting sufficient convergent validity for a three-item construct in an exploratory study. Integrity was measured by two items with factor loadings of 0.946 (Integrity1) and 0.623 (Integrity2). The first item strongly represents the construct, while the second is below the optimal threshold. Nevertheless, the construct’s AVE (0.641) and composite reliability (0.774) support its validity.

Finally, the construct intention to use was captured using three items. The indicators exhibited loadings of 0.870 (Intention1), 0.885 (Intention2), and 0.720 (Intention3), indicating a well-functioning measurement model. The internal consistency of this construct is also high (composite reliability = 0.867; AVE = 0.686), suggesting reliable and valid measurement. In summary, while a few items had loadings below the ideal threshold of 0.70, the overall measurement model demonstrates good psychometric properties in terms of convergent validity, internal consistency, and indicator reliability. The constructs are deemed robust enough for further structural modelling and hypothesis testing.
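The AVE and composite reliability figures quoted above follow directly from the standardised loadings. A minimal sketch using the standard formulas (AVE as the mean squared loading; composite reliability as ρ_c = (Σλ)² / ((Σλ)² + Σ(1 − λ²))); we assume, without confirming against the software documentation, that this is the composite reliability variant reported here.

```python
from math import fsum

# Standardised outer loadings reported in Table 2.
loadings = {
    "EOU": [0.664, 0.913],
    "Mov": [0.945, 0.944],
    "PU": [0.897, 0.864, 0.796],
    "T-R": [0.649, 0.608, 0.859],
    "Ins-Sup": [0.794, 0.801, 0.582],
    "Integrity": [0.946, 0.623],
    "Intention": [0.870, 0.885, 0.720],
}

def ave(lams):
    """Average variance extracted: mean of the squared loadings."""
    return fsum(lam * lam for lam in lams) / len(lams)

def composite_reliability(lams):
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances 1 - lam^2)."""
    squared_sum = fsum(lams) ** 2
    error_var = fsum(1 - lam * lam for lam in lams)
    return squared_sum / (squared_sum + error_var)

for name, lams in loadings.items():
    print(f"{name:<10} AVE = {ave(lams):.3f}  CR = {composite_reliability(lams):.3f}")
```

This gives, for example, CR = 0.774 for EOU and AVE = 0.892 for Mov. Third-decimal differences from the reported values (such as AVE 0.637 vs. 0.638 for EOU) are expected because the input loadings are themselves rounded.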

Cross-loadings (Table 3) indicate that each indicator loads highest on its intended construct, supporting discriminant validity. Most primary loadings exceed the 0.70 guideline (e.g., PU1–PU3 = 0.796–0.897; Mov1–Mov2 = 0.944–0.945; Intention1–Intention3 = 0.720–0.885; EOU2 = 0.913; Integrity1 = 0.946), while the remaining items have lower but retained primary loadings of 0.58–0.66 (EOU1 = 0.664; T-R1–T-R2 = 0.608–0.649; ins-sup3 = 0.582; Integrity2 = 0.623). Cross-loadings on non-target constructs are consistently lower, with typical separations ≥ 0.20 (e.g., EOU2: 0.913 on EOU vs. 0.659 on Mov; Mov1–Mov2: 0.944–0.945 on Motivation vs. 0.661–0.722 on PU; PU1–PU3: 0.796–0.897 on PU vs. 0.608–0.651 on Mov). A small number of conceptually adjacent constructs (Motivation ↔ PU; EOU ↔ Motivation; T-R ↔ Integrity) show moderate secondary associations, which is theoretically consistent with the model (ease can facilitate motivation; motivation relates to perceived usefulness; responsible use relates to integrity). Overall, the cross-loading pattern, together with the Fornell–Larcker and HTMT results, supports convergent validity of the intended constructs and adequate discriminant validity across the measurement model.

Construct Reliability and Validity

Internal consistency reliability was assessed using Cronbach’s alpha and composite reliability, and convergent validity using average variance extracted (AVE). Most constructs met the recommended thresholds (Cronbach’s alpha > 0.70, AVE > 0.50), with some exceptions. For instance, EOU had a low alpha (0.464) but acceptable composite reliability (0.774) and AVE (0.638) (Table 4); because Cronbach’s alpha tends to be low for scales with only two indicators, this was judged to pose limited risk. Constructs such as Mov (α = 0.879, AVE = 0.892), PU (α = 0.813, AVE = 0.728), and intention (α = 0.772, AVE = 0.686) all demonstrated strong reliability and convergent validity (Table 4). Discriminant validity was assessed using inter-construct correlations and the Fornell–Larcker criterion (Table 5). The square root of each construct’s AVE exceeded its correlations with all other constructs, supporting discriminant validity. High correlations among PU, Mov, and intention were expected given their theoretical links, but none exceeded the square roots of the corresponding AVEs (Table 5).
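Cronbach’s alpha compares the sum of item variances with the variance of the summed scale, which is why it penalises very short scales. A minimal sketch of the standard formula on a small hypothetical item-score matrix (invented for illustration, not the study’s responses), together with the Spearman–Brown form of standardised alpha to show how alpha grows with the number of items k at a fixed average inter-item correlation.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: list of k item columns, each a list of respondent scores."""
    k = len(items)
    n = len(items[0])
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_var = sum(variance(col) for col in items)  # sample variances (n - 1)
    return k / (k - 1) * (1 - item_var / variance(totals))

def standardised_alpha(mean_r, k):
    """Spearman-Brown form: alpha for k items with average inter-item correlation mean_r."""
    return k * mean_r / (1 + (k - 1) * mean_r)

# Hypothetical 5-point responses for a two-item scale (6 respondents).
item1 = [4, 5, 3, 4, 2, 5]
item2 = [4, 4, 3, 5, 2, 4]
print(round(cronbach_alpha([item1, item2]), 3))

# Same average inter-item correlation, different scale lengths.
for k in (2, 3, 5):
    print(k, round(standardised_alpha(0.30, k), 3))
```

With an average inter-item correlation of 0.30, standardised alpha rises from about 0.46 for two items to about 0.68 for five, illustrating why two-item constructs can show low alpha alongside acceptable composite reliability.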

The correlation between PU and Mov exceeds 0.70 (Table 5). Because PU and Mov are theoretically adjacent in TAM/SDT models, we examined inner collinearity among the predictors of each endogenous construct. All inner VIFs were below the recommended thresholds (3.3/5.0): Mov → Intention = 2.471, PU → Intention = 2.298, EOU → Intention = 1.764; EOU → PU = 1.189, Institutional Support → PU = 1.189; Integrity → Motivation = 1.074, Trust/Responsibility → Motivation = 1.074. Item-level VIFs were similarly low (maximum 2.589). These diagnostics indicate no harmful multicollinearity. Discriminant validity is further supported by the Fornell–Larcker matrix (√AVE on the diagonal exceeds the inter-construct correlations) and by HTMT, where the largest value (PU–Mov = 0.869) remains below the 0.90 guideline.
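Inner VIFs can be read off the inverse of the predictors’ correlation matrix: the VIF of predictor j is the j-th diagonal element. With exactly two predictors this reduces to 1 / (1 − r²) for both, which explains why the paired values above (1.189 and 1.189; 1.074 and 1.074) are identical. A minimal sketch of that definition; the correlation used in the example is back-calculated from the reported VIF of 1.189 for illustration and is not taken from Table 5.

```python
from math import sqrt

def invert(matrix):
    """Gauss-Jordan inverse (with partial pivoting) of a small square matrix."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def vifs(corr):
    """VIF of each predictor = diagonal of the inverse predictor correlation matrix."""
    inv = invert(corr)
    return [inv[i][i] for i in range(len(corr))]

# Two predictors of PU (EOU, Ins-Sup): correlation implied by VIF = 1.189.
r = sqrt(1 - 1 / 1.189)
print(round(r, 3), [round(v, 3) for v in vifs([[1.0, r], [r, 1.0]])])
```

The implied predictor correlation is about 0.40, far from the levels at which collinearity would distort path estimates.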

Coefficient of Determination (R²) and Predictive Power

The R² values represent the proportion of variance in each endogenous construct explained by its predictors. The R² was 0.165 for motivation (Mov), 0.357 for perceived usefulness (PU), and 0.348 for intention to use (Table 6). These values suggest moderate explanatory power for PU and intention, and relatively lower explanatory power for Mov. The adjusted R² values were closely aligned with the unadjusted figures, indicating model stability.
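The adjusted R² penalises R² for the number of predictors k relative to the sample size n: adjusted R² = 1 − (1 − R²)(n − 1)/(n − k − 1). A minimal sketch, assuming n = 578 students and the predictor counts implied by the structural paths in this section (Mov: Integrity and T-R; PU: EOU and Ins-Sup; Intention: Mov, PU, and EOU); the printed values are recomputations, not the figures in Table 6.

```python
def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R-squared for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

N = 578  # assumed sample size (student respondents)
models = {"Mov": (0.165, 2), "PU": (0.357, 2), "Intention": (0.348, 3)}
for name, (r2, k) in models.items():
    print(f"{name}: R2 = {r2:.3f}, adjusted R2 = {adjusted_r2(r2, N, k):.3f}")
```

With several hundred observations and only two or three predictors per construct, the penalty is under 0.005, which is why adjusted and unadjusted values are so close.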

Effect Sizes (f²) and Contribution of Predictors

Effect size analysis revealed that EOU had a large effect on PU (f² = 0.405), consistent with its theoretical importance in TAM (Table 7). Against Cohen’s conventional benchmarks (0.02 small, 0.15 medium, 0.35 large), the effects of T-R → Mov (f² = 0.108) and Mov → Intention (f² = 0.069) were small, with the former approaching the medium range, while PU → Intention had a smaller but non-trivial effect (f² = 0.034) (Table 7). Integrity → Mov (f² = 0.041) also had a small effect, whereas Ins-Sup → PU (f² = 0.011) fell below the 0.02 benchmark (Table 7). The direct effect of EOU on Intention (f² = 0.008) was minimal, emphasising the mediated role of PU.
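Cohen’s f² for a path compares the R² of the endogenous construct with and without that predictor: f² = (R²_included − R²_excluded) / (1 − R²_included). A minimal sketch of that definition and of the conventional benchmarks; the R²_excluded value in the example is back-solved from the reported R² for PU (0.357) and f² for EOU → PU (0.405), purely to illustrate the arithmetic.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f-squared effect size of one predictor on an endogenous construct."""
    return (r2_included - r2_excluded) / (1 - r2_included)

def label(f2: float) -> str:
    """Cohen's conventional benchmarks: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"

r2_pu = 0.357
r2_pu_without_eou = r2_pu - 0.405 * (1 - r2_pu)  # back-solved, about 0.097
print(round(f_squared(r2_pu, r2_pu_without_eou), 3))

reported = {"EOU -> PU": 0.405, "T-R -> Mov": 0.108, "Mov -> Intention": 0.069,
            "Integrity -> Mov": 0.041, "PU -> Intention": 0.034,
            "Ins-Sup -> PU": 0.011, "EOU -> Intention": 0.008}
for path, f2 in reported.items():
    print(f"{path}: f2 = {f2:.3f} ({label(f2)})")
```

In other words, dropping EOU would leave PU with an R² of roughly 0.10, which is what makes its effect large.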

The reliability analysis indicates that most constructs achieved satisfactory internal consistency and measurement stability, supporting confidence in the reported findings. Cronbach’s α and composite reliability values exceeded or approached the accepted thresholds (α ≥ 0.70; CR ≥ 0.70), and all AVE scores were above 0.50, confirming adequate convergent validity. Although a few constructs, specifically ease of use (α = 0.46) and integrity (α = 0.50), showed lower alpha values, their composite reliabilities remained acceptable, suggesting that indicator homogeneity was sufficient for exploratory research. Moreover, the discriminant validity checks (Fornell–Larcker and HTMT) were satisfied, and low inner-VIF statistics indicated that multicollinearity was not a concern. Together, these results suggest that the measurement model is statistically reliable and that the observed relationships among constructs are unlikely to be artifacts of measurement error. Nevertheless, the modest R² values (0.165–0.357) imply that additional unmeasured factors may influence GenAI adoption; these should be explored in future studies to strengthen the model’s explanatory power and generalisability.

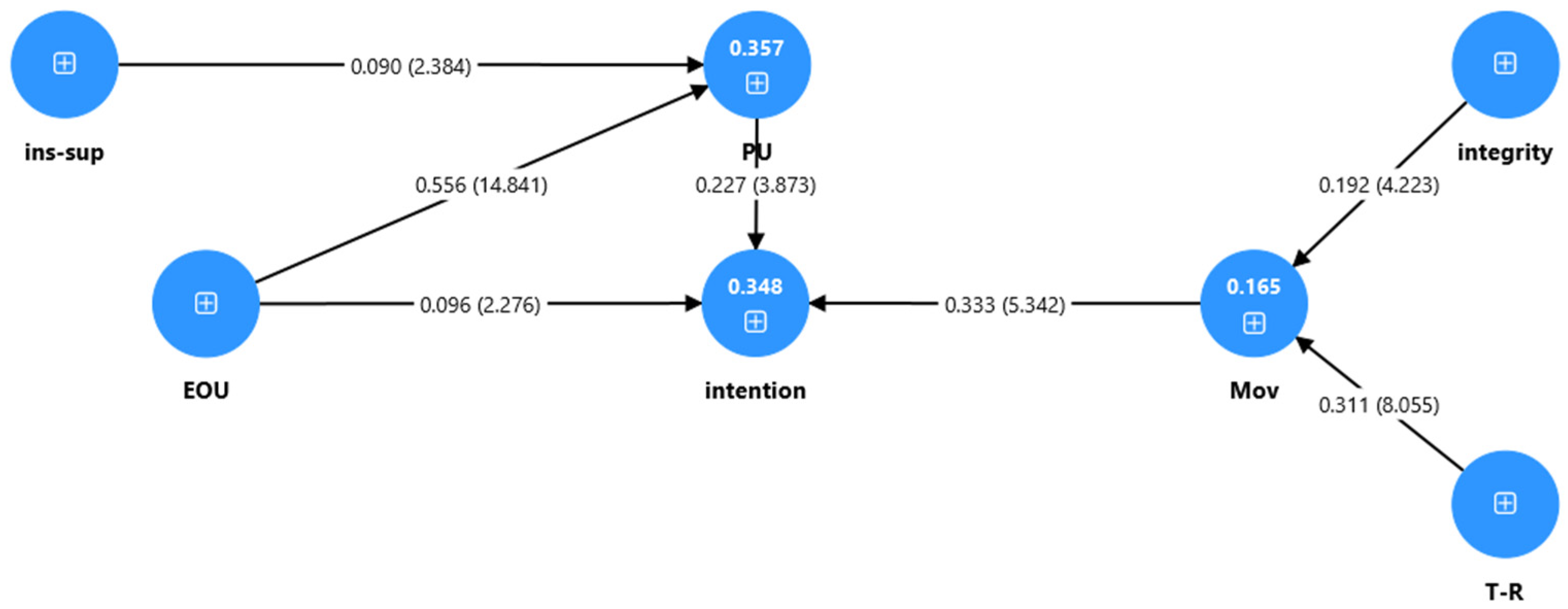

Path Coefficients and Hypothesis Testing

The direct path analysis (Figure 2 and Figure 3) revealed several statistically significant relationships. EOU → PU was highly significant (β = 0.555, t = 14.841, p < 0.001), confirming the foundational TAM hypothesis that perceived ease of use positively affects perceived usefulness (Figure 3). Similarly, PU → Intention (β = 0.227, t = 3.873, p < 0.001) and Mov → Intention (β = 0.334, t = 5.342, p < 0.001) were both significant predictors of behavioural intention, with motivation emerging as the strongest direct influence on intention. The path T-R → Mov was also significant (β = 0.314, t = 8.055, p < 0.001), indicating that ethical responsibility and trust contribute meaningfully to internal motivational states. The effect of Institutional Support → PU (β = 0.094, t = 2.384, p = 0.017) was statistically significant but relatively weak. Furthermore, the model showed a modest yet significant effect of EOU → Intention (β = 0.097, t = 2.276, p = 0.023), suggesting some direct influence beyond the pathway mediated by PU. Lastly, the path Integrity → Mov was also significant (β = 0.193, t = 4.223, p < 0.001), supporting the role of personal ethical alignment in shaping students’ motivation to use GenAI tools.
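Path t-values in SmartPLS are typically obtained by bootstrapping, and with a large number of bootstrap samples a two-tailed p-value can be approximated from the standard normal distribution. A minimal sketch under that normal approximation (an assumption; the software’s exact reference distribution may differ slightly), which reproduces the reported p-values to three decimals.

```python
from math import erfc, sqrt

def two_tailed_p(t: float) -> float:
    """Two-tailed p-value for a t-statistic under a standard normal approximation."""
    return erfc(abs(t) / sqrt(2))

paths = {
    "EOU -> PU": 14.841, "PU -> Intention": 3.873, "Mov -> Intention": 5.342,
    "T-R -> Mov": 8.055, "Ins-Sup -> PU": 2.384, "EOU -> Intention": 2.276,
    "Integrity -> Mov": 4.223,
}
for path, t in paths.items():
    p = two_tailed_p(t)
    p_text = "< 0.001" if p < 0.001 else f"= {p:.3f}"
    print(f"{path}: t = {t:.3f}, p {p_text}")
```

Only the two weakest paths (Ins-Sup → PU and EOU → Intention) have p-values above 0.001, at about 0.017 and 0.023.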

5.2. Lecturers’ Perspectives

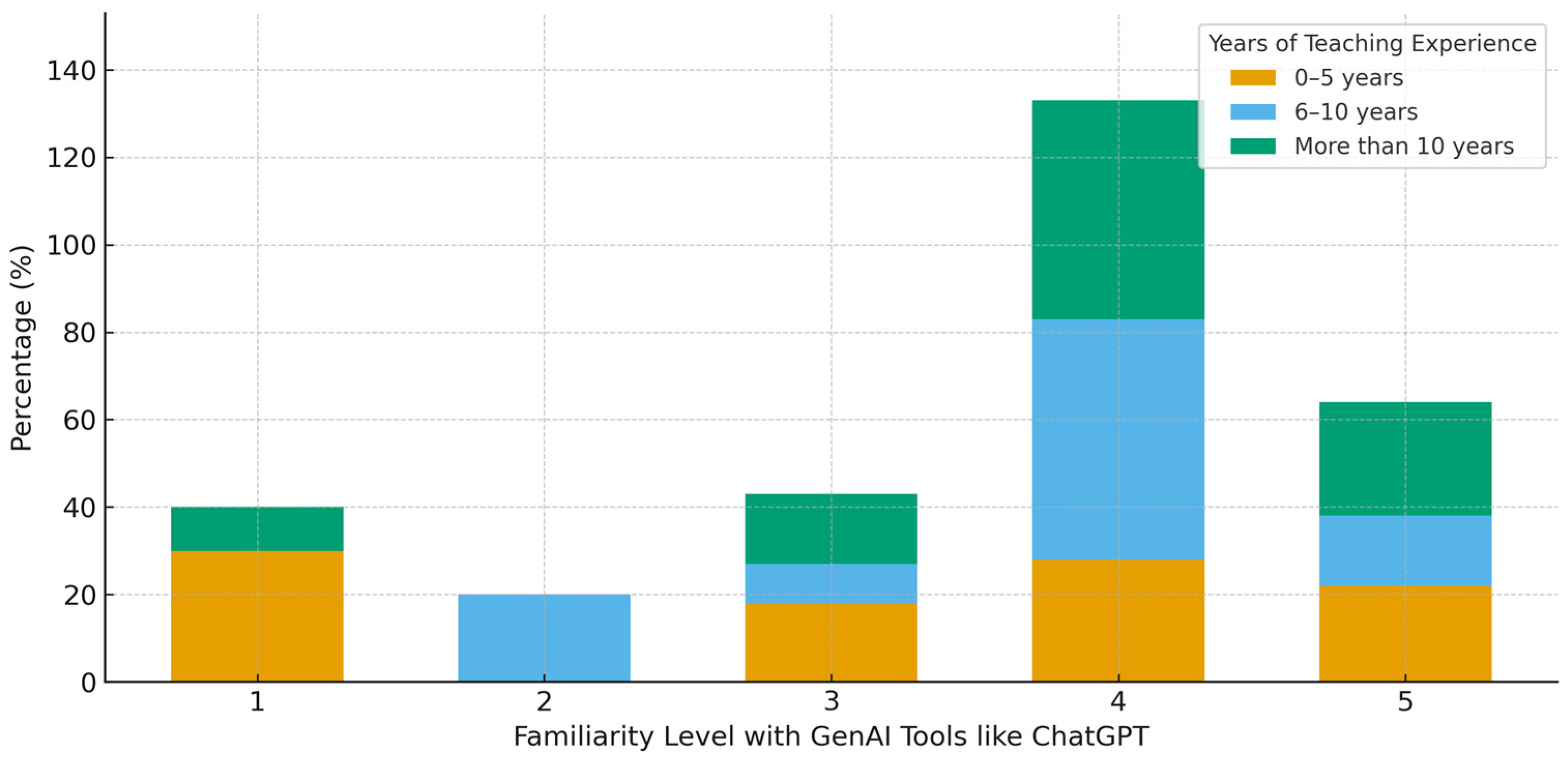

Data were collected from 309 lecturers. Lecturers with more than 10 years of teaching experience formed the majority (232; 75.08%), followed by those with 0–5 years (42; 13.59%) and those with 6–10 years (35; 11.33%).
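The experience-band sizes follow from the reported total and shares: 11.33% of 309 corresponds to 35 lecturers, which leaves 42 in the 0–5 years band once the 232 senior lecturers are accounted for. A minimal sketch, assuming the three bands are exhaustive.

```python
TOTAL = 309  # lecturers surveyed

bands = {">10 years": 232, "6-10 years": round(0.1133 * TOTAL)}  # 11.33% of 309 -> 35
bands["0-5 years"] = TOTAL - sum(bands.values())  # remainder, assuming exhaustive bands

for name, n in bands.items():
    print(f"{name}: {n} ({100 * n / TOTAL:.2f}%)")
```

The remainder of 42 is also consistent with the 30.95% (13 of 42) reported below for early-career lecturers at the lowest familiarity level.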

A cross-tabulation was performed to explore the relationship between lecturers’ teaching experience and their self-reported familiarity with Generative AI tools such as ChatGPT. The results, visualised in Figure 4, show notable differences across experience groups. Lecturers with more than 10 years of experience displayed the highest levels of GenAI familiarity, with 50% identifying at level 4 and 25.86% at level 5. This suggests that senior academics may have more exposure to, or institutional encouragement to experiment with, emerging technologies. In contrast, lecturers with 0–5 years of experience showed greater polarisation: 30.95% reported minimal familiarity (level 1), while a similar proportion reported moderate to high familiarity. Interestingly, lecturers with 6–10 years of experience had the highest proportion at level 4 (54.29%) but noticeably lower shares in the lowest and middle categories. Overall, the results suggest that teaching experience is not linearly associated with GenAI familiarity. Rather, both early-career and veteran lecturers show bimodal familiarity patterns, while mid-career lecturers appear to consolidate their confidence primarily at higher levels of familiarity. These trends underscore the importance of targeted professional development, particularly for newer faculty, who may require structured exposure to GenAI tools to build confidence and integrate them responsibly in their teaching practices.
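Row percentages (the share of each familiarity level within an experience band) are what make bands of very different sizes comparable; for instance, 116 of 232 senior lecturers at level 4 is 50%. A minimal standard-library sketch of a row-normalised cross-tabulation; the records are invented for illustration and are not the survey data.

```python
from collections import Counter, defaultdict

def row_percentages(pairs):
    """Cross-tabulate (group, level) pairs; return % of each level within each group."""
    table = defaultdict(Counter)
    for group, level in pairs:
        table[group][level] += 1
    out = {}
    for group, counts in table.items():
        n = sum(counts.values())
        out[group] = {level: round(100 * c / n, 2) for level, c in sorted(counts.items())}
    return out

# Hypothetical records: (experience band, familiarity level 1-5).
records = [("0-5", 1), ("0-5", 1), ("0-5", 4), ("0-5", 3),
           ("6-10", 4), ("6-10", 4), ("6-10", 5),
           (">10", 4), (">10", 4), (">10", 5), (">10", 2)]
for group, row in row_percentages(records).items():
    print(group, row)
```

Each row sums to 100% (up to rounding), so comparisons are made within bands rather than on raw counts.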

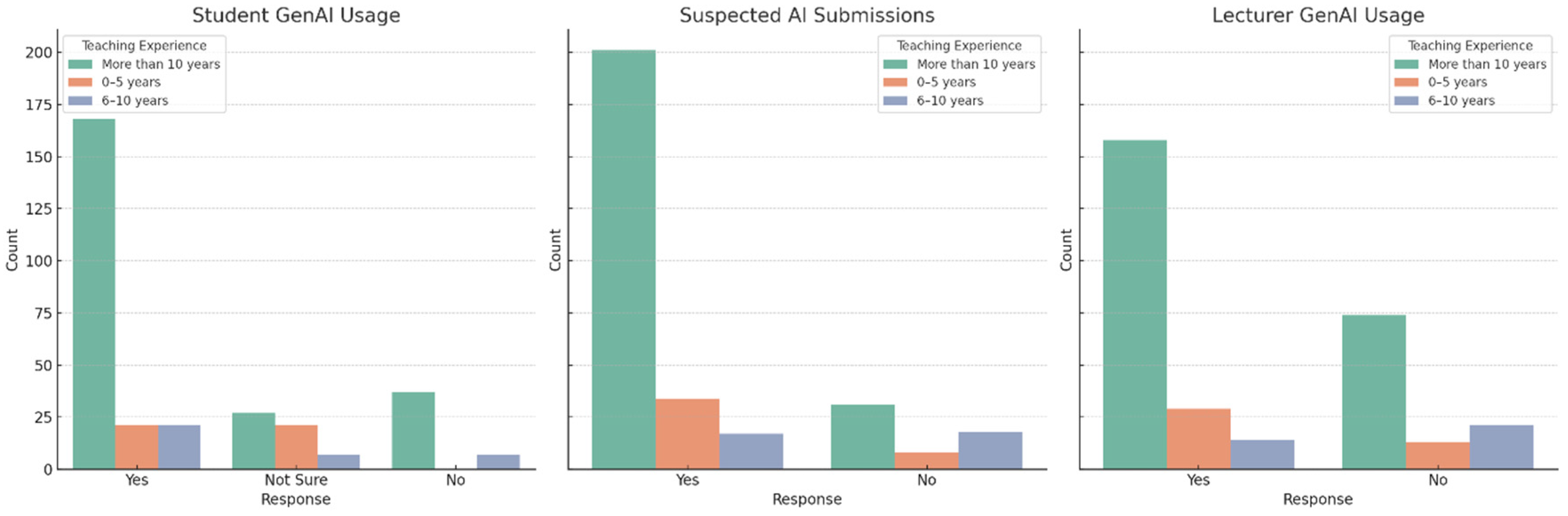

To further explore lecturers’ experience with GenAI, we examined three key aspects: their beliefs about students’ use of GenAI in assignments, their experience of submissions suspected of being substantially GenAI-generated, and their own use of GenAI to generate teaching materials. The analysis, stratified by years of teaching experience, reveals notable differences in perception and practice. Lecturers with more than 10 years of experience were the most likely to believe that their students used GenAI in assignments; a clear majority in this group responded “Yes”, suggesting heightened awareness or detection ability stemming from longer academic exposure. In contrast, lecturers in the 0–5 years category showed a more even distribution across “Yes”, “No”, and “Not Sure”, indicating uncertainty or less confidence in detecting AI-assisted work.

Again, senior lecturers (Figure 5) were more likely to report having encountered suspicious submissions. This may stem from their experience in identifying non-authentic writing patterns or deviations from student norms. Conversely, early-career lecturers were more likely to respond “No”, which may reflect either a lack of detection experience or lower expectations regarding students’ AI use. When asked about their own use of GenAI tools for teaching or preparation, the mid-career group (6–10 years) showed relatively higher usage rates, suggesting that this group may be more proactive in exploring instructional innovations. In contrast, early-career lecturers had the highest proportion of non-use, possibly due to a lack of institutional support or uncertainty about acceptable AI integration. Interestingly, many senior lecturers also reported high levels of GenAI use, possibly owing to greater autonomy and access to institutional resources.

5.2.1. Main Concerns About GenAI in Student Assignments

The main concerns raised were: (a) overreliance and laziness: students may rely entirely on GenAI without making the effort to understand, think critically, or verify content, and GenAI use may reduce student motivation and cognitive effort; (b) loss of learning and academic skills: a risk that students learn little, skip learning objectives, and miss out on acquiring essential knowledge, skills, and values, with some lecturers believing that GenAI has already weakened the development of research, analytical, and verification skills; (c) academic integrity issues: an increased potential for plagiarism and academic dishonesty, with GenAI use possibly undermining academic integrity and degrading original thinking; (d) degradation of creativity and critical thinking: concerns that AI may hinder students’ creativity, reduce their ability to think “out of the box”, and harm their capacity for deep, independent thinking; and (e) ethical and unregulated use: use without ethical consideration or institutional regulation, and a lack of guidelines or training on responsible AI use. Some lecturers felt outdated or challenged by students’ rapid adoption of AI tools. A few acknowledged that GenAI can be helpful for learning and for simplifying assignment development, but stressed that it must be used responsibly and not as a substitute for understanding. Lecturers also emphasised the need to train students on both the advantages and the drawbacks of GenAI use.

5.2.2. Institutions’ Support in Responding to GenAI

The main types of support suggested by lecturers to effectively respond to the rise of Generative AI in academic settings centre around six key areas. Training and workshops were the most frequently cited form of support. Participants emphasised the need for organised workshops, seminars, and hands-on training sessions for both faculty and students. These should cover practical applications, best practices, and the ethical integration of GenAI into teaching and learning processes. A second major area involves the establishment of clear guidelines and institutional policies. Respondents recommended that universities develop standardised regulations that define acceptable levels of GenAI usage and specify appropriate use-cases, particularly concerning student assignments.

Another widely supported suggestion was providing access to AI detection and plagiarism tools, such as Turnitin. This would assist faculty in identifying potential misuse or over-reliance on GenAI, thereby supporting academic integrity. Lecturers also highlighted the importance of facilitating access to AI tools and resources. Institutions were encouraged to offer subscriptions to professional GenAI platforms and invest in virtual labs, along with financial support to enable educators to explore and incorporate AI tools meaningfully in their pedagogy. A more proactive recommendation was to promote innovation by encouraging faculty to experiment with and develop custom AI applications tailored to educational needs. Sharing successful examples of GenAI integration was seen as a way to increase faculty confidence and stimulate wider adoption.

Finally, a smaller subset of participants proposed restrictions on GenAI usage in specific environments, such as computer labs or exam settings, to preserve the integrity of independent learning and assessment outcomes.

5.2.3. Motivation and Autonomy

Regarding the statement that students may use GenAI to enhance, not bypass, learning, most lecturers responded positively: nearly 46% agreed (rating 4) and about 12% strongly agreed (rating 5), suggesting a broad belief that GenAI can support genuine learning when used responsibly. Only 6% disagreed (ratings 1–2), while 36% remained neutral. At the same time, lecturers expressed concern about the statement that GenAI may demotivate deep effort in assessments: over 50% agreed (rating 4) and 12% strongly agreed (rating 5), indicating a strong perceived risk that GenAI might reduce students’ academic effort. Only about 8% disagreed. Lecturers therefore appear to recognise both the constructive and the disruptive potential of GenAI: while many believe that students could use these tools to support meaningful learning, an equally strong contingent worries that GenAI may undermine academic effort and intrinsic motivation, especially in assessments. This duality highlights the need for careful policy framing and pedagogical support that enables learning-enhancing uses while discouraging shortcuts or dependency. Lecturers with 6–10 years of experience expressed the highest agreement that GenAI can enhance learning (mean ≈ 4.00) and the strongest concern that it may demotivate student effort (mean ≈ 4.03) (Figure 6). Those with more than 10 years reported slightly more neutral views, suggesting a more tempered or nuanced stance, possibly informed by broader pedagogical experience. Early-career lecturers (0–5 years) showed the lowest belief in GenAI’s constructive role (mean ≈ 3.36) and the least concern about demotivation (mean ≈ 3.61) (Figure 6), possibly reflecting lower exposure to, or less confidence in evaluating, GenAI’s academic implications.

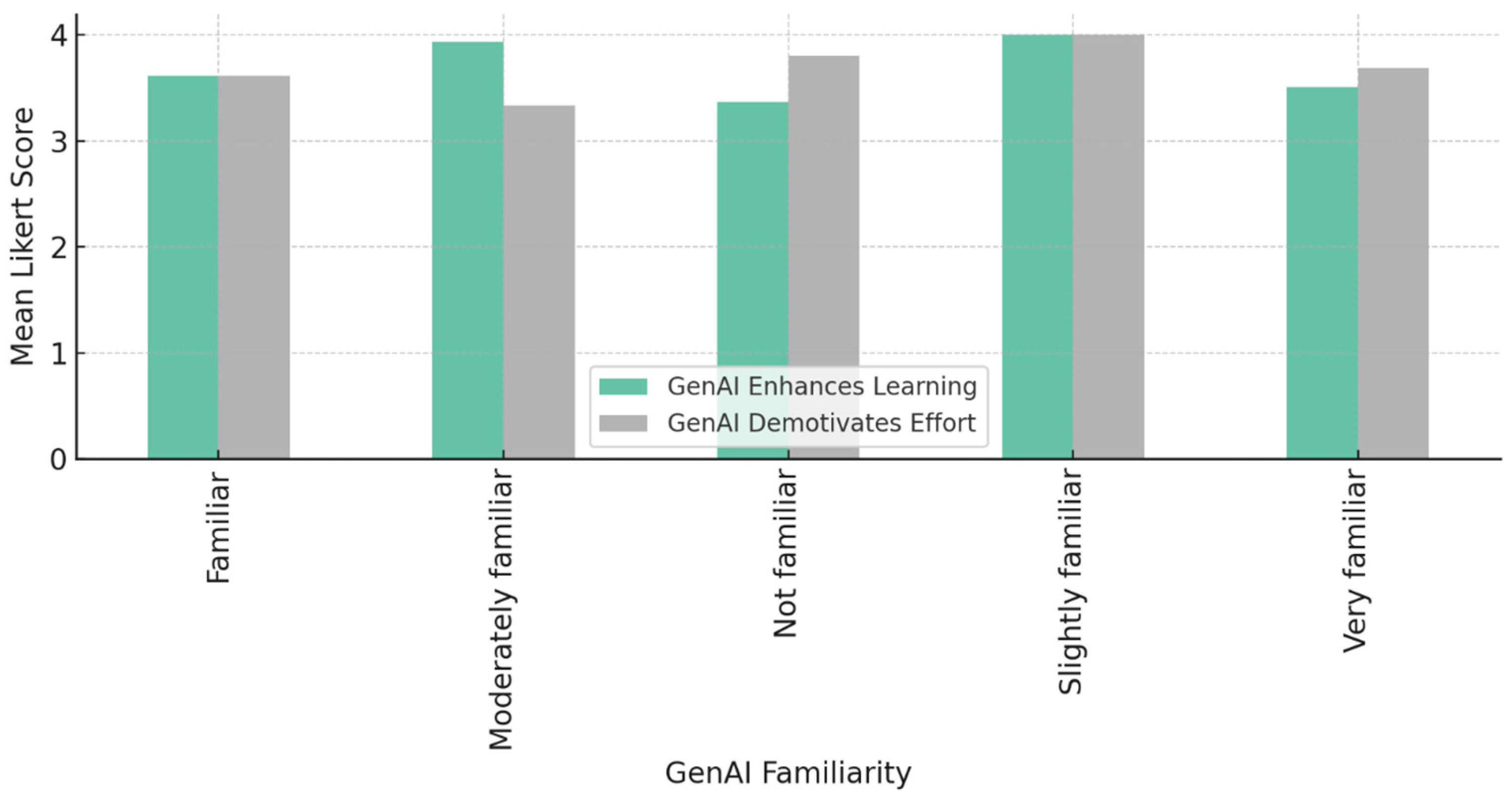

Lecturers who were slightly familiar with GenAI held the strongest dual perceptions: high belief in GenAI’s potential for learning (mean = 4.00) and high concern about its potential to reduce student effort (mean = 4.00) (Figure 7). Those not familiar with GenAI showed lower optimism (mean = 3.37) but comparatively high concern (mean = 3.80) (Figure 7), suggesting that uncertainty might amplify suspicion. Interestingly, those who were moderately to very familiar gave more balanced responses, suggesting that deeper familiarity with GenAI tempers both overly positive and overly negative assumptions.

These results reinforce a key insight: familiarity and experience appear to be associated with more balanced views on GenAI’s educational role. Professional development programs should aim to elevate both GenAI literacy and pedagogical reflection, especially among early-career and unfamiliar lecturers. This dual focus can help institutions foster evidence-based adoption without overlooking risks to academic motivation and integrity.

5.2.4. Influence of Teaching Experience and Familiarity with GenAI on Perceptions of GenAI’s Demotivational Impact

The analysis revealed a statistically significant effect of teaching experience on lecturers’ perceptions, F (2, 306) = 10.07, p < 0.001. This result indicates that at least one teaching experience group differed significantly in its views regarding GenAI’s demotivational influence. To identify the specific group differences, a Tukey HSD post-hoc test was performed. The results showed that lecturers with 6–10 years of experience reported significantly stronger agreement with the demotivation statement compared to both those with 0–5 years (mean difference = 0.66, p = 0.004) and those with more than 10 years of experience (mean difference = 0.73, p < 0.001). No significant difference was found between the 0–5 years and more than 10 years groups (p = 0.90).

These findings suggest that mid-career lecturers (6–10 years) are the most concerned about GenAI’s potential to erode student motivation in assessments. This group may possess sufficient pedagogical experience to detect subtle shifts in student engagement while still being actively involved in assessment design. In contrast, early-career lecturers may lack enough exposure to judge how students are adapting to GenAI, and senior faculty may rely on longer-term trends or hold more nuanced views shaped by accumulated instructional resilience. These results suggest that teaching experience merits inclusion as a contextual (and potentially moderating) variable in conceptual models of GenAI integration in higher education.

Finally, a one-way ANOVA with GenAI familiarity as the grouping factor revealed no statistically significant differences in lecturers’ belief that GenAI may demotivate deep student effort, F(4, 304) = 1.87, p = 0.116. This suggests that familiarity alone does not substantially shape perceptions of GenAI’s motivational impact.
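The one-way ANOVA F statistic is the ratio of the between-group to the within-group mean square. With N = 309 lecturers split into k groups, the degrees of freedom are (k − 1, N − k): (2, 306) for the three experience bands and (4, 304) for the five familiarity levels, matching the values reported above. A minimal sketch on hypothetical ratings (not the survey data); the Tukey HSD follow-up is not reproduced.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on the given samples."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical Likert ratings for three experience bands.
f, df_b, df_w = one_way_anova_f([3, 4, 3, 4], [4, 5, 4, 5], [3, 3, 4, 3])
print(f"F({df_b}, {df_w}) = {f:.2f}")
```

For the real data, the same computation over 309 ratings yields the F(2, 306) and F(4, 304) statistics reported in this section.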

5.2.5. Perceived Threat of AI to Traditional Assessment Credibility

The analysis of lecturers’ responses to the item “AI threatens traditional assessment credibility” (T-R1) reveals strong concern: (a) a majority of lecturers agreed (56%) or strongly agreed (24%), indicating widespread acknowledgment that AI poses a serious challenge to the integrity of traditional assessment methods; (b) only 2.6% disagreed, while 17.5% remained neutral, suggesting that scepticism is minimal and that uncertainty is confined to a minority. This response pattern underscores the urgency of rethinking assessment practices in higher education in light of GenAI tools. It also supports the inclusion of trust-related constructs in conceptual models exploring GenAI adoption in academic contexts.

A one-way ANOVA was conducted to determine whether this perception differed significantly by lecturers’ familiarity with GenAI tools such as ChatGPT. The results showed a statistically significant effect, F(4, 304) = 9.13, p < 0.001, indicating that lecturers’ concern about AI’s impact on assessment credibility varies with their familiarity with such technologies. To explore these differences further, a Tukey HSD post hoc test was performed. The analysis revealed a significant difference between lecturers who were not familiar (Group 1) and those who were moderately familiar with GenAI tools (Group 3), with the latter reporting significantly higher concern (mean difference = 0.75, p < 0.001). No other pairwise comparisons reached statistical significance. These results suggest that moderate familiarity may represent a critical threshold of awareness, where educators begin to understand the implications of GenAI use in academic contexts but may not yet have fully adapted their assessment strategies. In contrast, those with little exposure may underestimate the risks, while highly familiar lecturers may have developed mitigation strategies or more nuanced views. This highlights the importance of professional development initiatives that foster informed, critical engagement with AI technologies in education. Finally, perceptions did not differ significantly across teaching-experience groups, F(2, 306) = 2.86, p = 0.059, suggesting broadly shared concern across experience levels.

5.2.6. Lecturer and Institutional Actions to Mitigate AI Misuse in Assessments

This section evaluates how lecturers and institutions are responding to the risks of GenAI misuse in academic settings by examining two key questions:

“I have adapted my assessments to reduce AI misuse” (lecturer action);

“My institution has provided guidance on handling GenAI” (institutional support).

A large proportion of lecturers reported adapting their assessments: 47% agreed and 10% strongly agreed, suggesting widespread adaptation, whereas only 7.8% disagreed and 35% were neutral, indicating some uncertainty or institutional inaction. Responses regarding institutional support were more evenly spread: only 25% agreed or strongly agreed that their institution had provided guidance, nearly 46% were neutral or disagreed, and 7% strongly agreed, revealing a perceived gap in institutional policy and support.

One-Way ANOVA: Teaching Experience

For lecturer action, there were no significant differences across teaching-experience groups, F(2, 306) = 1.01, p = 0.366, suggesting that lecturers at all career stages adapt their assessments in response to AI at broadly similar rates.

For institutional support, there was a significant difference, F(2, 306) = 7.15, p < 0.001, implying that perceptions of institutional support differ by teaching experience. Post hoc comparisons showed that lecturers with more than 10 years of experience reported significantly higher agreement that institutional guidance was available than those with 0–5 years (mean diff = 0.47, p = 0.0008) and those with 6–10 years (mean diff = 0.83, p < 0.001). This suggests that more experienced faculty may be more engaged with, or aware of, institutional policy development concerning GenAI, possibly reflecting longer exposure to institutional policy processes, greater involvement in strategic decisions, or access to strategic-level communications not typically shared with junior staff.
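When the numerator has two degrees of freedom, as in every teaching-experience ANOVA here, the F distribution has an exact closed-form upper tail, P(F > f) = (1 + 2f/d₂)^(−d₂/2), so the reported p-values can be checked without a statistics library. A minimal sketch; it does not apply to the four-degree-of-freedom familiarity tests.

```python
def f_test_p_df1_2(f: float, df2: int) -> float:
    """Upper-tail p-value of an F(2, df2) statistic (exact closed form for df1 = 2)."""
    return (1 + 2 * f / df2) ** (-df2 / 2)

reported = {  # F(2, 306) statistics from the teaching-experience ANOVAs
    "demotivation (5.2.4)": 10.07,
    "assessment credibility (5.2.5)": 2.86,
    "lecturer action": 1.01,
    "institutional support": 7.15,
}
for name, f in reported.items():
    print(f"{name}: F(2, 306) = {f:.2f}, p = {f_test_p_df1_2(f, 306):.4f}")
```

The recomputed values (about 0.0001, 0.059, 0.365, and 0.0009) agree with the reported significance decisions; the small gap for lecturer action (0.365 vs. 0.366) reflects rounding of F to two decimals.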

One-Way ANOVA: Familiarity with GenAI

For lecturer action, significant differences emerged by familiarity level, F(4, 304) = 9.07, p < 0.001. The Tukey HSD test showed statistically significant differences between lecturers with low familiarity and those with higher familiarity levels:

Group 1 vs. Group 3 (moderately familiar): mean diff = −1.52, p < 0.001;

Group 1 vs. Group 4 (familiar): mean diff = −0.83, p < 0.001;

Group 1 vs. Group 5 (very familiar): mean diff = −1.10, p < 0.001;

Group 2 (slightly familiar) also significantly differed from Group 3 (moderately familiar): mean diff = −1.98, p = 0.0001.

These results indicate that lecturers who are more familiar with GenAI tools are significantly more likely to adapt their assessment methods to prevent misuse. In contrast, those with little or no familiarity demonstrate lower levels of action, potentially due to a lack of awareness or perceived urgency. Similarly, institutional support differed significantly across familiarity levels, F(4, 304) = 12.70, p < 0.001. Lecturers more engaged with GenAI may perceive, or demand, greater institutional guidance, highlighting the importance of professional development.

The findings illustrate a strong individual effort among lecturers to address GenAI misuse, but institutional responses appear less consistent and are perceived differently depending on teaching experience and GenAI familiarity. These results highlight the need for targeted institutional policies and professional training programs to support responsible AI integration across all academic levels.

5.3. Students vs. Lecturers on Shared TAM/SDT Constructs (Nonparametric Analysis)

For constructs administered identically to both groups, we report medians with interquartile ranges (IQRs), the median difference with bootstrap 95% CIs, Mann–Whitney U-tests with Holm-adjusted p-values, and rank-biserial effect sizes with 95% CIs.
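The Mann–Whitney U statistic and the rank-biserial correlation can both be computed from the pooled midranks of the two samples. A minimal standard-library sketch with hypothetical scores (not the survey data); it omits the p-value and the bootstrap intervals. Sign conventions for the rank-biserial r differ across software; in this sketch r is positive when the first sample tends to rank higher.

```python
def midranks(values):
    """Ranks starting at 1, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i..j
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks

def mann_whitney_u(a, b):
    """U statistic for sample a and the rank-biserial correlation 2U/(n_a*n_b) - 1."""
    ranks = midranks(list(a) + list(b))
    rank_sum_a = sum(ranks[: len(a)])
    u_a = rank_sum_a - len(a) * (len(a) + 1) / 2
    r_rb = 2 * u_a / (len(a) * len(b)) - 1
    return u_a, r_rb

# Hypothetical construct scores (means of 5-point items) for two small groups.
students = [4.33, 5.00, 3.67, 4.67, 4.00]
lecturers = [4.00, 3.67, 4.33, 3.67]
print(mann_whitney_u(students, lecturers))
```

Here U = 15 out of a possible 20 gives r = 0.5, meaning a randomly chosen score from the first group exceeds one from the second more often than the reverse.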

PU. Students (n = 578) median = 4.33 (IQR 3.67–5.00) vs. lecturers (n = 309) median = 4.00 (IQR 3.67–4.33). Median difference = 0.33 (bootstrap 95% CI 0.00 to 0.33), indicating that students score higher on this construct. Mann–Whitney U = 96,247, p < 0.001 (Holm-adjusted p < 0.001). Rank-biserial r = −0.25 (95% CI −0.32 to −0.17).

EOU. Students (n = 578) median = 4.00 (IQR 3.50–4.50) vs. lecturers (n = 309) median = 4.00 (IQR 3.67–4.00). Median difference = 0.00 (bootstrap 95% CI 0.00 to 0.00), indicating identical medians; nevertheless, the rank distributions differed significantly, with a small effect. Mann–Whitney U = 86,996.5, p = 0.002 (Holm-adjusted p = 0.004). Rank-biserial r = −0.13 (95% CI −0.20 to −0.05).

Intention. Students (n = 578) median = 4.33 (IQR 3.67–5.00) vs. lecturers (n = 309) median = 4.00 (IQR 3.67–4.67). Median difference = 0.33 (bootstrap 95% CI 0.00 to 0.33), indicating that students score higher on this construct. Mann–Whitney U = 86,401.5, p = 0.004 (Holm-adjusted p = 0.004). Rank-biserial r = −0.12 (95% CI −0.20 to −0.04).
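The Holm step-down procedure multiplies the i-th smallest of m p-values by (m − i + 1) and then enforces monotonicity. Applied to the three raw p-values above, it reproduces the reported adjusted values (0.004 for both EOU and Intention). A minimal sketch; the PU input of 1e-5 is a hypothetical placeholder, because only “p = 0.000” is reported for that construct.

```python
def holm_adjust(pvalues):
    """Holm-Bonferroni step-down adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):  # rank 0 is the smallest p-value
        candidate = min(1.0, (m - rank) * pvalues[i])
        running_max = max(running_max, candidate)  # keep adjusted values monotone
        adjusted[i] = running_max
    return adjusted

# Raw p-values for PU, EOU, and Intention (PU value is a placeholder).
raw = [1e-5, 0.002, 0.004]
print([round(p, 4) for p in holm_adjust(raw)])
```

EOU’s raw p of 0.002 is doubled to 0.004, and Intention’s 0.004 is multiplied by one, so both end at 0.004 as reported.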