Integrating Graph Retrieval-Augmented Generation into Prescriptive Recommender Systems

Abstract

1. Introduction

1.1. Contributions

- A comprehensive review is provided on how to improve prescriptive analytics with LLMs, KGs, and GraphRAG. The review highlights where these methods can best support different steps of the decision-making process.

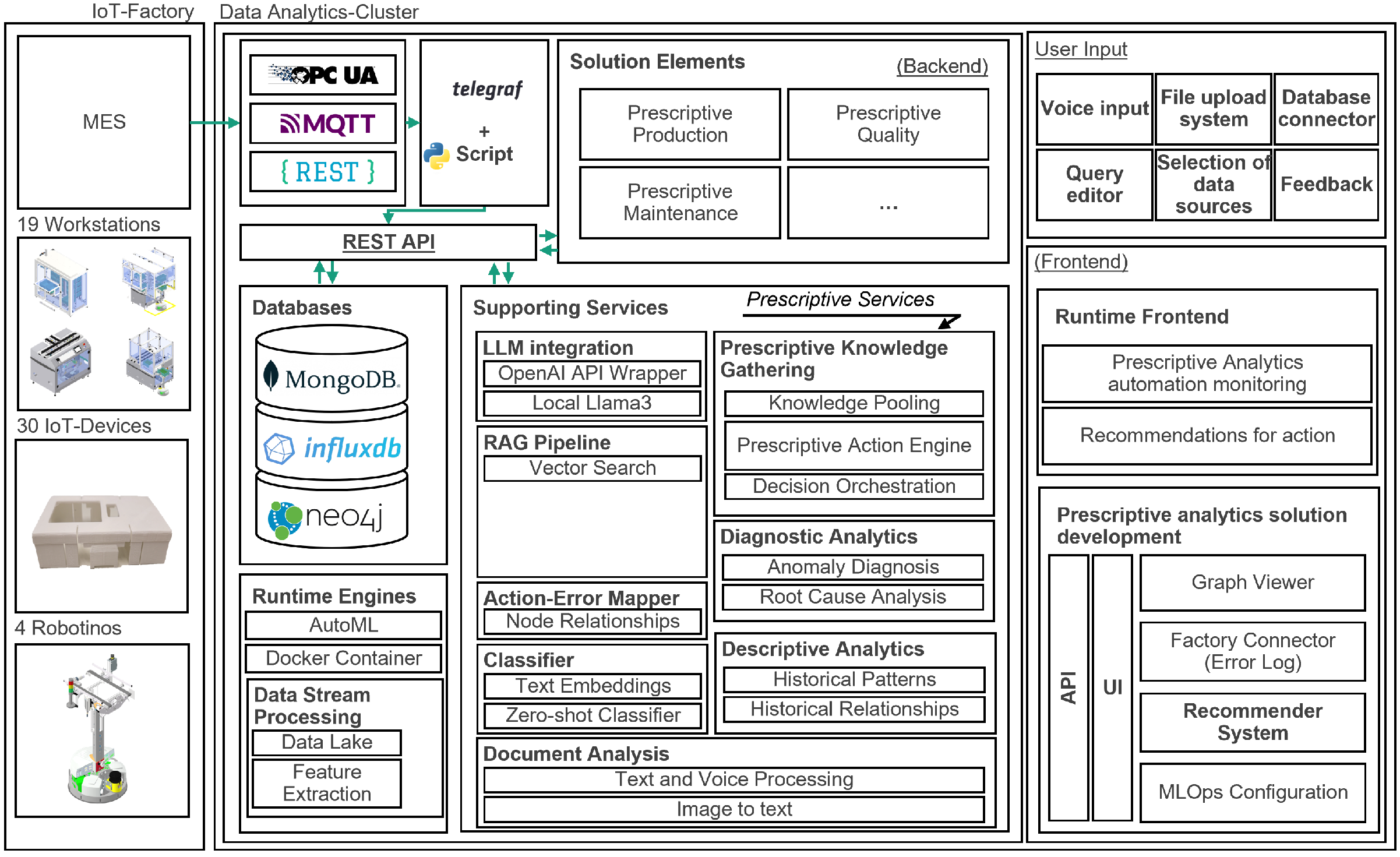

- A prescriptive analytics platform is proposed and validated, combining classical data analytics components with GraphRAG. The platform has been integrated into and evaluated within the IoT-Factory research environment.

- Future directions are discussed, focusing on how the integration of various LLMs into prescriptive analytics workflows can enhance decision support systems. Remaining risks and limitations are outlined, from which future research challenges are derived.

1.2. Research Questions

- RQ1. How can LLMs and graph-based approaches be effectively integrated into document and time series procedures to enhance prescriptive analytics, and what are the limitations of current methodologies?

- RQ2. In the context of the proposed prescriptive analytics platform, which traditional components can be replaced or enhanced by LLMs to improve performance in real-world applications?

- RQ3. What are the practical challenges and limitations of integrating LLMs together with graph-based approaches into the prescriptive analytics platform for document analysis in industrial environments?

2. Background and Literature Review

2.1. Linking and Distinguishing Prescriptive Analytics and Recommender Systems

2.2. Retrieval-Augmented Generation (RAG)

- Naive RAG is the simplest version, where the system retrieves relevant information and uses it to generate an answer. However, this version has several limitations, such as retrieving irrelevant or incomplete information, which can still lead to hallucinations.

- Advanced RAG reorganizes retrieved information and prioritizes key details. The retrieved information may be compressed or simplified to focus on relevant aspects, and the query can be rewritten to improve clarity and add context.

- Modular RAG is the most advanced and flexible version. Each component is separated into its own module, so it can be replaced, improved, or customized for specific tasks. It includes a search module to extract relevant information from multiple sources and a memory module to reuse past queries or results. This version can be specialized for specific document types, such as medical records or reports. Additionally, modular RAG supports step-by-step retrieval: based on a question, the system retrieves relevant information, generates a (partial) answer, identifies knowledge gaps, and performs subsequent targeted retrievals to refine the information.
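The step-by-step retrieval described for modular RAG can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the keyword-overlap `search` function stands in for a real retriever, the `required` term list stands in for LLM-based gap detection, and the answer generation step is omitted entirely. It is not the implementation used in the platform.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Memory module: caches the results of past queries for reuse."""
    cache: dict[str, list[str]] = field(default_factory=dict)


def search(query: str, corpus: dict[str, str]) -> list[str]:
    """Search module (toy): passages sharing at least one word with the query."""
    terms = set(query.lower().split())
    return [text for text in corpus.values() if terms & set(text.lower().split())]


def retrieve(query: str, corpus: dict[str, str], memory: Memory) -> list[str]:
    """Reuse a cached result if the query was seen before, otherwise search."""
    if query not in memory.cache:
        memory.cache[query] = search(query, corpus)
    return memory.cache[query]


def find_gaps(passages: list[str], required: list[str]) -> list[str]:
    """Gap detection (toy): required terms not covered by any retrieved passage."""
    joined = " ".join(passages).lower()
    return [term for term in required if term.lower() not in joined]


def iterative_retrieval(
    question: str,
    required: list[str],
    corpus: dict[str, str],
    memory: Memory,
    max_rounds: int = 3,
) -> list[str]:
    """Retrieve, check for knowledge gaps, and run targeted follow-up retrievals."""
    passages = list(retrieve(question, corpus, memory))  # copy, keep cache intact
    for _ in range(max_rounds):
        gaps = find_gaps(passages, required)
        if not gaps:
            break
        for gap in gaps:
            for passage in retrieve(gap, corpus, memory):
                if passage not in passages:
                    passages.append(passage)
    return passages


# Hypothetical two-passage corpus, for illustration only.
corpus = {
    "a": "gripper cannot be closed",
    "b": "check sensors bg1 and bg3",
}
memory = Memory()
result = iterative_retrieval("gripper error", ["gripper", "sensors"], corpus, memory)
```

In this toy run, the first retrieval only finds the gripper passage; the missing term "sensors" triggers a second, targeted retrieval that adds the sensor passage. Because each piece is a separate function, the search or gap-detection step can be swapped out without touching the loop, which is the property the modular variant is meant to provide.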

2.3. Knowledge Graphs

2.4. GraphRAG

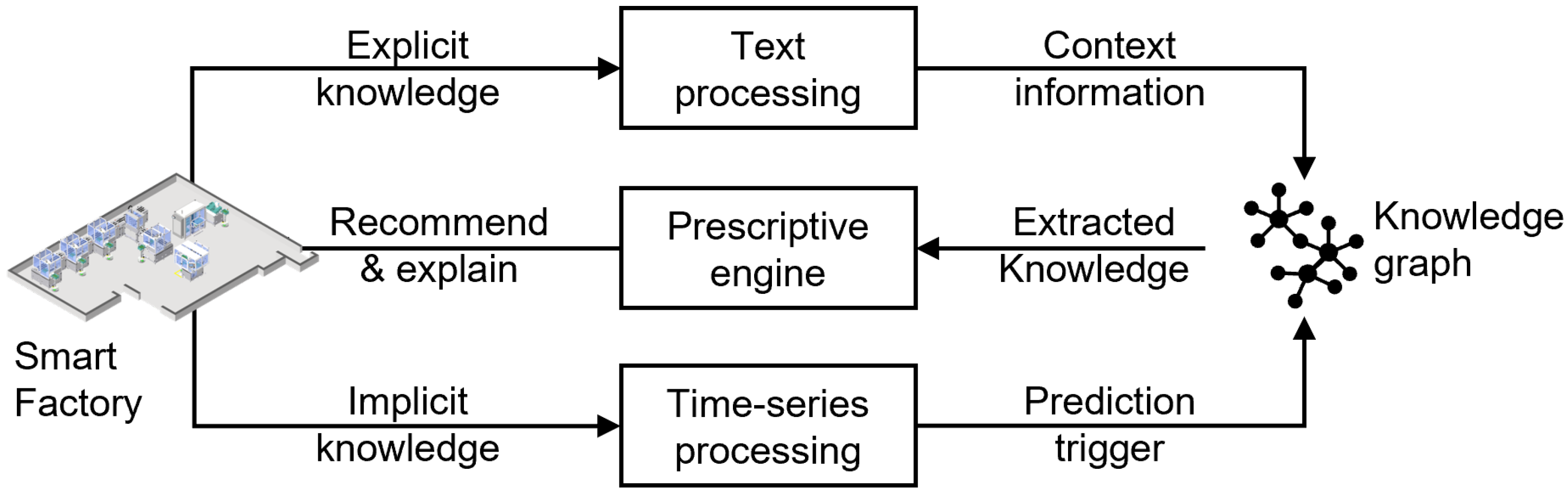

3. Prescriptive Analytics Platform

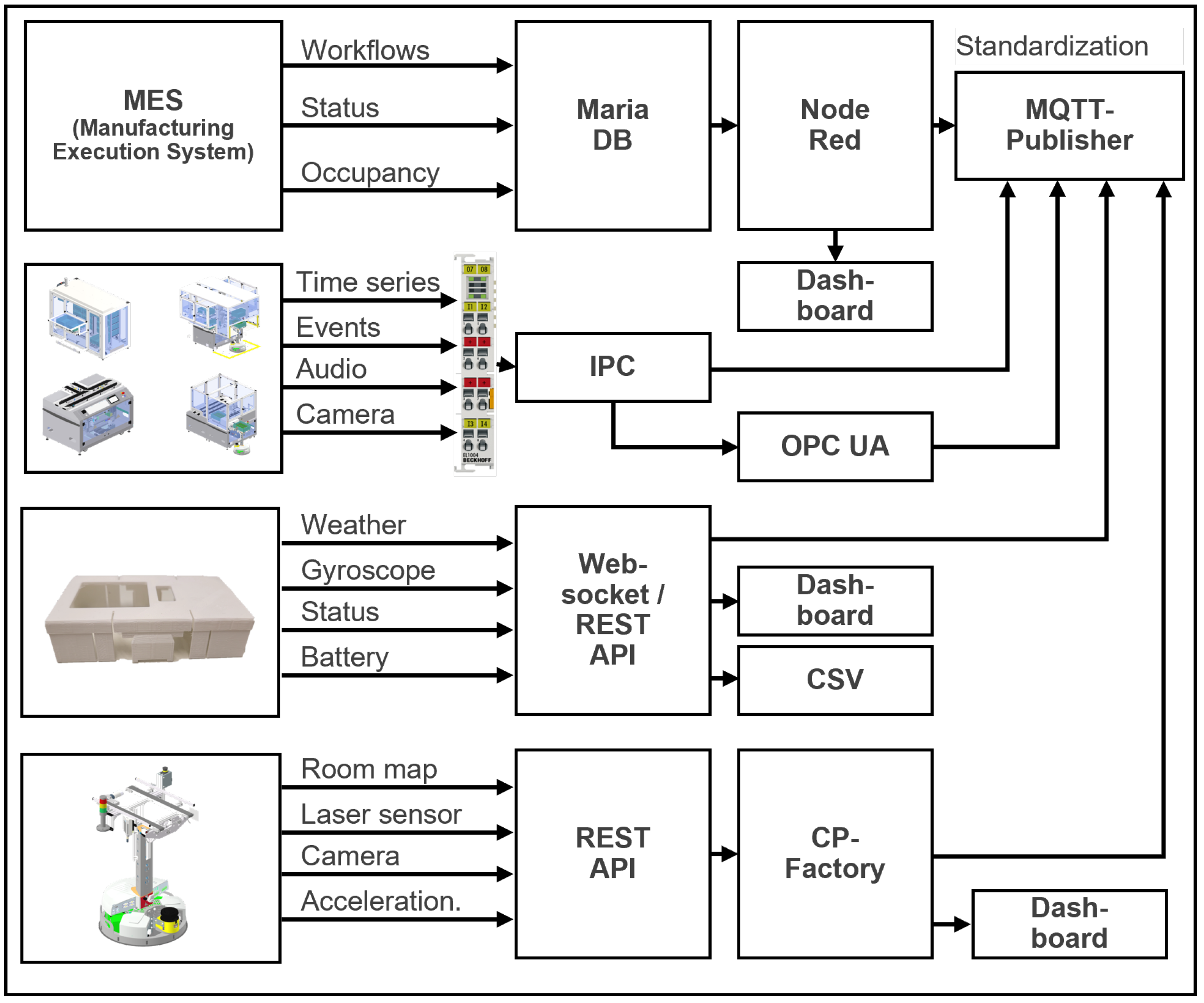

3.1. IoT-Factory

3.2. Natural Language Processing Pipeline

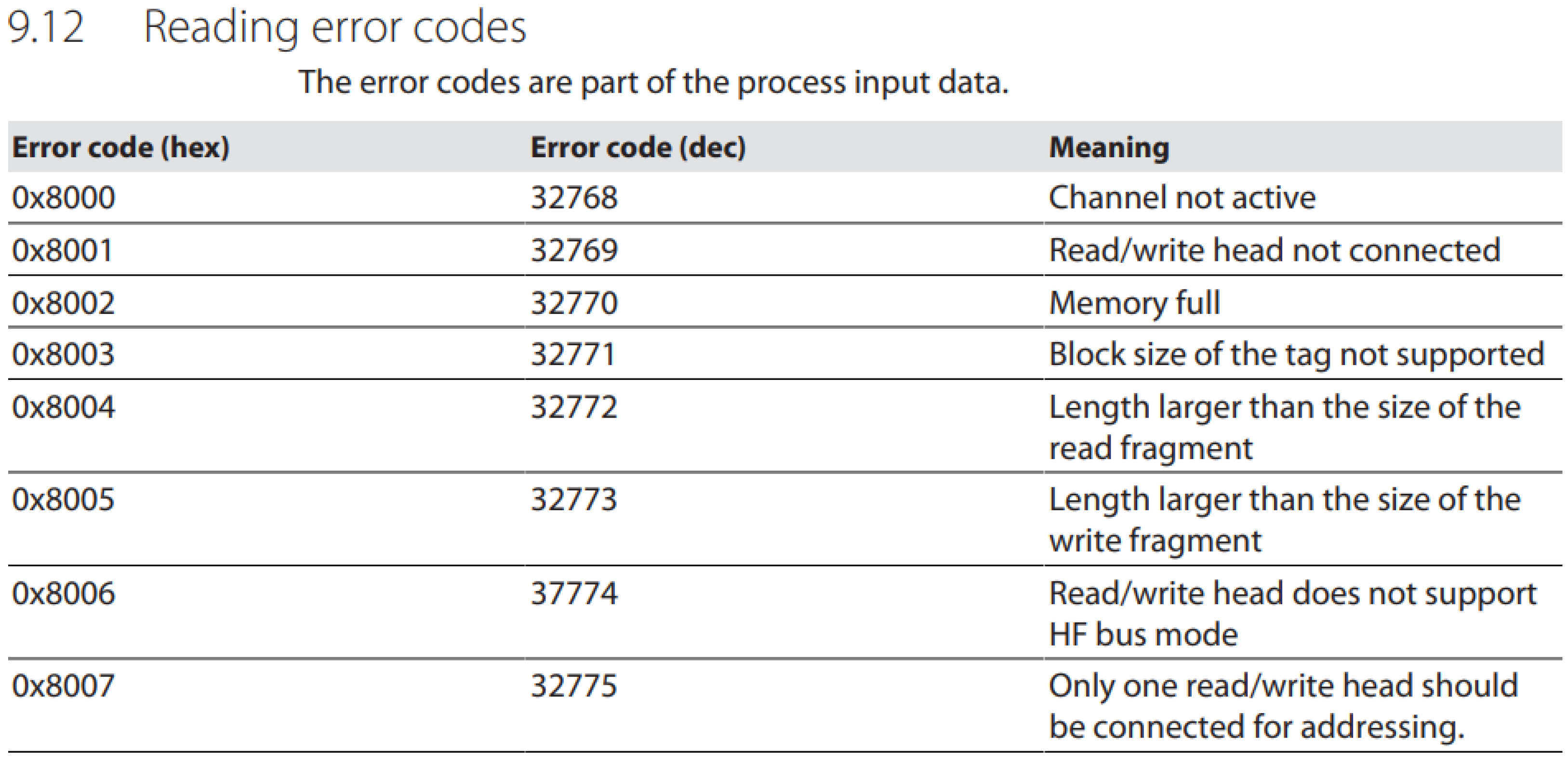

Document Analysis

3.3. Our Ontology

3.3.1. Text Embeddings

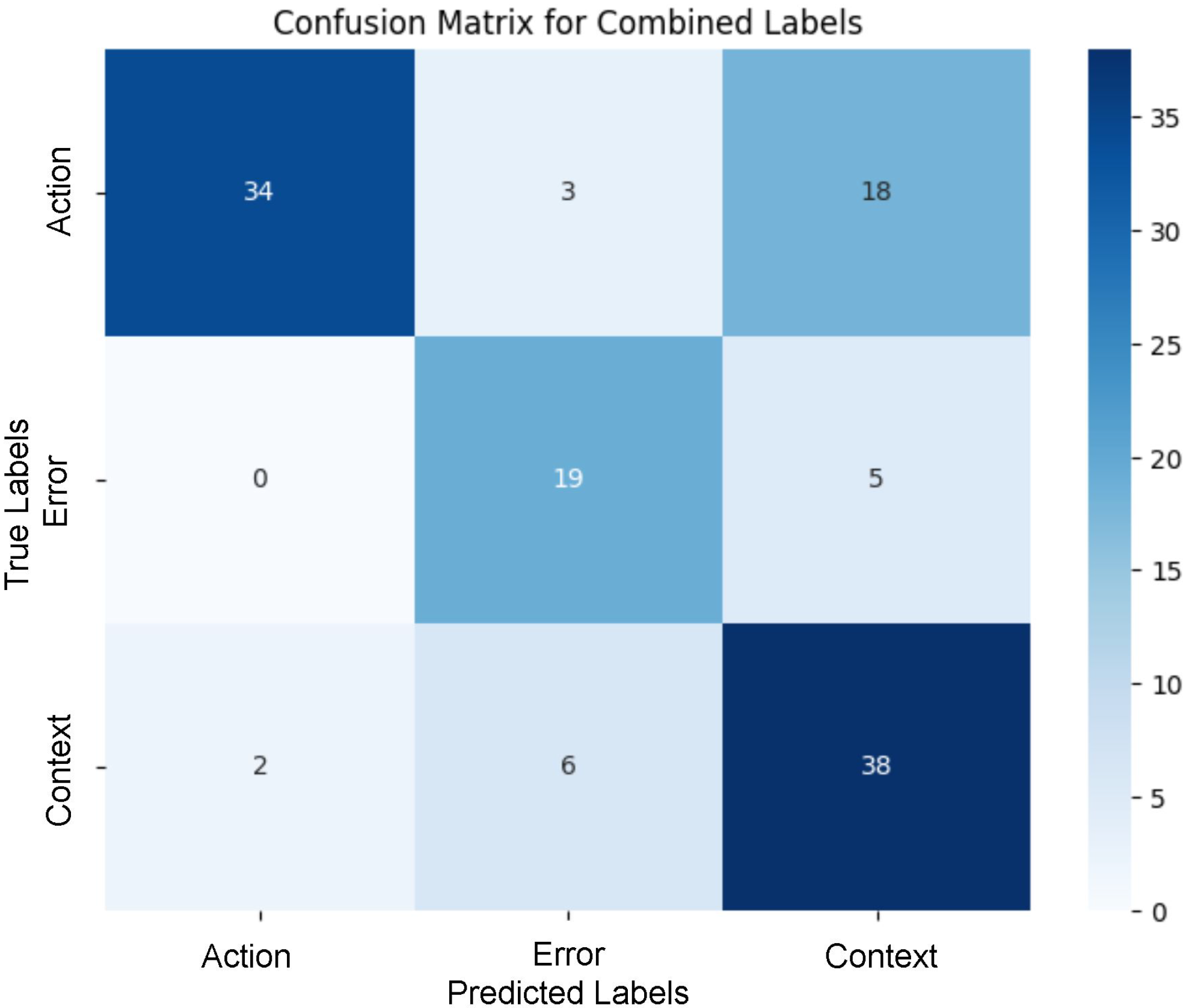

3.3.2. Text Classifier and Relationships

3.3.3. Best Action Retriever

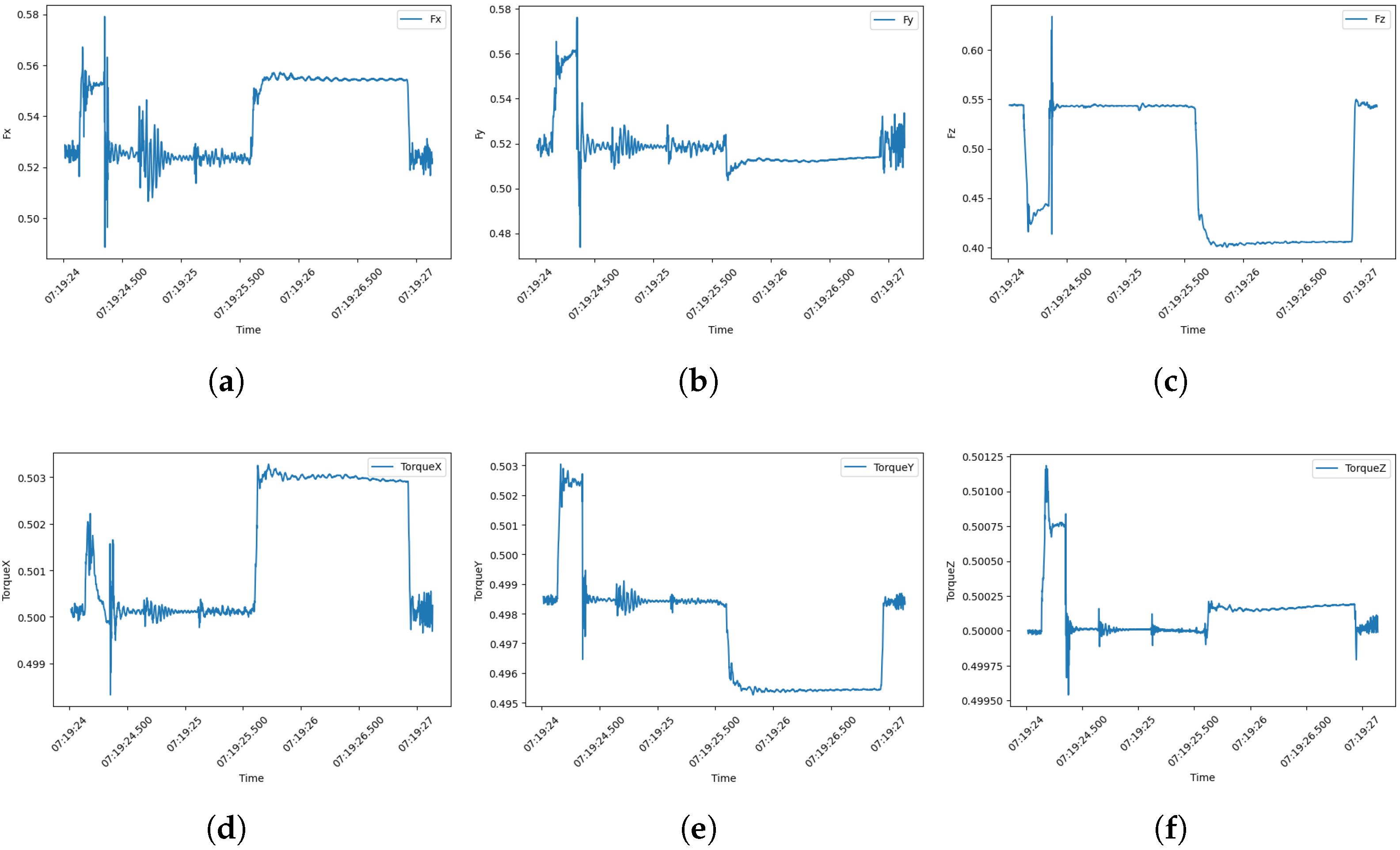

3.4. Time Series Processing Pipeline

3.5. Prescriptive Action Engine

4. Detailed View of the Prescriptive Analytics Platform

5. User Validation and Discussions

5.1. User Validation in Customer Clinics

5.2. Discussion

5.3. Future Research Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Supplementary Information on the Prescriptive Platform SLR

Appendix B. Models, Hyperparameters, and Training Setup

| Zero-Shot Prompt |

|---|

| You are a classifier for the technical documentation of a smart factory. Your task is to categorize each short text chunk into one of three categories: 1. Action: Describes a step, procedure, instruction, or measure to be performed. 2. Error: Describes a problem, fault, failure, unexpected behavior, or issue. 3. Context Information: Provides background, explanations, rationale, environment, or general information that is not an action or error. Classify the following text into **exactly one category**. Be concise and precise. Text: "{text}" Output: {"category": "Action | Error | Context Information"} |
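As a rough illustration of how such a zero-shot prompt might be used, the sketch below fills the `{text}` placeholder and validates a model reply against the three categories. The helper names `build_prompt` and `parse_category` are hypothetical, the call to the language model itself is left out, and returning `None` for an invalid reply is an assumption rather than documented platform behavior.

```python
import json
from typing import Optional

CATEGORIES = ("Action", "Error", "Context Information")

# Prompt text taken from the table above; literal braces are doubled so that
# str.format only substitutes the {text} placeholder.
PROMPT_TEMPLATE = (
    "You are a classifier for the technical documentation of a smart factory. "
    "Your task is to categorize each short text chunk into one of three categories: "
    "1. Action: Describes a step, procedure, instruction, or measure to be performed. "
    "2. Error: Describes a problem, fault, failure, unexpected behavior, or issue. "
    "3. Context Information: Provides background, explanations, rationale, "
    "environment, or general information that is not an action or error. "
    "Classify the following text into **exactly one category**. "
    "Be concise and precise. "
    'Text: "{text}" '
    'Output: {{"category": "Action | Error | Context Information"}}'
)


def build_prompt(text: str) -> str:
    """Insert one text chunk into the zero-shot classification prompt."""
    return PROMPT_TEMPLATE.format(text=text)


def parse_category(raw_reply: str) -> Optional[str]:
    """Return the category from a JSON reply, or None if the reply is invalid."""
    try:
        category = json.loads(raw_reply)["category"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return None
    return category if category in CATEGORIES else None


prompt = build_prompt("Gripper cannot be closed, check sensors BG1/BG3.")
label = parse_category('{"category": "Error"}')  # example reply, not real model output
```

Validating the reply against a fixed category list keeps malformed or off-list answers from silently entering the downstream graph construction.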

Appendix C. Question and Answer Example

| Instruction |

|---|

| You are an expert in troubleshooting in an IoT-Factory. Your answer must contain a description of the error and a description of the action to be taken. Only use the information provided to answer. |

| Actual Question (User prompt) |

| The error StaubliRobot._Error has occurred at the RASS3 assembly station. |

| Response |

| Error description: |

| StaubliLimitSwitch: End stop of an axis reached. StaubliOvrTempCastings: Overtemperature on the housing. StaubliOvrTempDsiBoard: Overtemperature on the DSI board. StaubliOvrTempGlobalPtc: Overtemperature at one of the PTC sensors in the robot arm. StaubliOvrTempMotor135: Overtemperature at the motors of axes 1, 3 and 5. StaubliOvrTempMotor246: Overtemperature at the motors of axes 2, 4 and 6. StaubliSpeedCtrlFault: Error on the speed controller. StaubliProgramAbort: Program aborted. GripperCloseError: Gripper cannot be closed, check sensors BG1/BG3. RassGripperOpenError: Gripper cannot be opened, check sensors BG1/BG3. |

| Action description: |

| StaubliLimitSwitch: Action: Release axis individually (joint movement). StaubliOvrTempCastings: Action: check and rectify overtemperature on the housing according to the Stäubli robot manual. StaubliOvrTempDsiBoard: Action: Check and rectify overtemperature on the DSI board according to the Stäubli robot manual. StaubliOvrTempGlobalPtc: Action: Check and rectify overtemperature on one of the PTC sensors in the robot arm according to the Stäubli robot manual. StaubliOvrTempMotor135: Action: Check and rectify overtemperature on the motors of axes 1, 3 and 5 according to the Stäubli robot manual. StaubliOvrTempMotor246: Action: Check and rectify overtemperature on the motors of axes 2, 4 and 6 according to the Stäubli robot manual. StaubliSpeedCtrlFault: Action: check and rectify fault on speed controller according to Stäubli robot manual. StaubliProgramAbort: Action: manually move robot to home position to restart. GripperCloseError: Action: Check function of the gripper, adjust sensors BG1/BG3. RassGripperOpenError: Action: Check function of the gripper, adjust sensors BG1/BG3. Meta information: Most similar error case: CP-F-RASS-Stäubli-D-A003.pdf Page:151 |
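To make the shape of this exchange more concrete, the following sketch shows one way graph-retrieved error and action descriptions could be assembled into the instruction and user prompt shown above. The `TinyGraph` class, the relation names `HAS_ERROR` and `RESOLVED_BY`, the action node identifiers, and the chat-style message layout are all assumptions made for illustration; the error names and texts are taken from the example response.

```python
INSTRUCTION = (
    "You are an expert in troubleshooting in an IoT-Factory. "
    "Your answer must contain a description of the error and a description "
    "of the action to be taken. Only use the information provided to answer."
)


class TinyGraph:
    """Minimal in-memory graph; a stand-in for a real knowledge graph store."""

    def __init__(self) -> None:
        self.text: dict[str, str] = {}
        self.edges: dict[tuple[str, str], list[str]] = {}

    def add_node(self, node_id: str, text: str = "") -> None:
        self.text[node_id] = text

    def add_edge(self, source: str, relation: str, target: str) -> None:
        self.edges.setdefault((source, relation), []).append(target)

    def neighbors(self, source: str, relation: str) -> list[str]:
        return list(self.edges.get((source, relation), []))


def build_context(graph: TinyGraph, station: str) -> str:
    """Collect every error linked to a station together with its actions."""
    lines = []
    for error in graph.neighbors(station, "HAS_ERROR"):
        actions = [graph.text[a] for a in graph.neighbors(error, "RESOLVED_BY")]
        lines.append(f"{error}: {graph.text[error]} Action: {' '.join(actions)}")
    return "\n".join(lines)


def build_messages(graph: TinyGraph, station: str, question: str) -> list[dict]:
    """Combine instruction, retrieved context, and the user question."""
    context = build_context(graph, station)
    return [
        {"role": "system", "content": f"{INSTRUCTION}\n\nInformation:\n{context}"},
        {"role": "user", "content": question},
    ]


# Two errors from the example response; node ids for actions are hypothetical.
graph = TinyGraph()
graph.add_node("RASS3")
graph.add_node("StaubliLimitSwitch", "End stop of an axis reached.")
graph.add_node("act_release_axis", "Release axis individually (joint movement).")
graph.add_node("StaubliProgramAbort", "Program aborted.")
graph.add_node("act_home", "Manually move robot to home position to restart.")
graph.add_edge("RASS3", "HAS_ERROR", "StaubliLimitSwitch")
graph.add_edge("RASS3", "HAS_ERROR", "StaubliProgramAbort")
graph.add_edge("StaubliLimitSwitch", "RESOLVED_BY", "act_release_axis")
graph.add_edge("StaubliProgramAbort", "RESOLVED_BY", "act_home")

messages = build_messages(
    graph,
    "RASS3",
    "The error StaubliRobot._Error has occurred at the RASS3 assembly station.",
)
```

Because the context is built only from nodes reachable from the station, the model is given exactly the error and action pairs it is allowed to use, which matches the instruction to answer only from the information provided.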

Appendix D. Table A3

| | Input Data | | | | | | | | Protocols | | | Database | | | Data | | | Hardware | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Contribution | Sales Data | Health Data | MES | Process | Quality | Logistic | Machine | Images | MQTT | OPC-UA | REST | SQL | NoSQL | HDFS | Pre-Processed | Historic | Real-Time | Edge | Cloud |

| [50] | X | X | X | X | X | X | X | X | |||||||||||

| [51] | X | X | X | X | X | X | X | X | X | X | X | X | X | ||||||

| [52] | X | X | X | X | X | X | X | ||||||||||||

| [53] | X | X | X | ||||||||||||||||

| [54] | X | X | X | X | X | X | X | X | |||||||||||

| [55] | X | X | X | X | X | ||||||||||||||

| [56] | X | ||||||||||||||||||

| [57] | X | X | X | ||||||||||||||||

| [58] | X | X | X | X | |||||||||||||||

| [59] | X | X | |||||||||||||||||

| [60] | X | X | X | X | X | X | |||||||||||||

| [61] | X | ||||||||||||||||||

| [62] | X | X | X | X | |||||||||||||||

| [63] | X | X | X | ||||||||||||||||

| [64] | X | X | X | X | X | X | X | ||||||||||||

| [65] | X | X | X | X | |||||||||||||||

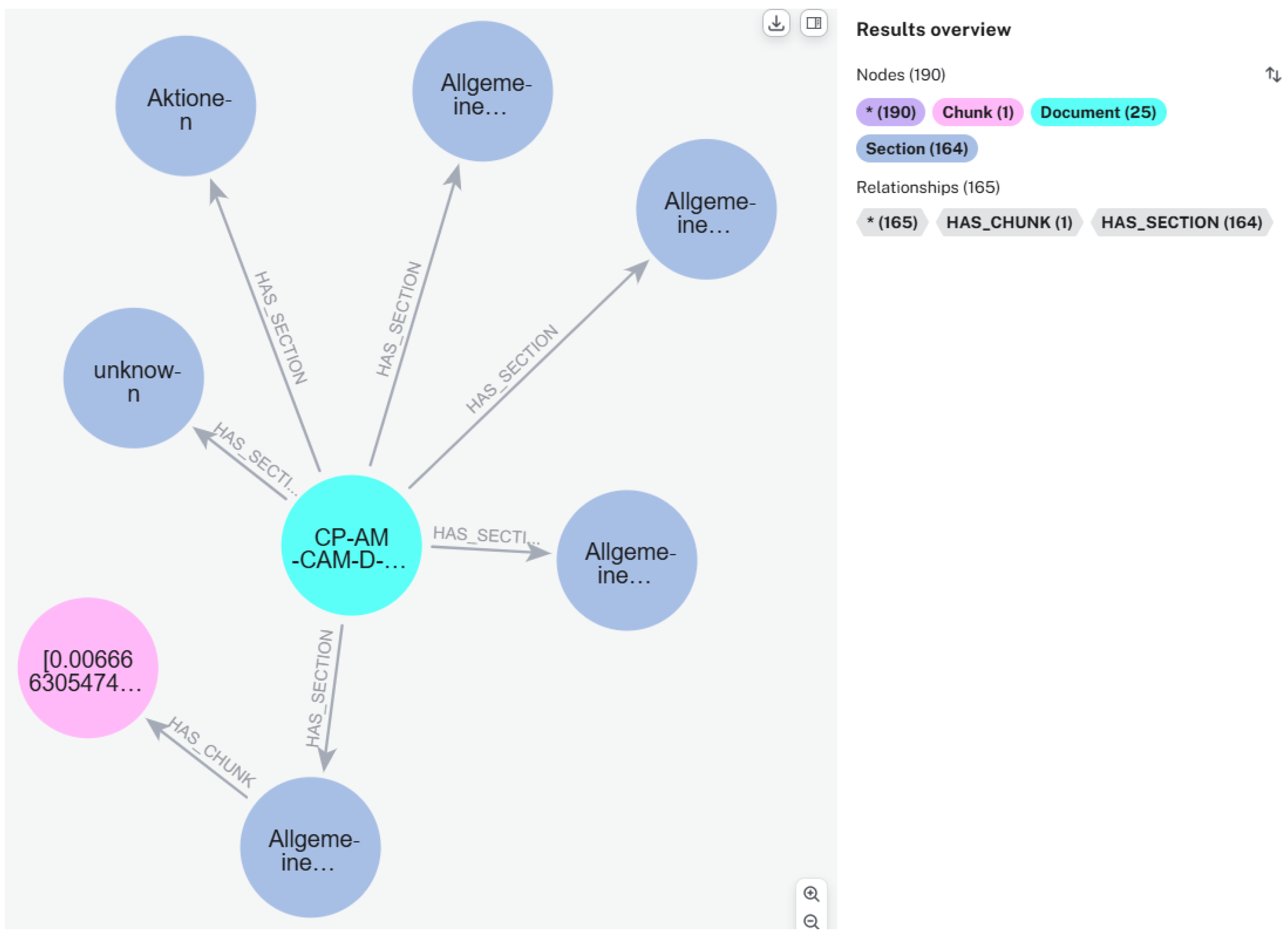

Appendix E. Knowledge Graph Viewer

References

1. Johnson, J. Chapter 12 - Human Decision-Making is Rarely Rational. In Designing with the Mind in Mind, 3rd ed.; Johnson, J., Ed.; Morgan Kaufmann: Burlington, MA, USA, 2021; pp. 203–223.

2. Richter, D. Demographic change and innovation: The ongoing challenge from the diversity of the labor force. Manag. Rev. 2014, 25, 166–184.

3. Khuzadi, M. Knowledge capture and collaboration - Current methods. Neurocomputing 2011, 26, 17–25.

4. Brynjolfsson, E.; McElheran, K. Data in Action: Data-Driven Decision Making in U.S. Manufacturing. In CES Working Paper No. CES-WP-16-06; Rotman School of Management Working Paper No. 2722502; Rotman School of Management: Toronto, ON, Canada, January 2016.

5. Balali, F.; Nouri, J.; Nasiri, A.; Zhao, T. Data Intensive Industrial Asset Management, 1st ed.; Springer eBook Collection, Springer International Publishing and Imprint Springer; Springer: Cham, Switzerland, 2020.

6. Kim, B.; Park, J.; Suh, J. Transparency and accountability in AI decision support: Explaining and visualizing convolutional neural networks for text information. Decis. Support Syst. 2020, 134, 113302.

7. Alshammari, M.; Nasraoui, O.; Sanders, S. Mining Semantic Knowledge Graphs to Add Explainability to Black Box Recommender Systems. IEEE Access 2019, 7, 110563–110579.

8. Kartikeya, A. Examining correlation between trust and transparency with explainable artificial intelligence. 10 August 2021. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer: Cham, Switzerland, 2022; pp. 310–325.

9. Ehsan, U.; Wintersberger, P.; Liao, Q.V.; Mara, M.; Streit, M.; Wachter, S.; Riener, A.; Riedl, M.O. Operationalizing Human-Centered Perspectives in Explainable AI. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems; Kitamura, Y., Quigley, A., Isbister, K., Igarashi, T., Eds.; ACM: New York, NY, USA, 2021; pp. 1–6.

10. Eigner, E.; Händler, T. Determinants of LLM-assisted Decision-Making. arXiv 2024, arXiv:2402.17385.

11. Gaur, M.; Faldu, K.; Sheth, A. Semantics of the Black-Box: Can Knowledge Graphs Help Make Deep Learning Systems More Interpretable and Explainable? IEEE Internet Comput. 2021, 25, 51–59.

12. Feng, C.; Zhang, X.; Fei, Z. Knowledge Solver: Teaching LLMs to Search for Domain Knowledge from Knowledge Graphs. arXiv 2023, arXiv:2309.03118.

13. Liu, Y.; He, H.; Han, T.; Zhang, X.; Liu, M.; Tian, J.; Zhang, Y.; Wang, J.; Gao, X.; Zhong, T.; et al. Understanding LLMs: A Comprehensive Overview from Training to Inference. Neurocomputing 2024, 620, 129190.

14. Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, M.; Wang, H. Retrieval-Augmented Generation for Large Language Models: A Survey. arXiv 2023, arXiv:2312.10997.

15. Peng, B.; Zhu, Y.; Liu, Y.; Bo, X.; Shi, H.; Hong, C.; Zhang, Y.; Tang, S. Graph Retrieval-Augmented Generation: A Survey. arXiv 2024, arXiv:2408.08921.

16. Niederhaus, M.; Migenda, N.; Weller, J.; Schenck, W.; Kohlhase, M. Technical Readiness of Prescriptive Analytics Platforms: A Survey. In Proceedings of the 2024 35th Conference of Open Innovations Association (FRUCT), Tampere, Finland, 24–26 April 2024; pp. 509–519.

17. Shah, K.; Salunke, A.; Dongare, S.; Antala, K. Recommender systems: An overview of different approaches to recommendations. In Proceedings of the 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; pp. 1–4.

18. Leoni, M.D.; Dees, M.; Reulink, L. Design and Evaluation of a Process-aware Recommender System based on Prescriptive Analytics. In Proceedings of the 2020 2nd International Conference on Process Mining (ICPM), Padua, Italy, 5–8 October 2020; pp. 9–16.

19. Fan, W.; Ding, Y.; Ning, L.; Wang, S.; Li, H.; Yin, D.; Chua, T.S.; Li, Q. A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models. arXiv 2024.

20. Edwards, C. Hybrid Context Retrieval Augmented Generation Pipeline: LLM-Augmented Knowledge Graphs and Vector Database for Accreditation Reporting Assistance. arXiv 2024, arXiv:2405.15436.

21. Weller, J.; Migenda, N.; Kühn, A.; Dumitrescu, R. Prescriptive Analytics Data Canvas: Strategic Planning For Prescriptive Analytics in Smart Factories; Publish-Ing.: Hannover, Germany, 2024.

22. Hofer, M.; Obraczka, D.; Saeedi, A.; Köpcke, H.; Rahm, E. Construction of Knowledge Graphs: Current State and Challenges. Information 2024, 15, 509.

23. Tiddi, I.; Schlobach, S. Knowledge graphs as tools for explainable machine learning: A survey. Artif. Intell. 2022, 302, 103627.

24. Wang, Q.; Mao, Z.; Wang, B.; Guo, L. Knowledge Graph Embedding: A Survey of Approaches and Applications. IEEE Trans. Knowl. Data Eng. 2017, 29, 2724–2743.

25. Wan, Y.; Liu, Y.; Chen, Z.; Chen, C.; Li, X.; Hu, F.; Packianather, M. Making knowledge graphs work for smart manufacturing: Research topics, applications and prospects. J. Manuf. Syst. 2024, 76, 103–132.

26. Kommineni, V.K.; König-Ries, B.; Samuel, S. From human experts to machines: An LLM supported approach to ontology and knowledge graph construction. arXiv 2024, arXiv:2403.08345.

27. Delile, J.; Mukherjee, S.; van Pamel, A.; Zhukov, L. Graph-Based Retriever Captures the Long Tail of Biomedical Knowledge. arXiv 2024, arXiv:2402.12352.

28. Ji, Z.; Liu, Z.; Lee, N.; Yu, T.; Wilie, B.; Zeng, M.; Fung, P. RHO (ρ): Reducing Hallucination in Open-domain Dialogues with Knowledge Grounding. In Findings of the Association for Computational Linguistics: ACL 2023; ACL: Stroudsburg, PA, USA, 3 December 2022; pp. 4504–4522.

29. Shu, D.; Chen, T.; Jin, M.; Zhang, C.; Du, M.; Zhang, Y. Knowledge Graph Large Language Model (KG-LLM) for Link Prediction. In Proceedings of the 16th Asian Conference on Machine Learning (ACML), Hanoi, Vietnam, 5–8 December 2024; PMLR; Volume 260, pp. 143–158. Available online: https://proceedings.mlr.press/v260/shu25a.html (accessed on 8 October 2025).

30. Yang, S.; Gribovskaya, E.; Kassner, N.; Geva, M.; Riedel, S. Do Large Language Models Latently Perform Multi-Hop Reasoning? arXiv 2024, arXiv:2402.16837.

31. Nguyen, M.V.; Luo, L.; Shiri, F.; Phung, D.; Li, Y.F.; Vu, T.T.; Haffari, G. Direct Evaluation of Chain-of-Thought in Multi-hop Reasoning with Knowledge Graphs. In Findings of the Association for Computational Linguistics: ACL 2024; ACL: Stroudsburg, PA, USA, 17 February 2024; pp. 2862–2883.

32. Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large Language Models Are Zero-Shot Reasoners. In Proceedings of the 36th International Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022.

33. Morris, J.X.; Kuleshov, V.; Shmatikov, V.; Rush, A.M. Text Embeddings Reveal (Almost) As Much As Text. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP 2023), Singapore, 6–10 December 2023; pp. 12448–12460.

34. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the 1st International Conference on Learning Representations (ICLR 2013 Workshop Track), Scottsdale, AZ, USA, 2–4 May 2013.

35. Synergistic Union of Word2Vec and Lexicon for Domain Specific Semantic Similarity; IEEE: New York, NY, USA, 2017.

36. OpenAI. GPT-4o Mini. 2024. Available online: https://openai.com/index/introducing-gpt-4o-mini (accessed on 8 October 2025).

37. Meta AI. Meta-Llama 3 (8B and 70B Models). 18 April 2024. Available online: https://ai.meta.com/blog/meta-llama-3/ (accessed on 8 October 2025).

38. Ollama. Ollama: Run Large Language Models Locally. 2024. Available online: https://ollama.com (accessed on 8 October 2025).

39. Jin, M.; Wang, S.; Ma, L.; Chu, Z.; Zhang, J.Y.; Shi, X.; Chen, P.Y.; Liang, Y.; Li, Y.F.; Pan, S.; et al. Time-LLM: Time Series Forecasting by Reprogramming Large Language Models. In Proceedings of the 12th International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

40. Gruver, N.; Finzi, M.; Qiu, S.; Wilson, A.G. Large Language Models Are Zero-Shot Time Series Forecasters. 11 October 2023. NeurIPS 2023. Available online: https://github.com/ngruver/llmtime (accessed on 19 August 2024).

41. Garza, A.; Challu, C.; Mergenthaler-Canseco, M. TimeGPT-1. arXiv 2023, arXiv:2310.03589.

42. Rasul, K.; Ashok, A.; Williams, A.R.; Ghonia, H.; Bhagwatkar, R.; Khorasani, A.; Bayazi, M.J.D.; Adamopoulos, G.; Riachi, R.; Hassen, N.; et al. Lag-Llama: Towards Foundation Models for Probabilistic Time Series Forecasting. 12 October 2023. Available online: https://github.com/time-series-foundation-models/lag-llama (accessed on 24 July 2024).

43. Zhou, T.; Niu, P.; Wang, X.; Sun, L.; Jin, R. One Fits All: Power General Time Series Analysis by Pretrained LM. In Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS 2023), Vancouver, BC, Canada, 23 February 2023; Available online: https://proceedings.neurips.cc/paper_files/paper/2023/hash/86c17de05579cde52025f9984e6e2ebb-Abstract-Conference.html (accessed on 8 October 2025).

44. Huguet Cabot, P.L.; Navigli, R. REBEL: Relation Extraction By End-to-end Language generation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 2370–2381.

45. Chen, Z.; Guo, C. A pattern-first pipeline approach for entity and relation extraction. Neurocomputing 2022, 494, 182–191.

46. Kovalerchuk, B.; Fegley, B. LLM Enhancement with Domain Expert Mental Model to Reduce LLM Hallucination with Causal Prompt Engineering. arXiv 2025, arXiv:2509.10818.

47. Beijing Academy of Artificial Intelligence. bge-small-en-v1.5. 2024. Available online: https://huggingface.co/BAAI/bge-small-en-v1.5 (accessed on 8 October 2025).

48. Beijing Academy of Artificial Intelligence. bge-base-en-v1.5. 2024. Available online: https://huggingface.co/BAAI/bge-base-en-v1.5 (accessed on 8 October 2025).

49. Muennighoff, N.; Tazi, N.; Magne, L.; Reimers, N. MTEB: Massive Text Embedding Benchmark. arXiv 2022, arXiv:2210.07316.

50. Vater, J.; Schlaak, P.; Knoll, A. A Modular Edge-/Cloud-Solution for Automated Error Detection of Industrial Hairpin Weldings using Convolutional Neural Networks. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 505–510.

51. Vater, J.; Harscheidt, L.; Knoll, A. A Reference Architecture Based on Edge and Cloud Computing for Smart Manufacturing. In Proceedings of the 2019 28th International Conference on Computer Communication and Networks (ICCCN), Valencia, Spain, 29 July–1 August 2019; pp. 1–7.

52. Perea, R.V.; Festijo, E.D. Analytics Platform for Morphometric Grow out and Production Condition of Mud Crabs of the Genus Scylla with K-Means. In Proceedings of the 2021 4th International Conference of Computer and Informatics Engineering (IC2IE), Depok, Indonesia, 14–15 September 2021; pp. 117–122.

53. Bashir, M.R.; Gill, A.Q.; Beydoun, G. A Reference Architecture for IoT-Enabled Smart Buildings. SN Comput. Sci. 2022, 3, 493.

54. Gröger, C. Building an Industry 4.0 Analytics Platform. Datenbank-Spektrum 2018, 18, 5–14.

55. Filz, M.A.; Bosse, J.P.; Herrmann, C. Digitalization platform for data-driven quality management in multi-stage manufacturing systems. J. Intell. Manuf. 2024, 35, 2699–2718.

56. Ribeiro, R.; Pilastri, A.; Moura, C.; Morgado, J.; Cortez, P. A data-driven intelligent decision support system that combines predictive and prescriptive analytics for the design of new textile fabrics. Neural Comput. Appl. 2023, 35, 17375–17395.

57. Von Bischhoffshausen, J.K.; Paatsch, M.; Reuter, M.; Satzger, G.; Fromm, H. An Information System for Sales Team Assignments Utilizing Predictive and Prescriptive Analytics. In Proceedings of the 2015 IEEE 17th Conference on Business Informatics, Lisbon, Portugal, 13–16 July 2015; pp. 68–76.

58. Divyashree, N.; Nandini Prasad, K.S. Design and Development of We-CDSS Using Django Framework: Conducing Predictive and Prescriptive Analytics for Coronary Artery Disease. IEEE Access 2022, 10, 119575–119592.

59. Hentschel, R. Developing Design Principles for a Cloud Broker Platform for SMEs. In Proceedings of the 2020 IEEE 22nd Conference on Business Informatics (CBI), Antwerp, Belgium, 22–24 June 2020; pp. 290–299.

60. Madrid, M.C.R.; Malaki, E.G.; Ong, P.L.S.; Solomo, M.V.S.; Suntay, R.A.L.; Vicente, H.N. Healthcare Management System with Sales Analytics using Autoregressive Integrated Moving Average and Google Vision. In Proceedings of the 2020 IEEE 12th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 3–7 December 2020; pp. 1–6.

61. Lepenioti, K.; Bousdekis, A.; Apostolou, D.; Mentzas, G. Human-Augmented Prescriptive Analytics with Interactive Multi-Objective Reinforcement Learning. IEEE Access 2021, 9, 100677–100693.

62. Sam Plamoottil, S.; Kunden, B.; Yadav, A.; Mohanty, T. Inventory Waste Management with Augmented Analytics for Finished Goods. In Proceedings of the 2023 Third International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 2–4 February 2023; pp. 1293–1299.

63. Rehman, A.; Naz, S.; Razzak, I. Leveraging big data analytics in healthcare enhancement: Trends, challenges and opportunities. Multimed. Syst. 2022, 28, 1339–1371.

64. Adi, E.; Anwar, A.; Baig, Z.; Zeadally, S. Machine learning and data analytics for the IoT. Neural Comput. Appl. 2020, 32, 16205–16233.

65. Mustafee, N.; Powell, J.H.; Harper, A. RH-RT: A data analytics framework for reducing wait time at emergency departments and centres for urgent care. In Proceedings of the 2018 Winter Simulation Conference (WSC), Gothenburg, Sweden, 9–12 December 2018; pp. 100–110.

| Question | Time Spent per Question [mm:ss] | | | Average [mm:ss] | Our Solution [mm:ss] |

|---|---|---|---|---|---|

| | Group 1 | Group 2 | Group 3 | | |

| The error StaubliRobot.Error occurred on RASS3 | 01:11 | 00:52 | 01:30 | 01:11 | 00:18 |

| The Kuka PickandSort brake test failed | 01:49 | 01:42 | 02:30 | 02:03 | 00:21 |

| Conveyor belt pneumatic commissioning failed | 04:43 | 03:26 | 05:00 | 04:23 | 00:17 |

| Throttle check valve GRO-QS-4 operating pressure too high | 05:14 | 11:17 | - | 08:16 | 00:25 |
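The relative time savings implied by the table can be worked out with a few lines of arithmetic. The sketch below assumes the entries are minutes:seconds and uses the Average and Our Solution columns exactly as stated; the question labels are shortened, and the helper names `to_seconds` and `speedup` are hypothetical.

```python
def to_seconds(mm_ss: str) -> int:
    """Convert a 'mm:ss' string into seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


def speedup(average: str, ours: str) -> float:
    """Ratio of the average manual search time to the platform's time."""
    return to_seconds(average) / to_seconds(ours)


# (Average, Our Solution) pairs copied from the table above.
ROWS = {
    "StaubliRobot.Error on RASS3": ("01:11", "00:18"),
    "Kuka PickandSort brake test": ("02:03", "00:21"),
    "Conveyor belt pneumatic commissioning": ("04:23", "00:17"),
    "Throttle check valve GRO-QS-4": ("08:16", "00:25"),
}

speedups = {name: speedup(avg, ours) for name, (avg, ours) in ROWS.items()}

for name, factor in speedups.items():
    print(f"{name}: about {factor:.1f}x faster")
```

Under these assumptions the ratios range from roughly 4 to roughly 20, with the largest factors on the questions that took the groups longest to answer manually.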

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Niederhaus, M.; Migenda, N.; Weller, J.; Kohlhase, M.; Schenck, W. Integrating Graph Retrieval-Augmented Generation into Prescriptive Recommender Systems. Big Data Cogn. Comput. 2025, 9, 261. https://doi.org/10.3390/bdcc9100261