Abstract

For many complex industrial applications, traditional attribute reduction algorithms often fail to obtain optimal reducts that are consistent with mechanistic analyses and practical production requirements. To address this problem, we propose a recursive attribute reduction algorithm for calculating the optimal reduct. First, we introduce the notion of a priority sequence to describe the background meaning of the attributes and to evaluate the optimal reduct. Next, we define a necessary element set to capture the “individually necessary” property of the attributes. On this basis, a recursive algorithm is proposed to calculate the optimal reduct, with its boundary logic guided by conflicts between the necessary element set and the core attribute set. The experiments demonstrate the uniqueness of the reduct obtained by the proposed algorithm and its ability to enhance the prediction accuracy of the hot metal silicon content in blast furnaces.

1. Introduction

Introduced by Pawlak in 1982, rough set theory is a mathematical tool for dealing with vague, imprecise, and uncertain knowledge, and it has attracted increasing attention in computer science, artificial intelligence, medical applications, and other fields [1,2]. Attribute reduction, a core aspect of rough set theory, simplifies datasets by eliminating irrelevant attributes, a process commonly known as feature selection in machine learning [3,4,5]. Over the last decades, researchers have developed many heuristic reduction algorithms based on the positive region [6,7,8,9], the discernibility matrix [10,11], and information entropy [12,13,14]. Although these algorithms are efficient in terms of running time and storage, they are not always effective in obtaining the optimal reduct in complex industrial applications, especially blast furnace smelting, because they overlook the industrial mechanisms underlying the data. Without these mechanistic insights, current techniques may discard essential attributes or retain unnecessary ones, leading to suboptimal reducts with limited practical utility.

Blast furnace smelting is the most energy-consuming process in iron and steel production [15,16]. Its internal state is difficult to measure directly because of the high temperature and high pressure inside the furnace. Fortunately, extensive research has demonstrated that the silicon content of the hot metal is closely related to the thermal state of the furnace [17,18,19]. Thus, accurate prediction of the hot metal silicon content is key to the optimal control of blast furnaces.

Because blast furnace smelting is a classical complex industrial process, the accuracy of silicon content prediction depends not only on excellent nonlinear models but also on high-quality datasets. However, owing to the strong parameter coupling and nonlinear characteristics of blast furnace systems, the original datasets often contain many redundant attributes. It is therefore necessary to calculate a reduct to improve the training speed and prediction accuracy.

According to rough set theory, a dataset may have multiple complete reduction sets. Traditional algorithms, however, compute only one of them, essentially arbitrarily. From the perspective of blast furnace mechanisms, each attribute has a specific physical meaning and reflects a different aspect of the furnace. This means that different reduction sets represent different information, and there should be an optimal reduction set that is consistent with both the mechanistic analysis and practical production. Therefore, determining the optimal reduction set is a meaningful research task.

In addition, it is necessary to consider how the optimal reduction set of a blast furnace should be evaluated. In previous studies, many researchers have used the test costs of attributes to evaluate a reduction set, regarding the reduct with the minimum cost as optimal [20,21,22,23]. However, smelting mechanism analyses and practical production experience have shown that it is hard to set exact numerical costs for all attributes. Instead, better results have been achieved by analyzing which attributes are more important.

Motivated by the above observations, in this paper we propose an importance sequence, called the attribute priority sequence, to describe the background meaning of the attributes and the prior knowledge related to the blast furnace. On this basis, a priority-optimal reduct is defined, and a novel attribute reduction algorithm based on recursion is proposed to calculate it. Experimental results on UCI datasets show the differences among the proposed algorithm, a classical method, and state-of-the-art methods. We also applied this algorithm to a real dataset obtained from a blast furnace and trained a machine learning model to show the proposed algorithm’s performance. The major contributions of this paper are as follows:

(1) We propose a novel heuristic reduction construction.

Existing heuristic reduction algorithms commonly adopt one of three kinds of construction: addition–deletion (Algorithm 1), deletion, and addition. In this paper, we propose a novel recursive construction that is effective in obtaining special reducts, such as the optimal reduct, the minimal reduct, and the minimal cost reduct.

| Algorithm 1. Traditional heuristic attribute reduction algorithm. |

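| Input: Information table $S$ and priority sequence $P = \{c_1, c_2, \ldots, c_n\}$. Output: Reduct $R$. |
| Step 1: Construct the discernibility matrix $M$; calculate the core attribute set $CORE(M)$; and delete the elements discerned by $CORE(M)$. |
| Step 2: Delete the attributes belonging to $CORE(M)$ from the priority sequence; the new priority sequence is $P' = \{c'_1, c'_2, \ldots, c'_m\}$. |
| Step 3 (Addition): $R = CORE(M)$, $k = 1$. While $R$ is not a super reduct of $M$, do $R = R \cup \{c'_k\}$, $k = k + 1$. End |
| Step 4 (Deletion): While $k > 1$, do If $R - \{c'_{k-1}\}$ is a super reduct, then $R = R - \{c'_{k-1}\}$. End $k = k - 1$. End |
| Step 5: Output $R$. |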

(2) We define a new optimal reduct.

Traditional rough set theories often treat a minimal reduct as optimal because they ignore related prior knowledge. Many researchers have applied the notion of “cost” to describe prior knowledge and treated a minimal cost reduct as optimal. As mentioned above, “cost” is not always effective in complex situations, because it is hard to set exact costs for all attributes. Therefore, we propose the notion of an attribute priority sequence and define a priority-optimal reduct as the optimal reduct. The new definition is simple and suitable for complex applications.

(3) We provide a recursive reduction algorithm and illustrate its validity on UCI datasets and in a real application to silicon content prediction.

The proposed algorithm is the first recursion-based reduction algorithm, and the detailed reasoning, as well as the experimental results, show its validity. Furthermore, the proposed algorithm heuristically identifies a priority-optimal reduct.

The rest of this study is organized as follows. Section 2 presents preliminary knowledge of Pawlak rough sets. Section 3 defines the priority-optimal reduct and proposes a recursion-based attribute reduction algorithm to obtain it. Section 4 discusses the experiments and results of hot metal silicon content prediction in blast furnaces.

2. Preliminary Knowledge on Pawlak Rough Set

In rough set theory, data are presented in an information table $S$ [7]:

$$S = (U, At, \{V_a \mid a \in At\}, \{I_a \mid a \in At\}),$$

where $U$ is the universe, $At$ is a finite non-empty set of attributes, $V_a$ is a non-empty set of values of attribute $a$, and $I_a: U \to V_a$ is an information function that maps an object in $U$ to exactly one value in $V_a$. As a special type, an information table $S$ is also referred to as a decision table if $At = C \cup D$, where $C$ is the condition attribute set and $D$ is the decision attribute set. A decision table is inconsistent if it contains two objects with the same condition values but different decision values.

Definition 1.

Given a subset of attributes $A \subseteq At$, an indiscernibility relation $IND(A)$ is defined as follows:

$$IND(A) = \{(x, y) \in U \times U \mid \forall a \in A,\ I_a(x) = I_a(y)\}.$$

The equivalence class (or granule) of object $x$ with respect to $A$ is $[x]_A = \{y \in U \mid (x, y) \in IND(A)\}$. The family of all the granules with respect to $A$ forms a partition of the universe, described as $U/IND(A) = \{[x]_A \mid x \in U\}$. A granule is exact if all of its objects have the same decision value; otherwise, it is rough. The union of all the exact granules with respect to $A$ is referred to as the positive region, described as $POS_A(D)$. Based on the indiscernibility relation and the positive region, a discernibility matrix is defined as follows.

Definition 2.

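Given an information table $S$, a discernibility matrix $M = (m(x, y))_{x, y \in U}$ based on the positive region is defined as

$$m(x, y) = \begin{cases} \{a \in C \mid I_a(x) \neq I_a(y)\}, & \text{if } x \in POS_C(D) \text{ or } y \in POS_C(D), \text{ and } I_D(x) \neq I_D(y), \\ \emptyset, & \text{otherwise}. \end{cases}$$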
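As a concrete illustration of Definitions 1 and 2, the following Python sketch computes the granules of the indiscernibility relation, the positive region, and the non-empty elements of a discernibility matrix for a small hypothetical decision table. It assumes the positive-region form in which a pair of objects contributes the condition attributes on which they differ whenever at least one of them lies in the positive region and their decision values differ. The table and the function names are illustrative only, not taken from the paper.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical decision table: condition attributes "a", "b", "c"; decision "d".
TABLE = [
    {"a": 1, "b": 0, "c": 1, "d": "yes"},
    {"a": 1, "b": 0, "c": 1, "d": "no"},   # same condition values as row 0
    {"a": 0, "b": 1, "c": 1, "d": "no"},
    {"a": 0, "b": 1, "c": 0, "d": "yes"},
]


def partition(table, attrs):
    """Granules of IND(attrs): lists of object indices with equal values on attrs."""
    groups = defaultdict(list)
    for i, row in enumerate(table):
        groups[tuple(row[a] for a in attrs)].append(i)
    return list(groups.values())


def positive_region(table, attrs, decision):
    """Union of the exact granules, i.e. granules with a single decision value."""
    pos = set()
    for granule in partition(table, attrs):
        if len({table[i][decision] for i in granule}) == 1:
            pos.update(granule)
    return pos


def discernibility_matrix(table, cond, decision):
    """Non-empty elements m(x, y), assuming the positive-region form described above."""
    pos = positive_region(table, cond, decision)
    elements = set()
    for x, y in combinations(range(len(table)), 2):
        if (x in pos or y in pos) and table[x][decision] != table[y][decision]:
            m = frozenset(a for a in cond if table[x][a] != table[y][a])
            if m:
                elements.add(m)
    return elements


print(partition(TABLE, ["a", "b", "c"]))              # [[0, 1], [2], [3]]
print(positive_region(TABLE, ["a", "b", "c"], "d"))  # {2, 3}
```

For this table, objects 0 and 1 form a rough granule, the positive region is {2, 3}, and the non-empty elements of the matrix are {a, b}, {a, b, c}, and {c}.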

Definition 3.

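Given an information table $S$, an attribute set $R \subseteq C$ is called a reduct if it satisfies the following two conditions [7]:

(1) $POS_R(D) = POS_C(D)$;

(2) $\forall a \in R$, $POS_{R - \{a\}}(D) \neq POS_C(D)$.

If a discernibility matrix $M$ is constructed, then the two conditions mentioned above can be described as follows:

(1) $\forall m \in M$ with $m \neq \emptyset$, $m \cap R \neq \emptyset$;

(2) $\forall a \in R$, $\exists m \in M$ with $m \neq \emptyset$ and $m \cap (R - \{a\}) = \emptyset$.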

A reduct is a subset of attributes that is “jointly sufficient and individually necessary” to represent the knowledge equivalent to the attribute set $C$. In general, an information table may have multiple reducts. The set of these reducts is denoted as $RED(S)$ (or $RED(M)$ when a discernibility matrix $M$ is considered), and the intersection of all reducts is the core set, $CORE(S) = \bigcap_{R \in RED(S)} R$, or $CORE(M)$. If an attribute subset $R$ only satisfies the first condition, then it is referred to as a super reduct, i.e., $POS_R(D) = POS_C(D)$.
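Using the matrix form of Definition 3, the reducts of a small matrix can be enumerated by brute force, which is a convenient way to check hand calculations. The sketch below represents the non-empty elements of a hypothetical matrix as frozensets and also uses the well-known fact that the core consists of the attributes appearing as single-attribute elements. The enumeration is exponential in the number of attributes and is meant only for toy examples; the function names are our own.

```python
from itertools import combinations

# Hypothetical matrix: its non-empty elements as frozensets of attribute names.
M = [frozenset("ab"), frozenset("abc"), frozenset("c")]


def is_super_reduct(R, M):
    """Condition (1): R intersects every non-empty element."""
    return all(m & R for m in M)


def is_reduct(R, M):
    """Conditions (1) and (2): a super reduct from which no attribute can be dropped."""
    return is_super_reduct(R, M) and all(not is_super_reduct(R - {a}, M) for a in R)


def all_reducts(M):
    """Brute-force enumeration in order of size (tiny examples only)."""
    attrs = sorted(set().union(*M))
    return [frozenset(S) for k in range(1, len(attrs) + 1)
            for S in combinations(attrs, k) if is_reduct(frozenset(S), M)]


def core(M):
    """Attributes forming single-attribute elements; they belong to every reduct."""
    return frozenset(a for m in M if len(m) == 1 for a in m)


print([sorted(R) for R in all_reducts(M)])  # [['a', 'c'], ['b', 'c']]
print(sorted(core(M)))                      # ['c']
```

Here {a, b, c} is a super reduct but not a reduct, and the intersection of the two reducts equals the core {c}.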

3. Recursive Attribute Reduction Algorithm Based on Priority Sequence

In this section, a priority-optimal reduct is defined first. Subsequently, a heuristic approach is discussed, and an attribute reduction algorithm based on a priority sequence is proposed using recursion.

3.1. Priority Sequence and Priority-Optimal Reduct

In this paper, prior knowledge of a blast furnace is represented as a priority sequence of attributes. For a priority sequence $P = \{c_1, c_2, \ldots, c_n\}$, attribute $c_1$ has the highest priority, and $c_n$ has the lowest priority. In practical applications, this sequence can be determined based on empirical knowledge or mechanistic analysis results; that is, when the attributes are numbered, those deemed more important are placed at the front. The priority-optimal reduct is defined as follows.

Definition 4.

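Given a discernibility matrix $M$ and a priority sequence $P = \{c_1, c_2, \ldots, c_n\}$, a reduct $R$ of $M$ is called the priority-optimal reduct (POR) if, for every other reduct $R'$ of $M$,

$$\max_{c \in R - R'} p(c) > \max_{c' \in R' - R} p(c'),$$

where $p(c)$ is the priority of attribute $c$, a larger value indicating a higher priority, and $p(c_1) > p(c_2) > \cdots > p(c_n)$. In other words, the highest-priority attribute in which $R$ and $R'$ differ belongs to $R$.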

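Based on Definition 4, it is straightforward to conclude that POR is unique for a given priority sequence: two distinct reducts are never subsets of each other, so they always differ in at least one attribute, and the comparison between them is decided by the highest-priority attribute in which they differ. In other words, each priority sequence determines exactly one POR. Hence, if the priority sequence represents prior knowledge, then POR is the optimal solution.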
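The following sketch computes POR by brute force under our reading of Definition 4, namely that two reducts are compared at the highest-priority attribute in which they differ. Sorting each reduct's priority positions (0 for the highest priority) and taking the lexicographic minimum implements exactly this comparison, because distinct reducts are never subsets of one another. The matrix and the function names are hypothetical, and the enumeration is feasible only for tiny matrices.

```python
from itertools import combinations


def all_reducts(M):
    """Brute-force reducts of a discernibility matrix (tiny examples only)."""
    attrs = sorted(set().union(*M))

    def covers(R):
        return all(m & R for m in M)

    return [frozenset(S) for k in range(1, len(attrs) + 1)
            for S in combinations(attrs, k)
            if covers(frozenset(S)) and not any(covers(frozenset(S) - {a}) for a in S)]


def priority_optimal_reduct(M, priority):
    """Reduct that wins at the highest-priority attribute where reducts differ."""
    rank = {a: i for i, a in enumerate(priority)}  # 0 = highest priority
    return min(all_reducts(M), key=lambda R: sorted(rank[a] for a in R))


# Hypothetical matrix with four non-empty elements.
M = [frozenset("ab"), frozenset("ac"), frozenset("bd"), frozenset("cd")]
print([sorted(R) for R in all_reducts(M)])         # [['a', 'd'], ['b', 'c']]
print(sorted(priority_optimal_reduct(M, "abcd")))  # ['a', 'd']
print(sorted(priority_optimal_reduct(M, "bcad")))  # ['b', 'c']
```

Changing the priority sequence changes which of the two reducts is selected, while each sequence yields exactly one result.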

However, even though the priority sequence is taken into account, it is still difficult for the traditional attribute reduction algorithm (shown in Algorithm 1) to obtain the POR. A typical example is shown in Example 1.

Example 1.

Suppose a discernibility matrix $M$ has four non-empty elements. For a given priority sequence $P$, Algorithm 1 obtains a super reduct containing attribute “a” after Step 3, and attribute “a” is removed in Step 4, yielding a reduct $R$ that does not contain “a”. However, the matching POR does contain “a”, because attribute “a” has a higher priority.

Example 1 illustrates that the traditional attribute reduction algorithm is not suitable for calculating POR because high-priority attributes may be removed during the reduction process. In other words, a combination of lower-priority attributes replaces an attribute with a higher priority. Therefore, to obtain POR, new approaches are necessary to avoid this outcome.
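To see how the addition–deletion strategy can drop a high-priority attribute, here is a minimal Python sketch of Algorithm 1, assuming the discernibility matrix is given as a collection of attribute sets. The four-element matrix below is our own hypothetical example, not the one used in Example 1, but it exhibits the same behavior; the function name is illustrative.

```python
def traditional_reduct(M, priority):
    """Addition-deletion heuristic in the spirit of Algorithm 1 (a sketch)."""
    M = [frozenset(m) for m in M if m]
    core = {a for m in M if len(m) == 1 for a in m}
    rest = [m for m in M if not (m & core)]       # Step 1: drop elements the core discerns
    seq = [a for a in priority if a not in core]  # Step 2: remove core attributes
    R, added = set(core), []
    for a in seq:                                 # Step 3: addition in priority order
        if all(m & R for m in rest):
            break
        R.add(a)
        added.append(a)
    for a in reversed(added):                     # Step 4: deletion, lowest priority first
        if all(m & (R - {a}) for m in rest):
            R.remove(a)
    return R


M = [{"a", "b"}, {"a", "c"}, {"b", "d"}, {"c", "d"}]  # hypothetical matrix
print(sorted(traditional_reduct(M, "abcd")))          # ['b', 'c']
```

The sketch adds a, b, and c in Step 3 and then deletes a in Step 4, returning {b, c}. The other reduct of this matrix, {a, d}, contains the highest-priority attribute a, so it is the reduct preferred under Definition 4.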

3.2. Calculation Method on POR

For a heuristic reduction algorithm, each condition attribute is evaluated in turn according to the given priority sequence $P$. The key challenge is to determine whether the evaluated condition attribute belongs to POR. Next, we analyze how to identify the first attribute of POR and the remaining attributes, respectively.

3.2.1. Calculation of the First Attribute of POR

Theorem 1.

For a priority sequence

, if

so that

, then

.

Proof.

For , if , it has > max(p(c′)), where . According to Definition 4, R’ is not the POR. In other words, POR includes attribute . □

According to Theorem 1, the first attribute of POR can be found using the following approach.

Approach 1.

Attribute $c_i$ is the first attribute of POR if it satisfies the following two conditions:

(1) $\exists R \in RED(M)$, $c_i \in R$;

(2) $\forall R \in RED(M)$, if $j < i$, then $c_j \notin R$.

However, calculating all the reducts is impractical in a heuristic algorithm. Thus, the notions of free matrix and absolute redundant attribute set are proposed in this paper to identify the first attribute of POR.

Definition 5.

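The absolute redundant attribute set of a decision table $S$ is defined as

$$ARS(S) = C - \bigcup_{R \in RED(S)} R,$$

i.e., the set of condition attributes that belong to no reduct, where $RED(S)$ is the set of all reducts. $ARS(S)$ is also referred to as $ARS(M)$ if a discernibility matrix $M$ is considered. Based on Definition 5, one can derive the following properties: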

- (1)

- ;

- (2)

- , if , then , ;

- (3)

- .

Theorem 2.

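Given a discernibility matrix $M$ and an attribute priority sequence $P = \{c_1, c_2, \ldots, c_n\}$, if $c_1$ is not an absolute redundant attribute of $M$, then $c_1$ is the first attribute of POR.

Proof.

Based on Definitions 4 and 5, since $c_1$ is not absolutely redundant, there exists a reduct $R$ of $M$ with $c_1 \in R$. Considering that $c_1$ has the highest priority, $c_1$ must belong to POR, and it is its first attribute. □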
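Theorem 2 and Definition 5 can be checked by brute force on small matrices. The sketch below computes the absolute redundant attribute set and returns the highest-priority attribute outside it; by Definition 4, every reduct containing that attribute beats every reduct without it, so this is the first attribute of POR. The function names and the matrix are hypothetical, and the enumeration is exponential.

```python
from itertools import combinations


def all_reducts(M):
    """Brute-force reducts of a discernibility matrix (tiny examples only)."""
    attrs = sorted(set().union(*M))

    def covers(R):
        return all(m & R for m in M)

    return [frozenset(S) for k in range(1, len(attrs) + 1)
            for S in combinations(attrs, k)
            if covers(frozenset(S)) and not any(covers(frozenset(S) - {a}) for a in S)]


def absolute_redundant(M):
    """Attributes of M that belong to no reduct (Definition 5, brute force)."""
    return set().union(*M) - set().union(*all_reducts(M))


def first_por_attribute(M, priority):
    """Highest-priority attribute of M that is not absolutely redundant."""
    ars = absolute_redundant(M)
    present = set().union(*M)
    return next(a for a in priority if a in present and a not in ars)


M = [frozenset("ab"), frozenset("abc")]  # hypothetical: "c" occurs only in a superset
print(absolute_redundant(M))             # {'c'}
print(first_por_attribute(M, "cab"))     # a
```

Here c appears only in the element {a, b, c}, which contains {a, b}, so c belongs to no reduct; with the priority sequence (c, a, b), the first attribute of POR is therefore a.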

If $c_1$ is an absolute redundant attribute, one can remove all absolute redundant attributes from the discernibility matrix to calculate the first attribute of POR. The resulting discernibility matrix is referred to as a free matrix, defined as follows.

Definition 6.

Discernibility matrix $M$ is a free matrix if, for any two distinct non-empty elements $m_i, m_j \in M$, it holds that $m_i \not\subseteq m_j$.

The free matrix M does not contain any absolute redundant attribute, and the relevant analysis is presented below.

Theorem 3.

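Given discernibility matrix $M$, if $\forall m_i, m_j \in M$ ($i \neq j$), $m_i \not\subseteq m_j$, then no attribute appearing in $M$ is absolutely redundant, i.e., $ARS(M) = \emptyset$.

Proof.

For any attribute $c$ appearing in $M$, if $c$ is a core attribute, then $c$ belongs to every reduct and is not absolutely redundant. If $c$ is not a core attribute, select an element $m_0 \in M$ with $c \in m_0$ and define $B = C - (m_0 - \{c\})$. Then (1) $m_0 \cap B = \{c\}$, and (2) because $M$ is a free matrix, every other non-empty element $m$ satisfies $m \not\subseteq m_0$, so $m$ contains an attribute outside $m_0$ and $m \cap B \neq \emptyset$. Hence, based on Definition 3, $B$ is a super reduct of $M$, and every reduct $R \subseteq B$ must contain $c$, because $c$ is the only attribute of $B$ that discerns $m_0$. Since every super reduct contains a reduct, there exists a reduct that includes $c$. Therefore, attribute $c$ is not a redundant attribute in $M$, and $c \notin ARS(M)$. □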

Based on Theorems 2 and 3, we obtain an important approach.

Approach 2.

The highest priority attribute of a free matrix is the first attribute of POR.

The free matrix is constructed by Algorithm 2.

| Algorithm 2. Construct the free matrix. |

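| Input: Discernibility matrix $M$. Output: The related free matrix. |
| Step 1: Sort all the non-empty elements of $M$ by size $\lvert m \rvert$ in ascending order; let $n$ be the number of non-empty elements. |
| Step 2: Set $i = 1$. While $i \le n$, do $j = i + 1$; While $j \le n$, do If $m_i \subseteq m_j$ then delete $m_j$ from $M$, $n = n - 1$, Else $j = j + 1$. End End $i = i + 1$. End |
| Step 3: Output the free matrix. |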
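A direct Python transcription of Algorithm 2 is sketched below, assuming elements are given as attribute sets; identical elements are merged before sorting. Because elements are processed in order of size, any element that contains an earlier one is absorbed. The brute-force check at the end illustrates Theorem 3 on a hypothetical matrix: every attribute left in the free matrix belongs to some reduct. Function names are our own.

```python
from itertools import combinations


def free_matrix(M):
    """Algorithm 2 sketch: delete every element that contains another element."""
    elems = sorted({frozenset(m) for m in M if m}, key=len)  # Step 1 (duplicates merged)
    i = 0
    while i < len(elems):                                     # Step 2
        j = i + 1
        while j < len(elems):
            if elems[i] <= elems[j]:
                del elems[j]                                  # m_j is absorbed by m_i
            else:
                j += 1
        i += 1
    return elems


def all_reducts(M):
    """Brute-force reducts (tiny examples only), used here to check Theorem 3."""
    attrs = sorted(set().union(*M))

    def covers(R):
        return all(m & R for m in M)

    return [frozenset(S) for k in range(1, len(attrs) + 1)
            for S in combinations(attrs, k)
            if covers(frozenset(S)) and not any(covers(frozenset(S) - {a}) for a in S)]


M = [{"a", "b"}, {"a", "b", "c"}, {"b", "c", "d"}, {"c", "d"}]  # hypothetical matrix
F = free_matrix(M)
print(sorted(sorted(m) for m in F))                     # [['a', 'b'], ['c', 'd']]
print(set().union(*F) == set().union(*all_reducts(F)))  # True
```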

3.2.2. Calculation of the Other Attributes of POR

Besides the first attribute, the other attributes of POR are checked according to Theorem 4.

Theorem 4.

For attribute

, if

,

, then

, where

and

.

Proof.

Let . According to the definition of , the attribute set is a subset of . Since there exists a reduct that includes and attribute , cannot discern all elements of matrix , i.e., . Suppose . In that case, the reduct is not POR. However, , satisfies . This conflicts with Definition 4. Hence, the above assumption is invalid, implying . □

According to Theorem 4, the key step is therefore to verify whether there exists a reduct that contains both the attribute under evaluation and the set of previously accepted attributes.

Considering that a reduct should be “jointly sufficient and individually necessary,” we introduce the notion of the necessary element set to represent the “individually necessary” property.

Definition 7.

Given a discernibility matrix $M$, the necessary element set (NES) of attribute $c$ with respect to attribute set $B$ ($c \notin B$) is

$$NES(c, B) = \{m \in M \mid c \in m,\ m \cap B = \emptyset\}.$$

The notion of NES is similar to that of a core set, since both are related to being “necessary”. The core set contains the attributes that are necessary for all reducts, whereas $NES(c, B)$ indicates whether attribute $c$ is necessary with respect to attribute set $B$: it collects the elements that $c$ discerns but $B$ does not. It has the following properties:

- (1)

- ;

- (2)

- If $B_1 \subseteq B_2$, then $NES(c, B_2) \subseteq NES(c, B_1)$.

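If $NES(c, B) \neq \emptyset$, then the attribute set $B$ is not a reduct, because it cannot discern the non-empty elements in $NES(c, B)$. Conversely, if $NES(c, B) = \emptyset$, then $\forall m \in M$ with $c \in m$, $m \cap B \neq \emptyset$. This means that all elements discerned by attribute $c$ are also discerned by $B$. Thus, the attribute set $B \cup \{c\}$ is not a reduct.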

Based on the above analysis, we redefine the reduct using .

Definition 8.

An attribute set R is called a reduct if and only if it satisfies the following conditions:

(1) For each non-empty element $m \in M$, $m \cap R \neq \emptyset$;

(2) For each attribute $c \in R$, $NES(c, R - \{c\}) \neq \emptyset$.
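Definitions 7 and 8 translate almost literally into code. The sketch below, with hypothetical function names and matrix elements stored as frozensets, computes the necessary element set and tests the two reduct conditions of Definition 8. The first usage line also illustrates the monotonicity property: enlarging $B$ from the empty set to {c} shrinks the necessary element set of a.

```python
def nes(c, B, M):
    """NES(c, B) from Definition 7: elements containing c that no attribute of B discerns."""
    return [m for m in M if c in m and not (m & B)]


def is_reduct_nes(R, M):
    """Definition 8: R discerns every element, and each c in R keeps a non-empty NES."""
    R = frozenset(R)
    return all(m & R for m in M) and all(nes(c, R - {c}, M) for c in R)


M = [frozenset("ab"), frozenset("abc"), frozenset("c")]  # hypothetical matrix
print(len(nes("a", frozenset(), M)), len(nes("a", frozenset("c"), M)))        # 2 1
print(is_reduct_nes("ac", M), is_reduct_nes("abc", M), is_reduct_nes("a", M))  # True False False
```

{a, b, c} fails condition (2) because every element containing a is also discerned by b or c, and {a} fails condition (1) because it does not discern {c}.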

Based on Definition 8, we have the following conclusion about .

Theorem 5.

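Given a discernibility matrix $M$ and an attribute set $B$, if there exists a reduct $R$ that includes the attribute set $B$, then $\forall c \in B$, $NES(c, B - \{c\}) \neq \emptyset$.

Proof.

Based on Definition 8, $R$ satisfies $NES(c, R - \{c\}) \neq \emptyset$, $\forall c \in R$. Because $B \subseteq R$, we have $B - \{c\} \subseteq R - \{c\}$ and hence $NES(c, B - \{c\}) \supseteq NES(c, R - \{c\}) \neq \emptyset$ for every $c \in B$. □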

According to Theorem 5, if $NES(c, B - \{c\}) = \emptyset$ for some $c \in B$, then no reduct can include $B$.

Based on the above discussions, we propose a recursive algorithm to determine the other attributes of POR. Recursion is a form of self-reference in which a function calls itself within a program. Each time a recursive function calls itself, it reduces a problem to a sub-problem, and the recursive calls continue until an end point is reached where the sub-problem cannot be reduced further. Thus, a recursive method has two elements: simple repeated logic and a termination condition. In this paper, an additional border logic is also adopted to ensure that the result is a reduct.

- Simple Repeated Logic

Repeated logic refers to the similarity between a problem and its sub-problems, which constitutes the main part of recursion.

In this method, repeated logic is utilized to verify the existence of a reduct that includes $B \cup \{c\}$, where $B$ is the set of accepted attributes and $c$ is the attribute under evaluation. It can be divided into two parts: (1) NES calculation and (2) judgment logic. The calculation of NES is based on Definition 7, and further descriptions are unnecessary. For the judgment logic, there are two cases.

(1) If $NES(b, (B \cup \{c\}) - \{b\}) \neq \emptyset$ for every $b \in B \cup \{c\}$, then we accept $c$, and we call this method with the updated parameter set.

(2) Otherwise, we reject $c$, and we remove $c$ from the discernibility matrix.
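One step of the simple repeated logic can be sketched as follows. The acceptance test checks the necessary element set of every attribute in $B \cup \{c\}$, which is our reading of Step 2 of Algorithm 4; rejection deletes $c$ from every element of the matrix. The matrix and the function names are hypothetical.

```python
def nes(c, B, M):
    """NES(c, B): elements containing c that no attribute of B discerns."""
    return [m for m in M if c in m and not (m & B)]


def repeated_step(B, c, M):
    """One step of the simple repeated logic (a sketch).

    Accept c if every attribute of B | {c} keeps a non-empty NES; otherwise
    reject c and delete it from every element of M.
    """
    cand = B | {c}
    if all(nes(b, cand - {b}, M) for b in cand):
        return cand, M, True
    return B, [m - {c} for m in M], False


M = [frozenset("ab"), frozenset("ac"), frozenset("bd"), frozenset("cd")]  # hypothetical
B, M, ok = repeated_step(frozenset("a"), "b", M)
print(sorted(B), ok)                                # ['a', 'b'] True
B, M, ok = repeated_step(B, "c", M)
print(sorted(B), ok, sorted(sorted(m) for m in M))  # ['a', 'b'] False [['a'], ['a', 'b'], ['b', 'd'], ['d']]
```

Attribute c is rejected because every element containing a is already discerned by b or c, so a would no longer be individually necessary.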

A simple example is described as follows.

Example 2.

Given

and the priority sequence

,

is a free matrix.

First, according to Approach 2, attribute $a$ is the first attribute of POR. Next, $B_1 = \{a\}$, and the other attributes are evaluated in turn.

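For the next attribute, all the necessary element sets are non-empty, so it is accepted and added to the attribute set.

For attribute $c$, an empty necessary element set is obtained; therefore, attribute $c$ is rejected, and $c$ is removed from the matrix to obtain a new matrix.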

- Additional Border Logic

Since the logic above tests attributes in priority order using only a necessary condition (Theorem 5), the recursion may reach a border, referred to here as a core conflict.

Definition 9.

Given a discernibility matrix $M$, an attribute set $B$, and a core attribute $c_k$ of $M$, a core conflict between $B$ and $c_k$ occurs if

$$\exists b \in B,\quad NES(b, (B \cup \{c_k\}) - \{b\}) = \emptyset.$$

Since $c_k$ is a core attribute, it must belong to all reducts. However, due to this core conflict, no reduct can include both $B$ and $c_k$ (Theorem 5). Thus, we present the following approach.
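Under our reading of Definition 9, a core conflict occurs when adding a core attribute leaves some accepted attribute with an empty necessary element set. The sketch below checks this condition; the hypothetical matrix is one in which the single-attribute element {d} has appeared after another attribute was deleted, and the function names are our own.

```python
def nes(c, B, M):
    """NES(c, B): elements containing c that no attribute of B discerns."""
    return [m for m in M if c in m and not (m & B)]


def is_core(c, M):
    """c is a core attribute of M when the single-attribute element {c} occurs in M."""
    return frozenset({c}) in M


def core_conflict(B, k, M):
    """True if adding core attribute k leaves some b in B with an empty NES."""
    cand = B | {k}
    return any(not nes(b, cand - {b}, M) for b in B)


M = [frozenset("ab"), frozenset("a"), frozenset("bd"), frozenset("d")]  # hypothetical
print(is_core("d", M), core_conflict(frozenset("ab"), "d", M), core_conflict(frozenset("a"), "d", M))
# True True False
```

With $B = \{a, b\}$, the core attribute d discerns every element that only b could discern, so no reduct can contain both B and d.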

Approach 3.

Given a discernibility matrix $M$ and an attribute set $B$, if there is a core conflict between $B$ and a core attribute of $M$, then no reduct can include $B$.

At the start of this method, no core attribute exists in the free matrix, because Step 1 of Algorithm 3 deletes all elements discerned by the core attributes. As recursion proceeds, some attributes are removed from $M$, which causes certain attributes to become core attributes in the new discernibility matrix. If these core attributes conflict with higher-priority attributes that have already been accepted, a core conflict arises in the recursion.

The occurrence of a core conflict implies that at least one of the accepted attributes is redundant. The simplest way to address this is to remove the accepted attributes whose necessary element sets have become empty from the related discernibility matrix. By doing so, we eventually obtain a reduct. However, this reduct may not be POR. A simple example is provided in Example 3.

Example 3.

Given a free matrix $M$ and a priority sequence $P$, the highest-priority attribute is the first attribute of POR (Approach 2). Using the repeated logic, we obtain an accepted attribute set $B$. For the next attribute, d, we find an empty necessary element set, and d is not a core attribute. Attribute d is then removed from $M$, producing a new matrix $M'$. For the last attribute, e, adding it to $B$ leaves two accepted attributes with empty necessary element sets. Since e is a core attribute of $M'$, it cannot be removed. In this case, the core attribute e conflicts with two higher-priority attributes. If the conflict were ignored, an attribute set would be obtained by removing these two attributes. Clearly, this set is not POR, as there exists another reduct containing an attribute with a higher priority than any of the attributes in which the two sets differ.

Thus, the recursive process must stop if a core conflict arises, i.e., the method reaches a wrong border and must backtrack. This example illustrates the crucial role of core conflict. It helps the algorithm identify situations where accepting the current attribute would conflict with higher-priority attributes. Hence, an additional border logic is proposed to address this type of problem.

The additional border logic identifies the last accepted attribute that can be removed from $B$, allowing the method to restart from a new start point. Because the border logic operates across multiple levels of recursion, it can be split into two parts: (1) judgment of a core conflict at the current level and (2) handling of the return operation at the calling levels.

There are three cases:

Case 1. The current attribute is not in $B$ (it was rejected by the simple repeated logic); continue the return operation.

Case 2. The current attribute is in $B$, but it is a core attribute that cannot be deleted; continue the return operation.

Case 3. The current attribute is in $B$, but it is not a core attribute; then, refuse it, remove it from $M$, and enter the next recursion from the new start point.

- The Termination Condition

The termination condition marks the endpoint of the recursion and is triggered once all attributes in the priority sequence have been tested. If a core conflict is treated as an unsuccessful border, then the termination condition can be regarded as a successful border. In practical programming, we use a flag to distinguish between the two situations.

3.2.3. The Complete Reduction Algorithm Based on Recursion

Based on the above discussions, the complete algorithm is described in Algorithms 3 and 4. Algorithm 3 provides the framework of the complete attribute reduction algorithm, and Algorithm 4 describes its recursive function in detail.

| Algorithm 3. Reduction algorithm based on recursion for calculating POR. |

| Input: Information table $S$ and attribute priority sequence $P$. Output: The corresponding POR. |
| Step 1: Construct the discernibility matrix $M$, calculate the core attribute set $CORE(M)$, and delete the elements from $M$ that can be discerned by $CORE(M)$. |
| Step 2: Construct the free matrix and delete the attributes in the attribute priority sequence that do not appear in the free matrix (the core attributes and the absolute redundant attributes). The new attribute priority sequence is $P'$, and the attribute with the highest priority in $P'$ is $c'_1$, i.e., $B = \{c'_1\}$. |
| Step 3: Use Algorithm 4 to test the other attributes in $P'$ with the input ($B$, $P' - \{c'_1\}$, free matrix, $flag$), and output ($B$, $flag$). |
| Step 4: Output $POR = CORE(M) \cup B$. |

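| Algorithm 4. Recursive function. |

| Input: Attribute set $B$, priority sequence $P$, discernibility matrix $M$, $flag$. Output: Attribute set $B$, $flag$. |
| Step 1: If $P = \emptyset$ then Return with $flag = \text{true}$ // Valid reduct found, recursion ends End |
| Step 2: Let $c$ be the first attribute of $P$. For each attribute $b$ in $B \cup \{c\}$, calculate $NES(b, (B \cup \{c\}) - \{b\})$ based on $M$. |
| Step 3: If all these NES are non-empty then Call the function with input ($B \cup \{c\}$, $P - \{c\}$, $M$, $flag$) // Accept $c$, recursive call Else Refuse $c$ and test whether $c$ is a core attribute of $M$: If $c$ is a core attribute, then Return with $flag = \text{false}$ // Core conflict detected, backtrack Else Delete $c$ from $M$; Call the function with input ($B$, $P - \{c\}$, $M$, $flag$) // Recursive call without $c$ End End |
| Step 4: Process the returned result: If $flag = \text{false}$, $c$ was not rejected, and $c$ is not a core attribute of $M$, then Refuse $c$, delete $c$ from $M$, Call the function with input ($B$, $P - \{c\}$, $M$, $flag$), and return its result directly Else Return the returned result directly End |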
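The following Python sketch is our reading of Algorithms 3 and 4, not the authors' implementation. Assumptions: matrix elements are frozensets of attribute names; the acceptance test checks the NES of every attribute in $B \cup \{c\}$; a Boolean return value plays the role of $flag$; and the core attributes removed in Step 1 are added back to the output. All function names are hypothetical. On the two hypothetical matrices in the usage lines, the results agree with a brute-force search under the lexicographic reading of Definition 4.

```python
def nes(c, B, M):
    """NES(c, B): elements of M containing c that no attribute of B discerns."""
    return [m for m in M if c in m and not (m & B)]


def is_core(c, M):
    """c is a core attribute of M if {c} is itself an element."""
    return frozenset({c}) in M


def delete_attr(c, M):
    """Remove attribute c from every element of M."""
    return [m - {c} for m in M]


def free_matrix(M):
    """Keep only elements that contain no other element (Algorithm 2)."""
    kept = []
    for m in sorted(set(M), key=len):
        if not any(k <= m for k in kept):
            kept.append(m)
    return kept


def recurse(B, P, M):
    """Sketch of Algorithm 4; returns (B, flag), flag True on a successful border."""
    if not P:
        return B, True                                # Step 1: termination condition
    c, rest = P[0], P[1:]
    cand = B | {c}
    if all(nes(b, cand - {b}, M) for b in cand):      # Steps 2-3: accept c
        result, ok = recurse(cand, rest, M)
        if ok or is_core(c, M):                       # success, or Case 2
            return result, ok
        return recurse(B, rest, delete_attr(c, M))    # Case 3: refuse c, restart
    if is_core(c, M):
        return B, False                               # core conflict: backtrack
    return recurse(B, rest, delete_attr(c, M))        # reject c (Case 1 on return)


def por(M, priority):
    """Sketch of Algorithm 3; returns the reduct as a set, or None on failure."""
    M = [frozenset(m) for m in M if m]
    core = frozenset(a for m in M if len(m) == 1 for a in m)
    free = free_matrix([m for m in M if not (m & core)])   # Steps 1-2
    present = set().union(*free)
    seq = [a for a in priority if a in present]
    if not seq:
        return set(core)
    B, ok = recurse(frozenset(seq[:1]), seq[1:], free)     # Step 3
    return set(core | B) if ok else None                   # Step 4


print(sorted(por([{"a", "b"}, {"a", "c"}, {"b", "d"}, {"c", "d"}], "abcd")))  # ['a', 'd']
print(sorted(por([{"a"}, {"a", "b"}, {"b", "c"}, {"c", "d"}], "bcda")))      # ['a', 'b', 'd']
```

On the first matrix, the sketch accepts b, hits a core conflict with d, backtracks, refuses b and later c, and finally accepts d, returning {a, d} where the addition–deletion heuristic would return {b, c}.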

A simple proof of Algorithm 3 is as follows.

Since each attribute is evaluated in priority order, and a high-priority attribute is deleted only if no reduct includes it together with the attributes already chosen, the main task is to prove that the selected attribute set is a reduct. First, due to the repeated logic, the finally obtained attribute set $B$ satisfies condition (2) of Definition 8, i.e., every attribute of $B$ has a non-empty necessary element set. Second, suppose $B$ is not a super reduct of $M$; then, there exists at least one element that cannot be discerned by $B$. Owing to the deletion mechanism in the repeated logic, the attributes of such an element are deleted one by one until its last attribute becomes a core attribute, and rejecting that attribute leads to a core conflict, so no such element can remain when the recursion ends successfully. Thus, the attribute set obtained by Algorithm 3 is a reduct, and, because of the priority-ordered evaluation, it is exactly the POR.

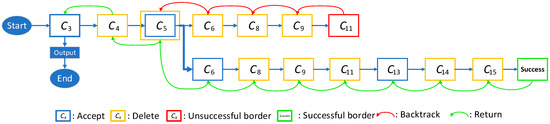

We also illustrate the complete calculation process of Example 3 in Figure 1.

Figure 1.

Calculation in Example 3.

First, attributes a, b, and c are accepted by the repeated logic, whereas d is rejected. The new discernibility matrix is denoted by $M_1$.

It is observed that attribute e is a core attribute of $M_1$ and conflicts with previously accepted attributes. Hence, the algorithm reaches an unsuccessful border. Then, the algorithm gradually backtracks using the additional border logic. First, attribute d satisfies Case 1, so the algorithm continues the return operation. Next, attribute c satisfies Case 3 and is deleted because it is not a core attribute.

Now, the algorithm moves to the next recursion level from the new start point (attribute d). Attribute d is rejected by the repeated logic because an empty necessary element set is obtained. After removing attribute d, the discernibility matrix becomes $M_2$. It is determined that attribute e is a core attribute of $M_2$ and conflicts with the accepted attributes. Accordingly, the algorithm gradually backtracks to the previous level, where the last accepted attribute was b.

Third, attribute b is deleted, and the remaining attributes are checked in order until a successful border appears. Eventually, the output POR is {a, c, d}.

We also compared the proposed algorithm with a classical heuristic algorithm, a recently reported algorithm from the literature [7], and the state-of-the-art distributed attribute reduction algorithm RA-MRS described in [3]. The related experimental results are listed in Table 1.

These tested datasets originate from UCI and are uniformly discretized if they have continuous attribute values. For example, Sonar_16 indicates that the Sonar dataset’s continuous attribute values are uniformly discretized into 16 intervals.
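We read “uniformly discretized” as equal-width binning, i.e., the range of each attribute is split into intervals of equal length; this is an assumption, and the experiments may use a different convention. A NumPy sketch with a hypothetical function name is shown below; `bins=16` corresponds to the Sonar_16 setting.

```python
import numpy as np


def equal_width_discretize(x, bins=16):
    """Map continuous values to interval indices 0..bins-1 using equal-width intervals."""
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        return np.zeros(len(x), dtype=int)       # constant column: a single interval
    inner_edges = np.linspace(lo, hi, bins + 1)[1:-1]
    return np.digitize(x, inner_edges)           # the maximum falls into the last interval


print(equal_width_discretize([0.0, 0.05, 0.55, 0.99, 1.0], bins=10))  # [0 0 5 9 9]
```

Using only the interior edges with `np.digitize` keeps the maximum value in the last interval instead of creating an extra one.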

In Table 1, underscores highlight the differences from the other algorithms, and numbers represent the indices of attributes. The experimental results demonstrate that our algorithm effectively identifies the priority-optimal reduct, whereas the compared algorithms do not necessarily do so. Moreover, the reduction sets obtained by our algorithm are generally larger than those of the compared algorithms. That is, our algorithm generally does not obtain a minimal reduct, because the data background is taken into consideration and some high-priority attributes must be retained.

Table 1.

Experimental results.

4. Application in Blast Furnace Smelting

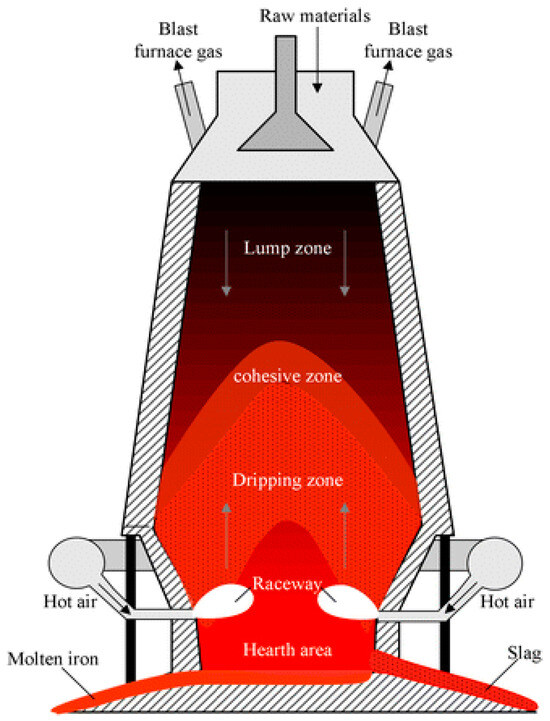

Blast furnace smelting is a complex, nonlinear, and high-dimensional dynamic process, as shown in Figure 2. Raw materials such as iron ore and coke are charged from the top. As they move downward, oxygen-enriched hot air and pulverized coal are injected at the bottom of the blast furnace, and the resulting gas flows upward. During this two-directional motion, a variety of physical changes and chemical reactions among materials in multiple phases occur simultaneously [16].

Figure 2.

Blast furnace structural diagram.

The main behavior of silicon in the blast furnace smelting process is the reduction reaction. First, during the reduction of SiO2 by coke carbon or carbon dissolved in the hot metal, part of the silicon enters the hot metal in the liquid phase as follows:

$$\mathrm{SiO_2} + 2\mathrm{C} = [\mathrm{Si}] + 2\mathrm{CO}$$

Meanwhile, most of the silicon is transformed into gaseous SiO by the following reaction:

$$\mathrm{SiO_2} + \mathrm{C} = \mathrm{SiO(g)} + \mathrm{CO(g)}$$

SiO then rises with the blast furnace gas and is absorbed by both the slag and the hot metal dripping from the cohesive zone. The absorbed SiO reacts again with carbon in the metal:

$$\mathrm{SiO(g)} + [\mathrm{C}] = [\mathrm{Si}] + \mathrm{CO(g)}$$

The thermal state of the blast furnace is one of the most important factors, as temperature significantly influences reduction reactions. Due to the enclosed nature of blast furnaces, directly obtaining the thermal state poses a challenge. Therefore, the silicon content is used as an indicator to determine the blast furnace state. Predicting silicon content has also become a focus of study, and many machine learning models—such as support vector regression and neural networks—have been applied to this task. One of the most important factors of machine learning performance is data quality. Thus, selecting an optimal feature set is essential for providing high-quality inputs.

In this section, we first determine the attribute priority sequence related to silicon content. Next, the reduction algorithm based on priority sequence is applied for feature selection. Finally, a long short-term memory recurrent neural network (LSTM-RNN) is employed to predict silicon content and verify the validity of POR.

4.1. Data Description and Priority Sequence

Data were collected from the No. 2 blast furnace of Liuzhou Steel in China, which has a volume of 2650 m³. A total of 1200 data groups are available to validate the method; 800 of them are used as the training set and 400 as the test set. In the dataset related to the hot metal silicon content, there are sixteen condition attributes and one decision attribute.

The following principles are followed when determining the attribute priority sequence.

(1) The mechanisms of blast furnace smelting should be considered first. For example, since blast furnace smelting is a continuous process, the silicon content at the last time point strongly influences the current silicon content.

(2) The experience of the operating staff should also be considered, since they know which attributes carry the greatest importance during operation.

(3) Correlation analysis between the condition attributes can serve as a reference for the attribute priority sequence.

Considering the above factors comprehensively, we obtain the following attribute priority sequence: {latest silicon content, theoretical burning temperature, bosh gas index, bosh gas volume, actual wind speed, standard wind speed, gas permeability, blast momentum, furnace top pressure, oxygen enrichment percentage, cold wind flow, hot blast temperature, pressure difference, hot blast pressure, cold wind pressure, oxygen enrichment pressure}. For convenience, we use $c_1$–$c_{16}$ to represent these condition attributes, and the subscript of a symbol represents its position in the priority sequence, i.e., its priority.

4.2. Attribute Reduction

In our work, we uniformly discretized the data for every attribute into 10 intervals. We then ran the proposed attribute reduction procedure as follows.

First, we constructed the discernibility matrix $M$ based on Definition 2. Then, we calculated the core attribute set and deleted from $M$ the elements that can be discerned by the core attributes. Next, the free matrix was constructed, and the attributes that do not appear in it were removed from the priority sequence, yielding a new attribute priority sequence whose first attribute is the highest-priority attribute. Finally, Algorithm 3 was executed. The specific calculation process is shown in Figure 3.

Figure 3.

Recursive process.

Following the simple repeated logic, attributes were accepted and rejected in turn until a core attribute was found to conflict with previously accepted attributes. This meant that the algorithm had reached an unsuccessful border. Next, the algorithm gradually backtracked to the level at which the last attribute was accepted. In the following steps, that attribute was removed, and the remaining attributes were checked in order until a successful border was reached. Eventually, the final accepted attribute set was obtained, and the POR was generated.

To demonstrate the effectiveness of our algorithm, we compared it to the traditional addition–deletion algorithm, as shown in Algorithm 1. The results are presented in Table 2.

Table 2.

Results of attribute reduction.

For ease of description, we denote the reduct obtained by the proposed recursive algorithm as $R_P$ and the other as $R_T$. From Table 2, apart from the attributes shared by both reducts, the highest-priority attribute of $R_P$ has a much higher priority than the highest-priority attribute of $R_T$. Hence, $R_P$ is more consistent with the priority sequence. These reducts also show that the classic addition–deletion algorithm is less effective in calculating the priority-optimal reduct. By retaining high-priority attributes, our reduction set, while slightly larger in the number of attributes, better captures domain knowledge.

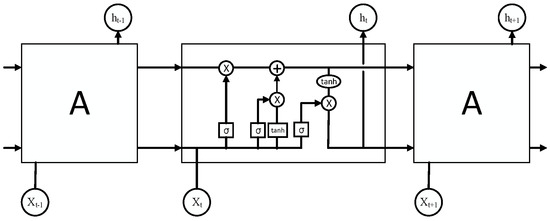

4.3. Prediction with LSTM-RNN

A blast furnace behaves as a dynamic system with time delays, in which the current state depends on previous states. LSTM-RNN is a gated recurrent neural network whose structure is shown in Figure 4; each of its outputs likewise depends on the previous state. Moreover, its gates allow it to selectively use previous state information, depending on the current input, when predicting the current state, which makes it flexible and well suited to predicting the hot metal silicon content.
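For reference, the gating that enables this selective use of past information is given by the standard LSTM cell equations (the common formulation with a forget gate; peephole variants differ slightly, and implementations may use a different gate activation $\sigma$). With input $x_t$, previous hidden state $h_{t-1}$, and previous cell state $c_{t-1}$:

```latex
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i), \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f), \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o), \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c), \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t, \\
h_t &= o_t \odot \tanh(c_t).
\end{aligned}
```

The forget gate $f_t$ controls how much of the previous cell state is kept and the input gate $i_t$ how much new information enters, so the network can weight past furnace states differently depending on the current input.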

Figure 4. Structure of LSTM-RNN.

Given the above discussion and the complexity of blast furnace smelting, we adopted LSTM-RNN to predict the hot metal silicon content. The model consists of one LSTM layer with a 10-dimensional output followed by an output layer with a 1-dimensional output. The remaining parameter settings of the LSTM layer are listed in Table 3 (the deep learning framework used is Keras 2).
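To make the layer sizes concrete, the sketch below runs a forward pass with the same shape, one LSTM layer with 10 units feeding a single-output dense layer (in Keras 2 terms, `LSTM(10)` followed by `Dense(1)`), in plain Python. The weights are random placeholders rather than trained values, and the window length and number of input attributes are hypothetical, since they depend on Table 3 and on the selected reduct. Only the hidden state of the last time step reaches the output layer, which matches the Keras default `return_sequences=False`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate(p, x, h, act):
    """Apply act(W x + U h + b) row by row for one gate with parameters p = (W, U, b)."""
    W, U, b = p
    return [act(sum(w * v for w, v in zip(W[r], x))
                + sum(u * v for u, v in zip(U[r], h)) + b[r])
            for r in range(len(b))]


def init_lstm(n_in, n_units, rng):
    """Random placeholder weights for the input (i), forget (f), output (o) gates and candidate (c)."""
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {g: (mat(n_units, n_in), mat(n_units, n_units), [0.0] * n_units)
            for g in ("i", "f", "o", "c")}


def lstm_last_state(params, sequence, n_units):
    """Run one LSTM layer over a sequence of input vectors; return the final hidden state."""
    h = [0.0] * n_units
    c = [0.0] * n_units
    for x in sequence:
        i = gate(params["i"], x, h, sigmoid)        # how much new information enters
        f = gate(params["f"], x, h, sigmoid)        # how much of the old cell state is kept
        o = gate(params["o"], x, h, sigmoid)        # how much of the cell state is exposed
        c_hat = gate(params["c"], x, h, math.tanh)  # candidate cell state
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, c_hat)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h


def dense_1(w, b, h):
    """Output layer with a 1-dimensional, linear output: w . h + b."""
    return sum(wk * hk for wk, hk in zip(w, h)) + b


# HYPOTHETICAL sizes: only N_UNITS = 10 comes from the text.
N_FEATURES, N_UNITS, WINDOW = 6, 10, 5
rng = random.Random(0)
params = init_lstm(N_FEATURES, N_UNITS, rng)
w_out = [rng.uniform(-0.1, 0.1) for _ in range(N_UNITS)]
window = [[rng.random() for _ in range(N_FEATURES)] for _ in range(WINDOW)]  # dummy inputs
h_last = lstm_last_state(params, window, N_UNITS)
y_hat = dense_1(w_out, 0.0, h_last)  # one predicted silicon value
print(len(h_last), y_hat)
```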

Table 3. Parameter settings of LSTM.

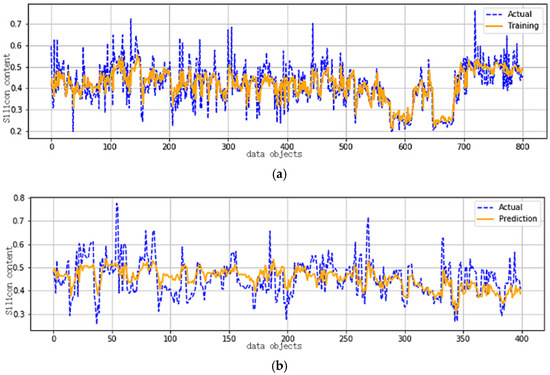

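To make the comparison robust, we changed the validation set five times during training. Each validation set is a contiguous part of the training set, and the remaining part of the training set is used to train the network. The results are shown in Table 4 (tests with the same test number share the same validation set). MSE and Hit are calculated as

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2, \qquad \mathrm{Hit} = \frac{1}{N}\sum_{i=1}^{N} H_i \times 100\%, \quad H_i = \begin{cases} 1, & |y_i - \hat{y}_i| < \varepsilon, \\ 0, & \text{otherwise}, \end{cases}$$

where $N$ is the number of samples, $y_i$ and $\hat{y}_i$ are the measured and predicted silicon content of the $i$-th sample, and $\varepsilon$ is the error tolerance for a hit.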
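The evaluation protocol can be sketched as follows. The five validation sets are assumed to be equal-sized, non-overlapping contiguous blocks of the training series (the text only states that each validation set is a continuous part of the training set), and the Hit tolerance of 0.1 is a placeholder rather than a value from the paper; both assumptions are marked in the code, and the function names and sample values are hypothetical.

```python
def contiguous_folds(n_train, n_folds=5):
    """Yield (train_idx, val_idx) pairs; each validation set is one contiguous block.

    ASSUMPTION: equal-sized, non-overlapping blocks; the last block absorbs
    any remainder.
    """
    size = n_train // n_folds
    for k in range(n_folds):
        start = k * size
        stop = n_train if k == n_folds - 1 else start + size
        val = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_train))
        yield train, val


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def hit_rate(y_true, y_pred, tol=0.1):
    """Percentage of predictions whose absolute error is below tol.

    ASSUMPTION: tol = 0.1 is a placeholder, not the paper's tolerance.
    """
    hits = sum(1 for t, p in zip(y_true, y_pred) if abs(t - p) < tol)
    return 100.0 * hits / len(y_true)


for train_idx, val_idx in contiguous_folds(12, 5):
    print(val_idx)
y_true = [0.45, 0.50, 0.62, 0.38]  # made-up values
y_pred = [0.47, 0.41, 0.60, 0.52]
print(mse(y_true, y_pred), hit_rate(y_true, y_pred))
```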

Table 4. Prediction results.

According to Table 4, models trained with achieve better performance than those trained with . The training set results are almost identical (MSE about 0.043, Hit about 88%), but the test set results differ. The average Test_MSE and Hit for are 0.0049 and 86.3%, respectively, compared with 0.0055 and 82.9% for . Compared with , the Test_MSE decreases by 10.9% and Hit increases by 4.1% (relative changes; the Hit gain is 3.4 percentage points).
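The relative changes can be checked directly from the averaged test metrics in Table 4. The variable names below refer to the reduct from the recursive algorithm and the reduct from the classical addition–deletion algorithm; they are labels chosen for this sketch.

```python
# Averaged test-set metrics from Table 4.
mse_recursive, mse_classic = 0.0049, 0.0055
hit_recursive, hit_classic = 86.3, 82.9  # percent

mse_drop = (mse_classic - mse_recursive) / mse_classic * 100  # relative decrease, %
hit_gain = (hit_recursive - hit_classic) / hit_classic * 100  # relative increase, %
hit_gain_pp = hit_recursive - hit_classic                     # percentage points

print(f"Test_MSE -{mse_drop:.1f}%, Hit +{hit_gain:.1f}% ({hit_gain_pp:.1f} points)")
# prints: Test_MSE -10.9%, Hit +4.1% (3.4 points)
```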

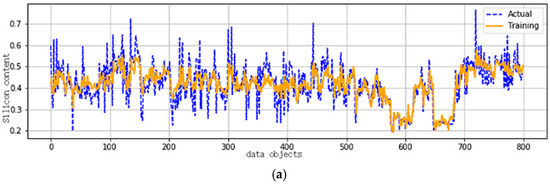

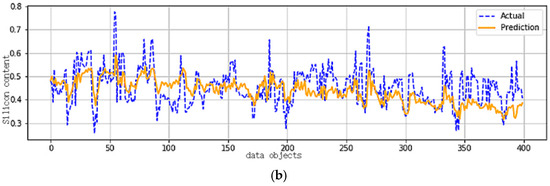

For further analysis, we selected the most representative run, test number 3, shown in Figure 5 and Figure 6. On the training set, the predictions of the two models are almost identical. On the test set, however, the models trained on fail to track changes in the silicon content after time point 300, whereas the models trained on perform better and effectively capture the trend of the silicon content.

Figure 5. Predictive results based on (a) Training set. (b) Test set.

Figure 6. Predictive results based on (a) Training set. (b) Test set.

The above analysis shows that the priority-optimal reduct retains more precise and relevant information than the reduct produced by the classical algorithm, and that models trained on it generalize better. The recursive attribute reduction algorithm is therefore practical for real-world applications such as this one.

5. Conclusions

In this study, we introduced a new definition of the priority-optimal reduct for complex industrial processes within rough set theory and, based on it, developed a recursive attribute reduction algorithm. To the best of our knowledge, this is the first recursive construction in rough set theory, which gives it considerable research value. Moreover, experiments on silicon content prediction demonstrate the effectiveness of our algorithm under complex blast furnace conditions.

We applied the new attribute reduction algorithm to feature selection for hot metal silicon content data from a blast furnace. Beyond the description of prior knowledge, the characteristics of the data themselves should also be considered. Because the data are numerical, a discernibility relation that relies on discretized data may introduce quantization error. Further investigation of tolerance relations, fuzzy relations, or new discernibility relations is therefore expected to yield better performance on this problem.

It should be noted that the performance of the proposed algorithm in practical applications depends heavily on a reasonable priority sequence, which is usually derived from experience or from mechanism analysis. Consequently, there is a potential risk of overfitting when the algorithm is applied to datasets from a specific domain. We leave a more robust method for determining the priority sequence to future work.

Author Contributions

Conceptualization, L.Y. and P.C.; methodology, L.Y.; software, P.C., Y.G. and Z.L.; validation, P.C., Y.G. and Z.L.; formal analysis, Z.L.; investigation, P.C., L.Y. and Z.L.; resources, L.Y.; data curation, P.C., Y.G. and Z.L.; writing—original draft preparation, L.Y. and P.C.; writing—review and editing, L.Y. and Z.L.; visualization, Y.G. and Z.L.; supervision, L.Y.; project administration, L.Y.; funding acquisition, L.Y. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 61773406) and the Natural Science Foundation of Hunan (Grant No. 2021JJ30877). The APC was funded by the Natural Science Foundation of Hunan (Grant No. 2021JJ30877).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The tested datasets originate from the UCI Machine Learning Repository.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Taher, D.I.; Abu-Gdairi, R.; El-Bably, M.K.; El-Gayar, M.A. Decision-making in diagnosing heart failure problems using basic rough sets. AIMS Math. 2024, 9, 21816–21847.

- Zhang, Q.; Xie, Q.; Wang, G. A survey on rough set theory and its applications. CAAI Trans. Intell. Technol. 2016, 1, 323–333.

- Yin, L.; Cao, K.; Jiang, Z.; Li, Z. RA-MRS: A high efficient attribute reduction algorithm in big data. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102064.

- Qin, L.; Wang, X.; Yin, L.; Jiang, Z. A distributed evolutionary based instance selection algorithm for big data using Apache Spark. Appl. Soft Comput. 2024, 159, 111638.

- Akram, M.; Ali, G.; Alcantud, J.C.R. Attributes reduction algorithms for m-polar fuzzy relation decision systems. Int. J. Approx. Reason. 2022, 140, 232–254.

- Liu, G.; Feng, Y. Knowledge granularity reduction for decision tables. Int. J. Mach. Learn. Cyber. 2022, 13, 569–577.

- Yin, L.; Qin, L.; Jiang, Z.; Xu, X. A fast parallel attribute reduction algorithm using Apache Spark. Knowl.-Based Syst. 2021, 212, 106582.

- Xie, X.; Gu, X.; Li, Y.; Ji, Z. K-size partial reduct: Positive region optimization for attribute reduction. Knowl.-Based Syst. 2021, 228, 107253.

- Turaga, V.K.H.; Chebrolu, S. Rapid and optimized parallel attribute reduction based on neighborhood rough sets and MapReduce. Expert Syst. Appl. 2025, 260, 125323.

- Kumar, A.; Prasad, P.S.V.S.S. Enhancing the scalability of fuzzy rough set approximate reduct computation through fuzzy min–max neural network and crisp discernibility relation formulation. Eng. Appl. Artif. Intell. 2022, 110, 104697.

- Sowkunta, P.; Prasad, P.S.V.S.S. MapReduce based parallel fuzzy-rough attribute reduction using discernibility matrix. Appl. Intell. 2022, 52, 154–173.

- Xu, W.; Yuan, K.; Li, W.; Ding, W. An Emerging Fuzzy Feature Selection Method Using Composite Entropy-Based Uncertainty Measure and Data Distribution. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 7, 76–88.

- Sang, B.; Chen, H.; Yang, L.; Li, T.; Xu, W. Incremental Feature Selection Using a Conditional Entropy Based on Fuzzy Dominance Neighborhood Rough Sets. IEEE Trans. Fuzzy Syst. 2022, 30, 1683–1697.

- Ji, X.; Li, J.; Yao, S.; Zhao, P. Attribute reduction based on fusion information entropy. Int. J. Approx. Reason. 2023, 160, 108949.

- Fontes, D.O.L.; Vasconcelos, L.G.S.; Brito, R.P. Blast furnace hot metal temperature and silicon content prediction using soft sensor based on fuzzy C-means and exogenous nonlinear autoregressive models. Comput. Chem. Eng. 2020, 141, 107028.

- Nistala, S.H.; Kumar, R.; Parihar, M.S.; Runkana, V. metafur: Digital Twin System of a Blast Furnace. Trans. Indian Inst. Met. 2024, 77, 4383–4393.

- Jiang, K.; Jiang, Z.; Xie, Y.; Pan, D.; Gui, W. Prediction of Multiple Molten Iron Quality Indices in the Blast Furnace Ironmaking Process Based on Attention-Wise Deep Transfer Network. IEEE Trans. Instrum. Meas. 2022, 71, 2512114.

- Liu, C.; Tan, J.; Li, J.; Li, Y.; Wang, H. Temporal Hypergraph Attention Network for Silicon Content Prediction in Blast Furnace. IEEE Trans. Instrum. Meas. 2022, 71, 2521413.

- Li, J.; Yang, C.; Li, Y.; Xie, S. A Context-Aware Enhanced GRU Network with Feature-Temporal Attention for Prediction of Silicon Content in Hot Metal. IEEE Trans. Ind. Inf. 2022, 18, 6631–6641.

- Wang, W.; Huang, B.; Wang, T. Optimal scale selection based on multi-scale single-valued neutrosophic decision-theoretic rough set with cost-sensitivity. Int. J. Approx. Reason. 2023, 155, 132–144.

- Shu, W.; Xia, Q.; Qian, W. Neighborhood multigranulation rough sets for cost-sensitive feature selection on hybrid data. Neurocomputing 2023, 565, 126990.

- Yang, J.; Kuang, J.; Liu, Q.; Liu, Y. Cost-Sensitive Multigranulation Approximation in Decision-Making Applications. Electronics 2022, 11, 3801.

- Su, H.; Chen, J.; Lin, Y. A four-stage branch local search algorithm for minimal test cost attribute reduction based on the set covering. Appl. Soft Comput. 2024, 153, 111303.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).