Abstract

Closed-set object detection models, represented by YOLO, are widely deployed in industry. However, these models are difficult to tune for easily confused objects in complex detection scenarios. Open-set object detection models such as GroundingDINO extend the range of detectable objects to some extent, but their accuracy still lags behind that of closed-set models and does not meet the requirements of high-precision detection in practical applications. In addition, existing detection technologies offer limited interpretability. They cannot clearly show users the basis and process by which a detection result was reached, and this undermines users' trust in the results and their willingness to act on them. To address these shortcomings, we propose a new object detection algorithm based on multi-modal large language models. It substantially improves the performance of closed-set object detection models on difficult boundary cases while preserving detection accuracy, yielding a semi-open-set object detection algorithm. Experiments on seven common traffic and safety-production scenarios show significant improvements in both accuracy and interpretability.

1. Introduction

Object detection has long been a central task in computer vision [1,2,3]. Its aim is to identify specific target objects, such as pedestrians, vehicles, and animals, in images or videos and to determine their location, size, and category. It underpins many applications, including autonomous driving, security monitoring, industrial inspection, and medical diagnosis.

On the one hand, closed-set object detection models adapt poorly to unusual situations because of the limitations of their architectures. This leads to frequent missed and false detections that severely reduce the accuracy and reliability of the results. On the other hand, open-set object detection models such as GroundingDINO have extended the detection range to some extent, but their accuracy still lags behind that of closed-set models. As a result, they struggle to meet the requirements of high-precision detection in practical applications [4].

Additionally, conventional tuning of object detection models usually requires a large amount of data. This raises the cost of data collection and processing and makes model tuning cumbersome and time-consuming. When new missed- and false-detection scenarios arise, existing models cannot be adjusted quickly and effectively, which hinders the continuous improvement of detection performance.

Moreover, existing detection technologies lack interpretability. They cannot clearly present the basis and process behind a detection result to users, so users are hesitant to trust the results and act on them.

To address these issues, we make two contributions.

First, we employ a multi-modal large model to resolve missed and false detections in closed-set object detection models. Building on this model, our method uses prompt engineering that emphasizes creative description and structured writing to automatically generate descriptions of detection scenarios and detected objects. Instruction fine-tuning then teaches the multi-modal large model to identify common objects that are frequently missed or falsely detected. After the object detection model completes its initial pass, the multi-modal model performs a secondary re-inspection and assigns a new confidence score in a highly interpretable way. This significantly improves the accuracy of the existing detection framework.

Second, we adopt local low-rank adaptation (Partial-LoRA) fine-tuning to reduce the amount of data needed for model tuning. Based on the local low-rank adaptation matrices, our method constructs a bypass matrix within the multi-modal large model. Prompt engineering is then used to build a few-shot fine-tuning dataset for training. When new missed- or false-detection scenarios arise, the model can be fine-tuned again. This iterative framework significantly improves the performance of closed-set object detection models on more challenging boundary cases while preserving detection accuracy, thereby realizing “semi-open set” detection.

2. Related Work

Object detection is a fundamental task in computer vision [5]. It aims to identify and locate specific target objects in images or videos, such as pedestrians, vehicles, and animals, and to determine their positions, sizes, and categories. This capability is essential for applications such as autonomous driving, security monitoring, industrial inspection, and medical diagnosis.

2.1. Traditional Object Detection Algorithms

Traditional algorithms, such as the HOG (histogram of oriented gradients) detector [6] and the DPM (deformable part model) detector [7], once played an important role in computer vision, but their limitations became apparent as the field developed. The HOG detector describes an image by computing histograms of gradient orientations in local regions. This representation adapts poorly to complex scenes and to targets in varied poses. With cluttered backgrounds, occlusion, or pose changes, HOG features cannot reliably capture the key characteristics of the target, which easily leads to inaccurate detections. The DPM detector assumes that a target can be decomposed into several deformable parts, and it detects the target by detecting and combining these parts. Although this handles target deformation to some extent, it still copes poorly with occlusion and illumination changes. In addition, DPM is computationally expensive because it requires many feature computations and matching operations. The resulting low detection speed makes it unsuitable for applications with strict real-time requirements.
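The core of the HOG descriptor can be sketched in a few lines. The sketch assumes a single grayscale cell held in a NumPy array and uses hard binning into 9 unsigned orientation bins. The full detector of [6] also interpolates votes between bins, normalizes over overlapping blocks, and passes the descriptor to a linear SVM, and none of those steps appears here. The function name `hog_cell_histogram` is illustrative.

```python
import numpy as np

def hog_cell_histogram(cell: np.ndarray, n_bins: int = 9) -> np.ndarray:
    """Orientation histogram of one grayscale cell (unsigned gradients, 0-180 degrees)."""
    cell = cell.astype(np.float64)
    # Central-difference gradients along x (columns) and y (rows); borders stay 0.
    gx = np.zeros_like(cell)
    gy = np.zeros_like(cell)
    gx[:, 1:-1] = cell[:, 2:] - cell[:, :-2]
    gy[1:-1, :] = cell[2:, :] - cell[:-2, :]
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation folded into [0, 180).
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0
    bin_width = 180.0 / n_bins
    bins = (angle // bin_width).astype(int) % n_bins
    hist = np.zeros(n_bins)
    # Each pixel votes for its orientation bin, weighted by its gradient magnitude.
    np.add.at(hist, bins.ravel(), magnitude.ravel())
    return hist

# A horizontal intensity ramp: all gradient energy falls into the 0-degree bin.
print(hog_cell_histogram(np.tile(np.arange(8), (8, 1))))
```

Weighting votes by gradient magnitude lets strong edges dominate the histogram. That is why HOG captures object outlines well, but it also makes the descriptor sensitive to clutter and occlusion, as noted above.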

2.2. Deep Learning Object Detection Algorithms

Deep learning algorithms based on neural networks, such as R-CNN (Region-based Convolutional Neural Network) [8], Faster R-CNN (Faster Region-based Convolutional Neural Network) [9], SSD (Single Shot MultiBox Detector) [10], RetinaNet [11], and the YOLO (You Only Look Once) series [12] of detection algorithms, have made significant progress in the field of computer vision, bringing revolutionary changes to the task of object detection.

The R-CNN algorithm uses Selective Search [13] to generate candidate regions and then performs feature extraction using a convolutional neural network (CNN) for each region, followed by classification and regression. The advantage of this method is that it can utilize the powerful feature extraction ability of CNNs, but due to the need for repeated feature extraction and calculation for a large number of candidate regions, the efficiency is relatively low. Specifically, when dealing with large-scale image data, R-CNN consumes a lot of computing resources and time because it needs to process each candidate region separately, including operations such as convolution and pooling. This repeated calculation makes it difficult for R-CNN to meet the requirements in application scenarios with high real-time requirements, such as autonomous driving and video surveillance.

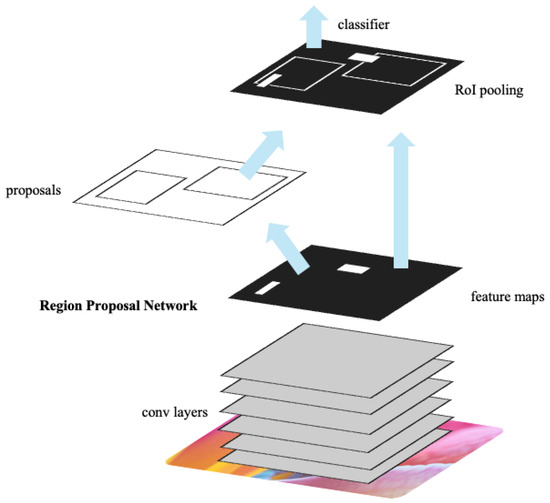

As shown in Figure 1, Faster R-CNN is a widely utilized technology in the field of object detection, designed to address the limitations of previous models by integrating region proposal generation directly into the neural network. It offers a sophisticated mechanism for detecting objects within images by leveraging deep learning architectures.

Figure 1.

Faster R-CNN is a single, unified network for object detection. The RPN module serves as the ‘attention’ mechanism of this unified network.

At the heart of Faster R-CNN is the Region Proposal Network (RPN), which plays a crucial role in improving the efficiency of the object detection process. The architecture of the RPN is designed to generate object proposals from an input image quickly. It does this by sliding a small network over the convolutional feature map output by the backbone network, which is usually a pre-trained convolutional neural network like VGG or ResNet. The RPN operates by using anchors at various scales and aspect ratios to identify regions in the image with high object probability, significantly reducing the number of potential object regions needing further analysis.
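A short NumPy sketch illustrates the anchor mechanism. It places anchors with three scales (128, 256, and 512 pixels) and three aspect ratios (1:2, 1:1, 2:1) at every cell of a feature map with stride 16, which are the settings reported for VGG-16 in the original Faster R-CNN paper. Centring each anchor on its cell and using the (x1, y1, x2, y2) box format are choices made for this sketch, and `generate_anchors` is a hypothetical helper rather than a library API.

```python
import numpy as np

def generate_anchors(feat_h, feat_w, stride=16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (feat_h * feat_w * len(ratios) * len(scales), 4) anchors as (x1, y1, x2, y2)."""
    base = []
    for r in ratios:            # r = height / width
        for s in scales:        # s = sqrt(anchor area) in pixels
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            base.append([-w / 2, -h / 2, w / 2, h / 2])
    base = np.array(base)       # (A, 4) anchor shapes centred at the origin
    # Anchor centres at the centre of each feature-map cell, in image pixels.
    cx = (np.arange(feat_w) + 0.5) * stride
    cy = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(cx, cy)                                     # each (feat_h, feat_w)
    shifts = np.stack([cx, cy, cx, cy], axis=-1).reshape(-1, 1, 4)   # (H*W, 1, 4)
    return (shifts + base[None, :, :]).reshape(-1, 4)

anchors = generate_anchors(38, 50)   # e.g. a 38 x 50 feature map
print(anchors.shape)
```

The RPN then scores every anchor for objectness and regresses a refinement for it, so the anchor count (nine per cell here) directly sets how many candidate regions are considered.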

Region proposals are rectangular regions of the image that are likely to contain an object. The RPN generates them by predicting the likelihood that a region contains an object and by refining the coordinates of the bounding box around that region. This focuses computation on the most promising areas of the image, improving both detection speed and accuracy.
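The box refinement step uses the parameterization from the Faster R-CNN paper. The predicted centre offsets (dx, dy) are scaled by the anchor's width and height, and the size offsets (dw, dh) are applied in log space. The following minimal decoding sketch assumes boxes in (x1, y1, x2, y2) format and omits the clipping and delta normalization used in practical implementations. `decode_boxes` is an illustrative name.

```python
import numpy as np

def decode_boxes(anchors: np.ndarray, deltas: np.ndarray) -> np.ndarray:
    """Apply (dx, dy, dw, dh) regression offsets to (N, 4) anchors in (x1, y1, x2, y2) format."""
    widths = anchors[:, 2] - anchors[:, 0]
    heights = anchors[:, 3] - anchors[:, 1]
    ctr_x = anchors[:, 0] + 0.5 * widths
    ctr_y = anchors[:, 1] + 0.5 * heights
    dx, dy, dw, dh = deltas.T
    # Centre shifts are relative to the anchor size; size changes are in log space.
    pred_ctr_x = ctr_x + dx * widths
    pred_ctr_y = ctr_y + dy * heights
    pred_w = widths * np.exp(dw)
    pred_h = heights * np.exp(dh)
    return np.stack([pred_ctr_x - 0.5 * pred_w, pred_ctr_y - 0.5 * pred_h,
                     pred_ctr_x + 0.5 * pred_w, pred_ctr_y + 0.5 * pred_h], axis=1)

# Shift a 10 x 10 anchor right by half its width.
print(decode_boxes(np.array([[0.0, 0.0, 10.0, 10.0]]), np.array([[0.5, 0.0, 0.0, 0.0]])))
```

Expressing offsets relative to anchor size makes the regression targets roughly scale-invariant. The same deltas therefore mean the same relative adjustment for small and large anchors.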

Feature maps in Faster R-CNN are multi-dimensional arrays derived from input images through convolutional layers of the backbone network. These feature maps are of reduced spatial dimensions as a result of pooling and convolution operations; the exact dimensions depend on the network architecture used. Typically, they encapsulate the essential features of the image needed for detecting objects, and their depth corresponds to the number of filters used in convolutional layers, capturing rich spatial hierarchies corresponding to various object features.

RoI (Region of Interest) pooling is a crucial component in the Faster R-CNN architecture. It standardizes the size of input features for the subsequent fully connected layers, enabling a fixed-dimensional input regardless of the region proposal’s size. This process is essential because fully connected layers require uniform input dimensions. RoI pooling achieves this by dividing each proposal into a fixed number of smaller regions (typically a 7 × 7 grid) and applying max pooling within each sub-region. This fixed-size output facilitates efficient batch processing and ensures consistent input to the network’s classifier and regressor, enhancing computational efficiency and model accuracy.
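The following sketch shows RoI max pooling for a single-channel feature map. It assumes the RoI has already been projected onto feature-map coordinates (for example, by dividing image coordinates by the backbone stride) and lies inside the map. Bin edges are rounded outward to whole cells, which approximates the quantization of the original layer. `roi_max_pool` is a hypothetical helper; torchvision provides an optimized `torchvision.ops.roi_pool`.

```python
import numpy as np

def roi_max_pool(feature: np.ndarray, roi, output_size: int = 7) -> np.ndarray:
    """Max-pool the region roi = (x1, y1, x2, y2) of a (H, W) feature map into an
    output_size x output_size grid. Coordinates are in feature-map cells."""
    x1, y1, x2, y2 = roi
    # Evenly spaced bin edges across the RoI.
    xs = np.linspace(x1, x2, output_size + 1)
    ys = np.linspace(y1, y2, output_size + 1)
    out = np.empty((output_size, output_size), dtype=feature.dtype)
    for i in range(output_size):
        # Round bin edges outward to whole cells; keep every bin at least one cell wide.
        r0 = int(np.floor(ys[i]))
        r1 = max(int(np.ceil(ys[i + 1])), r0 + 1)
        for j in range(output_size):
            c0 = int(np.floor(xs[j]))
            c1 = max(int(np.ceil(xs[j + 1])), c0 + 1)
            out[i, j] = feature[r0:r1, c0:c1].max()
    return out

fmap = np.random.rand(38, 50)
print(roi_max_pool(fmap, (10.0, 5.0, 24.5, 17.0)).shape)
```

Whatever the proposal size, the output is always a 7 × 7 grid per channel, which is what allows the following fully connected layers to have fixed input dimensions.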

The final step in the Faster R-CNN pipeline is classification. For this purpose, the model typically employs a softmax classifier to predict the class of the detected object. In parallel, a bounding box regressor fine-tunes the coordinates of the bounding boxes, ensuring they accurately fit around the detected objects. The classifier effectively distinguishes between objects of different classes based on the features extracted and pooled from the RoI pooling layer, while the regressor adjusts the bounding box dimensions for precise object localization.

Overall, Faster R-CNN seamlessly integrates object proposal, classification, and bounding box regression into a single network, making it a powerful and efficient choice for object detection tasks in various applications.

The SSD algorithm improves detection speed by predicting targets of different scales from feature maps of different resolutions, so targets of different sizes are detected in a single pass. However, its performance on small targets still needs improvement. Small targets carry little information on the feature maps, so SSD may struggle to identify and locate them accurately. SSD also has difficulty with occluded and overlapping targets, which can lead to false or missed detections.
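In the SSD paper, the default (anchor) boxes on the k-th of m prediction feature maps have a scale s_k that grows linearly from s_min = 0.2 to s_max = 0.9 of the image size. Each aspect ratio a then gives a box with width s_k·√a and height s_k/√a. The sketch below simply evaluates these formulas. Practical implementations may choose the scales differently and add an extra square box per location, so the numbers are illustrative only.

```python
import math

def ssd_scales(m=6, s_min=0.2, s_max=0.9):
    """Scale s_k (fraction of image size) for feature maps k = 1..m, linearly spaced."""
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]

def default_box_sizes(s_k, aspect_ratios=(1.0, 2.0, 3.0, 0.5, 1.0 / 3.0)):
    """(width, height) of default boxes at scale s_k: w = s_k*sqrt(a), h = s_k/sqrt(a)."""
    return [(s_k * math.sqrt(a), s_k / math.sqrt(a)) for a in aspect_ratios]

for k, s in enumerate(ssd_scales(), start=1):
    print(k, round(s, 2), [(round(w, 3), round(h, 3)) for w, h in default_box_sizes(s)])
```

Coarse, late feature maps receive large scales and fine, early maps receive small ones. Small objects therefore depend on the early, less semantically rich layers, which is consistent with SSD's weaker small-object performance.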

The RetinaNet algorithm addresses class imbalance by introducing the Focal Loss function. Class imbalance is the situation in object detection where background samples vastly outnumber target samples. Focal Loss down-weights easily classified samples so that the model focuses on hard-to-classify samples, which improves detection accuracy. However, RetinaNet still has limitations with complex backgrounds and multi-scale targets. When the background is very complex or the target scale varies widely, it may fail to separate the target accurately from the background, leading to inaccurate detections.
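Focal Loss can be written as FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class. The factor (1 - p_t)^γ shrinks the loss of well-classified examples. The NumPy sketch below uses α = 0.25 and γ = 2, the defaults reported for RetinaNet, and normalizes by the number of positive anchors as that paper does. It covers only the binary (per-class sigmoid) case, and `focal_loss` is an illustrative name.

```python
import numpy as np

def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Sigmoid focal loss summed over anchors and divided by the number of positives.

    p: predicted foreground probabilities; y: labels (1 = object, 0 = background).
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    y = np.asarray(y)
    p_t = np.where(y == 1, p, 1.0 - p)               # probability assigned to the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)   # class-balancing weight
    loss = -alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)
    return float(loss.sum() / max(1, int((y == 1).sum())))

# An easy background anchor versus a hard (misclassified) one.
print(focal_loss([0.01], [0]), focal_loss([0.9], [0]))
```

With γ = 0 and α = 0.5 the expression reduces to half the ordinary binary cross-entropy. This provides a convenient sanity check, and it shows that the modulating factor is what suppresses the flood of easy background anchors.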

The YOLO series of algorithms is fast and can process images in real time. It takes an end-to-end approach and predicts directly over the entire image without generating candidate regions. This greatly improves detection speed and makes YOLO suitable for scenarios with very strict real-time requirements. However, YOLO is sometimes less accurate than more complex algorithms. Its ability to detect small targets is relatively weak because small targets occupy only a small fraction of the image and their features are difficult to capture. In addition, YOLO's localization may be insufficiently precise, for example when detecting small, distant objects or delineating the edges of a target.
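The label assignment of the original YOLO (v1) shows how YOLO avoids candidate regions. The image is divided into an S × S grid, and the cell that contains an object's centre is responsible for predicting that object, with the centre regressed as an offset inside the cell. Later versions, including YOLOv8, use different assignment schemes. The sketch below, with S = 7 and a hypothetical `responsible_cell` helper, therefore illustrates the idea rather than any current implementation.

```python
def responsible_cell(box, img_w, img_h, S=7):
    """YOLOv1-style assignment for box = (x1, y1, x2, y2) in pixels.

    Returns (row, col, off_x, off_y): the grid cell that contains the box centre and
    the centre's offset inside that cell, in [0, 1] (1 only for centres on the
    right or bottom image border).
    """
    x1, y1, x2, y2 = box
    gx = (x1 + x2) / 2 * S / img_w   # box centre in grid units
    gy = (y1 + y2) / 2 * S / img_h
    col = min(int(gx), S - 1)        # clamp centres lying exactly on the far edge
    row = min(int(gy), S - 1)
    return row, col, gx - col, gy - row

print(responsible_cell((200, 200, 248, 248), 448, 448))
```

Each cell predicts only a few boxes, so several small objects whose centres fall in the same cell compete for those predictions. This is one concrete reason for the small-target weakness described above.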

2.3. Open-Set Object Detection Algorithms

The rapidly developing open-set object detection algorithms, such as GroundingDINO [14] and RAM [15], have brought new ideas and methods to the field of object detection. GroundingDINO is a cutting-edge framework designed for integrating text and image data to achieve advanced tasks like object detection with natural language cues. This technology emphasizes the seamless interaction between visual and textual information, enabling models to understand and locate specific objects described by text within an image.

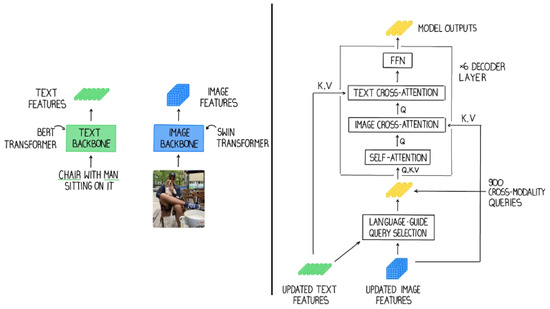

As shown in Figure 2, the text backbone in GroundingDINO processes the input text and transforms it into a representation known as vanilla text features. These features are extracted by a transformer-based model that encodes the semantic meaning of the text as continuous vectors. The encoding captures not only individual words but also their contextual relationships within sentences.

The feature enhancer in GroundingDINO uses a series of transformer layers to refine the initial multi-modal embeddings. Through mechanisms such as self-attention, it makes the features derived from text and images more expressive and contextually relevant, which prepares them for further processing and fusion.

Text features in GroundingDINO form a sequence of embeddings, one per token of the input text. These embeddings share a fixed dimensionality, typically a common embedding size such as 512 or 768. This keeps them compatible with the rest of the architecture and allows inputs of different lengths and complexities to be represented efficiently.

Figure 2.

The backbone encoders that extract text and image features in GroundingDINO, followed by language-guided query selection and the cross-modality decoder. Green denotes text-modality information, blue denotes image-modality information, and yellow denotes fused multi-modal information.

The image features in GroundingDINO are extracted from the high-level feature maps of a strong backbone network, such as a ResNet or a Vision Transformer (ViT). These features are multi-dimensional arrays with spatial dimensions and a depth equal to the number of channels, and they capture the detailed visual information needed for precise detection. Language-guided query selection is a pivotal component that aligns image features with the textual input and directs attention to relevant image regions. It computes the similarity between image features and text features and selects the image features most relevant to the text to initialize the decoder queries. This improves the model's ability to pinpoint regions of interest in the image.
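In the GroundingDINO paper, language-guided query selection computes the dot-product similarity between every image token and every text token, scores each image token by its best match, and keeps the top-scoring tokens (900 in the paper) to initialize the decoder queries. The following minimal NumPy sketch covers the selection step only; feature extraction, positional information, and batching are omitted, and the function name is illustrative.

```python
import numpy as np

def language_guided_query_selection(image_feats, text_feats, num_queries=900):
    """Return indices of the image tokens that are most similar to the text.

    image_feats: (N_img, d) image token features.
    text_feats:  (N_text, d) text token features.
    """
    logits = image_feats @ text_feats.T        # (N_img, N_text) similarities
    best_match = logits.max(axis=1)            # best-matching text token per image token
    k = min(num_queries, image_feats.shape[0])
    return np.argsort(-best_match)[:k]         # highest-scoring image tokens first

rng = np.random.default_rng(0)
idx = language_guided_query_selection(rng.normal(size=(5000, 256)), rng.normal(size=(12, 256)))
print(idx.shape)
```

Selecting queries this way means that decoding starts from image locations that already match the prompt, instead of from text-agnostic learned positions.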

The cross-modality decoder is crucial for merging text and image features into a unified representation. It leverages transformer layers to build connections between the two modalities using cross-attention. The decoder is trained on paired textual and visual data, optimizing it to create coherent outputs that align with the expected predictions, thus providing a robust understanding of both text and image content.

GroundingDINO integrates text features with decoded outputs through a careful synthesis of information streams. By using attention mechanisms, the model focuses on essential contributions from each modality to produce a cohesive output. This integration aligns semantic content from text with corresponding visual elements, ensuring a seamless synthesis for accurate model predictions.

Self-attention in GroundingDINO enables the model to weigh the importance of each feature element relative to others in the sequence. This mechanism allows for capturing dependencies and relationships within both text and image data, enhancing the model’s ability to represent complex patterns through effective feature aggregation.
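Single-head scaled dot-product attention illustrates the self-attention described here. Each element's output is a weighted average of all value vectors, and the weights come from a softmax over scaled query-key dot products. The sketch below is generic NumPy with randomly initialized projection matrices. It is not GroundingDINO's implementation, which uses multi-head attention inside larger transformer blocks.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x of shape (n, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # weights[i, j] is how strongly element i attends to element j.
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 16))                  # 5 tokens with 16-dim features
w_q, w_k, w_v = (rng.normal(size=(16, 8)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.sum(axis=1))
```

Each row of `weights` sums to 1, which makes the "importance of each feature element relative to others" directly inspectable.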

Deformable self-attention in GroundingDINO increases computational efficiency by restricting attention to a sparse subset of key positions in the feature map. This improves scaling to high-dimensional inputs and focuses computation on the most informative parts of the data.

Image-to-text cross-attention enables interaction between visual and textual features by allowing image features to query relevant text features. This strengthens the contextual alignment between the two modalities, so textual information can dynamically refine the interpretation of visual data.

The Feed-Forward Network (FFN) in GroundingDINO is a position-wise transformation applied after the attention layers. It consists of linear transformations and an activation function such as ReLU, and it increases the model's capacity to produce rich feature representations, which contributes to its predictive performance in multi-modal tasks.
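Image-to-text cross-attention and the FFN can be sketched in the same style. Image tokens supply the queries, text tokens supply the keys and values, and a position-wise two-layer network with ReLU follows. Residual connections are included, while layer normalization, multiple heads, and deformable attention are omitted. The weight shapes are assumptions chosen so that the residual sums line up.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def image_to_text_cross_attention(img, txt, w_q, w_k, w_v):
    """img: (N_img, d) queries; txt: (N_text, d) keys and values. Returns (N_img, d)."""
    q, k, v = img @ w_q, txt @ w_k, txt @ w_v
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1]))   # (N_img, N_text)
    return img + attn @ v                             # residual connection

def ffn(x, w1, b1, w2, b2):
    """Position-wise feed-forward network: Linear, ReLU, Linear, plus residual."""
    return x + np.maximum(0.0, x @ w1 + b1) @ w2 + b2

rng = np.random.default_rng(0)
d = 8
img, txt = rng.normal(size=(6, d)), rng.normal(size=(3, d))
h = image_to_text_cross_attention(img, txt, *(rng.normal(size=(d, d)) for _ in range(3)))
y = ffn(h, rng.normal(size=(d, 32)), np.zeros(32), rng.normal(size=(32, d)), np.zeros(d))
print(y.shape)
```

With a single text token, every attention weight equals 1, so each image token receives that token's value vector. This gives a simple check that information flows from text to image.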

However, these algorithms also have limitations. For example, with large-scale data and complex scenes, GroundingDINO may be constrained by computing resources, which reduces detection efficiency. The computational cost rises significantly when processing high-resolution images or large-scale video. With class-imbalanced data, RAM may perform poorly on rare categories. When only a few samples of a category are available, it may not learn that category's features fully, and detection accuracy suffers. Large-model algorithms based on text retrieval and contrastive learning also depend on the accuracy and completeness of the text description. If the description is ambiguous, incomplete, or inaccurate, the algorithm may misinterpret the target, leading to errors in the detection results.

3. Method

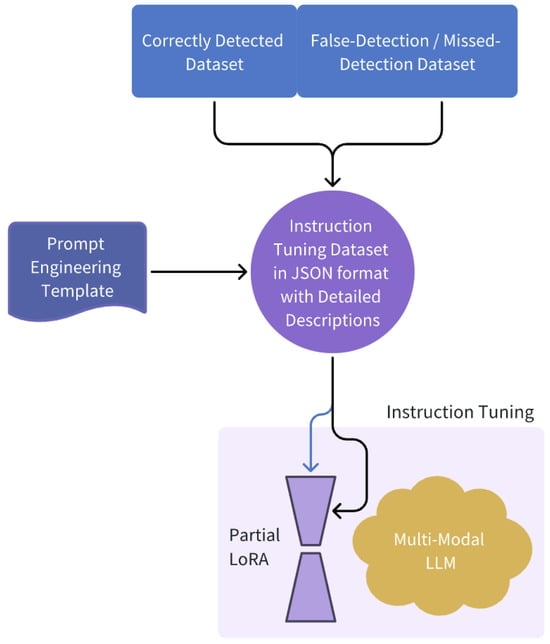

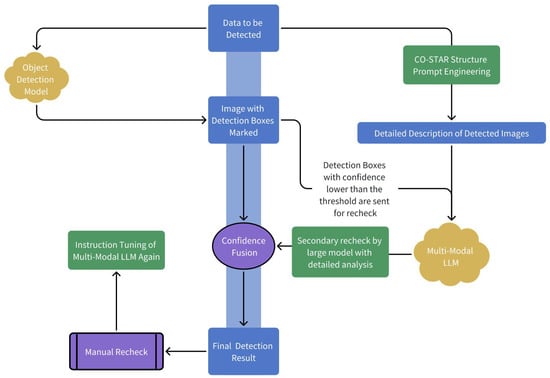

Our approach uses the rich pre-trained knowledge and VQA capabilities of a multi-modal large language model to perform rapid instruction tuning with a small number of samples [16], yielding a version of the model localized to a specific scene. The localized multi-modal model is then integrated into the recheck stage of object detection, where it simulates the traditional manual inspection process in the form of VQA. CO-STAR prompt engineering [17] is used to strengthen the model's output, from which the model's recheck confidence is obtained. This confidence is fused with the original confidence to produce the final output. The approach substantially improves the interpretability of object detection, can be integrated quickly into existing closed-set object detection frameworks, and delivers rapid performance gains. The method is described in detail below with reference to Figures 3, 4, and 5.

Figure 3.

The training framework diagram of the instruction tuning stage. Here, a customized PEFT method is used, which emphasizes the influence of image tokens on model output. In this way, it reduces the hallucination problem of the model and improves the generalization of its VQA ability.

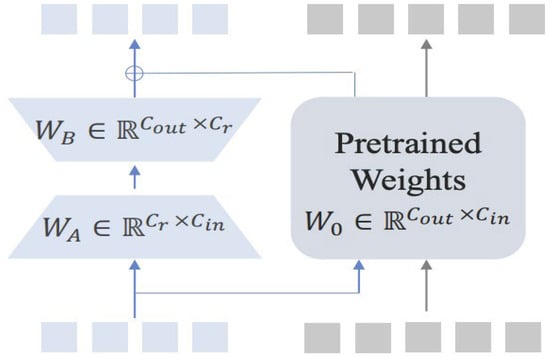

Figure 4.

An illustration of the Partial-LoRA. The blue tokens signify the visual tokens, while the gray tokens denote the language tokens. Notably, the Partial-LoRA is solely applied to the visual tokens.

Figure 5.

This diagram shows how the multi-modal LLM is integrated into the inference process of the object detection model. After combining the description of the original scene and the detected image, the LLM gives a new confidence score and corresponding description for potential targets. These two outputs are respectively used for Confidence Fusion and manual recheck.

3.1. Multi-Modal LLM Training Based on Instruction Tuning

First, select specific few-shot scenarios, such as hardhat detection at construction sites or electric-bicycle detection in elevators. Then fix the number of few-shot training samples. Taking 20 samples (20-shot) as an example, 1/5 (4 samples) are correctly detected targets, 2/5 (8 samples) are missed targets, and the remaining 2/5 (8 samples) are falsely detected non-targets. The instruction-tuning dataset is thus constructed in a 1:2:2 ratio.
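The 1:2:2 composition can be expressed as a small helper that splits an n-shot budget into correctly detected, missed, and falsely detected samples. The paper gives only the 20-shot case. Assigning any remainder (when n is not a multiple of 5) to the false-detection group is an assumption of this sketch, and `few_shot_split` is a hypothetical name.

```python
def few_shot_split(n_shots: int):
    """Split an n-shot budget into (correct, missed, false) samples in a 1:2:2 ratio.

    Any remainder, when n_shots is not a multiple of 5, goes to the false-detection
    group (an assumption of this sketch).
    """
    n_correct = n_shots // 5
    n_missed = 2 * n_shots // 5
    n_false = n_shots - n_correct - n_missed
    return n_correct, n_missed, n_false

for n in (5, 20, 50):
    print(n, few_shot_split(n))
```

Four fifths of the budget go to error cases, which reflects the goal of the fine-tuning: teaching the model to recognize missed and false detections rather than to re-describe easy positives.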

Utilize the CO-STAR structure to construct prompts as follows:

(C) Context: Provide background information related to the task, such as the detection scene, for example surveillance at an intersection in city x, province x.

(O) Objective: Define the task the LLM should focus on, namely detailed descriptions of correctly detected data and detailed analyses of the causes of missed and falsely detected data.

(S) Style: Specify the writing style that the LLM is expected to use. Here, it is set to be complete, rigorous, and analyzed step by step.

(T) Tone: Set the response attitude. Here, it is required to be neutral and not contain emotions.

(A) Audience: Determine the target audience of the response so that it is appropriate and understandable in the intended context. Here, the responses serve as data for fine-tuning the large model.

(R) Response: Provide the response format. This ensures that the LLM outputs the format required for your downstream tasks. Here, it is output in the JSON multi-round dialogue format required for instruction tuning.
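A CO-STAR prompt and one instruction-tuning record might be assembled as shown below. The section texts paraphrase the settings listed above. The `# SECTION` headings, the record layout (`id`, `image`, and `conversations` with `from`/`value` turns), the sample identifiers, and both helper names are assumptions for illustration, and the answer text is left as a placeholder. The exact JSON schema should follow the documentation of the fine-tuning toolkit in use.

```python
import json

CO_STAR_SECTIONS = ("Context", "Objective", "Style", "Tone", "Audience", "Response")

def build_co_star_prompt(sections: dict) -> str:
    """Concatenate the six CO-STAR sections in order, each under its own heading."""
    missing = [name for name in CO_STAR_SECTIONS if name not in sections]
    if missing:
        raise ValueError(f"missing CO-STAR sections: {missing}")
    return "\n\n".join(f"# {name.upper()}\n{sections[name]}" for name in CO_STAR_SECTIONS)

def to_dialogue_record(sample_id: str, image_path: str, prompt: str, answer: str) -> dict:
    """One hypothetical dialogue record (a single user/assistant round here)."""
    return {
        "id": sample_id,
        "image": [image_path],
        "conversations": [
            {"from": "user", "value": prompt},
            {"from": "assistant", "value": answer},
        ],
    }

prompt = build_co_star_prompt({
    "Context": "Surveillance camera at an intersection in city x, province x; task: hard-hat detection.",
    "Objective": "Describe correctly detected targets in detail; analyse why missed or false detections occurred.",
    "Style": "Complete, rigorous, step-by-step analysis.",
    "Tone": "Neutral, without emotion.",
    "Audience": "Training data for fine-tuning a multi-modal large model.",
    "Response": "JSON multi-round dialogue suitable for instruction tuning.",
})
record = to_dialogue_record("hardhat_0001", "images/hardhat_0001.jpg", prompt, "...")
print(json.dumps(record, ensure_ascii=False, indent=2))
```

Rejecting prompts with missing sections keeps every generated sample structurally identical. This matters when hundreds of descriptions are produced automatically and then refined by hand.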

Using these prompts, obtain the corresponding instruction-tuning data and start training. In this experiment, the model used is InternLM-XComposer-2.5.

Our exploration of mainstream PEFT methods for LLM fine-tuning, such as LoRA [18], Adapter [19], and AdaLoRA [20], revealed limitations in existing approaches. Treating visual and language tokens equally overlooks the inherent differences between the modalities, whereas treating them as completely distinct entities incurs a substantial alignment cost. To address this, we use Partial-LoRA. Inspired by the original LoRA, it applies a low-rank adaptation only to the input tokens of the new (visual) modality. Applied to all visual tokens, it captures and integrates the unique characteristics of the visual modality without sacrificing the capabilities of the LLM. This improves multi-modal performance while reducing the alignment cost.

As shown in Figure 4, for each linear layer $L_0$ in the LLM blocks, we denote its weight matrix by $W_0 \in \mathbb{R}^{C_{out} \times C_{in}}$ and its bias by $B_0 \in \mathbb{R}^{C_{out}}$, where $C_{in}$ and $C_{out}$ are the input and output dimensions. The corresponding Partial-LoRA contains two low-rank matrices of rank $C_r$, namely $W_A \in \mathbb{R}^{C_r \times C_{in}}$ and $W_B \in \mathbb{R}^{C_{out} \times C_r}$. Given an input $x = [x_v, x_t]$, where $x_v$ and $x_t$ are the visual tokens and language tokens of the input sequence, respectively, the output feature $\hat{x}$ is obtained by

$$\hat{x}_t = W_0 x_t + B_0$$

$$\hat{x}_v = W_0 x_v + W_B W_A x_v + B_0$$

$$\hat{x} = [\hat{x}_v, \hat{x}_t]$$
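The Partial-LoRA equations can be checked numerically with a NumPy sketch. Every token passes through the frozen weights W_0 and B_0, and only tokens flagged as visual also receive the low-rank bypass W_B W_A x. Matching the equations, no LoRA scaling factor is applied. Initializing W_B to zero, as standard LoRA does, makes the layer start out identical to the frozen one. The boolean `is_visual` mask and the function name are assumptions of this sketch.

```python
import numpy as np

def partial_lora_linear(x, is_visual, W0, B0, WA, WB):
    """Partial-LoRA forward pass for one linear layer.

    x: (n, C_in) token features; is_visual: (n,) boolean mask of visual tokens.
    W0: (C_out, C_in), B0: (C_out,), WA: (C_r, C_in), WB: (C_out, C_r).
    """
    out = x @ W0.T + B0                    # W0 x + B0 for every token
    bypass = x[is_visual] @ WA.T @ WB.T    # W_B W_A x_v for visual tokens only
    out[is_visual] += bypass
    return out

rng = np.random.default_rng(0)
C_in, C_out, C_r, n = 16, 12, 4, 6
x = rng.normal(size=(n, C_in))
is_visual = np.array([True, True, True, False, False, False])  # x = [x_v, x_t]
W0, B0 = rng.normal(size=(C_out, C_in)), rng.normal(size=C_out)
WA, WB = rng.normal(size=(C_r, C_in)), rng.normal(size=(C_out, C_r))
y = partial_lora_linear(x, is_visual, W0, B0, WA, WB)
print(y.shape)
```

Only W_A and W_B would be trained, which adds C_r × (C_in + C_out) parameters per layer. The language path stays exactly as in the frozen LLM.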

3.2. Enhanced Object Detection Framework Incorporating Multi-Modal LLM

Use a mature, already deployed object detection model such as YOLOv8 for the initial detection. Set either no confidence threshold or a relatively low one at this stage, so that enough candidate boxes are passed to the recheck stage.

Next, use the large model with the CO-STAR framework to generate a background description of the original image without detection results, which helps suppress hallucinations. Then, based on this background description and the initial detection results, use the model's visual question-answering ability to infer a recheck confidence score for each detection box. Boxes that the large model judges to be false detections receive a confidence of 0, and a detailed analysis is provided for each detection.
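The rule that boxes judged to be false detections receive a recheck confidence of 0 can be sketched as a small parser for the model's answer. The JSON fields `is_target`, `confidence`, and `analysis` are hypothetical. The paper specifies JSON output through the CO-STAR Response section but does not give a schema. The analysis text is passed through unchanged for later manual review.

```python
import json

def parse_recheck(response_text: str):
    """Turn one (hypothetical) JSON recheck answer into (confidence, analysis).

    Expected shape, assumed for this sketch:
    {"is_target": true, "confidence": 0.87, "analysis": "..."}
    """
    data = json.loads(response_text)
    analysis = str(data.get("analysis", ""))
    if not data.get("is_target", False):
        return 0.0, analysis               # judged a false detection: confidence set to 0
    confidence = float(data.get("confidence", 0.0))
    return min(max(confidence, 0.0), 1.0), analysis   # clamp to [0, 1]

print(parse_recheck('{"is_target": false, "confidence": 0.7, "analysis": "reflection on glass"}'))
```

Treating a missing or negative `is_target` as a rejection is a conservative choice. A malformed answer then lowers a box's fused score instead of silently confirming it.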

Fuse the two confidences as a weighted sum, where $T_1$ is the confidence from the initial detection and $T_2$ is the recheck confidence from the multi-modal large model:

$$T_{final} = w_1 T_1 + w_2 T_2, \quad w_1 + w_2 = 1$$
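The fusion rule is a convex combination of the two confidences. The minimal sketch below derives w_2 as 1 - w_1 so the constraint always holds. The paper does not report the weights, so the default w_1 = 0.5 is purely illustrative.

```python
def fuse_confidence(t1: float, t2: float, w1: float = 0.5) -> float:
    """T_final = w1 * T1 + w2 * T2 with w2 = 1 - w1."""
    if not 0.0 <= w1 <= 1.0:
        raise ValueError("w1 must lie in [0, 1]")
    w2 = 1.0 - w1
    return w1 * t1 + w2 * t2

# Detector score 0.6, rejected by the multi-modal model (T2 = 0).
print(fuse_confidence(0.6, 0.0))
```

A rejected box has T_2 = 0, so its fused score is at most w_1 · T_1. With w_1 = 0.5, a box that the detector scored 0.6 but the multi-modal model rejected ends at 0.3.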

The last step is optional. If the rechecking ability of the multi-modal large model needs further improvement, the final detection results can be rechecked manually. Combined with the model's recheck output, this manual review helps localize problems, and the model then undergoes another round of instruction tuning as described in Section 3.1.

4. Experiment

To verify that the proposed method can be combined with commonly used closed-set object detection algorithms in real scenarios, and to measure the improvement it brings, we deploy two classical baselines: YOLOv8 [21] and Faster R-CNN, the latter implemented with the MMDetection framework. The multi-modal large model is then integrated into the detection process of each baseline using the proposed algorithm. To stay close to actual production conditions, the evaluation uses a privately collected dataset covering seven types of common traffic and safety-production monitoring scenarios, including hard hats, smoking, making phone calls, fire extinguishers, electric bicycles entering elevators, and reflective vests. Each scenario contains 100 to 300 test samples. Two evaluation metrics are used: mean average precision at an IoU threshold of 0.50 (mAP50) and mean average precision averaged over IoU thresholds from 0.50 to 0.95 (mAP50-95).
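Both metrics rest on the intersection-over-union (IoU) between predicted and ground-truth boxes. A prediction counts as a true positive only if its IoU with a matching ground-truth box reaches the threshold. The sketch below shows IoU and the COCO-style averaging behind mAP50-95 over the ten thresholds 0.50, 0.55, ..., 0.95. Computing AP itself (ranking by confidence, matching, and integrating the precision-recall curve) is left to the caller through the hypothetical `ap_at` callable. In practice the metric is usually computed with a library such as pycocotools.

```python
import numpy as np

def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)   # 0.50, 0.55, ..., 0.95

def map50_95(ap_at):
    """Average AP over the ten IoU thresholds; ap_at(t) returns mAP at IoU threshold t."""
    return float(np.mean([ap_at(t) for t in IOU_THRESHOLDS]))

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

mAP50 is simply `ap_at(0.5)`. mAP50-95 also rewards tight localization, which is why it is consistently lower than mAP50 for the same detector.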

As shown in Table 1, the detection framework rebuilt with the proposed method outperforms both baselines on both metrics. The enhanced YOLOv8 framework improves mAP50-95 by 0.035 and mAP50 by 0.027, reaching an mAP50 of 0.944. The enhanced Faster R-CNN framework improves mAP50-95 by 0.035 and mAP50 by 0.024. YOLOv8 therefore benefits slightly more from the framework. We attribute this to YOLO's single-stage design, which we believe leaves the multi-modal large model more room for correction in complex scenes and thus yields higher detection precision.

Table 1.

The precision comparison of integrating this framework into YOLOv8 and Faster R-CNN.

We also tested whether, when trained with the proposed process, the multi-modal large model avoids hallucinations and can correct a wide range of missed- and false-detection samples. This experiment is based on common false-detection data from the seven detection scenarios. To assess few-shot training, 5 to 50 training samples and 200 validation samples are selected for each category. For the instruction-tuning JSON dataset, descriptions are generated with prompt engineering and then refined manually. To assess the proposed local fine-tuning method, the original LoRA and LoRA combined with adapters are used as control groups. The evaluation criterion is the error correction rate, calculated as follows:

Table 2 shows that the error correction rate improves steadily as the number of training samples increases. The improvement is largest between 5 and 20 shots and modest between 20 and 50 shots. Partial-LoRA achieves the highest correction rate at every shot count. This indicates that emphasizing visual information during fine-tuning is reasonable, and that the approach is better suited than the traditional LoRA to fine-grained, cross-scenario visual understanding tasks such as these.

Table 2.

Correction rates of different PEFT methods.

5. Conclusions

We introduce an approach to tackle missed and false detections in closed-set object detection models. Using a multi-modal large model and prompt engineering, the method generates descriptive data for detection scenarios and objects and fine-tunes the model to identify commonly missed and falsely detected objects. The fine-tuned model then performs a secondary recheck, which improves the accuracy of the existing detection framework with high interpretability.

Local low-rank adaptation fine-tuning reduces the data required for model optimization. The resulting iterative framework significantly improves detection on difficult boundary cases, achieving “semi-open set” detection while preserving accuracy.

Experiments show that the detection framework rebuilt with the proposed method outperforms common closed-set object detection algorithms in real-world scenarios. Evaluation on common monitoring scenarios shows gains for both baselines in mAP50 (IoU threshold 0.50) and in mAP50-95 (IoU thresholds 0.50 to 0.95).

The experiments also confirm that the fine-tuned multi-modal large model avoids hallucinations and corrects missed- and false-detection samples. The correction rate increases with the number of training samples, with the largest gains between 5 and 20 shots and modest gains between 20 and 50 shots.

Future work includes the following:

- Exploring the application of the model in more diverse and complex scenarios to improve its generalization ability;

- Investigating the integration of additional modalities or features to obtain more comprehensive and accurate detection results;

- Studying the interpretability and explainability of the model to better understand its decision-making process and increase its reliability.

Author Contributions

Conceptualization, L.X., K.W. and Y.W.; methodology, Y.W., L.X. and K.W.; software, Y.W. and K.W.; validation, X.H., J.Y. and Y.G.; formal analysis, L.X., Y.W. and Z.J.; resources, X.Z., W.W. and Y.X.; data curation, K.W., J.Y. and Y.W.; writing—original draft preparation, Y.W. and L.X.; writing—review and editing, Y.W. and L.X.; supervision, L.X., A.M. and Z.J.; project administration, K.W. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

There is no funding information in this work.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy restrictions. The dataset is an internal company asset of Beijing Zhuoshizhitong Technology Co., Ltd.

Conflicts of Interest

Authors Jinyu Yan and Yang Guo are employed by the company Beijing Zhuoshizhitong Technology Co., Ltd., and Xing Zhang and Wei Wang are employed by the company China Resources Digital Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Dhamija, A.; Gunther, M.; Ventura, J.; Boult, T. The overlooked elephant of object detection: Open set. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, The Westin Snowmass Resort, CO, USA, 2–5 March 2020; pp. 1021–1030.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. Int. Conf. Comput. Vis. Pattern Recognit. 2005, 2, 886–893.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Li, C.; Yang, J.; Su, H.; Zhu, J.; et al. Grounding DINO: Marrying DINO with grounded pre-training for open-set object detection. arXiv 2023, arXiv:2303.05499.

- Zhang, Y.; Huang, X.; Ma, J.; Li, Z.; Luo, Z.; Xie, Y.; Qin, Y.; Luo, T.; Li, Y.; Liu, S.; et al. Recognize anything: A strong image tagging model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 1724–1732.

- Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A survey on multimodal large language models. arXiv 2023, arXiv:2306.13549.

- Ding, W.; Wang, X.; Zhao, Z. CO-STAR: A collaborative prediction service for short-term trends on continuous spatio-temporal data. Future Gener. Comput. Syst. 2020, 102, 481–493.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

- Pfeiffer, J.; Rücklé, A.; Poth, C.; Kamath, A.; Vulić, I.; Ruder, S.; Cho, K.; Gurevych, I. AdapterHub: A framework for adapting transformers. arXiv 2020, arXiv:2007.07779.

- Zhang, Q.; Chen, M.; Bukharin, A.; Karampatziakis, N.; He, P.; Cheng, Y.; Chen, W.; Zhao, T. AdaLoRA: Adaptive budget allocation for parameter-efficient fine-tuning. arXiv 2023, arXiv:2303.10512.

- Sohan, M.; Sai Ram, T.; Reddy, R.; Venkata, C. A review on YOLOv8 and its advancements. In Proceedings of the International Conference on Data Intelligence and Cognitive Informatics; Springer: Berlin/Heidelberg, Germany, 2024; pp. 529–545.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).