Semi-Supervised Classification with A*: A Case Study on Electronic Invoicing

Abstract

1. Introduction

- Categorization Complexity: At the heart of electronic invoicing is the categorization of invoice entries within the general book. Each entry must adhere to a specific system of categories, offering a high degree of flexibility. Individual firms often customize these categories to meet their unique needs. Consequently, the same invoice entry may yield different categorizations across different firms. Additionally, one issuer may provide multiple services or products with distinct invoice reasons, making automatic categorization a non-trivial task [6].

- Evolving Accounting Legislation: Another layer of complexity arises from the continuously evolving accounting regulations designed to adapt to the dynamic economy. These changes can significantly impact the logic and procedures involved in the invoicing process, necessitating adaptive solutions [4].

- Rule-Based Systems: To address these complexities, many software programs have introduced rule-based systems. While effective in simple scenarios, these systems struggle to maintain the vast array of rules required to handle complex and varied situations [7].

- Automate Electronic Invoicing: the primary goal is to reduce the need for extensive manual intervention in the accounting process.

- Enhance Classification Accuracy: to achieve the primary goal, we need to improve the accuracy of the machine learning algorithm and reduce its misclassifications and errors.

- Develop a Semi-Supervised Framework: design and implement a semi-supervised learning algorithm capable of integrating supervised and unsupervised data.

- Overcome pseudo-label generation challenges and leverage the group-instance structure: investigate, propose, and implement methodologies that generate high-quality pseudo-labels for unsupervised data using a graph search algorithm (A*).

2. Literature Review

2.1. Automatic Invoice Labeling

2.2. Semi-Supervised Learning

2.3. Graph Search

2.4. Literature Summary

3. Problem Description

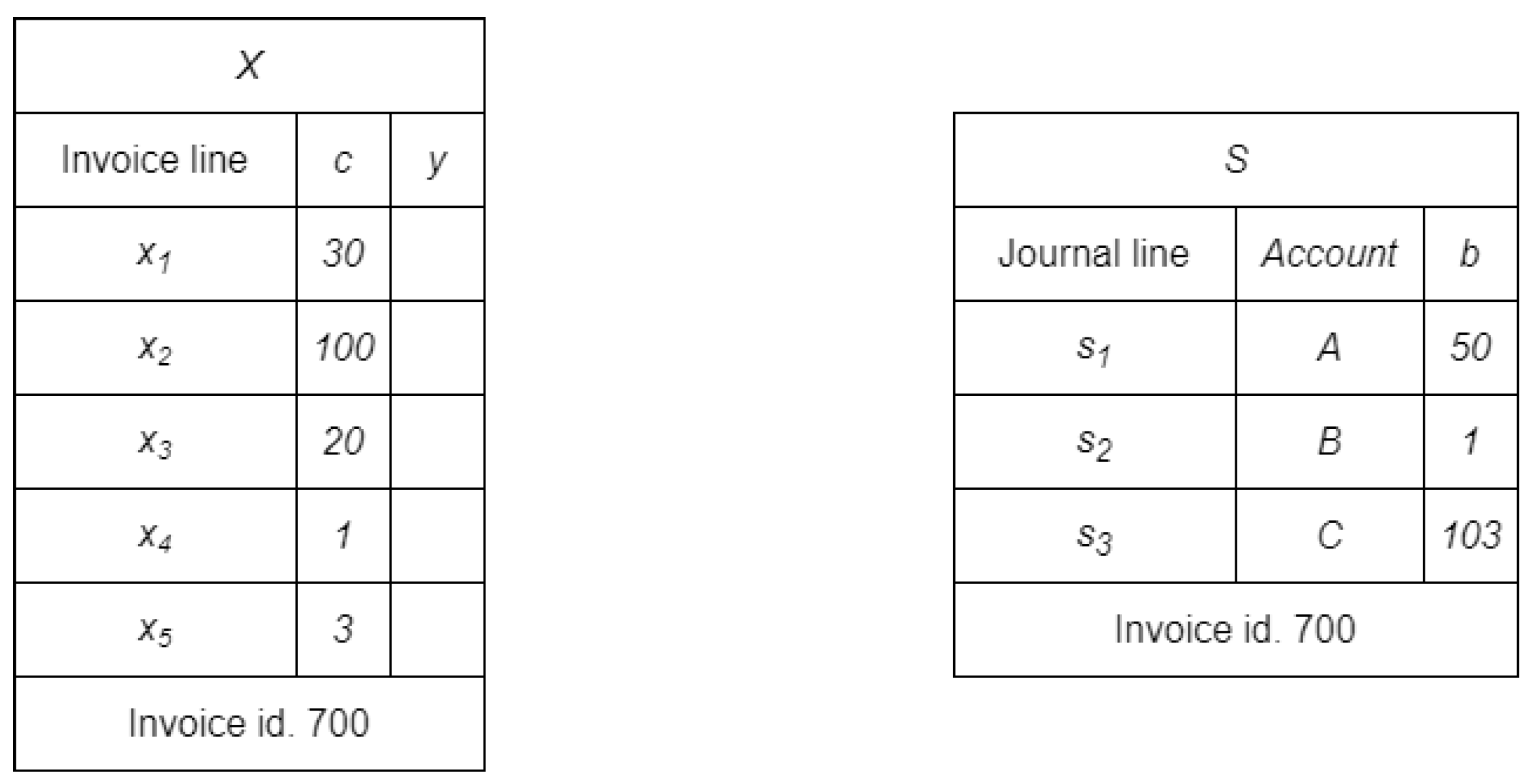

- On the one hand, we have a collection of invoices $X_i$, each one with multiple lines $x_{ij}$, $j = 1, \dots, N_i$, where $N_i$ is the number of lines in $X_i$. The lines are the inputs of the problem, and each must be associated with a target label $y_{ij}$;

- On the other hand, we have the account journal $S$, where the financial transactions are recorded, divided into corresponding sections $S_i$, with registrations in various accounts $s_{ik}$, $k = 1, \dots, M_i$, where $M_i$ is the number of account codes in $S_i$. In general $M_i \le N_i$, because during the transaction registration an unknown number of invoice lines of the same nature are aggregated into a single record. $S$ is the document from which the target labels are extracted, as described below.
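Using the glossary's symbols (line prices c, journal amounts b, binary variables z collected in the matrix Z), the link between the N lines of one invoice and the M records of its journal section can be written as a constrained assignment. The block below is a sketch assembled from those definitions, with the invoice index i dropped for readability. It is an assumption about the shape of the constraints, not a reproduction of the paper's Equation (1).

```latex
\begin{aligned}
& \sum_{j=1}^{N} z_{jk}\, c_j = b_k, && k = 1, \dots, M, \\
& \sum_{k=1}^{M} z_{jk} = 1,         && j = 1, \dots, N, \\
& z_{jk} \in \{0, 1\},               && Z = [z_{jk}] \in \{0, 1\}^{N \times M}.
\end{aligned}
```

Under this reading, $z_{jk} = 1$ means that line $j$ was registered in account $k$, so the target label of line $j$ is the account code of the unique $k$ with $z_{jk} = 1$.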

4. Methodological Proposal

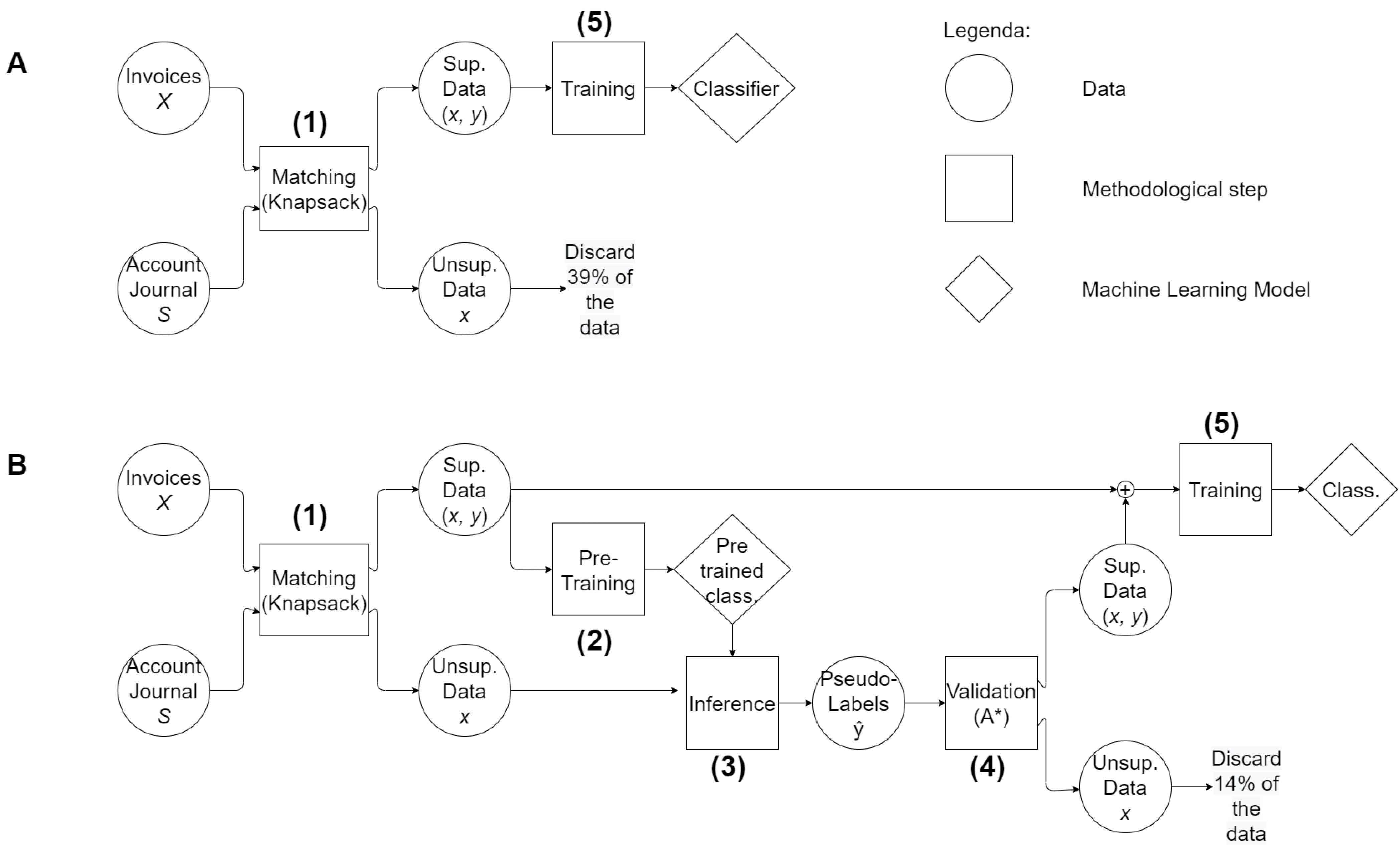

- Matching—Knapsack Problem: we build the dataset from invoices and journal entries. For every invoice, we set up a combinatorial knapsack problem to assign lines to accounting accounts. This yields two datasets, a supervised one and an unsupervised one, depending on whether the knapsack problem is solvable.

- Pre-Training: we train a first version of the Machine Learning classifier on the supervised dataset.

- Inference: we use the previously trained classifier on the unsupervised dataset to assign a pseudo-label to each line.

- Pseudo-Labels Validation: we exploit the journal entries to validate the pseudo-labels assigned by the initial classifier. More precisely, the initial classifier prediction is a candidate solution of the knapsack problem at step 1. If the solution solves the knapsack problem we validate the pseudo-labels, otherwise we use a search algorithm to find a valid solution to the knapsack problem.

- Training: in the final phase, the augmented dataset, now enriched with unsupervised data complemented by pseudo-labels, serves as the foundation for the retraining of the classifier.
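The matching step that opens the pipeline can be sketched with a brute-force enumeration. This is a minimal illustration, not the authors' solver: the function names `match_invoice` and `split_datasets` are hypothetical, amounts are integers in cents to avoid floating-point comparisons, and "solvable" is assumed to mean that exactly one assignment reproduces the journal amounts.

```python
from itertools import product


def match_invoice(prices_cents, journal_cents):
    """Return every assignment of invoice lines to accounts that reproduces the journal.

    prices_cents: list of line prices, as integers in cents.
    journal_cents: dict mapping account code -> aggregated amount in cents.
    """
    codes = list(journal_cents)
    solutions = []
    # Exhaustive enumeration of M**N candidates: only viable for small invoices.
    for labels in product(codes, repeat=len(prices_cents)):
        totals = dict.fromkeys(codes, 0)
        for price, code in zip(prices_cents, labels):
            totals[code] += price
        if totals == journal_cents:
            solutions.append(labels)
    return solutions


def split_datasets(invoices):
    """Uniquely matched invoices go to the supervised set, the rest to the unsupervised set."""
    supervised, unsupervised = [], []
    for prices, journal in invoices:
        solutions = match_invoice(prices, journal)
        if len(solutions) == 1:
            supervised.append((prices, solutions[0]))
        else:
            unsupervised.append((prices, journal))
    return supervised, unsupervised


# Toy data (not from the paper): the first invoice has a single valid matching,
# the second has two because its first two lines have the same price.
invoices = [
    ([1000, 2500, 300], {"A": 1300, "B": 2500}),
    ([1000, 1000, 500], {"A": 1500, "B": 1000}),
]
supervised, unsupervised = split_datasets(invoices)
```

The second toy invoice shows why some invoices end up unlabeled: two lines with the same price can be swapped between accounts without changing any aggregate, so the journal alone cannot tell which line belongs where.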

4.1. Matching—Knapsack Problem

4.2. Pre-Training

4.3. Inference

4.4. Pseudo-Labels Validation—A*

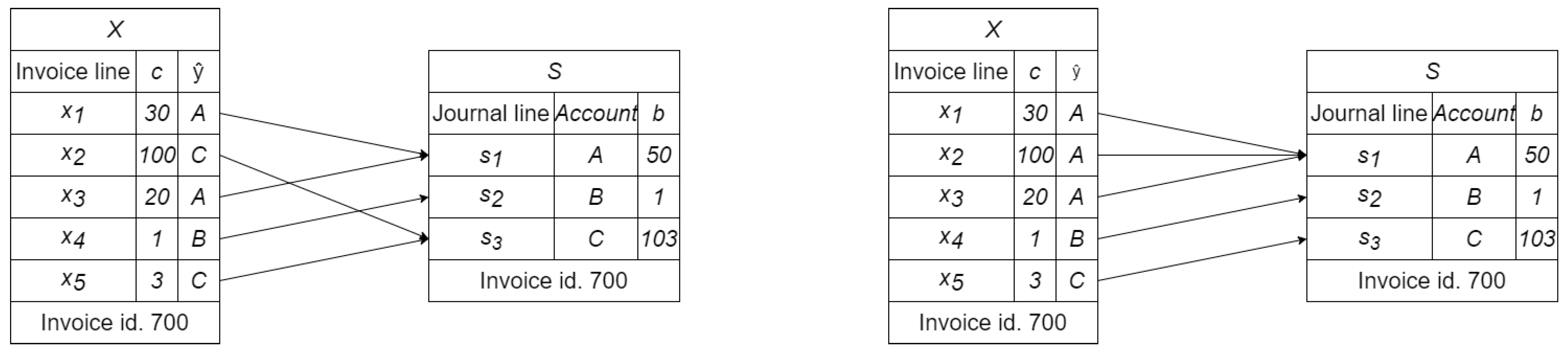

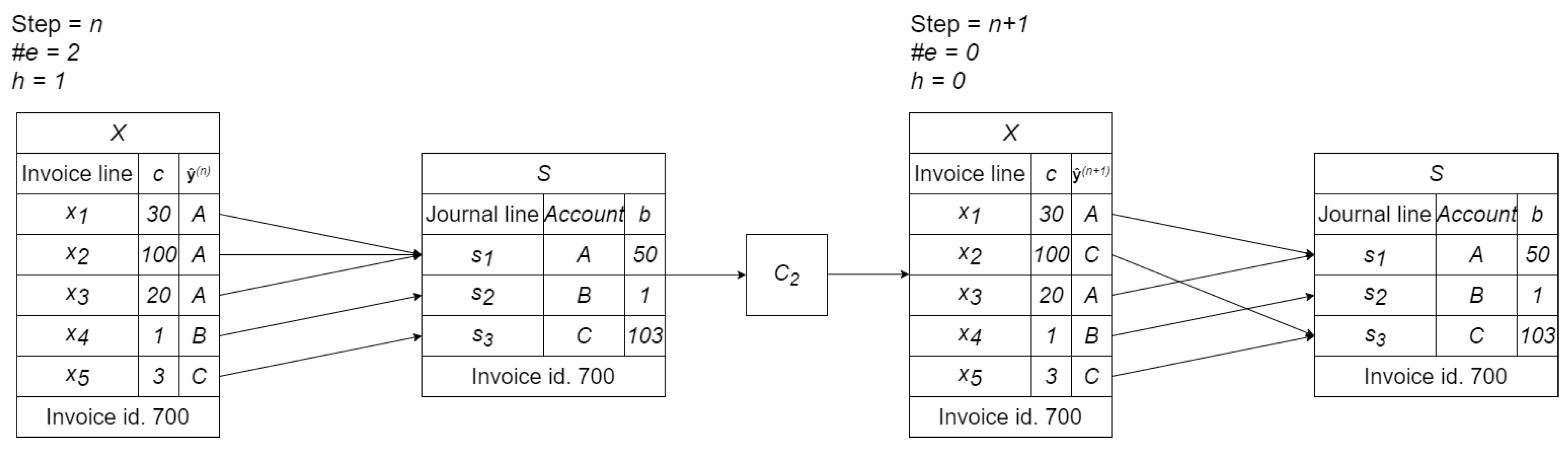

- State Space Definition: the state vector of this problem is the vector of pseudo-labels assigned to the N lines of the invoice, so each node of the graph explored by the search algorithm at its n-th step carries its own state vector. Each element of this state vector can be assigned to one of the M account codes present in S;

- Goal Definition: the goal is achieved when the search leads to a node where, by grouping the invoice rows according to the pseudo-labels in the state vector and summing the prices, the same aggregate amounts are found in S, just as in the left-hand panel of Figure 5;

- Initial State: the search starts from the state vector proposed by the pre-trained classifier;

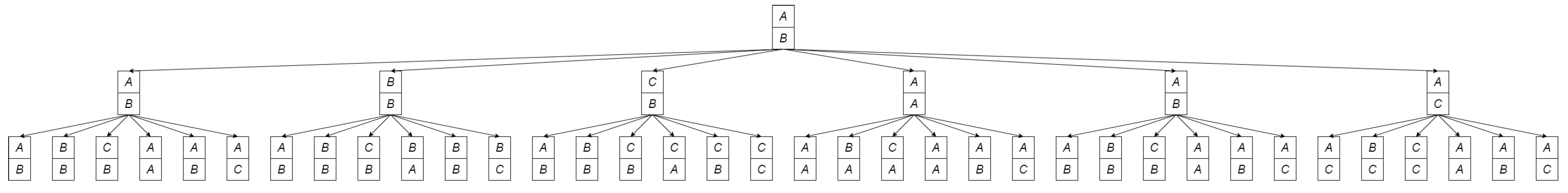

- Actions: in each state, the available actions are the substitutions of one pseudo-label in the state vector with a different account code found in S. Let M be the number of different accounts present in S; then the branching factor for the invoice X, i.e., the number of possible actions in each state, is at most NM (exactly N(M − 1) when every current pseudo-label is itself one of the M codes, since a substitution must change the label). For example, if one of the accounts in S has code C, one such action sets the j-th pseudo-label to C;

- Transition Model: Figure 5 depicts how the transition from one node to another occurs when an action is applied. The action shown is the one just presented with j = 2: it changes the second pseudo-label to C and leaves the others unchanged in the state vector of the following step;

- Path Cost: each step on the graph involves the modification of only one element and therefore has a unit cost.

- stands for the random variable describing the number of rows in X correctly assigned (as a first approximation, we consider the rows of the same invoice X to be independent, which allows us to express the sum of Bernoulli variables as a Binomial variable);

- d stands for the depth of the goal node, i.e., the distance in steps between the initial node and the goal node along the optimal path;
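The search components listed above (state, goal, initial state, actions, unit path cost) can be put together in a compact A* sketch. The heuristic used here is an assumption introduced for illustration and is not the one defined in the paper: since one substitution changes the totals of exactly two accounts, at least half of the currently mismatched accounts (rounded up) still need a step. The names `a_star_validate`, `account_totals` and `max_expansions` are hypothetical.

```python
import heapq
from math import ceil


def account_totals(labels, prices, journal):
    """Sum the line prices per account code for the given pseudo-labels."""
    totals = dict.fromkeys(journal, 0)
    for label, price in zip(labels, prices):
        totals[label] = totals.get(label, 0) + price
    return totals


def heuristic(labels, prices, journal):
    # Assumed heuristic (not from the paper): one substitution changes the
    # totals of two accounts, so ceil(mismatched / 2) is a lower bound on the
    # number of steps still needed.
    totals = account_totals(labels, prices, journal)
    mismatched = sum(totals[code] != journal[code] for code in journal)
    return ceil(mismatched / 2)


def a_star_validate(initial, prices, journal, max_expansions=10_000):
    """Search from the classifier's labels to labels that reproduce the journal amounts.

    Returns (labels, number_of_substitutions), or (None, None) if no valid
    labeling is found within the expansion budget.
    """
    codes = list(journal)
    start = tuple(initial)
    frontier = [(heuristic(start, prices, journal), 0, start)]  # (f, g, state)
    best_g = {start: 0}
    expansions = 0
    while frontier and expansions < max_expansions:
        _, g, state = heapq.heappop(frontier)
        if g > best_g[state]:
            continue  # stale queue entry, a cheaper path was already found
        if account_totals(state, prices, journal) == journal:
            return state, g  # goal: aggregates match the journal section
        expansions += 1
        for j in range(len(state)):
            for code in codes:
                if code == state[j]:
                    continue  # an action must change the pseudo-label
                child = state[:j] + (code,) + state[j + 1:]
                child_g = g + 1  # unit cost per substitution
                if child_g < best_g.get(child, float("inf")):
                    best_g[child] = child_g
                    f = child_g + heuristic(child, prices, journal)
                    heapq.heappush(frontier, (f, child_g, child))
    return None, None


# Toy invoice (amounts in cents): the classifier mislabels the third line.
prices = [1000, 2500, 300]
journal = {"A": 1300, "B": 2500}
labels, steps = a_star_validate(("A", "B", "B"), prices, journal)
```

Starting the search from the classifier's prediction is what keeps the tree shallow: if only a few pseudo-labels are wrong, the goal sits only a few substitutions away from the initial node.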

4.5. Training

5. Experiments

5.1. Data Pre-Processing

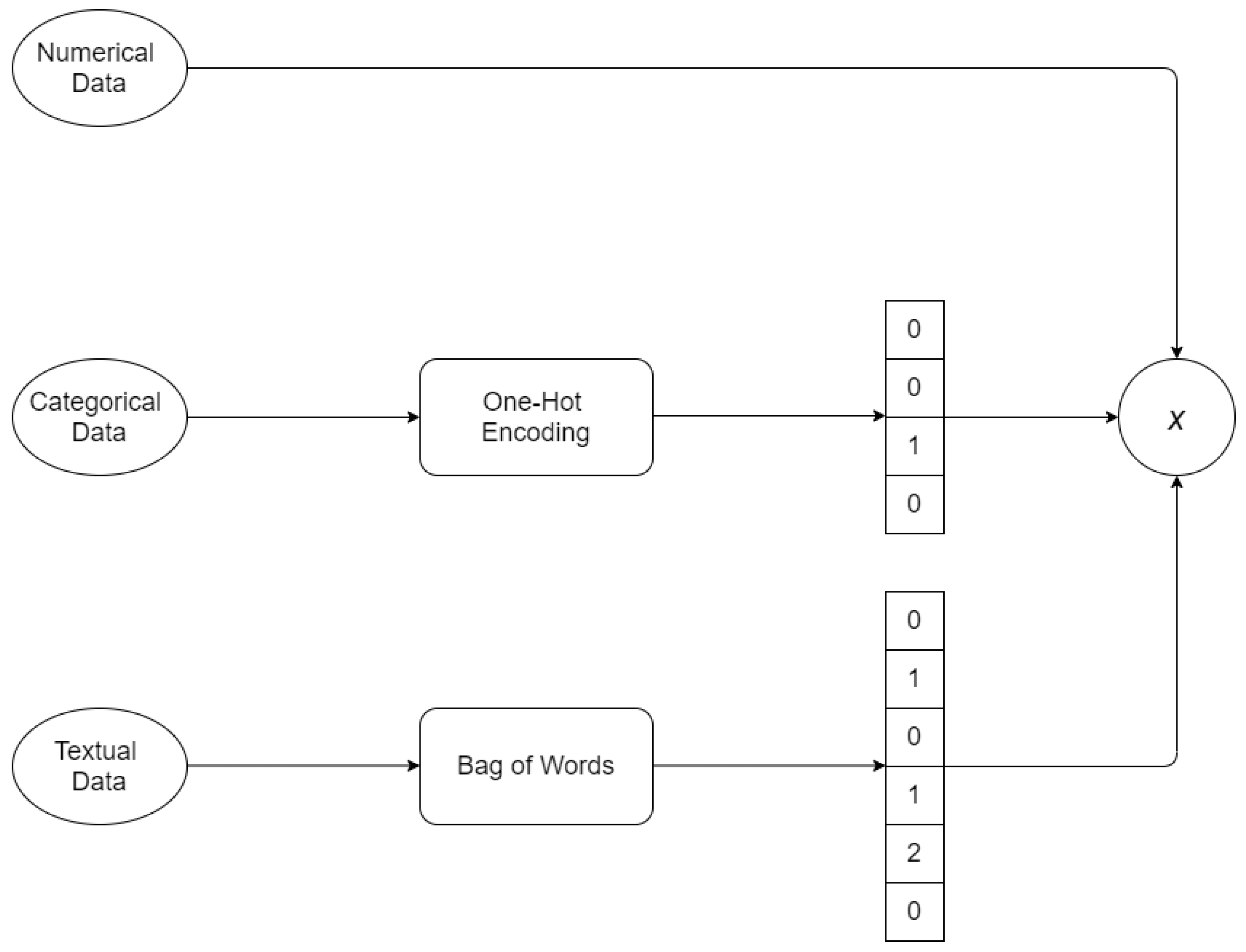

- Numerical data: used as they are (e.g., total price, tax rate);

- Categorical data: converted into a numerical vector with a One-Hot Encoding procedure [35] (e.g., code of the business partner, method of payment);

- Textual data: transformed into a numerical vector with the Bag of Words approach [36] (e.g., the description of the invoice row, which carries essential information for deducing the nature of the product or service exchanged).
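The three treatments above map naturally onto a scikit-learn `ColumnTransformer`. The sketch below is only an illustration of that mapping: the column names, tax rates, partner codes and payment methods are hypothetical placeholders, and the descriptions are abridged from the sample invoice in Appendix B.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.preprocessing import OneHotEncoder

# Hypothetical column names and values; the source does not list the actual fields.
rows = pd.DataFrame({
    "total_price": [19.80, 36.40, 0.30],
    "tax_rate": [10.0, 4.0, 22.0],
    "partner_code": ["P001", "P001", "P002"],
    "payment_method": ["bank_transfer", "bank_transfer", "cash"],
    "description": [
        "LIEVITO FRESCO PZ 500 GR",
        "FARINA TIPO AMERICANA CF 25 KG",
        "Spese Varie",
    ],
})

preprocessor = ColumnTransformer([
    # Numerical data: passed through unchanged.
    ("numerical", "passthrough", ["total_price", "tax_rate"]),
    # Categorical data: one-hot encoded; unseen categories become all-zero rows.
    ("categorical", OneHotEncoder(handle_unknown="ignore"),
     ["partner_code", "payment_method"]),
    # Textual data: bag of words (a single column name yields the 1-D input
    # that CountVectorizer expects).
    ("textual", CountVectorizer(), "description"),
])

features = preprocessor.fit_transform(rows)
print(features.shape)
```

Each invoice row becomes one feature vector: two numerical columns, one column per category value, and one column per distinct word in the descriptions.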

5.2. Pipeline Application

- Matching—Knapsack Problem: for many invoices the knapsack problem described in Equation (1) is not solvable. To be precise, the input-output relationship was reconstructed without ambiguity for only 61% of the invoice rows available in the raw dataset, giving the labeled pairs (x, y); the discard rate is therefore 39%;

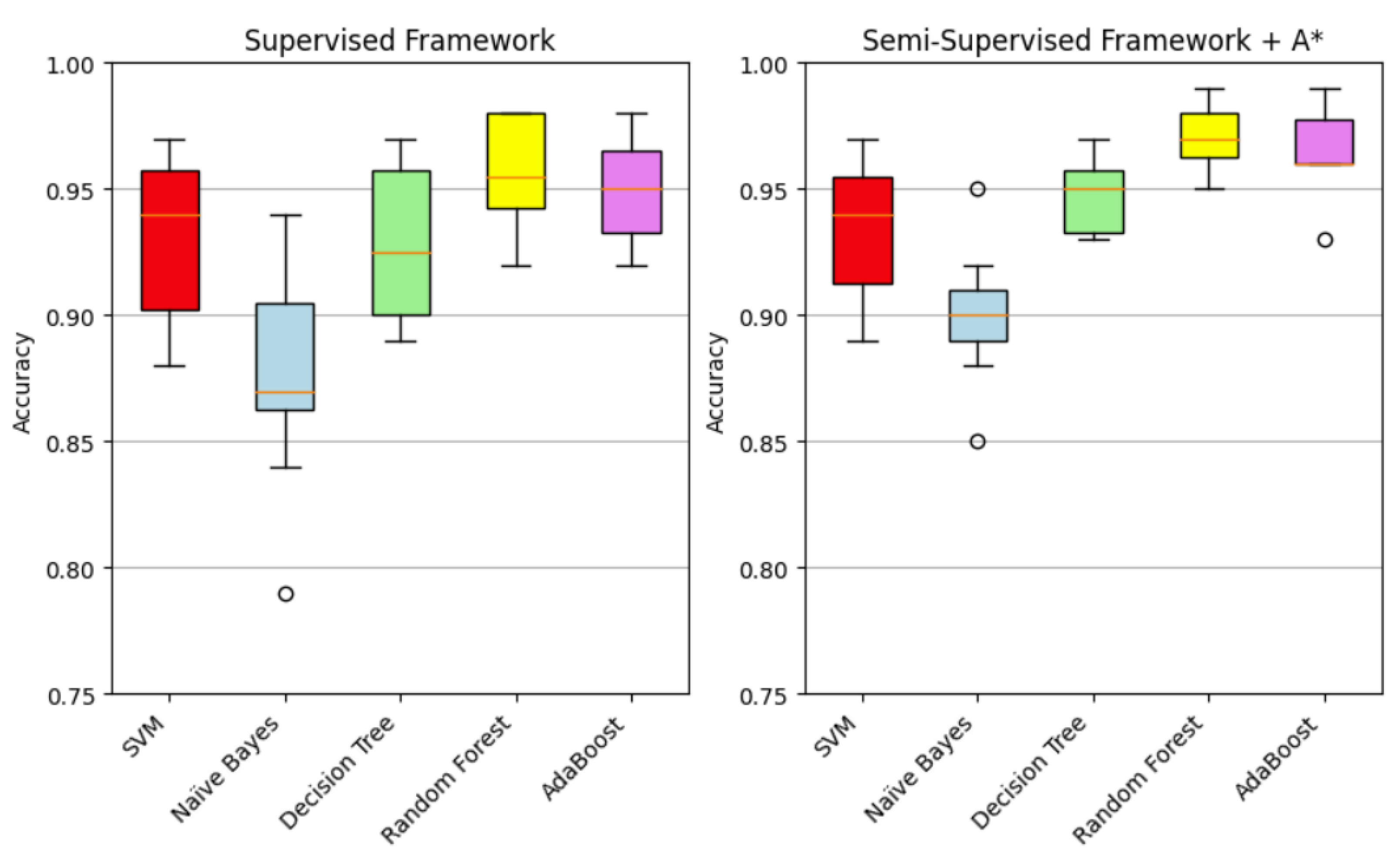

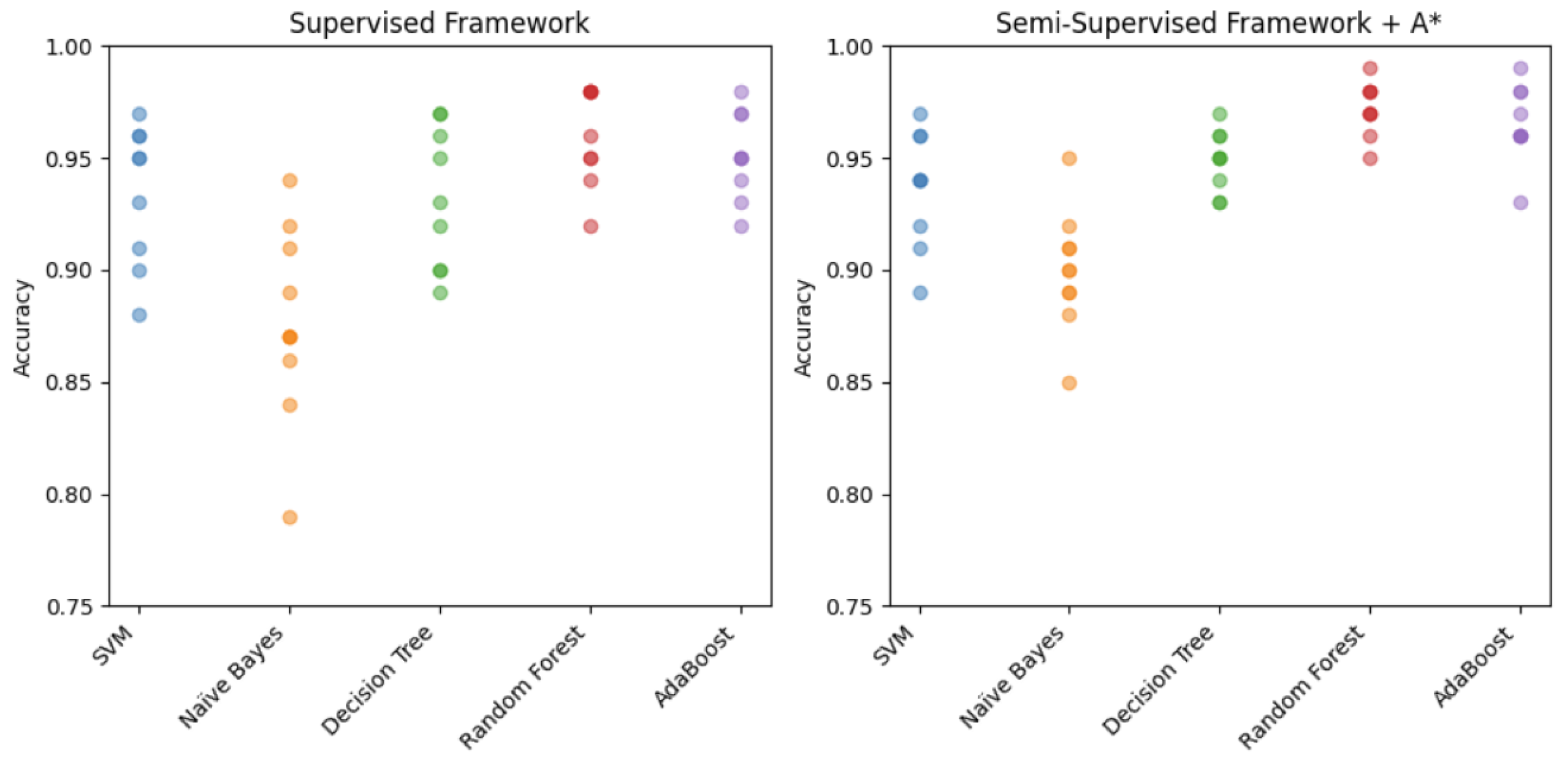

- Pre-Training: the multi-class classification problem that the model must learn to solve is the association of an account code with the feature vector of each invoice line. Since a large amount of data was not available, Deep Learning models, which require a lot of data to learn [37], were excluded. Several well-established multi-class classifiers available in the literature and in the Python scikit-learn library were tried out: Support Vector Machine (SVM) [38], Naïve Bayes [39], Decision Tree [40], Random Forest [41] and AdaBoost [42]. Their performance was assessed by means of 10-fold cross-validation, and the models based on an ensemble of trees proved to be the best for this task: already in this initial training on a limited supervised dataset they achieved an accuracy of 91%. The quantitative results recorded during the experiments are discussed in more detail in the next section, with the support of tables and images;

- Inference: the pre-trained classifier was then used to infer the pseudo-label for the lines of the invoices in W;

- Pseudo-Labels Validation—A*: thanks to the high accuracy of the pre-trained classifier, most of the pseudo-labels were correct. As a consequence, referring to Equation (3), the search runs on a shallow tree, and A* converged in most cases: out of 1156 unlabeled samples, 747 received a label through this procedure;


- Training: the same model was then trained and evaluated a second time, improving its accuracy by 1 to 4 percentage points, depending on its data efficiency.
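The model comparison described in the Pre-Training step can be sketched with scikit-learn's `cross_val_score`. The data below are synthetic (generated with `make_classification`) and only stand in for the real feature vectors and account codes. The Naïve Bayes variant is an assumption, since the text does not name one, and all hyperparameters are library defaults or arbitrary choices.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the (feature vector, account code) pairs; not the paper's data.
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=8, n_classes=4, random_state=0
)

models = {
    "SVM": SVC(),
    "Naive Bayes": GaussianNB(),  # assumed variant
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=50, random_state=0),
    "AdaBoost": AdaBoostClassifier(random_state=0),
}

# 10-fold cross-validation, averaged over the folds for each model.
mean_accuracy = {
    name: cross_val_score(model, X, y, cv=10, scoring="accuracy").mean()
    for name, model in models.items()
}

for name, score in mean_accuracy.items():
    print(f"{name}: {score:.2f}")
```

The same loop can be rerun after the training set has been enlarged with validated pseudo-labels, which gives a like-for-like comparison between the pre-training and the final training step.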

5.3. Quantitative Results

- Supervised Framework: only the data for which the knapsack problem has reconstructed the input-output relation are used. This is the baseline method with which this problem has been approached so far in the literature [8]. It consists in applying only steps 1 and 5 of the pipeline; refer to diagram A in Figure 2.

- Standard Semi-Supervised Framework: in a classic semi-supervised approach, pseudo-labels are inferred by the pre-trained classifier and may not be correct, introducing noise into the dataset D. This method corresponds to steps 1, 2, 3 and 5.

- Semi-Supervised Framework + A*: this is the innovative framework we propose in which only data with certified labels are used, visually described by diagram B in Figure 2.

6. Conclusions and Discussion

6.1. Result Discussion

6.2. Work Summary

6.3. Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Glossary

| Variable Name | Variable Description |
|---|---|
| D | Dataset |
| X | Invoices |
| S | Account journal dedicated to invoices registration |
| x | Invoice line |
| s | Journal line that represents the registration in a specific account |
| i | Index to identify a specific invoice and the corresponding journal section |
| j | Row index inside an invoice |
| k | Row index inside a journal section |
| N | Number of lines in an invoice |
| M | Number of lines in a journal section |
| y | True target label for an invoice line |
| | Target label inferred by the classifier for a single invoice line |
| | Vector with all the target labels inferred for an invoice |
| c | Numerical value associated to an invoice line (i.e., price) |
| b | Numerical value associated to a journal line |
| z | Binary variable of the knapsack problem |
| Z | Matrix collecting all the binary variables for an invoice X |
| U | Set of all the invoice indexes |
| V | Subset of all the invoice indexes for which the knapsack problem has been solved |
| W | Subset of all the invoice indexes for which the knapsack problem has not been solved |
| n | Step of the searching algorithm |
| h | Heuristic function |
| d | Distance of the solution node on the graph from the initial state |
| | Number of wrong predictions in the vector of inferred labels |
| | Number of steps required to conclude the search |

Appendix B. Data Description

- Electronic invoices: in XML format, as specified by Italian legislation. The structure of these documents is standardized at national level, and a description of all their fields can be found at https://www.fatturapa.gov.it/it/norme-e-regole/documentazione-fattura-elettronica/formato-fatturapa/ (accessed on 14 September 2023);

- Account journal: a document that each accountant draws up in their own format; in our case it was provided as an Excel v2309 file.

| No. | Description | Price |

|---|---|---|

| 0 | **** LIEVITO FRESCO PAKMAYA PZ 500 GR | 19.80 |

| 1 | **** CODA ARAGOSTA–MICRO CT 7 KG COD.AK... | 40.13 |

| 2 | PAC GEL SFOGLIATA RICCIA MIGNON GR 30 CT 5 KG | 24.47 |

| 3 | PAC GEL SFOGLIATA SEMIDOLCE RUSTICA CT 4 KG | 17.96 |

| 4 | RISPO RUSTICI MIGNON CF 2.5 KG | 25.00 |

| 5 | **** TAPPI GRANDI CT 150 PZ | 19.61 |

| 6 | ***** FARINA–TIPO AMERICANA CARTA CF 25 KG | 36.40 |

| 7 | ****** FARINA–TIPO 00 EXTRA CF 25 KG | 25.95 |

| 8 | VANDEMOORTELE MIX GOLD CUP CROISSANT CF … | 61.91 |

| 9 | CELLOPHANE & PAPER CARTA DA FORNO 40 × 60 CF 500PZ | 17.84 |

| 10 | GOURMET LINE MIX CREAM AMIDO CF 10 KG | 28.48 |

| 11 | **** NOCCIOLATA ***** CF 13 KG COD. 1010151 | 48.35 |

| 12 | TORRENTE ****** CT 3X4.1 KG | 11.04 |

| 13 | ******* CIGARETTES SURPRISE CT 1,5 KG | 21.24 |

| 14 | ******* CANNOLI CIOCCOLATO MIGNON CT 3.5 KG | 31.23 |

| 15 | ****** ANANAS MIGNON 33 FETTE CF 850 GR | 9.55 |

| 16 | IRCA **** NEUTRO CF 1KG COD.70508 | 5.85 |

| 17 | ****** PIROTTINI TONDI COLORATI MIS. 3 H 18.5... | 7.10 |

| 18 | IRCA CHOCOCREAM ***** PISTACCHIO ***** CF KG 5... | 44.36 |

| 19 | SALVI PIATTI ALA ORO CM 26 CF 10 KG | 15.40 |

| 20 | PININ PERO ZUCCHERO VELO CF 5 KG | 4.79 |

| 21 | IRCA SCAGLIETTA ***** PURO FONDENTE ***** CF 1... | 5.12 |

| 22 | ***** SEMOLA DI GRANO DURO **RIPIENO SFOGLIA... | 4.34 |

| 23 | ******* PREP. VEG. HOPLA’ EASY TOP 1LT | 61.94 |

| 24 | SWEET D.e D. ZUCCHERO CF 25 KG | 15.41 |

| 25 | REVIVA PIROTTINI OVALI COLORATI MIS 3 CF 1000 PZ | 5.52 |

| 26 | Spese Varie | 0.30 |

| Code | Account Name | Amount |

|---|---|---|

| 66/05/006 | MATERIE PRIME C/ACQ. P/PROD.SERV. | 445.4 |

| 66/20/005 | MATERIE DI CONSUMO C/ACQUISTI | 65.47 |

| 66/25/509 | FARINA | 66.69 |

| 66/25/006 | MERCI C/ACQUISTI P/PROD.SERV. | 31.23 |

| 66/30/055 | SPESE ACCESSORIE SU ACQUISTI | 0.3 |
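The two tables above can be checked against each other with exact decimal arithmetic. The sketch below verifies that the invoice total equals the journal total and that one particular grouping of rows reproduces every journal amount. That grouping was inferred here from the amounts and the item descriptions; the source does not state it, and it may not be the only one consistent with the amounts.

```python
from decimal import Decimal

# Prices of invoice rows 0..26, copied from the invoice table.
prices = [Decimal(p) for p in (
    "19.80", "40.13", "24.47", "17.96", "25.00", "19.61", "36.40", "25.95", "61.91",
    "17.84", "28.48", "48.35", "11.04", "21.24", "31.23", "9.55", "5.85", "7.10",
    "44.36", "15.40", "4.79", "5.12", "4.34", "61.94", "15.41", "5.52", "0.30",
)]

# Aggregated amounts per account code, copied from the journal table.
journal = {
    "66/05/006": Decimal("445.40"),
    "66/20/005": Decimal("65.47"),
    "66/25/509": Decimal("66.69"),
    "66/25/006": Decimal("31.23"),
    "66/30/055": Decimal("0.30"),
}

# Necessary condition for any matching: the two totals coincide.
totals_agree = sum(prices) == sum(journal.values())

# Inferred grouping (an assumption, not given in the source).
grouping = {
    "66/25/509": [6, 7, 22],           # flour and semolina rows
    "66/20/005": [5, 9, 17, 19, 25],   # consumables: caps, baking paper, cups, plates
    "66/25/006": [14],
    "66/30/055": [26],
}
assigned = {j for rows in grouping.values() for j in rows}
# Every remaining row goes to the raw-materials account.
grouping["66/05/006"] = [j for j in range(len(prices)) if j not in assigned]

totals = {code: sum(prices[j] for j in rows) for code, rows in grouping.items()}
print(totals_agree, totals == journal)
```

With 27 rows and 5 accounts there are 5^27 (about 7.5 × 10^18) candidate assignments, which is why exhaustive enumeration is impractical for an invoice of this size and a guided search becomes attractive.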

References

1. Frey, C.B.; Osborne, M.A. The future of employment: How susceptible are jobs to computerisation? Technol. Forecast. Soc. Chang. 2017, 114, 254–280.

2. Tater, T.; Gantayat, N.; Dechu, S.; Jagirdar, H.; Rawat, H.; Guptha, M.; Gupta, S.; Strak, L.; Kiran, S.; Narayanan, S. AI Driven Accounts Payable Transformation. Proc. AAAI Conf. Artif. Intell. 2022, 36, 12405–12413.

3. Koch, B. E-Invoicing/E-Billing. Significant Market Transition Lies Ahead. Billentis. 2017. Available online: https://www.billentis.com/einvoicing_ebilling_market_report_2017.pdf (accessed on 14 September 2023).

4. Cedillo, P.; García, A.; Cárdenas, J.D.; Bermeo, A. A Systematic Literature Review of Electronic Invoicing, Platforms and Notification Systems. In Proceedings of the 2018 International Conference on eDemocracy & eGovernment (ICEDEG), Ambato, Ecuador, 4–6 April 2018; pp. 150–157, ISSN: 2573-1998.

5. Poel, K.; Marneffe, W.; Vanlaer, W. Assessing the electronic invoicing potential for private sector firms in Belgium. Int. J. Digit. Account. Res. 2016, 16, 8517.

6. Bergdorf, J. Machine Learning and Rule Induction in Invoice Processing: Comparing Machine Learning Methods in Their Ability to Assign Account Codes in the Bookkeeping Process. Available online: http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-235931 (accessed on 14 September 2023).

7. Azman, N.A.; Mohamed, A.; Jamil, A.M. Artificial Intelligence in Automated Bookkeeping: A Value-added Function for Small and Medium Enterprises. JOIV Int. J. Inform. Vis. 2021, 5, 224–230.

8. Bardelli, C.; Rondinelli, A.; Vecchio, R.; Figini, S. Automatic Electronic Invoice Classification Using Machine Learning Models. Mach. Learn. Knowl. Extr. 2020, 2, 617–629.

9. van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440.

10. Cho, S.; Vasarhelyi, M.A.; Sun, T.S.; Zhang, C.A. Learning from Machine Learning in Accounting and Assurance. J. Emerg. Technol. Account. 2020, 17, 10718.

11. Bertomeu, J. Machine learning improves accounting: Discussion, implementation and research opportunities. Rev. Account. Stud. 2020, 25, 1135–1155.

12. Tarawneh, A.S.; Hassanat, A.B.; Chetverikov, D.; Lendak, I.; Verma, C. Invoice Classification Using Deep Features and Machine Learning Techniques. In Proceedings of the 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 9–11 April 2019; pp. 855–859.

13. Ha, H.; Horák, A. Information extraction from scanned invoice images using text analysis and layout features. Signal Process. Image Commun. 2021, 102, 116601.

14. Li, M. Smart Accounting Platform Based on Visual Invoice Recognition Algorithm. In Proceedings of the 2022 6th International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 29–31 March 2022; pp. 1436–1439.

15. Subramani, N.; Matton, A.; Greaves, M.; Lam, A. A Survey of Deep Learning Approaches for OCR and Document Understanding. arXiv 2021, arXiv:2011.13534.

16. Thorat, C.; Bhat, A.; Sawant, P.; Bartakke, I.; Shirsath, S. A Detailed Review on Text Extraction Using Optical Character Recognition. In Proceedings of the ICT Analysis and Applications; Lecture Notes in Networks and Systems. Fong, S., Dey, N., Joshi, A., Eds.; Springer: Singapore, 2022; pp. 719–728.

17. Ding, K.; Lev, B.; Peng, X.; Sun, T.; Vasarhelyi, M.A. Machine learning improves accounting estimates: Evidence from insurance payments. Rev. Account. Stud. 2020, 25, 1098–1134.

18. Li, Z.; Zheng, L. The Impact of Artificial Intelligence on Accounting; Atlantis Press: Sanya, China, 2018; ISSN 2352-5398.

19. Johansson, S. Classification of Purchase Invoices to Analytic Accounts with Machine Learning. Master Thesis, Aalto University, Espoo, Finland, 2022. Available online: https://aaltodoc.aalto.fi/bitstream/handle/123456789/119486/master_Johansson_Samuel_2023.pdf?sequence=1&isAllowed=y (accessed on 14 September 2023).

20. Bengtsson, H.; Jansson, J. Using Classification Algorithms for Smart Suggestions in Accounting Systems. Available online: https://hdl.handle.net/20.500.12380/219162 (accessed on 14 September 2023).

21. Kieckbusch, D.S.; Filho, G.P.R.; Di Oliveira, V.; Weigang, L. Towards Intelligent Processing of Electronic Invoices: The General Framework and Case Study of Short Text Deep Learning in Brazil. In Proceedings of the Web Information Systems and Technologies, Porto, Portugal, 25–27 April 2023; Lecture Notes in Business Information Processing. Marchiori, M., Domínguez Mayo, F.J., Filipe, J., Eds.; Springer: Cham, Switzerland, 2023; pp. 74–92.

22. Munoz, J.; Jalili, M.; Tafakori, L. Hierarchical classification for account code suggestion. Knowl.-Based Syst. 2022, 251, 109302.

23. Severin, K.; Gokhale, S.; Dagnino, A. Keyword-Based Semi-Supervised Text Classification. In Proceedings of the 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), Milwaukee, WI, USA, 4–8 June 2019; pp. 417–422.

24. Gururangan, S.; Dang, T.; Card, D.; Smith, N.A. Variational Pretraining for Semi-supervised Text Classification. arXiv 2019, arXiv:1906.02242.

25. Johnson, R.; Zhang, T. Supervised and Semi-Supervised Text Categorization using LSTM for Region Embeddings. In Proceedings of the 33rd International Conference on Machine Learning, PMLR, New York, NY, USA, 19–24 June 2016; pp. 526–534, ISSN: 1938-7228.

26. Miyato, T.; Dai, A.M.; Goodfellow, I. Adversarial Training Methods for Semi-Supervised Text Classification. arXiv 2021, arXiv:1605.07725.

27. Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. MixMatch: A Holistic Approach to Semi-Supervised Learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2019; Curran Associates, Inc.: Long Beach, CA, USA, 2019; Volume 32.

28. Ouali, Y.; Hudelot, C.; Tami, M. An Overview of Deep Semi-Supervised Learning. arXiv 2020, arXiv:2006.05278.

29. Gong, C.; Tao, D.; Liu, W.; Liu, L.; Yang, J. Label Propagation via Teaching-to-Learn and Learning-to-Teach. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 1452–1465.

30. Iscen, A.; Tolias, G.; Avrithis, Y.; Chum, O. Label Propagation for Deep Semi-supervised Learning. arXiv 2019, arXiv:1904.04717.

31. Assi, M.; Haraty, R. A Survey of the Knapsack Problem. 2018. Available online: https://ieeexplore.ieee.org/document/8672677 (accessed on 14 September 2023).

32. Rizve, M.N.; Duarte, K.; Rawat, Y.S.; Shah, M. In Defense of Pseudo-Labeling: An Uncertainty-Aware Pseudo-label Selection Framework for Semi-Supervised Learning. arXiv 2021, arXiv:2101.06329.

33. Candra, A.; Budiman, M.A.; Hartanto, K. Dijkstra’s and A-Star in Finding the Shortest Path: A Tutorial. In Proceedings of the 2020 International Conference on Data Science, Artificial Intelligence, and Business Analytics (DATABIA), Medan, Indonesia, 16–17 July 2020; pp. 28–32.

34. Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Pearson Series in Artificial Intelligence; Pearson: Hoboken, NJ, USA, 2021.

35. Seger, C. An Investigation of Categorical Variable Encoding Techniques in Machine Learning: Binary versus One-Hot and Feature Hashing. Available online: https://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-237426 (accessed on 14 September 2023).

36. Zhang, Y.; Jin, R.; Zhou, Z.H. Understanding bag-of-words model: A statistical framework. Int. J. Mach. Learn. Cybern. 2010, 1, 43–52.

37. Pasupa, K.; Sunhem, W. A comparison between shallow and deep architecture classifiers on small dataset. In Proceedings of the 2016 8th International Conference on Information Technology and Electrical Engineering (ICITEE), Yogyakarta, Indonesia, 5–6 October 2016; pp. 1–6.

38. Suthaharan, S. Support Vector Machine. In Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning; Suthaharan, S., Ed.; Integrated Series in Information Systems; Springer: Boston, MA, USA, 2016; pp. 207–235.

39. Yang, F.J. An Implementation of Naive Bayes Classifier. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 12–14 December 2018; pp. 301–306.

40. Priyanka; Kumar, D. Decision tree classifier: A detailed survey. Int. J. Inf. Decis. Sci. 2020, 12, 246–269.

41. Rigatti, S.J. Random Forest. J. Insur. Med. 2017, 47, 31–39.

42. Schapire, R.E. Explaining AdaBoost. In Empirical Inference: Festschrift in Honor of Vladimir N. Vapnik; Schölkopf, B., Luo, Z., Vovk, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–52.

43. Schooltink, W.T. Testing the Sensitivity of Machine Learning Classifiers to Attribute Noise in Training Data; University of Twente: Twente, The Netherlands, 2020.

44. Hasan, M.A.; Zaki, M.J. A Survey of Link Prediction in Social Networks. In Social Network Data Analytics; Aggarwal, C.C., Ed.; Springer: Boston, MA, USA, 2011; pp. 243–275.

45. Lü, L.; Zhou, T. Link prediction in complex networks: A survey. Phys. A Stat. Mech. Its Appl. 2011, 390, 1150–1170.

46. Chuan, P.M.; Son, L.H.; Ali, M.; Khang, T.D.; Huong, L.T.; Dey, N. Link prediction in co-authorship networks based on hybrid content similarity metric. Appl. Intell. 2018, 48, 2470–2486.

| Work | Computer Vision | ML for Estimates | Transaction Classification | Semi-Supervised | Graph Search |

|---|---|---|---|---|---|

| [12,13,14,15,16] | ✓ | ||||

| [12,17,18,19] | ✓ | ||||

| [6,8,20,21] | ✓ | ✓ | |||

| [23,24,25,26] | ✓ | ✓ | |||

| [27,28,29,30] | ✓ | ✓ | |||

| Our Proposal | ✓ | ✓ | ✓ | ✓ |

| Model | Supervised Framework | Standard Semi-Supervised Framework | Semi-Supervised Framework + A* |

|---|---|---|---|

| SVM | 89% | 84% | 90% |

| Naïve Bayes | 88% | 86% | 90% |

| Decision Tree | 88% | 88% | 92% |

| Random Forest | 91% | 91% | 93% |

| AdaBoost | 91% | 91% | 93% |

| Metric | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Fold 6 | Fold 7 | Fold 8 | Fold 9 | Fold 10 | Mean |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Accuracy | 58% | 96% | 95% | 98% | 98% | 98% | 99% | 97% | 97% | 97% | 93% |

| F1 Weighted | 49% | 95% | 94% | 97% | 96% | 97% | 99% | 97% | 97% | 96% | 92% |

| F1 Macro | 79% | 97% | 89% | 88% | 97% | 96% | 89% | 86% | 98% | 94% | 91% |

| Metric | Supervised Framework | Semi-Supervised Framework + A* | Improvement |

|---|---|---|---|

| Accuracy | [88%, 91%] | [90%, 93%] | [+1%, +4%] |

| Discard Rate | 39% | 14% | −25% |

| Supervised Data | 1805 | 2552 | +747 |
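The discard rates can be cross-checked from the counts reported in the text: 1805 samples labeled by the knapsack matching, 1156 left unlabeled, and 747 of those recovered by the A* validation. The short calculation below assumes that all three figures count the same unit (invoice rows) and that together the first two make up the whole raw dataset.

```python
matched = 1805     # samples labeled by the knapsack matching
unmatched = 1156   # samples left without a label after the matching
recovered = 747    # unlabeled samples that received a label through A*

total = matched + unmatched
discard_before = unmatched / total
discard_after = (unmatched - recovered) / total
labeled_after = matched + recovered

print(f"total samples: {total}")                          # 2961
print(f"discard rate before A*: {discard_before:.0%}")    # 39%
print(f"discard rate after A*: {discard_after:.0%}")      # 14%
print(f"labeled samples after A*: {labeled_after}")       # 2552
```

Under these assumptions the 39% and 14% discard rates follow directly from the counts, and the labeled set grows from 1805 to 2552 samples.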

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Panichi, B.; Lazzeri, A. Semi-Supervised Classification with A*: A Case Study on Electronic Invoicing. Big Data Cogn. Comput. 2023, 7, 155. https://doi.org/10.3390/bdcc7030155
