ID2SBVR: A Method for Extracting Business Vocabulary and Rules from an Informal Document

Abstract

1. Introduction

2. Related Works

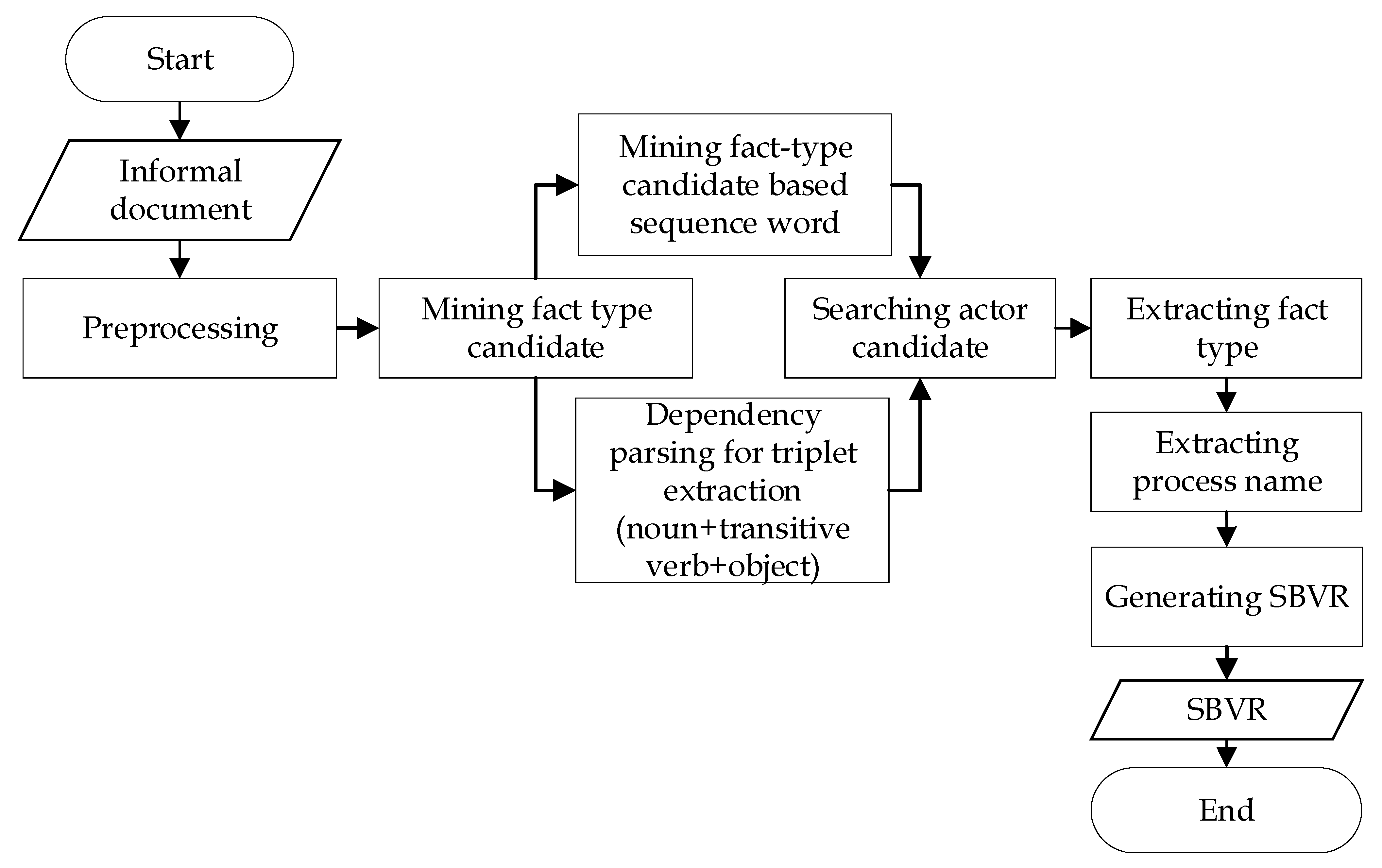

3. Materials and Methods

3.1. Research Objectives

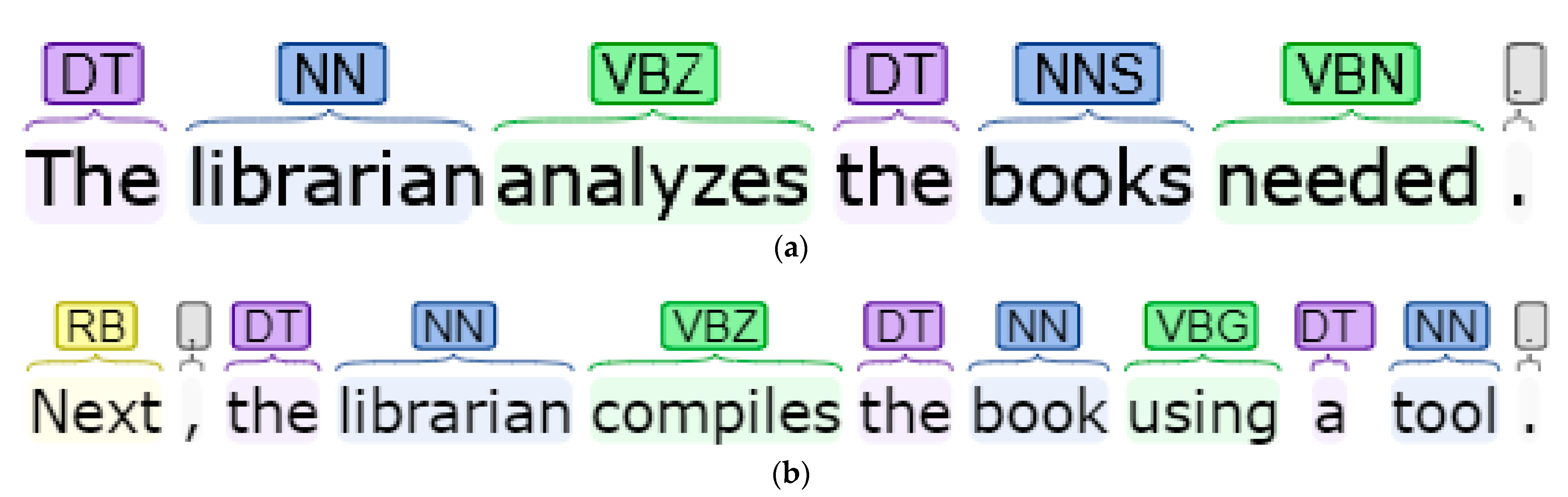

3.2. Mining Fact Type Candidate

- General concept: a noun concept, classified by its typical properties, e.g., noun person, noun place, or noun thing.

- Verb concept: can be expressed by auxiliary verbs, action verbs, or both.

- Term: designation of a noun concept that is part of a used or defined vocabulary.

- Name: designation of an individual concept or a numerical value.

- Verb: designation of a fact type, usually a verb, a preposition, or both.

- Keyword: a word that accompanies designations or expressions, for example, obligatory, each, at most, and at least.

- Sentence 1: ‘The librarian analyzes the books needed’.

- Sentence 2: ‘Next, the librarian compiles the book using a tool’.

- ‘Librarian analyzes the books needed.’

- ‘Librarian compiles the book using a tool.’

- ‘It is obligatory that (fact type sentence 2) after (fact type sentence 1)’.

- ‘It is obligatory that librarian compiles the book using a tool after librarian analyzes the books needed’.
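The worked example above can be reproduced in a few lines of Python. This is a minimal sketch under stated assumptions, not the ID2SBVR implementation: the helper names `to_fact_type` and `obligation` and the shortened sequence-word list are hypothetical, and a fact type is obtained simply by dropping a leading sequence word, a leading ‘the’, and the final full stop.

```python
import re

# Hypothetical, shortened list of sentence-opening sequence words.
SEQUENCE_WORDS = ("firstly", "first", "next", "then", "after that", "finally")


def to_fact_type(sentence: str) -> str:
    """Drop a leading sequence word, a leading 'the' and the final full stop,
    then lower-case the first letter."""
    s = sentence.strip().rstrip(".").strip()
    lowered = s.lower()
    # Try longer words first so that 'firstly' is not cut down to 'first'.
    for word in sorted(SEQUENCE_WORDS, key=len, reverse=True):
        if lowered.startswith(word + ",") or lowered.startswith(word + " "):
            s = s[len(word):].lstrip(", ")
            break
    s = re.sub(r"^the\s+", "", s, flags=re.IGNORECASE)
    return s[:1].lower() + s[1:]


def obligation(earlier: str, later: str) -> str:
    """Fill the template 'It is obligatory that <later> after <earlier>'."""
    return f"It is obligatory that {later} after {earlier}"


rule = obligation(
    to_fact_type("The librarian analyzes the books needed."),
    to_fact_type("Next, the librarian compiles the book using a tool."),
)
print(rule)
```

Lower-casing the first letter mirrors the rule text above; it would also lower-case a proper noun at the start of a sentence, which a real pipeline would need to guard against.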

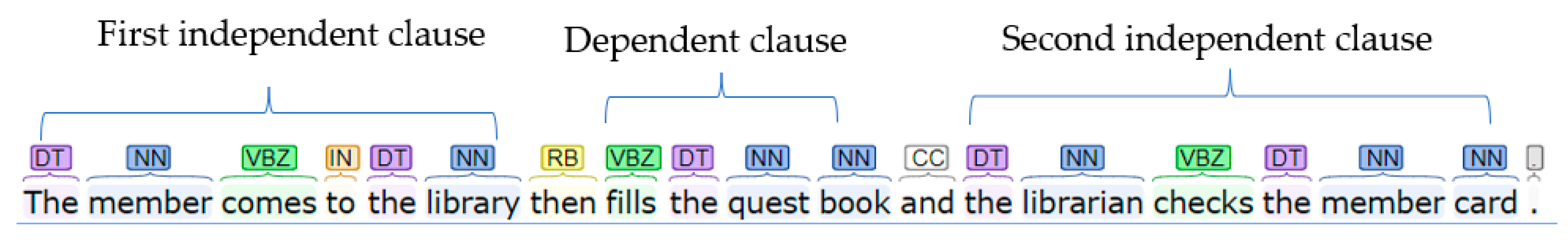

3.2.1. Mining the Fact Type Candidate Indicated by Sequence Words

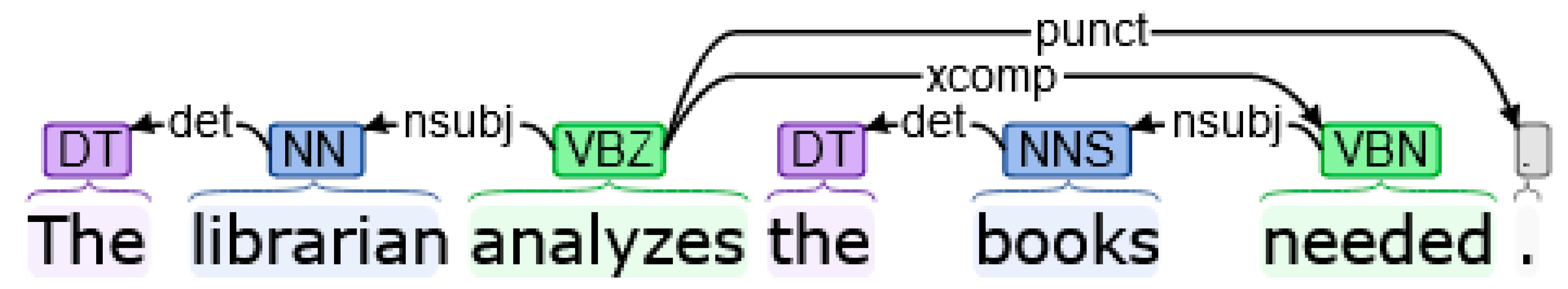

3.2.2. Mining the Fact Type Candidate Indicated by Dependency Parsing

Algorithm 1. Fact type candidate based on sequence words

    Data: input = answer
    Output: fact type candidate
    sequence_word_1 = ['begins', 'starts', 'firstly', 'secondly', 'first', 'after', 'then', 'next', 'after that', 'when', 'finally', 'furthermore', 'at the end']
    sequence_word_2 = ['after', 'then', 'next', 'after that']
    data_clean = []
    for answer (index i) in data_interview:
      change answer into lowercase
      change answer into tokens
      if answer is not in sequence_word_1:
        if index i != answer length - 1:
          insert next sentence into data temporary
          change data temporary into tokens
          if answer is not in sequence_word_2:
            insert answer into fact type candidate
      else:
        insert answer into fact type candidate
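A simplified Python reading of the sequence-word check in Algorithm 1 is sketched below. It assumes a sentence counts as a candidate only when it opens with a sequence word; the function name `sequence_candidates` is hypothetical, and merging the duplicated ‘after’ and the separate ‘that’ into ‘after that’ is an assumption about the intended list.

```python
import re
from typing import List, Tuple

# Sequence words from Algorithm 1; the duplicated 'after' and the separate
# 'that' are merged into 'after that' here (an assumption).
SEQUENCE_WORDS_1 = ["begins", "starts", "firstly", "secondly", "first", "after",
                    "then", "next", "after that", "when", "finally",
                    "furthermore", "at the end"]

# Longest alternatives first, so 'after that' beats 'after' and 'firstly' beats 'first'.
_ALTERNATIVES = "|".join(re.escape(w) for w in sorted(SEQUENCE_WORDS_1, key=len, reverse=True))
_OPENER = re.compile(r"^\s*(" + _ALTERNATIVES + r")\b[,\s]*", re.IGNORECASE)


def sequence_candidates(answer: str) -> List[Tuple[str, str]]:
    """Return (sequence word, rest of sentence) for every sentence of the
    answer that opens with a sequence word."""
    found = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        match = _OPENER.match(sentence)
        if match:
            rest = sentence[match.end():].rstrip(".!? ")
            found.append((match.group(1).lower(), rest))
    return found


answer = ("First, member hands over the book and receipt. "
          "Next, staff files borrowing receipts to its shelf.")
print(sequence_candidates(answer))
```

Anchoring the match at the start of the sentence keeps mid-sentence uses of ‘after’ or ‘when’ from being treated as sequence markers; Algorithm 1 itself checks token membership, so it may behave differently on such sentences.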

- Noun (NN) as the nominal subject (nsubj): ‘librarian’.

- Verb, 3rd person singular present (VBZ) as a transitive verb: ‘analyzes’.

- Object consisting of a determiner (DT), a plural noun (NNS), and a past participle (VBN): ‘the books needed’.
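The tagged parse listed above maps onto a subject–verb–object triplet. The sketch below works on hand-labelled tokens rather than calling a parser, so the `Token` type, the function name `extract_triplet`, and the `acl` label on ‘needed’ are assumptions; it is a simplified illustration, not the paper's Algorithm 2.

```python
from typing import List, NamedTuple, Optional, Tuple


class Token(NamedTuple):
    text: str
    tag: str  # Penn Treebank POS tag, e.g. NN, VBZ, NNS
    dep: str  # dependency label, e.g. nsubj, dobj


def extract_triplet(tokens: List[Token]) -> Optional[Tuple[str, str, str]]:
    """Return (subject, verb, object): the first noun tagged nsubj, the first
    verb after it, and the remaining non-punctuation tokens as the object."""
    subj = next((i for i, t in enumerate(tokens)
                 if t.dep == "nsubj" and t.tag.startswith("NN")), None)
    if subj is None:
        return None
    verb = next((i for i in range(subj + 1, len(tokens))
                 if tokens[i].tag.startswith("VB")), None)
    if verb is None:
        return None
    obj = " ".join(t.text for t in tokens[verb + 1:] if t.dep != "punct")
    return tokens[subj].text, tokens[verb].text, obj


# 'The librarian analyzes the books needed.', tagged by hand.
sentence = [
    Token("The", "DT", "det"), Token("librarian", "NN", "nsubj"),
    Token("analyzes", "VBZ", "ROOT"), Token("the", "DT", "det"),
    Token("books", "NNS", "dobj"), Token("needed", "VBN", "acl"),
    Token(".", ".", "punct"),
]
print(extract_triplet(sentence))
```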

Algorithm 2. Fact type candidate based on triplet extraction

    Data: input = answer
    Output: fact type candidate
    for answer in enumerate(data_interview):
      if answer not in fact type candidate:
        check noun subject in answer
        if noun subject exists in answer:
          for index w in answer:
            change answer to tokens
            if answer in tokens:
              insert token with noun subject into data temporary
              insert token with verb into data temporary
              if token with verb = 'of':  # check temporary verb with 'of'
                for iteration as many as nlp:
                  check initialization = True
                  if answer has a verb and answer does not contain 'of':
                    check initialization = False
                  if check initialization = False:
                    take index before the sentence
                    insert sentence after index before the sentence
              else:
                insert temporary subject
                insert temporary verb
                for iteration as many as nlp:
                  check initialization = True
                  if answer verb is followed by determiner (the) and object:
                    check initialization = False
                  else if answer verb is followed by object:
                    check initialization = False
                  if check initialization = False:
                    take index before the sentence
                    insert sentence after index before the sentence

3.3. Searching Actor Candidate

Algorithm 3. Actor candidate

    Data: data_interview
    Output: actor candidate
    for answer in enumerate(data_interview):
      process answer with nlp
      check whether the nlp result contains a noun object
      if the nlp result contains a noun object:
        change answer to tokens
        for index w, iterating as many times as the answer contains a noun subject:
          if index w exists in tokens:
            insert token with noun subject into data temporary
            insert token with verb into data temporary
            if token with verb = 'of':
              for index i, t iterating over the nlp result:
                check = True
                if answer has a verb and not an object:
                  if answer has punct:
                    insert subject with answer
                  else if the next word is followed by punct:
                    insert answer into data temporary
                  else:
                    insert answer with space into data temporary
                if answer has a noun subject:
                  if previous word is a determiner:
                    insert previous word into data temporary
                  else:
                    insert subject into temporary subject
                if answer has a verb and answer does not contain 'of':
                  check = False
                if check = False:
                  if data temporary subject is not noun subject:
                    show subject data temporary
                    reset temporary
                    break
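A compact way to see what Algorithm 3 collects is sketched below: the noun subject of each parsed sentence becomes an actor candidate, with determiners dropped and duplicates removed. The hand-labelled, shortened parses, the `Token` type, the function name `actor_candidates`, and the handling of compound modifiers are all assumptions for illustration.

```python
from typing import List, NamedTuple


class Token(NamedTuple):
    text: str
    dep: str  # dependency label, e.g. det, compound, nsubj


def actor_candidates(sentences: List[List[Token]]) -> List[str]:
    """Collect the first noun subject of each sentence, together with any
    compound modifiers directly before it, without determiners or duplicates."""
    actors: List[str] = []
    for tokens in sentences:
        for i, tok in enumerate(tokens):
            if tok.dep != "nsubj":
                continue
            start = i
            while start > 0 and tokens[start - 1].dep == "compound":
                start -= 1
            actor = " ".join(t.text.lower() for t in tokens[start:i + 1])
            if actor not in actors:
                actors.append(actor)
            break  # keep one actor per sentence
    return actors


parsed = [
    [Token("The", "det"), Token("librarian", "nsubj"), Token("analyzes", "ROOT")],
    [Token("member", "nsubj"), Token("hands", "ROOT"), Token("over", "prt")],
    [Token("staff", "nsubj"), Token("files", "ROOT"), Token("receipts", "dobj")],
    [Token("Next", "advmod"), Token(",", "punct"), Token("the", "det"),
     Token("librarian", "nsubj"), Token("compiles", "ROOT")],
]
print(actor_candidates(parsed))
```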

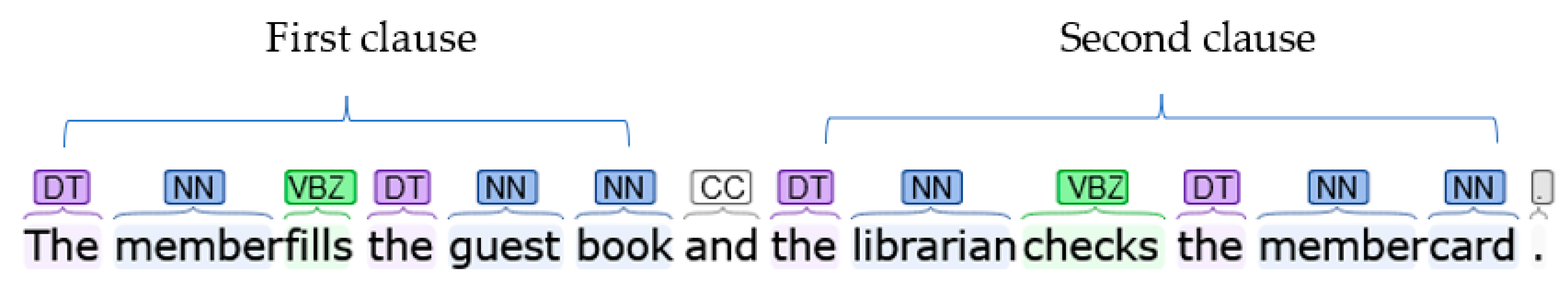

3.4. Extracting Fact Type

- Fact type 1: ‘Member fills the guest book’.

- Fact type 2: ‘Librarian checks the member card’.

Algorithm 4. Fact type from compound sentence

    Data: fact type candidate
    Output: fact type
    sentence = compound sentence
    data = split(r'and| for| nor| but| or| yet| so', sentence)
    show data
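Algorithm 4 is essentially a regular-expression split. The sketch below uses Python's `re.split` with the same coordinating conjunctions, but wraps them in `\b` word boundaries, which is a deviation from the pattern above: without boundaries, ‘ or’ also matches inside words such as ‘order’. The function name `split_compound` is hypothetical; the example input is the compound fact type from Section 4.3.

```python
import re
from typing import List

# Algorithm 4's coordinating conjunctions. The \b word boundaries are an
# addition here: without them ' or' also matches inside ' order'.
COORDINATORS = ["and", "for", "nor", "but", "or", "yet", "so"]
_SPLIT = re.compile(r"\s*\b(?:" + "|".join(COORDINATORS) + r")\b\s*", re.IGNORECASE)


def split_compound(sentence: str) -> List[str]:
    """Split a compound sentence into clauses at coordinating conjunctions."""
    parts = _SPLIT.split(sentence.strip().rstrip("."))
    return [p.strip(" ,") for p in parts if p.strip(" ,")]


print(split_compound("librarian submits into the circulation or "
                     "librarian submits into reference sub section afterwards"))
```

A plain split cannot tell clause coordination from noun-phrase coordination: ‘member hands over the book and receipt’ would be cut after ‘book’. Each piece therefore needs its own subject and verb check, for example with the triplet extraction of Algorithm 2.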

- Fact type 1: ‘Librarian checks the book’.

- Fact type 2: ‘Librarian writes the logs of returning book on the borrowing card’.

- Fact type 1: ‘Member comes to the library’.

- Fact type 2: ‘Member fills the guest book’.

- Fact type 3: ‘Librarian checks the member card’.

Algorithm 5. Fact type from compound-complex sentence

    Data: fact type candidate
    Output: fact type
    sentence = compound-complex sentence
    data = split(r'and| for| nor| but| or| yet| so| after| once| until| although| then| provided that| when| as| rather than| whenever| because| since| where| before| so that| whereas| even if| than| wherever| even though| that| whether| if| though| while| in order that| unless| why', sentence)
    show data
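The same idea extends to the connective list of Algorithm 5. In the sketch below, phrases are tried longest first so that ‘so that’ is preferred over ‘so’ and ‘even though’ over ‘though’. ‘else’ is not in the paper's list; it is added here (an assumption) so that the if/then/else response from Section 4.3 splits completely. The function name `split_clauses` is hypothetical.

```python
import re
from typing import List

# Connectives from Algorithm 5; 'else' is NOT in that list and is added here
# only so that the if/then/else example below splits completely (an assumption).
CONNECTIVES = ["and", "for", "nor", "but", "or", "yet", "so", "after", "once",
               "until", "although", "then", "provided that", "when", "as",
               "rather than", "whenever", "because", "since", "where", "before",
               "so that", "whereas", "even if", "than", "wherever", "even though",
               "that", "whether", "if", "though", "while", "in order that",
               "unless", "why", "else"]

# Longest phrases first, e.g. 'so that' before 'so', 'even though' before 'though'.
_ALT = "|".join(re.escape(c) for c in sorted(CONNECTIVES, key=len, reverse=True))
_SPLIT = re.compile(r"\s*\b(?:" + _ALT + r")\b\s*", re.IGNORECASE)


def split_clauses(sentence: str) -> List[str]:
    """Split a compound-complex sentence into clauses at the listed connectives."""
    parts = _SPLIT.split(sentence.strip().rstrip("."))
    return [p.strip(" ,") for p in parts if p.strip(" ,")]


print(split_clauses("if student chooses to save the file, then the file will be "
                    "saved on the storage device, else prints the file"))
```

The last clause comes out as ‘prints the file’. Producing the paper's fact type ‘student prints the file’ also requires carrying the subject of the first clause into the else-branch, which this sketch does not do.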

3.5. Extracting Process Name

Algorithm 6. Extracting process name

    Data: input = question
    Output: process name
    if previous question is not question data:
      show new line
      show question
      process question with nlp
      for index t, iterating over the result of the nlp process:
        if question has compound type:
          if previous word of question has amod type:
            insert nlp result with amod type into temporary data
            for compound type until the last word:
              if nlp result has punct type:
                insert nlp result into temporary data
                show temporary data
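Algorithm 6 builds the process name from compound (and optional amod) dependencies in the interview question. The sketch below is a simplified reading on hand-labelled tokens: the question text is hypothetical, and the `Token` type and the function name `process_name` are assumptions. It returns an optional adjectival modifier, the compound chain, and the head noun that follows.

```python
from typing import List, NamedTuple


class Token(NamedTuple):
    text: str
    dep: str  # spaCy-style dependency label


def process_name(tokens: List[Token]) -> str:
    """Return the phrase around the first compound modifier: an optional amod
    just before it, the chain of compounds, and the head noun that follows."""
    for i, tok in enumerate(tokens):
        if tok.dep != "compound":
            continue
        start = i - 1 if i > 0 and tokens[i - 1].dep == "amod" else i
        end = i
        while end + 1 < len(tokens) and tokens[end + 1].dep == "compound":
            end += 1
        # Include the head noun that the compound chain modifies, if present.
        if end + 1 < len(tokens) and tokens[end + 1].dep != "punct":
            end += 1
        return " ".join(t.text for t in tokens[start:end + 1])
    return ""


# Hypothetical interview question, hand-labelled.
question = [
    Token("How", "advmod"), Token("does", "aux"), Token("the", "det"),
    Token("procurement", "compound"), Token("section", "nsubj"),
    Token("work", "ROOT"), Token("?", "punct"),
]
print(process_name(question))
```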

3.6. Generating SBVR

- ‘It is obligatory that <fact type 2> after <fact type 1>’.

Algorithm 7. Fact type operational rule in SBVR

    Data: fact type candidate
    Output: SBVR
    # process SBVR
    topic = 0
    sbvr S = result of SBVR process on interview data
    sbvr C = result of SBVR process check sentence
    for index i, iterating over the result of the SBVR process:
      if index i = 0 or sbvr has no previous sbvr:
        show topic
      if index i is not in complete sentences and sbvr S has a next sbvr S and sbvr C is 0 and next sbvr C is 0:
        print('It is obligatory that', next complete sentence, 'after', complete sentence)
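Stripped of its bookkeeping flags (sbvr S, sbvr C), Algorithm 7 pairs each fact type with the one that follows it. The sketch below shows only that pairing: it assumes every fact type is a complete sentence, and the function name `generate_sbvr` and the topic label are hypothetical. The input is the three fact types of the conjunction example in Section 3.6.1, with the conjunctive fact type kept as one unit.

```python
from typing import List


def generate_sbvr(topic: str, fact_types: List[str]) -> List[str]:
    """Emit the topic once, then one operational rule for every pair of
    consecutive fact types: 'It is obligatory that <next> after <current>'."""
    lines = [topic]
    for current, nxt in zip(fact_types, fact_types[1:]):
        lines.append(f"It is obligatory that {nxt} after {current}")
    return lines


fact_types = [
    "librarian determines the exhibition themes",
    "librarian selects materials, librarian determines design, "
    "librarian supports event, and librarian prepares promotion concept",
    "librarian does the exhibition together with the team",
]
for line in generate_sbvr("exhibition", fact_types):  # hypothetical topic label
    print(line)
```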

3.6.1. Conjunction

- Response 1: ‘Firstly, the librarian determines the exhibition themes’.

- Response 2 with conjunction: ‘The librarian selects material, librarian determines design, librarian prepares support event, and librarian prepares promotion concept’.

- Response 3: ‘Then, the librarian does the exhibition together with the team’.

- Fact type 1: ‘librarian determines the exhibition themes’.

- Fact type 2 with conjunction: ‘librarian selects materials, librarian determines design, librarian supports event, and librarian prepares promotion concept’.

- Fact type 3: ‘librarian does the exhibition together with the team’.

3.6.2. Exclusive Disjunction

- fact type: ‘member active registered’

- fact type: ‘member allowed to enter the library else not allowed to enter’.

3.6.3. Inclusive Disjunction

- ‘librarian categorizes scientific paper as thesis’

- ‘librarian categorizes scientific paper as dissertation’

- ‘librarian categorizes other scientific paper’

- ‘librarian puts the scientific paper in the cabinet.’

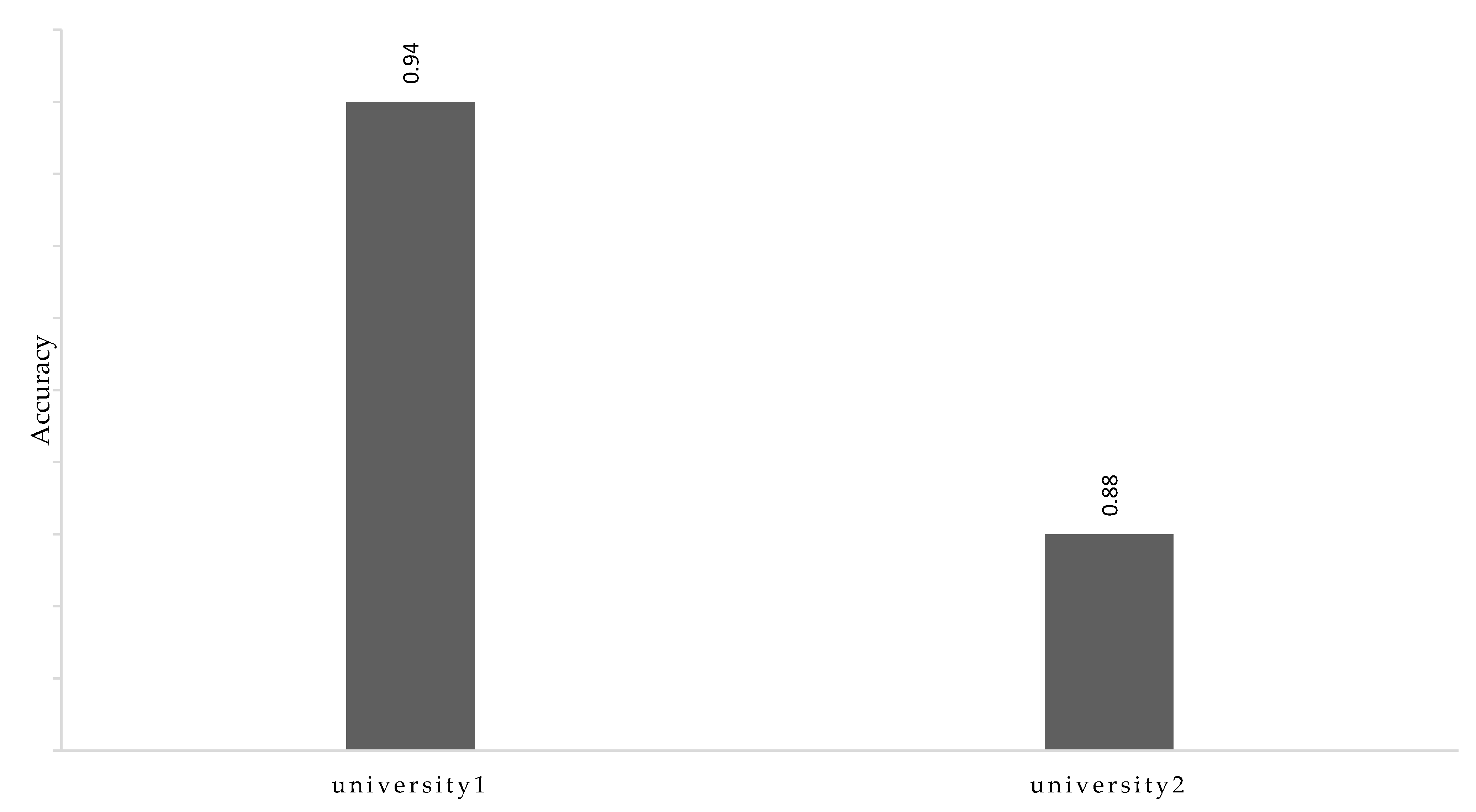

4. Results and Discussion

4.1. Scenario

4.2. Description of University Library Case Study

4.3. Extracting Fact Type

- fact type: ‘librarian submits into the circulation or librarian submits into reference sub section afterwards’.

- fact type: ‘librarian submits into the circulation’.

- fact type: ‘librarian submits into reference sub section afterwards’.

- ‘First, member hands over the book and receipt’.

- ‘Next, staff files borrowing receipts to its shelf’.

- ‘member hands over the book and receipt’.

- ‘staff files borrowing receipts to its shelf’.

- ‘then, student scans the id card barcode’.

- ‘if student chooses to save the file, then the file will be saved on the storage device, else prints the file’.

- fact type: ‘student scans the id card barcode’.

- fact type: ‘student chooses to save the file’.

- fact type: ‘the file will be saved on the storage device’.

- fact type: ‘student prints the file’.

4.4. Generating SBVR

5. Threats to Validity

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Question ID | Process Name | Number of Sentences | Number of Words in Response | Number of Verbs | Sentence: Sequence Word | Sentence: Compound | Sentence: Complex | Sentence: Compound-Complex |
|---|---|---|---|---|---|---|---|---|
| q1 | - | 2 | 24 | 3 | - | - | - | - |
| q2 | procurement section | 12 | 146 | 27 | 6 | 3 | 1 | - |
| q3 | processing section | 6 | 74 | 6 | 5 | 1 | 2 | - |
| … | … | … | … | … | … | … | … | … |
| q16 | inventarisation | 6 | 61 | 5 | 4 | - | - | 1 |
| Total | | 110 | 1247 | 171 | 61 | 11 | 10 | 7 |

| Question ID | Process Name | Number of Sentences | Number of Words in Response | Number of Verbs | Sentence: Sequence Word | Sentence: Compound | Sentence: Complex | Sentence: Compound-Complex |
|---|---|---|---|---|---|---|---|---|
| r1 | - | 4 | 63 | 6 | - | 5 | - | - |
| r2 | LPBP Lelang | 8 | 60 | 8 | 7 | - | - | - |
| r3 | LPENGOLBP book | 7 | 51 | 7 | 6 | - | - | - |
| … | … | … | … | … | … | … | … | … |
| r33 | Training, seminars, and workshops held in library | 18 | 264 | 17 | 6 | 3 | 1 | - |
| Total | | 288 | 2585 | 337 | 195 | 17 | 13 | 16 |

| Question ID | Precision | Recall | Specificity | Accuracy |
|---|---|---|---|---|
| q1 | - | - | - | - |
| q2 | 1.00 | 1.00 | 1.00 | 1.00 |
| q3 | 0.83 | 0.83 | 0.83 | 0.83 |
| q4 | - | - | - | - |
| q5 | 1.00 | 1.00 | 1.00 | 1.00 |
| q6 | 0.88 | 0.88 | 0.88 | 0.88 |
| q7 | 1.00 | 1.00 | 1.00 | 1.00 |
| q8 | 1.00 | 1.00 | 1.00 | 1.00 |
| q9 | 1.00 | 1.00 | 1.00 | 1.00 |
| q10 | 1.00 | 1.00 | 1.00 | 1.00 |
| q11 | 1.00 | 1.00 | 1.00 | 1.00 |
| q12 | 1.00 | 1.00 | 1.00 | 1.00 |
| q13 | 1.00 | 1.00 | 1.00 | 1.00 |
| q14 | 1.00 | 1.00 | 1.00 | 1.00 |
| q15 | 1.00 | 1.00 | 1.00 | 1.00 |
| q16 | 1.00 | 1.00 | 1.00 | 1.00 |
| Average | 0.98 | 0.98 | 0.98 | 0.98 |

| Question ID | Precision | Recall | Specificity | Accuracy |
|---|---|---|---|---|
| r1 | - | - | - | - |
| r2 | 1.00 | 1.00 | 1.00 | 1.00 |
| r3 | 1.00 | 1.00 | 1.00 | 1.00 |
| r4 | 1.00 | 1.00 | 1.00 | 1.00 |
| r5 | 1.00 | 1.00 | 1.00 | 1.00 |
| r6 | 1.00 | 1.00 | 1.00 | 1.00 |
| r7 | 1.00 | 1.00 | 1.00 | 1.00 |
| r8 | 1.00 | 1.00 | 1.00 | 1.00 |
| r9 | 1.00 | 1.00 | 1.00 | 1.00 |
| r10 | 1.00 | 1.00 | 1.00 | 1.00 |
| r11 | 1.00 | 1.00 | 1.00 | 1.00 |
| r12 | 0.86 | 0.75 | 0.88 | 0.81 |
| r13 | 1.00 | 0.80 | 1.00 | 0.90 |
| r14 | 1.00 | 0.86 | 1.00 | 0.93 |
| r15 | 1.00 | 1.00 | 1.00 | 1.00 |
| r16 | 1.00 | 0.88 | 1.00 | 0.94 |
| r17 | 1.00 | 1.00 | 1.00 | 1.00 |
| r18 | 1.00 | 1.00 | 1.00 | 1.00 |
| r19 | 0.80 | 0.67 | 0.83 | 0.75 |
| r20 | 1.00 | 1.00 | 1.00 | 1.00 |
| r21 | 1.00 | 0.89 | 1.00 | 0.94 |
| r22 | 1.00 | 1.00 | 1.00 | 1.00 |
| r23 | 1.00 | 1.00 | 1.00 | 1.00 |
| r24 | 1.00 | 0.81 | 1.00 | 0.91 |
| r25 | 1.00 | 0.83 | 1.00 | 0.92 |
| r26 | 1.00 | 1.00 | 1.00 | 1.00 |
| r27 | 0.87 | 0.76 | 0.88 | 0.82 |
| r28 | 0.83 | 0.63 | 0.88 | 0.75 |
| r29 | 1.00 | 1.00 | 1.00 | 1.00 |
| r30 | 0.95 | 0.83 | 0.96 | 0.89 |
| r31 | 1.00 | 0.77 | 1.00 | 0.88 |
| r32 | 1.00 | 0.67 | 1.00 | 0.83 |
| r33 | 1.00 | 1.00 | 1.00 | 1.00 |
| Average | 0.96 | 0.89 | 0.98 | 0.93 |
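The four measures are not defined in this section. The sketch below uses their standard confusion-matrix definitions, which is an assumption about how the tables were computed. The counts in the example (TP = 6, FP = 1, FN = 2, TN = 7) are hypothetical. They were chosen only because they reproduce the r12 row (0.86, 0.75, 0.88, 0.81) and are not the authors' data. The function name `confusion_metrics` is likewise illustrative.

```python
from typing import Dict


def confusion_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Standard confusion-matrix measures."""
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }


# Hypothetical counts that happen to reproduce the r12 row.
for name, value in confusion_metrics(tp=6, fp=1, fn=2, tn=7).items():
    print(f"{name}: {value:.2f}")
```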

| Question ID | SBVR | Error Due to Wrong Fact Type | Error Due to Wrong Sequencing | Accuracy |
|---|---|---|---|---|
| q1 | - | - | - | - |
| q2 | 11 | - | - | 1.00 |
| q3 | 5 | 2 | - | 0.60 |
| q4 | - | - | - | - |
| q5 | 3 | - | - | 1.00 |
| q6 | 5 | 2 | - | 0.60 |
| q7 | 4 | - | - | 1.00 |
| q8 | 4 | - | - | 1.00 |
| q9 | 6 | - | - | 1.00 |
| q10 | 8 | - | - | 1.00 |
| q11 | 5 | - | - | 1.00 |
| q12 | 8 | - | - | 1.00 |
| q13 | 10 | - | - | 1.00 |
| q14 | 13 | - | - | 1.00 |
| q15 | 15 | - | - | 1.00 |
| q16 | 11 | - | - | 1.00 |
| Average | | | | 0.94 |
| Variance | | | | 0.02 |
| Standard Deviation | | | | 0.15 |
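For every row, the Accuracy column equals (SBVR − errors due to wrong fact type − errors due to wrong sequencing) / SBVR. The Standard Deviation row matches the sample (n − 1) estimator; the population estimator would give 0.14. A short check with Python's `statistics` module is shown below. The `-` cells are read as zero, q1 and q4 are left out as in the table, and the names `ROWS` and `accuracy` are illustrative.

```python
import statistics

# (SBVR rules, wrong-fact-type errors, wrong-sequencing errors) per question,
# copied from the table above with '-' read as 0.
ROWS = {
    "q2": (11, 0, 0), "q3": (5, 2, 0), "q5": (3, 0, 0), "q6": (5, 2, 0),
    "q7": (4, 0, 0), "q8": (4, 0, 0), "q9": (6, 0, 0), "q10": (8, 0, 0),
    "q11": (5, 0, 0), "q12": (8, 0, 0), "q13": (10, 0, 0), "q14": (13, 0, 0),
    "q15": (15, 0, 0), "q16": (11, 0, 0),
}


def accuracy(total: int, wrong_fact: int, wrong_seq: int) -> float:
    """Share of generated SBVR rules with neither error type."""
    return (total - wrong_fact - wrong_seq) / total


scores = [accuracy(*row) for row in ROWS.values()]
mean = statistics.mean(scores)
variance = statistics.variance(scores)  # sample variance (n - 1)
stdev = statistics.stdev(scores)        # sample standard deviation
print(f"average={mean:.2f} variance={variance:.2f} sd={stdev:.2f}")
```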

| Question ID | SBVR | Error Due to Wrong Fact Type | Error Due to Wrong Sequencing | Accuracy |
|---|---|---|---|---|
| r1 | - | - | - | - |
| r2 | 7 | - | - | 1.00 |
| r3 | 6 | - | - | 1.00 |
| r4 | 6 | - | - | 1.00 |
| r5 | 6 | - | - | 1.00 |
| r6 | 3 | - | - | 1.00 |
| r7 | 9 | - | - | 1.00 |
| r8 | 3 | - | - | 1.00 |
| r9 | 4 | - | - | 1.00 |
| r10 | 6 | - | - | 1.00 |
| r11 | 5 | - | - | 1.00 |
| r12 | 5 | - | 2 | 0.60 |
| r13 | 3 | - | 1 | 0.67 |
| r14 | 5 | - | 1 | 0.80 |
| r15 | 6 | - | - | 1.00 |
| r16 | 5 | - | - | 1.00 |
| r17 | 5 | - | - | 1.00 |
| r18 | 3 | - | - | 1.00 |
| r19 | 5 | 2 | 1 | 0.40 |
| r20 | 18 | - | - | 1.00 |
| r21 | 7 | - | 1 | 0.86 |
| r22 | 8 | - | - | 1.00 |
| r23 | 5 | - | - | 1.00 |
| r24 | 12 | - | 3 | 0.75 |
| r25 | 5 | 1 | 1 | 0.60 |
| r26 | 7 | - | - | 1.00 |
| r27 | 11 | - | 2 | 0.82 |
| r28 | 4 | 1 | 1 | 0.50 |
| r29 | 7 | - | - | 1.00 |
| r30 | 13 | 2 | 2 | 0.69 |
| r31 | 7 | - | 1 | 0.86 |
| r32 | 3 | - | 1 | 0.67 |
| r33 | 10 | - | - | 1.00 |
| Average | | | | 0.88 |
| Variance | | | | 0.03 |
| Standard Deviation | | | | 0.18 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tangkawarow, I.; Sarno, R.; Siahaan, D. ID2SBVR: A Method for Extracting Business Vocabulary and Rules from an Informal Document. Big Data Cogn. Comput. 2022, 6, 119. https://doi.org/10.3390/bdcc6040119


Chicago/Turabian style: Tangkawarow, Irene, Riyanarto Sarno, and Daniel Siahaan. 2022. "ID2SBVR: A Method for Extracting Business Vocabulary and Rules from an Informal Document" Big Data and Cognitive Computing 6, no. 4: 119. https://doi.org/10.3390/bdcc6040119
