Abstract

The scientific study of teamwork in the context of long-term spaceflight has uncovered a considerable amount of knowledge over the past 20 years. Although much is known about the underlying factors and processes of teamwork, much remains to be discovered about teams that operate in extreme isolation during spaceflight. Thus, special consideration must be given to enhancing teamwork and team well-being on long-term missions during which the team will live and work together. Mental and physical stress experienced during teammate conversations may directly or indirectly affect team members’ speech acoustics, facial expressions, lexical choices and physiological responses. The purpose of this article is (a) to illustrate the relationship between vocal-acoustic, language and physiological cues during stressful teammate conversations, (b) to delineate promising research paths that can further our understanding of the mechanisms underlying high team cohesion during spaceflights, (c) to build upon our recently published preliminary experimental results, which used a dyadic team corpus recorded during the demanding operational task of “defusing a bomb”, and (d) to outline a list of parameters that should be considered and examined in team-effectiveness research for spaceflight and similarly stressful conditions. We expect this work to take us one step towards building a non-intrusive and relatively inexpensive set of measures, deployed in ground analogs, to assess the complex and dynamic behavior of individuals.

1. Introduction

Assessing team cohesion and effectiveness with pervasive sensing technologies in real-life settings is a growing field in human research [1,2,3,4,5,6,7]. In attempting to understand what makes teamwork effective, an initial step would be to define what makes the members of a team stay committed to the team. Thus, the term team cohesion was introduced [8] to refer to the bonds that keep team members together. As such, team cohesion refers to the outcome of all forces acting on team members to remain in the team. The concept of team cohesion has a wide appeal in the research fields of applied psychology, in organizational behavior, in military psychology, in sport psychology, etc. Much of this interest is inspired by the widely shared assumption that keeping teams together is important for successful functioning of systems relying on teamwork. Among the first works that studied the relation between team cohesion and team performance and effectiveness is [9]. According to the authors’ outcome, team cohesion should be approached as a construct according to which the aspect of cohesion is related with team members’ commitment to task performance. Moreover, it has been shown according to [10] that work overload in military teams decreases the team members’ cohesion (conceptualized as a team’s task and emotional peer support), which in turn results in perceptions of inferior performance at both the individual and team levels. Finally, it has been shown that when team members perceive strong team cohesion, they tend to experience less stress, stresses that could be expressed through depression, confusion, mood disturbance, etc. [11].

Excessive stress may lead to a variety of psychological, physiological and psychosomatic health conditions, such as anxiety [12]. For example, a lack of proper physiological function can lead to a degradation in performance and even cardiovascular issues [13]. As such, the automatic detection of stress through vocal-acoustic, language, facial and physiological cues can be very useful, as it can provide an early warning to the user and even help reduce the physical and mental health issues caused by long-term stress exposure.

Stress consists of several complementary and interacting components ranging from cognitive, affective, central and peripheral physiological [14] and composes the organism’s response to changing internal and/or external conditions and demands [15]. Historically, the potential negative impact of stress on body physiology and health has long been recognized [16]. Although stress is a subjective and multifaceted phenomenon and as such is hard to measure comprehensively through objective means, current research attempts to shed light on the characteristics of the stressor itself and the various biological and psychological vulnerabilities and ways that stress may be observable through facial, vocal-acoustics and physiological (i.e., higher heart rate, sweaty palms) characteristics.

More specifically, stress can be detected through biosignals that quantify physiological measures. Speech features are also affected under stress, and the fundamental frequency of the voice has been the most investigated [17,18]. The heart rate also has an impact on the human face, as facial skin hue varies in accordance with concurrent changes in the blood volume transferred from the heart. Additionally, the heart rate increases during conditions of stress [19,20,21,22] and heart rate variability parameters differentiate stress and neutral states [23,24]. Stress is reflected not only in a person’s internal or external state, but also in how they interact with others, especially in a collaborative setting. Considering that collaborative interactions are patterned by a sequence of dynamic indicators, one team member approaches another through physical movement, heart rate fluctuations, facial expressions and vocalization, leading to engagement or not by one member or the other. Similarly, NASA team members of a spaceflight convert inputs to outcomes through cognitive, verbal and behavioral processes while organizing task work (situational context) to achieve collective goals [25].

This research work studies whether individual behavior and social interactions provide useful information for assessing affective states and teammate cohesion. According to psychology, individual affect is influenced by peers’ emotion [26] and the strength of social links among teammates [27]. Our paper analyzes the affective state of team members in isolated, confined and extreme conditions [1]. Specifically, we analyze a dyadic team corpus to examine the association between the “defuser’s” physiological signals following the “instructor’s” questions and the duration of the turn-taking period, and to predict whether those signals occurred in a short or long turn-taking period. Our results suggest that an association does exist between turn taking and inner affective state. We should keep in mind that during in-the-wild scenarios, confined teams are unable to obtain help from outside to moderate their conflicts. Therefore, being able to infer these states is highly desirable and, in these scenarios, the team effect on individual affective state is considered a very important factor.

1.1. Article’s Objectives

This work’s objective is to explore and highlight the value of monitoring long-term team behaviors during demanding operational tasks, such as defusing a bomb, and to replicate them at a later point in spaceflight conditions. Extensive behavioral research in group studies [28,29,30,31] has shown that team states are associated with unique patterns of individual behaviors, social interactions and team cohesion [32]. Cohesion can be defined more specifically as the tendency for a team to be in unity while working towards a common goal; this definition captures a number of important aspects of cohesion, ranging from its multidimensionality, to its dynamic nature, to its emotional dimension. The definition can be further generalized to most groups, such as sports teams, work groups or military units. Studies have shown that cohesion and performance are two aspects that are complementary and non-overlapping [9,33,34,35,36]. This observation is still widely accepted even when cohesion is defined as attraction [33], task commitment or group pride. In general, when cohesion is expressed in all these ways, it is positively related to performance [33]. However, the strength of the cohesion–performance association varies across teams: smaller teams have a stronger cohesion–performance relationship than larger teams [9]. Maintaining healthy affective states and cohesion within a team is of crucial importance for its performance. Under this view, we aim to investigate to what extent we can make inferences about both individual affective states and teammate cohesion. Our work focuses on the following types of interactional behavior. The first is the strength of communication through facial interaction; specifically, the amount of face-to-face interaction has been related to several group concepts.
For example, the amount of face-to-face interactions was shown to infer personal relationships [37], roles within groups and group performance in prior works [38,39]. The second type of behavior is related to teammates’ vocal activities, which have been associated with excitement and high levels of interest. Variations in vocal activities usually correspond to fluctuations in people’s mood [39]. The last behavior patterns refer to physiological bodily expressions, such as heart rate fluctuations and sweating palms, which affect the dynamics within dyadic teammate interactions.

1.2. The Bomb Defusal Dataset

Team cohesion is considered to be a dynamic phenomenon. Unlike prior studies focused on a short period of team collaborations, for example, meetings [40], our work investigates team cohesion in longitudinal settings. In particular, the dyadic cooperative team corpus [41] employed a 2 × 2 between-subjects design with 20 gender-matched pairs in the following two experimental conditions: the Ice Breaker conversation (IB) condition, in which teammates gained familiarity through a series of “getting to know you” questions prior to the start of the task, and the Control (CT) condition, in which teammates simply began the task with no prior familiarity. The corpus consists of a series of simulated “bomb defusal” scenarios. In each scenario, one team member served as the “defuser” and one team member served as the “instructor”. The “instructor” was given a manual with instructions on how to defuse the bomb and was told that it was their responsibility to provide information that would allow the defuser to successfully complete the task. After each separate task, the team members switched roles (i.e., each team member was given the opportunity to be both the “defuser” and the “instructor” twice during the main task), which resulted in a total of four main tasks, each lasting an average of 5 min. For this work, we take into account only 10 gender-matched pairs (five from each condition) and we examine only the situation in which participant A is the instructor and participant B is the defuser (i.e., we did not examine the cases in which the members switched roles).

1.3. Situational Context

During collaborative tasks, teammates convert inputs to outcomes through cognitive, verbal and behavioral processes while organizing task work (situational context) to achieve collective goals [25]. Incorporating context into human affective behavior analysis lies at the intersection of context-aware affective analysis and affect-aware intelligent human–computer interaction since contextual information cannot be discounted in doing automatic analysis of human affective behavior. Thus, by tackling the issues of context-aware affect analysis, through the careful study of contextual information and its relevance in context-aware systems, its representation, its modeling and its incorporation, it is possible to outline a roadmap showing the most important steps still to be made towards real-world affect analysis.

There is no doubt that “behavioral production” is affected by the situational context at a given moment, such as the resources that are nearby, what the participant is doing, the situation that is occurring, the identities of the people around the user as well as the degree of expressiveness. Any complementary contextual information helps to answer the question “what is more likely to happen”, thus steering the classifier towards more likely/relevant categories. Without context, even people can misinterpret observable expressions. With this in mind, previous research efforts focused on developing novel computational ways to analyze behavior in a specific context (context-aware behavior analysis) [42].

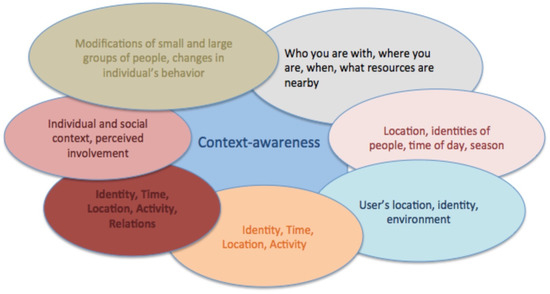

According to the first work, which introduced the term context awareness, the important aspects of context are the following: Who you are with, Where you are, When, What resources are nearby [43]. Thus, context-aware systems examine what the user is doing and further use this information to determine why the situation is taking place. An additional definition has been given in [44], in which authors define context as place (location), the identities of the people around the user, the time of day, season, temperature, etc. Similarly, [45] refer to the term “context” as the user’s location, environment, identity and time, while others [46,47] simply use synonyms for that term. For a more extended overview on context awareness, the reader is referred to [48].

With respect to context incorporation in multiparty human communicative behavior, the context model, which was formalized as the combination of “Identity”, “Time”, “Location” and “Activity” as presented in [48], was enriched in [49] to include the contextual type “Relations”, which encapsulates any possible relation that a person may establish with others during an interaction. The “Relations” type expresses a dependency that emerges among the interlocutors and also acts as an index into other sources of contextual information. For example, given a person’s affiliation, related information, such as social associations, teammates, co-workers and relatives, can be acquired.

Recently, the term Relations has been used to refer to the relation between the individual and the social context in terms of perceived involvement [50] and to the changes detected in a group’s involvement in a multiparty interaction [51]. Thus, it is clear that understanding multiparty human communicative behavior implies an understanding of the modifications of the social structure and dynamics of small [52] and large groups (friends, colleagues, families, students, etc.) [53] and of the changes in individuals’ behaviors and attitudes that occur because of their membership in social and situational settings [54]. For a broader overview of how the term context has been defined, the reader is directed to [55]. We conclude, as presented in Figure 1, that the ability of humans to interact socially in an effective way relies heavily on their awareness of the situational context (spaceflight conditions) in which the interaction takes place.

Figure 1. Definitions of the term context according to the bibliography covering the past 20 years [42].

2. Defining and Detecting Stress through Physiological, Vocal and Language Characteristics and Studying the Interplay among Them

Stress has become a serious problem that affects different life situations and contributes to a wide range of diseases, from cardiovascular to cerebrovascular disease [56]. In the research area of well-being, the problem of managing stress has been receiving increasing attention [57]. Technologies that automatically recognize stress can become a powerful tool to motivate people to adjust their behavior and lifestyle to achieve a better stress balance. Technology such as sensors can be used to obtain an objective measurement of stress level. Currently, two models of stress are still in use. The General Adaptation Syndrome (GAS) model, proposed by [58], identifies various stages of the stress response, depending on the physical (noise, excessive heat or cold) or psychological (extreme emotion or frustration) stimuli. The other model [59] emphasizes individuals’ efforts to handle and solve the situation.

2.1. Stress Detection from Physiology

The autonomic nervous system (ANS) controls organs such as the heart, stomach and intestines. The ANS can be divided into the sympathetic and parasympathetic nervous systems. The parasympathetic nervous system is responsible for nourishing the body and calming the nerves so that they return to regular function, while the sympathetic nervous system is responsible for activating the glands and organs to defend the body from a threat. The activation of the sympathetic nervous system can be accompanied by many bodily reactions, such as an increase in the heart rate (HR), rapid blood flow to the muscles, activation of the sweat glands and an increase in the respiration rate (RR). These reactions are reflected in physiological measures, such as blood pressure (BP), blood volume pulse (BVP) and galvanic skin response (GSR), which can be measured objectively.

In our previously published research work, we studied such physiological features [60]. To be more precise, we examined the importance of these physiological features with respect to the “bomb defusal” task (“defuser”-“instructor”). We observed that, for 50% of the defusers, the most relevant physiological signal was HR. Based on this, we assumed that HR was associated with arousal levels and was of high importance for the examined task. Regarding the remaining selected physiological features, we noticed that they varied across defusers. This finding supported the original assumption of the uniqueness of individual personal traits across participants. Finally, this finding was also aligned with our experimental results and observations, which suggested that the “teammate prior familiarity” parameter did not have an impact on task performance.

2.2. Stress Detection from Voice Production Characteristics

Although the above mentioned methods provide quite satisfactory accuracy for stress detection, they need intrusive and, in some cases, invasive methods to predict stress through physiological signals. Under this context, speech scientists have recently been inspired to harness prosodic emotional cues to predict stress [61]. Particularly, respiration has been found to be highly correlated with certain emotional situations. For example, when an individual experiences a stressful situation, their respiration rate increases and, as a result, a further increase in pitch (fundamental frequency) during the voiced section [62] is noticed. At the same time, an increase in the respiration rate causes a shorter duration of speech between breaths which, in turn, affects the speech articulation rate.

Moving from ground to space, the environmental conditions vary significantly. With this in mind, we consider variability in astronauts’ speech production mechanism to be expected. To be more precise, speech production is a unique human ability as well as a highly complex process involving a large number of articulatory organs and muscles, which makes it hard to understand fully, especially in non-neutral environments. Studying astronauts’ speech in space allows for an understanding of human speech production under extraordinary conditions. Previous research on speech production under non-neutral conditions suggested that individuals adjust the manner in which they generate speech in the presence of noise. Other studies have also investigated the influences of the speaker’s cognitive state [63,64] and physical state [65,66] on the speech production mechanism, suggesting that human speech production is sensitive to both the speaker state and the surrounding conditions.

2.3. Stress Detection from Language Characteristics

The effect of stress in relation to language features has been well studied over the past decades. The way a person writes has been found to vary depending on their stress levels and, as a result, analyzing text linguistics can add additional value for analyzing a person’s stress levels.

According to current research, a number of linguistic tools dominate the analysis of linguistic features, including LIWC (http://www.liwc.net/), the Harvard General Inquirer (GI) (http://www.wjh.harvard.edu/inquirer/) and SentiStrength (http://sentistrength.wlv.ac.uk/). These tools are mainly applied to texts to measure users’ writing performance by means of lexical diversity measures, to directly analyze the “feelings” of a text by measuring its polarity (i.e., its positivity or negativity) and its strength (i.e., the degree to which it is positive or negative), or to investigate how cognition words are used to express cognitive operations such as thinking. The main linguistic features examined include lexical and content diversity, the rate of unique and content words (nouns, verbs, etc.), average word length, the passive voice rate, the third-person pronoun rate and average sentence length.

Another branch of recent research on stress detection from language cues concerns the duration of response utterances. This has been reported to be very important, as it can be indicative of conflicting mental and stress processes [67]. Considering that physiological indicators reflect aspects of underlying mental states and specifically the amount of distress [68], we previously proposed to explore whether long and short response utterances exhibit different physiological patterns [60]. We hypothesized that the investigation of physiological changes during the response periods can provide a better understanding of a team’s dynamics. Additional works have associated language cues with turn-taking behavior in dialogue systems [67], suggesting that these systems could benefit from an understanding of the interpersonal dynamics of the physiological stress response during a cooperative task [69].

2.4. Relationship among Speech-Acoustic, Language and Physiological Characteristics

Based on the previously presented examples, speech-acoustic, language and physiological cues have been well studied and employed separately to detect stress. Therefore, another interesting area of research is to explore the relationship among them. Predicting physiological responses from speech acoustics during stressful conversations could provide some insight into why and how stress leads to both vocal and physiological activations and how these two are related.

Identifying acoustic features that are related to physiological signals can also help in the development of a multimodal stress detection system. Moreover, estimating the raw values of physiological signals can provide higher-resolution quantitative metrics for the intensity of stress as opposed to just detecting the presence of stress. However, to the best of our knowledge, only a few attempts have been made in the past to tackle the problem of predicting physiological signals only from audio (speech) or text (transcripts). The authors of [70] tried to predict HR from the pronunciation of vowels. Schuller and his colleagues [71] analyzed correlation, regression and classification results for the tasks of vowel pronunciation and reading a sentence out loud with and without physical load.

Furthermore, the authors of [72] presented a research review on the association of psychological, physiological, facial and speech modalities, along with contextual measurements, to automatically detect stress under working conditions (office environment). Additionally, in [73], the authors investigated a total of 343 features extracted from sensors, smartphone logs (text messages), behavioral surveys, communication and phone usage, location patterns and weather information to predict next-day health, stress and happiness. At the same time, Ref. [74] explored the automatic detection of drivers’ stress levels from multimodal sensors and data. Aligned with these research works, Ref. [75] introduced a methodology for analyzing multimodal stress detection results through the examination of the following signals: BVP, electrocardiography (ECG), electromyogram (EMG), GSR, HR and heart rate variability (HRV) (physiological signals), as well as speech signals, body and head movement and eye gaze. Similarly, the authors of [76] investigated how vocal signals and body movements convey stress and how they can be further used for automatic stress analysis. In [77], the authors presented a review of previous and current algorithmic efforts to perceive multimodal inputs that would lead to a wearable device capable of detecting physiological signals related to deep brain activation. Finally, the authors of [78] presented a survey of stress detection within the affective computing field, aimed at achieving improved physical health.
Nevertheless, we should underline at this point that even though a number of state-of-the-art works highlight the association between multimodal signals and stress detection, we are not aware of any research work that examines the underlying mechanisms of detecting stress through multimodal signals (speech, facial, calendar logs, physiological) during a stressful military task (bomb defusal), which could further provide useful insights for team-effectiveness research in long-term spaceflights.

As a result, being able to identify, monitor and alter our emotional states in real time is an important next step towards developing more effective interventions for improving quality of life. For astronauts, for example, routine activities have been linked to improved well-being. In addition to emotional states, the quality of their relationships plays a central role in their mental and physical health. Interpersonal conflicts, such as arguments with teammates, greatly impact their daily mood, behavior and physiological reactions. Although detecting psychological states in uncontrolled environments is difficult, developing this ability could ideally mitigate a number of NASA risks with respect to the astronauts’ well-being.

3. Understanding the Teammate Needs: From Ground to Space Analogs

There is no doubt that understanding the astronauts’ behavioral production mechanism is hard. With this in mind, an understanding of how this mechanism changes in space could be achieved by replicating the success of behavioral technologies from ground to space [79].

The first step towards building a cohesive team is team engagement. Currently, the majority of the foundation is based on cross-sectional data, and thus, it is static. What we need is “intensive” longitudinal research on long-term team functioning under isolated, confined and extreme conditions that are similar to those of long-duration spaceflights and are expected to serve as appropriate proxies to teaming in spaceflight.

As a result, we suppose that teams in environments that are analogous to spaceflight, such as military teams or teams that perform demanding operational tasks in slightly less extreme environments, undergo typical team phases of forming, (non)-prior familiarity, storming, norming and performing. We also believe that team composition, teamwork, team dynamics, team adaptation and development are, indeed, challenging to study in these environments, so new ways of studying such team characteristics during a bomb defusal task could provide insight for teams who operate in extreme isolation conditions during spaceflights.

Studying the relationship among language, physiological responses and speech is one way of addressing the challenges of studying team dynamics. One such approach [60] is to investigate the relationship among acoustic, language and physiological features (particularly HR) through correlation and regression analyses of spontaneous stressful conversations among teammates. To the best of our knowledge, this is among the first attempts to explore the relationship among these three modalities in stressful conversations. Additionally, regression analysis on the actual values of the physiological signals is of significant importance, because it helps to characterize the strengths and limitations of the connection between physiology, speech and language during unconstrained and stressful long-duration conversations.
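As an illustration of the regression analysis mentioned above, the following minimal sketch fits a least-squares line that predicts HR from a single acoustic feature and reports the coefficient of determination. The function names `fit_line` and `r_squared`, the choice of mean pitch as the predictor and all numeric values are assumptions made for this example; they are not taken from the corpus or from [60].

```python
def fit_line(x, y):
    """Least-squares fit of y ≈ slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(x, y, slope, intercept):
    """Share of the variance of y explained by the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (slope * a + intercept)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical per-utterance values (illustrative only, not corpus data).
mean_pitch_hz = [182.0, 195.0, 201.0, 214.0, 230.0]
mean_hr_bpm = [72.0, 75.0, 79.0, 80.0, 88.0]

slope, intercept = fit_line(mean_pitch_hz, mean_hr_bpm)
fit_quality = r_squared(mean_pitch_hz, mean_hr_bpm, slope, intercept)
print(f"HR ~ {slope:.3f} * pitch + {intercept:.1f} (R^2 = {fit_quality:.2f})")
```

A single-predictor fit keeps the example readable; a realistic analysis would likely use many acoustic and language features and some form of regularization.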

3.1. Preprocessing the Audio Signals

As an initial step, every session of the bomb defusal task should be passed through a denoising module (using VOICEBOX), which performs speech enhancement using a minimum mean squared error (MMSE) estimate of the spectral amplitude [80]. To reject the silence regions from the audio streams, two different techniques are usually employed. The first is forced alignment of the audio with the transcript using the Gentle toolkit (https://github.com/lowerquality/gentle); the second is a robust Long Short-Term Memory (LSTM) based voice activity detection (VAD) [81] implemented with the OPENSMILE toolkit [82]. Speaker diarization should also be applied to delineate the utterances of the teammates in the given session. Finally, the forced alignment technique is combined with a hierarchical agglomerative clustering implementation from the LIUM speaker diarization tool (http://www-lium.univ-lemans.fr/diarization/doku.php/welcome).
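To make the idea of silence rejection concrete, the sketch below uses a simple frame-energy threshold. This is a deliberately simpler stand-in and not the forced-alignment or LSTM-based VAD described above; the function names, the frame length and the threshold are all assumptions chosen for illustration.

```python
from math import sqrt


def frame_rms(samples, frame_len):
    """RMS energy of consecutive, non-overlapping frames."""
    return [
        sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def speech_regions(samples, frame_len, threshold):
    """Return (start, end) sample indices of runs of frames whose RMS
    energy is at or above `threshold`; everything else counts as silence."""
    regions = []
    start = None
    for idx, rms in enumerate(frame_rms(samples, frame_len)):
        if rms >= threshold and start is None:
            start = idx * frame_len            # a speech run begins
        elif rms < threshold and start is not None:
            regions.append((start, idx * frame_len))  # the run ends
            start = None
    if start is not None:                      # signal ended during speech
        regions.append((start, (len(samples) // frame_len) * frame_len))
    return regions


# Toy signal: silence, a burst of "speech", silence (hypothetical values).
signal = [0.0] * 4 + [0.8, -0.7, 0.9, -0.8, 0.7, -0.9, 0.8, -0.7] + [0.0] * 4
print(speech_regions(signal, frame_len=4, threshold=0.1))
```

An energy threshold is sensitive to background noise, which is one reason a learned VAD or transcript alignment is usually preferred on real recordings.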

3.2. Extracting Acoustic Features

With respect to the extraction process, one should extract the 88-dimensional extended GeMAPS (eGeMAPS) features [83] from the speech over the whole session using the OPENSMILE toolkit [82]. The eGeMAPS features mainly consist of the mean and the coefficient of variation [83] (statistical functionals) of frequency-related parameters such as pitch, jitter and formant frequencies; energy/amplitude-related parameters such as shimmer, loudness and the harmonics-to-noise ratio; and spectral balance parameters such as the alpha ratio and harmonic differences. Additionally, a number of temporal features, such as the rate of loudness peaks and the mean length of voiced regions, are included, as well as the mel-frequency cepstral coefficients (MFCCs), which are cepstral features, and the spectral flux. The reader is directed to [83] for the complete list of the features that we have used.
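The two statistical functionals named above, the mean and the coefficient of variation, can be sketched for a single low-level descriptor as follows. This is only an illustration of the arithmetic, not the toolkit's implementation; the function names, the convention that unvoiced frames are coded as 0 and the use of the population standard deviation are assumptions of this example.

```python
from math import sqrt


def mean_and_cov(values):
    """Return (mean, coefficient of variation) of a non-empty sequence."""
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation; using it rather than the sample
    # estimate is an assumption of this sketch.
    std = sqrt(sum((v - mean) ** 2 for v in values) / n)
    return mean, std / mean


def voiced_functionals(f0_contour):
    """Summarise a pitch contour, ignoring unvoiced frames coded as 0."""
    voiced = [f for f in f0_contour if f > 0.0]
    return mean_and_cov(voiced)


# Hypothetical frame-level pitch values in Hz (0.0 marks unvoiced frames).
f0_hz = [0.0, 118.0, 121.0, 125.0, 0.0, 131.0, 128.0, 0.0]
mean_f0, cov_f0 = voiced_functionals(f0_hz)
print(f"mean F0 = {mean_f0:.1f} Hz, coefficient of variation = {cov_f0:.3f}")
```

Because the coefficient of variation is normalized by the mean, it allows variability to be compared across speakers with different baseline pitch.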

Moreover, we expect that computing correlations separately for male and female speakers will give much better results than pooling all speakers, and we expect to notice similar behavior in the regression analysis. This hypothesis is based on the fact that some acoustic features (e.g., pitch) differ fundamentally between men and women. Based on this observation, using gender-dependent models is considered the most appropriate method.
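A gender-dependent correlation analysis of this kind could be sketched as below: the records are split by speaker group and a Pearson coefficient is computed within each group. The record layout `(group, feature_value, hr_value)`, the function names and the numbers are hypothetical and serve only to show the grouping step.

```python
from collections import defaultdict
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def correlation_by_group(records):
    """Compute Pearson r between feature and HR separately per group.

    `records` is an iterable of (group, feature_value, hr_value) tuples.
    """
    grouped = defaultdict(lambda: ([], []))
    for group, feature_value, hr_value in records:
        grouped[group][0].append(feature_value)
        grouped[group][1].append(hr_value)
    return {g: pearson_r(xs, ys) for g, (xs, ys) in grouped.items()}


# Hypothetical (gender, mean pitch in Hz, mean HR in bpm) observations.
observations = [
    ("F", 205.0, 74.0), ("F", 212.0, 79.0), ("F", 221.0, 81.0),
    ("M", 112.0, 70.0), ("M", 118.0, 76.0), ("M", 127.0, 77.0),
]
for group, r in correlation_by_group(observations).items():
    print(f"{group}: r = {r:.2f}")
```

Splitting before correlating avoids mixing the two pitch ranges, where the between-group offset could otherwise dominate the within-speaker relationship of interest.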

3.3. Extracting Language Features

To analyze the transcribed recordings, the Linguistic Inquiry and Word Count (LIWC) software should also be used. For the theoretically driven features, dictionaries representing personal pronouns (such as “I” and “we”), certainty words (such as “always” and “must”) and negative emotion words (such as “tension”) should be preset. Unimodal combinations of features should then be tested using four preset LIWC categories: linguistic factors (25 features including personal pronouns, word count and verbs), psychological constructs (32 features such as words relating to emotions and thoughts), personal concern categories (seven features such as work, home and money) and paralinguistic variables (three features such as assents and fillers). Also, applying more advanced lexical modeling, such as topic modeling, is expected to better capture word usage and word choice and to reveal aspects of the defuser’s specific grammar in such stressful interactions.

Moreover, considering that our prior study [60] relied on observational cues concerning turn-taking duration measures, we expect that examining expressive cues through a more detailed analysis of participants’ lexical features will provide insight into whether these can be linked with their inner physiological signals. In terms of the lexical features, the focus should be on the number of words, the length of the utterances, the number of laughs, the richness of the vocabulary, as well as the use of backchannels in terms of short feedback such as “mm-hmm”, “yeah”, etc. Additionally, the investigation of singular pronouns (I, me, mine), assents (OK, yes), non-fluencies (hm, umm), fillers (I mean, you know) and prepositions or words indicating prior familiarity could extend the pool of used features. Such an investigation could provide insight with respect to the relevant vocabulary used in such particular tasks and the speaking style of every team member as the sessions progress.
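A minimal sketch of how several of the lexical features listed above could be counted from a speaker's transcribed utterances is given below. The word lists contain only the examples mentioned in the text and are not the LIWC dictionaries; the function name `lexical_features` and the sample utterances are hypothetical. Laughs are omitted because they would require an annotation convention that is not specified here.

```python
import re

# Word lists restricted to the examples given in the text (not LIWC dictionaries).
BACKCHANNELS = {"mm-hmm", "yeah"}
SINGULAR_PRONOUNS = {"i", "me", "mine"}
ASSENTS = {"ok", "yes"}
NONFLUENCIES = {"hm", "umm"}

TOKEN_PATTERN = re.compile(r"[a-z]+(?:[-'][a-z]+)*")


def lexical_features(utterances):
    """Count simple lexical features over a list of transcribed utterances."""
    tokens = [t for u in utterances for t in TOKEN_PATTERN.findall(u.lower())]
    n = len(tokens)
    return {
        "word_count": n,
        "mean_utterance_length": n / len(utterances) if utterances else 0.0,
        # Type-token ratio as a simple proxy for vocabulary richness.
        "type_token_ratio": len(set(tokens)) / n if n else 0.0,
        "backchannels": sum(t in BACKCHANNELS for t in tokens),
        "singular_pronouns": sum(t in SINGULAR_PRONOUNS for t in tokens),
        "assents": sum(t in ASSENTS for t in tokens),
        "nonfluencies": sum(t in NONFLUENCIES for t in tokens),
    }


# Hypothetical defuser utterances.
utterances = ["OK I see three wires", "umm yeah", "I cut the red wire"]
feats = lexical_features(utterances)
print(feats)
```

Multi-word fillers such as “I mean” or “you know” would need phrase matching rather than single-token lookup, so they are left out of this sketch.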

Note also that in our previous analysis [60] we approached language features through the main indicator of an interactional speech episode, often known as the “turn-taking” episode. A turn-taking response is defined as the time between the end of one interlocutor’s turn and the beginning of the other interlocutor’s corresponding turn. Turn-taking responses may span from very short to very long, which may indicate shorter or longer emotional and stressful episodes. In a similar way, in the teammate corpus that was used, we hypothesized that longer turn-taking behavioral responses provided valuable information about the defuser’s perceived cognition and affective state and reflected their external observable as well as implicit inner affective states [60]. We chose to investigate that type of interactional context between the two teammates, motivated by the fact that the instructor’s behavior is more controllable, thus minimizing the effect of the instructor’s variability on the defuser’s behavior. After carefully inspecting various turn-taking behavioral instances in our corpus, we came across a number of interesting tendencies such as overlapped speech and very short utterances, phenomena that are aligned with high levels of stress in highly demanding operational tasks [84].
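The turn-taking measure described above can be sketched as the gap between the end of the instructor's turn and the start of the defuser's next turn, with a negative gap indicating overlapped speech. The `Turn` record, the function names and the 1.0 s short/long threshold are assumptions of this example and are not taken from [60].

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str
    start: float  # seconds from session start
    end: float


def turn_taking_gaps(turns, responder):
    """Gaps (s) between the end of the other speaker's turn and the start of
    the responder's following turn; negative values mean overlapped speech."""
    ordered = sorted(turns, key=lambda t: t.start)
    gaps = []
    for prev, nxt in zip(ordered, ordered[1:]):
        if nxt.speaker == responder and prev.speaker != responder:
            gaps.append(nxt.start - prev.end)
    return gaps


def label_gap(gap, threshold=1.0):
    """Label a gap; the 1.0 s threshold is a placeholder, not a corpus value."""
    if gap < 0:
        return "overlap"
    return "short" if gap <= threshold else "long"


# Hypothetical segment boundaries for one session.
turns = [
    Turn("instructor", 0.0, 4.0), Turn("defuser", 4.5, 6.0),
    Turn("instructor", 6.0, 9.0), Turn("defuser", 11.5, 12.0),
    Turn("instructor", 12.5, 15.0), Turn("defuser", 14.5, 16.0),
]
gaps = turn_taking_gaps(turns, "defuser")
labels = [label_gap(g) for g in gaps]
print(list(zip(gaps, labels)))
```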

3.4. Regression of Physiological Variables

Our prior study [60] was based on the use of the Moving Ensemble Average Program (MEAP) [85] to extract features from the data by computing an ensemble average over each epoch. Particularly, a BIOPAC MP150 with a standard lead II electrode configuration was used to record ECG, real-time changes in blood pressure and impedance cardiography (ICG). Continuous data were recorded for each participant throughout each task and analyzed offline. The raw time series for each task was segmented into 30-second intervals relative to the end of each session, so that a few seconds from the beginning of the “bomb defusion” task were cut out. This was done because a minimum of 30 seconds of cardiovascular data was necessary for further analysis [86].
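The end-aligned segmentation described above can be sketched as follows. The function name `epochs_from_end` and the 1 Hz toy signal are hypothetical; the sketch covers only the epoching step, not the beat-wise ensemble averaging performed by MEAP.

```python
def epochs_from_end(samples, sampling_rate_hz, epoch_s=30):
    """Split a recording into consecutive epochs aligned to its end.

    Any leading remainder shorter than one epoch is dropped, so a few
    seconds at the start of the task are cut out.
    """
    size = int(sampling_rate_hz * epoch_s)
    n_epochs = len(samples) // size
    offset = len(samples) - n_epochs * size  # samples discarded at the start
    return [samples[offset + i * size: offset + (i + 1) * size]
            for i in range(n_epochs)]


# Toy example: 100 s of data sampled at 1 Hz gives three 30 s epochs,
# and the first 10 s are discarded.
signal = list(range(100))
epochs = epochs_from_end(signal, sampling_rate_hz=1)
print(len(epochs), epochs[0][0], epochs[-1][-1])
```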

Twenty-four cardiovascular features were extracted and the most frequently selected are presented in Table 1. These features included HR, LVET (left ventricular ejection time), p_time, s_time, t_time, x_time, systole_time, pre-ejection period (PEP), ventricular contractility (VC), cardiac output (CO) and total peripheral resistance (TPR). PEP is the time from the onset of the heart muscle depolarization to the opening of the aortic valve. When PEP decreases, VC increases. VC has been shown to be related to task engagement [87,88]. CO is the amount of blood pumped in liters per minute. TPR reflects vasodilation (more blood flow) and vasoconstriction (less blood flow), which are related to parasympathetic and sympathetic activity, respectively. Prior work has shown that TPR unambiguously increases when an individual is in a threat state and decreases in a challenge state, whereas CO either remains unchanged or decreases in a threat state and increases in a challenge state [89].
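The challenge/threat pattern summarized above can be written as a simple rule on task-minus-baseline changes in CO and TPR. This is only an illustrative sketch: the function names and the tolerance `tol` are hypothetical, and the TPR conversion uses the common formula TPR = (MAP / CO) × 80 (mean arterial pressure in mmHg, CO in L/min), which is an assumption of this example rather than a procedure stated in the text.

```python
def total_peripheral_resistance(map_mmhg, co_l_min):
    """TPR in dyn·s·cm^-5 from mean arterial pressure and cardiac output
    (standard conversion; assumed here, not taken from the source)."""
    return map_mmhg / co_l_min * 80.0


def appraisal_pattern(delta_co, delta_tpr, tol=0.0):
    """Map changes from baseline onto the pattern described in the text.

    threat:    TPR increases and CO is unchanged or decreases
    challenge: TPR decreases and CO increases
    """
    if delta_tpr > tol and delta_co <= tol:
        return "threat"
    if delta_tpr < -tol and delta_co > tol:
        return "challenge"
    return "indeterminate"


# Hypothetical values for illustration only.
print(total_peripheral_resistance(90.0, 6.0))
print(appraisal_pattern(delta_co=-0.2, delta_tpr=150.0))
print(appraisal_pattern(delta_co=0.8, delta_tpr=-100.0))
```

In practice, a non-zero tolerance would be needed to decide when CO counts as “unchanged”; its value would have to be chosen from the data.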

Table 1.

Most frequently selected physiological features for 10 defusers, 5 each from the Ice Breaker (IB) and Control (CT) conditions, respectively, during the “bomb defusion” task. The features are labeled as follows: heart rate (hr), left ventricular ejection time (LVET) and pre-ejection period (pep).

To further provide regression analysis on the actual value of the physiological signals, and thus to better address the strengths and limitations of the connection between physiology, speech and language during unconstrained, fluent and stressful long-duration conversations, a promising research pathway would be to employ the AdaBoost regressor [90] with a decision tree regressor as the base estimator for estimating raw or normalized values of HR from acoustic features. All the physiological features are expected to be used by the regressor model, which would be evaluated with a five-fold stratified cross validation. Particular attention will be given to avoid including speech of the same speaker in both training and testing data during the regression process. The optimal number of base estimators and the learning rate [90] of the regressor would be chosen through three-fold cross validation on the training set, searching the parameter space by grid search.
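The regression setup outlined above could be sketched with scikit-learn as follows. This is a minimal sketch under stated assumptions, not the authors' pipeline: the data are synthetic, the parameter grid and tree depth are placeholders, and `GroupKFold` is used in place of the stratified folds mentioned in the text because it directly guarantees that no speaker appears in both the training and the test split.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor
from sklearn.model_selection import GridSearchCV, GroupKFold
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data: 10 speakers, 12 segments each, 8 acoustic features.
rng = np.random.default_rng(0)
n_speakers, n_segments, n_features = 10, 12, 8
X = rng.normal(size=(n_speakers * n_segments, n_features))
speaker_ids = np.repeat(np.arange(n_speakers), n_segments)
hr = 75.0 + 5.0 * X[:, 0] + rng.normal(scale=1.0, size=len(X))  # toy HR in bpm

# Placeholder grid over the two hyperparameters named in the text.
param_grid = {"n_estimators": [10, 25], "learning_rate": [0.1, 1.0]}

fold_mae = []
outer = GroupKFold(n_splits=5)  # speaker-independent outer folds
for train_idx, test_idx in outer.split(X, hr, groups=speaker_ids):
    # No speaker may contribute to both the training and the test data.
    assert set(speaker_ids[train_idx]).isdisjoint(speaker_ids[test_idx])
    search = GridSearchCV(
        AdaBoostRegressor(DecisionTreeRegressor(max_depth=3), random_state=0),
        param_grid,
        cv=3,  # three-fold search on the training set only
        scoring="neg_mean_absolute_error",
    )
    search.fit(X[train_idx], hr[train_idx])
    pred = search.predict(X[test_idx])
    fold_mae.append(float(np.mean(np.abs(pred - hr[test_idx]))))

print(fold_mae)
```

Note that the inner three-fold search here splits segments without regard to speaker; a stricter variant would also group the inner folds by speaker.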

3.5. Investigating and Extending the Robustness of the Results

Observing our prior experimental results, we came across a number of interesting tendencies. The obtained performance ranged from 43.75% to 88.89%, suggesting that physiological signals contained information relevant to the amount of behavioral verbal replies. Additionally, we noticed a great difference in performance across defusers, underlining once again the individual traits of every defuser. Particularly, the selected physiological cues of defusers P100, P102, P202, P203 and P204 appear to be more closely associated with the type of behavioral reply instances (short/long) than the corresponding patterns of defusers P101, P103, P104 and P201 (P100–P104 refer to the IB condition, while P200–P204 refer to the CT condition).

Our results suggested that physiological responses conveyed information about the defuser’s inner state, reflected the amount of their verbal responses with respect to a stimulus and could be further linked with the amount of underlying socio-cognitive activity, which is not always obvious through traditional observational methods. As discussed, there was a wide variability across defusers with respect to the given task. This observation indicated that there might be mechanisms triggered in defusers with high learning accuracy, reflected in their physiological signals, which were not present in defusers with low learning performance (i.e., P101, P103 and P201). It was also noteworthy that for these three defusers, the selected physiological feature was HR. To further elaborate on this tendency, we went through the audiovisual recordings and the HR signals. We noticed that there was a difference in the arousal levels (i.e., stress) with respect to the type of behavioral replies (short/long) and that arousal was present in both short and long turn-taking responses, depending on the defuser.

More specifically, we came across examples of defusers who took a long time to respond after having given a wrong answer once and being asked to try again to confirm the bomb defusion steps. Hence, it appears that the task was a sufficiently stressful stimulus for them. In these long turn-taking examples, it is also reasonable to assume that high cognitive activity or stressor events occurred. At the same time, high levels of arousal were noticed in short turn-taking examples, in which, for example, the defuser used words such as ok/yes/no. This tendency was not aligned with our expectations for the “bomb defusion” task, considering that we were expecting that short turn-taking examples would reflect low levels of arousal.

On the contrary, our observation suggested that even though there may be no obvious (audible/visible) signals of arousal, physiological signals may provide a complementary, but not overlapping, view of a person’s state. This finding is of particular importance in cognitively demanding tasks in which one of the teammates steers the discussion, and it is also aligned with previous research studies [89,91].

With regard to these insights, further analysis of the correlation and regression performance should be the next step. Particularly with the regression results, it is expected that we will have a better picture of how accurately we can predict physiological signals from speech acoustics for stressful conversations between humans. It is additionally expected to shed light on our idea of quantifying the intensity of stress instead of just detecting it. Finally, it would be interesting to investigate better acoustic features, to apply deep learning models and to exploit any available temporal pattern in the speech signal that could help us predict physiological responses more accurately. Moreover, the connection between physiology and acoustics could be studied in depth without any human labeling, opening up possibilities for larger data collections and for more robustly associating the two modalities via advanced modeling.

Thus, studying the multimodal behavior of teams in environments that are analogous to spaceflights (i.e., teams that operate on demanding tasks) is significant for mitigating the NASA risks with respect to human living and working conditions in space. Having annotated data and samples in hand, researchers would be able to rapidly conduct studies in realistic analog environments to learn more about team dynamics.

4. Expected Significance of Mitigating the NASA Risks with Respect to Human Living and Working Conditions in Space

4.1. Treating Team Members as Individuals and as Part of a Team: Selecting the Right Team Members

There is no doubt that long-duration spaceflights are physically, mentally and emotionally demanding, and that composing a crew for them is a more demanding procedure than simply selecting individual crew members [92]. As a result, mission success depends highly on selecting the right team members, who will both perform their respective mission roles effectively and work well together. Team-composition research has shown that selecting the most qualified person to perform each designated role does not necessarily yield the most effective team during a collaborative task.

Thus, an emphasis on team composition should be considered as a foundational context for understanding how a team is likely to work together, even when membership is unchangeable. Particularly, when composing teams for long-duration exploration missions, the individual team members’ characteristics should be taken into account: cultural and gender differences, personality, abilities, expertise, background, team size, compatibility, communication and trust. However, not all teams are created equal, and those performing long-duration spaceflights are certainly no exception. So, a richer and deeper framework of team cohesion is needed. As such, we define team cohesion as a dynamic, multifaceted phenomenon that is dependent on the task and teammate characteristics. Additionally, we should keep in mind that each team has unique features and sensitivities with respect to cohesion variability. For example, some teams are highly sensitive to both external (e.g., weather, workload) and internal (e.g., interpersonal conflict) shocks. Thus, although benchmark data are useful for establishing general expectations regarding team cohesion, each team should still be treated as a unique ecosystem.

4.2. Monitoring, Measuring and Enhancing Teamwork Communication in Spaceflights

Astronauts are among the most monitored and measured populations on Earth. However, current monitoring techniques will have to adapt along with the advent of long-duration exploration missions. Currently, psychologists conducting research in lab settings benefit from data collection that does not necessarily interfere with natural processes of human behaviors and interactions. Thus, it is of high importance to allow astronauts to conduct any type of highly complex team task without interrupting them. Under this context, collecting data in an unobtrusive way to conduct almost real-time analysis may allow team members to foresee future negative outcomes and prompt them to prevent these outcomes.

Wearable sensors could be a valuable way to measure the proximity of individuals when facing each other, as well as their vocal intensity (i.e., a marker of emotions and stress). These sensors could provide important information with respect to the quality of team interactions, such as how frequently team members interact with each other. Facial analysis could be conducted to provide information related to team behavioral interactions and psychosocial states [93]. Lexical analysis of speech and text, collected from communication logs, could also indicate stress at both the individual and team level [94]. For example, we are aware that the valence of chosen words denotes emotional state and that the usage of individual or collective pronouns indicates group affiliation or group performance [95]. Data from diaries and crew journals could further indicate that social and task cohesion would be predictive of performance, whereas positive emotion words would refer to good performance. Thus, it is possible to go a step further and, rather than simply detecting an increase in negative emotions, to determine the cause of emotional changes over time and whether the team is maintaining high levels of communication and cohesion.

Under this context, it is expected that team members will perform tasks more effectively when all parties understand the information [96] and share the same mental model. Effective communication is key for both team task performance and team well-being [97]. The stress of working and living in demanding environments may manifest in the form of degraded comprehension and oversimplified vocabulary (problematic in complex task situations), and may affect the team’s performance [98]. Cohesive teams tend to exhibit enhanced coordination and performance. Additionally, effective communicators can act as social support for their crew mates through participation and can reduce interpersonal tensions [99].

4.3. Advancing Behavioral Technology

With respect to space missions, behavioral technology such as automatic speech recognition (ASR), keyword spotting, speaker identification, and vocal, language, physiological and cognitive state detection has the potential of becoming an important part of future space missions [100]. While a simple command recognition system has been successfully tested on the International Space Station (ISS) [101], the application of behavioral technology to more complicated tasks such as monitoring astronauts’ behavioral health and performance [102,103,104,105,106,107,108] requires a deep understanding of astronauts’ behavioral characteristics during space missions. For example, little research has considered how far astronauts’ speech production mechanisms change when they are experiencing physical stress such as weightlessness, high g-force and an alternating-pressure environment, which are common during space missions. Since training state-of-the-art behavioral systems for space missions depends highly on the data collected on the ground, the performance of those systems is expected to drop significantly if, for example, astronauts’ behavioral characteristics change in space.

5. Limitations, a Generalization of the Results and the Way Forward

One of the limitations of this study is the small amount of data from the highly stressful task (bomb defusion) we examined, which has been the basis for providing insights and further generalizing to spaceflight crews. Particularly, for this work, with respect to the bomb defusion task, we took into account only 10 gender-matched pairs (five from each condition) and examined only the situation in which participant A is the instructor and participant B is the defuser (i.e., we did not examine the case of asking members to switch roles). Nevertheless, we expect the overall findings to generalize to other teams that may find themselves in such stressful settings for extended periods of time. Some principles that emerge from this research are also likely to apply to military teams and NASA missions.

Considering that this work verified behavior markers inspired by existing psychology research, our ultimate goal is to provide insight that allows the team to function autonomously when critical situations happen. This is particularly important in military, aviation and health care scenarios when no instant external intervention is available. The success of the space industry and of corporations is contingent upon high-performing teams.

Although our method is applied to assess affect and cohesion, the behavior features could be generalized to understand how teammate interactions and individual behavior patterns influence other team outcomes, such as performance and productive behavior within teams, task performance, causes of task behavior, occupational stress, workplace stressors, task commitment, task beliefs, reward system and team member satisfaction.

In summary, we suggest that capturing dynamic team-member interactions through several modalities, including team members’ facial, vocal and physiological activities, has the potential to provide insight into team relationships, cohesion and performance, as well as individual team members’ affective and cognitive states. Aiming to identify candidate combinations of assessment techniques, data sources and psychosocial states of interest to NASA, we decided to focus on a similar team that performed a demanding operational task. Particularly, in our recently presented work [60], we provided an analysis of physiological signals in a dyadic team “bomb defusion” scenario in association with the teammates’ expressive behavioral cues. Our preliminary results suggested that physiological responses convey information about the defuser’s inner state. They also reflected the amount of the defuser’s verbal responses with respect to a stimulus and could be further linked with the amount of underlying socio-cognitive activity, which is not always obvious through traditional observational methods.

In a similar way, we believe that at a later point we will be able to identify and track the emotional states of astronauts keeping blogs or journals as well as to find correlations between topics the astronauts write about and discuss and their positive and negative shifts in emotional states. We already have a proven track record in the area of automatic behavior analysis [55,60,109,110,111,112]. Utilizing these strengths, adding expertise in extreme spaceflight conditions and further developing novel advanced approaches to assess complex and dynamic behavior of individuals are expected to further help our understanding of the underlying mechanisms of high team cohesion. Understanding these mechanisms will allow us to predict and control dynamic interaction among humans and enable technology in human–system interaction to enhance and sustain cohesion as a human team member would.

Finally, being able to determine how best to conceptualize and measure team cohesiveness in a meaningful way could capture affective states over time. Further work is expected to help clarify the “social roles” that team members need to fulfill in addition to their technical roles. Progress in these areas is expected to help NASA prepare for future space missions, is likely to yield tools and techniques of interest to other team researchers and, finally, will provide insights that can be applied to teams in various settings.

Author Contributions

Conceptualization, A.V.; methodology and software, A.V.; writing—original draft preparation, A.V.; writing—review and editing, A.V., A.H. and P.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kozlowski, S.W.; Chao, G.T.; Chang, C.; Fernandez, R. Team dynamics: Using “big data” to advance the science of team effectiveness. In Big Data at Work: The Data Science Revolution and Organizational Psychology; Routledge: Abingdon-on-Thames, UK, 2015; pp. 273–309.

- Pentland, A. Society’s nervous system: Building effective government, energy, and public health systems. IEEE Comput. 2012, 45, 31–38.

- Salas, E.; Tannenbaum, S.I.; Kozlowski, S.W.; Miller, C.A.; Mathieu, J.E.; Vessey, W.B. Teams in space exploration: A new frontier for the science of team effectiveness. Curr. Dir. Psychol. Sci. 2015, 24, 200–207.

- Chiniara, M.; Bentein, K. The servant leadership advantage: When perceiving low differentiation in leader-member relationship quality influences team cohesion, team task performance and service OCB. Leadersh. Q. 2018, 29, 333–345.

- Kao, C.C. Development of team cohesion and sustained collaboration skills with the sport education model. Sustainability 2019, 11, 2348.

- Acton, B.P.; Braun, M.T.; Foti, R.J. Built for Unity: Assessing the Impact of Team Composition on Team Cohesion Trajectories. Available online: https://link.springer.com/article/10.1007/s10869-019-09654-7 (accessed on 15 July 2020).

- Susskind, A.M.; Odom-Reed, P.R. Team member’s centrality, cohesion, conflict, and performance in multi-university geographically distributed project teams. Commun. Res. 2019, 46, 151–178.

- Festinger, L.; Schachter, S.; Back, K. Social Pressures in Informal Groups: A Study of Human Factors in Housing. Available online: https://psycnet.apa.org/record/1951-02994-000 (accessed on 15 July 2020).

- Mullen, B.; Copper, C. The relation between group cohesiveness and performance: An integration. Psychol. Bull. 1994, 115, 210.

- Griffith, J. Test of a Model Incorporating Stress, Strain, and Disintegration in the Cohesion-Performance Relation 1. J. Appl. Soc. Psychol. 1997, 27, 1489–1526.

- Henderson, J.; Bourgeois, A.; Leunes, A.; Meyers, M.C. Group cohesiveness, mood disturbance, and stress in female basketball players. Small Group Res. 1998, 29, 212–225.

- Cohen, S.; Kessler, R.C.; Gordon, L.U. Measuring Stress: A Guide for Health and Social Scientists; Oxford University Press: Oxford, UK, 1997.

- Cacioppo, J.T.; Tassinary, L.G.; Berntson, G.G. Psychophysiological science. Handb. Psychophysiol. 2000, 2, 3–23.

- Schneiderman, N.; Ironson, G.; Siegel, S.D. Stress and health: Psychological, behavioral, and biological determinants. Annu. Rev. Clin. Psychol. 2005, 1, 607–628.

- Selye, H. The stress syndrome. Am. J. Nurs. 1965, 65, 97–99.

- Vitetta, L.; Anton, B.; Cortizo, F.; Sali, A. Mind-body medicine: Stress and its impact on overall health and longevity. Ann. N. Y. Acad. Sci. 2005, 1057, 492–505.

- Wittels, P.; Johannes, B.; Enne, R.; Kirsch, K.; Gunga, H.C. Voice monitoring to measure emotional load during short-term stress. Eur. J. Appl. Physiol. 2002, 87, 278–282.

- Sharma, N.; Gedeon, T. Objective measures, sensors and computational techniques for stress recognition and classification: A survey. Comput. Methods Programs Biomed. 2012, 108, 1287–1301.

- Gorman, J.M.; Sloan, R.P. Heart rate variability in depressive and anxiety disorders. Am. Heart J. 2000, 140, S77–S83.

- Vrijkotte, T.G.; van Doornen, L.J.; de Geus, E.J. Effects of Work Stress on Ambulatory Blood Pressure, Heart Rate, and Heart Rate Variability. Hypertension 2000, 35, 880–886.

- Katsis, C.D.; Katertsidis, N.S.; Fotiadis, D.I. An integrated system based on physiological signals for the assessment of affective states in patients with anxiety disorders. Biomed. Signal Process. Control. 2011, 6, 261–268.

- Healey, J.A.; Picard, R.W. Detecting stress during real-world driving tasks using physiological sensors. IEEE Trans. Intell. Transp. Syst. 2005, 6, 156–166.

- McDuff, D.; Gontarek, S.; Picard, R. Remote measurement of cognitive stress via heart rate variability. In Proceedings of the 36th Annual International Conference of the Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 2957–2960.

- Schubert, C.; Lambertz, M.; Nelesen, R.; Bardwell, W.; Choi, J.B.; Dimsdale, J. Effects of stress on heart rate complexity—A comparison between short-term and chronic stress. Biol. Psychol. 2009, 80, 325–332.

- Marks, M.A.; Mathieu, J.E.; Zaccaro, S.J. A temporally based framework and taxonomy of team processes. Acad. Manag. Rev. 2001, 26, 356–376.

- Kelly, J.R.; Barsade, S.G. Mood and emotions in small groups and work teams. Organ. Behav. Hum. Decis. Process. 2001, 86, 99–130.

- Moturu, S.T.; Khayal, I.; Aharony, N.; Pan, W.; Pentland, A. Using social sensing to understand the links between sleep, mood, and sociability. In Proceedings of the Third International Conference on Privacy, Security, Risk and Trust and Third International Conference on Social Computing, Boston, MA, USA, 9–11 October 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 208–214.

- Fiore, S.M.; Carter, D.R.; Asencio, R. Conflict, trust, and cohesion: Examining affective and attitudinal factors in science teams. In Team Cohesion: Advances in Psychological Theory, Methods and Practice; Emerald Group Publishing Limited: Bingley, UK, 2015.

- Grossman, R.; Rosch, Z.; Mazer, D.; Salas, E. What matters for team cohesion measurement? A synthesis. In Team Cohesion: Advances in Psychological Theory, Methods and Practice; Emerald Group Publishing Limited: Bingley, UK, 2015.

- Yammarino, F.J.; Mumford, M.D.; Connelly, M.S.; Day, E.A.; Gibson, C.; McIntosh, T.; Mulhearn, T. Leadership models for team dynamics and cohesion: The Mars mission. In Team Cohesion: Advances in Psychological Theory, Methods and Practice; Emerald Group Publishing Limited: Bingley, UK, 2015.

- Britt, T.W.; Jex, S.M. Organizational Psychology: A Scientist-Practitioner Approach; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Kozlowski, S.W.; Chao, G.T. Unpacking team process dynamics and emergent phenomena: Challenges, conceptual advances, and innovative methods. Am. Psychol. 2018, 73, 576.

- Beal, D.J.; Cohen, R.R.; Burke, M.J.; McLendon, C.L. Cohesion and performance in groups: A meta-analytic clarification of construct relations. J. Appl. Psychol. 2003, 88, 989.

- Carron, A.V.; Brawley, L.R. Cohesion: Conceptual and measurement issues. Small Group Res. 2000, 31, 89–106.

- Forsyth, D.R.; Zyzniewski, L.E.; Giammanco, C.A. Responsibility diffusion in cooperative collectives. Personal. Soc. Psychol. Bull. 2002, 28, 54–65.

- Oliver, L.W. The Relationship of Group Cohesion to Group Performance: A Research Integration Attempt; US Army Research Institute for the Behavioral and Social Sciences: Fort Belvoir, VA, USA, 1988; Volume 807.

- Eagle, N.; Pentland, A.S. Reality mining: Sensing complex social systems. Pers. Ubiquitous Comput. 2006, 10, 255–268.

- Gloor, P.A.; Grippa, F.; Putzke, J.; Lassenius, C.; Fuehres, H.; Fischbach, K.; Schoder, D. Measuring social capital in creative teams through sociometric sensors. Int. J. Organ. Des. Eng. 2012, 2, 380–401.

- Olguín Olguín, D. Sensor-Based Organizational Design and Engineering. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2011.

- Nanninga, M.C.; Zhang, Y.; Lehmann-Willenbrock, N.; Szlávik, Z.; Hung, H. Estimating verbal expressions of task and social cohesion in meetings by quantifying paralinguistic mimicry. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; ACM: New York, NY, USA, 2017; pp. 206–215.

- Neubauer, C.; Woolley, J.; Khooshabeh, P.; Scherer, S. Getting to know you: A multimodal investigation of team behavior and resilience to stress. In Proceedings of the 18th International Conference on Multimodal Interaction (ICMI), Tokyo, Japan, 12–16 November 2016; ACM: New York, NY, USA, 2016; pp. 193–200.

- Vlachostergiou, A. Emotion and Sentiment Analysis. Ph.D. Thesis, National Technical University of Athens, Athens, Greece, 2018.

- Schilit, B.; Adams, N.; Want, R. Context-Aware Computing Applications. In Proceedings of the First Workshop on Mobile Computing Systems and Applications, Santa Cruz, CA, USA, 8–9 December 1994; IEEE: Piscataway, NJ, USA, 1994; pp. 85–90.

- Brown, P.J.; Bovey, J.D.; Chen, X. Context-aware applications: From the laboratory to the marketplace. IEEE Pers. Commun. 1997, 4, 58–64.

- Ryan, N.S.; Pascoe, J.; Morse, D.R. Enhanced Reality Fieldwork: The Context-aware Archaeological Assistant. Available online: https://proceedings.caaconference.org/paper/44_ryan_et_al_caa_1997/ (accessed on 15 July 2020).

- Brown, P.J. The Stick-e Document: A Framework for Creating Context-aware Applications. In Proceedings of the EP’96, Palo Alto, CA, USA, 23 September 1996; pp. 182–196. [Google Scholar]

- Franklin, D.; Flaschbart, J. All gadget and no representation makes jack a dull environment. In Proceedings of the AAAI 1998 Spring Symposium Series on Intelligent Environments, Palo Alto, CA, USA, 23–25 March 1998. [Google Scholar]

- Abowd, G.D.; Dey, A.K.; Brown, P.J.; Davies, N.; Smith, M.; Steggles, P. Towards a better understanding of context and context-awareness. In Handheld and Ubiquitous Computing; Springer: Berlin/Heidelberg, Germany, 1999; pp. 304–307. [Google Scholar]

- Zimmermann, A.; Lorenz, A.; Oppermann, R. An Operational Definition of Context. In Modeling and Using Context; Springer: Berlin/Heidelberg, Germany, 2007; pp. 558–571. [Google Scholar]

- Bonin, F.; Bock, R.; Campbell, N. How Do We React to Context? Annotation of Individual and Group Engagement in a Video Corpus. In Proceedings of the Privacy, Security, Risk and Trust (PASSAT), International Conference on Social Computing (SocialCom), Amsterdam, The Netherlands, 3–5 September 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 899–903. [Google Scholar]

- Bock, R.; Wendemuth, A.; Gluge, S.; Siegert, I. Annotation and Classification of Changes of Involvement in Group Conversation. In Proceedings of the Humaine Association Conference on Affective Computing and Intelligent Interaction, Geneva, Switzerland, 2–5 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 803–808. [Google Scholar]

- Gatica-Perez, D. Automatic nonverbal analysis of social interaction in small groups: A review. Image Vis. Comput. 2009, 27, 1775–1787. [Google Scholar] [CrossRef]

- Choudhury, T.; Pentland, A. Modeling Face-to-Face communication using the Sociometer. Interactions 2003, 5, 3–8. [Google Scholar]

- Wöllmer, M.; Eyben, F.; Schuller, B.W.; Rigoll, G. Temporal and Situational Context Modeling for Improved Dominance Recognition in Meetings. In Proceedings of Interspeech; ISCA: Singapore, 2012; pp. 350–353. [Google Scholar]

- Vlachostergiou, A.; Caridakis, G.; Kollias, S. Context in Affective Multiparty and Multimodal Interaction: Why, Which, How and Where? In Proceedings of the 2014 Workshop on Understanding and Modeling Multiparty, Multimodal Interactions; ACM: New York, NY, USA, 2014; pp. 3–8. [Google Scholar]

- Cacioppo, J.T.; Petty, R.E.; Tassinary, L.G. Social psychophysiology: A new look. In Advances in Experimental Social Psychology; Elsevier: Amsterdam, The Netherlands, 1989; Volume 22, pp. 39–91. [Google Scholar]

- Bakker, J.; Holenderski, L.; Kocielnik, R.; Pechenizkiy, M.; Sidorova, N. Stress@ work: From measuring stress to its understanding, prediction and handling with personalized coaching. In Proceedings of the 2nd International Health Informatics Symposium; ACM: New York, NY, USA, 2012; pp. 673–678. [Google Scholar]

- Selye, H. Stress and the general adaptation syndrome. Br. Med. J. 1950, 1, 1383. [Google Scholar] [CrossRef] [PubMed]

- Lazarus, R.S.; Folkman, S. Stress, Appraisal, and Coping; Springer: Berlin/Heidelberg, Germany, 1984. [Google Scholar]

- Vlachostergiou, A.; Dennison, M.; Neubauer, C.; Scherer, S.; Khooshabeh, P.; Harrison, A. Unfolding the External Behavior and Inner Affective State of Teammates through Ensemble Learning: Experimental Evidence from a Dyadic Team Corpus. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018. [Google Scholar]

- Lech, M.; He, L. Stress and emotion recognition using acoustic speech analysis. In Mental Health Informatics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 163–184. [Google Scholar]

- Rajasekaran, P.; Doddington, G.; Picone, J. Recognition of speech under stress and in noise. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, Tokyo, Japan, 7–11 April 1986; IEEE: Piscataway, NJ, USA, 1986; Volume 11, pp. 733–736. [Google Scholar]

- Lieberman, P.; Michaels, S.B. Some aspects of fundamental frequency and envelope amplitude as related to the emotional content of speech. J. Acoust. Soc. Am. 1962, 34, 922–927. [Google Scholar] [CrossRef]

- Streeter, L.A.; Macdonald, N.H.; Apple, W.; Krauss, R.M.; Galotti, K.M. Acoustic and perceptual indicators of emotional stress. J. Acoust. Soc. Am. 1983, 73, 1354–1360. [Google Scholar] [CrossRef]

- Johannes, B.; Wittels, P.; Enne, R.; Eisinger, G.; Castro, C.A.; Thomas, J.L.; Adler, A.B.; Gerzer, R. Non-linear function model of voice pitch dependency on physical and mental load. Eur. J. Appl. Physiol. 2007, 101, 267–276. [Google Scholar] [CrossRef] [PubMed]

- Godin, K.W.; Hansen, J.H. Analysis of the effects of physical task stress on the speech signal. J. Acoust. Soc. Am. 2011, 130, 3992–3998. [Google Scholar] [CrossRef]

- Raux, A.; Eskenazi, M. A finite-state turn-taking model for spoken dialog systems. In Proceedings of the Human Language Technologies: The Annual Conference of the North American Chapter of the Association for Computational Linguistics, ACL, Boulder, CO, USA, 31 May–5 June 2009; pp. 629–637. [Google Scholar]

- El-Sheikh, M.; Cummings, E.M.; Goetsch, V.L. Coping with adults’ angry behavior: Behavioral, physiological, and verbal responses in preschoolers. Dev. Psychol. 1989, 25, 490. [Google Scholar] [CrossRef]

- Dennison, M.; Neubauer, C.; Passaro, T.; Harrison, A.; Scherer, S.; Khooshabeh, P. Using cardiovascular features to classify state changes during cooperation in a simulated bomb diffusal task. In Proceedings of the Physiologically Aware Virtual Agents Workshop; IEEE: Piscataway, NJ, USA, 2016. [Google Scholar]

- Skopin, D.; Baglikov, S. Heartbeat feature extraction from vowel speech signal using 2D spectrum representation. In Proceedings of the 4th International Conference on Information Technology (ICIT), Amman, Jordan, 3–5 June 2009. [Google Scholar]

- Schuller, B.; Friedmann, F.; Eyben, F. Automatic recognition of physiological parameters in the human voice: Heart rate and skin conductance. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 7219–7223. [Google Scholar]

- Alberdi, A.; Aztiria, A.; Basarab, A. Towards an automatic early stress recognition system for office environments based on multimodal measurements. J. Biomed. Inf. 2016, 59, 49–75. [Google Scholar] [CrossRef]

- Jaques, N.; Taylor, S.; Nosakhare, E.; Sano, A.; Picard, R. Multi-task learning for predicting health, stress, and happiness. In Proceedings of the NIPS Workshop on Machine Learning for Healthcare, Barcelona, Spain, 8 December 2016. [Google Scholar]

- Rastgoo, M.N.; Nakisa, B.; Rakotonirainy, A.; Chandran, V.; Tjondronegoro, D. A critical review of proactive detection of driver stress levels based on multimodal measurements. ACM Comput. Surv. (CSUR) 2018, 51, 1–35. [Google Scholar] [CrossRef]

- Aigrain, J.; Spodenkiewicz, M.; Dubuisson, S.; Detyniecki, M.; Cohen, D.; Chetouani, M. Multimodal stress detection from multiple assessments. IEEE Trans. Affect. Comput. 2016, 9, 491–506. [Google Scholar] [CrossRef]

- Lefter, I.; Burghouts, G.J.; Rothkrantz, L.J. Recognizing stress using semantics and modulation of speech and gestures. IEEE Trans. Affect. Comput. 2015, 7, 162–175. [Google Scholar] [CrossRef]

- Picard, R.W. Automating the recognition of stress and emotion: From lab to real-world impact. IEEE Multimed. 2016, 23, 3–7. [Google Scholar] [CrossRef]

- Greene, S.; Thapliyal, H.; Caban-Holt, A. A survey of affective computing for stress detection: Evaluating technologies in stress detection for better health. IEEE Consum. Electron. Mag. 2016, 5, 44–56. [Google Scholar] [CrossRef]

- Pandiarajan, M.; Hargens, A.R. Ground-Based Analogs for Human Spaceflight. Front. Physiol. 2020, 11, 716. [Google Scholar] [CrossRef] [PubMed]

- Ephraim, Y.; Malah, D. Speech enhancement using a minimum-mean square error short-time spectral amplitude estimator. IEEE Trans. Acoust. Speech Signal Process. 1984, 32, 1109–1121. [Google Scholar] [CrossRef]

- Eyben, F.; Weninger, F.; Squartini, S.; Schuller, B. Real-life voice activity detection with LSTM recurrent neural networks and an application to Hollywood movies. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 483–487. [Google Scholar]

- Eyben, F.; Wöllmer, M.; Schuller, B. openSMILE: The Munich versatile and fast open-source audio feature extractor. In Proceedings of the 18th International Conference on Multimedia; ACM: New York, NY, USA, 2010; pp. 1459–1462. [Google Scholar]

- Eyben, F.; Scherer, K.R.; Schuller, B.W.; Sundberg, J.; André, E.; Busso, C.; Devillers, L.Y.; Epps, J.; Laukka, P.; Narayanan, S.S.; et al. The Geneva minimalistic acoustic parameter set (GeMAPS) for voice research and affective computing. IEEE Trans. Affect. Comput. 2016, 7, 190–202. [Google Scholar] [CrossRef]

- Heldner, M.; Edlund, J. Pauses, gaps and overlaps in conversations. J. Phon. 2010, 38, 555–568. [Google Scholar] [CrossRef]

- Cieslak, M. Moving Ensemble Averaging Program. Available online: https://github.com/mattcieslak/MEAP (accessed on 1 August 2017).

- Neubauer, C.; Chollet, M.; Mozgai, S.; Dennison, M.; Khooshabeh, P.; Scherer, S. The relationship between task-induced stress, vocal changes, and physiological state during a dyadic team task. In Proceedings of the 19th ACM International Conference on Multimodal Interaction; ACM: New York, NY, USA, 2017; pp. 426–432. [Google Scholar]

- Spangler, D.P.; Friedman, B.H. Effortful control and resiliency exhibit different patterns of cardiac autonomic control. Int. J. Psychophysiol. 2015, 96, 95–103. [Google Scholar] [CrossRef]

- Seery, M.D. Challenge or threat? Cardiovascular indexes of resilience and vulnerability to potential stress in humans. Neurosci. Biobehav. Rev. 2011, 35, 1603–1610. [Google Scholar] [CrossRef]

- Tomaka, J.; Blascovich, J.; Kibler, J.; Ernst, J.M. Cognitive and physiological antecedents of threat and challenge appraisal. J. Personal. Soc. Psychol. 1997, 73, 63. [Google Scholar] [CrossRef]

- Drucker, H. Improving Regressors Using Boosting Techniques. In ICML; Morgan Kaufmann: Burlington, MA, USA, 1997; Volume 97, pp. 107–115. [Google Scholar]

- Gellatly, I.R.; Meyer, J.P. The effects of goal difficulty on physiological arousal, cognition, and task performance. J. Appl. Psychol. 1992, 77, 694. [Google Scholar] [CrossRef]

- Flynn, C.F. An operational approach to long-duration mission behavioral health and performance factors. Aviat. Space Environ. Med. 2005, 76, B42–B51. [Google Scholar]

- Dinges, D.; Metaxas, D.; Zhong, L.; Yu, X.; Wang, L.; Dennis, L.; Basner, M. Optical Computer Recognition of Stress, Affect and Fatigue in Space Flight. Available online: http://nsbri.org/researches/optical-computer-recognition-of-stress-affect-and-fatigue-in-space-flight/ (accessed on 15 July 2020).

- Driskell, T.; Salas, E.; Driskell, J.; Iwig, C. Inter-and intra-crew differences in stress response: A lexical profile. In Proceedings of the NASA Human Research Program Investigators’ Workshop, Galveston, TX, USA, 23–26 January 2017. [Google Scholar]

- Gonzales, A.L.; Hancock, J.T.; Pennebaker, J.W. Language style matching as a predictor of social dynamics in small groups. Commun. Res. 2010, 37, 3–19. [Google Scholar] [CrossRef]

- Love, S.G.; Bleacher, J.E. Crew roles and interactions in scientific space exploration. Acta Astronaut. 2013, 90, 318–331. [Google Scholar] [CrossRef]

- Guzzo, R.A.; Dickson, M.W. Teams in organizations: Recent research on performance and effectiveness. Annu. Rev. Psychol. 1996, 47, 307–338. [Google Scholar] [CrossRef]

- Orasanu, J.M. Enhancing Team Performance for Long-Duration Space Missions. Available online: https://ntrs.nasa.gov/search.jsp?R=20110008663 (accessed on 15 July 2020).

- Burrough, B. Dragonfly: NASA and the Crisis Aboard the MIR; HarperCollins: New York, NY, USA, 1998. [Google Scholar]

- Yu, C.; Liu, G.; Hahm, S.; Hansen, J.H. Uncertainty propagation in front end factor analysis for noise robust speaker recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Florence, Italy, 4–9 May 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 4017–4021. [Google Scholar]

- Rayner, M.; Hockey, B.A.; Renders, J.M.; Chatzichrisafis, N.; Farrell, K. Spoken dialogue application in space: The Clarissa procedure browser. In Speech Technology; Springer: Berlin/Heidelberg, Germany, 2010; pp. 221–250. [Google Scholar]

- Salas, E.; Driskell, J.; Driskell, T. Using real-time lexical indicators to detect performance decrements in spaceflight teams: A methodology to dynamically monitor cognitive, emotional, and social mechanisms that influence performance. In Proceedings of the NASA Human Research Program Investigators’ Workshop, Galveston, TX, USA, 12–13 February 2014; p. 3089. [Google Scholar]

- Sangwan, A.; Kaushik, L.; Yu, C.; Hansen, J.H.; Oard, D.W. ‘Houston, we have a solution’: Using NASA Apollo program to advance speech and language processing technology. In Proceedings of the Interspeech, Lyon, France, 25–29 August 2013; pp. 1135–1139. [Google Scholar]

- Oard, D.; Sangwan, A.; Hansen, J.H. Reconstruction of Apollo mission control center activity. In Proceedings of the First Workshop on the Exploration, Navigation and Retrieval of Information in Cultural Heritage (ENRICH 2013), Dublin, Ireland, 1 August 2013. [Google Scholar]

- Beven, G.E. NASA’s Behavioral Health and Performance Services for Long Duration Spaceflight Missions. Available online: https://ntrs.nasa.gov/search.jsp?R=20200001705 (accessed on 15 July 2020).

- Vander Ark, S.; Holland, A.; Picano, J.J.; Beven, G.E. Long Term Behavioral Health Surveillance of Former Astronauts at the NASA Johnson Space Center. In Proceedings of the Aerospace Medicine Association’s 90th Annual Scientific Meeting, Las Vegas, NV, USA, 5–9 May 2018. [Google Scholar]

- Sirmons, T.A.; Roma, P.G.; Whitmire, A.M.; Smith, S.M.; Zwart, S.R.; Young, M.; Douglas, G.L. Meal replacement in isolated and confined mission environments: Consumption, acceptability, and implications for physical and behavioral health. Physiol. Behav. 2020, 219, 112829. [Google Scholar] [CrossRef] [PubMed]

- Friedl, K.E. Military applications of soldier physiological monitoring. J. Sci. Med. Sport 2018, 21, 1147–1153. [Google Scholar] [CrossRef]

- Vlachostergiou, A.; Caridakis, G.; Kollias, S. Investigating context awareness of affective computing systems: A critical approach. Procedia Comput. Sci. 2014, 39, 91–98. [Google Scholar] [CrossRef]

- Vlachostergiou, A.; Caridakis, G.; Raouzaiou, A.; Kollias, S. HCI and natural progression of context-related questions. In International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2015; pp. 530–541. [Google Scholar]

- Vlachostergiou, A.; Marandianos, G.; Kollias, S. Context incorporation using context-aware language features. In Proceedings of the 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 568–572. [Google Scholar]

- Vlachostergiou, A.; Caridakis, G.; Mylonas, P.; Stafylopatis, A. Learning representations of natural language texts with generative adversarial networks at document, sentence, and aspect level. Algorithms 2018, 11, 164. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).