Abstract

Traditional tools, such as canes, are no longer enough to support the mobility and orientation of visually impaired people in complex environments. Technological solutions based on computer vision are therefore promising alternatives for detecting obstacles. Object detection models are easy to couple to mobile systems, do not consume large amounts of resources on mobile phones, and act in real time to alert users to the presence of obstacles. However, existing object detectors were mostly trained with images from platforms such as Kaggle, and the number of object classes they cover is still limited. For this reason, this study implements a mobile system that integrates an object detection model to identify obstacles for visually impaired people. Additionally, the mobile application integrates multimodal feedback through auditory and haptic interaction, so that users receive real-time obstacle alerts via voice guidance and vibrations, further enhancing accessibility and responsiveness in different navigation contexts. The scenario chosen to develop the obstacle detection application is the Specialized Educational Unit Dr. Luis Benavides for people with impairments, which is the source of the images used to build the dataset and the site where the model is evaluated with impaired individuals. To determine the best model, the performance of YOLO is evaluated while varying the number of training epochs, using a proprietary dataset of 7600 diverse images. The YOLO-300 model turned out to be the best, with a mAP of 0.42.

1. Introduction

In order to guide visually impaired persons, traditional mobility aids are used. For example, a walking stick is used to detect obstacles but has limitations, such as a limited detection range and potential physical fatigue. Although these mobility aids provide some safety and help detect nearby ground-level obstacles, their capacity is insufficient to identify moving or more distant obstacles. This lack of coverage can leave visually impaired people exposed to constant risk in their daily lives. The integration of sensors in traditional sticks has been proposed as a solution to recognize and detect objects, helping to avoid collisions or interruptions during mobility [1,2,3,4]. Alternatively, robotic-based autonomous systems have been developed to achieve the same goal [5]. However, challenges remain for real applications, including the effective implementation of real-time devices and reliability in object recognition. This gap between research and practical application highlights the need to optimize object detection systems to guide visually impaired individuals.

Current solutions require significant user interaction, which can reduce their practicality in complex environments. Furthermore, their effectiveness can be compromised in specific environments, such as crowded spaces or the detection of low-lying obstacles. In response to these limitations, technological solutions based on deep learning and computer vision have emerged as viable and effective alternatives to improve mobility and autonomy for visually impaired individuals [6,7,8]. Deep learning architectures have significantly enhanced object detection because they offer more accurate and efficient solutions for various applications, including assistive technologies for visually impaired persons [8,9]. By training models on large datasets, deep learning models can learn to detect objects in diverse environments, improving their ability to detect obstacles of various types and sizes [10]. Object detection models such as YOLO (You Only Look Once) [11] offer real-time processing capabilities that can be integrated into mobile applications, providing immediate assistance through auditory and haptic feedback to warn of obstacles in the environment.

This paper proposes an efficient mobile-based system for obstacle detection. The proposed system provides a real-time obstacle detector that helps visually impaired persons move safely and efficiently. To this end, the system is evaluated with visually impaired students of the Specialized Educational Unit Dr. Luis Benavides in Ecuador. The obstacle detection model, which is based on YOLOv5s, is integrated into a mobile application with the objective of optimizing the detection of obstacles near high school students, thereby helping them avoid collisions. Additionally, we introduce a new dataset of 7600 images covering 76 classes. The images are captured using a 200 MP camera with an f/1.65 aperture and a 1/1.4-inch sensor to ensure high-resolution and stable visual data for model training. This dataset is referred to as the Detectra dataset and is available at https://www.kaggle.com/datasets/jhontroya/dectectra-dataset (accessed on 30 April 2025). The source code is available at https://github.com/pxmore91/vision-aid-mobile (accessed on 30 April 2025).

2. Related Work

People with visual impairments need assistance with everyday tasks, such as navigating their surroundings and detecting obstacles, to maintain independence indoors and outdoors [12]. Several solutions have been developed to address the challenges faced by visually impaired people. Although current solutions have achieved significant advances, they still face limitations in accuracy, computational efficiency, or adaptability to real-world environments. Therefore, numerous computer vision-based systems have been proposed in recent years [7,8]. Rahman et al. [6] propose a wearable electronic guidance system for visually impaired individuals. This system is designed to detect obstacles, humps, and falls, providing real-time monitoring and navigation support. The system integrates ultrasonic sensors, a PIR motion sensor, an accelerometer, a microcontroller, and a smartphone application. On the other hand, Rahmad et al. [13] use the Harris corner detection method to locate objects in images captured by a phone camera, assisting visually impaired people by identifying objects in their surroundings. The object position and distance estimation are based on the number and location of detected corners. Once enough corners are detected, a triangle rule is used to calculate distance based on estimates of the phone’s height above the ground and the camera’s point of view. While the method can detect the position and distance of objects, many calculations rely on estimates, which can reduce the precision of the calculated distance.

While Rahmad et al. [13] focused on the Harris corner detection method to estimate object position and distance, relying heavily on estimates that can affect precision, Alshahrani et al. [14] took a different approach by leveraging deep learning techniques. They introduced Basira, an intelligent mobile application designed to enhance the navigation capabilities of visually impaired individuals through real-time object detection and auditory feedback. Using the Flutter framework, which allows multi-platform application development from a single code base, Basira incorporates deep learning models such as YOLOv5s for identifying objects, obstacles, and road-crossing scenarios. This object detection allows the application to facilitate street crossings, identify obstacles, and locate objects through the user’s voice input. By offering an accessible interface and voice guidance in Arabic, Basira aims to improve mobility and independence for users in diverse environments. Another mobile framework, SOMAVIP, is proposed in [15] as an outdoor mobility aid designed for visually impaired individuals. This system integrates custom-trained YOLOv5 and Mask R-CNN models to detect and classify eight kinds of obstacles, such as e-scooters, trash bags, and benches, which are common hazards for visually impaired pedestrians. However, two of the obstacle classes, e-scooters and trash bags, are under-represented and have to be augmented to balance the data.

Moreover, a machine-learning-based approach is proposed in [3]. This system calculates the distance between the visually impaired individual and obstacles in their path while identifying objects using the Viola–Jones algorithm [16] and the TensorFlow Object Detection API. Ashiq et al. [9] introduce a CNN-based object recognition and tracking system designed to assist visually impaired people (VIPs) by improving mobility and safety. Using the lightweight MobileNet architecture, the system operates efficiently on low-power devices such as Raspberry Pi and provides real-time audio feedback to help users identify objects in their environment. In [17], the authors develop a mobile application that uses portable cameras and YOLOv3 to detect and locate objects in the environment, determining their distance and direction to guide blind users. Additionally, Waqas et al. [18] present a framework based on YOLOv3 for developing an autonomous UAV system capable of avoiding obstacles and localizing itself in GPS-denied environments to support structural health monitoring tasks. The goal is to enhance UAV usability in complex environments such as bridges or indoor structures where GPS is unreliable or unavailable.

Although previous studies have successfully employed YOLO as a base model for tasks such as obstacle detection and structural health monitoring, our approach is distinct in its specific focus on supporting visually impaired individuals through a mobile-based application. Unlike generic implementations, this study introduces a custom dataset, captured directly from the real environments encountered by visually impaired students, to train and fine-tune the detection model. The goal is not only technical accuracy but also practical usability in daily navigation scenarios. Therefore, our proposed system is based on the YOLOv5s model, a lightweight and efficient object detection model that is suitable for real-time applications on resource-constrained devices. With its small model size, YOLOv5s requires less memory and computational power, which enables deployment on mobile platforms. By adapting YOLOv5s and tailoring the detection targets to the specific obstacles relevant to the visually impaired, this work advances the application of deep learning in assistive technologies beyond prior generalized efforts.

3. Materials and Methods

This section presents the proposed mobile obstacle detection system. CRISP-DM [19] is adopted to train and evaluate the object detection model, while SCRUMBAN is used for the mobile system’s development.

3.1. CRISP-DM Methodology

In order to define the best obstacle detection model, we follow CRISP-DM. This methodology consists of six phases: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, and Deployment.

In the business understanding phase, an analysis of mobility challenges based on observation, interviews, and field diagnostics determined that obstacles within the institution hinder safe and optimal movement. This problem motivates the need for a real-time obstacle detection system, which will not only enhance the students’ autonomy but also complement the “Mobility and Orientation” subject by serving as a practical and educational tool. Key considerations during this phase include identifying the types of obstacles to be detected and determining how to integrate the system effectively into a mobile application.

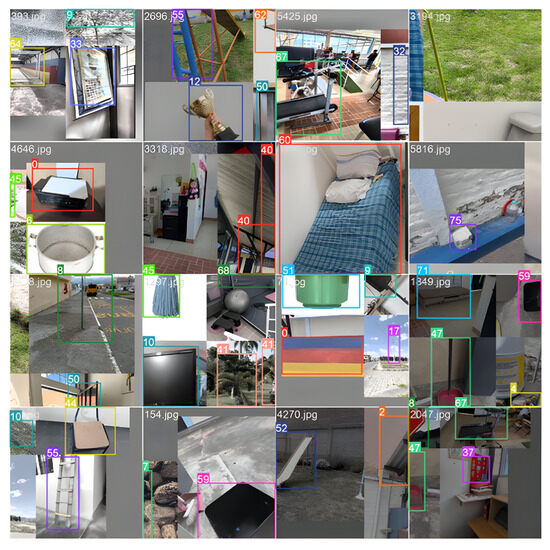

The data understanding stage aims to analyze the available data and how they can be used. In this case, the data are images of the environment, which are used to train the detection model. To collect and preprocess the data needed for YOLO fine-tuning, we introduce the Detectra dataset, which consists of 7600 images captured directly at the Dr. Luis Benavides Specialized Educational Unit, representing the specific obstacles that visually impaired students encounter in their daily lives. The images are taken using a smartphone equipped with a 200-megapixel main camera, an f/1.65 aperture, a 1/1.4-inch sensor, and optical image stabilization, enabling the capture of high-resolution and stable images critical for accurate object detection. Figure 1 shows some images from the Detectra dataset. In addition, the dataset was split into training, testing, and validation sets, which is essential to ensure efficient training and accurate evaluation of the YOLO model. The final representation of the data is shown in Table 1.

Figure 1. Sample images from the Detectra dataset.

Table 1. Detectra dataset.

The data preparation phase consists of adapting the data in order to improve the final results. The dataset contains 7600 images in 76 classes corresponding to objects from the Educational Unit, which have been classified into indoor and outdoor categories, with 100 images per class. All of the images are standardized to a resolution of 1024 × 1024 px to ensure a consistent input size, and every image is in RGB color format.
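The split into training, validation, and test sets can be sketched per class so that each of the 76 classes stays equally represented in every subset. This is only a minimal sketch and not the authors' procedure: the 70/20/10 ratios, the `split_per_class` helper, and the file names are assumptions for illustration, and it assumes each image is filed under one primary class. The actual proportions are those reported in Table 1.

```python
import random
from collections import defaultdict

def split_per_class(items, ratios=(0.7, 0.2, 0.1), seed=0):
    """Split (filename, class_name) pairs into train/val/test per class.

    The ratios are illustrative assumptions, not the values of Table 1.
    """
    by_class = defaultdict(list)
    for filename, class_name in items:
        by_class[class_name].append(filename)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    splits = {"train": [], "val": [], "test": []}
    for class_name in sorted(by_class):
        files = sorted(by_class[class_name])
        rng.shuffle(files)
        n_train = round(len(files) * ratios[0])
        n_val = round(len(files) * ratios[1])
        splits["train"] += files[:n_train]
        splits["val"] += files[n_train:n_train + n_val]
        splits["test"] += files[n_train + n_val:]  # remainder goes to test
    return splits

# Hypothetical file names: 76 classes with 100 images each,
# matching the dataset description above.
items = [
    (f"class{c:02d}_{i:03d}.jpg", f"class{c:02d}")
    for c in range(76)
    for i in range(100)
]
splits = split_per_class(items)
print({name: len(files) for name, files in splits.items()})
```

Under these assumed ratios, every class contributes 70 training, 20 validation, and 10 test images, so no class is missing from any subset.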

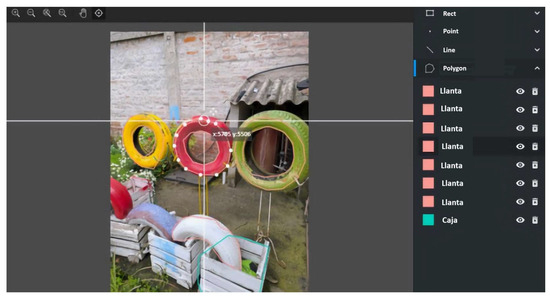

The images need to be tagged with the objects they contain. This process involved defining and labelling the objects of interest, specifically the obstacles in the Educational Unit, using the Make Sense tool. To improve accuracy, the polygon function was used, which allows the precise contouring of objects, especially in complex scenarios with irregularly shaped or partially hidden obstacles, as shown in Figure 2. This ensured higher-quality training data for the YOLO model.

Figure 2. Polygon image tagging.
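YOLOv5 detection labels store one object per line as a class index followed by a box center, width, and height, all normalized to the image size. The sketch below shows one way a polygon annotation could be reduced to that format by taking its enclosing box. Whether the authors converted their polygons this way is not stated, so the helper names, the class index, and the example polygon are hypothetical.

```python
def polygon_to_yolo_box(points, img_w, img_h):
    """Convert polygon vertices [(x, y), ...] in pixels to a normalized
    (x_center, y_center, width, height) box enclosing the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return x_center, y_center, width, height

def yolo_label_line(class_id, points, img_w=1024, img_h=1024):
    """Format one annotation as a YOLO label line (1024 x 1024 images assumed)."""
    box = polygon_to_yolo_box(points, img_w, img_h)
    return f"{class_id} " + " ".join(f"{v:.6f}" for v in box)

# Hypothetical polygon traced around a chair; class index 4 is arbitrary.
chair_polygon = [(256, 512), (512, 384), (768, 512), (640, 896), (384, 896)]
line = yolo_label_line(4, chair_polygon)
print(line)  # 4 0.500000 0.625000 0.500000 0.500000
```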

In this study, the YOLOv5s model was selected as the object detection backbone due to its proven balance between detection accuracy, computational efficiency, and real-time performance. Although newer versions of YOLO offer advancements in specific tasks, they typically require greater computational resources and are optimized for high-end hardware. Given the objective of deploying the system on mobile devices to assist visually impaired users in real-world environments, model size and inference speed are critical considerations. YOLOv5s, with its lightweight architecture and robust performance, provides a suitable compromise, allowing for accurate obstacle detection while maintaining the low latency required for mobile-based applications.

Moreover, the model’s performance is evaluated in terms of accuracy, precision, recall, and mean average precision (mAP). The evaluation ensures that the system meets real-time detection requirements and provides reliable assistance to visually impaired users. Finally, the optimized model is integrated into a mobile application using frameworks such as TensorFlow Lite and PyTorch ExecuTorch Mobile for efficient performance on mobile devices. For the development of the mobile application, we adopted SCRUMBAN. Final testing was conducted with real users to validate the system’s usability, followed by the application’s release in the Specialized Educational Unit Dr. Luis Benavides.

3.2. SCRUMBAN Methodology

The SCRUMBAN methodology [20] was applied to streamline the development of the proposed mobile application, which integrates the obstacle detection model based on YOLOv5s. Each sprint focuses on specific tasks, such as designing and implementing an intuitive user interface that leverages auditory and haptic feedback to assist visually impaired users. As the primary programming language, Python 3.11 enables robust development and compatibility with machine learning frameworks.

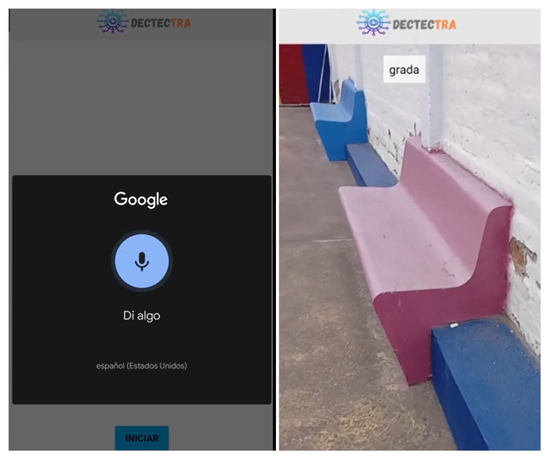

The mobile app interface has a simplified structure with only two screens. The first screen welcomes the user and facilitates a brief initial interaction with the app; the second screen displays the images captured by the camera in real time and uses the integrated YOLO model for object detection. Figure 3 shows the mobile application’s user interfaces; the principal interaction mechanism is the user’s voice.

Figure 3. The developed mobile application.
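The step between detection and feedback on the second screen can be sketched as a small planner that decides, per camera frame, which obstacles to announce and whether to vibrate. This is an illustrative sketch rather than the application's code: the `Detection` and `AlertPlanner` names, the 0.5 confidence threshold, the 3-second cooldown, and the message wording are all assumptions. The returned message would be handed to the platform's text-to-speech engine and the flag to its vibration API, neither of which is shown here.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str         # class name predicted by the detector
    confidence: float  # detector score in [0, 1]

class AlertPlanner:
    """Decides what to announce for one camera frame (illustrative only)."""

    def __init__(self, min_confidence=0.5, cooldown_s=3.0):
        self.min_confidence = min_confidence  # assumed threshold
        self.cooldown_s = cooldown_s          # assumed repeat interval
        self._last_spoken = {}                # label -> time of last announcement

    def plan(self, detections, now_s):
        """Return (message, vibrate) for the detections of one frame."""
        labels = []
        ranked = sorted(detections, key=lambda d: d.confidence, reverse=True)
        for det in ranked:
            if det.confidence < self.min_confidence:
                continue  # too uncertain to announce
            last = self._last_spoken.get(det.label)
            if last is not None and now_s - last < self.cooldown_s:
                continue  # announced recently; stay quiet to avoid overload
            labels.append(det.label)
            self._last_spoken[det.label] = now_s
        if not labels:
            return None, False
        return "Obstacle ahead: " + ", ".join(labels), True

planner = AlertPlanner()
frame = [Detection("chair", 0.82), Detection("door", 0.41), Detection("stairs", 0.67)]
first = planner.plan(frame, now_s=0.0)   # announces chair and stairs
second = planner.plan(frame, now_s=1.0)  # silent: still within the cooldown
third = planner.plan(frame, now_s=4.0)   # cooldown elapsed, announces again
print(first, second, third)
```

The cooldown is one possible design choice to keep voice guidance from repeating the same obstacle on every frame while the camera is still pointed at it.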

4. Results

This section details the dataset utilized and the corresponding results obtained during the experiments and analysis. The experiments are designed to evaluate the effectiveness of the proposed system.

4.1. Dataset

As mentioned above, the Detectra dataset consists of 7600 RGB images of 1024 × 1024 pixels. The dataset contains 76 classes such as Furniture, Ball, Door, Tree, Chair, Slide, and so on. The dataset classes are listed in Table 2. Each image contains 3, 4, or 5 common obstacles within the Educational Unit, as shown in Figure 4. Each class appears in 100 images. In order to determine which objects should be classified as obstacles, we conducted interviews with both visually impaired students and faculty members at the Dr. Luis Benavides Specialized Educational Unit. These interviews provided first-hand insights into the specific environmental elements that pose real challenges during daily navigation, such as stairs, open doors, chairs, and misplaced objects. Based on this input, we annotated our dataset to include only those items that were consistently identified as potential hazards. This user-informed approach ensures that the detection model focuses on obstacles that are both contextually relevant and practically significant for the target users.

Table 2. Obstacle classification: interior and exterior.

Figure 4. Image depicting several obstacles annotated with bounding boxes. Each bounding box highlights a detected object, labeled according to its class.

4.2. Experimental Results

The proposed obstacle detection model is based on YOLOv5s due to its well-documented advantages in balancing speed and accuracy. Several variations in hyperparameter configurations are applied to comprehensively evaluate the proposed system’s performance, and models are trained across different epochs (50, 100, and 300) with the Detectra dataset. Testing YOLOv5s with different numbers of epochs is necessary to determine the point at which the model achieves optimal performance without overfitting or underfitting. By testing multiple epoch settings, we can observe how the model improves over time, identify the stage where the validation accuracy stabilizes, and select the most efficient training duration. The evaluation is done by using performance metrics, including mean Average Precision (mAP), recall, precision, and accuracy, providing a multidimensional understanding of the model’s strengths and potential areas for improvement.
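The three training runs can be expressed as invocations of the `train.py` script from the YOLOv5 repository, differing only in the epoch budget. The sketch below merely builds the argument lists. The dataset file name `detectra.yaml`, the batch size, and the training image size of 1024 are assumptions, since the text does not report these settings.

```python
import shlex

def yolov5_train_command(epochs, data_yaml="detectra.yaml", img_size=1024, batch_size=16):
    """Build the argument list for YOLOv5's train.py for one epoch budget."""
    return [
        "python", "train.py",
        "--weights", "yolov5s.pt",        # pretrained YOLOv5s checkpoint to fine-tune
        "--data", data_yaml,              # hypothetical dataset config with the 76 classes
        "--imgsz", str(img_size),         # assumed to match the 1024 x 1024 images
        "--batch-size", str(batch_size),  # assumed value, not reported in the text
        "--epochs", str(epochs),
        "--name", f"yolo-{epochs}",       # run name used for the output folder
    ]

# One command per epoch setting evaluated in this section.
commands = {f"YOLO-{e}": yolov5_train_command(e) for e in (50, 100, 300)}
for name, argv in commands.items():
    print(name, "->", shlex.join(argv))
```

Each list could be passed to `subprocess.run(argv, check=True)` from inside a clone of the YOLOv5 repository. Keeping every other argument fixed means that differences between the runs can be attributed to the number of epochs.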

Table 3 shows the evaluation results. YOLO-50, YOLO-100, and YOLO-300 denote the models trained for 50, 100, and 300 epochs, respectively. YOLO-50 and YOLO-100 prove insufficient for achieving the mAP, recall, precision, and accuracy required in real-time obstacle detection applications. YOLO-50 achieves a low mAP of 0.02, which reflects a limited ability to identify objects in images correctly. YOLO-100 improves on YOLO-50 but still falls short of accurate detection: its mAP increases to 0.22, and its precision and accuracy improve slightly (0.69 and 0.65, respectively). In contrast, YOLO-300 achieves the highest performance, with a mAP of 0.42, recall of 0.77, precision of 0.79, and accuracy of 0.76. Due to its superior performance, YOLO-300 was selected for integration into the developed mobile application.

Table 3. Evaluation results on Detectra dataset.
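Precision and recall for a detector are usually obtained by matching predicted boxes to ground-truth boxes of the same class using intersection over union (IoU). The following sketch shows that computation for a single image. The IoU threshold of 0.5 and the greedy matching order are common conventions assumed here, not settings reported by the study, and the example boxes are hypothetical. mAP additionally averages, over all classes, the area under each class's precision-recall curve, which is not shown.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy matching of same-class boxes for one image.

    predictions: list of (label, confidence, box)
    ground_truth: list of (label, box)
    """
    matched = set()  # indices of ground-truth boxes already claimed
    tp = 0
    for label, _, box in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, (gt_label, gt_box) in enumerate(ground_truth):
            if idx in matched or gt_label != label:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
    fp = len(predictions) - tp   # predictions with no matching object
    fn = len(ground_truth) - tp  # objects the detector missed
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    return precision, recall

# Hypothetical image: the chair is found, the door is missed,
# and one door prediction lands on empty space.
ground_truth = [("chair", (0, 0, 100, 100)), ("door", (200, 200, 300, 400))]
predictions = [("chair", 0.9, (10, 10, 110, 110)), ("door", 0.8, (400, 400, 500, 500))]
precision, recall = precision_recall(predictions, ground_truth)
print(precision, recall)  # 0.5 0.5
```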

5. Discussion

The proposed application leverages recent advancements in assistive technologies for visually impaired individuals while addressing specific limitations found in prior works. Compared to traditional approaches such as Harris corner detection [13], which rely heavily on estimates in distance calculations, our model leverages deep learning to provide a more reliable and adaptable detection mechanism. Similarly, while wearable guidance systems, such as the one proposed by Rahman et al. [6], integrate multiple sensors for real-time obstacle detection, they require additional hardware components, which can increase costs and reduce accessibility for some users. In contrast, our system runs entirely within a mobile application, making it more accessible by leveraging the computational power of smartphones without requiring external sensors.

The proposed obstacle detection application based on YOLOv5s presents significant implications for Human–Computer Interaction (HCI), particularly in the context of accessibility for visually impaired individuals. Thanks to the integration of auditory and haptic feedback, our application enhances user awareness by providing real-time alerts through voice guidance and vibrations, which allow users to navigate their environment safely. Therefore, this multimodal interaction ensures that visually impaired individuals receive immediate obstacle notifications. In addition, our proposed mobile application improves real-time interaction by combining an obstacle detection model with sensory feedback. In this context, our study reinforces the importance of designing assistive technologies that prioritize both functionality and user experience. Future work could explore optimizing adaptive haptic feedback by implementing dynamic vibration patterns that vary in intensity and frequency based on the proximity and type of obstacle, improving spatial awareness and responsiveness in real-time navigation.
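The adaptive haptic feedback suggested as future work could, for instance, map obstacle proximity and type to vibration intensity and pulse rate. The sketch below is purely hypothetical. It assumes a distance estimate in meters is available from some other component, which the current system does not provide, and the `hazard_level` scale, the 5 m range, and every constant are illustrative choices.

```python
def vibration_pattern(distance_m, hazard_level=1, max_range_m=5.0):
    """Map obstacle proximity and type to a hypothetical vibration pattern.

    Returns (amplitude 0-255, pulse_ms, pause_ms). Closer or more hazardous
    obstacles give stronger and faster pulses. All constants are assumptions.
    """
    if distance_m >= max_range_m:
        return 0, 0, 0  # out of range: no vibration
    # closeness runs from 0 (at the edge of the range) to 1 (touching)
    closeness = 1.0 - max(distance_m, 0.0) / max_range_m
    # hazard_level 1 scales intensity by 0.75, level 2 by 1.0
    amplitude = min(255, round(255 * closeness * (0.5 + 0.25 * hazard_level)))
    pulse_ms = 100
    pause_ms = round(100 + 900 * (1.0 - closeness))  # shorter pause when near
    return amplitude, pulse_ms, pause_ms

for distance in (4.0, 2.0, 0.5):
    print(distance, vibration_pattern(distance, hazard_level=2))
```

On Android, a triple like this could be translated into a waveform for the system vibrator, though that mapping is left open here.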

6. Conclusions

This study proposes the Detectra application for visually impaired individuals, which is the result of the integration of the YOLO-300 model for obstacle detection into a mobile application with text-to-speech (TTS) and haptic feedback. The comparative analysis of computer vision technologies identified YOLO as the most suitable model for this project due to its ability to operate in real-time and provide an optimal balance between accuracy and performance. Although other technologies, such as SSD and Faster R-CNN, excelled in certain aspects, YOLO stands out as the most efficient solution for integration into mobile devices through its Lite version, making it ideal for achieving the project objectives. This choice is supported by the structured and agile development facilitated by the combination of CRISP-DM and SCRUMBAN methodologies, which ensured both the precise real-time detection of obstacles and the design of the Detectra application.

Furthermore, integrating text-to-speech (TTS) and haptic feedback through vibrations provides clear and timely information about the detected obstacles, which significantly enhances user interaction and understanding of the system. The implemented model demonstrated robust performance, achieving an mAP of 0.42, surpassing the baseline value of 0.374, and an accuracy of 76.7%. These results demonstrate that our proposed system can correctly detect obstacles in real time under various lighting conditions. Therefore, the Detectra mobile application contributes to the autonomous and safe mobility of visually impaired users within their educational environment and demonstrates the feasibility of integrating advanced technology to solve real-world problems, with a direct positive impact on the quality of life of the end users.

Author Contributions

Conceptualization, N.S.O.Y., J.C.T.C. and G.K.B.-G.; methodology, N.S.O.Y., J.C.T.C., M.A.P.A. and G.K.B.-G.; software, N.S.O.Y., J.C.T.C. and P.X.M.-V.; validation, G.K.B.-G., M.A.P.A., P.X.M.-V. and P.R.M.-C.; formal analysis, G.K.B.-G., M.A.P.A., P.X.M.-V. and P.R.M.-C.; investigation, N.S.O.Y., J.C.T.C., G.K.B.-G., M.A.P.A., P.X.M.-V. and P.R.M.-C.; resources, N.S.O.Y., J.C.T.C. and G.K.B.-G.; data curation, N.S.O.Y., J.C.T.C. and G.K.B.-G.; writing—original draft preparation, N.S.O.Y., J.C.T.C. and G.K.B.-G.; writing—review and editing, P.X.M.-V. and P.R.M.-C.; visualization, G.K.B.-G., M.A.P.A., P.X.M.-V. and P.R.M.-C.; supervision, G.K.B.-G.; project administration, G.K.B.-G. and M.A.P.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because participants only tested the application, without any interventions that could pose risks to their well-being. The study did not involve medical, psychological, or behavioral experiments, nor did it collect personal or sensitive data. The testing was limited to usability evaluation in a real-world setting, where participants interacted voluntarily with the developed system under normal conditions, so no ethical concerns requiring formal review arose.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original data presented in the study are openly available in Kaggle at https://www.kaggle.com/datasets/jhontroya/dectectra-dataset (accessed on 22 February 2025).

Acknowledgments

The authors would like to thank the Specialized Educational Unit Dr. Luis Benavides for allowing us to implement and evaluate the proposed obstacle detection mobile application within their facilities. Their collaboration and valuable insights contributed significantly to the development and validation of this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hoang, V.N.; Nguyen, T.H.; Le, T.L.; Tran, T.H.; Vuong, T.P.; Vuillerme, N. Obstacle detection and warning system for visually impaired people based on electrode matrix and mobile Kinect. Vietnam J. Comput. Sci. 2017, 4, 71–83.

2. Chang, W.J.; Chen, L.B.; Chen, M.C.; Su, J.P.; Sie, C.Y.; Yang, C.H. Design and implementation of an intelligent assistive system for visually impaired people for aerial obstacle avoidance and fall detection. IEEE Sens. J. 2020, 20, 10199–10210.

3. Masud, U.; Saeed, T.; Malaikah, H.M.; Islam, F.U.; Abbas, G. Smart assistive system for visually impaired people obstruction avoidance through object detection and classification. IEEE Access 2022, 10, 13428–13441.

4. Suman, S.; Mishra, S.; Sahoo, K.S.; Nayyar, A. Vision navigator: A smart and intelligent obstacle recognition model for visually impaired users. Mob. Inf. Syst. 2022, 2022, 9715891.

5. Fujimori, A.; Kubota, H.; Shibata, N.; Tezuka, Y. Leader–follower formation control with obstacle avoidance using sonar-equipped mobile robots. Proc. Inst. Mech. Eng. Part I J. Syst. Control Eng. 2014, 228, 303–315.

6. Rahman, M.M.; Islam, M.M.; Ahmmed, S.; Khan, S.A. Obstacle and fall detection to guide the visually impaired people with real time monitoring. SN Comput. Sci. 2020, 1, 219.

7. Mahendran, J.K.; Barry, D.T.; Nivedha, A.K.; Bhandarkar, S.M. Computer vision-based assistance system for the visually impaired using mobile edge artificial intelligence. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2418–2427.

8. Vijetha, U.; Geetha, V. Obs-tackle: An obstacle detection system to assist navigation of visually impaired using smartphones. Mach. Vis. Appl. 2024, 35, 20.

9. Ashiq, F.; Asif, M.; Ahmad, M.B.; Zafar, S.; Masood, K.; Mahmood, T.; Mahmood, M.T.; Lee, I.H. CNN-based object recognition and tracking system to assist visually impaired people. IEEE Access 2022, 10, 14819–14834.

10. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

11. Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

12. Mashiata, M.; Ali, T.; Das, P.; Tasneem, Z.; Badal, M.F.R.; Sarker, S.K.; Hasan, M.M.; Abhi, S.H.; Islam, M.R.; Ali, M.F.; et al. Towards assisting visually impaired individuals: A review on current status and future prospects. Biosens. Bioelectron. X 2022, 12, 100265.

13. Rahmad, C.; Rawansyah, T.K.; Arai, K. Object Detection System to Help Navigating Visual Impairments. Int. J. Adv. Comput. Sci. Appl. IJACSA 2019, 10, 140–143.

14. Alshahrani, A.; Alqurashi, A.; Imam, N.; Alghamdi, A.; Alzahrani, R. Basira: An Intelligent Mobile Application for Real-Time Comprehensive Assistance for Visually Impaired Navigation. Int. J. Adv. Comput. Sci. Appl. IJACSA 2024, 15.

15. Khan, W.; Hussain, A.; Khan, B.M.; Crockett, K. Outdoor mobility aid for people with visual impairment: Obstacle detection and responsive framework for the scene perception during the outdoor mobility of people with visual impairment. Expert Syst. Appl. 2023, 228, 120464.

16. Chaudhari, M.N.; Deshmukh, M.; Ramrakhiani, G.; Parvatikar, R. Face Detection Using Viola Jones Algorithm and Neural Networks. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–6.

17. Meenakshi, R.; Ponnusamy, R.; Alghamdi, S.; Khalaf, O.I.; Alotaibi, Y. Development of Mobile App to Support the Mobility of Visually Impaired People. Comput. Mater. Contin. 2022, 73, 3473–3495.

18. Waqas, A.; Kang, D.; Cha, Y.J. Deep learning-based obstacle-avoiding autonomous UAVs with fiducial marker-based localization for structural health monitoring. Struct. Health Monit. 2024, 23, 971–990.

19. Wirth, R.; Hipp, J. CRISP-DM: Towards a standard process model for data mining. In Proceedings of the 4th International Conference on the Practical Applications of Knowledge Discovery and Data Mining, Manchester, UK, 11–13 April 2000; Volume 1, pp. 29–39.

20. Alqudah, M.; Razali, R. An empirical study of Scrumban formation based on the selection of scrum and Kanban practices. Int. J. Adv. Sci. Eng. Inf. Technol. 2018, 8, 2315–2322.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).