Towards Emotionally Expressive Virtual Human Agents to Foster L2 Production: Insights from a Preliminary Woz Experiment

Abstract

1. Introduction

2. Literature Review

2.1. Emotion and Second Language Production

2.2. Animated Pedagogical Agents and Learning Support

2.3. Facial Action Coding System

3. Research Objective

- Propose a method for building emotionally expressive computer-based agents that can display attentive nonverbal signals while listening to human conversation partners.

- Investigate the extent to which such agents are capable of conveying sufficient empathy to regulate second language learners’ emotional experience and promote their production of the target language.

4. Approach

4.1. Virtual Agent’s Feedback-Generation Module

4.2. Wizard’s Face Expression Detection Module

5. Woz Experiment

5.1. Instruments and Participants

5.2. Flow of Interactions

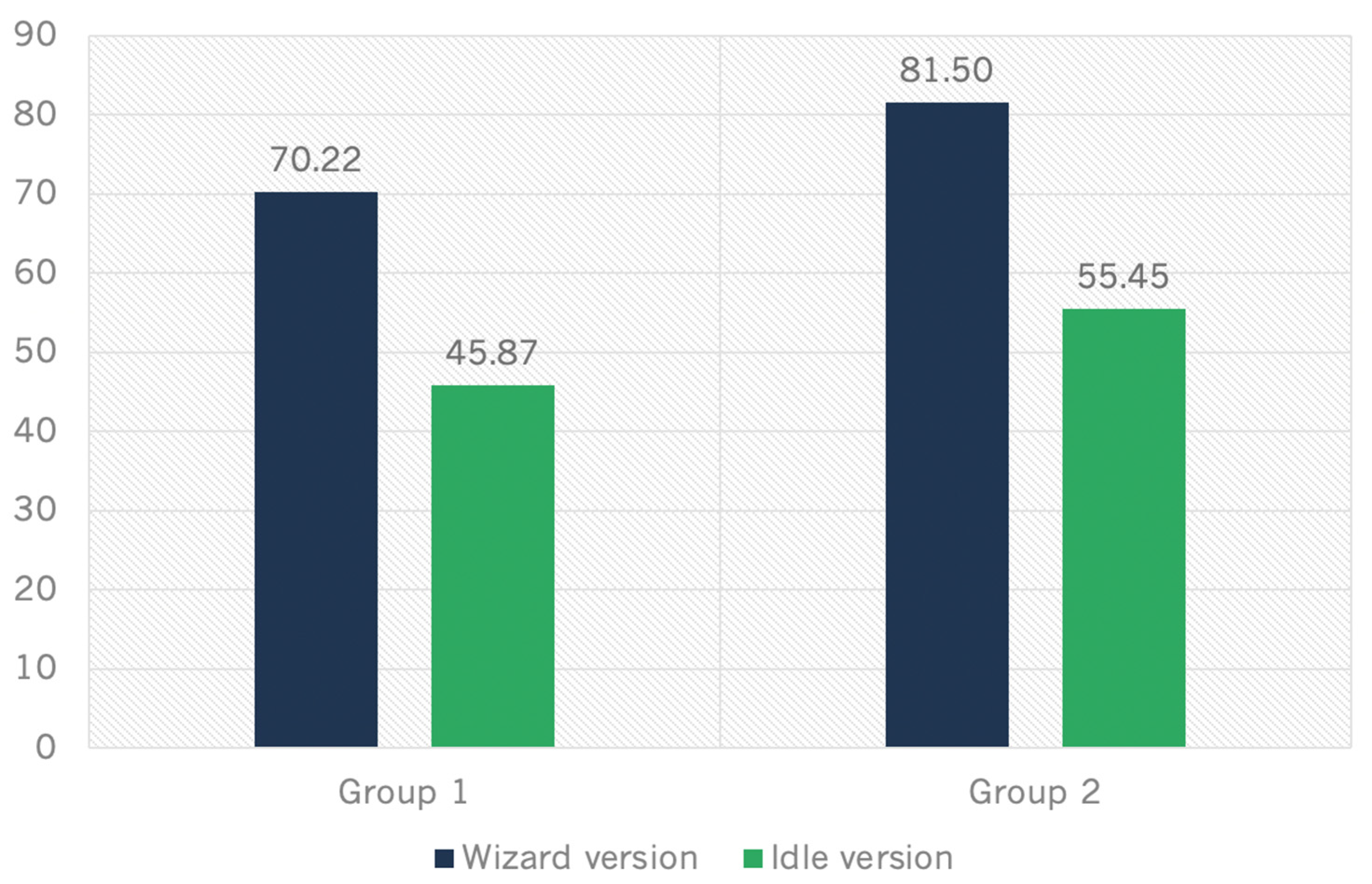

5.3. Results

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | | | | |
|---|---|---|---|---|
| 1. Left_EyesLidsSquint | 2. Left_EyesLidsLookLeft | 3. Left_BrowsIn | 4. Left_NoseWrinkler | 5. All_SmileLeft |
| 6. Right_EyesLidsSquint | 7. Right_EyesLidsLookLeft | 8. Right_BrowsIn | 9. Right_NoseWrinkler | 10. Left_SmileLispOpenLeft |
| 11. Left_EyesLidsCloseHard | 12. Left_EyesLidsLookUp | 13. Left_BrowsInRaised | 14. Left_NoseScrunch | 15. All_SmileRight |
| 16. Right_EyesLidsCloseHard | 17. Right_EyesLidsLookUp | 18. Right_BrowsInRaised | 19. Right_NoseScrunch | 20. Left_SmileLispOpenRight |
| 21. Left_EyesLidsScrunch | 22. Left_EyesLidsLookUpLeft | 23. Left_BrowsDownScrunchEyes | 24. Left_NoseSneer | 25. Left_Frown |
| 26. Right_EyesLidsScrunch | 27. Right_EyesLidsLookUpLeft | 28. Right_BrowsDownScrunchEyes | 29. Right_NoseSneer | 30. Right_Frown |
| 31. Left_EyesLidsBlink | 32. Left_EyesLidsLookUpRight | 33. Left_BrowsSneer | 34. Left_NoseBrowsDown | 35. Left_Kiss |
| 36. Right_EyesLidsBlink | 37. Right_EyesLidsLookUpRight | 38. Right_BrowsSneer | 39. Right_NoseBrowsDown | 40. Right_Kiss |
| 41. Left_EyesLidsHalfClosed | 42. Left_EyesLidsWide | 43. Left_CheekRaiser | 44. Left_NoseBrowsIn | 45. All_LipsLeft |
| 46. Right_EyesLidsHalfClosed | 47. Right_EyesLidsWide | 48. Right_CheekRaiser | 49. Right_NoseBrowsIn | 50. All_LipsRight |
| 51. Left_EyesLidsBlinkLowerLidRaised | 52. Left_EyesLidsCheekRaiser | 53. Left_CheekScrunch | 54. Left_NosePull | 55. Left_FunnelBigCH |
| 56. Right_EyesLidsBlinkLowerLidRaised | 57. Right_EyesLidsCheekRaiser | 58. Right_CheekScrunch | 59. Right_NosePull | 60. Right_FunnelBigCH |
| 61. Left_EyesLidsLookDown | 62. Left_EyesLidsSmile | 63. Left_CheekSmile | 64. Left_NostrilDilator | 65. Left_FunnelClosed |
| 66. Right_EyesLidsLookDown | 67. Right_EyesLidsSmile | 68. Right_CheekSmile | 69. Right_NostrilDilator | 70. Right_FunnelClosed |
| 71. Left_EyesLidsLookDownLeft | 72. Left_BrowsUp | 73. All_CheekSmileLeft | 74. Left_LipsNoseWrinkler | 75. Left_UpperLipRaiser |
| 76. Right_EyesLidsLookDownLeft | 77. Right_BrowsUp | 78. All_CheekSmileRight | 79. Right_LipsNoseWrinkler | 80. Right_UpperLipRaiser |
| 81. Left_EyesLidsLookDownRight | 82. Left_BrowsOuterUp | 83. Left_CheekSneer | 84. Left_SmileSharp | 85. Left_LowerLipDepresser |
| 86. Right_EyesLidsLookDownRight | 87. Right_BrowsOuterUp | 88. Right_CheekSneer | 89. Right_SmileSharp | 90. Right_LowerLipDepresser |
| 91. Left_EyesLidsLookRight | 92. Left_BrowsDown | 93. Left_CheekLipRaiser | 94. All_Smile | 95. Left_SneerUpperLipFunnel |
| 96. Right_EyesLidsLookRight | 97. Right_BrowsDown | 98. Right_CheekLipRaiser | 99. All_SmileLispOpen | 100. Right_SneerUpperLipFunnel |

| | Group 1 (n = 6) | Group 2 (n = 6) |
|---|---|---|
| Step 1 | Initial Guidance | Initial Guidance |
| Step 2 | Wizard condition | Idle condition |
| Step 3 | Idle condition | Wizard condition |
| Step 4 | Preference Survey | Preference Survey |
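The table above describes a counterbalanced, within-subject design: both groups go through the same four steps, but the order of the Wizard and Idle conditions is swapped. The following Python sketch encodes that schedule. It is only an illustration: the function names (`assign_groups`, `schedule_for`) are hypothetical, and the random, seeded assignment of participants to groups is an assumption, since the table does not state how the twelve participants were allocated.

```python
import random

# Step order per group, taken from the flow-of-interactions table.
STEPS_BY_GROUP = {
    "Group 1": ["Initial Guidance", "Wizard condition",
                "Idle condition", "Preference Survey"],
    "Group 2": ["Initial Guidance", "Idle condition",
                "Wizard condition", "Preference Survey"],
}


def assign_groups(participant_ids, seed=0):
    """Split participants into two equal-sized groups.

    Assumption: random assignment with a fixed seed; the source does
    not describe the actual allocation procedure.
    """
    ids = list(participant_ids)
    if len(ids) % 2 != 0:
        raise ValueError("an even number of participants is required")
    rng = random.Random(seed)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {"Group 1": sorted(ids[:half]), "Group 2": sorted(ids[half:])}


def schedule_for(group):
    """Return the ordered list of steps for the given group."""
    return list(STEPS_BY_GROUP[group])


if __name__ == "__main__":
    groups = assign_groups(range(1, 13))  # 12 participants, n = 6 per group
    for name, members in groups.items():
        print(name, members, " -> ".join(schedule_for(name)))
```

Swapping the condition order between groups means that any order effect (for example, participants warming up to the task) is spread evenly across the Wizard and Idle conditions rather than favoring one of them.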

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ayedoun, E.; Tokumaru, M. Towards Emotionally Expressive Virtual Human Agents to Foster L2 Production: Insights from a Preliminary Woz Experiment. Multimodal Technol. Interact. 2022, 6, 77. https://doi.org/10.3390/mti6090077
