Abstract

Videogame research needs to identify how game features impact learning outcomes. This study explored the impact of one game feature, human interaction, on training outcomes (i.e., affective states and declarative knowledge), and examined possible mechanisms (i.e., perceived value and active learning) that mediate this relationship. Participants included 385 undergraduate students: 122 trained alone and 263 trained with a team. All participants completed a computer-based training with four learning objectives (i.e., accessing the game, using the main controls, playing the game scenarios, knowing the game stations) prior to playing the game. After accounting for the indirect effects in the model, human interaction (i.e., playing with a team) had a significant direct effect on affective states, but not on declarative knowledge. Learners who trained with a team reported greater positive affective states (indicated by psychological meaning, perceived enjoyment, motivation, and emotional engagement), but showed no difference in declarative knowledge (i.e., participants’ knowledge of team roles and responsibilities). Further analyses showed that game-based training with a team impacted the affective states of learners through the mechanisms of perceived value and active learning, while only active learning mediated the relationship between human interaction and declarative knowledge.

1. Introduction

A videogame is a virtually constructed environment, with rules and goals that challenge the player while engaging their attention with a series of decisions [1,2,3]. Videogame environments are well-suited for teaching because they provide a high degree of control over the features and characteristics of the game. This flexibility is beneficial to organizations because game scenarios can be easily adapted to different needs [4,5]. Recent years have seen a rise in the application of videogames for purposes other than entertainment [6]. Sources have projected continued growth in the development and application of videogames for novel purposes, such as being used in the classroom to teach elementary school children, in interactive displays for museum exhibits, and within organizations to train and develop employees [7]. Despite the increasing use of videogames for training purposes, the research evidence used to justify the investment in and implementation of videogame-based learning is limited [4]. Research on videogame-based learning has found evidence that both supports and discredits the claim that videogames enhance learning outcomes [8,9]. The primary purpose of this study is to present and test an alternative methodology for videogame-based learning that could help explain previous contradictory findings and clarify future research results. This proposed methodology is an extension of a model of videogame-based learning [10] that isolates game characteristics and identifies specific mechanisms through which different training outcomes are affected. In this current study, one game characteristic (i.e., human interaction) will be evaluated to determine the direct effects on two types of training criteria (i.e., affective states and declarative knowledge), and to test two indirect effects (i.e., perceived value and active learning).

1.1. Human Interaction

Some researchers have posited that different videogame features lead to different outcomes, which may help clarify how the results of a particular game relate to the larger body of research on game-based learning. Using the taxonomy of game attributes presented by [11], this study focuses on human interaction, defined as the degree of interaction an individual has with someone else while playing the game [11]. Although any of the videogame characteristics might affect learning outcomes, human interaction presents a simple and feasible intervention that organizations could easily manipulate within existing programs and incorporate into future training programs. While individuals can interact with others in a variety of ways, a common way is as members of the same team. There are specific methods inherent to teamwork that make this process distinct from work performed by an individual [12], because teams coordinate their efforts in various ways to divide up the workload, catering to individuals’ strengths and preferences on tasks. To study the effects of human interaction, individual learning will be compared to team learning (i.e., those who complete the videogame training alone compared to those who complete the training within a team).

A handful of studies have directly compared individuals who played with a team to individuals who played alone in a training setting [13,14,15,16,17]. A similar stream of research has reported on the positive impact that teamwork can have on individual outcomes [18,19]. A general trend in these studies has been that those training in a team have demonstrated greater learning outcomes than those who trained individually [13]. Previous research has shown that positive team interactions lead to beneficial team processes, such as knowledge sharing [20], transactive memory (e.g., [21]), and information elaboration [22], which can be beneficial for learning [23]. The potential impact of human interaction on different training outcomes will be explored, along with the likely mechanisms through which these relationships are mediated.

1.2. Affective States

Affective states are the temporary states that trainees experience as part of this particular videogame-based training activity. The measures used here capture attitudes specific to the training, not an attitude change that would persist long after the training. Other training researchers have presented similar approaches, such as Ford and colleagues [24], who measured goal orientation and self-efficacy as mediating states following a training intervention. This direction reflects the transition that the team training literature has made in moving away from the traditional input-process-output (IPO) model toward a more nuanced model of emergent states that teams experience during interactions [25]. For the current study, four affective states are examined, each discussed below.

1.2.1. Psychological Meaning

Psychological meaning is defined as an individual’s positive evaluation of their experience in the videogame-based training activity, based on their value system [26]. Psychological meaning benefits training via an association with increased motivation [26]. It is anticipated that individuals who train with a team will report greater perceptions of meaningfulness from the training. This is based on previous research findings that working with others in a game can improve the meaningfulness of the content being taught [27].

1.2.2. Perceived Enjoyment

Perceived enjoyment is defined as satisfaction with the training related to attaining personal needs or expectations in the training [28]. Previous research has shown that when grouped with a virtual team, individuals reported higher levels of enjoyment [4]. It is anticipated that participants who are placed within a team will report significantly higher levels of enjoyment from the training.

1.2.3. Emotional Engagement

Emotional engagement is the level of emotional arousal experienced during the training [29]. Previous research has shown that individuals experienced more engagement through their participation with a team [4]. It is believed that individuals who train with a team will feel more emotional engagement in the training.

1.2.4. Motivation

A common claim is that games are inherently motivating, and they involve trainees in the content and gameplay [30]. Further, other researchers have shown that interaction with others during a game was motivating for the player [27]. This increased motivation has been shown to relate to other positive outcomes [31,32,33,34]. Based on these findings, it is believed that human interaction will lead to increased levels of motivation.

1.3. Affective States as a Latent Construct

The variables above describe positive affective states, each indicating a positive evaluation of participants’ experience during the videogame. Some researchers have argued in favor of measuring discrete dimensions of affect [35,36], but there is evidence that affective measures are positively and significantly correlated [37,38]. Other researchers have grouped affective measures together on the evidence that they load onto the same factor [15]. Because affective measures tend to be positively correlated with one another, the current measures of affective states will be treated as indicators of a broader latent variable, provided they load together.

Hypothesis 1:

Training with a team will result in significantly higher positive affective states.

1.4. Declarative Knowledge

Declarative knowledge is the factual information an individual is able to retain and recall from the videogame-based training [39,40]. While some studies have shown that individuals trained alone demonstrate superior performance compared to individuals trained as a team [41], other researchers have found that interactions with other players during a game create a beneficial environment in which players can guide, explain or clarify things that are misunderstood in the game [42]. Researchers who posit that human interaction aids learning often argue that it does so through increased access to resources and input from others [43]. This is consistent with the Online Knowledge Sharing Model (OKSM), which proposes that individual learning in an online context occurs through online knowledge sharing, which encompasses complex behaviors beyond meeting and discussing the content [43,44,45,46]. This theory states that individuals develop a detailed understanding of the content and its implications through additional interactions, such as observing and mimicking others [47].

Teams can engage in transactive memory, in which the coordination between team members allows them to fill in the knowledge or skill gaps of other team members [12,21]. When teams coordinate their efforts in an effective way, this can positively affect their efficiency, quality of work, and organizational level outcomes, such as profits and board evaluations [12,48]. Researchers have aggregated research evidence to demonstrate that team-based training is effective for numerous positive outcomes, including cognitive outcomes, affective outcomes, teamwork processes and performance outcomes [49]. Overall, the majority of previous studies have found that human interaction has a positive impact on learning outcomes [50,51]. Based on these findings, it is predicted that human interaction will promote learning and training performance during the videogame-based training simulation. Learning and training performance are operationalized as declarative knowledge. It is therefore hypothesized that those trained with a team will have significantly higher levels of knowledge than those trained individually. It is also anticipated that affective states and declarative knowledge will be moderately correlated with one another.

Hypothesis 2:

Training with a team will result in significantly higher declarative knowledge.

1.5. Training Processes

One purpose of the current study is to provide evidence on the processes that occur during the training experience [52]. Some researchers have begun to explore possible mediating relationships when evaluating performance in a game as the process through which affective reactions are experienced [53]. The current study will explore several possible indirect effects related to the relationships between human interaction and two training outcomes (i.e., affective states and declarative knowledge).

Although there has been some support for the direct relationship between videogames and affective states such as motivation [54,55,56], there is little empirical evidence to show indirect relationships between game characteristics and learning outcomes [57]. As discussed above, there is a gap in the research on the exploration of the mechanisms through which game-based learning is experienced. Other researchers have called for more research examining complex relationships between games and learning outcomes [57,58].

Some researchers have described the gaming experience as unique to each individual. There is a consistent belief that a positive user experience in the game is associated with positive outcomes such as enjoyment and motivation [1,59,60]. Previous research has shown that interaction with others while playing a serious game can have a positive impact on different game outcomes [31]. This is likely due in part to various mechanisms that trainees experience during the training process [61,62]. Many researchers have theorized mediating relationships, but few have empirically tested these assertions. As part of this study, several potential processes will be tested to understand which mechanisms mediate the relationship between videogame characteristics and training outcomes.

1.5.1. Perceived Value

Perceived value is the extent to which participants believe the training has instrumental value and would be useful in some way [63]. It is believed that human interaction will lead to increased levels of perceived value, which will in turn improve the participant’s affective states. The first part of this relationship, whereby human interaction will improve perceived value, is supported by previous research showing that teams were more likely to perceive information as valuable when made aware of its uses [64]. In general, the more time individuals have to interact with a team in training, the more opportunities they will have to learn the various uses of the information gained. It is predicted that these interactions with a team will lead to increased perceptions of the value of the training content, as individuals will share their knowledge with their teammates during the activity in order to be successful. In support of the second part of this predicted relationship, whereby perceived value will improve affective states, previous research has shown that demonstrating the instrumental value of an activity to a participant will improve their affective impressions of that activity, which can in turn improve learning [32,65,66]. Based on these findings, it is believed that human interaction will lead to increased levels of perceived value, which will in turn be associated with improved affective states and declarative knowledge.

Hypothesis 3a:

Training with a team will lead to increased affective states through an increase in perceived value.

Hypothesis 3b:

Training with a team will lead to increased declarative knowledge through an increase in perceived value.

1.5.2. Active Learning

Active learning is defined as the active attention an individual gives to the videogame-based training activity, including effort exerted in thinking about or interacting with the components of the videogame [67,68,69]. Evaluating active learning in a videogame-based learning context is valuable given that games are believed to inherently promote interest and draw attention [52,70], which are primary components of active learning. It is argued that human interaction will promote active learning, which will in turn be associated with higher levels of declarative knowledge. Previous research has demonstrated that team interactions can lead to both active participation and improved performance [71,72,73,74]. However, the relationships between these variables will be further examined to determine whether active learning is a mechanism through which human interaction improves learning.

The first part of this proposed indirect effect is that interactions within a team will lead to active learning. This has been supported by previous studies that have demonstrated that working with a team promotes active learning by holding one another accountable for paying attention to the tasks [32,75,76]. Team members are often motivated to encourage team participation, particularly in interdependent tasks when their own success is dependent on the actions and behaviors of their fellow teammates. The second part of this proposed indirect effect is that active learning will enhance learning. Previous research supports this assertion that active learning has a positive association with learning outcomes [24,68]. It is predicted that participants who complete the activity as part of a team will demonstrate significantly higher levels of active learning, which will in turn be associated with higher levels of affective states and declarative knowledge compared with individuals who completed the activity alone.

Hypothesis 4a:

Training with a team will lead to increased affective states through an increase in active learning.

Hypothesis 4b:

Training with a team will lead to increased declarative knowledge through an increase in active learning.
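The logic of the indirect effects in Hypotheses 3 and 4 can be illustrated with a product-of-coefficients sketch. This is not the structural equation model estimated in the study: it is a simplified regression-based illustration with a percentile bootstrap, and the function names and toy data are hypothetical. Here x codes the condition (0 = alone, 1 = team), m is a mediator score, and y is an outcome score.

```python
import random
from statistics import mean


def _cov(u, v):
    """Population covariance of two equally long sequences."""
    mu, mv = mean(u), mean(v)
    return mean([(a - mu) * (b - mv) for a, b in zip(u, v)])


def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a * b.

    a: slope of the mediator m on the condition x.
    b: slope of the outcome y on m, holding x constant.
    Returns None when the paths cannot be estimated (no usable variance).
    """
    vx, vm = _cov(x, x), _cov(m, m)
    cxm, cxy, cmy = _cov(x, m), _cov(x, y), _cov(m, y)
    denom = vx * vm - cxm ** 2
    if vx < 1e-12 or denom < 1e-12:
        return None
    a = cxm / vx
    b = (cmy * vx - cxy * cxm) / denom
    return a * b


def bootstrap_ci(x, m, y, n_boot=1000, seed=0):
    """Percentile 95% interval for the indirect effect (resampling cases)."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        est = indirect_effect([x[i] for i in idx],
                              [m[i] for i in idx],
                              [y[i] for i in idx])
        if est is not None:  # skip degenerate resamples
            estimates.append(est)
    estimates.sort()
    lo = estimates[int(0.025 * len(estimates))]
    hi = estimates[int(0.975 * len(estimates)) - 1]
    return lo, hi


# Hypothetical toy data, not taken from the study.
x = [0, 0, 0, 0, 1, 1, 1, 1]      # 0 = trained alone, 1 = trained with a team
m = [-1, 1, -1, 1, 1, 3, 1, 3]    # mediator score (e.g., active learning)
y = [3 * v for v in m]            # outcome driven only by the mediator

print(indirect_effect(x, m, y))
print(bootstrap_ci(x, m, y, n_boot=200, seed=1))
```

In the toy data the outcome depends on the condition only through the mediator, so the entire effect of x on y is indirect. An interval for a * b that excludes zero is the usual evidence for mediation under this approach.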

2. Materials and Methods

2.1. Power Analysis

A power analysis was conducted using pilot data from a study of 513 participants that used similar, but not identical, construct measures. A single structural equation model was used to test the proposed hypotheses in Mplus 7.4 [77]. The results demonstrated sufficient power to test the study hypotheses (i.e., H1 and H2 need 100 participants for 90% power; H3 and H4 need 200 participants for 80% power). However, it is important to consider that the pilot sample came from a single study, and the current findings may differ.

2.2. Participants

The participants were 389 undergraduate students from a university in the western United States. Participation was voluntary in exchange for class credit. Four participant responses were removed due to incomplete data from technical errors. A final sample of 385 participants remained. All participants were randomly assigned to one of two conditions: 122 individuals completed the training alone, while 263 participants completed the training with a team.

2.3. Procedure

Upon arrival, participants reviewed an online consent form and agreed to participate in the study. Participants completed a self-paced computer-based training (CBT), which covered the skills and knowledge needed to undertake the videogame-based training. Participants then completed survey 1, which comprised demographic questions, a measure of videogame experience, and a pretest of declarative knowledge. Participants were randomly assigned to complete the videogame-based training either alone (i.e., low human interaction) or with a team (i.e., high human interaction). Participants spent 20 min in training, which was recorded, capturing audio and video activity. Participants then completed survey 2, which consisted of a declarative knowledge posttest and the remaining measures described below. Before being dismissed, participants were debriefed with a four-minute video describing the purpose of the study, a practice that has been shown to promote learning [78,79]. The experiment took 60 min to complete.

2.4. Computer-Based Training

The computer-based training (CBT) program was developed using Captivate software. The design followed guidelines from [80]. For development, subject matter experts (SMEs; i.e., those with tens of hours of experience playing the videogame-based training) met and discussed the major goals and objectives in the videogame. The SMEs discussed the knowledge needed to execute the tasks of the game. This discussion produced a list of learning objectives. Content was generated to teach the learning objectives. Below is a segment of the script from the CBT where the learning objectives were reviewed at the beginning of the training. This CBT provided closed captioning for all narration.

“Here is a quick preview of the four topics we will cover in this training. These objectives include: Accessing the Game: Participants will be able to locate and join the ship without assistance. Using the Main Controls: Participants can demonstrate knowledge of main controls and can navigate the main controls screen. Playing the Game Scenarios: Participants will understand the scenarios of the game and the rules and goals for playing. Knowing the Ship Stations: Participants know the purpose and abilities for the different stations of the ship and how to effectively play each station. Our overall goal is to go through some basic information that will help you understand the aspects of the game better and to provide you with strategies that will help you perform better in the game.”

2.5. Team Size

All teams were composed of three individuals. When a third participant was not available, a trained research assistant acted as a confederate so that every team had three members. Although some researchers have defined teams as groups involving two or more individuals [81], others argue that there is a distinction between dyads and teams of three or more [82]. Thus, it was decided that only teams of exactly three would be used, to avoid having team size as a possible confounding variable in the study. The analyses reported in the Results section were repeated using only data from the teams with three participants and no confederates. These analyses did not change the results, indicating that the use of confederates for a portion of the data did not meaningfully affect the findings.

2.6. Videogame-Based Training

The videogame-based training was a science fiction videogame named Quintet [83], in which players assumed the role of crew members aboard a spaceship and carried out different missions. The game required players to learn the different roles available on the ship (i.e., Captain, Helm, Tactical, Engineer, Scientist) and to manage the tasks in each role to meet the different mission objectives (e.g., aid an ally ship in distress, escort a cargo ship transporting materials). Participants scored points for meeting the mission objectives. When played as a team, the game connected team members virtually to communicate and work together, and team members took on different roles. When played individually, the participant switched between roles to complete the different tasks in the game. Researchers followed a script when running the experiment to ensure consistency across iterations of the study.

Several features of this game make it a potentially useful tool for educators. Because the game is freely available online, it is an easily accessed resource. Additionally, the game sets up an open world in which students can become immersed and engaged. Other characteristics of the game make it a likely beneficial experience for students. In particular, the game can serve as a communication-building exercise that promotes teamwork and interpersonal communication. This would be particularly useful in courses that include group projects or other interpersonal skill development. The game lends itself well to this content because the roles of the ship are highly interdependent. Although players could try to play independently, they could not make much progress in the game without discussing and coordinating their actions. For example, while the Helm can control the ship, they cannot use the navigating maps that only the Captain has access to, and while the Captain sees the changing mission objectives, the other crewmates do not know them unless the information is verbally shared. Further, unless the Scientist identifies a type of matter and scans for that material, the scan results will not show up on the maps and cannot be targeted by the Helm. After a few minutes of gameplay, it becomes clear that game progress depends on team members sharing information and coordinating their actions. Students playing the game will benefit from learning how to communicate their experience in the game and coordinate their actions with others in ways that lead to effective outcomes.

2.7. Declarative Knowledge Assessments

Declarative knowledge was measured using a 14-item pretest in survey 1 (difficulty M = 0.63) and a 26-item posttest in survey 2 (difficulty M = 0.67). Because assessment questions tap different objectives, they are heterogeneous domains, and internal consistency is not relevant [84]. Research-based principles from [85,86] were followed to construct the pretest and posttest assessments. Six SMEs generated a pool of 123 multiple-choice items with four response options and one correct answer. Items were removed due to redundancy or item quality. The remaining 113 items were pilot tested with a sample of 28 undergraduate student volunteers who completed the CBT and test in exchange for class credit. Items were reviewed for difficulty, quality of distractors, and relationship to the overall exam score (i.e., point–biserial correlation). Based on these analyses, 48 items were retained and pilot tested with 174 undergraduates. The final list of 38 items demonstrated reasonable difficulty (0.30–0.90) and discrimination (point-biserial r > 0.25). At least two items were retained from each learning objective to ensure content coverage.
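The item screening described above can be sketched as follows. This is a minimal illustration, assuming each item is scored 0 (incorrect) or 1 (correct) and that discrimination is the correlation between the item and the total exam score; the function names and example responses are hypothetical, and only the cutoffs (difficulty between 0.30 and 0.90, point-biserial r above 0.25) come from the text.

```python
from statistics import mean, pstdev


def item_difficulty(item_scores):
    """Proportion of test takers answering the item correctly (0/1 scores)."""
    return mean(item_scores)


def point_biserial(item_scores, total_scores):
    """Pearson correlation between a 0/1 item and the total exam score."""
    mx, my = mean(item_scores), mean(total_scores)
    sx, sy = pstdev(item_scores), pstdev(total_scores)
    if sx == 0 or sy == 0:
        return 0.0  # no variance, so the item cannot discriminate
    cov = mean([(x - mx) * (y - my)
                for x, y in zip(item_scores, total_scores)])
    return cov / (sx * sy)


def keep_item(item_scores, total_scores, p_range=(0.30, 0.90), min_r=0.25):
    """Apply the difficulty and discrimination cutoffs reported in the text."""
    p = item_difficulty(item_scores)
    r = point_biserial(item_scores, total_scores)
    return p_range[0] <= p <= p_range[1] and r > min_r


# Hypothetical responses from six test takers.
item = [1, 1, 1, 0, 0, 1]
totals = [20, 18, 22, 10, 12, 19]
print(item_difficulty(item), point_biserial(item, totals), keep_item(item, totals))
```

An item that nearly everyone answers correctly fails the difficulty cutoff regardless of its discrimination, which is why both criteria are checked together.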

The Internal Referencing Strategy (IRS) was proposed by Haccoun and colleagues [87]. This method was employed to explore possible threats to the internal validity of this design [88,89,90]. The IRS was designed to improve the accuracy of estimating gains in participant knowledge by identifying the degree of change in knowledge for items not expected to change from the training intervention. These content-irrelevant items were included on both assessments and were expected to not change, or change very little between the pretest and posttest. According to this strategy, changes in content-irrelevant items between the pretest and posttest can offer another explanation for score differences, such as the practice effect [87].

For this particular study, team-based processes provided the factual information that participants needed to learn and were then tested on after the training. During gameplay, individuals had to take on different roles. Team members who played as individuals, and who did not communicate or collaborate during the game, may have learned their own role, but would not have learned the functions or shared tasks that collaborative teams would learn. For example, the Captain can see and read the current mission objectives but must communicate those objectives to the team, so that certain actions can be coordinated and executed by the team to meet the objective. If the current mission said to locate a nearby planet with a large composition of a certain mineral, the Helm would need to fly the ship within range of a planet, and the Scientist would need to select that planet, choose what was being scanned for and start the scan. The declarative knowledge assessment included questions such as “Which role on the ship has the ability to scan for certain components?”, which only the Scientist can do. The other roles would only know the Scientist could do this if they had discussed the mission and coordinated their actions. Thus, individuals who scored higher on the declarative knowledge assessment demonstrated greater knowledge of the team’s roles and responsibilities, which indicated teams that had coordinated their actions better.

2.8. Measures

All scales, excluding demographics, used a 5-point Likert-type scale (1 = Strongly Disagree to 5 = Strongly Agree). Phrasing modifications were made to several items to reflect a videogame context rather than work or school. Demographic measures included age, sex, and ethnicity. The measures used in this study are provided in the Supplemental Materials.

2.8.1. Videogame Experience

Videogame experience is the amount of expertise a participant has developed through playing videogames, and the amount of time they have spent doing so. This was measured using a shortened 6-item scale based on the Video Game Pursuit scale developed by [91]. The data produced a reliability of α = 0.94.
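The reliability values reported for the scales in this section appear to be internal-consistency estimates. Assuming they are Cronbach's alpha, the coefficient can be computed from item-level responses as in the sketch below; the function name and example data are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows, one row of item scores per person.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(responses[0])  # number of items in the scale
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical responses from four people to a two-item scale.
example = [[1, 2], [2, 1], [3, 4], [4, 3]]
print(cronbach_alpha(example))
```

The same variance estimator must be used for the items and the totals; the ratio, and therefore alpha, is unchanged whether population or sample variances are used.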

2.8.2. Psychological Meaning

Psychological meaning is defined as the participant’s evaluation of the videogame experience as it relates to their personal value system [26]. This six-item measure was taken from [26]. The reliability was α = 0.88.

2.8.3. Perceived Enjoyment

Perceived enjoyment is defined as the level of satisfaction a participant experienced during the videogame [63]. This three-item scale was taken from [92]. The reliability was α = 0.94.

2.8.4. Motivation

Motivation is defined as the extent to which the participant felt interested in the videogame and would be interested in playing the videogame again in the future. The six-item scale was adapted from the attention subscale in the Instructional Materials Motivation Survey [93]. Six items from the original scale were not applicable to this study and were dropped. The reliability was α = 0.84.

2.8.5. Emotional Engagement

Emotional engagement is defined as the emotional connection the participant felt to the videogame-based training activity they were in [29]. The six-item scale was taken from the emotional subscale of the Job Engagement measure from Rich et al. [29]. Reliability using the study data was α = 0.93.

2.8.6. Perceived Value

Perceived value is the extent to which the participant felt the activities in the videogame-based training were useful and had instrumental value [63]. This six-item scale was adapted from the relevance subscale in the Instructional Materials Motivation Survey [93]. Three items from the original scale were not applicable to the current study and were dropped. The reliability of the scale in this study was α = 0.78.

2.8.7. Active Learning

Active learning is the active attention and involvement devoted to the learning process during the videogame-based training activity [94]. This was measured using the three-item active learning subscale from the Distance Education Learning Environments Survey (DELES) [94]. Reliability for these data was α = 0.83.

3. Results

Participants (n = 385) in the final sample were 51% female and 73% Caucasian, with an average age of 19 years old (SD = 2). A summary of study means, standard deviations, and correlations is provided in Table 1.

Table 1. Means, standard deviations, and correlations for observed study variables.

3.1. Declarative Knowledge Assessment

Using the Internal Referencing Strategy [87,95], there was no significant difference (F(1, 769) = 0.12, p = 0.73, R² < 0.001) in scores on the content-irrelevant items between the pretest (M = 0.22, SD = 0.22) and the posttest (M = 0.22, SD = 0.20). The responses at both time points reflected scores comparable to guessing on each four-option item (close to 25% correct). There was also no significant difference (F(1, 769) = 1.59, p = 0.21, R² < 0.002) between the pretest (M = 0.63, SD = 0.21) and the posttest (M = 0.62, SD = 0.17) on content-relevant items, meaning no significant improvement in declarative knowledge occurred between the pretest and the posttest assessments. These results show that playing the videogame-based training did not improve declarative knowledge beyond the level demonstrated by participants after completing the CBT and the pretest. This lack of change between pretest and posttest scores may be attributed to a number of factors (e.g., effective CBT training, difficult posttest questions), and it may contribute to an inability to detect knowledge differences in the subsequent testing of the hypotheses.

3.2. Assumption of Independence

Participating as a team violated the assumption of independent observations. Intra-class correlations (ICCs) were examined for each measure and ranged from 0.02 to 0.19, indicating that the degree of within-team similarity in responses varied across measures. The data were therefore clustered by team, and the final structural equation model was tested with and without clustering. The outcomes did not change (i.e., results and fit were practically the same for the clustered and unclustered models). For simplicity, the results are reported without clustering.
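For readers unfamiliar with intra-class correlations, the sketch below computes ICC(1) from a one-way analysis of variance. The text does not state which ICC form was used, so this is an assumption; the sketch also assumes equally sized teams, and the function name and example scores are hypothetical.

```python
from statistics import mean


def icc1(teams):
    """ICC(1) from a one-way ANOVA; teams is a list of equally sized lists."""
    n_teams = len(teams)
    k = len(teams[0])  # members per team
    grand_mean = mean([score for team in teams for score in team])
    team_means = [mean(team) for team in teams]
    # Mean square between teams and mean square within teams.
    ms_between = k * sum((tm - grand_mean) ** 2
                         for tm in team_means) / (n_teams - 1)
    ms_within = sum((score - tm) ** 2
                    for team, tm in zip(teams, team_means)
                    for score in team) / (n_teams * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical scores for three teams of three members each.
print(icc1([[4, 5, 4], [2, 3, 2], [3, 3, 4]]))
```

Values near zero mean that teammates are no more alike than members of different teams; larger values mean that more of the response variance is attributable to team membership, which is when clustering matters most.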

3.3. Measurement Model

Analyses in MPlus 7.4 [77] used maximum likelihood estimation to estimate the model fit indices [95]. Analyses applied the overall model fit criteria recommended by Hu and Bentler [96]: Root Mean Square Error of Approximation (RMSEA), with a value <0.08 indicating adequate fit, and Standardized Root Mean Square Residual (SRMR), with a value <0.08 indicating good fit. The Comparative Fit Index (CFI) and the Tucker–Lewis Index (TLI) were also considered, with a value >0.90 on each indicating acceptable fit. The Chi-Square test for model fit was also reviewed, with a non-significant estimate indicating good fit. The measurement model tested whether items loaded onto the factor they were intended to measure. To address concerns of poor fit, items that did not load well onto the scale factor (i.e., λ < 0.30) or that had large item discrepancies (i.e., residual correlations >0.15) with other items in the model were removed [95,97]. Two items were removed from the measure of perceived value, one item from motivation, and one item from perceived enjoyment.

The four affective state factors all loaded onto a second-order factor, with loadings as follows: psychological meaning (λ = 0.86), perceived enjoyment (λ = 0.92), motivation (λ = 0.95) and emotional engagement (λ = 0.92). Each of these constructs similarly indicates a positive affective state regarding the participants’ experience in the game-based training. However, a model collapsing all four scales into a single common factor for affective states did not fit well, indicating that (as expected) they are related but distinguishable constructs. All remaining items loaded onto a single factor for each scale. The final measurement model demonstrated good fit (χ² (1192) = 2461.44, p < 0.001, RMSEA = 0.05 (0.05, 0.06), p = 0.07; CFI = 0.92; TLI = 0.92; SRMR = 0.05).
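The fit criteria described in this section can be applied mechanically to a set of reported indices. The sketch below is a minimal illustration, not part of the original analysis: the function name `check_fit` and the dictionary layout are hypothetical, and the input values are the measurement model indices reported above.

```python
def check_fit(rmsea, srmr, cfi, tli):
    """Compare fit indices with the cutoffs stated in the text.

    Returns a dict mapping each criterion to True (met) or False (not met).
    """
    return {
        "RMSEA < 0.08": rmsea < 0.08,
        "SRMR < 0.08": srmr < 0.08,
        "CFI > 0.90": cfi > 0.90,
        "TLI > 0.90": tli > 0.90,
    }


# Indices reported for the final measurement model.
measurement_fit = check_fit(rmsea=0.05, srmr=0.05, cfi=0.92, tli=0.92)

for criterion, met in measurement_fit.items():
    print(f"{criterion}: {'met' if met else 'not met'}")
```

The Chi-Square test is left out of the sketch because it is judged by its p-value rather than by a fixed cutoff on the statistic.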

3.4. Hypothesis Testing

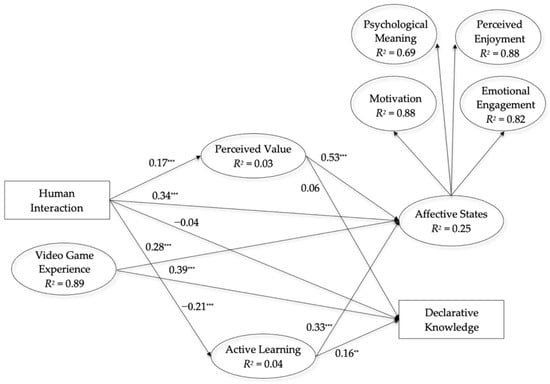

Hypotheses 1–4 were tested in one structural equation model (SEM), controlling for videogame experience (see Figure 1). The overall fit indices were largely within acceptable ranges (χ² (1200) = 2556.71, p < 0.001, RMSEA = 0.05 (0.05, 0.06), p = 0.009; CFI = 0.92; TLI = 0.91; SRMR = 0.11), although the SRMR of 0.11 exceeded the 0.08 criterion. In this model, the two study outcomes (i.e., affective states and declarative knowledge) were not significantly correlated, r = −0.001, p = 0.78. Despite theoretical indication that these outcomes would be related, this was not the case after accounting for the other relationships in the model.

Figure 1.

Standardized Estimates for Direct and Indirect Effects. ** p < 0.01, *** p < 0.001.

3.4.1. Direct Effects

Hypothesis 1 stated that individuals who completed the training with a team would experience significantly higher affective states than individuals who played alone. The results support this hypothesis. The total direct effect of human interaction on affective states was significant (b = 0.17, SE = 0.07, p = 0.01, 95% CI [0.04, 0.30], β = 0.11). The direct effect was also evaluated in the full model, which included the hypothesized mediation paths; this remaining direct effect is the effect after accounting for the indirect effects in the model. The remaining direct effect was also significant, as reported in Table 2, indicating that the effect of human interaction on affective states was only partially mediated by the mediating variables proposed in the model.

Table 2.

Direct and indirect effect results.

Hypothesis 2 stated that individuals who played with a team would demonstrate significantly greater levels of declarative knowledge than individuals who played alone. The results do not support this hypothesis: the direct effect of human interaction on declarative knowledge was significant, but in the opposite direction from what was predicted (b = −0.01, SE = 0.01, p = 0.02, 95% CI [−0.06, −0.006], β = −0.03); individuals who played alone demonstrated significantly higher declarative knowledge. When evaluated in the full model, the remaining direct effect (i.e., the residual direct effect after accounting for the indirect effects in the model) was not significant, as shown in Table 2. This is interpreted to mean that playing the videogame alone had a small but significant direct effect on declarative knowledge only until the indirect effects in the model were accounted for. After accounting for the mediating effects of the process variables, there is no significant direct effect of human interaction on declarative knowledge. The posttest declarative knowledge scores for individuals who played with a team (M = 0.67, SD = 0.17) were not statistically different from the scores for individuals who played alone (M = 0.67, SD = 0.20) when accounting for all the variables in the full model.

3.4.2. Indirect Effects

Hypotheses 3 and 4 predicted indirect effects for the relationship between human interaction and the two outcomes (i.e., affective states and declarative knowledge). The standardized estimates for the structural equation model are provided in Figure 1. An indirect effect was considered statistically significant when its asymmetrical confidence interval (ACI) did not contain zero. This method avoids the loss of statistical power that occurs when a normal distribution is assumed for the product of the two regression slopes, which is typically non-normal [98]. A 95% bias-corrected bootstrapped confidence interval was used, based on 1000 bootstrapped samples, and Pm was examined as an indicator of effect size using Pm = (ab)/c, the ratio of the indirect effect to the total effect [99]. Larger values indicate a larger effect size. Because few studies have applied the Pm value, standardized values indicating small, medium or large effects have not been established.
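The procedure described above can be sketched for a single-mediator model. The code below is an illustrative approximation only: the study estimated these effects within a full structural equation model in MPlus, whereas this sketch uses ordinary least squares on simulated data. All function names, the 0/1 coding of human interaction, and the simulated effect sizes are assumptions made for illustration.

```python
import random
from statistics import NormalDist, fmean


def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    mu, mv = fmean(u), fmean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """Return a*b: a is the slope of M on X, b the slope of Y on M given X."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx
    b = (smy * sxx - sxy * sxm) / (sxx * smm - sxm ** 2)
    return a * b


def bc_bootstrap_ci(x, m, y, n_boot=1000, alpha=0.05, seed=0):
    """Bias-corrected bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimate = indirect_effect(x, m, y)
    boots = []
    while len(boots) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            boots.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # degenerate resample, e.g., only one condition drawn
    boots.sort()
    nd = NormalDist()
    # Bias correction: where the point estimate falls in the bootstrap distribution.
    prop_below = sum(b < estimate for b in boots) / n_boot
    prop_below = min(max(prop_below, 1 / n_boot), 1 - 1 / n_boot)
    z0 = nd.inv_cdf(prop_below)
    p_lo = nd.cdf(2 * z0 + nd.inv_cdf(alpha / 2))
    p_hi = nd.cdf(2 * z0 + nd.inv_cdf(1 - alpha / 2))
    lo = boots[min(n_boot - 1, int(p_lo * n_boot))]
    hi = boots[min(n_boot - 1, int(p_hi * n_boot))]
    return estimate, lo, hi


def proportion_mediated(ab, c):
    """Pm = (ab)/c, the ratio of the indirect effect to the total effect."""
    return ab / c


# Simulated data (hypothetical): x = 0 for playing alone, 1 for playing with a team.
sim = random.Random(1)
x = [i % 2 for i in range(200)]
m = [0.5 * xi + sim.gauss(0, 1) for xi in x]
y = [0.6 * mi + 0.1 * xi + sim.gauss(0, 1) for xi, mi in zip(x, m)]

est, lo, hi = bc_bootstrap_ci(x, m, y)
print(f"indirect effect = {est:.3f}, 95% BC CI [{lo:.3f}, {hi:.3f}]")
```

Because Pm divides by the total effect c, it becomes unstable whenever c is close to zero; in `proportion_mediated`, a small change in a near-zero denominator produces a large change in the ratio.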

Hypotheses H3a and H3b predicted that perceived value would mediate the relationships of human interaction with affective states and declarative knowledge, respectively. The bias-corrected bootstrapped confidence intervals demonstrate that H3a was statistically significant (human interaction → perceived value → affective states, b = 0.14, SE = 0.05, 95% CI [0.05, 0.23]; Pm = 0.37, SE = 0.10, p < 0.001), while H3b was not significant (human interaction → perceived value → declarative knowledge, b = 0.004, SE = 0.01, 95% CI [−0.01, 0.01]; Pm = 0.34, SE = 0.64, ns). Hypotheses H4a and H4b predicted that active learning would mediate the relationships of human interaction with affective states and declarative knowledge, respectively. The bias-corrected bootstrapped confidence intervals demonstrate that H4a was statistically significant (human interaction → active learning → affective states, b = −0.10, SE = 0.03, 95% CI [−0.16, −0.04]; Pm = −0.25, SE = 0.10, p = 0.01), while H4b was not supported (human interaction → active learning → declarative knowledge, b = −0.01, SE = 0.01, 95% CI [−0.02, −0.002]; Pm = 2.00, SE = 5.56, p = 0.72). This effect (Pm = 2.00) is not significant because the direct effect is close to 0, c = −0.02, making the value unreliable. Although there appears to be some evidence that playing the game alone will lead to higher active learning, which in turn will have a positive association with declarative knowledge, this effect appears to be negligible.

Overall, these findings demonstrate that human interaction has a direct impact on affective states, with participants who play the videogame with a team having a significantly more positive experience than individuals who play the game alone. However, there is no difference in declarative knowledge between teams and individuals when accounting for other variables in the model. The results also demonstrate that the processes through which human interaction influences affective states are different from the processes through which human interaction influences declarative knowledge. Together, these results indicate that both perceived value and active learning mediate the relationship between human interaction and affective states, with more human interaction leading to more positive affective states through perceived value, and less human interaction leading to more positive affective states through active learning. However, declarative knowledge was only impacted through active learning, which was a small effect. This provides valuable evidence for future researchers in supporting the assertion that thoughtful design needs to be considered regarding the intended outcomes of the training, as the mechanisms in the game processes will affect training outcomes differently.

4. Discussion

The purpose of this paper was to explore the impact of human interaction in a videogame-based training tool on participant outcomes. It further sought to understand the possible mechanisms that mediate this relationship (i.e., between game characteristics and training outcomes). To accomplish this purpose, individuals who played the training videogame alone were compared with those who played the training videogame with a team. Measures of the training process (e.g., perceived value of the training) and outcomes (affective states and declarative knowledge) were gathered. The primary findings demonstrate that after accounting for the indirect effects in the model, human interaction had a significant direct effect on affective states, but not on declarative knowledge. Participants who trained as part of a team reported significantly greater levels of positive affective states (indicated by psychological meaning, perceived enjoyment, motivation and emotional engagement), but no significant difference in declarative knowledge. Further analyses show that game-based training with a team impacted the affective states of players through the mechanisms of perceived value and active learning. Additionally, active learning mediated the relationship between human interaction and declarative knowledge.

This study was developed in response to the mounting number of contradictory findings regarding the effectiveness of videogames as a learning tool. While some studies show that videogames are effective tools for promoting learning, e.g., [100], other studies have failed to find such effects [101]. Recent research has called for studies to examine the variables that lead to effective game-based learning [102]. Some researchers have stated that there is a need for specific studies that isolate game elements to determine their effects [103]. However, researchers have been calling for these studies since the mid-1980s, e.g., [104], leading some researchers to criticize the lack of progress that has been made in the field over the last three decades [105]. Given the amount of time and money invested in producing games for learning, it is apparent that a resolution to this contradictory research is needed.

It is important for game-based research to address these challenges by conducting high-quality studies that examine how game characteristics impact learning. The current study provided an expanded version of the game-based learning model [10] and addressed current challenges in the research literature. Specifically, one game characteristic (i.e., human interaction) that has been proposed to influence training outcomes was isolated. Several potential variables (i.e., perceived value and active learning) were further tested as possible mechanisms through which game-based learning influences training outcomes. Finally, two training outcomes (i.e., affective states and declarative knowledge) were measured to observe differences between these outcomes. This is a valuable contribution to the research literature, given the ongoing debate regarding the effectiveness of using game-based learning as a tool to train individuals. This model followed a new approach to the research question, exploring the context in which game-based learning leads to improved training outcomes.

The current study indicated that game characteristics may influence training outcomes. The results show that human interaction significantly impacted affective states. Those who played the game with a team experienced significantly more positive affective states than individuals who played the game alone. This is consistent with previous research that has shown that many individuals prefer to work with a group over alone [106], and that working with a team can lead to positive affective outcomes [107]. This means that individuals will likely experience more positive affective outcomes from the training if they are allowed to interact with other individuals as they play the game. Thus, designing or selecting a game with a multiplayer option may be beneficial in applications in which the goal is to improve a player’s affective state. It is not clear whether other game characteristics can improve affective states; this warrants further exploration. Human interaction did not have a direct effect on declarative knowledge after accounting for the indirect effects in the model. When examining the posttest scores, there was no significant difference in declarative knowledge between groups (i.e., those who played alone and those who played with a team).

4.1. Implications and Future Research

Re-examining the primary outcomes of the current study highlights several implications for future research and indicates areas in which additional research is needed.

4.1.1. Impact of Game Characteristics

The results of this study demonstrate that game characteristics can have an impact on training outcomes. Because only one game characteristic (i.e., human interaction) was explored in the current study, more research is needed to clarify the effects of other game characteristics and their impacts on other training outcomes. However, it is still clear that game characteristics can impact training outcomes, and should be considered from a design perspective for future game-based learning tools. Examining other game characteristics could shed light on the complex relationships between game characteristics, mediating variables, and training outcomes, e.g., [74]. For example, the game characteristic assessment (characterized by the extent of feedback an individual receives during the training) could have a greater impact on mediating factors such as active learning and cognitive engagement, which could then lead to a greater effect on learning outcomes. By only examining one game characteristic (i.e., human interaction), the ability to find the relationships that were hypothesized may have been limited. Thus, another research opportunity is for other studies to systematically explore multiple game characteristics across studies, while tracking the characteristics and combinations of characteristics that produce the strongest effect. This would benefit researchers and practitioners by clarifying which characteristics they may choose to include in the design of a game, and what combinations would best match the unique goals of the training.

Since game characteristics can have an impact on training outcomes, it is concerning when researchers do not disclose sufficient information about a game for the game characteristics to be inferred. A primary benefit of disclosing detailed information about a game is that the effects of the game characteristics can be identified. Without considering the effects of the game characteristics, the findings from the study may be misattributed to other factors (e.g., condition assignment). Thus, future researchers should disclose information about the game in sufficient detail that the game characteristics can be identified.

Another beneficial practice would be describing the game characteristics using a common framework or taxonomy. Using a standardized framework to describe game characteristics would help future researchers understand and synthesize the results of game-based learning across studies. This would allow more accurate comparisons and interpretations to be made across studies, and would provide a clearer picture of the impact of game characteristics within the larger context of game-based learning. However, research synthesis and cumulative research (building on what others have found) are limited by poor descriptions or mischaracterizations of game characteristics by researchers or game manufacturers. Research would benefit from the disclosure of game characteristics using a standard framework, such as presenting a profile of game characteristics.

One primary benefit of expanding the literature on game characteristics is that research findings can lead to intentional game design decisions, such as using specific game characteristics or combinations of characteristics to meet training objectives. The goal of understanding game characteristics is that research findings can inform the future design and development of videogames used for learning. As scientific findings provide clear evidence about the game characteristics that lead to specific training outcomes, games can be customized by developers or chosen by clients specifically for the intended training outcomes. An example from the present study is that a game designed to include a multiplayer option would lend itself to a training intention of improved affective states. Previous research has demonstrated that specific training design can influence training outcomes [108], and it is advantageous to consider research findings during the development phase to inform the decisions made regarding a training program.

4.1.2. Human Interaction

In the current study, human interaction led to decreased levels of active learning. This is potentially due to the additional attention that team members paid to interacting with others when collaborating and coordinating their efforts. In this study, participants interacted only once for 20 min. It is possible that the initial interaction detracts from the attention paid to the learning process, but that later interactions may be different, once teams have had sufficient opportunity to develop collaborative relationships. This may indicate that teams need additional time in game-based training to interact and be effective, which is consistent with previous research that has shown that the benefits of teams may take time and multiple interactions to emerge [109]. Practitioners could design training with a multiplayer component to allow extra time for team interaction, to emphasize the importance of active learning, or to allow the team ample time to prepare before the game (e.g., preparing a strategy of working together).

Other social mechanisms could contribute to the differences observed between individuals and teams, such as diffusion of responsibility [110]. That is, participants playing with a team may not feel that they are responsible for learning all the components of the game if they have others who they can depend on to help them be successful during the game. Although participants were warned they would complete a test at the end of the study, they may still have experienced this diffusion when working with other team members. It would be valuable for future researchers to more closely observe the social interactions and experiences that teams are having in the game, and to explore how these interactions impact learning outcomes. Increasing awareness of the processes that occur during the game cycle and building in new features to the game may change the training process and improve training outcomes.

4.1.3. Mechanisms in the Game Cycle

Based on the game-based learning model [10], inputs are believed to impact training outcomes via various training processes in the game cycle. In previous research, these game cycle mechanisms have been considered a black box of training processes [111]. Other researchers have identified the need to examine variables that may exist in this black box of game cycle processes and that mediate or moderate the relationships between game characteristics and training outcomes [112]. However, there is little empirical research examining these mechanisms. Therefore, several mechanisms that were expected to exist in this game cycle were explored.

It was found that perceived value mediated the relationship between human interaction and affective states. This is consistent with previous research, which has shown that understanding the value of a training program has an important association with participants’ experience and the outcomes of the training [64]. In one previous study, researchers found that perceived value of the learning process led to increased levels of participant enjoyment [113]. It was also found that active learning is a mechanism through which human interaction impedes both affective states and declarative knowledge. Thus, the current study was one of the first to identify specific game processes that are influenced by game characteristics.

Despite the complications in studying the game cycle, it is important to evaluate the user experience so as to understand the role it plays in affecting training outcomes [1]. The cycle of game elements leading to a unique user experience, which influences training outcomes, has been described as a feedback loop that can be designed to lead to beneficial states such as happiness, engagement, flow, and satisfaction [1,114,115]. Thus, it is important to understand the processes from a design perspective, in order to help optimize the user experience and the effectiveness of the training towards the intended outcomes. It would be valuable for game designers to intentionally build in opportunities to enhance the game cycle according to the intended goals of the training.

One critical next step for future research on game-based learning for workplace outcomes is for researchers to study the actual games that organizations are using to develop employee knowledge and skills. Empirical studies of games used in business environments rarely examine the games used by training practitioners. There may be a number of reasons for this. Understandably, concerns about sharing proprietary information or programs may limit a company’s willingness to share its training products for scientific exploration. However, it would benefit both practitioners and researchers to generate evidence on the effectiveness of game-based learning using the actual games used in organizations. At present, many researchers use games that are readily available (e.g., commercial games, entertainment-based games) as proxies for the training games used by organizations. There is a dearth of research studying the game-based tools that organizations are using today. In this study and other similar studies, the generalizability of the results is limited by the tools being used in the study. The present study used a game focused on building teams of individuals to coordinate their efforts on a ship in order to meet specific mission objectives. An immediate concern with this game is that participants will rarely see any real-world value in developing their skills in this game. Not only does the game lack face validity for real-world application, but the skills the participants are developing (e.g., communication and knowledge sharing) are secondary skills, and not the primary focus of the game. Future studies could address this concern by studying games currently being used within workplace settings. This would allow clearer connections to be made between the research and the application of the results.

4.1.4. Links to Job Performance

Finally, future research should establish a clear link between game-based training and job performance. The current study demonstrates that human interaction impacts affective states and declarative knowledge, but stops short of showing impacts on job performance. Prior research has demonstrated a positive correlation between declarative knowledge and transfer of training, but not as clearly between affective reactions and transfer of training [116]. There is some theoretical support for the statement that some positive affective states experienced in the training (e.g., motivation) can benefit the acquisition of declarative knowledge, which may increase the likelihood that transfer of training will occur [117]. However, there are many factors that affect transfer, such as workplace support, and opportunity to practice and apply the skill [117]. Considering the number of factors that can influence this outcome, it is important to gather direct transfer measures rather than relying on the relationship between transfer of training and other available measures. Thus, there is little that can be said regarding the generalizability of the current results to direct work-related outcomes, such as job performance. However, future researchers could design their studies to demonstrate these connections. Using a real-world sample of workers would strengthen this area of research and provide stronger evidence about the effectiveness of game-based learning for transfer of training and job performance. There are several benefits that would come with using a real-world sample. For example, student participants may be less motivated to pay attention and do their best in a lab experiment because there is little incentive for them to put forth effort in the study. In addition, workers have previous skills and experiences that could be applicable to the experiment, and could influence the results of the study in a meaningful way. Thus, these differences between a subject pool of students and a sample of workers could impact the results, and it is important to recognize that future studies using real-world samples may benefit from these differences and provide a more accurate indication of game-based learning in an organizational setting.

A design in which researchers coordinate with an organization to deliver game-based training to employees and then gather job performance data for those employees would clearly show the impact of the training on relevant work-related outcomes such as job performance. Providing evidence about the implications of game-based training for job performance can help an organization better understand the return on investment for game-based learning. A major limitation of existing research in this area, and of the current study, is that implications for transfer of training and job performance are being inferred using proxy measures of learning (e.g., declarative knowledge). Being able to draw these connections directly could aid organizations in making decisions about investing in and developing future game-based learning programs. Thus, the current platform of using a lab-based experiment limits the generalizability of these results, as the measures and methodologies did not resemble the real-world context.

4.2. Limitations

There are several important factors to consider when interpreting the results of the current study. One concerns conclusions of causality. Because human interaction was manipulated, there is some basis for arguing for a causal effect of a game characteristic on the outcome variables (i.e., affective states and declarative knowledge). However, because the mediating variables and outcome variables were measured simultaneously, causal inferences cannot be drawn for any mediating relationships. While the mediating and outcome variables were related in the current study, there is not enough evidence to show that the mediators occurred prior to the training outcomes, or that these variables were the process through which human interaction affected the training outcomes. Ideally, future studies examining gameplay processes would measure potential mediators during the flow of the game. For example, the researchers could pause the game and measure the potential mechanisms, or utilize a think-aloud protocol to capture participants’ thoughts during the training. Another alternative could be a repeated-measures design, in which participants complete multiple iterations of the game and report on the process variables between these interactive periods. These methods would better align the measurement of the potential mediator with the time point at which it operates, and strengthen the inference that the mediators have a causal impact on the training outcomes.

Further, as noted above, although the paths from the mediating variables to the outcomes were generally significant and in the predicted direction, the paths from human interaction to the mediating variables were weak. It may be that the human interaction manipulation was not robust enough to create the expected main effects. Specifically, the experiences of those who played alone and those who played with a group may have been too similar. The experiment could have been redesigned to allow multiple iterations of the game. With several phases of team interaction, the distinctions between the individual and the team group may have been clearer and may have had a stronger effect on the tested process variables.

An important consideration when making the comparison between individuals and teams is that a multilevel analysis is a more robust approach to analyzing the data. As reported above, a clustered version of the model that accounted for team membership was analyzed, and no significant changes in the outcomes were found. Because of this, the results were presented without clustering by teams, which does violate the assumption of independence. This decision was made for simplicity, but it would still benefit future research to account for group membership, and to cluster the data analysis using a multilevel analysis approach.

A final consideration is that many of the variables in the current study were similar in nature, and highly related to one another. A substantial amount of overlap between the measured constructs was found. This made it challenging to distinguish the constructs in the measurement model, which likely contributed to the number of items that needed to be removed from the different scales (e.g., motivation). The modifications to the validated scales may impact the results. It is unclear what unintended consequences could have resulted from removing content that was too similar to the content of other variables in the study. These changes may have been meaningful to the constructs or the outcomes. However, previous research has shown that game features are highly interdependent and difficult to separate [11]. It is important to remember that this is a single study, and replication of this methodology is needed for further confidence in the results.

5. Conclusions

Based on the current findings, it appears that there are potential benefits to be derived from continuing to explore games and similar technology [118] as potential interventions [119], including use as a training tool. The current findings demonstrate that the game characteristic of human interaction has a significant positive effect on affective states, and only a small effect on declarative knowledge that operated through active learning. Additionally, perceived value and active learning were important mechanisms through which human interaction impacted affective states. These emerging areas of research show there are potential avenues for continued research in the application of game-based training and education.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/mti6040026/s1, Material S1: Learning objectives and sub-objectives for the Computer-Based Training (CBT); Material S2: Procedures for experiment with final sample of participants; Material S3: Survey 1; Material S4: Survey 2; Video S1: Computer-Based Training.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Colorado State University (protocol code 16-6538HH, date of approval 4 January 2016).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data for this study are available upon request from the author.

Acknowledgments

I would like to thank Kurt Kraiger, Alyssa Gibbons, Travis Maynard, Lucy Troup, and Mark Prince for their feedback and comments, which provided invaluable guidance on this manuscript.

Conflicts of Interest

The author declares no conflict of interest.

References

- Calvillo-Gámez, E.H.; Cairns, P.; Cox, A.L. Assessing the core elements of the gaming experience. In Evaluating User Experience in Games; Bernhaupt, R., Ed.; Springer International Publishing: London, UK, 2015; pp. 37–62.

- Hays, R.T. The Effectiveness of Instructional Games: A Literature Review and Discussion; Document No. NAWCTSD-TR-2005-004; Naval Air Warfare Center Training Systems Division: Orlando, FL, USA, 2005.

- Pagulayan, R.J.; Keeker, K.; Fuller, T.; Wixon, D.; Romero, R.L. User-centered design in games. In The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications, 2nd ed.; Sears, A., Jacko, J.A., Eds.; Lawrence Erlbaum Associates: New York, NY, USA, 2008; pp. 741–760.

- Guillén-Nieto, V.; Aleson-Carbonell, M. Serious games and learning effectiveness: The case of “It’s a Deal!”. Comput. Educ. 2012, 58, 435–448.

- Mayo, M.J. Games for science and engineering education. Commun. ACM 2007, 50, 30–35.

- Muntean, C.I. Raising Engagement in E-Learning Through Gamification. In Proceedings of the International Conference on Virtual Learning, Cluj-Napoca, Romania, 29 October 2011; pp. 323–329.

- By the Numbers: 10 Stats on the Growth of Gamification. Available online: http://www.gamesandlearning.org/2015/04/27/by-the-numbers-10-stats-on-the-growth-of-gamification/ (accessed on 12 April 2017).

- Coller, B.D.; Scott, M.J. Effectiveness of using a video game to teach a course in mechanical engineering. Comput. Educ. 2009, 53, 900–912.

- Wrzesien, M.; Raya, M.A. Learning in serious virtual worlds: Evaluation of learning effectiveness and appeal to students in the E-Junior project. Comput. Educ. 2010, 55, 178–187.

- Garris, R.; Ahlers, R.; Driskell, J.E. Games, motivation, and learning: A research and practice model. Simul. Gaming 2002, 33, 441–467.

- Bedwell, W.L.; Pavlas, D.; Heyne, K.; Lazzara, E.H.; Salas, E. Toward a taxonomy linking game attributes to learning: An empirical study. Simul. Gaming 2012, 43, 729–760.

- Kraut, R.; Fussell, S.; Lerch, F.; Espinosa, A. Coordination in teams: Evidence from a simulated management game. Hum.-Comput. Interact. Inst. 2005, 1–58.

- Brodbeck, F.C.; Greitemeyer, T. Effects of individual versus mixed individual and group experience in rule induction on group member learning and group performance. J. Exp. Soc. Psychol. 2000, 36, 621–648.

- Laughlin, P.R.; Bonner, B.L.; Miner, A.G. Groups perform better than the best individuals on letters-to-numbers problems. Organ. Behav. Hum. Decis. Processes 2002, 88, 605–620.

- Laughlin, P.R.; Zander, M.L.; Knievel, E.M.; Tan, T.K. Groups perform better than the best individuals on letters-to-numbers problems: Informative equations and effective strategies. J. Personal. Soc. Psychol. 2003, 85, 684–694.

- Liang, D.W.; Moreland, R.; Argote, L. Group versus individual training and group performance: The mediating role of transactive memory. Personal. Soc. Psychol. Bull. 1995, 21, 384–393.

- Sanchez, D.R.; Van Lysebetten, S.; Gibbons, A.M. Adapting simulation responses from judgment-based to analytic-based scores: A process model, case study, and empirical evaluation of managers’ responses among a sample of managers. Psychol.-Manag. J. 2017, 20, 18–47.

- Laughlin, P.R.; Carey, H.R.; Kerr, N.L. Group-to-individual problem-solving transfer. Group Processes Intergroup Relat. 2008, 11, 319–330.

- Maciejovsky, B.; Sutter, M.; Budescu, D.V.; Bernau, P. Teams make you smarter: How exposure to teams improves individual decisions in probability and reasoning tasks. Manag. Sci. 2013, 59, 1255–1270.

- Gibson, C.B.; Cohen, S.G. Virtual Teams That Work: Creating Conditions for Virtual Team Effectiveness; Jossey-Bass: San Francisco, CA, USA, 2003.

- Moreland, R.L.; Myaskovsky, L. Exploring the performance benefits of group training: Transactive memory or improved communication? Organ. Behav. Hum. Decis. Processes 2000, 82, 117–133.

- Maynard, T.; Mathieu, J.; Gilson, L.; Sanchez, D.R. Do I really know you and does it matter? Unlocking the relationship between familiarity and information elaboration in global virtual teams. Group Organ. Manag. 2018, 44, 3–37.

- Kozlowski, S.W.; Bell, B.S. Team learning, development, and adaptation. In Work Group Learning: Understanding, Improving & Assessing How Groups Learn; Sessa, V.I., London, M., Eds.; Lawrence Erlbaum Associates: New York, NY, USA, 2008; pp. 15–44.

- Ford, J.K.; Smith, E.M.; Weissbein, D.A.; Gully, S.M.; Salas, E. Relationships of goal orientation, metacognitive activity, and practice strategies with learning outcomes and transfer. J. Appl. Psychol. 1998, 83, 218–233.

- Curşeu, P.L. Emergent states in virtual teams: A complex adaptive systems perspective. J. Inf. Technol. 2006, 21, 249–261.

- May, D.R.; Gilson, R.L.; Harter, L.M. The psychological conditions of meaningfulness, safety and availability and the engagement of the human spirit at work. J. Occup. Organ. Psychol. 2004, 77, 11–37.

- Plass, J.L.; O’Keefe, P.A.; Homer, B.D.; Case, J.; Hayward, E.O.; Stein, M.; Perlin, K. The impact of individual, competitive, and collaborative mathematics game play on learning, performance, and motivation. J. Educ. Psychol. 2013, 105, 1050–1066.

- Ponce, E.R. Evaluation Student Satisfaction: Measurement of Training and Job Satisfaction of Former Vocational Education Students; National Center for Research in Vocational Education: Columbus, OH, USA, 1981.

- Rich, B.L.; Lepine, J.A.; Crawford, E.R. Job engagement: Antecedents and effects on job performance. Acad. Manag. J. 2010, 53, 617–635.

- Rieber, L.P. Seriously considering play: Designing interactive learning environments based on the blending of microworlds, simulations, and games. Educ. Technol. Res. Dev. 1996, 44, 43–58.

- Bernhaupt, R. Evaluating User Experience in Games; Springer: London, UK, 2010.

- Blair, L. The Use of Video Game Achievements to Enhance Player Performance, Self-Efficacy, and Motivation. Ph.D. Thesis, University of Central Florida, Orlando, FL, USA, 2011.

- Leung, A. A conceptual model of information technology training leading to better outcomes. Int. J. Bus. Inf. 2015, 1, 74–95.

- Patrick, J. Training Research and Practice; Academic Press: San Diego, CA, USA, 1992.

- Tannenbaum, S.I.; Yukl, G. Training and development in work organizations. Annu. Rev. Psychol. 1992, 43, 399–441.

- Warr, P.; Bunce, D. Trainee characteristics and the outcomes of open learning. Pers. Psychol. 1995, 48, 347–375.

- Alliger, G.M.; Tannenbaum, S.I.; Bennett, W.; Traver, H.; Shotland, A. A meta-analysis of the relations among training criteria. Pers. Psychol. 1997, 50, 341–358.

- Morgan, R.B.; Casper, W.J. Examining the factor structure of participant reactions to training: A multidimensional approach. Hum. Resour. Dev. Q. 2000, 11, 301–317.

- Alexander, P.A.; Judy, J.E. The interaction of domain-specific and strategic knowledge in academic performance. Rev. Educ. Res. 1988, 58, 375–404.

- Anderson, J.R. The Architecture of Cognition; Harvard University Press: Cambridge, MA, USA, 1983.

- Dillon, P.C.; Graham, W.K.; Aidells, A.L. Brainstorming on a “hot” problem: Effects of training and practice on individual and group performance. J. Appl. Psychol. 1972, 56, 487–490.

- Morrison, K.E. The multiplication game. Math. Mag. 2010, 83, 100–110.

- Ma, W.W.; Yuen, A.H. Understanding online knowledge sharing: An interpersonal relationship perspective. Comput. Educ. 2011, 56, 210–219.

- Argote, L. Organizational Learning: Creating, Retaining and Transferring Knowledge; Kluwer Academic Publishers: Boston, MA, USA, 1999.

- Darr, E.D.; Kurtzberg, T.R. An investigation of partner similarity dimensions on knowledge transfer. Organ. Behav. Hum. Decis. Processes 2000, 82, 28–44.

- Ko, D.-G.; Kirsch, L.J.; King, W.R. Antecedents of knowledge transfer from consultants to clients in enterprise system implementations. Manag. Inf. Syst. Q. 2005, 29, 59–85.

- Ramos, C.; Yudko, E. “Hits” (not “discussion posts”) predict student success in online courses: A double cross-validation study. Comput. Educ. 2008, 50, 1174–1182.

- Gittell, J.H. Coordinating mechanisms in care provider groups: Relational coordination as a mediator and input uncertainty as a moderator of performance effects. Manag. Sci. 2002, 48, 1408–1426.

- Salas, E.; DiazGranados, D.; Klein, C.; Burke, C.S.; Stagl, K.C.; Goodwin, G.F.; Halpin, S.M. Does team training improve team performance? A meta-analysis. Hum. Factors 2008, 50, 903–933.

- De Freitas, S.; Routledge, H. Designing leadership and soft skills in educational games: The e-leadership and soft skills educational games design model (ELESS). Br. J. Educ. Technol. 2013, 44, 951–968.

- MacCallum-Stewart, E. Stealth learning in online games. In Digital Games and Learning; de Freitas, S., Maharg, P., Eds.; Continuum Press: New York, NY, USA, 2010; pp. 107–128. Available online: https://www.bloomsbury.com/us/digital-games-and-learning-9781441198709/ (accessed on 27 January 2022).

- Adams, D.M.; Mayer, R.E.; MacNamara, A.; Koenig, A.; Wainess, R. Narrative games for learning: Testing the discovery and narrative hypotheses. J. Educ. Psychol. 2012, 104, 235–249.

- Trepte, S.; Reinecke, L. The pleasures of success: Game-related efficacy experiences as a mediator between player performance and game enjoyment. Cyberpsychol. Behav. Soc. Netw. 2011, 14, 555–557.

- Coller, B.D.; Shernoff, D.J.; Strati, A.D. Measuring Engagement as Students Learn Dynamic Systems and Control with a Video Game. Adv. Eng. Educ. 2011, 2, 1–32. Available online: https://files.eric.ed.gov/fulltext/EJ1076061.pdf (accessed on 27 January 2022).

- Derouin-Jessen, R.E. Game On: The Impact of Game Features in Computer-Based Training. Ph.D. Thesis, University of Central Florida, Orlando, FL, USA, 2008.

- Liu, C.C.; Cheng, Y.B.; Huang, C.W. The effect of simulation games on the learning of computational problem solving. Comput. Educ. 2011, 57, 1907–1918.

- Wilson, K.A.; Bedwell, W.L.; Lazzara, E.H.; Salas, E.; Burke, C.S.; Estock, J.L.; Orvis, K.L.; Conkey, C. Relationships between game attributes and learning outcomes: Review and research proposals. Simul. Gaming 2009, 40, 217–266.

- Landers, R.N. Developing a theory of gamified learning linking serious games and gamification of learning. Simul. Gaming 2014, 45, 752–768.

- Lankes, M.; Bernhaupt, R.; Tscheligi, M. Evaluating user experience factors using experiments: Expressive artificial faces embedded in contexts. In Evaluating User Experience in Games; Bernhaupt, R., Ed.; Springer: London, UK, 2010; pp. 165–183.

- Lemay, P.; Maheux-Lessard, M. Investigating experiences and attitudes toward videogames using a semantic differential methodology. In Evaluating User Experience in Games; Bernhaupt, R., Ed.; Springer: London, UK, 2010; pp. 89–105.

- Oksanen, K.; Hämäläinen, R. Game mechanics in the design of a collaborative 3D serious game. Simul. Gaming 2014, 45, 255–278.

- Von Der Pütten, A.M.; Klatt, J.; Ten Broeke, S.; McCall, R.; Krämer, N.C.; Wetzel, R.; Blum, L.; Oppermann, L.; Klatt, J. Subjective and behavioral presence measurement and interactivity in the collaborative augmented reality game TimeWarp. Interact. Comput. 2012, 24, 317–325.

- Adomaityte, A. Predicting Post-Training Reactions from Pre-Training Attitudes. Ph.D. Thesis, University of Montreal, Montreal, QC, Canada, 2013.

- Lingard, L.; Whyte, S.; Espin, S.; Ross Baker, G.; Orser, B.; Doran, D. Towards safer interprofessional communication: Constructing a model of “utility” from preoperative team briefings. J. Interprofessional Care 2006, 20, 471–483.

- Lepper, M.R.; Gilovich, T. Accentuating the positive: Eliciting generalized compliance from children through activity-oriented requests. J. Personal. Soc. Psychol. 1982, 42, 248–259.

- Shernoff, D.J.; Csikszentmihalyi, M.; Schneider, B.; Shernoff, E. Student engagement in high school classrooms from the perspective of flow theory. Sch. Psychol. Q. 2003, 18, 158–176.

- Bonwell, C.C.; Eison, J.A. Active Learning: Creating Excitement in the Classroom; ERIC Clearinghouse on Higher Education: Washington, DC, USA, 1991.

- Brown, K.G. Using computers to deliver training: Which employees learn and why? Pers. Psychol. 2001, 54, 271–296.

- Prince, M. Does active learning work? A review of the research. J. Eng. Educ. 2004, 93, 223–231.