Digital Escape Rooms as Game-Based Learning Environments: A Study in Sex Education

Abstract

1. Introduction

1.1. Game-Based Learning

- During the game, learners must identify one or more problems and the content areas those problems cover.

- Learners must develop different solution and action strategies.

- Learners must select a problem-solving strategy.

- Learners must respond to or make use of feedback from the learning environment.

- Learners must pursue useful strategies.

- Learners must modify less helpful strategies.

1.2. Escape Rooms

1.3. Open Research Questions

2. Materials and Methods

2.1. Sample

2.2. Design

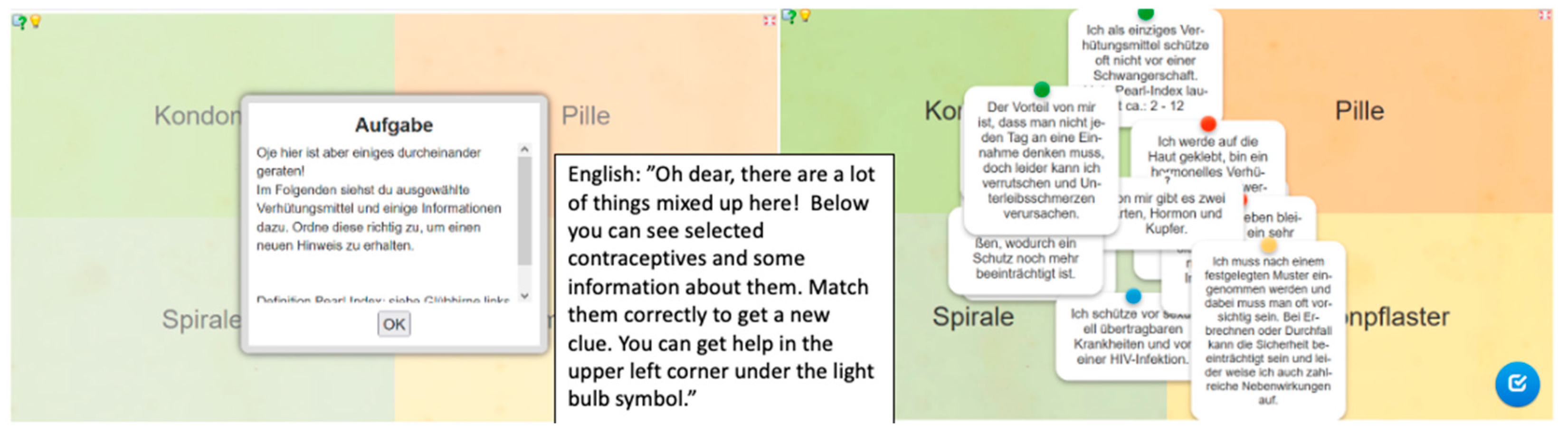

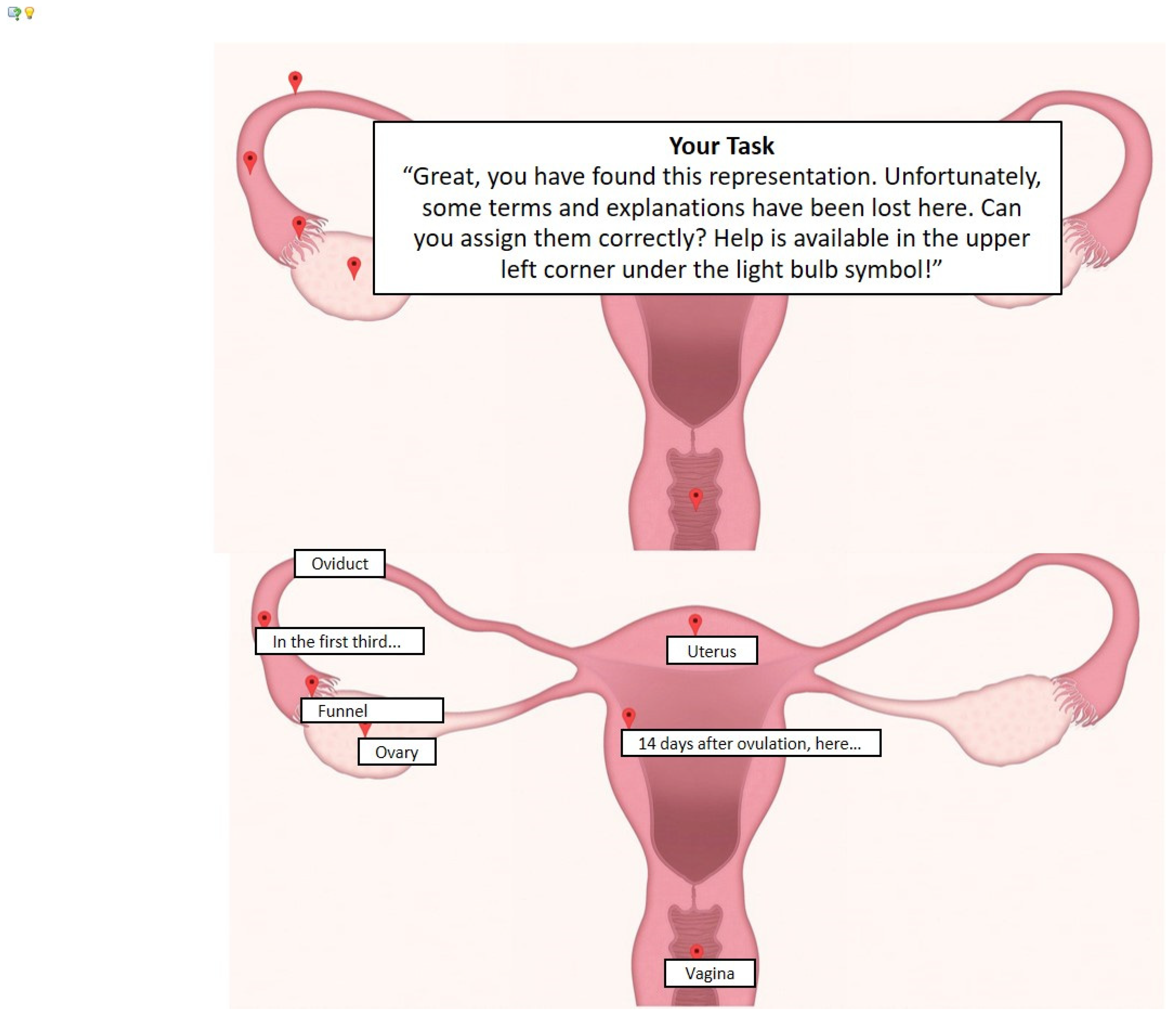

2.3. Material

Learning Environments

2.4. Instruments

2.5. Procedure

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Without Support (n = 42), Pre-Test | Without Support (n = 42), Post-Test | With Support (n = 42), Pre-Test | With Support (n = 42), Post-Test |
|---|---|---|---|---|
| Last grade in biology | 1.62 (0.76) | N/A | 1.93 (1.05) | N/A |
| Situational interest | 4.11 (0.61) | 4.31 (0.55) | 3.79 (0.88) | 4.04 (0.79) |
| Knowledge test | 2.30 (2.23) | 8.74 (3.19) | 2.23 (2.63) | 7.82 (3.41) |
| Knowledge-related self-confidence | 2.79 (1.09) | 3.86 (0.72) | 2.36 (1.10) | 3.31 (0.84) |
| Experience of immersion | N/A | 4.10 (0.79) | N/A | 3.98 (0.90) |
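As a reading aid for the descriptive statistics, the following minimal sketch shows how a standardized mean difference (Cohen's d with a pooled standard deviation) could be derived from summary values such as those in the table. It assumes the values in parentheses are standard deviations, which the table itself does not state. The function name `cohens_d` and the choice of effect-size measure are illustrative assumptions and are not part of the authors' reported analysis.

```python
import math


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference between two independent groups,
    using the pooled standard deviation (hypothetical helper)."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


# Post-test knowledge scores: without support vs. with support (n = 42 each).
# Assumption: the parenthesized table values are standard deviations.
d_knowledge = cohens_d(8.74, 3.19, 42, 7.82, 3.41, 42)
print(round(d_knowledge, 2))  # prints 0.28
```

A positive value here would indicate a higher post-test mean in the group without support. The figure is only a descriptive illustration and does not replace the inferential statistics reported in the second table.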

| Effect | F | p | ηp² |
|---|---|---|---|
| Between-group comparison | 1.43 | 0.23 | 0.07 |
| Last grade in biology | 2.43 | 0.06 | 0.12 |
| Situational interest | 6.82 | <0.001 | 0.27 |
| Knowledge test | 4.65 | 0.002 | 0.20 |
| Knowledge-related self-confidence | 10.32 | <0.001 | 0.36 |
| Repeated measurement across all conditions | 118.70 | <0.001 | 0.82 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

von Kotzebue, L.; Zumbach, J.; Brandlmayr, A. Digital Escape Rooms as Game-Based Learning Environments: A Study in Sex Education. Multimodal Technol. Interact. 2022, 6, 8. https://doi.org/10.3390/mti6020008
