A Framework on Division of Work Tasks between Humans and Robots in the Home

Abstract

1. Introduction

2. Definitions of Concepts

2.1. Division of Work

2.2. Actors’ Part in the Division of Work

2.3. Framing the Concept of Work Tasks

2.4. Why We Need to Understand the Division of Work between the Actors and Their Tasks

3. Literature Review

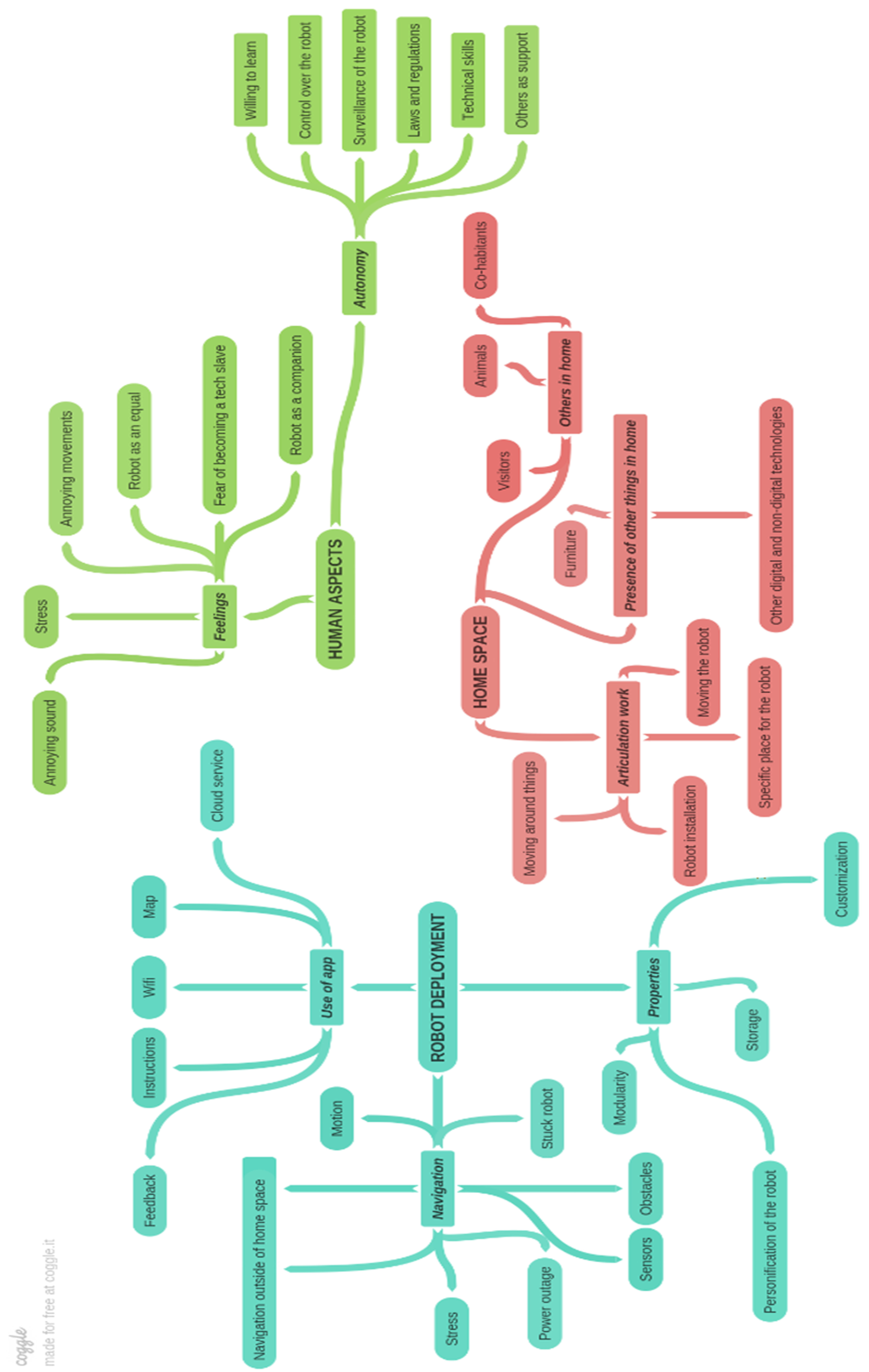

3.1. Robots in the Home

3.2. Available Frameworks

4. Theoretical Framework: The Model from Verne (2015) and Verne and Bratteteig (2016)

5. Methodology and Methods

5.1. Participants

5.2. Data Collection

5.3. Data Analysis

5.4. Ethical Considerations

6. Findings

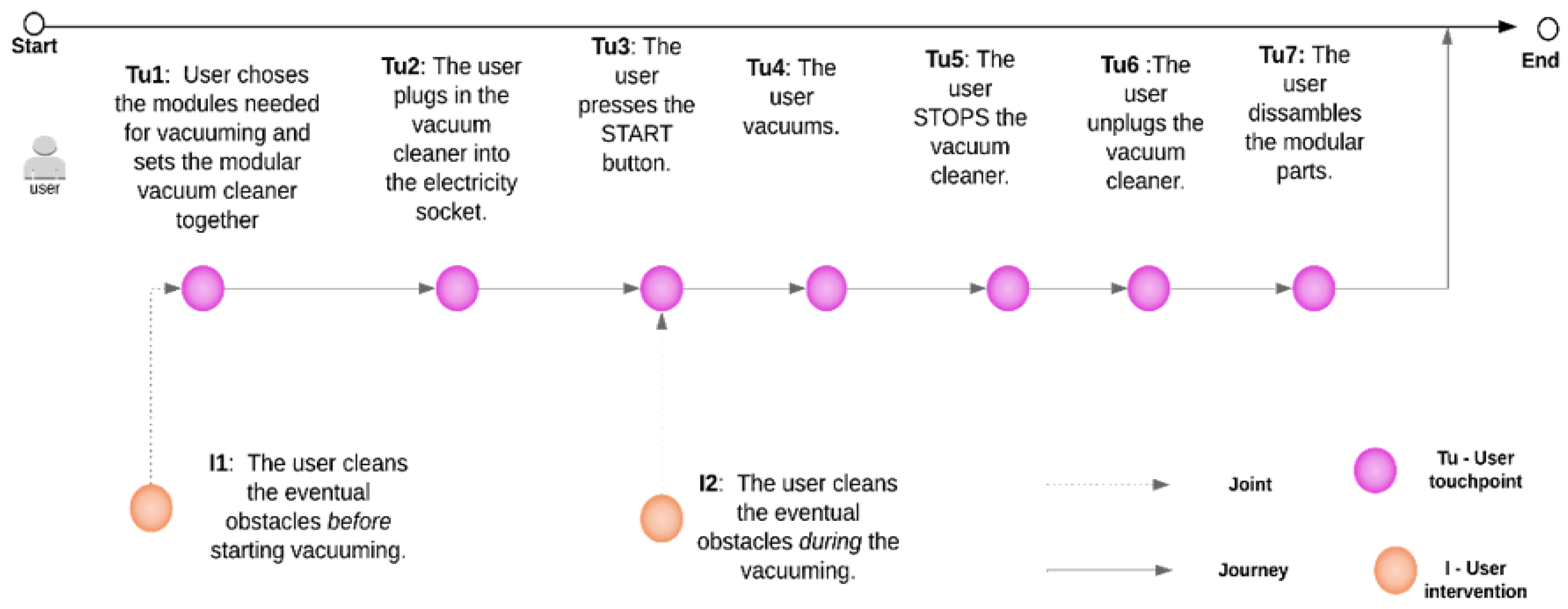

6.1. Use-Case Scenario 1: Human Work Tasks When Using an Ordinary Device (Situation 1)

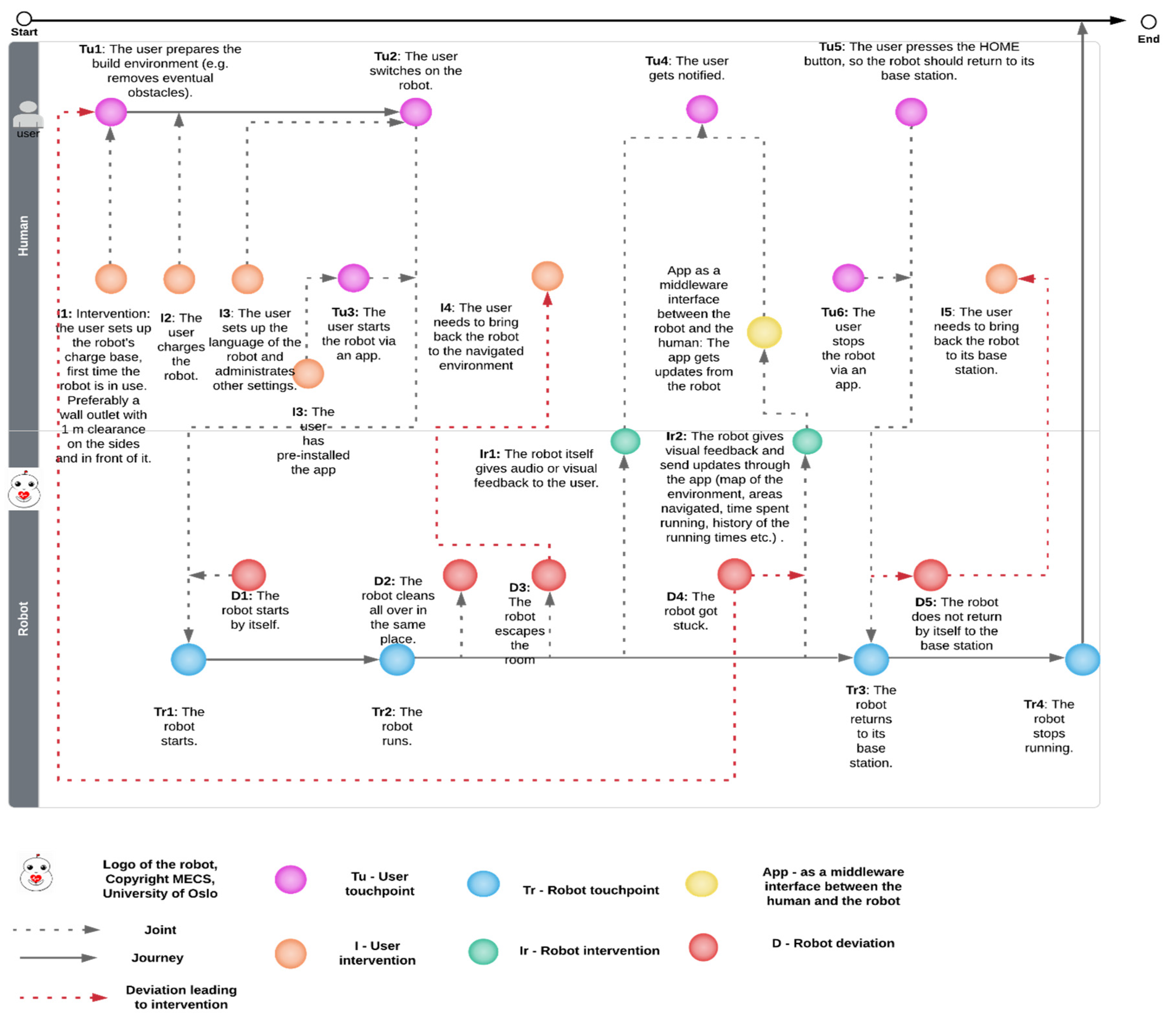

6.2. Use-Case Scenario 2: Division of Work Tasks in Joint Human-Robot Work Activity in the Home (Situation 2)

6.2.1. Work Performed by the Human Actor

(Participant): I got my brother fixing the cables under the bed, so they are not in its way. […] If it had gotten stuck there, I would not have been able to come down there. I was very afraid of this. So no cables were supposed to be there! I felt then so much better! (Interview, elderly participant).

(Interviewer): Okay … However, you also wrote in your diary notes that you had to clean a bit before you could run the robot.

(Participant): I had to do that more than with an ordinary vacuum cleaner, isn’t it? I have lots of chairs here. I have put those two on top of each other because otherwise, it stops all the time. So I have removed them. Moreover, the cables … I have tried to remove those. Yes, I have cleaned a bit. (Interview, elderly participant).

(Participant): Having experienced a couple of weeks with a robot vacuum cleaner at home, I learned that for the vacuum cleaner to do the job without interruptions, the floor needs to ‘be clean’—understood as tidy. Therefore, I set out to unclutter the floor today. I spent about two hours with moving things from the floor and putting the chair upon the table before setting up the Botvac. There is a reason why things end up on the floor—if there is too much stuff about storage capacities on shelves. While putting down the charging station, finding a 220 V outlet, I thought about means and end. The ‘goal’ I had was to make ‘clean floor’; but to get to this—I needed to install something on the floor … A paradox. (Diary notes, non-elderly participant).

(Participant): I pressed the ‘home’ button, it started. After a while, it got stuck. I remembered the previous installation at home when the app gave notifications about this—when I was out of the house. This information was disturbing at that time since I did not want to do anything with it. It interrupted a nice train journey I remember now, and started a train of thoughts of where it was stuck, and why (since I had done my best to make a ‘clean floor’ there well. (Diary notes, non-elderly participant)

6.2.2. Work Carried out by the Human in Breakdown Situations

(Participant): One time when I pressed on Home, it started going around by itself, so I had to carry it back [meaning back to the charging station]. (Interview)

(Participant): The robot got stuck in the carpet’s tassels and stayed still. It took some time to free R from the tassels, so I took away the carpet. […] Is R made for rooms without carpets and some furniture? (Diary notes, elderly participant)

(Participant): I had to take away the cables a couple of times, and it was trying to take down the lamps. However, I felt that I had to « save » the cables … I had to! I should say. (Diary notes, elderly participant)

6.2.3. Work Performed by the Robot Actor

(Participant): I think it starts in one room, and then it goes to another, and then it goes again to the first room. I think it is a bit strange that it does not finish in the first room, and it goes perhaps to the kitchen, and then it comes back, and it continues likes this and then goes out again. I think it was very strange (break), really, very strange. (Interview, elderly participant)

(Participant): […] And suddenly it started going by itself one morning, though it was very strange. (Interview, elderly participant).

6.2.4. Division of Work Tasks between the Human and the Robot

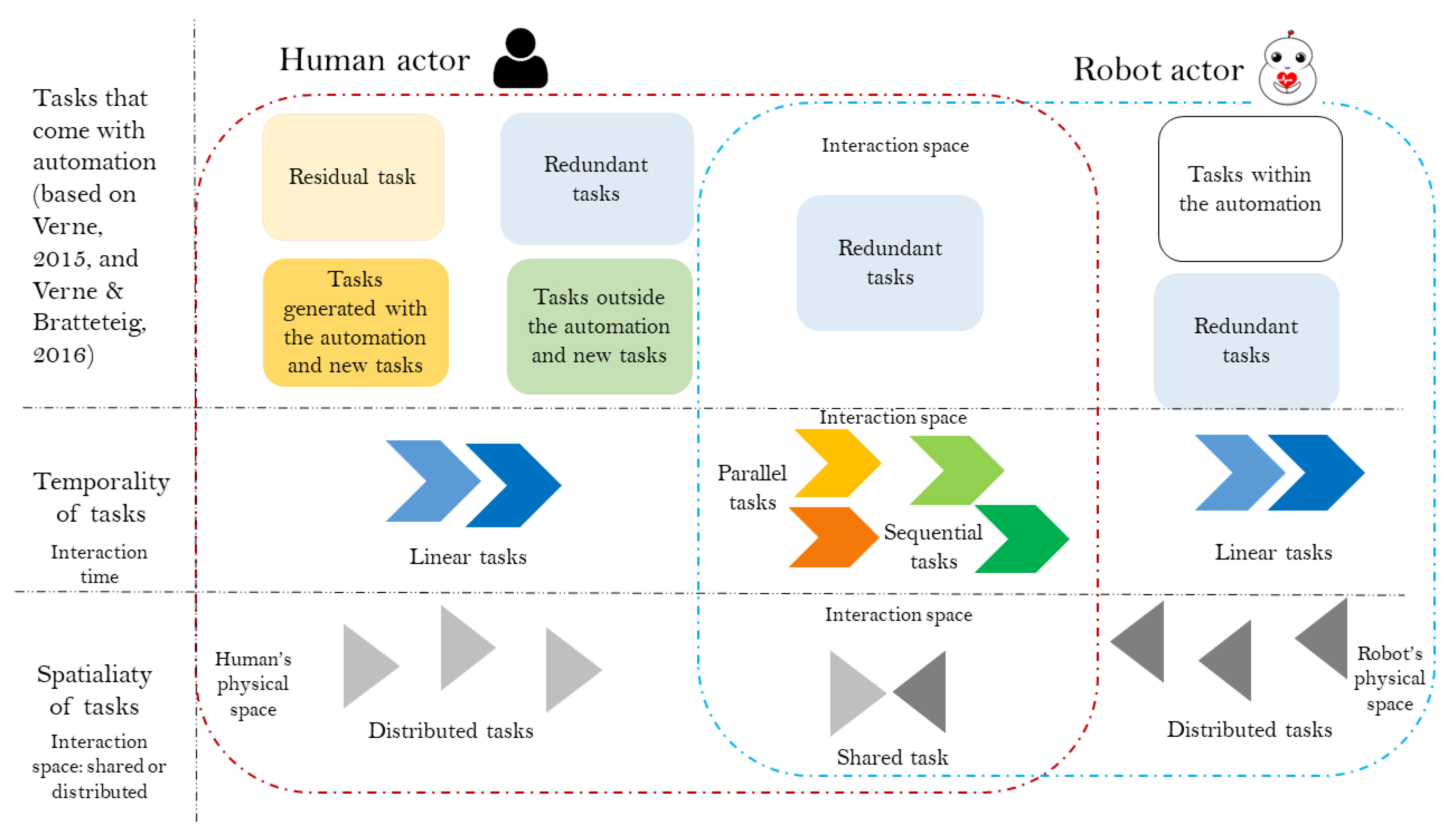

6.3. The Proposed Framework on Division of Work Tasks between Humans and Robots

6.3.1. New Dimensions of Tasks: Temporal and Spatial Distribution

- Temporality of Tasks

- Spatial Distribution of Tasks

6.3.2. The Framework

7. Discussion

7.1. Reflections on the Method and Setting

7.2. Reflections on the Proposed Framework for Division of Work between Humans and Robots

7.2.1. When Is the Framework Useful and Relevant?

- Hospital setting scenario: Using the framework to plan the division of work between human and robot.

- Mixed Reality remote-controlled robot scenario: Using the framework to plan the division of work between human and robot.

7.2.2. Design Implications: From Interaction to Cooperation with a Robot

8. Summary and Conclusions

9. Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Baltes, P.B.; Smith, J. New frontiers in the future of aging: From successful aging of the young old to the dilemmas of the fourth age. Gerontology 2003, 49, 123–135.

- Field, D.; Minkler, M. Continuity and change in social support between young-old and old-old or very-old age. J. Gerontol. 1988, 43, 100–106.

- Forlizzi, J.; DiSalvo, C. Service Robots in the Domestic Environment: A Study of the Roomba Vacuum in the Home. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, New York, NY, USA, 2–4 March 2006; pp. 258–265.

- Forlizzi, J. Product Ecologies: Understanding the Context of Use Surrounding Products. Ph.D. Thesis, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA, USA, 2007.

- Saplacan, D.; Herstad, J. An Explorative Study on Motion as Feedback: Using Semi-Autonomous Robots in Domestic Settings. Int. J. Adv. Softw. 2019, 12, 23.

- Saplacan, D.; Herstad, J.; Pajalic, Z. An analysis of independent living elderly’s views on robots-A descriptive study from the Norwegian context. In Proceedings of the International Conference on Advances in Computer-Human Interactions (ACHI), IARIA Conferences, Valencia, Spain, 21–25 November 2020.

- Verne, G. “The Winners Are Those Who Have Used the Old Paper Form”. On Citizens and Automated Public Services; University of Oslo: Oslo, Norway, 2015.

- Verne, G.; Bratteteig, T. Do-it-yourself services and work-like chores: On civic duties and digital public services. Pers. Ubiquitous Comput. 2016, 20, 517–532.

- Strauss, A. Work and the Division of Labor. Sociol. Q. 1985, 26, 1–19.

- Carstensen, P.H.; Sørensen, C. From the social to the systematic. Comput. Support. Coop. Work CSCW 1996, 5, 387–413.

- Oxford English Dictionary, actor, n. OED Online; Oxford University Press: Oxford, UK, 2019.

- Gasser, L. The Integration of Computing and Routine Work. ACM Trans. Inf. Syst. 1986, 4, 205–225.

- Cha, E.; Forlizzi, J.; Srinivasa, S.S. Robots in the Home: Qualitative and Quantitative Insights into Kitchen Organization. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction, New York, NY, USA, 2–5 March 2015; pp. 319–326.

- Sung, J.-Y.; Grinter, R.E.; Christensen, H.I.; Guo, L. Housewives or technophiles? Understanding domestic robot owners. In Proceedings of the 3rd ACM/IEEE International Conference on Human-Robot Interaction, Amsterdam, The Netherlands, 12–15 March 2008; pp. 129–136.

- Pantofaru, C.; Takayama, L.; Foote, T.; Soto, B. Exploring the Role of Robots in Home Organization. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction, New York, NY, USA, 5–8 March 2012; pp. 327–334.

- Forlizzi, J. How Robotic Products Become Social Products: An Ethnographic Study of Cleaning in the Home. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, New York, NY, USA, 8–11 March 2007; pp. 129–136.

- Sung, J.; Grinter, R.E.; Christensen, H.I. Domestic Robot Ecology. Int. J. Soc. Robot. 2010, 2, 417–429.

- Soma, R.; Søyseth, V.D.; Søyland, M.; Schulz, T.W. Facilitating Robots at Home: A Framework for Understanding Robot Facilitation. In Proceedings of the ACHI 2018: The Eleventh International Conference on Advances in Computer-Human Interactions, Rome, Italy, 25–29 March 2018; pp. 1–6. Available online: https://www.thinkmind.org/index.php?view=article&articleid=achi_2018_1_10_20085 (accessed on 6 March 2019).

- Teaming Up with Robots: An IMOI (Inputs-Mediators-Outputs-Inputs) Framework of Human-Robot Teamwork. Available online: https://deepblue.lib.umich.edu/handle/2027.42/138192 (accessed on 1 July 2020).

- Ijtsma, M.; Ye, S.; Feigh, K.; Pritchett, A. Simulating Human-Robot Teamwork Dynamics for Evaluation of Work Strategies in Human-Robot Teams. In Proceedings of the 20th International Symposium on Aviation Psychology, Dayton, OH, USA, 7–10 May 2019; pp. 103–108.

- Lee, C.P.; Paine, D. From The Matrix to a Model of Coordinated Action (MoCA): A Conceptual Framework of and for CSCW. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, Vancouver, BC, Canada, 14–18 March 2015; pp. 179–194.

- Ajoudani, A.; Zanchettin, A.M.; Ivaldi, S.; Albu-Schäffer, A.; Kosuge, K.; Khatib, O. Progress and prospects of the human–robot collaboration. Auton. Robot. 2018, 42, 957–975.

- Verne, G.B. Adapting to a Robot: Adapting Gardening and the Garden to fit a Robot Lawn Mower. In Proceedings of the Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 34–42.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Halvorsrud, R.; Kvale, K.; Følstad, A. Improving service quality through customer journey analysis. J. Serv. Theory Pract. 2016, 26, 840–867.

- Suchman, L. Plans and Situated Actions: The Problem of Human-Machine Communication; Cambridge University Press: Cambridge, UK, 1987.

- Saplacan, D.; Herstad, J. A Quadratic Anthropocentric Perspective on Feedback-Using Proxemics as a Framework. In Proceedings of the British HCI 2017, Sunderland, UK, 3 July 2017; Available online: http://hci2017.bcs.org/wp-content/uploads/46.pdf (accessed on 19 July 2017).

- Saplacan, D.; Herstad, J. Understanding robot motion in domestic settings. In Proceedings of the 9th Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics, IEEE XPlore, Oslo, Norway, 19–22 August 2019.

- Halverson, C.A.; Ellis, J.B.; Danis, C.; Kellogg, W.A. Designing Task Visualizations to Support the Coordination of Work in Software Development. In Proceedings of the 2006 20th Anniversary Conference on Computer Supported Cooperative Work, New York, NY, USA, 4–8 November 2006; pp. 39–48.

- Jung, M.; Hinds, P. Robots in the Wild: A Time for More Robust Theories of Human-Robot Interaction. ACM Trans. Hum. Robot Interact. 2018, 7, 2:1–2:5.

- Sung, J.-Y.; Christensen, H.I.; Grinter, R. Robots in the wild: Understanding long-term use. In Proceedings of the 4th ACM/IEEE International Conference on Human-Robot Interaction, San Diego, CA, USA, 11–13 March 2009; pp. 45–52.

- Oskarsen, J.S. Human-Supported Robot Work. Master’s Thesis, Department of Informatics, Faculty of Mathematics and Natural Sciences, University of Oslo, Oslo, Norway, 2018.

- Halodi Robotics. Available online: https://www.halodi.com (accessed on 12 July 2020).

- Hoffman, G. Designing Fluent Human-Robot Collaboration. In Proceedings of the 3rd International Conference on Human-Agent Interaction, Daegu, Korea, 21–24 October 2015; p. 1.

- Schmidt, K.; Bannon, L. Taking CSCW seriously. Comput. Support. Coop. Work CSCW 1992, 1, 7–40.

- Grudin, J.; Jacques, R. Chatbots, Humbots, and the Quest for Artificial General Intelligence. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 209:1–209:11.

- Grudin, J. From Tool to Partner: The Evolution of Human-Computer Interaction. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. C15:1–C15:3.

- Kaindl, H.; Popp, R.; Raneburger, D.; Ertl, D.; Falb, J.; Szép, A.; Bogdan, C. Robot-Supported Cooperative Work: A Shared-Shopping Scenario. In Proceedings of the 2011 44th Hawaii International Conference on System Sciences, 4–7 January 2011; pp. 1–10.

- Yamazaki, K.; Kawashima, M.; Kuno, Y.; Akiya, N.; Burdelski, M.; Yamazaki, A.; Kuzuoka, H. Prior-To-Request and Request Behaviors within Elderly Day Care: Implications for Developing Service Robots for Use in Multiparty Settings; Springer: London, UK, 2007.

- Cummings, M. Automation Bias in Intelligent Time Critical Decision Support Systems. In Proceedings of the AIAA 1st Intelligent Systems Technical Conference, American Institute of Aeronautics and Astronautics, Chicago, IL, USA, 20–22 September 2004.

| Types of Task | Task Description |
|---|---|
| New tasks | Tasks that arise as a result of automation and cannot be performed by the users themselves. These tasks usually occur when errors or inconsistencies are encountered. |
| Residual tasks | Tasks that still need to be performed outside the automation, usually manual tasks. |
| Automated tasks | Tasks that are automated. |
| Redundant tasks | Tasks that can be done both through automation and manually. |
| Tasks inside the automation | Tasks generated by the automation that remain inside it. |
| Tasks outside the automation | Tasks generated by the automation that lie outside it. |

Data Collection Methods: Non-Elderly Participants

| # | Timeframe | Documentation | Robot Used |
|---|---|---|---|
| 1 | One week | Yes. Diary notes, seven posts (one per day), ca. four and a half A4 pages, analog format, 28 photos | Neato |
| 2 | Ca. two weeks | Yes. Three pages of A4 notes, digital format, 4 photos enclosed | Neato |
| 3 | Ca. one week | Yes. Short notes on strengths and weaknesses of using such a robot, digital format | iRobot Roomba |
| 4 | One week | Yes. One page of notes, digital format | Samsung PowerBot |
| 5 | Ca. one week | Yes. Half a page of written notes on strengths and weaknesses, digital format | Neato |
| 6 | Ca. one month | Yes. Four pages of written notes, 22 posts, digital format | Neato |
| 7 | Ca. one month | Yes. Ca. 19 A4 pages of written notes, analog format | Neato |

Data Collection Methods: Elderly Participants

| # | Gender (F = Female, M = Male) | Interview | Elderly’s Diary Notes | Author’s Notes (SD) | Photos Taken by the Researchers | Details about the Robot Used, Any Assistive Technologies Used, and Level of Information Technology Literacy |
|---|---|---|---|---|---|---|
| 1 | F | Ca. 1 h, audio-recorded pilot interview, transcribed verbatim (SD), and ca. 1 h and 45 min of untranscribed audio recording from the installation of the robot | Yes. Ca. 5 A4 pages, analog format. | Yes. Ca. 2 A4 pages. | Yes, 36 photos. | iRobot Roomba, 87 years old, walking chair, did not use the app. |
| 2 | F | Ca. 40 min, audio-recorded, transcribed verbatim (SD) | Yes. Ca. 3 A4 pages of notes, analog format. | Yes. Ca. 2 A4 pages. | Yes, 4 photos. | iRobot Roomba, walking chair, a necklace alarm that she does not wear, high interest in technology, used the app, has a smartphone. |
| 3 | M | Ca. 25 min, audio-recorded, transcribed verbatim (SD) | Yes. One letter-size page, analog format, short notes. | Yes. Ca. 4 letter-size pages. | Yes, 10 photos. | Neato, wheelchair, not interested in technology, did not use the app, has a wearable safety alarm. |
| 4 | F | Ca. 33 min, audio-recorded, transcribed verbatim (SD) | Yes. One A4 page, analog format. | Yes. Ca. 2 A4 pages. | Yes, 36 photos. | iRobot Roomba, wheelchair, interested in technology, did not use the app, does not have a smartphone, has a wearable safety alarm. |
| 5 | F | Ca. 45 min, audio-recorded, transcribed verbatim (SD) | Yes. One letter-size page, analog format. | Not available | Yes, 13 photos. | Walker, did not use the app, not interested in technology, does not have a smartphone, has a wearable safety alarm. |
| 6 | F | Ca. 43 min, audio-recorded, transcribed verbatim (SD) | Yes. 4 letter-size pages, analog format. | Yes. Ca. 1 letter-size page. | Yes, 16 photos. | Interested in technology, no walker, wanted to use the app but gave up, does not have a wearable safety alarm. |

| Task Dimensions | Type of Task | When the Human Actor Is Using a Non-Moving Actor (N/A = Not Available) | When a Robot Is Introduced in a Physical Environment |
|---|---|---|---|
| Tasks that come with automation (based on Verne, 2015, and Verne and Bratteteig, 2016) | Residual tasks | Yes. Humans need to do some manual work tasks. | Yes. The human needs to clean some of the areas that the robot did not reach. |
| | Redundant tasks | N/A | Yes. The human needs to start the robot through direct (e.g., by pushing the button) or remote (e.g., through the app) interaction. |
| | Tasks within the automation | N/A | Yes. The robot gives audio or visual feedback to the human. |
| | Tasks outside the automation and new tasks | Yes. | Yes. The human chooses to move the robot, or to remove obstacles without the robot indicating it. |
| | Tasks generated with the automation and new tasks | N/A | Yes. The human needs to charge the robot, to lift the robot from one place to another when it gets stuck, and to bring it back when it “escapes”. |
| Temporality of tasks | Sequential | Yes. | Yes, partially. Some sequential tasks are available for each of the actors. When the tasks of one actor are interrupted or paused, the other actor usually takes on the tasks. |
| | Parallel | No. The device itself cannot perform tasks on its own. However, the human can perform several tasks at the same time. | Yes. The human and the robot can perform tasks in parallel. |
| | Linear | Yes. The device is controlled by humans. | Yes. Both the human and the robot can perform linear tasks. However, linear tasks are often interrupted. |
| Spatiality of tasks | Spatial tasks in shared spatiality | Yes. The human and the device share the space. | Yes. Both of the actors can share space and perform different tasks at the same time. |
| | Spatial tasks in distributed spatiality | No. The human and the device cannot be in two different places and work on a joint task. | Yes. The robot can perform tasks remotely, while the human can control or give autonomy to the robot through an app that can be used remotely. |
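
For readers who want to apply the framework when planning a human-robot work setting, the three dimensions in the table above (type of task, temporality, spatiality) could be recorded as a small data structure. The following is only an illustrative sketch, not part of the study: the class names, the helper `tasks_for`, and the way each example task is labelled along the temporal and spatial dimensions are assumptions made for illustration. The task descriptions are taken from the table.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List


class Actor(Enum):
    HUMAN = "human"
    ROBOT = "robot"


class TaskType(Enum):
    NEW = "new"
    RESIDUAL = "residual"
    AUTOMATED = "automated"
    REDUNDANT = "redundant"
    INSIDE_AUTOMATION = "inside the automation"
    OUTSIDE_AUTOMATION = "outside the automation"


class Temporality(Enum):
    SEQUENTIAL = "sequential"
    PARALLEL = "parallel"
    LINEAR = "linear"


class Spatiality(Enum):
    SHARED = "shared"
    DISTRIBUTED = "distributed"


@dataclass(frozen=True)
class WorkTask:
    """One work task placed along the framework's three dimensions."""
    description: str
    actor: Actor
    task_type: TaskType
    temporality: Temporality
    spatiality: Spatiality


def tasks_for(tasks: Iterable[WorkTask], actor: Actor) -> List[WorkTask]:
    """Return the tasks carried out by the given actor, in input order."""
    return [task for task in tasks if task.actor is actor]


# Hypothetical labelling: the temporal and spatial values below are
# assumptions chosen for illustration, not classifications from the study.
EXAMPLE_TASKS = [
    WorkTask("Vacuum the floor", Actor.ROBOT, TaskType.AUTOMATED,
             Temporality.PARALLEL, Spatiality.SHARED),
    WorkTask("Clean the areas that the robot did not reach", Actor.HUMAN,
             TaskType.RESIDUAL, Temporality.SEQUENTIAL, Spatiality.SHARED),
    WorkTask("Start the robot through the app", Actor.HUMAN,
             TaskType.REDUNDANT, Temporality.LINEAR, Spatiality.DISTRIBUTED),
    WorkTask("Give audio or visual feedback to the human", Actor.ROBOT,
             TaskType.INSIDE_AUTOMATION, Temporality.PARALLEL,
             Spatiality.SHARED),
    WorkTask("Lift the robot when it gets stuck", Actor.HUMAN,
             TaskType.NEW, Temporality.SEQUENTIAL, Spatiality.SHARED),
]

if __name__ == "__main__":
    for task in tasks_for(EXAMPLE_TASKS, Actor.HUMAN):
        print(f"{task.task_type.value}: {task.description}")
```

Listing the tasks per actor in this way makes the human's share of the work visible alongside the robot's, which is the point the framework is meant to support.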

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Saplacan, D.; Herstad, J.; Tørresen, J.; Pajalic, Z. A Framework on Division of Work Tasks between Humans and Robots in the Home. Multimodal Technol. Interact. 2020, 4, 44. https://doi.org/10.3390/mti4030044
