Audio Legends: Investigating Sonic Interaction in an Augmented Reality Audio Game

Abstract

1. Introduction

2. Towards Augmented Reality Audio Games

2.1. The Evolution and Characteristics of Audio Games

2.2. Approaches to Audio Augmentation

3. Audio Legends’ Implementation

- In the 16th century, he ended a famine by guiding Italian ships carrying wheat out of a storm to the starving Corfiots.

- Twice in the 17th century, he drove the plague from the island by chasing the disease, described as half old woman and half beast, and beating it with a cross.

- In the 18th century, he defended the island against a Turkish naval siege by destroying most of the Turkish fleet with a storm.

- “The Famine”, in which players have to locate a virtual Italian sailor and then guide him to the finish point.

- “The Plague”, in which players have to track down a virtual monster and then defeat it in combat.

- “The Siege”, in which players have to locate the bombardment and shield themselves from the incoming virtual cannonballs.

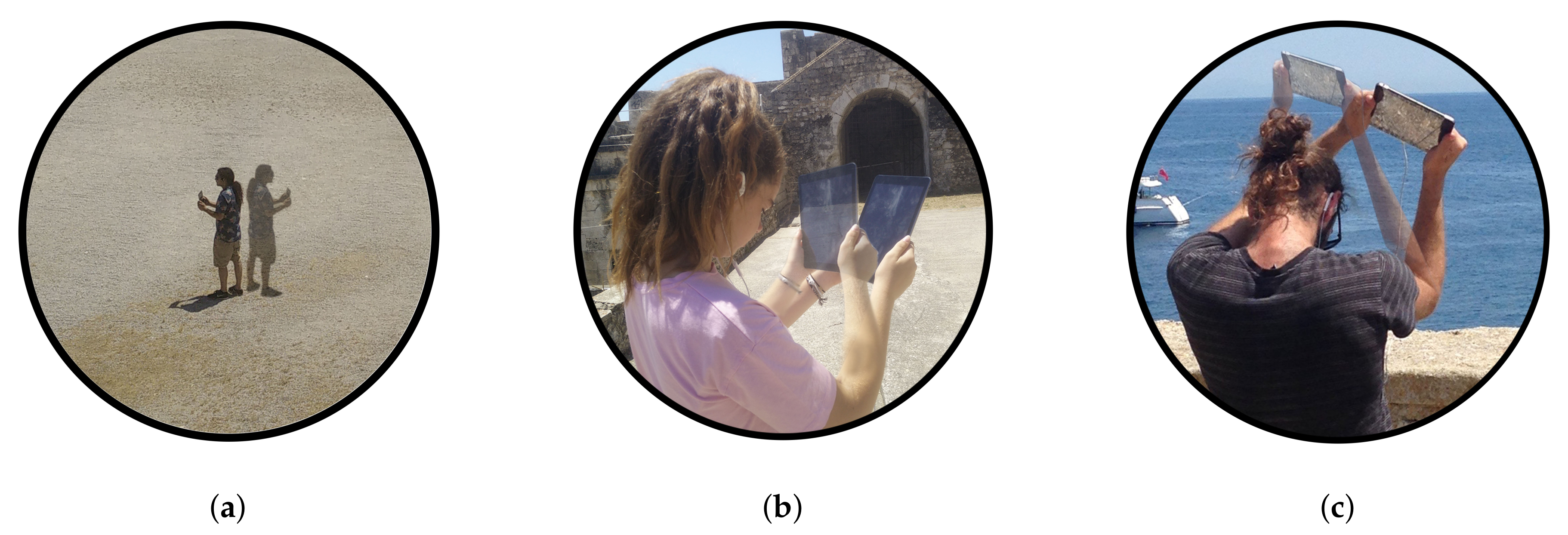

3.1. Mechanics’ Design

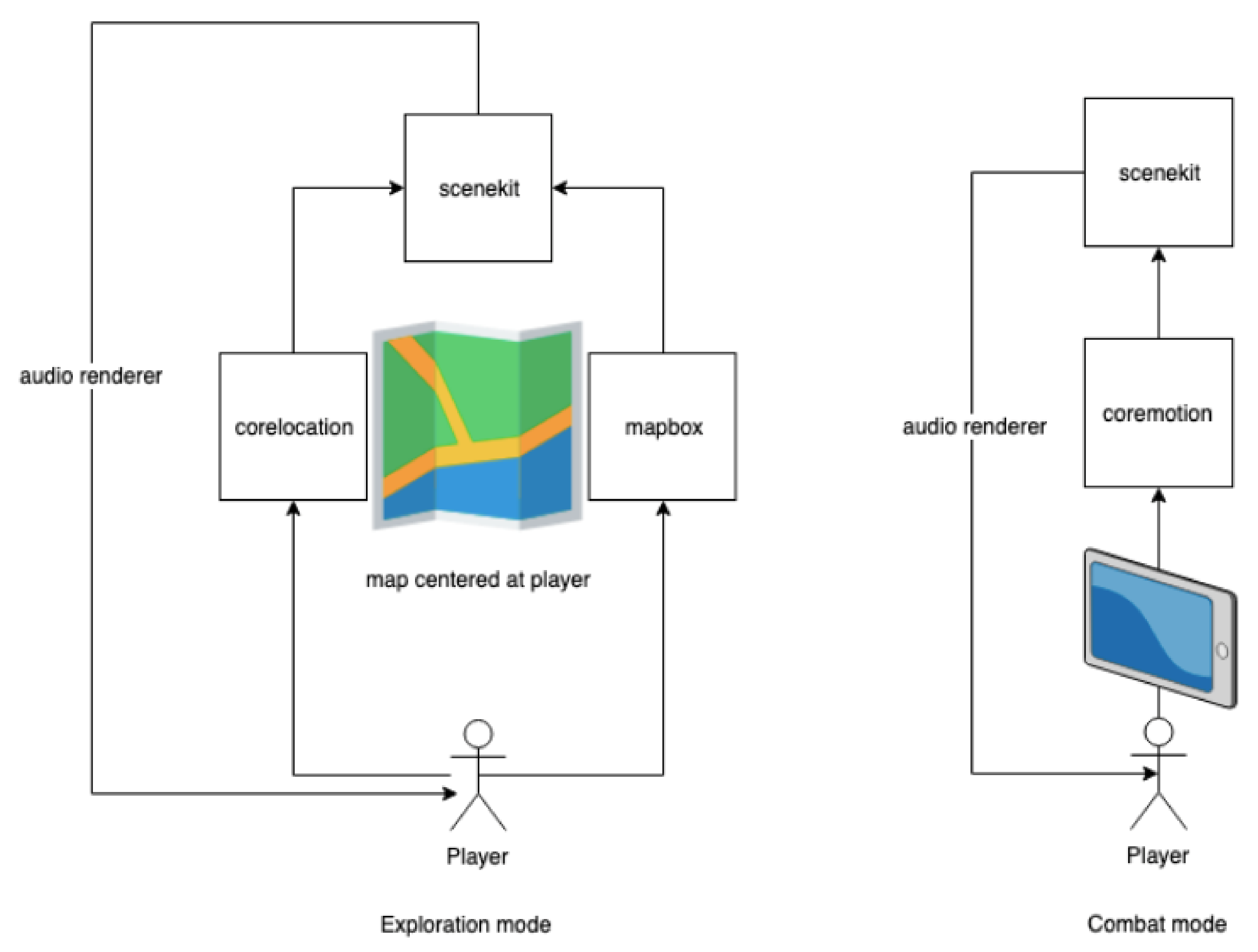

3.2. Technical Implementation

3.3. Sound Design

4. The Evaluation Process of Audio Legends

4.1. Assessment Methodology

- Game Mode Specific Questionnaire (GMSQ)
    - Technical aspect (seven statements)
        - It was difficult to complete the game
        - Audio action and interaction through the device were successfully synced
        - It was difficult to locate the sound during the first phase of the game
        - It was difficult to interact with the sound during the second phase of the game
        - The sounds of the game didn’t have a clear position in space
        - The sounds of the game didn’t have a clear movement in space
        - The real and digital sound components were mixed with fidelity
    - Immersion aspect (five statements)
        - I liked the game
        - The sounds of the game helped me concentrate on the gaming process
        - The sounds of the game had no emotional impact on me
        - The game kept my interest till the end
        - I found the sound design of the game satisfying
- Overall Experience Questionnaire (OEQ)
    - Technical aspect (two statements)
        - The prolonged use of headphones was tiring/annoying
        - The prolonged use of the tablet was tiring/annoying
    - Immersion aspect (four statements)
        - I found the game experience (1 very weak–5 very strong)
        - During the game I felt that my acoustic ability was enhanced
        - Participating in the game process enhanced my understanding of information related to the cultural heritage of Corfu
        - I would play again an Augmented Reality Audio Game
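The statements above mix positively and negatively worded items. For example, agreeing with "It was difficult to locate the sound during the first phase of the game" signals a worse experience, while agreeing with "The real and digital sound components were mixed with fidelity" signals a better one, so averaging raw responses per aspect would let the two kinds cancel out. The sketch below shows one common way to handle this: reverse-score negatively worded items before averaging. It assumes that every statement uses the 1–5 scale indicated for the game-experience item. Which items count as negative is an editorial reading of their wording, not a coding scheme confirmed by the article, and the helper names `oriented` and `aspect_score` are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple

# Assumed response scale: 1 (strongly disagree) to 5 (strongly agree).
SCALE_MIN, SCALE_MAX = 1, 5

# GMSQ technical aspect as (statement, negatively_worded).
# The True/False flags are an editorial reading of each statement's wording,
# not a coding scheme confirmed by the article.
GMSQ_TECHNICAL: Sequence[Tuple[str, bool]] = (
    ("It was difficult to complete the game", True),
    ("Audio action and interaction through the device were successfully synced", False),
    ("It was difficult to locate the sound during the first phase of the game", True),
    ("It was difficult to interact with the sound during the second phase of the game", True),
    ("The sounds of the game didn't have a clear position in space", True),
    ("The sounds of the game didn't have a clear movement in space", True),
    ("The real and digital sound components were mixed with fidelity", False),
)


def oriented(response: int, negatively_worded: bool) -> int:
    """Hypothetical helper: map a response so that a higher value always means better."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"response {response} is outside {SCALE_MIN}-{SCALE_MAX}")
    # Reverse-score on the assumed 1-5 scale: 1 <-> 5, 2 <-> 4, 3 stays 3.
    return SCALE_MIN + SCALE_MAX - response if negatively_worded else response


def aspect_score(responses: Sequence[int], items: Sequence[Tuple[str, bool]]) -> float:
    """Hypothetical helper: one participant's mean score for one questionnaire aspect."""
    if len(responses) != len(items):
        raise ValueError("expected exactly one response per statement")
    return float(mean(oriented(r, negative) for r, (_, negative) in zip(responses, items)))


# Example: a participant who found locating the sound easy (answered 1 to statement 3).
print(round(aspect_score([2, 4, 1, 2, 2, 1, 5], GMSQ_TECHNICAL), 2))  # 4.43
```

Scoring each aspect separately keeps the technical and immersion dimensions apart, as the questionnaires themselves do. The same helpers would apply to the OEQ items, where both technical statements ("tiring/annoying") are negatively worded.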

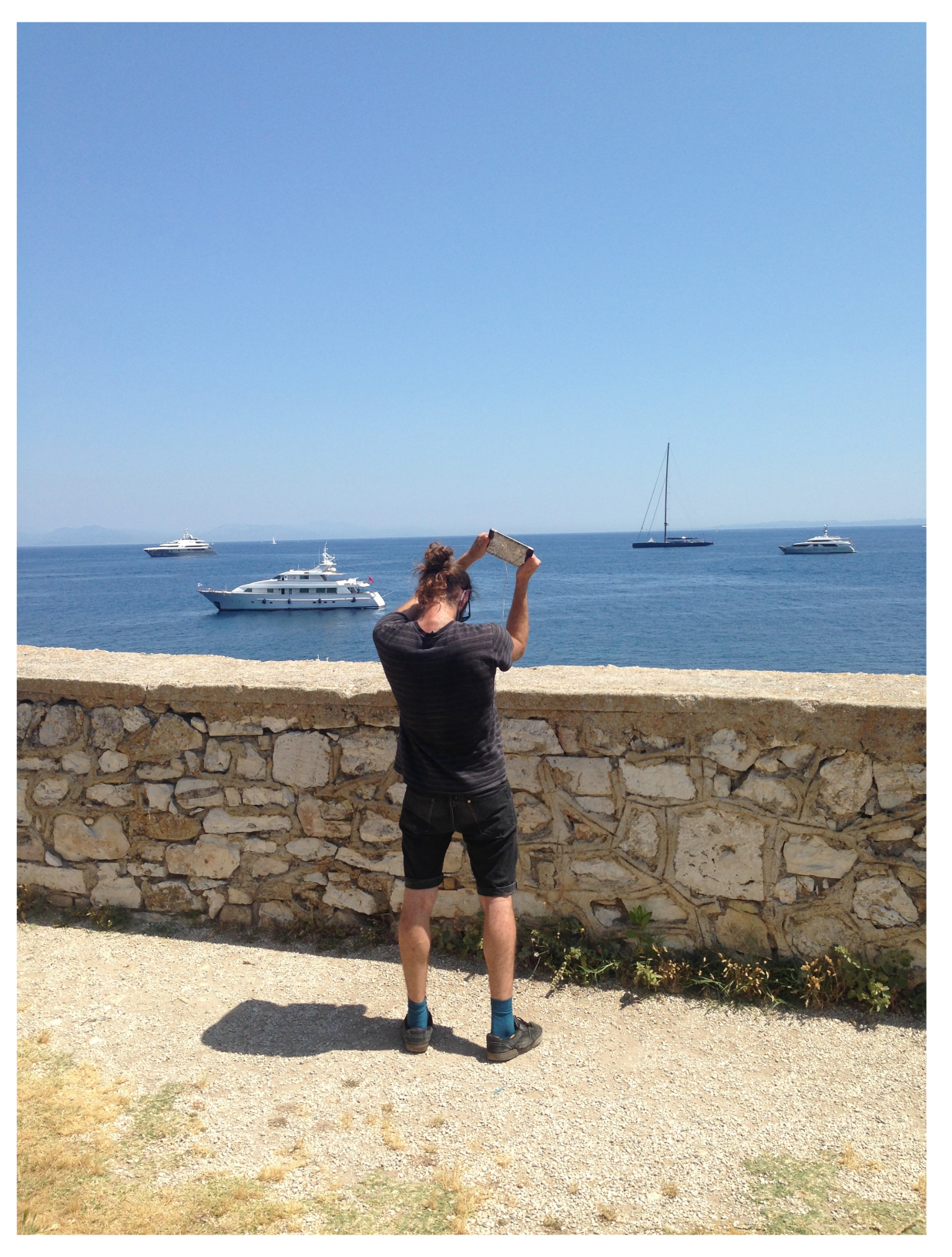

4.2. Conducting the Experiment

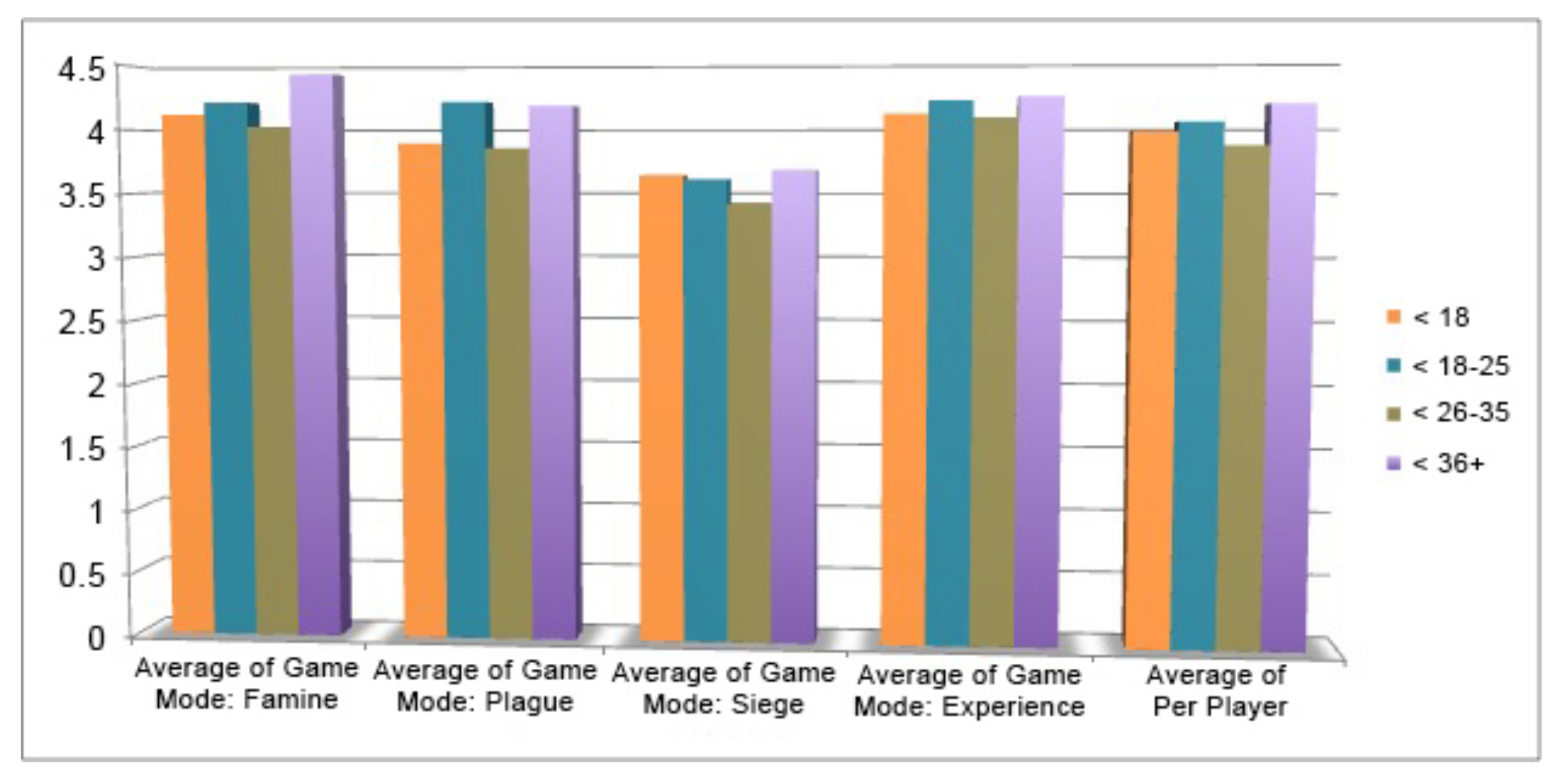

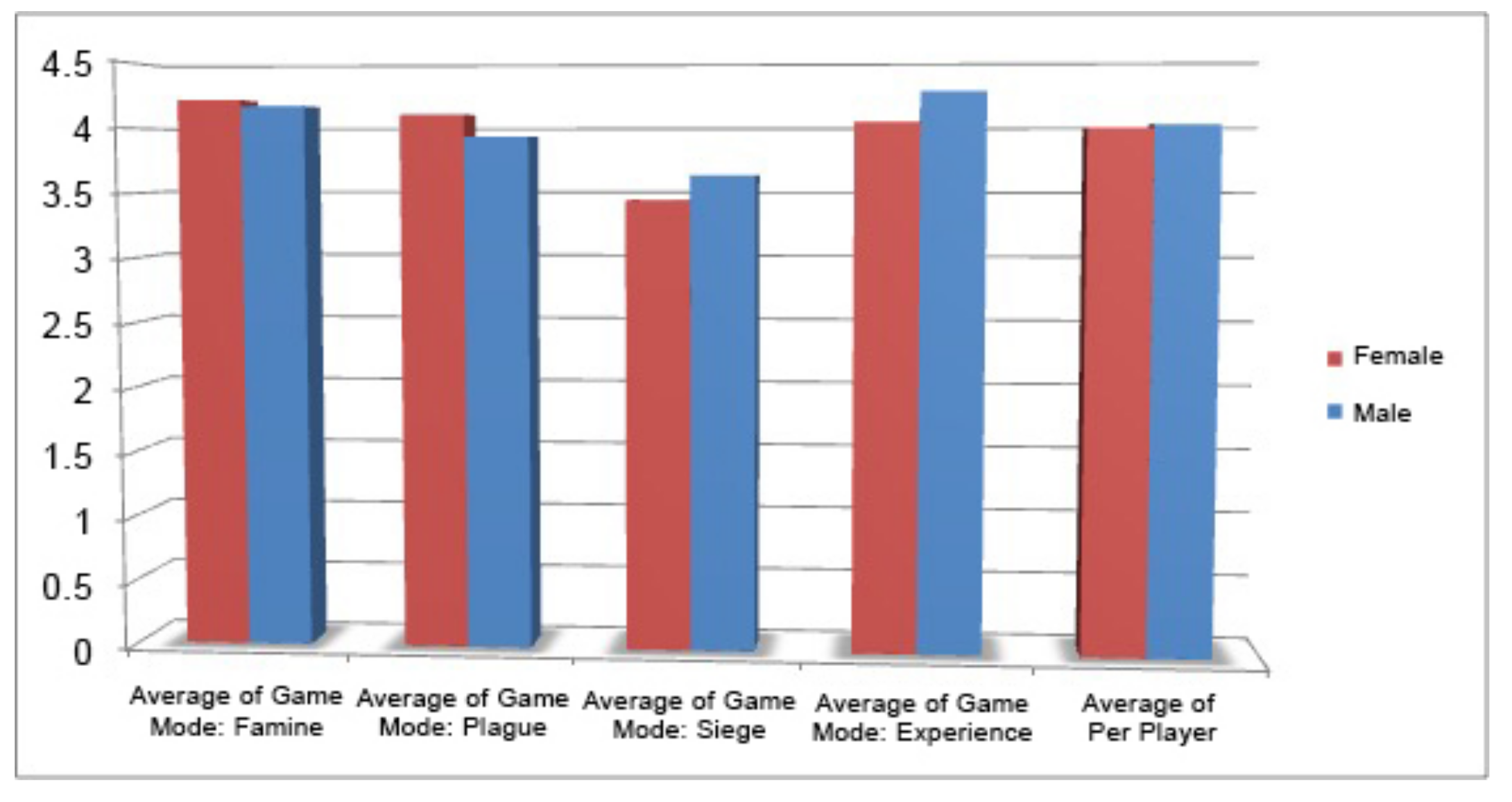

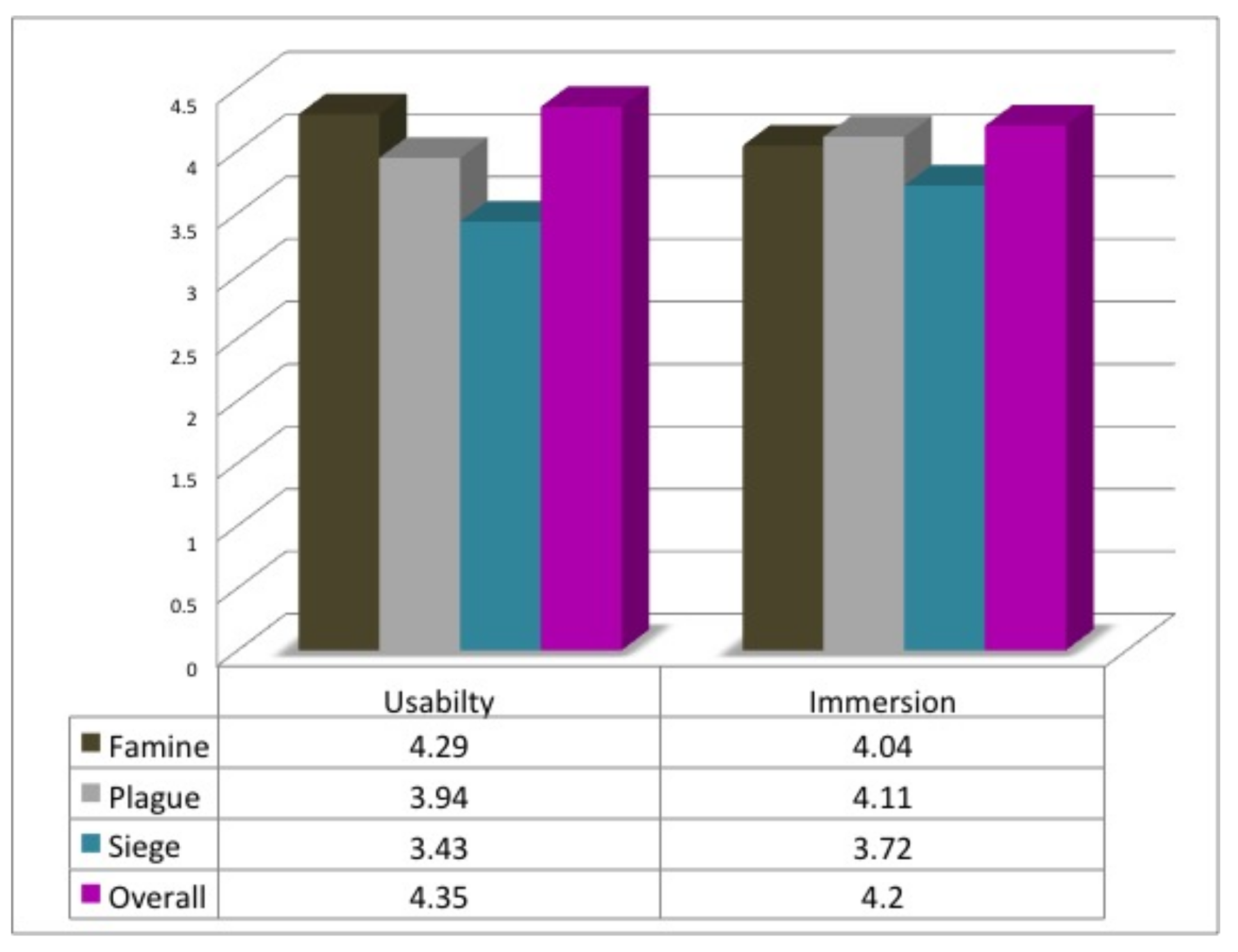

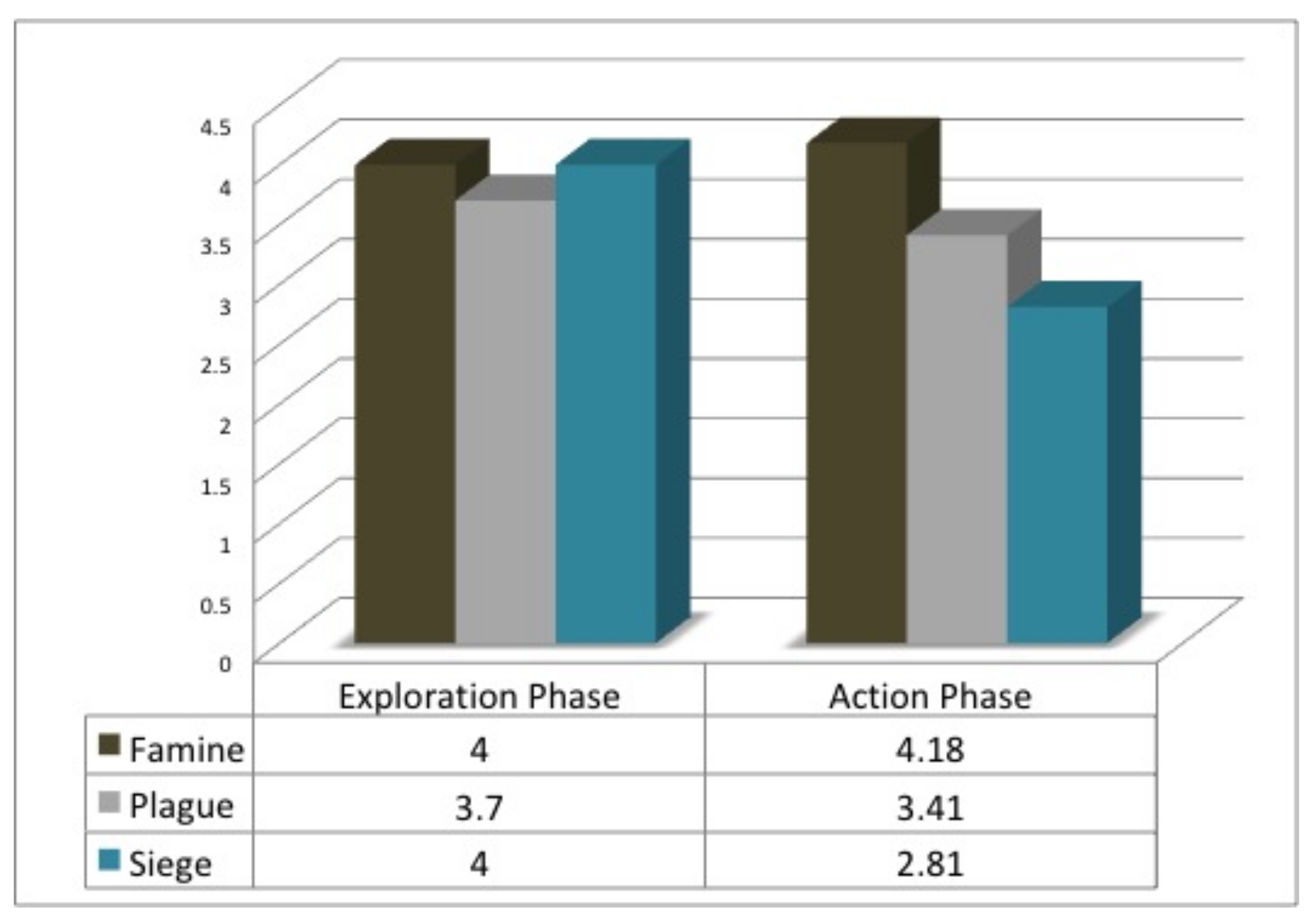

4.3. Analyzing the Results

4.4. Discussion

5. Conclusions and Future Directions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ARAG | Augmented Reality Audio Games |
| AG | Audio Game |
| AR | Augmented Reality |
| ARA | Augmented Reality Audio |
| GM | Game Modes |
| IP | Interaction Phases |
| RQ | Research Questions |
| GMSQ | Game Mode Specific Questionnaire |
| OEQ | Overall Experience Questionnaire |
| ANOVA | Analysis of Variance |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rovithis, E.; Moustakas, N.; Floros, A.; Vogklis, K. Audio Legends: Investigating Sonic Interaction in an Augmented Reality Audio Game. Multimodal Technol. Interact. 2019, 3, 73. https://doi.org/10.3390/mti3040073