Accessible Digital Musical Instruments—A Review of Musical Interfaces in Inclusive Music Practice

Abstract

1. Introduction

2. Background

2.1. Digital Musical Instruments (DMIs)

2.2. Inclusive Music Practice

2.3. Related Work

3. Method

3.1. Data Collection

- The study should present at least one ADMI.

- The paper should focus primarily on ADMIs, mentioning the potential user group(s) in either the title or the abstract.

- The paper should describe a practical implementation of an ADMI that enabled real-time manipulation of input or control data; theoretical papers and reviews were not included.
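
The three inclusion criteria above can be read as a single screening predicate. The following is a minimal sketch in Python, not the tooling used in the review: the `Paper` record, its field names, and the two example records are hypothetical and serve only to show how the criteria combine.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Paper:
    """Hypothetical screening record; all field names are illustrative assumptions."""
    title: str
    abstract: str
    presents_admi: bool                 # criterion 1: at least one ADMI is presented
    admi_is_primary_focus: bool         # criterion 2: the paper focuses primarily on ADMIs
    user_group_terms: Tuple[str, ...]   # criterion 2: terms naming the potential user group(s)
    practical_realtime_system: bool     # criterion 3: practical, real-time implementation
    is_theoretical_or_review: bool      # criterion 3: theoretical papers and reviews are excluded


def mentions_user_group(paper: Paper) -> bool:
    """True if any user-group term occurs in the title or the abstract."""
    text = f"{paper.title} {paper.abstract}".lower()
    return any(term.lower() in text for term in paper.user_group_terms)


def meets_inclusion_criteria(paper: Paper) -> bool:
    """Combine the three criteria with a logical AND."""
    return (
        paper.presents_admi
        and paper.admi_is_primary_focus
        and mentions_user_group(paper)
        and paper.practical_realtime_system
        and not paper.is_theoretical_or_review
    )


# Two made-up records, used only to exercise the predicate.
included = Paper(
    title="A switch-operated instrument for children with motor impairments",
    abstract="We describe a working prototype and its real-time sound control.",
    presents_admi=True,
    admi_is_primary_focus=True,
    user_group_terms=("motor impairments",),
    practical_realtime_system=True,
    is_theoretical_or_review=False,
)
excluded = Paper(
    title="A literature overview of music technology",
    abstract="We survey earlier work.",
    presents_admi=False,
    admi_is_primary_focus=False,
    user_group_terms=("motor impairments",),
    practical_realtime_system=False,
    is_theoretical_or_review=True,
)

print(meets_inclusion_criteria(included), meets_inclusion_criteria(excluded))
```

In practice the Boolean fields would be filled in by a human reviewer while reading each paper; the sketch only makes explicit that a paper must satisfy all three criteria to be included.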

3.2. Analysis

4. Results

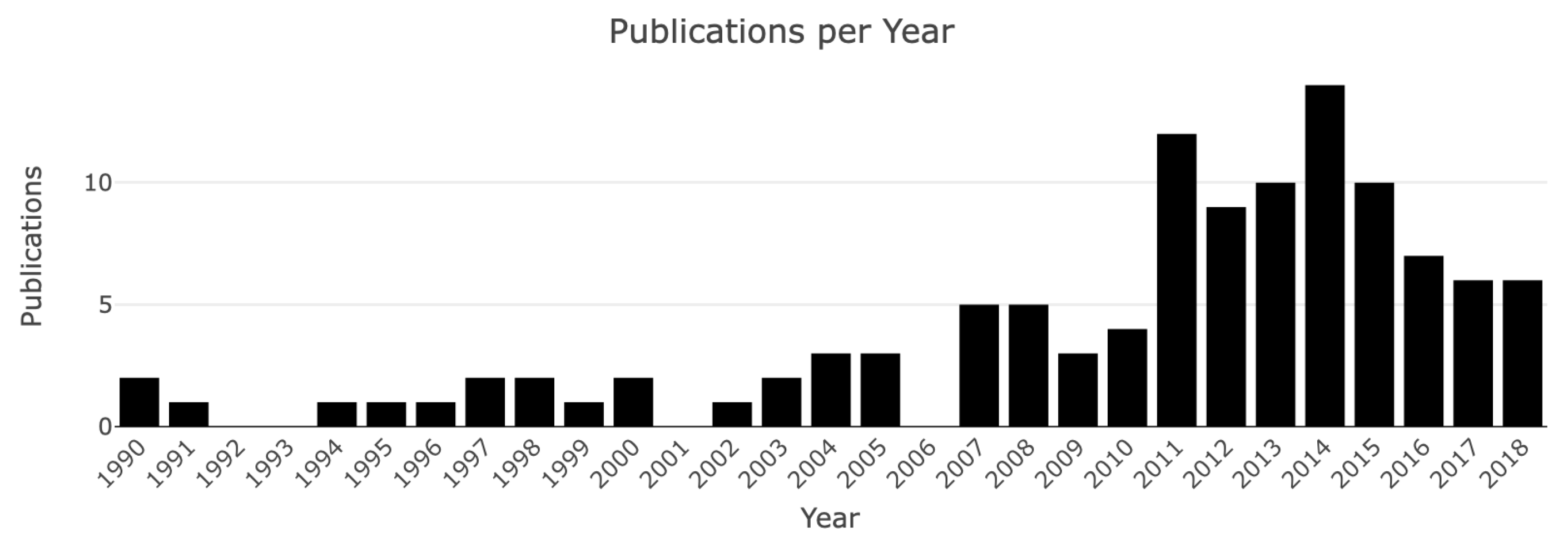

4.1. Publications

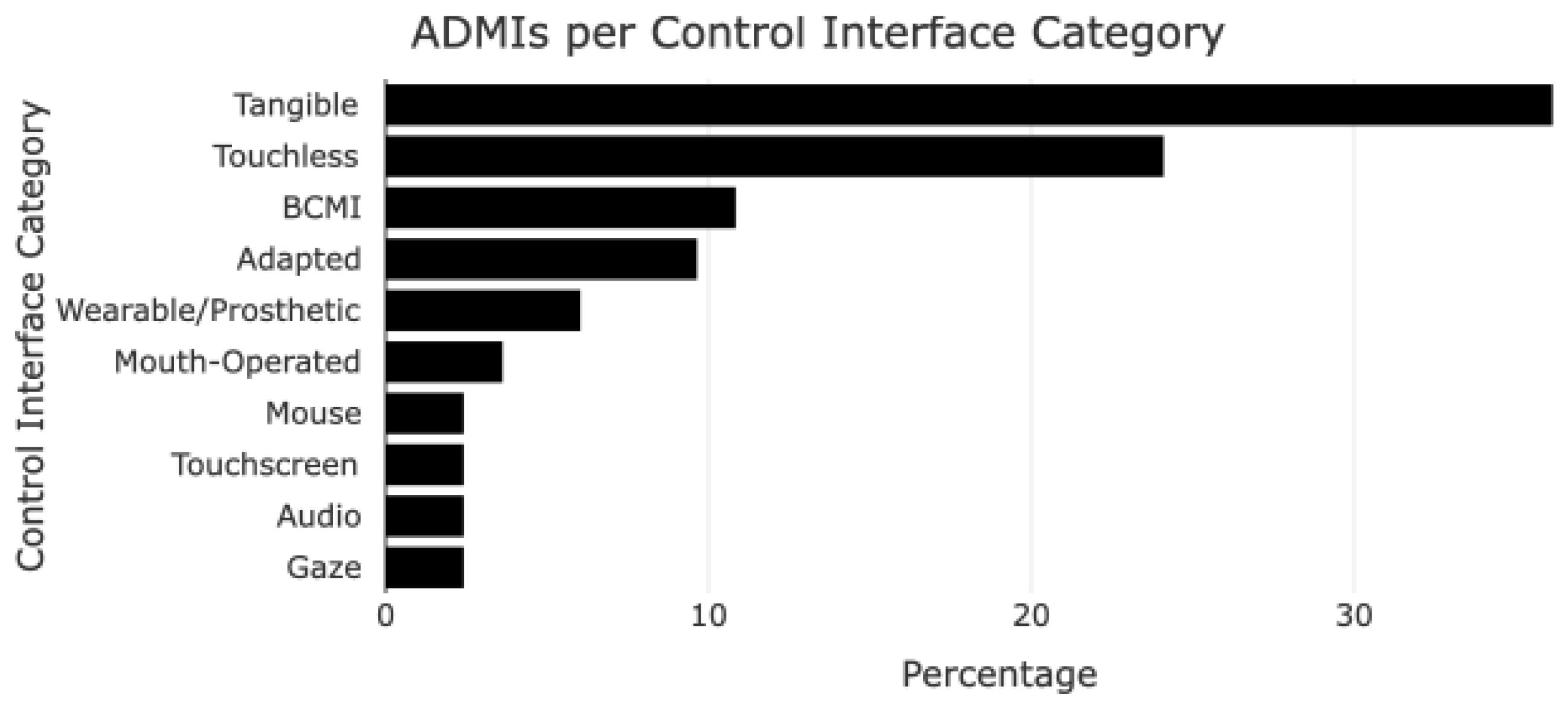

4.2. Control Interface Type

4.3. Sensor and Actuator Use

4.4. Output Modalities

4.5. Target User Group

4.6. Music and Sound Synthesis Control

4.7. Design Process and System Evaluation

5. Discussion

Limitations

6. Conclusions

Supplementary Materials

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ADMI | Accessible Digital Musical Instrument |
| AMT | Assistive Music Technology |
| BCMI | Brain–Computer Music Interface |
| DMI | Digital Musical Instrument |
| EEG | ElectroEncephaloGraphy |
| EMG | ElectroMyoGraphy |
| MSSE | MultiSenSory Environment |
| NIME | New Interface for Musical Expression |
| SEN | Special EducatioN |

References

- UN General Assembly. Universal Declaration of Human Rights; UN General Assembly: New York, NY, USA, 1948. Available online: https://www.un.org/en/udhrbook/pdf/udhr_booklet_en_web.pdf (accessed on 29 June 2019).

- McDougall, J.; Wright, V.; Rosenbaum, P. The ICF Model of Functioning and Disability: Incorporating Quality of Life and Human Development. Dev. Neurorehabil. 2010, 13, 204–211.

- Holmes, T.B. Electronic Music Before 1945. In Electronic and Experimental Music; Scribner: New York, NY, USA, 1985; Chapter 1; pp. 3–42.

- Adlers, F. Fender Rhodes: The Piano That Changed the History of Music. Available online: http://www.fenderrhodes.com/history/narrative.html (accessed on 29 June 2019).

- Knox, R. Adapted Music as Community Music. Int. J. Community Music 2004, 1, 247–252.

- Magee, W.L.; Burland, K. Using Electronic Music Technologies in Music Therapy: Opportunities, Limitations and Clinical Indicators. Br. J. Music Ther. 2008, 22, 3–15.

- Samuels, K. Enabling Creativity: Inclusive Music Interfaces and Practices. In Proceedings of the International Conference on Live Interfaces (ICLI), Lisbon, Portugal, 19–23 November 2014.

- Drake Music Project. Available online: https://www.drakemusic.org/ (accessed on 29 June 2019).

- Open Up Music. Available online: http://openupmusic.org/ (accessed on 29 June 2019).

- Adaptive Use Musical Instruments (AUMI) Project. Available online: http://aumiapp.com/ (accessed on 29 June 2018).

- Human Instruments. Available online: https://www.humaninstruments.co.uk/ (accessed on 29 June 2019).

- OHMI Conference and Awards—Music and Physical Disability: From Instrument to Performance. Available online: https://www.ohmi.org.uk/ (accessed on 29 June 2019).

- Soundbeam. Available online: https://www.soundbeam.co.uk/ (accessed on 29 June 2019).

- Beamz. Available online: https://thebeamz.com/ (accessed on 29 June 2019).

- Skoog Music. Available online: http://skoogmusic.com/ (accessed on 29 June 2019).

- Magic Flute. Available online: http://housemate.ie/magic-flute/ (accessed on 29 June 2018).

- Quintet. Available online: http://housemate.ie/quintet/ (accessed on 29 June 2019).

- Jamboxx Pro. Available online: https://www.jamboxx.com/ (accessed on 29 June 2019).

- BioControl Systems. Available online: http://www.biocontrol.com/products.html (accessed on 29 June 2019).

- GroovTube. Available online: http://housemate.ie/groovtube/ (accessed on 29 June 2019).

- Samuels, K. The Meanings in Making: Openness, Technology and Inclusive Music Practices for People with Disabilities. Leonardo Music J. 2015, 25, 25–29.

- Fiebrink, R.; Cook, P.R. The Wekinator: A System for Real-Time, Interactive Machine Learning in Music. In Proceedings of the Eleventh International Society for Music Information Retrieval Conference (ISMIR 2010), Utrecht, The Netherlands, 9–13 August 2010.

- Katan, S.; Grierson, M.; Fiebrink, R. Using Interactive Machine Learning to Support Interface Development through Workshops with Disabled People. In Proceedings of the ACM CHI Conference on Human Factors in Computing Systems, Seoul, South Korea, 18–23 April 2015; pp. 251–254.

- Cambridge Dictionary. Available online: https://dictionary.cambridge.org/dictionary/english/diversity (accessed on 29 June 2019).

- Machover, T. Shaping Minds Musically. BT Technol. J. 2004, 22, 171–179.

- Hayes, L. Sound, Electronics, and Music: A Radical and Hopeful Experiment in Early Music Education. Comput. Music J. 2017, 41, 36–49.

- Webster, P.R. Young Children and Music Technology. Res. Stud. Music Educ. 1998, 11, 61–76.

- Frid, E. Accessible Digital Musical Instruments: A Survey of Inclusive Instruments Presented at the NIME, SMC and ICMC Conferences. In Proceedings of the International Computer Music Conference 2018, Daegu, South Korea, 5–10 August 2018; pp. 53–59.

- Larsen, J.V.; Overholt, D.; Moeslund, T.B. The Prospects of Musical Instruments for People with Physical Disabilities. In Proceedings of the International Conference on New Interfaces for Musical Expression, Brisbane, Australia, 11–15 July 2016; pp. 327–331.

- Graham-Knight, K.; Tzanetakis, G. Adaptive Music Technology: History and Future Perspectives. In Proceedings of the International Computer Music Conference, Denton, TX, USA, 25 September–1 October 2015; pp. 416–419.

- Farrimond, B.; Gillard, D.; Bott, D.; Lonie, D. Engagement with Technology in Special Educational & Disabled Music Settings. Youth Music Report. December 2011, pp. 1–40. Available online: https://network.youthmusic.org.uk/file/5694/download?token=I-1K0qhQ (accessed on 29 June 2019).

- Ward, A.; Woodbury, L.; Davis, T. Design Considerations for Instruments for Users with Complex Needs in SEN Settings. In Proceedings of the New Interfaces for Musical Expression, Copenhagen, Denmark, 15–18 May 2017; Bournemouth University: Fern Barrow, Poole, Dorset, UK, 2017.

- Moog, R. The Musician: Alive and Well in the World of Electronics. In The Biology of Music Making: Proceedings of the 1984 Denver Conference; MMB Music, Inc.: St Louis, MO, USA, 1988; pp. 214–220.

- Pressing, J. Cybernetic Issues in Interactive Performance Systems. Comput. Music J. 1990, 14, 12–25.

- Hunt, A.; Wanderley, M.M.; Paradis, M. The Importance of Parameter Mapping in Electronic Instrument Design. J. New Music Res. 2003, 32, 429–440.

- Miranda, E.R.; Wanderley, M.M. Musical Gestures: Acquisition and Mapping. In New Digital Instruments: Control and Interaction beyond the Keyboard; A-R Editions, Inc.: Middleton, WI, USA, 2006; p. 3.

- Hunt, A.; Wanderley, M.M. Mapping Performer Parameters to Synthesis Engines. Organ. Sound 2002, 7, 97–108.

- Correa, A.G.D.; Ficheman, I.K.; do Nascimento, M.; de Deus Lopes, R. Computer Assisted Music Therapy: A Case Study of an Augmented Reality Musical System for Children with Cerebral Palsy Rehabilitation. In Proceedings of the Ninth IEEE International Conference on Advanced Learning Technologies, 2009 (ICALT 2009), Riga, Latvia, 15–17 July 2009; pp. 218–220.

- Kirk, R.; Abbotson, M.; Abbotson, R.; Hunt, A.; Cleaton, A. Computer Music in the Service of Music Therapy: The MIDIGRID and MIDICREATOR Systems. Med. Eng. Phys. 1994, 16, 253–258.

- Krout, R.; Burnham, A.; Moorman, S. Computer and Electronic Music Applications with Students in Special Education: From Program Proposal to Progress Evaluation. Music Ther. Perspect. 1993, 11, 28–31.

- Spitzer, S. Computers and Music Therapy: An Integrated Approach: Four Case Studies. Music Ther. Perspect. 1989, 7, 51–54.

- Vamvakousis, Z.; Ramirez, R. The EyeHarp: A gaze-controlled digital musical instrument. Front. Psychol. 2016, 7, 906.

- Lubet, A. Music, Disability, and Society; Temple University Press: Philadelphia, PA, USA, 2011.

- Magee, W.L. Music Technology in Therapeutic and Health Settings; Jessica Kingsley Publishers: London, UK; Philadelphia, PA, USA, 2014.

- Cappelen, B.; Andersson, A.P. Designing four generations of ‘Musicking Tangibles’. In Music, Health, Technology and Design; NMH-Publications: Oslo, Norway, 2014.

- Challis, B. Octonic: An Accessible Electronic Musical Instrument. Digit. Creat. 2011, 22, 1–12.

- Harrison, J.; McPherson, A.P. Adapting the Bass Guitar for One-Handed Playing. J. New Music Res. 2017, 46, 270–285.

- Fawcett, S.B.; White, G.W.; Balcazar, F.E.; Suarez-Balcazar, Y.; Mathews, R.M.; Paine-Andrews, A.; Seekins, T.; Smith, J.F. A Contextual-Behavioral Model of Empowerment: Case Studies Involving People with Physical Disabilities. Am. J. Community Psychol. 1994, 22, 471–496.

- Rolvsjord, R. Therapy as Empowerment: Clinical and Political Implications of Empowerment Philosophy in Mental Health Practises of Music Therapy. Nord. J. Music Ther. 2004, 13, 99–111.

- Leman, M.; Maes, P.J. The Role of Embodiment in the Perception of Music. Empir. Musicol. Rev. 2015, 9, 236–246.

- Godøy, R.I.; Leman, M. Musical Gestures: Sound, Movement, and Meaning; Routledge: Abingdon, UK, 2010.

- Partesotti, E.; Peñalba, A.; Manzolli, J. Digital Instruments and their Uses in Music Therapy. Nord. J. Music Ther. 2018, 27, 399–418.

- Anderson, T.; Smith, C. Composability: Widening Participation in Music Making for People with Disabilities via Music Software and Controller Solutions. In Proceedings of the 2nd Annual ACM Conference on Assistive Technologies, Vancouver, BC, Canada, 11–12 April 1996; ACM: New York, NY, USA, 1996; pp. 110–116.

- Moog, R.A. MIDI: Musical Instrument Digital Interface. J. Audio Eng. Soc. 1986, 34, 394–404.

- McPherson, A.; Morreale, F.; Harrison, J. Musical Instruments for Novices: Comparing NIME, HCI and Crowdfunding Approaches. In New Directions in Music and Human-Computer Interaction; Holland, S., Mudd, T., Wilkie-McKenna, K., McPherson, A., Wanderley, M.M., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 179–212.

- Hahna, N.D.; Hadley, S.; Miller, V.H.; Bonaventura, M. Music Technology Usage in Music Therapy: A Survey of Practice. Arts Psychother. 2012, 39, 456–464.

- Magee, W.L.; Burland, K. An Exploratory Study of the Use of Electronic Music Technologies in Clinical Music Therapy. Nord. J. Music Ther. 2008, 17, 124–141.

- Whitehead-Pleaux, A.M.; Clark, S.L.; Spall, L.E. Indications and Counterindications for Electronic Music Technologies in a Pediatric Medical Setting. Music Med. 2011, 3, 154–162.

- Crowe, B.J.; Rio, R. Implications of Technology in Music Therapy Practice and Research for Music Therapy Education: A Review of Literature. J. Music Ther. 2004, 41, 282–320.

- Snoezelen Multi-Sensory Environments. Available online: http://www.snoezelen.info/ (accessed on 29 June 2019).

- Machover, T. Hyperinstrument: Musically Intelligent and Interactive Performance and Creativity Systems. In Proceedings of the 1989 International Computer Music Conference, Ann Arbor, MI, USA, 1–6 October 1989; pp. 186–190.

- Overholt, D.; Berdahl, E.; Hamilton, R. Advancements in Actuated Musical Instruments. Organ. Sound 2011, 16, 154–165.

- Hattwick, I.; Malloch, J.; Wanderley, M.M. Forming Shapes to Bodies: Design for Manufacturing in the Prosthetic Instruments. In Proceedings of the International New Interfaces for Musical Expression Conference, London, UK, 30 June–4 July 2014; pp. 443–448.

- Spiegel, L. Music Mouse. Available online: http://retiary.org/ls/programs.html (accessed on 29 June 2019).

- Jense, A.; Leeuw, H. WamBam: A Case Study in Design for an Electronic Musical Instrument for Severely Intellectually Disabled Users. In Proceedings of the International Conference on New Interfaces for Musical Expression, Baton Rouge, LA, USA, 31 May–3 June 2015; pp. 74–77.

- Gumtau, S.; Newland, P.; Creed, C. MEDIATE: A Responsive Environment Designed for Children with Autism. In Proceedings of the Accessible Design in a Digital World, Dundee, Scotland, 23–25 August 2005.

- Knapp, R.B.; Lusted, H.S. A Bioelectric Controller for Computer Music Applications. Comput. Music J. 1990, 14, 42–47.

- Harrison, J.; McPherson, A. An Adapted Bass Guitar for One-Handed Playing. In Proceedings of the International Conference on New Interfaces for Musical Expression, Aalborg, Denmark, 15–18 May 2017; Aalborg University Copenhagen: Copenhagen, Denmark, 2017; pp. 507–508.

- Wilde, D. Extending Body and Imagination: Moving to Move. Int. J. Disabil. Hum. Dev. 2011, 10, 31–36.

- Gehlhaar, R.; Rodrigues, P.M.; Girão, L.M.; Penha, R. Instruments for Everyone: Designing New Means of Musical Expression for Disabled Creators. In Technologies of Inclusive Well-Being; Springer: Heidelberg, Germany, 2014; pp. 167–196.

- Ellis, P. Vibroacoustic Sound Therapy: Case Studies with Children with Profound and Multiple Learning Difficulties and the Elderly in Long-Term Residential Care. Stud. Health Technol. Inform. 2004, 103, 36–42.

- Favilla, S.; Pedell, S. Touch Screen Collaborative Music: Designing NIME for Older People with Dementia. Environment 2014, 20, 27.

- Meckin, D.; Bryan-Kinns, N. MoosikMasheens: Music, Motion and Narrative with Young People Who Have Complex Needs. In Proceedings of the 12th International Conference on Interaction Design and Children, New York, NY, USA, 24–27 June 2013; ACM: New York, NY, USA, 2013; pp. 66–73.

- Søderberg, E.A.; Odgaard, R.E.; Bitsch, S.; Høeg-Jensen, O.; Schildt, N.; Christensen, S.D.P.; Gelineck, S. Music Aid-Towards a Collaborative Experience for Deaf and Hearing People in Creating Music. In Proceedings of the International Conference on New Interfaces for Musical Expression, Brisbane, Australia, 11–15 July 2016.

- Miranda, E.R.; Boskamp, B. Steering Generative Rules with the EEG: An Approach to Brain-Computer Music Interfacing. In Proceedings of the Sound and Music Computing Conference, Salerno, Italy, 24–25 November 2005; Volume 5.

- Aziz, A.D.; Warren, C.; Bursk, H.; Follmer, S. The Flote: An Instrument for People with Limited Mobility. In Proceedings of the 10th International ACM SIGACCESS Conference on Computers and Accessibility, Halifax, NS, Canada, 13–15 October 2008; ACM: New York, NY, USA, 2008; pp. 295–296.

- Almeida, A.P.; Girão, L.M.; Gehlhaar, R.; Rodrigues, P.M.; Rodrigues, H.; Neto, P.; Mónica, M. Sound = Space Opera: Choreographing Life within an Interactive Musical Environment. Int. J. Disabil. Hum. Dev. 2011, 10, 49–53.

- MidiGrid. Available online: http://midigrid.com/ (accessed on 29 June 2019).

- Bresin, R.; Elblaus, L.; Frid, E.; Favero, F.; Annersten, L.; Berner, D.; Morreale, F. Sound Forest/Ljudskogen: A Large-Scale String-Based Interactive Musical Instrument. In Proceedings of the Sound and Music Computing Conference, Hamburg, Germany, 31 August–3 September 2016; pp. 79–84.

- Wilde, D. The hipdiskettes: Learning (through) Wearables. In Proceedings of the 20th Australasian Conference on Computer-Human Interaction: Designing for Habitus and Habitat, Cairns, Australia, 8–12 January 2008; pp. 259–262.

- Leap Motion. Available online: https://www.leapmotion.com/ (accessed on 29 June 2019).

- Benveniste, S.; Jouvelot, A.P.; Lecourt, A.E.; Michel, A.R. Designing Wiimprovisation for Mediation in Group Music Therapy with Children Suffering from Behavioral Disorders. In Proceedings of the International Conference on Interaction Design and Children, Milan, Italy, 3–5 June 2009; pp. 18–26.

- Kirwan, N.J.; Overholt, D.; Erkut, C. Bean: A Digital Musical Instrument for Use in Music Therapy. In Proceedings of the Sound and Music Computing Conference, Salerno, Italy, 24–26 November 2015; pp. 49–54.

- Rigler, J.; Seldess, Z. The Music Cre8tor: An Interactive System for Musical Exploration and Education. In Proceedings of the International Conference on New Interfaces for Musical Expression, New York, NY, USA, 6–10 June 2007; ACM: New York, NY, USA, 2007; pp. 415–416.

- Cappelen, B.; Andersson, A.P. Embodied and distributed parallel DJing. Stud. Health Technol. Inform. 2016, 229, 528–539.

- Matossian, V.; Gehlhaar, R. Human Instruments: Accessible Musical Instruments for People with Varied Physical Ability. Annu. Rev. Cyberther. Telemed. 2015, 13, 200–205.

- Mann, S.; Fung, J.; Garten, A. Deconcert: Bathing in the Light, Sound, and Waters of the Musical Brainbaths. In Proceedings of the International Computer Music Conference, Copenhagen, Denmark, 27–31 August 2007.

- Zubrycka, J.; Cyrta, P. Morimo. Tactile Sound Aesthetic Experience. In Proceedings of the International Computer Music Conference, Ljubljana, Slovenia, 9–15 September 2012; pp. 423–425.

- Miranda, E.R.; Magee, W.L.; Wilson, J.J.; Eaton, J.; Palaniappan, R. Brain-Computer Music Interfacing (BCMI): From Basic Research to the Real World of Special Needs. Music Med. 2011, 3, 134–140.

- Riley, P.; Alm, N.; Newell, A. An Interactive Tool to Promote Musical Creativity in People with Dementia. Comput. Hum. Behav. 2009, 25, 599–608.

- Eaton, J.; Williams, D.; Miranda, E. The Space Between Us: Evaluating a Multi-User Affective Brain-Computer Music Interface. Brain-Comput. Interfaces 2015, 2, 103–116.

- O’Modhrain, S. A Framework for the Evaluation of Digital Musical Instruments. Comput. Music J. 2011, 35, 28–42.

- Wanderley, M.M.; Orio, N. Evaluation of Input Devices for Musical Expression: Borrowing Tools from HCI. Comput. Music J. 2002, 26, 62–76.

- Hunt, A.; Kirk, R. MidiGrid: Past, Present and Future. In Proceedings of the 2003 Conference on New Interfaces for Musical Expression, Montreal, QC, Canada, 22–24 May 2003; pp. 135–139.

- Hunt, A.; Kirk, R.; Neighbour, M. Multiple Media Interfaces for Music Therapy. IEEE Multimedia 2004, 11, 50–58.

- Hunt, A.; Kirk, R.; Abbotson, M.; Abbotson, R. Music Therapy and Electronic Technology. In Proceedings of the 26th Euromicro Conference. Informatics: Inventing the Future, Maastricht, The Netherlands, 5–7 September 2000; Volume 2, pp. 362–367.

- Medeiros, C.B.; Wanderley, M.M. A Comprehensive Review of Sensors and Instrumentation Methods in Devices for Musical Expression. Sensors 2014, 14, 13556–13591.

- McCord, K. Children with Special Needs Compose Using Music Technology. J. Technol. Music Learn. 2002, 1, 3–14.

- Slevin, E.; McClelland, A. Multisensory Environments: Are they Therapeutic? A Single-Subject Evaluation of the Clinical Effectiveness of a Multisensory Environment. J. Clin. Nurs. 1999, 8, 48–56.

- Sánchez, A.; Maseda, A.; Marante-Moar, M.P.; de Labra, C.; Lorenzo-López, L.; Millán-Calenti, J.C. Comparing the Effects of Multisensory Stimulation and Individualized Music Sessions on Elderly People with Severe Dementia: A Randomized Controlled Trial. J. Alzheimers Dis. 2016, 52, 303–315.

- Schofield, P. The Effects of Snoezelen on Chronic Pain. Nurs. Stand. 2000, 15, 33–34.

- Baillon, S.; van Diepen, E.; Prettyman, R. Multisensory Therapy in Psychiatric Care. Adv. Psychiatr. Treat. 2002, 8, 444–450.

- Dobrian, C.; Koppelman, D. The ‘E’ in NIME: Musical Expression with New Computer Interfaces. In Proceedings of the International Conference on New Interfaces for Musical Expression, Paris, France, 4–8 June 2006; pp. 277–282.

- Bergsland, A.; Wechsler, R. Composing Interactive Dance Pieces for the Motioncomposer, a Device for Persons with Disabilities. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME2015), Baton Rouge, LA, USA, 31 May–3 June 2015; pp. 20–23.

| | Concept 1 | Concept 2 | Concept 3 |
|---|---|---|---|
| Key concepts | musical instrument | accessibility | disability |
| Free text terms | digital musical instrument | adaptive | health |
| | new interface for musical expression | adapted | need |
| | musical interface | assistive | impairment |
| | control interface | inclusion | therapy |
| | | empowerment | disorder |
| Search phrases | “music* instrument*” | “accessib*” | “disab*” |
| | “digital music* instrument*” | “adaptive” | “health” |
| | “new interface* for musical expression” | “adapted” | “need*” |
| | “music* interface*” | “assistive” | “impair*” |
| | “control interface*” | “inclus*” | “therap*” |
| | | “empower*” | “disorder*” |
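
A concept table of this kind is commonly turned into a database query by joining the phrases within each concept with OR and joining the concepts with AND. The table does not state how the phrases were combined, so that combination is an assumption here, and the exact operator and wildcard syntax varies between databases. The sketch below, with the hypothetical helper names `or_group` and `build_query`, shows the idea in Python using the search phrases listed above.

```python
# Search phrases copied from the table above, grouped by concept.
CONCEPTS = {
    "musical instrument": [
        "music* instrument*",
        "digital music* instrument*",
        "new interface* for musical expression",
        "music* interface*",
        "control interface*",
    ],
    "accessibility": [
        "accessib*", "adaptive", "adapted", "assistive", "inclus*", "empower*",
    ],
    "disability": [
        "disab*", "health", "need*", "impair*", "therap*", "disorder*",
    ],
}


def or_group(phrases):
    """Quote each phrase and join the phrases of one concept with OR."""
    return "(" + " OR ".join(f'"{phrase}"' for phrase in phrases) + ")"


def build_query(concepts):
    """Join the per-concept OR groups with AND (assumed combination)."""
    return " AND ".join(or_group(phrases) for phrases in concepts.values())


query = build_query(CONCEPTS)
print(query)
```

Under this assumption, a record matches only if it contains at least one phrase from each of the three concepts.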

| Category | Publications | Percentage |
|---|---|---|
| Conference Proceedings | 60 | 53.1% |
| Journal Articles | 37 | 32.7% |
| Book Chapters | 13 | 11.5% |
| PhD Theses | 3 | 2.7% |
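
The percentages in the publication table follow directly from the counts, which sum to 113 publications. As a quick arithmetic check (a sketch, with the variable names chosen here for illustration), each share is the count divided by the total, rounded to one decimal place:

```python
# Publication counts per category, copied from the table above.
counts = {
    "Conference Proceedings": 60,
    "Journal Articles": 37,
    "Book Chapters": 13,
    "PhD Theses": 3,
}

total = sum(counts.values())  # 60 + 37 + 13 + 3

# Share of each category in percent, rounded to one decimal place.
shares = {
    category: round(100 * count / total, 1)
    for category, count in counts.items()
}

for category, share in shares.items():
    print(f"{category}: {counts[category]} of {total} = {share}%")
```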

| Interface Category | Total | ADMI Example |
|---|---|---|
| Tangible | 30 | WamBam, an electronic hand-drum for music therapy sessions with persons with severe intellectual disabilities [65]. |
| Touchless | 20 | MEDIATE, a multisensory environment designed as an interface between autistic and typical expression, using infrared cameras fed to the EyesWeb system to produce interactive output [66]. |
| BCMIs | 9 | Biomuse, a “biocontroller” that can be used to augment normal musical instrument performance or as a computer interface for musical composition and performance. It could be used by paralyzed and movement-impaired individuals as a means to regain the pleasures of music-making [67]. |
| Adapted Instruments | 8 | A modification of the electric bass guitar, designed for users with upper-limb disabilities. It enables MIDI-controlled actuated fretting via a foot pedal [68]. |
| Wearable/prosthetic | 5 | HipDisk, a disk-shaped body-worn device designed to inspire people to swing their hips and explore their full range of movement through a simultaneous, interdependent exploration of sound [69]. |
| Mouth-operated | 3 | Doosafon, a mouth- and head-operated device similar to a xylophone, played with a lightweight baton held in the mouth and linked to a breath sensor [70]. |
| Audio | 2 | A standard digital sound processor and microphone intended for vocal interaction, used together with a vibroacoustic chair producing vibrations based on the interaction [71]. |
| Gaze | 2 | The EyeHarp, a gaze-controlled DMI that aims to enable people with severe motor disabilities to learn, perform, and compose music using their gaze as the control mechanism [42]. |
| Touchscreen | 2 | A collaborative music system based on a touchscreen controller, designed for persons with dementia [72]. |
| Mouse-controlled | 2 | The MidiGrid system, allowing a screen cursor (computer mouse) to be passed over cells so that the musical content of the selected cells is played out on a synthesizer attached to the computer via MIDI [39]. |

| Sensor Type | Occurrences |
|---|---|
| Touch | 14 |
| Accelerometer | 13 |
| Camera | 13 |
| Microphone | 10 |
| Button | 9 |
| Pressure | 8 |
| Switch | 7 |
| Ultrasonic | 8 |
| Piezo | 7 |
| Electrodes (for EEG) | 6 |
| Bend | 5 |
| Breath | 4 |
| Computer mouse/trackball | 3 |
| RFID reader | 4 |
| Infrared | 3 |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Frid, E. Accessible Digital Musical Instruments—A Review of Musical Interfaces in Inclusive Music Practice. Multimodal Technol. Interact. 2019, 3, 57. https://doi.org/10.3390/mti3030057
