1. Introduction

Wearable technologies are small smart devices that are attached to or incorporated into body-worn accessories or pieces of clothing to provide convenient access to information [1]. Wearables are often lightweight, accessible when in motion, and facilitate control over data exchange and communication [2]. This recent trend is not merely perceived as a technological innovation; it also has a fashion component that can influence how and when wearables are worn [3]. The benefits of wearable technology can significantly impact societies and businesses because wearables support applications that promote self-care, activity recognition, and self-quantification [2,4]. For instance, using wearables to track convulsive seizures could improve the quality of life of epileptic patients by alerting caregivers [5]. Wearables also promote self-care by monitoring user activity and encouraging healthier behaviours and habits [6]. Another benefit of wearable technology is its assistive capability within healthcare establishments, which can improve the success rates of medical procedures and the safety of patients [7]. Within business, wearables can be used to train employees, improve customer satisfaction, and promote real-time access to information about goods and materials [8].

Among wearable technologies, the smartwatch stands out as being well understood due to its similarity to a traditional watch. In fact, the last few years have seen an increase in the popularity of commercial smartwatches. Recent statistics show that the sales of smartwatches have increased from approximately 5 million units in 2014 to a staggering 75 million units in 2017 [9]. These numbers, which are projected to double in 2018, signify a level of smartwatch adoption that heralds smartwatches as the next dominant computing paradigm. As one of the latest developments in the evolution of information technology, the smartwatch offers its user a remarkable level of convenience, as it swiftly and discreetly delivers timely information with minimal interference or intrusion compared with smartphones and other mobile devices [10,11,12].

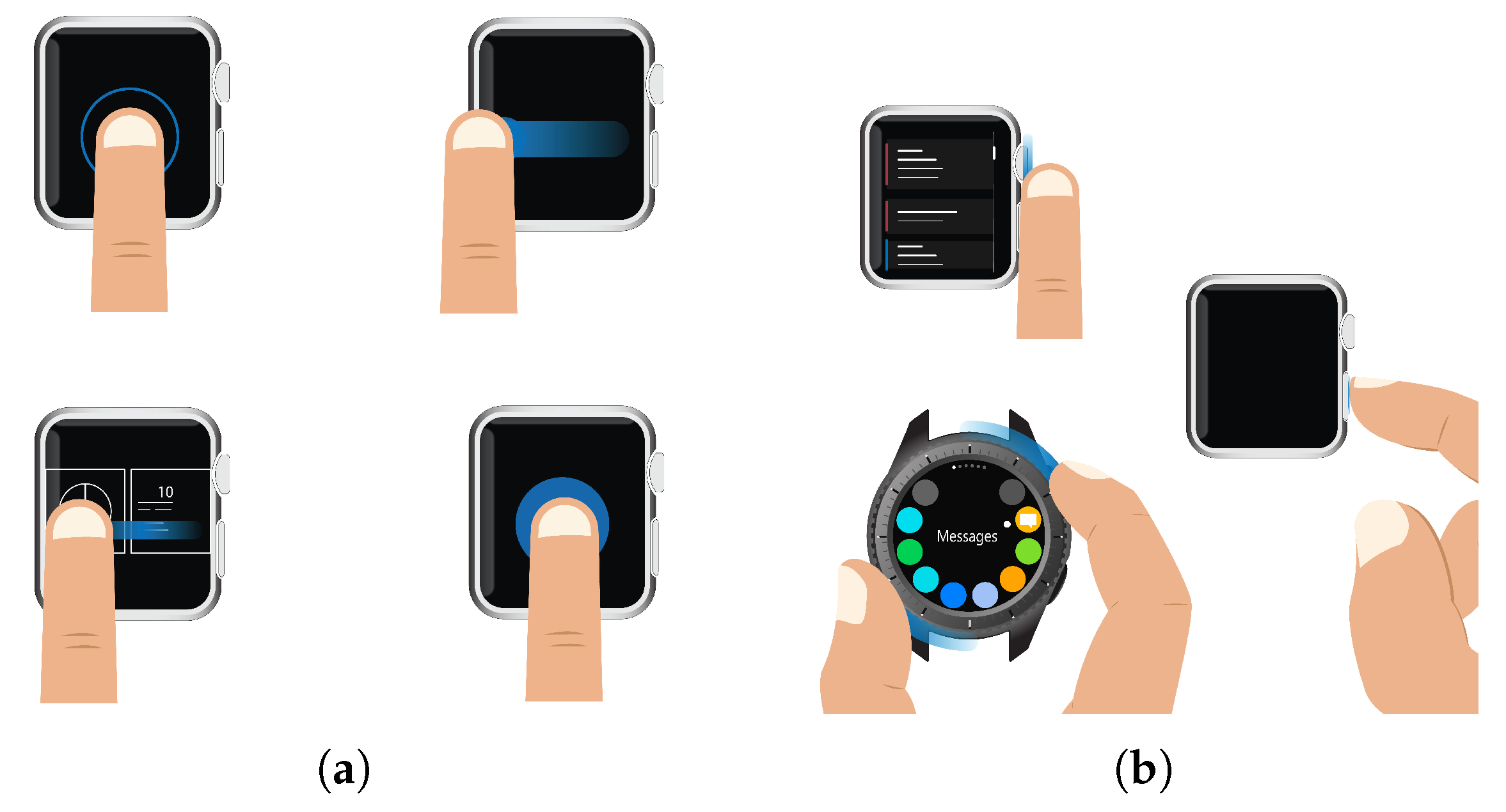

Much like a traditional watch, a smartwatch usually has a touchscreen and side buttons, through which user interaction is achieved. While these interaction modalities are similar to what is seen on a smartphone, the smartwatch provides a smaller touchscreen interaction space and requires bimanual interaction (i.e., the user’s dominant hand is used to asymmetrically interact with the watch strapped to the non-dominant hand [13]). There has been a wealth of research addressing the potential capabilities and constraints of smartwatches. Several works have been carried out to gain an understanding of the use of smartwatches in daily activities (e.g., [12,14,15,16]), and even more work has investigated the mechanics of touch interactions on small devices (e.g., [17,18,19]). The interface design space of smartwatches has also received its share of research attention (e.g., [20,21,22]). The usability issues of smartwatches have recently been examined (e.g., [23,24]), as has smartwatch acceptance (e.g., [25,26]). However, little effort has been made to assess smartwatch users’ performance a priori to aid in the design of smartwatch applications.

Predictive models are commonly used in human–computer interaction (HCI) to analytically measure human performance and thus evaluate the usability of low-fidelity prototypes. The Goals, Operators, Methods, and Selection rules (GOMS) model encompasses a family of techniques that are used to describe the procedural knowledge that a user must have in order to operate a system, of which the keystroke-level model (KLM) is one of the simplest forms [27,28]. The KLM numerically predicts execution times for typical task scenarios in a conventional environment. The KLM was initially developed to model desktop systems with a mouse and keyboard as input devices, but it has since been extended to model new user interaction paradigms [27]. Several enhancements to the KLM have been applied to model touchscreen interactions with smartphones and tablets (e.g., [29,30]). The KLM has also been extended to in-vehicle information systems (IVISs), in which interaction often involves rotating knobs and pressing buttons (e.g., [31,32]). More recently, the KLM has been modified to address the expressive interactions used to engage with natural user interfaces (NUIs) [33]. Such modifications to the KLM have often proven valuable to the development process, as they reduce the cost of usability testing and help to identify problems early on.

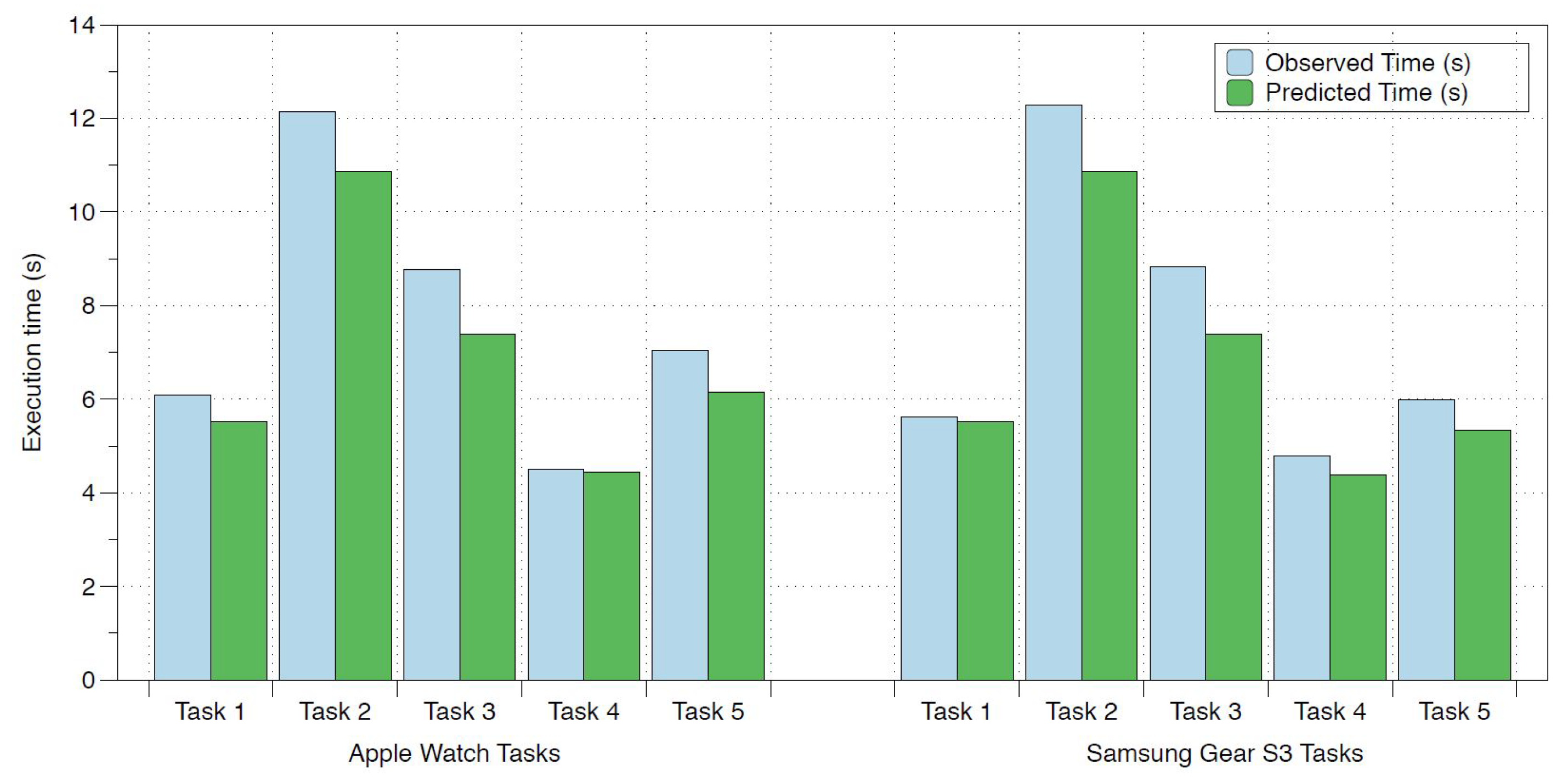

To support future smartwatch development, this paper presents a pragmatic solution for designing and assessing smartwatch applications by building upon the well-established original KLM [27]. The main contributions of this paper are threefold. First, a revised KLM for smartwatch interaction, tentatively named Watch KLM, is introduced based on observations of participants’ interactions with two types of smartwatches. The new model comprises fourteen operators that are either newly introduced, inherited, or retained from the original KLM [27]. Second, the unit time for each of these operators is revised to reflect the particularities of smartwatch interactions due to the smaller touchscreen and bimanual interaction mode. Third, the revised model’s predictions are realistically validated by assessing its prediction performance in comparison with observed execution times. The results validate the model’s ability to accurately assess the performance of smartwatch users with a small average error.

The remainder of this paper is organised as follows. Section 2 presents the background on the KLM, introducing the original model and its seminal related publications. Section 3 reviews modifications to the original KLM across various application domains. Section 4 describes the first experimental study, which was conducted to elicit revised operators for the modified KLM for smartwatch interaction. In Section 5, the second study is described, based on which the unit times for the revised operators are computed. The third study, conducted to validate the revised model for smartwatch interaction and to assess its efficacy at predicting task execution times, is reported in Section 6. Finally, Section 7 summarises and concludes the paper and briefly discusses future work.

2. Keystroke-Level Model

The KLM is considered to be one of the simpler variants of the GOMS family of techniques [28]. Unlike the other models in this family, the KLM calculates only the time it is expected to take an expert user to complete a task without errors in a conventional set-up. This calculation is based on the underlying assumption that the user employs a series of small and independent unit tasks; this assumption supports the segmentation of larger tasks into manageable units. In the KLM, the unit tasks are expressed in terms of a set of physical, mental, and system response operations. Each operator is identified by an alphabetical symbol and is assigned a unit time that is used in the calculation of a task’s execution time (see Table 1). The KLM comprises six operations:

Keystroking (K): The action of pressing a key, i.e., a keystroke, or pressing a button.

Pointing (P): The action of pointing to a target on a display with the mouse.

Homing (H): The action of moving the hand between the keyboard and mouse or performing any fine hand adjustment on either device.

Drawing (D): The action of manually drawing a set of straight line segments within a constrained 0.56 cm grid using the mouse.

Mental action (M): A mental operation that reflects the time it takes a user to mentally prepare for an action.

Response time (R): The system response time to a user’s action.

The mental action operator is unobservable but comprises a substantial fraction of a predicted execution time due to its representation of the time it takes a user to prepare to perform or think about performing an action. The placement of the mental operator is therefore governed by a set of heuristic rules that consider cognitive preparation. These rules are as follows:

Insert a mental operator in front of every keystroking operator. Also, place a mental operator in front of every pointing operator used to select a command.

Remove any mental operator that appears between two operators anticipated to appear next to each other.

Remove all mental operators except the first that belong to the same cognitive unit, where a cognitive unit is a premeditated chunk of cognitive activity.

Remove all mental operators that precede consecutive terminators.

Remove all mental operators that precede terminators of commands.

Once a series of physical and system response operations has been identified for a unit task and the placement of the mental operations has been determined, the KLM calculates the execution time of said task by summing the operators’ unit times:

$$T_{\text{execute}} = T_K + T_P + T_H + T_D + T_M + T_R$$

where $T_{OP}$ denotes the total time for a single operation; for example, $T_P = n_P \times t_P$, where $n_P$ is the number of pointing actions and $t_P$ is the duration of each pointing action.

To illustrate how the KLM’s equation and rules can be applied to predict user performance, consider the following example of a user renaming a folder to ‘test’ on a desktop. The user homes the hand on the mouse, H; points the mouse cursor at the object, P; double-clicks on the folder icon to allow for renaming, KK; homes the hands on the keyboard, H; keys the new name ‘test’, KKKK; and presses Enter, K. The KLM model without M and R (assuming an instantaneous response from the system) is HPKKHKKKKK. Applying the heuristic rules for placing the M operators results in the final model MHPKKHMKKKKK, where the first M is the time spent by the user searching for the folder on the computer display, and the second M is the time the user requires to mentally prepare for typing. Therefore, in terms of the operators’ unit times $t_M$, $t_H$, $t_P$, and $t_K$:

$$T_{\text{execute}} = 2t_M + 2t_H + t_P + 7t_K$$
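The tally for the operator string MHPKKHMKKKKK can be sketched in a few lines of Python. This is a minimal illustration rather than the paper's procedure: the unit times below are assumptions, taken from values commonly cited for the original KLM (with K set for an average skilled typist) and not from Table 1, and the names `UNIT_TIMES` and `klm_execution_time` are hypothetical.

```python
from collections import Counter

# Assumed unit times in seconds. These are commonly cited values for the
# original KLM (K for an average skilled typist); they are NOT read from
# Table 1 of this paper and should be replaced with the values in use.
UNIT_TIMES = {"K": 0.20, "P": 1.10, "H": 0.40, "M": 1.35}


def klm_execution_time(sequence, unit_times=UNIT_TIMES):
    """Sum n_OP * t_OP over every operator symbol in the sequence."""
    counts = Counter(sequence)
    return sum(n * unit_times[op] for op, n in counts.items())


sequence = "MHPKKHMKKKKK"  # folder-renaming example, R assumed to be zero
counts = Counter(sequence)
print(dict(counts))  # operator counts: 2 M, 2 H, 1 P, 7 K
print(round(klm_execution_time(sequence), 2))  # 6.0 with the assumed times
```

With these assumed unit times, the prediction is 2(1.35) + 2(0.40) + 1.10 + 7(0.20) = 6.0 s; a different typing skill level or a non-zero system response time would change the total.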

The performance of the KLM has been validated against observed values to determine its efficacy in predicting execution times. For this validation, the KLM was used to model typical tasks in various systems: executive subsystems and text and graphics editors. Expert users were asked to execute task scenarios to capture observed performances that were logged for comparison. The model’s predictions were assessed in comparison with the observed values, and the root mean square percentage error (RMSPE) was calculated to be 21%. This level of accuracy is reported to be the best that can be expected from the KLM and is comparable to the values of 20–30% obtained with more elaborate models [28].
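As a rough illustration of how such an error figure can be computed, the sketch below uses one common definition of the RMSPE, in which each error is normalised by the corresponding observed time. The section does not state the exact formula used in the validation, so this definition is an assumption, and the task times in the example are hypothetical rather than data from any study.

```python
import math


def rmspe(predicted, observed):
    """Root mean square percentage error, in percent.

    Assumed definition: sqrt(mean(((p - o) / o) ** 2)) * 100, with each
    error normalised by the observed value.
    """
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [((p - o) / o) ** 2 for p, o in zip(predicted, observed)]
    return 100.0 * math.sqrt(sum(squared) / len(squared))


# Hypothetical predicted and observed task times in seconds.
predicted = [6.0, 12.0, 9.0]
observed = [5.0, 15.0, 10.0]
print(f"RMSPE = {rmspe(predicted, observed):.1f}%")
```

Other normalisations exist, for example dividing the root mean square error by the mean predicted time, and they can yield noticeably different percentages for the same data.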

3. Related Work

Usability is defined as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” [34]. There exist several methodologies that could be utilised to assess the usability of an interactive system or product. Usability tests can be categorised as expert-based (e.g., heuristic evaluation, cognitive walkthrough, and cognitive modelling) or user-based (e.g., lab-based testing with users) methods, each of which introduces benefits and limitations to the usability evaluation of an interactive system. Several advantages of wearable technology were outlined earlier; despite the potential of wearables as the next generation of core products in the IT industry, their commercial adoption has been slower than anticipated. To support the proliferation of wearables in the future, numerous studies have been conducted to identify the usability issues that arise from utilising wearable technology [2].

There have been numerous efforts to address and alleviate the usability issues of smartwatch usage (e.g., [23,24,35,36]). Usability evaluations were conducted to assess the usability of smartwatches in an academic setting, where a cheating paradigm was used as an example [36]. Quantitative and qualitative measures of effectiveness, efficiency, and usability were used to assess the ability of students to cheat using a smartwatch. The results suggest that smartwatches can promote academic dishonesty when they are appropriated in academic settings. A design approach for wrist-worn wearables, which integrates micro-interaction and multi-dimensional graphical user interfaces, was proposed to offer guidelines for overcoming the challenges of the paradigm shift in interaction [23]. The usability of smartwatch operations was qualitatively studied to identify issues that impact information display, control, learnability, interoperability, and subjective preference [24]. The findings highlighted several limitations of wearable devices, such as their poor display of visually rich content and their variable interoperability. These findings lend themselves as design suggestions for user interface form factors that can improve the usability of smartwatches. Despite these efforts, none of these studies attempts to estimate ideal base times that can be utilised for quantifying usability with low-fidelity prototypes (e.g., KLM [27]).

The emergence of new technologies, such as wearables, introduces new challenges in the design and development of computer systems. Consequently, a need arises for the revision or modification of conventional quality assessment models such as the KLM [27] for the purpose of usability quantification. Revised predictive models can help to evaluate human performance a priori and reduce the need for time- and resource-intensive human studies. The original KLM was developed to predict the performance of desktop users, i.e., in conventional set-ups, but it has continually been modified to model systems including various computing devices. The modifications made to the original KLM include the introduction of new operators, the adaptation of original operators or the adoption of operators from other KLM extensions, and the revision of heuristics or calculations. The rest of this section briefly reviews the literature on modified KLMs across different computing devices. Particular attention is paid to KLM extensions for touch-based smartphones due to the similarity of their interactions with those of smartwatches.

The earliest efforts made to extend the KLM focused on re-evaluating the original operators for applicability to new application domains or confirming its validity over time (e.g., [37,38,39,40,41,42,43,44]). The KLM was revised via a standardised four-dimensional methodology for the evaluation of text editors by introducing new operators and adapting those of the original model [37]. For measuring the performance of spreadsheet users, the KLM was first evaluated and later revised to consider cognition and extend its parameters [38]. Further modifications to the KLM were introduced to model skilled spreadsheet users’ performance on hierarchical menus; for this purpose, the placement of the mental action operator was revised to account for skill [40]. For hypertext systems, the operators of the original KLM were extensively adapted to consider information retrieval with varying levels of expertise and system complexity [41]. For the assessment of user performance when using a history tool, the original KLM was extensively updated with ten new operators to address motor, cognitive, and perceptual operations that are relevant to the context of command specification in such tools [43]. More recently, almost 30 years after its conception, the original model was revised to explore the naturalistic behaviours through which users interact with today’s computers [44]. Efforts have also been made to revise the KLM for the purpose of assessing the performance of users who are disabled (e.g., [39,42]). For instance, the KLM was extended for the performance assessment of users who are disabled when using a traditional set-up to access web content [42]. The operators in the revised model were expanded to include specific operations for shortcut access.

There has been a wealth of research on the modification of the KLM for the assessment of traditional and touchscreen IVIS designs prior to deployment (e.g., [31,32,45,46,47,48,49,50]). The value of the KLM in this domain lies in its ability to determine, simply and at a reduced cost, the time required to complete a task in order to assess the potential distraction it presents while driving. One of the earliest revisions of the KLM for traditional IVISs (i.e., with interaction via knobs and dials) involved adapting the model for the destination entry and retrieval tasks [49]. The original keystroke operation was extensively decomposed into finer, key-specific operations to increase the specificity of the model. To ensure that an IVIS is compliant with the 15 s rule for safe driving, a revised model was derived from the KLM that also considers adjustments for age [46]. The performance of a slightly revised KLM for IVIS designs was evaluated using an occlusion method that simulates driving behaviour (i.e., glancing between the road and the IVIS) for the purpose of determining domain-specific metrics and ensuring the observation of standards [31,47]. The performance of the modified model proved adequate, with significant correlations and low error rates. To support the creation of automotive interfaces, a predictive tangible prototyping tool (MI-AUI) was developed in which the unit times of operations are modified from the original KLM and used to estimate task completion time [48]. More recently, a revised version of the KLM [46] was further adapted for touch-based IVIS design by extending the considered set of operations to include gestures such as scrolling and dragging [50].

A large number of the studies concerned with extending the KLM to smartphone interactions consider its adaptation to text entry methods and predictive text (e.g., [51,52,53,54,55]). One of the earliest KLM extensions for text entry involved revising the original model for three text entry methods on a smartphone’s 9-key keyboard [51]. The revised model was used to predict typing speeds considering various methods of input. Another modification to the KLM utilised the adapted operations, Fitts’ law, and a Chinese language model to predict user performance with two types of input on a smartphone [52]. The KLM was also extended to measure multi-finger touchscreen keystrokes for the purpose of representing 1Line, a text entry method for Chinese text input [53]. Revisions to the KLM for text entry have not been limited to key input; another new model investigated the feasibility of a speech-based interface design for text messages [56]. The evaluations conducted to determine the effectiveness of the design choices indicated the usefulness of speech input. Modifications to the original KLM for smartphone interaction beyond the umbrella of text entry have also been considered. One of the earliest models to comprehensively consider key-based smartphones was the Mobile KLM, in which new operations were introduced to account for interactions with key-based smartphones [29]. This model considered interactions on and with the smartphone, e.g., key presses and gestures. The unit times were also analysed for each of the new and adapted operations. This model was later refined to consider near-field communication (NFC) and dynamic interfaces [57].

Over the past decade, touch-based smartphones have almost entirely replaced traditional smartphones, thus necessitating revised extensions to the KLM to properly assess this new paradigm of human interaction. While smartphones are dissimilar in size to smartwatches, the touch-based interactions with both types of devices are somewhat similar. One of the earliest proposed variants of the KLM for touch-based smartphone interactions considers stylus input [58]. In the new model, the generality of the mental action operations in the original KLM is reassessed, and the corresponding operator is split into five new mental operators for task initialisation, decision making, information retrieval, finding information and verifying input. The homing operator distinguishes between homing a stylus to a certain location and homing a finger. Other physical operations include tapping, picking up the stylus, opening a hidden keyboard, rotating the smartphone, pressing a side key, and plugging/unplugging a device to/from the smartphone. The proposed model also introduces the concept of an operator block (OB) to indicate a sequence of operations that are likely to be used together with high repeatability.

The touch-level model (TLM) is a revised version of the original KLM that incorporates interactions with modern touch-based devices, such as smartphones [59]. The new model retains several operators from the original KLM, namely, keystroking, homing, mental action, and response time, as they remain relevant for touch input. The rest of the KLM operators were either considered inapplicable to the context or retained within other operations. Several operators were also inherited or adapted from the Mobile KLM, including initial action and distraction [29]. Numerous new operators were also proposed to allow the new model to account for touchscreen interactions and gestures. These operators include gesturing, pinching, zooming, tapping, swiping, tilting, rotating, and dragging. While the model has the potential to be used for benchmarking touchscreen interactions on smartphones, the new operators currently have no baseline unit values and are yet to be validated. Three of the proposed operations in the TLM (tap, swipe, and zoom) were also reflected in a new model that modifies the KLM for touch-based tablets and smartphones [60]. Fitts’ law, a model that predicts the time it will take for a user to point to a target, was utilised in combination with this model to determine the unit execution times for each of the proposed operations using custom prototypes. Unit times were suggested for short swipes (0.07 s), zooming (0.2 s), and tapping on an icon from a home position (0.08 s). More accurate estimates are possible with the proposed predictive equations.
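For readers unfamiliar with Fitts’ law, the sketch below shows its widely used Shannon formulation, MT = a + b log2(D/W + 1), where D is the distance to the target and W is the target width. It is an assumption that this formulation matches the one used in [60]; the constants `a` and `b` are hypothetical placeholders that would normally be fitted by regression to measured pointing times.

```python
import math


def fitts_movement_time(distance, width, a, b):
    """Predicted pointing time (s) under the Shannon formulation.

    MT = a + b * log2(distance / width + 1); the log term is the index
    of difficulty in bits. distance and width share the same unit.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    index_of_difficulty = math.log2(distance / width + 1)
    return a + b * index_of_difficulty


# Hypothetical constants, not values reported in the literature.
a, b = 0.10, 0.15
# A target 7 units away and 1 unit wide has an index of difficulty of 3 bits.
print(round(fitts_movement_time(7, 1, a, b), 2))  # 0.55
```

Smaller or more distant targets raise the index of difficulty and therefore the predicted time, which is why target size matters on a small touchscreen.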

The fingerstroke-level model (FLM) is a revised version of the KLM for the assessment of mobile-based game applications on touch-based smartphones [61]. The proposed model adapts several operators from the original model for application to touch-based interactions, including tapping (adapted from keystroking), pointing, and dragging (adapted from drawing). The FLM retains the mental action and response time operators from the original KLM [27] and introduces a new flicking operator. For each of the new and adapted operators, unit times were determined through an experimental study to ensure accuracy with respect to touch interactions. Regression models were also devised for four of the operators (tapping, pointing, dragging, and flicking). The validity of the model for estimating execution times for mobile gaming was verified in an experimental scenario, in which its predictions were more accurate than those of the original KLM. An extension to the FLM, the Blind FLM, was later proposed for actions performed by smartphone users who are blind [62]. This model was similarly validated, resulting in a low root mean square error of 2.36.

Of the four models adapted from the KLM for touch-based interaction, the FLM [61] and the work of El Batran et al. [60] include unit times for each of the proposed operators. The FLM was the only model validated in experimental studies, in which it was found to be effective for modelling mobile gaming applications. Table 2 summarises the operators of the revised KLM models for touch-based interaction in relation to the original KLM. The FLM, the TLM, and El Batran’s model share tapping, mental action, and dragging with the original KLM. Swiping is similarly shared across these three adapted models but not with the original KLM. Tapping appears in all four models and was arguably adapted from the original keystroking operator. While the TLM comprehensively covers all possible touchscreen interactions, it lacks unit times and remains to be validated. For this reason, the revised KLM for smartwatch interaction will be discussed in relation to the FLM in the following sections.