1. Introduction

Mapping an archaeological site today can be accomplished with equipment of high spatial resolution and accuracy, such as laser scanners and Unmanned Aircraft Systems (UAS). The products generated (Digital Surface Models and/or orthophotomosaics) contain rich thematic information. To create and/or validate these products, it is essential to use additional instruments of high spatial accuracy in the field, such as Global Navigation Satellite System (GNSS) receivers [1,2,3,4].

In the case of UAS, the thematic information (completeness, resolution, and overall quality of the objects) of the images varies depending on the sensor used. An RGB sensor captures objects in the visible spectrum with a spatial resolution of a few millimeters from a low flight altitude, whereas a multispectral (MS) sensor, operating from the same altitude, captures objects in spectral bands beyond the visible with a spatial resolution of a few centimeters. For example, the UAS Phantom 4 collects RGB images (using its 1/2.3” CMOS 12.4 Mp RGB sensor) at an approximate spatial resolution of 1.5 cm from a flight height of 40 m, while it gathers MS images (using the 1.2 Mp Sequoia+ MS sensor by Parrot (Parrot SA, Paris, France), covering the green, red, Red Edge, and near infrared (NIR) bands) at about 4 cm resolution from the same height [5]. The UAS Wingtra GEN II (Wingtra AG, Zurich, Switzerland) collects RGB images (using the Sony RX1R II 42.4 Mp RGB sensor, Sony Group Corporation, Tokyo, Japan) with a spatial resolution of 1.5 cm from a flight height of 100 m, and from that same height, it acquires MS images (using the MicaSense RedEdge-MX 1.2 Mp MS sensor, covering blue, green, red, Red Edge, and NIR, MicaSense Inc., Seattle, United States) at a resolution of approximately 7 cm [6]. Thus, while the spatial resolution of the thematic information in RGB images is excellent, the corresponding resolution of MS images is not acceptable for archaeologists when they require orthophotomosaics at scales of 1:50 or 1:100.

For this reason, the first author has conducted original research on image fusion since 2020 [5,6,7], utilizing the RGB and MS images from the UAS sensors. The ultimate goal is to produce a fused image that retains the spectral information of the original MS image while achieving the spatial resolution of the RGB image, thereby enhancing the spatial resolution of the thematic data contained in the MS image.

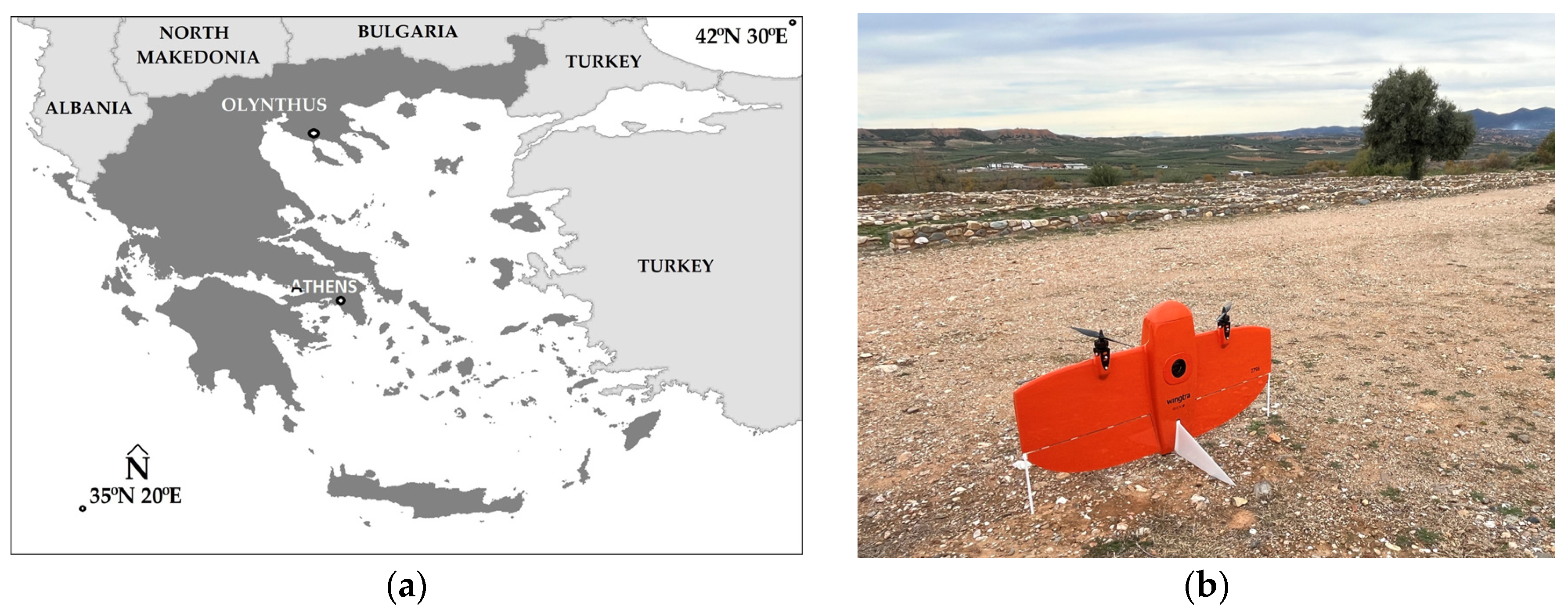

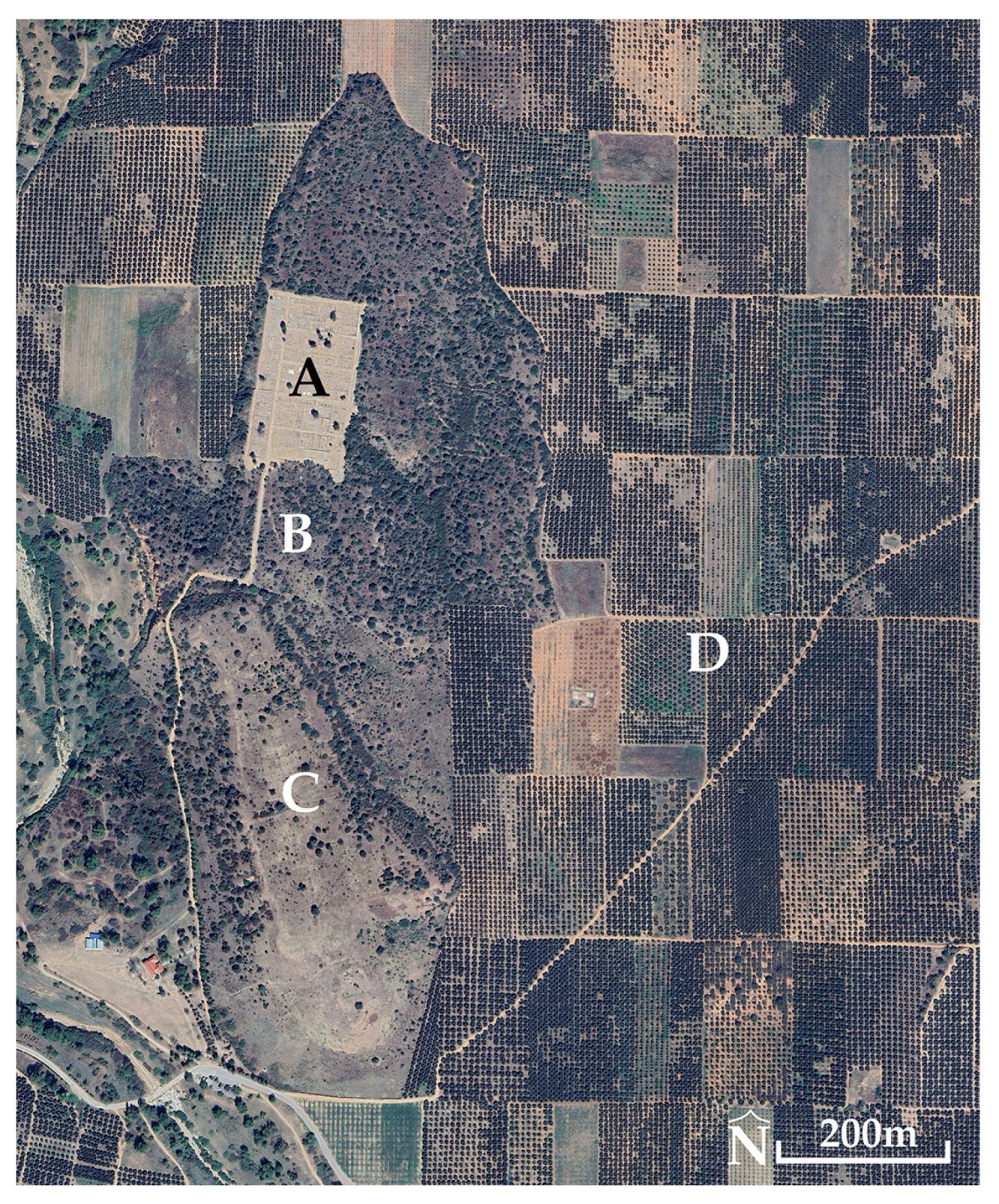

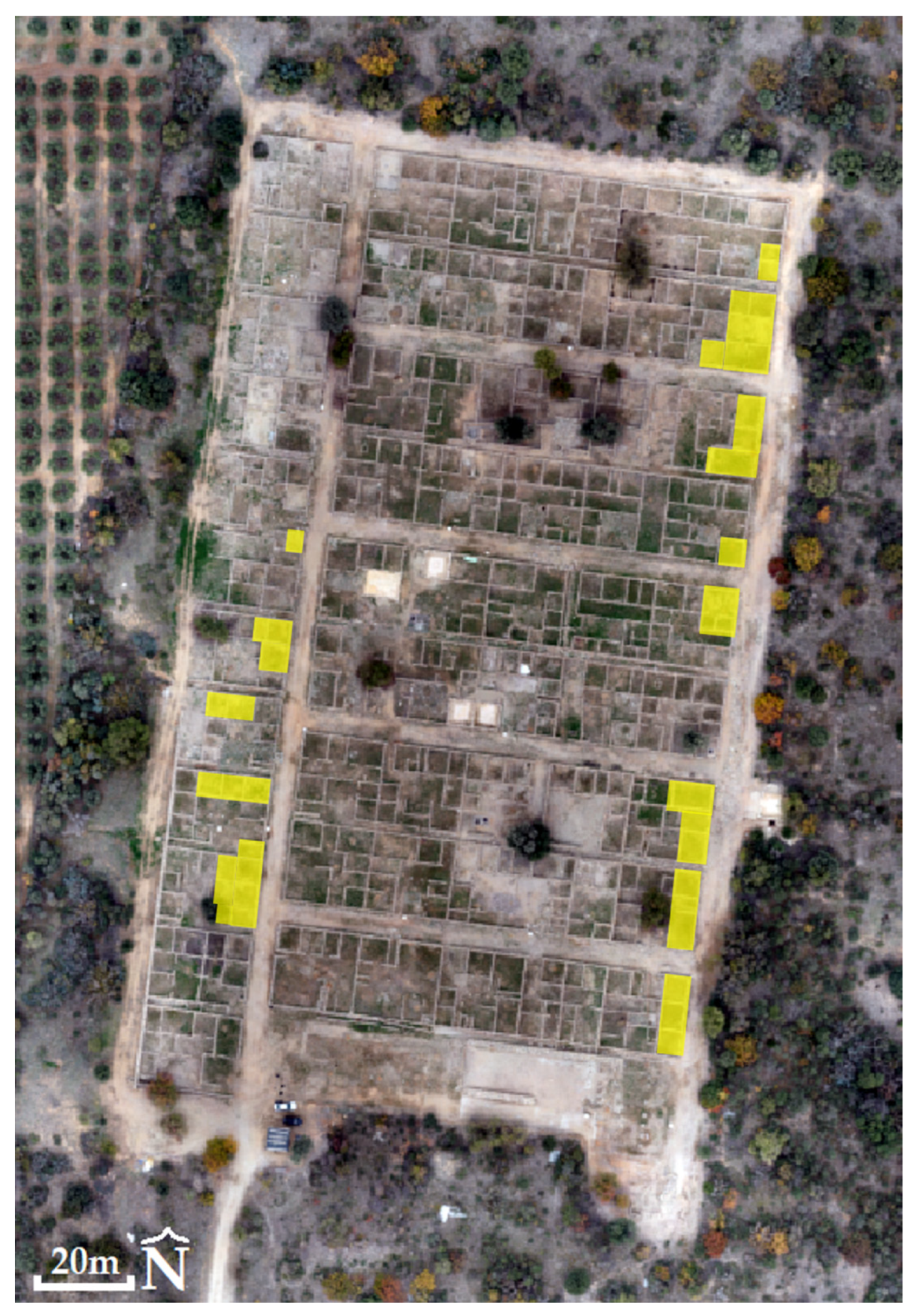

In this study, the UAS Wingtra GEN II (Wingtra AG, Zurich, Switzerland) was used to collect RGB and MS images of the Hippodamian system of ancient Olynthus (Central Macedonia, Greece, Figure 1). Ground Control Points (GCPs) were measured in the field using GNSS to solve the blocks of images and produce Digital Surface Models (DSMs) and orthophotomosaics. Corresponding products were also generated using the UAS’s Post-processed Kinematic (PPK) system (without the use of GCPs). Check Points (CPs) were measured in the field for the quantitative control of the products, which is the first objective of the study (to assess the horizontal and vertical accuracy of the products). The primary methods for evaluating a product include calculating the mean, standard deviation, and Root Mean Square Error (RMSE) [8,9,10,11]. Additionally, when the data follow a normal distribution, analysis of variance (ANOVA) is used for hypothesis testing. This process helps identify differences in mean values and standard deviations across various datasets, such as the measurements on the products versus those taken in the field [6].

The second objective of the study is to perform image fusion using the RGB and MS images and to validate the resulting fused image. The proposed process is innovative and was initiated by the first author as early as 2020. It is expected that the findings of this study will, once again, confirm the effectiveness of the proposed image fusion process, utilizing the RGB and MS images from the UAS sensors. Finally, by studying the products (and the new fused image with the multispectral information of the original MS, but with much better spatial resolution than the original MS, thus increasing the capacity for the visual observation and interpretation of objects) and reviewing the literature, the third objective is to investigate and present information about the Hippodamian system of ancient Olynthus. This includes details on urban planning and architectural structures (regularity, main and secondary streets, organization of building blocks, hydraulic works, public and private buildings, types and sizes of dwellings, and the internal organization of buildings) as well as information on its socio-economic organization (different social groups based on the characteristics of the buildings, commercial markets, etc.).

3. Equipment

To capture aerial imagery at ancient Olynthus, the UAS WingtraOne GEN II (Wingtra AG, Zurich, Switzerland) (Figure 1), a vertical takeoff and landing (VTOL) fixed-wing UAS (weight 3.7 kg and dimensions 125 × 68 × 12 cm³), was used. It has a maximum flight duration of 59 min. To determine the coordinates of the captured image centers, it employs an integrated multi-frequency PPK GNSS antenna, compatible with GPS (L1 and L2), GLONASS (L1 and L2), Galileo (L1), and BeiDou (L1). The flight plan and parameters are configured using the WingtraPilot© v2.17.0 software. Additionally, the system is equipped with an RGB sensor and an MS sensor. The Sony RX1R II (Sony Group Corporation, Tokyo, Japan) is a full-frame RGB sensor with a 35 mm focal length and a resolution of 42.4 Mp, which provides images with a spatial resolution of 1.6 cm/pixel at a flight height of 120 m. The MicaSense RedEdge-MX (MicaSense Inc., Seattle, United States) is an MS sensor with a 5.5 mm focal length and 1.2 Mp resolution, with five spectral bands (blue, green, red, Red Edge, and NIR) and a spatial resolution of 8.2 cm/pixel at a flight height of 120 m [13,14].

The Topcon HiPer SR GNSS receiver (Topcon Positioning Systems, Tokyo, Japan) was used for two purposes. The first was to measure the GCPs and CPs with real-time kinematic (RTK) positioning before the flights, and the second was to collect the necessary measurements using the static method during the flights, which allow the coordinates of the image centers to be calculated. This receiver supports multiple satellite signals, including GPS (L1, L2, and L2C), GLONASS (L1, L2, and L2C), and SBAS-QZSS (L1 and L2C).

5. Data Collection, Processing, Product Production, and Controls

5.1. Collection of Images

Flights were conducted on 21 November 2024 at 10:50 a.m. Flights were designed with 80% side and 70% front overlap between images. A total of seven flight strips were created for both the RGB and MS sensors. The flight height was set at 100 m for the RGB sensor and 90 m for the MS sensor. The anticipated spatial resolution was 1.3 cm for the RGB images and 6.1 cm for the MS images. The total flight duration was 5 min for the RGB sensor and 5 min for the MS sensor. In total, 80 RGB and 102 MS images were captured.
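The anticipated resolutions quoted above can be approximated with the usual nadir ground sampling distance relation (pixel pitch × flight height / focal length). The following is a minimal sketch: the focal lengths and flight heights come from the text, while the pixel pitches (about 4.51 µm for the RGB sensor and 3.75 µm for the MS sensor) are assumptions taken from typical published sensor specifications, and the function name is hypothetical.

```python
def ground_sampling_distance_cm(pixel_pitch_um, focal_length_mm, flight_height_m):
    """Approximate nadir GSD in cm/pixel: pixel pitch * flight height / focal length."""
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return pixel_pitch_m * flight_height_m / focal_length_m * 100.0


# Pixel pitches are assumed values, not stated in the text.
rgb_gsd = ground_sampling_distance_cm(4.51, 35.0, 100.0)  # RGB sensor at 100 m
ms_gsd = ground_sampling_distance_cm(3.75, 5.5, 90.0)     # MS sensor at 90 m
print(f"RGB: {rgb_gsd:.1f} cm/pixel, MS: {ms_gsd:.1f} cm/pixel")
```

Under these assumptions the sketch gives values that round to the 1.3 cm and 6.1 cm stated for the two flights.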

5.2. Ground Measurements

A total of 20 GCPs and 20 CPs were surveyed (Figure 3). Their X, Y, and Z coordinates in the Greek Geodetic Reference System 1987 (GGRS87) were collected using 24 × 24 cm paper targets (Figure 3) and the Topcon HiPer SR GNSS receiver (Topcon Positioning Systems, Tokyo, Japan), which provided real-time kinematic (RTK) positioning using a network of permanent stations provided by Topcon, with an accuracy of 1.5 cm horizontally and 2 cm vertically.

For the GNSS measurements associated with the UAS’s PPK system (using the Topcon HiPer SR GNSS receiver, Topcon Positioning Systems, Tokyo, Japan), the x, y, and z coordinates of a randomly selected point, designated as the reference for subsequent measurements, were first determined with 1.5 cm horizontal and 2.0 cm vertical accuracy in the GGRS87 system. This measurement was conducted near the UAS home position using the RTK method. Following this, the same GNSS device was employed at the same location to continuously record position data using the static method for 30 min before the flight, throughout the flight, and for an additional 30 min post-flight. By integrating the high-precision coordinates of this reference point, its static measurements, and in-flight data from the UAS’s built-in multi-frequency PPK GNSS antenna, the reception center coordinates (X, Y, and Z) for each captured image were adjusted and computed during post-processing. This was carried out using the WingtraHub© v2.17.0 software from the UAS manufacturer, ultimately achieving a 3D positional accuracy within GGRS87 of 2 cm horizontally and 3 cm vertically for each image.

5.3. Production of DSMs and Orthophotomosaics

The UAS images were processed in Agisoft Metashape Professional© version 2.0.3. First, the images are imported and the GGRS87 coordinate system is set. When MS images are used, it is essential to calibrate the spectral data right after importing the images. To do this, calibration targets are imaged both before and after the flight. The software then automatically detects these targets and calculates the reflectance values for all spectral bands [15,16,17,18,19].

Next, for either the RGB or the MS sensor, the images are aligned with high precision. This alignment process also generates a sparse point cloud by matching groups of pixels across the images. If Ground Control Points (GCPs) are incorporated, the next step involves identifying and marking them on each image. Once that is complete, the software calculates, for all GCP locations, the Root Mean Square Error (RMSE) for the x coordinate (RMSE_x) as well as for the y and z coordinates (RMSE_y and RMSE_z), along with combined RMSE values for the x and y coordinates (RMSE_xy) and for all coordinates (RMSE_xyz) [20].

If GCPs are not used, the process relies on the pre-calculated coordinates of the image centers. After aligning the images and generating a sparse point cloud, the software computes RMSE values for the sensor locations, that is, RMSE_X for the X, RMSE_Y for the Y, and RMSE_Z for the Z coordinate, and the combined values RMSE_XY and RMSE_XYZ.

These RMSE figures provide a rough indication of the overall accuracy of the resulting DSMs and orthophotomosaics (though they rarely match the true accuracy of the final products).
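As an illustration of how such per-axis and combined RMSE values relate to each other, the sketch below computes them from estimated and reference coordinates. It assumes the usual convention in which the combined values are the root of the summed squared per-axis RMSEs; the function names and the sample coordinates are hypothetical, and this is not a reproduction of the software's internal code.

```python
import math


def rmse(residuals):
    """Root mean square of a list of residuals."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def rmse_report(estimated, reference):
    """Per-axis and combined RMSE for lists of (x, y, z) tuples."""
    dx = [e[0] - r[0] for e, r in zip(estimated, reference)]
    dy = [e[1] - r[1] for e, r in zip(estimated, reference)]
    dz = [e[2] - r[2] for e, r in zip(estimated, reference)]
    rx, ry, rz = rmse(dx), rmse(dy), rmse(dz)
    return {
        "x": rx,
        "y": ry,
        "z": rz,
        "xy": math.sqrt(rx ** 2 + ry ** 2),
        "xyz": math.sqrt(rx ** 2 + ry ** 2 + rz ** 2),
    }


# Hypothetical coordinates in metres, for illustration only.
estimated = [(100.03, 200.04, 50.00), (109.97, 209.96, 50.00)]
reference = [(100.00, 200.00, 50.00), (110.00, 210.00, 50.00)]
print(rmse_report(estimated, reference))
```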

Following this, with either sensor type, the next step is to build a dense point cloud using high-quality settings and aggressive depth filtering (121,415,757 points for the RGB and 6,668,369 points for the MS). This dense point cloud is then converted into a 3D mesh (triangular mesh). After the mesh is generated (source data: point cloud; surface type: Arbitrary 3D; face count for the RGB: high 32,000,000; face count for the MS: high 1,800,000; and production of 81,006,681 faces for the RGB and 4,022,716 faces for the MS), a texture is applied (RGB or MS texture type: diffuse map; source data: images; mapping mode: generic; blending mode: mosaic; and texture size: 16,284), effectively overlaying the colored details onto the 3D surface. The final step involves producing a DSM and an orthophotomosaic.

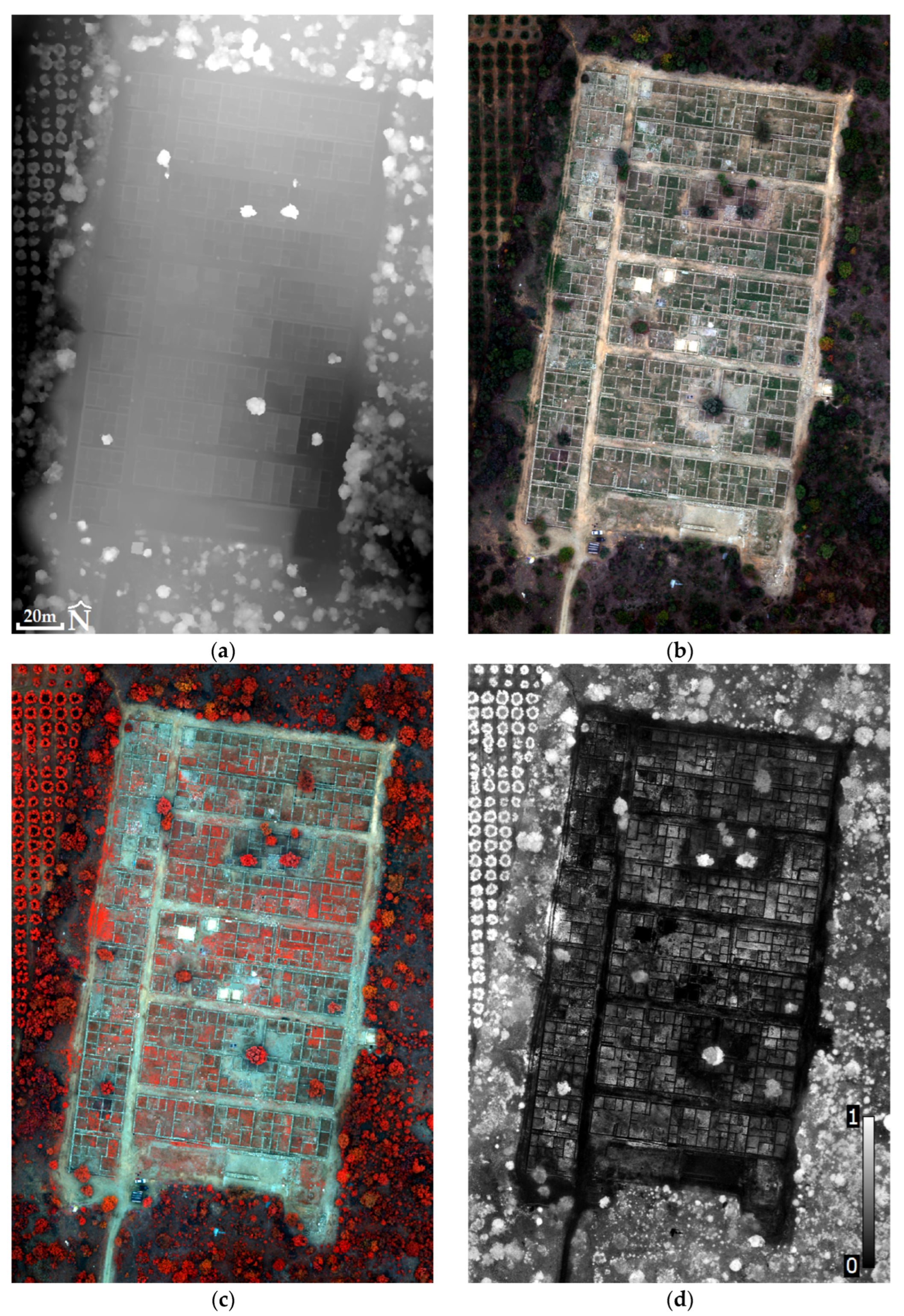

For ancient Olynthus, using the RGB images, the RMSE_xyz was 1.6 cm when GCPs were employed, while the RMSE_XYZ was 3.2 cm when GCPs were not employed. In both scenarios, the resulting products achieved a spatial resolution of 2.6 cm for the DSM (Table 1) and 1.3 cm for the orthophotomosaic. For the MS images, the RMSE_xyz (with GCPs) and the RMSE_XYZ (without GCPs) were both 1 cm. The final products have a spatial resolution of 12.2 cm for the DSM (Figure 4) and 6.1 cm for the orthophotomosaic in both scenarios.

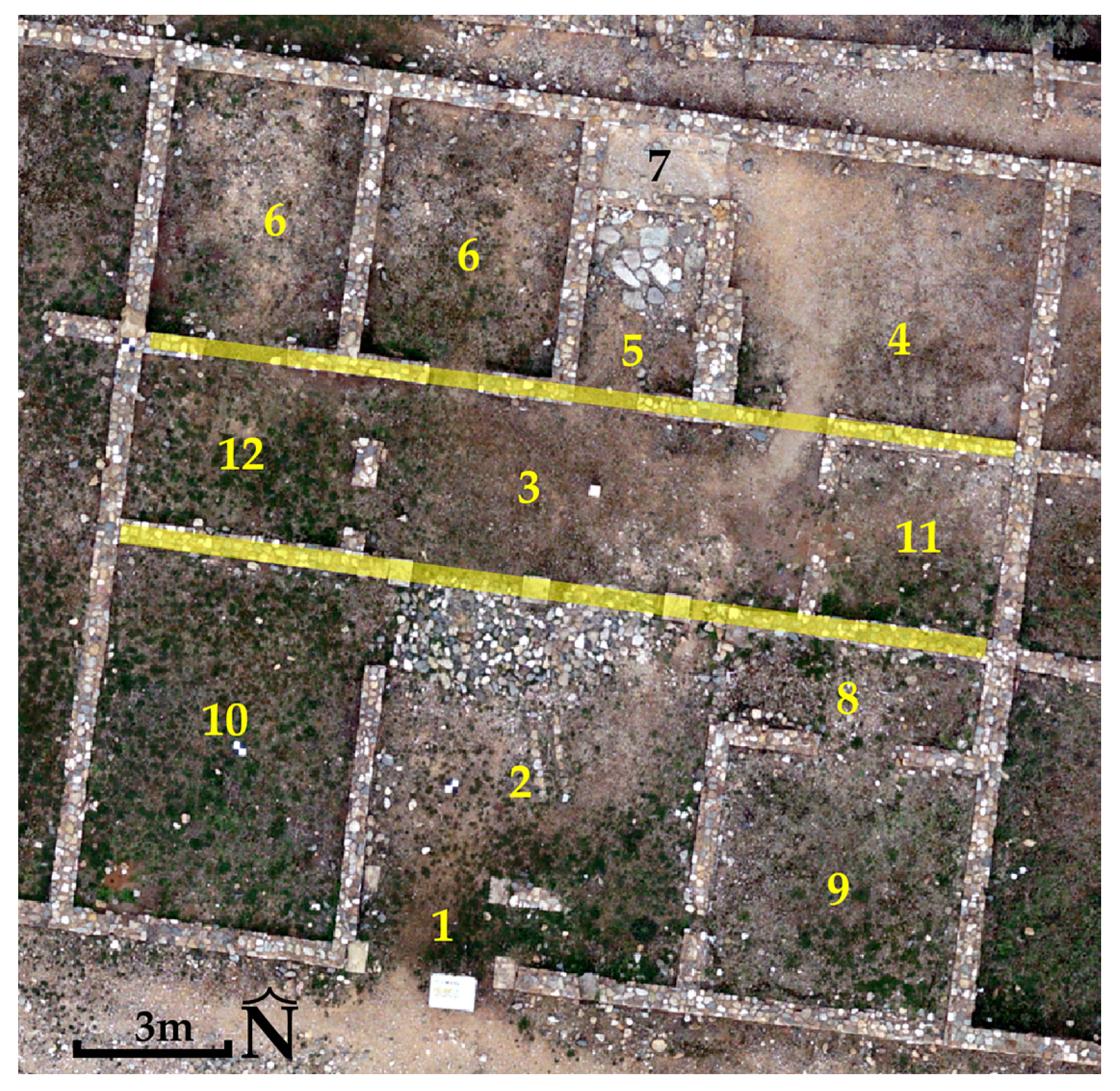

In Figure 4, the Normalized Difference Vegetation Index (NDVI) is presented [15,21]. The grayscale of the images ranges from zero (black) to one (white), with zero representing the least favorable and one representing the most favorable outcome of the index. That is, a value of 0 corresponds to pixels without crop, a value of 0.5 corresponds to pixels with poor growth or poor crop health, and a value of 1 corresponds to pixels with good growth or healthy crop.
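For reference, NDVI is conventionally computed per pixel as (NIR − Red) / (NIR + Red), which yields values between −1 and 1. The sketch below shows this standard formula; clipping negative values to zero so that the result fits the 0-to-1 grayscale described above is an assumption made here for display, not a step documented in the text, and the function names are hypothetical.

```python
def ndvi(nir, red):
    """Standard NDVI for one pixel from NIR and red reflectance values."""
    denominator = nir + red
    if denominator == 0:
        return 0.0  # avoid division by zero on empty pixels
    return (nir - red) / denominator


def ndvi_for_display(nir, red):
    """NDVI clipped to the 0..1 range (assumed display convention)."""
    return max(0.0, ndvi(nir, red))


# Hypothetical reflectance values for a vegetated and a bare pixel.
print(ndvi_for_display(0.50, 0.10))
print(ndvi_for_display(0.10, 0.50))
```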

5.4. Control of DSMs and Orthophotomosaics

The RGB images were processed twice, once with GCPs and once without. The same was done for the MS images. For each of the four processing cases, the final products were a DSM and an orthophotomosaic. By extracting the coordinates (x’, y’, and z’) of the CPs from the products for the four processing cases, it was possible to compare them with the coordinates (x, y, and z) of the CPs measured in the field to evaluate the quality of the products.

The mean value is determined by summing the differences between the coordinates of the CPs obtained from the products and those recorded in the field and then dividing this total by the number of CPs. However, because the mean alone is not enough to draw conclusions, the standard deviations were also calculated. This measure quantifies how much Δx, Δy, and Δz vary from their respective mean values. Ideally, these standard deviations should be as low as possible and smaller than the corresponding mean values.
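The calculation described above can be sketched as follows. The coordinates are hypothetical and not the study's data; the use of signed differences and of the sample (n − 1) standard deviation are assumptions, since the text does not specify either choice.

```python
from statistics import mean, stdev


def difference_stats(product_values, field_values):
    """Return (mean, sample standard deviation) of product minus field differences."""
    diffs = [p - f for p, f in zip(product_values, field_values)]
    return mean(diffs), stdev(diffs)


# Hypothetical z coordinates (m) of three CPs, for illustration only.
product_z = [10.02, 12.51, 11.03]
field_z = [10.00, 12.50, 11.00]
mean_dz, std_dz = difference_stats(product_z, field_z)
print(f"mean dz = {mean_dz * 100:.1f} cm, std dz = {std_dz * 100:.1f} cm")
```

The same function would be applied separately to the x, y, and z coordinates of the CPs.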

In addition to using a standard histogram to visualize the data distribution, we performed several diagnostic tests, including assessments of Variance Equality, Skewness, and Kurtosis. All of these tests confirmed that our data were normally distributed, which allows the utilization of analysis of variance (ANOVA).

ANOVA is used to conduct hypothesis tests that compare the mean values across different datasets. Specifically, the null hypothesis (H0) assumes that the samples, whether they come from product measurements (x’, y’, and z’) or field measurements (x, y, and z), have the same mean. In contrast, the alternative hypothesis (HA) suggests that at least one of the means is different. If the p-value exceeds 0.05 (i.e., at a 95% confidence level), there is no evidence of a systematic difference between the product-derived means (x’, y’, or z’) and the corresponding field means (x, y, or z). In such cases, any observed differences are considered negligible and attributed to random errors. Also, if the calculated F statistic is lower than the critical value (F crit), it implies that the standard deviations for the product and field measurements do not differ significantly, reinforcing the conclusion that the variations are simply due to random error [6].
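To make the F statistic concrete, the following minimal sketch computes a one-way ANOVA F value for two groups (product and field coordinates) and compares it with a critical value supplied by the user. The coordinates are hypothetical, the function name is an assumption, and the critical value 7.71 is the standard tabulated 5% value for (1, 4) degrees of freedom; for 20 CPs per group the appropriate table entry would differ.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum(sum((v - m) ** 2 for v in g) for g, m in zip(groups, group_means))
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical z coordinates (m) of three CPs, for illustration only.
product_z = [10.02, 12.51, 11.03]
field_z = [10.00, 12.50, 11.00]
f_stat, df_b, df_w = one_way_anova_f(product_z, field_z)
f_crit = 7.71  # tabulated 5% critical value for (1, 4) degrees of freedom
print(f"F = {f_stat:.4f}, F crit = {f_crit}, means differ: {f_stat > f_crit}")
```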

The tables below present the mean values and standard deviations (Table 2) along with the ANOVA results (Table 3 and Table 4).

5.5. Production and Control of the Fused Image

The UAS is not equipped with a Panchromatic (PAN) sensor of the kind integrated into satellite platforms to capture high-resolution grayscale images. At first glance (Table 2), the best spatial accuracy is achieved when using GCPs (the analysis is completed in the Discussion). Following the satellite image processing workflow for image fusion [22,23,24,25,26,27,28], the available high-resolution RGB orthophotomosaic (from the UAS, processed with GCPs) is transformed into a pseudo-panchromatic (PPAN) orthophotomosaic (Figure 5). The process involves using the image processing software Adobe Photoshop© CS6 version 13.0 to transform the full-color RGB image into a black and white (B/W) image. The intensity (or brightness) of each pixel is calculated by preserving predetermined ratios among the three primary color channels (red, green, and blue). The precise algorithm employed by the software to achieve this conversion remains undisclosed due to copyright restrictions. Consequently, while the PPAN image bears a visual resemblance to one produced by an authentic PAN sensor, it does not exhibit the identical spectral characteristics that PAN sensors, which are sensitive to the entire visible spectrum, are designed to capture.
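Since the actual conversion algorithm is undisclosed, the sketch below only illustrates the general idea of a weighted sum of the three channels. The weights (0.299, 0.587, 0.114) are the widely used Rec. 601 luma coefficients and are purely an assumption here; they are not claimed to be the ratios used by the software, and the function name is hypothetical.

```python
def to_pseudo_pan(rgb_pixels, weights=(0.299, 0.587, 0.114)):
    """Convert (R, G, B) tuples to gray levels with fixed channel weights.

    The default weights are an assumed stand-in (Rec. 601 luma),
    not the undisclosed ratios of the software used in the study.
    """
    w_r, w_g, w_b = weights
    return [round(w_r * r + w_g * g + w_b * b) for r, g, b in rgb_pixels]


# Hypothetical 8-bit pixels: white, black, and pure red.
print(to_pseudo_pan([(255, 255, 255), (0, 0, 0), (255, 0, 0)]))
```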

Following the conversion to a PPAN image, further processing steps are implemented to refine the fusion process. Specifically, the histogram of the PPAN orthophotomosaic is adjusted so that it aligns with that of the corresponding MS orthophotomosaic. This histogram matching process plays a critical role in ensuring that the tonal distribution in the PPAN image mirrors that of the MS image, thereby reducing discrepancies and facilitating a more seamless integration of the spectral data.
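Histogram matching is commonly implemented by mapping each gray level of the source image to the reference level with the closest cumulative frequency. The following is a minimal sketch of that generic technique for flattened 8-bit images; it is not the routine of any particular software package, the function name is hypothetical, and the choice of a single reference band is an assumption.

```python
def histogram_match(source, reference, levels=256):
    """Map source gray levels so their cumulative histogram follows the reference."""

    def cumulative(values):
        hist = [0] * levels
        for v in values:
            hist[v] += 1
        running, out = 0, []
        for count in hist:
            running += count
            out.append(running / len(values))
        return out

    src_cdf = cumulative(source)
    ref_cdf = cumulative(reference)
    lookup, j = [], 0
    for s in range(levels):
        # Advance to the first reference level whose CDF reaches the source CDF.
        while j < levels - 1 and ref_cdf[j] < src_cdf[s]:
            j += 1
        lookup.append(j)
    return [lookup[v] for v in source]


# Hypothetical flattened pixel values: PPAN as source, one MS band as reference.
ppan = [0, 0, 1, 1]
ms_band = [10, 10, 20, 20]
print(histogram_match(ppan, ms_band))
```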

Numerous methods have been proposed for fusing MS and PAN images. These techniques generally fall into three categories: component substitution (CS), multiresolution analysis (MRA), and degradation model (DM)-based methods. CS approaches [28,29,30] use transforms, such as intensity–hue–saturation (IHS), principal component analysis (PCA), and Gram–Schmidt, to project interpolated MS images into a new domain. In this space, one or more components are partially or completely replaced by a histogram-matched PAN image before applying an inverse transform to reconstruct an MS image (in other words, a fused image). MRA techniques [24,28,30,31,32,33] operate on the assumption that the spatial details missing in MS images can be recovered from the high-frequency components of the PAN image, a concept inspired by ARSIS (Amélioration de la Résolution Spatiale par Injection de Structures) [34]. Tools such as the discrete wavelet transform, support value transform, and contourlet transform are employed to extract spatial details, which are then injected into the MS images. Some methods also incorporate spatial orientation feature matching to improve correspondence. DM methods [24,25,26,27,28] model the relationships among MS, PAN, and fused images by assuming that MS and PAN images are generated by downsampling and filtering an underlying fused image in the spatial and spectral domains, respectively. These approaches integrate priors such as similarity, sparsity, and non-negativity constraints to regularize the fusion process.

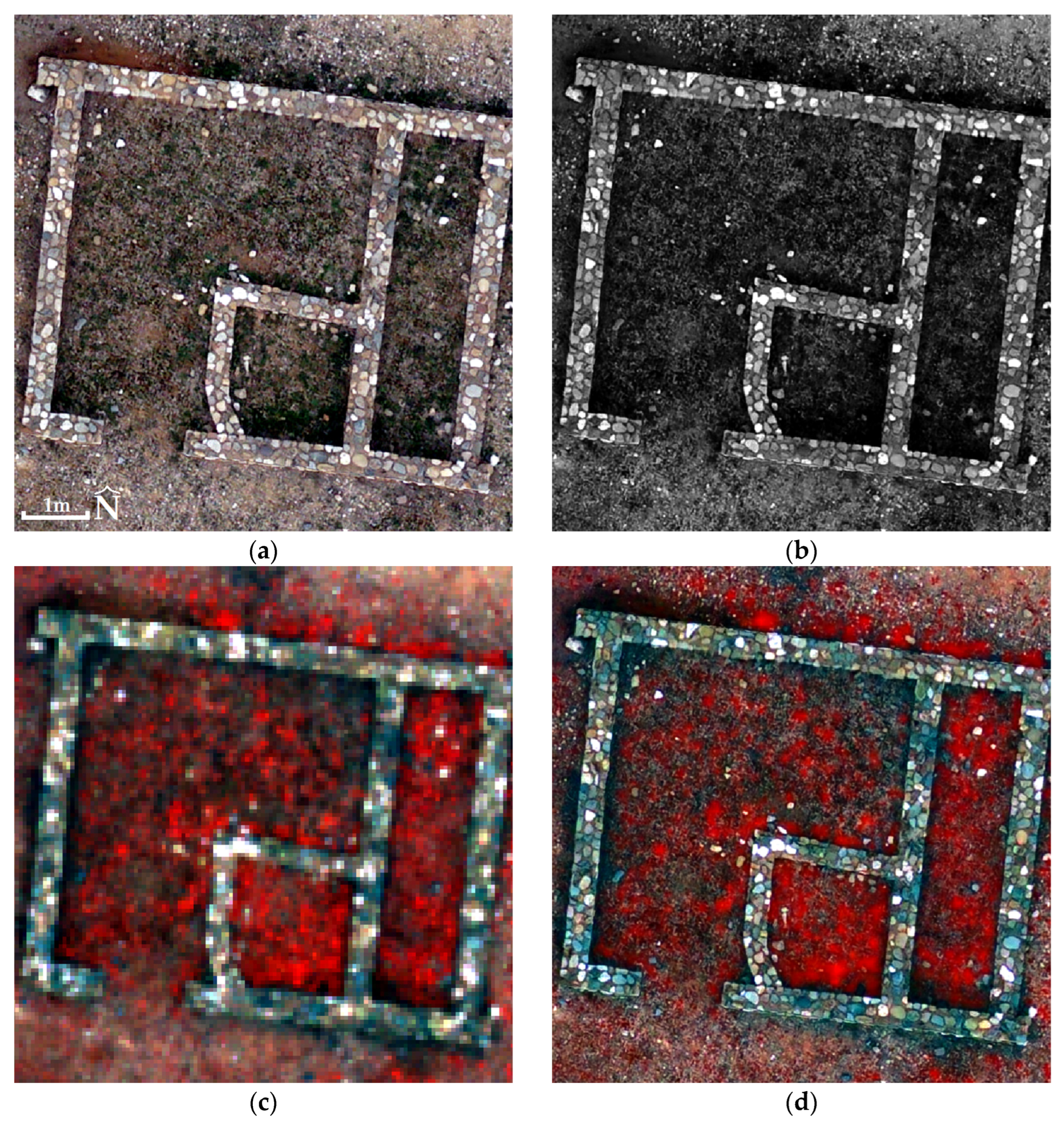

In this research, the principal component analysis (PCA) method, as implemented in Erdas Imagine© version 16.7.0, was used to generate the fused image (Figure 6). PCA is a robust statistical technique that extracts and combines the most significant components from the datasets, merging the detailed spatial information provided by the PPAN image with the rich spectral information inherent in the MS image. To evaluate the success of the fusion process, a correlation table was constructed to compare the MS orthophotomosaic with the fused image (Table 5). This table indicates the retention rate of the original spectral information, which should exceed 90% (i.e., correlation values greater than 0.9) [35,36,37].
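A correlation table of this kind can be approximated by computing the Pearson correlation coefficient between each MS band and the corresponding fused band. The sketch below assumes both images have been resampled to the same grid and flattened to lists; the band names, pixel values, and function names are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def band_correlations(ms_bands, fused_bands):
    """Correlation of each MS band with the same-named fused band."""
    return {name: pearson(ms_bands[name], fused_bands[name]) for name in ms_bands}


# Hypothetical pixel values for two bands, for illustration only.
ms = {"red": [10, 20, 30, 40], "nir": [50, 60, 65, 80]}
fused = {"red": [12, 19, 33, 41], "nir": [52, 58, 66, 79]}
for band, r in band_correlations(ms, fused).items():
    print(f"{band}: r = {r:.3f}, above 0.9: {r > 0.9}")
```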

The ERGAS (Erreur Relative Globale Adimensionnelle de Synthèse) index (Equation (1)) is a well-established metric for quantitatively assessing the quality of a fused image in relation to the (original) MS orthophotomosaic [38].
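In its commonly used form, and written with the symbols defined in the text, the index can be expressed as follows (a reconstruction under the assumption that the standard definition is meant):

$$
\mathrm{ERGAS} = 100\,\frac{h}{I}\,\sqrt{\frac{1}{N}\sum_{k=1}^{N}\frac{\mathrm{RMSE}(B_k)^{2}}{M_k^{2}}} \qquad (1)
$$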

In Equation (1), the variable “h” represents the spatial resolution of the fused images, while “I” denotes the spatial resolution of the MS images. “N” indicates the total number of spectral bands under consideration, and “k” serves as the index for each band. For every band, RMSE(B_k) (Equation (2)) is the root mean square error between the fused image and the MS image, and “M_k” represents the mean value of the “k” spectral band.
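Assuming the conventional root mean square form over n sampled pixel pairs, with P_i taken from the MS image and O_i from the fused image, the band-wise error can be written as:

$$
\mathrm{RMSE}(B_k) = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(P_i - O_i\right)^{2}} \qquad (2)
$$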

In Equation (2), for each spectral band, the values labeled “Pi” for the MS image and “Oi” for the fused image, are obtained by randomly selecting a number (“n”) of pixels at the same coordinates from both images. This pixel-by-pixel comparison is essential to ensure an unbiased overall assessment of spectral fidelity.
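The computation described by Equations (1) and (2) can be sketched as below, using the standard ERGAS definition. Taking the band mean from the MS image is an assumption, since the text does not state which image supplies it; the function names and pixel values are hypothetical.

```python
import math


def band_rmse(ms_values, fused_values):
    """RMSE between sampled MS pixels (P_i) and fused pixels (O_i) of one band."""
    n = len(ms_values)
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(ms_values, fused_values)) / n)


def ergas(ms_bands, fused_bands, fused_resolution, ms_resolution):
    """ERGAS index; band means are taken from the MS image (assumed)."""
    total = 0.0
    for ms_values, fused_values in zip(ms_bands, fused_bands):
        band_mean = sum(ms_values) / len(ms_values)
        total += (band_rmse(ms_values, fused_values) / band_mean) ** 2
    ratio = fused_resolution / ms_resolution
    return 100.0 * ratio * math.sqrt(total / len(ms_bands))


# Hypothetical samples for two bands; resolutions in cm as reported for the products.
ms_bands = [[100, 102, 98, 101], [60, 61, 59, 62]]
fused_bands = [[101, 101, 99, 100], [61, 60, 60, 61]]
print(f"ERGAS = {ergas(ms_bands, fused_bands, 1.3, 6.1):.3f}")
```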

The acceptable limits for ERGAS index values, which determine the quality of the fused image, are not fixed and can vary depending on the specific requirements of the application. For instance, when high spectral resolution is crucial, very low index values may be necessary. In other scenarios, moderate index values might be acceptable, especially if external factors (such as heavy cloud cover or high atmospheric humidity) affect the quality of the fused image. Additionally, these limits depend on the number and spatial distribution of the pixels tested, as well as on the researcher’s criteria for acceptable error on a case-by-case basis. Lower ERGAS index values, especially those close to zero, indicate a minimal relative error between the fused image and the MS orthophotomosaic, suggesting a high-quality fusion. Moderate values, typically between 0.1 and 1, imply that although there might be slight spectral differences, the fused image remains acceptable. High index values, generally ranging from one to three, denote significant relative error and considerable spectral deviation, classifying the fused image as low quality. Despite these general guidelines, the thresholds can be adjusted; however, the index is usually maintained below three for a fused image to be reliably used for further classification or detailed analysis [38,39,40,41,42,43,44,45,46,47,48].

At the ancient Olynthus, 68 million pixels out of a total of 290 million from the fused image were analyzed using the Model Maker tool in Erdas Imagine© version 16.7.0 to compute the ERGAS index. The resulting value was 0.8, and this moderate error suggests that, while minor spectral differences exist, the overall quality of the fused image is good.

7. Discussion

According to the above results of both RGB and MS sensor image processing (Table 3 and Table 4), with and without the use of GCPs, the p-values consistently exceeded 0.05. This indicates that, at a 95% confidence level, there appears to be no systematic error between the mean x (or y or z) values of the CPs of the products and the (actual) mean x (or y or z) values of the CPs measured in the field. Thus, any differences between them are considered negligible and are attributed to random errors.

In addition, the F statistic was found to be lower than its critical value (F crit) in all cases. The standard deviations of x′ (or y′ or z′) and x (or y or z) therefore do not differ significantly, so the measurements (field and product) are affected only by random errors. All of the above justifies a deeper analysis of the mean differences and standard deviations between the 3D coordinates of the two data sources.

Table 2 reveals that, in both processing scenarios for both sensors, the standard deviations of the differences in CP measurements are consistently lower than the corresponding mean differences (on the three axes). This finding demonstrates that the dispersion of Δx, Δy, and Δz around their mean values is limited.

Focusing on horizontal accuracy, the average difference between the CP measurements is approximately 1 cm in both cases (whether GCPs are employed or not, for both sensors). This result aligns with the manufacturer’s specification, which anticipates a horizontal accuracy of around 1 cm for RGB sensor imagery processed without GCPs [13].

Turning to vertical accuracy, the results are varied. When GCPs are used, the average vertical differences are 1.7 cm for the RGB sensor and 2.6 cm for the MS sensor, results that are even better than theoretically expected (in theory, the vertical accuracy is about three times worse than the horizontal accuracy).

In contrast, processing that relies solely on PPK produces less favorable results. In the case of the MS sensor, the average value of the CP differences is 7 cm, which is two times worse than theoretically expected. In the case of the RGB sensor, the average value of the CP differences is 10.6 cm, which is over three times worse than theoretically expected.

Generally, when using PPK for the georeferencing of UAS imagery, the image-center positions exhibit very high relative planimetric accuracy, but the images remain sensitive to small vertical errors. This sensitivity arises primarily because the acquisitions are nadir and the intersecting optical rays form very shallow angles with the vertical plane. As a result, there is little perspective on ground objects, so small errors in elevations of the image-centers translate into larger elevation errors on terrain features. Furthermore, in PPK solutions, factors such as the number of tracked satellites, the satellite geometry (i.e., how well the satellites are distributed across the sky) and atmospheric delays (ionospheric signal dispersion and tropospheric water vapor and pressure) disproportionately affect the vertical component. However, when GCPs are employed, these issues are overcome, since GCPs provide fixed ground points at known accurate elevations. Thus, even if the optical rays intersect at shallow angles, the GCPs anchor the DSM to the true ground level, substantially limiting the elevation errors of surface features.

The question of interest when improving the spatial resolution is whether the spectral information of the MS orthophotomosaic is preserved in the fused image. According to the correlation table (Table 5), the spectral information of the MS orthophotomosaic is transferred to the fused image at an average percentage of 78% for the blue, green, red, and Red Edge bands. The percentage of the spectral information of the NIR band transferred is 90%. In general, when a percentage below 90% is observed in any correlation of corresponding bands, the fused image is not acceptable for classification. On the other hand, the above percentages are objectively not low, and therefore the ERGAS index should be used so that, in combination, reliable conclusions can be drawn.

In summary, several challenges had to be addressed to ensure the quality of the fused image; the main ones are presented below. First, the RGB and MS images had to be spatially aligned in GGRS87 (the images should match perfectly when one is overlaid on the other); otherwise, the spectral information transferred from the MS to the fused image would have been greatly corrupted. This was successfully overcome, since the processing of the images (RGB and MS), whether using GCPs or not, led to orthophotomosaics with high planimetric accuracy (approximately 1 cm). Another important challenge was the radiometric deviation of the produced PPAN orthophotomosaic from the MS orthophotomosaic. This was addressed by performing histogram matching of the PPAN image to the MS orthophotomosaic. Finally, a further challenge was preserving the spectral information of the original MS orthophotomosaic in the fused image. This was managed by creating and evaluating the correlation table and by calculating and assessing the ERGAS index.

If the RGB and fused images are compared (see Figure 6a,d,e,h), it becomes apparent that, although the spatial resolutions of the images are identical, the spectral information is superior in the fused images, thereby enabling automatic detection of different objects when classification techniques are applied. For example, in Figure 6a it is difficult to detect and distinguish vegetation, whereas in Figure 6d the areas occupied by vegetation are clearly delineated (pixels shown in red). Furthermore, if the MS and fused images are compared (see Figure 6c,d,g,h), it emerges that, while their spectral information is similar, the spatial resolution is superior in the fused images, thus permitting both improved and more comprehensive visual observation of objects and greater accuracy in identifying different objects when applying classification techniques. For example, in Figure 6g it is difficult to discern the mosaic motifs, such as the rays of the Vergina Sun, whereas in Figure 6d all mosaic motifs are clearly visible.

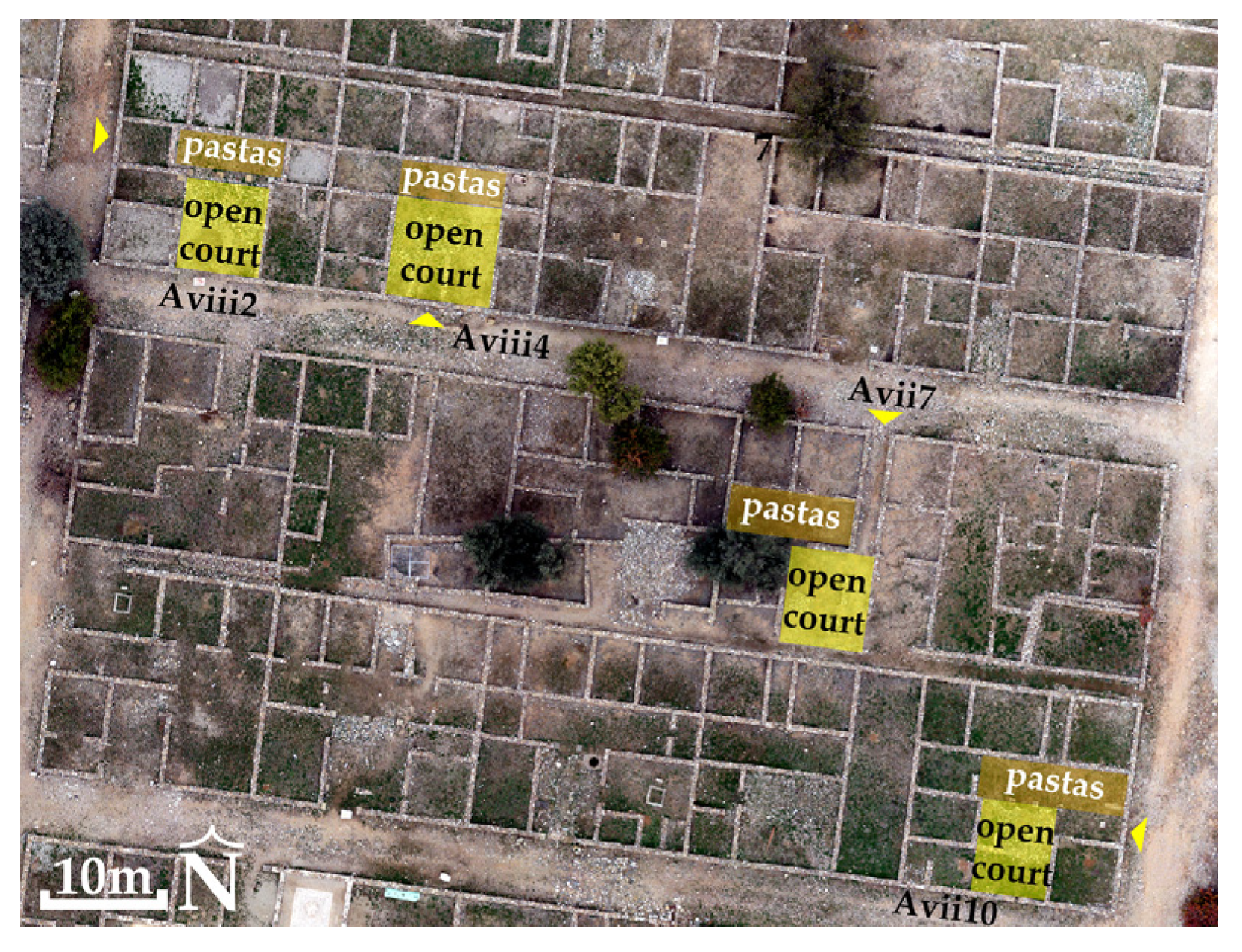

From the study of the Hippodamian system of ancient Olynthus, it follows that the carefully designed urban grid was not merely an aesthetic endeavor but also played a crucial role in the community’s functioning. The organized layout of the city provides valuable insights into its urban structure as well as the prevailing socio-economic relationships. However, despite the advantages the Hippodamian system provides, it is subject to some limitations. First, there is the adaptation of the design to the natural landscape, such as the slopes of the settlement. The literature reports that there were equal building blocks on the flat part of the hill and slightly smaller ones on the eastern slopes, following the morphology of the terrain [49]. Another limitation is the size of the buildings. In particular, the Hippodamian system, despite the organization it offers to the settlement, also promotes the principles of egalitarianism. This means that the inhabitants of ancient Olynthus, regardless of their economic status, were all required to have the same building area. Finally, there were defensive disadvantages, as the horizontal and vertical streets of the city made it easily accessible to enemies in case of invasion, unlike a more labyrinthine city, whose defense would have been stronger. Data reveal a well-structured network of road axes and building blocks, and the examination of building dimensions and internal configurations shows how architectural design mirrored the social structures of the era. Additionally, the placement of workshops and shops confirms the interaction between private production and collective economic activities in the city. The presence of a shop in a house did not reflect the economic status of a household. The wealthier households were mixed within the blocks, and their economic status was revealed by the existence of a second story, more elaborate interiors, or larger storerooms inside the house.

The urban planning and the architecture of ancient Olynthus are an excellent example of sustainability and resilience. The Hippodamian system that the city followed made traffic flow and the organization of the city blocks more efficient, while it also aided good water drainage. The roads were slightly sloped to help move rainwater away from the city, preventing erosion and flooding [52].

The construction of the homes found at Olynthus was based on local and resilient materials whose characteristics and properties were known to residents and were strategically selected to serve their purpose. The foundation was made of stone from a neighboring trench, while the walls were made of raw bricks, which provided natural insulation from the heat and cold thanks to multilayer coatings with different densities on the inside to manage moisture and temperature inside the buildings [54]. The roofs were covered with tiles to make them resistant to extreme weather conditions. To create the floors, coatings and mortars made of lime and pozzolanic materials were used, a technique that helped prevent humidity [54]. The richest homes featured mosaic floors, which, in addition to their aesthetic value, were resistant to wear over time [50].

Regarding energy efficiency and climate adaptation, Olynthus is considered one of the first bioclimatic cities. The orientation of the buildings towards the south allowed for the optimal use of light through the courtyards, which was also placed on the south end of the homes and took up one fifth to one tenth of the total property. Houses were designed this way because the windows were small and high enough so as not to be visible to the outside. Also, most Olythian houses had at least one stoa opening into the courtyard, providing a space protected from the sun and rain. Thus, energy efficiency was achieved, with natural heating in the winter and chilly conditions in the summer [

55]. At the same time, the need for artificial lighting was minimized. In addition, the positioning of the homes took the direction of the winds into account, allowing for natural airflow through the home, while the walls featured only small openings to provide better insulation. The city had also developed public infrastructure, such as public wells and water supply pipelines, ensuring that natural resources were used fairly [

50].

8. Conclusions

The orthophotomosaics generated from the RGB and MS sensor images without using GCPs exhibit excellent horizontal accuracy, comparable to that achieved with traditional GCP-based image processing. However, when it comes to vertical accuracy, processing with GCPs not only meets but surpasses the theoretical expectations, whereas processing without GCPs results in vertical errors that are two to three times greater than expected. In other words, conventional GCP-based processing yields superior vertical accuracy. These conclusions regarding Z-axis accuracy pertain only to this specific application. In other archaeological and non-archaeological applications where the same UAS was used, the vertical accuracy achieved far exceeded theoretical expectations. In conclusion, for similar future archaeological applications, reliance on PPK alone is viable.

Additionally, the fusion of the RGB and MS orthophotomosaics produced a fused image with significantly enhanced spatial resolution, facilitating a more detailed visual and digital analysis of the archaeological site. Although the correlation table (

Table 5) indicates that the spectral information transferred from the MS orthophotomosaic to the fused image is below the 90% threshold (with an average correlation of 80% across corresponding bands), the overall quality remains high, as confirmed by the value (0.8) of the ERGAS index. This suggests that the spectral deviations between the fused image and the MS orthophotomosaic are moderate, making the fused image suitable for classification purposes. The proposed image fusion procedure can be applied to any archaeological site and is also suitable for other fields, such as urban planning, spatial planning, and environmental and geological studies.
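As an illustration of the two quality measures mentioned above, the following is a minimal sketch of how a band-wise correlation and the ERGAS index could be computed. It uses the commonly cited ERGAS definition, 100 · (h/l) · sqrt(mean over bands of RMSE² / mean²), and assumes that the fused image and the MS orthophotomosaic have already been resampled to the same grid. The function names, the array layout (bands, rows, columns), the resolution ratio, and the synthetic data are all assumptions for illustration and are not taken from the processing used in this study.

```python
import numpy as np


def band_correlations(fused, ms):
    """Pearson correlation between corresponding bands.

    Both inputs are arrays shaped (bands, rows, cols) on the same grid.
    """
    return [
        float(np.corrcoef(f.ravel(), m.ravel())[0, 1])
        for f, m in zip(np.asarray(fused, dtype=float), np.asarray(ms, dtype=float))
    ]


def ergas(fused, ms, resolution_ratio):
    """ERGAS = 100 * (h/l) * sqrt(mean_k(RMSE_k^2 / mean_k^2)).

    resolution_ratio is h/l: pixel size of the high-resolution image
    divided by pixel size of the low-resolution (MS) image.
    Lower values indicate smaller spectral deviation.
    """
    fused = np.asarray(fused, dtype=float)
    ms = np.asarray(ms, dtype=float)
    rmse_sq = np.mean((fused - ms) ** 2, axis=(1, 2))  # per-band squared RMSE
    mean_sq = np.mean(ms, axis=(1, 2)) ** 2            # per-band squared mean
    return float(100.0 * resolution_ratio * np.sqrt(np.mean(rmse_sq / mean_sq)))


# Synthetic example (hypothetical data, not the Olynthus imagery).
rng = np.random.default_rng(0)
ms_image = rng.uniform(50.0, 200.0, size=(4, 64, 64))
fused_image = ms_image + rng.normal(0.0, 5.0, size=ms_image.shape)

print("band correlations:", band_correlations(fused_image, ms_image))
print("ERGAS:", ergas(fused_image, ms_image, resolution_ratio=0.25))
```

A correlation close to 1 for each band and a low ERGAS value both point to limited spectral distortion; the two measures are complementary, since correlation ignores constant offsets between the images while ERGAS penalizes them.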

The study of the Hippodamian system of ancient Olynthus demonstrates that the meticulously planned urban grid was far more than an aesthetic exercise, as it played a pivotal role in the functioning of the community. The organized layout of streets and building blocks not only facilitated efficient circulation and effective drainage but also promoted a coherent separation between public (roads) and private (buildings) spaces.

Moreover, the architectural design and building typology of Olynthus reflect deeper social structures. On the other hand, the uniform house typology that residents were required to follow did not allow for architectural complexity, producing standardized houses and limiting the local identity of the ancient city. The integration of workshops and market areas within residential quarters highlights the symbiotic relationship between private production and collective economic activities.

Additionally, the use of local, durable materials and smart design choices, such as strategic building orientations for optimal natural lighting and ventilation, underscores the city’s emphasis on sustainability and resilience. This approach enhanced energy efficiency and provided natural climatic adaptation, but it indirectly had a negative influence on social interactions. The orientation, the central courtyard, the arrangement of the houses so that all household activities took place inside, the vestibule before the courtyard, and the total separation of the houses made the households inward-looking, without direct interaction with the other houses of the block.

The case of ancient Olynthus offers valuable insights into how advanced urban planning and architectural strategies can intertwine aesthetics, functionality, and socio-economic organization. The findings contribute to our broader understanding of sustainable urban development and may inspire contemporary practices in city planning and resource management.