Automatic Detection of Dynamic and Static Activities of the Older Adults Using a Wearable Sensor and Support Vector Machines

Abstract

1. Introduction

2. Methods and Materials

2.1. SVM

2.2. Participants and Data Collection

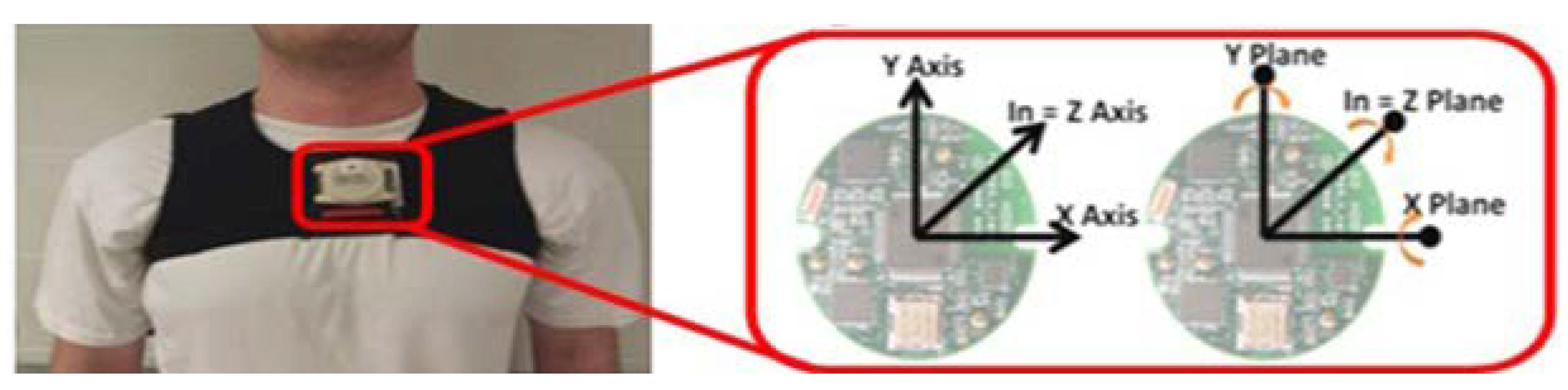

2.3. Instrumentation

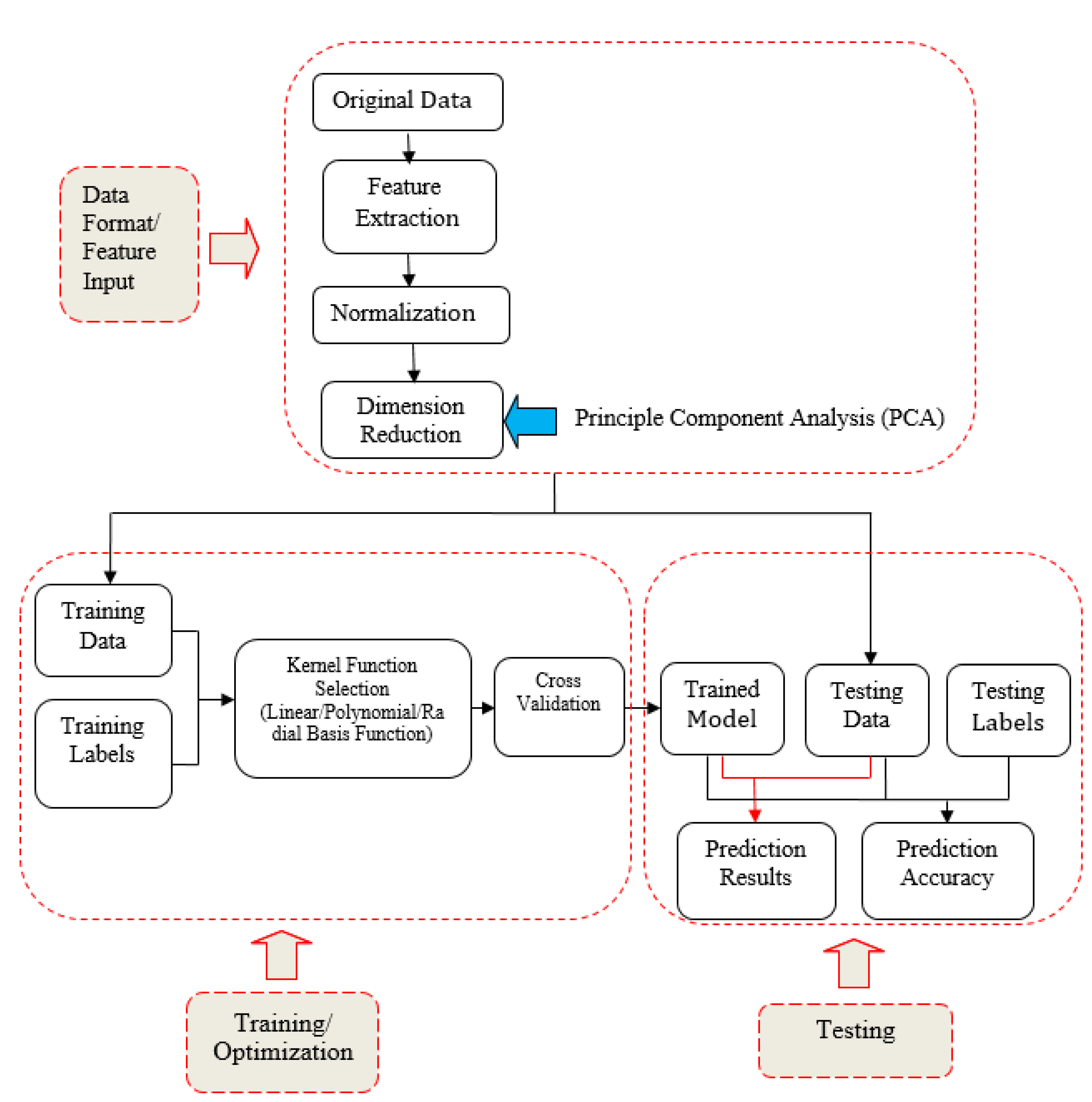

2.4. Data Analysis

- Step 1 Input of the Original Data

- Step 2 Feature Extraction

- (1) Mean Absolute Value: the mean absolute value of the original signal, $\bar{x}$, which estimates signal information in the time domain:

  $$\bar{x} = \frac{1}{N}\sum_{k=1}^{N} \left| x_k \right|$$

  where $x_k$ is the kth sampled point and N is the total number of samples over the entire signal.

- (2) Zero Crossings: the number of times the waveform crosses zero, which reflects signal information in the frequency domain.

- (3) Slope Sign Changes: the number of times the slope of the waveform changes sign, which measures the frequency content of the signal.

- (4) Waveform Length: the cumulative curve length over the entire signal, which provides information on the waveform complexity.
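
The four features above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `time_domain_features` is hypothetical, no noise threshold is applied to the zero-crossing or slope-sign-change counts, and waveform length is assumed to follow the common first-difference definition (the sum of absolute differences between consecutive samples).

```python
import numpy as np

def time_domain_features(x):
    """Return (MAV, ZC, SSC, WL) for a 1-D signal segment.

    Assumptions (not stated in the text): no dead-band threshold is used,
    and waveform length is the sum of |x[k+1] - x[k]|.
    """
    x = np.asarray(x, dtype=float)
    # Mean absolute value: (1/N) * sum(|x_k|)
    mav = float(np.mean(np.abs(x)))
    # Zero crossing: consecutive samples have opposite signs
    zc = int(np.sum(x[:-1] * x[1:] < 0))
    # First differences approximate the slope between samples
    d = np.diff(x)
    # Slope sign change: consecutive slopes have opposite signs
    ssc = int(np.sum(d[:-1] * d[1:] < 0))
    # Waveform length: cumulative length of the sampled curve
    wl = float(np.sum(np.abs(d)))
    return mav, zc, ssc, wl

# Toy segment, made up for illustration only
segment = [1.0, -1.0, 2.0, -2.0, 0.5]
print(time_domain_features(segment))
```

In practice a small amplitude threshold is often added to the two counting features so that sensor noise around zero does not inflate them; whether one was used here is not stated.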

- Step 3 Normalization

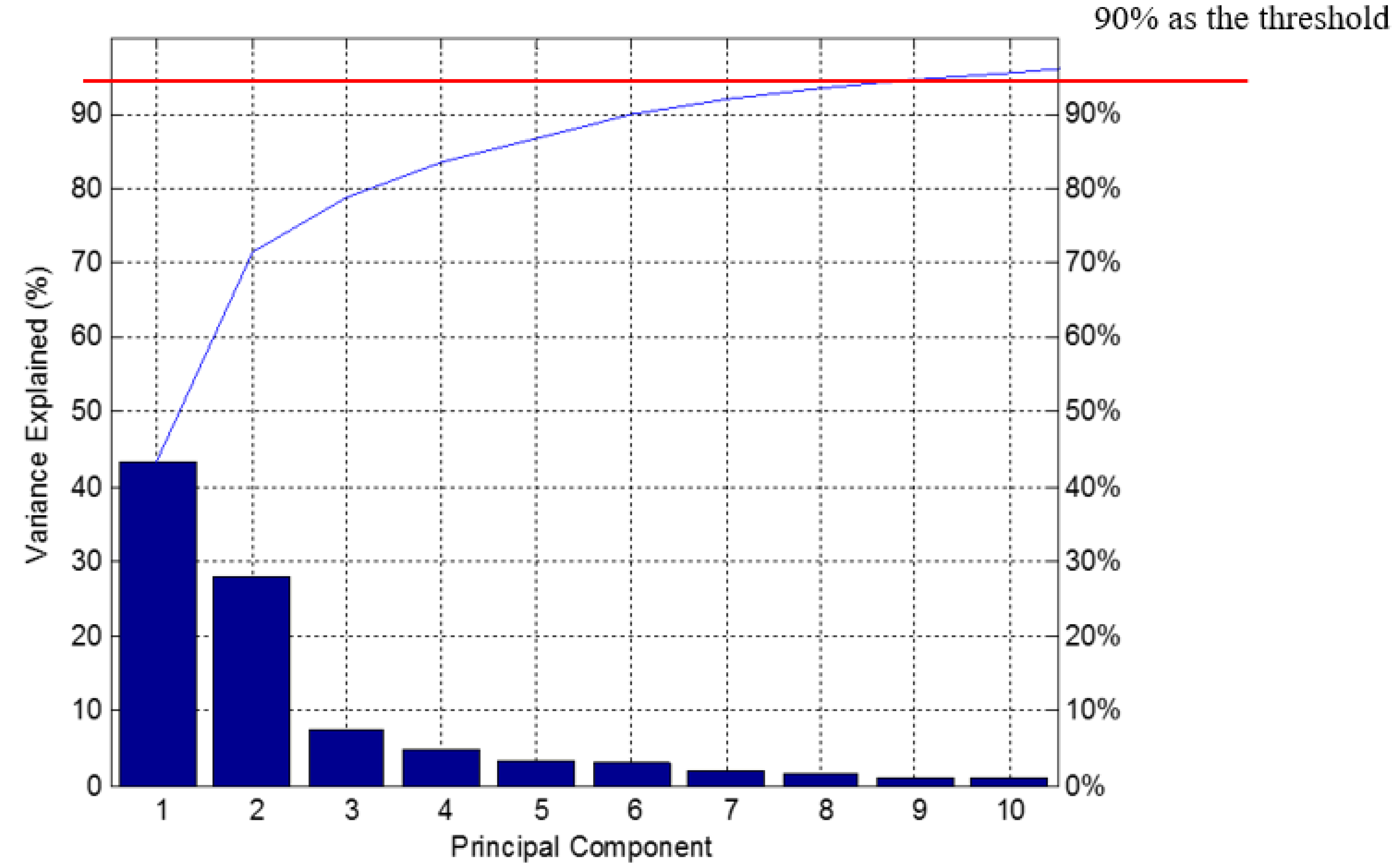

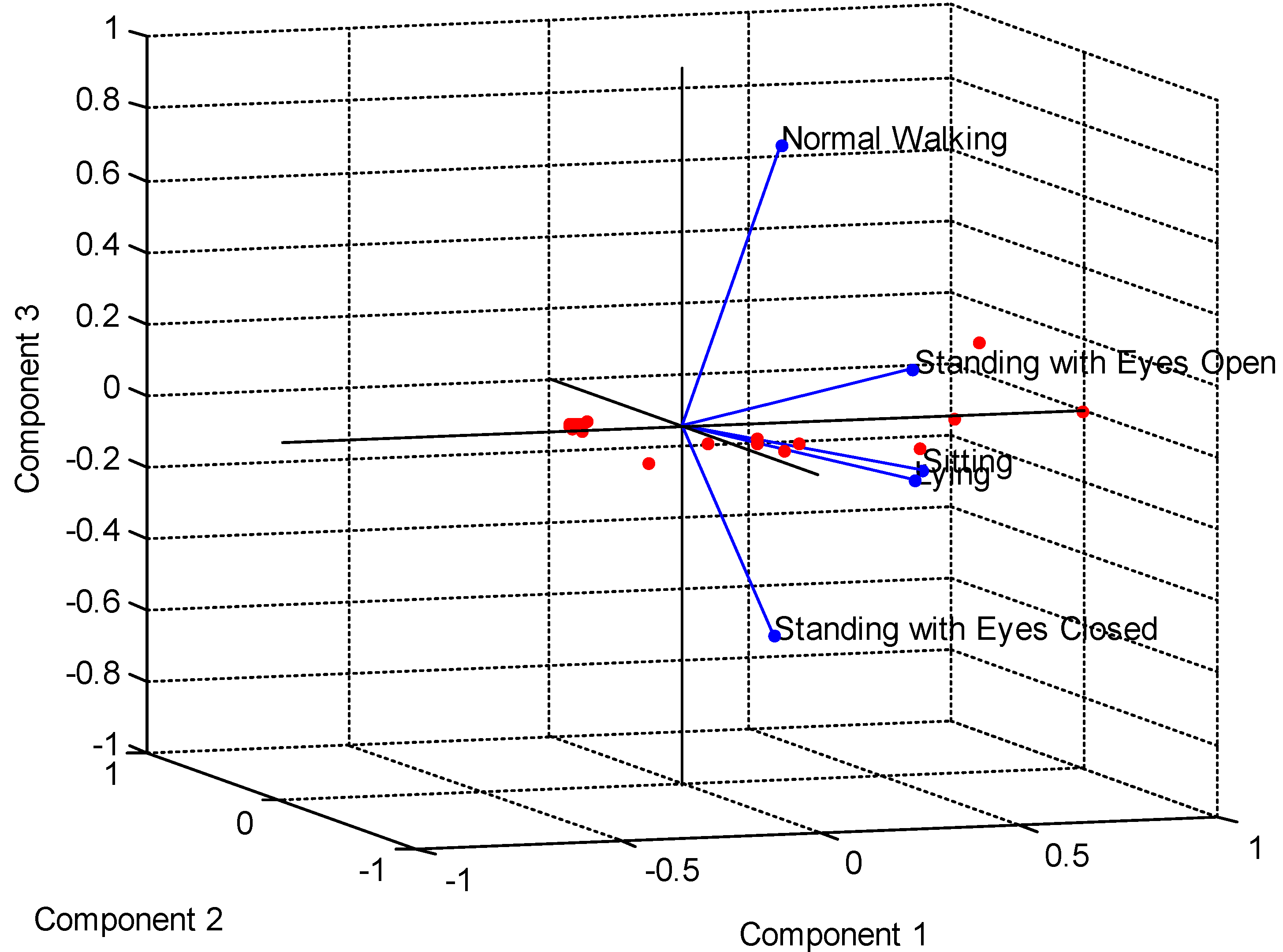

- Step 4 Principal Component Analysis (PCA)

- (1) Assume X is an m × n matrix, and choose a normalized direction in m-dimensional space along which the variance in X is maximized; save this vector as $p_1$.

- (2) Find another direction along which the variance is maximized, restricting the search to directions orthogonal to all previously selected directions (the orthonormality condition); save this vector as $p_2$. Repeat the procedure until m vectors are selected. The resulting ordered set of vectors $p_1, \dots, p_m$ are the principal components.

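- (1) From the m eigenvectors, reduce from m dimensions to k dimensions by choosing the k eigenvectors associated with the k largest eigenvalues $\lambda_1, \dots, \lambda_k$;

- (2) The Proportion of Variance (PoV) explained is:

  $$\mathrm{PoV} = \frac{\lambda_1 + \lambda_2 + \cdots + \lambda_k}{\lambda_1 + \lambda_2 + \cdots + \lambda_m}$$

  where the $\lambda_i$ are sorted in descending order, and the threshold of PoV is typically set to 0.9.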
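
The dimension-reduction rule above can be sketched as follows. This is a hedged illustration rather than the authors' code: the function name `pca_by_pov` is hypothetical, the principal components are obtained from an eigendecomposition of the sample covariance matrix, and the data matrix is arranged samples-by-features (the transpose of the m × n layout described in the text).

```python
import numpy as np

def pca_by_pov(X, pov_threshold=0.9):
    """Project X onto the fewest principal components whose PoV >= threshold.

    X is (n_samples, m_features); this layout is an assumption of the sketch.
    Returns (projected data, k, cumulative PoV for every k).
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # center each feature
    cov = np.atleast_2d(np.cov(Xc, rowvar=False))
    eigvals, eigvecs = np.linalg.eigh(cov)       # symmetric matrix: use eigh
    order = np.argsort(eigvals)[::-1]            # sort eigenvalues descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    pov = np.cumsum(eigvals) / np.sum(eigvals)   # PoV for k = 1..m
    # Smallest k with PoV >= threshold, clamped to m against round-off
    k = min(int(np.searchsorted(pov, pov_threshold)) + 1, len(eigvals))
    return Xc @ eigvecs[:, :k], k, pov

# Toy data, made up for illustration: almost all variance lies in column 0
X = np.array([[-2.0, 0.1], [-1.0, -0.1], [0.0, 0.0], [1.0, 0.1], [2.0, -0.1]])
Z, k, pov = pca_by_pov(X)
print(k, Z.shape)
```

`np.linalg.eigh` returns eigenvalues in ascending order, so the explicit descending sort is what makes the cumulative sum match the PoV definition.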

- Step 5 SVM Classifier Testing

3. Results

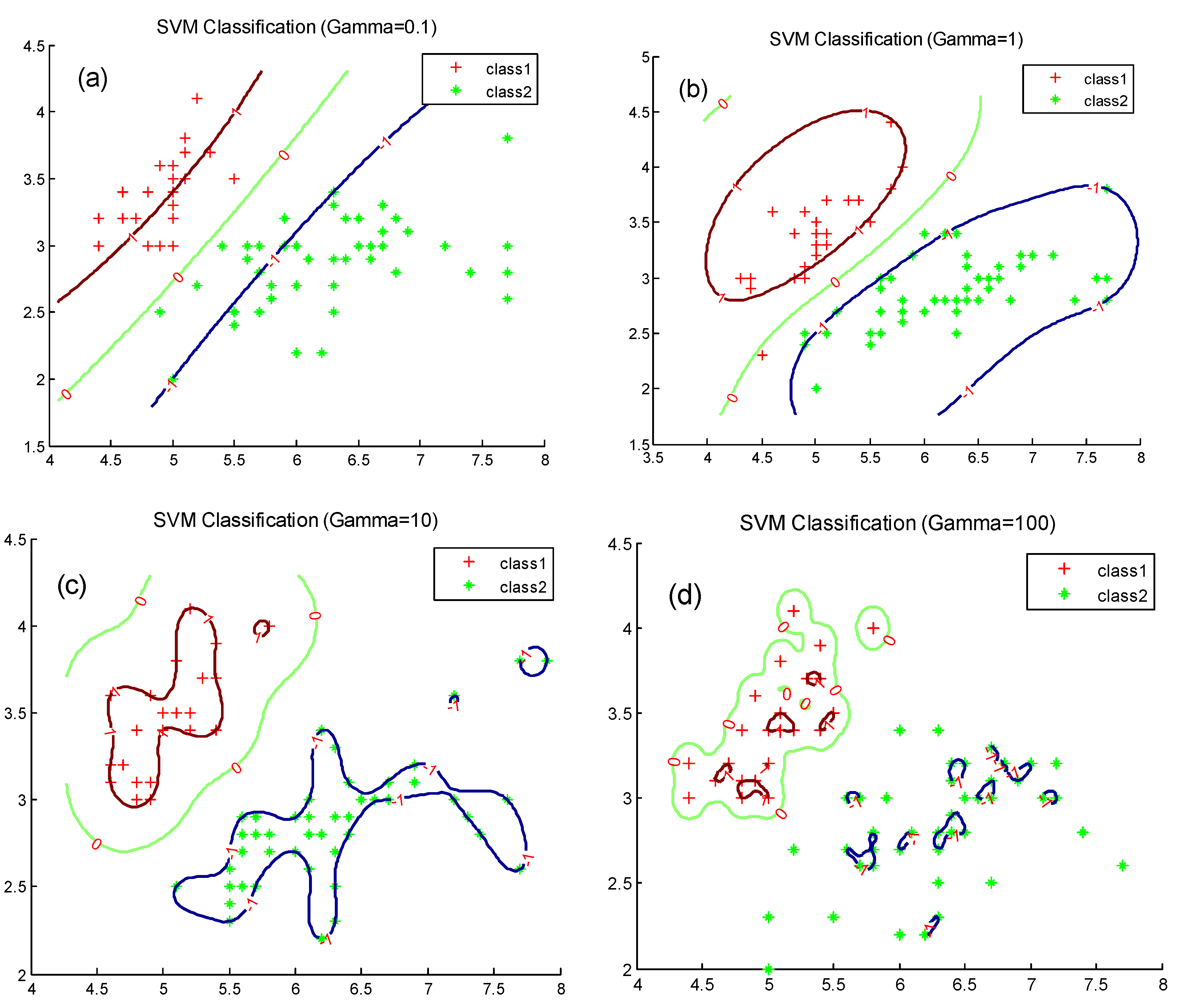

3.1. Effect of SVM Key Parameters on Classification

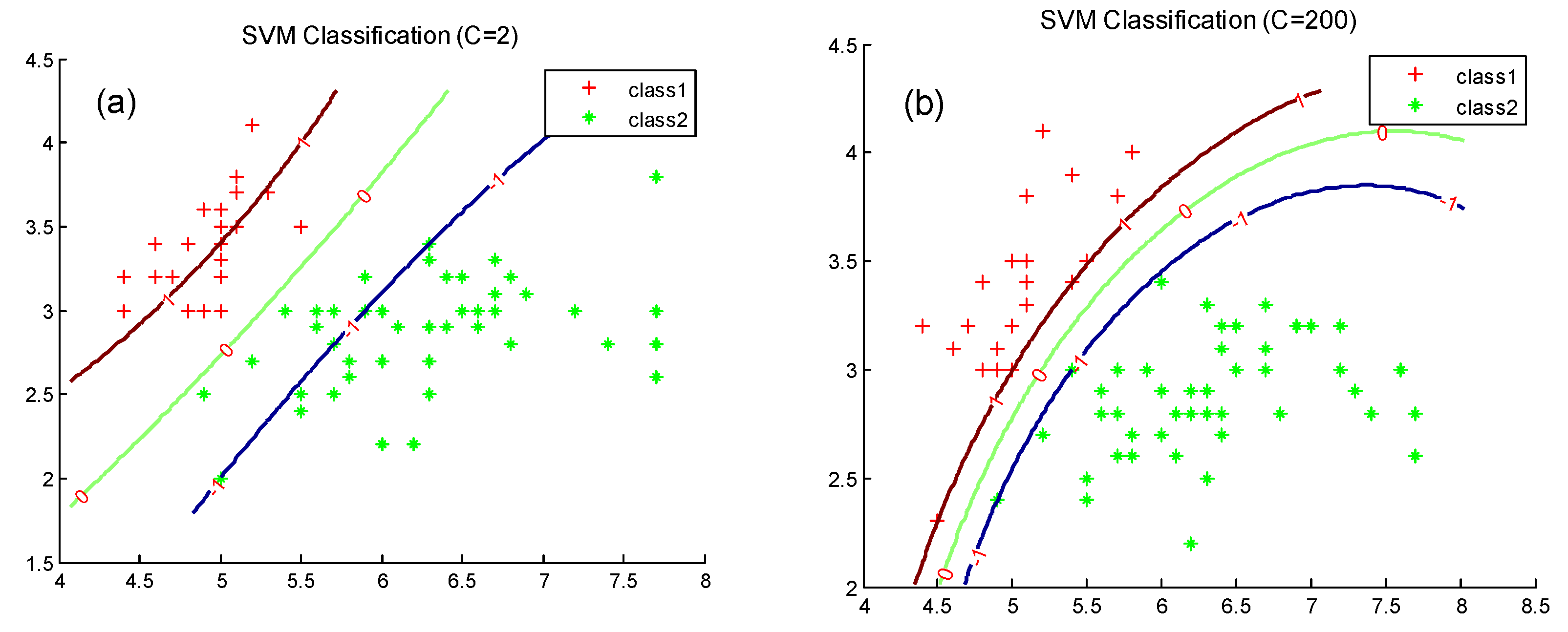

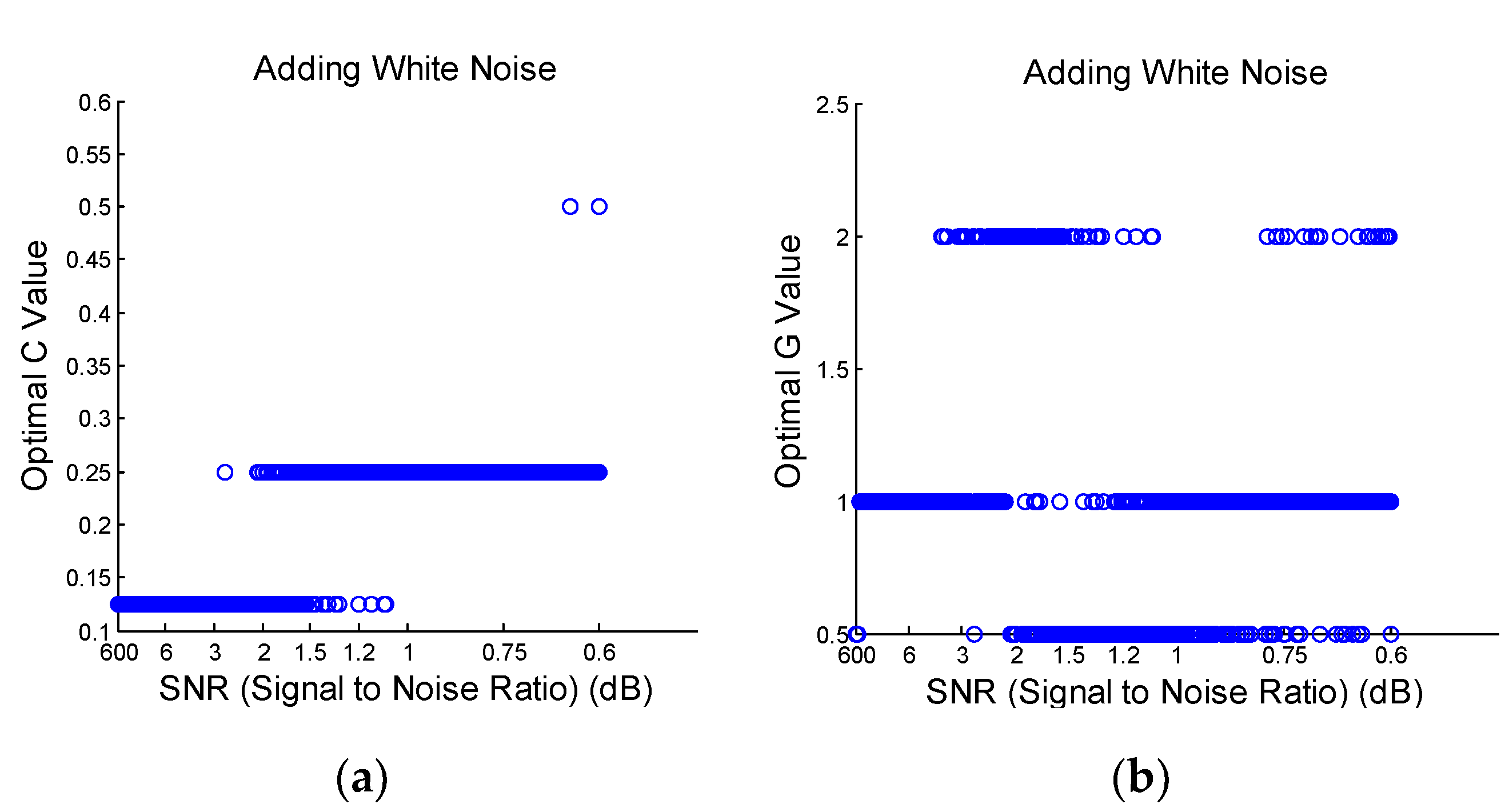

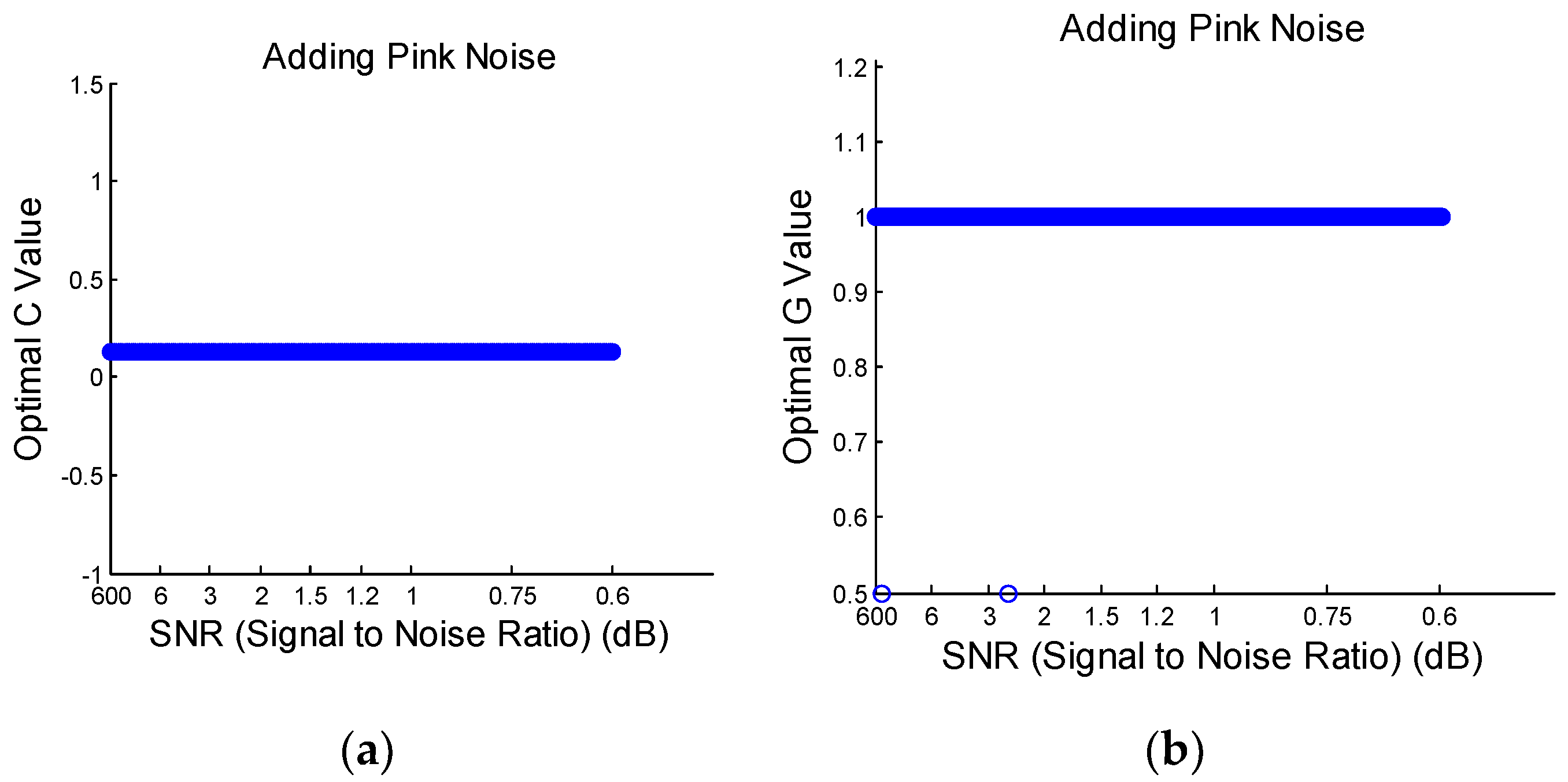

3.2. Optimization on SVM Key Parameters

3.3. Robustness of SVM Algorithm

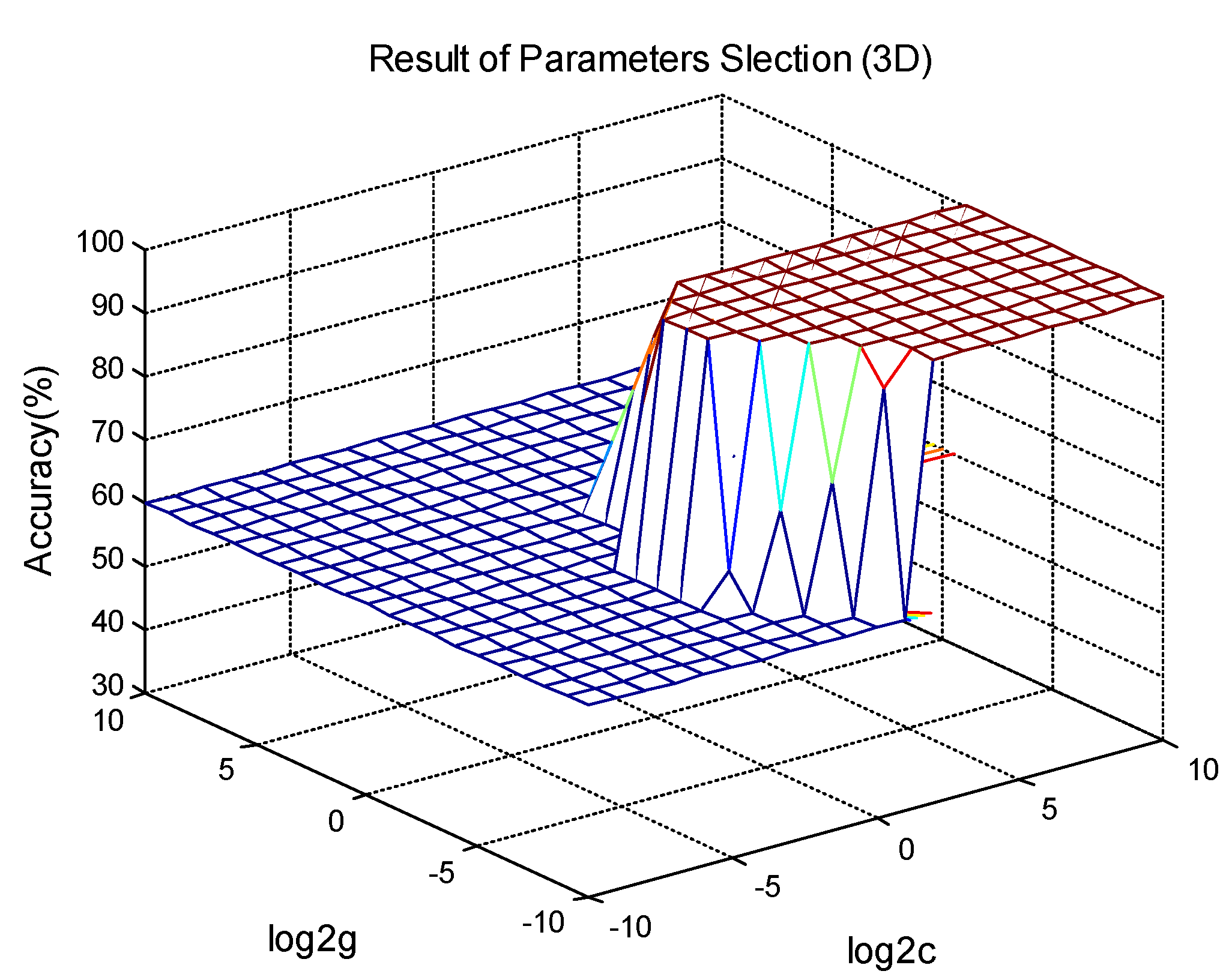

4. Experimental Results

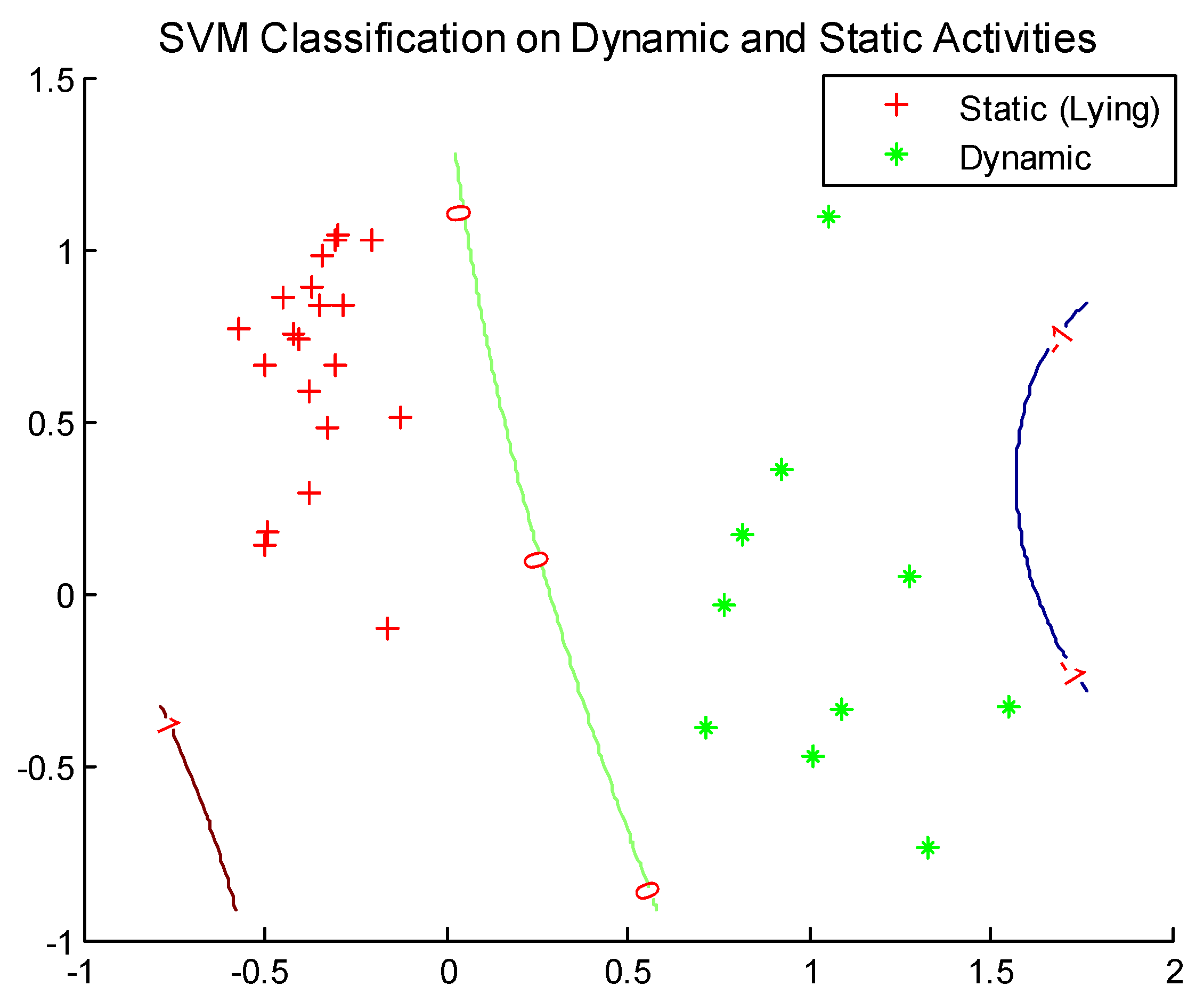

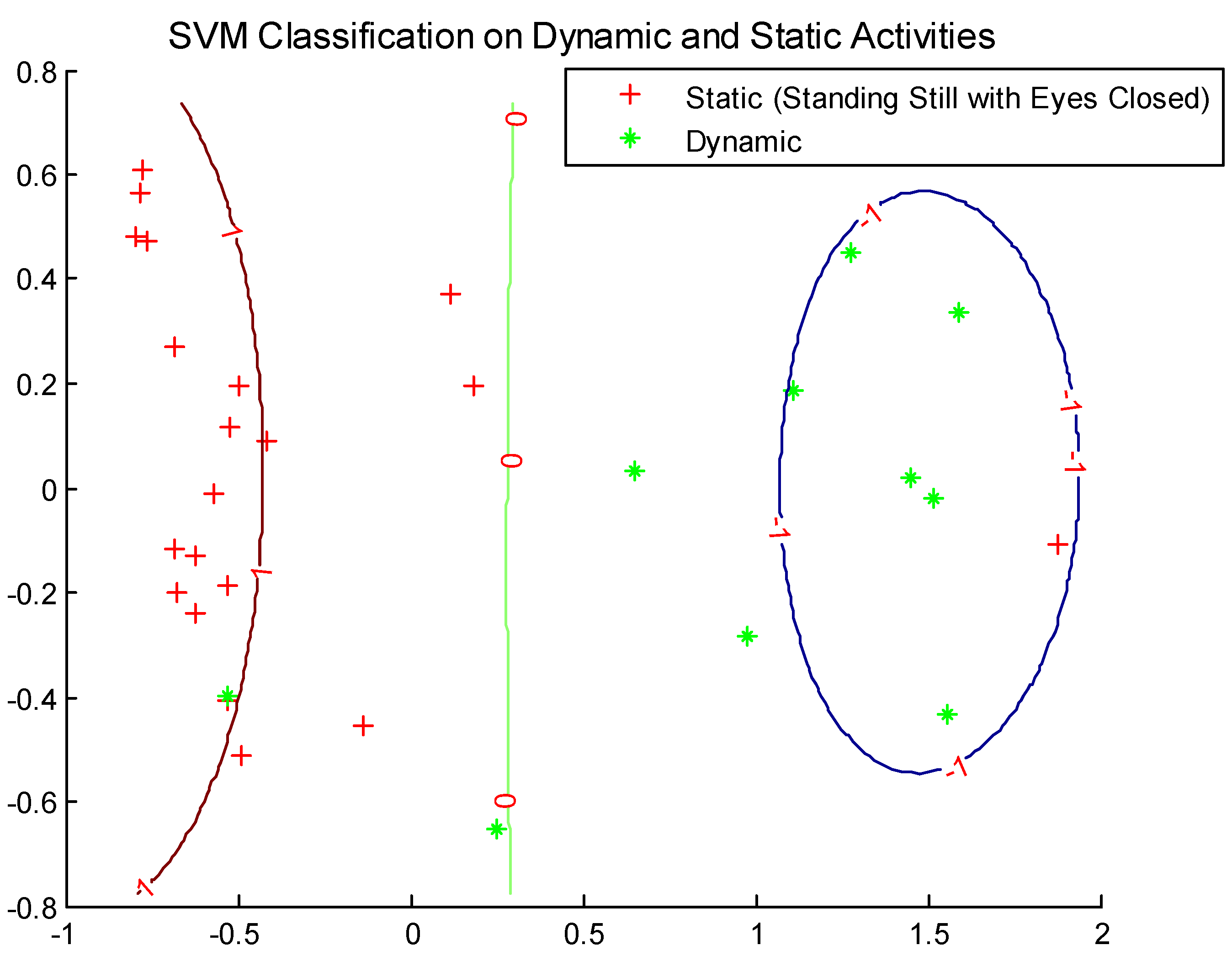

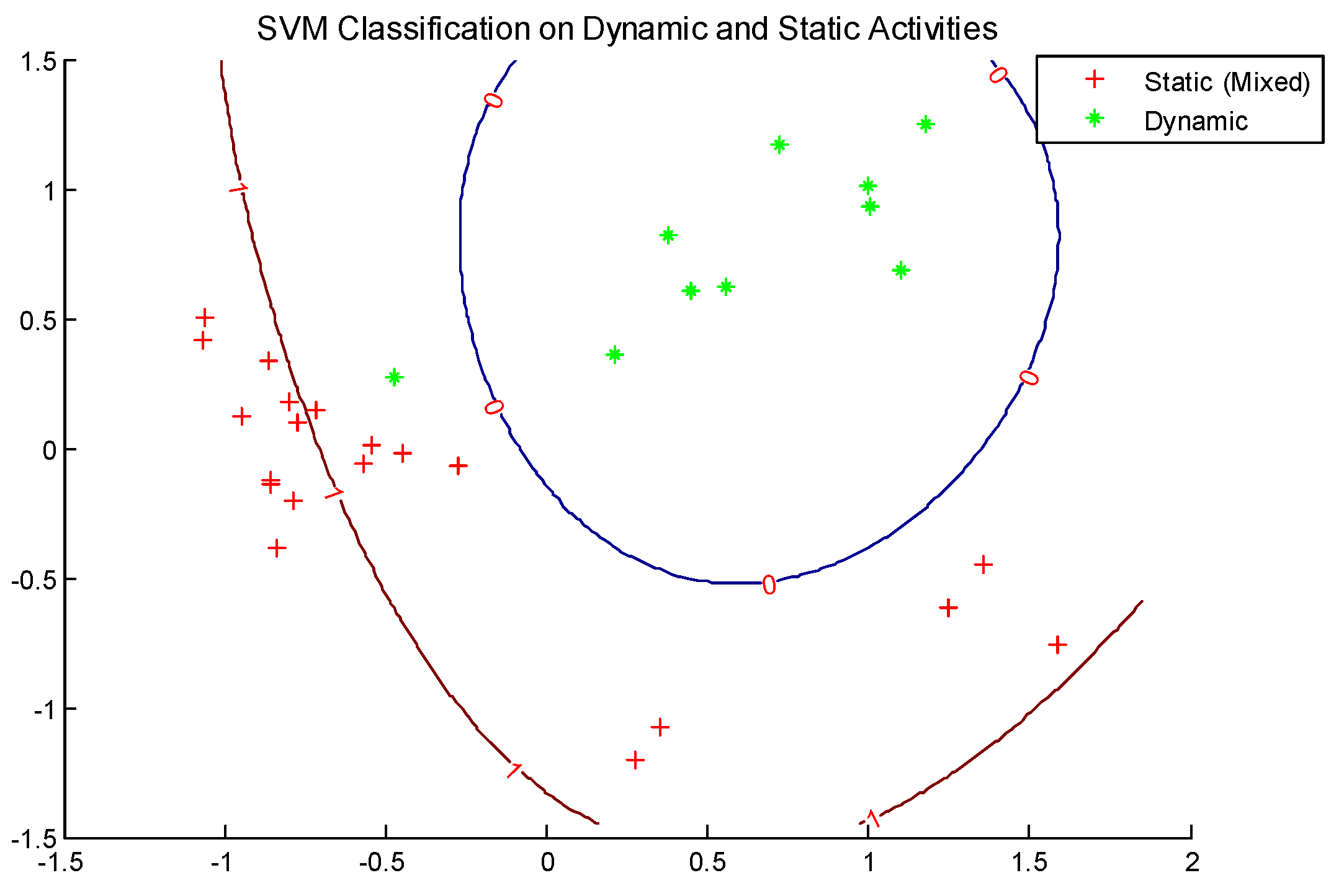

Classification on Dynamic and Static Activities

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Sinusoidal element (N) | 1 | 2 |
|---|---|---|
| (V) | 0.16 | 0.04 |
| (Hz) | 2.5 | 4 |
| (rad) | 0 | 0.32 |
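
As a rough illustration of how the sinusoidal parameters in this table could be used, the sketch below sums two sinusoids. Several things here are assumptions, since the symbols and the signal model did not survive in the text: the rows are read as amplitude (V), frequency (Hz), and phase (rad) from their units; the sine form $A \sin(2\pi f t + \varphi)$ is assumed; and the sampling rate `fs` and duration are arbitrary placeholder values.

```python
import numpy as np

# Values taken from the table; their interpretation is inferred from the units
AMPLITUDES_V = [0.16, 0.04]
FREQUENCIES_HZ = [2.5, 4.0]
PHASES_RAD = [0.0, 0.32]

def sinusoidal_part(t):
    """Sum of the two sinusoidal elements at time(s) t, in volts.

    Assumed form: sum_i A_i * sin(2*pi*f_i*t + phi_i).
    """
    t = np.asarray(t, dtype=float)
    return sum(
        a * np.sin(2.0 * np.pi * f * t + p)
        for a, f, p in zip(AMPLITUDES_V, FREQUENCIES_HZ, PHASES_RAD)
    )

fs = 100.0                        # hypothetical sampling rate in Hz
t = np.arange(0.0, 10.0, 1.0 / fs)  # hypothetical 10 s record
x = sinusoidal_part(t)
print(x.shape, float(np.max(np.abs(x))))
```

The transient components are not included because their functional form is not recoverable from the table alone.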

| Transient component (M) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Group | 1 | 1 | 2 | 2 | 3 | 3 | 4 | 4 |
| (V) | 0.06 | 0.05 | 0.06 | 0.05 | 0.06 | 0.05 | 0.06 | 0.05 |
| | 1.73 | 1.8 | 1.73 | 1.8 | 1.73 | 1.5 | 1.73 | 1.5 |
| (s) | 1.25 | 1.25 | 2.5 | 2.5 | 3.75 | 3.75 | 5.0 | 5.0 |
| (rad) | 0 | 0.33 | 0 | 0.33 | 0 | 0.33 | 0 | 0.33 |
| (Hz) | 15 | 12 | 15 | 12 | 15 | 10 | 15 | 10 |

| Dynamic | Static | Optimal C Value | Optimal G Value | Overall Classification Accuracy | Sensitivity | Specificity |

|---|---|---|---|---|---|---|

| Normal walking | Lying | 0.25 | 0.1436 | 100% | 100% | 100% |

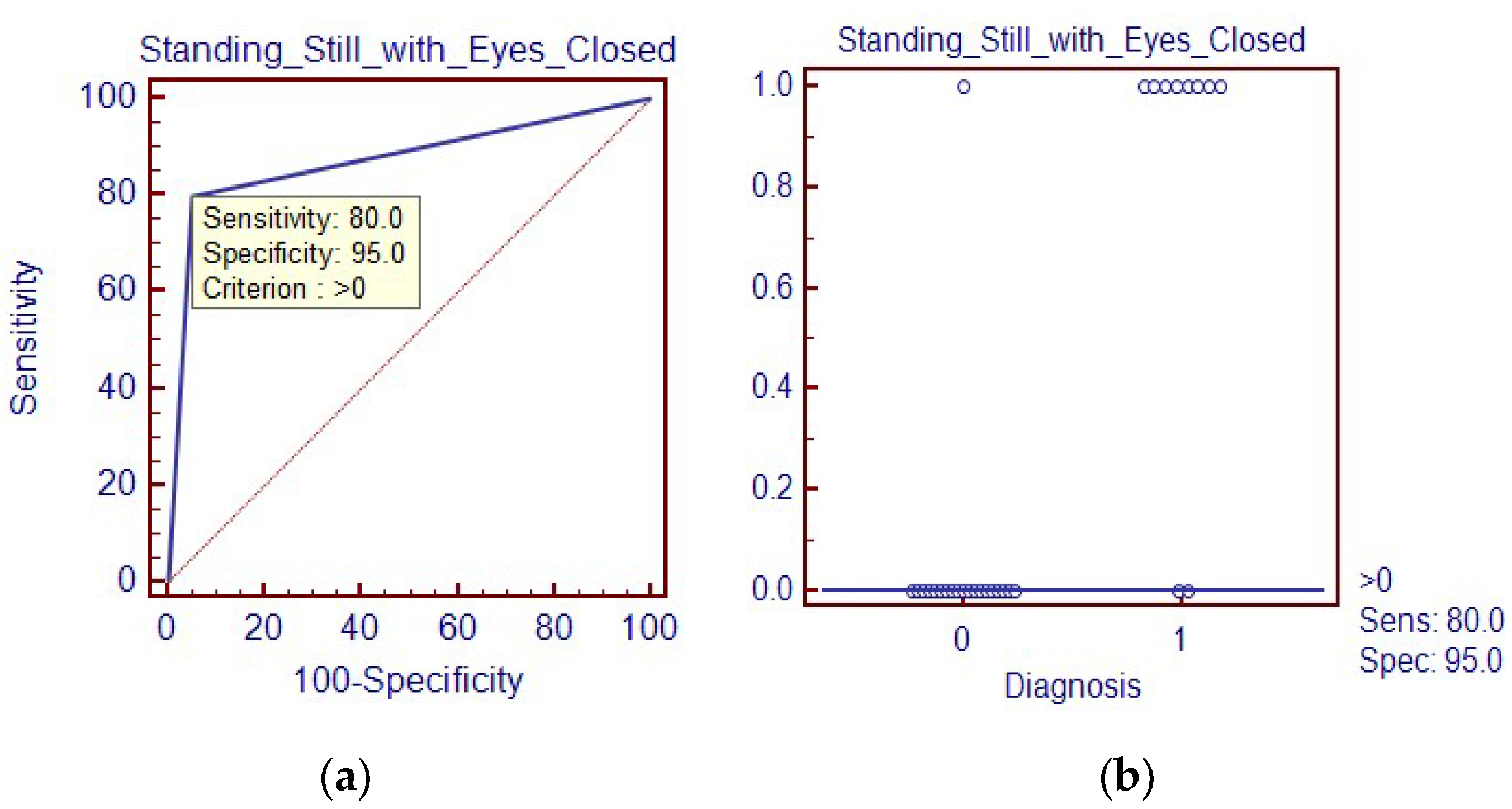

| Normal walking | Standing still with eyes closed | 0.25 | 0.4353 | 90% | 80% | 95% |

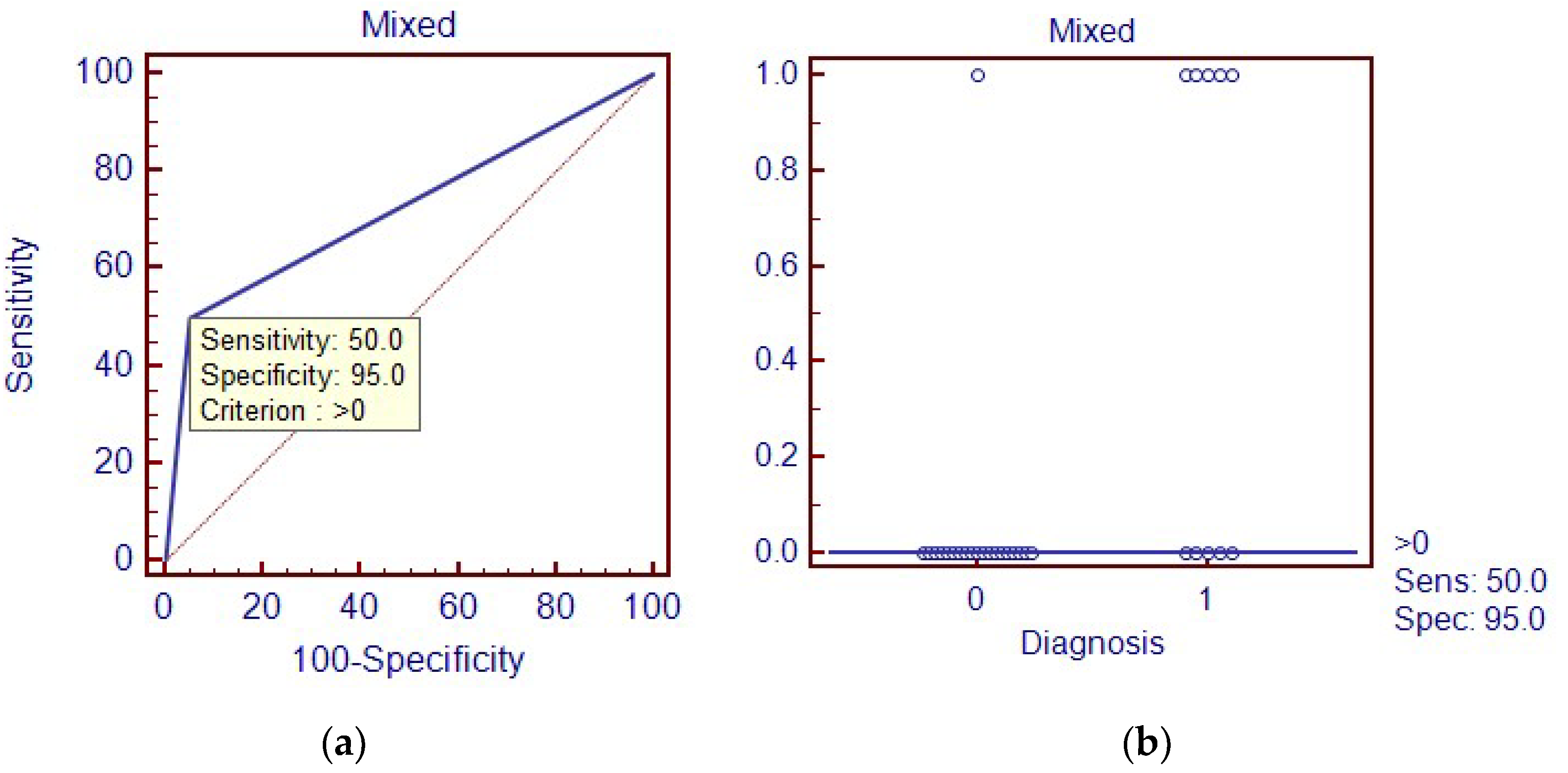

| Normal walking | Mixed activity | 2.2974 | 0.25 | 80% | 50% | 95% |
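
For readers unfamiliar with the three performance columns, the sketch below shows how overall accuracy, sensitivity, and specificity are computed from binary predictions. The function name `binary_metrics` and the label vectors are hypothetical; the test-set sizes and which class the authors treated as positive are not given here. The made-up split of 10 positive and 20 negative segments is chosen only because it happens to produce a 90% / 80% / 95% combination.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, sensitivity, specificity) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative sample")
    accuracy = (tp + tn) / len(pairs)
    sensitivity = tp / (tp + fn)   # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    return accuracy, sensitivity, specificity

# Hypothetical labels: 10 positives (8 detected), 20 negatives (19 rejected)
y_true = [1] * 10 + [0] * 20
y_pred = [1] * 8 + [0] * 2 + [0] * 19 + [1] * 1
acc, sens, spec = binary_metrics(y_true, y_pred)
print(f"{acc:.0%} {sens:.0%} {spec:.0%}")  # prints: 90% 80% 95%
```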

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Soangra, R.; Lockhart, T.E. Automatic Detection of Dynamic and Static Activities of the Older Adults Using a Wearable Sensor and Support Vector Machines. Sci 2020, 2, 62. https://doi.org/10.3390/sci2030062
