1. Introduction

Flooding and flood-induced scour are major concerns for highway bridges and are known to be the leading cause of bridge failure in the United States, accounting for almost 53% of all failures [1]. During flooding, increased flow rates and velocities can erode sediment around bridge piers, weakening their foundations and increasing the risk of scour failure. Prompt detection of flooding, which is closely associated with scour, is crucial for issuing early warnings and implementing emergency measures such as reinforcing piers, deploying protective barriers, or restricting traffic. These actions help mitigate the risk of bridge collapse and protect lives and property. Moreover, detecting the cessation of flooding allows for immediate post-event inspections, avoiding delays that can exacerbate damage. Additionally, systematic monitoring and data collection during flood events aid in analyzing flood patterns and trends, providing a scientific basis for improving bridge design and maintenance. Therefore, advancing flood detection research is essential for ensuring the resilience and safety of infrastructure, supporting both immediate risk management and long-term preventive strategies.

Flood events are a critical factor in the risk assessment of bridges. Ahamed et al. [2] proposed a comprehensive fragility analysis framework that incorporates flow discharges and scour depths to assess the vulnerability of bridges to flooding in real time. Bento et al. [3] introduced a risk-based methodology that combines uncertainty with an averaging approach to define design floods and assess scour at bridge foundations, particularly under extreme flood conditions. Lamb et al. [4] analyzed data from over 50 railway bridge failures during flood events to construct fragility curves that quantify the probability of failure. Prendergast et al. [5] developed calibrated numerical models to reproduce structural responses and assess bridge performance under flooding and seismic actions. Floods also significantly alter the hydrodynamics around bridge piers, necessitating adjustments in the calculation and assessment of scour depth. Mehta and Yadav [6] evaluated scour profiles under various flood events, using flow velocity to estimate scour depths. Link et al. [7] studied local scour and sediment deposition at bridge piers during flood waves to explore the impacts of different flow and sediment regimes. Zhang et al. [8] developed a numerical solver to simulate bridge failures under extreme flood hazards, while Pizarro and Tubaldi [9] demonstrated that scour depth is highly sensitive to the parameters describing the flood hydrograph. The studies above highlight that sudden increases in flow and scour depth may cause bridge failure. Timely and accurate detection of floods is therefore essential for calculating bridge scour depths and assessing overall bridge risk, underscoring the need for precise and reliable flood monitoring systems.

Numerous researchers have focused on flood detection, predominantly using water level measurements. Miau and Hung [10] developed a deep learning model that integrates the strengths of Convolutional Neural Network (CNN) and Gated Recurrent Unit (GRU) architectures to extract complex features of river water levels, enabling the detection and forecasting of floods in Taiwan. Lin et al. [11] designed an early warning system that employs the Mask R-CNN deep learning model to monitor real-time changes in bridge scour depth during floods. Pally and Samadi [12] created a Python package utilizing various deep learning models, such as YOLOv3 and Fast R-CNN, to estimate and classify flood water levels, assessing aspects such as depth, severity, and risk. Alternative methods for flood detection have also been explored. Cao et al. [13] introduced an iterative multi-scale chessboard segmentation-based tile selection method for unsupervised flood detection across large areas using Synthetic Aperture Radar data. Tanim et al. [14] combined Random Forest, Support Vector Machine (SVM), and Maximum Likelihood Classifier with data from road closure reports and satellite imagery to develop a machine learning model for flood detection in San Diego, CA, USA. Qundus et al. [15] proposed a wireless sensor network decision model based on SVM for flood disaster detection, incorporating data on air pressure, wind speed, water level, temperature, humidity, and precipitation. Despite this extensive research, many existing methods rely heavily on water level indicators or require complex, high-volume data, which limits their simplicity and efficiency. As an alternative, this paper proposes a novel flood detection method utilizing vibrations of bridge piers, offering a straightforward and practical solution that complements traditional water level and rainfall monitoring techniques.

Amid rapid advancements in artificial intelligence, an increasing number of researchers are leveraging machine learning and deep learning techniques to process and analyze infrastructure monitoring data. For instance, Meixedo et al. [16] analyzed response data from a large bridge subjected to train-induced vibrations, employing K-means clustering to classify damage-sensitive features. Deng et al. [17] developed a fatigue damage prognosis method using Long Short-Term Memory networks, validated with steel deck response data from a bridge under traffic load. Similarly, Ni, Zhang, and Noori [18] utilized a one-dimensional Convolutional Neural Network (1D CNN) to detect abnormal behaviors in bridge structures, corroborated by data from a Structural Health Monitoring system in China. Shi et al. [19] introduced a real-time damage detection method using deep support vector domain description, tested with data from laboratory shake table experiments. These studies underscore the significant potential of machine learning algorithms for infrastructure monitoring. Specifically, flood detection based on vibration data from bridge piers is a time-series classification problem. Numerous machine learning algorithms are suitable for this task, among which the 1D CNN is particularly attractive owing to its simplicity, robust feature extraction, and low computational footprint. For example, Sony et al. [20] implemented a windowed 1D CNN to classify multiclass damage in bridges using vibration response data. Chen et al. [21] developed a method for diagnosing rolling bearing faults using a 1D CNN, achieving an accuracy of 99.2%. Ince [22] applied shallow, adaptive 1D CNNs for the real-time detection and classification of broken rotor bars in induction motors. Abdoli, Cardinal, and Koerich [23] designed a neural network architecture based on a 1D CNN to classify environmental sounds, which was capable of handling audio signals of varying lengths. Furthermore, Ragab et al. [24] explored a 1D CNN combined with Bayesian optimization and ensemble learning for environmental sound classification. Given these previous studies, applying 1D CNNs to flood detection based on bridge pier vibrations holds considerable promise for enhancing the reliability and effectiveness of infrastructure safety measures.

Another common approach for processing time-series data is the Continuous Wavelet Transform (CWT), which yields rich time-frequency representations. Unlike the Hilbert Transform, which primarily extracts instantaneous phase and amplitude information, the CWT can capture signal details across multiple scales. This makes the CWT particularly well suited for analyzing bridge vibrations, which are often influenced by a variety of sources operating at different frequencies and timescales. Moreover, this transformation allows the flood detection task to be framed as an image classification problem. Among the various algorithms for image classification, ResNet18 is a popular choice owing to its relatively deep architecture enhanced by residual connections, which enable the model to retain important features from earlier layers and to learn complex representations with improved training efficiency. ResNet18 has been widely adopted across diverse fields for its high accuracy and generalization capability, and numerous studies have demonstrated its effectiveness in medical applications. For instance, Jing et al. [25] developed an improved ResNet18 model for classifying electrocardiogram signals, achieving an accuracy of 96.5%. Liu, She, and Chen [26] applied ResNet18 with Magnetic Resonance Imaging to diagnose femoral head necrosis, attaining an accuracy of 99.27%. Sarwinda et al. [27] utilized ResNet18 to detect colorectal cancer from images of colon glands, with an accuracy exceeding 80%. Odusami et al. [28] implemented a fine-tuned ResNet18 network to diagnose early symptoms of Alzheimer’s disease, achieving over 99% accuracy in tests on neuroimaging data from 138 patients. Furthermore, Liu, Fan, and Yang [29] introduced a 3D ResNet18 Dual Path Faster R-CNN model for lung nodule detection, demonstrating high performance. These examples underscore the efficacy of ResNet18 in specialized image classification tasks, which inspired us to investigate its application to flood detection using CWT images derived from bridge vibrations.

This paper proposes a deep learning-based flood detection method using bridge vibration data collected via in situ accelerometers on a bridge in the U.S. The remainder of the paper is organized as follows:

Section 2 introduces and discusses the characteristics of the accelerometer data used in this study.

Section 3 presents the methodology and architectural details of the proposed models.

Section 4 details the experiments conducted and the results obtained. Section 5 discusses the findings, and the conclusions are drawn in Section 6.

3. Methodology

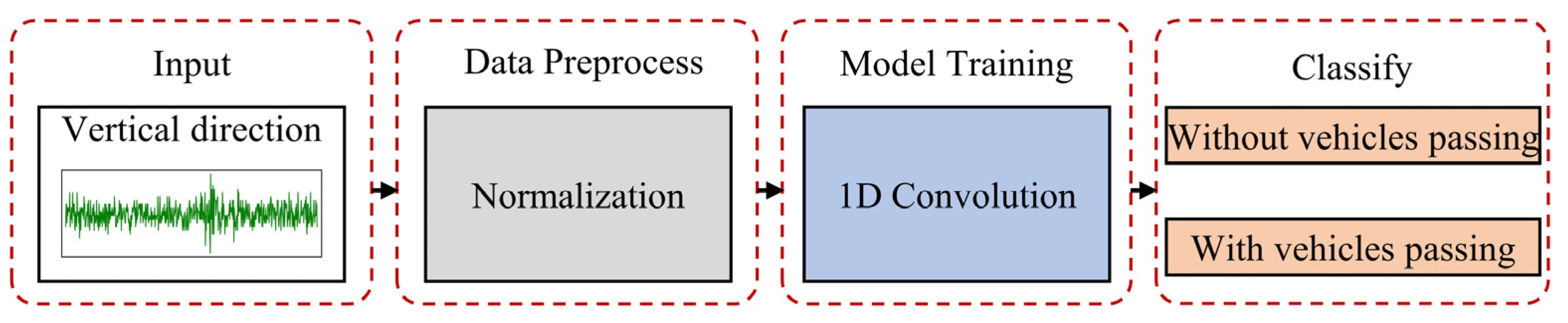

3.1. Flood Detection Method Based on Bridge Pier Vibrations

With the advancement of artificial intelligence, machine learning has demonstrated robust performance across diverse fields. In this paper, a deep learning-based method for detecting floods from bridge pier vibrations is proposed, as depicted in Figure 6.

As outlined in Figure 6, the process consists of two stages: detection of vehicles passing over the bridge and detection of flood events. The overall pipeline is decomposed into four components, as denoted in Figure 6 and described below.

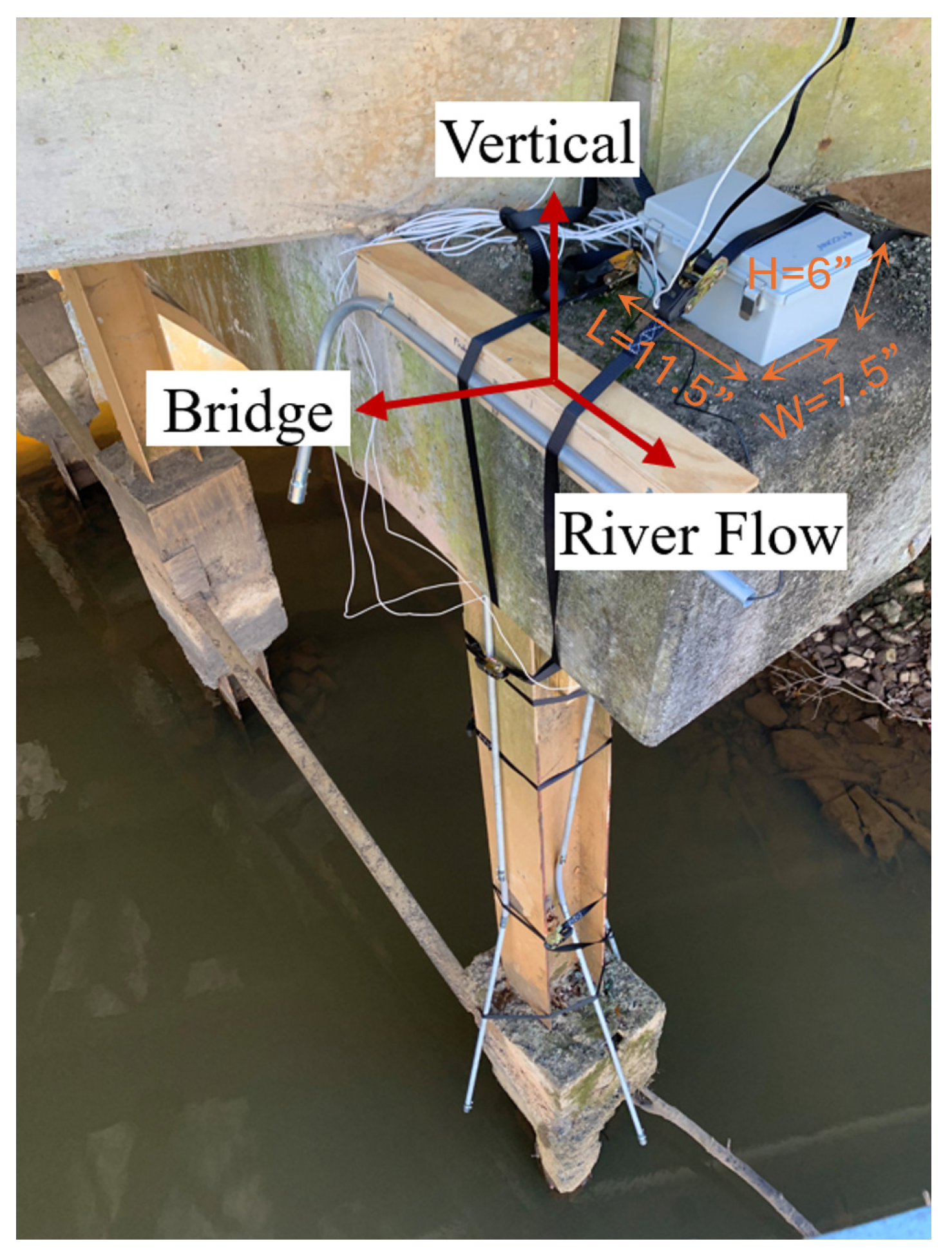

(1) Data collection. Accelerometers are installed on the bridge pier to capture 10 s segments of vibration data in the river flow, bridge, and vertical directions. This data collection is foundational for analyzing the bridge’s response under different environmental conditions.

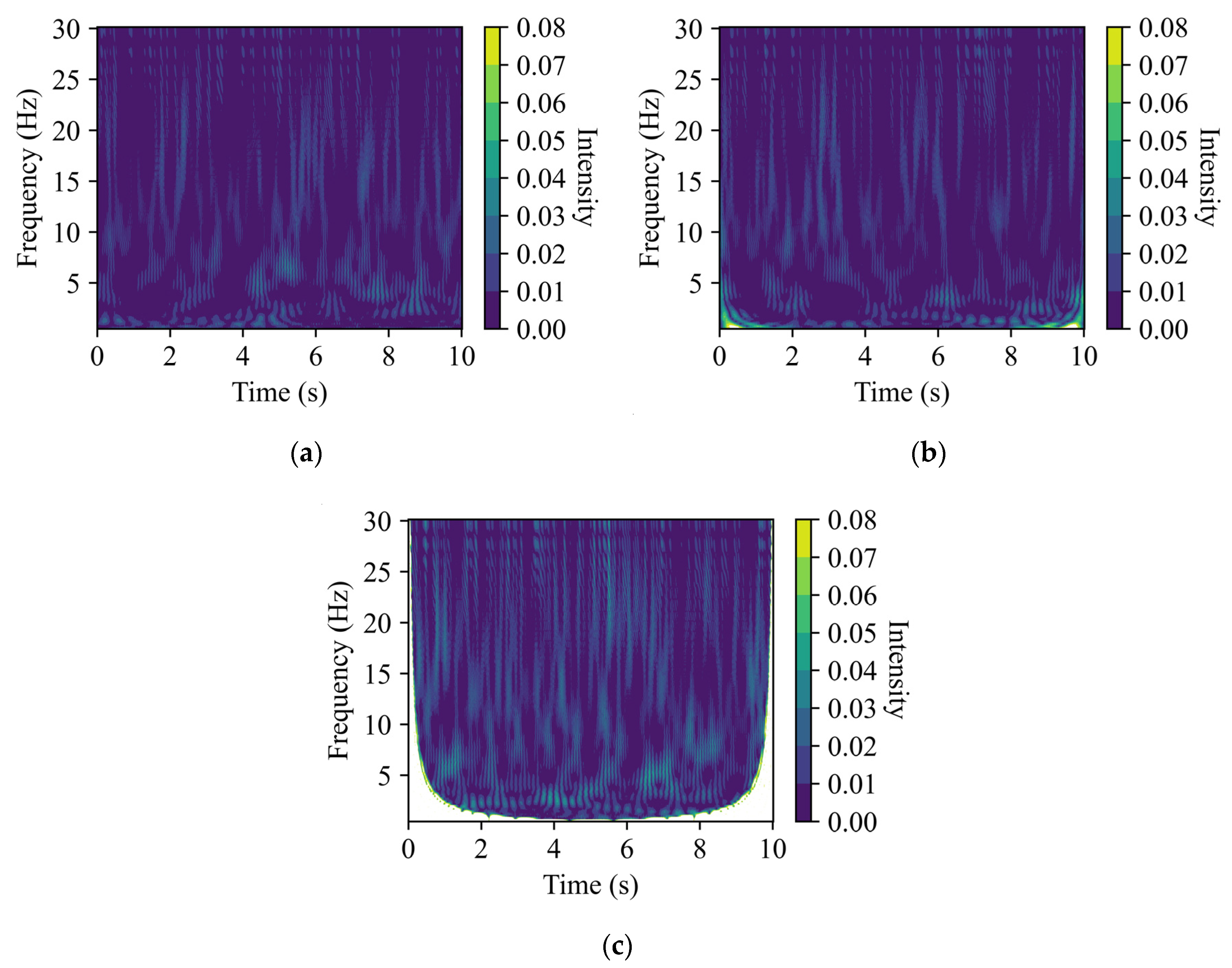

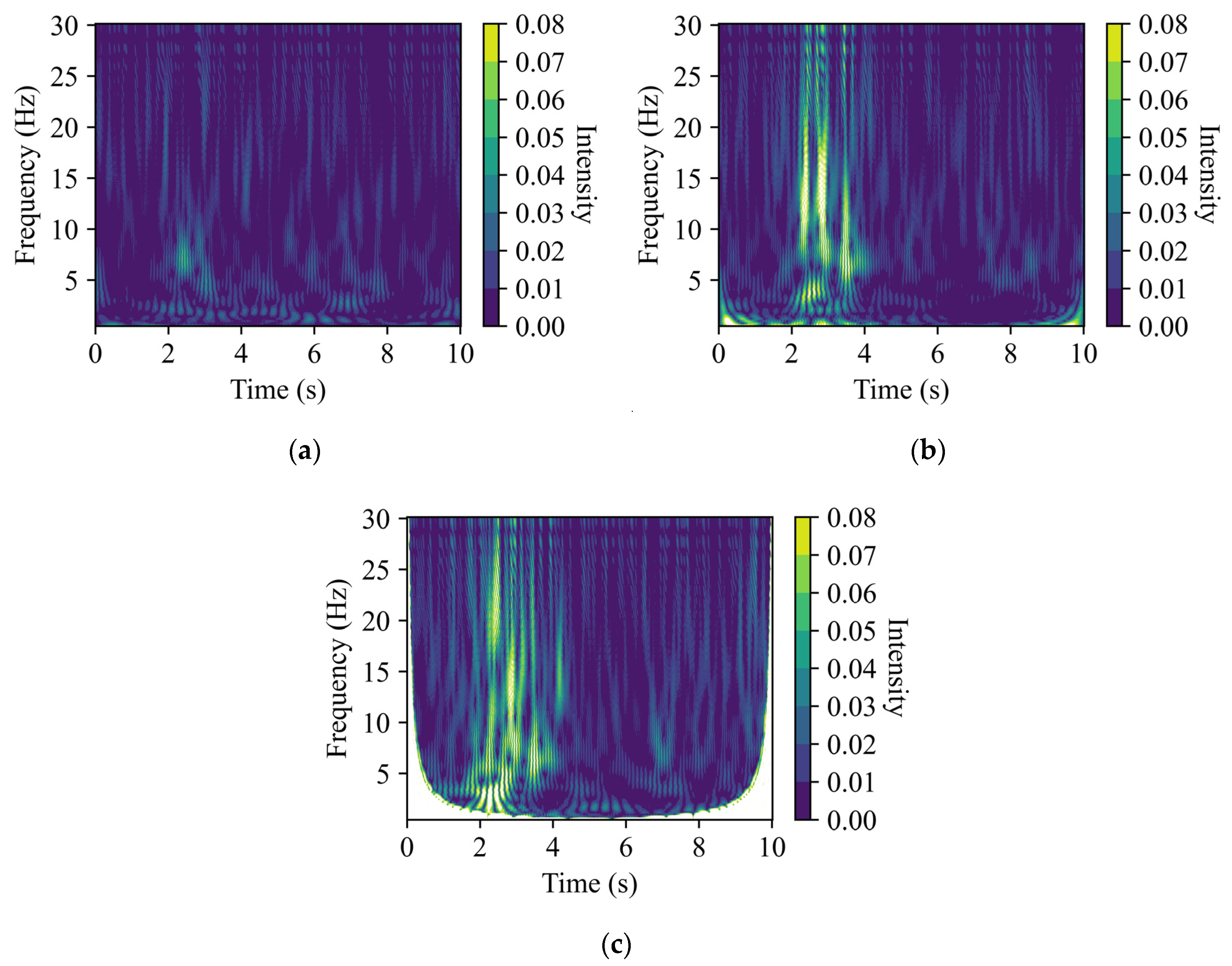

(2) CWT analysis and vehicle passage detection. Because the time-frequency representation of the vertical direction shows a broader and more sustained energy distribution across a wider frequency range when vehicles pass, this direction is used for the initial analysis to classify two bridge situations: without and with passing vehicles. First, the data undergo CWT to obtain a time-frequency representation. The transformed data are then fed into a pre-trained ResNet18 model to determine whether vehicles are passing. Depending on the classification outcome, the process proceeds to step (3) or step (4), as described below.

(3) Flood detection without passing vehicles. In scenarios without vehicular movement, the river flow and bridge direction data, which are influenced by flood events, are analyzed using a trained 1D Convolution model. This model, trained exclusively on datasets without passing vehicles, minimizes confounding influences and focuses on detecting flood-induced vibration patterns.

(4) Flood detection with passing vehicles. When vehicles are passing over the bridge, the vibration data from the river flow and bridge directions are processed by another 1D Convolution model trained solely on datasets with passing vehicles. This separation ensures that flood detection is tailored to the specific dynamic conditions induced by vehicle movements.

When a flood event is detected, the system transmits an alert, enabling timely notification so that preemptive actions can be taken to ensure bridge safety.
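The routing logic of steps (1) to (4) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `split_segments`, `detect_flood`, `has_vehicle`, `quiet_expert`, and `busy_expert` are hypothetical, the toy threshold functions merely stand in for the trained ResNet18 and 1D Convolution models, and the 60 Hz rate is inferred from the 600 samples per 10 s segment stated in Section 3.3.

```python
from typing import Callable, List, Sequence

# Assumption: 600 samples per 10 s segment, i.e. roughly 60 Hz sampling.
SEGMENT_LEN = 600

Channel = Sequence[float]
FloodModel = Callable[[List[Channel]], bool]


def split_segments(stream: Channel, n: int = SEGMENT_LEN) -> List[Channel]:
    """Cut a continuous record into non-overlapping n-sample windows (incomplete tail dropped)."""
    return [stream[i:i + n] for i in range(0, len(stream) - n + 1, n)]


def detect_flood(
    vertical: Channel,
    river_flow: Channel,
    bridge: Channel,
    vehicle_detector: Callable[[Channel], bool],
    flood_model_no_vehicle: FloodModel,
    flood_model_with_vehicle: FloodModel,
) -> bool:
    """Two-stage routing: screen for vehicles, then call the matching flood 'expert'."""
    horizontal = [river_flow, bridge]
    # Step (2): vehicle screening uses the vertical channel only.
    if vehicle_detector(vertical):
        # Step (4): expert trained only on segments with passing vehicles.
        return flood_model_with_vehicle(horizontal)
    # Step (3): expert trained only on segments without passing vehicles.
    return flood_model_no_vehicle(horizontal)


# --- Toy stand-ins with hypothetical thresholds (NOT trained models) ---
def peak(ch: Channel) -> float:
    return max(abs(v) for v in ch)


def has_vehicle(vertical: Channel) -> bool:
    return peak(vertical) > 0.5


def quiet_expert(chs: List[Channel]) -> bool:
    return peak(chs[1]) > 0.1  # looks at the bridge-direction channel


def busy_expert(chs: List[Channel]) -> bool:
    return peak(chs[1]) > 0.3  # stricter threshold when traffic is present


calm = [0.0] * SEGMENT_LEN
bridge_seg = [0.2] * SEGMENT_LEN
print(detect_flood(calm, calm, bridge_seg, has_vehicle, quiet_expert, busy_expert))  # True
print(detect_flood([1.0] * SEGMENT_LEN, calm, bridge_seg, has_vehicle, quiet_expert, busy_expert))  # False
```

The same bridge-direction segment is flagged by one expert and not by the other, which illustrates why the screening stage matters: each second-stage model only ever sees the traffic condition it was trained on.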

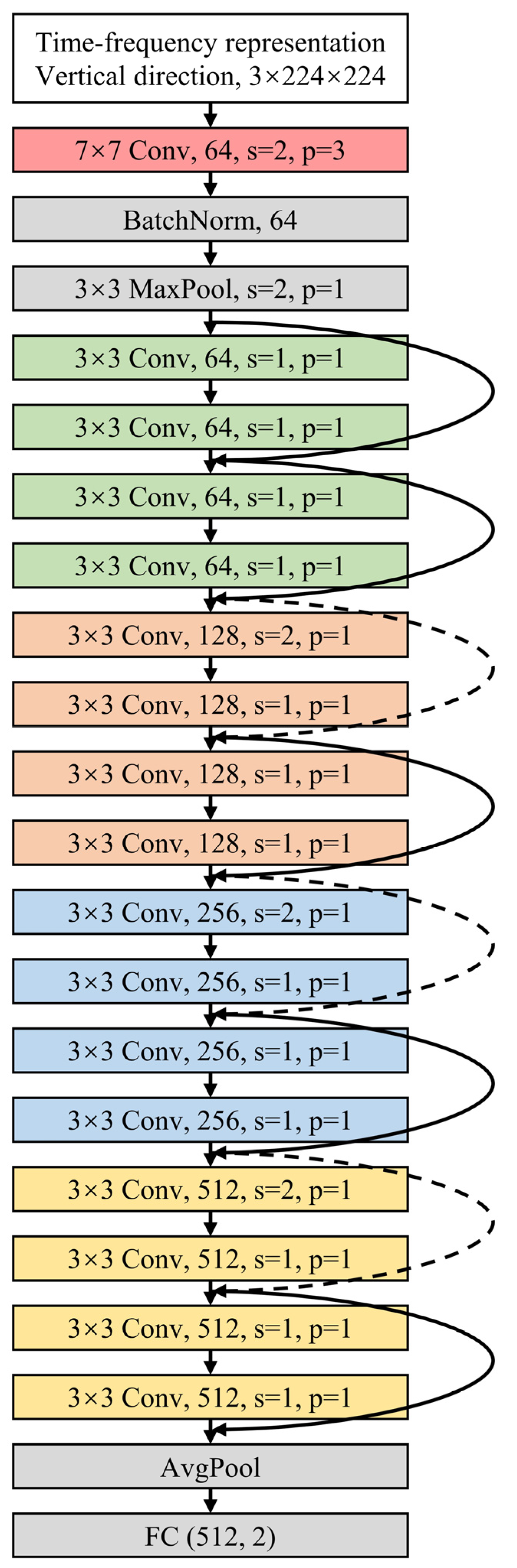

3.2. ResNet18 Model

ResNet18 is a seminal deep learning architecture designed to enhance performance in deep networks while maintaining computational efficiency. The model has 18 weighted layers and incorporates convolutional layers, batch normalization, activation layers, and, most importantly, residual blocks. These blocks include shortcut (skip) connections that bypass one or more layers to permit identity mapping, mitigating the vanishing gradient problem by facilitating gradient flow during backpropagation and enabling effective learning in deeper networks [30]. Given the excellent performance of ResNet18 in image classification tasks, this study investigates its application to the specific task of detecting vehicles passing over the bridge.
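For reference, a residual block can be written in the standard form introduced by He et al., where $\mathcal{F}$ is the mapping learned by the stacked layers and $\mathcal{L}$ is the training loss. This is a generic statement of the residual idea rather than the exact block configuration used in this study; in ResNet18 a ReLU is additionally applied after the addition, and when the input and output dimensions differ the shortcut $\mathbf{x}$ is replaced by a projection $W_s\mathbf{x}$. Differentiating the block shows why the shortcut helps gradients propagate:

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x},
\qquad
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
\left( \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I \right)
$$

Even when $\partial \mathcal{F}/\partial \mathbf{x}$ becomes small, the identity term $I$ still passes $\partial \mathcal{L}/\partial \mathbf{y}$ back to earlier layers.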

Figure 7 illustrates the adapted ResNet18 architecture employed for detecting vehicles passing over the bridge. The input to the network is a time-frequency representation of vibration data from the accelerometer on a bridge pier in the vertical direction, with shape (Channel: 3; Height: 224; Width: 224). The final stages of the network consist of an average pooling layer that reduces the feature dimensionality, followed by a fully connected layer that maps the extracted features to a binary outcome: with or without vehicles passing over the bridge.
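The conversion of a vertical-direction segment into a 3 × 224 × 224 network input can be sketched with NumPy as below. This is an illustrative assumption, not the authors' preprocessing: the complex Morlet wavelet (ω0 = 6), the 64 analysis frequencies, the 60 Hz sampling rate, nearest-neighbour resizing, and replicating one normalized scalogram across three channels (instead of, for example, rendering it with a colour map) are all choices made for this example. The functions `morlet_cwt` and `to_resnet_input` are hypothetical names.

```python
import numpy as np


def morlet_cwt(signal: np.ndarray, fs: float, freqs: np.ndarray, w0: float = 6.0) -> np.ndarray:
    """Magnitude scalogram |W(f, t)| computed with complex Morlet wavelets by direct convolution."""
    n = len(signal)
    out = np.empty((len(freqs), n))
    for row, f in enumerate(freqs):
        s = w0 / (2 * np.pi * f)                      # scale whose centre frequency is f
        half = min(int(4 * s * fs), (n - 1) // 2)     # +/-4 std of the envelope, capped by n
        t = np.arange(-half, half + 1) / fs           # symmetric, odd-length time axis
        psi = np.exp(1j * w0 * t / s) * np.exp(-0.5 * (t / s) ** 2) / np.sqrt(s)
        # The CWT is a correlation with psi; convolving with conj(psi reversed) implements it.
        out[row] = np.abs(np.convolve(signal, np.conj(psi[::-1]), mode="same")) / fs
    return out


def to_resnet_input(scalogram: np.ndarray, size: int = 224) -> np.ndarray:
    """Nearest-neighbour resize to size x size, min-max scale to [0, 1], replicate to 3 channels."""
    rows = np.linspace(0, scalogram.shape[0] - 1, size).round().astype(int)
    cols = np.linspace(0, scalogram.shape[1] - 1, size).round().astype(int)
    img = scalogram[np.ix_(rows, cols)]
    img = (img - img.min()) / (img.max() - img.min() + 1e-12)
    return np.repeat(img[None, :, :], 3, axis=0)      # shape (3, size, size)


fs = 60.0                                   # assumed: 600 samples per 10 s
t = np.arange(600) / fs
x = np.sin(2 * np.pi * 5.0 * t)             # synthetic 5 Hz vibration, not measured data
freqs = np.linspace(0.5, 29.0, 64)          # kept below the 30 Hz Nyquist limit
scalogram = morlet_cwt(x, fs, freqs)        # shape (64, 600)
image = to_resnet_input(scalogram)          # shape (3, 224, 224)
```

For the synthetic 5 Hz sine, the energy in `scalogram` concentrates in the rows near 5 Hz; a passing vehicle would instead appear as a short, broadband burst in time, which is the kind of pattern the ResNet18 classifier is meant to pick up.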

3.3. 1D Convolution Model

1D Convolution is a mathematical operation widely used in the analysis of temporal or sequential signals. In signal processing, 1D Convolution operates by sliding a kernel along the temporal dimension of the data, extracting features by computing the dot product between the kernel and local regions of the input. This technique is particularly advantageous for time-series data, such as accelerometer data for structural monitoring, where key features or motifs are often encoded along the temporal dimension. This study also investigates the performance of 1D Convolution for the flood detection classification task.
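The sliding dot product described above can be sketched in a few lines of NumPy. Like most deep learning frameworks, the sketch does not flip the kernel (strictly speaking, it computes a cross-correlation), and it uses stride 1 with no padding ("valid" mode). The function name `conv1d_valid` and the kernel values are hypothetical and chosen only for illustration.

```python
import numpy as np


def conv1d_valid(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` along `x` (stride 1, no padding) and take a dot product at each offset."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])


x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
kernel = np.array([1.0, 0.0, -1.0])    # arbitrary difference-style kernel
y = conv1d_valid(x, kernel)            # y[i] = x[i] - x[i + 2]
print(y)                               # [-2. -2. -2. -2.]
# Same result as NumPy's cross-correlation in 'valid' mode:
assert np.allclose(y, np.correlate(x, kernel, mode="valid"))
```

The output is shorter than the input by `len(kernel) - 1` samples. In a CNN the kernel values are learned rather than fixed, so each filter becomes a detector for a particular local motif in the vibration record.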

Figure 8 shows the architecture of the 1D Convolutional Neural Network designed to classify flood events from accelerometer data on the bridge pier. The input consists of dual-axis vibrations (river flow and bridge directions) recorded over 10 s, providing two channels of 600 samples each. The architecture comprises two 1D Convolutional layers, each followed by a ReLU activation to introduce non-linearity. The outputs are flattened and fed to a fully connected layer that classifies two categories: presence or absence of flood events.
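To make the tensor shapes concrete, a forward pass following the layer sequence described above (two convolution and ReLU layers, flatten, fully connected) can be sketched in NumPy. The filter counts (16 and 32), the kernel size (5), stride 1 without padding, the class order, and the random weights are hypothetical, because the actual hyperparameters are not stated in this section. With a kernel of size K, each layer shortens the sequence by K − 1 samples, so 600 becomes 596 and then 592, and the flattened vector has 32 × 592 = 18,944 elements.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1d(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Valid multi-channel conv: x (C_in, L), w (C_out, C_in, K), b (C_out,) -> (C_out, L-K+1)."""
    _, _, k = w.shape
    l_out = x.shape[1] - k + 1
    windows = np.stack([x[:, i:i + k] for i in range(l_out)])  # (L_out, C_in, K)
    # Dot product of each window with each filter, summed over input channels and taps.
    return np.einsum("lck,ock->ol", windows, w) + b[:, None]


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def init_params(c_in=2, length=600, c1=16, c2=32, k=5, n_classes=2):
    """Random weights for a hypothetical configuration (filter counts and K are assumptions)."""
    flat = c2 * (length - 2 * (k - 1))  # sequence shrinks by K-1 per valid conv layer
    return (
        rng.normal(0, 0.1, (c1, c_in, k)), np.zeros(c1),
        rng.normal(0, 0.1, (c2, c1, k)), np.zeros(c2),
        rng.normal(0, 0.01, (n_classes, flat)), np.zeros(n_classes),
    )


def forward(x: np.ndarray, params) -> np.ndarray:
    w1, b1, w2, b2, wf, bf = params
    h = relu(conv1d(x, w1, b1))       # (16, 596)
    h = relu(conv1d(h, w2, b2))       # (32, 592)
    return wf @ h.reshape(-1) + bf    # (2,) logits; class order is an assumption


segment = rng.normal(size=(2, 600))   # synthetic stand-in for river-flow and bridge channels
logits = forward(segment, init_params())
probs = np.exp(logits - logits.max()) / np.exp(logits - logits.max()).sum()  # softmax
```

Because the fully connected layer sees every time step of the flattened feature map, its weight count (2 × 18,944 here) dominates the parameter budget, which is one reason such a model stays lightweight only when the input segments are short.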

5. Discussion

Vibration-based techniques have been widely used for bridge health monitoring, but their application to flood monitoring, which is closely related to scour assessment, remains underdeveloped. One major challenge is isolating vibration signals so as to accurately differentiate between the influences of flooding, wind, vehicles, and other factors.

This study addresses this challenge by proposing an effective flood detection method that combines CWT and deep learning techniques, utilizing accelerometer data from bridge pier vibrations. CWT analysis reveals that accelerometer data in both the vertical and bridge directions are significantly affected by vehicle passage. This effect appears as short-duration, high-intensity motifs in the CWT time-frequency representations, which contrast markedly with the patterns observed in the absence of vehicles. This clear difference explains the strong performance of both the ResNet18 and 1D Convolution models in detecting vehicle passage.

However, the flood detection task becomes complex in mixed scenarios involving the presence or absence of passing vehicles. To address the distinct characteristics of each scenario, two lightweight 1D Convolution models were developed, each serving as a specialized expert for its respective condition.

6. Conclusions

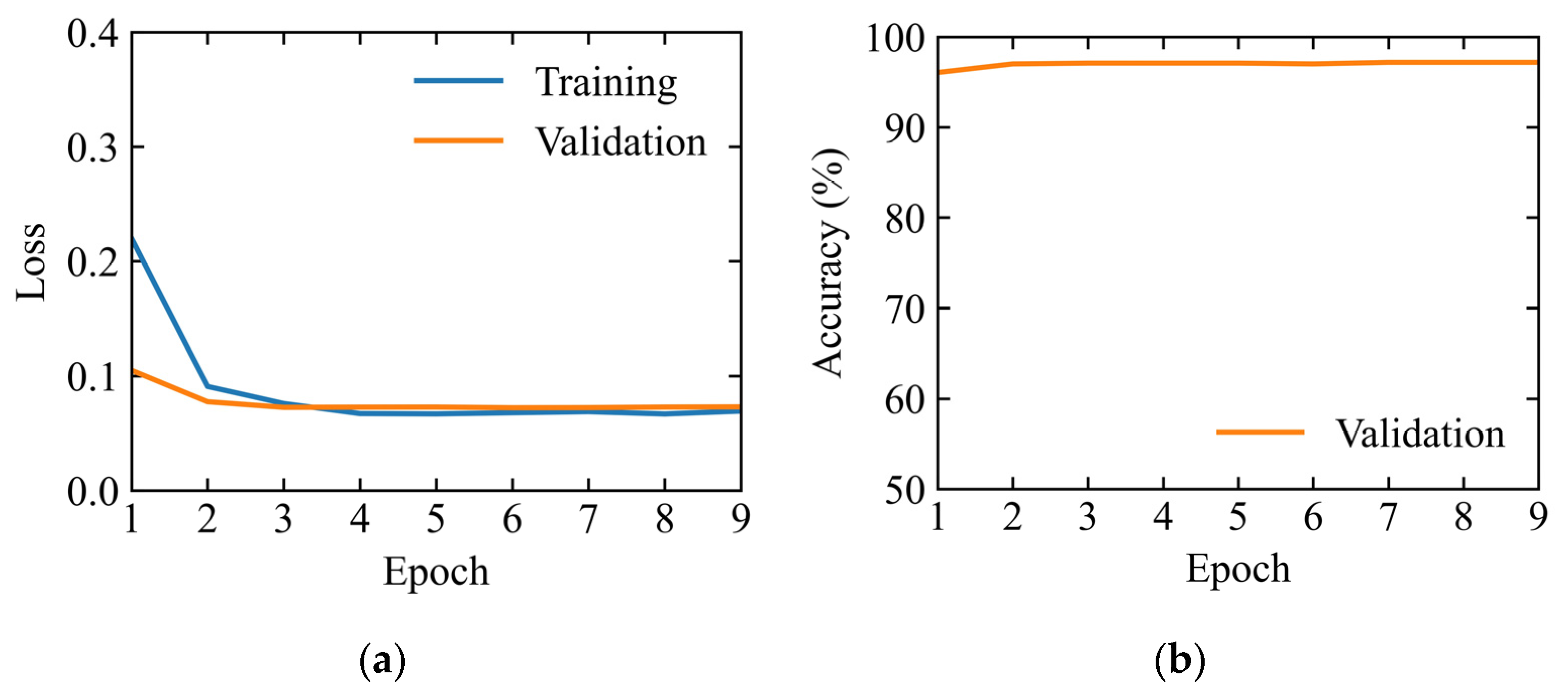

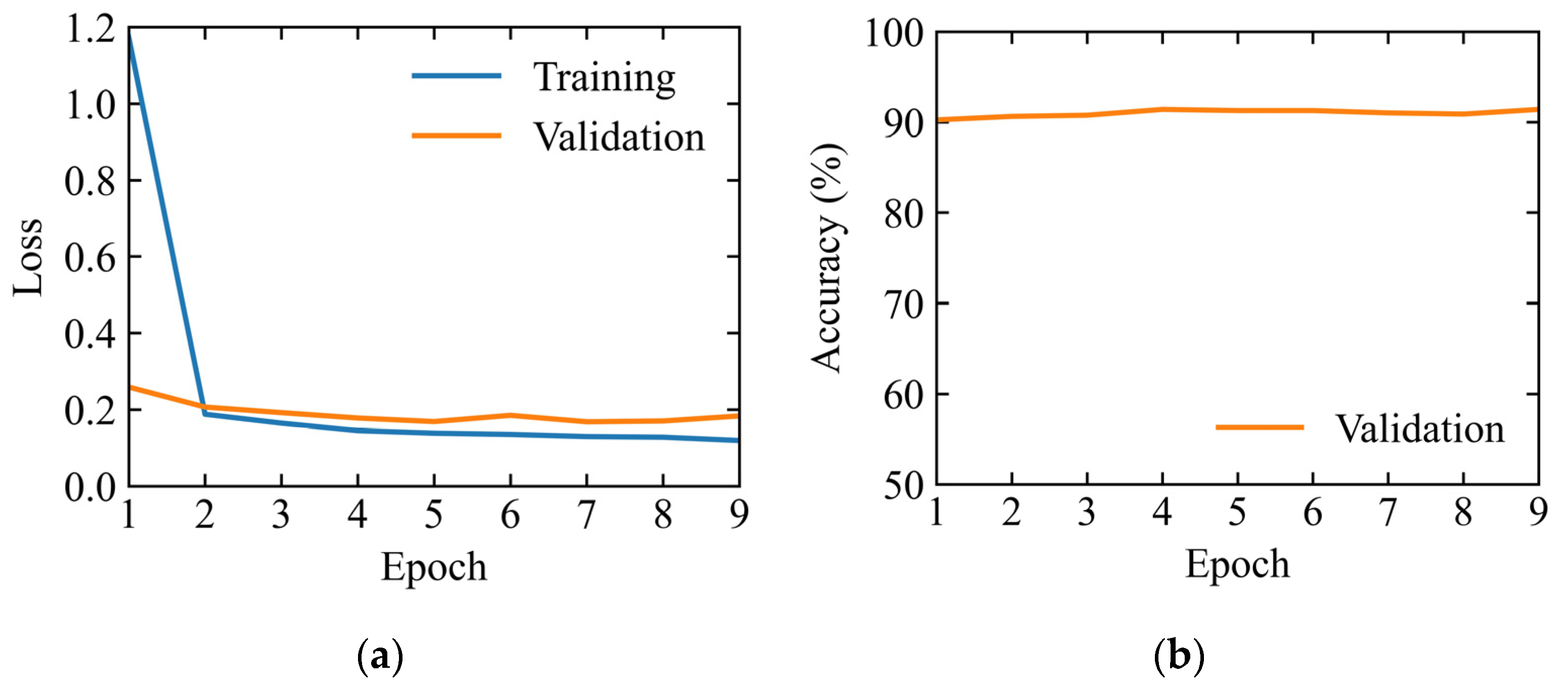

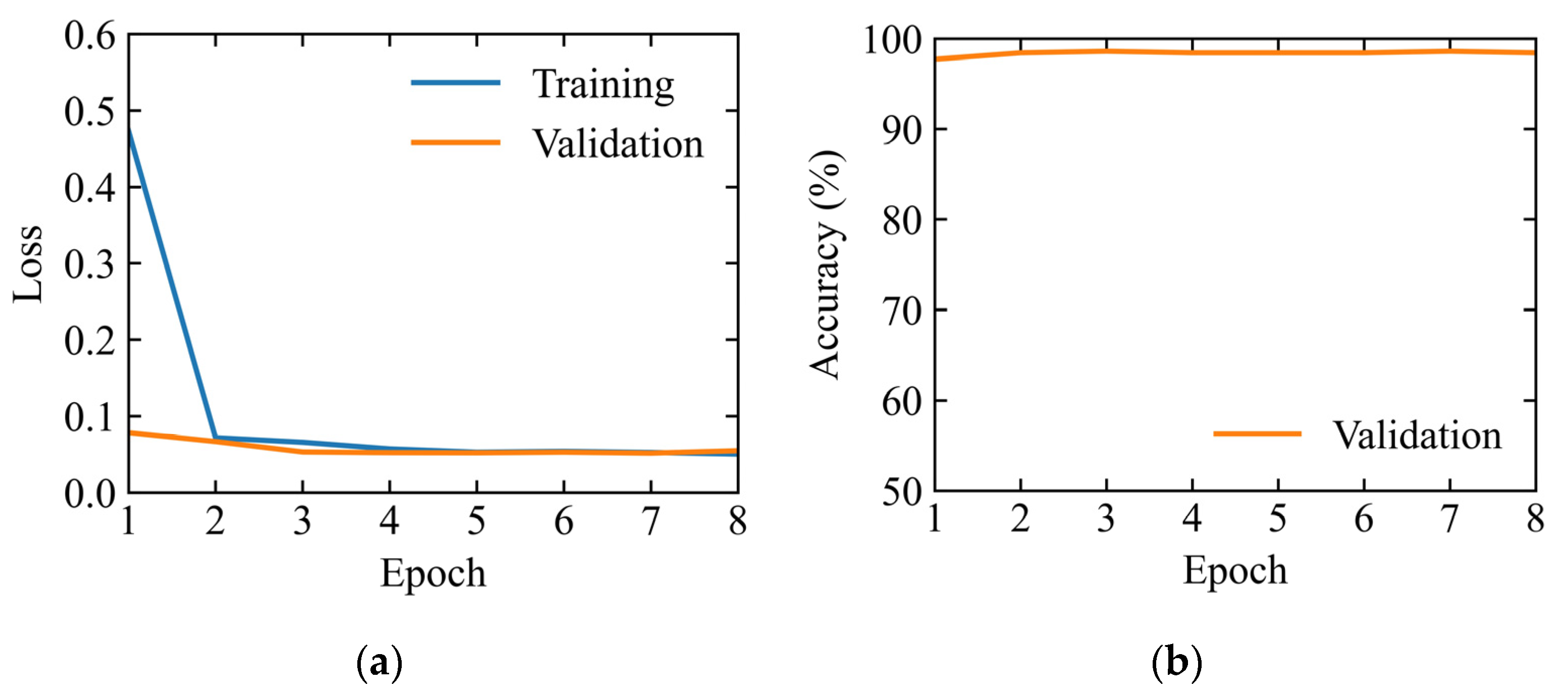

In this study, the characteristics of accelerometer monitoring data from bridge pier vibrations were investigated. A comprehensive evaluation of the ResNet18 and 1D Convolution models was undertaken on two specific tasks: vehicle passage detection and flood detection under various traffic conditions. The flood detection method based on bridge pier vibration data was validated. The major conclusions of this study are summarized below.

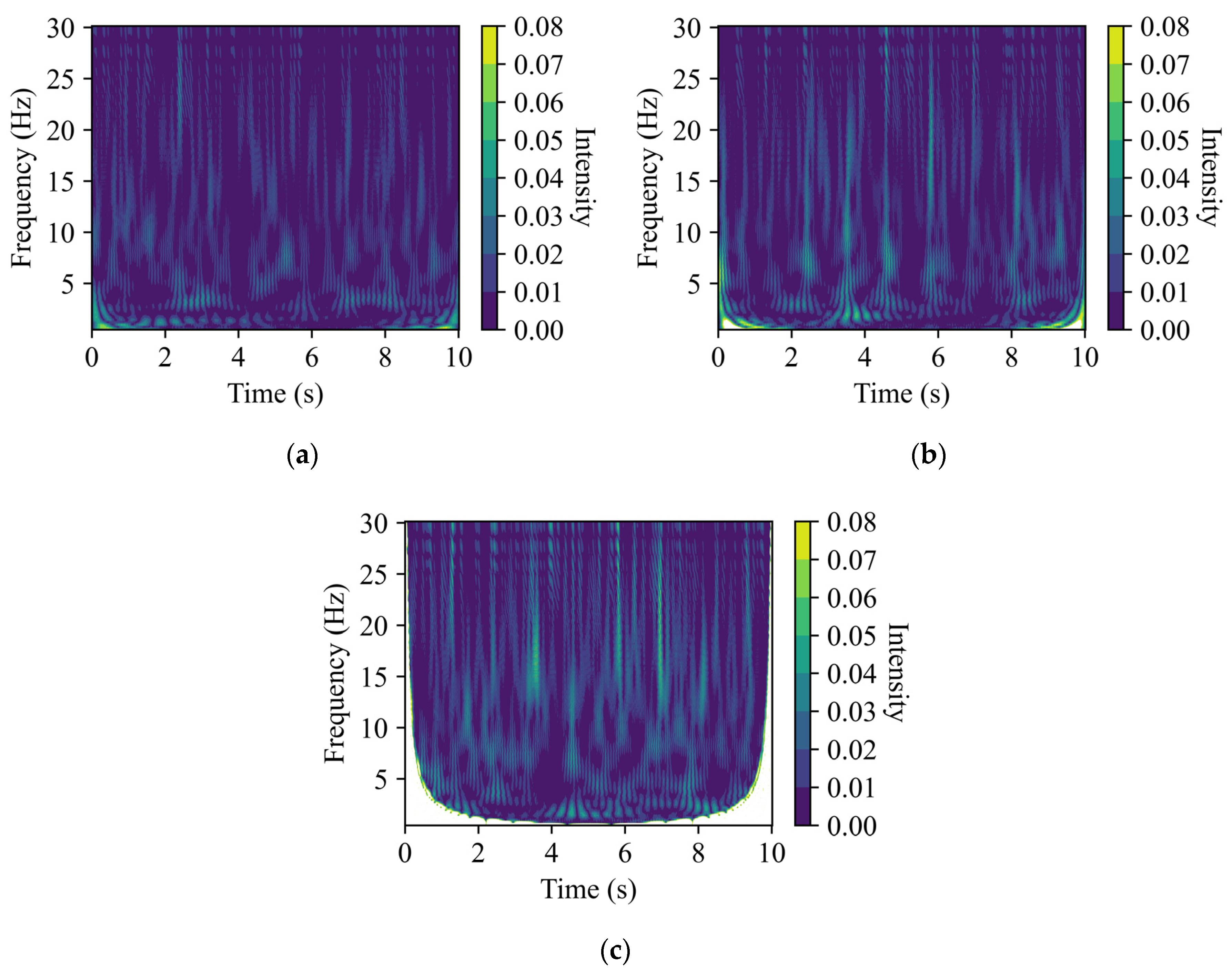

CWT was used to transform the accelerometer data into time-frequency representations, which facilitated the detection of vehicles passing over a bridge. When vehicles were passing, the CWT time-frequency representation of the vertical direction exhibited a broader and more sustained energy distribution across a wider frequency range than those of the river flow and bridge directions. Additionally, for the bridge direction, the time-frequency representations under flooding conditions showed a noticeable increase in the intensity and continuity of energy across the spectrum compared with non-flooding conditions.

In detecting vehicle passage, the ResNet18 model outperformed the 1D Convolution model, achieving an accuracy of 97.2% compared with 91.4%. This highlights the value of the CWT representation in capturing, across both the time and frequency domains, the amplified vertical vibrations caused by passing vehicles.

For flood detection without passing vehicles, the ResNet18 and 1D Convolution models performed comparably well, achieving accuracies of 97.3% and 98.3%, respectively. However, for flood detection with passing vehicles, the 1D Convolution model significantly outperformed ResNet18, achieving an accuracy of 98.6%, whereas ResNet18 reached only 81.6%. This suggests that the simpler 1D Convolution model generalizes better than the more complex ResNet18 model, which tends to overfit to the nonessential vibration signals induced by vehicles passing over the bridge. In summary, this study proposes a two-stage process for flood detection using low-cost accelerometers. The first stage detects whether vehicles are passing over the bridge, serving as a “screening” step that selects the appropriate model, trained under the corresponding condition, for flood detection in the second stage.

Despite the strong performance of the proposed method in flood detection, several limitations should be noted. First, the datasets used to train the models were relatively small; future studies could use larger training sets to potentially enhance model performance. Second, this study considered only accelerometer data. Future work may be directed toward the fusion of multisource, multimodal data to enhance the robustness of flood detection, as well as extending the approach to quantify flood severity. Third, while this research focused on the ResNet18 and 1D Convolution models, future investigations could explore a broader array of models and evaluate their effectiveness in detecting and quantifying flood events.