1. Introduction

In recent years, the rapid advancement of unmanned aerial vehicle (UAV) technology [1,2] has led to the widespread use of aerial images in the power industry. They are now commonly employed for transmission line inspection [3,4] as well as for the planning and management of electrical engineering projects [5]. However, a single UAV aerial image often provides limited information, which may not be sufficient for these complex tasks. Image matching [6,7] aligns two or more images of the same objects, captured in different spatial coordinate systems, into a unified coordinate framework. It is a critical step in image fusion [8,9], image stitching [10,11], and 3-D reconstruction [12]. Transmission towers are among the most common objects in UAV aerial images [13] and are distributed across various geographic regions. Matching aerial images of these towers can provide richer and more detailed information about target areas, improving the accuracy of transmission line inspections and offering valuable visual references for power engineering planning. This capability is therefore of significant practical importance.

Image matching is the process of identifying identical or similar features across two or more images to establish correspondences between them. Traditional algorithms are primarily based on local features: they describe the local regions around keypoints and then match these keypoints according to distance metrics. The most popular feature-based image matching algorithms are SIFT (Scale-Invariant Feature Transform) [14], SURF (Speeded Up Robust Features) [15], ORB (Oriented FAST and Rotated BRIEF) [16], and AKAZE (Accelerated-KAZE) [17]. The SIFT [14] algorithm is highly robust to scale and illumination variations; however, its high computational cost makes it unsuitable for real-time applications. SURF [15] speeds up feature detection by using integral images for keypoint detection and introducing low-dimensional descriptors, though it still falls short of real-time performance requirements. ORB [16] combines an improved FAST detector with the rBRIEF binary descriptor, significantly boosting speed, but it is less robust to scale changes. AKAZE [17] addresses these challenges by constructing a nonlinear scale space with the Fast Explicit Diffusion (FED) method and employing the Modified-Local Difference Binary (M-LDB) descriptor. This approach offers strong scale and rotation invariance while maintaining a good balance between matching accuracy and computational efficiency.
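Since ORB and AKAZE (M-LDB) descriptors are binary strings, matching them "according to distance metrics" usually means comparing Hamming distances. The sketch below is a minimal pure-Python brute-force matcher written for illustration, not the implementation used in this work. Descriptors are assumed to be equal-length `bytes` objects, and the helper names `hamming` and `match_binary` are hypothetical. For each query descriptor, it finds the two nearest neighbours and applies the nearest neighbor ratio test with the 0.8 threshold used in the experiments. In OpenCV, the same idea is usually expressed with `cv2.BFMatcher(cv2.NORM_HAMMING)` and `knnMatch(..., k=2)`.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return bin(int.from_bytes(a, "big") ^ int.from_bytes(b, "big")).count("1")


def match_binary(query, train, ratio=0.8):
    """Brute-force 2-NN matching followed by a nearest neighbor ratio test.

    Returns (query_index, train_index, distance) for every query descriptor
    whose best match is clearly better than its second-best match.
    """
    if len(train) < 2:
        raise ValueError("the ratio test needs at least two train descriptors")
    matches = []
    for qi, qd in enumerate(query):
        # Hamming distance to every train descriptor, sorted ascending.
        dists = sorted((hamming(qd, td), ti) for ti, td in enumerate(train))
        (d1, t1), (d2, _) = dists[0], dists[1]
        # Keep the match only if the best distance < ratio * second-best distance.
        if d1 < ratio * d2:
            matches.append((qi, t1, d1))
    return matches
```

A brute-force search costs one comparison per descriptor pair, which is acceptable for a few thousand keypoints. Ambiguous descriptors, whose two nearest neighbours are almost equally close, are discarded by the ratio test rather than matched arbitrarily.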

UAV platforms are often equipped with limited computational resources, so FPGA [

18] and GPU [

19] are often used to accelerate the algorithm operation to meet the real-time requirements. For example, Zhang et al. [

18] combined FAST features with Farneback optical flow on an FPGA to reduce the feature tracking delay to milliseconds. Over the past decade, researchers have also proposed many image matching methods for transmission tower images. For instance, Tragulnuch et al. [

20] introduced a transmission tower detection approach based on video sequences, utilizing the Canny–Hough transform for efficient tower detection. Zhang et al. [

21] introduced a fast image stitching method for transmission towers by combining ORB keypoints with a multi-scale fusion strategy. Similarly, Guo et al. [

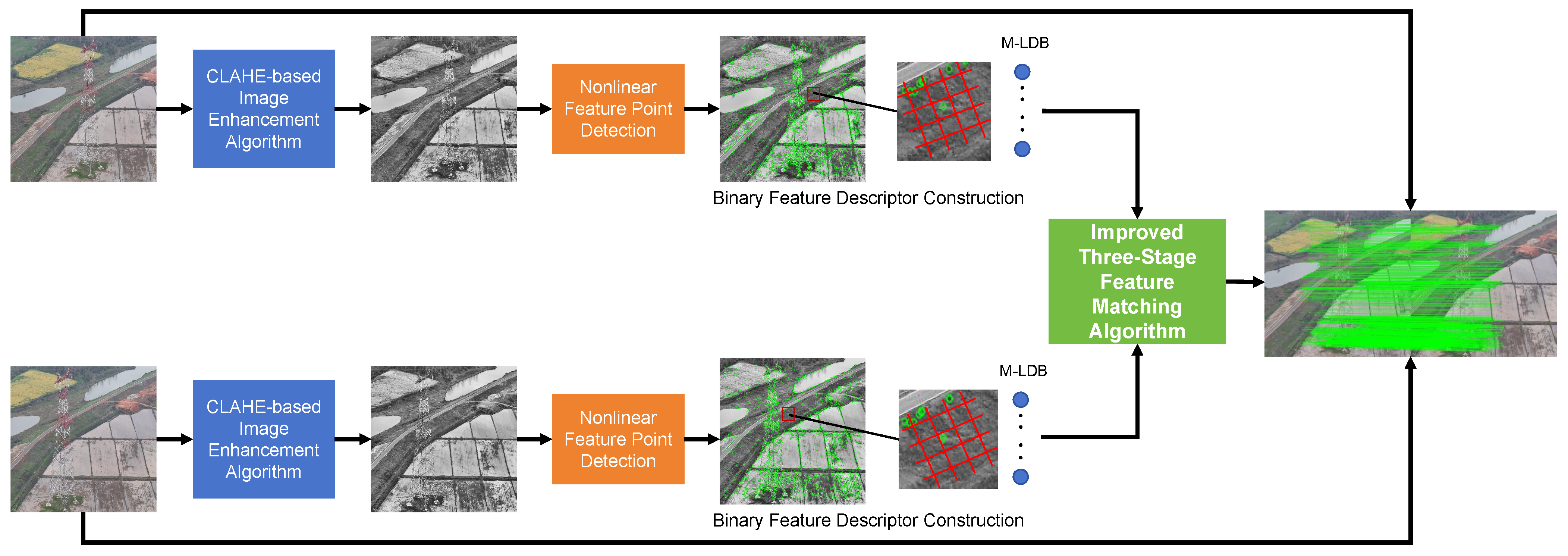

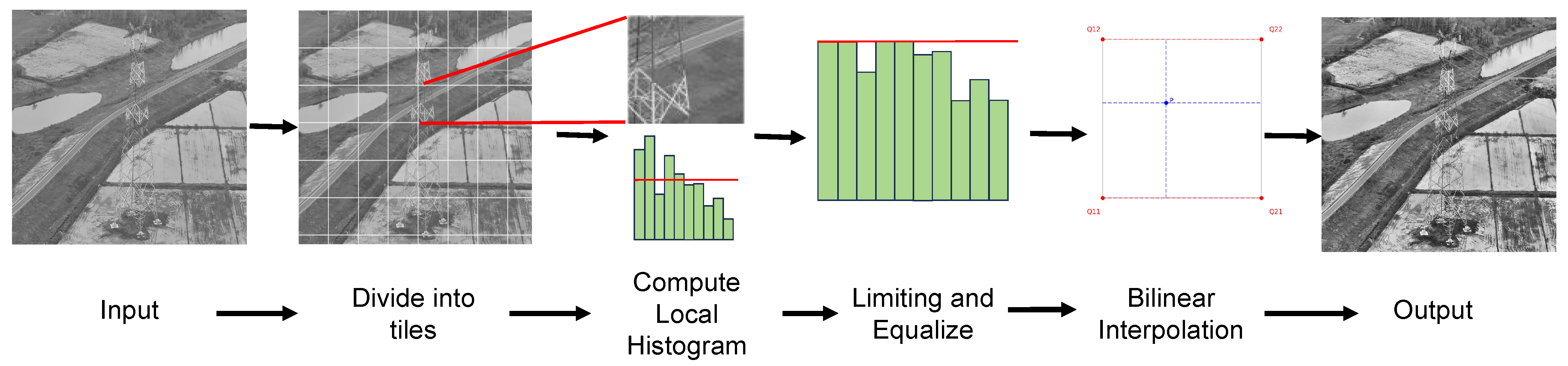

22] employed the Line Segment Detector (LSD) algorithm to detect the structural components of transmission towers accurately. However, these methods often struggle with common challenges in UAV aerial images, such as changes in illumination and image blur, which significantly affect matching accuracy. To overcome these limitations, this study proposes an improved image matching algorithm specifically designed for UAV aerial images of transmission towers. The proposed algorithm consists of two key modules based on the original AKAZE. First, an image preprocessing method is applied to enhance image quality using the Contrast-Limited Adaptive Histogram Equalization (CLAHE) algorithm [

23], which adjusts the contrast of transmission tower aerial images. There is an experimental phenomenon that low brightness or great blurriness in images can obscure object features, hindering feature detection. CLAHE is used to dynamically enhance image contrast and brightness, which helps highlight target structures and increases the number of detectable keypoints. Second, a multi-stage keypoint matching strategy integrates the nearest neighbor ratio (NNR), the Grid-based Motion Statistics (GMS) algorithm [

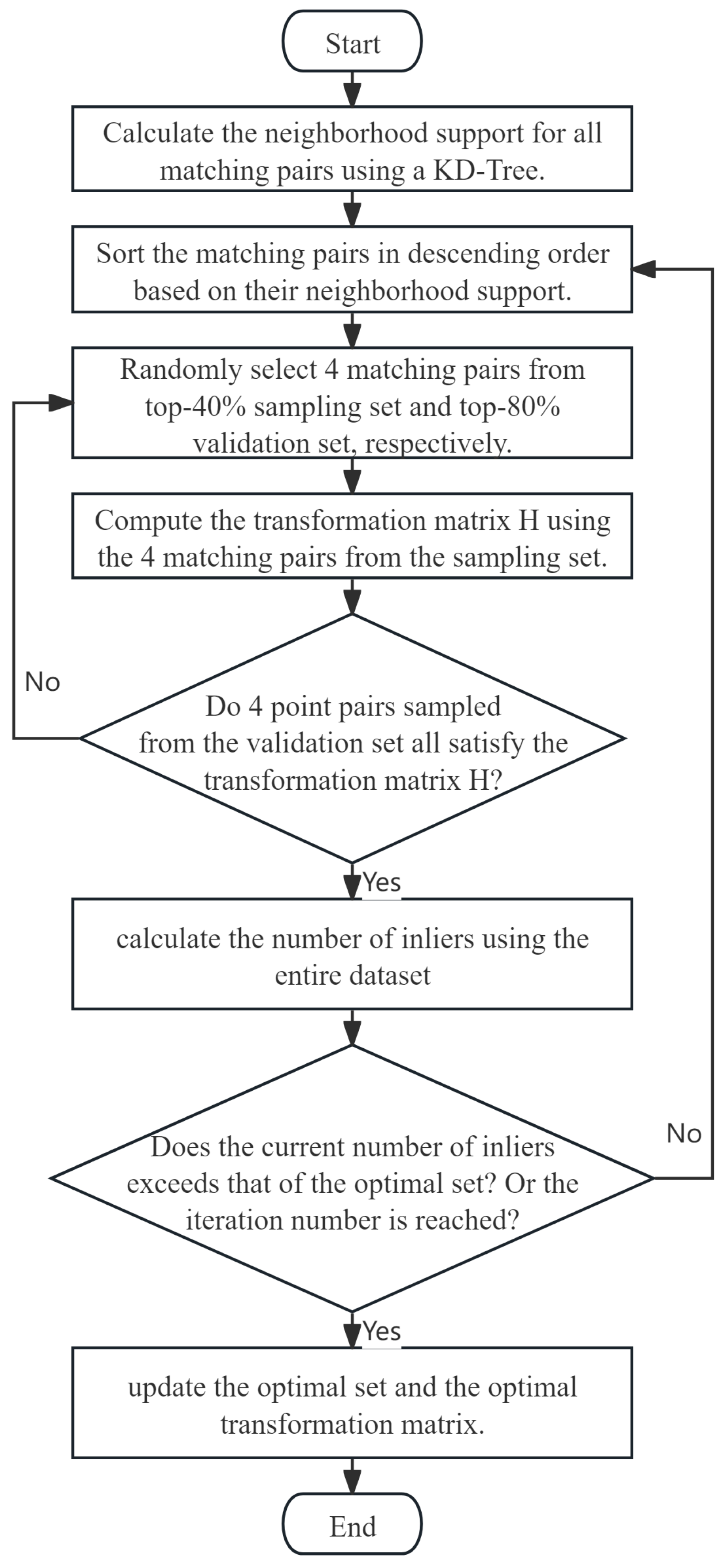

24], and an improved RANSAC algorithm, significantly enhancing matching accuracy. Inspired by the GMS algorithm’s emphasis on keypoints with strong neighborhood support, the improved RANSAC algorithm improves the estimation of transformation matrices by preferentially sampling keypoints with high local support. It can significantly enhance both the accuracy of feature matching and the computational efficiency.
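The text does not spell out the exact sampling rule or transformation model of the improved RANSAC, so the following is only a sketch under explicit assumptions:

(1) A match's support is the number of other matches that lie within a radius r of it in both images, in the spirit of GMS. Here r = 50 px, the radius given in the implementation details.

(2) Minimal samples are drawn with probability proportional to support + 1.

(3) The model is a 2-D affine transform fitted from three correspondences. The paper's transformation matrix may instead be a homography.

(4) The 3-pixel inlier threshold is a hypothetical value.

All function names are hypothetical.

```python
import math
import random


def neighbourhood_support(src, dst, radius=50.0):
    """GMS-style support: for each match i, count the other matches whose keypoints
    lie within `radius` pixels of match i in BOTH images (brute force, O(n^2))."""
    r2 = radius * radius
    support = [0] * len(src)
    for i in range(len(src)):
        for j in range(len(src)):
            if i == j:
                continue
            ds = (src[i][0] - src[j][0]) ** 2 + (src[i][1] - src[j][1]) ** 2
            dd = (dst[i][0] - dst[j][0]) ** 2 + (dst[i][1] - dst[j][1]) ** 2
            if ds <= r2 and dd <= r2:
                support[i] += 1
    return support


def fit_affine(s, d):
    """Affine model ((a, b, c), (e, f, g)) with x' = a*x + b*y + c and
    y' = e*x + f*y + g, solved from 3 correspondences by Cramer's rule."""
    (x1, y1), (x2, y2), (x3, y3) = s
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-9:
        return None  # collinear sample: the affine model is not unique

    def solve(v1, v2, v3):
        a = (v1 * (y2 - y3) - y1 * (v2 - v3) + (v2 * y3 - v3 * y2)) / det
        b = (x1 * (v2 - v3) - v1 * (x2 - x3) + (x2 * v3 - x3 * v2)) / det
        c = (x1 * (y2 * v3 - y3 * v2) - y1 * (x2 * v3 - x3 * v2)
             + v1 * (x2 * y3 - x3 * y2)) / det
        return a, b, c

    return solve(*(p[0] for p in d)), solve(*(p[1] for p in d))


def apply_affine(model, p):
    (a, b, c), (e, f, g) = model
    return a * p[0] + b * p[1] + c, e * p[0] + f * p[1] + g


def support_weighted_ransac(src, dst, radius=50.0, max_iters=500, thresh=3.0, seed=0):
    """RANSAC whose minimal samples favour matches with high neighbourhood support."""
    if len(src) < 3:
        raise ValueError("need at least 3 matches")
    rng = random.Random(seed)
    # +1 keeps matches without neighbours drawable, just less likely.
    weights = [s + 1 for s in neighbourhood_support(src, dst, radius)]
    indices = range(len(src))
    best_model, best_mask = None, [False] * len(src)
    for _ in range(max_iters):
        sample = set()
        while len(sample) < 3:  # weighted draw of 3 distinct matches
            sample.add(rng.choices(indices, weights=weights)[0])
        sample = sorted(sample)
        model = fit_affine([src[i] for i in sample], [dst[i] for i in sample])
        if model is None:
            continue
        mask = [math.dist(apply_affine(model, s), d) <= thresh for s, d in zip(src, dst)]
        if sum(mask) > sum(best_mask):
            best_model, best_mask = model, mask
    return best_model, best_mask
```

True matches tend to cluster with consistent neighbours, while outliers are scattered. Weighting the draw toward well-supported matches therefore makes an all-inlier sample likely within fewer iterations than uniform sampling would need. A final least-squares refit on all inliers would normally follow.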

Our approach is the first method that combines CLAHE, AKAZE, GMS, and the improved RANSAC for feature matching of transmission tower images. Specifically, the main contributions of our work can be summarized as follows:

- We propose a novel image matching algorithm that achieves fast and accurate matching of transmission tower images, meeting the practical needs of the power industry.

- We propose a three-stage matching strategy with an improved RANSAC, which significantly enhances matching accuracy and computational efficiency.

- Experimental results on two datasets demonstrate the effectiveness and superiority of the proposed algorithm.

3. Experimental Setting

Datasets. To comprehensively evaluate the effectiveness and robustness of the proposed approach, we conducted experiments on two datasets: the widely used Oxford image matching dataset [26] and a self-built dataset. The Oxford dataset is one of the most widely used benchmarks in image matching research and contains 8 image sequences with 6 images per sequence. Each sequence captures the same scene under different conditions, providing diverse test cases for evaluation. As illustrated in Figure 5, these variations include changes in blur, viewpoint, illumination, and other transformations, making the dataset well suited for evaluating the generalization of image matching algorithms. The self-built dataset was collected in Jiangmen, China, with a DJI Air 3 UAV (produced by DJI; image resolution: …). As illustrated in Figure 6, the dataset contains aerial images of typical ground objects, including transmission towers, residential buildings, farmland, and forests. These images were captured under diverse environmental conditions, including different times of day and varying weather, providing a challenging testbed for evaluating the algorithm's real-world performance.

Evaluation metrics. To evaluate the performance of the proposed algorithm, two main evaluation metrics were employed: feature matching time and correct matching rate (CMR). Feature matching time reflects the real-time efficiency, while CMR is used to quantify feature matching accuracy. The CMR is defined as follows:

$$\mathrm{CMR} = \frac{N_{c}}{N_{t}} \times 100\%$$

where $N_{c}$ denotes the number of correctly matched feature point pairs, and $N_{t}$ represents the total number of matched feature point pairs. A higher CMR indicates greater reliability and precision.
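The CMR, and the mean and standard deviation reported over repeated runs, can be computed as in this short sketch. Any counts passed to these functions are illustrative, and scoring an empty match set as 0% is an assumption. The paper also does not state whether it reports the sample or the population standard deviation; the sketch uses the sample version (`statistics.stdev`). Function names are hypothetical.

```python
import statistics


def correct_matching_rate(n_correct, n_total):
    """CMR in percent: correctly matched pairs divided by all matched pairs."""
    if n_total == 0:
        return 0.0  # assumption: an empty match set is scored as 0%
    return 100.0 * n_correct / n_total


def summarize_runs(runs):
    """Mean and sample standard deviation of the CMR over repeated runs.

    `runs` is a list of (n_correct, n_total) pairs, one per random seed."""
    rates = [correct_matching_rate(c, t) for c, t in runs]
    return statistics.mean(rates), statistics.stdev(rates)
```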

4. Implementation Details

All experiments were performed on Windows 10 with an AMD Ryzen 7 5800H CPU (3.20 GHz; manufactured by Taiwan Semiconductor Manufacturing Company, TSMC) and 16 GB of RAM. The algorithms were implemented in Python 3.8 in the PyCharm 2022.1.2 development environment, and the OpenCV 4.5.1 library was used for all experiments. To validate the performance of the proposed method, we compared it against five widely used feature matching algorithms: SIFT, SURF, ORB, KAZE, and AKAZE. These methods serve as benchmarks for assessing both matching accuracy and computational efficiency.

The key hyperparameters were configured as follows. Following the default settings in the OpenCV library, we applied CLAHE with a clipping threshold of 2 and a local tile size of … pixels. For feature matching, the nearest neighbor ratio threshold was set to 0.8. The hyperparameters of the improved RANSAC algorithm were determined empirically: a circular neighborhood radius of 50 pixels and a maximum of 500 iterations.
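CLAHE clips each tile's histogram before equalizing it, which bounds how strongly any intensity range can be stretched. The sketch below shows that core step for a single tile in pure Python. It is a simplified illustration, not OpenCV's implementation, and two of its conventions are assumptions:

- The clip limit is expressed relative to the mean bin height (clip_limit × tile pixels / bins).
- The clipped excess is spread evenly across bins, with any remainder assigned to the lowest bins.

Full CLAHE also builds one such mapping per tile and bilinearly interpolates between neighbouring tiles. In OpenCV, the corresponding parameters are `clipLimit` and `tileGridSize` of `cv2.createCLAHE`. The function name is hypothetical.

```python
def clahe_tile_lut(tile_pixels, clip_limit=2.0, n_bins=256):
    """Contrast-limited equalization mapping (LUT) for ONE tile of 8-bit pixels."""
    hist = [0] * n_bins
    for v in tile_pixels:
        hist[v] += 1
    # Assumed convention: the limit is relative to the mean bin height.
    limit = max(int(clip_limit * len(tile_pixels) / n_bins), 1)
    excess = 0
    for i, h in enumerate(hist):
        if h > limit:
            excess += h - limit
            hist[i] = limit
    # Spread the clipped counts evenly; the remainder goes to the lowest bins.
    bonus, residual = divmod(excess, n_bins)
    for i in range(n_bins):
        hist[i] += bonus + (1 if i < residual else 0)
    # The cumulative histogram, rescaled to [0, n_bins - 1], is the intensity mapping.
    scale = (n_bins - 1) / len(tile_pixels)
    lut, cdf = [], 0
    for h in hist:
        cdf += h
        lut.append(round(cdf * scale))
    return lut
```

With a very large clip limit, the function reduces to ordinary histogram equalization. With a small limit, it compresses the stretch applied to a narrow, low-contrast intensity band, which limits noise amplification.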

5. Results and Discussion

5.1. Experimental Results on the Oxford Dataset

To evaluate performance under different conditions, we selected four image sequences from the Oxford dataset: Bikes (image blur), Wall (viewpoint change), Leuven (light change), and Ubc (JPEG compression). Each set contains six images, with the first image designated as the reference image and the remaining five used for matching tests. Each experiment was repeated multiple times with different random seeds to compute average values and standard deviations. The experimental results are presented in Table 1 and Table 2.

Table 1 shows the matching accuracy of the different algorithms under each condition. The proposed method achieves the highest matching accuracy under all test conditions. It is particularly robust to blur and viewpoint changes while maintaining consistent performance under the other variations. For instance, on the Bikes sequence, the proposed method achieves an accuracy of 96.11% with a standard deviation of 0.26%, a notable improvement over the KAZE and AKAZE algorithms. These results indicate the enhanced stability and effectiveness of the proposed algorithm in challenging visual scenarios.

As shown in Table 2, the proposed algorithm achieves the lowest average feature matching time across all tested data. This time is approximately one-third of that of SIFT and substantially lower than that of AKAZE, and the proposed method remains the fastest under every type of environmental change. In addition, the standard deviation of its average matching time on the Oxford dataset is 8.98 ms, much lower than that of the other methods, indicating a stable runtime. Given both its high accuracy and its real-time performance, we believe that the proposed algorithm can meet the requirements of practical applications.

5.2. Experimental Results on the Self-Built Dataset

Table 3, Table 4, Table 5, Table 6 and Table 7 compare the experimental results of the proposed algorithm with those of several mainstream algorithms on five different aerial image sets. The proposed method consistently achieves the highest matching accuracy, confirming its robustness under diverse environmental conditions. For example, in the second image set, which features image blur, the proposed algorithm attains a matching accuracy of 98.62%, significantly outperforming SIFT (60.36%), SURF (70.57%), ORB (47.77%), and AKAZE (86.21%). ORB typically offers excellent speed, but its low accuracy makes it difficult to meet practical requirements. Furthermore, as shown in Table 6, the proposed method handles challenging lighting conditions far better than the original AKAZE algorithm. In the farmland image set with severe illumination changes, AKAZE matched only eight feature point pairs, whereas our approach achieved a matching accuracy of 99.29%. This improvement is primarily attributed to the CLAHE algorithm, which enhances image contrast and emphasizes edge features, enabling reliable feature point detection even in low-light environments.

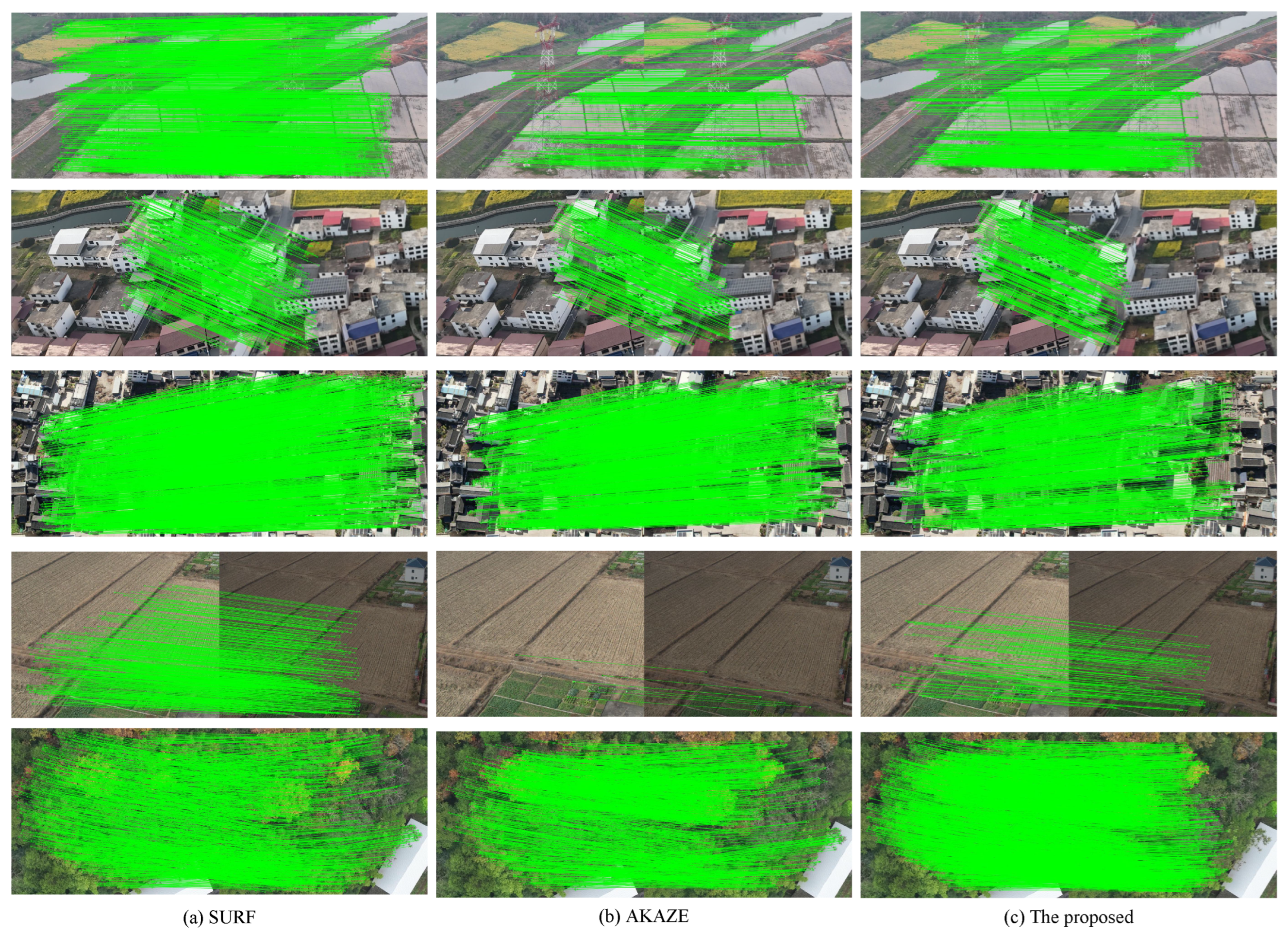

To provide a more intuitive comparison, Figure 7 shows qualitative matching results for the proposed method and two other algorithms, SURF and AKAZE. The proposed algorithm detects and matches a sufficient number of correct feature points under varying lighting conditions. Owing to its three-stage feature matching strategy, it also produces fewer false matches than the other methods, yielding visually accurate matching results.

Matching time is a crucial metric for evaluating the real-time performance of the proposed algorithm. As presented in the tables, the proposed method consistently matches faster than traditional approaches and significantly outperforms both SIFT and SURF. For instance, on building images with scale change, our approach achieves a matching time of just 972.28 ms. This is roughly five times faster than SIFT and SURF, requiring only 19% and 20% of their processing time, respectively. The proposed method is also more efficient than AKAZE: as reported in Table 3, it requires less matching time than AKAZE even while processing twice as many feature points. Recent advances in deep learning-based image matching, such as SuperPoint [27], SuperGlue [28], and LoFTR [29], have achieved remarkable accuracy through sophisticated neural network architectures. However, these methods typically require substantial computational resources, such as high-performance GPUs, and rely heavily on large-scale, high-quality annotated datasets for training, whereas aerial image matching imposes stringent real-time requirements. The experimental results demonstrate that the proposed algorithm meets practical requirements in both matching accuracy and computational efficiency. Its low computational cost also makes it particularly suitable for deployment on UAVs.

5.3. Experiment Results on Transmission Tower Image Matching

Detailed experiments were conducted on aerial images of transmission towers to validate the robustness of the proposed algorithm against changes in blur, scale, light, and rotation. The original image was matched in turn against progressively modified versions (labeled ‘1’, ‘2’, and ‘3’ in Table 8, Table 9, Table 10 and Table 11), with each level representing a greater degree of transformation. In every experiment, we recorded the matching accuracy and matching time of all tested algorithms.

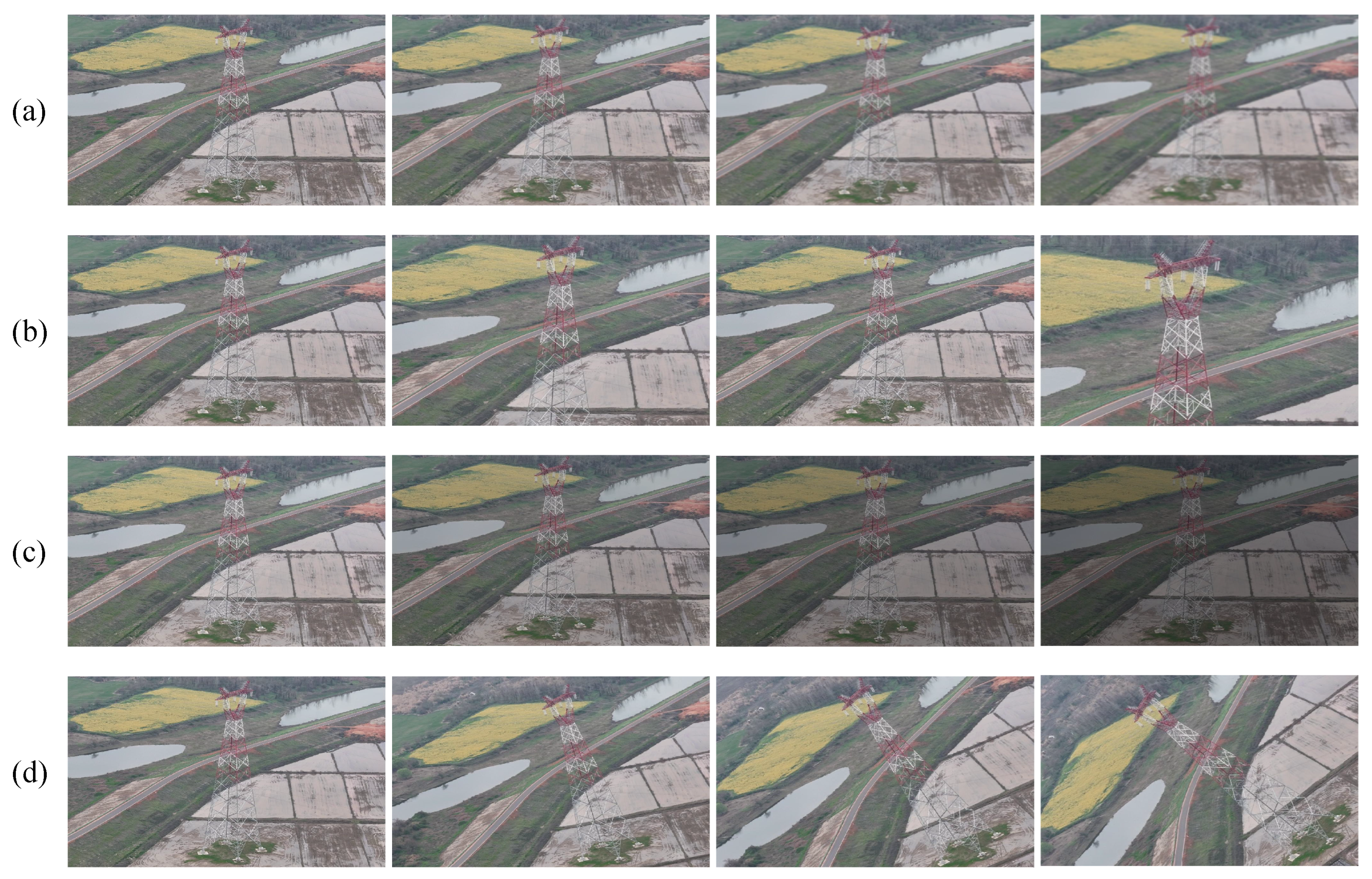

Image blur. To simulate the blurring effects of haze on aerial images of transmission towers, we applied mean blur of varying strength, creating a sequence of images with gradually increasing blur intensity, as shown in Figure 8a. According to Table 8, the proposed algorithm consistently outperforms the other methods in matching accuracy. It achieves an accuracy of 86.88% when matching the original image against heavily blurred images, demonstrating strong robustness against blur.
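The blur levels can be reproduced with a box (mean) filter, as in the pure-Python sketch below. The sketch is illustrative only. The text does not give the kernel sizes used for levels 1 to 3, so any values chosen (for example, 3, 7, and 11) are hypothetical. Borders are handled by edge replication, whereas OpenCV's `cv2.blur` uses reflected borders by default. The function name is hypothetical.

```python
def mean_blur(img, k):
    """k x k mean (box) filter for a 2-D list of grey values.

    Border pixels are handled by edge replication (clamping coordinates)."""
    if k < 1 or k % 2 == 0:
        raise ValueError("kernel size must be a positive odd integer")
    h, w, r = len(img), len(img[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-r, r + 1):
                yy = min(max(y + dy, 0), h - 1)  # clamp row index to the image
                for dx in range(-r, r + 1):
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    total += img[yy][xx]
            out[y][x] = total / (k * k)
    return out
```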

Scale change. Aerial images of transmission towers captured by drones at different shooting distances and focal lengths vary significantly in scale. As shown in Figure 8b, we enlarged the original image by 25%, 50%, and 100% and then cropped a region of the original image size from each enlarged image to simulate scale variations. As shown in Table 9, the proposed algorithm consistently achieves matching accuracies above 97% under all scale changes, while the other methods degrade as the scaling increases. Compared with the original AKAZE algorithm, our algorithm improves the average accuracy by nearly 10% while consistently reducing the average matching time.
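The following sketch shows one way to realize the "enlarge, then crop" procedure. It assumes the crop is taken from the centre of the enlarged image, since the text does not say which region was kept. For brevity, it uses nearest-neighbour upsampling, whereas `cv2.resize` defaults to bilinear interpolation. Enlargements of 25%, 50%, and 100% correspond to factors 1.25, 1.5, and 2.0. The function name is hypothetical.

```python
def enlarge_and_center_crop(img, factor):
    """Upscale a 2-D list by `factor` (nearest neighbour), then crop the central
    region with the original height and width."""
    h, w = len(img), len(img[0])
    big_h, big_w = round(h * factor), round(w * factor)
    top, left = (big_h - h) // 2, (big_w - w) // 2
    out = []
    for y in range(top, top + h):
        src_y = min(int(y / factor), h - 1)  # source row for this enlarged row
        out.append([img[src_y][min(int(x / factor), w - 1)]
                    for x in range(left, left + w)])
    return out


# Scale levels 1-3 of the experiment, under the assumptions stated above.
SCALE_FACTORS = [1.25, 1.5, 2.0]
```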

Light change. Illumination variations caused by weather or time of day were simulated by adjusting the brightness of the original image. As shown in Figure 8c, the brightness of the transmission tower aerial images was decreased in increments of 15% to obtain varying degrees of illumination change.
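The phrase "incremental decreases of 15%" can be read in two ways: as linear steps (85%, 70%, and 55% of the original intensity) or as compounded steps (0.85, 0.85², and 0.85³). The sketch below assumes the linear reading and clips results to the 8-bit range. In OpenCV, `cv2.convertScaleAbs(img, alpha=0.85)` would perform a comparable scaling. The function name is hypothetical.

```python
def darken_sequence(img, step=0.15, levels=3):
    """Return `levels` darkened copies of a grey image (2-D list of 0-255 values).

    Level k scales every intensity by (1 - step * k); this linear reading of the
    15% decrements is an assumption."""
    out = []
    for k in range(1, levels + 1):
        factor = max(0.0, 1.0 - step * k)
        out.append([[min(255, max(0, round(v * factor))) for v in row] for row in img])
    return out
```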

Table 10 compares the matching performance of the different algorithms under varying lighting conditions. The other algorithms lose accuracy as brightness changes; for instance, AKAZE achieves only 54.26% accuracy on the third image set. In contrast, our method maintains a matching accuracy above 99% in all test cases, indicating strong robustness to illumination changes.

Rotation. To generate the rotated transmission tower images in Figure 8d, we rotated the original image counterclockwise in three successive 15° steps. The matching results for these rotated images are presented in Table 11. The proposed algorithm achieves an average matching accuracy approximately 4% higher than those of AKAZE and SIFT. It is also significantly faster than SIFT and SURF, while performing on par with the original AKAZE.
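Rotations like these are typically applied with a 2×3 affine matrix. The sketch below builds that matrix using the formula documented for OpenCV's `cv2.getRotationMatrix2D`, in which a positive angle rotates counterclockwise about the given centre in image coordinates (y axis pointing down). We assume that "counterclockwise by 15° three times" means cumulative angles of 15°, 30°, and 45°. The helper names are hypothetical. When the warped output keeps the original image size, the rotated corners fall outside the canvas and are cropped.

```python
import math


def rotation_matrix_2x3(center, angle_deg, scale=1.0):
    """2x3 affine matrix rotating about `center` by `angle_deg` degrees
    (same formula as documented for cv2.getRotationMatrix2D)."""
    cx, cy = center
    alpha = scale * math.cos(math.radians(angle_deg))
    beta = scale * math.sin(math.radians(angle_deg))
    return [[alpha, beta, (1 - alpha) * cx - beta * cy],
            [-beta, alpha, beta * cx + (1 - alpha) * cy]]


def apply_2x3(m, p):
    """Map point p = (x, y) through a 2x3 affine matrix."""
    x, y = p
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Assumed cumulative rotation angles for levels 1-3.
ANGLES = [15 * k for k in range(1, 4)]
```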

5.4. Further Discussion

Runtime performance analysis. We measured the hardware requirements and the runtime of each component of the proposed method on the self-built dataset. As shown in Table 12, the proposed method uses only 19.6% of CPU capacity and 3.1 MB of memory during execution, slightly more than the original AKAZE algorithm. Current mainstream computing platforms and drones, such as the DJI Manifold 2 and DJI Mavic 3, can easily meet these hardware requirements; for example, the DJI Manifold 2 is equipped with an NVIDIA Jetson TX2 and 8 GB of memory. Table 12 also shows that feature matching is the main bottleneck for real-time performance, accounting for more than 60% of the runtime. The proposed method halves the matching time of the original algorithm, and the improved RANSAC runs in only one-third of the time of the original RANSAC, significantly improving the algorithm's real-time capability. This improvement is mainly attributed to the use of neighborhood support and KD-trees, which avoid repeated computation of feature point similarity. Overall, the proposed algorithm demonstrates good real-time performance while keeping its hardware requirements within a reasonable range.
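The text attributes part of the speed-up to KD-trees. To illustrate why, the sketch below computes GMS-style support counts, defined here as the number of other matches within a radius of a match in both images. It returns the same counts as an all-pairs double loop, but it uses a KD-tree over the source keypoints, so each radius query only visits nearby branches. This is a minimal pure-Python sketch under our own assumptions about how support is defined, and the function names are hypothetical. In practice, a library structure such as SciPy's `cKDTree.query_ball_point` would normally be used.

```python
def build_kdtree(points, indices=None, depth=0):
    """2-D KD-tree; each node is (point index, split axis, left subtree, right subtree)."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % 2
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    return (indices[mid], axis,
            build_kdtree(points, indices[:mid], depth + 1),
            build_kdtree(points, indices[mid + 1:], depth + 1))


def radius_query(node, points, q, r, out):
    """Append to `out` the indices of all points within distance r of q."""
    if node is None:
        return
    i, axis, left, right = node
    p = points[i]
    if (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 <= r * r:
        out.append(i)
    d = q[axis] - p[axis]
    if d <= r:   # the left half (coordinates <= p[axis]) may still hold neighbours
        radius_query(left, points, q, r, out)
    if d >= -r:  # the right half (coordinates >= p[axis]) may still hold neighbours
        radius_query(right, points, q, r, out)


def support_kdtree(src, dst, radius=50.0):
    """Support counts: spatial neighbours in the source image come from the
    KD-tree; each candidate is then checked in the destination image."""
    tree = build_kdtree(src)
    r2 = radius * radius
    support = []
    for i, q in enumerate(src):
        candidates = []
        radius_query(tree, src, q, radius, candidates)
        support.append(sum(
            1 for j in candidates
            if j != i and (dst[i][0] - dst[j][0]) ** 2 + (dst[i][1] - dst[j][1]) ** 2 <= r2))
    return support
```

With this simple sorting approach, building the tree costs O(n log² n). Each query typically touches only a small part of the tree, instead of requiring the O(n²) pair comparisons of a brute-force search.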

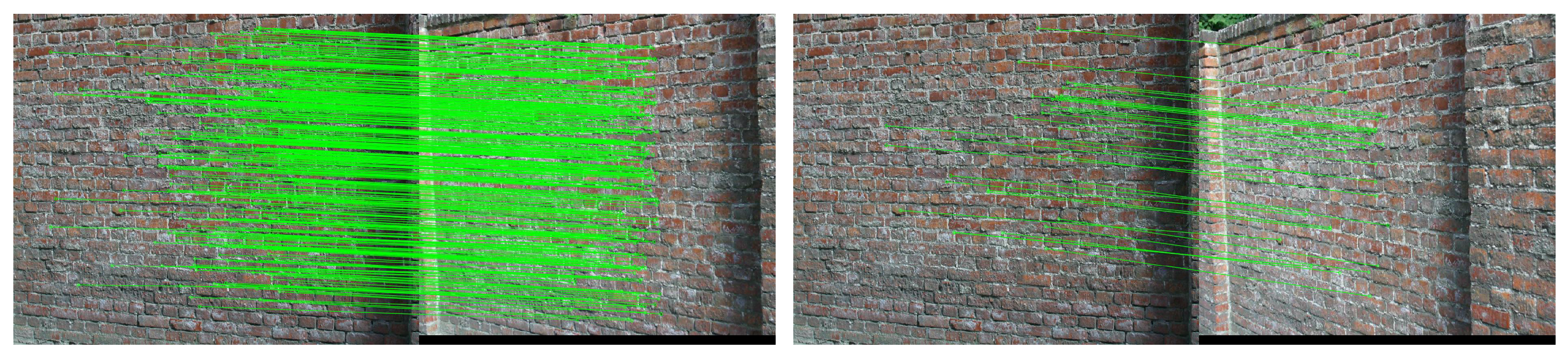

Restricted case analysis. Although the proposed method outperforms the other algorithms in the image matching task, there is still room for improvement. Figure 9 shows restricted cases of the proposed method on the Oxford dataset. The algorithm detects a large number of feature points with high matching accuracy on normal wall images (left case), but it detects only a few feature points on images with severe viewpoint changes (right case). This is mainly because severe viewpoint changes distort local features, making it difficult to match feature points successfully.

Embedded platform deployment. One limitation is that all our experiments were conducted on a general-purpose computing platform, which does not reflect the resource-constrained nature of typical embedded platforms used in UAV applications. While the current results provide a baseline for algorithm performance, future work could focus on evaluating the proposed method on representative constrained hardware platforms such as FPGAs. This will help to assess the real-world feasibility and optimization potential of our approach.

Operation security. In real-world UAV-based power infrastructure inspection systems, cybersecurity is a critical concern. Potential threats include sensor spoofing (e.g., using fake towers to confuse detection), tampering with the image transmission pipeline (e.g., intercepting or modifying image streams), and adversarial image perturbations that may mislead the image matching algorithm. To address these problems, our proposed algorithm would introduce image integrity verification mechanisms, such as digital watermarking or cryptographic hash checking, to ensure the authenticity of the input images. In addition, robust matching strategies can be employed to enhance resilience against cyberattacks. To ensure the reliability of the matching output, the proposed algorithm would also introduce a verification step that cross-validates the matched image features against historical grid data and applies confidence scoring.

UAV-based power infrastructure inspection is also inherently safety-critical. A failure in image matching could lead to misinterpretation of structural conditions or navigation errors. Therefore, ensuring robustness to failures, real-time response guarantees, and fallback mechanisms is essential for future deployment. The current version of our algorithm focuses on accurate and efficient image matching under normal conditions. Future work will extend the system with real-time constraints and fault-tolerance mechanisms, such as timeout-based watchdogs, multi-stage verification pipelines, or confidence-driven fallback strategies. Additionally, it is important to analyze the timing determinism of the algorithm under resource constraints, especially when deployed on embedded platforms. These aspects are vital to enhance the reliability and safety of the system in practical, autonomous operations.