Generative Design by Using Exploration Approaches of Reinforcement Learning in Density-Based Structural Topology Optimization

Abstract

1. Introduction

1.1. Design Exploration in Generative Design

1.2. Exploration Methods in Reinforcement Learning

1.3. Research Purpose

2. Density-Based Structural Topology Optimization

2.1. Problem Statements

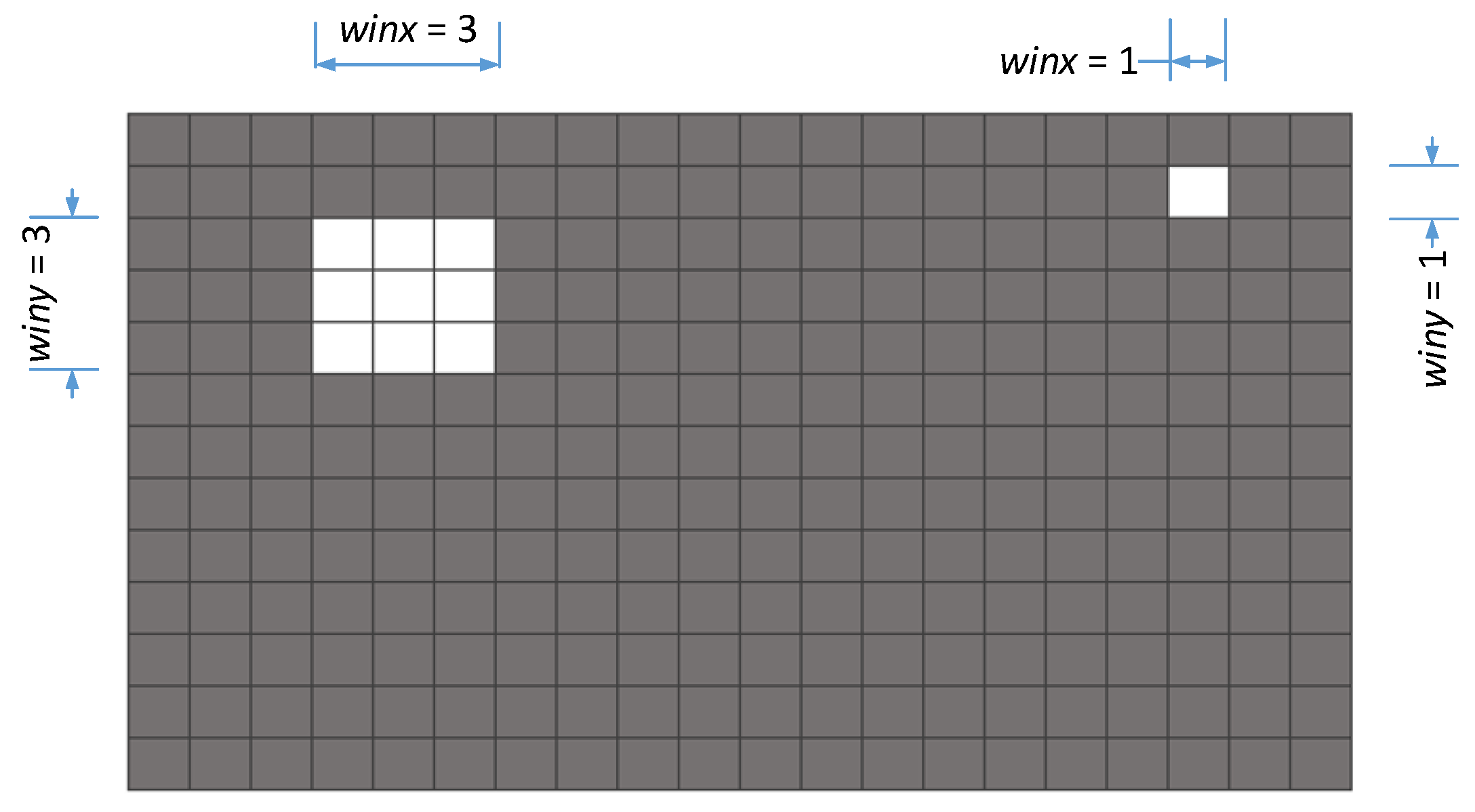

2.2. Sensitivity Analysis and Filter Schemes

2.3. Adding and Removing Elements

2.4. Convergence Criterion

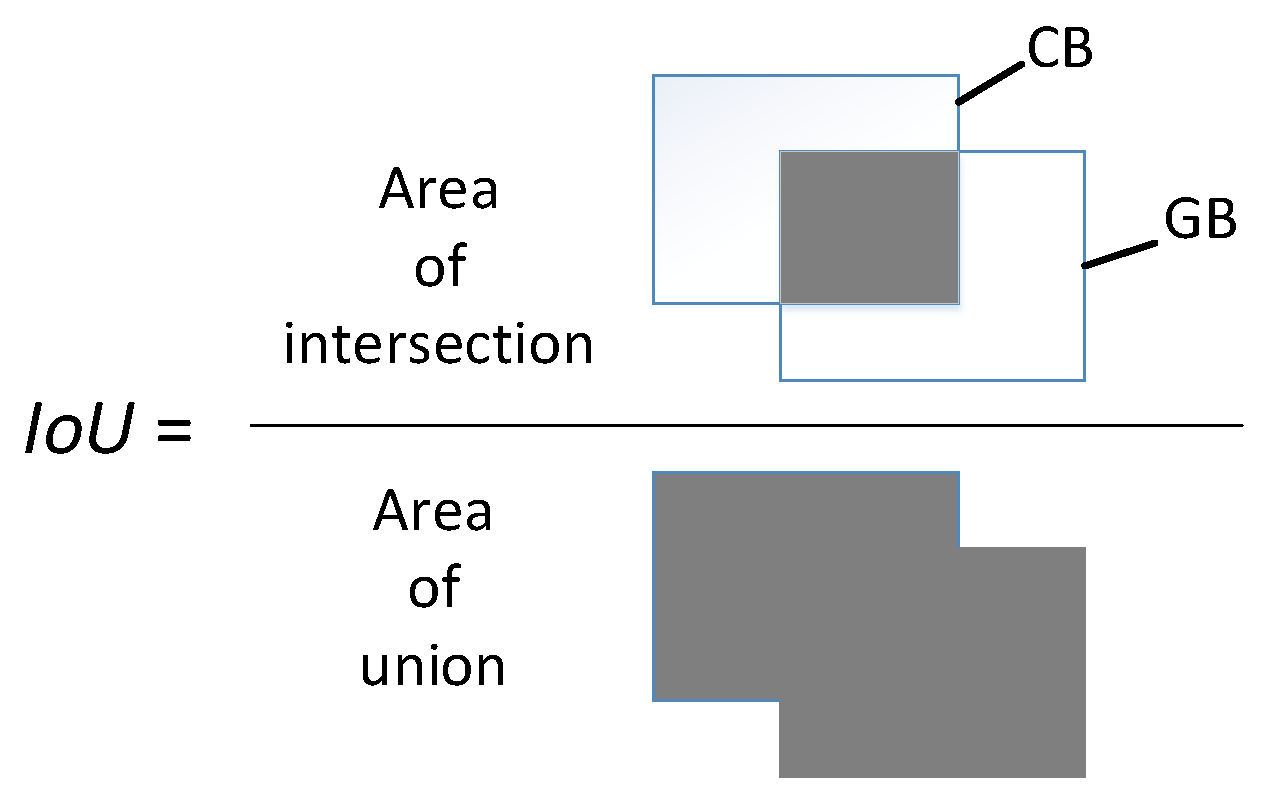

2.5. Evaluation

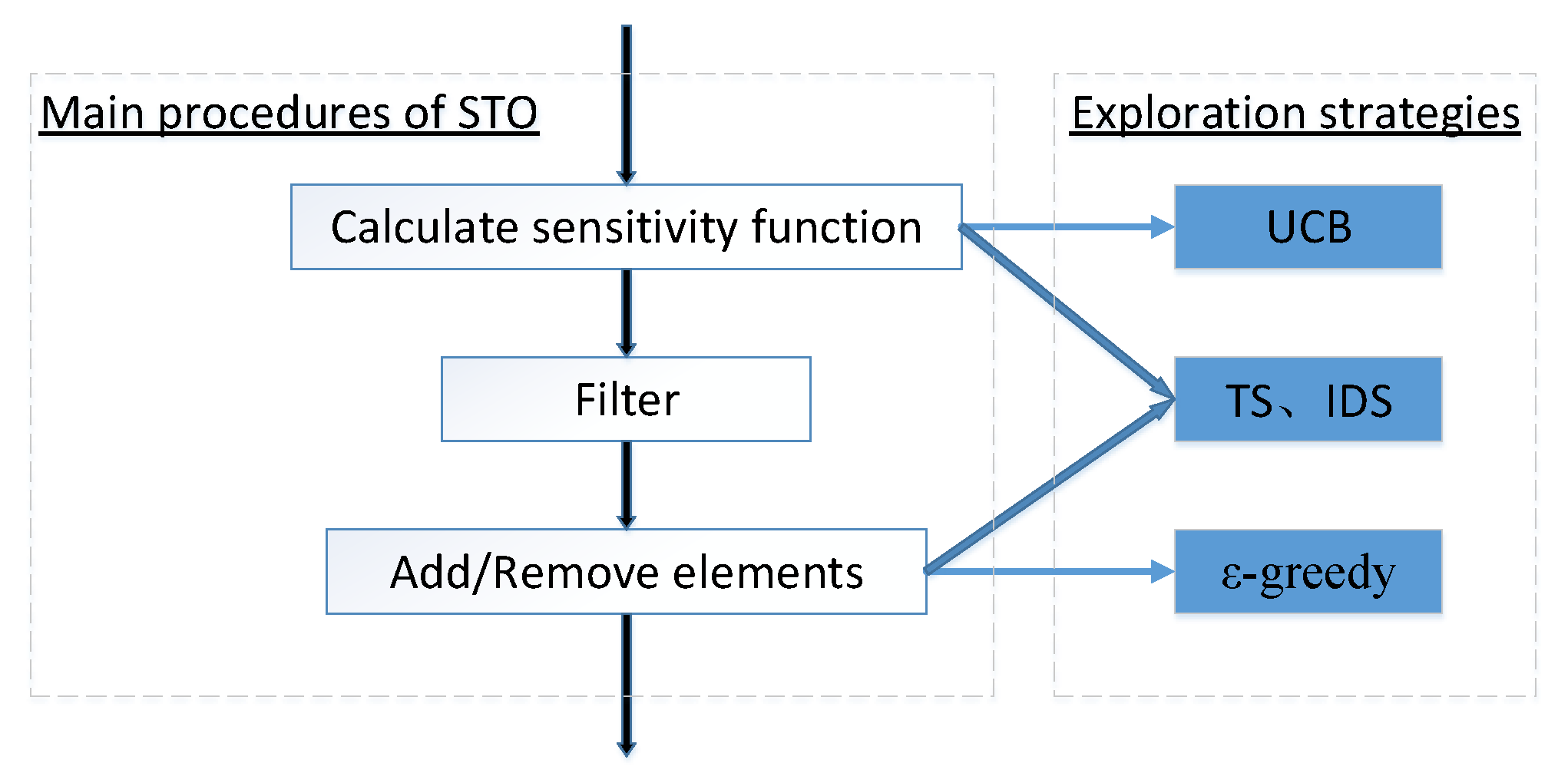

3. Using Exploration Approaches of Reinforcement Learning in STO

3.1. Naïve Exploration

3.2. Optimistic Exploration

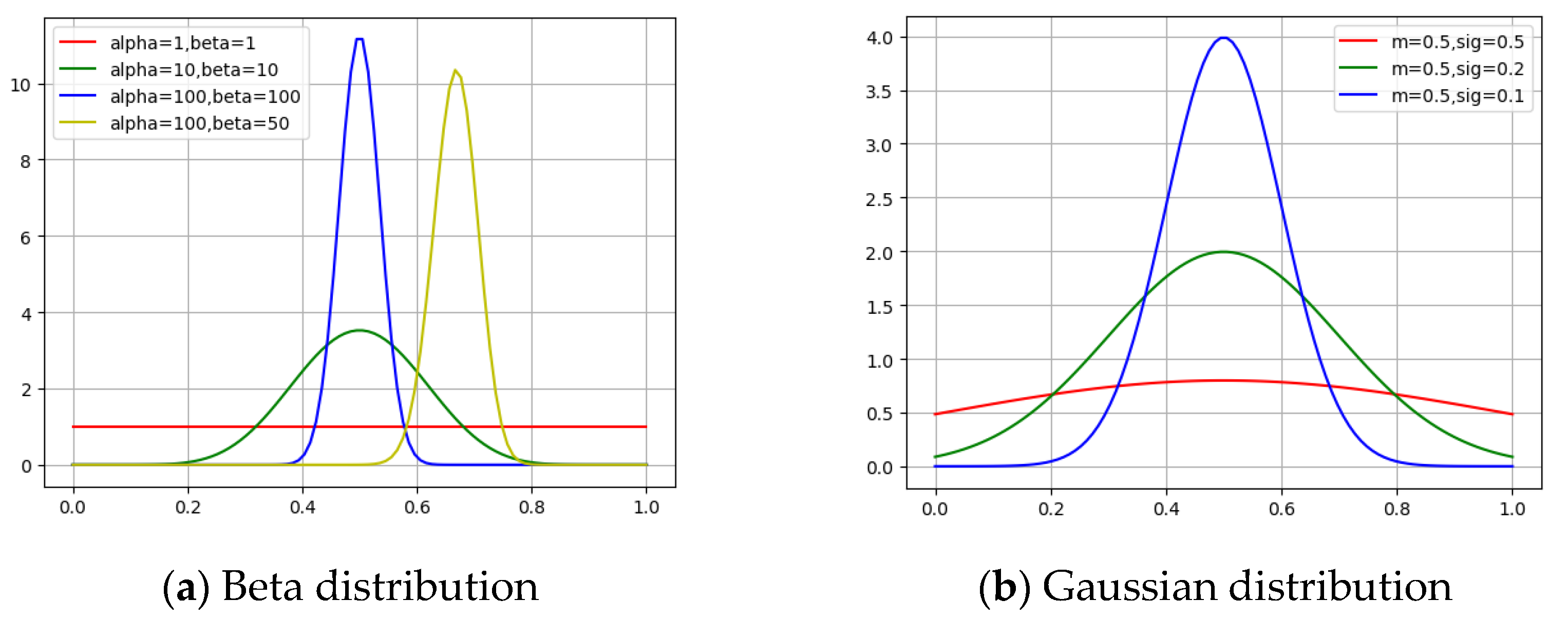

3.3. Probability Matching

- Sample the reward from the posterior reward distribution

- Compute the action-value function

- Take the optimal action by Equation (18)

- Execute the chosen action in actual environment and get the reward

- Update the posterior distribution by Equations (19) and (20)

3.4. Information State Search

- Get the samples for estimation by interacting with the actual environment in the first iteration, and by the updated posterior reward distribution in the following steps

- Compute , and the information gain ratio

- Take the optimal action by Equation (21)

- Execute the chosen action in actual environment and get the reward

- Update the posterior reward distribution by Equations (19) and (20)

4. Cases and Discussion

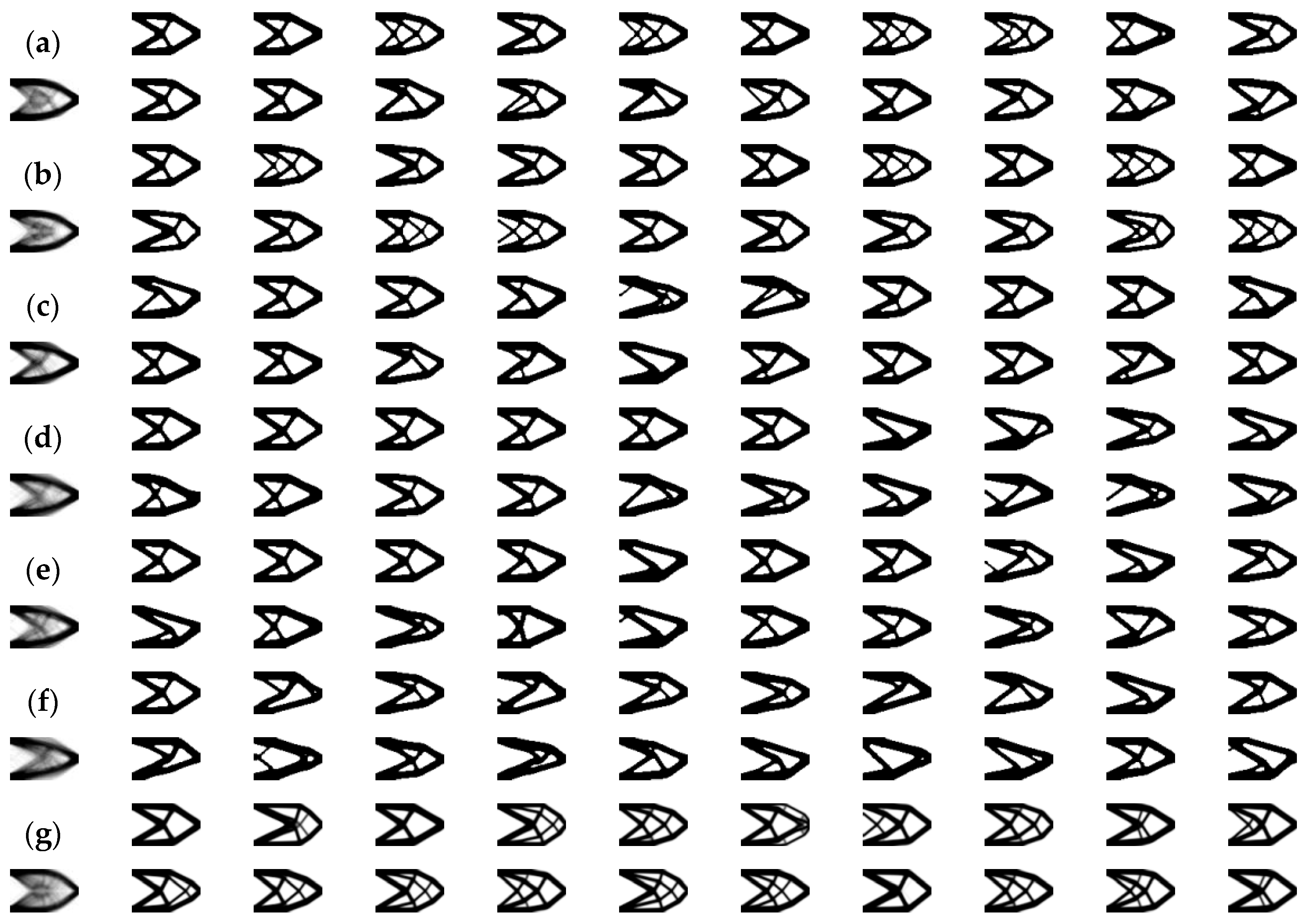

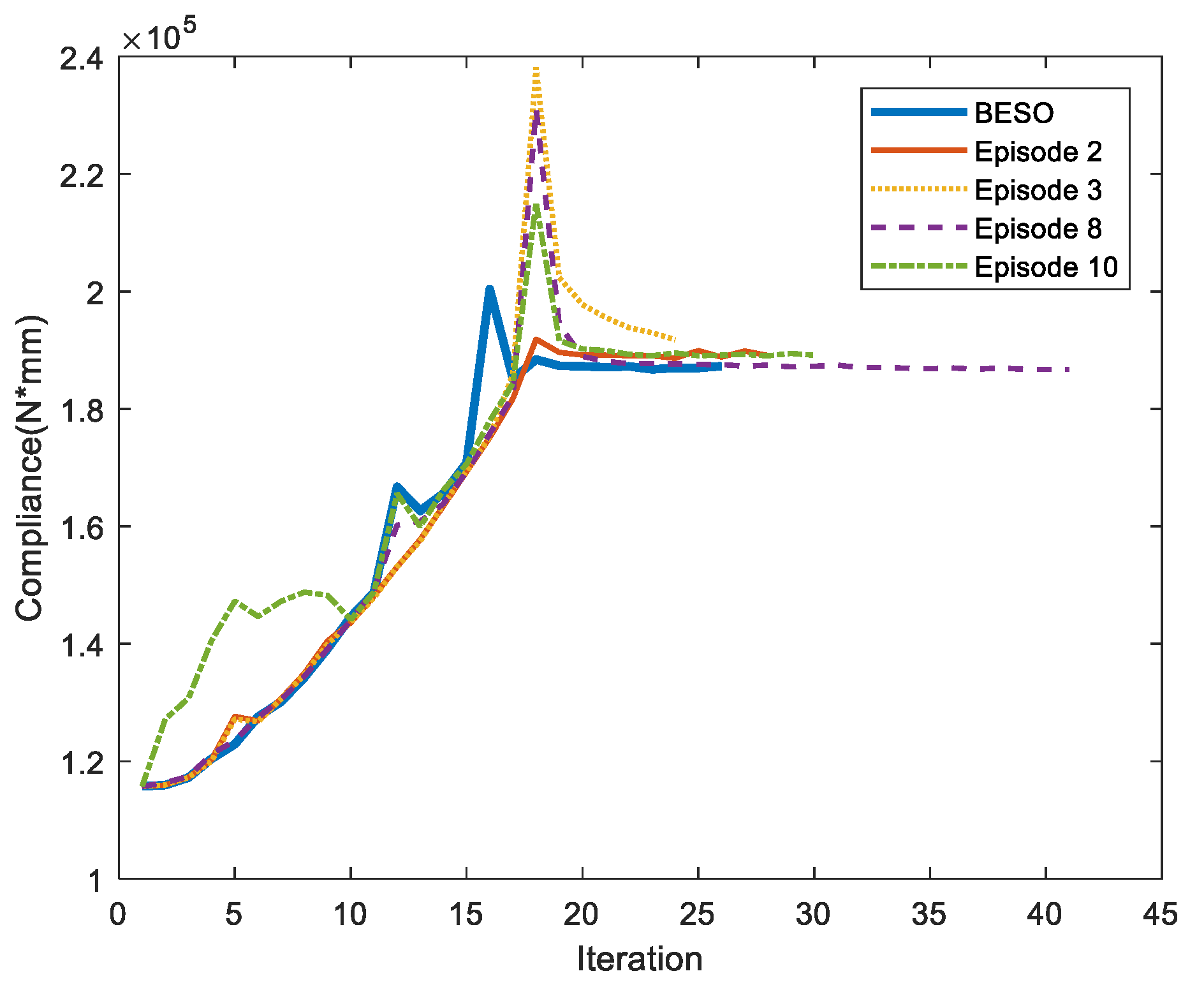

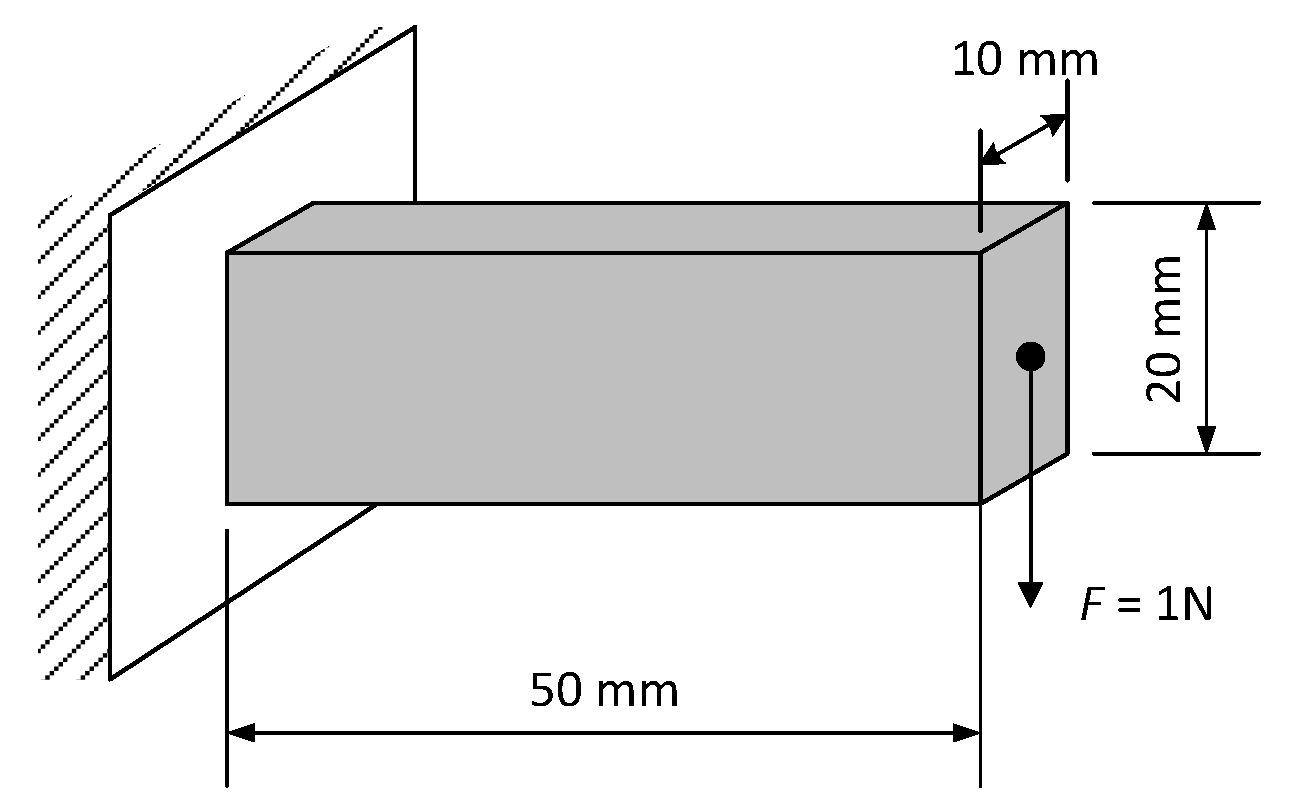

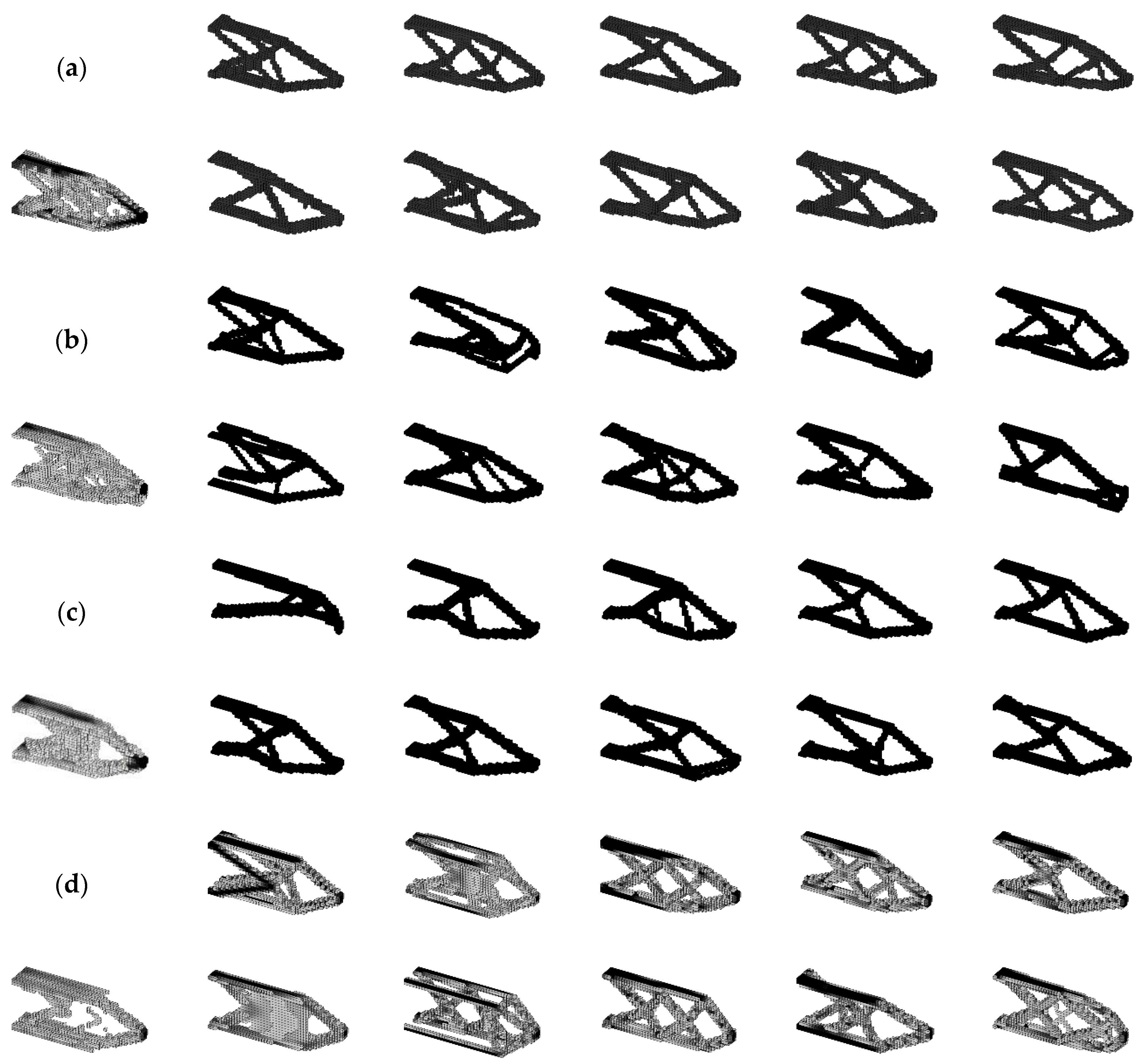

4.1. Cantilever Beam

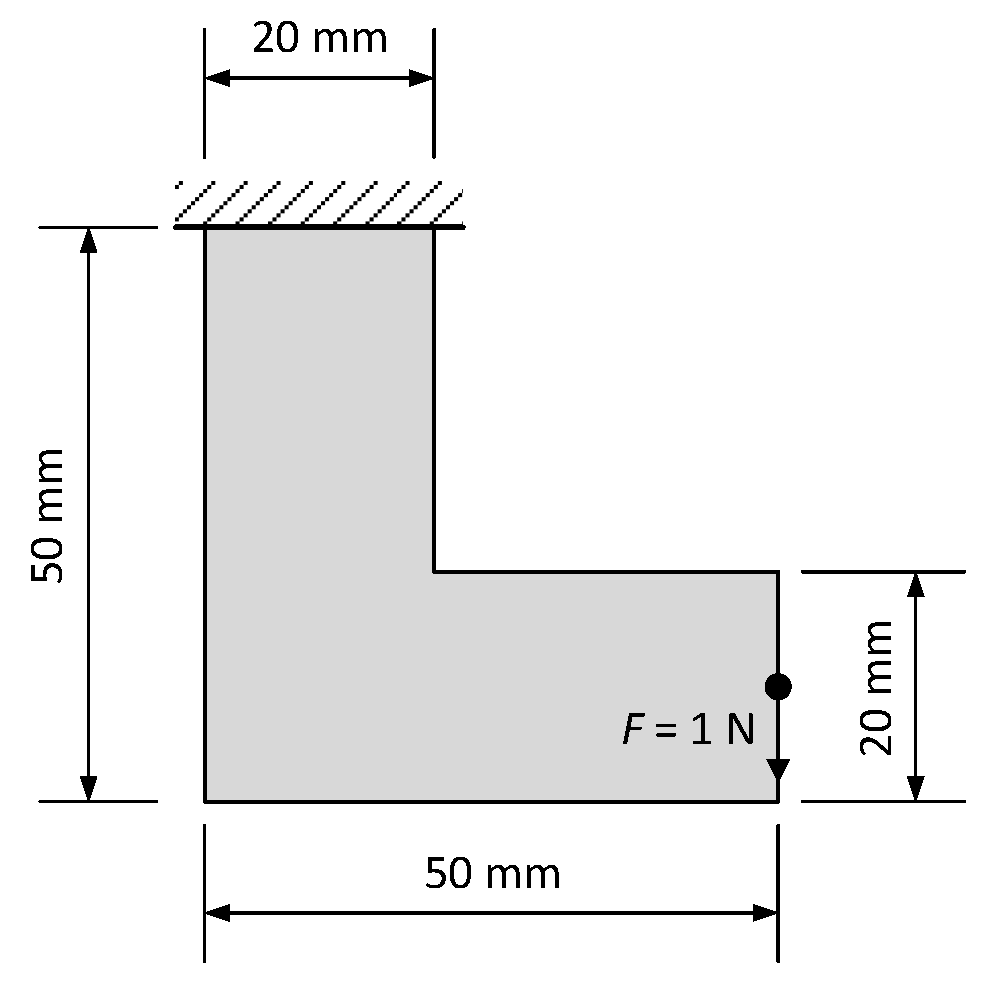

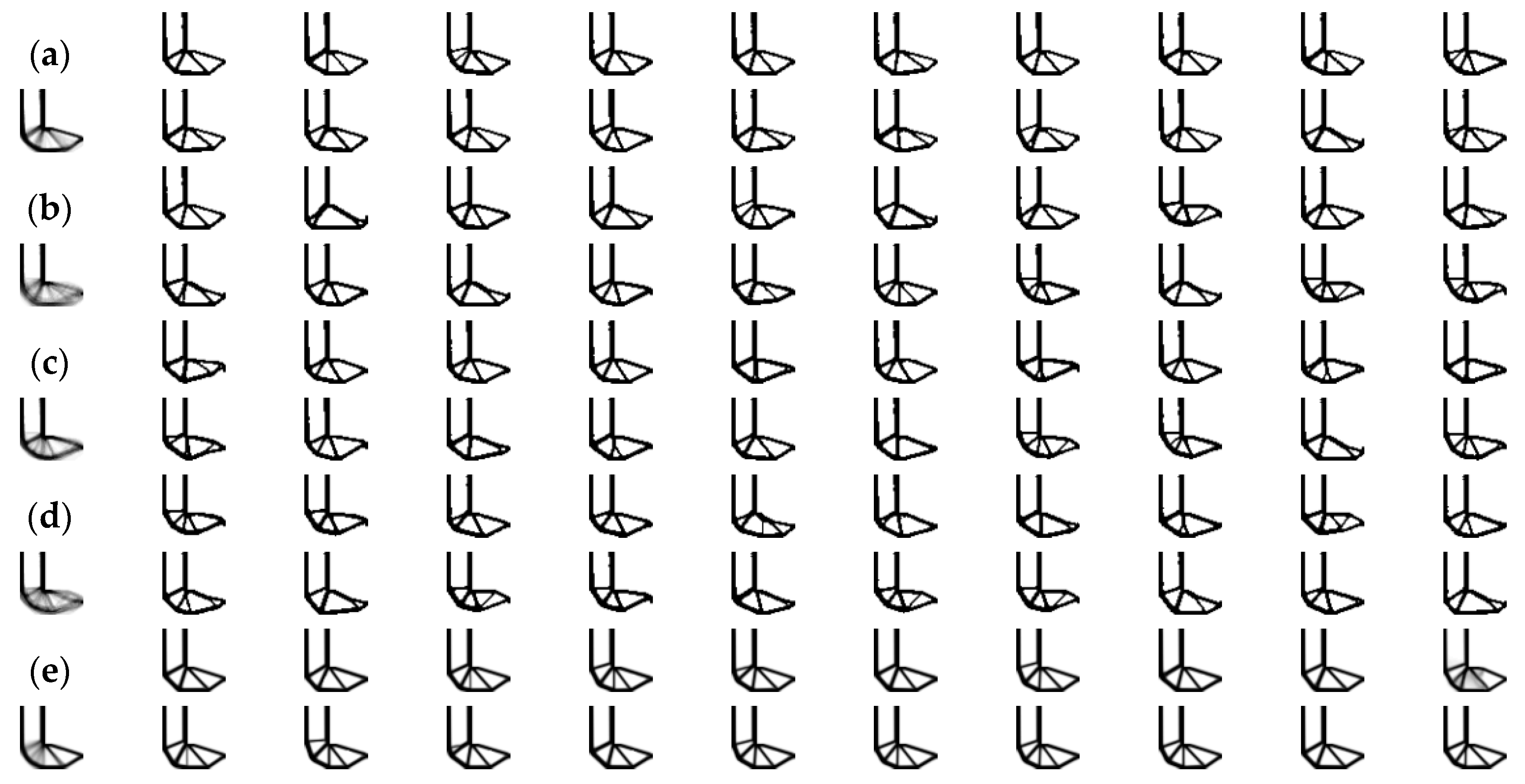

4.2. L-Shaped Beam

4.3. 3D Cantilever Beam

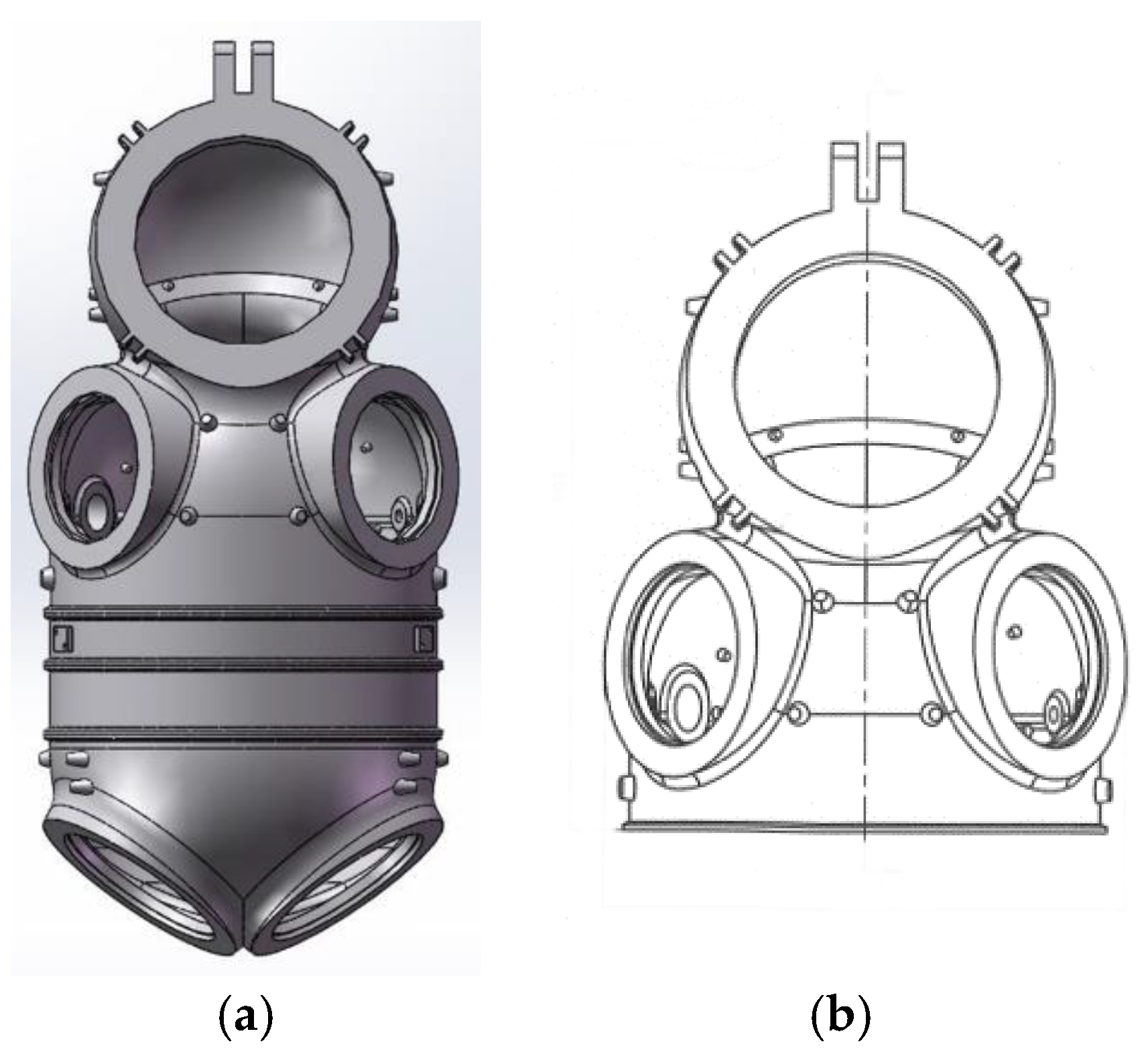

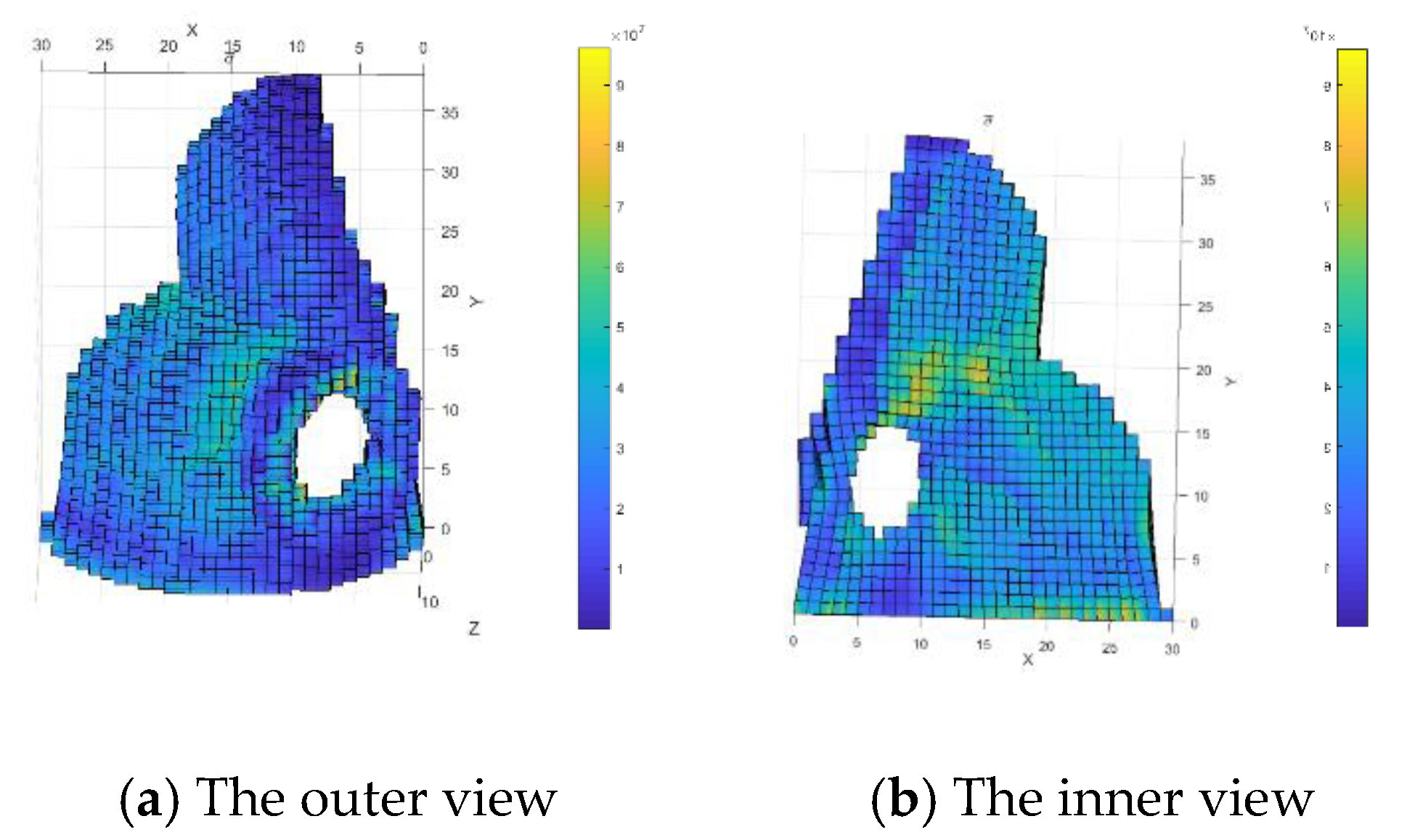

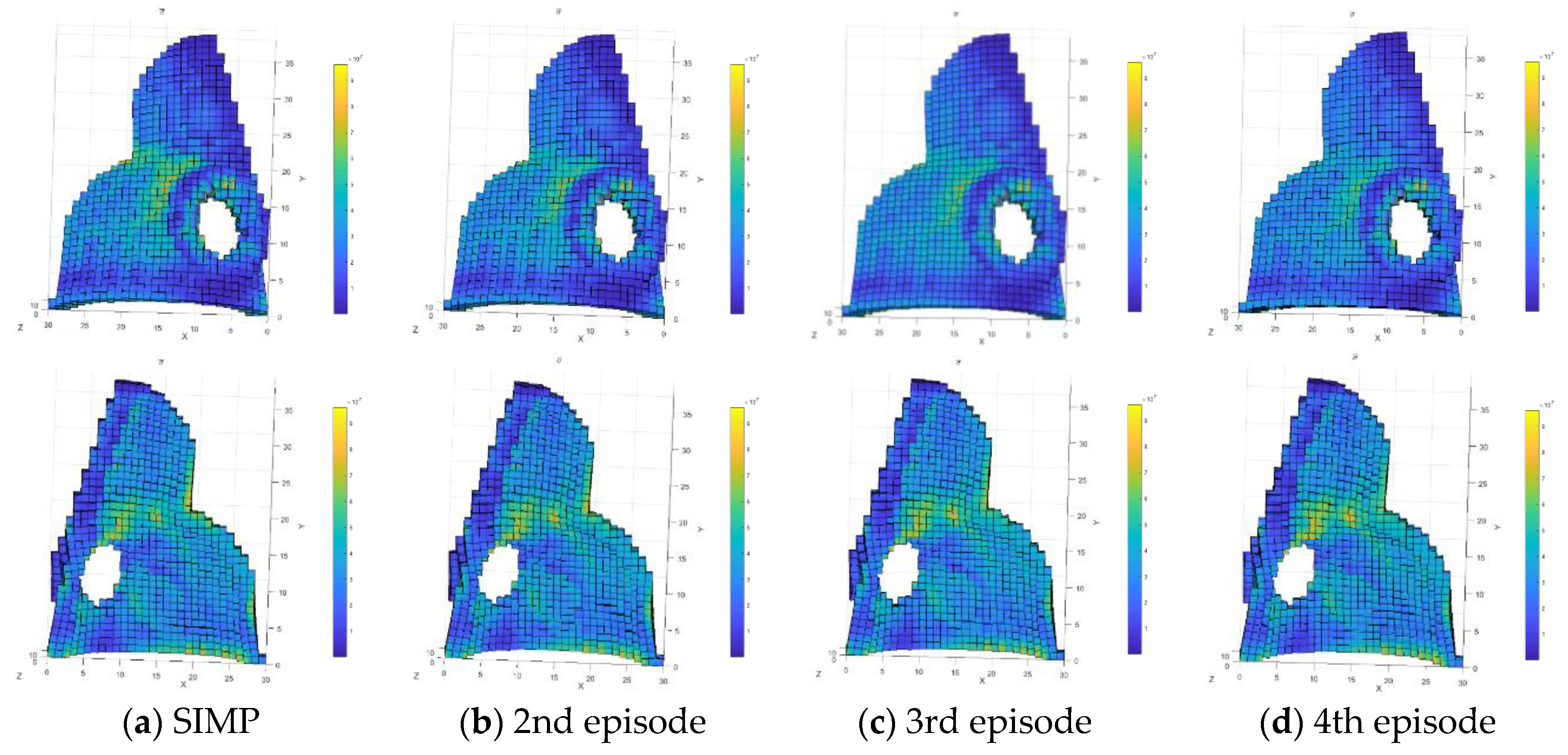

4.4. Upper Body of an Atmospheric Diving Suit

5. Conclusions and Future

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Variable | Description |

| action vector | |

| action | |

| best action for maximizing the predicted value | |

| bonus | |

| Beta() | Beta distribution, : two parameters |

| structure compliance (strain energy) | |

| a positive parameter that controls the degree of exploration in UCB | |

| [:] | operator of calculating the mathematical expectation |

| evolutionary volume ratio | |

| force vector | |

| information gain of action | |

| operator of the entropy | |

| weight factor of the filter | |

| history sequence of action and reward | |

| global stiffness matrix | |

| element stiffness matrix | |

| an integral number in the convergence criterion | |

| positive move limit | |

| number of times action has been selected | |

| N() | Gaussian distribution, : mean; : variance |

| posterior reward distribution | |

| penalty exponent in SIMP | |

| action-value function | |

| reward function | |

| sampled reward | |

| distance between centers of element e and element j | |

| filter radius | |

| state | |

| current iteration number | |

| number of iteration when the volume fraction just reaching the minimum | |

| displacement vector | |

| prescribed total structural volume | |

| density of element e | |

| observation drawn independently from the actual reward distribution | |

| sensitivity of element e | |

| posterior distribution of | |

| difference of rewards earned by optimal action and actual action | |

| a probability value defined in -greedy | |

| value of at the beginning of the episode | |

| policy | |

| a specified little value representing the convergence tolerance | |

| Subscripts | |

| e | an individual element |

References

- Liu, X.; Yi, W.J.; Li, Q.S.; Shen, P.S. Genetic evolutionary structural optimization. J. Constr. Steel Res. 2008, 64, 305–311. [Google Scholar] [CrossRef]

- Zuo, Z.H.; Xie, Y.M.; Huang, X. Combining genetic algorithms with BESO for topology optimization. Struct. Multidiscip. Optim. 2009, 38, 511–523. [Google Scholar] [CrossRef]

- Kaveh, A.; Hassani, B.; Shojaee, S.; Tavakkoli, S.M. Structural topology optimization using ant colony methodology. Eng. Struct. 2008, 30, 2559–2565. [Google Scholar] [CrossRef]

- Luh, G.C.; Lin, C.Y. Structural topology optimization using ant colony optimization algorithm. Appl. Soft Comput. 2009, 9, 1343–1353. [Google Scholar] [CrossRef]

- Luh, G.C.; Lin, C.Y.; Lin, Y.S. A binary particle swarm optimization for continuum structural topology optimization. Appl. Soft Comput. 2011, 11, 2833–2844. [Google Scholar] [CrossRef]

- Aulig, N.; Olhofer, M. Topology optimization by predicting sensitivities based on local state features. In Proceedings of the 5th European Conference on Computational Mechanics (ECCM V), Barcelona, Spain, 20–25 July 2014. [Google Scholar]

- Nikola, A.; Olhofer, M. Neuro-evolutionary topology optimization of structures by utilizing local state features. In Proceedings of the 2014 Annual Conference on Genetic and Evolutionary Computation, Vancouver, BC, Canada, 12–16 July 2014; pp. 967–974. [Google Scholar]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018; pp. 19–114. [Google Scholar]

- Shea, K.; Aish, R.; Gourtovaia, M. Towards integrated performance-driven generative design tools. Automat. Constr. 2005, 14, 253–264. [Google Scholar] [CrossRef]

- Krish, S. A practical generative design method. Comput. Aided Design. 2011, 43, 88–100. [Google Scholar] [CrossRef]

- Kang, N. Multidomain Demand Modeling in Design for Market Systems. Ph.D. Thesis, University of Michigan, Ann Arbor, MI, USA, 2014. [Google Scholar]

- Autodesk, Generative Design. Available online: https://www.autodesk.com/solutions/generative-design (accessed on 1 January 2019).

- McKnight, M. Generative Design: What it is? How is it being used? Why it’s a game changer. KNE Eng. 2017, 2, 176–181. [Google Scholar] [CrossRef]

- Justin, M.; Glueck, M.; Bradner, E.; Hashemi, A.; Grossman, T.; Fitzmaurice, G. Dream lens: Exploration and visualization of large-scale generative design datasets. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; p. 369. [Google Scholar]

- Sosnovik, I.; Oseledets, I. Neural networks for topology optimization. Russ. J. Numer. Anal. Math. 2019, 34, 215–223. [Google Scholar] [CrossRef]

- Rawat, S.; Shen, M.H. Application of Adversarial Networks for 3D Structural Topology Optimization. SAE Tech. Pap. 2019. [Google Scholar] [CrossRef]

- Yu, Y.; Hur, T.; Jung, J.; Jang, I.G. Deep learning for determining a near-optimal topological design without any iteration. Struct. Multidiscip. Optim. 2019, 59, 787–799. [Google Scholar] [CrossRef]

- Oh, S.; Jung, Y.; Kim, S.; Lee, I.; Kang, N. Deep generative design: Integration of topology optimization and generative models. J. Mech. Design. 2019, 141, 111405. [Google Scholar] [CrossRef]

- Bendsøe, M.P. Optimal shape design as a material distribution problem. Struct. Optim. 1989, 1, 193–202. [Google Scholar] [CrossRef]

- Young, V.; Querin, O.M.; Steven, G.P.; Xie, Y.M. 3D and multiple load case bi-directional evolutionary structural optimization (BESO). Struct. Optim. 1999, 18, 183–192. [Google Scholar] [CrossRef]

- Huang, X.; Xie, Y.M. Convergent and mesh-independent solutions for the bi-directional evolutionary structural optimization method. Finite Elem. Anal. Design. 2007, 43, 1039–1049. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529. [Google Scholar] [CrossRef]

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of go without human knowledge. Nature 2017, 550, 354. [Google Scholar] [CrossRef] [PubMed]

- Vinyals, O.; Babuschkin, I.; Czarnecki, W.M.; Mathieu, M.; Dudzik, A.; Chung, J.; Choi, D.H.; Powell, R.; Ewalds, T.; Georgiev, P.; et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 2019, 575, 350–354. [Google Scholar] [CrossRef] [PubMed]

- Buşoniu, L.; Babuška, R.; De Schutter, B. Multi-agent reinforcement learning: An overview. In Innovations in Multi-Agent Systems and Applications-1; Springer: Berlin, Germany, 2010; pp. 183–221. [Google Scholar]

- Lai, T.L.; Robbins, H. Asymptotically efficient adaptive allocation rules. Adv. Appl. Math. 1985, 6, 4–22. [Google Scholar] [CrossRef]

- Auer, P.; Cesa-Bianchi, N.; Fischer, P. Finite-time analysis of the multiarmed bandit problem. Mach. Learn. 2002, 47, 235–256. [Google Scholar] [CrossRef]

- Thompson, W.R. On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika 1933, 25, 285–294. [Google Scholar] [CrossRef]

- Russo, D.; van Roy, B. Learning to optimize via information-directed sampling. In Advances in Neural Information Processing Systems, Proceedings of the NIPS, Montreal, QC, Canada, 8–13 December 2014; Curran: New York, NY, USA, 2014; pp. 1583–1591. [Google Scholar]

- Sigmund, O.; Maute, K. Topology optimization approaches. Struct. Multidiscip. Optim. 2013, 48, 1031–1055. [Google Scholar] [CrossRef]

- Bendsøe, M.P.; Sigmund, O. Topology Optimization: Theory, Methods and Applications, 2nd ed.; Springer: Berlin, Germany, 2003; pp. 9–20. [Google Scholar]

- Chu, D.N.; Xie, Y.M.; Hira, A.; Steven, G.P. Evolutionary structural optimization for problems with stiffness constraints. Finite Elem. Anal. Des. 1996, 21, 239–251. [Google Scholar] [CrossRef]

- Huang, X.H.; Xie, Y. Bidirectional evolutionary topology optimization for structures with geometrical and material nonlinearities. AIAA J. 2007, 45, 308–313. [Google Scholar] [CrossRef]

| Nth Episode | IOU | ITER | Nth Episode | IOU | ITER | ||

|---|---|---|---|---|---|---|---|

| BESO | 1.873 | 26 | 11 | 1.923 | 0.736 | 43 | |

| 2 | 1.891 | 0.600 | 28 | 12 | 1.878 | 0.851 | 34 |

| 3 | 1.918 | 0.779 | 24 | 13 | 1.873 | 0.740 | 46 |

| 4 | 1.885 | 0.794 | 55 | 14 | 1.912 | 0.801 | 34 |

| 5 | 1.883 | 0.856 | 24 | 15 | 1.876 | 0.896 | 29 |

| 6 | 1.883 | 0.695 | 37 | 16 | 1.881 | 0.858 | 26 |

| 7 | 1.877 | 0.746 | 31 | 17 | 1.913 | 0.866 | 75 |

| 8 | 1.867 | 0.859 | 41 | 18 | 1.900 | 0.894 | 42 |

| 9 | 1.878 | 0.870 | 28 | 19 | 1.963 | 0.719 | 100 |

| 10 | 1.891 | 0.868 | 30 | 20 | 1.886 | 0.893 | 40 |

| Nth Episode | IOU | Maximum Stress (Mpa) | ITER | ||

|---|---|---|---|---|---|

| SIMP | 214.9 | --- | --- | 96.3 | 7 |

| 2 | 218.6 | 1.7% | 0.860 | 96.1 | 9 |

| 3 | 220.9 | 2.8% | 0.940 | 96.0 | 9 |

| 4 | 226.4 | 5.3% | 0.936 | 95.9 | 6 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, H.; Ma, L. Generative Design by Using Exploration Approaches of Reinforcement Learning in Density-Based Structural Topology Optimization. Designs 2020, 4, 10. https://doi.org/10.3390/designs4020010

Sun H, Ma L. Generative Design by Using Exploration Approaches of Reinforcement Learning in Density-Based Structural Topology Optimization. Designs. 2020; 4(2):10. https://doi.org/10.3390/designs4020010

Chicago/Turabian StyleSun, Hongbo, and Ling Ma. 2020. "Generative Design by Using Exploration Approaches of Reinforcement Learning in Density-Based Structural Topology Optimization" Designs 4, no. 2: 10. https://doi.org/10.3390/designs4020010

APA StyleSun, H., & Ma, L. (2020). Generative Design by Using Exploration Approaches of Reinforcement Learning in Density-Based Structural Topology Optimization. Designs, 4(2), 10. https://doi.org/10.3390/designs4020010