Appendix A

The proposed checklists for all patterns are shown below.

The checklist in Pattern 1

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

Items examining each subtask in a task

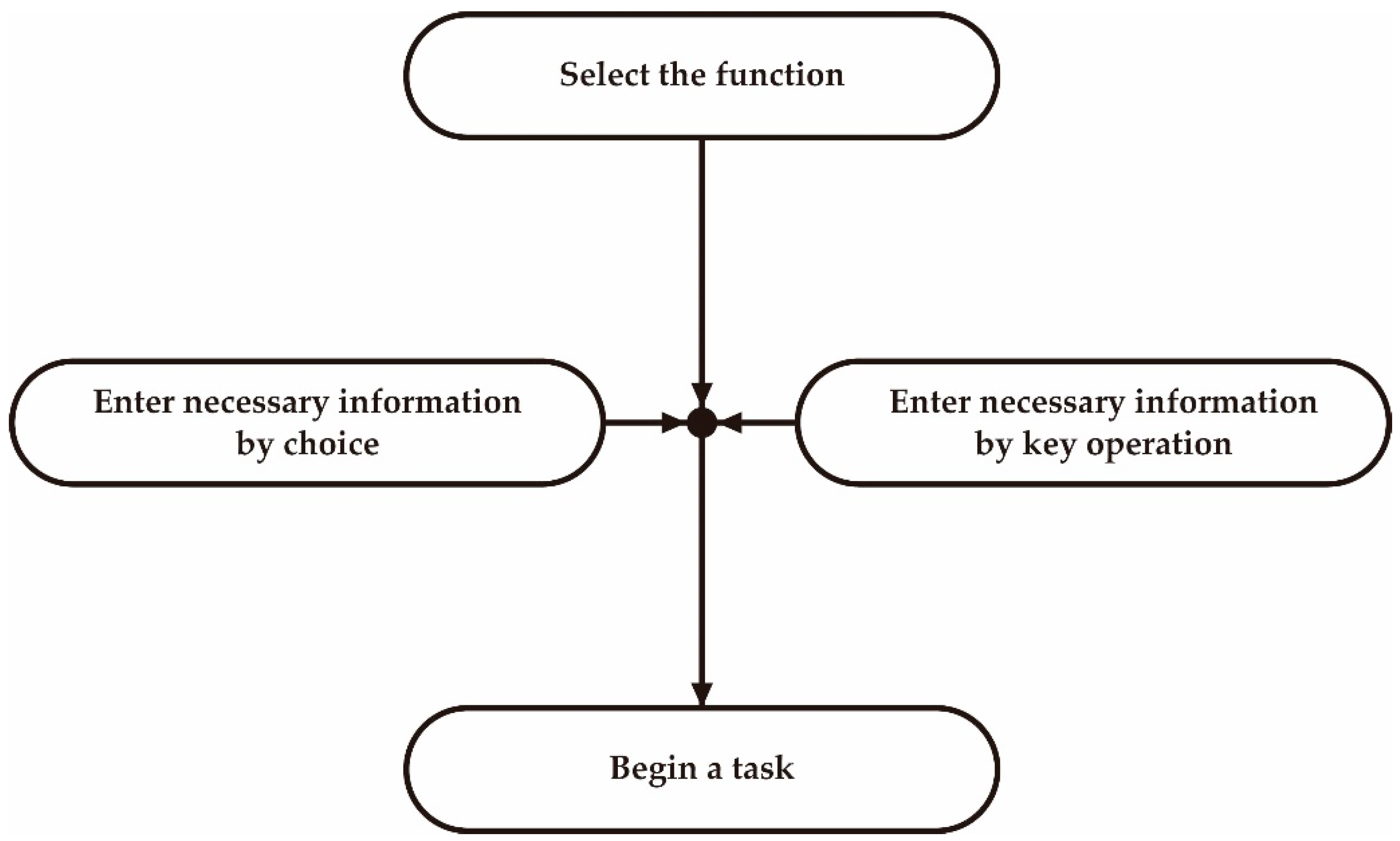

(Select the function)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by key operation)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand the operation portion?

- -

Can users easily grasp the entirety of the operation portion?

(Begin a task)

- -

Can users easily understand the operation portion?

The checklist in Pattern 2

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

Items examining each subtask in a task

(Select the function)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

The checklist in Pattern 3

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users easily understand the timing of task beginning?

Items examining each subtask in a task

(Select the function)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by key operation)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand the operation portion?

- -

Can users easily grasp the entirety of the operation portion?

The checklist in Pattern 4

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Can users understand immediately the operation result?

- -

Can parameter adjustment be conducted in real time?

Items examining each subtask in a task

(Select the function)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

The checklist in Pattern 5

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Guide users’ operation outside the screen)

- -

Can users understand immediately the input method of media by appearances of the operation portion?

- -

Can users easily understand the timing of input or output of media?

- -

Can users understand immediately the relationship between the input or output portion and the operation portion?

- -

Can users easily understand the input or output portion?

- -

Are there clear clues for setting the media?

- -

Can users operate the UI in a natural attitude?

- -

Can users easily understand messages conducive to input-output of the media?

(Enter information)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand where the operation portion is?

- -

Can users easily grasp the entirety of the operation portion?

- -

Can users easily understand where the choices are?

(Show the confirming message)

- -

Can users easily understand the confirming contents?

- -

Can users easily understand that an irreversible operation is beginning?

- -

Can users easily understand the message display portion?

(Begin a task)

- -

Can users easily understand the operation portion?

The checklist in Pattern 6

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Guide users’ operation outside the screen)

- -

Can users understand immediately the input or output method of media by appearances of the operation portion?

- -

Can users easily understand the timing of input or output of media?

- -

Can users understand immediately the relationship between the input or output portion and the operation portion?

- -

Can users easily understand the input or output portion?

- -

Are there clear clues for setting the media?

- -

Can users operate the UI in a natural attitude?

- -

Can users easily understand messages conducive to input-output of the media?

(Begin a task)

- -

Can users easily understand the operation portion?

The checklist in Pattern 7

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Select the function)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

(Show the confirming message)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

(Begin a task)

- -

Can users easily understand the operation portion?

(Guide users’ operation outside the screen)

- -

Can users understand immediately the output method of media by appearances of the operation portion?

- -

Can users easily understand the timing of output of media?

- -

Can users understand immediately the relationship between the output portion and the operation portion?

- -

Can users easily understand where the output portion is?

- -

Can users operate the UI in a natural attitude?

- -

Can users easily understand messages conducive to output of the media?

The checklist in Pattern 8

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Select the function)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by key operation)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand the operation portion?

- -

Can users easily grasp the entirety of the operation portion?

(Show the confirming message)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the selecting functions?

(Begin a task)

- -

Can users easily understand the operation portion?

(Guide users’ operation outside the screen)

- -

Can users understand immediately the output method of media by appearances of the operation portion?

- -

Can users easily understand the timing of output of media?

- -

Can users understand immediately the relationship between the output portion and the operation portion?

- -

Can users easily understand where the output portion is?

- -

Can users operate the UI in a natural attitude?

- -

Can users easily understand messages conducive to output of the media?

The checklist in Pattern 9

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Select the method)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by key operation)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand the operation portion?

- -

Can users easily grasp the entirety of the operation portion?

(Show the list)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily grasp the entirety of the list display portion?

(Show the contents in detail)

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand where the back button is?

- -

Can users easily grasp the entirety of information?

The checklist in Pattern 10

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users understand immediately the relationship among UI parts?

- -

Can users understand consistently the situation in users?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the choices?

Items examining each subtask in a task

(Show the list)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily grasp the entirety of the list display portion?

(Show the contents in detail)

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand where the back button is?

- -

Can users easily grasp the entirety of information?

The checklist in Pattern 11

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Select the method)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Show the contents in detail)

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the back button?

- -

Can users easily grasp the entirety of information?

The checklist in Pattern 12

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Select the method)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the choices?

(Show the list)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily grasp the entirety of the list display portion?

(Select the method)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by key operation)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand the operation portion?

- -

Can users easily grasp the entirety of the operation portion?

(Show the contents in detail)

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the back button?

- -

Can users easily grasp the entirety of information?

The checklist in Pattern 13

Items examining the entirety of a task

- -

Are there any clues for supposing the following operation?

- -

Can users easily understand the vocabulary or the icons?

- -

Are there any friendly and smooth forms of feedback for the operation?

- -

Can users easily suppose the operation method?

- -

Can users understand immediately the relationship among UI parts?

- -

Are the layouts of operation panels or screens standardized?

- -

Is there consistency in the operation method?

- -

Can users understand consistently the situation in users?

Items examining each subtask in a task

(Select the method)

- -

Can users easily understand where the choices are?

- -

Is the operation panel or screen simple?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by choice)

- -

Can users easily understand where the choices are?

- -

Can users easily grasp the entirety of the choices?

(Enter necessary information by key operation)

- -

Can users operate UI with few and efficient operation procedures?

- -

Can users easily understand the operation portion?

- -

Can users easily grasp the entirety of the operation portion?

(Begin a task)

- -

Can users easily understand the operation portion?