Modulating Multisensory Processing: Interactions Between Semantic Congruence and Temporal Synchrony

Abstract

1. Introduction

2. Experiment 1

2.1. Materials and Methods

2.1.1. Participants

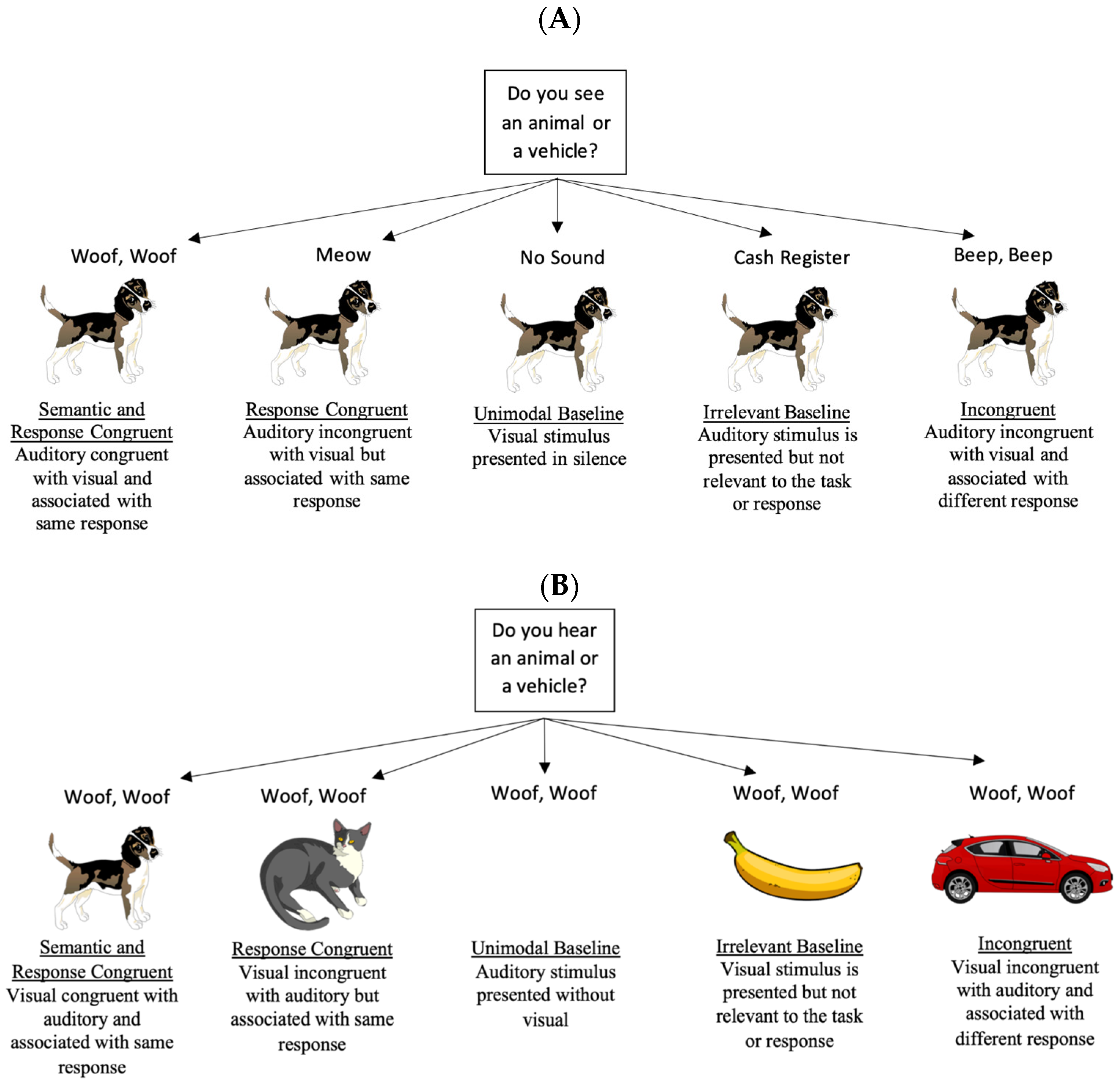

2.1.2. Stimuli and Design

2.1.3. Procedure

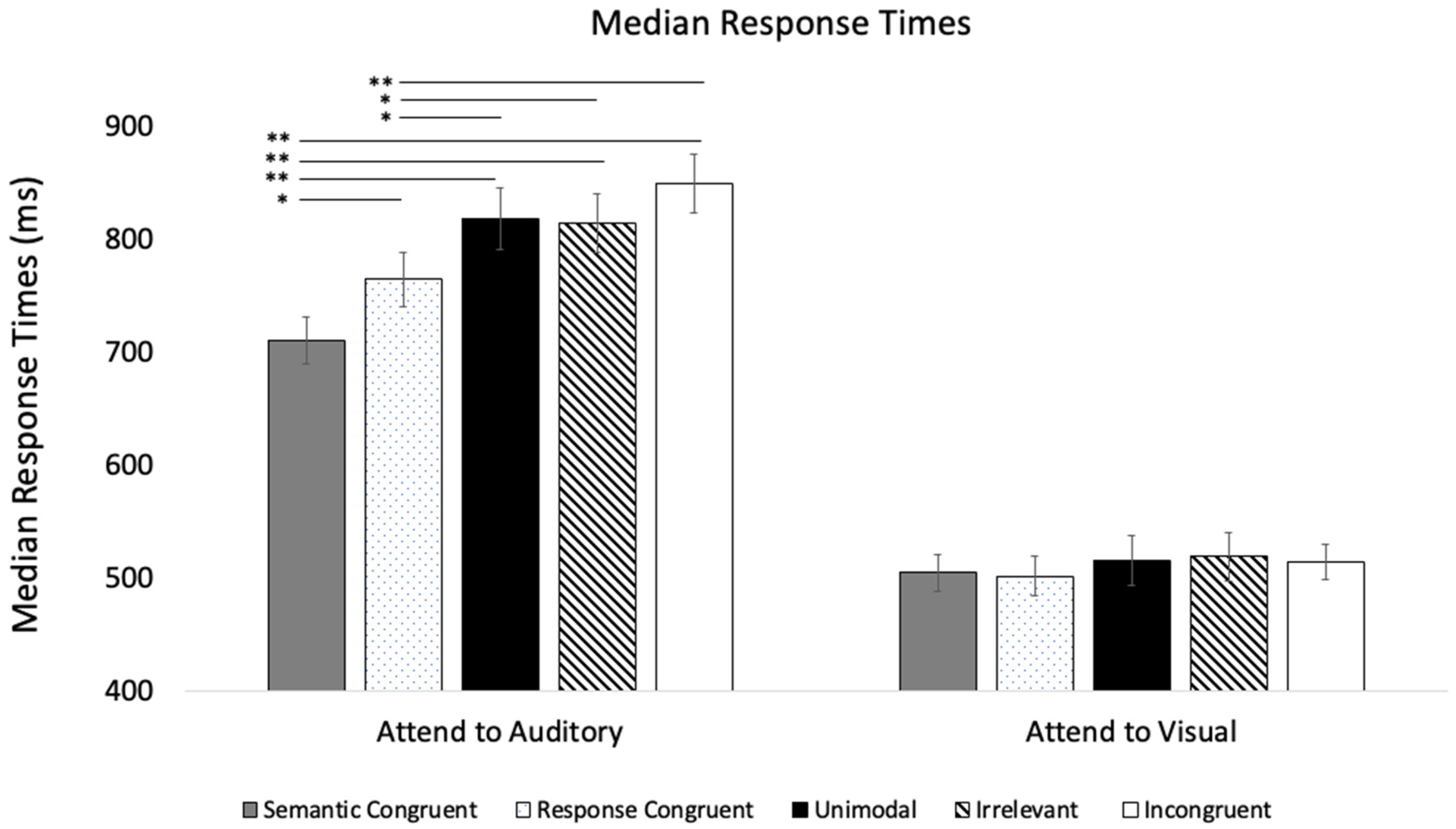

2.2. Results

2.3. Discussion

3. Experiment 2

3.1. Materials and Methods

3.1.1. Participants

3.1.2. Stimuli, Design, and Procedure

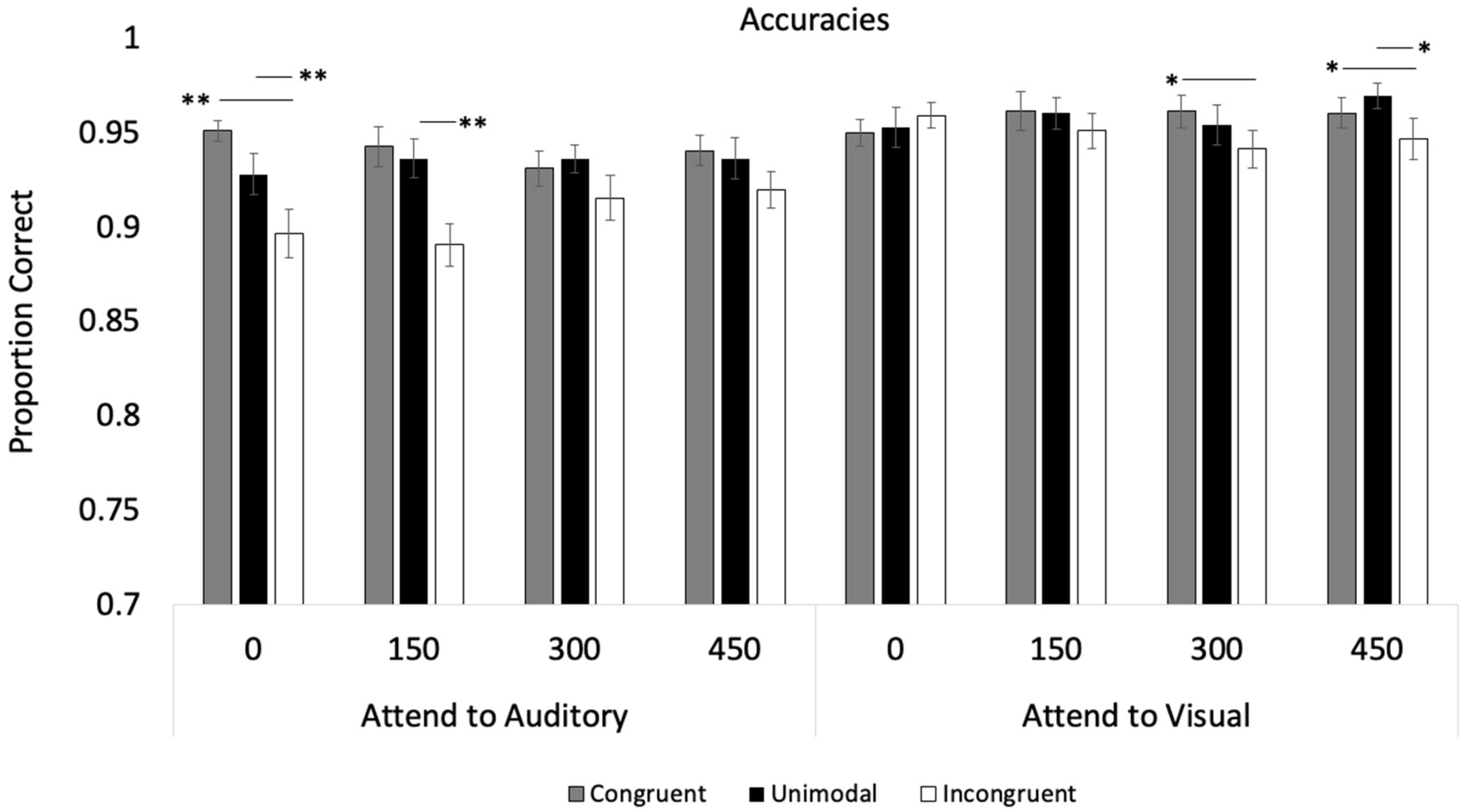

3.2. Results

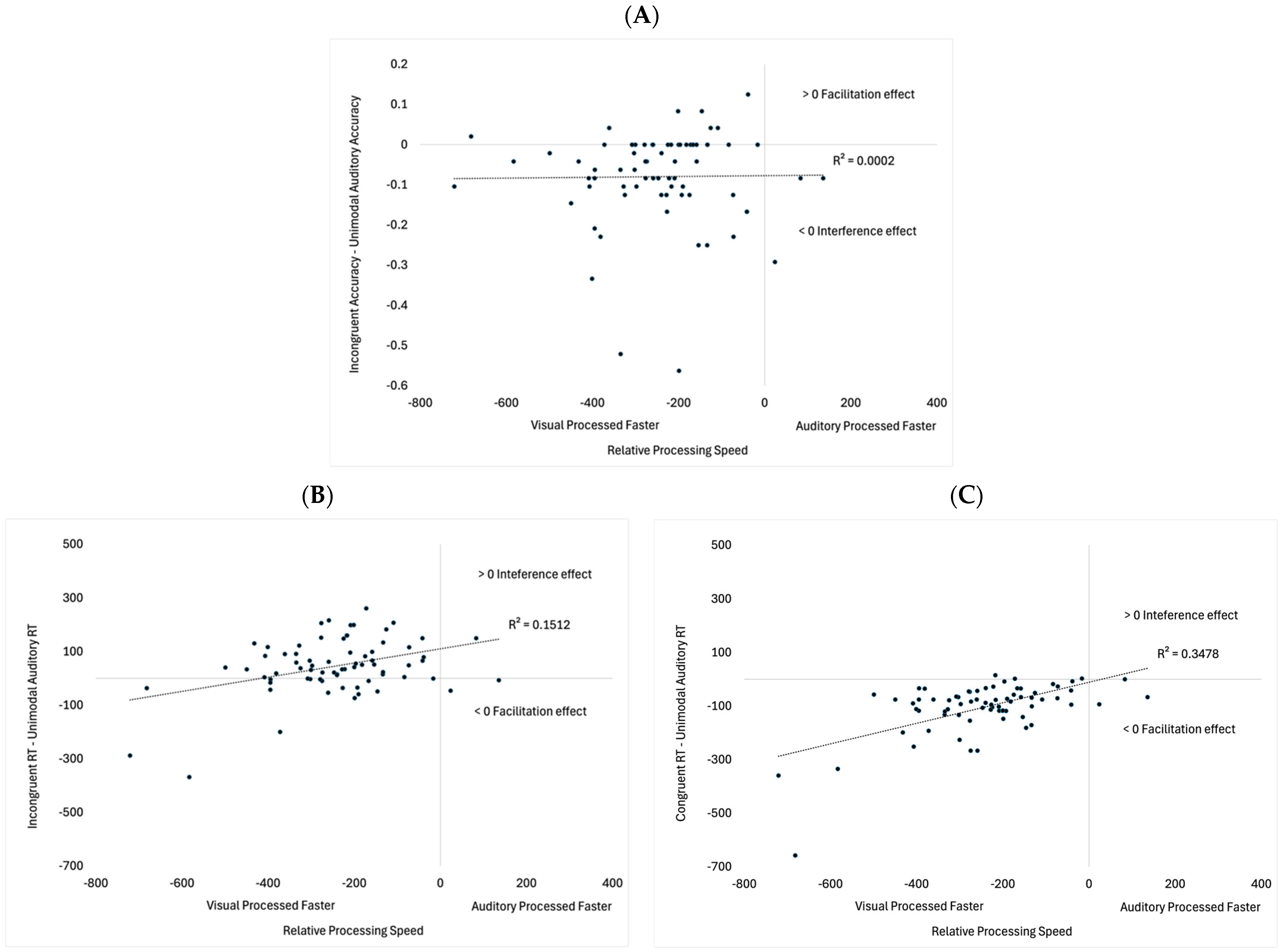

Exploratory Analyses

4. General Discussion

Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Stimuli | Visual | Auditory | Study Phase | Category | Experiment |
|---|---|---|---|---|---|
| Elephant | x | x | Practice | Animal | Exp 1 & 2 |
| Motorcycle | x | x | Practice | Vehicle | Exp 1 & 2 |
| Dog | x | x | Test | Animal | Exp 1 & 2 |
| Cat | x | x | Test | Animal | Exp 1 & 2 |
| Frog | x | x | Test | Animal | Exp 1 & 2 |
| Monkey | x | x | Test | Animal | Exp 1 & 2 |
| Car | x | x | Test | Vehicle | Exp 1 & 2 |
| Boat | x | x | Test | Vehicle | Exp 1 & 2 |
| Train | x | x | Test | Vehicle | Exp 1 & 2 |
| Bike | x | x | Test | Vehicle | Exp 1 & 2 |
| Violin | | x | Test | Irrelevant | Exp 1 |
| Cymbal | | x | Test | Irrelevant | Exp 1 |
| Bowling strike | | x | Test | Irrelevant | Exp 1 |
| Cash register | | x | Test | Irrelevant | Exp 1 |
| Bubbles | | x | Test | Irrelevant | Exp 1 |
| Hammer | | x | Test | Irrelevant | Exp 1 |
| Pinball | | x | Test | Irrelevant | Exp 1 |
| Sawing | | x | Test | Irrelevant | Exp 1 |
| Banana | x | | Test | Irrelevant | Exp 1 |
| Balloon | x | | Test | Irrelevant | Exp 1 |
| Comb | x | | Test | Irrelevant | Exp 1 |
| Clock | x | | Test | Irrelevant | Exp 1 |
| Broom | x | | Test | Irrelevant | Exp 1 |
| Watermelon | x | | Test | Irrelevant | Exp 1 |
| Scissors | x | | Test | Irrelevant | Exp 1 |
| Apple | x | | Test | Irrelevant | Exp 1 |
| Green circle | x | | Test | Attention Check | Exp 1 & 2 |
| Phone ring | | x | Test | Attention Check | Exp 2 |


| Primary Accuracy Analyses | F (df) | p | ηp² |
|---|---|---|---|
| Modality | F(1, 35) = 2.09 | 0.160 | 0.06 |
| Trial Type | F(2, 70) = 26.95 | < 0.001 | 0.44 |
| SOA | F(3, 105) = 0.86 | 0.466 | 0.02 |
| Modality × SOA | F(3, 105) = 0.72 | 0.541 | 0.02 |
| Modality × Trial Type | F(2, 70) = 3.26 | 0.044 | 0.09 |
| SOA × Trial Type | F(6, 210) = 0.58 | 0.749 | 0.02 |
| Modality × SOA × Trial Type | F(6, 210) = 4.18 | < 0.001 | 0.11 |
| Attend to Visual | | | |
| Trial Type | F(2, 70) = 3.49 | 0.036 | 0.09 |
| SOA | F(3, 105) = 0.49 | 0.691 | 0.01 |
| SOA × Trial Type | F(6, 210) = 1.31 | 0.254 | 0.04 |
| Attend to Auditory | | | |
| Trial Type | F(2, 70) = 18.33 | < 0.001 | 0.34 |
| SOA | F(3, 105) = 1.05 | 0.375 | 0.03 |
| SOA × Trial Type | F(6, 210) = 2.84 | 0.011 | 0.08 |
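The degrees of freedom reported above follow the pattern expected for a fully within-subjects design, in which each effect has df_effect equal to the product of (levels − 1) over its factors and df_error = df_effect × (N − 1). The sketch below reproduces them under assumptions that are inferred from the df themselves and are not stated in this section: 2 modality conditions, 3 trial types, 4 SOAs, and 36 participants. The function and constant names are illustrative.

```python
from itertools import combinations
from math import prod

# Assumed factor levels, inferred from the numerator df (levels - 1).
LEVELS = {"Modality": 2, "Trial Type": 3, "SOA": 4}
# Assumed sample size, inferred from an error df of 35 for a two-level factor.
N_PARTICIPANTS = 36


def within_subject_df(factors, levels=LEVELS, n=N_PARTICIPANTS):
    """Return (df_effect, df_error) for a repeated-measures effect."""
    df_effect = prod(levels[f] - 1 for f in factors)
    return df_effect, df_effect * (n - 1)


def all_effects(levels=LEVELS):
    """Yield every main effect and interaction as a tuple of factor names."""
    names = list(levels)
    for size in range(1, len(names) + 1):
        yield from combinations(names, size)


if __name__ == "__main__":
    for effect in all_effects():
        df_effect, df_error = within_subject_df(effect)
        print(f"{' × '.join(effect)}: F({df_effect}, {df_error})")
```

Under these assumptions every effect in the table, from F(1, 35) for Modality to F(6, 210) for the three-way interaction, has the df that the sketch prints.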

| Primary Response Time Analyses | F (df) | p | ηp² |
|---|---|---|---|
| Modality | F(1, 35) = 175.91 | < 0.001 | 0.83 |
| Trial Type | F(2, 70) = 50.75 | < 0.001 | 0.59 |
| SOA | F(3, 105) = 5.70 | < 0.001 | 0.14 |
| Modality × SOA | F(3, 105) = 6.57 | < 0.001 | 0.16 |
| Modality × Trial Type | F(2, 70) = 2.94 | 0.059 | 0.08 |
| SOA × Trial Type | F(6, 210) = 5.50 | < 0.001 | 0.14 |
| Modality × SOA × Trial Type | F(6, 210) = 13.01 | < 0.001 | 0.27 |
| Attend to Visual | | | |
| Trial Type | F(2, 70) = 27.56 | < 0.001 | 0.44 |
| SOA | F(3, 105) = 15.17 | < 0.001 | 0.30 |
| SOA × Trial Type | F(6, 210) = 6.96 | < 0.001 | 0.17 |
| Attend to Auditory | | | |
| Trial Type | F(2, 70) = 24.36 | < 0.001 | 0.41 |
| SOA | F(3, 105) = 0.37 | 0.772 | 0.01 |
| SOA × Trial Type | F(6, 210) = 11.33 | < 0.001 | 0.24 |
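For reference, partial eta squared can be recovered from a reported F ratio and its degrees of freedom as ηp² = (F × df_effect) / (F × df_effect + df_error). The minimal sketch below applies that identity to three rows of the response-time table. The function name and the choice of rows are illustrative and are not part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    weighted_f = f_value * df_effect
    return weighted_f / (weighted_f + df_error)


# Rows copied from the response-time table: (label, F, df_effect, df_error).
ROWS = [
    ("Trial Type", 50.75, 2, 70),
    ("SOA", 5.70, 3, 105),
    ("Modality × SOA × Trial Type", 13.01, 6, 210),
]

if __name__ == "__main__":
    for label, f_value, df_effect, df_error in ROWS:
        eta = partial_eta_squared(f_value, df_effect, df_error)
        print(f"{label}: {eta:.2f}")
    # Trial Type: 0.59
    # SOA: 0.14
    # Modality × SOA × Trial Type: 0.27
```

The same function can be used to cross-check any other row, since the identity depends only on the three reported quantities.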

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Geffen, S.; Beck, T.; Robinson, C.W. Modulating Multisensory Processing: Interactions Between Semantic Congruence and Temporal Synchrony. Vision 2025, 9, 74. https://doi.org/10.3390/vision9030074