Recognition of Studied Words in Perceptual Disfluent Sans Forgetica Font

Abstract

1. Introduction

1.1. What Makes SF Special

([2] Experiment 2; grey scale: [12,13]), ([2] Experiment 2; [14] Experiment 1; [5] Experiment 2), ([15] Experiment 1), [16], and (grey scale: [17]). See Weissgerber and Reinhard [10] for a detailed table of manipulations and effects from the recent literature.

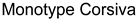

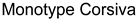

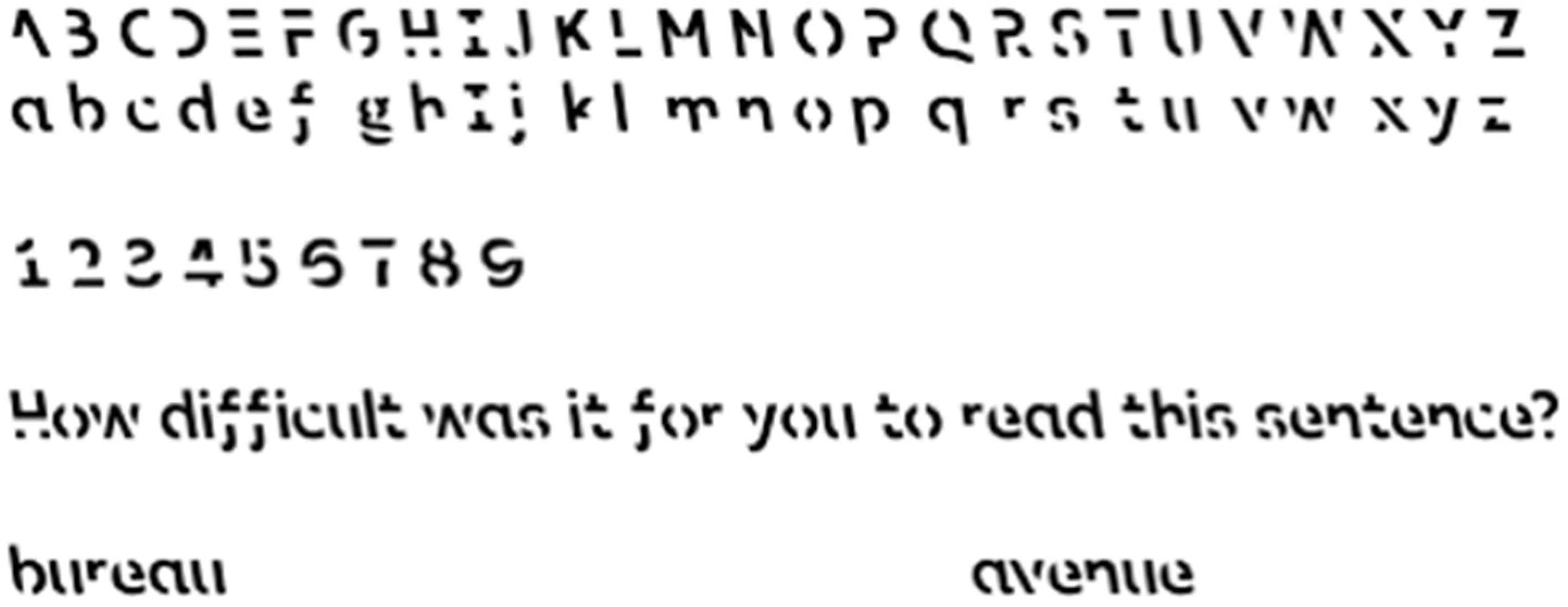

These other typefaces create perceptual disfluency by being unfamiliar to read or by making individual letters hard to parse (through either narrow spacing or conjoined lettering, as in cursive typefaces). In addition, SF uses fragmented letters: the same slashes of omission are used for a given letter, but there is no regular pattern across letters. Moreover, SF’s letters are back-slanted, whereas letters are front-slanted in italics and in most disfluent typefaces (see Figure 2, left panel).

1.2. Existing Research on SF

1.3. Present Study

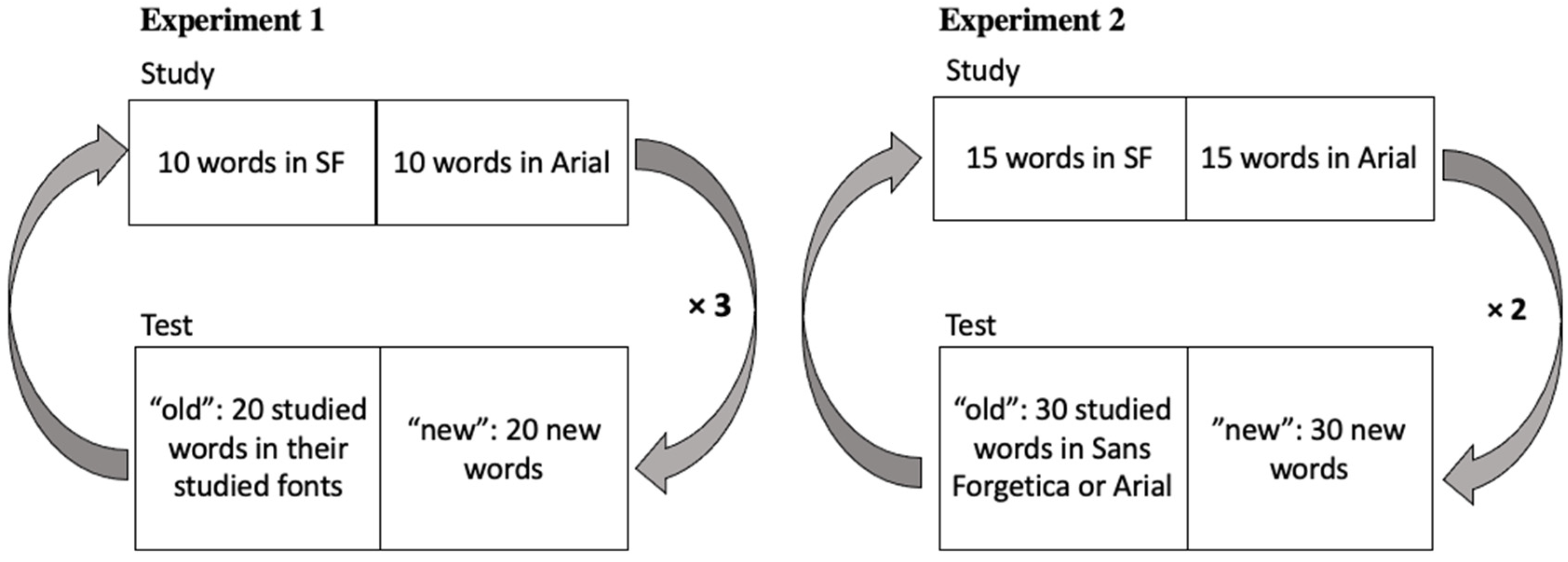

2. Experiment 1: Memory for Words Using Transfer-Appropriate Processing

2.1. Method

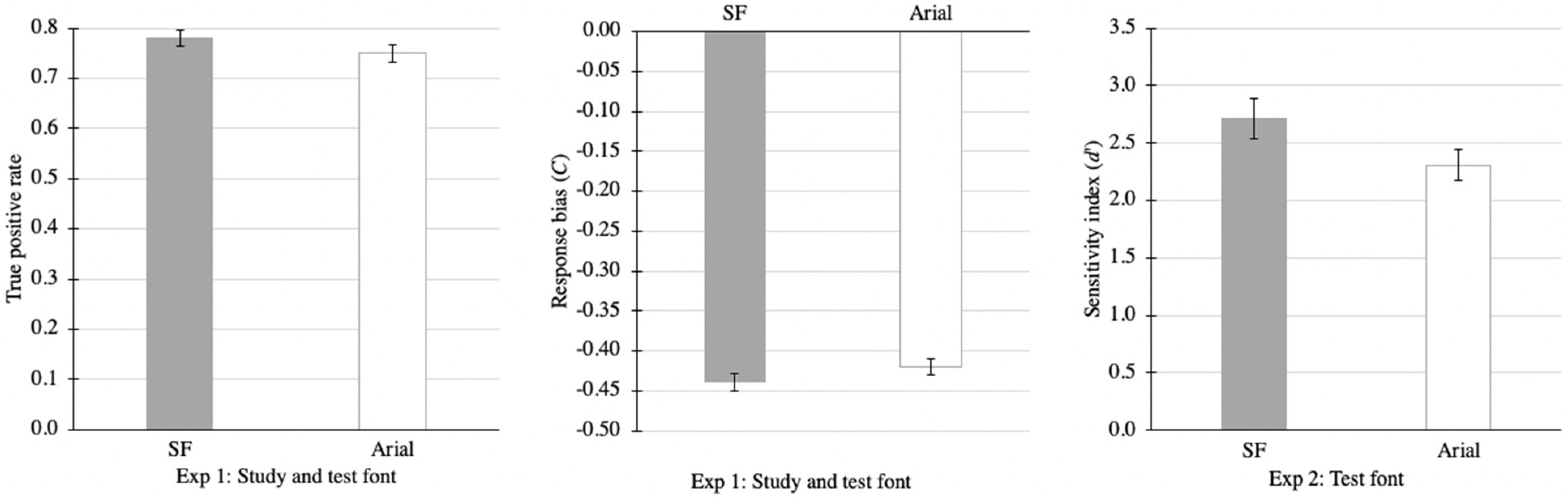

2.2. Results

3. Experiment 2: Memory for Words When Varying Study and Test Fonts, Potential for Contextual Reinstatement?

3.1. Method

3.2. Results

4. General Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Bjork, R.A. Memory and meta-memory considerations in the training of human beings. In Metacognition: Knowing about Knowing; Metcalfe, J., Shimamura, A., Eds.; MIT Press: Cambridge, MA, USA, 1994; pp. 185–205.

2. Diemand-Yauman, C.; Oppenheimer, D.M.; Vaughan, E.B. Fortune favors the bold (and the italicized): Effects of disfluency on educational outcomes. Cognition 2011, 118, 111–115.

3. Geller, J.; Still, M.L.; Dark, V.J.; Carpenter, S.K. Would disfluency by any other name still be disfluent? Examining the disfluency effect with cursive handwriting. Mem. Cogn. 2018, 46, 1109–1126.

4. Rosner, T.M.; Davis, H.; Milliken, B. Perceptual blurring and recognition memory: A desirable difficulty effect revealed. Acta Psychol. 2015, 160, 11–22.

5. Seufert, T.; Wagner, F.; Westphal, T. The effects of different levels of dis-fluency on learning performance and cognitive load. Instr. Sci. 2017, 45, 221–238.

6. Halamish, V. Can very small font size enhance memory? Mem. Cogn. 2018, 46, 979–993.

7. Sungkhasettee, V.W.; Friedman, M.C.; Castel, A.D. Memory and metamemory for inverted words: Illusions of competency and desirable difficulties. Psychon. Bull. Rev. 2011, 18, 973–978.

8. Besken, M.; Mulligan, N.W. Easily perceived, easily remembered? Perceptual interference produces a double dissociation between metamemory and memory performance. Mem. Cogn. 2013, 41, 897–903.

9. Xie, H.; Zhou, Z.; Liu, Q. Null effects of perceptual disfluency on learning outcomes in a text-based educational context: A meta-analysis. Educ. Psychol. Rev. 2018, 30, 745–771.

10. Weissgerber, S.C.; Reinhard, M. Is disfluency desirable for learning? Learn. Instr. 2017, 49, 199–217.

11. Hirshman, E.; Trembath, D.; Mulligan, N. Theoretical implications of the mnemonic benefits of perceptual interference. J. Exp. Psychol. Learn. Mem. Cogn. 1994, 20, 608–620.

12. Eitel, A.; Kühl, T.; Scheiter, K.; Gerjets, P. Disfluency meets cognitive load in multimedia learning: Does harder-to-read mean better-to-understand? Appl. Cogn. Psychol. 2014, 28, 488–501.

13. Lehmann, J.; Goussios, C.; Seufert, T. Working memory capacity and disfluency effect: An aptitude-treatment-interaction study. Metacognition Learn. 2016, 11, 89–105.

14. French, M.M.J.; Blood, A.; Bright, N.D.; Futak, D.; Grohmann, M.J.; Hasthorpe, A.; Heritage, J.; Poland, R.L.; Reece, S.; Tabor, J. Changing fonts in education: How the benefits vary with ability and dyslexia. J. Educ. Res. 2013, 106, 301–304.

15. Eitel, A.; Kühl, T. Effects of disfluency and test expectancy on learning with text. Metacognition Learn. 2016, 11, 107–121.

16. Pieger, E.; Mengelkamp, C.; Bannert, M. Metacognitive judgments and disfluency—Does disfluency lead to more accurate judgments, better control, and better performance? Learn. Instr. 2016, 44, 31–40.

17. Rummer, R.; Schweppe, J.; Schwede, A. Fortune is fickle: Null-effects of disfluency on learning outcomes. Metacognition Learn. 2016, 11, 57–60.

18. Telford, T. Researchers create new font designed to boost your memory. The Washington Post, 5 October 2018. Available online: https://www.washingtonpost.com/business/2018/10/05/introducing-sans-forgetica-font-designed-boost-your-memory/ (accessed on 2 April 2021).

19. Simon, S. Sans Forgetica: A Font to Remember. Available online: https://www.npr.org/2018/10/06/655121384/sans-forgetica-a-font-to-remember (accessed on 2 April 2021).

20. Bregman, A.S. Asking the “what for” question in auditory perception. In Perceptual Organization; Kubovy, M., Pomerantz, J.R., Eds.; Erlbaum: Hillsdale, NJ, USA, 1981.

21. Zöllner, F. Ueber eine neue Art von Pseudoskopie und ihre Beziehungen zu den von Plateau und Oppel beschriebenen Bewegungsphaenomenen. Ann. Phys. 1860, 186, 500–523.

22. Kanizsa, G. Organization in Vision: Essays on Gestalt Perception; Praeger: New York, NY, USA, 1979.

23. Wetzler, E.L.; Pyke, A.A.; Werner, A. Sans Forgetica is not the “font” of knowledge: Disfluent fonts are not always desirable difficulties. SAGE Open 2021, 11, 1–10.

24. Geller, J.; Peterson, D. Is this going to be on the test? Test expectancy moderates the disfluency effect with Sans Forgetica. J. Exp. Psychol. Learn. Mem. Cogn. 2021, 47, 1924–1938.

25. Cui, L.; Liu, J. Does Sans Forgetica font facilitate word memory? In Proceedings of the 44th Annual Meeting of the Cognitive Science Society, Toronto, ON, Canada, 27–30 July 2022; Culbertson, J., Perfors, A., Rabagliati, H., Ramenzoni, V., Eds.; eScholarship University of California: Los Angeles, CA, USA, 2022; p. 3748.

26. Hu, X.; Yang, C.; Luo, L. Retrospective confidence rating about memory performance is affected by both retrieval fluency and non-decision time. Metacognition Learn. 2022, 17, 651–681.

27. Casumbal, K.J.S.; Chan, C.K.T.; de Guzman, F.Y.V.; Fernandez, N.V.G.; Ng, A.V.N.; So, M.C. The Effects of Low-Fidelity Music and Font Style on Recall; Ateneo de Manila University: Metro Manila, Philippines, 2022.

28. Eskenazi, M.A.; Nix, B. Individual differences in the desirable difficulty effect during lexical acquisition. J. Exp. Psychol. Learn. Mem. Cogn. 2020, 47, 45–52.

29. Maxwell, N.P.; Perry, T.; Huff, M.J. Perceptually fluent features of study words do not inflate judgements of learning: Evidence from font size, highlights, and Sans Forgetica Font Type. Metacognition Learn. 2022, 17, 293–319.

30. Taylor, A.; Sanson, M.; Burnell, R.; Wade, K.A.; Garry, M. Disfluent difficulties are not desirable difficulties: The (lack of) effect of Sans Forgetica on memory. Memory 2020, 28, 850–857.

31. Geller, J.; Davis, S.D.; Peterson, D.J. Sans Forgetica is not desirable for learning. Memory 2020, 28, 957–967.

32. Buechel, L.L. Disfluency in English language teaching? Eur. J. Foreign Lang. Teach. 2020, 5, 32–44.

33. Dykes, R.; Hauca, M. Sans Forgetica: Typography’s effect on ESL/EFL reading comprehension. In Proceedings of the 27th Korea TESOL International Conference, Seoul, Korea, 12–13 October 2019; pp. 53–60.

34. Cui, L.; Harrison, L.; Liu, J. Sans Forgetica Does Not Improve Cognitive Reflection Test Performance despite Differences in Legibility. Available online: psyarxiv.com/qdxw3 (accessed on 13 August 2022).

35. Cushing, C.; Bodner, G.E. Reading aloud improves proofreading (but using Sans Forgetica font does not). J. Appl. Res. Mem. Cogn. 2022.

36. Hirshman, E.; Mulligan, N. Perceptual interference improves explicit memory but does not enhance data-driven processing. J. Exp. Psychol. Learn. Mem. Cogn. 1991, 17, 507–513.

37. Mulligan, N.W. The effects of perceptual interference at encoding on implicit memory, explicit memory, and memory for source. J. Exp. Psychol. Learn. Mem. Cogn. 1996, 22, 1067–1087.

38. Nairne, J.S. The mnemonic value of perceptual identification. J. Exp. Psychol. Learn. Mem. Cogn. 1988, 14, 248–255.

39. McDaniel, M.A.; Butler, A.C. A contextual framework for understanding when difficulties are desirable. In Successful Remembering and Successful Forgetting: A Festschrift in Honor of Robert A. Bjork; Benjamin, A.S., Ed.; Psychology Press: London, UK, 2010.

40. Rhodes, M.G.; Castel, A.D. Memory predictions are influenced by perceptual information: Evidence for metacognitive illusions. J. Exp. Psychol. Gen. 2008, 137, 615–625.

41. Balota, D.A.; Yap, M.J.; Cortese, M.J.; Hutchison, K.A.; Kessler, B.; Loftis, B.; Neely, J.H.; Nelson, D.L.; Simpson, G.B.; Treiman, R. The English lexicon project. Behav. Res. Methods 2007, 39, 445–459.

42. Wickens, T. Elementary Signal Detection Theory; Oxford University Press: Oxford, UK; New York, NY, USA, 2002.

43. Cui, L.; Liu, J.; Liu, Z. Sans Forgetica Font May Help Memory for Words but Not for Numbers, nor Does It Help Analytical Thinking. Available online: https://psyarxiv.com/a7n2r/ (accessed on 23 April 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cui, L.; Liu, J. Recognition of Studied Words in Perceptual Disfluent Sans Forgetica Font. Vision 2022, 6, 52. https://doi.org/10.3390/vision6030052
