No Advantage for Separating Overt and Covert Attention in Visual Search

Abstract

1. Introduction

1.1. Overt and Covert Attention

1.2. Untethering Overt and Covert Attention

1.3. Current Aims

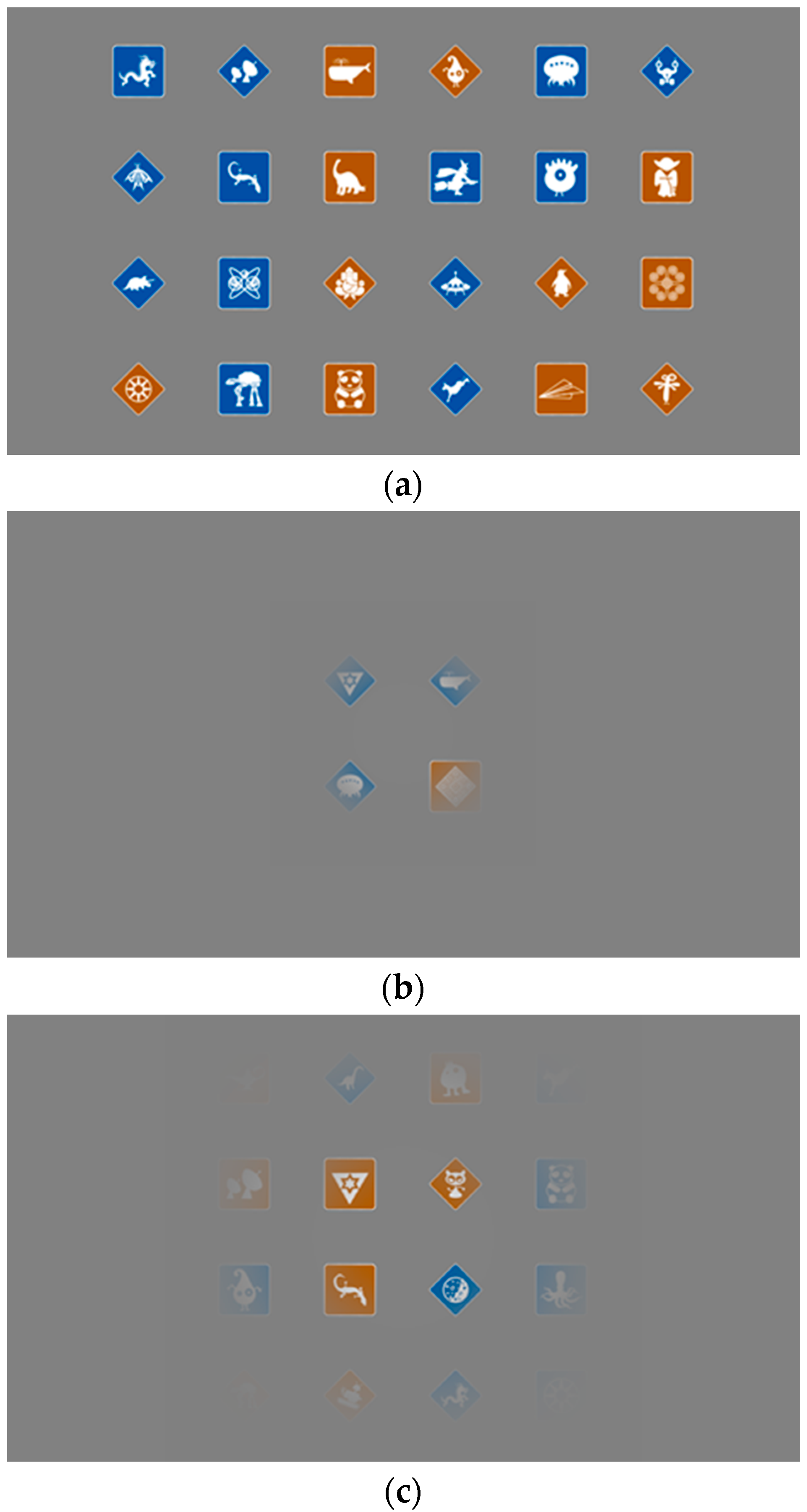

2. Methods

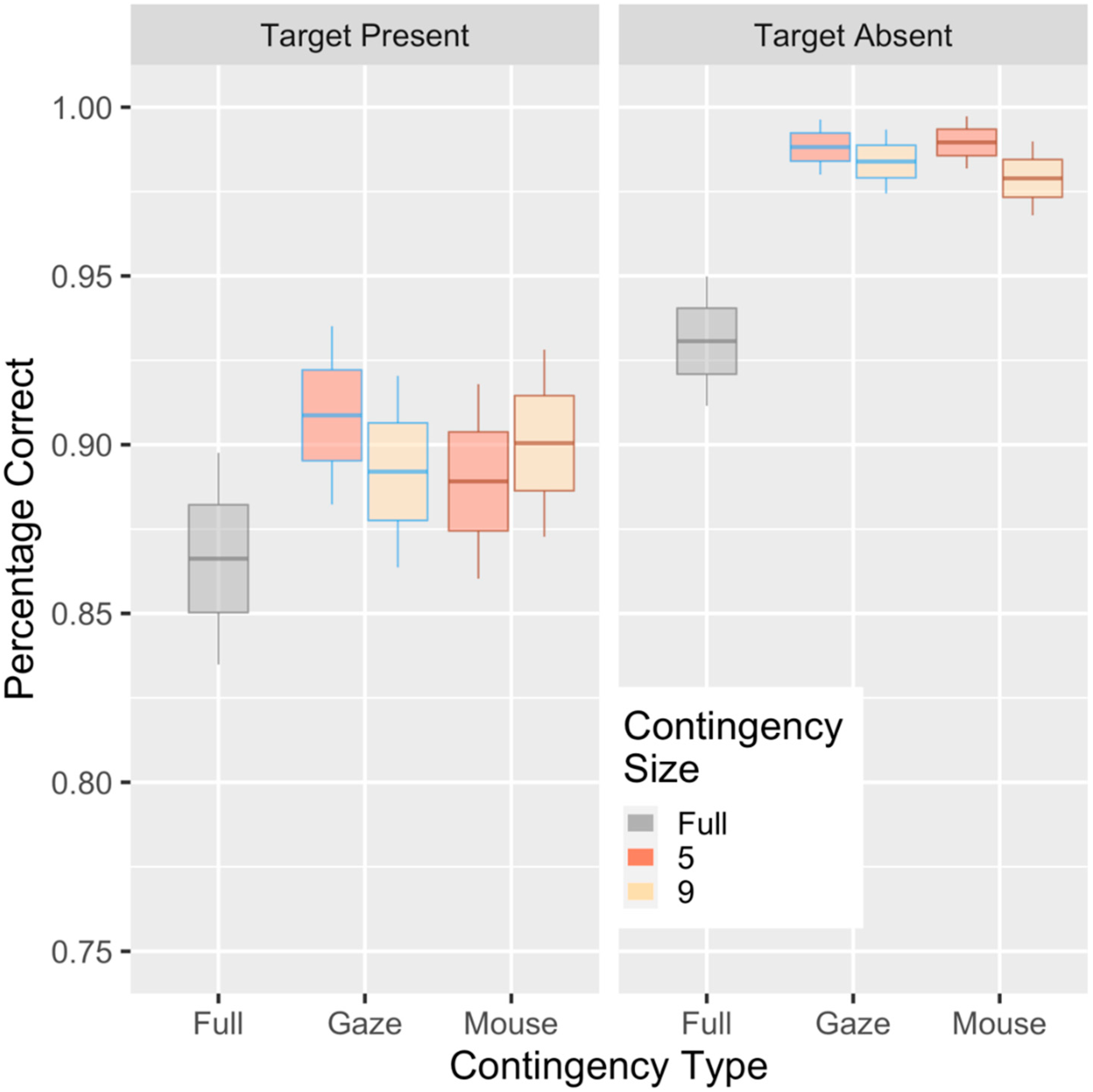

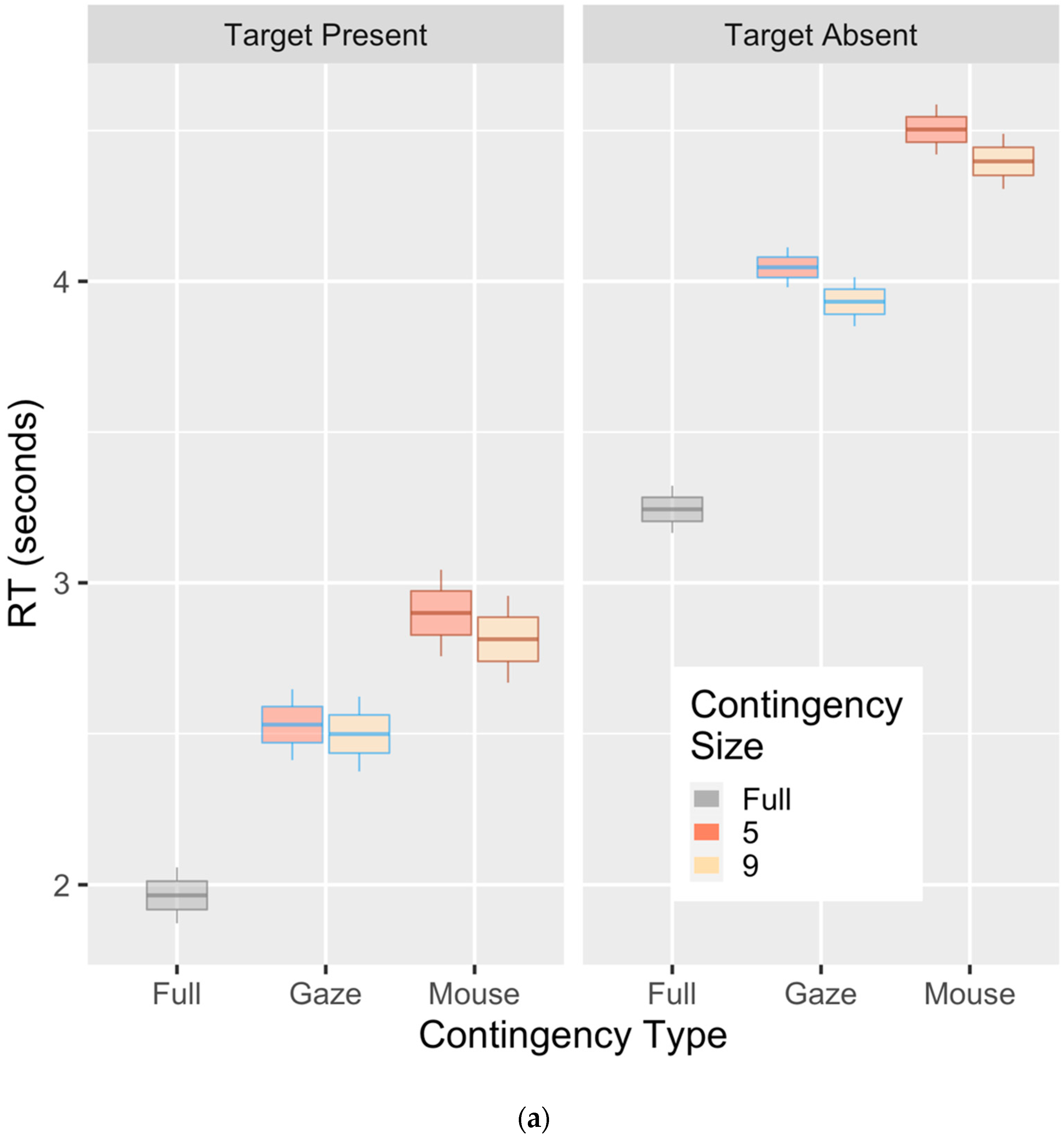

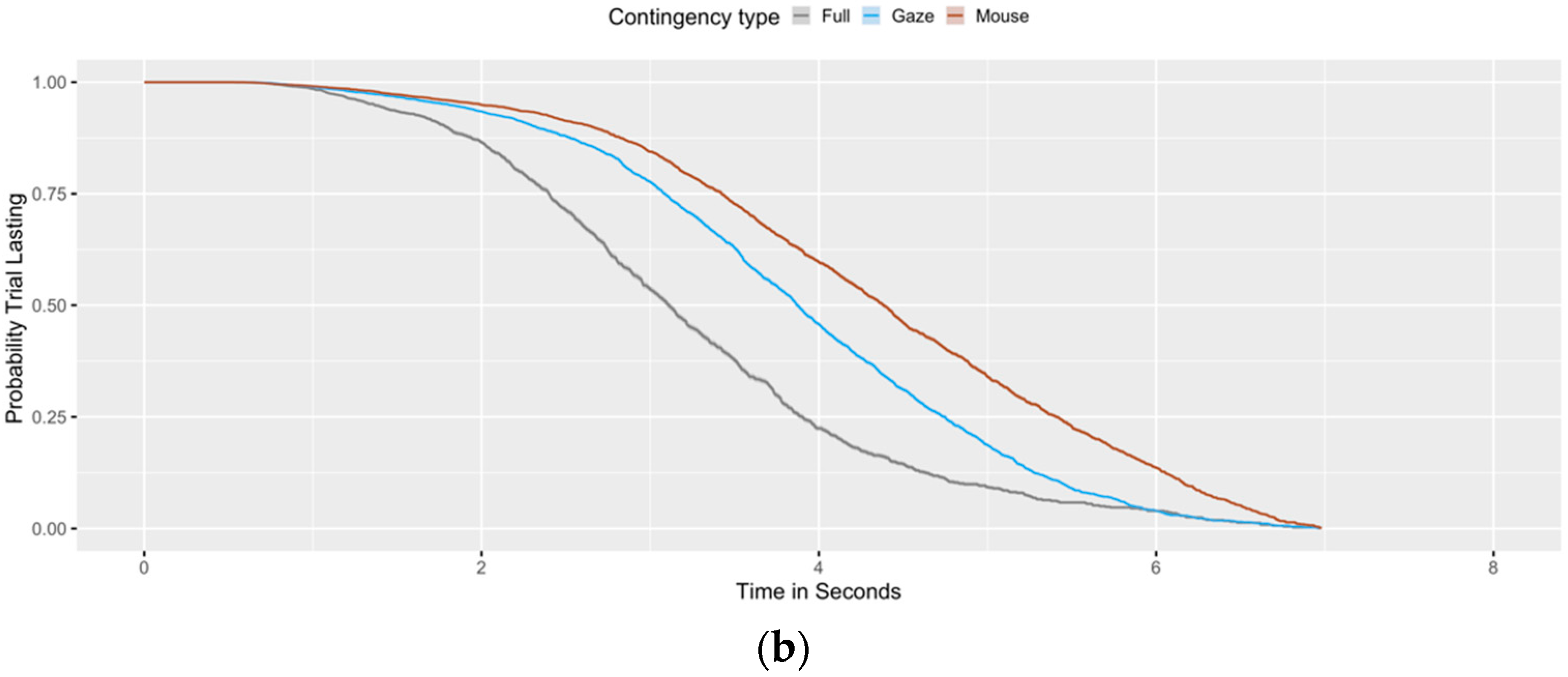

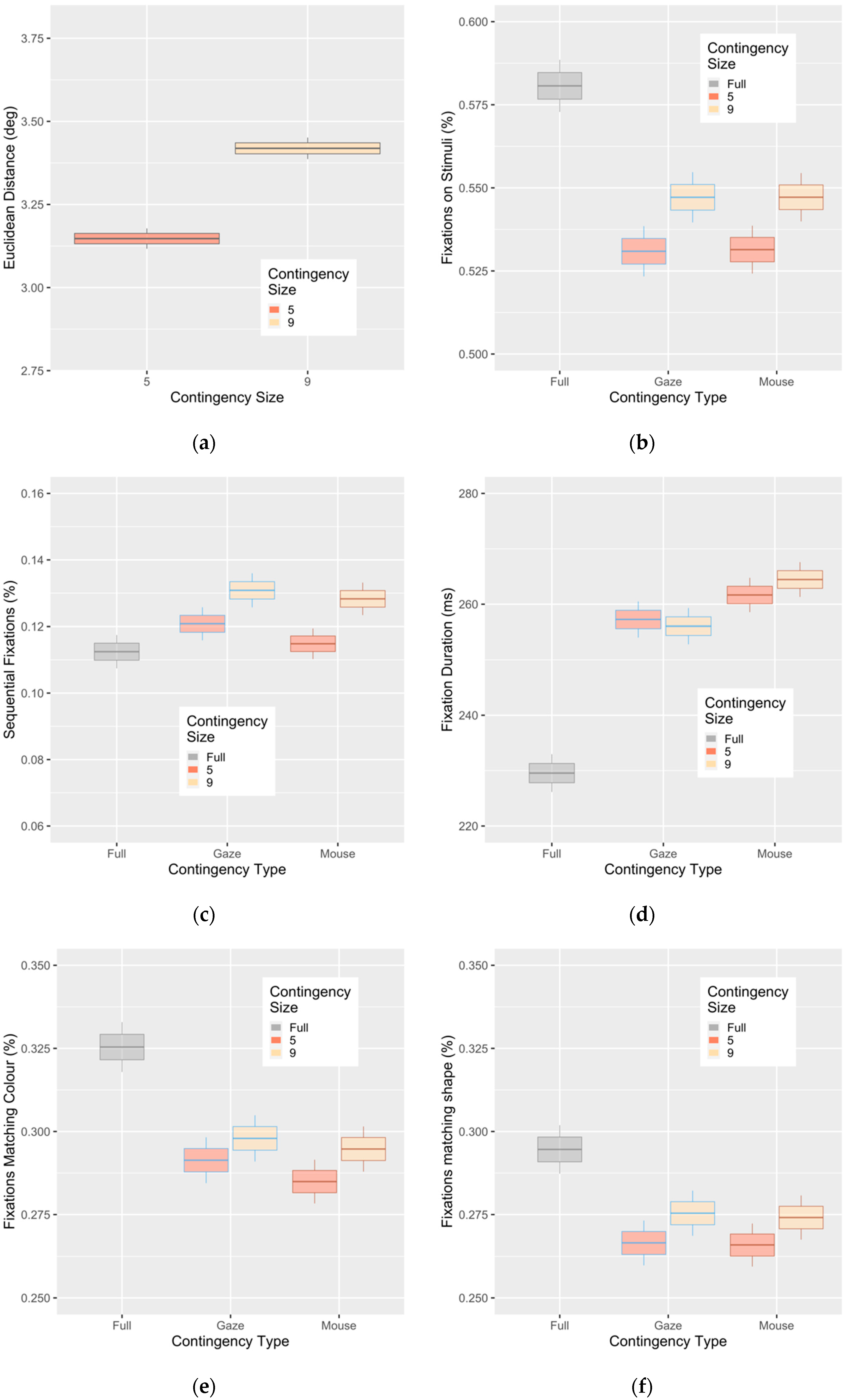

3. Results

Evidence of Untethered Covert Attention

4. Discussion

Author Contributions

Funding

Conflicts of Interest

Data Availability

Appendix A

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

MacInnes, W.J.; Jóhannesson, Ó.I.; Chetverikov, A.; Kristjánsson, Á. No Advantage for Separating Overt and Covert Attention in Visual Search. Vision 2020, 4, 28. https://doi.org/10.3390/vision4020028
