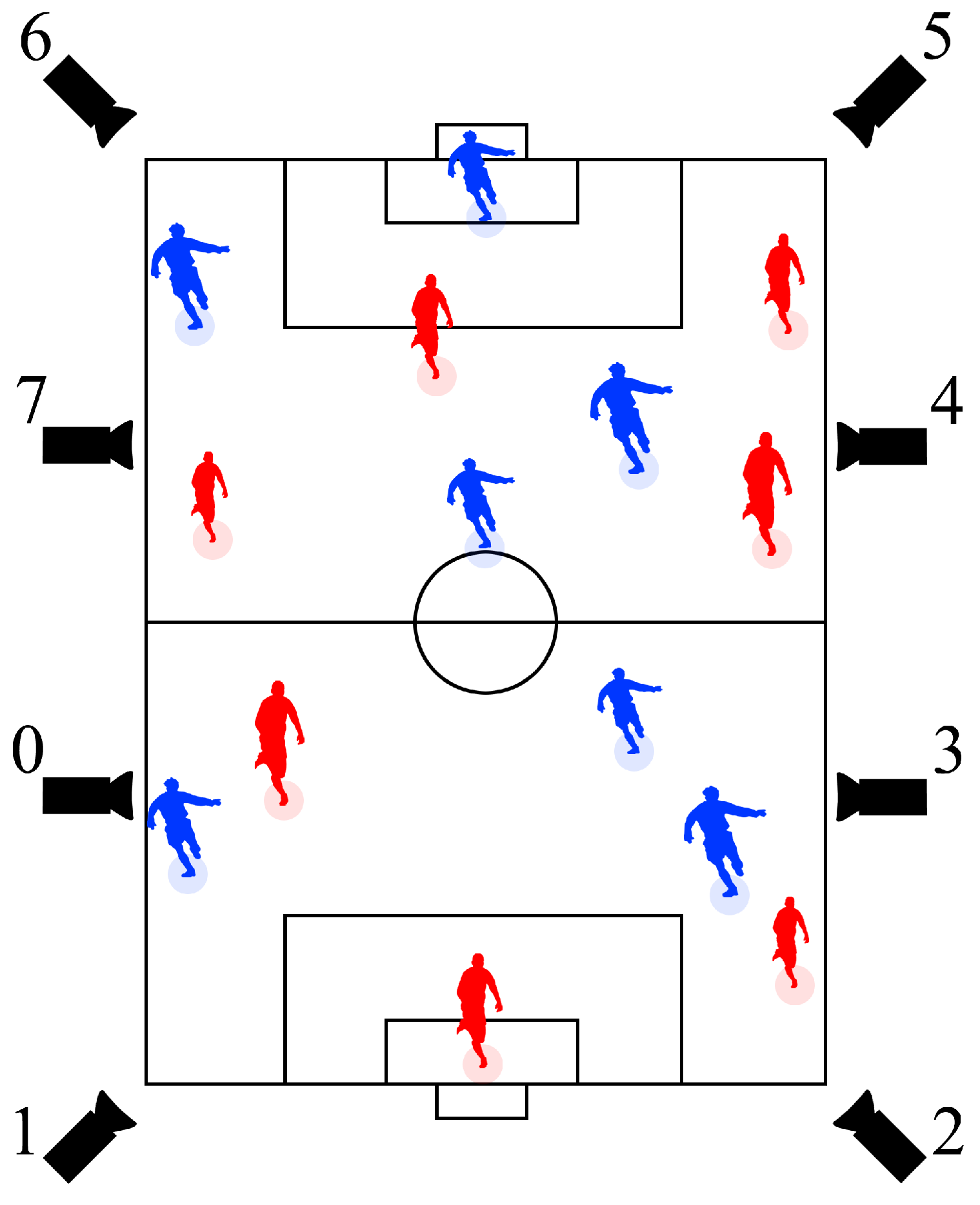

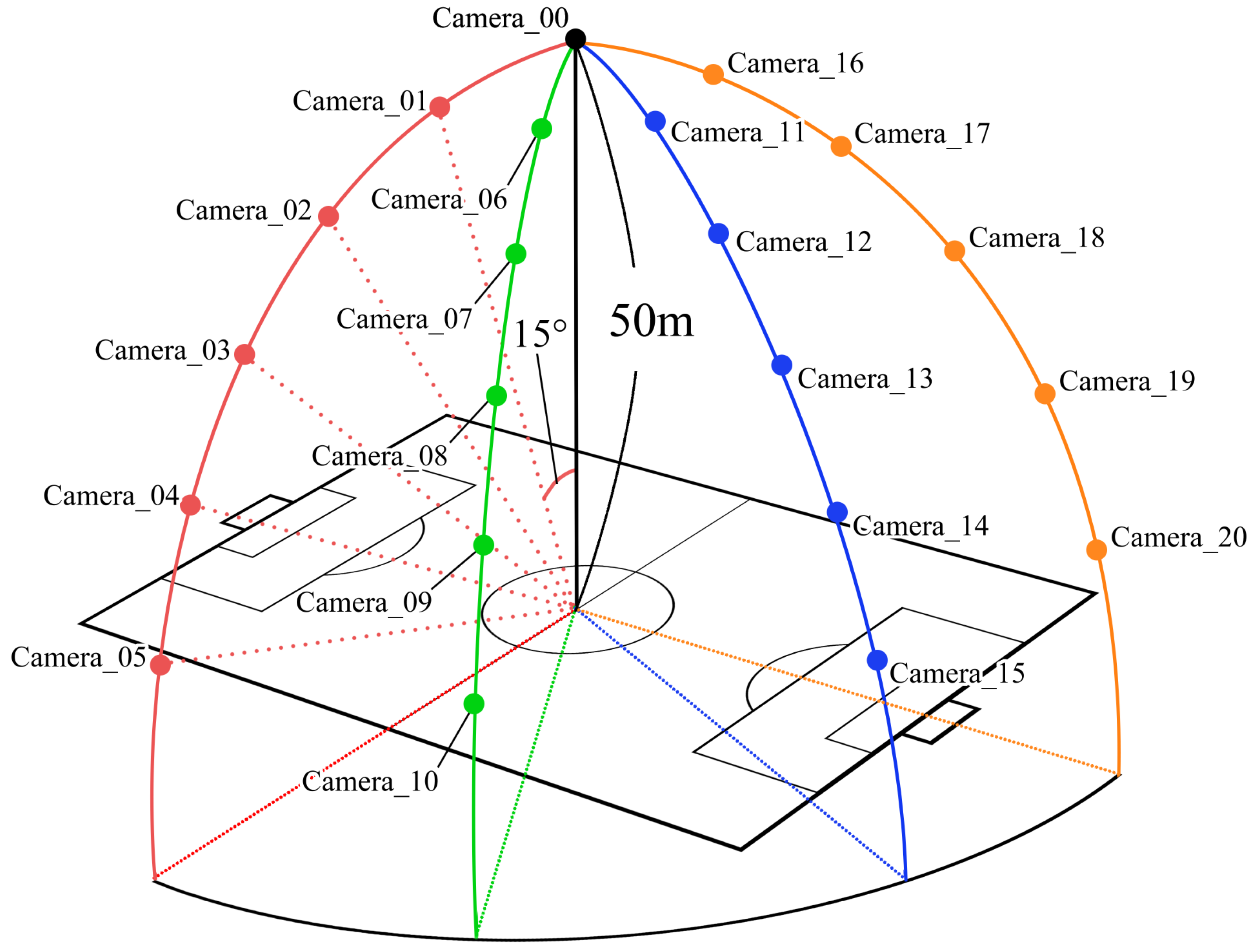

Figure 1.

Camera locations.

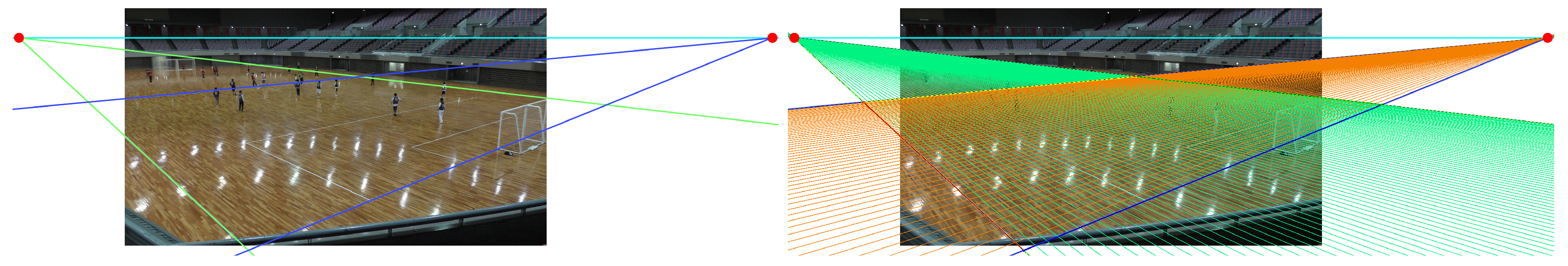

Figure 2.

Example of a captured image.

Figure 3.

The soccer field in the captured image can be divided into equal parts by lines drawn from two vanishing points. The vanishing points were obtained by assuming that the field is rectangular. By combining these vanishing points with the perspective technique, the field could be divided into an arbitrary number of sections. However, the division is only approximate.
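The construction in Figure 3 can be illustrated with a minimal sketch. It assumes an ideal pinhole camera with no lens distortion and known image positions of the four field corners, and it is not necessarily the authors' exact procedure. Each vanishing point is the intersection of the images of two parallel field edges, computed with homogeneous cross products. The equal-division points along one edge then follow from the one-dimensional projective map fixed by the edge's two endpoints and its vanishing point. The helper names (`vanishing_point`, `divide_side`) and the synthetic corner coordinates are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def cross(u: Vec3, v: Vec3) -> Vec3:
    """3D cross product: joins points / intersects lines in homogeneous coordinates."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def line_through(p: Point, q: Point) -> Vec3:
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def intersect(l1: Vec3, l2: Vec3) -> Optional[Point]:
    x, y, w = cross(l1, l2)
    if abs(w) < 1e-12:
        return None  # lines are parallel in the image: vanishing point at infinity
    return (x / w, y / w)


def vanishing_point(a: Point, b: Point, c: Point, d: Point) -> Optional[Point]:
    """Vanishing point of image segments ab and cd (assumed images of parallel field edges)."""
    return intersect(line_through(a, b), line_through(c, d))


def divide_side(a: Point, b: Point, vp: Optional[Point], n: int) -> List[Point]:
    """Image points that split the real-world edge AB into n equal parts."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    ux, uy = dx / length, dy / length
    points = []
    for k in range(n + 1):
        t = k / n  # fraction of the real-world edge length
        if vp is None:
            s = length * t  # no foreshortening along this edge
        else:
            # signed distance from A to the vanishing point, measured along AB
            v = (vp[0] - a[0]) * ux + (vp[1] - a[1]) * uy
            # 1D projective map with s(0) = 0, s(1) = length, s(infinity) = v
            s = v * t / (t + v / length - 1.0)
        points.append((a[0] + s * ux, a[1] + s * uy))
    return points


# Synthetic 4 x 10 field imaged by x' = x / (0.1 y + 1), y' = y / (0.1 y + 1).
# Corner order: near-left, near-right, far-right, far-left.
corners = [(0.0, 0.0), (4.0, 0.0), (2.0, 5.0), (0.0, 5.0)]
vp = vanishing_point(corners[0], corners[3], corners[1], corners[2])
print(vp)  # (0.0, 10.0)
print(divide_side(corners[0], corners[3], vp, 2))
```

In this synthetic example, the midpoint of the far-running edge lands at y ≈ 3.33 in the image rather than at 2.5. This is the foreshortening that the vanishing point accounts for.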

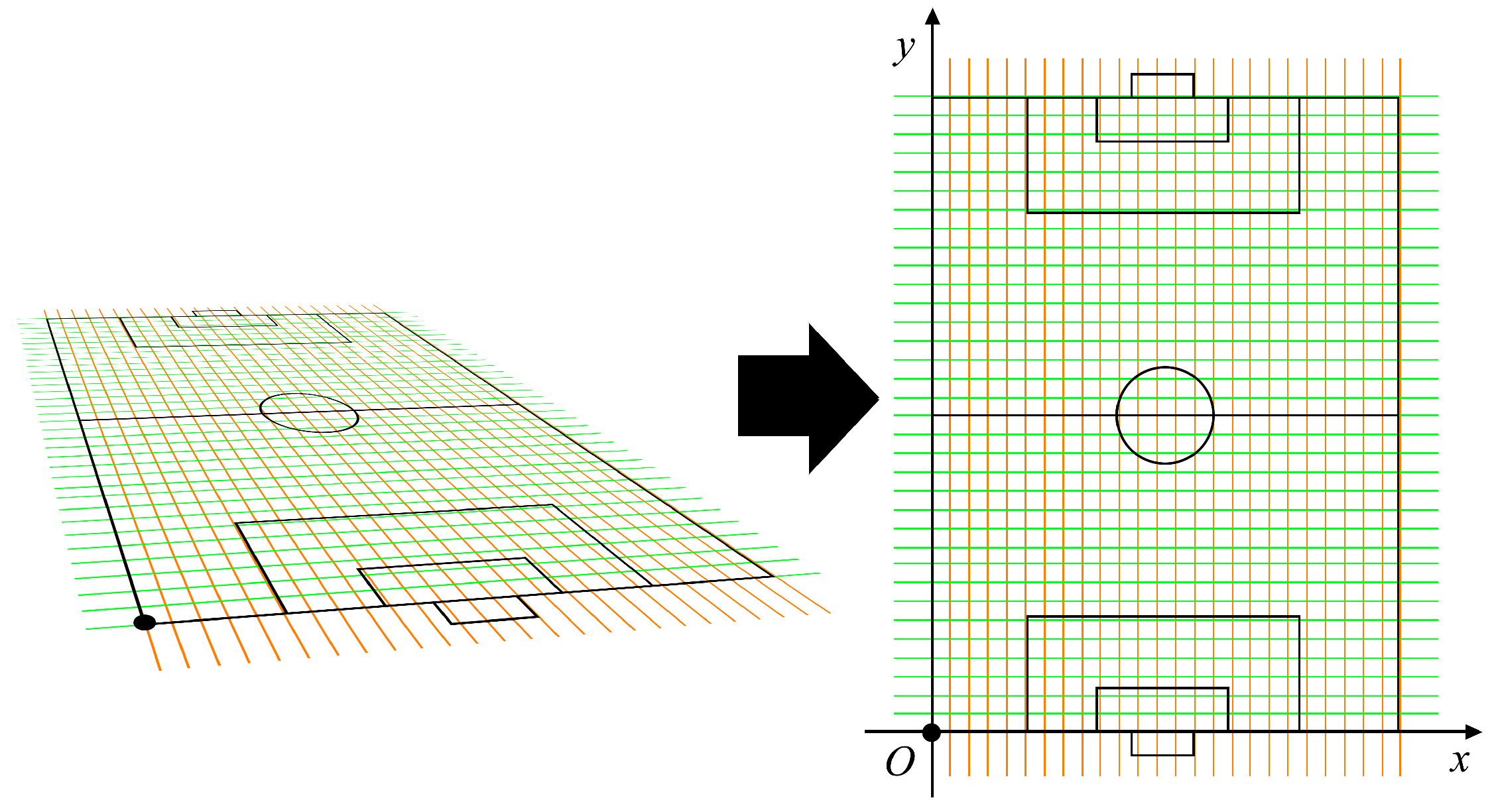

Figure 4.

Overview of the coordinate transform. The coordinate system on the right is orthogonal, with the black dot as its origin. The short side of the soccer field is the x-axis, and the long side is the y-axis. Because each coordinate in the left space corresponds to a coordinate in the right space, a player's coordinates in the right space can be obtained directly from that player's coordinates in the left space.
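The left-to-right correspondence in Figure 4 can be illustrated with a planar homography. This is a standard, swapped-in technique and may differ from how the authors actually built the mapping. The sketch estimates the homography from the four field corners with the direct linear transform, solving the resulting 8×8 system by Gaussian elimination. It then maps an image point, for example a player's foot point, into field coordinates. Those coordinates have their origin at a corner, with x along the short side and y along the long side. The function names, the choice of foot point, and the synthetic corner positions are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography (h33 fixed to 1) mapping four src points onto four dst points."""
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def to_field(hm: Matrix, p: Point) -> Point:
    """Map an image point (e.g. a player's foot point) to field coordinates."""
    x, y = p
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]
    return ((hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w,
            (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w)


# Synthetic image positions of the field corners and their field coordinates:
# origin at one corner, x along the short side (width 4), y along the long side (length 10).
image_corners = [(0.0, 0.0), (4.0, 0.0), (2.0, 5.0), (0.0, 5.0)]
field_corners = [(0.0, 0.0), (4.0, 0.0), (4.0, 10.0), (0.0, 10.0)]
hm = homography(image_corners, field_corners)
print(to_field(hm, (4.0 / 3.0, 10.0 / 3.0)))  # approximately the field centre (2, 5)
```

With real footage, the field corners would be the measured width and length of the pitch, and the image corners would be detected or annotated points. Four corners determine the homography exactly. With more correspondences, a least-squares fit would usually be more robust.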

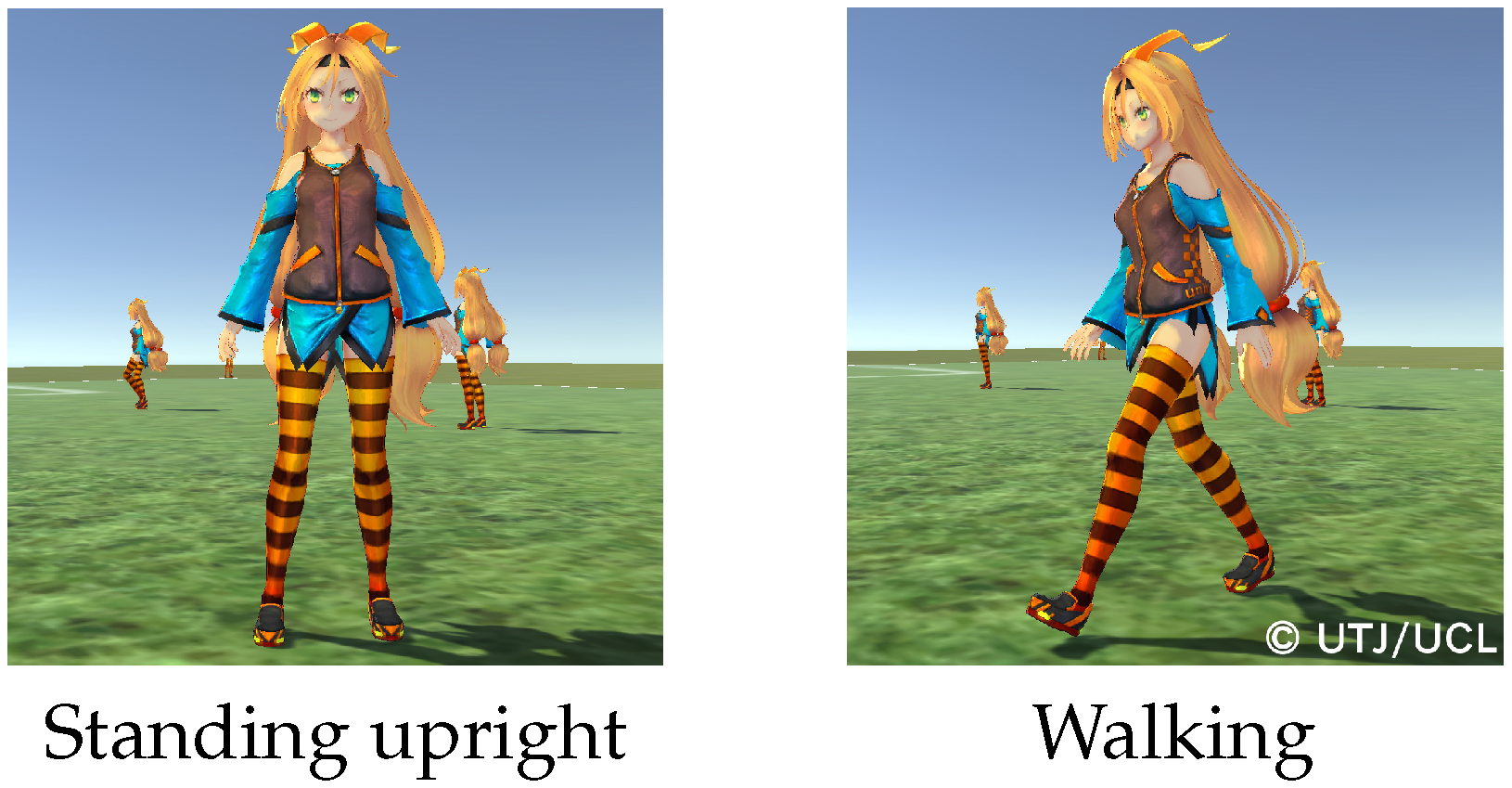

Figure 5.

Example of a 3D model: Unity-chan.

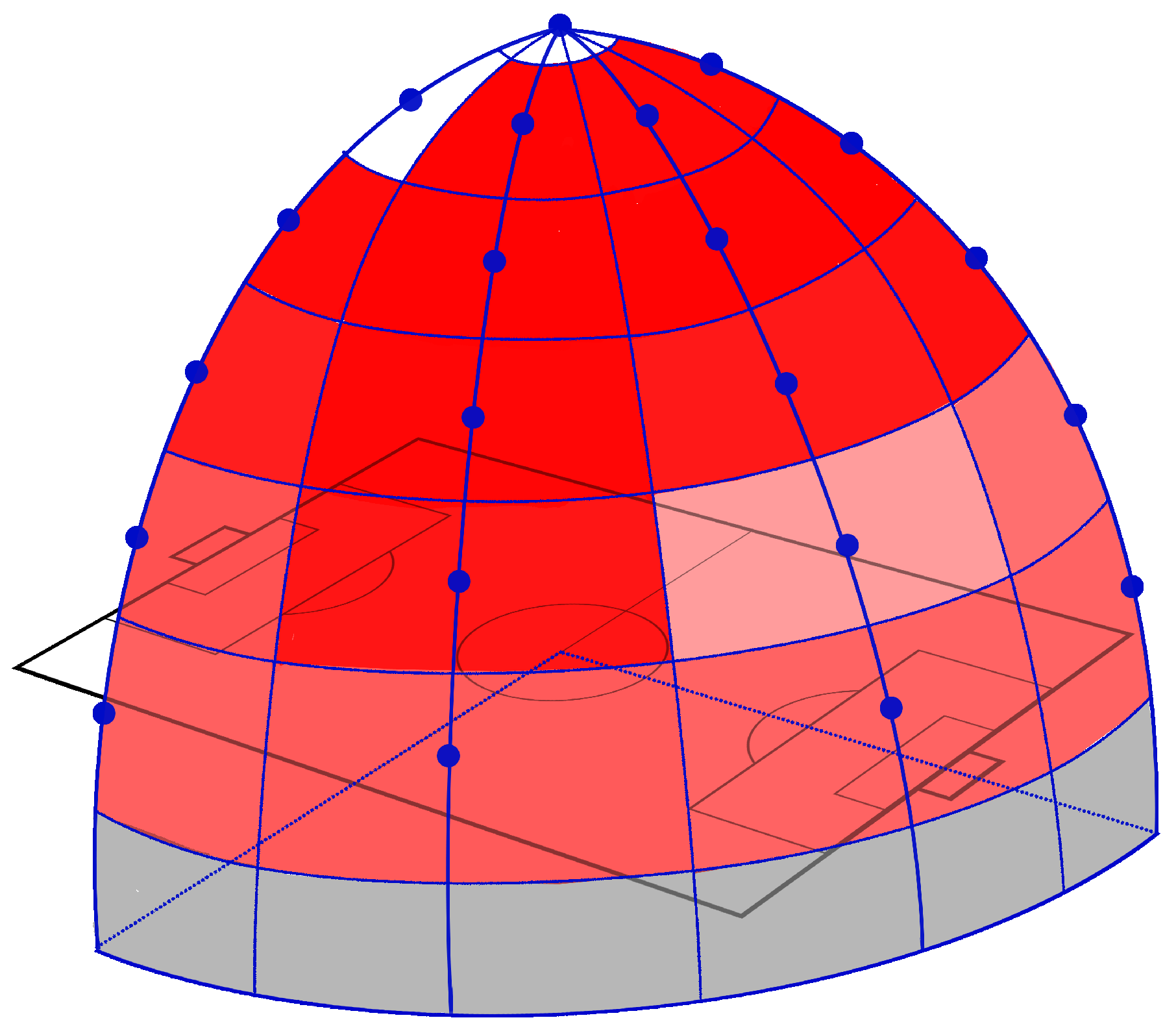

Figure 6.

Overview of camera locations.

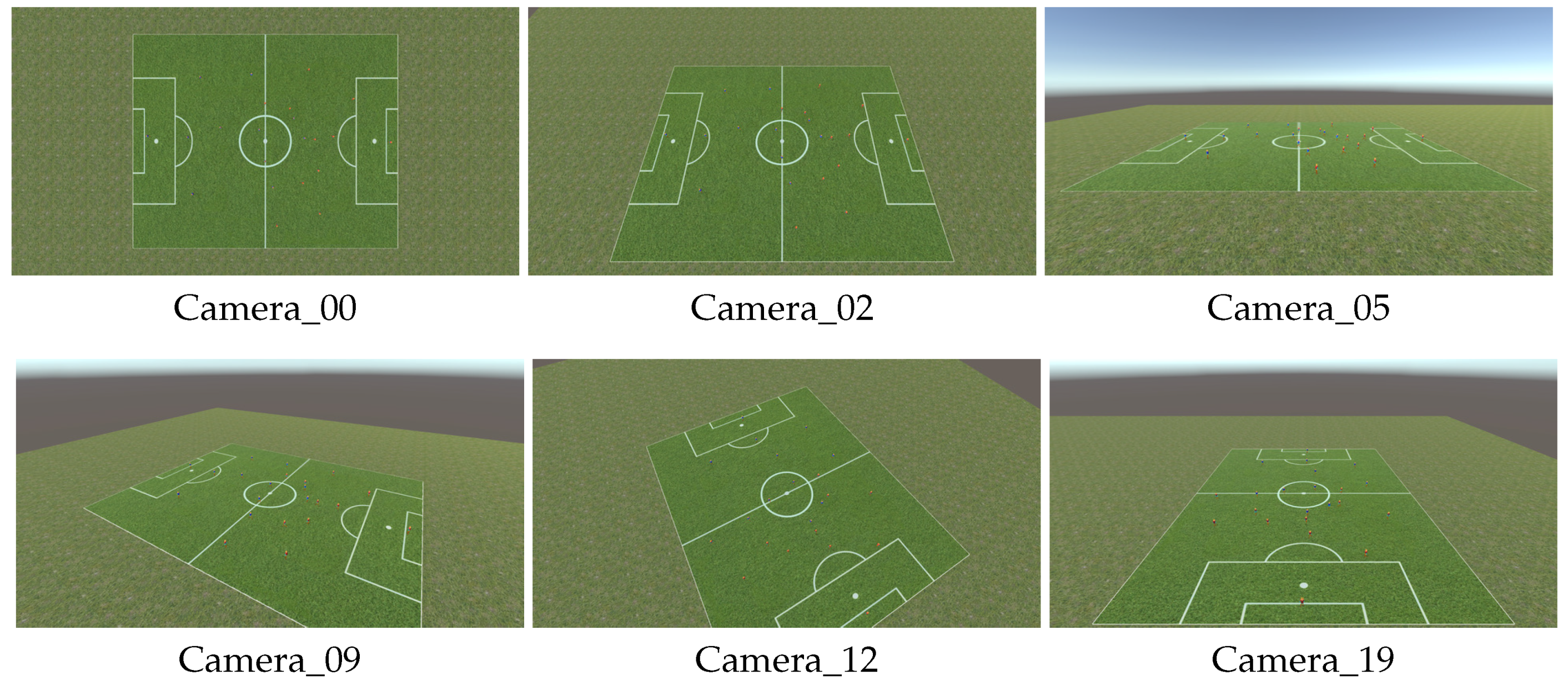

Figure 7.

Examples of datasets.

Figure 8.

Positive samples.

Figure 9.

Negative samples.

Figure 10.

Edge map generation.

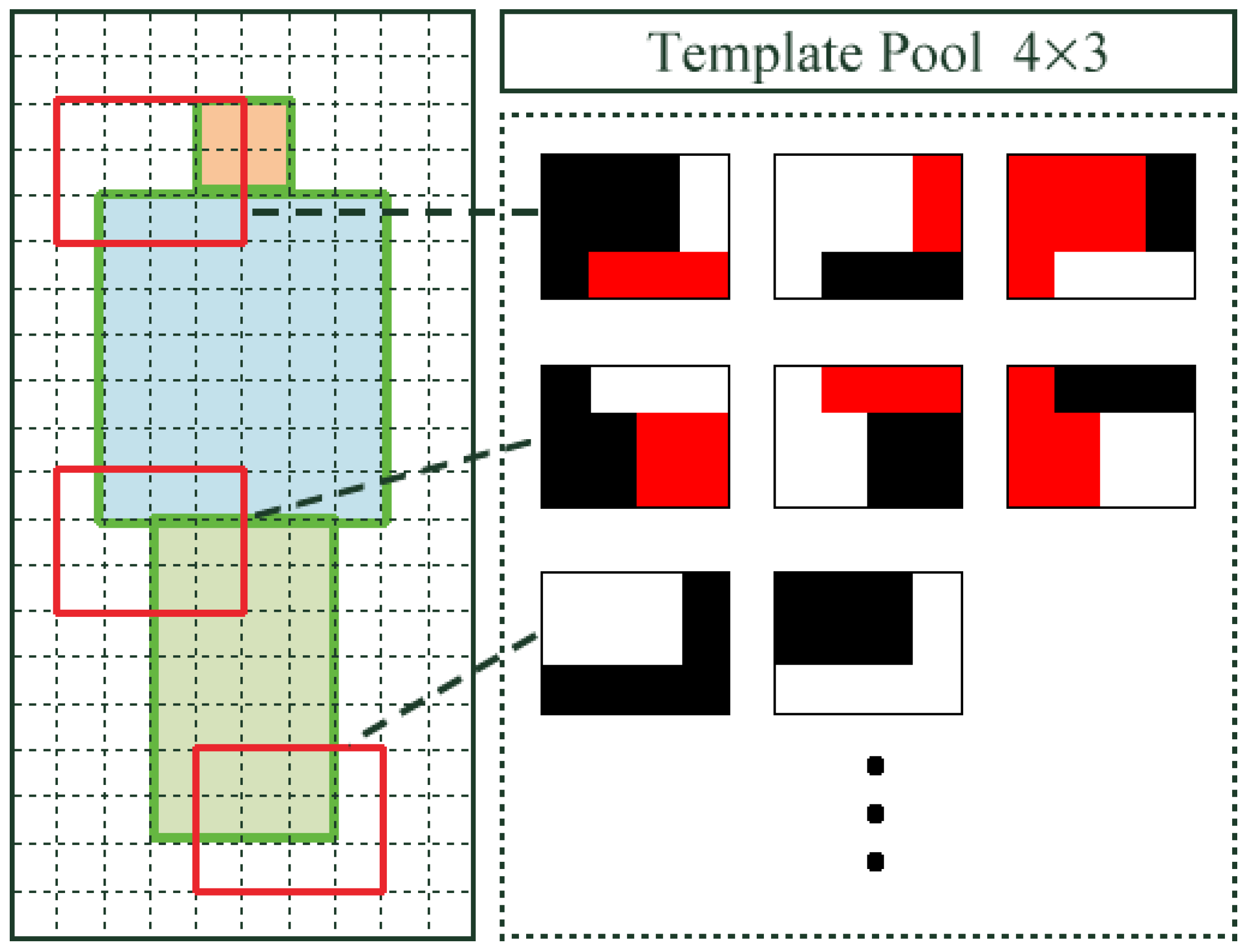

Figure 11.

Template generation.

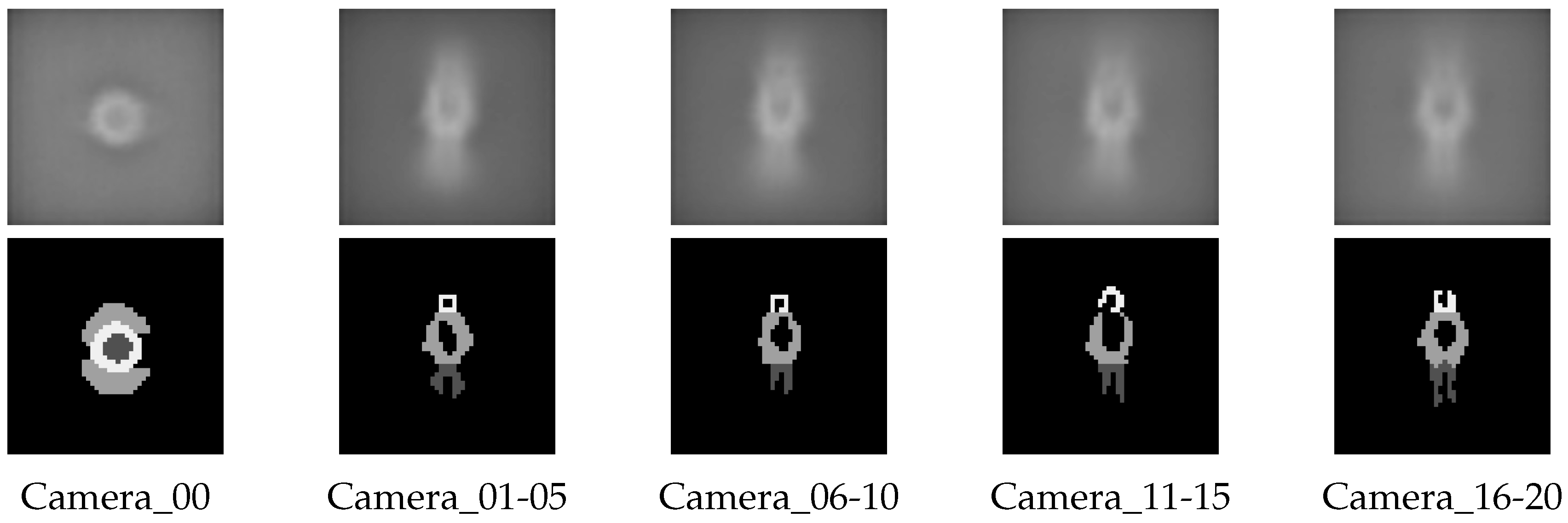

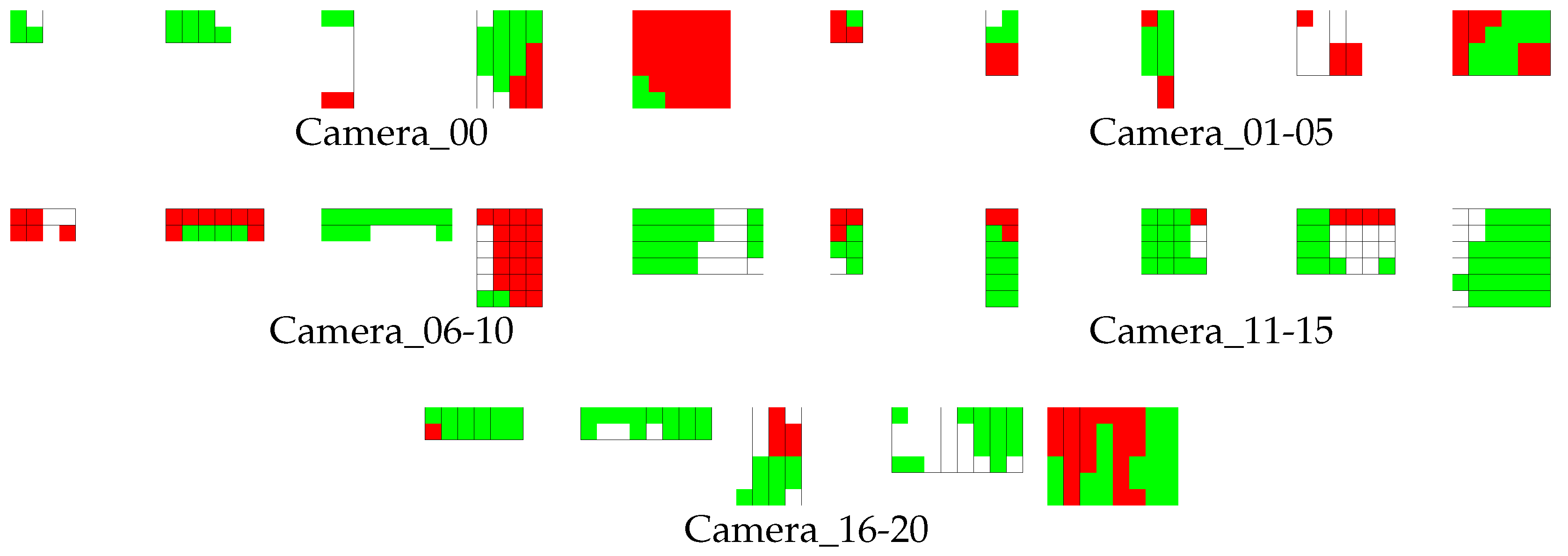

Figure 12.

Average edge maps and labeled edge maps for each shooting orientation. The top and bottom images show the average and labeled edge maps, respectively.
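The averaging step behind Figure 12 can be sketched as follows. The sketch assumes aligned grayscale positive samples of equal size, stored as nested lists, and uses Sobel gradient magnitude as the edge measure. Sobel is an assumption here; the edge operator used in Figure 10 is not specified in these captions. Splitting the averaged map into labeled parts, as in the bottom row of Figure 12, is not shown.

```python
import math
from typing import List

Image = List[List[float]]


def sobel_magnitude(img: Image) -> Image:
    """Sobel gradient magnitude; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = math.hypot(gx, gy)
    return out


def average_edge_map(samples: List[Image]) -> Image:
    """Mean edge magnitude over aligned samples, scaled so the strongest pixel is 1.0."""
    h, w = len(samples[0]), len(samples[0][0])
    acc = [[0.0] * w for _ in range(h)]
    for img in samples:
        mag = sobel_magnitude(img)
        for y in range(h):
            for x in range(w):
                acc[y][x] += mag[y][x]
    # Dividing by the peak also removes the 1/len(samples) factor of the mean.
    peak = max(max(row) for row in acc) or 1.0
    return [[v / peak for v in row] for row in acc]


img = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]  # vertical step edge
avg = average_edge_map([img, img])
print([round(v, 2) for v in avg[2]])  # [0.0, 1.0, 1.0, 0.0, 0.0]
```

Computing one such map per shooting orientation means grouping the positive samples by orientation first and calling `average_edge_map` on each group.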

Figure 13.

Examples of templates used to construct a classifier for players.

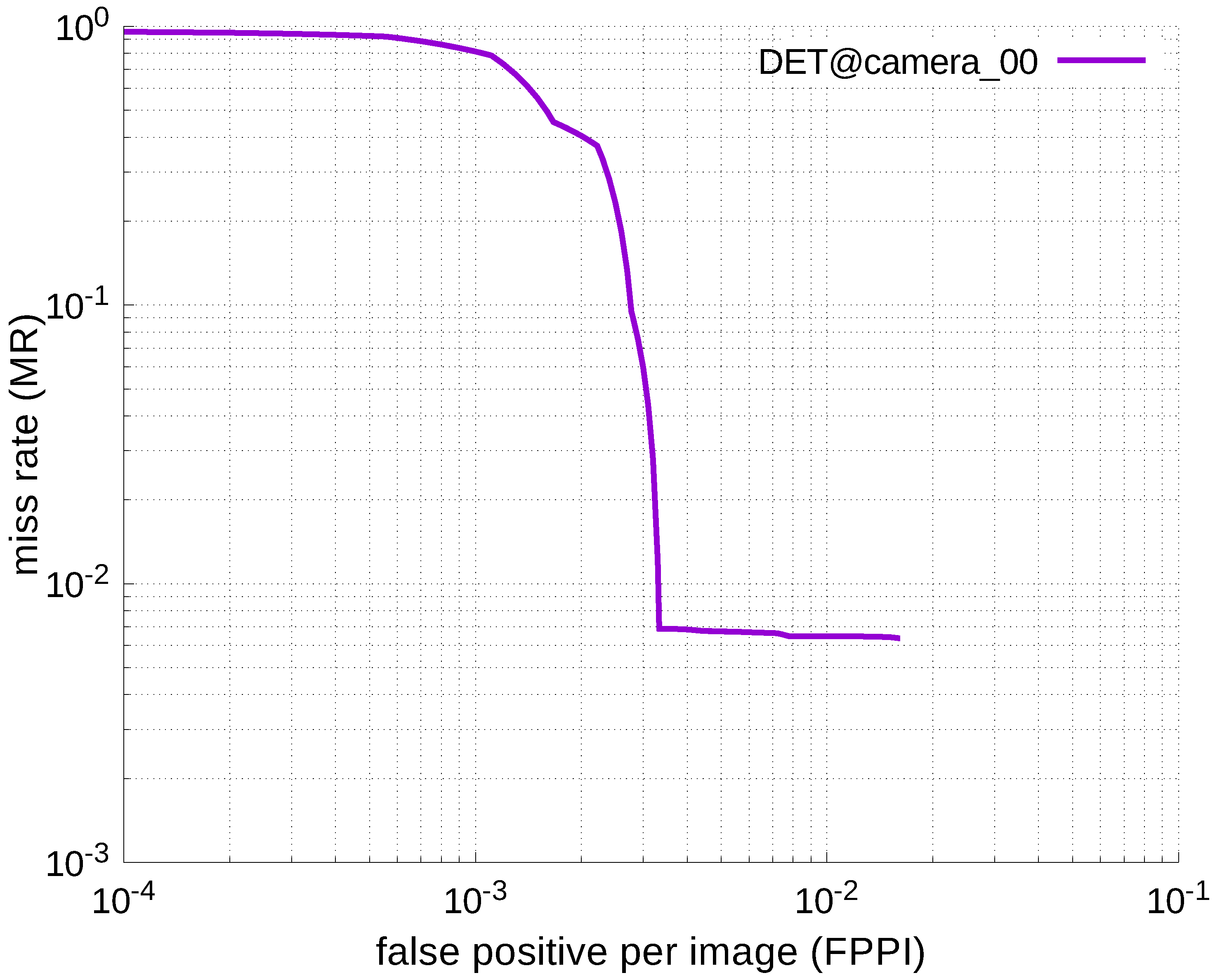

Figure 15.

DET curves @ Camera_00, informed-filters.

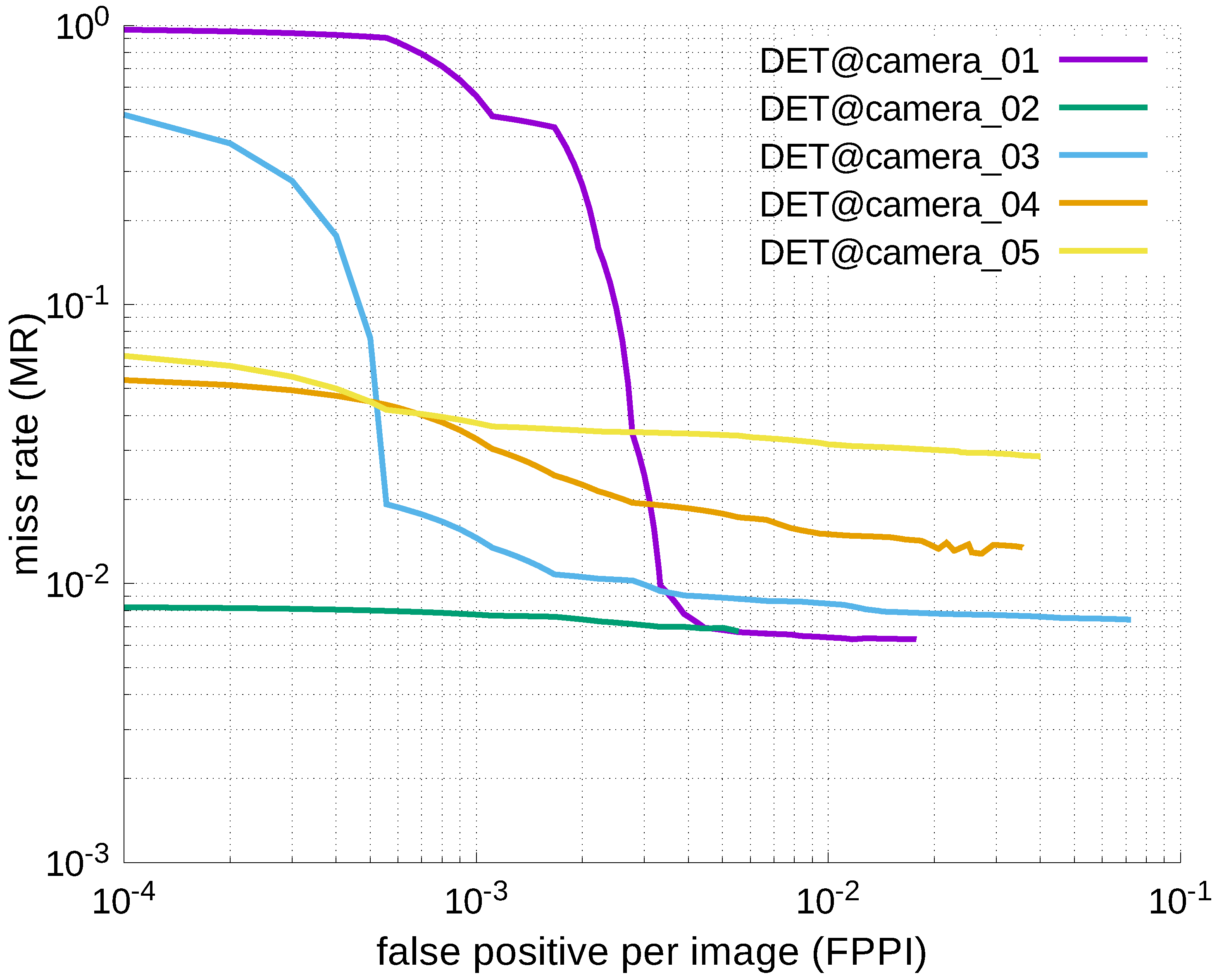

Figure 16.

DET curves @ Cameras_01–05, informed-filters.

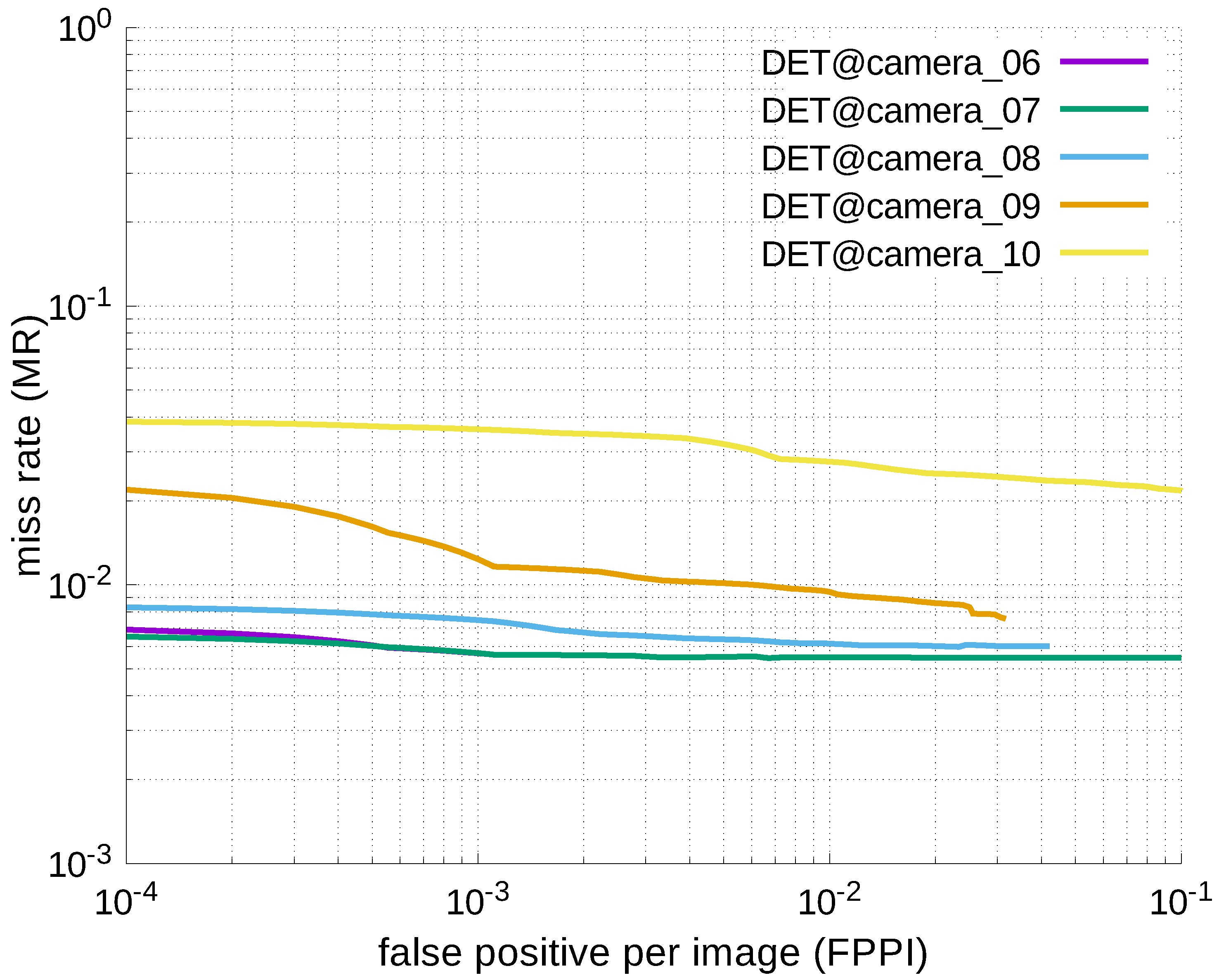

Figure 17.

DET curves @ Cameras_06–10, informed-filters.

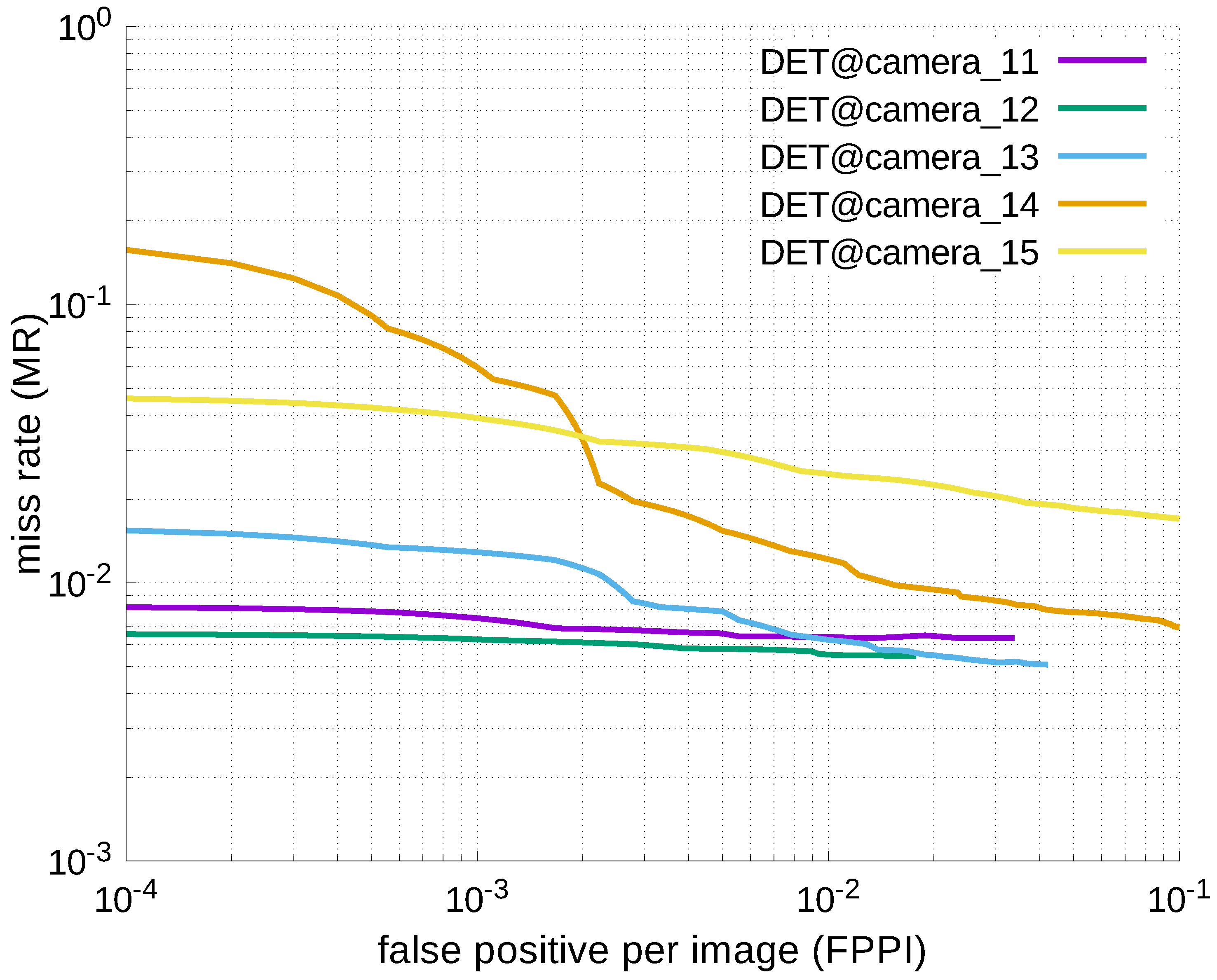

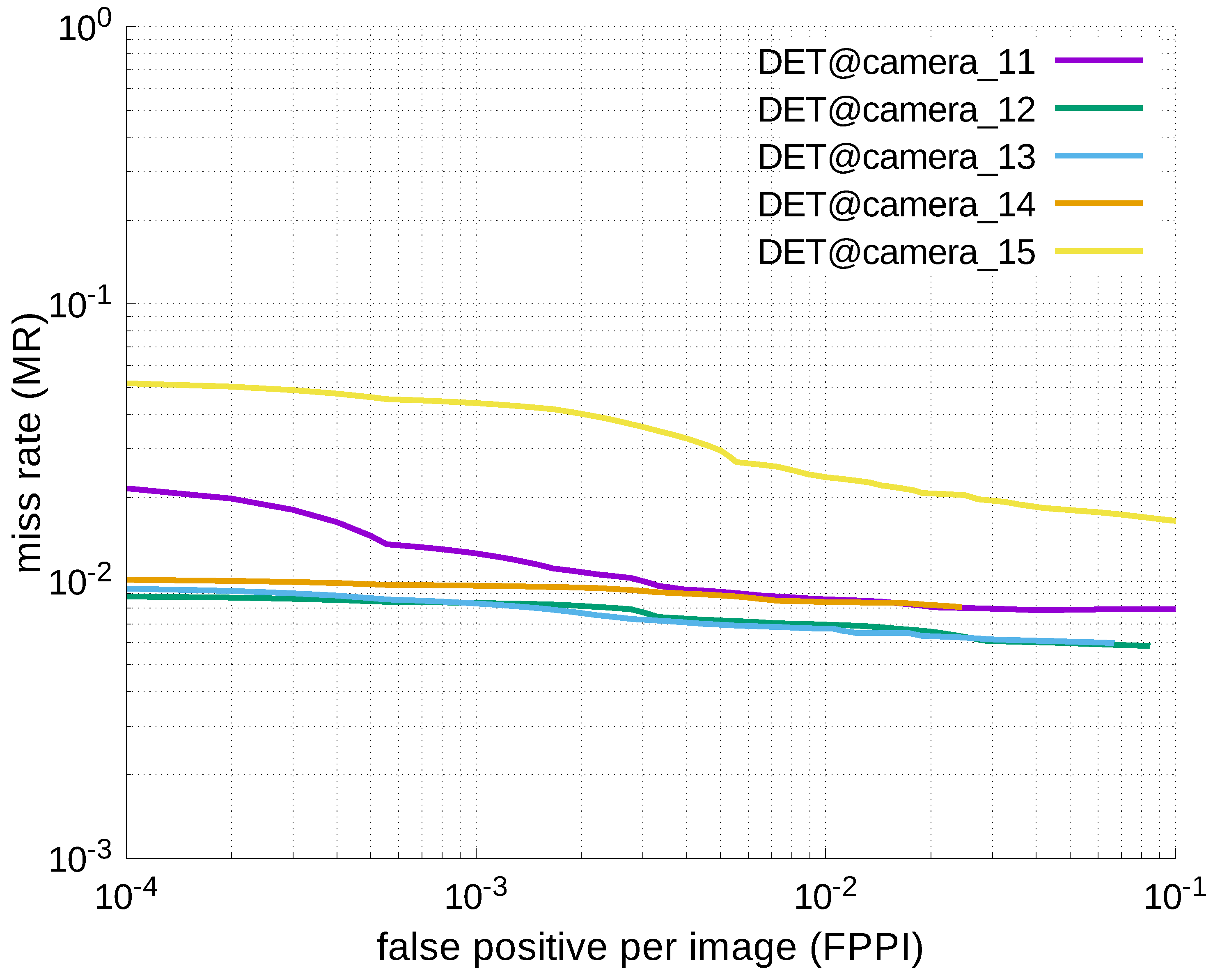

Figure 18.

DET curves @ Cameras_11–15, informed-filters.

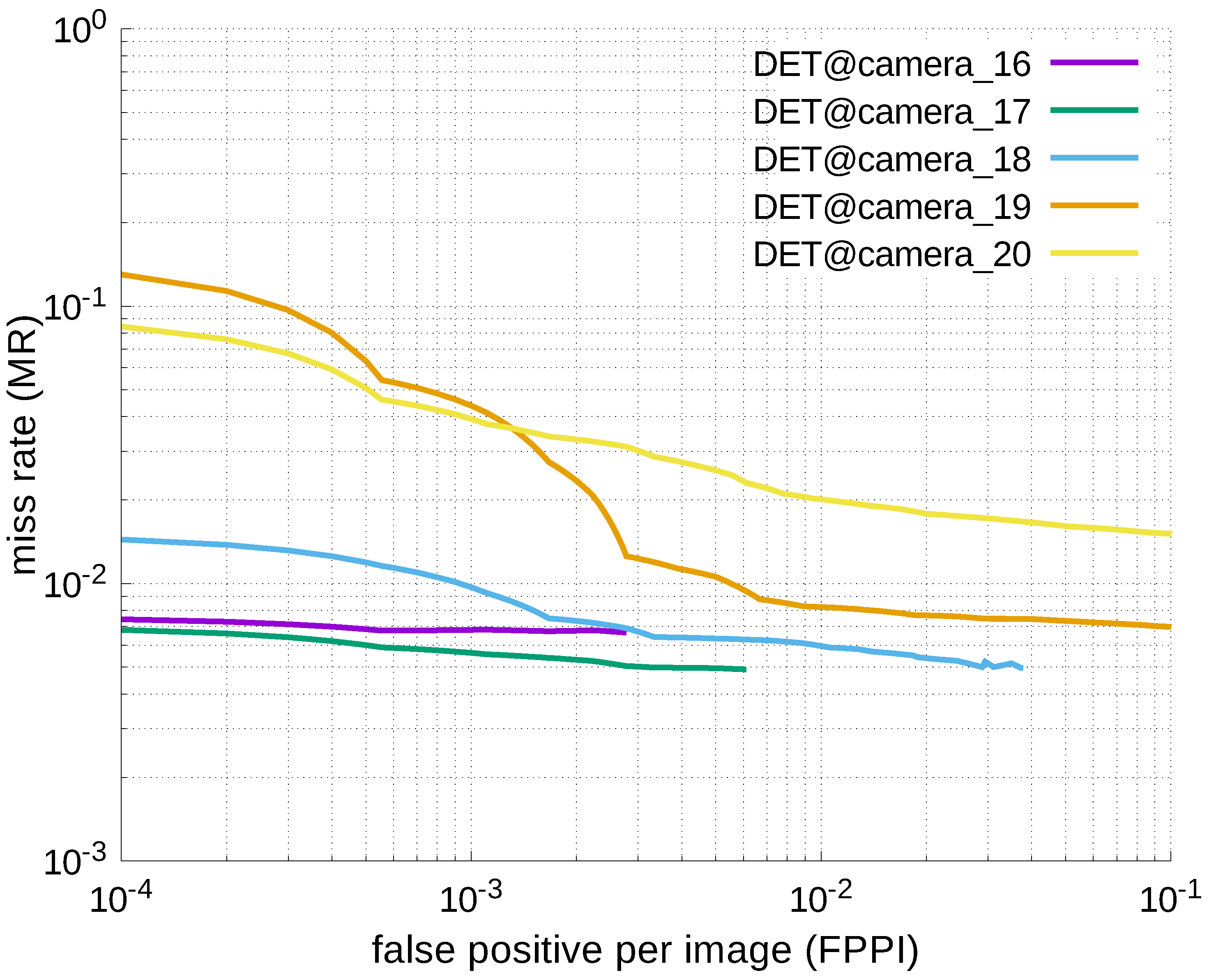

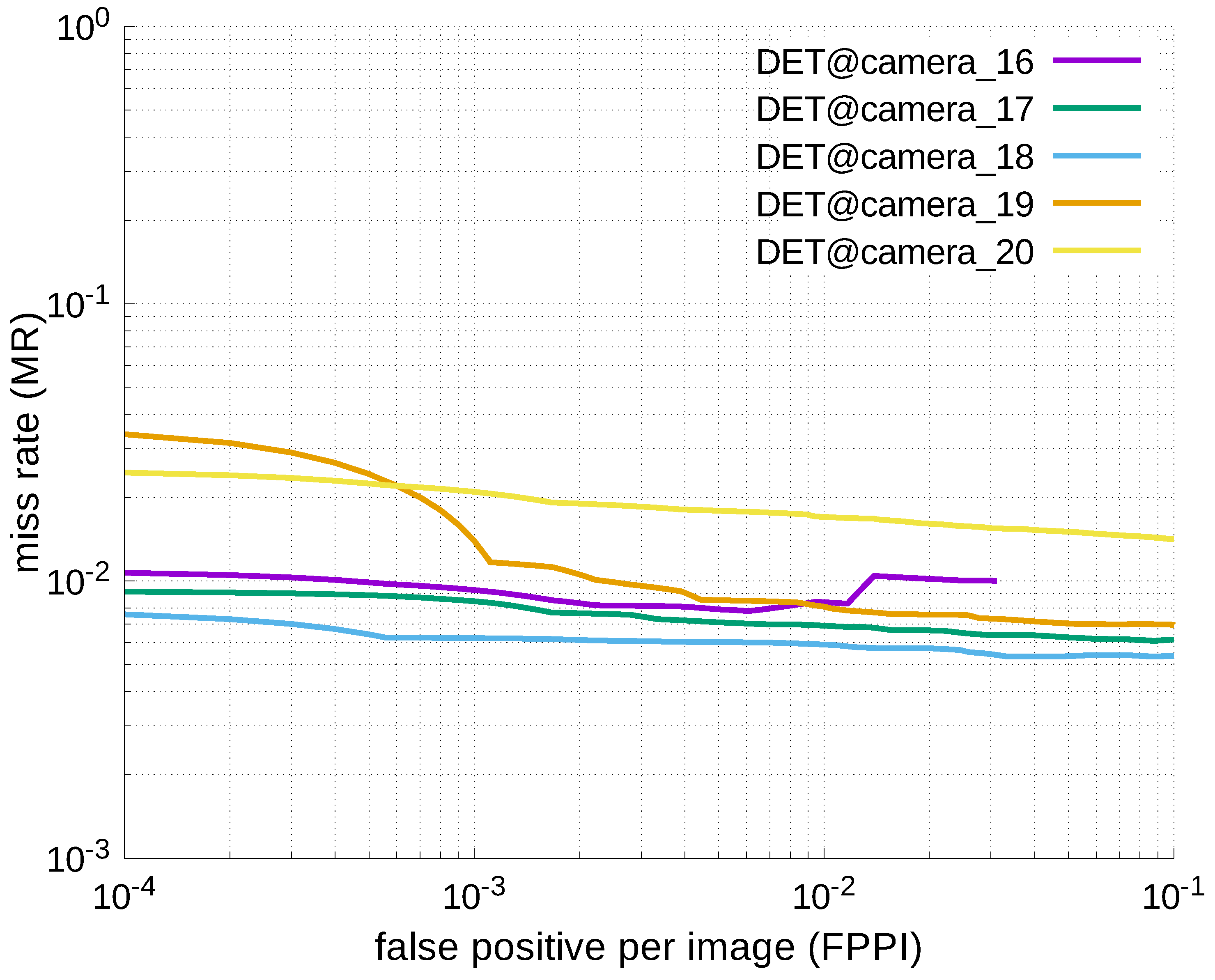

Figure 19.

DET curves @ Cameras_16–20, informed-filters.

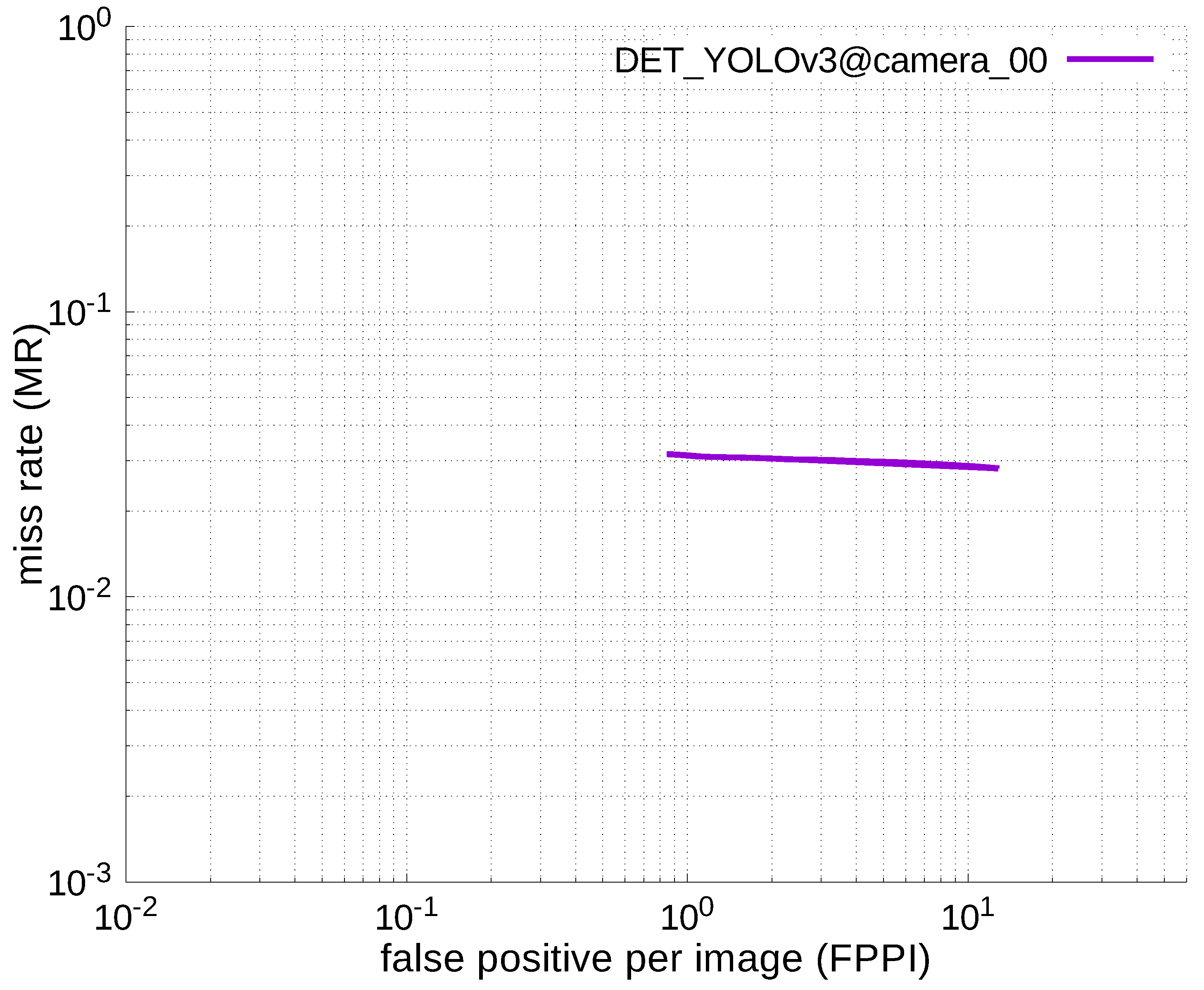

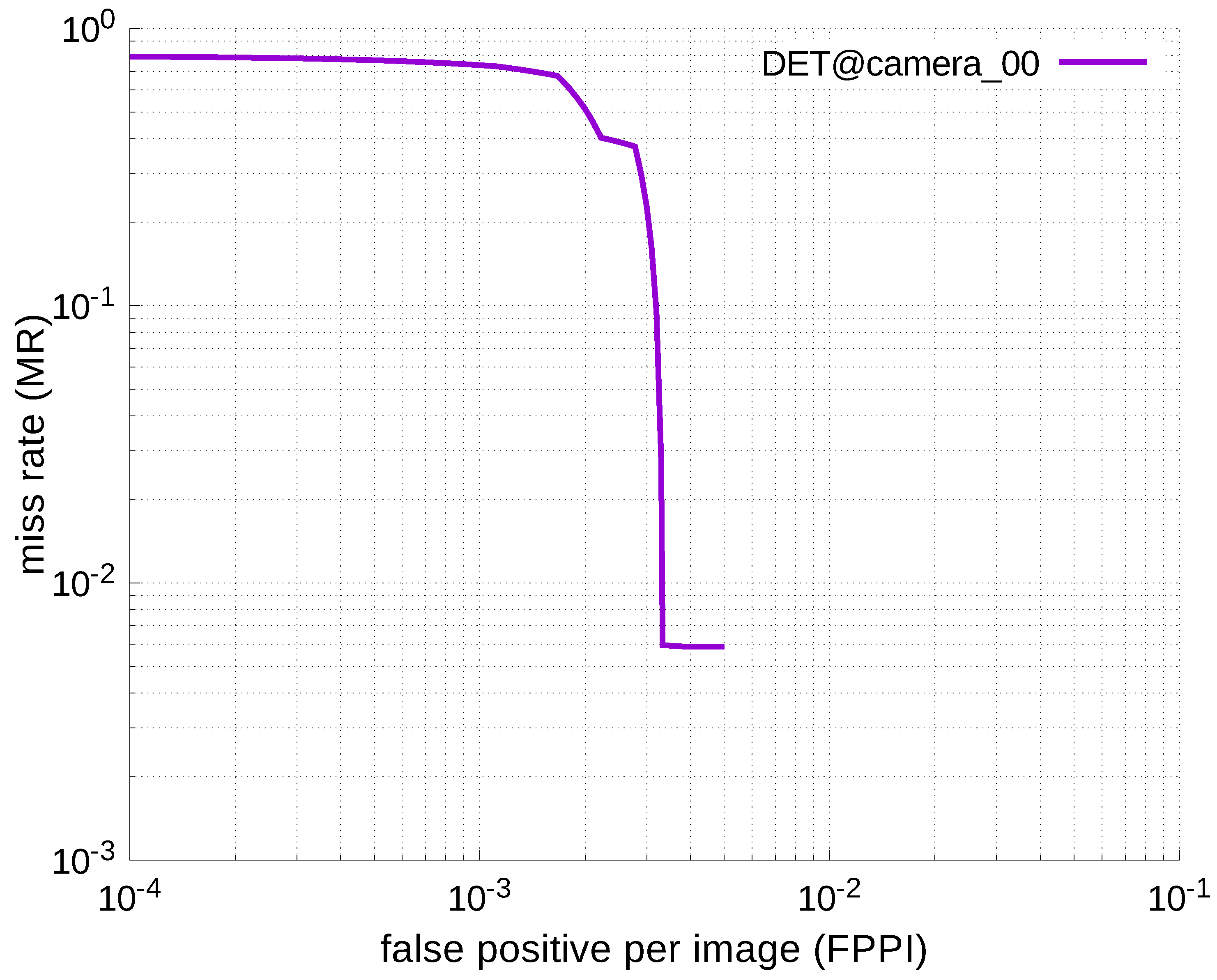

Figure 20.

DET curve @ Camera_00, YOLOv3.

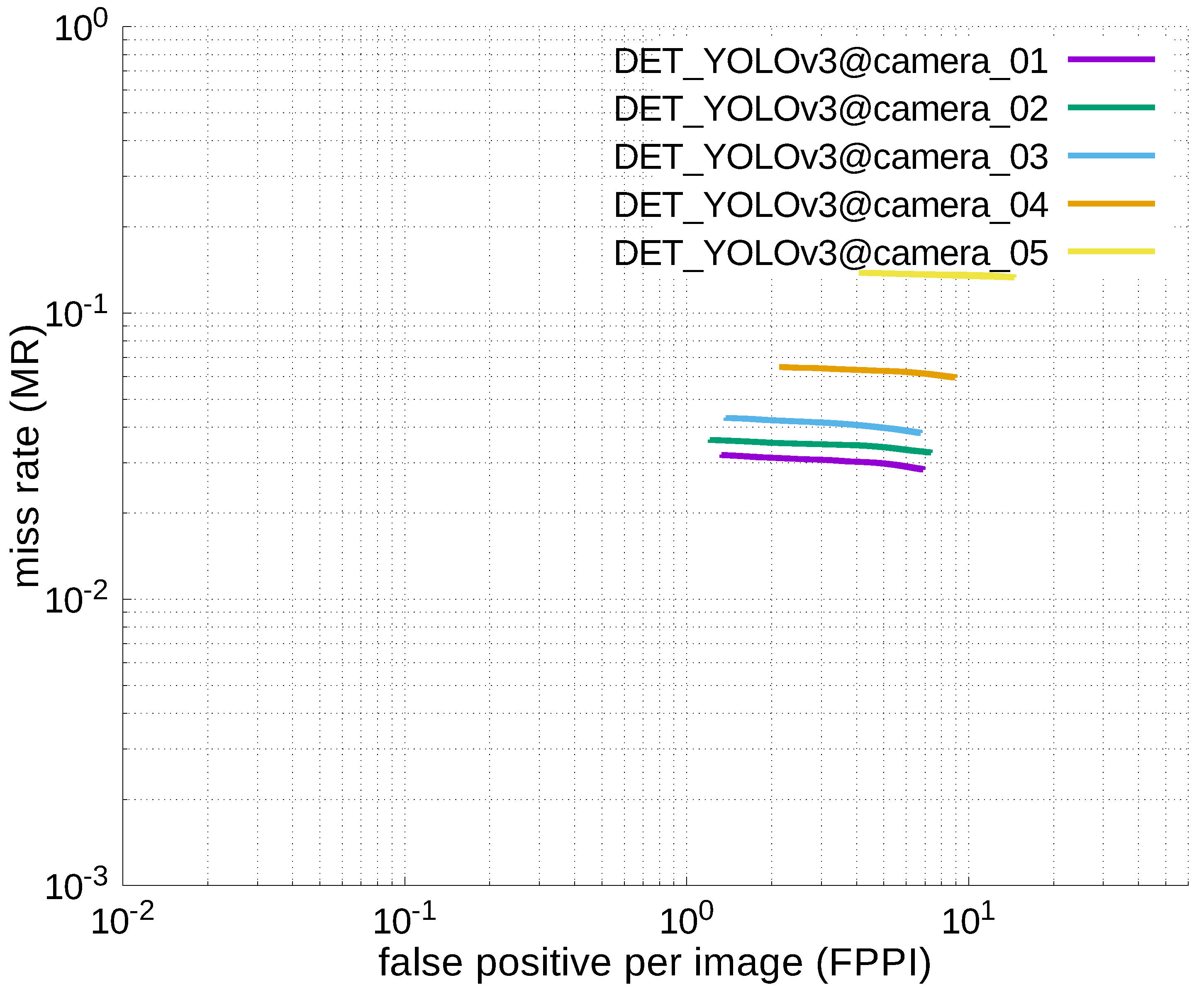

Figure 21.

DET curves @ Cameras_01–05, YOLOv3.

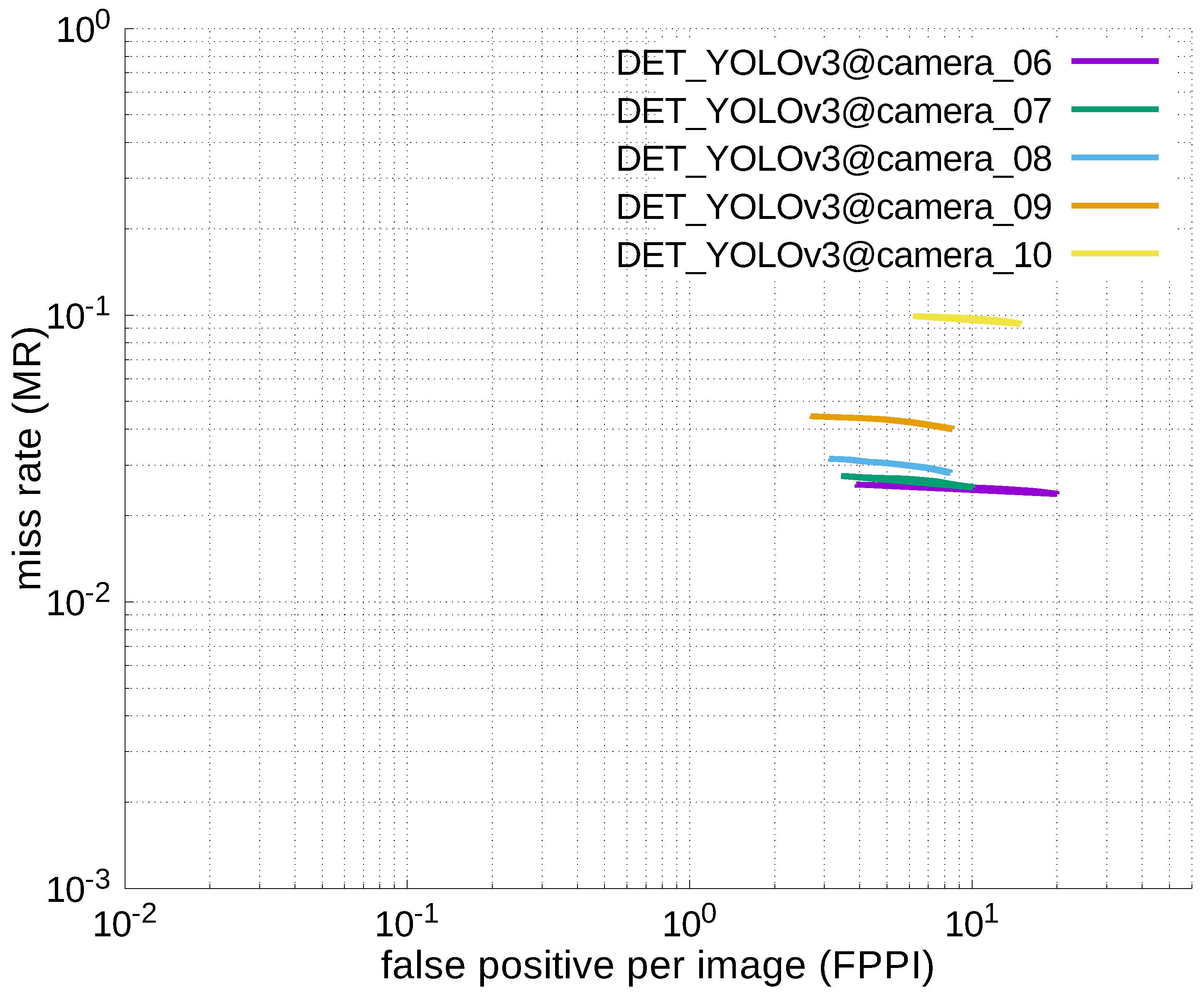

Figure 22.

DET curves @ Cameras_06–10, YOLOv3.

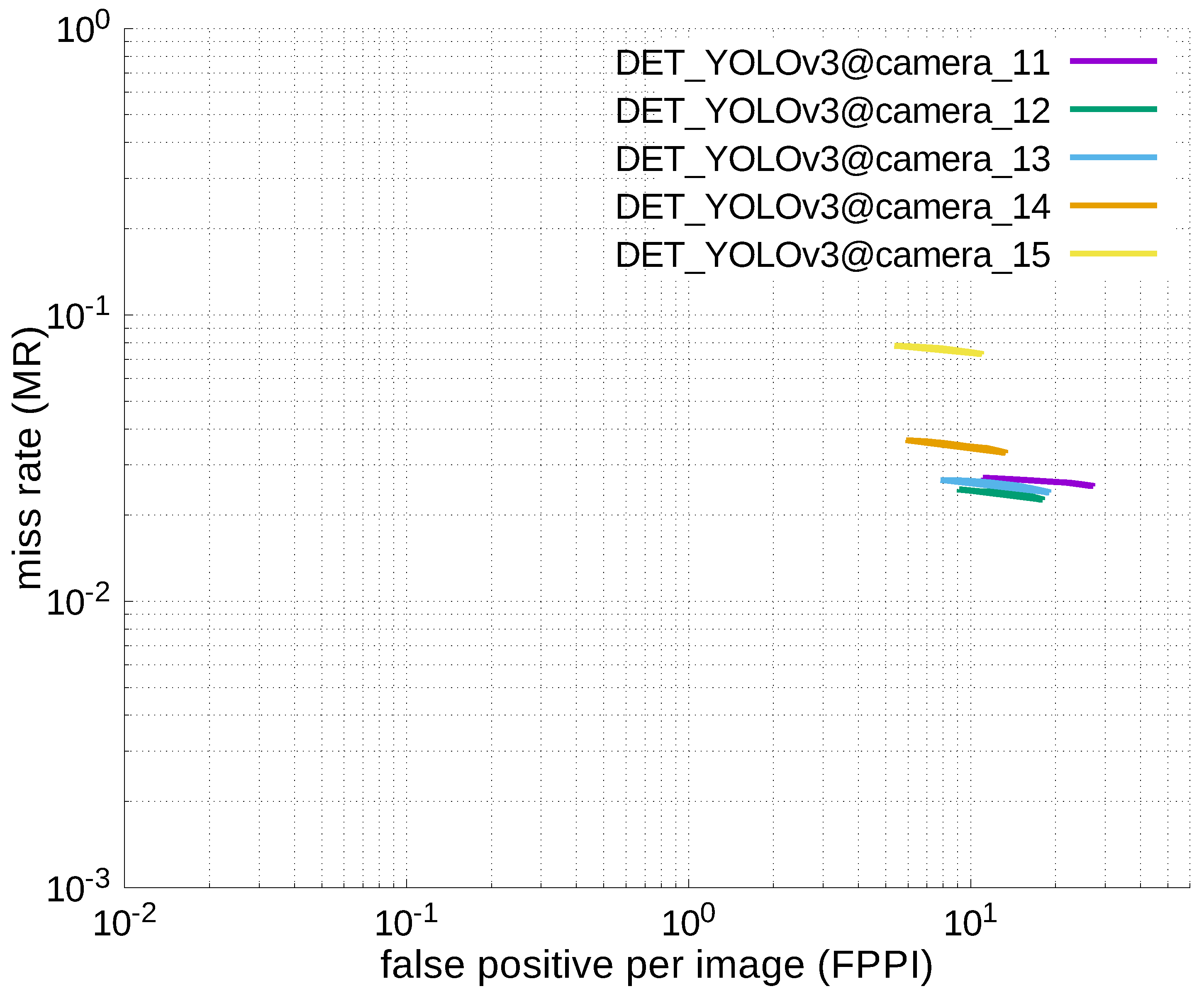

Figure 23.

DET curves @ Cameras_11–15, YOLOv3.

Figure 24.

DET curves @ Cameras_16–20, YOLOv3.

Figure 25.

DET curves @ Camera_00, informed-filters, some viewpoints.

Figure 26.

DET curves @ Cameras_01–05, informed-filters, some viewpoints.

Figure 27.

DET curves @ Cameras_06–10, informed-filters, some viewpoints.

Figure 28.

DET curves @ Cameras_11–15, informed-filters, some viewpoints.

Figure 29.

DET curves @ Cameras_16–20, informed-filters, some viewpoints.

Table 1.

Accuracy table @ Camera_00, informed-filters.

| | MR@Camera_00 |
|---|---|
| FPPI = 1.0 | 0.000000 |
| FPPI = 0.1 | 0.000000 |
| FPPI = 0.01 | 0.006490 |
| FPPI = 0.001 | 0.812667 |
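The accuracy tables report the miss rate (MR) at fixed false-positives-per-image (FPPI) levels, read from the DET curves. Below is a minimal sketch of how such values can be computed. It assumes detections have already been matched to ground-truth players, with each one flagged as a true or false positive, and it ignores tied scores. Reading MR at a reference FPPI as the best operating point whose FPPI does not exceed it is one common convention, assumed here rather than taken from the paper. Under this convention, a reference FPPI below every operating point of the curve yields `nan`. That is one possible reading of the `nan` entries in Tables 6–10, not a confirmed explanation.

```python
import math
from typing import List, Tuple

Detection = Tuple[float, bool]  # (confidence score, matched to a ground-truth player?)


def det_curve(detections: List[Detection], num_positives: int,
              num_images: int) -> List[Tuple[float, float]]:
    """(FPPI, miss rate) pairs obtained by lowering the score threshold one detection at a time."""
    ordered = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    curve = []
    for _, is_tp in ordered:
        if is_tp:
            tp += 1
        else:
            fp += 1
        curve.append((fp / num_images, 1.0 - tp / num_positives))
    return curve


def miss_rate_at(curve: List[Tuple[float, float]], ref_fppi: float) -> float:
    """Lowest miss rate among operating points whose FPPI does not exceed ref_fppi."""
    candidates = [mr for fppi, mr in curve if fppi <= ref_fppi]
    return min(candidates) if candidates else math.nan


dets = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.5, False)]
curve = det_curve(dets, num_positives=4, num_images=10)
for ref in (1.0, 0.1, 0.01, 0.001):
    print(f"FPPI = {ref} | {miss_rate_at(curve, ref):.6f}")
```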

Table 2.

Accuracy table @ Cameras_01–05, informed-filters.

| | MR@Camera_01 | MR@Camera_02 | MR@Camera_03 | MR@Camera_04 | MR@Camera_05 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.01 | 0.006414 | 0.000000 | 0.008468 | 0.015025 | 0.031540 |
| FPPI = 0.001 | 0.558980 | 0.007738 | 0.014591 | 0.033021 | 0.037637 |

Table 3.

Accuracy table @ Cameras_06–10, informed-filters.

| | MR@Camera_06 | MR@Camera_07 | MR@Camera_08 | MR@Camera_09 | MR@Camera_10 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.005433 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.005479 | 0.000000 | 0.000000 | 0.021780 |
| FPPI = 0.01 | 0.000000 | 0.005484 | 0.006148 | 0.009432 | 0.027652 |
| FPPI = 0.001 | 0.005678 | 0.005683 | 0.007467 | 0.012366 | 0.036157 |

Table 4.

Accuracy table @ Cameras_11–15, informed-filters.

| | MR@Camera_11 | MR@Camera_12 | MR@Camera_13 | MR@Camera_14 | MR@Camera_15 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.000000 | 0.000000 | 0.006938 | 0.017012 |
| FPPI = 0.01 | 0.006399 | 0.005524 | 0.006243 | 0.012166 | 0.024646 |
| FPPI = 0.001 | 0.007465 | 0.006269 | 0.012894 | 0.059582 | 0.039167 |

Table 5.

Accuracy table @ Cameras_16–20, informed-filters.

| | MR@Camera_16 | MR@Camera_17 | MR@Camera_18 | MR@Camera_19 | MR@Camera_20 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.006879 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.000000 | 0.000000 | 0.006982 | 0.015134 |
| FPPI = 0.01 | 0.000000 | 0.000000 | 0.005956 | 0.008233 | 0.020135 |
| FPPI = 0.001 | 0.006808 | 0.005627 | 0.009709 | 0.043803 | 0.039258 |

Table 6.

Accuracy table @ Camera_00, YOLOv3.

| | MR@Camera_00 |
|---|---|
| FPPI = 1.0 | 0.031323 |
| FPPI = 0.1 | nan |
| FPPI = 0.01 | nan |
| FPPI = 0.001 | nan |

Table 7.

Accuracy table @ Cameras_01–05, YOLOv3.

| | MR@Camera_01 | MR@Camera_02 | MR@Camera_03 | MR@Camera_04 | MR@Camera_05 |
|---|---|---|---|---|---|
| FPPI = 1.0 | nan | nan | nan | nan | nan |
| FPPI = 0.1 | nan | nan | nan | nan | nan |
| FPPI = 0.01 | nan | nan | nan | nan | nan |
| FPPI = 0.001 | nan | nan | nan | nan | nan |

Table 8.

Accuracy table @ Cameras_06–10, YOLOv3.

| | MR@Camera_06 | MR@Camera_07 | MR@Camera_08 | MR@Camera_09 | MR@Camera_10 |
|---|---|---|---|---|---|
| FPPI = 1.0 | nan | nan | nan | nan | nan |
| FPPI = 0.1 | nan | nan | nan | nan | nan |
| FPPI = 0.01 | nan | nan | nan | nan | nan |
| FPPI = 0.001 | nan | nan | nan | nan | nan |

Table 9.

Accuracy table @ Cameras_11–15, YOLOv3.

| | MR@Camera_11 | MR@Camera_12 | MR@Camera_13 | MR@Camera_14 | MR@Camera_15 |
|---|---|---|---|---|---|
| FPPI = 1.0 | nan | nan | nan | nan | nan |
| FPPI = 0.1 | nan | nan | nan | nan | nan |
| FPPI = 0.01 | nan | nan | nan | nan | nan |
| FPPI = 0.001 | nan | nan | nan | nan | nan |

Table 10.

Accuracy table @ Cameras_16–20, YOLOv3.

| | MR@Camera_16 | MR@Camera_17 | MR@Camera_18 | MR@Camera_19 | MR@Camera_20 |
|---|---|---|---|---|---|
| FPPI = 1.0 | nan | nan | nan | nan | nan |
| FPPI = 0.1 | nan | nan | nan | nan | nan |
| FPPI = 0.01 | nan | nan | nan | nan | nan |
| FPPI = 0.001 | nan | nan | nan | nan | nan |

Table 11.

Accuracy table @ Camera_00, informed-filters, some viewpoints.

| | MR@Camera_00 |
|---|---|
| FPPI = 1.0 | 0.000000 |
| FPPI = 0.1 | 0.000000 |
| FPPI = 0.01 | 0.000000 |
| FPPI = 0.001 | 0.737169 |

Table 12.

Accuracy table @ Cameras_01–05, informed-filters, some viewpoints.

| | MR@Camera_01 | MR@Camera_02 | MR@Camera_03 | MR@Camera_04 | MR@Camera_05 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.01 | 0.000000 | 0.000000 | 0.006818 | 0.012108 | 0.028662 |
| FPPI = 0.001 | 0.385389 | 0.006091 | 0.008546 | 0.014050 | 0.036182 |

Table 13.

Accuracy table @ Cameras_06–10, informed-filters, some viewpoints.

| | MR@Camera_06 | MR@Camera_07 | MR@Camera_08 | MR@Camera_09 | MR@Camera_10 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.018324 |
| FPPI = 0.01 | 0.006900 | 0.005680 | 0.006053 | 0.008847 | 0.021843 |
| FPPI = 0.001 | 0.007918 | 0.005801 | 0.006270 | 0.012433 | 0.031384 |

Table 14.

Accuracy table @ Cameras_11–15, informed-filters, some viewpoints.

| | MR@Camera_11 | MR@Camera_12 | MR@Camera_13 | MR@Camera_14 | MR@Camera_15 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.007914 | 0.000000 | 0.000000 | 0.000000 | 0.016500 |
| FPPI = 0.01 | 0.008600 | 0.006971 | 0.006742 | 0.008397 | 0.023712 |
| FPPI = 0.001 | 0.012590 | 0.008344 | 0.008309 | 0.009631 | 0.043875 |

Table 15.

Accuracy table @ Cameras_16–20, informed-filters, some viewpoints.

| | MR@Camera_16 | MR@Camera_17 | MR@Camera_18 | MR@Camera_19 | MR@Camera_20 |
|---|---|---|---|---|---|
| FPPI = 1.0 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |
| FPPI = 0.1 | 0.000000 | 0.006160 | 0.005368 | 0.006952 | 0.014206 |
| FPPI = 0.01 | 0.008388 | 0.006904 | 0.005902 | 0.008081 | 0.017039 |
| FPPI = 0.001 | 0.009280 | 0.008454 | 0.006228 | 0.013950 | 0.020981 |