This section provides an overview of homomorphic encryption (HE), explaining its basic principles and levels of capability. Homomorphic encryption allows computations to be performed on encrypted data without decrypting it. The three types, partially homomorphic encryption (PHE), somewhat homomorphic encryption (SWHE), and fully homomorphic encryption (FHE), offer varying degrees of flexibility and efficiency. This background helps in understanding the advancements and challenges in HE research.

3.2. Levels of Homomorphism

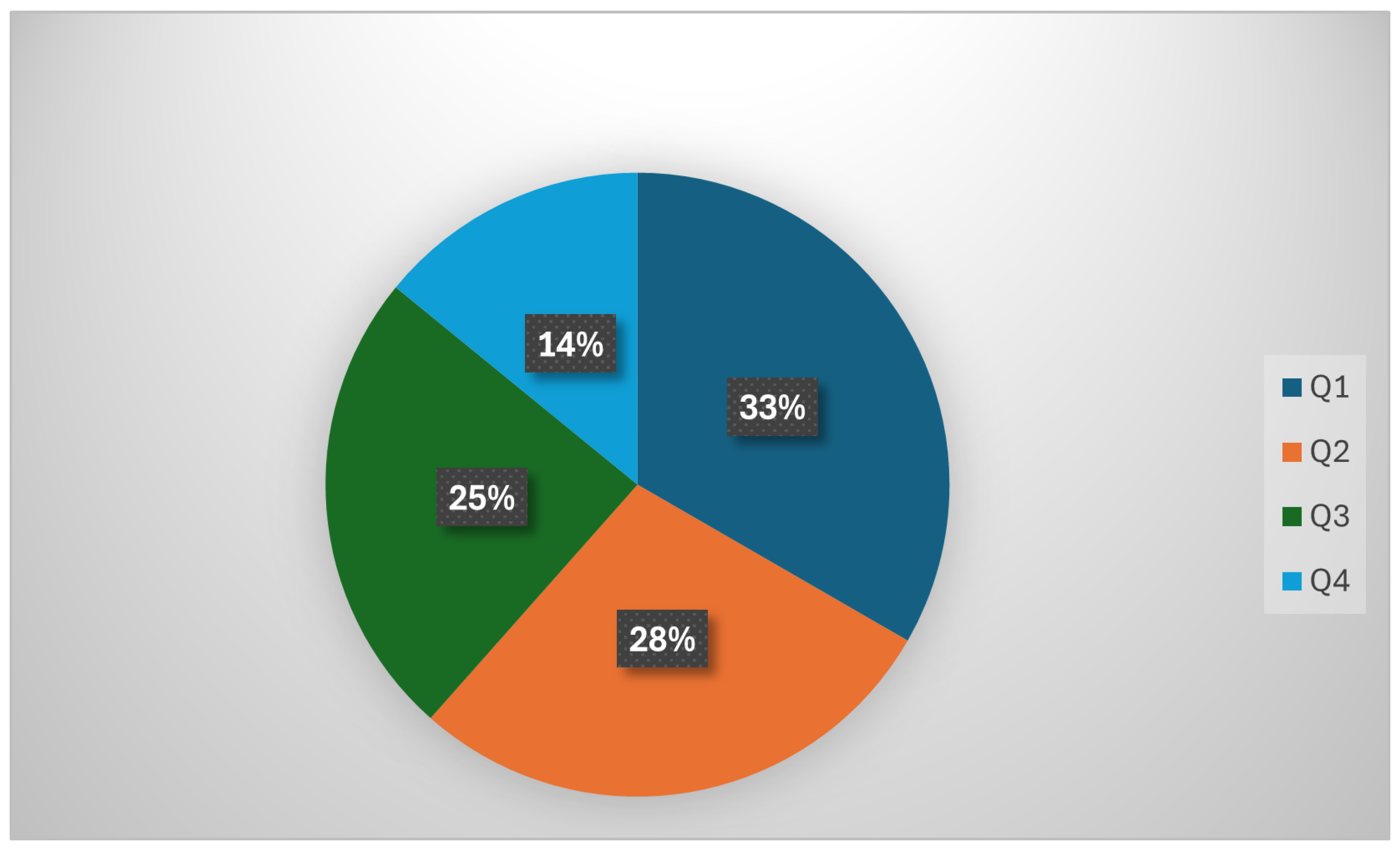

HE schemes are often classified into three levels based on the types and number of homomorphic operations that can be supported. These are:

Partially Homomorphic Encryption (PHE): Supports only one operation (e.g., addition or multiplication).

Somewhat Homomorphic Encryption (SWHE): Supports both addition and multiplication, but limits the number of operations that can be performed.

Fully Homomorphic Encryption (FHE): Enables unlimited arbitrary computations on encrypted data.

Table 2 compares the salient features of the different levels of homomorphic encryption.

3.2.1. Partial Homomorphic Encryption (PHE)

Partial Homomorphic Encryption (PHE) schemes support either additive or multiplicative homomorphism, allowing a single type of operation to be performed on ciphertexts. These schemes are computationally efficient but limited in functionality, as they cannot support both operations simultaneously. One of the most well-known PHE schemes is the RSA cryptosystem, which exhibits multiplicative homomorphism. In RSA, the product of two ciphertexts corresponds to the encryption of the product of their plaintexts, i.e., $E(m_1) \cdot E(m_2) = E(m_1 \cdot m_2)$. However, RSA in its textbook form is deterministic, so it is not semantically secure. RSA’s security relies on the Integer Factorization Problem (IFP), where the public key consists of a modulus $n = pq$, where $p$ and $q$ are prime numbers, and an exponent $e$ coprime to $\varphi(n)$. This simple version of RSA is vulnerable to chosen-ciphertext attacks if used without proper padding [2].
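The multiplicative property can be checked with a minimal sketch of textbook RSA. The primes, exponent, and function names below are toy assumptions chosen for illustration and offer no security.

```python
# Toy textbook RSA (no padding). Parameters are illustrative assumptions only.
p, q = 61, 53                  # small primes
n = p * q                      # public modulus
phi = (p - 1) * (q - 1)        # Euler's totient of n
e = 17                         # public exponent, coprime to phi
d = pow(e, -1, phi)            # private exponent (requires Python 3.8+)

def encrypt(m):
    return pow(m, e, n)

def decrypt(c):
    return pow(c, d, n)

m1, m2 = 7, 11
# Multiplying ciphertexts multiplies the underlying plaintexts modulo n.
c_prod = (encrypt(m1) * encrypt(m2)) % n
assert decrypt(c_prod) == (m1 * m2) % n

# Determinism: the same plaintext always yields the same ciphertext.
assert encrypt(m1) == encrypt(m1)
```

The second assertion illustrates why the textbook form is not semantically secure: equal plaintexts are visible as equal ciphertexts.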

Another widely used PHE scheme is the Paillier cryptosystem [19], which supports additive homomorphism. In Paillier, the product of two ciphertexts corresponds to the encryption of the sum of their plaintexts, i.e., $E(m_1) \cdot E(m_2) = E(m_1 + m_2)$. Paillier is probabilistic, ensuring semantic security, and its hardness is based on the Decisional Composite Residuosity (DCR) assumption [19]. The public key in the Paillier cryptosystem consists of a modulus $n = pq$ and a generator $g$. Encryption is performed by computing $c = g^m \cdot r^n \bmod n^2$, where $m \in \mathbb{Z}_n$ and $r$ is a randomly chosen value such that $\gcd(r, n) = 1$. Paillier is particularly useful in applications such as electronic voting and privacy-preserving aggregation due to its additive properties.
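As a rough illustration of the additive property, the following sketch implements Paillier with toy primes and the common simplification $g = n + 1$. Both choices, and all function names, are assumptions made for this example.

```python
import math
import random

# Toy Paillier parameters (illustrative assumptions, far too small to be secure).
p, q = 17, 19
n = p * q
n2 = n * n
g = n + 1                                   # common simplified choice of g
lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)   # lcm(p-1, q-1)

def L(u):
    return (u - 1) // n

mu = pow(L(pow(g, lam, n2)), -1, n)         # requires Python 3.8+

def encrypt(m):
    # Pick r with gcd(r, n) = 1, then compute c = g^m * r^n mod n^2.
    while True:
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(c):
    return (L(pow(c, lam, n2)) * mu) % n

m1, m2 = 42, 100
# Multiplying ciphertexts adds the plaintexts modulo n.
c_sum = (encrypt(m1) * encrypt(m2)) % n2
assert decrypt(c_sum) == (m1 + m2) % n

# Raising a ciphertext to a constant multiplies the plaintext by it.
assert decrypt(pow(encrypt(m1), 3, n2)) == (3 * m1) % n
```

Because a fresh $r$ is drawn for every encryption, two encryptions of the same vote or reading differ, which is what makes the scheme suitable for aggregation.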

The ElGamal cryptosystem is also a partially homomorphic encryption (PHE) scheme and exhibits multiplicative homomorphism. In ElGamal, the component-wise product of two ciphertexts corresponds to the encryption of the product of their plaintexts. Its security is based on the Discrete Logarithm Problem (DLP). The encryption process for plaintext $m$ involves computing the ciphertext $c = (g^r, m \cdot h^r)$ using a randomly selected number $r$ where $r \in \{1, \dots, n-1\}$. Here $g$ is the generator of a cyclic group $G$ of order $n$, $x$ is the private key, chosen at random from $\{1, \dots, n-1\}$, and $h = g^x$ is the public key [20]. Variants like EC-ElGamal extend this framework to support additive homomorphism over elliptic curves, making them suitable for specific cryptographic applications.
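A minimal sketch of multiplicative ElGamal over the integers modulo a small prime is shown below. The prime 2579, the base 2, and the helper names are toy assumptions for illustration only.

```python
import random

# Toy ElGamal over Z_p^* (illustrative assumptions, not secure parameters).
p = 2579                      # small prime modulus
g = 2                         # base element
order = p - 1                 # exponents are taken from {1, ..., order - 1}

x = random.randrange(1, order)    # private key
h = pow(g, x, p)                  # public key h = g^x

def encrypt(m):
    r = random.randrange(1, order)
    return (pow(g, r, p), (m * pow(h, r, p)) % p)

def decrypt(c):
    c1, c2 = c
    shared = pow(c1, x, p)                    # equals h^r
    return (c2 * pow(shared, -1, p)) % p      # requires Python 3.8+

def multiply(ca, cb):
    # Component-wise product of two ciphertexts.
    return ((ca[0] * cb[0]) % p, (ca[1] * cb[1]) % p)

m1, m2 = 12, 34
assert decrypt(multiply(encrypt(m1), encrypt(m2))) == (m1 * m2) % p
```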

Other noteworthy PHE schemes include the Modified RSA Encryption Algorithm (MREA) [21], which extends RSA to support additive homomorphism, and the Chen-ElGamal (CEG) scheme [22], which combines features of Paillier and ElGamal for hybrid operations. However, these schemes often face challenges related to inefficiency or ciphertext expansion, which can limit their practicality for large-scale applications.

3.2.2. Somewhat Homomorphic Encryption (SWHE)

Somewhat Homomorphic Encryption (SWHE) schemes enable additive and multiplicative operations on encrypted data; however, their computational utility is inherently limited by the growth of noise in the ciphertext. Each homomorphic operation introduces a small error term, and while additions increase noise linearly, multiplications typically cause quadratic (or even exponential) noise growth. Once the accumulated noise exceeds a certain threshold, correct decryption becomes impossible.

The concept of SWHE was first introduced by Gentry in 2009 with a scheme based on ideal lattices [6]. In Gentry’s construction, the noise grows so rapidly with each multiplication that only circuits of very shallow depth can be evaluated reliably. To mitigate this, Gentry proposed the technique of bootstrapping, which homomorphically evaluates the decryption function to refresh ciphertexts by reducing their noise. Despite its theoretical significance, the high computational cost of bootstrapping rendered early SWHE schemes impractical for many applications.

Subsequent research has focused on optimizing noise management to extend the viable depth of homomorphic computations without the need for frequent bootstrapping. The Brakerski–Gentry–Vaikuntanathan (BGV) scheme [8] introduced modulus switching, a technique that reduces the ciphertext modulus and thereby scales the noise down proportionally. This innovation enabled leveled homomorphic encryption, where the maximum allowable circuit depth is determined by the chosen parameters.

Similarly, the Fan–Vercauteren (FV) scheme [23], which is based on the Ring Learning With Errors (RLWE) problem, employs efficient modulus reduction along with relinearization techniques to control noise growth. The FV scheme has proven particularly effective for applications such as encrypted database queries and privacy-preserving analytics [24].

Further advancements were made by Brakerski’s 2012 work [25], which introduced scale-invariant techniques. These schemes decouple noise accumulation from the magnitude of the plaintext, allowing the error to grow independently of the scale of the input data, thus enabling more efficient homomorphic computations.

More recently, alternative algebraic approaches have been explored to enhance both efficiency and security. For instance, a 2023 scheme based on random rank metric ideal codes [26] leverages the randomness inherent in code-based constructions to achieve competitive key and ciphertext sizes while supporting unlimited homomorphic additions and a fixed number of multiplications. In parallel, a scheme based on multivariate polynomial evaluation [27] utilizes the algebraic properties of multivariate polynomials to perform limited-depth homomorphic operations with controlled noise growth. A recent scheme [28] leverages the hardness of the sparse LPN (Learning Parities with Noise) problem combined with linear homomorphic properties. This construction supports the evaluation of bounded-degree polynomials on encrypted data while maintaining compact ciphertext sizes. It represents a departure from traditional lattice-based assumptions, thereby broadening the theoretical foundations for SWHE schemes.

Recent research has focused on refining noise management in SWHE schemes through optimized modulus switching and relinearization operations implemented in the Residue Number System (RNS) [29]. By mapping computations into smaller rings and managing the scale more precisely, these techniques allow for tighter control over noise growth, thus supporting deeper circuit evaluations before bootstrapping becomes necessary. Such advancements have also influenced approximate schemes like CKKS [30], which share similar noise management challenges with SWHE systems.

Although these advances have significantly improved the practicality of SWHE, noise management remains a central challenge. The trade-offs between computational depth, performance overhead, and security parameters continue to guide the design of modern SWHE systems, ensuring that even as new techniques are developed, careful attention must be paid to balancing noise accumulation against the desired homomorphic functionality.

3.2.3. Fully Homomorphic Encryption (FHE)

Gentry’s model [

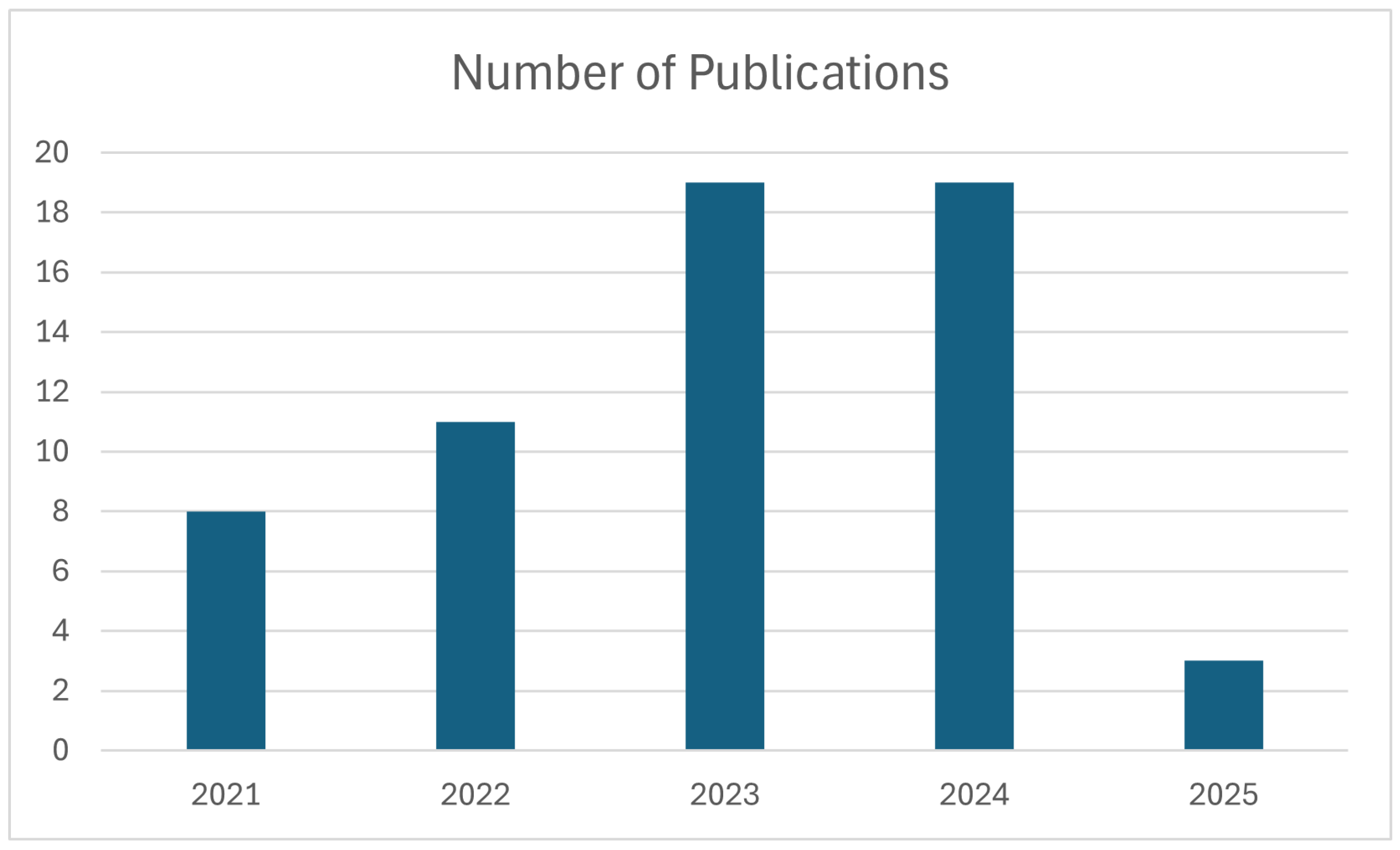

6] of homomorphic encryption relies on lattice-based cryptography. Over the past 15 years, there has been a significant surge in research and development focused on creating and enhancing homomorphic encryption schemes, resulting in a multitude of public key encryption methods capable of supporting homomorphic operations. Since the design of the first fully homomorphic encryption scheme, there have been nearly 40 public key schemes proposed, of which only one is based on coding theory, 10 are based on number theory, and the rest are based on lattices. Current research focusses not only on the construction of homomorphic encryption but also on its application and implementation to various technologies.

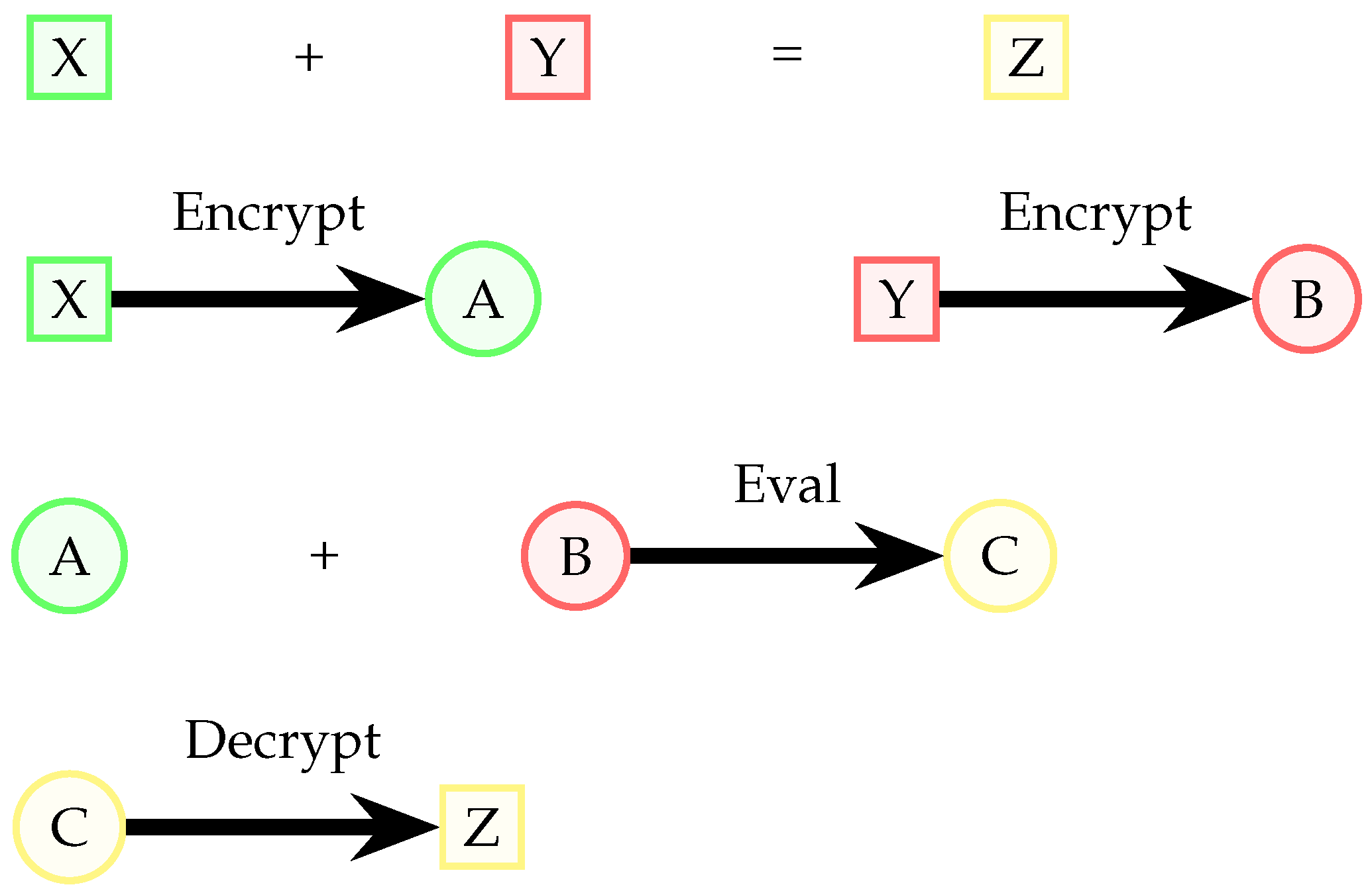

A Fully Homomorphic Encryption (FHE) scheme is a type of encryption scheme that permits direct operations on ciphertexts, eliminating the need for decryption to perform operations. Homomorphic addition and multiplication operations are crucial to this functionality, since they constitute a functionally complete set within the realm of finite rings. Put differently, an FHE scheme enables the execution of computations on encrypted data without the need for prior decryption. We can call an encryption scheme $E$ homomorphic with respect to a function $f$ if and only if the following statement is satisfied:

$$f(E(m_1), \dots, E(m_n)) = E(f(m_1, \dots, m_n)),$$

where $m_1, \dots, m_n$ are plaintexts.

Homomorphic encryption schemes possess two fundamental attributes: their ability to support a maximum degree of functions and the degree to which ciphertext length expands after each homomorphic operation. The first characteristic determines the types of functions the scheme can accurately evaluate. The second characteristic relates to the increase in ciphertext length, indicating how much the bit length of the ciphertext expands following each evaluation. When the limit on bit-length expansion remains consistent regardless of the complexity of the function, it is referred to as being compact.

3.3. The Evolution of FHE Schemes

In 2017, the Homomorphic Encryption Standard [31] classified FHE schemes into generations based on the efficiency of computation and the techniques used to manage noise in evaluating the ciphertext. This section describes the four generations of FHE schemes, their algorithms, security assumptions, benefits, and limitations.

Table 3 summarises the comparison of the four generations of fully homomorphic encryption schemes. Note that code-based techniques have not been used in any of the four generations.

3.3.1. First Generation: FHE Based on Ideal Lattice

The Gentry model [6] represents the foundational framework for Fully Homomorphic Encryption (FHE), leveraging the hardness of problems in ideal lattices.

Key Generation:

- An ideal lattice is generated along with two bases: a secret basis ($B_{sk}$) and a public basis ($B_{pk}$).
- Parameters such as the modulus and an error distribution are selected.

Encryption:

- A binary message is encoded into a real-valued vector by adding a small error vector, whose entries are randomly chosen from $\{-1, 0, 1\}$ with equal probabilities for $-1$ and $+1$.
- The resulting vector is then translated into a specific region (a parallelepiped) defined by the public basis $B_{pk}$.

Decryption:

- The ciphertext is translated back into a parallelepiped defined by the secret basis $B_{sk}$.
- The original message is recovered by reducing the decoded vector modulo 2.

The public key is based on skewed vectors, making it unsuitable for decoding, and the secret key consists of nearly orthogonal vectors, enabling efficient decryption.

Bootstrapping: The scheme is not inherently bootstrappable due to the high complexity of the decryption circuit. To address this, Gentry introduced the squashing technique, which reduces the decryption complexity by embedding additional information about the secret key into the evaluation key.

The security of the scheme relies on three core mathematical problems:

Sparse Subset Sum Problem (SSSP): Given a set of integers $A = \{a_1, \dots, a_n\}$ and a target value $t$, determine whether there exists a sparse subset $S \subseteq A$ such that $\sum_{a \in S} a = t$.

Bounded Distance Decoding Problem (BDD): Given a lattice and a target vector that is close to the lattice, find the closest lattice vector.

Ideal Shortest Vector Problem (Ideal SVP): Find the shortest non-zero vector in an ideal lattice.

Additionally, the scheme assumes circular security, which means that the encryption of the secret key does not compromise the overall security of the scheme.

Limitations and Vulnerabilities:

3.3.2. First Generation: FHE Based on AGCD Problem

In 2010, van Dijk et al. [33] introduced the DGHV Fully Homomorphic Encryption (FHE) scheme, which is built on number-theoretic assumptions. The DGHV scheme marks a significant milestone in the advancement of FHE, as it was the first construction to achieve fully homomorphic encryption using basic arithmetic operations over integers.

The DGHV scheme operates over the integers and relies on the hardness of the Approximate Greatest Common Divisor (AGCD) problem. The construction consists of three main algorithms: key generation, encryption, and decryption.

Key Generation:

- The secret key is an odd random integer chosen uniformly from a predefined range.
- The public key consists of several integers. Each public key element is computed by multiplying the secret key with a large random integer and then adding a small random noise. One of the public key elements is chosen to be the largest odd integer in the set.

Encryption:

- To encrypt a bit:
  - A random subset of the public key elements is selected.
  - A small random noise is sampled.
  - The ciphertext is computed by combining the message, the sampled noise, and a sum over the selected public key elements. The result is reduced modulo the largest public key element.
- The resulting ciphertext contains the message hidden among noise terms, which ensures security.

Decryption:

- The ciphertext is first reduced modulo the secret key, and then the result is further reduced modulo 2 to recover the original message.
- The correctness of decryption depends on the small size of the noise relative to the secret key.
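The three algorithms above can be sketched in a few lines of Python. This is a toy version under stated assumptions: the bit lengths (`eta`, `gamma`, `rho`, `rho2`), the subset size, and all function names are illustrative choices, and homomorphic evaluation is done over the integers without any size reduction.

```python
import random

def centered_mod(a, m):
    """Reduce a modulo m into the interval (-m/2, m/2]."""
    r = a % m
    return r - m if r > m // 2 else r

def keygen(eta=64, gamma=160, rho=8, tau=10):
    # Secret key: a random odd eta-bit integer p.
    p = random.randrange(2 ** (eta - 1), 2 ** eta) | 1
    while True:
        # Public elements x_i = p * q_i + r_i with small noise r_i.
        xs = [p * random.randrange(2 ** gamma // p)
              + random.randrange(-2 ** rho + 1, 2 ** rho)
              for _ in range(tau + 1)]
        xs.sort(reverse=True)
        # Require the largest element x_0 to be odd with even noise.
        if xs[0] % 2 == 1 and centered_mod(xs[0], p) % 2 == 0:
            return p, xs

def encrypt(pk, bit, rho2=16):
    x0, rest = pk[0], pk[1:]
    subset = [x for x in rest if random.random() < 0.5]
    r = random.randrange(-2 ** rho2 + 1, 2 ** rho2)
    return (bit + 2 * r + 2 * sum(subset)) % x0

def decrypt(p, c):
    # Reduce modulo the secret key, then modulo 2.
    return centered_mod(c, p) % 2

sk, pk = keygen()
for b1 in (0, 1):
    for b2 in (0, 1):
        c1, c2 = encrypt(pk, b1), encrypt(pk, b2)
        assert decrypt(sk, c1 + c2) == b1 ^ b2    # addition acts as XOR
        assert decrypt(sk, c1 * c2) == b1 & b2    # multiplication acts as AND
```

With these sizes the noise after one multiplication stays far below `p / 2`, so decryption remains correct. Deeper circuits would eventually exceed that bound, which is the noise-growth limitation discussed for this scheme.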

Security Assumptions

The security of the DGHV scheme is based on the following computational problems and assumptions:

Approximate Greatest Common Divisor (AGCD) Problem: Given a set of integers $x_i = p q_i + r_i$, where $p$ is a secret odd integer and $r_i$ are small noise terms, the AGCD problem requires recovering $p$. This problem is believed to be computationally hard, even for quantum computers.

Sparse Subset Sum Problem (SSSP): The scheme also relies on the hardness of the SSSP, which involves finding a sparse subset of integers that sums to a specific value.

Circular Security: The DGHV scheme assumes circular security, meaning that the encryption of the secret key p does not compromise the overall security of the scheme.

Limitations and Drawbacks

Despite its theoretical significance, the DGHV scheme has several practical limitations:

Large Public Key Size: The public key consists of integers, each of which is significantly larger than the secret key p. This results in large public key size, which can be impractical for real-world applications.

High Computational Complexity: The encryption and decryption processes involve arithmetic operations on large integers, leading to high computational overheads.

Noise Growth: While the scheme supports homomorphic operations, the noise in the ciphertext grows with each operation, eventually requiring bootstrapping to maintain correctness. However, bootstrapping in the DGHV scheme is computationally expensive.

3.3.3. Second Generation: FHE Based on LWE and RLWE

Brakerski and Vaikuntanathan introduced two Fully Homomorphic Encryption (FHE) schemes, marking the beginning of the second generation of FHE. These schemes are based on the Learning With Errors (LWE) problem [34] and the Ring Learning With Errors (RLWE) problem [8]. The symmetric scheme based on LWE, referred to as the BV scheme, and its RLWE-based counterpart, the BGV scheme, are foundational to modern FHE. Below, we provide a detailed description of these schemes, their algorithms, and their key innovations.

BV Scheme: LWE-Based FHE

The BV scheme is based on the LWE problem, which involves solving linear equations perturbed by small noise. The scheme consists of the following algorithms:

Encryption:

- To encrypt a bit:
  - A random vector is generated.
  - The ciphertext is formed as a pair consisting of this random vector and a computed value that includes the inner product with the secret key, a small noise term, and the message.
  - The noise term is sampled from a predefined error distribution.

Decryption:

- To decrypt:
  - The inner product of the random vector and the secret key is subtracted from the second component of the ciphertext.
  - The result is reduced modulo the underlying modulus, and then modulo 2 to recover the message.
- Decryption works correctly as long as the error introduced during encryption remains below a specified threshold.
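A minimal sketch of this symmetric LWE encryption follows. The dimension, modulus, noise bound, and function names are toy assumptions, and the noise is doubled before being added so that it vanishes modulo 2.

```python
import random

# Toy symmetric LWE bit encryption (illustrative parameters, not secure).
n = 16                 # LWE dimension
q = 2 ** 15 + 3        # odd modulus

def inner(a, s):
    return sum(ai * si for ai, si in zip(a, s))

def keygen():
    return [random.randrange(q) for _ in range(n)]

def encrypt(s, bit, bound=4):
    a = [random.randrange(q) for _ in range(n)]       # random vector
    e = random.randint(-bound, bound)                 # small noise term
    b = (inner(a, s) + 2 * e + bit) % q
    return a, b

def decrypt(s, ct):
    a, b = ct
    v = (b - inner(a, s)) % q          # remove the mask
    if v > q // 2:                     # centre into (-q/2, q/2]
        v -= q
    return v % 2                       # the noise 2e vanishes modulo 2

def add(c1, c2):
    return ([(x + y) % q for x, y in zip(c1[0], c2[0])], (c1[1] + c2[1]) % q)

s = keygen()
assert decrypt(s, encrypt(s, 1)) == 1
assert decrypt(s, add(encrypt(s, 1), encrypt(s, 1))) == 0   # XOR of the bits
```

Decryption stays correct only while the accumulated value `2e + bit` remains below `q / 2` in absolute value, which is the threshold mentioned above.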

The BV scheme introduced two critical techniques to enable fully homomorphic encryption:

Relinearization (Key-Switching): This technique reduces the size of ciphertexts after homomorphic multiplication. Specifically, it reduces the ciphertext size from $O(n^2)$ to $O(n)$, where $n$ is the dimension of the LWE problem.

Dimension-Modulus Reduction (Modulus Switching): This technique transforms a ciphertext $c \bmod q$ into a ciphertext $c' \bmod p$, where $p < q$. Modulus switching reduces the noise growth during homomorphic operations, enabling deeper computations without bootstrapping.

By eliminating the need for Gentry’s squashing technique as well as the reliance on the Sparse Subset Sum Problem (SSSP), the BV scheme becomes more efficient and practical.

BGV Scheme: RLWE-Based FHE

The BGV scheme extends the BV framework to the Ring Learning With Errors (RLWE) setting, offering improved efficiency and scalability. The scheme operates over the ring $R = \mathbb{Z}[x]/(\Phi_M(x))$, where $\Phi_M(x)$ is the $M$-th cyclotomic polynomial for some integer $M$. The BGV scheme is defined as follows:

Key Generation:

- The secret key is a vector consisting of the constant 1 and a small random element sampled from an error distribution.
- The public key is a pair of ring elements. One element is chosen at random, and the other is computed by multiplying the chosen element with the secret part of the key and adding a small error, then reducing modulo a fixed modulus.

Encryption:

- The message is encoded as a two-component vector, placing the bit to be encrypted in the first component.
- The ciphertext is computed by adding the encoded message, a small random error vector, and a product involving the public key and a random ring element.

Decryption:

- Decryption involves computing the inner product between the ciphertext and the secret key.
- The message is recovered by reducing the result modulo 2.
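The steps above can be sketched as follows. This is a toy version under stated assumptions: the ring is taken to be $\mathbb{Z}_Q[x]/(x^N + 1)$ with $N = 8$, polynomials are plain coefficient lists, the small distribution is uniform on $\{-1, 0, 1\}$, and all names are illustrative.

```python
import random

N = 8                  # ring degree: arithmetic modulo x^N + 1
Q = 2 ** 20 + 7        # odd ciphertext modulus

def poly_add(a, b):
    return [(x + y) % Q for x, y in zip(a, b)]

def poly_mul(a, b):
    # Negacyclic convolution: x^N is replaced by -1.
    res = [0] * N
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            if i + j < N:
                res[i + j] += ai * bj
            else:
                res[i + j - N] -= ai * bj
    return [c % Q for c in res]

def small():
    return [random.randint(-1, 1) for _ in range(N)]

def keygen():
    s = small()                                   # secret key is (1, s)
    a = [random.randrange(Q) for _ in range(N)]   # random ring element
    b = poly_add(poly_mul(a, s), [2 * x for x in small()])
    return s, (b, a)

def encrypt(pk, bits):
    b, a = pk
    r, e1, e2 = small(), small(), small()
    c0 = poly_add(poly_add(poly_mul(b, r), [2 * x for x in e1]), bits)
    c1 = poly_add([-x for x in poly_mul(a, r)], [2 * x for x in e2])
    return c0, c1

def decrypt(s, ct):
    # Inner product of (c0, c1) with (1, s), centred, then reduced modulo 2.
    v = poly_add(ct[0], poly_mul(ct[1], s))
    return [(x - Q if x > Q // 2 else x) % 2 for x in v]

s, pk = keygen()
m = [1, 0, 1, 1, 0, 0, 1, 0]
assert decrypt(s, encrypt(pk, m)) == m
```

Each ciphertext here packs `N` bits, one per coefficient, which hints at why the ring setting is more efficient than encrypting one bit per LWE sample.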

Brakerski introduced a scale-invariant variant of the BGV scheme [25], achieved by scaling down both the ciphertext and the error by a factor of $q$, where $q$ is the ciphertext modulus, thus reducing the noise growth per homomorphic multiplication from quadratic to linear. This innovation replaces the traditional modulus switching technique, further improving the scheme’s efficiency.

FV Scheme: Optimized RLWE-Based FHE

The FV scheme, an optimized version of the BGV scheme, was introduced by Fan and Vercauteren [23]. It is designed for efficient modular arithmetic on encrypted integers and is implemented in Microsoft’s SEAL library [35]. The FV scheme operates as follows:

Key Generation:

- The secret key is a small random element sampled from a noise distribution.
- The public key consists of two ring elements:
  - One is computed using the secret key and an additional small noise term.
  - The other is a random ring element.

Encryption:

- To encrypt a message, several small random elements are sampled.
- The ciphertext is a pair of ring elements:
  - One includes the message scaled by a modulus ratio, combined with a noisy term and one part of the public key.
  - The other is formed similarly using the second part of the public key and additional noise.

Decryption:

- Decryption computes a linear combination of the ciphertext components using the secret key.
- The result is scaled down and reduced to recover the original message.
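A toy sketch of these steps is given below, assuming the ring $\mathbb{Z}_Q[x]/(x^N + 1)$, a plaintext modulus `T = 16`, and a scaling factor `DELTA = Q // T`. These parameters and all function names are illustrative assumptions and do not reflect the SEAL API.

```python
import random

N, Q, T = 8, 2 ** 20 + 7, 16     # ring degree, ciphertext and plaintext moduli
DELTA = Q // T                   # modulus ratio used to scale the message

def poly_add(a, b):
    return [(x + y) % Q for x, y in zip(a, b)]

def poly_mul(a, b):
    # Multiplication modulo x^N + 1 (x^N wraps around with a sign flip).
    res = [0] * N
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            if i + j < N:
                res[i + j] += ai * bj
            else:
                res[i + j - N] -= ai * bj
    return [c % Q for c in res]

def small():
    return [random.randint(-1, 1) for _ in range(N)]

def keygen():
    s = small()
    a = [random.randrange(Q) for _ in range(N)]
    pk0 = [(-x) % Q for x in poly_add(poly_mul(a, s), small())]   # -(a*s + e)
    return s, (pk0, a)

def encrypt(pk, m):
    u, e1, e2 = small(), small(), small()
    c0 = poly_add(poly_add(poly_mul(pk[0], u), e1), [DELTA * x for x in m])
    c1 = poly_add(poly_mul(pk[1], u), e2)
    return c0, c1

def decrypt(s, ct):
    v = poly_add(ct[0], poly_mul(ct[1], s))          # DELTA * m + small noise
    return [((T * x + Q // 2) // Q) % T for x in v]  # scale down and round

def add(c1, c2):
    return poly_add(c1[0], c2[0]), poly_add(c1[1], c2[1])

s, pk = keygen()
m1 = [1, 2, 3, 4, 5, 6, 7, 8]
m2 = [15, 0, 9, 4, 11, 3, 2, 8]
assert decrypt(s, add(encrypt(pk, m1), encrypt(pk, m2))) == \
    [(x + y) % T for x, y in zip(m1, m2)]
```

Unlike the BGV sketch, where the message sits in the low-order bits, the message here occupies the high-order part of each coefficient and the noise the low-order part, so decryption rounds instead of reducing modulo 2.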

The BGV and FV schemes have been further enhanced through techniques such as batching, modulus switching, and improved bootstrapping. These optimizations enable their integration into practical cryptographic libraries like HElib [36] and SEAL [35], making them suitable for applications such as privacy-preserving machine learning and secure computation.

3.3.4. Second Generation: FHE Based on NTRU

The NTRU encryption scheme, introduced by Hoffstein et al. in 1998 [37], is a lattice-based cryptosystem that has played a significant role in the development of post-quantum cryptography. The scheme was initially proposed with a provisional patent, which was later granted in 2000 [38]. The NTRU-based FHE scheme is often referred to as the LTV scheme in the literature [39], and it incorporates techniques such as bootstrapping and modulus switching to achieve fully homomorphic functionality.

The NTRU scheme operates over the ring $R = \mathbb{Z}[x]/(x^n + 1)$, where $n = 2^m$ for some integer $m$. The scheme consists of three main algorithms: key generation, encryption, and decryption.

Key Generation:

- Sample two small polynomials from a bounded distribution over the ring.
- Construct the secret key by doubling one of the polynomials and adding one, ensuring it is invertible modulo a specified integer.
- Compute the public key by multiplying the second sampled polynomial with the inverse of the secret key and scaling appropriately.
- The public and secret keys form the key pair.

Encryption:

- For a binary message, sample two small polynomials from the error distribution.
- The ciphertext is computed by combining the public key with the sampled polynomials and adding the message.
- All operations are performed modulo a specified integer.

Decryption:

- Multiply the ciphertext by the secret key and reduce modulo the ring and modulo 2.
- Correct decryption is ensured as long as the noise remains within acceptable bounds.

Security Assumptions

The security of the NTRU scheme is based on the following computational problems and assumptions:

Circular Security: The scheme assumes that the encryption of the secret key does not compromise the overall security of the system.

Ring Learning With Errors (RLWE) Problem: The hardness of solving the RLWE problem over the ring R is a fundamental assumption for the security of NTRU.

Decisional Small Polynomial Ratio (DSPR) Problem: This problem involves distinguishing between a random polynomial and a ratio of two small polynomials in the ring R. The DSPR problem is believed to be computationally hard, even for quantum computers.

Limitations and Vulnerabilities

Despite its initial promise, the NTRU scheme has several limitations and vulnerabilities that have impacted its practical adoption:

Parameter Sizing: To achieve security against known attacks, the parameters of NTRU-based schemes must be significantly larger than those initially proposed. This results in larger key sizes and reduced efficiency.

Error Rates: Research by Lepoint and Naehrig [40] has shown that RLWE-based schemes exhibit lower error rates compared to NTRU-based schemes. This makes RLWE-based schemes more robust for practical applications.

Attacks: Numerous attacks have been documented against NTRU-based schemes, including lattice reduction attacks and subfield attacks. While parameters have been improved to mitigate these attacks, the resulting schemes are less efficient than their RLWE counterparts.

Due to the aforementioned limitations, NTRU-based schemes are no longer widely used or supported by cryptographic libraries. The efficiency and security advantages of RLWE-based schemes have made them the preferred choice for lattice-based cryptography in both academic research and practical implementations.

3.3.5. Third Generation: FHE Based on LWE and RLWE

The third generation of homomorphic encryption introduced a novel approach known as the approximate eigenvector method [41]. This method significantly advances the field by eliminating the need for key and modulus switching techniques, which were critical in earlier schemes such as BGV and FV. A key advantage of this approach is its ability to control error growth during homomorphic multiplications, limiting it to a small polynomial factor. Specifically, when multiplying $l$ ciphertexts with the same error level, the final error increases by a factor of $l \cdot \mathrm{poly}(n)$, where $n$ represents the dimension of the encryption scheme or lattice. This is a substantial improvement over previous schemes, where error growth was quasi-polynomial. This third-generation scheme is commonly referred to as the GSW scheme, named after its inventors Gentry, Sahai, and Waters. Below, we provide a detailed description of the GSW scheme, its algorithms, and its optimizations.

GSW Scheme: Approximate Eigenvector Method

The GSW scheme is based on the Learning With Errors (LWE) problem and employs an approximate eigenvector approach to achieve fully homomorphic encryption. The scheme consists of the following algorithms:

Key Generation:

- Generate a secret key as a vector starting with 1 followed by randomly chosen integers.
- Construct a public matrix such that its product with the secret key yields a small error vector.

Encryption:

- Represent the message as a scalar multiple of the identity matrix.
- Generate a random binary matrix and compute the ciphertext as the sum of the message matrix and the product of this random matrix with the public key.
- The randomness in the binary matrix introduces encryption noise.

Decryption:

- Multiply the ciphertext with the secret key to retrieve the message component, while the noise term remains small.
- Use the first coordinate of the resulting vector to approximate and recover the original message.
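The following toy sketch follows the bit-decomposition formulation of GSW, in which ciphertext rows are flattened to binary and decryption uses the powers-of-two expansion of the secret key. The parameters (`n`, `ell`, `m`) and all helper names are assumptions for illustration; it also includes a ciphertext product so that the effect of flattening can be checked.

```python
import random

n, ell = 4, 16             # LWE dimension and bit length of the modulus
q = 2 ** ell
N = (n + 1) * ell          # ciphertexts are N x N binary matrices
m = 20                     # number of LWE samples in the public matrix

def bit_decomp(vec):       # Z_q^(n+1) -> {0,1}^N, little-endian bits
    return [(x >> j) & 1 for x in vec for j in range(ell)]

def bit_decomp_inv(row):   # Z^N -> Z_q^(n+1)
    return [sum(row[i * ell + j] << j for j in range(ell)) % q
            for i in range(n + 1)]

def flatten(row):
    return bit_decomp(bit_decomp_inv(row))

def keygen():
    t = [random.randrange(q) for _ in range(n)]
    s = [1] + [(-ti) % q for ti in t]           # secret key starts with 1
    A = []
    for _ in range(m):
        B = [random.randrange(q) for _ in range(n)]
        e = random.randint(-1, 1)
        b = (sum(x * y for x, y in zip(B, t)) + e) % q
        A.append([b] + B)                       # row times s gives small e
    v = [(si << j) % q for si in s for j in range(ell)]   # powers of two of s
    return v, A

def encrypt(A, mu):
    C = []
    for i in range(N):
        R = [random.randrange(2) for _ in range(m)]       # random binary row
        x = [sum(R[k] * A[k][c] for k in range(m)) for c in range(n + 1)]
        x[i // ell] += mu << (i % ell)          # adds row i of mu * identity
        C.append(bit_decomp([y % q for y in x]))
    return C

def decrypt(v, C):
    i = ell - 2                                 # coordinate with v[i] = q / 4
    x = sum(c * vi for c, vi in zip(C[i], v)) % q
    if x > q // 2:
        x -= q
    return round(x / v[i])

def mult(C1, C2):
    out = []
    for row in C1:
        acc = [0] * N
        for k, bit in enumerate(row):
            if bit:
                acc = [a + b for a, b in zip(acc, C2[k])]
        out.append(flatten(acc))
    return out

v, A = keygen()
assert decrypt(v, mult(encrypt(A, 1), encrypt(A, 1))) == 1
assert decrypt(v, mult(encrypt(A, 1), encrypt(A, 0))) == 0
```

Because the left operand is a binary matrix, the product adds at most `N` times the noise of the right operand, which is the source of the slow, asymmetric noise growth of this approach.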

Homomorphic Multiplication and Bootstrapping

The GSW scheme employs bit decomposition for homomorphic multiplication allowing for efficient computation of products of ciphertexts. However, the scheme has some limitations:

High Communication Costs: The ciphertext size in the GSW scheme is relatively large compared to the plaintext, leading to increased communication overhead.

Computational Complexity: The scheme involves complex matrix operations, which can be computationally intensive.

To address these limitations, several optimizations have been proposed:

Arithmetic Bootstrapping: Alperin-Sheriff and Peikert (AP) [42] introduced a bootstrapping algorithm that treats decryption as an arithmetic function rather than a Boolean circuit, significantly reducing computational complexity.

Homomorphic Matrix Operations: Hiromasa et al. [43] extended the GSW scheme to support homomorphic matrix operations, further enhancing its applicability.

Programmable Bootstrapping (PBS): Ducas and Micciancio [44] introduced the FHEW scheme, which incorporates the AP bootstrapping technique and enables programmable bootstrapping. This allows for the homomorphic evaluation of arbitrary functions, including the NAND operation, using lookup tables.

In addition to the GSW scheme, the third generation of homomorphic encryption includes three distinct schemes based on the torus:

TLWE (Torus Learning With Errors): Generalizes the LWE problem to the torus $\mathbb{T} = \mathbb{R}/\mathbb{Z}$.

TRLWE (Torus Ring Learning With Errors): Extends the RLWE problem to the torus, enabling encryption of plaintexts in the ring of integer polynomials R.

TRGSW (Torus Ring GSW): A ring variant of the GSW scheme on the torus, which supports homomorphic operations on encrypted data.

The TRLWE scheme operates over the ring $R = \mathbb{Z}[X]/(X^N + 1)$, where $N$ is a power of 2. The message space is defined as $\mathbb{T}_N[X] = \mathbb{R}[X]/(X^N + 1) \bmod 1$, and the scheme is constructed as follows:

Key Generation:

- Choose a small secret key from a ring of polynomials modulo 2, where coefficients are in $\{0, 1\}$ and polynomials are reduced modulo a cyclotomic polynomial.

Encryption:

- Select a message from the torus $\mathbb{T}$, representing real numbers modulo 1.
- Sample a random masking vector uniformly and add a small error drawn from a bounded distribution.
- Construct the ciphertext as a pair, consisting of the mask and a linear combination of the secret key with the mask, added to the message and the error.

Decryption:

- Use the secret key to compute a linear combination that cancels the mask.
- Subtract this value from the second component of the ciphertext to extract a noisy version of the message.
- Round the result to the nearest valid message point in the torus.
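The same mask, message, and rounding structure can be sketched in the simpler scalar TLWE setting rather than with TRLWE polynomials. In the sketch below the torus is discretized as integers modulo $2^{32}$, the message space is taken to be the four points $\{0, 1/4, 1/2, 3/4\}$, and all names and parameters are assumptions made for illustration.

```python
import random

n = 32                       # number of binary key coefficients
BITS = 32                    # torus elements are stored as integers mod 2^32
MOD = 1 << BITS

def keygen():
    return [random.randrange(2) for _ in range(n)]     # binary secret key

def encode(k):
    # Map k in {0, 1, 2, 3} to the torus point k/4.
    return (k % 4) << (BITS - 2)

def encrypt(s, k, noise=1 << 10):
    a = [random.randrange(MOD) for _ in range(n)]      # uniform mask
    e = random.randint(-noise, noise)                  # small error
    b = (sum(ai * si for ai, si in zip(a, s)) + encode(k) + e) % MOD
    return a, b

def decrypt(s, ct):
    a, b = ct
    phase = (b - sum(ai * si for ai, si in zip(a, s))) % MOD   # message + error
    # Round to the nearest multiple of 1/4 on the torus.
    return ((phase + (1 << (BITS - 3))) >> (BITS - 2)) % 4

def add(c1, c2):
    return ([(x + y) % MOD for x, y in zip(c1[0], c2[0])], (c1[1] + c2[1]) % MOD)

s = keygen()
assert decrypt(s, encrypt(s, 3)) == 3
# Linear combinations of ciphertexts act on the messages modulo 1.
assert decrypt(s, add(encrypt(s, 3), encrypt(s, 2))) == (3 + 2) % 4
```

Only linear operations are available in this form, which matches the limitation noted for TLWE-style ciphertexts.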

Chillotti et al. [45] introduced several optimizations for torus-based FHE schemes, including the ability to generate fresh ciphertexts by linearly combining existing ones. However, non-linear operations remain challenging due to the limitations of TLWE. To address this, the TRGSW scheme was developed, which supports generalized scale-invariant operations and enables efficient switching between TRLWE and TLWE.

3.3.6. Fourth Generation: FHE Based on LWE and RLWE

In 2017, Cheon et al. introduced a groundbreaking Fully Homomorphic Encryption (FHE) scheme [30] specifically designed for approximate arithmetic on real and complex numbers. This scheme, originally named HEAAN (Homomorphic Encryption for Arithmetic of Approximate Numbers), is now commonly referred to as the CKKS scheme, named after its authors. The CKKS scheme provides a leveled homomorphic encryption framework, enabling efficient computations on encrypted data while maintaining approximate correctness. Additionally, the authors released an open-source library to facilitate the implementation and deployment of this scheme.

The CKKS scheme operates over the ring $R = \mathbb{Z}[X]/(\Phi_M(X))$, where $\Phi_M(X)$ is the $M$-th cyclotomic polynomial for some integer $M$. The scheme is designed to handle approximate arithmetic, making it particularly suitable for applications involving real or complex numbers, such as machine learning and scientific computing. The CKKS scheme consists of the following algorithms:

Key Generation:

- Choose a base value, an initial modulus, and a level parameter.
- Define appropriate distributions for noise and randomness.
- Generate a secret key consisting of a fixed value and a random component.
- Create a public key using a randomly chosen polynomial and an error term.
- Construct an evaluation key using additional randomness and a scaled secret.
- Output the secret key, public key, and evaluation key.

Encryption:

- To encrypt a message, choose random values for masking and noise.
- Use the public key to compute a ciphertext as a pair of noisy polynomials encoding the message.

Decryption:

- Use the secret key to combine the ciphertext components and recover an approximation of the original message.
- The correctness depends on the noise being sufficiently small.

A notable feature of the CKKS scheme is its ability to encode messages as elements in the extension field $\mathbb{C}$, which corresponds to the complex numbers. Specifically:

The message space is defined as $S = \mathbb{R}[X]/(\Phi_M(X))$, where the roots of the polynomial $\Phi_M(X)$ are the complex primitive $M$-th roots of unity in $\mathbb{C}$.

A message can be embedded into a vector of complex numbers by evaluating it at these roots. This allows for efficient encoding and decoding of real and complex numbers, making the CKKS scheme particularly suitable for applications involving approximate arithmetic.
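The embedding can be illustrated without any encryption. The sketch below assumes $M = 8$, so that $\Phi_8(X) = X^4 + 1$, and a scaling factor `DELTA = 2**10`; the function names are illustrative. Encoding maps two complex slots to an integer polynomial, and decoding evaluates that polynomial at the primitive 8th roots of unity.

```python
import cmath

M = 8
N = M // 2                                   # degree of Phi_8(X) = X^4 + 1
DELTA = 2 ** 10                              # scaling factor before rounding
# Primitive M-th roots of unity; ROOTS[N-1-j] is the conjugate of ROOTS[j].
ROOTS = [cmath.exp(2j * cmath.pi * k / M) for k in range(1, M, 2)]

def encode(z, delta=DELTA):
    # Extend the N/2 slots conjugate-symmetrically so coefficients are real.
    full = list(z) + [w.conjugate() for w in reversed(z)]
    coeffs = []
    for k in range(N):
        a = sum(f * r.conjugate() ** k for f, r in zip(full, ROOTS)) / N
        coeffs.append(round(delta * a.real))
    return coeffs

def decode(coeffs, delta=DELTA):
    # Evaluate the polynomial at the first N/2 roots and remove the scale.
    return [sum(c * r ** k for k, c in enumerate(coeffs)) / delta
            for r in ROOTS[:N // 2]]

def poly_mul(a, b):
    # Integer polynomial product modulo X^N + 1.
    res = [0] * N
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            if i + j < N:
                res[i + j] += ai * bj
            else:
                res[i + j - N] -= ai * bj
    return res

z = [1.5 + 0.5j, -2.0 + 1.0j]
w = [0.5 - 1.0j, 1.0 + 2.0j]
approx = decode(encode(z))
assert all(abs(a - b) < 1e-2 for a, b in zip(approx, z))

# Multiplying polynomials multiplies the slots; the scale becomes DELTA^2.
prod = decode(poly_mul(encode(z), encode(w)), DELTA ** 2)
assert all(abs(a - b * c) < 5e-2 for a, b, c in zip(prod, z, w))
```

The rounding in `encode` is the source of the approximation error: results are correct only up to a precision governed by the scaling factor, which is the sense in which the arithmetic is approximate.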

The CKKS scheme offers several advantages over previous FHE schemes:

Approximate Arithmetic: The scheme is specifically designed for approximate computations, making it ideal for applications such as machine learning, where exact precision is not required.

Efficient Encoding: The ability to encode messages as complex numbers allows for efficient representation of real and complex data, reducing the computational overhead associated with homomorphic operations.

Leveled Homomorphism: The CKKS scheme supports leveled homomorphic encryption, enabling a predetermined number of homomorphic operations without the need for bootstrapping. This makes it more efficient for practical applications.

3.4. Security Assumptions of Current FHE Schemes

The security of lattice-based Fully Homomorphic Encryption (FHE) schemes relies on the hardness of several well-studied computational problems in lattice theory. These problems form the foundation of the cryptographic assumptions underlying the security of LWE (Learning With Errors) and RLWE (Ring Learning With Errors)-based schemes. Below, we provide a brief analysis of the various problems and their relevance to FHE. The reader can find more details about the NP-hard problems in [7].

3.4.1. Shortest Vector Problem (SVP)

The Shortest Vector Problem (SVP) is a fundamental problem in lattice theory and serves as the basis for many lattice-based cryptographic schemes. Given a lattice $L$, the SVP involves finding the shortest non-zero vector in $L$. Several variants of the SVP are used in cryptographic constructions [7]:

Approximate Shortest Vector Problem ($\mathrm{SVP}_\gamma$):

- Given a lattice and an approximation factor $\gamma \geq 1$, the goal is to find a short non-zero vector in the lattice.
- The vector should be at most a factor $\gamma$ longer than the actual shortest non-zero vector in the lattice.

Gap Shortest Vector Problem ($\mathrm{GapSVP}_\gamma$):

- Given a lattice, a distance bound, and an approximation factor $\gamma$, the task is to decide whether the shortest non-zero vector is shorter than the bound or significantly longer than it.
- Specifically, determine if the shortest vector is less than the given bound or at least $\gamma$ times larger.

Unique Shortest Vector Problem ($\mathrm{uSVP}_\gamma$):

- Given a lattice and an approximation factor $\gamma$, the goal is to find the shortest non-zero vector assuming it is significantly shorter than all other non-parallel vectors.
- That is, the first shortest vector is unique in that it is strictly shorter than the next shortest one by a factor of $\gamma$.

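The hardness of these problems is crucial for the security of lattice-based FHE schemes. An efficient algorithm for the search version of SVP would also solve the decisional variant, since the length of the vector it returns can be compared with the given bound.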
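In two dimensions the SVP is easy, which makes it a convenient setting for seeing what "shortest vector" means in practice. The sketch below uses Lagrange-Gauss reduction, a classical algorithm that is not discussed in the text above; the function names and the example basis are illustrative assumptions. The hardness relevant to cryptography only appears in high dimensions.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

def gauss_reduce(u, v):
    """Lagrange-Gauss reduction of a basis (u, v) of a 2-D integer lattice.

    Returns a reduced basis whose first vector is a shortest non-zero
    lattice vector.
    """
    if dot(u, u) > dot(v, v):
        u, v = v, u
    while True:
        uu = dot(u, u)
        # Nearest integer to <u, v> / <u, u>, computed with exact integers.
        m = (2 * dot(u, v) + uu) // (2 * uu)
        v = (v[0] - m * u[0], v[1] - m * u[1])
        if dot(v, v) >= uu:
            return u, v
        u, v = v, u

# A deliberately skewed basis of the lattice generated by (3, 1) and (-1, 4).
shortest, second = gauss_reduce((5, 6), (12, 17))
print(shortest, second)
```

Each iteration subtracts the best integer multiple of the shorter vector from the longer one, much like the Euclidean algorithm, and stops once no further shortening is possible.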

3.4.2. Closest Vector Problem (CVP)

The Closest Vector Problem (CVP) is a generalization of the SVP, where the goal is to find the lattice vector closest to a given target vector $\mathbf{t}$. The CVP and its variants are defined as follows [7]:

Approximate Closest Vector Problem ($\mathrm{CVP}_\gamma$):

- Given a lattice and a target point in space, the goal is to find a lattice vector that is close to the target.
- The vector found should be no more than a factor $\gamma$ farther from the target than the actual closest lattice point.

Gap Closest Vector Problem ($\mathrm{GapCVP}_\gamma$):

- Given a lattice, a target point, a distance bound, and an approximation factor $\gamma$, the task is to decide whether the target is very close to the lattice or significantly farther away.
- Specifically, determine whether the distance is less than the given bound or at least $\gamma$ times larger.

Bounded Distance Decoding Problem ($\mathrm{BDD}_\alpha$):

- Given a lattice and a target point that is guaranteed to be close to it, the goal is to find the closest lattice vector.
- The guarantee is that the target lies within a certain fraction $\alpha$ of the shortest non-zero distance between lattice points.

The CVP and its variants are closely related to the SVP and are used in the security analysis of lattice-based cryptographic schemes.
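A small example shows how the quality of the basis affects approximate CVP solving. The sketch below uses Babai's rounding technique in two dimensions, a standard heuristic that is not described in the text above; the function name and the example values are illustrative assumptions.

```python
def babai_round(b1, b2, t):
    """Babai's rounding for a 2-D lattice with basis (b1, b2).

    Writes the target t in basis coordinates, rounds each coordinate to the
    nearest integer, and maps the result back to a lattice point.
    """
    det = b1[0] * b2[1] - b1[1] * b2[0]
    # Solve x*b1 + y*b2 = t by Cramer's rule.
    x = (t[0] * b2[1] - t[1] * b2[0]) / det
    y = (b1[0] * t[1] - b1[1] * t[0]) / det
    x, y = round(x), round(y)
    return (x * b1[0] + y * b2[0], x * b1[1] + y * b2[1])

target = (7.3, 9.1)
print(babai_round((3, 1), (-1, 4), target))    # short, nearly orthogonal basis
print(babai_round((5, 6), (12, 17), target))   # skewed basis of the same lattice
```

With the short basis the rounding lands on the closest lattice point, whereas the skewed basis of the same lattice yields a point roughly twice as far from the target. This gap between good and bad bases is what lattice-based schemes exploit.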

3.4.3. Shortest Integer Solution (SIS) Problem

The Shortest Integer Solution (SIS) problem is another fundamental problem in lattice-based cryptography. It is defined as follows [7]:
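In its standard formulation, which we restate here with assumed notation ($n$, $m$, $q$, $\beta$ and $\mathbf{A}$ are not symbols taken from the text above), the problem reads:

```latex
\text{Given a uniformly random matrix } \mathbf{A} \in \mathbb{Z}_q^{n \times m}
\text{ and a bound } \beta > 0,
\text{ find } \mathbf{z} \in \mathbb{Z}^m \setminus \{\mathbf{0}\}
\text{ such that }
\mathbf{A}\mathbf{z} \equiv \mathbf{0} \pmod{q}
\quad \text{and} \quad
\lVert \mathbf{z} \rVert \le \beta .
```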

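The SIS problem is equivalent to finding a short vector in the scaled dual lattice $\Lambda_q^{\perp}(\mathbf{A})$, the set of integer vectors $\mathbf{z}$ satisfying $\mathbf{A}\mathbf{z} \equiv \mathbf{0} \pmod{q}$ for the matrix $\mathbf{A}$ that defines the instance. It is used in the construction of cryptographic primitives such as digital signatures and hash functions.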

3.4.4. Learning with Errors (LWE) and Ring-LWE

The Learning With Errors (LWE) problem and its ring variant (Ring-LWE) are central to the security of many lattice-based FHE schemes. These problems are defined as follows:
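In the standard formulation, restated here with assumed notation (the symbols $\chi$, $\mathbf{s}$, $\mathbf{a}$, $e$ and the power-of-two ring $R_q$ are illustrative choices, not taken from the text above), the problems read:

```latex
\begin{aligned}
&\textbf{LWE distribution: } \text{for a secret } \mathbf{s} \in \mathbb{Z}_q^{n}
  \text{ and an error distribution } \chi \text{ over } \mathbb{Z}, \\
&\qquad \text{sample } \mathbf{a} \leftarrow \mathbb{Z}_q^{n} \text{ uniformly and } e \leftarrow \chi,
  \text{ and output } \bigl(\mathbf{a},\; b = \langle \mathbf{a}, \mathbf{s} \rangle + e \bmod q \bigr). \\
&\textbf{Search-LWE: } \text{given samples } (\mathbf{a}_i, b_i), \text{ recover } \mathbf{s}. \\
&\textbf{Decision-LWE: } \text{distinguish samples } (\mathbf{a}_i, b_i)
  \text{ from uniform samples over } \mathbb{Z}_q^{n} \times \mathbb{Z}_q. \\
&\textbf{Ring-LWE: } \text{the same with } a, s \in R_q = \mathbb{Z}_q[X]/(X^{N}+1),\;
  e \text{ a small element of } R_q, \text{ and samples } (a,\; b = a \cdot s + e) \in R_q^{2}.
\end{aligned}
```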

The security of LWE- and Ring-LWE-based schemes relies on these problems remaining hard against known attacks, in particular those based on lattice reduction algorithms. As demonstrated by Albrecht et al. [46], there is no universal attack that can efficiently solve all instances of these problems. The effectiveness of lattice reduction attacks depends on the choice of parameters, such as the modulus $q$, the dimension $n$, and the error distribution.
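The role of these parameters can be made concrete with a small Python sketch that generates LWE samples. The function names, the toy parameter values, and the bounded uniform error (standing in for the discrete Gaussian normally used) are assumptions for illustration only.

```python
import random

def lwe_samples(n, q, num, err_bound, rng):
    """Return a secret s and `num` LWE samples (a, b) with b = <a, s> + e mod q."""
    s = [rng.randrange(q) for _ in range(n)]
    samples = []
    for _ in range(num):
        a = [rng.randrange(q) for _ in range(n)]
        # Bounded uniform error; a stand-in for a discrete Gaussian.
        e = rng.randint(-err_bound, err_bound)
        b = (sum(x * y for x, y in zip(a, s)) + e) % q
        samples.append((a, b))
    return s, samples

def residual(sample, s, q):
    """Centered value of b - <a, s> mod q; for the true secret this is the error e."""
    a, b = sample
    r = (b - sum(x * y for x, y in zip(a, s))) % q
    return r - q if r > q // 2 else r

rng = random.Random(1)
secret, samples = lwe_samples(n=16, q=3329, num=5, err_bound=2, rng=rng)
print([residual(smp, secret, 3329) for smp in samples])
```

With the true secret the residuals are small, while for a wrong guess they look uniform modulo $q$. If the error is removed entirely, the secret can be recovered by ordinary linear algebra, which is why the width of the error distribution relative to $q$ and $n$ matters for security.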

For Ring-LWE, the security analysis is similar to that of LWE, but with additional considerations due to the ring structure. According to the Homomorphic Encryption Security Standard, selecting an appropriate error distribution ensures that there are no superior attacks on Ring-LWE compared to LWE. However, the error distribution must be sufficiently spread to maintain security.

The security of lattice-based FHE schemes is grounded in the hardness of problems such as SVP, CVP, SIS, LWE, and Ring-LWE. These problems are believed to be resistant to quantum attacks, making lattice-based cryptography a promising candidate for post-quantum security. While lattice reduction algorithms pose a potential threat, careful parameter selection and error distribution management can mitigate these risks, ensuring the robustness of lattice-based cryptographic schemes.

Lattice-based homomorphic encryption (HE) schemes, such as those based on Ring Learning With Errors (RLWE) and NTRU, have made notable strides in efficiency and security. However, they still face challenges such as large ciphertext expansion and high computational overhead, and their long-term security, including against future quantum attacks, rests entirely on the presumed hardness of lattice problems. To address these limitations, researchers are turning to alternative hardness assumptions. Code-based cryptography, a well-established candidate for post-quantum encryption, offers potential benefits for HE, including robust security and efficiency. Exploring code-based HE schemes may help overcome the shortcomings of lattice approaches while maintaining resistance to quantum threats.