Abstract

Multivariate public-key cryptosystems are potential candidates for post-quantum cryptography. The security of multivariate public-key cryptosystems relies on the hardness of solving a system of multivariate quadratic polynomial equations. Faugère’s F4 algorithm is one of the solution techniques based on the theory of Gröbner bases and selects critical pairs to compose the Macaulay matrix. Because the cost of the algorithm is dominated by row reduction of this matrix, reducing the matrix size is essential. Previous research has not fully examined how many critical pairs are needed before a reduction to zero occurs when the Macaulay matrix is brought to row echelon form. Ito et al. (2021) proposed a new critical-pair selection strategy for solving multivariate quadratic problems associated with encryption schemes. This paper extends their selection strategy to the problems associated with digital signature schemes. Using the OpenF4 library, we compare the software performance of the F4-style algorithm integrating the proposed methods with that of the original F4-style algorithm. Our experimental results demonstrate that the proposed methods can reduce the processing time of the F4-style algorithm by up to a factor of about seven for certain parameters. Moreover, we compute the minimum number of critical pairs needed to produce a reduction to zero and propose an extrapolation outside our experimental scope for further research.

1. Introduction

Shor demonstrated that both the integer factorization problem (IFP) and the discrete logarithm problem (DLP) can be solved in polynomial time on a quantum computer [1]. Rivest–Shamir–Adleman (RSA) public-key cryptography and elliptic curve cryptography (ECC) are widely used, and their security depends on the IFP and DLP, respectively. In recent years, research and development of quantum computers have progressed rapidly. For example, noisy intermediate-scale quantum computers are already in practical use. Since system migration generally takes time, preparing for migration to post-quantum cryptography (PQC) is a significant issue. Research, development, and standardization projects for PQC are ongoing within these contexts. The PQC standardization process was started by the National Institute of Standards and Technology (NIST) in 2016 [2]. Several cryptosystems have been proposed for the NIST PQC project, including lattice-based, code-based, and hash-based cryptosystems. The multivariate public-key cryptosystem (MPKC) is one of the cryptosystems proposed for the NIST PQC project. At the end of the third round, NIST selected four candidates to be standardized, as shown in Table 1, and moved four candidates to the fourth-round evaluation, as shown in Table 2. Moreover, NIST issued a request for proposals of digital signature schemes with short signatures and fast verification [3]. MPKCs, primarily as digital signature schemes, are often more efficient than other public-key cryptosystems, as described in the subsequent paragraphs; therefore, research on the security of MPKCs remains important.

Table 1.

NIST Selected Algorithms 2022.

Table 2.

NIST Round-Four Submissions.

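An MPKC is basically an asymmetric cryptosystem that has a trapdoor one-way multivariate (quadratic) polynomial map over a finite field $\mathbb{F}_q$. Let $\mathcal{F}: \mathbb{F}_q^n \to \mathbb{F}_q^m$ be a (quadratic) polynomial map whose inverse can be computed easily, and let $\mathcal{S}: \mathbb{F}_q^n \to \mathbb{F}_q^n$ and $\mathcal{T}: \mathbb{F}_q^m \to \mathbb{F}_q^m$ be two randomly selected invertible affine linear maps. The secret key consists of $\mathcal{F}$, $\mathcal{S}$, and $\mathcal{T}$. The public key consists of the composite map $\mathcal{P}: \mathbb{F}_q^n \to \mathbb{F}_q^m$ such that $\mathcal{P} = \mathcal{T} \circ \mathcal{F} \circ \mathcal{S}$. The public key can be regarded as a set of m (quadratic) polynomials in n variables:

$$\mathcal{P} = \left(p_1(x_1, \dots, x_n), \dots, p_m(x_1, \dots, x_n)\right),$$

where each $p_i$ is a non-linear (quadratic) polynomial.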

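Multivariate quadratic (MQ) problem: Given m (quadratic) polynomials $p_1, \dots, p_m$ in n variables over $\mathbb{F}_q$, find a solution $x = (x_1, \dots, x_n) \in \mathbb{F}_q^n$ of the system of (quadratic) polynomial equations:

$$p_1(x) = \cdots = p_m(x) = 0.$$

The MQ problem is closely related to the attack that forges signatures for the MPKC.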

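Isomorphism of polynomials (IP) problem: Let $\mathcal{A}$ and $\mathcal{B}$ be two polynomial maps from $\mathbb{F}_q^n$ to $\mathbb{F}_q^m$. Find two invertible affine linear maps $\mathcal{S}: \mathbb{F}_q^n \to \mathbb{F}_q^n$ and $\mathcal{T}: \mathbb{F}_q^m \to \mathbb{F}_q^m$ such that $\mathcal{B} = \mathcal{T} \circ \mathcal{A} \circ \mathcal{S}$.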

Then, the IP problem is closely related to the attack for finding secret keys for the MPKC.

One of the most well-known public-key cryptosystems based on multivariate polynomials over a finite field was proposed by Matsumoto and Imai [12]. Patarin [13] later demonstrated that the Matsumoto–Imai cryptosystem is insecure, proposed the hidden field equation (HFE) public-key cryptosystem by repairing their cryptosystems [14] and designed the Oil and Vinegar (OV) scheme [15]. There are several variations of the HFE and OV schemes, e.g., Kipnis et al. proposed the unbalanced OV (UOV) scheme [16] as described in Section 2.5.

Several MPKCs were proposed for the NIST PQC project [17], e.g., GeMSS [18], LUOV [19], MQDSS [20], and Rainbow [21] MPKCs. Ding et al. found a forgery attack on LUOV [22], and Kales and Zaverucha found a forgery attack on MQDSS [23]. At the end of the second round, NIST selected Rainbow as a third-round finalist and moved GeMSS to an alternate candidate [24].

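MinRank problem: Let r be a positive integer and let $M_1, \dots, M_k$ be k matrices over $\mathbb{F}_q$. Find $x_1, \dots, x_k \in \mathbb{F}_q$ such that $(x_1, \dots, x_k) \neq (0, \dots, 0)$ and

$$\mathrm{rank}\left(\sum_{i=1}^{k} x_i M_i\right) \le r.$$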

The MinRank problem can be reduced to the MQ problem [25,26,27]. By solving the MinRank problem, Tao et al. found a key recovery attack on GeMSS [28], and Beullens found a key recovery attack on Rainbow [29,30].

NIST reported that a third-round finalist of the digital signature scheme, Rainbow, had the property that its signing and verification were efficient, and the signature size was very short [31]. Beullens et al. also demonstrated that the (U)OV scheme performed comparably to the algorithms selected by NIST [32].

As noted above, the security of an MPKC is highly dependent on the hardness of the MQ problem because multivariate polynomial equations are transformed into MQ polynomial equations by increasing the number of variables and equations. To break a cryptosystem, we translate its underlying algebraic structure into a system of multivariate polynomial equations. There are three well-known algebraic approaches to solving the MQ problem: the extended linearization (XL) algorithm proposed by Courtois et al. [33] and the F4 and F5 algorithms proposed by Faugère [34,35]. The XL algorithm is described in Section 2.2. The Gröbner bases algorithm is described in Sections 2.3 and 3.1, and the F4 algorithm is described in Section 3.2.

In addition to the theoretical evaluations of the computational complexity, practical evaluations are also crucial in the research of cryptography, as shown by the many efforts addressing the RSA [36], ECC [37], and Lattice challenges [38]. The Fukuoka MQ challenge project [39,40] was started in 2015 to evaluate the security of the MQ problem. In this project, the MQ problems were classified into encryption and digital signature schemes. Each scheme was then classified into three categories according to the number of quadratic equations (m), the number of variables (n), and the characteristic of the finite field. The encryption schemes were classified into types I to III, which correspond to the condition where $m = 2n$ over $\mathbb{F}_2$, $\mathbb{F}_{2^8}$, and $\mathbb{F}_{31}$, respectively. The digital-signature schemes were classified into types IV to VI, which correspond to the condition where $n \approx 1.5m$ over $\mathbb{F}_2$, $\mathbb{F}_{2^8}$, and $\mathbb{F}_{31}$, respectively. Up to the time of writing this paper, all the best records in the Fukuoka MQ challenge, except type IV, have been set by variants of either the XL algorithm or the F4 algorithm. For example, the authors improved the F4-style algorithm and set new records of both type II and III, as described below, but a variant of the XL algorithm later surpassed the record of type III.

The F4 algorithm proposed by Faugère is an improvement of Buchberger’s algorithm for computing Gröbner bases [41,42], as described in Section 3.2. In Buchberger’s algorithm, it is fundamental to compute the S-polynomial of two polynomials, as described in Section 3.1. The critical pair is defined by a set of data (two polynomials, the least common multiple (LCM) of their leading terms, and two associated monomials) required to compute the S-polynomial, as described in Section 2.3. The F4 algorithm computes many S-polynomials simultaneously using Gaussian elimination. A variant of the F4 algorithm involving these matrix operations is referred to as an F4-style algorithm in this paper. There are several variants of the F4-style algorithm. For example, Joux and Vitse [43] designed an efficient variant algorithm to compute Gröbner bases for similar polynomial systems. Additionally, Makarim and Stevens [44] proposed a variant M4GB algorithm that could reduce the leading and lower terms of a polynomial. Using the M4GB algorithm, they set the best record for the Fukuoka MQ challenge of type VI with up to 20 equations ($m = 20$), at the time of writing this paper.

Recently, Ito et al. [45] also proposed a variant algorithm that could solve the Fukuoka MQ challenge for both types II and III, with up to 37 equations (), and set the best record of type II at the time of writing. In their paper, the following selection strategy for critical pairs was proposed: (a) a set of critical pairs is partitioned into smaller subsets such that ; (b) Gaussian elimination is performed for an associated Macaulay matrix composed of each subset; and (c) the remaining subsets are omitted if some S-polynomials are reduced to zero. Herein, we refer to the subdividing method as (a) and the removal method as (c). Their strategy was then validated only under the following two situations: systems of MQ polynomial equations associated with encryption schemes, i.e., the case and the case. Thus, in this paper, we propose several types of partitions related to the subdividing method and focus on their validity for solving systems of MQ polynomial equations associated with digital-signature schemes, i.e., the case. We evaluate the performance of the proposed methods for the case only because we focus on evaluating the performance of the proposed methods. In other words, before executing the F4-style algorithm combined with the proposed methods, we assume that random values or specific values have already been substituted for some variables in the system, according to the hybrid approach [46].

Our contribution. In general, the size of a matrix affects the computational complexity of Gaussian elimination. Reducing the number of critical pairs is essential because they determine the size of the Macaulay matrix. First, we propose three basic subdividing methods SD1, SD2, and SD3, which have different types of partitions for a set of critical pairs. Then, we integrate both the proposed subdividing methods and the removal method into the OpenF4 library [47] and compare their software performance with that of the original library using similar settings to those of types V and VI of the Fukuoka MQ challenge, i.e., over and over . To validate the removal method, we then verify that neither a temporary base nor critical pair of a higher degree arises from unused critical pairs in omitted subsets. Here, denotes the highest degree of critical pairs appearing in the Gröbner bases computation or the F4-style algorithm. The process by which the degree of a critical pair reaches for the first time is referred to as the first half, and the remaining process is referred to as the second half. Then, our experiments show that a combination of two different basic methods (i.e., SD3 followed by SD1) is faster than all other methods because of the difference between the first and second halves of the computation. Finally, our experiments show that the number of critical pairs that generate a reduction to zero for the first time is approximately constant under the condition where in the sense that a similar number is obtained with a high probability. We also propose two derived subdividing methods (SD4 and SD5) for the first half. The experimental results show that SD4 followed by SD1 is the fastest method and SD5 is as fast as SD4, as long as over and over hold. Moreover, we propose an extrapolation outside the scope of the experiments for further research. Our findings make a unique contribution toward improving the security evaluation of MPKC.

Organization. The remainder of this paper is organized as follows. First, we introduce basic notations and preliminaries in Section 2. Next, we present background to Gröbner bases computation in Section 3. Then, we describe the proposed method in Section 3.4. Afterward, we present the performance result of the proposed method in Section 4. Finally, the paper is concluded in Section 5.

2. Preliminaries

In the following, we define the notations and terminology used in this paper.

2.1. Notations

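Let $\mathbb{N}$ be the set of all natural numbers, $\mathbb{Z}$ the set of all integers, $\mathbb{Z}_{\ge 0}$ the set of all non-negative integers, and $\mathbb{F}_q$ a finite field with q elements. $R$ denotes a polynomial ring of n variables over $\mathbb{F}_q$, i.e., $R = \mathbb{F}_q[x_1, \dots, x_n]$.

A monomial $x^a$ is defined by a product $x_1^{a_1} \cdots x_n^{a_n}$, where $a = (a_1, \dots, a_n)$ is an element of $\mathbb{Z}_{\ge 0}^n$. Furthermore, $M$ denotes the set of all monomials in $R$, i.e., $M = \{x^a \mid a \in \mathbb{Z}_{\ge 0}^n\}$. For $c \in \mathbb{F}_q$ and $u \in M$, we call the product $cu$ a term and c the coefficient of u. $T$ denotes the set of all terms, i.e., $T = \{cu \mid c \in \mathbb{F}_q, u \in M\}$. For a polynomial $f = \sum_i c_i u_i$ with $c_i \in \mathbb{F}_q \setminus \{0\}$ and $u_i \in M$, $T(f)$ denotes the set $\{c_i u_i\}$, and $M(f)$ denotes the set $\{u_i\}$.

The total degree of $x^a$ is defined by the sum $a_1 + \cdots + a_n$, which is denoted by $\deg(x^a)$. The total degree of f is defined by $\max\{\deg(u) \mid u \in M(f)\}$ and is denoted by $\deg(f)$.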

Definition 1.

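A total order ≺ on $M$ is called a monomial order if the following conditions hold:

- (i) $\forall s, t, u \in M$, $t \preceq s \Rightarrow tu \preceq su$,

- (ii) $\forall t \in M$, $1 \preceq t$.

Here, if $s \prec t$ or $s = t$ holds, then we denote $s \preceq t$.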

Definition 2.

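The degree reverse lexicographical order ≺ is defined by

$$x^a \prec x^b \iff \deg(x^a) < \deg(x^b), \ \text{or} \ \deg(x^a) = \deg(x^b) \ \text{and the rightmost nonzero entry of } a - b \text{ is positive}.$$

For example, in $\mathbb{F}_q[x_1, x_2, x_3]$,

$$1 \prec x_3 \prec x_2 \prec x_1 \prec x_3^2 \prec x_2 x_3 \prec x_1 x_3 \prec x_2^2 \prec x_1 x_2 \prec x_1^2.$$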

The degree reverse lexicographical order ≺ is fixed throughout this paper as a monomial order.
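The ordering can be made concrete with a short sketch. It assumes that a monomial is stored as its exponent tuple and that the variables are ranked with the first variable largest; the names `drl_less` and `drl_key` are illustrative and do not come from the paper or from OpenF4.

```python
def drl_less(a, b):
    """Return True if x^a comes strictly before x^b in degree reverse lexicographic order."""
    if sum(a) != sum(b):
        return sum(a) < sum(b)          # lower total degree is always smaller
    # Same total degree: inspect the rightmost variable whose exponents differ.
    for ai, bi in zip(reversed(a), reversed(b)):
        if ai != bi:
            return ai > bi              # larger exponent there means smaller monomial
    return False                        # identical monomials

def drl_key(a):
    """Sort key that induces the same order as drl_less."""
    return (sum(a), tuple(-e for e in reversed(a)))

# All monomials of degree at most 2 in three variables, listed in increasing order.
monomials = [(2, 0, 0), (0, 0, 0), (1, 1, 0), (0, 1, 1), (1, 0, 0),
             (0, 0, 2), (0, 1, 0), (1, 0, 1), (0, 2, 0), (0, 0, 1)]
ordered = sorted(monomials, key=drl_key)
```

Sorting with `drl_key` places the constant monomial first and the square of the first variable last among the degree-2 monomials.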

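For a polynomial $f \in R$, $\mathrm{LM}(f)$ denotes the leading monomial of f, i.e., $\mathrm{LM}(f) = \max_{\prec} M(f)$, and $\mathrm{LT}(f)$ denotes the leading term of f, i.e., $\mathrm{LT}(f) = \max_{\prec} T(f)$. In addition, $\mathrm{LC}(f)$ denotes the corresponding coefficient for $\mathrm{LT}(f)$. A polynomial f is called monic if $\mathrm{LC}(f) = 1$.

For a subset $F \subset R$, $\mathrm{LM}(F)$ denotes the set of leading monomials of polynomials $f \in F$, i.e., $\mathrm{LM}(F) = \{\mathrm{LM}(f) \mid f \in F\}$.

For two monomials $x^a$ and $x^b$ where $a = (a_1, \dots, a_n)$ and $b = (b_1, \dots, b_n)$, their corresponding least common multiple (LCM) and the greatest common divisor (GCD) are defined as $\mathrm{LCM}(x^a, x^b) = x^c$ where $c_i = \max(a_i, b_i)$, and $\mathrm{GCD}(x^a, x^b) = x^d$ where $d_i = \min(a_i, b_i)$.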
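On exponent tuples, the LCM and GCD of two monomials reduce to componentwise maximum and minimum. The following minimal sketch illustrates this; the function names are hypothetical.

```python
def mono_lcm(a, b):
    """LCM of x^a and x^b: componentwise maximum of the exponents."""
    return tuple(max(x, y) for x, y in zip(a, b))

def mono_gcd(a, b):
    """GCD of x^a and x^b: componentwise minimum of the exponents."""
    return tuple(min(x, y) for x, y in zip(a, b))

# x1^2 * x2 and x1 * x2^3 * x3 in three variables.
a, b = (2, 1, 0), (1, 3, 1)
lcm_ab = mono_lcm(a, b)   # exponents of x1^2 * x2^3 * x3
gcd_ab = mono_gcd(a, b)   # exponents of x1 * x2
```

As a consistency check, the exponents of the LCM and the GCD add up to those of the product of the two monomials.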

For two subsets A and B, is defined if holds for and .

2.2. The XL Algorithm

Let D be the parameter of the XL algorithm. Let $F = \{f_1, \dots, f_m\}$ be a set of polynomials over a finite field $\mathbb{F}_q$. The XL algorithm executes the following steps:

- Multiply: Generate all the products $\left(\prod_{j=1}^{k} x_{i_j}\right) f_i$ with $k \le D - 2$.

- Linearize: Consider each monomial in $x_1, \dots, x_n$ of degree $\le D$ as a new variable and perform Gaussian elimination on the linear equations obtained in step 1. The ordering on the monomials must be such that all the terms containing one variable (say $x_1$) are eliminated last.

- Solve: Assume that step 2 yields at least one univariate equation in the powers of $x_1$. Solve this equation over $\mathbb{F}_q$.

- Repeat: Simplify the equations and repeat the process to find the values of the other variables.
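The Multiply step can be sketched as follows. This is an illustration only: polynomials are assumed to be stored as dictionaries from exponent tuples to coefficients, and `monomials_up_to` and `xl_multiply` are hypothetical helper names. Linearization then amounts to treating each distinct exponent tuple as one matrix column.

```python
from itertools import product

def monomials_up_to(n, d):
    """All exponent tuples of monomials in n variables with total degree <= d."""
    return [e for e in product(range(d + 1), repeat=n) if sum(e) <= d]

def xl_multiply(polys, n, D):
    """Multiply step: every input polynomial times every monomial of degree <= D - 2.

    Multiplying by a monomial only shifts exponents, so coefficients are unchanged.
    """
    products = []
    for mono in monomials_up_to(n, D - 2):
        for f in polys:
            products.append({tuple(a + b for a, b in zip(m, mono)): c
                             for m, c in f.items()})
    return products

# Two toy quadratics in three variables: x1*x2 + x3 and x1^2 + x2*x3 + 1.
f1 = {(1, 1, 0): 1, (0, 0, 1): 1}
f2 = {(2, 0, 0): 1, (0, 1, 1): 1, (0, 0, 0): 1}
rows = xl_multiply([f1, f2], n=3, D=4)
columns = {m for r in rows for m in r}   # one column per monomial after linearization
```

For quadratic inputs every product has degree at most D, so the number of columns is bounded by the number of monomials of degree at most D.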

Step 1 is regarded as the construction of the Macaulay matrix with the ordering specified in step 2, as described in Section 3.2. It is difficult to estimate the parameter D in advance. The computational complexity of the XL algorithm is roughly

$$O\left(\binom{n + D}{D}^{\omega}\right),$$

where n is the number of variables and $\omega$ is the linear algebra constant [48].

2.3. Gröbner Bases

The concept of Gröbner bases was introduced by Buchberger [49] in 1979. Computing Gröbner bases is a standard tool for solving simultaneous equations. This section presents the definitions and notations used in Gröbner bases. Methods to compute Gröbner bases are explained in Section 3.

Here, $\langle F \rangle$ denotes an ideal generated by a subset $F \subset R$. $F$ is called a basis of an ideal I if $I = \langle F \rangle$ holds. We refer to G as Gröbner bases of I if for all $f \in I \setminus \{0\}$ there exists $g \in G$ such that $\mathrm{LM}(g) \mid \mathrm{LM}(f)$. To compute Gröbner bases, we need to compute polynomials called S-polynomials.

Here, let $f \in R$ and $G \subset R$. It is said that f is reducible by G if there exist $u \in M(f)$ and $g \in G$ such that $\mathrm{LM}(g) \mid u$. Thus, we can eliminate u from f by computing $f - \frac{cu}{\mathrm{LT}(g)}\, g$, where c is the coefficient of u in f. In this case, g is said to be a reductor of u. If f is not reducible by G, then f is said to be a normal form of G. Repeatedly reducing f using a polynomial of G to obtain a normal form is referred to as normalization, and the function normalizing f using G is represented by $\mathrm{NF}(f, G)$.

For example, let and . First, the term in f is divisible by and is obtained. Next, the term in is divisible by and is obtained. Finally, is the normal form of f by G since is not reducible by G.

A critical pair of two polynomials is defined by the tuple ( , , , , ) such that

For example, let . and . We have . Then, and .

For a critical pair p of , , , and denote , , , , and , respectively.

The S-polynomial, (or ), of a critical pair p of is defined as follows:

and denote and , respectively.
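A small sketch may help fix ideas about critical pairs and S-polynomials. It makes several assumptions that are not taken from the paper: coefficients live in the prime field with 31 elements, polynomials are dictionaries from exponent tuples to coefficients, both sides are scaled to be monic before subtraction (one common convention), and all function names are illustrative.

```python
PRIME = 31  # toy prime field, chosen only for illustration

def drl_key(a):
    """Sort key for the degree reverse lexicographic order on exponent tuples."""
    return (sum(a), tuple(-e for e in reversed(a)))

def leading(f):
    """Leading monomial and its coefficient for f stored as {exponents: coeff}."""
    m = max(f, key=drl_key)
    return m, f[m]

def mul_term(f, mono, c):
    """Multiply f by the term c * x^mono, reducing coefficients mod PRIME."""
    return {tuple(e + s for e, s in zip(m, mono)): (c * v) % PRIME
            for m, v in f.items()}

def sub(f, g):
    """Return f - g with zero coefficients dropped."""
    h = dict(f)
    for m, v in g.items():
        h[m] = (h.get(m, 0) - v) % PRIME
    return {m: v for m, v in h.items() if v}

def critical_pair(g1, g2):
    """Tuple (lcm, t1, g1, t2, g2) with LM(t1*g1) = LM(t2*g2) = lcm."""
    m1, m2 = leading(g1)[0], leading(g2)[0]
    lcm = tuple(max(x, y) for x, y in zip(m1, m2))
    t1 = tuple(x - y for x, y in zip(lcm, m1))
    t2 = tuple(x - y for x, y in zip(lcm, m2))
    return lcm, t1, g1, t2, g2

def s_polynomial(pair):
    """Difference of the two monic multiples, so the common leading term cancels."""
    _, t1, g1, t2, g2 = pair
    inv1 = pow(leading(g1)[1], -1, PRIME)
    inv2 = pow(leading(g2)[1], -1, PRIME)
    return sub(mul_term(g1, t1, inv1), mul_term(g2, t2, inv2))

# g1 = x1*x2 + 1 and g2 = x2^2 + x1 in two variables.
g1 = {(1, 1): 1, (0, 0): 1}
g2 = {(0, 2): 1, (1, 0): 1}
pair = critical_pair(g1, g2)
spoly = s_polynomial(pair)   # x2 - x1^2, i.e. x2 + 30*x1^2 over the toy field
```

Here the LCM of the leading monomials is x1\*x2^2, the multipliers are x2 and x1, and the leading terms cancel in the difference.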

2.4. MQ Problem

Let F be a subset $\{f_1, \dots, f_m\} \subset R$, and let each $f_i$ be a quadratic polynomial (i.e., $\deg(f_i) = 2$). The MQ problem is to compute a common zero $(x_1, \dots, x_n) \in \mathbb{F}_q^n$ for a system of quadratic polynomial equations defined by F, i.e.,

$$f_1(x_1, \dots, x_n) = \cdots = f_m(x_1, \dots, x_n) = 0.$$

The MQ problem is discussed frequently in terms of MPKCs because representative MPKCs, e.g., UOV, Rainbow, and GeMSS, use quadratic polynomials. These schemes are signature schemes and employ a system of MQ polynomial equations under the condition where $m < n$.

The computation of Gröbner bases is a fundamental tool for solving the MQ problem. If $m > n$, the system of F tends to have no solution or exactly one solution. If the system of F has no solution, $\{1\}$ can be obtained as a Gröbner basis of $\langle F \rangle$. If it has a solution, $(a_1, \dots, a_n)$, then $\{x_1 - a_1, \dots, x_n - a_n\}$ can be obtained as Gröbner bases of $\langle F \rangle$. Thus, it is easy to obtain the solution of the system of F from the Gröbner bases of $\langle F \rangle$.

If , it is generally necessary to compute Gröbner bases with respect to the lexicographic order using a Gröbner-basis conversion algorithm, e.g., FGLM [50]. Another method is to convert the system associated with F to a system of multivariate polynomial equations by substituting random values for some variables and then computing its Gröbner bases. The process is repeated with other random values if there is no solution. This method is called the hybrid approach and typically substitutes random values for variables. Hence, it is important to solve the MQ problem with .
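The control flow of the hybrid approach can be sketched on a toy instance. This sketch is not the method used in the experiments: exhaustive search over the free variables stands in for the Gröbner bases computation, the field is the prime field with 7 elements, and `evaluate` and `hybrid_solve` are hypothetical names.

```python
from itertools import product
import random

def evaluate(f, x, q):
    """Evaluate f, stored as {exponents: coeff}, at the point x over the prime field F_q."""
    total = 0
    for mono, c in f.items():
        term = c
        for xi, e in zip(x, mono):
            term = term * pow(xi, e, q) % q
        total = (total + term) % q
    return total

def hybrid_solve(system, n, q, k, rng, max_tries=1000):
    """Guess the last k variables at random, then solve for the remaining n - k.

    Exhaustive search replaces the Groebner basis step here (assumption made
    only to keep the sketch self-contained). A new guess is drawn whenever the
    specialized system has no solution.
    """
    for _ in range(max_tries):
        guess = tuple(rng.randrange(q) for _ in range(k))
        for head in product(range(q), repeat=n - k):
            x = head + guess
            if all(evaluate(f, x, q) == 0 for f in system):
                return x
    return None

# Underdetermined toy system over F_7 (m = 2, n = 3) with planted zero (1, 2, 3):
# x1*x2 + x3 + 2 = 0 and x1^2 + x2*x3 = 0.
system = [{(1, 1, 0): 1, (0, 0, 1): 1, (0, 0, 0): 2},
          {(2, 0, 0): 1, (0, 1, 1): 1}]
sol = hybrid_solve(system, n=3, q=7, k=1, rng=random.Random(0))
```

Fixing one variable turns the system with two equations in three variables into one with two equations in two variables, which is the shape that the solver in the inner loop has to handle.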

2.5. The (Unbalanced) Oil and Vinegar Signature Scheme

Let be a finite field. Let o, , , and . For a message to be signed, we define a signature of y as follows.

2.5.1. Key Generation

The secret key consists of two parts:

- a bijective affine transformation (coefficients in K),

- m equations:where , , , , and are secret coefficients in K.

The public key is the following m quadratic equations:

- (i)

- Let .

- (ii)

- Compute .

- (iii)

- We have m quadratic equations in n variables:

2.5.2. Signature Generation

- (i)

- We generate such that (1) holds.

- (ii)

- Compute where .

2.5.3. Signature Verification

If (2) is solved, then we find another solution . Thus, we can find another signature of y. Therefore, the difficulty of forging signatures can be related to the difficulty of the MQ problem.

3. Materials and Methods

In this section, we introduce three algorithms to compute Gröbner bases: the Buchberger-style algorithm, the F4-style algorithm proposed by Faugère, and the F4-style algorithm proposed by Ito et al., which is the primary focus of this paper.

3.1. Buchberger-Style Algorithm

In 1979, Buchberger introduced the concept of Gröbner bases and proposed an algorithm to compute them. He found that Gröbner bases can be computed by repeatedly generating S-polynomials and reducing them. Algorithm 1 describes the Buchberger-style algorithm to compute Gröbner bases. First, we generate a polynomial set G and a set of critical pairs P from the input polynomials F. We then repeat the following steps until P is empty: one critical pair p from P is selected, an S-polynomial s is generated, s is reduced to the polynomial h by G, and G and P are updated using h if h is a nonzero polynomial. The Update function (Algorithm 2) is frequently used to update G and P, omitting some redundant critical pairs [51]. If a polynomial h is reduced to zero, then G and P are not updated; thus, the critical pair that generates an S-polynomial to be reduced to zero is redundant. Here, the critical pair selection method that selects the pair with the lowest LCM (referred to as the normal strategy) is frequently employed. If the degree reverse lexicographic order is used as a monomial order, then the critical pair with the lowest degree is naturally selected under the normal strategy.

| Algorithm 1 Buchberger-style algorithm |

| Input:. Output: A Gröbner bases of .

|

| Algorithm 2 Update |

| Input:, P is a set of critical pairs, and . Output: and .

|

3.2. F4-Style Algorithm

The F4 algorithm, which is a representative algorithm for computing Gröbner bases, was proposed by Faugère in 1999, and it reduces S-polynomials simultaneously. Herein, we present an F4-style algorithm with this feature.

Here, let G be a subset of . A matrix in which the coefficients of polynomials in G are represented as corresponding to their monomials is referred to as a Macaulay matrix of G. G is said to be a row echelon form if and for all . The F4-style algorithm reduces polynomials by computing row echelon forms of Macaulay matrices. For example, let as in the fourth paragraph of Section 2.3. We use and to compute . The Macaulay matrix M of is given as follows:

In addition, a row echelon form of M is given as follows:

We can obtain from .
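The link between Macaulay matrices, row echelon forms, and reductions to zero can be illustrated with a self-contained sketch. The assumptions are the same toy conventions as elsewhere: the prime field with 31 elements, dictionaries from exponent tuples to coefficients, and columns sorted from the largest monomial to the smallest. The function names are illustrative and are not OpenF4 calls.

```python
PRIME = 31  # toy prime field, chosen only for illustration

def drl_key(a):
    """Sort key for the degree reverse lexicographic order on exponent tuples."""
    return (sum(a), tuple(-e for e in reversed(a)))

def macaulay_matrix(polys):
    """Columns are the monomials in decreasing order; rows hold the coefficients."""
    monos = sorted({m for f in polys for m in f}, key=drl_key, reverse=True)
    rows = [[f.get(m, 0) % PRIME for m in monos] for f in polys]
    return monos, rows

def row_echelon(rows):
    """Reduced row echelon form over F_PRIME; all-zero rows are dropped."""
    rows = [r[:] for r in rows]
    pivot_row = 0
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        pr = next((i for i in range(pivot_row, len(rows)) if rows[i][col]), None)
        if pr is None:
            continue
        rows[pivot_row], rows[pr] = rows[pr], rows[pivot_row]
        inv = pow(rows[pivot_row][col], -1, PRIME)
        rows[pivot_row] = [(v * inv) % PRIME for v in rows[pivot_row]]
        for i in range(len(rows)):
            if i != pivot_row and rows[i][col]:
                c = rows[i][col]
                rows[i] = [(v - c * w) % PRIME
                           for v, w in zip(rows[i], rows[pivot_row])]
        pivot_row += 1
    return [r for r in rows if any(r)]

# Rows x1*x2^2 + x2 and x1*x2^2 + x1^2 (both sides of one critical pair),
# plus a third row equal to their sum, which must reduce to zero.
a = {(1, 2): 1, (0, 1): 1}
b = {(1, 2): 1, (2, 0): 1}
c = {(1, 2): 2, (2, 0): 1, (0, 1): 1}
monos, rows = macaulay_matrix([a, b])
ech = row_echelon(rows)
zero_rows = 3 - len(row_echelon(macaulay_matrix([a, b, c])[1]))
```

The second row of `ech` encodes x1^2 - x2, the new polynomial produced by the elimination, while the dependent third input contributes only a zero row.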

The F4-style algorithm is described in Algorithm 3. The main process is described in lines 5 to 14, where some critical pairs are selected using the Select function (Algorithm 4), and the polynomials of the pairs are reduced using the Reduction function (Algorithm 5). The Select function selects critical pairs with the lowest degree on the basis of the normal strategy. In particular, the F4-style algorithm selects all critical pairs with the lowest degree. It takes the subset of P and integer d so that and . The Reduction function collects reductors to reduce the polynomials and computes the row echelon form of the polynomial set. In addition, the Simplify function (Algorithm 6) determines the reductor with the lowest degree from the polynomial set obtained during the computation of the Gröbner bases.

| Algorithm 3 F4-style algorithm |

| Input:. Output: A Gröbner basis of .

|

| Algorithm 4 Select |

| Input:. Output:: and .

|

| Algorithm 5 Reduction |

| Input:, and , where . Output: and .

|

| Algorithm 6 Simplify |

| Input:, and , where . Output:.

|

The computational complexity of the F4-style algorithm can be bounded above by the same order of magnitude as that of Gaussian elimination of the Macaulay matrix. The size of the Macaulay matrix of degree D is bounded above by the number of monomials of degree $\le D$, which is equal to

$$\binom{n + D}{D}.$$

The computational complexity of Gaussian elimination is bounded above by $O(N^{\omega})$ if the matrix size is N ($\omega$ is the linear algebra constant). Let $D_{\max}$ denote the highest degree of critical pairs appearing in the Gröbner bases computation. Then, the computational complexity of the F4-style algorithm is roughly

$$O\left(\binom{n + D_{\max}}{D_{\max}}^{\omega}\right).$$

We can reduce the computational complexity by omitting redundant critical pairs.
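As a rough numerical illustration, the bound can be evaluated directly. The sketch assumes that the matrix size is bounded by the number of monomials of degree at most D in n variables, and it treats the linear algebra constant as a free parameter; the default of 3.0 (schoolbook Gaussian elimination) and the function names are assumptions made for illustration.

```python
from math import comb

def num_monomials(n, D):
    """Number of monomials of total degree <= D in n variables."""
    return comb(n + D, D)

def elimination_cost_bound(n, d_max, omega=3.0):
    """Rough operation count: (matrix size) ** omega, ignoring constant factors."""
    return num_monomials(n, d_max) ** omega

size_small = num_monomials(3, 2)     # 10 monomials of degree <= 2 in 3 variables
size_large = num_monomials(15, 4)    # 3876 monomials of degree <= 4 in 15 variables
```

Because the bound grows polynomially in the matrix size, every column or row that can be avoided pays off with exponent omega, which is why omitting redundant critical pairs matters.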

3.3. The Algorithm Proposed by Ito et al.

Redundant critical pairs do not necessarily vanish after applying the Update function. Here, we introduce a method to omit many redundant pairs. We assume that the degree reverse lexicographic order is employed as a monomial order, and the normal strategy is used as the pair selection strategy in the Gröbner bases computation. When solving the MQ problem in the Gröbner bases computation, in many cases, the degree d of the critical pairs changes, as described below.

Herein, the computation until the degree of the selected pair becomes is referred to as the first half. In the first half of the computation, many redundant pairs are reduced to zero. When solving the MQ problem, Ito et al. found that if a critical pair of degree d is reduced to zero, all pairs of degree d stored at that time are also reduced to zero with a high probability. Thus, redundant critical pairs can be efficiently eliminated by ignoring all stored pairs of degree d after the critical pairs of degree d are reduced to zero. Algorithm 7 introduces the above method into Algorithm 3. In Algorithm 7, is the set of pairs with the lowest degree d that are not tested. The subset contains critical pairs selected from , and refers to new polynomials obtained by reducing . If the number of new polynomials is less than the number of selected pairs , a reduction to zero has occurred, and then is deleted.

| Algorithm 7 F4-style algorithm proposed by Ito et al. |

| Input:. Output: A Gröbner basis of .

|

Note that Ito et al. stated that the proposed method was valid for MQ problems associated with encryption schemes, i.e., of type , but other MQ problems, including those of type , were not discussed. Moreover, they set the number of selected pairs to 256 to divide . Hence, they did not guarantee that this subdividing method is optimal.

3.4. Proposed Methods

We explain the subdividing methods and the removal method in Section 3.4.1 and Section 3.4.2, respectively. The proposed methods were integrated into the F4-style algorithm as described in Algorithm 8. The OpenF4 library was used for these implementations. The OpenF4 library is an open-source implementation of the F4-style algorithm and, thus, is suitable for this purpose.

| Algorithm 8 F4-style algorithm integrating the proposed methods |

| Input:. Output: A basis of .

|

| Algorithm 9 SubDividePd |

| Input: and . Output:

|

As mentioned above, the SelectPd function serves to select a subset of all the critical pairs at each step for the reduction part of the F4-style algorithm. Ito et al. proposed a method where they subdivide into smaller subsets as described in Algorithm 9, and perform the Reduction and Update functions for each set consecutively when no S-polynomials are reduced to zero during the reduction. On the other hand, if some S-polynomials reduce to zero during the reduction of a set for the first time, this method ignores the remaining sets and removes them from all the critical pairs.
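The control flow of subdividing followed by removal can be sketched as below. This is a simplified illustration rather than Algorithm 8: `reduce_subset` is a hypothetical callback standing in for the Reduction function, the Update step between subsets is omitted, and a reduction to zero is detected by comparing the number of new polynomials with the number of pairs in the subset.

```python
def process_lowest_degree_pairs(subsets, reduce_subset):
    """Reduce the subsets in order and stop after the first reduction to zero.

    subsets       -- list of lists of critical pairs of the current lowest degree
    reduce_subset -- callback returning the nonzero polynomials obtained from a subset
    Returns the new polynomials and the number of critical pairs that were skipped.
    """
    new_polys = []
    used = 0
    for pairs in subsets:
        reduced = reduce_subset(pairs)
        new_polys.extend(reduced)
        used += 1
        if len(reduced) < len(pairs):
            # Fewer new polynomials than pairs: some S-polynomial reduced to zero,
            # so the remaining subsets are assumed redundant and are dropped.
            break
    skipped = sum(len(s) for s in subsets[used:])
    return new_polys, skipped

# Stub reduction for demonstration: the value 0 plays the role of a reduction to zero.
def stub_reduce(pairs):
    return [p for p in pairs if p != 0]

new_polys, skipped = process_lowest_degree_pairs([[1, 2], [3, 0], [5, 6]], stub_reduce)
```

In the stub run, the second subset produces one polynomial from two pairs, so the third subset is never reduced.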

The authors confirmed that their method was effective in solving the MQ problems under the condition where and only and they did not mention other types, especially , or other subdividing methods.

In our experiments, as described in Section 4.1, we generated the MQ problems () with random polynomial coefficients to have at least one solution in the same manner as the Fukuoka MQ challenges ([40], Algorithm 2 and Step 4 of Algorithm 1), and we assumed that for all input polynomials because such polynomials are obtained with non-negligible probability for experimental purposes. Taking a change in variables into account, the probability is exactly . For example, it is close to 1 for and .

3.4.1. Subdividing Methods

To solve the MQ problems, Ito et al. fixed the number of elements of each to 256, i.e., . In our experiments, we propose three types of subdividing methods:

- SD1:

- The number of elements in () is fixed except .

We set , 256, 512, 768, 1024, 2048, and 4096.

- SD2:

- The number of subdivided subsets is fixed.

We set , 10, and 15.

- SD3:

- The fraction of elements to be processed in the remaining element in is fixed; i.e., and for .

We set , , and .

Furthermore, we propose two subdividing methods based on SD1 in Section 4.2.
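The three partitions can be sketched as list-splitting functions. The rounding rules are assumptions: the text fixes only the subset size (SD1), the number of subsets (SD2), or the fraction of the remaining pairs (SD3), so the choices below (last chunk may be smaller, sizes differing by at most one, rounding up with at least one pair) are illustrative, as are the function and parameter names.

```python
import math

def sd1(pairs, size):
    """SD1: subsets of a fixed size; only the last one may be smaller."""
    return [pairs[i:i + size] for i in range(0, len(pairs), size)]

def sd2(pairs, k):
    """SD2: a fixed number k of subsets whose sizes differ by at most one."""
    q, r = divmod(len(pairs), k)
    out, start = [], 0
    for i in range(k):
        size = q + (1 if i < r else 0)
        if size:                      # skip empty subsets when len(pairs) < k
            out.append(pairs[start:start + size])
        start += size
    return out

def sd3(pairs, fraction):
    """SD3: each subset takes a fixed fraction of the pairs that are still left."""
    out, rest = [], list(pairs)
    while rest:
        take = max(1, math.ceil(len(rest) * fraction))
        out.append(rest[:take])
        rest = rest[take:]
    return out

pairs = list(range(10))
sizes_sd1 = [len(s) for s in sd1(pairs, 4)]
sizes_sd2 = [len(s) for s in sd2(pairs, 3)]
sizes_sd3 = [len(s) for s in sd3(pairs, 0.5)]
```

With ten pairs, SD3 with fraction 0.5 yields subsets that shrink geometrically, so early subsets are large and later ones small, whereas SD1 and SD2 keep the sizes essentially uniform.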

3.4.2. A Removal Method

It is important to skip redundant critical pairs in the F4-style algorithm because it takes extra time to compute reductions of larger matrix sizes. To solve the MQ problems that are defined as systems of m quadratic polynomial equations over n variables, Ito et al. experimentally confirmed that once a reduction to zero occurs for some critical pairs in , nothing but a reduction to zero will be generated for all subsequently selected critical pairs in P in the case of or with the number of polynomials and the number of variables .

We checked Hypothesis 1 through computational experiments.

Hypothesis 1.

If a Macaulay matrix composed of critical pairs has some reductions to zero, i.e., in line 15 in Algorithm 8 with the normal strategy, then all remaining critical pairs s.t. will be reduced to zero with a high probability.

The difference between a measuring algorithm and a checking algorithm is as follows: in the algorithm measuring the software performance of the OpenF4 library and our methods, as defined in Algorithm 8, once a reduction to zero occurs, the remaining critical pairs in are removed. In other words, in such an algorithm, a new next is selected immediately after a reduction to zero. On the other hand, in the algorithm checking Hypothesis 1 as described above, we need to continue reducing all remaining critical pairs and monitor whether reductions to zero are consecutively generated after the first one. However, because the behavior of the checking algorithm needs to match that of the measuring one, every internal state just before processing the remaining critical pairs in the checking algorithm is reset to the state immediately after a reduction to zero.

To check Hypothesis 1, we solved the MQ problems of random coefficients over for the condition where and using Algorithm 8 with SD1. Due to processing times, a hundred samples and fifty samples were generated for each problem for and , respectively. Furthermore, of SD1 was fixed to 1, 16, 32, 256, and 512 for ; ; ; ; and , respectively.

Our programs were terminated normally with about probability. Thus, the experiments showed that Hypothesis 1 was valid with about probability. The remaining events, which coincided with a probability of approximately 0.1, corresponded to an OpenF4 library’s warning concerning the number of temporary basis. Although the warning was output, neither temporary basis (i.e., an element in G, in line 17 of Algorithm 8) nor a critical pair of higher degree arose from unused critical pairs in omitted subsets. Moreover, all outputs of all problems contained the initial values with no errors.

3.5. System Architecture

Our experiments were performed on the following systems as shown in Table 3 and Table 4. In the case of and in Appendix A1, our experiments were performed as shown in Table 4.

Table 3.

Benchmarking system architecture.

Table 4.

System architecture for computing a reduction to zero.

Figure 1 shows our software architecture.

Figure 1.

Software architecture of our system.

4. Results

In this section, we describe the software performance of the proposed methods introduced in Section 3.4. Then, we describe their behavior in the first half of the computation.

4.1. Software Performance Comparisons

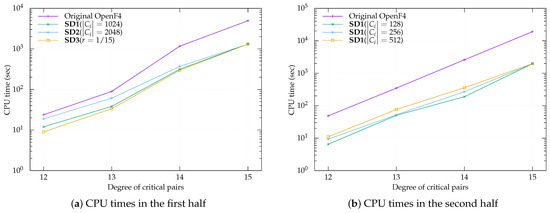

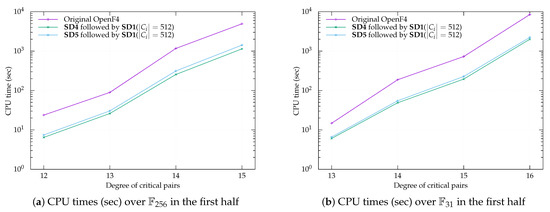

We integrated our proposed methods into the F4-style algorithm using the OpenF4 library version 1.0.1 and compared their software performances, including the original OpenF4. We benchmarked these implementations on the MQ problems similar to the Fukuoka MQ challenge of type V over a base field and type VI over a base field . These problems were defined as MQ polynomial systems of m equations over n variables with , based on the hybrid approach. We experimented with ten samples for each parameter: and over and and over . Note that we could not run these programs for over and for over because the OpenF4 library needs significantly more memory than the RAM installed on our machine. Our results for and over are listed in Table A2 and for and over are listed in Table A3. For the first and second halves of the computation, the top three records produced by the proposed methods and the record by the OpenF4 library are shown in Figure 2a,b and Figure 3a,b.

Figure 2.

Benchmark results over for .

Figure 3.

Benchmark results over for .

These experiments demonstrated three points. First, every run computed a solution that included the initially selected values. Second, the standard deviations () of the CPU times were relatively small. Third, the F4-style algorithms integrating our proposed methods were faster than the original OpenF4 library, e.g., by a factor of up to in the case of SD1 under and over and factor in the case of SD1 under and over . According to these results, we could argue that SD1 is faster than all other methods. However, if we focus only on the first half of the computation, we found that SD3 with may be the fastest in both the cases of and . The reason is discussed in the next section. If we distinguish between the first and second halves, we conclude that it is appropriate to apply SD3 with in the first half and SD1 with in the second half. For example, a combination of SD3 with for the first half and SD1 with for the second half (i.e., SD3 followed by SD1) is faster than the other methods, e.g., by a factor of up to for over .

4.2. The Performance Behavior of the Proposed Methods in the First Half

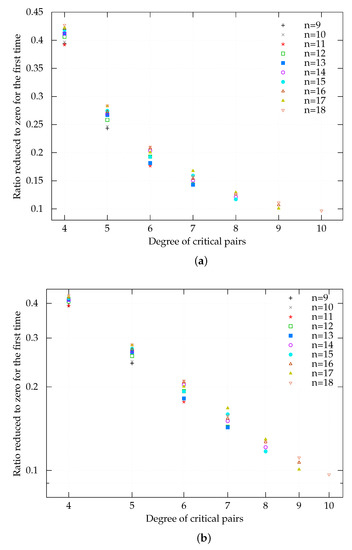

In our experiments, we calculated the CPU time and the number of critical pairs used at each reduction. We found that the minimum number of critical pairs that generates a reduction to zero for the first time is approximately constant. Note, however, that if more than one of the critical pairs arises, we take the maximum number among them.
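To make the notion of a "reduction to zero" concrete, the following toy sketch echelonizes rows one at a time over a small prime field and reports the first row that vanishes after reduction against the rows before it. It is only an illustration: the function name `first_zero_reduction`, the default prime 31, and the dense list-of-lists representation are assumptions made here, and none of it reflects how the OpenF4 library stores or reduces the Macaulay matrix.

```python
def first_zero_reduction(rows, p=31):
    """Return the 1-based index of the first row that reduces to zero
    against the rows before it, or None if every row is independent.

    rows: list of equal-length integer lists (toy Macaulay-matrix rows).
    p:    prime modulus of the coefficient field (illustrative choice).
    """
    basis = {}  # pivot column -> row normalized to have a leading 1 there
    for index, row in enumerate(rows, start=1):
        r = [x % p for x in row]
        for col in range(len(r)):
            if r[col] == 0:
                continue
            if col in basis:
                # Cancel the entry in this column with the stored pivot row.
                factor = r[col]
                r = [(x - factor * y) % p for x, y in zip(r, basis[col])]
            else:
                # New pivot: normalize the row and keep it in the basis.
                inv = pow(r[col], -1, p)
                basis[col] = [(x * inv) % p for x in r]
                break
        else:
            # No pivot was found, so the row was reduced to zero.
            return index
    return None


if __name__ == "__main__":
    toy_rows = [
        [1, 2, 3],
        [0, 1, 4],
        [1, 3, 7],  # sum of the first two rows
        [5, 0, 1],
    ]
    print(first_zero_reduction(toy_rows))  # prints 3
```

In this toy setting, the returned index plays the role of the number of rows needed before the first reduction to zero appears.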

The number of critical pairs that generates a reduction to zero for the first time for each and d is listed in Table A1. The symbol Total in the table represents the number of critical pairs before reducing the Macaulay matrix for each and d. The symbol Min represents the minimum number of critical pairs that generates a reduction to zero for the first time for each and d. The ratio of Min-to-Total is shown in Figure 4a. The log-log scale version of this figure is shown in Figure 4b. Figure 4a shows that the ratios gradually decrease and that the rate of decrease is not constant. These tendencies were likely the reason that SD3 with was useful in the first half. Figure 4b suggests that the error introduced by a linear approximation should be small.
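The Min-to-Total ratio can be recomputed directly from Table A1. The sketch below uses the last entry of each Min/Total row pair, read from top to bottom. It assumes that these last entries belong to the same parameter column and that successive row pairs correspond to successive degrees; the row and column labels themselves are not reproduced here, so the list names are illustrative.

```python
# Last entry of each Min row and each Total row in Table A1, top to bottom.
# Assumption: these entries share one parameter column of the table.
MIN_COUNTS = [18, 60, 189, 550, 1424, 3078, 5814, 10336, 16796]
TOTAL_COUNTS = [18, 60, 445, 1942, 6780, 19614, 45999, 93024, 173774]


def min_to_total_ratios(mins, totals):
    """Return Min/Total for each row pair."""
    if len(mins) != len(totals):
        raise ValueError("mins and totals must have the same length")
    return [m / t for m, t in zip(mins, totals)]


ratios = min_to_total_ratios(MIN_COUNTS, TOTAL_COUNTS)

if __name__ == "__main__":
    for m, t, r in zip(MIN_COUNTS, TOTAL_COUNTS, ratios):
        print(f"{m:>6} / {t:>6} = {r:.4f}")
```

For this column the ratio starts at 1 and never increases from one row pair to the next, which matches the decreasing trend described for Figure 4a.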

Figure 4.

Benchmark results for over . (a) Experimental results of the ratio of critical pairs for a reduction to zero in the first half over and for . (b) Log-log graph of the ratio of critical pairs for a reduction to zero in the first half over and for .

Here, we propose the subdividing method SD4 in the first half as follows.

- SD4:

- The number of elements in the first subset is 1 plus the number Min specified in Table A1. is fixed to a small value in place.
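The sizing rule of SD4 can be sketched as follows. This is a minimal illustration, not the implementation used in the experiments: it assumes that the critical pairs are already ordered by the selection strategy, and the parameter `rest_size` (the size of every subset after the first) is a hypothetical stand-in for the value that is fixed to a small number.

```python
def subdivide_sd4(pairs, min_count, rest_size=1):
    """Split an ordered list of critical pairs in the spirit of SD4.

    pairs:     ordered critical pairs (any Python objects).
    min_count: the value Min taken from Table A1 for the current setting.
    rest_size: hypothetical size of each later subset (assumption).
    """
    if min_count < 0 or rest_size < 1:
        raise ValueError("min_count must be >= 0 and rest_size >= 1")
    # The first subset holds Min + 1 pairs, capped by what is available.
    first_size = min(len(pairs), min_count + 1)
    subsets = [pairs[:first_size]] if first_size else []
    # The remaining pairs are cut into small chunks of rest_size.
    for start in range(first_size, len(pairs), rest_size):
        subsets.append(pairs[start:start + rest_size])
    return subsets


if __name__ == "__main__":
    print(subdivide_sd4(list(range(10)), min_count=3, rest_size=2))
    # prints [[0, 1, 2, 3], [4, 5], [6, 7], [8, 9]]
```

Taking Min + 1 pairs in the first subset is what makes the first reduction to zero expected within that subset.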

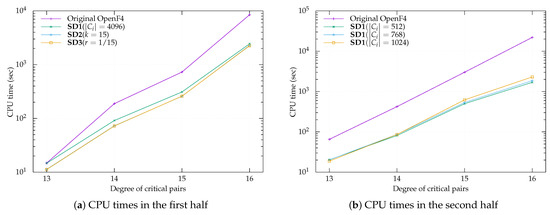

We experimented with SD4 in the first half followed by SD1 with in the second half (i.e., SD4 ⇒ SD1). Our benchmark results of SD4 followed by SD1 are listed in Table A2 and Table A3. For the first half of the computation, the record by SD4 and the record by the OpenF4 library are shown in Figure 5a,b.

Figure 5.

Benchmark results for .

Here, we investigate the approximation of the ratio (r) shown in Figure 4a. The Simplify function outputs the product of such that is a variable and p is a polynomial with a high probability, as stated in the paper ([34], Remark 2.6). Thus, it seems likely that the rows of the Macaulay matrix are composed of the products of where and p is a polynomial with a high probability because of the normal strategy.

Moreover, the elements in the leftmost columns of the Macaulay matrix come from the leading monomials. Hence, it seems reasonable to suppose that the ratio r is approximately related to the number of monomials of degree d:

Accordingly, we assume that r is proportional to a power of d, i.e., (c is a constant), by ignoring lower terms. We have where a and b are constants. Thus, the linear approximations of the graphs in Figure 4b appear to correspond to ignoring the lower terms on the rightmost side of (4). Furthermore, we assume that linear expressions in n approximate both a and b, and we distinguish between the approximations according to whether n is even or odd.
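The step from a monomial count to a straight line on a log-log plot can be sketched as follows. The sketch assumes the standard count of degree-d monomials in n variables and ignores any field equations; it is not a reproduction of the displayed equations, whose exact form is not available here.

```latex
\begin{align*}
\binom{n+d-1}{d}
  &= \frac{(d+1)(d+2)\cdots(d+n-1)}{(n-1)!}
   = \frac{d^{\,n-1}}{(n-1)!} + (\text{lower-order terms in } d), \\
r \propto d^{\,c}
  &\;\Longrightarrow\; \log r = a \log d + b .
\end{align*}
```

Under this reading, a is the exponent of the power law and b absorbs the constant of proportionality, so dropping the lower-order terms is what turns the curve into a line on the log-log scale.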

The linear regression analysis of a shows that

In addition, the regression analysis of b shows that

Then, we add the constant value so that r passes on or above all points in Figure 4b, because the expected number of critical pairs should not be less than the required number.
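The fitting procedure described above (a least-squares line on the log-log scale, followed by an upward shift so that no observed point lies above the line) can be sketched in a few lines. The function name `fit_loglog_upper`, the use of natural logarithms, and the sample data are assumptions for illustration; the sample values are not taken from the experiments.

```python
import math


def fit_loglog_upper(ds, rs):
    """Fit log r = a * log d + b by least squares and return (a, b, shift),
    where shift >= 0 is the smallest constant such that the line
    a * log d + b + shift lies on or above every observed point."""
    xs = [math.log(d) for d in ds]
    ys = [math.log(r) for r in rs]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    # Largest amount by which a point lies above the fitted line.
    shift = max(y - (a * x + b) for x, y in zip(xs, ys))
    return a, b, max(shift, 0.0)


if __name__ == "__main__":
    # Purely illustrative data (hypothetical degrees and ratios).
    sample_d = [3, 4, 5, 6, 7]
    sample_r = [0.40, 0.27, 0.22, 0.15, 0.13]
    a, b, shift = fit_loglog_upper(sample_d, sample_r)
    print(f"a = {a:.3f}, b = {b:.3f}, shift = {shift:.3f}")
```

Adding `shift` to b biases the estimate upward, which is the safe direction here: overestimating the ratio costs some extra rows, whereas underestimating it would miss the first reduction to zero.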

Finally, we propose the subdividing method SD5 in the first half as follows:

- SD5:

- The number of elements in the first subset is multiplied by the number Total specified in Table A1, regarding SD1. is fixed to a small value in place. is defined as follows:

We experimented with SD5 in the first half followed by SD1 with in the second half (i.e., SD5 ⇒ SD1). Our benchmark results of SD5 followed by SD1 are listed in Table A2 and Table A3. For the first half of the computation, the record by SD5 and the record by the OpenF4 library are shown in Figure 5a,b.
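The SD5 sizing rule, an approximated ratio multiplied by Total, can be sketched as below. The regression coefficients are not reproduced here, so `a`, `b`, and `margin` are placeholder parameters, and the power-law form with a natural-logarithm intercept is an assumption of this sketch.

```python
import math


def sd5_first_subset_size(total, d, a, b, margin=0.0):
    """Return the size of the first subset for an SD5-style subdivision.

    total:  number of critical pairs before reduction (Total).
    d:      current degree.
    a, b:   slope and intercept of the log-log approximation (placeholders).
    margin: constant added to the intercept so the estimate is not too small.
    """
    if total < 1:
        raise ValueError("total must be at least 1")
    # Approximated ratio r = d^a * exp(b + margin), capped at 1.
    ratio = min(1.0, d ** a * math.exp(b + margin))
    # Round up and keep the result within [1, total].
    return max(1, min(total, math.ceil(ratio * total)))


if __name__ == "__main__":
    print(sd5_first_subset_size(total=100, d=4, a=-1.0, b=0.0))  # prints 25
```

Unlike SD4, this rule needs no precomputed Min value, only Total and the approximated ratio, which is why it can be evaluated for parameters outside the measured range.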

5. Conclusions and Future Work

The experimental results of our previous study demonstrated that the subdividing method SD1 with and the removal method are valid for solving a system of MQ polynomial equations associated with encryption schemes. In this study, we proposed three basic (SD1, SD2, and SD3) and two extra (SD4 and SD5) subdividing methods for the F4-style algorithm. Our proposed methods considerably improved the performance of the F4-style algorithm by omitting redundant critical pairs using the removal method. Our experimental results validated the effectiveness of these methods in solving a system of MQ polynomial equations under . Furthermore, the experiments revealed that the number of critical pairs that generates a reduction to zero for the first time was approximately constant under . However, we could not estimate the number of critical pairs that generates a reduction to zero for the first time under the condition where over or over because of the limitations of our machine and the OpenF4 library.

As discussed in the derivation of (3), the minimum number of critical pairs that generates a reduction to zero for the first time determines the Macaulay-matrix size, and this size determines the computational complexity of the Gaussian elimination. Thus, the minimum number is expected to be closely related to the computational complexity of our proposed algorithm. Because Table A1 reveals no clear rule for this number, further research is needed, and it can be conducted efficiently once the resources it requires are identified. Therefore, we developed SD5 in the first half of the computation by approximating the Min-to-Total ratio specified in Table A1. SD5 can be applied to the condition where over or over . It should be noted that SD4 can be applied once the number of critical pairs that generates a reduction to zero for the first time under a given condition is computed and identified like that in Table A1. Our future work will mainly investigate a mechanism for generating a reduction to zero.

Author Contributions

Conceptualization, T.K.; methodology, T.K.; software, T.K. and T.I.; formal analysis, T.K.; investigation, T.K.; data curation, T.K.; writing—original draft preparation, T.K.; supervision, N.S., A.Y. and S.U. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Internal Affairs and Communications as part of the research program R&D for Expansion of Radio Wave Resources (JPJ000254).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

We would like to thank the anonymous reviewers for their useful suggestions and valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Experimental results of the number of critical pairs that generates a reduction to zero in the first half for over and are shown in Table A1. Our benchmark results for MQ problems over for are shown in Table A2. Our benchmark results for MQ problems over for are shown in Table A3.

Table A1.

Experimental results of the minimum number of critical pairs that generates a reduction to zero in the first half for over and .

| Min | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |
| Total | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |
| Min | 20 | 24 | 28 | 32 | 36 | 40 | 45 | 50 | 55 | 60 |
| Total | 20 | 24 | 28 | 32 | 36 | 40 | 45 | 50 | 55 | 60 |
| Min | 39 | 50 | 60 | 76 | 91 | 106 | 126 | 146 | 165 | 189 |
| Total | 99 | 126 | 153 | 187 | 221 | 256 | 301 | 347 | 393 | 445 |
| Min | 63 | 88 | 120 | 156 | 204 | 248 | 318 | 378 | 462 | 550 |
| Total | 259 | 354 | 456 | 604 | 764 | 927 | 1158 | 1386 | 1638 | 1942 |
| Min | – | 132 | 187 | 286 | 364 | 532 | 664 | 901 | 1089 | 1424 |
| Total | | 737 | 1059 | 1432 | 2004 | 2612 | 3449 | 4331 | 5443 | 6780 |
| Min | – | – | – | 429 | 572 | 936 | 1300 | 1768 | 2448 | 3078 |
| Total | | | | 3003 | 4004 | 6216 | 8164 | 11,492 | 14,616 | 19,614 |
| Min | – | – | – | – | – | 1430 | 2002 | 3094 | 4590 | 5814 |
| Total | | | | | | 11,804 | 17,108 | 24,480 | 35,496 | 45,999 |
| Min | – | – | – | – | – | – | – | 4862 | 7072 | 10,336 |
| Total | | | | | | | | 45,526 | 70,176 | 93,024 |
| Min | – | – | – | – | – | – | – | – | – | 16,796 |
| Total | | | | | | | | | | 173,774 |

Min: the minimum number of critical pairs for a reduction to zero. Total: the total number of critical pairs appearing before a reduction. † Another NUMA machine that has 3 TB RAM.

Table A2.

Benchmark results for MQ problems over for , total CPU time (s).

[Table body not recoverable from the source. Row groups: Original OpenF4; SD1; SD2; SD3; SD3 followed by SD1; SD4 followed by SD1; SD5 followed by SD1. Each configuration has Average, Before, and After rows.]

σ stands for a standard deviation.

Table A3.

Benchmark results for MQ problems over for , total CPU time (s).

[Table body not recoverable from the source. Row groups: Original OpenF4; SD1; SD2; SD3; SD3+SD1; SD4+SD1; SD5+SD1. Each configuration has Average, Before, and After rows.]

σ stands for a standard deviation.

References

1. Shor, P.W. Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer. SIAM Rev. 1999, 41, 303–332.

2. National Institute of Standards and Technology. Post-Quantum Cryptography: Proposed Requirements and Evaluation Criteria. Available online: https://csrc.nist.gov/News/2016/Post-Quantum-Cryptography-Proposed-Requirements (accessed on 1 December 2022).

3. National Institute of Standards and Technology. New Call for Proposals: Call for Additional: Digital Signature Schemes for the Post-Quantum Cryptography Standardization Process. Available online: https://csrc.nist.gov/projects/pqc-dig-sig/standardization/call-for-proposals (accessed on 1 December 2022).

4. CRYSTALS, Cryptographic Suite for Algebraic Lattices. Available online: https://pq-crystals.org (accessed on 5 February 2023).

5. FALCON, Fast-Fourier Lattice-Based Compact Signatures over NTRU. Available online: https://falcon-sign.info (accessed on 5 February 2023).

6. SPHINCS+, Stateless Hash-Based Signatures. Available online: https://sphincs.org (accessed on 5 February 2023).

7. Aragon, N.; Barreto, P.S.L.M.; Bettaieb, S.; Bidoux, L.; Blazy, O.; Deneuville, J.C.; Gaborit, P.; Ghosh, S.; Gueron, S.; Güneysu, T.; et al. BIKE—Bit Flipping Key Encapsulation. Available online: https://bikesuite.org (accessed on 5 February 2023).

8. Bernstein, D.J.; Chou, T.; Cid, C.; Gilcher, J.; Lange, T.; Maram, V.; von Maurich, I.; Misoczki, R.; Niederhagen, R.; Persichetti, E.; et al. Classic McEliece. Available online: https://classic.mceliece.org (accessed on 5 February 2023).

9. Melchor, C.A.; Aragon, N.; Bettaieb, S.; Bidoux, L.; Blazy, O.; Bos, J.; Deneuville, J.C.; Dion, A.; Gaborit, P.; Lacan, J.; et al. Hamming Quasi-Cyclic (HQC). Available online: http://pqc-hqc.org (accessed on 5 February 2023).

10. Castryck, W.; Decru, T. An Efficient Key Recovery Attack on SIDH (Preliminary Version); Paper 2022/975; Cryptology ePrint Archive. 2022. Available online: https://eprint.iacr.org/2022/975 (accessed on 5 February 2023).

11. The SIKE Team, SIKE and SIDH Are Insecure and Should Not Be Used. Available online: https://csrc.nist.gov/csrc/media/Projects/post-quantum-cryptography/documents/round-4/submissions/sike-team-note-insecure.pdf (accessed on 5 February 2023).

12. Matsumoto, T.; Imai, H. Public Quadratic Polynomial-Tuples for Efficient Signature-Verification and Message-Encryption. In Proceedings of the Advances in Cryptology—EUROCRYPT ’88, Davos, Switzerland, 25–27 May 1988; Barstow, D., Brauer, W., Brinch Hansen, P., Gries, D., Luckham, D., Moler, C., Pnueli, A., Seegmüller, G., Stoer, J., Wirth, N., et al., Eds.; Springer: Berlin/Heidelberg, Germany, 1988; pp. 419–453.

13. Patarin, J. Cryptanalysis of the Matsumoto and Imai Public Key Scheme of Eurocrypt’88. In Proceedings of the Advances in Cryptology—CRYPTO ’95, Santa Barbara, CA, USA, 27–31 August 1995; Coppersmith, D., Ed.; Springer: Berlin/Heidelberg, Germany, 1995; pp. 248–261.

14. Patarin, J. Hidden Fields Equations (HFE) and Isomorphisms of Polynomials (IP): Two New Families of Asymmetric Algorithms. In Proceedings of the Advances in Cryptology—EUROCRYPT ’96, Saragossa, Spain, 12–16 May 1996; Maurer, U., Ed.; Springer: Berlin/Heidelberg, Germany, 1996; pp. 33–48.

15. Patarin, J. The Oil and Vinegar Signature Scheme. Presented at the Dagstuhl Workshop on Cryptography, Dagstuhl, Germany, 22–26 September 1997. Transparencies.

16. Kipnis, A.; Patarin, J.; Goubin, L. Unbalanced Oil and Vinegar Signature Schemes. In Proceedings of the Advances in Cryptology—EUROCRYPT ’99, Prague, Czech Republic, 2–6 May 1999; Stern, J., Ed.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 206–222.

17. National Institute of Standards and Technology. Post-Quantum Cryptography. Available online: https://csrc.nist.gov/projects/post-quantum-cryptography (accessed on 1 December 2022).

18. Casanova, A.; Faugère, J.; Macario-Rat, G.; Patarin, J.; Perret, L.; Ryckeghem, J. GeMSS: A Great Multivariate Short Signature. Available online: https://www-polsys.lip6.fr/Links/NIST/GeMSS_specification.pdf (accessed on 5 February 2023).

19. Beullens, W.; Preneel, B. Field Lifting for Smaller UOV Public Keys. In Proceedings of the Progress in Cryptology-INDOCRYPT 2017—18th International Conference on Cryptology in India, Chennai, India, 10–13 December 2017; pp. 227–246.

20. Chen, M.S.; Hülsing, A.; Rijneveld, J.; Samardjiska, S.; Schwabe, P. From 5-Pass MQ-Based Identification to MQ-Based Signatures. In Proceedings of the Advances in Cryptology—ASIACRYPT 2016, Hanoi, Vietnam, 4–8 December 2016; Cheon, J.H., Takagi, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 135–165.

21. Ding, J.; Schmidt, D. Rainbow, a New Multivariable Polynomial Signature Scheme. In Proceedings of the Applied Cryptography and Network Security, Third International Conference, ACNS 2005, New York, NY, USA, 7–10 June 2005; pp. 164–175.

22. Ding, J.; Deaton, J.; Schmidt, K.; Zhang, Z. Cryptanalysis of the Lifted Unbalanced Oil Vinegar Signature Scheme. In Proceedings of the Advances in Cryptology—CRYPTO 2020, Santa Barbara, CA, USA, 17–21 August 2020; Micciancio, D., Ristenpart, T., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 279–298.

23. Kales, D.; Zaverucha, G. Forgery Attacks on MQDSSv2.0. 2019. Available online: https://csrc.nist.gov/CSRC/media/Projects/Post-Quantum-Cryptography/documents/round-2/official-comments/MQDSS-round2-official-comment.pdf (accessed on 5 February 2023).

24. Moody, D.; Alagic, G.; Apon, D.; Cooper, D.; Dang, Q.; Kelsey, J.; Liu, Y.; Miller, C.; Peralta, R.; Perlner, R.; et al. Status Report on the Second Round of the NIST Post-Quantum Cryptography Standardization Process; NIST Interagency/Internal Report (NISTIR); National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020.

25. Kipnis, A.; Shamir, A. Cryptanalysis of the HFE Public Key Cryptosystem by Relinearization. In Proceedings of the Advances in Cryptology—CRYPTO ’99, Santa Barbara, CA, USA, 15–19 August 1999; Wiener, M., Ed.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 19–30.

26. Faugère, J.C.; El Din, M.S.; Spaenlehauer, P.J. Computing Loci of Rank Defects of Linear Matrices Using Gröbner Bases and Applications to Cryptology. In Proceedings of the 2010 International Symposium on Symbolic and Algebraic Computation, Munich, Germany, 25–28 July 2010; Association for Computing Machinery: New York, NY, USA, 2010; Volume ISSAC ’10, pp. 257–264.

27. Bardet, M.; Bros, M.; Cabarcas, D.; Gaborit, P.; Perlner, R.; Smith-Tone, D.; Tillich, J.P.; Verbel, J. Improvements of Algebraic Attacks for Solving the Rank Decoding and MinRank Problems. In Proceedings of the Advances in Cryptology—ASIACRYPT 2020, Daejeon, Republic of Korea, 7–11 December 2020; Moriai, S., Wang, H., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 507–536.

28. Tao, C.; Petzoldt, A.; Ding, J. Efficient Key Recovery for All HFE Signature Variants. In Proceedings of the Advances in Cryptology—CRYPTO 2021, Virtual Event, 16–20 August 2021; Malkin, T., Peikert, C., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 70–93.

29. Beullens, W. Improved Cryptanalysis of UOV and Rainbow. In Proceedings of the Advances in Cryptology—EUROCRYPT 2021, Zagreb, Croatia, 17–21 October 2021; Canteaut, A., Standaert, F.X., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 348–373.

30. Beullens, W. Breaking Rainbow Takes a Weekend on a Laptop. In Proceedings of the Advances in Cryptology—CRYPTO 2022, Santa Barbara, CA, USA, 15–18 August 2022; Dodis, Y., Shrimpton, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 464–479.

31. Alagic, G.; Cooper, D.; Dang, Q.; Dang, T.; Kelsey, J.; Lichtinger, J.; Liu, Y.; Miller, C.; Moody, D.; Peralta, R.; et al. Status Report on the Third Round of the NIST Post-Quantum Cryptography Standardization Process; NIST Interagency/Internal Report (NISTIR); National Institute of Standards and Technology: Gaithersburg, MD, USA, 2022.

32. Beullens, W.; Chen, M.S.; Hung, S.H.; Kannwischer, M.J.; Peng, B.Y.; Shih, C.J.; Yang, B.Y. Oil and Vinegar: Modern Parameters and Implementations; Paper 2023/059; Cryptology ePrint Archive. 2023. Available online: https://eprint.iacr.org/2023/059 (accessed on 5 February 2023).

33. Courtois, N.; Klimov, A.; Patarin, J.; Shamir, A. Efficient Algorithms for Solving Overdefined Systems of Multivariate Polynomial Equations. In Proceedings of the Advances in Cryptology—EUROCRYPT 2000, International Conference on the Theory and Application of Cryptographic Techniques, Bruges, Belgium, 14–18 May 2000; pp. 392–407.

34. Faugère, J.C. A New Efficient Algorithm for Computing Gröbner Bases (F4). J. Pure Appl. Algebra 1999, 139, 61–88.

35. Faugère, J.C. A New Efficient Algorithm for Computing Gröbner Bases without Reduction to Zero (F5). In Proceedings of the 2002 International Symposium on Symbolic and Algebraic Computation, ISSAC ’02, Lille, France, 7–10 July 2002; Association for Computing Machinery: New York, NY, USA, 2002; pp. 75–83.

36. The RSA Challenge Numbers. Available online: https://web.archive.org/web/20010805210445/http://www.rsa.com/rsalabs/challenges/factoring/numbers.html (accessed on 18 November 2022).

37. The Certicom ECC Challenge. Available online: https://www.certicom.com/content/dam/certicom/images/pdfs/challenge-2009.pdf (accessed on 18 November 2022).

38. TU Darmstadt Lattice Challenge. Available online: https://www.latticechallenge.org (accessed on 18 November 2022).

39. Yasuda, T.; Dahan, X.; Huang, Y.; Takagi, T.; Sakurai, K. A multivariate quadratic challenge toward post-quantum generation cryptography. ACM Commun. Comput. Algebra 2015, 49, 105–107.

40. Yasuda, T.; Dahan, X.; Huang, Y.; Takagi, T.; Sakurai, K. MQ Challenge: Hardness Evaluation of Solving Multivariate Quadratic Problems; Paper 2015/275; Cryptology ePrint Archive. 2015. Available online: https://eprint.iacr.org/2015/275 (accessed on 1 December 2022).

41. Buchberger, B. A Theoretical Basis for the Reduction of Polynomials to Canonical Forms. SIGSAM Bull. 1976, 10, 19–29.

42. Becker, T.; Weispfenning, V. Gröbner Bases: A Computational Approach to Commutative Algebra; Graduate Texts in Mathematics; Springer: New York, NY, USA, 1993.

43. Joux, A.; Vitse, V. A Variant of the F4 Algorithm. In Proceedings of the Topics in Cryptology—CT-RSA 2011, San Francisco, CA, USA, 14–18 February 2011; Kiayias, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 356–375.

44. Makarim, R.H.; Stevens, M. M4GB: An Efficient Gröbner-Basis Algorithm. In Proceedings of the 2017 ACM on International Symposium on Symbolic and Algebraic Computation, ISSAC 2017, Kaiserslautern, Germany, 25–28 July 2017; pp. 293–300.

45. Ito, T.; Shinohara, N.; Uchiyama, S. Solving the MQ Problem Using Gröbner Basis Techniques. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2021, E104.A, 135–142.

46. Bettale, L.; Faugère, J.C.; Perret, L. Hybrid approach for solving multivariate systems over finite fields. J. Math. Cryptol. 2009, 3, 177–197.

47. Joux, A.; Vitse, V.; Coladon, T. OpenF4: F4 Algorithm C++ Library (Gröbner Basis Computations over Finite Fields). Available online: https://github.com/nauotit/openf4 (accessed on 17 May 2021).

48. Yeh, J.Y.C.; Cheng, C.M.; Yang, B.Y. Operating Degrees for XL vs. F4/F5 for Generic MQ with Number of Equations Linear in That of Variables. In Number Theory and Cryptography: Papers in Honor of Johannes Buchmann on the Occasion of His 60th Birthday; Fischlin, M., Katzenbeisser, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 19–33.

49. Buchberger, B. A criterion for detecting unnecessary reductions in the construction of Gröbner bases. In Proceedings of the Symbolic and Algebraic Computation, EUROSAM ’79, An International Symposium on Symbolic and Algebraic Manipulation, Marseille, France, 25–27 June 1979; Lecture Notes in Computer Science. Ng, E.W., Ed.; Springer: Berlin/Heidelberg, Germany, 1979; Volume 72, pp. 3–21.

50. Faugère, J.; Gianni, P.M.; Lazard, D.; Mora, T. Efficient Computation of Zero-Dimensional Gröbner Bases by Change of Ordering. J. Symb. Comput. 1993, 16, 329–344.

51. Gebauer, R.; Möller, H.M. On an installation of Buchberger’s algorithm. J. Symb. Comput. 1988, 6, 275–286.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).