1. Introduction

User’s data privacy concerns in computation outsourcing to cloud servers have gained serious attention over the past couple of decades. To address this challenge, various trusted-hardware based secure processor architectures have been proposed including TPM [1, 2, 3], Intel’s TPM+TXT [4], eXecute Only Memory (XOM) [5, 6, 7], Aegis [8, 9], Ascend [10], Phantom [11], and Intel’s SGX [12]. A trusted-hardware platform receives user’s encrypted data, which is decrypted and computed upon inside the trusted boundary, and finally the encrypted results of the computation are sent to the user.

Although all the data stored outside the secure processor’s trusted boundary, e.g., in the main memory, can be encrypted, information can still be leaked to an adversary through the access patterns to the stored data [13]. Oblivious RAM (ORAM), first proposed by Goldreich and Ostrovsky [14], is a well-established technique to prevent such leakage. ORAM obfuscates the memory access pattern by introducing several randomized ‘redundant’ accesses, thereby preventing privacy leakage at the cost of a significant performance penalty. Intense research over the last few decades has resulted in increasingly efficient and secure ORAM schemes [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28].

A key observation regarding the adversarial model assumed by the current renowned ORAM techniques is that the adversary is capable of learning fine-grained information of all the accesses made to the memory. This includes the information about which location is accessed, the type of operations (read/write), and the time of the access. It is an extremely strong adversarial model which, in most cases, requires direct physical access to the memory address bus in order to monitor both read and write accesses, e.g., the case of a curious cloud server.

On the other hand, for purely remote adversaries (where the cloud server itself is trusted), direct physical access to the memory address bus is not possible thereby preventing them from directly monitoring read/write access patterns. Such remote adversaries, although weaker than adversaries having physical access, can still “learn” the application’s write access patterns. Interestingly, privacy leakage is still possible even if the adversary is able to infer just the write access patterns of an application.

John et al. demonstrated such an attack [29] on the famous Montgomery’s ladder technique [30] commonly used for modular exponentiation in public key cryptography. In this attack, a 512-bit secret key is correctly inferred in just

min by only monitoring the application’s write access pattern via a compromised Direct Memory Access (DMA) device on the system [31, 32, 33, 34, 35] (DMA is a standard performance feature which grants full access of the main memory to certain peripheral buses, e.g., FireWire, Thunderbolt etc.). The adversary collects snapshots of the application’s memory via the compromised DMA. Clearly, any two memory snapshots differ only in the locations where the data has been modified in the latter snapshot. In other words, comparing the snapshots reveals not only that write accesses (if any) have been made to the memory, but also the exact locations of those accesses. This yields a precise access pattern of memory writes, resulting in privacy leakage.
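The snapshot comparison described above can be sketched in a few lines. This is only an illustration of the idea, not the attack code from [29]: the function name `written_locations` and the 64-byte block size are assumptions made for the example.

```python
def written_locations(before: bytes, after: bytes, block_size: int = 64) -> list[int]:
    """Return the indices of blocks whose contents differ between two snapshots.

    block_size is a hypothetical cache-line size chosen for illustration.
    """
    if len(before) != len(after):
        raise ValueError("snapshots must cover the same memory region")
    changed = []
    for offset in range(0, len(before), block_size):
        if before[offset:offset + block_size] != after[offset:offset + block_size]:
            changed.append(offset // block_size)
    return changed


# Two toy snapshots of a 256-byte region: bytes 70 and 200 were modified.
snap0 = bytes(256)
snap1 = bytearray(snap0)
snap1[70] = 0xFF
snap1[200] = 0x01
print(written_locations(snap0, bytes(snap1)))  # [1, 3]
```

Diffing consecutive snapshots in this way gives the adversary the set of blocks written in each interval, which is exactly the write access pattern a write-only ORAM has to hide.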

Recent work [36] demonstrated that DMA attacks can also be launched remotely by injecting malware to the dedicated hardware devices, such as graphic processors and network interface cards, attached to the host platform. This allows even a remote adversary to learn the application’s write access pattern. Intel’s TXT has also been a victim of DMA based attacks where a malicious OS directed a network card to access data in the protected VM [37, 38].

One approach to prevent such attacks, as adapted by TXT, could be to block certain DMA accesses through modifications in DRAM controller. However, this requires the DRAM controller to be included in the trusted computing base (TCB) of the system which is undesirable. Nevertheless, there could potentially be many scenarios other than DMA based attacks where write access patterns can be learned by the adversary.

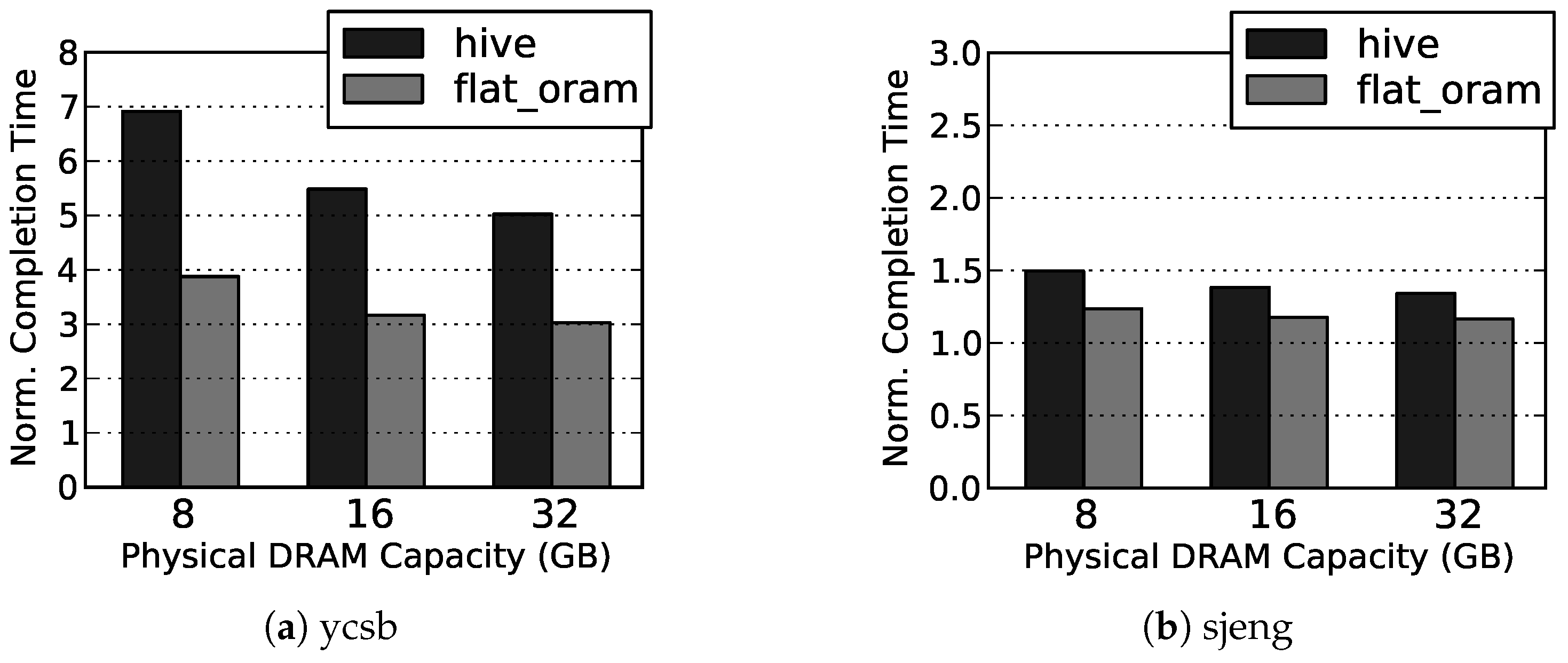

Current full-featured ORAM schemes, which obfuscate both read and write access patterns, also offer a solution against such weaker adversaries. However, the added protection (obfuscation of reads) offered by full-featured ORAMs is unnecessary overkill in this scenario and results in a significant performance penalty. Path ORAM [24], the most efficient and practical fully functional ORAM system for secure processors so far, still incurs about 2–10× performance degradation [10, 39] over an insecure DRAM system. A far more efficient solution to this problem is a write-only ORAM scheme, which only obfuscates the write accesses made to the memory. Since read accesses leave no trace in the memory snapshot and hence do not need to be obfuscated in this model, a write-only ORAM can offer a significant performance advantage over a full-featured ORAM.

A recent work, HIVE [40], has proposed a write-only ORAM scheme for implementing hidden volumes in hard disk drives. The key idea is similar to Path ORAM, i.e., each data block is written to a new random location, along with some dummy blocks, every time it is accessed. However, a fundamental challenge that arises in this scheme is to avoid collisions, i.e., to determine whether a randomly chosen physical location contains real or dummy data, in order to avoid overwriting an existing useful data block. HIVE proposes a complex ‘inverse position map’ approach for collision avoidance. Essentially, it maintains a forward position mapping (logical to physical blocks) and a backward/inverse position mapping (physical to logical blocks) for the whole memory. Before each write, both forward and backward mappings are looked up to determine whether the corresponding physical location is vacant or occupied. This approach, however, turns out to be a storage and performance bottleneck because of the size of the inverse position map and the dual lookups of the position mappings.

A simplistic and obvious solution to this problem would be to use a bit-mask to mark each physical block as vacant or occupied. Based on this intuition, we propose a simplified write-only ORAM scheme called Flat ORAM that adapts the basic construction from HIVE while solving the collisions problem in a much more efficient way. At the core of the algorithm, Flat ORAM introduces a new data structure called Occupancy Map (OccMap): a bit-mask which offers an efficient collision avoidance mechanism. The OccMap records the availability information (occupied/vacant) of every physical location in a highly compressed form (i.e., just 1-bit per cache line). For typical parameter settings, OccMap is about compact compared to HIVE’s inverse position map structure. This dense structure allows the OccMap blocks to exploit higher locality, resulting in considerable performance gain. While naively storing the OccMap breaks the ORAM’s security, we present how to securely store and manage this structure in a real system. In particular, the paper makes the following contributions:

We are the first ones to implement an existing write-only ORAM scheme, HIVE, in the context of secure processors with all state-of-the-art ORAM optimizations, and analyze its performance bottlenecks.

A simple write-only ORAM, named Flat ORAM, having an efficient collision avoidance approach is proposed. The micro-architecture of the scheme is discussed in detail and the design space is comprehensively explored. It has also been shown to seamlessly adopt various performance optimizations of its predecessor: Path ORAM.

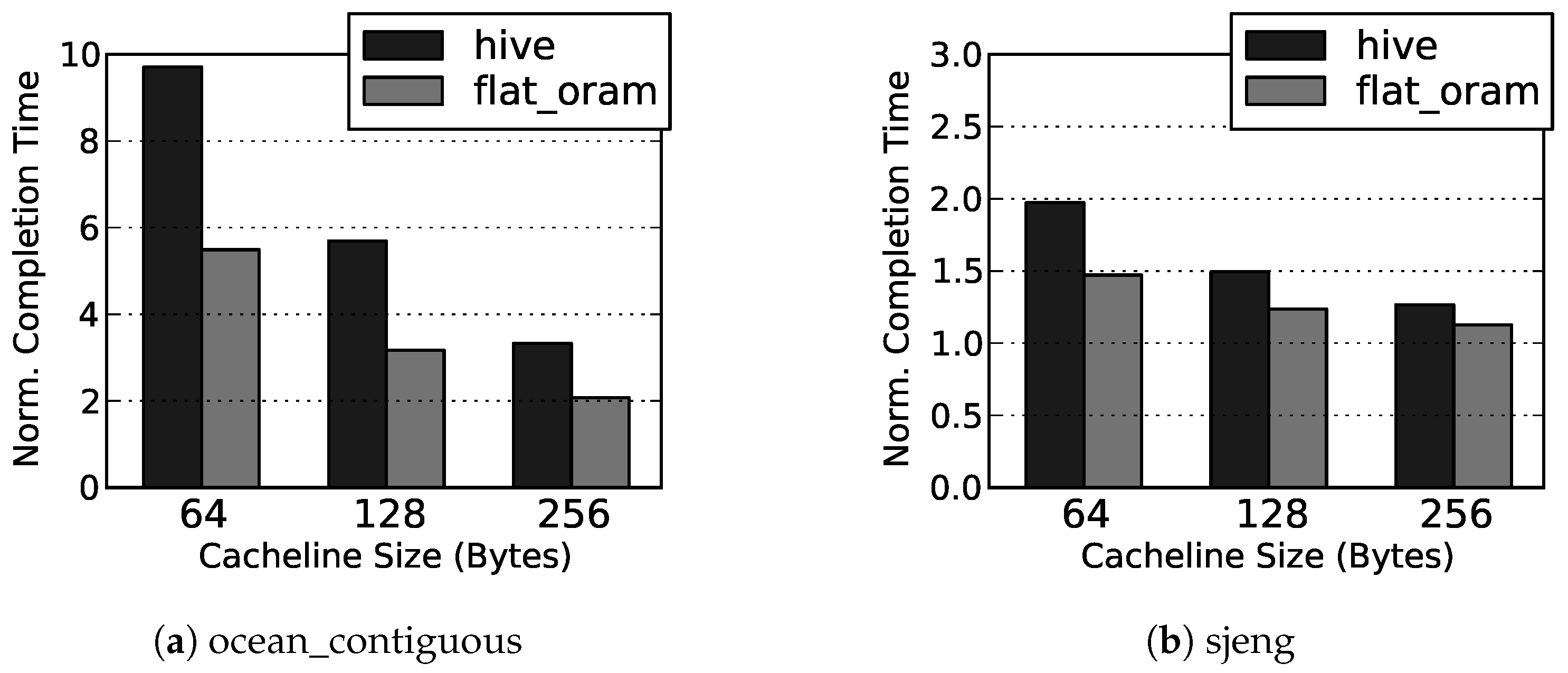

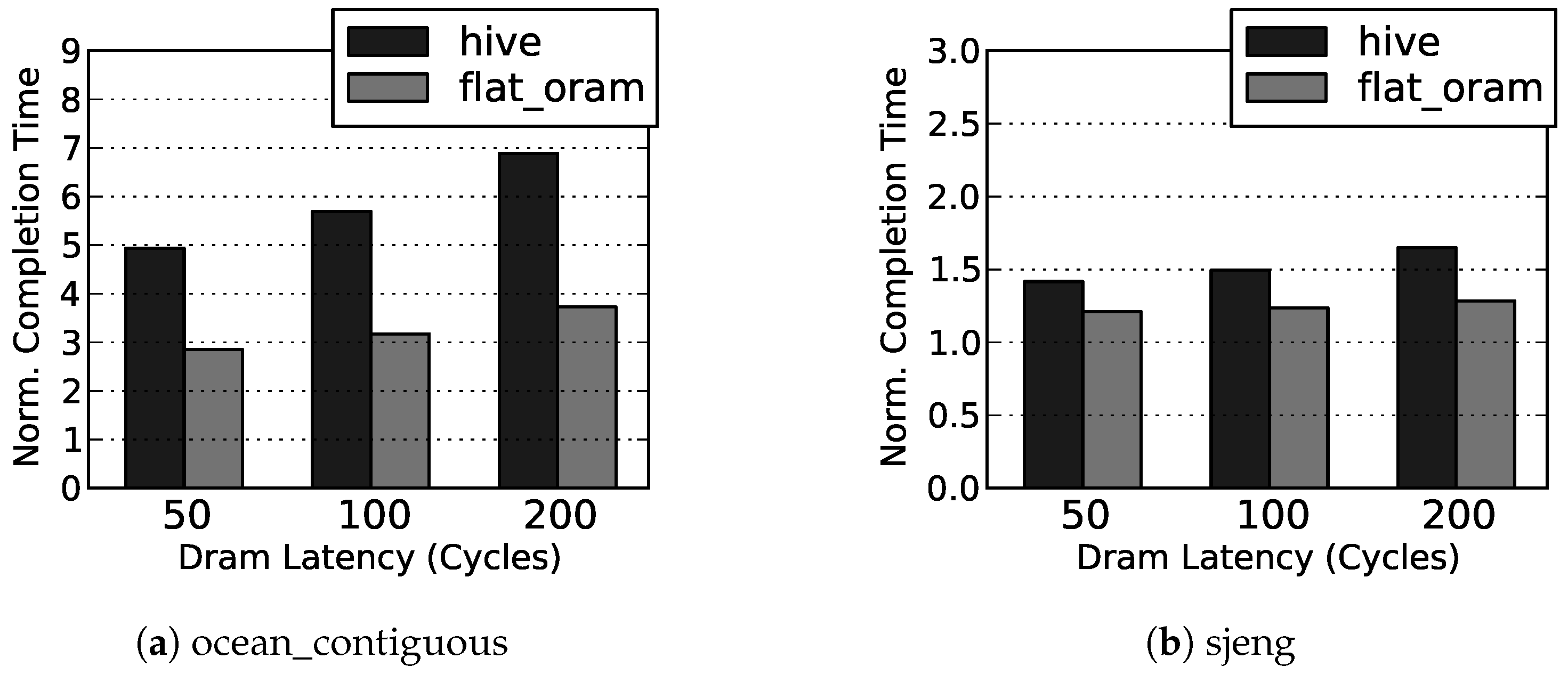

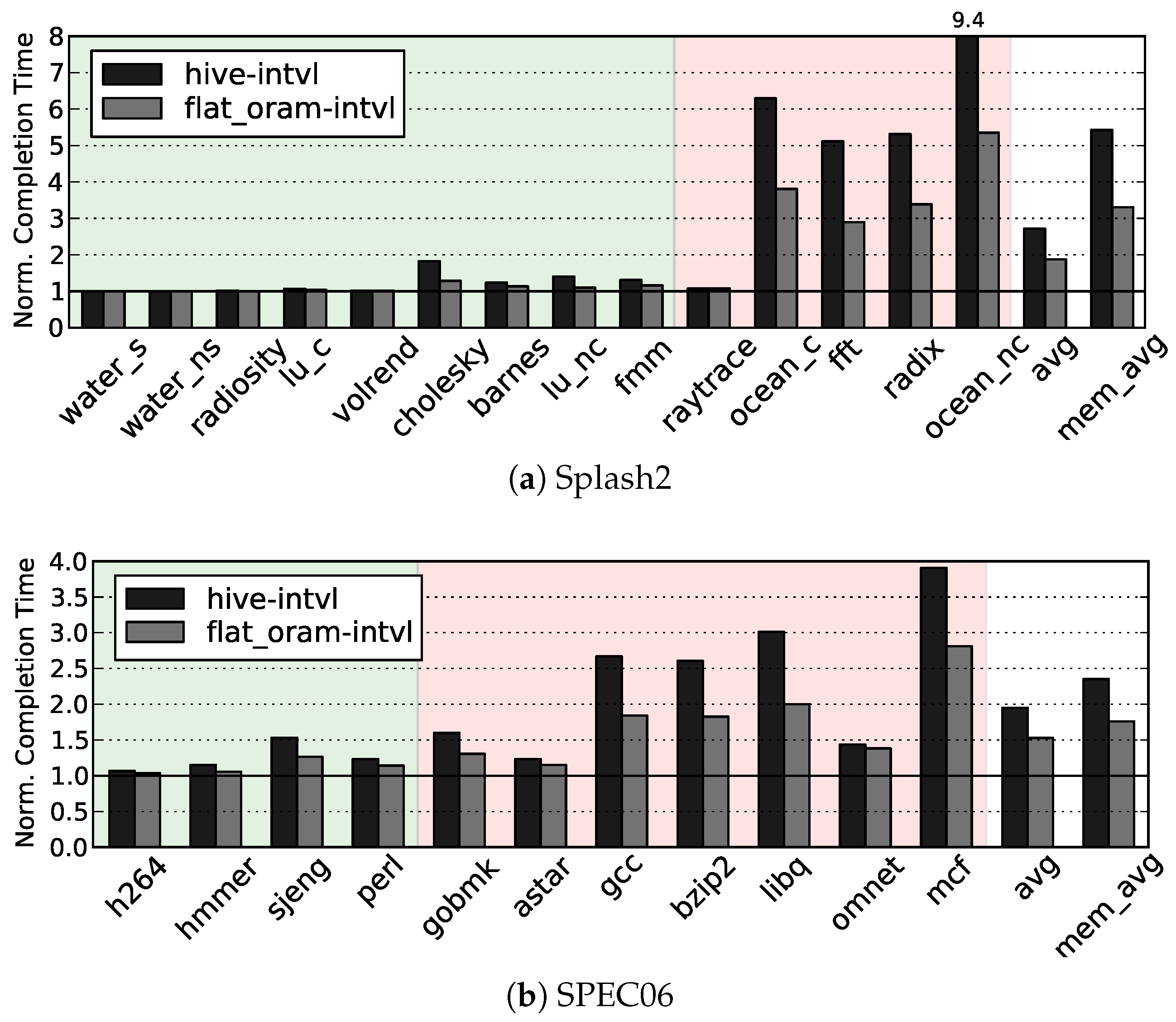

Our simulation results show that, on average, Flat ORAM offers performance gain (up to for DBMS) over HIVE, and only incurs slowdowns of and over the insecure DRAM for memory bound Splash2 and SPEC06 benchmarks, respectively.

The rest of the paper is organized as follows: Section 2 describes our adversarial model in detail along with a practical example from the current literature. Section 3 provides the necessary background of fully functional ORAMs and write-only ORAMs. The proposed Flat ORAM scheme along with its security analysis is presented in Section 4, and the detailed construction of its occupancy map structure is shown in Section 5. A few additional optimizations from literature implemented in Flat ORAM are discussed in Section 6. Section 7 and Section 8 evaluate Flat ORAM’s performance, and we conclude the paper in Section 9.

2. Adversarial Model

We assume a relaxed form of the adversarial model considered in several prior works related to fully functional oblivious RAMs in secure processor settings [26, 28, 39].

In our model, a user’s computation job is outsourced to a cloud, where a trusted processor performs the computation on user’s private data. The user’s private data is stored (in encrypted form) in the untrusted memory external to the trusted processor, i.e., DRAM. In order to compute on user’s private data, the trusted processor interacts with DRAM. In particular, the processor’s last level cache (LLC) misses result in reading the data from DRAM, and the evictions from the LLC result in writing the data to DRAM. The cloud service itself is not considered as an adversary, i.e., it does not try to infer any information from the memory access patterns of the user’s program. However, since the cloud serves several users at the same time, sharing of critical resources such as DRAM among various applications from different users is inevitable. Among these users being served by the cloud service, we assume a malicious user who is able to monitor remotely (and potentially learn the secret information from) the data write sequences of other users’ applications to the DRAM, e.g., by taking frequent snapshots of the victim application’s memory via a compromised DMA. Moreover, he may also tamper with the DRAM contents or play replay attacks in order to manipulate other users’ applications and/or learn more about their secret data.

To protect the system from such an adversary, we add to the processor chip a ‘Write-Only ORAM’ controller: an additional trusted hardware module. Now all the off-chip traffic goes to DRAM through the ORAM controller. In order to formally define the security properties satisfied by our ORAM controller, we adapt the write-only ORAM privacy definition from [41] as follows:

Definition 1. (Write-Only ORAM Privacy) For every two logical access sequences $A_1$ and $A_2$ of infinite length, their corresponding (infinite length) probabilistic access sequences $ORAM(A_1)$ and $ORAM(A_2)$ are identically distributed in the following sense: For all positive integers $n$, if we truncate $ORAM(A_1)$ and $ORAM(A_2)$ to their first $n$ accesses, then the truncations $[ORAM(A_1)]_n$ and $[ORAM(A_2)]_n$ are identically distributed.

In other words, memory snapshots only reveal to the adversary the timing of write accesses made to the memory (i.e., leakage over ORAM Timing Channel) instead of their precise access pattern, whereas no trace of any read accesses made to the memory is revealed to the adversary. An important aspect of Definition 1 to note is that it completely isolates the problem of leakage over ORAM Termination Channel from the original problem that ORAM was proposed for, i.e., preventing leakage over memory address channel (which is also the focus of this paper). Notice that the original definition of ORAM [14] does not protect against timing attacks, i.e., it does not obfuscate when an access is made to the memory (ORAM Timing Channel) or how long it takes the application to finish (ORAM Termination Channel). The write-only ORAM security definition followed by HIVE [40] also allows leakage over ORAM termination channel, as two memory access sequences generated by the ORAM for two same-length logical access sequences can have different lengths [41]. Therefore, in order to define precise security guarantees offered by our ORAM, we follow Definition 1.

Periodic ORAMs [10] deterministically make ORAM accesses at regular, predefined (publicly known) intervals, thereby preventing leakage over the ORAM timing channel and shifting it to the ORAM termination channel. We present a periodic variant of our write-only ORAM to protect against leakage over the ORAM timing channel. Following the prior works [11, 26], (a) we assume that the timing of individual DRAM accesses made during an ORAM access does not reveal any information; (b) we do not protect against leakage over the ORAM termination channel (i.e., total number of ORAM or DRAM accesses). The problem of leakage over ORAM termination channel has been addressed in the existing literature [42] where only

bits are leaked for a total of n accesses. A similar approach can easily be applied to the current scheme.

In order to detect malicious tampering of the memory by the adversary, we follow the standard definition of data integrity and freshness [26]:

Definition 2. (Write-Only ORAM Integrity) From the processor’s perspective, the ORAM behaves like a valid memory with overwhelming probability, and detects any violation to data authenticity and/or freshness.

Practicality of the Adversarial Model

Modular exponentiation algorithms, such as the RSA algorithm, are widely used in public-key cryptography. In general, these algorithms perform computations of the form $y = g^k \bmod n$, where the attacker’s goal is to find the secret $k$. Algorithm 1 shows the Montgomery Ladder scheme [30] which performs exponentiation ($g^k$) through simple square-and-multiply operations. For a given input $g$ and a secret key $k$, the algorithm performs multiplication and squaring operations on two local variables $R_0$ and $R_1$ for each bit of $k$, starting from the most significant bit down to the least significant bit. This algorithm prevents leakage over the power side-channel since, regardless of the value of bit $k_j$, the same number of operations are performed in the same order, hence producing the same power footprint for $k_j = 0$ and $k_j = 1$.

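| Algorithm 1 Montgomery Ladder |
| --- |
| Inputs: $g$, $k = (k_{t-1}, \dots, k_0)_2$ Output: $y = g^k$ |
| Start: |
| 1: $R_0 \leftarrow 1$; $R_1 \leftarrow g$ |
| 2: for $j = t-1$ downto $0$ do |
| 3: if $k_j = 0$ then $R_1 \leftarrow R_0 R_1$; $R_0 \leftarrow (R_0)^2$ |
| 4: else $R_0 \leftarrow R_0 R_1$; $R_1 \leftarrow (R_1)^2$ |
| 5: end if |
| 6: end for |
| return $R_0$ |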

Notice, however, that the specific order in which $R_0$ and $R_1$ are updated in time depends upon the value of $k_j$. E.g., for $k_j = 0$, $R_1$ is written first and then $R_0$ is updated; whereas for $k_j = 1$ the updates are done in the reverse order. This sequence of write accesses to $R_0$ and $R_1$ reveals to the adversary the exact bit values of the secret key $k$. A recent work [29] demonstrated such an attack where frequent memory snapshots of the victim application’s data (particularly $R_0$ and $R_1$) from the physical memory are taken via a compromised DMA. These snapshots are then correlated in time to determine the sequence of write accesses to $R_0$, $R_1$, which in turn reveals the secret key. The reported time taken by the attack is

min.
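To make the leak concrete, the following sketch implements a standard Montgomery ladder and records which of its two working variables is written first in each iteration. The variable names `R0`/`R1`, the `write_order` log and the helper `recover_key` are illustrative assumptions; the log stands in for what the adversary would infer from timed memory snapshots, and this is not the attack code of [29].

```python
def montgomery_ladder(g: int, k: int, n: int, t: int):
    """Compute g^k mod n for a t-bit exponent k, logging the write order per bit."""
    r0, r1 = 1, g % n
    write_order = []  # one (first_written, second_written) pair per key bit
    for j in range(t - 1, -1, -1):  # most significant bit first
        if (k >> j) & 1 == 0:
            r1 = (r0 * r1) % n      # R1 is written first ...
            r0 = (r0 * r0) % n      # ... then R0
            write_order.append(("R1", "R0"))
        else:
            r0 = (r0 * r1) % n      # R0 is written first ...
            r1 = (r1 * r1) % n      # ... then R1
            write_order.append(("R0", "R1"))
    return r0, write_order


def recover_key(write_order) -> int:
    """Rebuild the exponent from the observed write order alone."""
    k = 0
    for first, _ in write_order:
        k = (k << 1) | (0 if first == "R1" else 1)
    return k


secret = 0b101101
y, trace = montgomery_ladder(5, secret, 1_000_003, t=6)
print(y == pow(5, secret, 1_000_003), recover_key(trace) == secret)
```

Both branches perform one multiplication and one squaring, so the operation count is independent of the key, yet the order of the two writes encodes each bit directly.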

One might argue that under write-back caches, the updates to application’s data will only be visible in DRAM once the data is evicted from the last level cache (LLC). This will definitely introduce noise to the precise write-access sequence discussed earlier, hence making the attacker’s job difficult. However, he can still collect several ‘noisy’ sequences of memory snapshots and then run correlation analysis on them to find the secret key k. Furthermore, if the adversary is also a user of the same computer system, he can flush the system caches frequently to reduce the noise in write-access sequence even further.

4. Flat ORAM Scheme

At a high level, the proposed Flat ORAM scheme adapts the basics from HIVE’s [40] construction while improving on its data collision avoidance solution by introducing an efficient bit-mask based approach, which is a key factor resulting in substantial performance gain. Furthermore, Flat ORAM inherits several architectural optimizations from Path ORAM benefiting from years of research efforts in this domain.

In this section, we first present the core algorithm of Flat ORAM, then we discuss its architectural details and various optimizations for a practical implementation.

4.1. Fundamental Data Structures

4.1.1. Data Array

Flat ORAM replaces the binary tree structure of Path ORAM with a flat or linear array of data blocks; hence termed as ‘Flat’ ORAM. Since read operations are not visible to the adversary in our model, one does not need to hide the read location as done in Path ORAM by accessing all the locations residing on the path of desired data block. This allows Flat ORAM to avoid the tree structure of Path ORAM altogether and have the data stored in a linear array where only the desired data block is read.

4.1.2. Position Map (PosMap)

PosMap structure maintains randomized mappings of logical blocks to physical locations. Flat ORAM adopts the PosMap structure from a standard Path ORAM. However there is one subtle difference between PosMap of Path ORAM and Flat ORAM. In Path ORAM, PosMap stores a path number for each logical block and the block can reside anywhere on that path. In contrast, PosMap in Flat ORAM stores the exact physical address where a logical block is stored in the linear data array.

4.1.3. Occupancy Map (OccMap)

OccMap is a newly introduced structure in Flat ORAM. It is essentially a large bit-mask where each bit corresponds to a physical location (i.e., a cache line). The binary value of each bit represents whether the corresponding physical block contains fresh/real or outdated/dummy data. OccMap is of crucial importance to avoid data collisions, and hence for the correctness of Flat ORAM. A collision happens when a physical location, which is randomly chosen to store a logical block, already contains useful data which cannot be overwritten. Managing the OccMap securely and efficiently is a major challenge which we address in Section 5 in detail.

4.1.4. Stash

Stash, also adapted from Path ORAM, is a small buffer in the trusted memory to temporarily hold data blocks evicted from the processor’s last level cache (LLC). A slight but crucial modification, however, is that Flat ORAM only buffers dirty data blocks (blocks with modified data) in the stash; while clean (or unmodified) blocks evicted from the LLC are simply ignored since a valid copy of these blocks already exists in the main memory. This modification is significantly beneficial for performance.

4.2. Basic Algorithm

Let N be the total number of logical data blocks that we want to securely store in our ORAM, which is implemented on top of a DRAM; and let each data block be of size B bytes. Let P be the number of physical blocks that our DRAM can physically store, i.e., the DRAM capacity (where ).

Initial Setup: Algorithm 2 shows the setup phase of our scheme. Two null-initialized arrays $PosMap$ and $OccMap$, corresponding to the position and occupancy maps with $N$ and $P$ entries respectively, are allocated. For now, we assume that both $PosMap$ and $OccMap$ reside on-chip in the trusted memory to which the adversary has no access. However, since these arrays can be quite large and the trusted memory is quite constrained, we later show how this problem is solved. Initially, since all physical blocks are empty, each OccMap entry is set to 0. Now, each logical block is mapped to a uniformly random physical block, i.e., PosMap is initialized, while avoiding any collisions using OccMap. The OccMap is updated along the way in order to mark those physical locations which have been assigned to a logical block as ‘occupied’. Notice that in order to minimize the probability of collision, $P$ should be sufficiently larger than $N$, e.g., $P = 2N$ gives a 50% collision probability.

| Algorithm 2 Flat ORAM Initialization. | |
| --- | --- |
| 1: procedure Initialize($N$, $P$) | |
| 2: $PosMap := \{\perp\}^N$ | ▹ Empty Position Map. |
| 3: $OccMap := \{0\}^P$ | ▹ Empty Occupancy Map. |
| 4: for $j \in \{1, \dots, N\}$ do | |
| 5: loop | |
| 6: $r$ = UniformRand($1, \dots, P$) | |
| 7: if $OccMap[r] = 0$ then | ▹ If vacant |
| 8: $OccMap[r] := 1$ | ▹ Mark Occupied. |
| 9: $PosMap[j] := r$ | ▹ Record position. |
| 10: break | |
| 11: end if | |
| 12: end loop | |
| 13: end for | |
| 14: end procedure | |
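The setup phase can be sketched as follows. This is a minimal illustration under the same simplifying assumption as the text, namely that both maps live in trusted memory. The function name `initialize`, the 0-based indexing and the injected `rng` are choices made for the example, not part of the scheme.

```python
import random


def initialize(n_logical: int, p_physical: int, rng: random.Random):
    """Map every logical block to a distinct, uniformly random physical block."""
    if p_physical < n_logical:
        raise ValueError("need at least as many physical as logical blocks")
    pos_map = [None] * n_logical   # logical block -> physical location
    occ_map = [0] * p_physical     # 1 bit per physical location, 0 = vacant
    for a in range(n_logical):
        while True:
            s = rng.randrange(p_physical)  # uniform candidate location
            if occ_map[s] == 0:            # vacant: claim it
                occ_map[s] = 1
                pos_map[a] = s
                break
            # occupied: collision, draw another location
    return pos_map, occ_map


pos_map, occ_map = initialize(8, 16, random.Random(0))
print(sum(occ_map), len(set(pos_map)))  # 8 8
```

With $P$ only slightly larger than $N$, the inner loop retries often near the end of the setup, which is one reason to keep the utilization well below 1.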

Reads: The procedures to read/write a data block corresponding to the virtual address a from/to the ORAM are shown in Algorithm 3. A read operation is straightforward as it does not need to be obfuscated. The PosMap entry for the logical block a is looked up, and the encrypted data is read through normal DRAM read. The data is decrypted and returned to the LLC along with its current physical position s. The location s is stored in the tag array of the LLC, and proves to be useful upon eviction of the data from the LLC.

Writes: Since write operations should be non-blocking in a secure processor setting, the ORAM writes are performed in two steps. Whenever a data block is evicted from the LLC, it is first simply added to the stash queue, without incurring any latency. While the processor moves on to computing on other data in its registers/caches, the ORAM controller works in the background to evict the block from the stash to the DRAM. A block $a$ to be written is picked from the stash, and a new uniformly random physical position $s_{new}$ is chosen for this block. The OccMap is looked up to determine whether the location $s_{new}$ is vacant. If so, the write operation proceeds by simply recording the new position $s_{new}$ for block $a$ in PosMap, updating the OccMap entries for $s_{new}$ and $s_{old}$ accordingly, and finally writing encrypted data at location $s_{new}$. Otherwise, if the location $s_{new}$ is already occupied by some useful data block, the probability of which is $N/P$, the existing data block is read, decrypted, re-encrypted under probabilistic encryption and written back. A new random position is then chosen for the block $a$ and the above mentioned process is repeated until a vacant location is found to evict the block. The encryption/decryption algorithms $Enc_K$/$Dec_K$ implement probabilistic encryption, e.g., AES counter mode, as done in prior works [26, 39]. Notice that storing $s$ along with the data upon reads will save extra ORAM accesses to look up $s_{old}$ from the recursive PosMap (cf. Section 4.5).

An implementation detail related to read operations is that upon a LLC miss, in some cases the data block might be supplied by the stash instead of the DRAM. Since the stash serves as another level of cache hierarchy for the data blocks, it might contain fresh data recently evicted from the LLC that is yet to be written back to DRAM. In such a scenario, the data block is refilled to LLC by the stash which contains the freshest data, which is also beneficial for performance.

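| Algorithm 3 Basic Flat ORAM, assuming that the entire PosMap and OccMap are on-chip. The following procedures show reading, writing and eviction of a logical block $a$ from the ORAM. | |
| --- | --- |
| 1: procedure ORAMRead($a$) | |
| 2: $s := PosMap[a]$ | ▹ Lookup position |
| 3: $data$ = $Dec_K$(DRAMRead($s$)) | |
| 4: return ($s$, $data$) | ▹ Position is also returned. |
| 5: end procedure | |
| 1: procedure ORAMWrite($a$, $s_{old}$, $data$) | |
| 2: $Stash := Stash \cup \{(a, s_{old}, data)\}$ | ▹ Add to Stash |
| 3: return | |
| 4: end procedure | |
| 1: procedure EvictStash | |
| 2: $(a, s_{old}, data) := Stash.Read()$ | ▹ Read from Stash |
| 3: loop | |
| 4: $s_{new}$ = UniformRand($1, \dots, P$) | |
| 5: if $OccMap[s_{new}] = 0$ then | ▹ If vacant |
| 6: $OccMap[s_{new}] := 1$ | ▹ Mark as Occupied. |
| 7: $OccMap[s_{old}] := 0$ | ▹ Vacate old block. |
| 8: $PosMap[a] := s_{new}$ | ▹ Record position. |
| 9: DRAMWrite($s_{new}$, $Enc_K(data)$) | |
| 10: $Stash := Stash \setminus \{(a, s_{old}, data)\}$ | |
| 11: break | |
| 12: else | ▹ If occupied. |
| 13: $data'$ = $Dec_K$(DRAMRead($s_{new}$)) | |
| 14: DRAMWrite($s_{new}$, $Enc_K(data')$) | |
| 15: end if | |
| 16: end loop | |
| 17: end procedure | |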
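The read, write and eviction procedures can be put together in a small executable sketch. Everything here is illustrative: the class name `FlatORAMSketch`, the `write_trace` list (standing in for what a snapshot-diffing adversary observes) and, in particular, the `_enc`/`_dec` helpers, which only attach a fresh random nonce to the plaintext to mimic a changing ciphertext and provide no confidentiality at all. The sketch also assumes $P > N$, otherwise the eviction loop cannot terminate.

```python
import os
import random


class FlatORAMSketch:
    def __init__(self, n_logical: int, p_physical: int, seed=None):
        if p_physical <= n_logical:
            raise ValueError("sketch assumes P > N so a vacant block always exists")
        self.rng = random.Random(seed)
        self.p = p_physical
        self.pos_map = [None] * n_logical
        self.occ_map = [0] * p_physical
        self.dram = [self._enc(b"") for _ in range(p_physical)]
        self.stash = []        # queue of (a, s_old, data) awaiting eviction
        self.write_trace = []  # physical locations written, in order
        for a in range(n_logical):  # setup phase
            while True:
                s = self.rng.randrange(p_physical)
                if self.occ_map[s] == 0:
                    self.occ_map[s], self.pos_map[a] = 1, s
                    break

    @staticmethod
    def _enc(data: bytes):
        return (os.urandom(8), data)  # placeholder, NOT real encryption

    @staticmethod
    def _dec(ciphertext) -> bytes:
        return ciphertext[1]

    def _dram_write(self, s: int, ciphertext) -> None:
        self.dram[s] = ciphertext
        self.write_trace.append(s)

    def oram_read(self, a: int):
        s = self.pos_map[a]                 # reads are not obfuscated
        return s, self._dec(self.dram[s])   # position is returned as well

    def oram_write(self, a: int, s_old: int, data: bytes) -> None:
        self.stash.append((a, s_old, data))  # non-blocking: just enqueue

    def evict_stash(self) -> None:
        a, s_old, data = self.stash.pop(0)
        while True:
            s_new = self.rng.randrange(self.p)
            if self.occ_map[s_new] == 0:     # vacant: move the block here
                self.occ_map[s_new] = 1
                self.occ_map[s_old] = 0
                self.pos_map[a] = s_new
                self._dram_write(s_new, self._enc(data))
                break
            # occupied: re-encrypt in place so the location still changes
            existing = self._dec(self.dram[s_new])
            self._dram_write(s_new, self._enc(existing))


oram = FlatORAMSketch(n_logical=4, p_physical=8, seed=1)
s_old, _ = oram.oram_read(2)
oram.oram_write(2, s_old, b"hello")
oram.evict_stash()
s_new, value = oram.oram_read(2)
print(value, s_new != s_old, sum(oram.occ_map))
```

Every iteration of the eviction loop writes to the drawn location whether or not it was vacant, so `write_trace` is a sequence of uniformly random locations regardless of which logical block is being evicted.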

4.3. Avoiding Redundant Memory Accesses

The fact that the adversary cannot see read accesses allows Flat ORAM to avoid almost all the redundancy incurred by a fully functional ORAM (e.g., Path ORAM). Instead of reading/writing a whole path for each read/write access as done in Path ORAM, Flat ORAM simply reads/writes only the desired block directly given its physical location from the PosMap. This is fundamentally where the write-only ORAMs (i.e., HIVE [40], Flat ORAM) get the performance edge over the full-featured ORAMs. However, the question arises whether Flat ORAM is still secure after eliminating the redundant accesses.

4.4. Security

Privacy: Consider any two logical write-access sequences $A_1$ and $A_2$ of the same length. In the EvictStash procedure (cf. Algorithm 3), a physical block chosen uniformly at random out of $P$ blocks is always modified regardless of it being vacant or occupied. Therefore, the write accesses generated by Flat ORAM while executing either of the two logical access sequences $A_1$ and $A_2$ will update memory locations uniformly at random throughout the whole physical memory space. As a result, an adversary monitoring these updates cannot distinguish between real and dummy blocks, and in turn the two sequences $A_1$ and $A_2$ appear computationally indistinguishable.

Furthermore, notice that in Path ORAM the purpose of accessing a whole path instead of just one block upon each read and write access is to prevent linkability between a write and a following read access to the same logical block. In Flat ORAM’s model, however, the adversary cannot see the read accesses at all, so the linkability problem never arises as long as each logical data block is written to a new random location every time it is evicted (which is guaranteed by the Flat ORAM algorithm). Although HIVE proposes a constant k-factor redundancy upon each data write, we argue that it is unnecessary for the desired security as explained above, and can be avoided to gain performance. Hence, the basic algorithm of Flat ORAM presented above guarantees the desired privacy property of our write-only ORAM (cf. Definition 1).

Integrity: Next, we move on to making the basic Flat ORAM practical for a real system. The main challenge is to get rid of the huge on-chip memory requirements imposed by PosMap and OccMap. While addressing the PosMap management problem, we discuss an existing efficient memory integrity verification technique from the Path ORAM domain called PosMap MAC (PMMAC) [26] (cf. Section 6.2) which satisfies our integrity definition (cf. Definition 2).

Stash Management: Another critical missing piece is to prevent the unlikely event of stash overflow for a small constant sized stash, as such an event could break the privacy guarantees offered by Flat ORAM. We completely eliminate the possibility of a stash overflow event by using a proven technique called Background Eviction [39]. We present a detailed discussion about the stash size under Background Eviction technique in Section 5.4.

4.5. Recursive Position Map & Position Map Lookaside Buffer (PLB)

In order to squeeze the on-chip PosMap size, a standard recursive construction of position map [

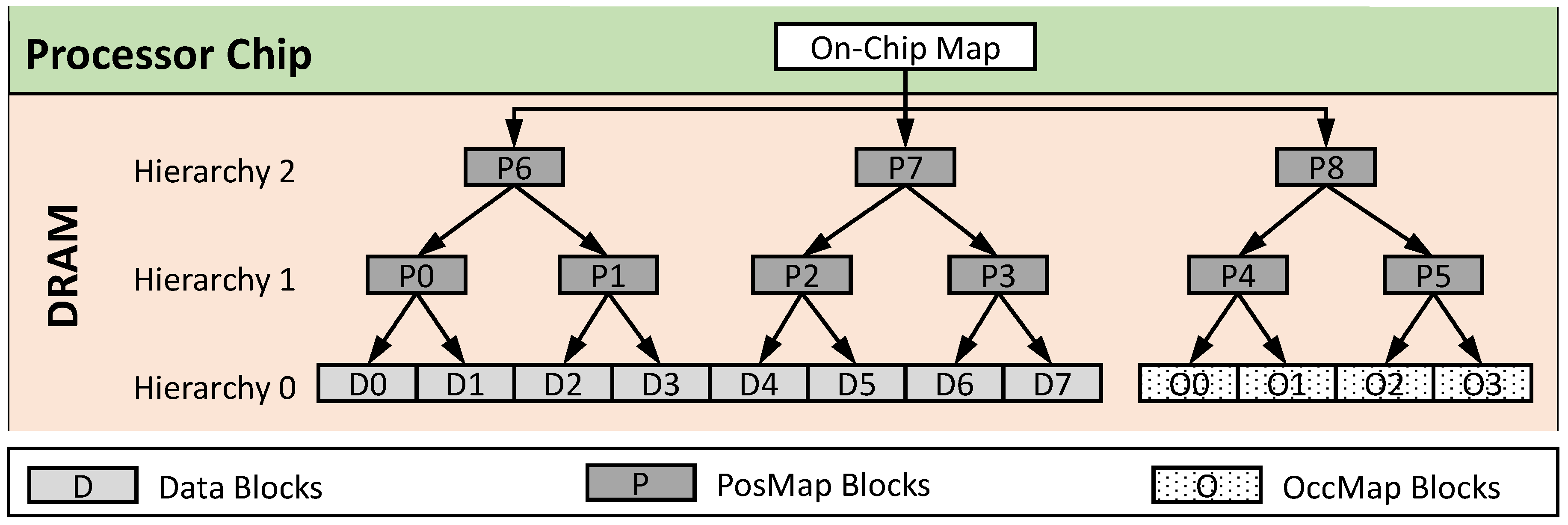

22] is used. In a 2-level recursive position map, for instance, the original PosMap structure is stored in another set of data blocks which we call a hierarchy of position map, and the PosMap of the first hierarchy is stored in the trusted memory on-chip (

Figure 1). The above trick can be repeated, i.e., adding more hierarchies of position map to further reduce the final position map size at the expense of increased latency. Notice that all the position map hierarchies (except for the final position map) are stored in the untrusted DRAM along with the actual data blocks, and can be treated as regular data blocks; this technique is called Unified ORAM [

26].

Unified ORAM scheme reduces the performance penalty of recursion by caching position map ORAM blocks in a Position map Lookaside Buffer (PLB) to exploit locality (similar to the TLB exploiting locality in page tables). To hide whether a position map access hits or misses in the cache, Unified ORAM stores both data and position map blocks in the same binary tree. Further compression of PosMap structure is done by using Compressed Position Map technique discussed in Section 6.1.

4.6. Background Eviction

Stash (cf. Section 4.1.4) is a small buffer to temporarily hold the dirty data blocks evicted from the LLC/PLB. If at any time, the rate of blocks being added to the stash becomes higher than the rate of evictions from the stash, the blocks may accumulate in stash causing a stash overflow. Background eviction [39] is a proven and secure technique proposed for Path ORAM to prevent stash overflow. The key idea of background evictions is to temporarily stop serving the real requests (which increase stash occupancy) and issue background evictions or so-called dummy accesses (which decrease stash occupancy) when the stash is full.

We use the background eviction technique to eliminate the possibility of a stash overflow event. When the stash is full, the ORAM controller suspends the read requests, which consequently stops any write-back requests and prevents the stash occupancy from increasing further. Then it simply chooses random locations and, if vacant, evicts the blocks from the stash until the stash occupancy is reduced to a safe threshold. The probability of a successful eviction in each attempt is determined by the DRAM utilization parameter, i.e., the ratio of occupied blocks to the total blocks in DRAM. In our experiments, we choose a utilization of ≈1/2, therefore each eviction attempt has ≈50% probability of success. Note that background evictions essentially push the problem of stash overflow to the program’s termination channel. Configuring the DRAM utilization to be less than 1 guarantees the termination, and we demonstrate good performance for a utilization of ≈1/2 in Section 8. It is true that background eviction may have a different effect on the total runtime for different applications, or even for different inputs to the same application, which leaks information about data locality etc. However, the same argument applies to Path ORAM based systems, and to other system components as well, such as enabling vs. disabling branch prediction or the L3 cache. Protecting against any leakage through the program’s termination time is out of the scope of this paper (cf. Section 2).
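As a rough sanity check on the success probability quoted above, assume (a simplification that is not stated in the text) that eviction attempts are independent and that the utilization $u = N/P$ stays fixed while the stash drains. The number of attempts $X$ needed to evict one block is then geometrically distributed:

$$\Pr[X = i] = u^{\,i-1}(1-u), \qquad \mathbb{E}[X] = \frac{1}{1-u} = \frac{P}{P-N}.$$

For $u \approx 1/2$ this gives about two attempts per evicted block. The expectation grows without bound as $u \to 1$, which matches the requirement that the utilization be kept below 1 for the evictions to terminate.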

4.7. Periodic ORAM

As mentioned in our adversarial model, the core definition of ORAM [14] does not address leakage over the ORAM timing or termination channel (cf. Section 2). Likewise, the fundamental algorithm of Flat ORAM (Algorithm 3) does not aim to prevent these leakages. Therefore, in order to protect the ORAM timing channel, we adapt the Flat ORAM algorithm to issue periodic ORAM accesses, while maintaining its security guarantees.

In the literature, periodic variants of Path ORAM have been presented [10] which simply always issue ORAM requests at regular periodic intervals. However, under write-only ORAMs, such a straightforward periodic approach would break the security, as explained below. Since read requests leave no trace in write-only ORAMs, for a logical access sequence of (Write, Read, Write) the adversary will only see two writes occurring at times $0$ and $2T$, for $T$ being the interval between two ORAM accesses. The access at time $T$ will be omitted, which reveals to the adversary that a read request was made at this time.

To fix this problem, we modify the Flat ORAM algorithm as follows. Among the periodic accesses, for every real read request to physical block $s$, another randomly chosen physical block $s'$ is also read. Block $s$ is consumed by the processor, whereas block $s'$ is re-encrypted (under probabilistic encryption) and written back to the same location from where it was read. This always results in update(s) to the memory after each and every time period $T$.

Security: A few things should be noted: First, it does not matter whether the location $s'$ contains real or dummy data, because the plain-text data content is never modified but just re-encrypted. Second, writing back $s'$ is indistinguishable from a real write request as this location is chosen uniformly at random. Third, this write to $s'$ does not reveal any trace of the actual read of $s$ as the two locations are totally independent.
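The difference between the naive periodic schedule and the modified one can be illustrated by listing the times at which a snapshot-diffing adversary sees memory change. The function `observed_write_times` and its `dummy_write_on_read` flag are hypothetical names used only for this sketch, which models timing alone and not the choice of locations.

```python
def observed_write_times(ops, period, dummy_write_on_read):
    """Times at which the adversary observes a memory update.

    ops: one logical operation per period, each "R" or "W".
    dummy_write_on_read: if True, every read slot also re-encrypts and
    rewrites a randomly chosen block, as in the modified scheme.
    """
    times = []
    for slot, op in enumerate(ops):
        if op not in ("R", "W"):
            raise ValueError(f"unknown operation: {op!r}")
        if op == "W" or dummy_write_on_read:
            times.append(slot * period)
    return times


T = 10
print(observed_write_times(["W", "R", "W"], T, dummy_write_on_read=False))  # [0, 20]
print(observed_write_times(["W", "R", "W"], T, dummy_write_on_read=True))   # [0, 10, 20]
print(observed_write_times(["W", "W", "W"], T, dummy_write_on_read=True))   # [0, 10, 20]
```

In the naive schedule the missing update at time $T$ exposes the read. With the dummy write-back, the (Write, Read, Write) and (Write, Write, Write) sequences produce the same update times.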

We present our simulation results for periodic Flat ORAM in the evaluation section.

5. Efficient Collision Avoidance

In Section 4.3 and Section 4.4, we discussed how Flat ORAM outperforms Path ORAM by avoiding redundant memory accesses. However, an immediate consequence of this is the problem of collisions, which now becomes the main performance bottleneck. A collision refers to a scenario in which a physical location $s$, randomly chosen to write a logical block $a$, already contains useful data that cannot be overwritten. The overall efficiency of such a write-only ORAM scheme boils down to its collision avoidance mechanism. In the following subsections, we discuss the occupancy map based collision avoidance mechanism of Flat ORAM in detail and compare it with HIVE’s ‘inverse position map’ based collision avoidance scheme.

5.1. Inverse Position Map Approach

Since a read access must not leave a trace in the memory in order to avoid the linkability problem, a naive approach of marking the physical location as ‘vacant’ by writing ‘dummy’ data to it upon each read is not possible. HIVE [40] proposes an inverse position map structure that maps each physical location to a logical address. Before each write operation to a physical location $s$, a potential collision check is performed, which involves two steps. First, the logical address $a$ linked to $s$ is looked up via the inverse position map. Then the regular position map is looked up to find the most recent physical location $s'$ linked to the logical address $a$. If $s' = s$, then, since the two mappings are synchronized, $s$ contains useful data and a collision has occurred. Otherwise, if $s' \neq s$, the entry for $s$ in the inverse position map is outdated, and block $a$ has since moved to a new location $s'$. Therefore, the current location $s$ can be overwritten, and there is no collision.
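The two-step check above can be written as a small Python sketch. The function name `is_collision` and the use of plain dictionaries for the two maps are illustrative assumptions, as is the treatment of a location with no inverse entry as vacant; HIVE itself keeps the inverse position map encrypted in untrusted storage.

```python
def is_collision(s, inv_posmap, posmap):
    """Return True if physical location s still holds live data.

    inv_posmap: physical location -> logical address last written there
    posmap:     logical address   -> most recent physical location
    """
    a = inv_posmap.get(s)      # step 1: logical address linked to s
    if a is None:
        return False           # assumption: s was never written, so it is free
    s_prime = posmap[a]        # step 2: most recent location of block a
    # Matching entries mean s holds the current copy of a; otherwise the
    # inverse entry is stale and s may be overwritten.
    return s_prime == s

if __name__ == "__main__":
    posmap = {10: 5, 11: 2}            # block 10 lives at 5, block 11 at 2
    inv_posmap = {5: 10, 2: 11, 9: 10}  # entry for 9 is stale (10 moved to 5)
    print(is_collision(5, inv_posmap, posmap))  # live data at 5
    print(is_collision(9, inv_posmap, posmap))  # stale entry, free to reuse
```

Each check costs one inverse position map lookup plus one regular position map lookup, which is where the overhead discussed next comes from.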

HIVE stores the encrypted inverse position map structure in the untrusted storage at a fixed location. Whenever a physical block is updated, the corresponding inverse position map entry is also updated. Since write-only ORAMs do not hide the physical block ID of the updated block, revealing the position of the corresponding inverse position map entry does not leak any secret information.

We demonstrate in our evaluations that the large size of the inverse position map introduces storage as well as performance overheads. For a system with a block size of $B$ bytes and a total of $N$ logical blocks, the inverse position map requires an additional $\log_2 N$ bits of space for each of the $P$ physical blocks. Crucially, this large size of a single inverse position map entry restricts the total number of entries per block to a small constant, which leads to less locality within a block and, in turn, to performance degradation.

5.2. Occupancy Map Approach

In the simplified OccMap based approach, each of the $P$ physical blocks requires just one additional bit to store the occupancy information (vacant/occupied). In terms of storage, this gives a $\log_2 N$ times improvement over HIVE.
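As a rough worked example of this storage gap, the sketch below compares the two structures for the parameter setting used elsewhere in this section (128 B blocks, 8 GB DRAM, 4 GB working set). It assumes that each inverse position map entry stores exactly one logical address, i.e. about $\log_2 N$ bits, and it ignores encryption and alignment overheads; the function name `metadata_bits` is hypothetical.

```python
def metadata_bits(num_logical, num_physical):
    """Return (inverse-position-map bits, OccMap bits) for the whole memory."""
    # Assumption: one logical address of ceil(log2 N) bits per physical block.
    entry_bits = (num_logical - 1).bit_length()
    return num_physical * entry_bits, num_physical * 1

BLOCK = 128                    # bytes per ORAM block
N = (4 * 2**30) // BLOCK       # logical blocks in a 4 GB working set (2^25)
P = (8 * 2**30) // BLOCK       # physical blocks in 8 GB of DRAM (2^26)

inv_bits, occ_bits = metadata_bits(N, P)
print(inv_bits // 8 // 2**20, "MiB vs", occ_bits // 8 // 2**20, "MiB")
# prints "200 MiB vs 8 MiB"
```

Under these assumptions the ratio between the two sizes is 25, which is $\log_2 N$ for $N = 2^{25}$.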

5.2.1. Insecurely Managing the Occupancy Map

The OccMap array bits are first sliced into chunks equal to the ORAM block size ($B$ bytes). We call these chunks the OccMap blocks. Notice that each OccMap block contains occupancy information of $8B$ physical locations (i.e., 8 bits per byte; 1 bit per location). Now the challenge is to efficiently store these blocks somewhere off-chip. A naive approach would be to encrypt the OccMap blocks under probabilistic encryption and store them contiguously in a dedicated fixed area in DRAM. However, under the Flat ORAM algorithm, this approach would lead to a serious security flaw, which is explained below.

If the OccMap blocks are stored contiguously at a fixed location in DRAM, an adversary can easily identify the corresponding OccMap block for a given physical address; and for a given OccMap block, he can identify the contiguous range of corresponding physical locations. With that in mind, when a data block $a$ (previously read from $s$) is evicted from the stash and written to location $s'$ (cf. Algorithm 3), the old OccMap entry is marked as ‘vacant’ and the new OccMap entry is marked as ‘occupied’; i.e., two OccMap blocks $O_s$ and $O_{s'}$, covering $s$ and $s'$ respectively, are updated. Furthermore, the location $s'$, which falls in the contiguous range covered by one of the two updated OccMap blocks, is also updated with the actual data, thereby revealing the identity of $O_{s'}$. This reveals to the adversary that a logical data block was previously read from some location within the small contiguous range covered by $O_s$, and it is now written to location $s'$ (i.e., coarse-grained linkability). Recording several such instances of read-write access pairs and linking them together in a chain reveals the precise pattern of movement of logical block $a$ across the whole memory.
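To make the leak concrete, the following sketch models what an adversary could compute if the OccMap were stored contiguously. It is a simplified illustration: the function name `infer_old_range` is hypothetical, OccMap blocks are identified by their index in the fixed OccMap area, and `scale` is the number of physical locations covered by one OccMap block.

```python
def infer_old_range(written_location, updated_occmap_blocks, scale):
    """Adversary's inference from one observed eviction.

    written_location:      physical location that received the data write
    updated_occmap_blocks: indices of the OccMap blocks written alongside it
    Returns the range of physical locations the block was previously read from.
    """
    new_block = written_location // scale          # OccMap block of the new spot
    others = set(updated_occmap_blocks) - {new_block}
    # If only one OccMap block changed, old and new spots share that block.
    old_block = others.pop() if others else new_block
    return range(old_block * scale, (old_block + 1) * scale)

if __name__ == "__main__":
    # Data written to location 5000; OccMap blocks 1 and 4 were updated.
    leaked = infer_old_range(5000, {1, 4}, scale=1024)
    print(leaked.start, leaked.stop)   # old copy lies somewhere in this range
```

Chaining such inferences over many evictions is what lets the adversary follow a logical block through memory.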

5.2.2. Securely Managing the Occupancy Map

To avoid this problem, we treat the OccMap blocks as regular data blocks, i.e., OccMap blocks are encrypted and also randomly distributed throughout the whole DRAM, and tracked by the regular PosMap.

Figure 1 shows the logical organization of data, PosMap and OccMap blocks. The OccMap blocks are added as ‘additional’ data blocks at the data hierarchy (Hierarchy 0). Then the recursive position map hierarchies are constructed on top of the complete data set (data blocks and OccMap blocks). Every time an OccMap block is updated, it is mapped to a new random location, which avoids the linkability problem. We note that this approach results in more position map blocks overall; however, for practical parameter settings (e.g., 128 B block size, 8 GB DRAM, 4 GB working set), it does not result in an additional PosMap hierarchy. Therefore, the recursive position map lookup latency is unaffected.

5.3. Performance Related Optimizations

Now that we have discussed how to securely store the occupancy map, we move on to discuss some performance related optimizations implemented in Flat ORAM.

5.3.1. Locality in OccMap Blocks

For realistic parameters, e.g., a 128-byte block size, each OccMap block contains occupancy information of 1024 physical locations. We call this factor the OccMap scaling factor. The dense structure of OccMap blocks offers an opportunity to exploit spatial locality within a block. In other words, for a large scaling factor it is more likely that two randomly chosen physical locations will be covered by the same OccMap block than for a small scaling factor. To benefit from this locality, we cache the OccMap blocks in the PLB along with the PosMap blocks. For a fair comparison with HIVE in our experiments, we also model the caching of HIVE’s inverse position map blocks in the PLB. Our experiments confirm that the OccMap blocks show a higher PLB hit rate than HIVE’s inverse position map blocks cached in the PLB in the same manner. The reason is that, for the same parameters, the scaling factor of the inverse position map approach is only about 40, which results in a larger data structure and hence more capacity misses in the PLB.
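The two scaling factors quoted above can be reproduced with a short calculation. The sketch assumes 25-bit inverse position map entries (i.e. $N = 2^{25}$ logical blocks, as for a 4 GB working set with 128 B blocks) and $P = 2^{26}$ physical blocks; the helper names are hypothetical.

```python
def scaling_factor(block_bytes, bits_per_entry):
    """Number of physical locations covered by one metadata block."""
    return (block_bytes * 8) // bits_per_entry

def metadata_blocks(num_physical, factor):
    """Number of metadata blocks needed to cover all physical locations."""
    return -(-num_physical // factor)   # ceiling division

P = 2**26                               # assumed: 8 GB DRAM / 128 B blocks
occ_factor = scaling_factor(128, 1)     # 1 bit per location   -> 1024
inv_factor = scaling_factor(128, 25)    # 25 bits per location -> 40

print(occ_factor, metadata_blocks(P, occ_factor))   # 1024 65536
print(inv_factor, metadata_blocks(P, inv_factor))   # 40 1677722
```

Under these assumptions the inverse position map has roughly 25 times as many blocks competing for the same PLB capacity, which is consistent with the higher capacity-miss rate reported above.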

5.3.2. Dirty vs. Clean Evictions from LLC & PLB

An eviction of a block from the LLC where the data content of the block has not been modified is called a clean eviction, whereas an eviction where the data has been modified is called a dirty eviction. In Path ORAM, since all read operations also need to be obfuscated, a block that gets evicted from the LLC following a read operation must be rewritten to a new random location even if its data is unmodified, i.e., even on a clean eviction. This is crucial for Path ORAM’s security, as it guarantees that successive reads to the same logical block result in random paths being accessed. This notion is termed read-erase; it assumes that the data will be erased from the memory once it is read.

In write-only ORAMs, however, the read access patterns are not revealed, so the notion of read-erase is not necessary. A data block can be read from the same location as often as needed as long as its contents are not modified. We implement this relaxed model in Flat ORAM, which greatly improves performance. Essentially, upon a clean eviction from the LLC, the block can simply be discarded, since one useful copy of the data is still stored in the DRAM. The same reasoning applies to clean evictions from the PLB. Only the dirty evictions are added to the stash to be written back at a random location in the memory.
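The relaxed eviction policy amounts to a single branch on the dirty bit. The sketch below is a minimal illustration with hypothetical names (`on_eviction`, a dictionary standing in for the stash); it applies equally to LLC and PLB evictions.

```python
def on_eviction(block_id, data, dirty, stash):
    """Handle a block evicted from the LLC (or PLB) under the relaxed model.

    Returns True if the block was queued for write-back, False if discarded.
    """
    if dirty:
        # Modified content must be rewritten to a fresh random location,
        # so the block waits in the stash for a later write attempt.
        stash[block_id] = data
        return True
    # Clean eviction: the copy already in DRAM is still valid, so drop it.
    return False

if __name__ == "__main__":
    stash = {}
    on_eviction(1, b"unchanged", dirty=False, stash=stash)
    on_eviction(2, b"modified", dirty=True, stash=stash)
    print(sorted(stash))   # only block 2 needs an ORAM write
```

Under a read-erase policy, as in Path ORAM, both evictions would have produced a write.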

5.4. Implications on PLB & Stash Size

Each dirty eviction requires not only the corresponding data block to be updated but also the two related OccMap blocks which store the new and old occupancy information. In order to relocate these blocks to new random positions, the ‘d’ hierarchies of corresponding PosMap blocks will need to be updated and this in turn implies updating their related OccMap blocks, and so on. If not prevented, this avalanche effect will repeatedly fill the stash implying background evictions which stop serving real requests and increase the termination time. For a large enough PLB with respect to a benchmark’s locality, most of the required OccMap blocks during the benchmark’s execution will be in the PLB. This prevents the avalanche effect most of the time (as our evaluation shows) since the OccMap blocks in PLB can be directly updated.

Even if all necessary OccMap blocks are in the PLB, a dirty eviction still requires the data block and its $d$ PosMap blocks to be updated. Each of these blocks is successfully evicted from the stash with probability $p$, determined by the DRAM utilization, on each attempt. The probability that exactly $m$ attempts are needed to evict all $d+1$ blocks is equal to $\binom{m-1}{d}\,p^{d+1}(1-p)^{m-d-1}$. This probability becomes very small for $m$ equal to a small constant $c$ times $(d+1)/p$. If the dirty eviction rate (per DRAM access) is at most $p/(c(d+1))$, then the stash will mostly be contained to a small number of blocks, so that additional background eviction, which stops serving real requests, is not needed.
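The attempt count in this argument can be explored numerically. The sketch below assumes that eviction attempts are independent and that each succeeds with the same probability `p`, so that the number of attempts needed to evict all `d + 1` blocks follows a negative binomial distribution; the values `p = 0.5` and `d = 4` are illustrative choices, not figures taken from the paper.

```python
from math import comb

def prob_exactly_m_attempts(m, d, p):
    """P(exactly m attempts are needed to evict d + 1 blocks)."""
    k = d + 1
    if m < k:
        return 0.0
    # The last attempt succeeds; the other k - 1 successes fall anywhere
    # among the first m - 1 attempts.
    return comb(m - 1, k - 1) * p**k * (1 - p)**(m - k)

def prob_more_than(m, d, p):
    """P(more than m attempts are needed)."""
    done = sum(prob_exactly_m_attempts(i, d, p) for i in range(d + 1, m + 1))
    return 1.0 - done

if __name__ == "__main__":
    d, p = 4, 0.5                      # assumed values for illustration
    mean = (d + 1) / p                 # expected number of attempts
    for c in (1, 2, 4):
        m = int(c * mean)
        print(c, m, prob_more_than(m, d, p))
```

The tail probability drops quickly as `c` grows, which is the sense in which a small constant multiple of the mean suffices.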

Notice that the presented write-only ORAM is not asymptotically efficient: to show at most a constant factor increase in termination time with overwhelming probability, a proper argument needs to show a small probability of requiring background eviction, which halts normal execution. An argument based on M/D/1 queuing theory or 1-D random walks needs the OccMap to always be within the PLB, which means that the effective stash size, as compared to Path ORAM’s stash definition, includes this PLB, which scales linearly with $N$ and is not $O(\log N)$.