Now define the set

is a monomial of

and

is a monomial of

, where

l is a leading monomial of

f and define the shift polynomials as

and

for the coefficient

of

l. For

, divide the above shift polynomials according to

and

. Then for

, the shift polynomials

are

and for

, the shift polynomials

are

Let

L be the lattice spanned by the coefficient vectors

and

shifts with dimension

[

7]. Let

M be the matrix of

L, in which each row holds the coefficients of a shift polynomial

and each column holds the coefficients of one variable (across the shift polynomials).

As

is the leading monomial in

with coefficient 1, the diagonal elements in the matrix

M are

3.2. Applying Sub-Lattice Based Techniques to Get an Attack Bound

In [5], J. Blömer and A. May proposed a method to find an attack bound for a low deciphering exponent using a lattice of smaller dimension than the one in Boneh and Durfee’s attack [4]. We apply their method, based on sublattice reduction techniques, to our lattice

to get an attack bound, as described in the following.

In order to apply Howgrave-Graham’s theorem [11] by using Theorem 1, we need three short vectors in

as our polynomial consists of three variables. However, note that

is not a square matrix. So, first construct a square matrix

by removing some columns in

, which are small linear combinations of the non-removed columns in

. Then a short vector in

leads to a short reconstructed vector in

.
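The role of the short vectors can be illustrated by the norm condition behind Howgrave-Graham's theorem. The following minimal Python sketch checks the condition in its commonly stated form, $\|h(xX, yY, zZ)\| < \text{modulus}/\sqrt{w}$, where $w$ is the number of monomials of $h$. The polynomial, the bounds and the modulus below are hypothetical toy values, and the function names are illustrative; the precise statement used in this paper is the one in [11].

```python
def scaled_norm_sq(poly, bounds):
    """Squared Euclidean norm of the coefficient vector of h(xX, yY, zZ).

    poly maps exponent triples (i, j, k) to integer coefficients.
    """
    X, Y, Z = bounds
    return sum((c * X**i * Y**j * Z**k) ** 2 for (i, j, k), c in poly.items())


def satisfies_hg_bound(poly, bounds, modulus):
    """Check ||h(xX, yY, zZ)|| < modulus / sqrt(w) using integers only.

    Both sides are squared, so the test is w * norm^2 < modulus^2.
    """
    w = len(poly)  # number of monomials of h
    return w * scaled_norm_sq(poly, bounds) < modulus**2


# Hypothetical toy polynomial h = x*y - 2*z + 3 with bounds X = Y = Z = 2.
h = {(1, 1, 0): 1, (0, 0, 1): -2, (0, 0, 0): 3}
bounds = (2, 2, 2)

print(satisfies_hg_bound(h, bounds, 101))  # large modulus: condition holds
print(satisfies_hg_bound(h, bounds, 10))   # small modulus: condition fails
```

Squaring both sides keeps the comparison exact, which avoids floating-point error when the modulus is large.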

Construction of a square sub-matrix of .

Columns in M and are same and each column in M consists of the coefficients of a variable, which is a leading monomial of one of the g or h-shifts. The first and remaining columns correspond to the leading monomials of the g and h-shifts respectively. Therefore,

the first columns are the coefficients of each variable for and and the remaining columns are the coefficients of each variable for and . So the variable corresponds to a column among the first columns if and corresponds to a column among the remaining columns if .

As are the monomials of f, the set of all monomials of for is . Therefore, the coefficient of the variable in is non-zero if and only if , i.e., .

Remove the columns in corresponding to the coefficients of the variable for all and note that every such column is a multiple of a non-removed column corresponding to the coefficients of , as proved in the following theorem.

Theorem 4. Each column in corresponding to the coefficients of the variable , a leading monomial of the polynomial g or h-shifts, for all is a multiple of a non-removed column, which represents the coefficients of the variable .

Proof. First assume that , then .

For , the -shifts correspond to the first rows in and for , the -shifts correspond to the remaining rows in . We prove this theorem in two cases.

Case (i): Any column among the first

columns of

, i.e., a column corresponding to the coefficients of a variable

with

, from the above analysis in (

1).

Given that . From the above analysis in (1) and (2), the coefficient of is non-zero in -shifts if and only if and . As , and , and also as for , is such that

Therefore, the coefficient of is non-zero in -shifts if and only if and .

Similarly, we can prove that the coefficient of is non-zero in -shifts if and only if and using the inequalities , and the analysis in (1) and (2), and say

The formula for finding a coefficient of a variable

for

in

is

and coefficient of

in

is nothing but a coefficient of

in

.

Note that a column corresponding to a variable

is among the non-removed columns in

and coefficient of

is zero for

in

-shifts,

in

-shifts. The columns corresponding to a variable

and a variable

, showing only the non-zero terms, are depicted in

Table 1.

Therefore, from

Table 1 the result holds in this case.

Case (ii): Any column among the remaining

columns of

, i.e., a column corresponding to the coefficients of a variable

with

, from the above analysis in (

1).

The coefficient of is non-zero in -shifts if and only if , and note for , as in -shifts. So the coefficient of is zero in all rows corresponding to -shifts.

The coefficient of is non-zero in -shifts if and only if and . For and from the inequalities , , we have the coefficient of is non-zero in -shifts if and only if and , . Take .

Note that coefficient of

is zero in all

-shifts as

and for

in

-shifts. The columns corresponding to a variable

and a variable

, showing only the non-zero terms, are depicted in

Table 2. Therefore, from

Table 2 the result holds in this case.

Applying the above analysis to the polynomial for , the result follows. □

From the above theorem, all columns corresponding to a variable for all depend on a non-removed column corresponding to a variable in . Let be the matrix formed by removing all of the above columns from the matrix and let be the lattice spanned by the rows of . Then a short vector in leads to a short reconstructed vector in , i.e., if is a short vector in then this leads to a short vector (with the same coefficients ) in where B and are the bases for and respectively.
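The reconstruction step can be illustrated on a toy example. The sketch below uses a hypothetical 3 × 3 integer basis in which the last column is 3 times the first one; it is not the matrix of this paper. Taking the same integer combination of rows in the reduced and in the full basis gives a full vector whose removed coordinate is that multiple of a kept coordinate, so its squared norm exceeds the reduced one by at most the factor $1 + \lambda^2$, where $\lambda$ is the multiplier.

```python
def drop_columns(rows, removed):
    """Return a copy of the basis without the column indices in `removed`."""
    return [[x for j, x in enumerate(row) if j not in removed] for row in rows]


def combine(coeffs, basis):
    """Integer linear combination sum_i coeffs[i] * basis[i]."""
    width = len(basis[0])
    return [sum(c * row[j] for c, row in zip(coeffs, basis)) for j in range(width)]


def norm_sq(vector):
    return sum(x * x for x in vector)


# Hypothetical toy basis: column 2 equals LAMBDA times column 0.
LAMBDA = 3
B = [
    [1, 0, 3],
    [2, 5, 6],
    [4, 1, 12],
]
B_sub = drop_columns(B, removed={2})

# The same coefficients are used in both lattices.
coeffs = [1, -1, 0]
v_sub = combine(coeffs, B_sub)
v_full = combine(coeffs, B)

print(v_sub, v_full)
print(norm_sq(v_full) <= (1 + LAMBDA**2) * norm_sq(v_sub))
```

When several columns are removed, the same argument applies column by column, with one multiplier per removed column.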

As we removed all dependent columns in

to form a matrix

, apply the lattice based techniques to

instead of

to get an attack bound; these lattice reduction techniques give the required short vectors in

for a given bound. The matrix

is lower triangular, with the same rows as in

and each column corresponding to the coefficients of one of the variables (the leading monomials of the

and

-shifts).
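Lower triangularity matters because the determinant of a triangular matrix is the product of its diagonal entries, so the lattice determinant can be read off from the diagonal. The following minimal Python sketch confirms this on a hypothetical 3 × 3 toy matrix (not the matrix of this paper) by comparing the diagonal product with a determinant computed by exact Gaussian elimination.

```python
from fractions import Fraction


def determinant(matrix):
    """Determinant by Gaussian elimination with exact rational arithmetic."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero pivot.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def diagonal_product(matrix):
    product = 1
    for i, row in enumerate(matrix):
        product *= row[i]
    return product


# Hypothetical lower triangular toy basis.
M_toy = [
    [7, 0, 0],
    [3, 14, 0],
    [5, 2, 21],
]

print(determinant(M_toy), diagonal_product(M_toy))
```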

Therefore

is a lattice spanned by coefficient vectors of the shift polynomials

and

where

Since

is a full-rank lattice,

where

denote the number of

in all the diagonal elements of

respectively. As

is a leading monomial of

with coefficient 1, we have

Take

, then for sufficiently large

m, the exponents

and the dimension

reduce to

Applying the LLL algorithm to the basis vectors of the lattice

, i.e., the coefficient vectors of the shift polynomials, we get an LLL-reduced basis, say

and from Theorem 1 we have

In order to apply the generalization of Howgrave-Graham’s result in Theorem 2, we need the following inequality

From this, we deduce

As the dimension does not depend on the public encryption exponent e, is a fixed constant, so we need the inequality i.e.,

Substituting all values, taking logarithms, neglecting the lower-order terms and simplifying by

we get

The left-hand side of the inequality is minimized at

and substituting this value into the above inequality we get

From the first three short vectors

and

in the LLL-reduced basis of a basis

B in

we consider three polynomials

and

over

such that

. These short vectors

and

lead to the short vectors

and

respectively and

and

are the corresponding polynomials. Applying the same analysis as in [7] to the above polynomials yields the factors

p and

q of RSA modulus

N.

Theorem 5. Let be an RSA modulus with . Let and k be the multiplicative inverse of modulo e. Suppose the prime sum is of the form , for a known positive integer n and for and one can factor N in polynomial time if

Proof. This follows from the above argument and the fact that the LLL lattice basis reduction algorithm operates in polynomial time [9]. □
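For readers who wish to experiment with the reduction step, the following is a minimal sketch of a textbook LLL variant in Python, using exact rational arithmetic and the parameter $\delta = 3/4$. It is not the implementation behind the results of this paper, it recomputes the Gram-Schmidt data after every change (simple but slow), and the 3 × 3 input basis is a hypothetical toy example. The input rows are assumed to be linearly independent.

```python
from fractions import Fraction


def dot(u, v):
    return sum(Fraction(a) * b for a, b in zip(u, v))


def gram_schmidt(basis):
    """Return the orthogonalised vectors and the coefficients mu[i][j]."""
    ortho = []
    mu = [[Fraction(0)] * len(basis) for _ in basis]
    for i, b in enumerate(basis):
        v = [Fraction(x) for x in b]
        for j in range(i):
            mu[i][j] = dot(b, ortho[j]) / dot(ortho[j], ortho[j])
            v = [vi - mu[i][j] * oj for vi, oj in zip(v, ortho[j])]
        ortho.append(v)
    return ortho, mu


def lll(basis, delta=Fraction(3, 4)):
    """Textbook LLL reduction of the rows of an integer basis."""
    b = [list(row) for row in basis]
    n = len(b)
    ortho, mu = gram_schmidt(b)
    k = 1
    while k < n:
        # Size reduction: force |mu[k][j]| <= 1/2 for all j < k.
        for j in range(k - 1, -1, -1):
            q = round(mu[k][j])
            if q:
                b[k] = [x - q * y for x, y in zip(b[k], b[j])]
                ortho, mu = gram_schmidt(b)
        # Lovasz condition between rows k-1 and k.
        lhs = dot(ortho[k], ortho[k])
        rhs = (delta - mu[k][k - 1] ** 2) * dot(ortho[k - 1], ortho[k - 1])
        if lhs >= rhs:
            k += 1
        else:
            b[k], b[k - 1] = b[k - 1], b[k]
            ortho, mu = gram_schmidt(b)
            k = max(k - 1, 1)
    return b


# Hypothetical toy basis whose determinant has absolute value 3.
toy_basis = [[1, 1, 1], [-1, 0, 2], [3, 5, 6]]
reduced = lll(toy_basis)
print(reduced)
```

On termination the output is size-reduced and satisfies the Lovász condition, which implies the standard bound $\|b_1\| \le 2^{(w-1)/4} \det(L)^{1/w}$ on the first reduced vector for a lattice of dimension $w$.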

Note that for any given primes

p and

q with

, we can always find a positive integer

n such that

where

. A typical example is

as

[

12]. So take

and

in the range (0, 0.25).

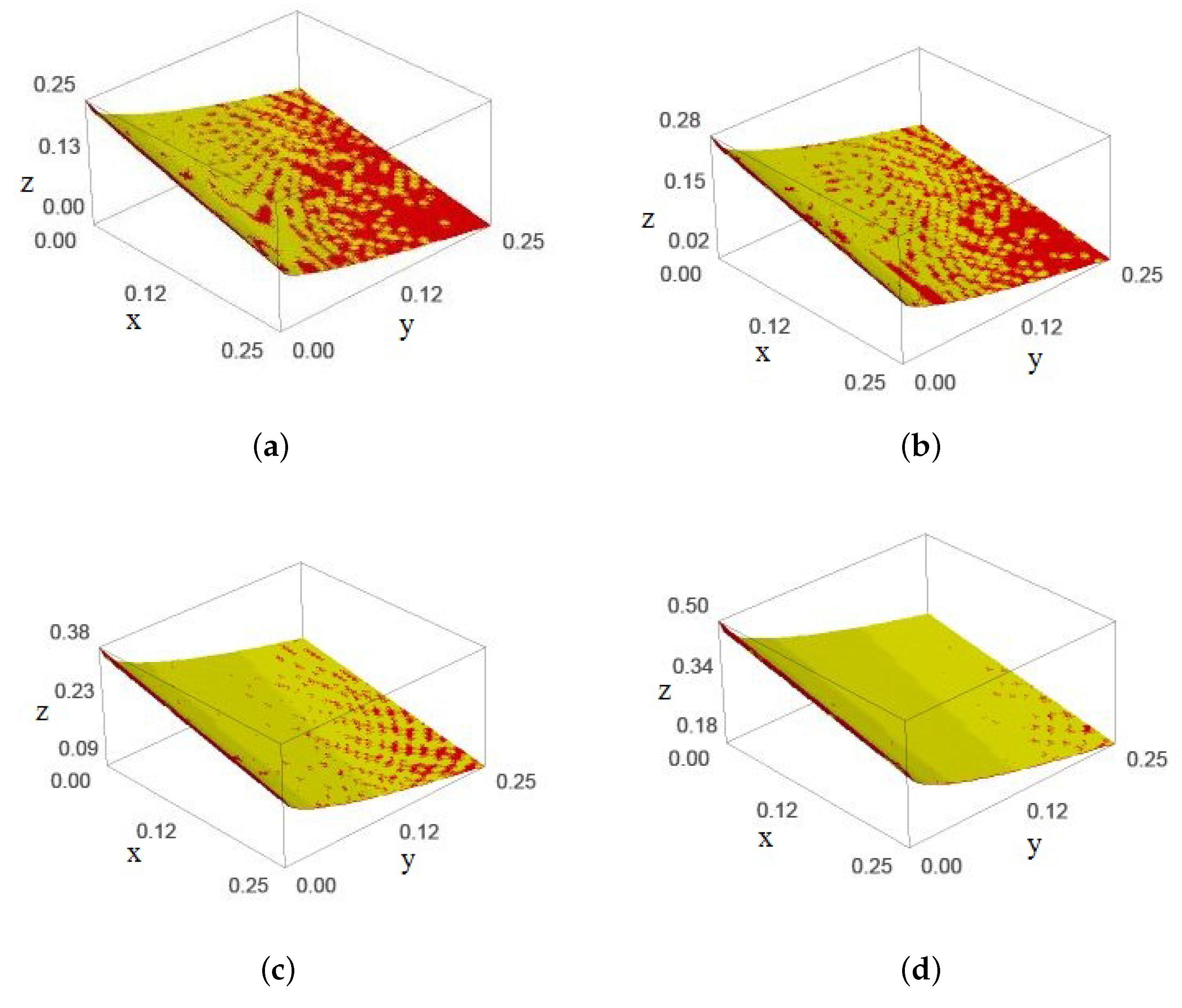

Let

and

be the bounds for

in inequalities (

1) and (

2) respectively. Then note that

is slightly larger than

, as depicted in

Figure 1 for

and 1.

In

Figure 1,

,

z-axis represents

, bound for

respectively, and the yellow and red regions represent

,

respectively. The figure shows that the yellow region lies slightly above the red region, i.e.,

is slightly greater than

and this improvement increases when the values of

increase.

As the dimension of

L is

for

[

7] and

is

for

, note the dimension of

is

, for

smaller than the dimension of

L.