Abstract

The article proposes a universal dual-axis scale for assessing intelligent systems. The scale considers the properties of intelligent systems within an environmental context that develops over time. In contrast to the common practice of placing the “mind” of artificial intelligent systems on a scale from “weak” to “strong”, we highlight the modulating influence of anticipatory ability on their “brute force”. In addition, the complexity (the “weight”) of a cognitive task and the ability to critically assess it beforehand determine the actual set of cognitive tools whose use yields the best result under given conditions. In fact, the presence of “common sense” options is what connects the ability to solve a problem with the correct use of that ability. The degree of “correctness” and “adequacy” is determined by how well a suitable solution matches the temporal characteristics of the event, phenomenon, object or subject under study. The proposed approach can be applied to evaluate various intelligent agents in different contexts, including AI and humans performing complex domain-specific tasks with high uncertainty.

1. Introduction

Let’s suppose that the definitions of “artificial intelligence”, “power of intelligence” and “critical thinking” are unambiguous: that these terms are agreed upon and accepted by everyone, and are understood by everyone to the same extent as, for example, the definition of “light”. Following the ideas of P. Feyerabend [1], our reasoning rests on the general idea that the manifestations of an entity that occupies people’s thoughts and is the subject of their real activity can characterize this entity in enough detail to attempt to isolate, study and describe it. In conversations with people of past generations, a frequent response to a question was “I don’t know enough about this”, followed by a suggestion to ask someone else who, in the respondent’s opinion, might know better and give the correct answer. Such a critical approach to assessing one’s own “intellectual power” is traditional and is reflected in the most ancient, millennia-old evidence [2]: the fairy tales of different peoples. For example, in the Russian folk tale “Rejuvenating apples and life-giving water”, the main character seeks advice on a difficult matter from a magical assistant, Baba Yaga: “give your head to my mighty shoulders, direct me to mind and to reason”. Today one may get the impression that some “magic assistant”, for example, an Internet search engine, is always nearby and thereby guarantees omniscience, or at least a self-evident opportunity for anyone to hold a genuine opinion and freely judge this or that event or phenomenon, complex system or scientific work, even without resorting to the “magic assistant”. In other words, the mere availability of knowledge seems to make the person next to you a priori “smarter”.
It is likely that in studying this kind of phenomenon we are dealing neither simply with a lack of time for reflection due to the high pace of life nor with insufficient attentiveness, since attempts to increase “mindfulness” do not enhance critical thinking [3]. Nor does possessing a large amount of knowledge make a person smarter. Recall another old Indian tale about “foolish smart men”, the three Brahmins who “studied many different sciences and considered themselves smarter than everyone” [4]. Having invited a “stupid” peasant to guide them through the mountains, they found a dead lion and revived it despite the warnings of the guide, who was the only one to escape the resurrected predator. The tale ends with the message: “To a fool, science is like the light of a lamp to a blind man!”

The discussion of how the concepts of “cognition” and “intelligence” are defined and related involves two fields: the psychology of cognitive development and the psychology of intelligence [5]. The Cattell-Horn-Carroll model [6], usually considered the most authoritative, serves as the basis for psychometrics. At the same time, there is an opinion that “these two disciplines did not interact closely through the years despite their common focus on the human mind, despite calls to relate them as noted in the many of the papers in this special issue. Their weak interaction is reflected in the fact that so far there are no commonly accepted theoretical constructs that might unite the two disciplines” [5]. Attempts to synthesize different approaches can also be seen, for example, in combining the views of L. S. Vygotsky and J. Haidt [7]. In our opinion, the relationship between emotions and intelligence in assessing the general “mind” of a person remains an important issue [8]. However, the proposed universal two-axis scale appears to let us bypass this issue because it includes an assessment of predictive “power”, and anticipation, prediction, the sense of the future and the prediction of behavior also concern the emotional sphere [9,10,11].

Modern artificial intelligence (AI) [12] should be considered a multi-disciplinary field of knowledge with technical, philosophical, social, psychological, and other aspects. However, this multi-disciplinary nature often complicates a unified definition of AI and related concepts. Philosophically, AI is widely discussed in terms of ethics, trust, and other issues. One of the main philosophical questions in this area was raised by A. Turing, who addressed whether a machine can think through the concept of the “imitation game” (now better known as the Turing test) [13].

When considering the idea of a “thinking” agent, an important concept is cognition and cognitive ability. Following pioneering works such as [14,15], cognition is usually defined as the acquisition of knowledge and understanding through thought, experience, and the senses. Still, it touches on multiple areas, including cognitive psychology, epistemology, linguistics, etc. [16]. Another concept worth noting is “higher nervous activity” [18], proposed earlier by I.P. Pavlov [17]; it is close to cognition but originates mostly from physiological ideas and concepts, whereas modern ideas of cognition belong mostly to psychology and cognitive science.

Describing his theory of functional systems, P. Anokhin noted that “any fractional function of an organism turns out to be possible only if, at the moment of the formation of a decision and a command to action, a prediction mechanism is immediately formed. It is quite obvious that machines that could ‘look into the future’ at every stage of their operation would receive a significant advantage over the modern ones” [19]. The idea of “prediction” as a distinctive feature for creating intelligent systems, also expressed by Norbert Wiener for cybernetics, was actively developed in the mid-20th century [20]. It anticipated many modern problems of building artificial intelligence, including the search for analogies with the living brain [21]. Once we focus on the ability to “see the future”, it becomes clear that the “foolish smart men” from the Indian fairy tale lacked it, unlike the “stupid” peasant. In other words, neither knowledge nor “big data” by itself determines critical thinking in a person or a similar property in artificial intelligence.

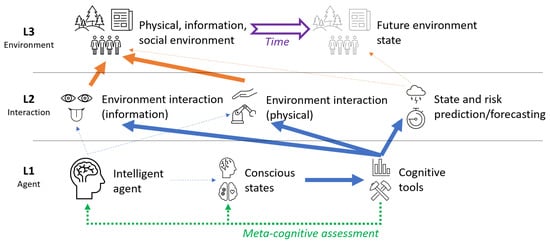

Thus, considering only computing power and performance as indicators of the power of intellect is futile if predictive ability is ignored. An obligatory condition for the existence of real (living) intelligent systems is taking time into account: its flow and the correlation of past, present and future events with the system itself and the surrounding world. A simplified diagram is presented in Figure 1. In the figure, an intelligent agent has access to sufficiently powerful intellectual tools that significantly enhance the agent’s ability to assess the environment (physical, social, informational), its future states and the associated risks. The agent also has access to tools for meta-cognitive analysis and assessment of itself, its knowledge and abilities. However, access to these tools is limited by the agent’s capabilities for cognitive interaction. We explicitly identify three levels (L1–L3) of intelligent system interaction with the environment: the agent level, the interaction level, and the environment level. We consider two types of cross-level relationships: implicit (thin dashed lines), showing high-level conceptual interaction, and explicit (thick lines), showing more detailed interaction through the application of cognitive tools.
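The structure of Figure 1 can be written down as a small data model. This is a hypothetical encoding for illustration only: the class and field names, the rule that only explicit links name a cognitive tool, and the numeric “demand” and “capacity” used to express that access to tools is limited by the agent’s interaction capabilities are our assumptions, not part of the framework itself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    AGENT = "L1"
    INTERACTION = "L2"
    ENVIRONMENT = "L3"


class Link(Enum):
    IMPLICIT = "implicit"  # thin dashed lines: high-level conceptual interaction
    EXPLICIT = "explicit"  # thick lines: interaction through a cognitive tool


@dataclass(frozen=True)
class Relation:
    source: Level
    target: Level
    kind: Link
    tool: Optional[str] = None  # hypothetical rule: only explicit links name a tool

    def __post_init__(self) -> None:
        if self.source == self.target:
            raise ValueError("a cross-level relation must connect two different levels")
        if (self.kind is Link.EXPLICIT) != (self.tool is not None):
            raise ValueError("explicit links need a tool; implicit links must not name one")


def accessible_tools(demands: dict[str, float], capacity: float) -> set[str]:
    """Tools whose (hypothetical) demand does not exceed the agent's interaction capacity."""
    return {name for name, demand in demands.items() if demand <= capacity}


relations = [
    Relation(Level.AGENT, Level.ENVIRONMENT, Link.IMPLICIT),
    Relation(Level.AGENT, Level.INTERACTION, Link.EXPLICIT, tool="risk assessment"),
    Relation(Level.INTERACTION, Level.ENVIRONMENT, Link.EXPLICIT, tool="forecasting"),
]
demands = {"risk assessment": 0.4, "forecasting": 0.7, "self-assessment": 0.9}
print(accessible_tools(demands, capacity=0.75))
```

With a capacity of 0.75, the hypothetical “self-assessment” tool stays out of reach even though it exists, which is the distinction the figure draws between having a tool and being able to use it.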

Figure 1. The interaction of various conditions taken into account and used by the intelligent system.

In this context, we believe that the power of intellect should be assessed not on a linear scale from strong to weak but on at least two axes, the scales of brute force and smart force, together with a scale of adequacy. Accordingly, the purpose of this article is to present our approach to assessing intelligent systems.

Within our approach, we consider an agent (or a system of agents) as an intelligent system interacting with the environment. The rest of the article is structured as follows. First, we consider the individual (L1) reasoning limitations of an agent (Section 2). Next, in Section 3, we discuss objective complexity and issues in system-level evaluation and prediction (L3). Section 4 analyzes complex context-dependent, ethical, and other limitations of interaction and prediction (L2). Section 5 focuses on cognitive reasoning and the limitations that appear in the transition from L1 to L2. Section 6 considers the issues that arise when the prediction process faces environmental constraints (i.e., the transition from L2 to L3). Section 7 summarizes the considered aspects within a two-dimensional cognitive assessment scale that enables the evaluation of intelligent systems regardless of their nature. Finally, the last section provides concluding remarks and summarizes the work.

2. The Weight of Objects and the Complexity of Intellectual Tasks

If you ask an ordinary person whether they can lift a weight of 5 kg, they will most likely answer affirmatively, and truthfully. If you repeat the question for progressively greater weights, up to, for example, 100 or 200 kg, you will see that people usually judge very well what is proportionate to their own physical strength: they clearly understand that beyond a certain weight, lifting is impossible. It is much more complicated when one is asked to evaluate events or phenomena. If you ask someone who does not know mathematics to solve several equations, or someone who does not know a programming language to write code, the excessive complexity of the task will be obvious. However, a person may consider reasoning about extremely complex matters that lack such explicit formal markers of “complexity” (as program code or equations) to be within their intellectual “power”. Topics on which many people readily express judgments include, for example, the work of the government, epidemiological measures and vaccination [22,23], etc. Thus, without the “magic veil” of formulas, equations and code, complex cognitive tasks often seem quite clear and amenable to analysis, to holding an opinion or a judgment.

The importance of presenting information in a form that is comfortable to perceive is indicated, for example, by the phenomenon of “mathematics anxiety”, widely discussed by specialists (for example, [24,25]). At the same time, the connection between anxiety and the capacity for mental work is, as a rule, traced in clinical disciplines, for example, in affective disorders, psycho-emotional stress, and so on [26,27]. However, anxiety is not the whole story: a phenomenon like “math anxiety” cannot be attributed to a single factor [28]. A convenient generalized approach is therefore required (which we attempt to develop in this work). At the same time, one possible explanation for the difficulty of perceiving mathematical formulas, or of accurately gauging the real measure of one’s own intellectual capabilities, may be an element of “dogmatism” (see also the following discussion).

In such situations, fallacious judgments about complex things and a low degree of critical thinking can be compared (in an exaggerated way) to what happens in mental disorders (e.g., psychotic disorders such as schizophrenia, characterized by disturbances in thought, perception, and behavior as defined by DSM-5 [29]). Arul et al. [30] conclude that “…a delusion doesn’t have to stem from or be centered around an external event. Instead, delusions can, starting from a thought or some other internal event like a hallucination, still develop into a deeply held opinion, proposition, or judgement on oneself or one’s status. To summarize, a delusion begins with a snap judgement on an object of personal significance, which is elaborated on and pushed into an absurd direction by further snap judgements”.

It should also be noted that the theme of madness and rationality is reflected in philosophy, for example, in the works of Michel Foucault, in contrast to the more traditional psychiatric view of the mental norm (a normal intellectual process) [31]. Thus, problems of critical thinking and of evaluating events are today best explained by correlating the probability of events (facts) with existing experience (previously obtained representations, opinions or delusions) while ranking degrees of confidence. We believe that this can be described formally, for example, using Bayesian approaches [32]. Other possible approaches are catastrophe theory [33], in which the “breakdown” of critical thinking can be likened to the sudden (for an external observer) breaking of a ruler on which a load is placed, and the theory of self-organized criticality [34], in which the “breakdown” results from a prior “accumulation” of small intellectual errors. This is reflected in the common characterization of an unreliable statement as “built on sand”.
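As a minimal sketch of the Bayesian reading above (our illustration, not a model taken from [32]), prior experience can be represented as a prior distribution over competing hypotheses, a newly observed fact as the likelihood of that fact under each hypothesis, and the ranked degrees of confidence as the sorted posterior P(h | e) ∝ P(e | h)·P(h). The hypothesis labels and all probabilities below are hypothetical.

```python
def bayes_update(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Posterior P(h | e) proportional to P(e | h) * P(h), normalized over all hypotheses."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(e), the normalizing constant
    if evidence == 0:
        raise ValueError("the observed fact is impossible under every hypothesis")
    return {h: p / evidence for h, p in unnormalized.items()}


def rank(beliefs: dict[str, float]) -> list[tuple[str, float]]:
    """Order hypotheses from the most to the least confident."""
    return sorted(beliefs.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical likelihoods of one observed fact under three hypotheses.
likelihood = {"H1": 0.1, "H2": 0.6, "H3": 0.3}

open_prior = {"H1": 0.5, "H2": 0.3, "H3": 0.2}        # experience leaves room for alternatives
dogmatic_prior = {"H1": 0.98, "H2": 0.01, "H3": 0.01}  # near-certainty in one version

print(rank(bayes_update(open_prior, likelihood)))      # H2 moves to the top
print(rank(bayes_update(dogmatic_prior, likelihood)))  # H1 stays on top despite the evidence
```

With the open prior, a single fact that is six times more likely under H2 than under H1 reorders the ranking; with the near-certain prior, the same fact barely moves H1, and a hypothesis given a prior of exactly zero can never be revived by any evidence. This offers one possible, purely analogical, way to make the notion of “dogmatism” discussed below concrete.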

Also, according to the long-standing concept of Rokeach and Fruchter [35,36], an important characteristic of a person (an intelligent system) can be the level of dogmatism. Here, dogmatism refers to anxiety-related features, a certain rigidity of thinking. In our opinion, “dogmatism” can be correlated with a small set of predictions of the future, a “forced” orientation towards a single version of the future. In other words, we believe it is possible to draw a connection between “dogmatism” and low predictive ability on the intelligence scale we propose. At the same time, perseverance and confidence in the optimal version of future action (chosen from many versions) should be distinguished from dogmatism understood as a “poverty” of versions.

3. Nonlinearity and Elements of Artificial Intelligence

A chaotic system is deterministic: from exactly the same initial state it always evolves in the same way, but it is so sensitive to initial conditions that in practice it is predictable only over a short time interval (over longer intervals, prediction is hampered by the large number of influencing factors, numerical errors, etc.). In stochastic systems, the resulting state may vary even from the same initial condition. Systems of both types are hard to predict [37]. It is also challenging to distinguish between random and non-random events [38]. Therefore, there can be different approaches to the design of artificial intelligence. Today, AI is undergoing a phase of active development, often associated with deep learning technologies. The success of deep learning in a number of areas (e.g., computer vision, speech recognition) promotes the use of these methods in various applications. Nevertheless, AI models, like any other models used in applied settings, exhibit a high degree of uncertainty in different aspects [39]. Moreover, many AI models (especially those built within the connectionist framework) behave as a “black box”. An increase in model size and dimensionality in learning problems leads not only to greater computational complexity but also to unstable operation of learning algorithms (the so-called “curse of dimensionality”), which further increases the uncertainty of model structure and parameters. As a result, such models require mechanisms for interpreting and explaining both the results of predictive modeling and the structure of the model itself. In the current AI paradigm, such mechanisms are developed in explainable AI (XAI) and interpretable machine learning [40,41], which focus on structuring models and simulation results while taking into account the interpretable contribution of individual (subject-interpretable) factors. Bayesian inference mechanisms [42,43] and causal methods developed by J. Pearl [44] and other authors [45] also explicitly reveal the structure of models. Such approaches allow a shift to a new, “metacognitive” level of working with AI: using AI methods to identify the structure of AI’s own characteristics and to reduce internal structural and parametric uncertainty.
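The difference between the two kinds of unpredictability can be illustrated with a minimal sketch. We use the logistic map x_{n+1} = r·x_n·(1 − x_n) with r = 4, a standard textbook example of deterministic chaos, and, as an assumed stand-in for a stochastic system, a linear process with Gaussian noise; neither model comes from the cited works.

```python
import random


def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Deterministic chaos: iterate x -> r * x * (1 - x); r = 4 gives a chaotic regime."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def noisy_trajectory(x0: float, steps: int, rng: random.Random, sigma: float = 0.1) -> list[float]:
    """Stochastic system (illustrative choice): x -> 0.9 * x + Gaussian noise."""
    xs = [x0]
    for _ in range(steps):
        xs.append(0.9 * xs[-1] + rng.gauss(0.0, sigma))
    return xs


steps = 100
a = logistic_trajectory(0.2, steps)
b = logistic_trajectory(0.2, steps)          # identical initial state
c = logistic_trajectory(0.2 + 1e-10, steps)  # initial state perturbed by 1e-10

print(a == b)                                            # True: chaos is still deterministic
print(abs(a[5] - c[5]))                                  # still tiny after a few steps
print(max(abs(x - y) for x, y in zip(a[50:], c[50:])))   # no longer small: the forecast has expired

# Same initial state, different noise realizations -> different outcomes.
s1 = noisy_trajectory(0.0, steps, random.Random(1))
s2 = noisy_trajectory(0.0, steps, random.Random(2))
print(s1[-1] != s2[-1])                                  # True
```

The deterministic trajectories `a` and `b` coincide exactly, yet a perturbation of 10⁻¹⁰, far below any realistic measurement precision, makes the forecast worthless within a few dozen steps; the noisy process, by contrast, differs between runs from the very first step.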

On the other hand, the technical closedness of a model (a “black box”) may limit end users’ understanding of AI results and their willingness to use them. These limitations involve issues of trust [46], morality [47], and ethics [48] in AI. The problem of explainability is extended by questions of unbiasedness [49], stability [50], conformity to values and norms (value alignment) [51], the relationship with the objective formulation of the problem being solved [52], etc. AI development is shifting toward extended tasks: instead of creating isolated solutions that replace humans, the task is to organize collaboration between humans and AI within different approaches, such as human-centered AI [53,54] and augmented intelligence [55]. It is also critical to build effective interaction and mutual understanding between humans and AI, which calls for rethinking both the technical and the logical means of information exchange. For example, there are new perspectives on visualization [56], interaction in natural language [57], automating the management of AI models [58], and understanding user experience (UX) in light of explainable AI technologies [59]. Thus, the “metacognitive” level is already attained through the human-AI system, in which an isolated autonomous (albeit elaborate) AI system is not the goal but only a tool for achieving effective synergy of system elements.

Hence, combining different elements into an intellectual system further complicates it, increasing its nonlinearity or, at least, moving it further away from the “simple”, “formal” sequence of the cognitive process that, as it may seem to an observer, corresponds to “ordinary”, “understandable” logic. In other words, a method of solving a problem reminiscent of “insight” or “intuition” marks the high nonlinearity of the intellectual system, which is associated with effective intellectual work. Metaphorically, such a property of an intellectual system can be called a “hyperdrive” or “hypercognitive” property, like the engines of interstellar ships described in science fiction, and the method of solving a problem is similar to traveling through a “wormhole” in space.

4. Cognitive Limitations and Their Connection to Ethics in Artificial Intelligence

Kurt Vonnegut wrote: “If it weren’t for [people], the world would be an engineer’s paradise”. It is precisely people who should be the focus when it comes to artificial intelligence. All procedures, from data entry to the interpretation of results, require the algorithmization of critical thinking that takes cognitive factors into account. These factors include dominant paradigms, concepts, traditions, beliefs, social myths and cultural stereotypes, and all of them must be considered in creating strong AI that mimics human cognitive abilities. Here, we consider the “human” approach to AI according to Stuart J. Russell’s taxonomy (“human” vs. “rational”) [12,60], as we focus on unified cognitive abilities shared by humans, animals, and machines. Recall that the features of cognitive systems can be considered both through the humanistic approach (C. Rogers’ law of congruence) and in light of the cognitive approach. Furthermore, other aspects of human intelligence should be taken into account, for example, consciousness (being receptive to the environment); self-awareness (being aware of oneself as a separate person, in particular, understanding one’s own thoughts); empathy (the ability to “feel”, emotional intelligence); wisdom, motivation, etc. The complexity of the social structure is accompanied by a high degree of stochasticity of global processes and a high level of risk in every segment of society. Conditions of uncertainty and weak determinism form a space of non-standard problems that require equally non-standard approaches and solutions within the framework of strategic forecasting. One should not ignore the possibility of a singularity, which is very characteristic of social processes, wherein a single event generates a new meaning [61]. This is similar to mathematics, where a singularity is a point at which a function tends to infinity or otherwise ceases to be well-defined. We assume that at the onset of a singularity, the usual laws cease to operate [62].

Developing forecasts at bifurcation points or in periods of catastrophe, when systems that have exhausted their evolution fall apart, is especially difficult. Can artificial intelligence, trained to detect “correct” patterns and acting on input data, offer an adequate exit vector from bifurcation points? Furthermore, the current state of mankind, described by A.P. Nazaretyan as “polyfurcation”, surpasses previous phase transitions in the history of the Earth in its planetary and, possibly, cosmic consequences [63]. The result of polyfurcation is the formation of “hybrid structures”. The concept of hybridity has become popular today for explaining complex systems of different levels. The term itself has acquired a universal meaning and is used in a variety of contexts: from “hybrid” political regimes and “hybrid” forms of education to mixed reality technologies combining the real and virtual worlds, and to cognitive hybrid intelligent systems for decision support and forecasting. The latter include a hybrid of a fuzzy cognitive map (FCM, which reflects an analysis of the consciousness of the people involved in the analyzed processes) and the neuro-fuzzy network ANFIS, which supports decision-making in dynamic unstructured situations, for example, those involving the irrational behavior of people, or, in the cited work, in behavioral economics [64].
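The FCM component of such hybrids can be sketched in a few lines. Below we assume the common sigmoid update A_i(t+1) = f(A_i(t) + Σ_j w_ji·A_j(t)), one of several update rules used for FCMs; the three concepts and their weights are hypothetical and are not taken from [64], and the ANFIS part of the hybrid is not modeled.

```python
import math

# Hypothetical concepts; WEIGHTS[j][i] is the causal influence of concept j on concept i.
CONCEPTS = ["perceived risk", "anxiety", "rational deliberation"]
WEIGHTS = [
    [0.0, 0.7, 0.0],   # perceived risk raises anxiety
    [0.0, 0.0, -0.6],  # anxiety suppresses deliberation
    [-0.4, 0.0, 0.0],  # deliberation lowers perceived risk
]


def sigmoid(x: float, lam: float = 1.0) -> float:
    """Squash an activation into (0, 1); lam controls the steepness."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def fcm_step(state: list[float], weights: list[list[float]]) -> list[float]:
    """One synchronous update: each concept keeps its own activation plus weighted inputs."""
    n = len(state)
    return [sigmoid(state[i] + sum(state[j] * weights[j][i] for j in range(n)))
            for i in range(n)]


def fcm_run(state: list[float], weights: list[list[float]],
            max_iter: int = 100, tol: float = 1e-6) -> list[float]:
    """Iterate until activations change by less than tol (or max_iter is reached)."""
    for _ in range(max_iter):
        nxt = fcm_step(state, weights)
        if max(abs(a - b) for a, b in zip(nxt, state)) < tol:
            return nxt
        state = nxt
    return state


# Scenario: high initial perceived risk, no anxiety, moderate deliberation.
final = fcm_run([0.9, 0.0, 0.5], WEIGHTS)
for name, value in zip(CONCEPTS, final):
    print(f"{name}: {value:.3f}")
```

For these small weights the update is a contraction, so the map settles to a fixed point; with other weights an FCM may instead oscillate or behave chaotically, which is why the iteration cap is kept.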

For us, this research can serve as a detailed case study, because it shows how the influence of social, cognitive and emotional factors on economic decision-making can be taken into account. It also becomes clear that approaches from neurophysiology, psychology, linguistics, anthropology, as well as the entire apparatus of modern computer science, robotics and brain modeling, are in demand when solving this type of problem. All these areas are connected with the representation of knowledge in the human brain. The model of decision support in unstructured situations is of interest because, on the basis of a scale of criteria, it makes it possible to weigh a number of alternatives in forecasts of the development of a nonlinear situation under conditions of uncertainty, stochasticity, limited information, or weak determinism. It is in these cases that fuzzy logic (introduced in 1965 by Professor Lotfi A. Zadeh at the University of California, Berkeley) is justifiably used as a structural element of post-non-classical mathematics. Linguistically, it can be expressed as “yes, no, maybe”, all in a single utterance during reasoning. The advantages of the method are its flexibility, the ability to set rules, and the capacity to accept even inaccurate, distorted and erroneous information; all of this is inherent in human reasoning.
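A minimal sketch of the “yes, no, maybe” idea: a statement receives a degree of truth in [0, 1], three triangular membership functions (an illustrative assumption; many other shapes are used in practice) map that degree onto the linguistic values “no”, “maybe” and “yes”, and Zadeh’s standard operators combine memberships as AND = min, OR = max and NOT = 1 − x.

```python
def triangular(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership function: 0 outside (a, c), rising to 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Illustrative linguistic values over a degree of truth in [0, 1].
TERMS = {
    "no": (-0.5, 0.0, 0.5),
    "maybe": (0.0, 0.5, 1.0),
    "yes": (0.5, 1.0, 1.5),
}


def fuzzify(x: float) -> dict[str, float]:
    """Degree to which x belongs to each linguistic value."""
    return {term: triangular(x, *abc) for term, abc in TERMS.items()}


# Zadeh's standard fuzzy operators.
def f_and(p: float, q: float) -> float:
    return min(p, q)


def f_or(p: float, q: float) -> float:
    return max(p, q)


def f_not(p: float) -> float:
    return 1.0 - p


m = fuzzify(0.7)
print(m)  # partly "maybe" and partly "yes" at once, and not at all "no"
print(f_or(m["maybe"], f_not(m["maybe"])))  # below 1: the law of excluded middle is weakened
```

A degree of truth of 0.7 is simultaneously “maybe” to degree 0.6 and “yes” to degree 0.4, which is the sense in which a single fuzzy utterance can carry several answers at once.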

In other words, choosing a situational strategy and strategic forecasting are today unthinkable without the widespread use of neural network methods, which have been studied in detail, while choosing the most effective strategy is impossible without fuzzy logic methods.

Let us return, however, from long-term global forecasting to utilitarian, pragmatic tasks. These tasks nevertheless require the development of certain strategies, which increasingly rely on AI. Until recently, it was believed to be impossible to entrust AI with recruiting, training and retaining talent. First, this procedure carries great responsibility: the selection of personnel is the key to business success. Even a great business strategy is no better than none if the wrong person is put in the wrong place. However, the difficulty lies not so much in the responsibility of those who deal with human resources as in the complexity of the task, which requires determining the competence of applicants from dimensional values such as the level of knowledge, professional skills, self-motivation, self-esteem and role perception. Predicting an applicant’s qualities requires an evidence-based assessment of weakly formalized or even non-formalized cognitive tasks, whereas evidence that a solution is correct can be counted on only in well-formalized tasks (for example, mathematical ones). Meanwhile, the literature describes the development of a predictive model, based on a quantitative mathematical model, that uses AI to determine the level of personnel competence in order to optimize performance. The model tests discrete and continuous relationships between competency dimensions and their synergistic effects. Four AI algorithms are trained on a dataset of 362 data items: two optimized combinations of dimensional values from step 1 and 360 data items derived from a dimensional relationship that produces synergistic effects. The proposed model predicts the competence of applicants and compares it with the value, while providing optimized labor productivity for an adequate selection of human resources [65].

Although academic research in the field of intelligent automation is growing noticeably, there is still no clear understanding of the role these technologies play in human resource management (HRM) at the organizational (firm) and individual (employee) levels. In this regard, it is of interest to systematize academic contributions on intelligent automation and identify its problems for HRM. One such study reviews 45 articles examining artificial intelligence, robotics, and other advanced technologies in an HRM environment, selected from leading HRM, International Business (IB), General Management (GM), and Information Management (IM) journals. The results show that intelligent automation technologies represent a new approach to managing employees and improving firm performance; the review also analyzes significant problems at both the technological and the ethical levels. The impact of these technologies concerns human resource management strategies: changes in job structure, collaboration between humans, robots and AI, the ability to make adequate decisions in recruitment, and employee training and performance evaluation [66].

Many problems arise in the use of biometric technologies, which create digital representations of bodily characteristics to identify persons. Their widespread use has led to the institutionalization of registration and identification of persons, primarily in the field of the right to cross borders. Given the ever-increasing intensity of human movement around the globe (hopefully only temporarily limited by the pandemic) for various purposes (tourism, various kinds of migration, academic exchanges, etc.), biometric technologies will soon take a dominant place in official registration procedures. However, as usual, technologies outpace a timely understanding of their place in society, and it is this place that determines how digital procedures provide an objective and undeniable identification of a person. One of the first socio-anthropological studies based on ethnographic fieldwork among technical developers, border police, forensic scientists, IT hacktivists and migrants emphasizes that in practice biometric technologies (like any other technologies) are embedded in specific social contexts, fraught with ambiguity and uncertainty, and highly dependent on human interpretation and social identification [67].

In addition to the objective limitations of AI capabilities, questions of ethical, moral and philosophical limitations are raised increasingly often. Decisions made by artificial intelligence (no matter what criteria of social good guide them) will have non-obvious consequences related to the specifics of their implementation and people’s reactions to new technologies [60].

5. Intelligence and Predictive Ability

The modern view of the “anticipatory” activity of the living brain as a manifestation of mental activity [68], even in simple living beings (an idea previously also proposed by L.V. Krushinsky [69]), highlights the importance of this component of intelligence. The other side of the issue is the cybernetic approach to living things, which views “anticipation” as an element of the self-regulation of complex systems, for example, in the use of feedback and memory in medicine [70]. In Soviet systemic biological theories, such as the “theory of functional systems” by P.K. Anokhin [71] or N.A. Bernstein’s concept of motion control [72], the idea of anticipation, the “prediction of reality”, is also key to explaining behavior.
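The advantage Anokhin attributed to machines able to “look into the future” can be shown with a deliberately simple sketch (our assumption, not a model from [19] or [71]): a pursuer that aims at where a moving target is now (reactive) versus one that aims at the target’s position extrapolated one step ahead from its last observed velocity (anticipatory).

```python
def mean_tracking_error(target: list[float], anticipate: bool) -> float:
    """Mean distance between where the pursuer aims and where the target actually goes next."""
    errors = []
    for t in range(1, len(target) - 1):
        current, previous = target[t], target[t - 1]
        # Reactive: aim at the current position. Anticipatory: linear one-step extrapolation.
        aim = current + (current - previous) if anticipate else current
        errors.append(abs(target[t + 1] - aim))
    return sum(errors) / len(errors)


steady = [2.0 * t for t in range(11)]               # target moving at constant speed
zigzag = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]   # target that reverses every step

print(mean_tracking_error(steady, anticipate=False))  # 2.0: always one step behind
print(mean_tracking_error(steady, anticipate=True))   # 0.0: prediction matches reality
print(mean_tracking_error(zigzag, anticipate=True))   # 2.0: a wrong model of the future hurts
```

On the zigzag target the reactive pursuer does better (mean error 1.0), so anticipation pays off only when the predictive model fits the dynamics of the environment.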

Even “simple” living creatures not usually considered particularly “intelligent”, for example, fish, exhibit a wide range of complex behavior [73]. In other words, the overall “strength of intellect” of higher animals is not the only thing that plays an important role in behavior; there are other important features. In our opinion, one of them is the ability to predict, closely related to the ability to adapt to environmental conditions. The fact that such everyday adaptation is successfully carried out not only by conditionally “simple” creatures like fish, but even by jellyfish and other still simpler organisms that lack a brain like that of humans, indicates that efficient behavior is not determined by “computing power” alone.

The cognitive abilities of both living organisms and AI systems differ in the complexity of the functional characteristics available to a single living or artificial agent. In systematizing cognitive abilities, a number of solutions formalize an ordering relation (cognitive “superiority”) based on the set of available cognitive functions; these are cognitive scales.

Some examples of such scales include the BICA*AI community scale of cognition levels [74], which distinguishes five levels from reflexive to metacognitive and self-awareness, as well as the ConScale scale [75], based on the systematization of living organisms in terms of the level of cognitive development: from the uncontrolled behavior of an individual agent to “superconsciousness” (an agent operating with several “streams of consciousness”). In most cases, the highest levels of consciousness are characterized not only by predictive and proactive abilities, but also by the ability for (self-)reflection and meta-cognitive reasoning (i.e., conscious manipulation of one’s own cognitive functional characteristics).
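One simple way to formalize such an ordering, offered here only as an illustrative assumption (it is not the definition used by the BICA*AI scale [74] or ConScale [75]), is to describe each agent by the set of cognitive functions available to it and to treat set inclusion as “superiority”. The function names, agent profiles and cumulative levels below are hypothetical.

```python
from typing import FrozenSet, Optional

Profile = FrozenSet[str]

# Hypothetical cumulative scale: each level requires all functions of the levels below it.
LEVELS = [
    ("reactive", frozenset({"reflex"})),
    ("adaptive", frozenset({"reflex", "memory"})),
    ("anticipatory", frozenset({"reflex", "memory", "prediction"})),
    ("metacognitive", frozenset({"reflex", "memory", "prediction", "metacognition"})),
]


def compare(a: Profile, b: Profile) -> Optional[int]:
    """Partial order by inclusion: 1 if a is a proper superset of b, -1 if a proper subset,
    0 if equal, None if neither contains the other."""
    if a == b:
        return 0
    if a > b:  # proper superset
        return 1
    if a < b:  # proper subset
        return -1
    return None


def level_of(profile: Profile) -> str:
    """Highest level on the cumulative scale whose requirements are all met."""
    reached = "none"
    for name, required in LEVELS:
        if not required <= profile:
            break
        reached = name
    return reached


agent_a = frozenset({"reflex", "memory"})
agent_b = frozenset({"reflex", "memory", "prediction"})
agent_c = frozenset({"reflex", "memory", "planning"})

print(compare(agent_b, agent_a))             # 1: B has everything A has, and more
print(compare(agent_b, agent_c))             # None: neither profile contains the other
print(level_of(agent_b), level_of(agent_c))  # anticipatory adaptive
```

Projecting the partial order onto a single ladder of levels assigns C the same level as A even though C has a function A lacks, and it cannot express that B and C are incomparable; this is one concrete way in which a single linear scale loses information.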

At the same time, to understand the structure of the cognitive characteristics that explicitly configure the functional capabilities of consciousness, it is necessary to structure their contents, features, interactions, etc. systematically. Over the last decade of development of cognitive science and AI, interest in this line of research has produced many cognitive architectures [76] that formalize consciousness from different points of view, primarily from the point of view of building AI. In general, approaches to the construction of cognitive architectures fall into two directions: symbolism, which deductively structures the key elements of consciousness, and the emergent approach, most often within the framework of connectionism, which inductively generates elements of consciousness from combinations of atomic elements.

One of the main current problems in the field of cognitive architectures is handling functional elements of consciousness that remain difficult to systematize even at the level of metacognitive consciousness, for example, intuition, creative problem solving, inspiration, etc. A number of developers of cognitive architectures and individual AI solutions offer original approaches to the functional reproduction of such aspects of human consciousness: the Clarion cognitive architecture defines creative work as the interaction of explicit and implicit knowledge [77], the Darwinian Neurodynamics architecture proposes using evolutionary approaches to generate new ideas [78], and the developers of AlphaGo, the well-known Go-playing system, see reinforcement learning as a way to formalize intuition and the “sense of Go”. However, these questions generally remain open, even though many people actively use their own creative abilities. As a result, from the point of view of structuring the cognitive abilities of a natural intelligent agent (and, subsequently, an AI agent), at least two aspects require systematization: the tools available to the agent (memory, thinking, will, the ability to predict, decision-making, etc.) and the “accessibility” of these tools to consciousness.

In this situation, metacognition becomes not just a tool for self-assessment but a dimension that determines the ability of an agent to work consciously and purposefully with its functional capabilities. Within such a dimension, the management of the tools of the “mind” (self-criticism) becomes the most important knowledge for the agent, together with competencies (self-reflection) and skills (metacognition as an accessible management tool). Although this terminology primarily implies living agents, such a structure can (and in many cases should) also characterize AI agents. The current research focus on agency and self-awareness [79] is, in our opinion, also closely related to anticipation, including cases where brain activity is modulated by internal signaling [80,81]. In this context, we can speak of intelligent systems that contain components other than the conventional “main” or “central” brain, which, by interacting with one another, give the system the qualities of “hypercognition” discussed above.

It can also be argued that the “power”/“computing power” of an intelligent system alone does not determine its “agency”, its connection with the temporal characteristics of the environment, the agent’s self-correlation with processes unfolding in time, or its anticipation. In other words, the ability to anticipate probably does not depend directly on the conditional “strength” of the intellect, if, for example, fish are considered less intelligent than humans in terms of “computational power”. Conversely, the strength of intellect can be considered to increase by summing simple organisms (a swarm of bees, a school of fish) or artificial elements (for example, transistors). At the same time, the ability to predict is critical for living beings. With this approach, the ability to predict is what distinguishes a mere set of “intelligent” elements from a truly intelligent system.

In the context of interaction or control, anticipation can also be closely related to the concept of “feedforward”, introduced by I.A. Richards and widely developed in many areas, including cognitive science, control, and management [82,83], as a way of predicting the future context or future state of a system. Moreover, anticipation could be considered a prerequisite for proper feedforward in the closed loop between feedforward and feedback.
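To make the feedforward/feedback distinction concrete, here is a minimal Python sketch of a first-order discrete plant subject to a sinusoidal disturbance. The plant equation, gains, and disturbance are illustrative assumptions, and the feedforward term assumes a perfect forecast of the disturbance, i.e., the idealized limit of anticipation.

```python
import math


def simulate(use_feedforward: bool, steps: int = 200, a: float = 0.9,
             b: float = 0.5, k_fb: float = 0.8) -> float:
    """Mean absolute deviation of the state x from its setpoint 0.

    Illustrative plant: x[k+1] = a*x[k] + b*u[k] + w[k]
    """
    x = 0.0
    total_error = 0.0
    for k in range(steps):
        w = math.sin(0.1 * k)  # disturbance that will act at step k
        u = -k_fb * x          # feedback: correct the error already observed
        if use_feedforward:
            w_forecast = math.sin(0.1 * k)  # assumed perfect forecast of w
            u -= w_forecast / b             # cancel the disturbance before it acts
        x = a * x + b * u + w
        total_error += abs(x)
    return total_error / steps


if __name__ == "__main__":
    print("feedback only:         ", simulate(use_feedforward=False))
    print("feedforward + feedback:", simulate(use_feedforward=True))
```

With a perfect forecast, the feedforward term cancels the disturbance before it acts and the state stays at the setpoint, whereas feedback alone can only react after an error has appeared. With an imperfect forecast, the feedback term would still be needed to correct the residual, which is one way to read the closed loop between the two.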

6. Event Prediction and Conditional Environment Constraints

Different factors affect the capacity of an agent to use the available cognitive instruments. The first is the objective uncertainty of the instruments themselves. For example, when evaluating predictive modeling [39] as a tool of an AI agent, one should take into account the structural, parametric, and contextual uncertainty of the model, as well as the imperfection of input data and information (inaccuracy, inconsistency, incorrectness, integrity violations). As a result, predictions obtained with such a model carry the uncertainty of the simulation results. Similarly, living agents (natural intelligence) rely on limited available tools, both external (available sources of information, means of communication, etc.) and internal (memory, the ability to predict situations, physical skills, etc.). In both cases, one can objectively speak about the limitations of these tools and the uncertainty of their “output data”.
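How these uncertainties propagate into a prediction can be illustrated with a Monte Carlo sketch. Everything in it is hypothetical: two candidate model forms (linear and compound growth) stand in for structural uncertainty, a normally distributed growth rate for parametric uncertainty, and a noisy initial observation for imperfect input data. Contextual uncertainty is not modeled.

```python
from __future__ import annotations

import random
import statistics


def linear(x0: float, rate: float, t: int) -> float:
    """Candidate structure A: linear growth."""
    return x0 * (1.0 + rate * t)


def exponential(x0: float, rate: float, t: int) -> float:
    """Candidate structure B: compound (exponential) growth."""
    return x0 * (1.0 + rate) ** t


def predict(horizon: int = 10, x0_obs: float = 100.0, input_sd: float = 5.0,
            rate_mean: float = 0.03, rate_sd: float = 0.01,
            n_samples: int = 10_000, seed: int = 1) -> tuple[float, float, float]:
    """Return (mean, 5th percentile, 95th percentile) of the predicted value."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_samples):
        model = rng.choice([linear, exponential])  # structural uncertainty
        rate = rng.gauss(rate_mean, rate_sd)       # parametric uncertainty
        x0 = rng.gauss(x0_obs, input_sd)           # imperfect input data
        outcomes.append(model(x0, rate, horizon))
    outcomes.sort()
    low = outcomes[int(0.05 * n_samples)]
    high = outcomes[int(0.95 * n_samples)]
    return statistics.fmean(outcomes), low, high


if __name__ == "__main__":
    print("all sources:     ", predict())
    print("structural only: ", predict(input_sd=0.0, rate_sd=0.0))
```

Setting `input_sd` and `rate_sd` to zero leaves only the disagreement between the two model forms, so the 90% interval narrows; the wider interval in the full run is the sense in which a prediction inherits the uncertainty of the instrument that produced it.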

Secondly, people’s everyday activities often rely on systems of “quick” reasoning, which are essentially heuristics based on simplification that work in most cases. This approach, while evolutionarily justified, gave rise, on the one hand, to a system of reflexes and, on the other hand, to such phenomena as cognitive distortions [84] and irrational behavior [85], even in areas that at first glance require the priority of rational reasoning (economics, medicine, etc.). Nevertheless, at the level of meta-cognitive reasoning, the assessment of one’s own cognitive abilities is critical for reliable and informed decision-making.

Finally, social interaction in teams of various sizes has a significant impact on the work of intelligent agents. Collective behavior is, in a sense, also a heuristic: it simplifies the reasoning of an individual agent and expands the available tools to the level of distributed intelligence. On the one hand, a distributed intelligent system is able to store more knowledge, perceive and process more information, and, as a result, solve more complex problems [86]. On the other hand, such large-scale systems have increased complexity [87,88] and are characterized by emergent properties. As a result, the systemic effect can be not only positive [86] but also negative [89]. Moreover, a system with emergent properties may function optimally from the point of view of the system as a whole, but not from the point of view of a single agent. Again, this can characterize the system positively or negatively, depending on the ratio of collective and individual good. The individual agent is thus subject to all three constraints: instrumental-objective, subjective, and collective (inter-subjective, from the agent’s point of view).
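That the same collective heuristic can amplify either competence or error can be illustrated with a classic result, swapped in here for illustration rather than taken from the article: the Condorcet jury theorem. If independent agents each answer a binary question correctly with probability p, a simple majority of an odd-sized group is right more often than an individual when p > 0.5 and less often when p < 0.5. Independence of the agents is a strong simplifying assumption.

```python
from math import comb


def majority_accuracy(p: float, n: int) -> float:
    """Probability that a majority of n independent agents, each correct with
    probability p, reaches the correct binary decision."""
    if n < 1 or n % 2 == 0:
        raise ValueError("use a positive odd group size to avoid ties")
    need = n // 2 + 1  # smallest possible majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


if __name__ == "__main__":
    for p in (0.6, 0.4):
        print(p, [round(majority_accuracy(p, n), 3) for n in (1, 11, 101)])
```

Scaling up the group pushes a moderately competent collective towards near-certain correctness and a slightly misinformed one towards near-certain error: one simple way in which an identical aggregation rule yields either a positive or a negative systemic effect.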
At the same time, a decision-oriented intelligent agent must adequately control the operation of the available tools. On the one hand, AI expands the number of available tools; at the current level of development, however, we can speak of distributed intelligent systems [90] in which natural and artificial intelligence agents operate simultaneously, each with its own set of (limited) tools and (limited) cognitive abilities.

At the same time, AI becomes an element similar to a person, which means that it is required to have a comparable level of responsibility, adequacy, and even ethics. On the other hand, one must consider that, owing to the emergent properties of the system on a large scale, “naturally ethical” people and “artificially ethical” AI can generate systemic effects that deviate from established rules (including ethical ones). To prevent this, it is necessary to take into account the hybrid, symbiotic nature of the distributed intelligence systems that are emerging now and may appear in the future. This approach would make it possible to shape the emergent properties of distributed intelligent systems “automatically”, even when control at the level of the functional properties of individual agents is weak.

In a complex environment such as human society, it will always be difficult for a real intelligent system (a living person or a hypothetical artificial intelligence) to choose an intelligent solution that fulfills the super-conditions specified by current ethics. Similar paradoxes are described by Isaac Asimov in his fictional “Laws of Robotics”, where a problem of choice arises, for example, between protecting the robot itself, its human owner, and other people. Today there is a search for a future complex (human and robotic) ethics, which has prompted interest in the versions presented by science fiction writers such as I. Asimov. Here, the point of view that the “time of Singularity cannot be met by current biological technologies, and that human-robotic physiology must be integrated for the Singularity to occur” [91] is probably the most apt.

7. Dual-Axis Intelligent System Assessment Scale

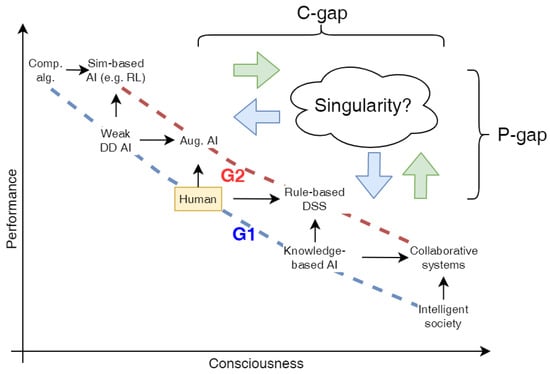

For a correct assessment of cognitive features, a one-dimensional scale isn’t sufficient. We propose a two-dimensional scale to achieve a comprehensive assessment of cognitive abilities. One of the dimensions of this scale is the level of available cognitive tools (conditional “computing power”, “performance”), and the other is the degree of adequate control of the tool by the forecasting agent. The advantage of such a scale is the possibility of a unified display of various systems: AI agents, live agents, distributed systems, etc. Considering such a scale (Figure 2), you can display the following on it, in descending order of performance and increasing order of adequacy:

Figure 2.

Dual-axis intelligent system assessment scale.

- (1) algorithmic solutions with predefined logic;

- (2) AI algorithms on data, including machine learning, data mining, etc.;

- (3) “classical” AI algorithms based on symbolic knowledge;

- (4) mass intelligent systems (as an ideal abstraction of “superconsciousness” in the terminology of ConsScale [75]).

Within this scale, a person (H) occupies an intermediate position: inferior in performance to computer systems (1–2), but significantly surpassing them in mastery of the available tools. Knowledge-based algorithms (3) lie on the other side of the scale: owing to a limited knowledge base, they have a narrower “outlook”, but they often offer greater stability and interpretability, which can be considered a level of awareness. Mass intelligent systems (4), while having common conditional knowledge/consciousness, also exhibit significant inconsistency.

These systems can be considered relatively homogeneous. Moreover, they can be hybridized, which in many situations makes it possible to build a second generation (G2) of intelligent systems that may, in the long term, surpass the first (G1). For example:

- if we combine deterministic algorithms with algorithms on data (1 + 2), we get solutions such as reinforcement learning [92] and, in particular, imitation learning [93];

- if we combine data-driven AI algorithms with human intelligence (2 + H), we obtain the currently active field of “augmented intelligence” (augmented AI), or human-centered AI [94,95];

- combining human agents with knowledge-based systems (H + 3) gives rise to human-machine interaction scenarios, such as those found in expert systems, decision support systems, etc.;

- combining knowledge-based systems with large-scale systems (3 + 4) corresponds to knowledge exchange systems, distributed learning systems, etc. [96,97].

It should be noted that second-generation solutions seem more promising and relevant today in terms of AI development and building information systems. Nevertheless, even within a multidimensional scale, there remains room to improve both the performance of the tools available to agents (P-gap) and the appropriateness of their use (C-gap). In the future, controllable complex systems with emergent properties may create precedents for self-organizing distributed intelligent systems [90] with desired or unexpected properties.
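The placements, the neighbouring G2 hybrids, and the P- and C-gaps can be sketched as a small data model. This Python sketch rests on stated assumptions: the ranks are purely illustrative ordinals (the article orders classes (1), (2), H, (3), (4) but assigns no numbers), and a hybrid is hypothetically assumed to inherit, at best, each parent's stronger rank on each axis. The helper names `dominates`, `hybrid`, `gaps`, and `TARGET` are ours.

```python
from __future__ import annotations

# Illustrative ordinal ranks (higher = more), NOT measurements.
G1 = {
    "1": (5, 1),  # predefined-logic algorithms: (performance, adequacy)
    "2": (4, 2),  # data-driven AI
    "H": (3, 3),  # human
    "3": (2, 4),  # symbolic knowledge-based AI
    "4": (1, 5),  # mass intelligent systems
}
ORDER = ["1", "2", "H", "3", "4"]


def dominates(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """True if `a` is at least as good as `b` on both axes and better on one."""
    return a[0] >= b[0] and a[1] >= b[1] and a != b


def hybrid(a: str, b: str) -> tuple[int, int]:
    """Hypothetical optimistic bound: the hybrid keeps each parent's better rank."""
    (pa, ca), (pb, cb) = G1[a], G1[b]
    return max(pa, pb), max(ca, cb)


def gaps(point: tuple[int, int], target: tuple[int, int]) -> tuple[int, int]:
    """(P-gap, C-gap): remaining shortfall from the target on each axis."""
    return target[0] - point[0], target[1] - point[1]


# Ideal reference point: the best rank observed on each axis in G1.
TARGET = (max(p for p, _ in G1.values()), max(c for _, c in G1.values()))

# Second generation: hybrids of neighbouring G1 classes (1+2, 2+H, H+3, 3+4).
G2 = {f"{a}+{b}": hybrid(a, b) for a, b in zip(ORDER, ORDER[1:])}

if __name__ == "__main__":
    # No G1 class dominates another: the classes form a pure trade-off line.
    print([(a, b) for a in G1 for b in G1 if dominates(G1[a], G1[b])])
    for name, point in G2.items():
        print(name, point, "gaps:", gaps(point, TARGET))
```

Under these assumptions no G1 class dominates another, each G2 hybrid dominates both of its parents, and yet every hybrid still leaves a nonzero P-gap or C-gap relative to the ideal point. This mirrors the qualitative argument rather than demonstrating it.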

This scale can be augmented with other dimensions. An obvious candidate for a third dimension can be the mass character (number) of intelligent systems. Large-scale mass systems can be present at various levels:

- at the level of deterministic algorithms (1), examples include computing systems [98] and workflow management systems [99];

- at the level of AI algorithms on data (2) this can be distributed data processing systems [100], including Internet of Things (IoT) systems [101];

- at the human level (H) this can be social systems;

- at the level of knowledge systems (3) this can be systems of intelligent agents [102];

- at the level of mass systems (4) this can be multi-agent systems, including hybrid and multi-agent reinforcement learning systems, etc. [103].

However, as intelligent systems are subjected to an increasingly sophisticated classification, two conditional axes should be considered basic: “brute” power (productivity) and “fine” power (adequacy, anticipation). These two axes mainly determine the integral and contextual effectiveness of an intelligent system. Thus, the two key properties that characterize the “mind” of an intelligent system are “power” and “productivity” on the one hand, and “adequacy” and “anticipativity” on the other: brute and intelligent power at the same time.

An important aspect of the developed approach is its applicability to various scenarios, different kinds of intelligent agents, and diverse problem domains. One of the most important directions of such application is evaluating the role and perceived characteristics of AI agents in complex problem domains such as medicine and industry. Here, AI agents may be considered either autonomous decision-makers or “partners” of human decision-makers. In both cases, the proposed dual-axis evaluation can be further extended to the high-level evaluation of trust and fairness in AI agents, and, further still, to the practical question of finding the right role for AI in existing or prospective domain-specific processes.

Concerning the development of the views proposed here, we believe it is possible to conduct numerical (“in silico”) experiments based on the concept of a “two-dimensional intelligence scale”. It should be specially noted that, in our opinion, “in silico” experiments are an emerging trend in research on intelligence and behavior, distinct from purely psychological approaches (such as [104]) or purely physiological ones, as in the case of Pavlov’s “higher nervous activity” [18]. They also differ from purely philosophical reflections, where mental constructions can often turn out to be conjecture: they complete the construction of possible variants of reality in the mind without necessarily coinciding with them in practice (for example, in the debate around homeopathy [105]), and remain beyond the reach of any evidence or test other than agreement among authoritative authors. Approaches to experimental research into the nature of intelligence appear to take the form of various types of modeling, design, or discussion of next-generation expert systems. For example, [106] discusses extending such systems with representative plasticity, attention switching, integration of the subject area, creativity, and concept formation to eliminate uncertainties. In other words, there are now a large number of works on AI in purely practical (computer science) problems, and generalizing the solutions developed there into more abstract concepts for philosophy is, in our opinion, a natural process. Conversely, it becomes possible to test abstract assumptions by modeling them with the arsenal of computer science available today. In this sense, even simplified, limited experimental studies can, in our opinion, significantly complement and expand our understanding of the phenomena under study.
We can conditionally call our approach “experimental philosophy”. However, its implementation requires suitable conditions for modeling, which also contain possible simplifications.
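As an illustration of the kind of simplified in silico experiment meant here, the following Python sketch is a toy model of our own, not an experiment reported in this article, that pits “brute” power against anticipation. A target moves at constant velocity; at each step the agent averages `power` noisy observations of the target's current position, and its action takes effect only after a fixed latency. A reactive agent aims at its current estimate; an anticipatory agent extrapolates the motion over the latency. The anticipatory agent is assumed to know the velocity exactly, and all parameter values are arbitrary.

```python
import random
import statistics


def run_trial(power: int, anticipates: bool, latency: int = 5,
              velocity: float = 1.0, noise_sd: float = 1.0,
              steps: int = 200, seed: int = 0) -> float:
    """Mean absolute miss distance at the moment each action takes effect.

    power       -- noisy observations averaged per step ("brute" power)
    anticipates -- whether the agent extrapolates over the latency ("fine" power)
    """
    rng = random.Random(seed)
    misses = []
    for t in range(steps):
        target_now = velocity * t
        samples = [rng.gauss(target_now, noise_sd) for _ in range(power)]
        estimate = statistics.fmean(samples)
        aim = estimate + velocity * latency if anticipates else estimate
        target_then = velocity * (t + latency)  # where the target is when the action lands
        misses.append(abs(aim - target_then))
    return statistics.fmean(misses)


if __name__ == "__main__":
    # Small grid over the two axes of the scale.
    for power in (1, 10, 100):
        for anticipates in (False, True):
            print(f"power={power:3d} anticipates={anticipates!s:5}: "
                  f"{run_trial(power, anticipates):.2f}")
```

In this toy setting, more power shrinks the variance of the estimate but cannot remove the bias introduced by latency, while anticipation removes that bias and is then further improved by power. Under these stated assumptions, a low-power anticipatory agent outperforms a high-power reactive one, which is one way to model anticipation as a modulating force on brute power.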

8. Conclusions

Living constantly within the stream of time, we often take for granted the flow of time from the past to the future, as well as the development of physical, chemical, biological, and other processes in time. When evaluating computers, we usually focus only on computing speed: so many calculations per second. However, the key property of a true intelligent system is the ability to correlate the solution being prepared with the passage of time, with the same time flow within which the system itself exists. This can be likened to calculating future moves in chess. However, chess has very little predictive complexity compared to the real challenges that even small fish face, given the conditions and options involved. For an intelligent system, this quality can also be conditionally designated as its “range of vision” in time under changing conditions: the ability to anticipate. In the dual-axis scale we propose, this “range of vision” is represented as a modulating, “subtle” force that affects the achievable efficiency of “brute” power, which in turn is represented as “performance” or “computing power”. At the same time, the “range of vision” can also be designated as “adequacy”: correspondence to the context and to the temporal characteristics of what is known. The ability to anticipate is inherent even in the simplest living organisms, for example, protozoa that escape drying out by crawling towards moisture, or that crawl from light to shade or vice versa. Since anticipation is not necessarily associated with a gigantic individual “computing power” (a developed brain), we highlight it as a separate quality of an intelligent system. If we evaluate an intelligent system not only by the number of transistors, calculations per second, and other attributes of “brute” force, but also by its relationship with an environment (conditions) that changes over time, we can obtain a more accurate and understandable characterization of the “mind” of such a system.
Thus, we are approaching the concept of common sense.

Author Contributions

Conceptualization, O.V.K. and S.V.K.; methodology, O.V.K. and N.G.B.; software, S.V.K.; validation, O.V.K. and N.G.B.; formal analysis, S.V.K.; investigation, O.V.K. and S.V.K.; resources, O.V.K.; data curation, S.V.K.; writing—original draft preparation, O.V.K., S.V.K. and N.G.B.; writing—review and editing, S.V.K.; visualization, S.V.K.; supervision, O.V.K.; project administration, O.V.K.; funding acquisition, O.V.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Feyerabend, P. Against Method: Outline of An Anarchistic Theory of Knowledge; Verso Books: New York, NY, USA, 2020.

- Da Silva, S.G.; Tehrani, J.J. Comparative phylogenetic analyses uncover the ancient roots of Indo-European folktales. R. Soc. Open Sci. 2016, 3, 150645.

- Noone, C.; Hogan, M.J. A randomised active-controlled trial to examine the effects of an online mindfulness intervention on executive control, critical thinking and key thinking dispositions in a university student sample. BMC Psychol. 2018, 6, 13.

- Hodza, N. Magic Bowl. Indian Fairy Tales (Translated); Detgiz: Moscow, Russia, 1956.

- Demetriou, A.; Spanoudis, G. From Cognitive Development to Intelligence: Translating Developmental Mental Milestones into Intellect. J. Intell. 2017, 5, 30.

- Schneider, W.J.; McGrew, K.S. The Cattell-Horn-Carroll model of intelligence. In Contemporary Intellectual Assessment: Theories, Tests, and Issues; Flanagan, D.P., Harrison, P.L., Eds.; The Guilford Press: New York, NY, USA, 2012; Chapter 4; pp. 99–144.

- Smagorinsky, P. The Relation between Emotion and Intellect: Which Governs Which? Integr. Psychol. Behav. Sci. 2021, 55, 769–778.

- Todd, R.M.; Miskovic, V.; Chikazoe, J.; Anderson, A.K. Emotional Objectivity: Neural Representations of Emotions and Their Interaction with Cognition. Annu. Rev. Psychol. 2020, 71, 25–48.

- Shih, Y.L.; Lin, C.Y. The relationship between action anticipation and emotion recognition in athletes of open skill sports. Cogn. Process. 2016, 17, 259–268.

- Zhao, Z.; Thornton, M.A.; Tamir, D.I. Accurate emotion prediction in dyads and groups and its potential social benefits. Emotion 2022, 22, 1030–1043.

- Thornton, M.A.; Tamir, D.I. Mental models accurately predict emotion transitions. Proc. Natl. Acad. Sci. USA 2017, 114, 5982–5987.

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson Education, Inc.: London, UK, 2009.

- Turing, A.M. Computing Machinery and Intelligence. Mind 1950, LIX, 433–460.

- Miller, G.A. Cognitive Science. Science 1981, 214, 57.

- Chomsky, N. Universals of Human Nature. Psychother. Psychosom. 2005, 74, 263–268.

- Anderson, J.R. The Architecture of Cognition; Psychology Press: New York, NY, USA, 2013.

- Samoilov, V.O. Ivan Petrovich Pavlov (1849–1936). J. Hist. Neurosci. 2007, 16, 74–89.

- Gantt, W.H. Pavlov’s “higher nervous activity”. Cond. Reflex 1968, 3, 281–286.

- Anokhin, P.K. Biology and Neurophysiology of the Conditioned Reflex; Medicine: Moscow, Russia, 1968.

- Maron, M. Design principles for an intelligent machine. IEEE Trans. Inf. Theory 1962, 8, 179–185.

- Macpherson, T.; Churchland, A.; Sejnowski, T.; DiCarlo, J.; Kamitani, Y.; Takahashi, H.; Hikida, T. Natural and Artificial Intelligence: A brief introduction to the interplay between AI and neuroscience research. Neural Netw. 2021, 144, 603–613.

- Dubé, E.; Gagnon, D.; Nickels, E.; Jeram, S.; Schuster, M. Mapping vaccine hesitancy—Country-specific characteristics of a global phenomenon. Vaccine 2014, 32, 6649–6654.

- Tram, K.H.; Saeed, S.; Bradley, C.; Fox, B.; Eshun-Wilson, I.; Mody, A.; Geng, E. Deliberation, Dissent, and Distrust: Understanding Distinct Drivers of Coronavirus Disease 2019 Vaccine Hesitancy in the United States. Clin. Infect. Dis. 2022, 74, 1429–1441.

- Cipora, K.; Santos, F.H.; Kucian, K.; Dowker, A. Mathematics anxiety—Where are we and where shall we go? Ann. N. Y. Acad. Sci. 2022, 1513, 10–20.

- Orbach, L.; Fritz, A. A latent profile analysis of math anxiety and core beliefs toward mathematics among children. Ann. N. Y. Acad. Sci. 2021, 1509, 130–144.

- Gorman, J.M. Comorbid depression and anxiety spectrum disorders. Depress. Anxiety 1996, 4, 160–168.

- Sun, X.; So, S.H.; Chan, R.C.K.; Chiu, C.D.; Leung, P.W.L. Worry and metacognitions as predictors of the development of anxiety and paranoia. Sci. Rep. 2019, 9, 14723.

- Wang, Z.; Shakeshaft, N.; Schofield, K.; Malanchini, M. Anxiety is not enough to drive me away: A latent profile analysis on math anxiety and math motivation. PLoS ONE 2018, 13, e0192072.

- Diagnostic and Statistical Manual of Mental Disorders: DSM-5; American Psychiatric Association: Arlington, VA, USA, 2013; p. 947.

- Arul, B.; Lee, D.; Marzen, S. A Proposed Probabilistic Method for Distinguishing Between Delusions and Other Environmental Judgements, With Applications to Psychotherapy. Front. Psychol. 2021, 12, 674108.

- Foucault, M. Madness and Civilization: A History of Insanity in the Age of Reason; Vintage: Paris, France, 2001.

- Shultz, T.R. The Bayesian revolution approaches psychological development. Dev. Sci. 2007, 10, 357–364.

- Arnold, V.I. Catastrophe Theory; Springer: Berlin/Heidelberg, Germany, 1992.

- Bak, P.; Tang, C.; Wiesenfeld, K. Self-organized criticality: An explanation of the 1/f noise. Phys. Rev. Lett. 1987, 59, 381–384.

- Rokeach, M.; Fruchter, B. A factorial study of dogmatism and related concepts. J. Abnorm. Soc. Psychol. 1956, 53, 356–360.

- Vacchiano, R.B.; Strauss, P.S.; Hochman, L. The open and closed mind: A review of dogmatism. Psychol. Bull. 1969, 71, 261–273.

- Ott, E. Chaos in Dynamical Systems; Cambridge University Press: Cambridge, UK, 2002.

- Boaretto, B.R.R.; Budzinski, R.C.; Rossi, K.L.; Prado, T.L.; Lopes, S.R.; Masoller, C. Discriminating chaotic and stochastic time series using permutation entropy and artificial neural networks. Sci. Rep. 2021, 11, 15789.

- Walker, W.; Harremoës, P.; Rotmans, J.; Van Der Sluijs, J.; Van Asselt, M.; Janssen, P.; Krayer Von Krauss, M. Defining Uncertainty: A Conceptual Basis for Uncertainty Management in Model-Based Decision Support. Integr. Assess. 2003, 4, 5–17.

- Murdoch, W.J.; Singh, C.; Kumbier, K.; Abbasi-Asl, R.; Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl. Acad. Sci. USA 2019, 116, 22071–22080.

- Abdul, A.; Vermeulen, J.; Wang, D.; Lim, B.Y.; Kankanhalli, M. Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–18.

- Diana, G.; Sainsbury, T.T.J.; Meyer, M.P. Bayesian inference of neuronal assemblies. PLoS Comput. Biol. 2019, 15, e1007481.

- Kutschireiter, A.; Surace, S.C.; Sprekeler, H.; Pfister, J.P. Nonlinear Bayesian filtering and learning: A neuronal dynamics for perception. Sci. Rep. 2017, 7, 8722.

- Pearl, J.; Mackenzie, D. The Book of Why: The New Science of Cause and Effect; Basic Books: New York, NY, USA, 2018.

- Antonacci, Y.; Minati, L.; Faes, L.; Pernice, R.; Nollo, G.; Toppi, J.; Pietrabissa, A.; Astolfi, L. Estimation of Granger causality through Artificial Neural Networks: Applications to physiological systems and chaotic electronic oscillators. PeerJ Comput. Sci. 2021, 7, e429.

- Herzig, A.; Lorini, E.; Hubner, J.F.; Vercouter, L. A logic of trust and reputation. Log. J. IGPL 2010, 18, 214–244.

- Allen, C.; Smit, I.; Wallach, W. Artificial Morality: Top-down, Bottom-up, and Hybrid Approaches. Ethics Inf. Technol. 2005, 7, 149–155.

- Santow, E. Emerging from AI utopia. Science 2020, 368, 9.

- Halabi, M.E.; Mitrović, S.; Norouzi-Fard, A.; Tardos, J.; Tarnawski, J. Fairness in Streaming Submodular Maximization: Algorithms and Hardness. arXiv 2020, arXiv:2010.07431.

- Wang, X.; Wang, S.; Chen, P.Y.; Wang, Y.; Kulis, B.; Lin, X.; Chin, S. Protecting Neural Networks with Hierarchical Random Switching: Towards Better Robustness-Accuracy Trade-off for Stochastic Defenses. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 6013–6019.

- Gabriel, I. Artificial Intelligence, Values, and Alignment. Minds Mach. 2020, 30, 411–437.

- Kovalchuk, S.V.; Kopanitsa, G.D.; Derevitskii, I.V.; Matveev, G.A.; Savitskaya, D.A. Three-stage intelligent support of clinical decision making for higher trust, validity, and explainability. J. Biomed. Inform. 2022, 127, 104013.

- Wang, D.; Weisz, J.D.; Muller, M.; Ram, P.; Geyer, W.; Dugan, C.; Tausczik, Y.; Samulowitz, H.; Gray, A. Human-AI Collaboration in Data Science: Exploring Data Scientists’ Perceptions of Automated AI. Proc. ACM Hum. Comput. Interact. 2019, 3, 1–24.

- Geyer, W.; Weisz, J.; Pinhanez, C.S. What Is Human-Centered AI? 2022. Available online: https://research.ibm.com/blog/what-is-human-centered-ai (accessed on 24 April 2023).

- Zheng, N.N.; Liu, Z.Y.; Ren, P.J.; Ma, Y.Q.; Chen, S.T.; Yu, S.Y.; Xue, J.R.; Chen, B.D.; Wang, F.Y. Hybrid-augmented intelligence: Collaboration and cognition. Front. Inf. Technol. Electron. Eng. 2017, 18, 153–179.

- Weidele, D.K.I.; Weisz, J.D.; Oduor, E.; Muller, M.; Andres, J.; Gray, A.; Wang, D. AutoAIViz: Opening the blackbox of automated artificial intelligence with conditional parallel coordinates. In Proceedings of the 25th International Conference on Intelligent User Interfaces, Cagliari, Italy, 17–20 March 2020; pp. 308–312.

- Cavalin, P.; Ribeiro, V.H.A.; Vasconcelos, M.; Pinhanez, C.; Nogima, J.; Ferreira, H. Towards a Method to Classify Language Style for Enhancing Conversational Systems. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Wang, D.; Andres, J.; Weisz, J.D.; Oduor, E.; Dugan, C. AutoDS: Towards Human-Centered Automation of Data Science. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–12.

- Wang, D.; Yang, Q.; Abdul, A.; Lim, B.Y. Designing Theory-Driven User-Centric Explainable AI. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–15.

- Russell, S.J. Human Compatible: Artificial Intelligence and the Problem of Control; Penguin Books: London, UK, 2019.

- Deleuze, G.; Guattari, F. What Is Philosophy? Aletheia: Davao City, Philippines, 1998.

- Burovsky, A.M. Another planetary revolution or a unique singularity? Hist. Psychol. Sociol. Hist. 2018, 11, 61–112. [Google Scholar] [CrossRef]

- Nazaretyan, A.P.; Karnatskaya, L.A. Historical and psychological background of global challenges. Dev. Personal. 2017, 20–46. [Google Scholar]

- Averkin, A.N.; Yarushev, S.A.; Pavlov, Y.V. Cognitive hybrid decision support and forecasting systems. Softw. Prod. Syst. 2017, 36, 632–642. [Google Scholar] [CrossRef]

- Chen, C.C.; Wei, C.C.; Chen, S.H.; Sun, L.M.; Lin, H.H. AI Predicted Competency Model to Maximize Job Performance. Cybern. Syst. 2022, 53, 298–317. [Google Scholar] [CrossRef]

- Vrontis, D.; Christofi, M.; Pereira, V.; Tarba, S.; Makrides, A.; Trichina, E. Artificial intelligence, robotics, advanced technologies and human resource management: A systematic review. Int. J. Hum. Resour. Manag. 2022, 33, 1237–1266.

- Grünenberg, K.; Møhl, P.; Olwig, K.F.; Simonsen, A. Issue Introduction: IDentities and Identity: Biometric Technologies, Borders and Migration. Ethnos 2022, 87, 211–222.

- Abbott, A. Inside the mind of an animal. Nature 2020, 584, 182–185.

- Krushinsky, L.V. Biological Foundations of Rational Activity. Evolutionary and Physiological-Genetic Aspects of Behavior; Moscow State University: Moscow, Russia, 1977.

- Nadin, M. (Ed.) Anticipation and Medicine; Springer International Publishing: Cham, Switzerland, 2017.

- Anokhin, P. Nodular Mechanism of Functional Systems as a Self-regulating Apparatus. In Progress in Brain Research; Elsevier: Amsterdam, The Netherlands, 1968; Volume 22, pp. 230–251.

- Bernstein, N.A. The Co-Ordination and Regulation of Movements; Pergamon Press: Oxford, UK, 1967.

- Salena, M.G.; Turko, A.J.; Singh, A.; Pathak, A.; Hughes, E.; Brown, C.; Balshine, S. Understanding fish cognition: A review and appraisal of current practices. Anim. Cogn. 2021, 24, 395–406.

- BICA*AI, Hierarchy of Levels of Cognition. Available online: https://bica.ai/hierarchy-of-levels-of-cognition/ (accessed on 24 April 2023).

- Arrabales, R. Conscious-Robots.com | ConsScale—A Machine Consciousness Scale. Available online: https://www.conscious-robots.com/consscale/index.html (accessed on 24 April 2023).

- Kotseruba, I.; Tsotsos, J.K. 40 years of cognitive architectures: Core cognitive abilities and practical applications. Artif. Intell. Rev. 2020, 53, 17–94.

- Hélie, S.; Sun, R. Incubation, insight, and creative problem solving: A unified theory and a connectionist model. Psychol. Rev. 2010, 117, 994–1024.

- Fedor, A.; Zachar, I.; Szilágyi, A.; Öllinger, M.; De Vladar, H.P.; Szathmáry, E. Cognitive Architecture with Evolutionary Dynamics Solves Insight Problem. Front. Psychol. 2017, 8, 427.

- Haggard, P. Sense of agency in the human brain. Nat. Rev. Neurosci. 2017, 18, 196–207.

- Kubryak, O. The Anticipating Heart. In Anticipation and Medicine; Nadin, M., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 49–65.

- Koreki, A.; Goeta, D.; Ricciardi, L.; Eilon, T.; Chen, J.; Critchley, H.D.; Garfinkel, S.N.; Edwards, M.; Yogarajah, M. The relationship between interoception and agency and its modulation by heartbeats: An exploratory study. Sci. Rep. 2022, 12, 13624.

- Richards, I.A. Communication between men: The meaning of language. In Proceedings of the Transactions of 8th Macy Conference-Cybernetics: Circular Causal and Feedback Mechanisms in Biological and Social System; Josiah Macy, Jr. Foundation: New York, NY, USA, 1952.

- Logan, R.K. Feedforward, IA Richards, cybernetics and Marshall McLuhan. Systema Connect. Matter Life Cult. Technol. 2015, 3, 177–185.

- O’Sullivan, E.; Schofield, S. Cognitive Bias in Clinical Medicine. J. R. Coll. Physicians Edinb. 2018, 48, 225–232.

- Becker, G.S. Irrational Behavior and Economic Theory. J. Political Econ. 1962, 70, 1–13.

- Surowiecki, J. The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies and Nations; Doubleday, Anchor: New York, NY, USA, 2004.

- Sayama, H. Introduction to the Modeling and Analysis of Complex Systems; Color, D., Ed.; Open SUNY Textbooks, Milne Library: Geneseo, NY, USA, 2015.

- Boccara, N. Modeling Complex Systems. In Graduate Texts in Physics; Springer: New York, NY, USA, 2010.

- Mackay, C. Extraordinary Popular Delusions and The Madness of Crowds; CreateSpace Independent Publishing Platform: New York, NY, USA, 1841; Reprint edition (23 July 2011).

- Guleva, V.; Shikov, E.; Bochenina, K.; Kovalchuk, S.; Alodjants, A.; Boukhanovsky, A. Emerging Complexity in Distributed Intelligent Systems. Entropy 2020, 22, 1437.

- Martin, A.K. Singularity now: Using the ventricular assist device as a model for future human-robotic physiology. Rom. J. Anaesth. Intensive Care 2016, 23, 77–81.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction. In Adaptive Computation and Machine Learning; MIT Press: Cambridge, MA, USA, 1998.

- Hussein, A.; Gaber, M.M.; Elyan, E.; Jayne, C. Imitation Learning: A Survey of Learning Methods. ACM Comput. Surv. 2018, 50, 1–35.

- Glowacka, D.; Howes, A.; Jokinen, J.P.; Oulasvirta, A.; Şimşek, O. RL4HCI: Reinforcement Learning for Humans, Computers, and Interaction. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–3.

- Harris, D.; Duffy, V.; Smith, M.; Stephanidis, C. (Eds.) Human-Centered Computing; CRC Press: Boca Raton, FL, USA, 2019.

- Zhen, L.; Jiang, Z.; Song, H. Distributed recommender for peer-to-peer knowledge sharing. Inf. Sci. 2010, 180, 3546–3561.

- Alavi, M.; Marakas, G.M.; Yoo, Y. A Comparative Study of Distributed Learning Environments on Learning Outcomes. Inf. Syst. Res. 2002, 13, 404–415.

- Buyya, R.; Thampi, S.M. (Eds.) Intelligent Distributed Computing; Volume 321, Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2015.

- Liu, J.; Pacitti, E.; Valduriez, P.; Mattoso, M. A Survey of Data-Intensive Scientific Workflow Management. J. Grid Comput. 2015, 13, 457–493.

- Isah, H.; Abughofa, T.; Mahfuz, S.; Ajerla, D.; Zulkernine, F.; Khan, S. A Survey of Distributed Data Stream Processing Frameworks. IEEE Access 2019, 7, 154300–154316.

- Li, S.; Xu, L.D.; Zhao, S. The internet of things: A survey. Inf. Syst. Front. 2015, 17, 243–259.

- Wooldridge, M.; Jennings, N.R. Intelligent agents: Theory and practice. Knowl. Eng. Rev. 1995, 10, 115–152.

- Zhang, K.; Yang, Z.; Başar, T. Multi-Agent Reinforcement Learning: A Selective Overview of Theories and Algorithms. arXiv 2021, arXiv:1911.10635.

- Miller, G.A. Trends and debates in cognitive psychology. Cognition 1981, 10, 215–225.

- Eskinazi, D. Madingley, Madding, Mad scenarios: An exercise in futurology. Br. Homeopath. J. 2000, 89, 146–149.

- Vigo, R.; Zeigler, D.E.; Wimsatt, J. Uncharted Aspects of Human Intelligence in Knowledge-Based “Intelligent” Systems. Philosophies 2022, 7, 46.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).