1. Introduction

We consider a problem of prediction (or inference or estimation). We seek to identify

where

and

are positive and finite real numbers that exhibit a statistical dependence

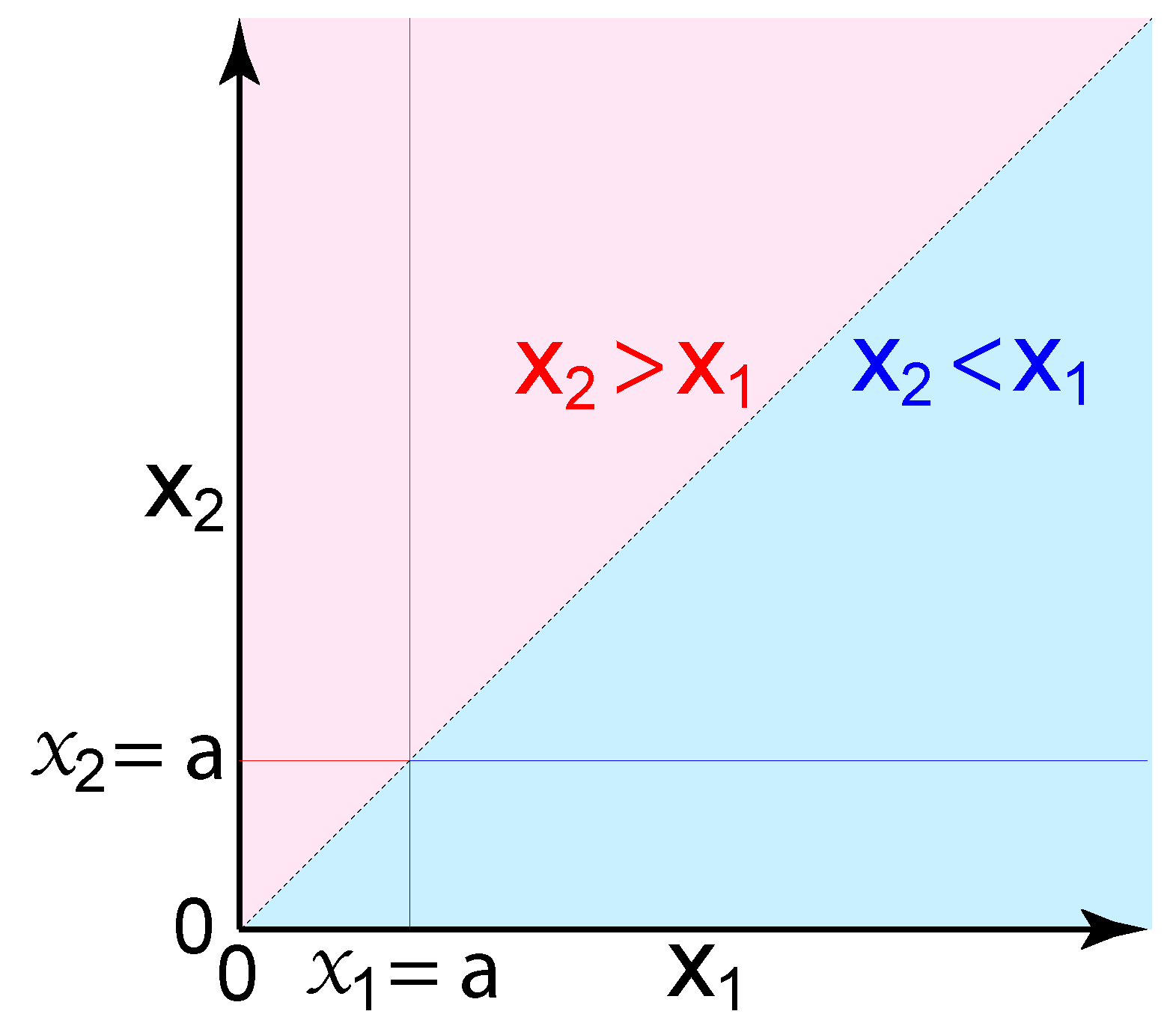

, and there is no additional information that is relevant to probability. These variables could represent physical sizes or magnitudes, such as distances, volumes, or energies. Given only one known size (a sample), what is the probability that an unknown size is larger versus smaller? The answer is not obvious, since there are infinitely more possible sizes corresponding to ‘larger’ than ‘smaller’ (Figure 1).

That such a basic question does not already have a recognized answer is explained not by its mathematical difficulty, but by the remarkable controversy that has surrounded the definition of probability over the last century. We cannot hope to overcome longstanding disputes here, but we try to clarify issues essential to our conclusion (at the cost of introducing substantial text to what would otherwise be concise and routine mathematics).

The question we pose does not involve any frequency; thus it is entirely nonsensical if probability measures frequency. The “frequentist” definition of probability dominates conventional statistics. It was promoted by Fisher and others in an effort to associate probability with objectivity and ontology, but it has severe faults and limitations [1]. We follow instead the Bayesian definition, in which probability concerns prediction (inference) and information (evidence) and epistemology.

A precise definition of Bayesian probability requires one to specify the exact mathematical relation used to derive probability from information (using criteria such as indifference, transformation invariance, and maximum entropy). This divides Bayesians into two camps. The “objectivists” consider the relation between information and probability to be deterministic [1,2,3,4,5,6,7,8,9,10,11,12], whereas the “subjectivists” consider it to be indeterminate [13,14,15,16,17,18,19,20] (for discussion, see References [1,11,12,17]). We follow the objective Bayesian approach, developed most notably by Jeffreys, Cox, and Jaynes [1,2,3,4,5,6,7].

All Bayesians agree that information is subjective, insofar as it varies across observers (in our view, an observer is information, and information is matter, therefore being local in space and time). The objectivists consider probability to be objective in the sense that properly derived probabilities rationally and correctly quantify information and prediction, just as numbers can objectively quantify distance and energy. Therefore, once the information in our propositions (e.g.,

) is rigorously defined, and sufficient axioms are established, there should exist a unique and objectively correct probability

that is fully determined by the information. In contrast, subjective Bayesians do not accept that there exists such a uniquely correct probability [13,14,16,17,18,20]; therefore they are predisposed to reject our conclusion.

Objectivity requires logical consistency. All rigorous Bayesians demand a mathematical system in which an observer with fixed information is logically consistent (e.g., never assigns distinct probabilities to the same proposition). However, subjectivists do not demand logical consistency across observers (Section 3.2). They accept that a Bayesian probability could differ between two observers despite each having identical evidence (see Reference [14], pp. 83–87). In that sense, they see probability as a local and personal measure of evidence, with the relation of probability to evidence across observers being indeterminate. In contrast, the objective Bayesian ideal is a universal mathematics of probability in which the same rules apply to all observers, so that probability measures evidence, and evidence uniquely determines probability.

The objective ideal can only be achieved by adopting a sufficient set of axioms (Section 3). We see failure to do so as the critical factor explaining why subjectivists believe the relation between information and probability to be indeterminate (Section 3.2). A mathematical system must be defined by axioms that are neither proven nor provable (they cannot be falsified). For example, there are axioms that define real numbers, and Kolmogorov’s axiom that a probability is a non-negative real number. These axioms are subjective insofar as others could have been chosen, and there is not a uniquely correct set of axioms. There may be any number of axiomatic systems that are each internally consistent and able to correctly (objectively) measure evidence across all observers. We believe the system of Jeffreys, Cox, and Jaynes to be one of these. Thus, we deduce

from our axioms, but we do not claim that our axioms are uniquely correct. We claim only that they are commonly used and useful standards.

We are interested in knowledge of only a single ‘sample’ because we believe it to be a simple yet realistic model of prediction by a physical observer, such as a sensor in a brain or robot (Section 10) [21,22]. Jaynes often used the example of an “ill informed robot” to clarify the relation of probabilities to information [1]. Unlike a robot, a scientist given only one sample can choose to collect more data before calculating probabilities and communicating results to other scientists. In contrast, a robot must continually predict and act upon its dynamically varying environment in “real time” given whatever internal information it happens to have here and now within its sensors and actuators. If the only information in a sensor at a single moment in time is a single energy

, then the probability that an external or future energy

is larger must be conditional on only

, as expressed by

. At that moment,

is fixed, and it is not an option for the sensor to gain more information or to remain agnostic. This example helps to clarify the role of probability theory, for which the fundamental problem is not to determine what the relevant information is, or should be, but rather to derive probabilities once the information has been precisely and explicitly defined.

2. Notation

We use bold and stylized font for variables (samples)

, and standard font for their corresponding state spaces

(note that these are not technically “random variables”; see

Section 4.2). Therefore

is an element from the set of real numbers

(

). Whereas these represent dependent variables,

are independent of one another (Section 6.3). The subscripts are arbitrary and exchangeable labels; therefore the implication of a sequence is an unintended consequence of this notation.

We denote arbitrary numbers

. For each uppercase letter representing real-numbered “location”, we have a corresponding lowercase letter representing a positive real “scale”,

(

Section 4.2). Therefore lowercase letters symbolize positive real numbers (except

, which symbolizes a physical size and is not a number).

As described in Section 4, all probabilities are conditional on prior information I and J, as well as K in cases involving both

and

(e.g.,

and

). For simplicity, this conditionality is not shown in notation.

We use uppercase P for the probability of discrete propositions, with defined intervals,

, as in the case of a cumulative distribution function (CDF). We use the lowercase p for a probability density function (PDF), which is the derivative of its corresponding CDF,

. Because our simplified notation omits the variable of interest and the propositions, for clarity, we define it for CDF and PDF as

where

represents the set of possible values of the number

; thus

represents an infinite set of propositions, one for each real number. For example, if we had discrete units of 0.1 rather than continuous real numbers, then

,

. Analogous notation applies to locations; thus,

.

A joint distribution over two variables is a marginal distribution of the full joint distribution (following the sum rule),

where n approaches infinity. Likewise,

is a marginal distribution of

.

4. Defining the Prior Information

We try to define the prior information as rigorously and explicitly as possible, since failure to do so has been a major source of confusion and conflict regarding probabilities. We distinguish information I about physical sizes, information J about our choice of numerical representation of size (the variables), and information K about the statistical dependence between variables. For clarity, we also distinguish the prior information from its absence (ignorance). Although it is the information that we seek to quantify with probabilities, it is its absence that leads directly to the mathematical expressions of symmetry that are the antecedents of our proofs.

4.1. Information I about Size

Information I is prior knowledge of physical ‘size’. Our working definition of a ‘size’ is a physical quantity that we postulate to exist, such as a distance, volume, or energy, which is usually understood to be positive and continuous. A ‘size’ need not have extension and is not distinguished from a ‘magnitude’. Critically, since a single size can be quantified by various numerical representations, and size is a relative rather than absolute quantity, a specific size is not uniquely associated with a specific number, and the space of possible sizes s is not a unique set of numbers. Information I concerns only prior knowledge of physical size , not its numerical representation (such as ).

We define I as the conjunction of three postulates.

1. There exist a finite and countable number of distinct sizes.

2. Each existing size is an element of a totally ordered space of possible sizes s.

3. For each existing size, there are larger and smaller possible sizes within s.

Information I is so minimal that it could reasonably be called “the complete absence of information”. Being so minimal, I appears vague, but its consequence becomes more apparent once we consider its many corollaries of ignorance. These constrain our choice of numerical representation (Section 4.2) and provide the sufficient antecedents for our proofs (Section 4.4).

4.2. Information J about Numerical Measures of Size

We should not confuse our information I about physical sizes with our information J about our choice of numerical representation. J corresponds to our knowledge of numbers, which includes our chosen axioms. We will therefore use the term “size” for a physical attribute, and “variable” for a number we use to quantify it (we quantify size

with variables

and

). Our choice of numerical representation J cannot have any influence on the actual sizes, or our information I about them; thus probabilities

must be invariant to our choice of numerical representation (parameterization) (Section 5.1) (although the density

does depend on J).

Information I implies certain properties that are desirable if not necessary in our choice of J. Postulate 1 indicates that there must be an integer number of sizes (samples) that actually exist, . Since no number is postulated, n is unknown, and its state space is countably infinite. For example, each size could be the distance between a pair of particles, and the number of pairs n is unknown. For each of these n sizes, we define below two corresponding numerical quantities (variables). Postulate 2 specifies no minimal distance between neighboring sizes in the ‘totally ordered space’ s; thus s is continuous. Postulate 3 specifies that, for any given size , larger and smaller sizes are possible. Thus, no bound is known for space s, and the range of possibilities is infinite. We therefore choose to represent a physical size with real numbered variables ( and ), with more positive numbers representing larger sizes. We emphasize that, although the true value of each variable is finite, that value is entirely unknown given prior information I and J; therefore its state space is uncountably infinite.

We choose two numerical representations, one measuring relative size as difference, and the other as ratio, related to one another by exponential and logarithmic functions.

We define information J as the conjunction of these equations, together with the standard distance metric in one dimension.

We therefore represent a single physical size

with two numbers (variables), “scale”

and “location”

, where

. In common practice, a “scale”

typically corresponds to a ratio (e.g.,

m is the ratio

, by definition); therefore, a “location” corresponds to a log ratio,

. Because we are free to choose any parameterization, and only one is necessary for a proof, it is not essential that we justify the relationship between parameterizations. However, the exponential relationship arises naturally and is at least a convenient choice.
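The claim in this section that probabilities are invariant to the choice of numerical representation, while densities are not, can be checked numerically. The following is only an illustrative sketch. It assumes a hypothetical density over scale, $1/(1+x)^2$, chosen for convenience and not taken from the text, and it writes the exponential relation between scale and location as $x = e^{X}$. The names `scale_density`, `location_density`, and `integrate` are placeholders introduced for this example.

```python
import math


def scale_density(x):
    """Hypothetical density over a positive scale x (an assumption for illustration)."""
    return 1.0 / (1.0 + x) ** 2


def location_density(X):
    """Density of the same distribution over location X, assuming x = exp(X).

    Change of variables: p(X) = p(x) * |dx/dX| = p(x) * x.
    """
    x = math.exp(X)
    return scale_density(x) * x


def integrate(f, lo, hi, n=100_000):
    """Midpoint-rule integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


# The proposition "the scale lies between a and b" in both representations.
a, b = 0.5, 3.0
p_scale = integrate(scale_density, a, b)
p_location = integrate(location_density, math.log(a), math.log(b))

print("probability via scale density:   ", p_scale)
print("probability via location density:", p_location)
print("density at x = 2 (scale):        ", scale_density(2.0))
print("density at X = ln 2 (location):  ", location_density(math.log(2.0)))
```

The two integrals agree to numerical precision, whereas the two density values at the same physical size differ by the Jacobian factor $x$.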

Since we follow the objective Bayesian definition of probability, our variables are not “random variables” in the standard sense (see Reference [1], especially p. 500). This is in part just semantic, since “random” is ill-defined in standard use and promotes misunderstanding. The technical distinction is that the standard definition of a “random variable” assumes there to be a function (known or unknown) that “randomly samples”

from its state space

following some “true probability distribution” (which could be determined by a physical process). We do not assume there to be any such function or true distribution. Information I and J leave us entirely agnostic about any potential process that might have generated the physical size we quantify with

. Such a process could just as well be deterministic as random, and even if we knew it to be one or the other, this alone would not alter the probabilities of concern here.

4.3. Information K about Dependence of Variables

Information K postulates that the variables are not independent but does not specify the exact form of dependence. The dependence between variables is typically specified as part of a statistical model. It relates to a measure of the state space in two or more dimensions. For example, the Euclidean distance metric, which exhibits rotational invariance, underlies what we call “Gaussian dependence”.

For independent variables

and

,

, and therefore our conclusion that

is obvious (although since

is not normalizable,

is not a unique median). Given our measures of distance (Equation (5)) and probability (Equation (6)) in one dimension, the measure in this two-dimensional state space is simply the product

where

is the joint CDF in our simplified notation (Equation (2)). Information K postulates a dependence, which we define by the negation of Equation (11):

We further define K to be any form of dependence (measure) consistent with the two corollaries specified below that result from the conjunction of information I, J, and K (Section 4.4). These corollaries mandate symmetries (Section 5) that define a set

of joint distributions

and

. Our proofs apply to every member of this set. They demonstrate that every member of the set has the form

, where

h is any function consistent with our corollaries.

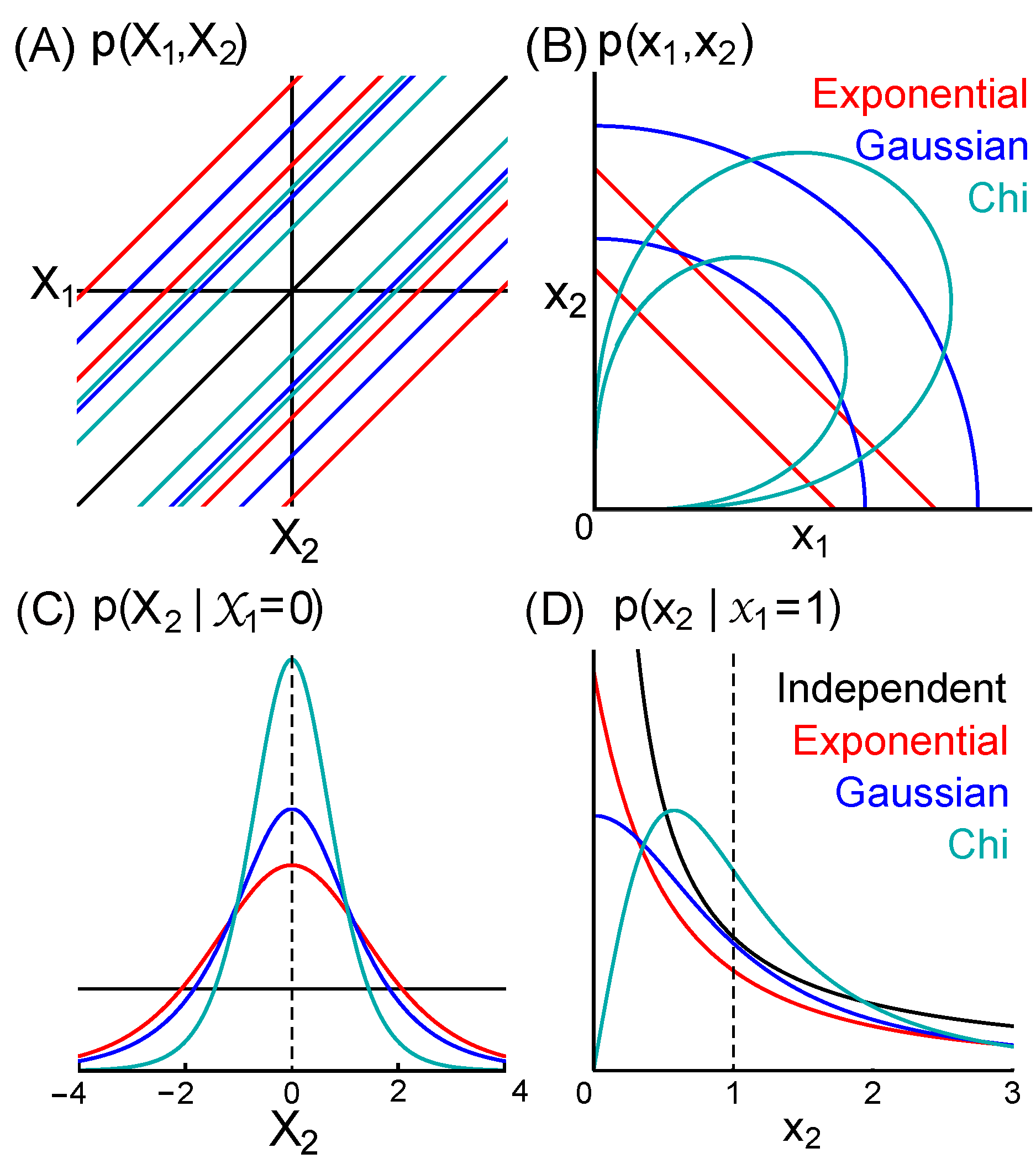

Figure 2 illustrates three exemplary distributions in set

. In

Section 7, we present in detail the special case of an “exponential” dependence or model.
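Section 7 is not reproduced here, so the following is only a sketch of one standard construction that fits the description above. It assumes that the “exponential” dependence means two scale variables that are conditionally independent and exponentially distributed given a shared unknown rate, with the scale-invariant prior $1/\lambda$ over that rate. The symbols $x_1$, $x_2$, and $\lambda$ are labels chosen for this sketch and may differ from the notation of Section 7.

```latex
% Joint density after marginalizing the shared rate (improper, hence "proportional to"):
p(x_1, x_2) \;\propto\; \int_0^\infty \lambda e^{-\lambda x_1}\,\lambda e^{-\lambda x_2}\,\frac{d\lambda}{\lambda}
  \;=\; \int_0^\infty \lambda\, e^{-\lambda (x_1 + x_2)}\, d\lambda
  \;=\; \frac{1}{(x_1 + x_2)^2}

% Conditional density of the unknown x_2 given the known x_1 (normalized over x_2 > 0):
p(x_2 \mid x_1) \;=\; \frac{x_1}{(x_1 + x_2)^2}

% Probability that the unknown is larger than the known:
P(x_2 > x_1 \mid x_1) \;=\; \int_{x_1}^{\infty} \frac{x_1}{(x_1 + x_2)^2}\, dx_2
  \;=\; \left[ -\frac{x_1}{x_1 + x_2} \right]_{x_1}^{\infty}
  \;=\; \frac{1}{2}
```

Under these assumptions the joint density is symmetric in its two arguments and is not normalizable, while the conditional density is proper and has the known value as its median.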

4.4. Corollaries of Ignorance Implied by I, J, and K

The conjunction of information I, J, and K is all prior knowledge, and its logical corollary is ignorance of all else. The consequence of ignorance is symmetry, which provides constraints that we use to derive probabilities.

Corollary. There is no information beyond that in I, J, and K.

1. There is no information discriminating the location or scale of one variable from another.

2. There is no information about the location or scale of any variable.

Corollaries 1 and 2 make explicit two sorts of ignorance that are necessary and sufficient for our proofs. Corollary 1 requires that we treat variables equally, meaning that they are “exchangeable” (Equation (14)). Corollary 2 is sufficient to establish location and scale invariance (Equations (15)–(18)) and to derive non-informative priors over single variables (Equations (19)–(22)).
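The equations cited in this paragraph are not reproduced here. As a reminder of what such symmetries usually look like, the sketch below writes the textbook forms of exchangeability and of location and scale invariance. It assumes a location $X$ and a scale $x = e^{X}$, a shift $a$, a positive factor $b$, and a positive constant $c$; these symbols are chosen for illustration and the expressions are not claimed to match the cited equations term by term.

```latex
% Exchangeability (ignorance that discriminates one variable from another):
p(x_1, x_2) = p(x_2, x_1)

% Location invariance (ignorance of location): a shift by any real a changes nothing,
% which forces a uniform density over location.
p(X)\,dX = p(X + a)\,dX \quad \forall a \in \mathbb{R}
  \;\;\Longrightarrow\;\; p(X) = c

% Transforming to scale with x = e^{X}, so that dX/dx = 1/x:
p(x) = p(X)\left|\frac{dX}{dx}\right| = \frac{c}{x}

% Check of scale invariance: rescaling by any b > 0 leaves the measure unchanged.
p(bx)\,d(bx) = \frac{c}{bx}\, b\, dx = \frac{c}{x}\,dx = p(x)\,dx
```

Neither density is normalizable, which is the usual property of non-informative priors over an unbounded location or scale.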

9. The Effect of Additional Information

A single known number is the median over an unknown only as a result of ignorance (Corollaries 1 and 2), and therefore additional information can result in loss of this symmetry. For example,

is not the median of

if

and

. This is because

and

are not exchangeable in the joint distribution

. An interesting special case is that

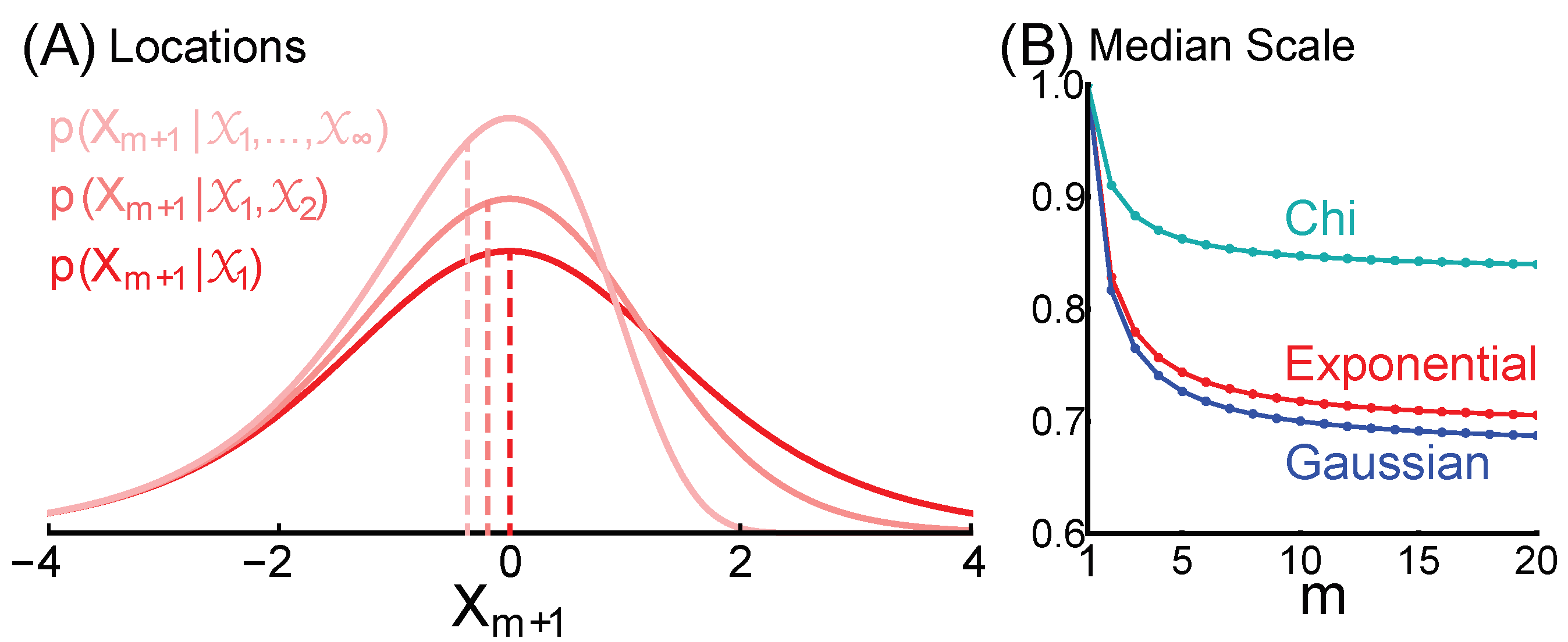

m variables are known and are equal to one another,

. One might expect that the median will be the one known number, but this is only true if

, at least for our exemplary forms of dependence. Scale information increases with

m, causing the distribution to skew towards smaller numbers (

Figure 3A). If

, the median converges to a number between 0 and 1 that is determined by the form of dependence (

Figure 3B). Given the exponential dependence, the median is

, which is ≈ 0.828 for

, and converges to

as

m goes to infinity.
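The behaviour described in this paragraph can be reproduced with a short calculation. The sketch below is not taken from Section 7. It assumes that the exponential dependence corresponds to exponentially distributed scales sharing an unknown rate with prior $1/\lambda$, and that all $m$ knowns equal 1. Under those assumptions the posterior over the rate is a gamma distribution with shape $m$ and rate $m$, the predictive survival function is $(m/(m+t))^m$, and setting it to $1/2$ gives a median of $m(2^{1/m}-1)$. The function names are placeholders.

```python
import math
import random


def predictive_median(m, known=1.0):
    """Closed-form median over an unknown scale given m knowns all equal to `known`.

    Assumes an exponential likelihood with a shared rate and a 1/rate prior
    (an assumption of this sketch). Solves (total / (total + t))**m = 1/2 for t.
    """
    total = m * known
    return total * math.expm1(math.log(2.0) / m)


def simulated_median(m, known=1.0, n=200_000, seed=0):
    """Monte Carlo check: draw the rate from its posterior, then the unknown scale."""
    rng = random.Random(seed)
    total = m * known
    draws = []
    for _ in range(n):
        # Posterior over the rate: gamma with shape m and scale 1/total.
        rate = rng.gammavariate(m, 1.0 / total)
        draws.append(rng.expovariate(rate))
    draws.sort()
    return draws[n // 2]


for m in (1, 2, 10, 1000):
    print(m, predictive_median(m), simulated_median(m, n=50_000))
print("limit ln 2 =", math.log(2.0))
```

With these assumptions the closed form equals 1 for a single known, is about 0.828 for two equal knowns (matching the value quoted above), and approaches $\ln 2 \approx 0.693$ as $m$ grows.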

There are forms of additional information that allow a single known to remain the median. For example, additional information could specify that

and

are each the mean or sum of m variables. We still must treat

and

equally, and we still know nothing about their scale; therefore,

is still the median of

. This remains true whether the number of summed variables m is itself known or unknown.
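The statement about sums can be illustrated by simulation. The sketch below again assumes the exponential model with a shared unknown rate and a $1/\lambda$ prior, which is an assumption of this example and only one member of the set of dependences considered here. Given a known sum of $m$ such variables, the posterior over the rate is a gamma distribution with shape $m$ and rate equal to the known sum, and an unknown sum of $m$ variables is then gamma distributed with shape $m$ and that rate. The function name and its parameters are placeholders.

```python
import random


def prob_unknown_sum_larger(m, known_sum=1.0, n=100_000, seed=0):
    """Estimate P(unknown sum > known sum | known sum), each a sum of m variables.

    Assumes exponential variables with a shared rate and a 1/rate prior
    (an assumption of this sketch).
    """
    rng = random.Random(seed)
    larger = 0
    for _ in range(n):
        # Posterior over the shared rate given the known sum: shape m, scale 1/known_sum.
        rate = rng.gammavariate(m, 1.0 / known_sum)
        # An unknown sum of m exponential variables with that rate: shape m, scale 1/rate.
        unknown_sum = rng.gammavariate(m, 1.0 / rate)
        if unknown_sum > known_sum:
            larger += 1
    return larger / n


for m in (1, 3, 10):
    print(m, prob_unknown_sum_larger(m))
```

The estimated probability stays close to one half for each $m$ tried, so under these assumptions the known sum remains the median of the unknown sum.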

10. Discussion

Our proof advances the objective Bayesian foundation of probability theory, which seeks to quantify information (knowledge or evidence) with the same rigor that has been attained in other branches of applied mathematics. The ideal is to start from axioms, derive non-informative prior probabilities, and then deduce probabilities conditional on any additional evidence. A probability should measure evidence just as objectively as numbers measure physical quantities (distance, energy, etc.).

The only aspect of our proofs that has been controversial is the issue of whether absence of information uniquely determines a prior distribution over real numbers. Formal criteria, including indifference, location invariance, and maximum entropy, have all been used to derive the uniform density

, where

(

Section 6). Subjectivists view c as indeterminate, thus being a source of subjectivity (see Reference [14], pp. 83–87), whereas objectivists view it merely as a positive constant to be specified by convention (our proofs hold for any choice of c). In contrast, we deduced

from the standard distance metric, which we declared an axiom (Equation (5)) (Section 3). In our opinion, the indeterminacy of the subjectivist view is the logical result of insufficient axioms.

Our conclusion appears surprising, since for any known and finite positive number, the space of larger numbers is infinitely larger than the space of smaller positive numbers (Figure 1). However, probability measures evidence, not its absence, and the space of possibilities only exists as the consequence of the absence of evidence (ignorance). A physical ‘size’ is evidence about other physical sizes, and we postulate that physical size and evidence exist (Section 4.1). This postulate implies that the absence of evidence (ignorance), and the corresponding state space, does not exist (or at least has a secondary and contingent status) and therefore carries no weight in reasoning. Reason requires that two physical sizes be treated equally (Section 4.4), and there is no evidence that one is larger than the other, even if one is known. In this way our proof that a known number

(quantifying size) is the median over an unknown

can be understood intuitively. Our result can also be understood by considering that there is no meaningful (non-arbitrary) number associated with a single “absolute” size (

Section 3.2), but only with ratios of sizes, and we must have

(

Section 6.4), regardless of whether

is known. Indeed, we have proven that

has no information about

or

, and vice versa (

Section 8.1), even though

and

are dependent variables and thus have information about one another.

Practical application of our result requires us to consider what information we actually have. The probability that the unknown distance to star B is greater than the known distance to star A is 1/2, and the probability is also 1/2 that the unknown energy of particle B is greater than the known energy of particle A. However, this is only true in the absence of additional information. We do realistically have additional information that is implicit in the terms ‘star’ and ‘particle,’ since we know that the distance to a star is large, and the mass of a particle is small, relative to the human scale. Before inquiring about stars or particles, we already know the length and mass of a human, and that additional information changes the problem. Furthermore, we know the human scale with more certainty than we can ever know a much larger or smaller scale.

All scientific measurement and inference of size begins with knowledge of the human scale that is formalized in our standard units (m, kg, s, etc.). These standard units are only slightly arbitrary, since they were chosen from the infinite space of possible sizes to be extremely close to the human scale. Therefore members of the set of distributions that we have characterized here, each conditional on one known size, would be appropriate as prior distributions given only knowledge of standard units. One meter would then be the median over an unknown distance

. If

is then observed, and we ask “what is another distance

?”, we already know two distances, and neither will be the median (our central conclusion does not apply) (Figure 3) (Section 9).

A beautiful aspect of Bayesian probabilities is that they can objectively describe any knowledge of any observer, at least in principle. Probabilities are typically used to describe the knowledge of scientists, but they can also be used to describe the information in a cognitive or physical model of an observer. This is the basis of Bayesian accounts of biology and psychology that view an organism as a collection of observers that predict and cause the future [21,30,31,32,33,34,35,36,37,38,39,40].

All brains must solve inference problems that are fundamentally the same as those facing scientists. When humans must estimate the distance to a visual object in a minimally informative sensory context, such as a light source in the night sky, they perceive the most likely distance to be approximately one meter, and bias towards one meter also influences perception in more complex and typical sensory environments [41,42]. This is elegantly explained by the fact that the most frequently observed distance to visual objects in a natural human environment is about a meter [31]. The brain is believed to have learned the human scale from experience, and to integrate this prior information with incoming sensory ‘data’ to infer distances, in accord with Bayesian principles [30,31,32]. Therefore, a simple cognitive model, in which the only prior information of a human observer is a single distance of

m, or an energy of

joule (kg m² s⁻²), might account reasonably well for average psychophysical data under typical conditions.

Such a cognitive model is extremely simplified, since the knowledge in a brain is diverse and changes dynamically over time. However, we do not think it is overly simplistic for a model of a physical observer to be just a single quantity at a single moment in time. The brain is a multitude of local physical observers, but our initial concern is just a single observer at a single moment [21,22,33,34,35]. The problem is greatly simplified by the fact that we equate an observer with information, and information with a physical quantity that is local in space and time, and is described by a single number. For example, a single neuron at a single moment has a single energy in the electrical potential across its membrane. Given this known and present internal energy, what is the probability distribution over the past external energy that caused it, or the future energy that will be its effect? Here, we have provided a partial answer by characterizing a set of candidate distributions, and demonstrating that a known energy will be the median over an unknown energy.