Epistemological Framework for Computer Simulations in Building Science Research: Insights from Theory and Practice

Abstract

1. Introduction

2. The Ontology of Computer Simulations

3. Conditions for Use of Computer Simulations

4. Epistemological Framework for Building and Testing Theory Using Computer Simulations

4.1. Nature of Knowledge Obtained from Computer Simulations

4.2. Hierarchical Order of Computer Simulations

4.3. Challenges of Computer Simulations

4.4. Validation, Verification and Robustness of Computer Simulations

4.5. Conditions for the Failure of Computer Simulations

5. Epistemological Framework for Computer Simulations in the Practice of Building Science Research

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Winsberg, E. Models of success versus the success of models: Reliability without truth. Synthese 2006, 152, 1–19.

2. Winsberg, E. Computer simulation and the philosophy of science. Philos. Compass 2009, 4, 835–845.

3. Lenhard, J. Computer simulation. In The Oxford Handbook of Philosophy of Science; Oxford University Press: Oxford, UK, 2016.

4. Humphreys, P. The philosophical novelty of computer simulation methods. Synthese 2008, 169, 615–626.

5. Ord-Smith, R.J.; Stephenson, J. Computer Simulation of Continuous Systems; Cambridge University Press: Cambridge, UK, 1975; Volume 3.

6. Parker, W.S. Does matter really matter? Computer simulations, experiments, and materiality. Synthese 2008, 169, 483–496.

7. Bennett, A.W. Introduction to Computer Simulation; West Publishing Company: Eagan, MN, USA, 1974.

8. Grim, P.; Rosenberger, R.; Rosenfeld, A.; Anderson, B.; Eason, R.E. How simulations fail. Synthese 2011, 190, 2367–2390.

9. Grüne-Yanoff, T.; Weirich, P. The philosophy and epistemology of simulation: A review. Simul. Gaming 2010, 41, 20–50.

10. Humphreys, P. Computer Simulations. In Extending Ourselves: Computational Science, Empiricism, and Scientific Method; Oxford University Press: Oxford, UK, 2004; pp. 105–135.

11. Winsberg, E. Simulations, models, and theories: Complex physical systems and their representations. Philos. Sci. 2001, 68, S442–S454.

12. Paul, R.J.; Neelamkavil, F. Computer simulation and modeling. J. Oper. Res. Soc. 1987, 38, 1092.

13. Guala, F. The Methodology of Experimental Economics; Cambridge University Press: Cambridge, UK, 2005.

14. Radder, H. The philosophy of scientific experimentation: A review. Autom. Exp. 2009, 1, 2.

15. Morgan, M.S. Model experiments and models in experiments. In Model-Based Reasoning; Springer: Boston, MA, USA, 2002; pp. 41–58.

16. Morgan, M.S. Experiments versus models: New phenomena, inference and surprise. J. Econ. Methodol. 2005, 12, 317–329.

17. Winsberg, E. Simulated experiments: Methodology for a virtual world. Philos. Sci. 2003, 70, 105–125.

18. Angius, N. Qualitative models in computational simulative sciences: Representation, confirmation, experimentation. Minds Mach. 2019, 29, 397–416.

19. Winsberg, E. Sanctioning models: The epistemology of simulation. Sci. Context 1999, 12, 275–292.

20. Kleindorfer, G.B.; O’Neill, L.; Ganeshan, R. Validation in simulation: Various positions in the philosophy of science. Manag. Sci. 1998, 44, 1087–1099.

21. Parker, W.S. Franklin, Holmes, and the epistemology of computer simulation. Int. Stud. Philos. Sci. 2008, 22, 165–183.

22. Sargent, R.G. Verification and validation of simulation models. J. Simul. 2013, 7, 12–24.

23. D’Arms, J.; Batterman, R.W.; Gorny, K. Game theoretic explanations and the evolution of justice. Philos. Sci. 1998, 65, 76–102.

24. Kleindorfer, G.B.; Ganeshan, R. The philosophy of science and validation in simulation. In Proceedings of the 25th Conference on Winter Simulation (WSC ’93); Association for Computing Machinery (ACM): New York, NY, USA, 1993; pp. 50–57.

25. Frigg, R.; Hartmann, S. Models in Science. Available online: https://plato.stanford.edu/entries/models-science/ (accessed on 11 September 2020).

26. Weissert, T. The Genesis of Simulation in Dynamics; Springer: New York, NY, USA, 1997.

27. Franklin, A. The Neglect of Experiment; Cambridge University Press: Cambridge, UK, 1986.

28. Gooding, D.; Pinch, T.; Schaffer, S. The Uses of Experiment: Studies in the Natural Sciences; Cambridge University Press: Cambridge, UK, 1989.

29. Franklin, A.; Newman, R. Selectivity and discord: Two problems of experiment. Am. J. Phys. 2003, 71, 734–735.

30. Bourdoukan, P.; Wurtz, E.; Joubert, P.; Spérandio, M. Overall cooling efficiency of a solar desiccant plant powered by direct-flow vacuum-tube collectors: Simulation and experimental results. J. Build. Perform. Simul. 2008, 1, 149–162.

31. Jenkins, D. Using dynamic simulation to quantify the effect of carbon-saving measures for a UK supermarket. J. Build. Perform. Simul. 2008, 1, 275–288.

32. Ji, Y.; Cook, M.J.; Hanby, V.; Infield, D.G.; Loveday, D.L.; Mei, L. CFD modeling of naturally ventilated double-skin facades with Venetian blinds. J. Build. Perform. Simul. 2008, 1, 185–196.

33. Chvatal, K.; Corvacho, H. The impact of increasing the building envelope insulation upon the risk of overheating in summer and an increased energy consumption. J. Build. Perform. Simul. 2009, 2, 267–282.

34. Le, A.D.T.; Maalouf, C.; Mendonça, K.C.; Mai, T.H.; Wurtz, E. Study of moisture transfer in a double-layered wall with imperfect thermal and hydraulic contact resistances. J. Build. Perform. Simul. 2009, 2, 251–266.

35. Van Treeck, C.; Frisch, J.; Pfaffinger, M.; Rank, E.; Paulke, S.; Schweinfurth, I.; Schwab, R.; Hellwig, R.T.; Holm, A. Integrated thermal comfort analysis using a parametric manikin model for interactive real-time simulation. J. Build. Perform. Simul. 2009, 2, 233–250.

36. Hugo, A.; Zmeureanu, R.; Rivard, H. Solar combisystem with seasonal thermal storage. J. Build. Perform. Simul. 2010, 3, 255–268.

37. Sartipi, A.; Laouadi, A.; Naylor, D.; Dhib, R. Convective heat transfer in domed skylight cavities. J. Build. Perform. Simul. 2010, 3, 269–287.

38. Siva, K.; Lawrence, M.X.; Kumaresh, G.R.; Rajagopalan, P.; Santhanam, H. Experimental and numerical investigation of phase change materials with finned encapsulation for energy-efficient buildings. J. Build. Perform. Simul. 2010, 3, 245–254.

39. Johansson, D.; Bagge, H. Simulating space heating demand with respect to non-constant heat gains from household electricity. J. Build. Perform. Simul. 2011, 4, 227–238.

40. Laouadi, A. The central sunlighting system: Development and validation of an optical prediction model. J. Build. Perform. Simul. 2011, 4, 205–226.

41. Wetter, M. Co-simulation of building energy and control systems with the Building Controls Virtual Test Bed. J. Build. Perform. Simul. 2011, 4, 185–203.

42. Mahmoud, A.M.; Ben-Nakhi, A.; Ben-Nakhi, A.; Alajmi, R. Conjugate conduction convection and radiation heat transfer through hollow autoclaved aerated concrete blocks. J. Build. Perform. Simul. 2012, 5, 248–262.

43. Saber, H.H.; Maref, W.; Elmahdy, H.; Swinton, M.C.; Glazer, R. 3D heat and air transport model for predicting the thermal resistances of insulated wall assemblies. J. Build. Perform. Simul. 2012, 5, 75–91.

44. Wang, X.; Kendrick, C.; Ogden, R.; Baiche, B.; Walliman, N. Thermal modeling of an industrial building with solar reflective coatings on external surfaces: Case studies in China and Australia. J. Build. Perform. Simul. 2012, 5, 199–207.

45. Barreira, E.; Delgado, J.M.P.Q.; Ramos, N.; De Freitas, V.P. Exterior condensations on façades: Numerical simulation of the undercooling phenomenon. J. Build. Perform. Simul. 2013, 6, 337–345.

46. Maurer, C.; Baumann, T.; Hermann, M.; Di Lauro, P.; Pavan, S.; Michel, L.; Kuhn, T.E. Heating and cooling in high-rise buildings using facade-integrated transparent solar thermal collector systems. J. Build. Perform. Simul. 2013, 6, 449–457.

47. Villi, G.; Peretti, C.; Graci, S.; De Carli, M. Building leakage analysis and infiltration modeling for an Italian multi-family building. J. Build. Perform. Simul. 2013, 6, 98–118.

48. Berardi, U. Simulation of acoustical parameters in rectangular churches. J. Build. Perform. Simul. 2013, 7, 1–16.

49. Geva, A.; Saaroni, H.; Morris, J. Measurements and simulations of thermal comfort: A synagogue in Tel Aviv, Israel. J. Build. Perform. Simul. 2013, 7, 233–250.

50. Kalagasidis, A.S. A multi-level modeling and evaluation of thermal performance of phase-change materials in buildings. J. Build. Perform. Simul. 2013, 7, 289–308.

51. Mahyuddin, N.; Awbi, H.B.; Essah, E.A. Computational fluid dynamics modeling of the air movement in an environmental test chamber with a respiring manikin. J. Build. Perform. Simul. 2014, 8, 359–374.

52. Su, C.-H.; Tsai, K.-C.; Xu, M.-Y. Computational analysis on the performance of smoke exhaust systems in small vestibules of high-rise buildings. J. Build. Perform. Simul. 2014, 8, 239–252.

53. Wang, J.; Chow, T.-T. Influence of human movement on the transport of airborne infectious particles in hospital. J. Build. Perform. Simul. 2014, 8, 205–215.

54. Georges, L.; Skreiberg, Ø. Simple modeling procedure for the indoor thermal environment of highly insulated buildings heated by wood stoves. J. Build. Perform. Simul. 2016, 9, 663–679.

55. Muslmani, M.; Ghaddar, N.; Ghali, K. Performance of combined displacement ventilation and cooled ceiling liquid desiccant membrane system in Beirut climate. J. Build. Perform. Simul. 2016, 9, 648–662.

56. Le, A.D.T.; Maalouf, C.; Douzane, O.; Promis, G.; Mai, T.H.; Langlet, T. Impact of combined moisture buffering capacity of a hemp concrete building envelope and interior objects on the hygrothermal performance in a room. J. Build. Perform. Simul. 2016, 9, 589–605.

57. Corbin, C.D.; Henze, G. Predictive control of residential HVAC and its impact on the grid. Part II: Simulation studies of residential HVAC as a supply following resource. J. Build. Perform. Simul. 2016, 10, 1–13.

58. Jones, A.; Finn, D. Co-simulation of a HVAC system-integrated phase change material thermal storage unit. J. Build. Perform. Simul. 2016, 10, 313–325.

59. Kubilay, A.; Carmeliet, J.J.; Derome, D. Computational fluid dynamics simulations of wind-driven rain on a mid-rise residential building with various types of facade details. J. Build. Perform. Simul. 2016, 10, 125–143.

60. Brideau, S.A.; Beausoleil-Morrison, I.; Kummert, M. Above-floor tube-and-plate radiant floor model development and validation. J. Build. Perform. Simul. 2017, 11, 449–469.

61. Mortada, A.; Choudhary, R.; Soga, K. Multi-dimensional simulation of underground subway spaces coupled with geoenergy systems. J. Build. Perform. Simul. 2018, 11, 517–537.

62. Rakotomahefa, T.M.J.; Wang, F.; Zhang, T.; Wang, S. Zonal network solution of temperature profiles in a ventilated wall module. J. Build. Perform. Simul. 2017, 11, 538–552.

63. Barz, T.; Emhofer, J.; Marx, K.; Zsembinszki, G.; Cabeza, L.F. Phenomenological modeling of phase transitions with hysteresis in solid/liquid PCM. J. Build. Perform. Simul. 2019, 12, 770–788.

64. Ralph, B.; Carvel, R.; Floyd, J. Coupled hybrid modeling within the Fire Dynamics Simulator: Transient transport and mass storage. J. Build. Perform. Simul. 2019, 12, 685–699.

65. Van Kenhove, E.; De Backer, L.; Janssens, A.; Laverge, J. Simulation of Legionella concentration in domestic hot water: Comparison of pipe and boiler models. J. Build. Perform. Simul. 2019, 12, 595–619.

66. Filipsson, P.; Trüschel, A.; Gräslund, J.; Dalenbäck, J.-O. Modeling of rooms with active chilled beams. J. Build. Perform. Simul. 2020, 13, 409–418.

67. Sardoueinasab, Z.; Yin, P.; O’Neal, D. Energy modeling and analysis of variable airflow parallel fan-powered terminal units using Energy Management System (EMS) in EnergyPlus. J. Build. Perform. Simul. 2019, 13, 1–12.

68. Mohamed, S.; Buonanno, G.; Massarotti, N.; Mauro, A. Ultrafine particle transport inside an operating room equipped with turbulent diffusers. J. Build. Perform. Simul. 2020, 13, 443–455.

| Experimental Evaluation Strategies Proposed by Franklin | Simulation Model Evaluation Strategies | Simulation Code Evaluation Strategies |
|---|---|---|
| Apparatus gives other results that match known results | Simulation output fits closely enough with various observational data | Estimated solutions fit closely enough with analytic and/or other numerical solutions |
| Apparatus responds as expected after intervention on the experimental system | Simulation results change as expected after intervention on substantive model parameters | Solutions change as expected after intervention on algorithm parameters |
| Capacities of apparatus are underwritten by well confirmed theories | Simulation model is constructed using well-confirmed theoretical assumptions | Solution method is underwritten by sound mathematical theorizing and analysis |
| Experimental results are replicated in other experiments | Simulation results are reproduced in other simulations or in traditional experiments | Solutions are produced using other pieces of code |
| Plausible sources of significant experimental error can be ruled out | Plausible sources of significant modeling error can be ruled out | Plausible sources of significant mathematical/computational error can be ruled out |
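The first two code-evaluation strategies in the table above can be illustrated with a short sketch. It checks a forward Euler solver against an analytic solution. It then intervenes on an algorithm parameter, the time step, to see whether the error responds as numerical theory predicts.

The sketch assumes a hypothetical single-zone lumped-capacitance model, C dT/dt = -UA (T - T_out). The parameter values and the names `analytic`, `euler` and `observed_order` are illustrative assumptions. None of them is drawn from the article or from the cited studies.

```python
import math

# Hypothetical single-zone lumped-capacitance model, for illustration only:
#   C * dT/dt = -UA * (T - T_OUT)
C = 5.0e6            # zone thermal capacitance, J/K (assumed value)
UA = 250.0           # envelope conductance, W/K (assumed value)
T_OUT = 0.0          # constant outdoor temperature, degC (assumed value)
T0 = 20.0            # initial zone temperature, degC (assumed value)
T_END = 24 * 3600.0  # simulated period: 24 h, in seconds


def analytic(t):
    """Exact solution: exponential decay toward T_OUT with time constant C/UA."""
    tau = C / UA
    return T_OUT + (T0 - T_OUT) * math.exp(-t / tau)


def euler(dt):
    """Forward (explicit) Euler estimate of the zone temperature at T_END."""
    steps = round(T_END / dt)
    temp = T0
    for _ in range(steps):
        temp += dt * (-UA * (temp - T_OUT) / C)
    return temp


def observed_order(dt):
    """Estimate the convergence order from the errors at dt and dt/2."""
    e_coarse = abs(euler(dt) - analytic(T_END))
    e_fine = abs(euler(dt / 2) - analytic(T_END))
    return math.log2(e_coarse / e_fine)


if __name__ == "__main__":
    for dt in (3600.0, 1800.0, 900.0):
        err = abs(euler(dt) - analytic(T_END))
        print(f"dt = {dt:6.0f} s  error = {err:.4f} K  order = {observed_order(dt):.2f}")
```

Forward Euler is first-order accurate. Halving the time step should therefore roughly halve the error, and the observed order should be close to 1. A value far from 1 would be a plausible sign of a mathematical or coding error that ought to be ruled out.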

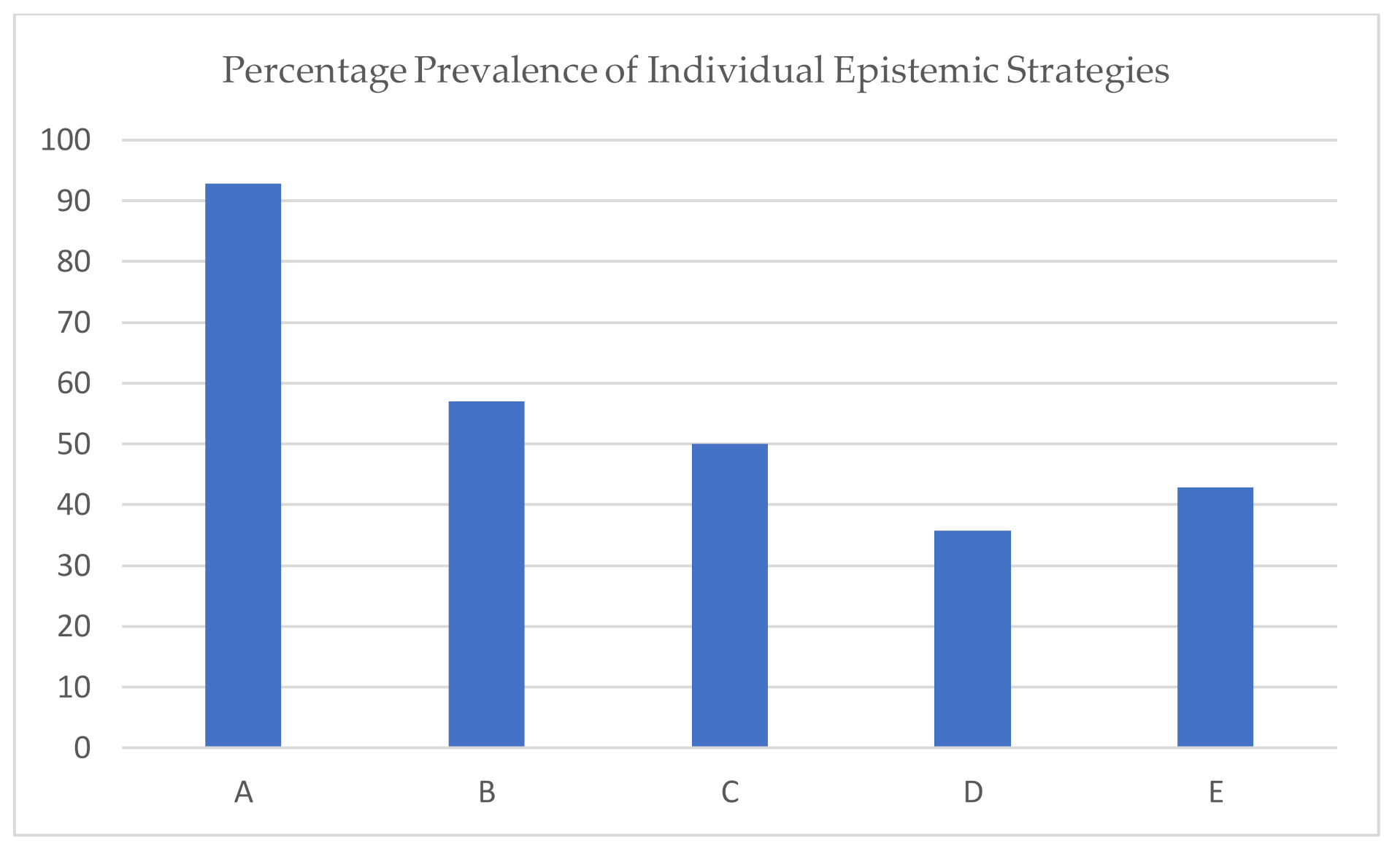

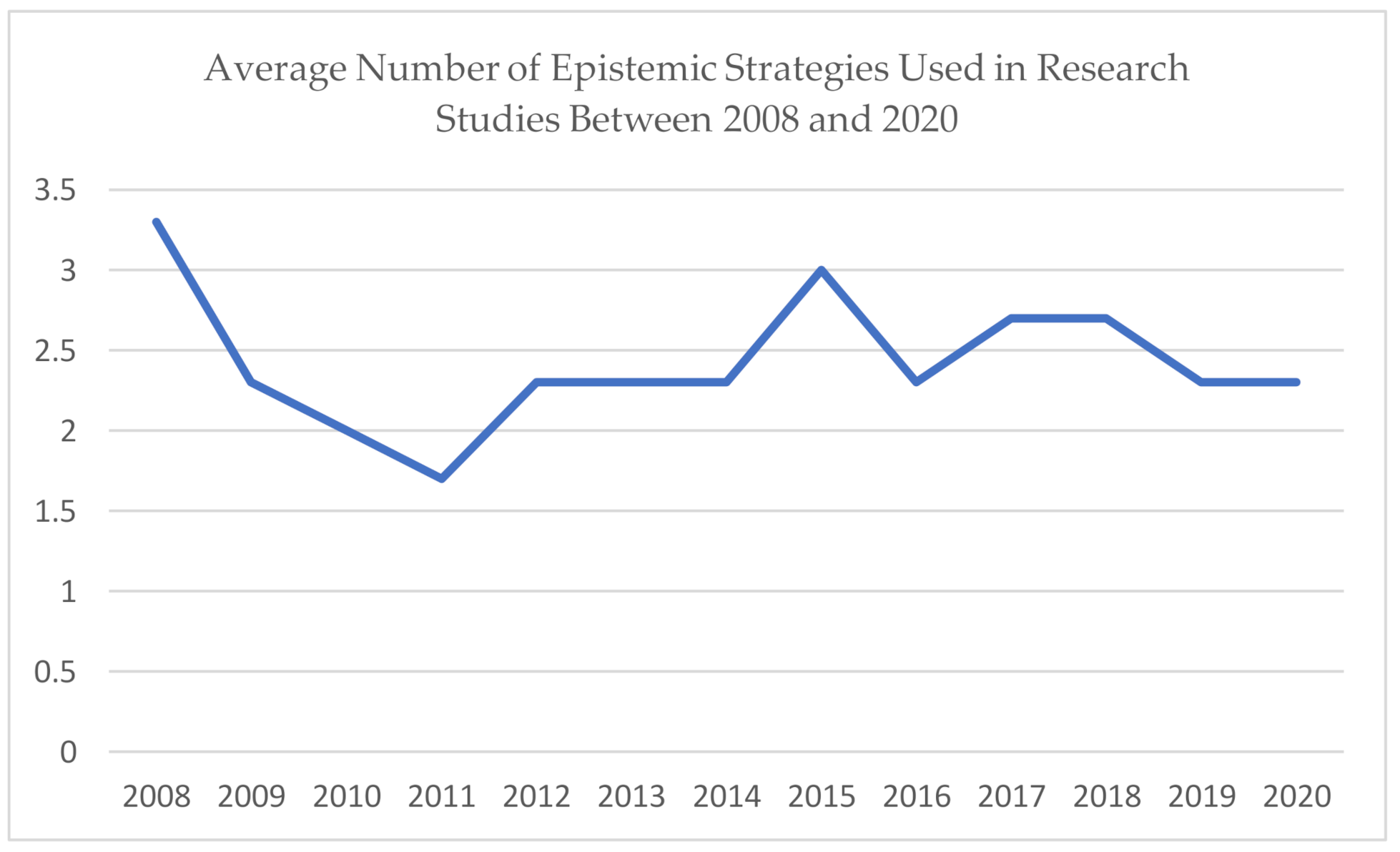

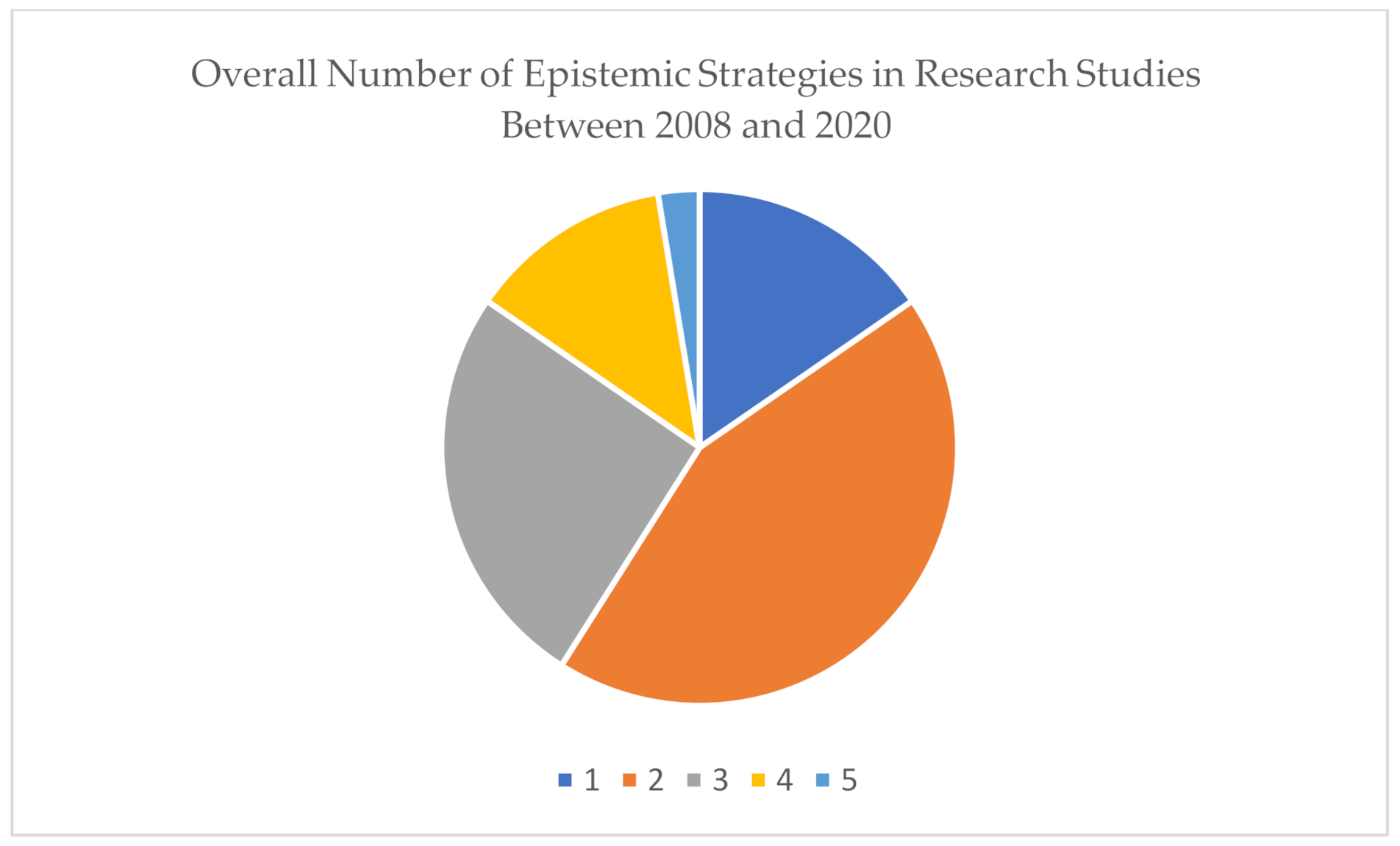

Epistemological framework components: A = verification; B = experimental validation; C = sensitivity analysis; D = inter-model comparison; E = justification for use of simulations over conventional experiments.

| Year | Research Study | Objective | A | B | C | D | E |
|---|---|---|---|---|---|---|---|
| 2008 | [30] | Prediction | Yes | Yes | Yes | None | None |
| 2008 | [31] | Prediction | Yes | Yes | None | None | None |
| 2008 | [32] | Prediction | Yes | Yes | Yes | Yes | Yes |
| 2009 | [33] | Prove theory | Yes | None | None | None | None |
| 2009 | [34] | Prediction | Yes | Yes | Yes | None | None |
| 2009 | [35] | Prediction | Yes | Yes | None | None | Yes |
| 2010 | [36] | Prediction | Yes | None | Yes | None | None |
| 2010 | [37] | Prediction | Yes | None | None | Yes | None |
| 2010 | [38] | Prediction | Yes | Yes | None | None | None |
| 2011 | [39] | Prediction and Prove theory | None | None | None | None | Yes |
| 2011 | [40] | Prove theory | Yes | Yes | None | Yes | None |
| 2011 | [41] | Prove theory | Yes | None | None | None | None |
| 2012 | [42] | Prediction | Yes | None | Yes | Yes | None |
| 2012 | [43] | Prove theory | Yes | Yes | None | Yes | None |
| 2012 | [44] | Prove theory | Yes | None | None | None | None |
| 2013 | [45] | Prove theory | Yes | Yes | None | None | None |
| 2013 | [46] | Prediction | Yes | None | None | Yes | None |
| 2013 | [47] | Prediction and Prove theory | Yes | Yes | None | None | Yes |
| 2014 | [48] | Prediction | Yes | Yes | None | None | None |
| 2014 | [49] | Prove theory | Yes | Yes | None | None | None |
| 2014 | [50] | Prediction | Yes | Yes | None | None | Yes |
| 2015 | [51] | Prediction | Yes | Yes | Yes | None | Yes |
| 2015 | [52] | Prediction | Yes | None | Yes | None | Yes |
| 2015 | [53] | Prediction | Yes | Yes | None | None | None |
| 2016 | [54] | Prove theory | Yes | Yes | None | None | None |
| 2016 | [55] | Prediction | Yes | Yes | None | None | None |
| 2016 | [56] | Prediction | Yes | Yes | Yes | None | None |
| 2017 | [57] | Prediction | Yes | None | None | Yes | None |
| 2017 | [58] | Prediction | Yes | Yes | None | None | None |
| 2017 | [59] | Prove theory | Yes | Yes | Yes | None | Yes |
| 2018 | [60] | Prove theory | Yes | Yes | Yes | Yes | None |
| 2018 | [61] | Prove theory | Yes | Yes | None | None | None |
| 2018 | [62] | Prove theory | Yes | Yes | None | None | None |
| 2019 | [63] | Prediction | Yes | Yes | None | None | None |
| 2019 | [64] | Prove theory | Yes | None | None | None | None |
| 2019 | [65] | Prove theory | Yes | Yes | Yes | Yes | None |
| 2020 | [66] | Prediction | Yes | Yes | None | None | None |
| 2020 | [67] | Prove theory | Yes | None | None | None | None |
| 2020 | [68] | Prediction | Yes | Yes | Yes | None | Yes |

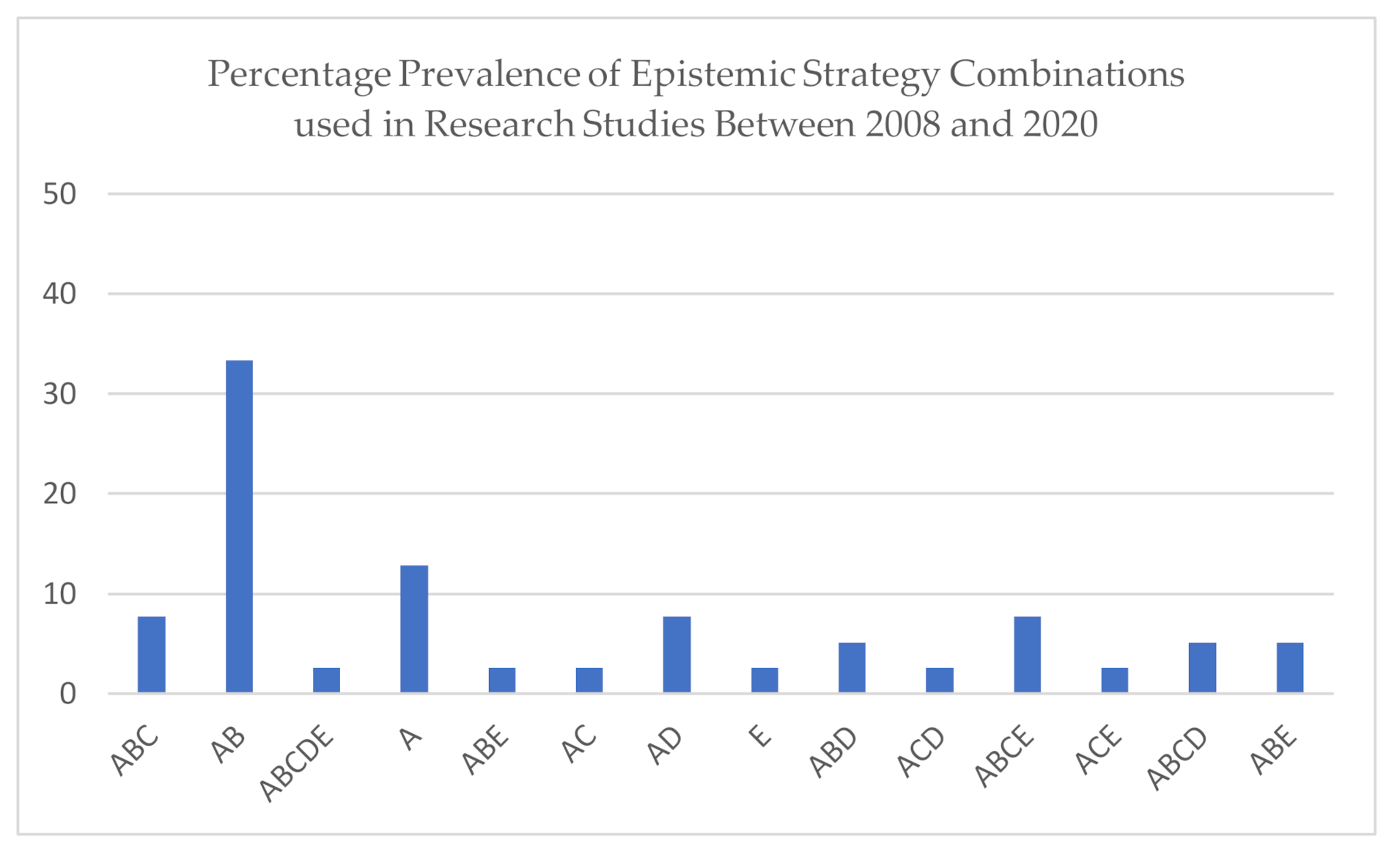

| Combination | Description |
|---|---|
| ABC | Verification, experimental validation, sensitivity analysis |
| AB | Verification, experimental validation |
| ABCDE | Verification, experimental validation, sensitivity analysis, inter-model comparison, justification for use of simulations over conventional experiments |
| A | Verification |
| ABE | Verification, experimental validation, justification for use of simulations over conventional experiments |
| AC | Verification, sensitivity analysis |
| AD | Verification, inter-model comparison |
| E | Justification for use of simulations over conventional experiments |
| ABD | Verification, experimental validation, inter-model comparison |
| ACD | Verification, sensitivity analysis, inter-model comparison |
| ABCE | Verification, experimental validation, sensitivity analysis, justification for use of simulations over conventional experiments |
| ACE | Verification, sensitivity analysis, justification for use of simulations over conventional experiments |
| ABCD | Verification, experimental validation, sensitivity analysis, inter-model comparison |
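The combination codes above can be derived mechanically from the Yes/None columns of the study table. Doing so also makes the frequency of each combination easy to check. The sketch below is minimal and assumes the one-string-per-study transcription shown. The `STUDIES` layout and the function names are illustrative, not taken from the article.

```python
from collections import Counter

# Framework components, in the order used by the combination codes:
# A verification, B experimental validation, C sensitivity analysis,
# D inter-model comparison, E justification for simulation over experiment.
COMPONENTS = "ABCDE"

# Study table transcribed as {reference number: flags}. Each flag string has
# one character per component A..E: "Y" for "Yes" and "-" for "None".
STUDIES = {
    30: "YYY--", 31: "YY---", 32: "YYYYY", 33: "Y----", 34: "YYY--",
    35: "YY--Y", 36: "Y-Y--", 37: "Y--Y-", 38: "YY---", 39: "----Y",
    40: "YY-Y-", 41: "Y----", 42: "Y-YY-", 43: "YY-Y-", 44: "Y----",
    45: "YY---", 46: "Y--Y-", 47: "YY--Y", 48: "YY---", 49: "YY---",
    50: "YY--Y", 51: "YYY-Y", 52: "Y-Y-Y", 53: "YY---", 54: "YY---",
    55: "YY---", 56: "YYY--", 57: "Y--Y-", 58: "YY---", 59: "YYY-Y",
    60: "YYYY-", 61: "YY---", 62: "YY---", 63: "YY---", 64: "Y----",
    65: "YYYY-", 66: "YY---", 67: "Y----", 68: "YYY-Y",
}


def combination(flags: str) -> str:
    """Map a flag string to its combination code, e.g. "YY--Y" -> "ABE"."""
    return "".join(c for c, f in zip(COMPONENTS, flags) if f == "Y")


def tally(studies):
    """Count studies per combination code and per individual component."""
    combos = Counter(combination(f) for f in studies.values())
    per_component = Counter(c for f in studies.values() for c in combination(f))
    return combos, per_component


if __name__ == "__main__":
    combos, per_component = tally(STUDIES)
    for code, n in combos.most_common():
        print(f"{code:<6}{n}")
    print(dict(per_component))
```

On the transcribed rows, the script yields the 13 distinct combinations listed in the table. AB is the most common combination, appearing in 13 of the 39 studies, and verification (A) is reported in every study except one.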

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kalua, A.; Jones, J. Epistemological Framework for Computer Simulations in Building Science Research: Insights from Theory and Practice. Philosophies 2020, 5, 30. https://doi.org/10.3390/philosophies5040030
