Abstract

Background: Despite the growing demand for amyloid PET quantification, practical challenges remain. As automated software platforms are increasingly adopted to address these limitations, we evaluated the reliability of commercial tools for Centiloid quantification against the original Centiloid Project method. Methods: This retrospective study included 332 amyloid PET scans (165 [18F]Florbetaben; 167 [18F]Flutemetamol) performed for suspected mild cognitive impairment or dementia, paired with T1-weighted MRI within one year. Centiloid values were calculated using three automated software platforms (BTXBrain, MIMneuro, and SCALE PET) and compared with the original Centiloid method. Agreement was assessed using Pearson’s correlation coefficient, the intraclass correlation coefficient (ICC), Passing–Bablok regression, and Bland–Altman plots. Concordance with visual interpretation was evaluated using receiver operating characteristic (ROC) curves. Results: BTXBrain (R = 0.993; ICC = 0.986) and SCALE PET (R = 0.992; ICC = 0.991) demonstrated excellent correlation with the reference, while MIMneuro showed slightly lower agreement (R = 0.974; ICC = 0.966). BTXBrain exhibited proportional underestimation (slope = 0.872 [0.860–0.885]), MIMneuro showed significant overestimation (slope = 1.053 [1.026–1.081]), and SCALE PET demonstrated minimal bias (slope = 1.014 [0.999–1.029]). The bias pattern was particularly noted for [18F]Flutemetamol (FMM). All platforms maintained their trends in correlation and bias when the analysis focused on the subthreshold-to-low-positive range (0–50 Centiloid units). Nevertheless, all platforms showed excellent agreement with visual interpretation (areas under ROC curves > 0.996 for all). Conclusions: All three automated platforms demonstrated acceptable reliability for Centiloid quantification, although software-specific biases were observed. These differences did not impair their feasibility in aiding image interpretation, as supported by the concordance with visual readings. Nevertheless, users should recognize platform-specific characteristics when applying diagnostic thresholds or interpreting longitudinal changes.

1. Introduction

Amyloid PET is a reliable and currently the only clinically applicable imaging biomarker for detecting amyloid-β deposition, a pathological hallmark of Alzheimer’s disease (AD) [1,2]. Three radiopharmaceuticals, [18F]Florbetaben (FBB), [18F]Flutemetamol (FMM), and [18F]Florbetapir, are currently approved by the U.S. Food and Drug Administration (FDA) for amyloid imaging. Although these tracers show broadly similar imaging characteristics and diagnostic performance, they exhibit subtle differences in tracer kinetics and regional distribution [3,4]. Consequently, when using conventional quantitative metrics such as the standardized uptake value ratio (SUVR), tracer- and protocol-specific reference values and thresholds are required. To address this challenge, the Centiloid Project, under the Global Alzheimer’s Association Interactive Network (GAAIN), developed a universal quantitative scale [5]. This system standardizes the whole cerebellum (WC)-referenced SUVR, defining a value of 0 as the average of young cognitively normal individuals and 100 as the average of AD patients, based on 50–70 min [11C]PiB PET data. Subsequently, tracer-specific conversion formulas were established to translate SUVRs from PET with 18F-labeled radiopharmaceuticals into Centiloid units, providing a cross-tracer standardized quantitative metric [6,7,8,9]. Today, the Centiloid scale is the most widely used quantitative index, offering objective cutoffs, supporting visual assessments, and guiding treatment decisions [10,11,12].
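The anchoring described above amounts to a linear rescaling of the SUVR. As a sketch, using symbols that are introduced here only for illustration (the overlined terms denote the mean WC-referenced SUVR of the young cognitively normal anchor group, YC, and of the AD anchor group):

```latex
\mathrm{CL} \;=\; 100 \times
\frac{\mathrm{SUVR} - \overline{\mathrm{SUVR}}_{\mathrm{YC}}}
     {\overline{\mathrm{SUVR}}_{\mathrm{AD}} - \overline{\mathrm{SUVR}}_{\mathrm{YC}}}
```

By construction, a scan whose SUVR equals the YC mean maps to 0 and one whose SUVR equals the AD mean maps to 100; values outside that interval, including negative values, remain possible.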

Recently, amyloid-targeting therapies, such as lecanemab and donanemab, have been approved by the FDA and have entered clinical use [13,14]. Clinical trials defined eligibility by amyloid positivity determined using cerebrospinal fluid biomarkers or amyloid PET and demonstrated significant reductions in Centiloid values with treatment, supporting the role of the Centiloid scale in monitoring treatment response. In particular, the donanemab trial set a Centiloid threshold of 37 for eligibility and defined treatment discontinuation criteria based on Centiloid value reductions [14,15], indicating the potentially pivotal role of the scale in the era of amyloid-targeting drugs.

Despite the increasing clinical demand, the routine application of the Centiloid scale in daily practice faces hurdles, including researcher labor, long analysis times, and the standardization of the analysis process. To address this, multiple automated quantification platforms have been developed and are increasingly used in research and clinical settings. However, the evidence validating their reliability in real-world populations remains limited. Several previous studies have compared amyloid PET quantification software, but most focused on research-use-only tools requiring manual labor, assessed SUVR values without Centiloid standardization, or lacked a reference method (Table 1).

Table 1.

Previous studies comparing amyloid PET quantification software.

In this study, we investigated the reliability of the Centiloid scale as implemented by three commercial software platforms, each based on distinct processing pipelines currently used in clinical and research practice. We simultaneously applied these software tools to patient amyloid PET scans and compared their Centiloid values with those calculated using the original Centiloid Project method to evaluate their agreement and reliability.

2. Materials and Methods

2.1. Patients

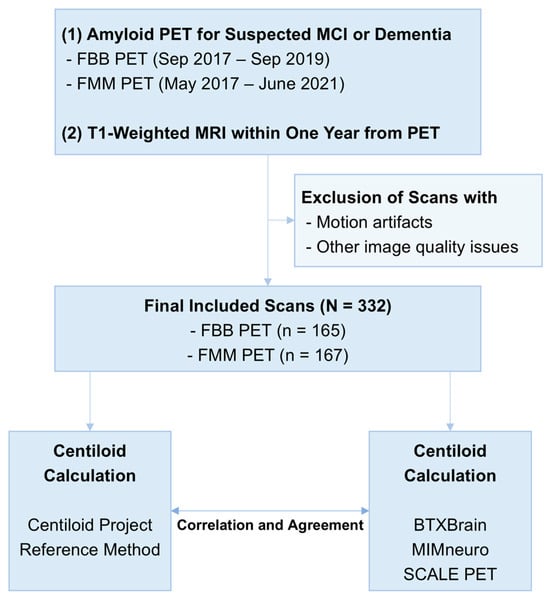

This single-center retrospective study included patients who underwent amyloid PET for suspected mild cognitive impairment or dementia. Inclusion criteria were as follows: (1) FBB PET acquired between September 2017 and September 2019 or FMM PET acquired between May 2017 and June 2021 and (2) T1-weighted MRI performed within one year of the PET scan (Figure 1). The inclusion periods were defined separately for each radiotracer to ensure a balanced enrollment of patients across tracers, reflecting differences in their respective introduction dates and usage frequencies in this period. Patients were excluded based on manual review if their PET or MRI images were compromised by motion artifacts or other quality issues that could interfere with image analysis.

Figure 1.

Overall study flow.

2.2. Amyloid PET Imaging and Visual Interpretation

Amyloid PET was performed 90 min after intravenous administration of 296 MBq FBB or 185 MBq FMM. PET images were acquired for 20 min using dedicated PET/CT scanners (Discovery 710, GE HealthCare, Chicago, IL, USA; Biograph mCT 40 TruePoint, Siemens Healthineers, Erlangen, Germany) to cover the entire brain immediately following non-contrast CT. PET images were reconstructed using ordered subset expectation maximization (4 iterations and 16 subsets for Discovery; 3 iterations and 21 subsets for Biograph), accompanied by CT-based attenuation correction, scatter correction, and point spread function recovery. Time-of-flight was not applied. The images had a matrix size of 256 × 256.

Visual assessment to determine amyloid positivity was conducted by two experienced nuclear medicine physicians blinded to Centiloid values. Any discrepancies were resolved by thorough consensus discussion.

2.3. The Centiloid Quantification Using the Original Centiloid Project Method

Centiloid values were calculated for each amyloid PET image following the standard method established by the Centiloid Project (https://www.gaain.org/centiloid-project) (accessed on 16 April 2025) [5] and were used as the reference standard for evaluating the reliability of the automated software.

Image processing was performed using SPM8 (Wellcome Trust Centre for Neuroimaging, London, UK; https://www.fil.ion.ucl.ac.uk/spm/software/spm8) (accessed on 16 April 2025) and MATLAB 2024b (MathWorks, Natick, MA, USA). Initially, PET and T1-weighted MRI images were reoriented to approximately match the coordinate space and orientation of the Montreal Neurological Institute (MNI) template. MRI images were coregistered with the MNI template for rough alignment, followed by coregistration of PET images to the corresponding MRI scans. Unified segmentation was applied to the MRI, and PET images were spatially normalized using the transformation parameters derived from segmentation. Mean counts for the cerebral cortex and WC were extracted using the regions of interest (ROIs) provided by the Centiloid Project. SUVRs were calculated as the cerebral-to-cerebellar mean count ratio.

The SUVRs were converted to Centiloid values using previously established equations [6,7].
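The last two steps can be sketched in a few lines of Python. This is an illustration only, not the SPM8/MATLAB pipeline used in the study: the function names are hypothetical, the voxel counts are made up, and the slope and intercept are placeholders, not the published tracer-specific coefficients from [6,7].

```python
from statistics import fmean


def suvr(cortex_counts, cerebellum_counts):
    """Ratio of the mean cortical ROI count to the mean whole-cerebellum count."""
    return fmean(cortex_counts) / fmean(cerebellum_counts)


def to_centiloid(suvr_value, slope, intercept):
    """Linear SUVR-to-Centiloid conversion: CL = slope * SUVR + intercept."""
    return slope * suvr_value + intercept


# Hypothetical voxel counts and placeholder coefficients (illustration only).
example_suvr = suvr([1200.0, 1300.0, 1250.0], [1000.0, 1000.0, 1000.0])
example_cl = to_centiloid(example_suvr, slope=150.0, intercept=-150.0)
```

In practice, the slope and intercept must be the tracer-specific values established for the same processing pipeline, because a coefficient pair derived for one pipeline does not necessarily transfer to another.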

2.4. Automated Software-Based Centiloid Quantification

Centiloid values were also calculated using three commercially available software platforms: BTXBrain (v1.1.2, Brightonix Imaging, Seoul, Republic of Korea), MIMneuro (v.7.3.7, MIM Software, Cleveland, OH, USA), and SCALE PET (v2.0.1, Neurophet, Seoul, Republic of Korea). All DICOM images were imported into the software platforms and processed to calculate Centiloid values without any preprocessing, including partial-volume correction or harmonization of image quality across vendors.

Briefly, BTXBrain performs fully automated PET-only processing, using an in-house artificial intelligence (AI)-based spatial normalization pipeline without requiring MRI [23]. It applies the original Centiloid Project ROIs to calculate the global SUVR with the WC as reference and converts SUVRs to Centiloid values using the original equations. In addition to the official BTXBrain Centiloid values, we report recalibrated Centiloid values, derived using the GAAIN dataset as a local setting at our institution, in the Supplementary Materials.

MIMneuro also operates as a PET-only platform, registering PET images via the BrainAlign deformable registration algorithm with multiple template registration of negative, average, and positive amyloid-specific templates [17]. The global cortical SUVR was calculated using the [18F]Florbetapir Clark atlas referenced to the WC [24]. The extracted SUVRs were converted to Centiloid values using recalibrated equations tailored to its pipeline [8].

SCALE PET offers PET-only and MRI-based versions, and the MRI-based version was used in this study. This version uses AI-based segmentation for patient MRI images directly on the individual space without transforming the MRI to a template [25]. The PET image is registered to the individual 3D T1 MRI using affine registration, and global SUVRs with WC reference are calculated on individualized ROIs based on the Desikan–Killiany atlas [21]. Centiloid values are calculated using an equation recalibrated with public datasets [5,6,7,8,26].

2.5. Statistical Analysis

Patient characteristics were summarized as mean ± standard deviation for continuous variables and as numbers with percentages for categorical variables. Correlations between reference and software-derived Centiloid values were evaluated using Pearson’s correlation coefficients. The reliability of each tool was assessed using the intraclass correlation coefficient (ICC), and agreement was visualized using Bland–Altman plots. Passing–Bablok regression was performed to assess systematic and proportional bias. Differences between Pearson’s correlation coefficients were tested using Fisher’s Z-transformation. Concordance with visual interpretation results was analyzed using receiver operating characteristic (ROC) curve analysis, and optimal cutoff values were determined based on Youden’s index. A p-value < 0.05 was considered significant.
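For readers unfamiliar with Passing–Bablok regression, the following is a minimal sketch of its point estimates (shifted median of pairwise slopes, then median intercept). It is not the statistical software used in this study, the function name is hypothetical, and confidence intervals are omitted.

```python
from statistics import median


def passing_bablok(x, y):
    """Return (slope, intercept) point estimates of a Passing-Bablok fit."""
    slopes = []
    n = len(x)
    for i in range(n - 1):
        for j in range(i + 1, n):
            dx, dy = x[j] - x[i], y[j] - y[i]
            if dx == 0 and dy == 0:
                continue  # identical points carry no slope information
            if dx == 0:
                s = float("inf") if dy > 0 else float("-inf")
            else:
                s = dy / dx
            if s == -1:
                continue  # slopes of exactly -1 are discarded by the method
            slopes.append(s)
    slopes.sort()
    k = sum(1 for s in slopes if s < -1)  # offset that shifts the median
    m = len(slopes)
    if m % 2 == 1:
        slope = slopes[(m - 1) // 2 + k]
    else:
        slope = 0.5 * (slopes[m // 2 - 1 + k] + slopes[m // 2 + k])
    intercept = median(yi - slope * xi for xi, yi in zip(x, y))
    return slope, intercept
```

A slope whose confidence interval excludes 1 indicates proportional bias, and an intercept whose interval excludes 0 indicates constant bias. The sketch assumes positively associated measurements, as is the case when comparing two Centiloid methods.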

3. Results

3.1. Patient Characteristics

This study included 332 patients (age, 73.9 ± 7.5 years; male/female = 107:225). A total of 165 patients underwent FBB PET, and 167 patients underwent FMM PET (Table 2). The age and sex distribution were not significantly different between the two tracer groups. The Clinical Dementia Rating–Sum of Boxes (CDR-SB) showed no significant difference between groups (3.87 ± 3.13 vs. 3.86 ± 3.72, p = 0.983). The proportion of amyloid-positive cases based on the visual reading was also similar (44.2% for FBB and 41.9% for FMM, p = 0.751). The mean Centiloid value based on the original method was 35.9 ± 49.2 for FBB and 28.8 ± 38.4 for FMM, and the difference was not significant (p = 0.146).

Table 2.

Patient characteristics.

3.2. The Agreement of Software-Based Centiloid with the Reference

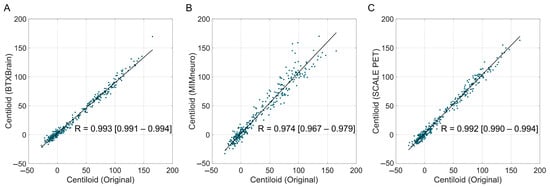

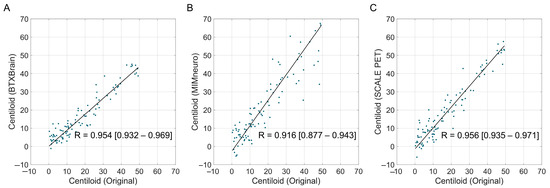

Overall, software-derived Centiloid values demonstrated a reliable correlation with the reference (Figure 2). BTXBrain and SCALE PET achieved an excellent linear association (R = 0.993 [95% CI, 0.991–0.994] and 0.992 [0.990–0.994], respectively), whereas MIMneuro showed a slightly lower correlation (R = 0.974 [0.967–0.979]) that differed significantly from those of the other two platforms (p < 0.001 for both) (Table 3). The Passing–Bablok regression showed a proportional bias in each platform: BTXBrain demonstrated a proportional underestimation (slope = 0.872 [0.860–0.885]), SCALE PET exhibited near-unity scaling with an equivocal overestimation (slope = 1.014 [0.999–1.029]), and MIMneuro showed a proportional overestimation (slope = 1.053 [1.026–1.081]). In terms of the overall reliability presented as ICCs, SCALE PET achieved the highest ICC (0.991 [0.983–0.994]), followed by BTXBrain (0.986 [0.982–0.989]) and MIMneuro (0.966 [0.944–0.978]).

Figure 2.

Correlation between software-based and original Centiloid values. Scatter plots showing the correlation between the reference Centiloid values calculated using the original Centiloid Project method and Centiloid values derived from three commercial software platforms ((A), BTXBrain; (B), MIMneuro; (C), SCALE PET). Each dot represents an individual patient scan. The solid lines represent the Passing–Bablok regression lines.
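The comparison of correlation coefficients can be illustrated with a Fisher z-test. The sketch below assumes the simple independent-samples form; the text does not state which variant was used, and because all platforms were evaluated on the same scans, a dependent-correlation test may be more appropriate. The function name is hypothetical.

```python
from math import atanh, erfc, sqrt


def fisher_z_test(r1, n1, r2, n2):
    """Two-sided test for a difference between two Pearson correlations.

    Treats the two correlations as coming from independent samples,
    which is a simplifying assumption of this sketch.
    """
    z = (atanh(r1) - atanh(r2)) / sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    p = erfc(abs(z) / sqrt(2.0))  # two-sided p-value from the standard normal
    return z, p


# Reported values: BTXBrain R = 0.993 vs. MIMneuro R = 0.974, n = 332 scans each.
z_stat, p_value = fisher_z_test(0.993, 332, 0.974, 332)
```

Under these assumptions, the p-value falls well below 0.001, in line with the reported significance.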

Table 3.

Agreement of software-based Centiloids according to tracer types.

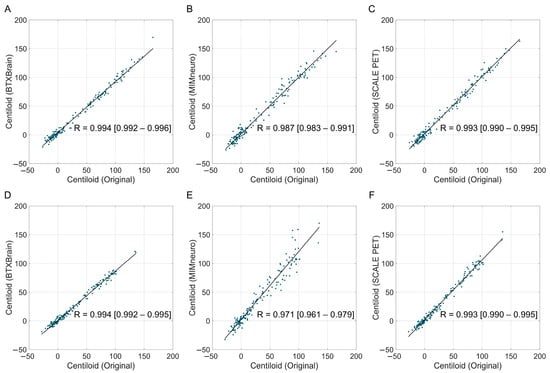

In tracer-specific analyses, both BTXBrain and SCALE PET maintained an excellent performance for FBB (BTXBrain, R = 0.994, ICC = 0.989; SCALE PET, R = 0.993, ICC = 0.992) and FMM (BTXBrain, R = 0.994, ICC = 0.980; SCALE PET, R = 0.993, ICC = 0.988) (Figure 3 and Table 3). MIMneuro’s agreement was robust for FBB (R = 0.987, ICC = 0.986) but declined for FMM (R = 0.971, ICC = 0.941). For the FMM subgroup, both SCALE PET and MIMneuro overestimated Centiloid values; however, MIMneuro’s overestimation (slope = 1.182 [1.130–1.231]) was greater than that of SCALE PET (slope = 1.044 [1.022–1.067]). In contrast, BTXBrain consistently underestimated Centiloid values across tracers, although the underestimation was slightly more pronounced in FMM (FBB, slope = 0.883 [0.867–0.899]; FMM, slope = 0.859 [0.842–0.877]).

Figure 3.

Correlation between software-based and original Centiloid values by radiotracer. The scatter plots show the correlation between the reference Centiloid values calculated using the original Centiloid Project method and Centiloid values derived from three commercial software platforms ((A,D): BTXBrain; (B,E): MIMneuro, (C,F): SCALE PET), separated by radiotracers ((A–C): [18F]Florbetaben PET; (D–F): [18F]Flutemetamol PET). Each dot represents an individual patient scan. The solid lines represent the Passing–Bablok regression lines.

Using 10–30 Centiloids as a gray zone, we assessed the presence of critically discordant cases, defined as cases where Centiloid values were classified as definitely negative (<10) by the original method but definitely positive (>30) by the software or vice versa. Despite the quantitative biases and variability, none of the tools produced such a discrepancy.
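The discordance rule described above can be written as a short check. This is a sketch with hypothetical function names; only the 10 and 30 Centiloid boundaries come from the text.

```python
def zone(cl, low=10.0, high=30.0):
    """Classify a Centiloid value as negative, gray zone, or positive."""
    if cl < low:
        return "negative"
    if cl > high:
        return "positive"
    return "gray"


def is_critically_discordant(reference_cl, software_cl, low=10.0, high=30.0):
    """True when one method says definitely negative and the other definitely positive."""
    zones = {zone(reference_cl, low, high), zone(software_cl, low, high)}
    return zones == {"negative", "positive"}
```

A pair in which either value falls inside the gray zone is not counted as critically discordant, regardless of how far apart the two values are.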

3.3. The Agreement of Software-Based Centiloid with the Original Method According to Centiloid Levels

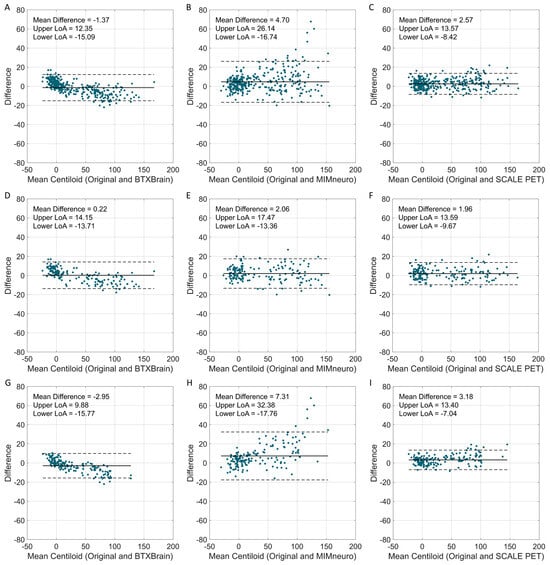

Beyond the overall agreement, we further examined biases across Centiloid ranges. Bland–Altman plots across the full Centiloid spectrum revealed that limits of agreement (LoAs) were narrowest for SCALE PET (−8.4 to 13.6), followed by BTXBrain (−15.1 to 12.4) and MIMneuro (−16.7 to 26.1) (Figure 4). BTXBrain exhibited a bidirectional bias, showing a slight overestimation at low Centiloid values and a progressive underestimation at higher values, which is consistent with its lower slope in the regression. MIMneuro showed a positive bias across all ranges, with larger differences in the definitely positive range. SCALE PET demonstrated a borderline overestimation over the entire range.

Figure 4.

Bland–Altman plots comparing software-derived Centiloid values with the original Centiloid values. Bland–Altman plots show the agreement between software-derived Centiloid values and the original Centiloid Project method. (A–C) Total population (BTXBrain, MIMneuro, SCALE PET); (D–F) [18F]Florbetaben (BTXBrain, MIMneuro, SCALE PET); (G–I) [18F]Flutemetamol (BTXBrain, MIMneuro, SCALE PET). Each plot displays the mean Centiloid value on the x-axis and the difference between software-derived and reference Centiloid values on the y-axis. The solid line represents the mean difference, while the dashed lines indicate the 95% limits of agreement (LoAs, mean difference ± 1.96 standard deviations).
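The bias and limits of agreement defined in the caption (mean difference ± 1.96 standard deviations) can be computed as follows. This is a minimal sketch with a hypothetical function name and made-up input values; it uses the sample standard deviation.

```python
from statistics import fmean, stdev


def bland_altman(reference, software):
    """Return (bias, lower LoA, upper LoA) for software minus reference."""
    diffs = [s - r for r, s in zip(reference, software)]
    bias = fmean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Made-up Centiloid values, for illustration only.
bias, lower, upper = bland_altman([0.0, 10.0, 20.0, 30.0], [2.0, 10.0, 22.0, 30.0])
```

The x-axis of each plot is the per-scan mean of the two measurements, which this sketch does not return.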

We focused on the subthreshold-to-low-positive range (0–50 Centiloid unit), which is extended from the commonly considered gray zone (typically 10–30) [11], to evaluate the reliability in the most clinically significant zone for aiding diagnosis. Similar patterns were observed in this population, although overall statistics for reliability were slightly decreased due to the smaller group size (Figure 5 and Table 4). BTXBrain showed a strong agreement (R = 0.954 [0.932–0.969]; ICC = 0.942 [0.906–0.964]) with a modest underestimation (mean difference = −1.6 [−2.5 to −0.7]; slope = 0.876 [0.817–0.930]). SCALE PET demonstrated excellent reliability (R = 0.956 [0.935–0.971]; ICC = 0.944 [0.911–0.964]), with a modest overestimation (mean difference = +1.6 [0.6–2.6]; slope = 1.147 [1.078–1.210]). MIMneuro’s performance was relatively lower (R = 0.916 [0.877–0.943]; ICC = 0.853 [0.740–0.912]), with a more pronounced overestimation (mean difference = +4.0 [2.3–5.7]; slope = 1.397 [1.261–1.507]).

Figure 5.

The correlation between software-derived and original Centiloid values in the subthreshold-to-low-positive range (0–50 Centiloid unit). Scatter plots show the correlation between the reference Centiloid values calculated using the original Centiloid Project method and Centiloid values derived from three commercial software platforms ((A), BTXBrain; (B), MIMneuro; (C), SCALE PET), limited to cases with original Centiloid values between 0 and 50. Each dot represents an individual patient scan. The solid lines represent the Passing–Bablok regression lines.

Table 4.

The agreement of software-based Centiloid values in the subthreshold-to-low-positive range (0–50 Centiloid unit).

When stratified by tracers, MIMneuro’s overestimation was accentuated in the FMM subgroup (slope = 1.436 [1.318–1.584]; mean difference = +5.9 [3.6–8.1]). SCALE PET’s slight overestimation was consistent across tracers (FBB slope = 1.155 [0.978–1.355]; FMM slope = 1.146 [1.074–1.215]; mean difference = +1.6 [0.6–2.7] for both), although statistical significance was achieved only in FMM due to the group size difference. BTXBrain tended to underestimate Centiloid values for both tracers, with the underestimation more prominent in FMM (slope = 0.890 [0.826–0.941]) compared to FBB (slope = 0.922 [0.776–1.093]).

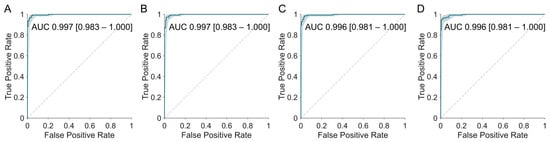

3.4. Agreement with Visual Interpretation Results

All software-derived Centiloids demonstrated an excellent concordance with the visual interpretation, comparable to the original method (Table 5 and Figure 6). The area under the ROC curve (AUROC) was high (Original 0.997 [95% CI, 0.983–1.000]; BTXBrain 0.997 [0.983–1.000]; MIMneuro 0.996 [0.981–1.000]; SCALE PET 0.996 [0.981–1.000]), even for MIMneuro which exhibited a relatively lower correlation with the original method.

Table 5.

Agreement of Centiloid values derived from various methods with visual interpretation results.

Figure 6.

Receiver operating characteristic (ROC) curves for predicting amyloid PET visual interpretation results using Centiloid values derived from each software platform. The original method (A) and all three platforms—BTXBrain (B), MIMneuro (C), and SCALE PET (D)—demonstrated an excellent concordance with the visual interpretation, with areas under the curve (AUCs) exceeding 0.996.

Optimal cutoffs determined by Youden’s index were close overall to the original threshold of 21.4. BTXBrain’s cutoff (21.3) was the most similar to the reference. MIMneuro (28.2) and SCALE PET (25.4) required higher cutoff values.

When defining a practical diagnostic threshold and gray zone—i.e., cutoffs that achieve either 99% sensitivity or 99% specificity—the ranges for SCALE PET (18.8 for 99% sensitivity and 30.8 for 99% specificity) closely mirrored those of the original method (19.9–30.8). BTXBrain (16.6–37.6) and MIMneuro (21.1–38.1), however, needed substantially wider ranges to attain an equivalent sensitivity- or specificity-driven performance.
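The two kinds of cutoff discussed above can be sketched as follows. The function names are hypothetical, and the convention that a scan is called positive when its value is greater than or equal to the cutoff is an assumption of this sketch; statistical packages may instead report midpoints between adjacent values.

```python
def youden_cutoff(values, labels):
    """Return (cutoff, J) maximizing J = sensitivity + specificity - 1.

    values: Centiloid values; labels: True for visually positive scans.
    """
    pos = [v for v, is_pos in zip(values, labels) if is_pos]
    neg = [v for v, is_pos in zip(values, labels) if not is_pos]
    best_cutoff, best_j = None, -1.0
    for c in sorted(set(values)):
        sens = sum(v >= c for v in pos) / len(pos)
        spec = sum(v < c for v in neg) / len(neg)
        j = sens + spec - 1.0
        if j > best_j:
            best_cutoff, best_j = c, j
    return best_cutoff, best_j


def cutoff_for_sensitivity(values, labels, target=0.99):
    """Return the highest observed cutoff whose sensitivity is at least target."""
    pos = [v for v, is_pos in zip(values, labels) if is_pos]
    ok = [c for c in set(values) if sum(v >= c for v in pos) / len(pos) >= target]
    return max(ok) if ok else None
```

The specificity-driven cutoff follows the same pattern with the roles of the positive and negative groups exchanged; the interval between the two cutoffs then serves as the gray zone.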

4. Discussion

Since its introduction in 2015 [5], the Centiloid framework has become the most widely accepted tracer-independent quantitative metric for amyloid PET, supporting amyloid positivity determination [27,28], disease progression prediction [29], amyloid burden increase monitoring [30], therapy candidate selection [14,15], treatment response evaluation [13,14,31,32], and treatment discontinuation decisions [14]. With the shift from clinical to biomarker-based diagnostic criteria for AD and the rise of amyloid-targeting therapies, the demand for practical quantification methods has grown, prompting the introduction of several commercial tools for clinical use [33,34,35]. Although commercial software adopting the Centiloid scale has demonstrated a satisfactory performance on reference datasets before market release, the validation using real-world data remains limited. While prior studies compared software-generated Centiloid values across platforms [19,22], none have benchmarked multiple commercial tools directly against the original reference method.

We addressed this gap by evaluating the reliability of Centiloid values generated by three widely used commercial tools, using the original Centiloid Project method as the reference standard. All three platforms produced Centiloid values with an acceptable agreement, but software-specific differences were evident. BTXBrain demonstrated an excellent overall correlation with the reference but exhibited a proportional underestimation. MIMneuro showed a slightly lower correlation and greater random variability, with a particularly pronounced overestimation in FMM scans. SCALE PET achieved an excellent overall correlation and minimal proportional overestimations for FMM. Importantly, these differences did not compromise the concordance with visual interpretations, suggesting that a strict alignment with the original method may not be necessary for maintaining clinical diagnostic performances.

Among the three platforms, SCALE PET showed the strongest concordance with the original Centiloid method, with an excellent correlation and a minimal proportional bias (Table 6). Although a slight overestimation was observed (slope 1.044 in FMM; mean difference +1.6), this deviation is clinically negligible. This excellent reliability reflects both the consistency of the AI-based individual MRI segmentation used for the PET SUVR quantification and its appropriate recalibration to match the original Centiloid. This robustness makes SCALE PET an attractive option for obtaining Centiloid values that most closely align with the reference. The MRI-based AI protocol for PET quantification ensures dependable results with a modest 5–10 min processing time, but it relies on having a separately acquired MRI. It is noteworthy that SCALE PET also offers a PET-only version [21], which warrants further validation to assess its performance in Centiloid calculations without MRI input.

Table 6.

A summary of key findings in this study.

BTXBrain demonstrated an excellent correlation with the Centiloid reference, though it initially underestimated values, mainly in the positive amyloid PET range, because it applies the original Centiloid equation without adjustments. In practice, this underestimation stems from a SUVR mismatch between BTXBrain and the MRI-based SPM pipeline [36], whereas BTXBrain’s SUVR aligns more closely with FreeSurfer, and both BTXBrain and FreeSurfer outperform SPM in spatial registration accuracy [23]. After a linear recalibration formula based on the GAAIN dataset is applied to align its output with the original Centiloid scale, as MIMneuro and SCALE PET do, BTXBrain proves efficient for both clinical and research applications; the recalibrated results are provided as supplementary correlation plots (Figure S1), Bland–Altman analyses (Figure S2), and concordance with visual reads (Table S1). Its ability to generate robust, highly correlated Centiloid results in about one to three minutes, without requiring a patient MRI, constitutes a clear practical advantage.

MIMneuro exhibited a higher variability and the lowest correlation with the original Centiloid method among the evaluated pipelines. Its PET quantification relies on an adaptive-template registration with a landmark-based deformation algorithm, which can be less precise than MRI-guided methods, such as the MRI-based high-dimensional normalization (SPM), MRI-driven surface registration and cortical parcellation (FreeSurfer), MRI-driven deep-learning-based tissue segmentation for ROI extractions (SCALE PET), or MR-less end-to-end PET normalization via deep learning trained on paired PET-MRI data (BTXBrain). Importantly, despite this variability, MIMneuro maintained a concordance with the visual interpretation, indicating that these methodological differences do not reduce its essential clinical diagnostic value. However, in research or clinical trial settings, where Centiloid values must be rigorously compared and reproduced, its higher variability and lower correlation with the original Centiloid method relative to other software should be taken into account.

Various Centiloid thresholds have been proposed for determining amyloid positivity [15,28,37,38], but given the known variability, applying a single cutoff across all settings is generally not recommended [11]. In this study, we derived platform-specific cutoffs corresponding to 99% sensitivity and 99% specificity, which aligned broadly with published thresholds ranging from 17 to 42. This supports the overall suitability of these commercial tools for clinical and research use. Nevertheless, the small but consistent differences in cutoffs across platforms indicate the need for caution when applying a fixed Centiloid threshold (e.g., 30 for treatment enrollment decisions) across different software. Careful consideration of platform-specific characteristics is essential for accurate and clinically meaningful interpretation.

We excluded comparisons of the SUVR between software platforms due to differences in their atlases. BTXBrain uses the Centiloid Project atlas for calculating Centiloid values, but it applies the Automated Anatomical Labeling 3 atlas for SUVR values displayed within the software. MIMneuro employs an atlas developed for the [18F]Florbetapir PET quantification [24], and SCALE PET uses the Desikan–Killiany atlas commonly adopted in MRI segmentation [39]. These differences in the ROI parcellation introduce an unavoidable SUVR variability; therefore, we focused our analysis exclusively on Centiloid values.

This study has several limitations. First, because of the single-center retrospective design, potential selection bias cannot be excluded. To ensure broader generalizability and robustness, future multicenter studies are warranted to validate these automated Centiloid platforms across diverse populations and imaging systems.

Second, the analysis did not include images acquired from digital PET scanners, which are increasingly adopted in clinical practice. Because digital PET systems differ in technical aspects, such as spatial resolution, sensitivity, and noise characteristics, the performance of automated Centiloid quantification tools may vary accordingly. As a result, the generalizability of our findings to digital PET systems remains uncertain.

In addition, our study focused on validating the quantitative outputs of each software platform, rather than examining their associations with clinical outcomes. As such, the comparative clinical utility of these tools, such as predicting cognitive decline or monitoring treatment responses, remains outside the scope of this analysis. This design reflects our primary aim: to evaluate whether Centiloid values derived from recently commercialized, automated platforms can be used interchangeably with those obtained from the reference method and other established approaches. Confirming their technical reliability is a necessary prerequisite before applying these Centiloid values for outcome prediction or treatment monitoring.

Last, we evaluated the commercial software in a clinical setting without harmonizing PET/CT images across different scanners. Despite using PET/CT systems from multiple vendors, the results were highly consistent, suggesting that Centiloid values from these commercial platforms can be used reliably without prior harmonization.

5. Conclusions

This study is the first to directly benchmark dedicated, nearly fully automated software platforms for Centiloid calculations against the original Centiloid Project method using real-world amyloid PET data. By evaluating tools with distinct processing pipelines, it provides a practical reference for researchers and clinicians to assess the reliability of Centiloid values generated by different platforms. All three commercial automated platforms provided an acceptable reliability for the Centiloid quantification when compared to the original Centiloid Project method. Although each software exhibited a proportional bias or variability, these differences did not compromise the agreement with the visual interpretation, suggesting their potential utility as supportive tools in clinical practice. Nevertheless, clinicians should remain aware of platform-specific characteristics, especially when applying diagnostic thresholds or monitoring longitudinal changes. Further investigations are warranted, including large-scale multicenter validation studies that incorporate digital PET/CT systems and assess associations between software-derived Centiloid values and clinical outcomes, to establish their utility in patient management.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/tomography11080086/s1, Figure S1: Correlation plots showing the recalibrated Centiloid values derived from BTXBrain against the original Centiloid Project reference values; Figure S2: Bland–Altman plots comparing the recalibrated Centiloid values derived from BTXBrain with the original Centiloid values; Table S1: Agreement of recalibrated Centiloid values derived from BTXBrain with visual interpretation results.

Author Contributions

Conceptualization, Y.-k.K. and S.H.; Methodology, Y.-k.K. and S.H.; Software, Y.-k.K., J.W.M., S.J.K. and S.H.; Validation, J.W.M., S.J.K. and S.H.; Formal Analysis, Y.-k.K.; Investigation, Y.-k.K., J.W.M., S.J.K. and S.H.; Resources, Y.-k.K., J.W.M., S.J.K. and S.H.; Data Curation, Y.-k.K.; Writing—Original Draft Preparation, Y.-k.K.; Writing—Review and Editing, J.W.M., S.J.K. and S.H.; Visualization, Y.-k.K.; Supervision, S.H.; Project Administration, S.H.; Funding Acquisition, S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2021R1I1A1A01059759).

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Seoul St. Mary’s Hospital (No. KC25RISI0233 on 15 April 2025).

Informed Consent Statement

Patient consent was waived due to the retrospective nature of this study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. Permission from our institution’s Data Review Board is required prior to sharing.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AD | Alzheimer’s disease |
| FBB | [18F]Florbetaben |
| FMM | [18F]Flutemetamol |
| FDA | Food and Drug Administration |
| SUVR | Standardized uptake value ratio |
| GAAIN | Global Alzheimer’s Association Interactive Network |
| WC | Whole cerebellum |
| MNI | Montreal Neurological Institute |
| AI | Artificial intelligence |
| ICC | Intraclass correlation coefficient |
| ROC | Receiver operating characteristic |
| CDR-SB | Clinical Dementia Rating–Sum of Boxes |
| LoAs | Limits of agreement |
| AUROC | Area under the ROC curve |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).