Automated Distal Radius and Ulna Skeletal Maturity Grading from Hand Radiographs with an Attention Multi-Task Learning Method

Abstract

1. Introduction

2. Materials and Methods

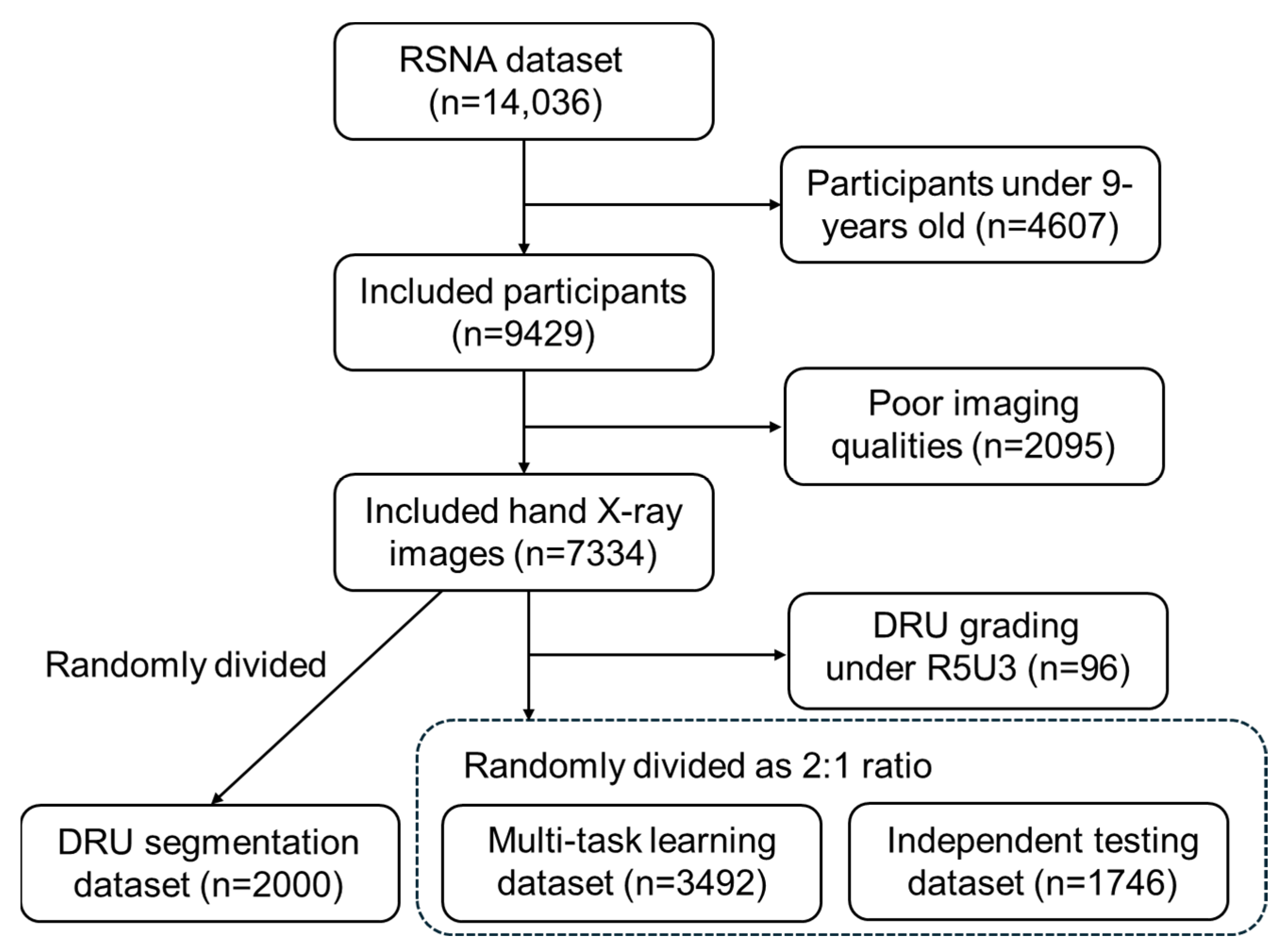

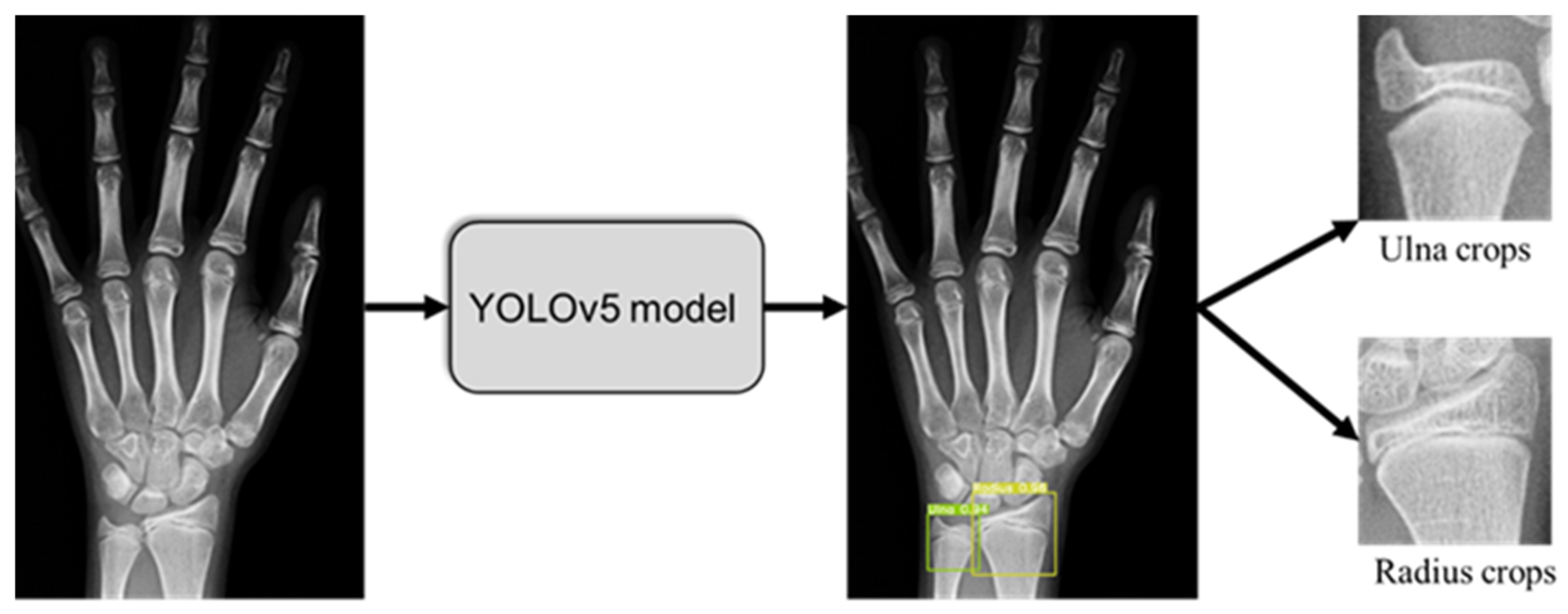

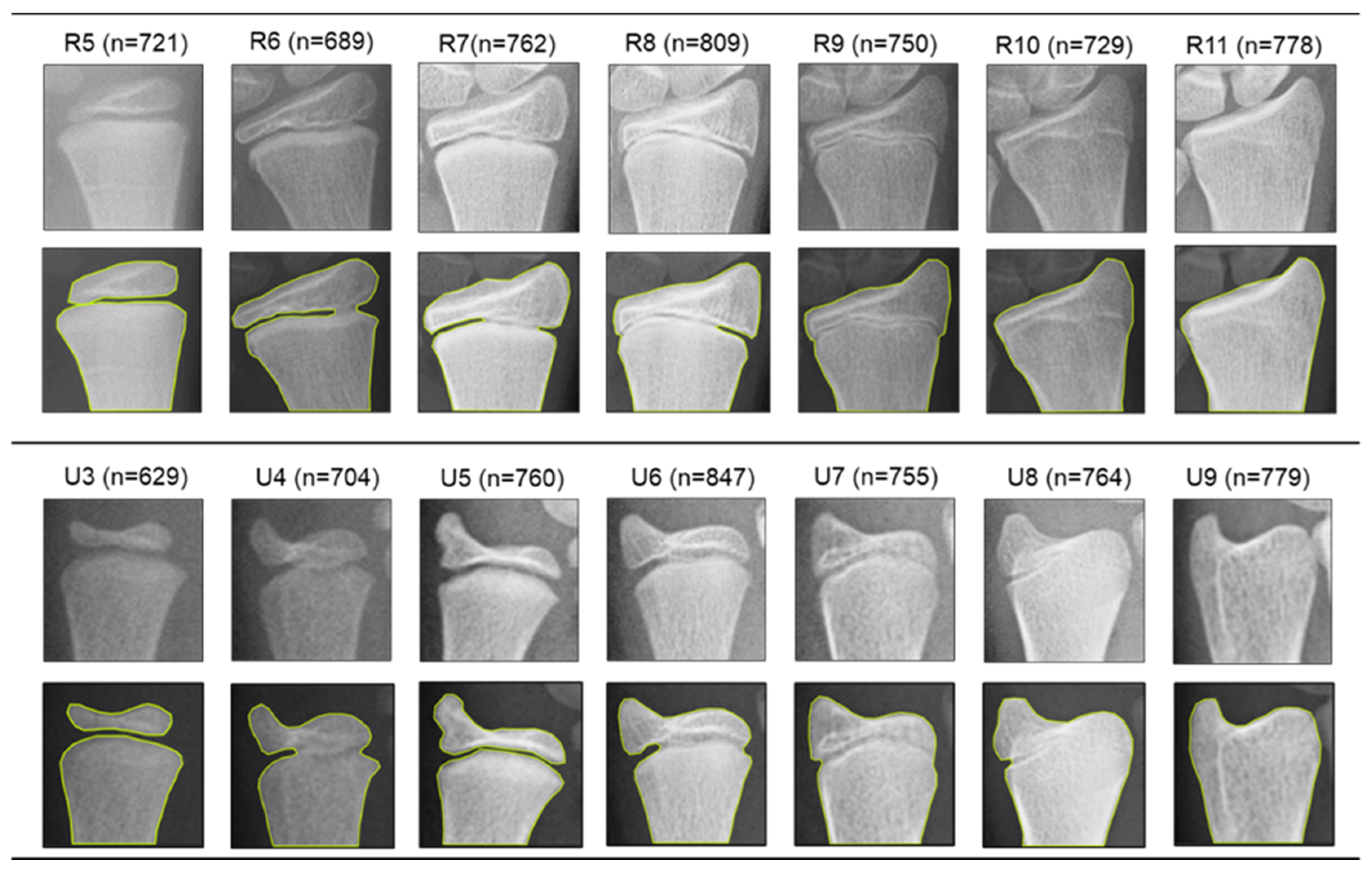

2.1. Dataset and Pre-Processing

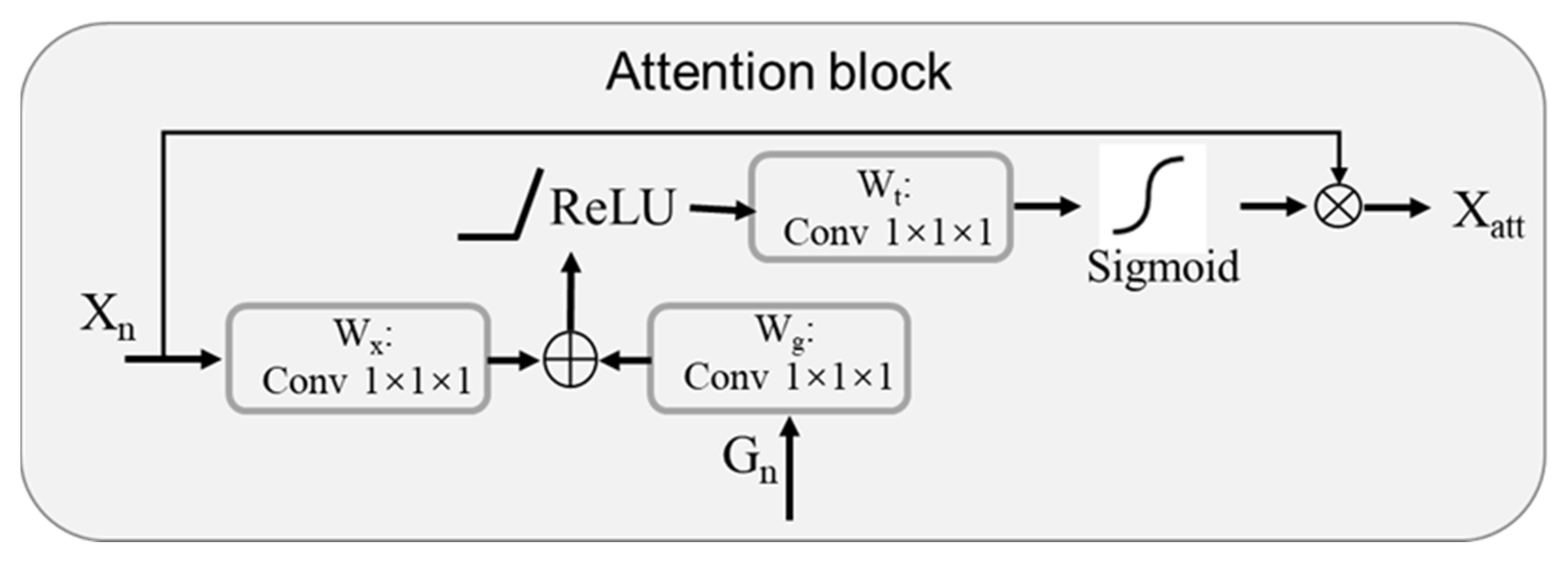

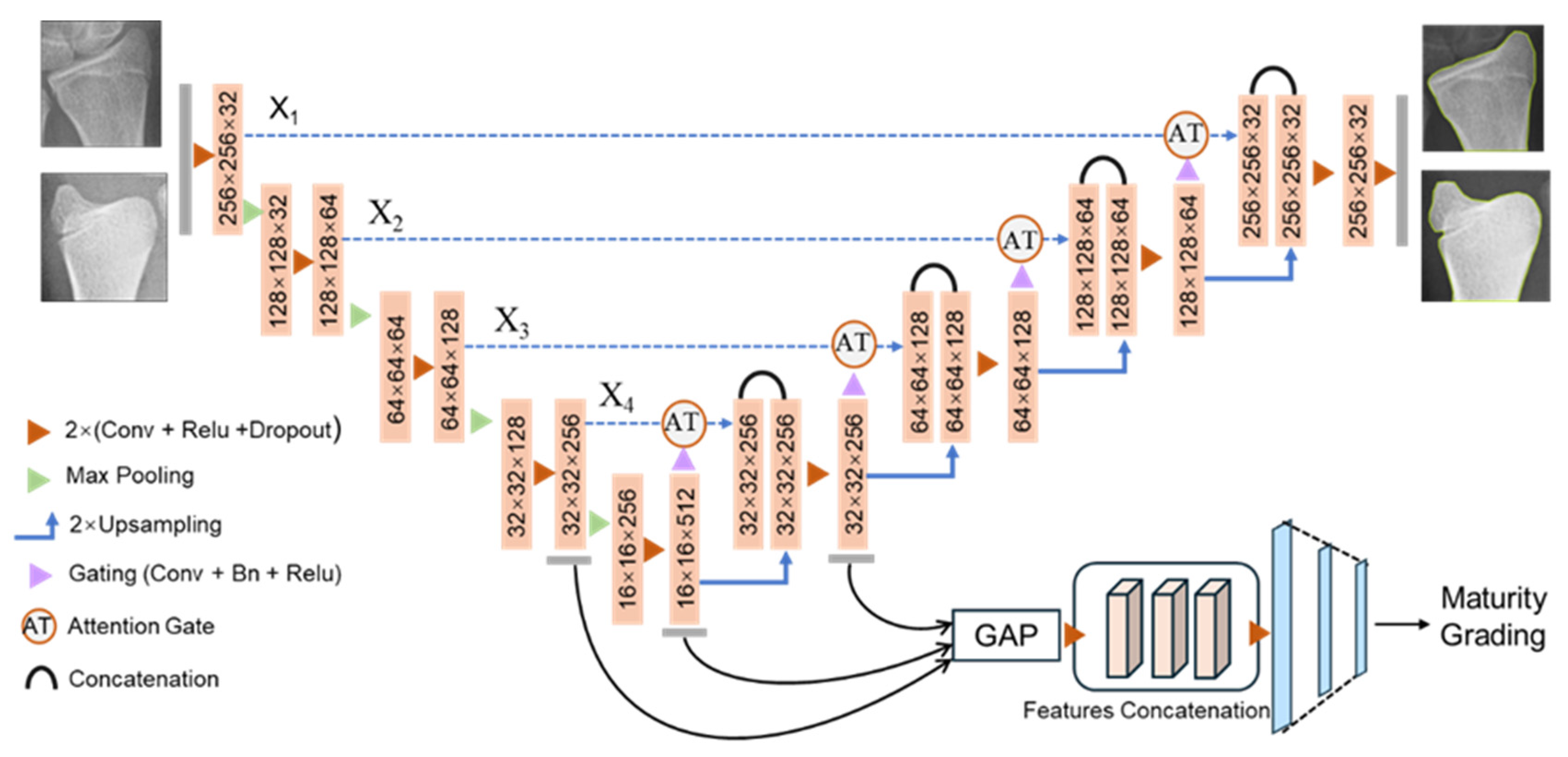

2.2. Attention-U-Net as Backbone of Multi-Task Framework

2.3. Multi-Task Learning Framework for DRU Grading

2.4. Model Training and Evaluation

2.5. Baseline Model Setting for Performance Comparison

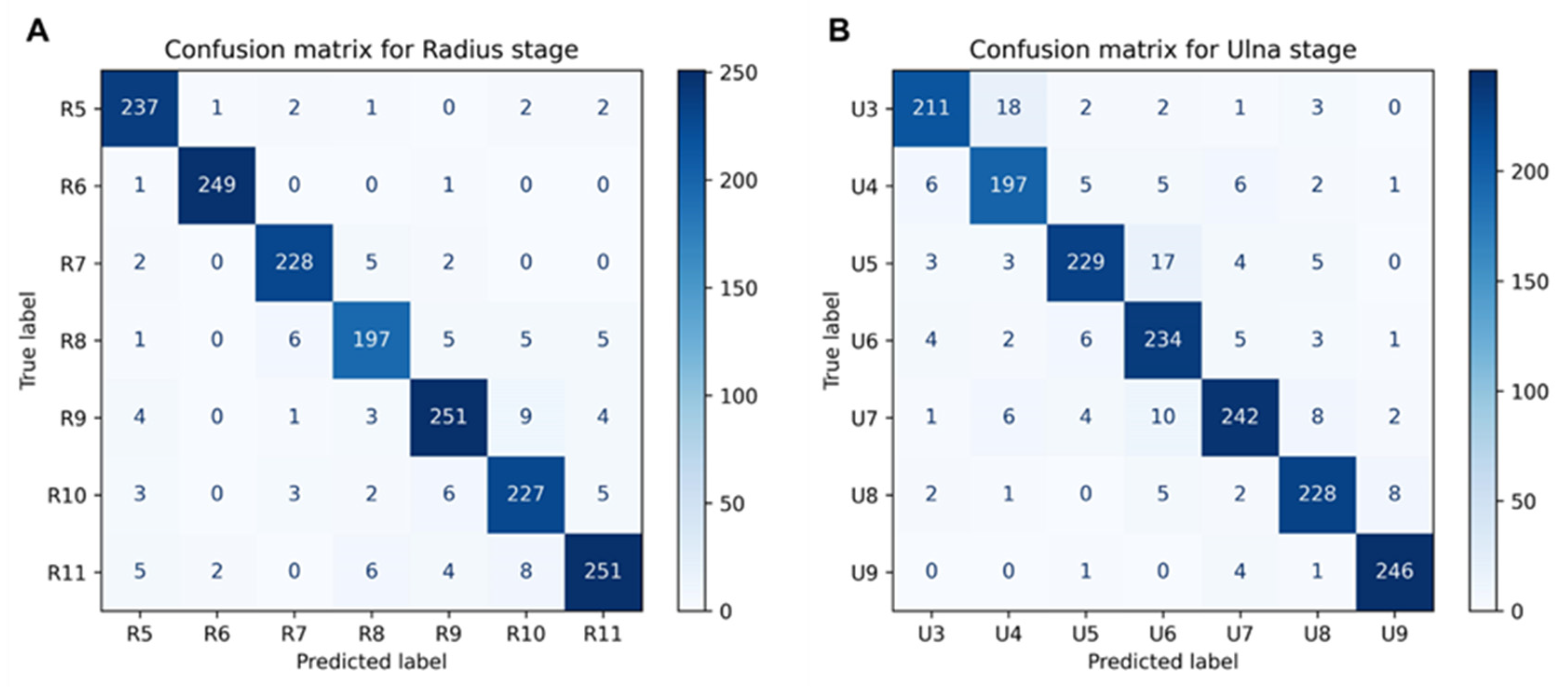

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Molinari, L.; Gasser, T.; Largo, R. A comparison of skeletal maturity and growth. Ann. Hum. Biol. 2013, 40, 333–340.

- Augusto, A.C.L.; Goes, P.C.K.; Flores, D.V.; Costa, M.A.F.; Takahashi, M.S.; Rodrigues, A.C.O.; Padula, L.C.; Gasparetto, T.D.; Nogueira-Barbosa, M.H.; Aihara, A.Y. Imaging review of normal and abnormal skeletal maturation. Radiographics 2022, 42, 861–879.

- Johnson, M.A.; Flynn, J.M.; Anari, J.B.; Gohel, S.; Cahill, P.J.; Winell, J.J.; Baldwin, K.D. Risk of scoliosis progression in nonoperatively treated adolescent idiopathic scoliosis based on skeletal maturity. J. Pediatr. Orthop. 2021, 41, 543–548.

- Spadoni, G.L.; Cianfarani, S. Bone age assessment in the workup of children with endocrine disorders. Horm. Res. Paediatr. 2010, 73, 2–5.

- Staal, H.M.; Goud, A.L.; van der Woude, H.J.; Witlox, M.A.; Ham, S.J.; Robben, S.G.; Dremmen, M.H.; van Rhijn, L.W. Skeletal maturity of children with multiple osteochondromas: Is diminished stature due to a systemic influence? J. Child. Orthop. 2015, 9, 397–402.

- Banica, T.; Vandewalle, S.; Zmierczak, H.G.; Goemaere, S.; De Buyser, S.; Fiers, T.; Kaufman, J.M.; De Schepper, J.; Lapauw, B. The relationship between circulating hormone levels, bone turnover markers and skeletal development in healthy boys differs according to maturation stage. Bone 2022, 158, 116368.

- Bajpai, A.; Kabra, M.; Gupta, A.K.; Menon, P.S. Growth pattern and skeletal maturation following growth hormone therapy in growth hormone deficiency: Factors influencing outcome. Indian Pediatr. 2006, 43, 593.

- Sanders, J.O.; Khoury, J.G.; Kishan, S.; Browne, R.H.; Mooney, J.F., 3rd; Arnold, K.D.; McConnell, S.J.; Bauman, J.A.; Finegold, D.N. Predicting scoliosis progression from skeletal maturity: A simplified classification during adolescence. JBJS 2008, 90, 540–553.

- Mughal, A.M.; Hassan, N.; Ahmed, A. Bone age assessment methods: A critical review. Pak. J. Med. Sci. 2014, 30, 211.

- Gertych, A.; Zhang, A.; Sayre, J.; Pospiech-Kurkowska, S.; Huang, H.K. Bone age assessment of children using a digital hand atlas. Comput. Med. Imaging Graph. 2007, 31, 322–331.

- Furdock, R.J.; Kuo, A.; Chen, K.J.; Liu, R.W. Applicability of shoulder, olecranon, and wrist-based skeletal maturity estimation systems to the modern pediatric population. J. Pediatr. Orthop. 2023, 43, 465–469.

- Benedick, A.; Knapik, D.M.; Duren, D.L.; Sanders, J.O.; Cooperman, D.R.; Lin, F.C.; Liu, R.W. Systematic isolation of key parameters for estimating skeletal maturity on knee radiographs. JBJS 2021, 103, 795–802.

- Sinkler, M.A.; Furdock, R.J.; Chen, D.B.; Sattar, A.; Liu, R.W. The systematic isolation of key parameters for estimating skeletal maturity on lateral elbow radiographs. JBJS 2022, 104, 1993–1999.

- San Román, P.; Palma, J.C.; Oteo, M.D.; Nevado, E. Skeletal maturation determined by cervical vertebrae development. Eur. J. Orthod. 2002, 24, 303–311.

- Hresko, A.M.; Hinchcliff, E.M.; Deckey, D.G.; Hresko, M.T. Developmental sacral morphology: MR study from infancy to skeletal maturity. Eur. Spine J. 2020, 29, 1141–1146.

- Tanner, J.; Whitehouse, R.; Takaishi, M. Standards from birth to maturity for height, weight, height velocity, and weight velocity: British children, 1965. II. Arch. Dis. Child. 1966, 41, 613.

- Schmidt, S.; Nitz, I.; Schulz, R.; Schmeling, A. Applicability of the skeletal age determination method of Tanner and Whitehouse for forensic age diagnostics. Int. J. Leg. Med. 2008, 122, 309–314.

- Mansourvar, M.; Ismail, M.A.; Raj, R.G.; Kareem, S.A.; Aik, S.; Gunalan, R.; Antony, C.D. The applicability of Greulich and Pyle atlas to assess skeletal age for four ethnic groups. J. Forensic Leg. Med. 2014, 22, 26–29.

- Alshamrani, K.; Offiah, A.C. Applicability of two commonly used bone age assessment methods to twenty-first century UK children. Eur. Radiol. 2020, 30, 504–513.

- Canavese, F.; Charles, Y.P.; Dimeglio, A.; Schuller, S.; Rousset, M.; Samba, A.; Pereira, B.; Steib, J.P. A comparison of the simplified olecranon and digital methods of assessment of skeletal maturity during the pubertal growth spurt. Bone Jt. J. 2014, 96, 1556–1560.

- Luk, K.D.; Saw, L.B.; Grozman, S.; Cheung, K.M.; Samartzis, D. Assessment of skeletal maturity in scoliosis patients to determine clinical management: A new classification scheme using distal radius and ulna radiographs. Spine J. 2014, 14, 315–325.

- Cheung, J.P.; Samartzis, D.; Cheung, P.W.; Leung, K.H.; Cheung, K.M.; Luk, K.D. The distal radius and ulna classification in assessing skeletal maturity: A simplified scheme and reliability analysis. J. Pediatr. Orthop. B 2015, 24, 546–551.

- Yahara, Y.; Tamura, M.; Seki, S.; Kondo, Y.; Makino, H.; Watanabe, K.; Kamei, K.; Futakawa, H.; Kawaguchi, Y. A deep convolutional neural network to predict the curve progression of adolescent idiopathic scoliosis: A pilot study. BMC Musculoskelet. Disord. 2022, 23, 610.

- Wang, H.; Zhang, T.; Zhang, C.; Shi, L.; Ng, S.Y.; Yan, H.C.; Yeung, K.C.; Wong, J.S.; Cheung, K.M.; Shea, G.K. An intelligent composite model incorporating global/regional X-rays and clinical parameters to predict progressive adolescent idiopathic scoliosis curvatures and facilitate population screening. eBioMedicine 2023, 95, 104768.

- García-Cano, E.; Arámbula Cosío, F.; Duong, L.; Bellefleur, C.; Roy-Beaudry, M.; Joncas, J.; Parent, S.; Labelle, H. Prediction of spinal curve progression in adolescent idiopathic scoliosis using random forest regression. Comput. Biol. Med. 2018, 103, 34–43.

- Dallora, A.L.; Anderberg, P.; Kvist, O.; Mendes, E.; Diaz Ruiz, S.; Sanmartin Berglund, J. Bone age assessment with various machine learning techniques: A systematic literature review and meta-analysis. PLoS ONE 2019, 14, e0220242.

- Thodberg, H.H.; Kreiborg, S.; Juul, A.; Damgaard Pedersen, K. The BoneXpert method for automated determination of skeletal maturity. IEEE Trans. Med. Imaging 2008, 28, 52–66.

- Fischer, B.; Welter, P.; Grouls, C.; Günther, R.W.; Deserno, T.M. Bone age assessment by content-based image retrieval and case-based reasoning. In Medical Imaging 2011: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2011.

- Harmsen, M.; Fischer, B.; Schramm, H.; Seidl, T.; Deserno, T.M. Support vector machine classification based on correlation prototypes applied to bone age assessment. IEEE J. Biomed. Health Inform. 2012, 17, 190–197.

- Halabi, S.S.; Prevedello, L.M.; Kalpathy-Cramer, J.; Mamonov, A.B.; Bilbily, A.; Cicero, M.; Pan, I.; Araújo Pereira, L.; Teixeira Sousa, R.; Abdala, N.; et al. The RSNA pediatric bone age machine learning challenge. Radiology 2019, 290, 498–503.

- Spampinato, C.; Palazzo, S.; Giordano, D.; Aldinucci, M.; Leonardi, R. Deep learning for automated skeletal bone age assessment in X-ray images. Med. Image Anal. 2017, 36, 41–51.

- Li, S.; Liu, B.; Li, S.; Zhu, X.; Yan, Y.; Zhang, D. A deep learning-based computer-aided diagnosis method of X-ray images for bone age assessment. Complex Intell. Syst. 2022, 8, 1929–1939.

- Li, Z.; Chen, W.; Ju, Y.; Chen, Y.; Hou, Z.; Li, X.; et al. Bone age assessment based on deep neural networks with annotation-free cascaded critical bone region extraction. Front. Artif. Intell. 2023, 6, 1142895.

- Son, S.J.; Song, Y.M.; Kim, N.; Do, Y.H.; Kwak, N.; Lee, M.S.; Lee, B.D. TW3-based fully automated bone age assessment system using deep neural networks. IEEE Access 2019, 7, 33346–33358.

- Kim, H.; Kim, C.S.; Lee, J.M.; Lee, J.J.; Lee, J.; Kim, J.S.; Choi, S.H. Prediction of Fishman’s skeletal maturity indicators using artificial intelligence. Sci. Rep. 2023, 13, 5870.

- Makaremi, M.; Lacaule, C.; Mohammad-Djafari, A. Deep learning and artificial intelligence for the determination of the cervical vertebra maturation degree from lateral radiography. Entropy 2019, 21, 1222.

- Kaddioui, H.; Duong, L.; Joncas, J.; Bellefleur, C.; Nahle, I.; Chémaly, O.; Nault, M.L.; Parent, S.; Grimard, G.; Labelle, H. Convolutional neural networks for automatic Risser stage assessment. Radiol. Artif. Intell. 2020, 2, e180063.

- Diméglio, A.; Charles, Y.P.; Daures, J.P.; de Rosa, V.; Kaboré, B. Accuracy of the Sauvegrain method in determining skeletal age during puberty. JBJS 2005, 87, 1689–1696.

- Ahn, K.S.; Bae, B.; Jang, W.Y.; Lee, J.H.; Oh, S.; Kim, B.H.; Lee, S.W.; Jung, H.W.; Lee, J.W.; Sung, J.; et al. Assessment of rapidly advancing bone age during puberty on elbow radiographs using a deep neural network model. Eur. Radiol. 2021, 31, 8947–8955.

- Wang, S.; Wang, X.; Shen, Y.; He, B.; Zhao, X.; Cheung, P.W.H. An ensemble-based densely-connected deep learning system for assessment of skeletal maturity. IEEE Trans. Syst. Man Cybern. Syst. 2020, 52, 426–437.

- Zhou, Y.; Chen, H.; Li, Y.; Liu, Q.; Xu, X.; Wang, S.; Yap, P.T.; Shen, D. Multi-task learning for segmentation and classification of tumors in 3D automated breast ultrasound images. Med. Image Anal. 2021, 70, 101918.

- Amyar, A.; Modzelewski, R.; Li, H.; Ruan, S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation. Comput. Biol. Med. 2020, 126, 104037.

- Oktay, O.; Schlemper, J.; Le Folgoc, L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

- Charles, Y.P.; Daures, J.P.; de Rosa, V.; Diméglio, A. Progression risk of idiopathic juvenile scoliosis during pubertal growth. Spine 2006, 31, 1933–1942.

- Song, K.M.; Little, D.G. Peak height velocity as a maturity indicator for males with idiopathic scoliosis. J. Pediatr. Orthop. 2000, 20, 286–288.

- Sanders, J.O.; Browne, R.H.; McConnell, S.J.; Margraf, S.A.; Cooney, T.E.; Finegold, D.N. Maturity assessment and curve progression in girls with idiopathic scoliosis. J. Bone Jt. Surg. Am. 2007, 89, 64–73.

- Wang, H.; Zhang, T.; Cheung, K.M.; Shea, G.K. Application of deep learning upon spinal radiographs to predict progression in adolescent idiopathic scoliosis at first clinic visit. eClinicalMedicine 2021, 42, 101220.

- Ferrillo, M.; Curci, C.; Roccuzzo, A.; Migliario, M.; Invernizzi, M.; De Sire, A. Reliability of cervical vertebral maturation compared to hand-wrist for skeletal maturation assessment in growing subjects: A systematic review. J. Back Musculoskelet. Rehabil. 2021, 34, 925–936.

- Cheung, J.P.Y.; Samartzis, D.; Cheung, P.W.H.; Cheung, K.M.; Luk, K.D. Reliability analysis of the distal radius and ulna classification for assessing skeletal maturity for patients with adolescent idiopathic scoliosis. Glob. Spine J. 2016, 6, 164–168.

- Sallam, A.A.; Briffa, N.; Mahmoud, S.S.; Imam, M.A. Normal wrist development in children and adolescents: A geometrical observational analysis based on plain radiographs. J. Pediatr. Orthop. 2020, 40, e860–e872.

- Huang, L.F.; Furdock, R.J.; Uli, N.; Liu, R.W. Estimating skeletal maturity using wrist radiographs during preadolescence: The epiphyseal: Metaphyseal ratio. J. Pediatr. Orthop. 2022, 42, e801–e805.

- Furdock, R.J.; Huang, L.F.; Sanders, J.O.; Cooperman, D.R.; Liu, R.W. Systematic isolation of key parameters for estimating skeletal maturity on anteroposterior wrist radiographs. JBJS 2022, 104, 530–536.

- Larson, D.B.; Chen, M.C.; Lungren, M.P.; Halabi, S.S.; Stence, N.V.; Langlotz, C.P. Performance of a deep-learning neural network model in assessing skeletal maturity on pediatric hand radiographs. Radiology 2018, 287, 313–322.

- Cavallo, F.; Mohn, A.; Chiarelli, F.; Giannini, C. Evaluation of bone age in children: A mini review. Front. Pediatr. 2021, 9, 580314.

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Brauwers, G.; Frasincar, F. A general survey on attention mechanisms in deep learning. IEEE Trans. Knowl. Data Eng. 2021, 35, 3279–3298.

| Models | Accuracy (95% CI) | Precision (95% CI) | Recall (95% CI) | F1 score (95% CI) |
|---|---|---|---|---|
| Ensemble DenseNet [40] | 86.2% (85.4–88.7%) | 87.2% (85.9–87.7%) | 85.3% (84.4–86.2%) | 86.2% (85.1–86.9%) |
| ResNet [24] | 83.3% (81.8–84.6%) | 84.2% (83.0–85.4%) | 82.6% (81.1–83.0%) | 83.4% (82.0–84.2%) |
| Efficient-Net B4 | 84.5% (82.2–85.6%) | 83.9% (82.8–84.5%) | 85.2% (84.1–86.3%) | 84.5% (83.4–85.4%) |
| Two-stage framework | 87.3% (86.0–88.4%) | 86.8% (86.3–88.2%) | 88.5% (83.3–88.9%) | 87.6% (84.3–88.5%) |
| U-Net with multitask model | 89.4% (88.2–91.2%) | 90.3% (88.1–92.0%) | 88.0% (87.4–90.8%) | 89.1% (87.7–91.4%) |
| Multi-task without pretrain | 92.5% (90.3–93.1%) | 91.4% (89.9–93.0%) | 93.3% (91.9–94.0%) | 92.3% (90.9–93.5%) |
| Multi-task with regression | 92.2% (90.7–93.6%) | 91.8% (89.3–92.8%) | 92.9% (90.0–93.5%) | 92.3% (89.6–93.1%) |
| Proposed method | 94.3% (91.4–95.0%) | 93.8% (90.7–94.3%) | 94.6% (92.1–95.2%) | 94.2% (91.4–94.7%) |

| Models | Accuracy (95% CI) | Precision (95% CI) | Recall (95% CI) | F1 score (95% CI) |
|---|---|---|---|---|
| Ensemble DenseNet [40] | 83.4% (80.9–84.1%) | 81.3% (79.6–83.0%) | 83.9% (82.1–84.4%) | 83.2% (81.5–84.0%) |
| ResNet [24] | 81.0% (79.5–83.0%) | 78.6% (77.9–80.4%) | 81.5% (80.1–82.4%) | 80.8% (79.5–81.9%) |
| Efficient-Net B4 | 82.8% (81.7–83.6%) | 83.9% (82.0–84.7%) | 82.1% (81.5–83.9%) | 82.5% (81.6–84.1%) |
| Two-stage framework | 85.6% (84.1–85.9%) | 86.0% (84.4–86.7%) | 83.2% (83.0–84.5%) | 83.9% (83.3–85.0%) |
| U-Net with multitask model | 85.9% (84.3–86.7%) | 85.0% (83.9–86.2%) | 86.7% (84.9–87.0%) | 86.3% (84.6–86.8%) |
| Multi-task without pretrain | 87.2% (86.4–88.6%) | 85.0% (83.8–86.2%) | 87.9% (86.1–88.5%) | 87.2% (85.5–87.9%) |
| Multi-task with regression | 89.1% (87.0–91.1%) | 90.3% (88.7–90.9%) | 88.0% (87.6–89.8%) | 88.6% (87.9–90.1%) |
| Proposed method | 90.8% (88.6–93.3%) | 90.3% (89.0–92.6%) | 92.4% (90.1–94.2%) | 91.9% (89.8–93.8%) |
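
The grading tables above report accuracy, precision, recall, and F1 score with 95% confidence intervals. As a rough illustration of how such figures can be obtained for a multi-class grading task, the following minimal sketch computes accuracy and macro-averaged precision, recall, and F1, plus a percentile bootstrap interval. Both the macro averaging and the bootstrap are assumptions made for illustration; this section does not state which averaging or interval method was used. The function names and the integer grade labels are hypothetical.

```python
import random
from typing import Callable, Sequence, Tuple


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted grade equals the reference grade."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_prf(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Macro-averaged precision, recall, and F1 (assumed averaging scheme)."""
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(classes)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


def bootstrap_ci(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    metric: Callable[[Sequence[int], Sequence[int]], float],
    n_boot: int = 1000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float]:
    """Percentile bootstrap interval for a metric (assumed CI method)."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        # Resample cases with replacement and recompute the metric.
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lo = stats[int((alpha / 2) * n_boot)]
    hi = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lo, hi


# Toy example with three hypothetical grades (0, 1, 2).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
acc = accuracy(y_true, y_pred)
precision, recall, f1 = macro_prf(y_true, y_pred)
ci_low, ci_high = bootstrap_ci(y_true, y_pred, accuracy, n_boot=200)
```

With so few samples the bootstrap interval is very wide; the sketch is only meant to show the mechanics, not to reproduce the reported values.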

| Models | Distal Radius IoU (95% CI) | Distal Radius DSC (95% CI) | Distal Ulna IoU (95% CI) | Distal Ulna DSC (95% CI) |
|---|---|---|---|---|
| U-Net | 0.912 (0.906–0.926) | 0.930 (0.915–0.939) | 0.918 (0.897–0.922) | 0.920 (0.908–0.934) |
| Multi-task with U-Net | 0.937 (0.912–0.944) | 0.943 (0.921–0.949) | 0.931 (0.906–0.937) | 0.937 (0.919–0.945) |
| Attention-U-Net | 0.945 (0.932–0.953) | 0.950 (0.939–0.958) | 0.948 (0.933–0.961) | 0.950 (0.937–0.962) |
| Proposed method | 0.960 (0.951–0.973) | 0.973 (0.962–0.979) | 0.966 (0.948–0.977) | 0.969 (0.962–0.978) |
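
The segmentation table above reports intersection over union (IoU) and the Dice similarity coefficient (DSC) for the distal radius and ulna masks. For reference, a minimal sketch of the standard definitions on binary masks follows. The mask layout (nested lists of 0/1 values) and the function names are hypothetical and chosen only for illustration; they are not taken from the authors' code.

```python
from typing import Sequence, Tuple

# 2D binary mask: 1 marks a pixel inside the bone region (hypothetical layout).
Mask = Sequence[Sequence[int]]


def overlap_counts(pred: Mask, target: Mask) -> Tuple[int, int, int]:
    """Return (intersection, predicted area, target area) in pixels."""
    inter = pred_area = target_area = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += 1 if (p and t) else 0
            pred_area += 1 if p else 0
            target_area += 1 if t else 0
    return inter, pred_area, target_area


def iou(pred: Mask, target: Mask) -> float:
    """IoU = |A ∩ B| / |A ∪ B|; defined as 1.0 when both masks are empty."""
    inter, pred_area, target_area = overlap_counts(pred, target)
    union = pred_area + target_area - inter
    return inter / union if union else 1.0


def dice(pred: Mask, target: Mask) -> float:
    """DSC = 2 |A ∩ B| / (|A| + |B|); defined as 1.0 when both masks are empty."""
    inter, pred_area, target_area = overlap_counts(pred, target)
    total = pred_area + target_area
    return 2 * inter / total if total else 1.0


# Toy 2x3 masks: two overlapping pixels, three pixels in each mask.
pred_mask = [[1, 1, 0], [0, 1, 0]]
true_mask = [[1, 0, 0], [0, 1, 1]]
example_iou = iou(pred_mask, true_mask)    # 2 / 4
example_dsc = dice(pred_mask, true_mask)   # 4 / 6
```

For a single pair of masks the two scores are linked by DSC = 2·IoU / (1 + IoU), so DSC is never lower than IoU; values averaged over a test set need not satisfy that identity exactly.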

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, X.; Wang, R.; Jiang, W.; Lu, Z.; Chen, N.; Wang, H. Automated Distal Radius and Ulna Skeletal Maturity Grading from Hand Radiographs with an Attention Multi-Task Learning Method. Tomography 2024, 10, 1915-1929. https://doi.org/10.3390/tomography10120139
