Abstract

Northern Goshawk Optimization (NGO) is an efficient optimization algorithm, but it tends to fall into local optima and converges slowly. To address these drawbacks, we propose the Multi-Strategy Improved Northern Goshawk Optimization (MSINGO) algorithm, which adds a cubic mapping strategy, a novel weighted stochastic difference mutation strategy, and a weighted sine and cosine optimization strategy to the original NGO. To verify the performance of MSINGO, comparative experiments were performed against five highly cited and six recently proposed metaheuristic algorithms on the CEC2017 test functions. The results show that, in the vast majority of cases, MSINGO’s exploitation ability, exploration ability, local optima avoidance ability, and scalability are superior to those of the competing algorithms. Finally, six real-world engineering problems demonstrate the merits and potential of MSINGO.

1. Introduction

In the real world, optimization problems arise in many fields [], such as medicine [,], transportation [,], engineering design [,], economics [], feature selection [,,], artificial neural networks [,,], and so on. Optimization problems can be approached in two principal ways: traditional mathematical methods and metaheuristic algorithms []. Traditional mathematical methods are deterministic and include gradient descent [], Newton’s method [], conjugate gradient [], and so on. They solve the problem by using the derivative information of the objective function, which is effective for continuously differentiable problems []. However, when the optimization problem is high-dimensional, non-differentiable, or has multiple local optima, traditional mathematical methods become ineffective []. Hence, metaheuristic algorithms have been extensively studied. Metaheuristic algorithms are stochastic methods that consist mainly of two phases: exploration and exploitation. In the exploration phase, the algorithm searches for solutions on a global scale to avoid falling into local optima. In the exploitation phase, the algorithm searches in a local area to find a better solution. Metaheuristic algorithms are widely used to solve complex real-world optimization problems due to their simplicity, flexibility, derivative-free mechanism, and ability to avoid local optima.

Metaheuristic algorithms are generally classified into four categories []: (1) evolution-based algorithms; (2) swarm-based algorithms; (3) physics- or chemistry-based algorithms; and (4) social- or human-based algorithms. Some well-known and recently proposed metaheuristic algorithms are summarized in Table 1.

Evolution-based metaheuristic algorithms are mainly implemented by modeling the principles of species evolution in nature. An evolution-based metaheuristic algorithm usually consists of three operations: selection, crossover, and mutation. The Genetic Algorithm (GA) [] and Differential Evolution (DE) [] are two well-known evolution-based metaheuristic algorithms. The GA was first proposed by Holland in 1975, inspired by Darwin’s theory of natural selection. DE was proposed by Storn and Price in 1997 and is known for its simplicity, ease of implementation, fast convergence, and robustness. Other evolution-based algorithms include Evolutionary Programming (EP) [], Genetic Programming (GP) [], Biogeography-Based Optimization (BBO) [], Memetic Algorithms (MAs) [], and Imperialist Competitive Algorithms (ICAs) [].

Swarm-based metaheuristic algorithms are primarily inspired by the behavior of groups, using the interaction and cooperation of individuals within a population to find the global optimal solution. Particle Swarm Optimization (PSO) [], which mimics the flocking behavior of birds, is one of the most famous swarm-based algorithms. Ant Colony Optimization (ACO) [] is another popular swarm-based metaheuristic algorithm, inspired by the behavior of ants searching for the shortest path during their foraging process. Other swarm-based metaheuristic algorithms include the Whale Optimization Algorithm (WOA) [], Cuckoo Search Algorithm (CSA) [], Grey Wolf Optimizer (GWO) [], Moth Flame Optimizer (MFO) [], Sparrow Search Algorithm (SSA) [], Dung Beetle Optimizer (DBO) [], Beluga Whale Optimization (BWO) [], Red Fox Optimization Algorithm (RFO) [], Sea-horse Optimizer (SHO) [], Coati Optimization Algorithm (COA) [], Spider Wasp Optimizer (SWO) [], Cleaner fish optimization (CFO) [], and so on.

Physics- or chemistry-based metaheuristic algorithms are based on the simulation of various laws or phenomena in physics or chemistry. One of the well-known algorithms in this category is Simulated Annealing (SA) [], which is inspired by the physical law of metal cooling and annealing. Other physics- or chemistry-based algorithms include the Gravitational Search Algorithm (GSA) [], Artificial Chemical Reaction Optimization (ACRO) [], Sine Cosine Optimization (SCA) [], Thermal Exchange Optimization (TEO) [], and the Kepler Optimization Algorithm (KOA) [].

Social- or human-based algorithms mainly imitate human behaviors. Teaching and Learning Based Optimization (TLBO) [] is a typical example of this category. It is derived from the behavior of teaching and learning. Other famous or recent social- or human-based algorithms include the Cultural Evolution Algorithm (CEA) [], Social Learning Optimization Algorithm (SLOA) [], Socio Evolution and Learning Optimization Algorithm (SELO) [], and Volleyball Premier League Algorithm (VPL) [].

Table 1.

Metaheuristic algorithms.


| Category | Algorithms | Authors | Year |
|---|---|---|---|
| Evolutionary | Genetic Algorithm (GA) [] | Holland | 1992 |
| | Genetic Programming (GP) [] | Koza et al. | 1994 |
| | Differential Evolution (DE) [] | Storn and Price | 1997 |
| | Evolutionary Programming (EP) [] | Yao et al. | 1999 |
| | Memetic Algorithms (MAs) [] | Moscato | 2003 |
| | Imperialist Competitive Algorithms (ICAs) [] | Atashpaz et al. | 2007 |
| | Biogeography-Based Optimization (BBO) [] | Simon | 2008 |
| Swarm | Particle Swarm Optimization (PSO) [] | Kennedy and Eberhart | 1995 |
| | Ant Colony Optimization (ACO) [] | Dorigo et al. | 1999 |
| | Cuckoo Search Algorithm (CSA) [] | Yang and Deb | 2009 |
| | Grey Wolf Optimizer (GWO) [] | Mirjalili et al. | 2014 |
| | Moth Flame Optimizer (MFO) [] | Mirjalili and Seyedali | 2015 |
| | Whale Optimization Algorithm (WOA) [] | Mirjalili et al. | 2016 |
| | Seagull Optimization Algorithm (SOA) [] | Dhiman and Kumar | 2019 |
| | Sparrow Search Algorithm (SSA) [] | Xue et al. | 2020 |
| | Red Fox Optimization Algorithm (RFO) [] | Połap et al. | 2021 |
| | Northern Goshawk Optimization (NGO) [] | Dehghani et al. | 2021 |
| | Pelican Optimization Algorithm (POA) [] | Trojovský and Dehghani | 2022 |
| | Golden Jackal Optimization (GJO) [] | Chopra and Ansari | 2022 |
| | Beluga Whale Optimization (BWO) [] | Zhong et al. | 2022 |
| | Sea-horse Optimizer (SHO) [] | Zhao et al. | 2023 |
| | Dung Beetle Optimizer (DBO) [] | Xue et al. | 2023 |
| | Coati Optimization Algorithm (COA) [] | Dehghani et al. | 2023 |
| | Spider Wasp Optimizer (SWO) [] | Basset et al. | 2023 |
| | Cleaner fish optimization (CFO) [] | Zhang et al. | 2024 |
| Physics and Chemistry | Simulated Annealing (SA) [] | Kirkpatrick et al. | 1983 |
| | Magnetic Optimization Algorithm (MOA) [] | Tayarani et al. | 2008 |
| | Gravitational Search Algorithm (GSA) [] | Rashedi et al. | 2009 |
| | Artificial Chemical Reaction Optimization (ACRO) [] | Alatas | 2011 |
| | Lightning Search Algorithm (LSA) [] | Mirjalili | 2015 |
| | Sine Cosine Optimization (SCA) [] | Tanyildizi et al. | 2016 |
| | Golden Sine Algorithm (GSA) [] | Kaveh et al. | 2017 |
| | Thermal Exchange Optimization (TEO) [] | Abualigah et al. | 2017 |
| | Kepler Optimization Algorithm (KOA) [] | Basset et al. | 2023 |
| Human | Teaching and Learning Based Optimization (TLBO) [] | Rao et al. | 2011 |
| | Cultural Evolution Algorithm (CEA) [] | Kuo and Lin | 2013 |
| | Election Algorithm (EA) [] | Emami et al. | 2015 |
| | Social Learning Optimization Algorithm (SLOA) [] | Liu et al. | 2017 |
| | Socio Evolution and Learning Optimization Algorithm (SELO) [] | Kumar et al. | 2018 |
| | Volleyball Premier League Algorithm (VPL) [] | Moghdani et al. | 2018 |

As summarized above, a large number of metaheuristic algorithms have been developed. However, the No Free Lunch (NFL) theorem states that no single optimization algorithm can solve all optimization problems well []. As real-world problems become increasingly challenging, existing optimization algorithms need to be improved to obtain more effective and efficient algorithms for them. Northern Goshawk Optimization (NGO) [], inspired by the predatory behavior of the northern goshawk, gained considerable attention shortly after it was proposed. For example, Chang et al. [] used NGO to optimize the life-cycle costs of the power grid. El-Dabah et al. [] utilized NGO to optimize the parameters in PV modules. Wu et al. [] proposed a deep learning model, CNN-LSTM, for predicting PV power, where NGO was used to optimize CNN-LSTM. Although the NGO algorithm has been applied successfully, it still has some drawbacks: (1) the initial population is randomly generated and lacks diversity; (2) it converges slowly; (3) it easily falls into local optima.

To address the issues in the original NGO algorithm, this paper adds three strategies to it and proposes a Multi-Strategy Improved Northern Goshawk Optimization, called MSINGO. Then, the improved MSINGO is used to optimize six engineering problems.

The main contributions of this paper are summarized as follows:

- (1) To enhance the diversity of the initial population, this paper adds the cubic mapping strategy to the initialization phase of the original NGO algorithm;

- (2) To prevent NGO from being trapped in local optima, a novel weighted stochastic difference mutation strategy is introduced in the exploration phase, which helps NGO jump out of local optima;

- (3) To accelerate convergence, a weighted sine and cosine optimization strategy is added to the exploitation phase of the original NGO algorithm;

- (4) To evaluate the performance of MSINGO, we compare it with five highly cited and six recently proposed metaheuristic algorithms on the CEC2017 test functions and six engineering design problems.

The rest of this paper is structured as follows. Section 2 briefly introduces the original NGO. Section 3 describes the improved MSINGO in detail. Section 4 shows experiments on 29 benchmark functions on CEC2017. Section 5 evaluates the performance of improved MSINGO in solving six engineering problems. Section 6 summarizes this paper.

2. Overview of Original NGO

The mathematical model of the NGO algorithm is briefly presented in this section. NGO mainly simulates the predatory process of the northern goshawk. The algorithm involves three main phases: initialization, exploration, and exploitation, which are described in detail below.

2.1. Initialization

NGO is a population-based algorithm. Each individual northern goshawk in the population is considered as a candidate solution. In the initial stage of the NGO algorithm, candidate solutions are randomly generated, as shown in Equation (1). All candidate solutions form the population matrix, as shown in Equation (2).

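$$x_{i,j} = lb_j + r\,(ub_j - lb_j) \tag{1}$$

$$X = \begin{bmatrix} X_1 \\ \vdots \\ X_i \\ \vdots \\ X_N \end{bmatrix}_{N \times dim} = \begin{bmatrix} x_{1,1} & \cdots & x_{1,dim} \\ \vdots & \ddots & \vdots \\ x_{N,1} & \cdots & x_{N,dim} \end{bmatrix} \tag{2}$$

where $X$ represents the population matrix, storing the positions of all candidate solutions; $X_i$ is the position of the $i$th candidate solution, which is updated during optimization; $x_{i,j}$ indicates the $j$th dimension of the $i$th solution; $r$ is a random real number in the interval [0, 1]; $lb_j$ and $ub_j$ are the lower and upper bounds of the $j$th variable, respectively; $N$ denotes the size of the population; and $dim$ represents the dimension to be optimized.

In the optimization process, the objective function is used to calculate the fitness value of each candidate solution. All fitness values are stored in the fitness matrix $F(X)$, as shown in Equation (3). The candidate solution with the minimum fitness is the optimal solution.

$$F(X) = \begin{bmatrix} F_1 = F(X_1) \\ \vdots \\ F_i = F(X_i) \\ \vdots \\ F_N = F(X_N) \end{bmatrix}_{N \times 1} \tag{3}$$

where $F$ is the objective function, and $F_i$ is the fitness value obtained by evaluating the objective function on the $i$th candidate solution.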
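The initialization and fitness evaluation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names, the sphere objective, and the bounds of ±100 are assumptions chosen only for the example.

```python
import random

def init_population(n, dim, lb, ub, rng):
    """Uniform random initialization: x[i][j] = lb[j] + r * (ub[j] - lb[j])."""
    return [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(dim)]
            for _ in range(n)]

def evaluate(population, objective):
    """Fitness values: one objective value per candidate solution."""
    return [objective(x) for x in population]

def sphere(x):
    # Illustrative objective (an assumption; not one of the CEC2017 functions).
    return sum(v * v for v in x)

rng = random.Random(0)
lb, ub = [-100.0] * 5, [100.0] * 5
X = init_population(30, 5, lb, ub, rng)
F = evaluate(X, sphere)
# The candidate with the minimum fitness is the current best solution.
best_index = min(range(len(F)), key=F.__getitem__)
```

Passing an explicit `random.Random` instance keeps independent runs reproducible, which matters when results are averaged over repeated runs.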

2.2. Exploration Phase

The exploration phase of NGO imitates a northern goshawk randomly selecting prey in the search space and attacking it. In this phase, the mathematical model is shown in Equations (4) and (5).

$$X_i^{t+1} = \begin{cases} X_i^t + r\,(X_k^t - I\,X_i^t), & F(X_k^t) < F(X_i^t) \\ X_i^t + r\,(X_i^t - X_k^t), & \text{otherwise} \end{cases} \tag{4}$$

$$X_i^{t+1} = \begin{cases} X_i^{t+1}, & F(X_i^{t+1}) < F(X_i^t) \\ X_i^t, & \text{otherwise} \end{cases} \tag{5}$$

where $k$ represents a random natural number in the interval $[1, N]$; $X_k^t$ is the position of the $k$th solution in the $t$th iteration; $X_i^t$ is the position of the $i$th solution in the $t$th iteration; $X_i^{t+1}$ is the position of the $i$th solution in iteration $t+1$; $F(X_k^t)$ is the fitness value of the $k$th solution in the $t$th iteration; $F(X_i^t)$ is the fitness value of the $i$th solution in the $t$th iteration; $F(X_i^{t+1})$ is the fitness value of the $i$th solution in iteration $t+1$; $r$ is a random number in the interval $[0, 1]$; and $I$ is a random number equal to 1 or 2.
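A minimal sketch of one exploration pass follows, assuming the standard NGO update: an individual moves toward the randomly selected prey when the prey is fitter and away from it otherwise, and the new position is kept only if it improves the fitness. The helper names, the per-dimension random numbers, the clipping to the bounds, and the sphere objective are assumptions made for illustration.

```python
import random

def sphere(x):
    # Illustrative objective (assumption), minimized at the origin.
    return sum(v * v for v in x)

def exploration_step(X, F, objective, lb, ub, rng):
    """One exploration pass: attack a randomly selected prey, keep improvements."""
    n, dim = len(X), len(X[0])
    for i in range(n):
        k = rng.randrange(n)        # index of the randomly selected prey
        I = rng.choice((1, 2))      # I is 1 or 2
        if F[k] < F[i]:             # prey is fitter: move toward it
            cand = [X[i][j] + rng.random() * (X[k][j] - I * X[i][j])
                    for j in range(dim)]
        else:                       # prey is not fitter: move away from it
            cand = [X[i][j] + rng.random() * (X[i][j] - X[k][j])
                    for j in range(dim)]
        # Keep the candidate inside the search bounds (assumed handling).
        cand = [min(max(v, lb[j]), ub[j]) for j, v in enumerate(cand)]
        f_cand = objective(cand)
        if f_cand < F[i]:           # greedy selection
            X[i], F[i] = cand, f_cand
    return X, F

rng = random.Random(1)
lb, ub = [-10.0] * 4, [10.0] * 4
X = [[rng.uniform(-10, 10) for _ in range(4)] for _ in range(8)]
F = [sphere(x) for x in X]
F_before = F[:]
X, F = exploration_step(X, F, sphere, lb, ub, rng)
```

Because of the greedy selection, the fitness of each individual can only stay the same or decrease after a pass.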

2.3. Exploitation Phase

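The exploitation phase of the NGO algorithm mimics the behavior of a northern goshawk chasing and hunting prey. In this phase, the positions are updated using Equations (6)–(8).

$$X_i^{t+1} = X_i^t + R\,(2r - 1)\,X_i^t \tag{6}$$

$$R = 0.02\left(1 - \frac{t}{T}\right) \tag{7}$$

$$X_i^{t+1} = \begin{cases} X_i^{t+1}, & F(X_i^{t+1}) < F(X_i^t) \\ X_i^t, & \text{otherwise} \end{cases} \tag{8}$$

where $t$ is the current iteration number, $T$ is the maximum number of iterations, and $r$ is a random number in the interval $[0, 1]$.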
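The exploitation phase can be sketched in the same style. The sketch assumes the standard NGO form, in which each individual is perturbed within a radius R = 0.02(1 − t/T) that shrinks as the iterations proceed, followed by greedy selection. The function names, the bound clipping, and the sphere objective are illustrative assumptions.

```python
import random

def sphere(x):
    # Illustrative objective (assumption), minimized at the origin.
    return sum(v * v for v in x)

def exploitation_step(X, F, objective, lb, ub, t, T, rng):
    """One exploitation pass: small local perturbation, keep improvements."""
    R = 0.02 * (1 - t / T)          # hunting radius shrinks linearly with t
    for i in range(len(X)):
        cand = [x + R * (2 * rng.random() - 1) * x for x in X[i]]
        # Keep the candidate inside the search bounds (assumed handling).
        cand = [min(max(v, lb[j]), ub[j]) for j, v in enumerate(cand)]
        f_cand = objective(cand)
        if f_cand < F[i]:           # greedy selection
            X[i], F[i] = cand, f_cand
    return X, F

rng = random.Random(2)
lb, ub = [-10.0] * 4, [10.0] * 4
T = 50
X = [[rng.uniform(-10, 10) for _ in range(4)] for _ in range(8)]
F = [sphere(x) for x in X]
F_start = F[:]
for t in range(1, T + 1):
    X, F = exploitation_step(X, F, sphere, lb, ub, t, T, rng)
```

Since the perturbation is proportional to the current position and at most 2% of it, this phase refines a solution locally and cannot by itself leave a basin of attraction.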

3. Our Proposed MSINGO

We propose a Multi-Strategy Improved NGO (MSINGO) by adding three strategies to the original NGO algorithm. This section introduces the three strategies (i.e., cubic mapping, weighted stochastic difference mutation, and weighted sine and cosine optimization) and then the proposed MSINGO as a whole.

3.1. Cubic Mapping Strategy

In the original NGO algorithm, the initial population is randomly generated, which may lead to insufficient population diversity. Many researchers have shown that chaotic mapping can improve population diversity, making it easier for an optimization algorithm to find the global optimal solution [,]. Cubic mapping is a common form of chaotic mapping []. We use it to initialize the population, which enhances the population’s diversity. The mathematical model of cubic mapping is shown in Equation (9).

$$y_{i+1} = \rho\,y_i\,(1 - y_i^2) \tag{9}$$

where $\rho$ is a parameter set to 2.595; $y_i$ is the $i$th chaotic value, with $y_0$ = 0.3; $N$ denotes the population size; and $dim$ indicates the dimension of the problem variables to be optimized.

The new population initialization formula with the cubic mapping strategy is shown in Equation (10).
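The cubic-mapping initialization can be illustrated with a short sketch. It assumes the standard cubic map y ← ρ·y·(1 − y²) with the stated values ρ = 2.595 and starting value 0.3, for which the sequence stays inside (0, 1). It also assumes that the chaotic values fill the population row by row and are scaled linearly into the bounds. The function names are hypothetical, and the exact form of Equation (10) may differ.

```python
def cubic_sequence(length, rho=2.595, y0=0.3):
    """Iterate the cubic map y <- rho * y * (1 - y^2), starting from y0."""
    y, out = y0, []
    for _ in range(length):
        y = rho * y * (1.0 - y * y)
        out.append(y)
    return out

def cubic_init(n, dim, lb, ub, rho=2.595, y0=0.3):
    """Scale one long chaotic sequence into the bounds, row by row (assumed layout)."""
    seq = cubic_sequence(n * dim, rho, y0)
    return [[lb[j] + seq[i * dim + j] * (ub[j] - lb[j]) for j in range(dim)]
            for i in range(n)]

lb, ub = [-100.0] * 3, [100.0] * 3
population = cubic_init(30, 3, lb, ub)
```

Unlike pseudo-random initialization, this procedure is fully deterministic for a given ρ and starting value, so every run begins from the same population unless the starting value is varied.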

3.2. Weighted Stochastic Difference Mutation Strategy

In the exploration phase of the original NGO algorithm, individuals in the population are updated by randomly generating new individuals nearby. As a result, new individuals may be close to the old ones, which can cause NGO to fall into local optima. A mutation strategy can help the algorithm escape from local optima. In this paper, we propose a novel mutation strategy, named the weighted stochastic difference mutation strategy, to help NGO jump out of local optima. The strategy consists of two parts: a differential variation value and its weight.

The differential variation value is calculated with Equation (11).

where represents the current optimal position; denotes a randomly selected position; and are random numbers in the interval .

The weight is generated using the weighted Levy flight technique [], as shown in Equations (12)–(15).

Among them, is the current number of iterations, and is the maximum number of iterations; is 0.05; is 1.5; and are normally distributed random numbers; is the gamma function.
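For readers unfamiliar with Levy flights, the following sketch draws a Levy-distributed step with Mantegna's algorithm, a common way to generate such steps from two normally distributed random numbers and the gamma function. It uses the exponent 1.5 mentioned above. It is not the weight formula of Equations (12)–(15), which may additionally depend on the iteration counter and a scaling constant; the function names are hypothetical.

```python
import math
import random

def levy_sigma(beta=1.5):
    """Scale of the numerator normal variable in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_step(rng, beta=1.5):
    """Draw one Levy-distributed step: u / |v|^(1/beta)."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:                 # guard against a zero denominator
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)

rng = random.Random(0)
steps = [levy_step(rng) for _ in range(1000)]
```

Most steps are small, but the heavy tail occasionally produces a very large one, which is what allows a mutation weighted this way to move an individual far from its current region.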

Then, the weighted differential variation value is calculated with Equation (16).

Finally, we replaced the original Equation (4) in the NGO with the new Equation (17).

3.3. Weighted Sine and Cosine Optimization Strategy

The convergence speed of the original algorithm is slow. To solve this problem, we add the weighted sine and cosine optimization strategy to the exploitation phase. The mathematical model of the sine and cosine optimization strategy is shown in Equation (18).

where ; represents a random number in the interval ; is a random number in the interval , ; is a random number between 0 and 1.

Finally, we use Equation (19) instead of Equation (6) as the position updating formula in the exploitation phase.
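As background for this strategy, the sketch below shows the position update of the original sine cosine algorithm, on which the strategy is based. It is not the weighted variant of Equations (18) and (19): the weight is omitted, and the constant a = 2, the parameter ranges, and the function name are the conventional choices of the original algorithm rather than values confirmed by this paper.

```python
import math
import random

def sca_update(x, p, t, T, rng, a=2.0):
    """Standard sine cosine update of position x toward destination p."""
    r1 = a - t * a / T              # amplitude decreases linearly to zero
    new = []
    for xj, pj in zip(x, p):
        r2 = rng.uniform(0.0, 2.0 * math.pi)   # direction of the move
        r3 = rng.uniform(0.0, 2.0)             # random weight on the destination
        r4 = rng.random()                      # switch between sine and cosine
        trig = math.sin(r2) if r4 < 0.5 else math.cos(r2)
        new.append(xj + r1 * trig * abs(r3 * pj - xj))
    return new

rng = random.Random(0)
x = [1.0, -2.0, 3.0]
best = [0.0, 0.0, 0.0]
moved = sca_update(x, best, t=10, T=100, rng=rng)
```

Early in the run the amplitude `r1` is large and the update explores widely around the destination; as `t` approaches `T` the amplitude vanishes and the position settles.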

3.4. The Detail of Our Proposed MSINGO

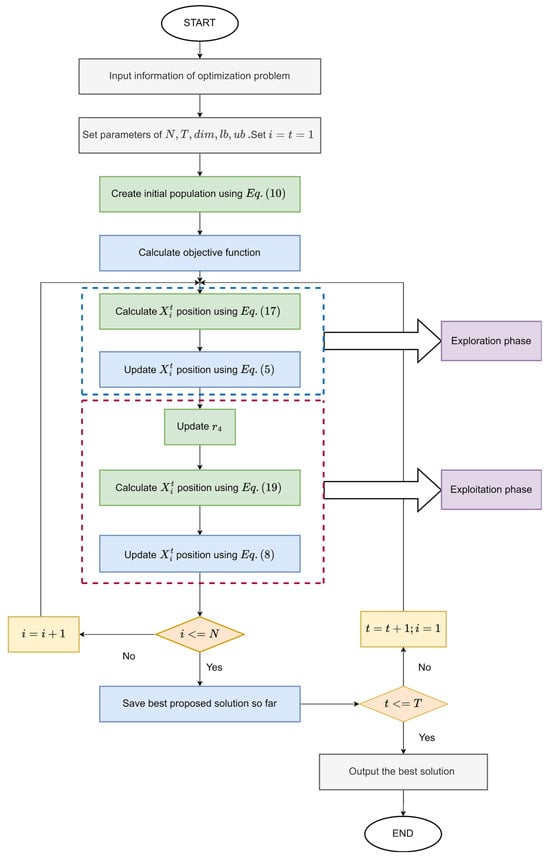

The pseudo-code of the proposed MSINGO algorithm is shown in Algorithm 1. The flow chart of MSINGO is shown in Figure 1, where the green parts are our improvements.

Figure 1.

The flow chart of the MSINGO algorithm.

| Algorithm 1. Pseudo-code of the MSINGO algorithm | |
|---|---|
| Input: | The initial parameters of MSINGO, including the maximum number of iterations $T$, the number of population members $N$, the dimension of problem variables $dim$, and the lower bound $lb$ and upper bound $ub$ of the problem variables. |
| Output: | Optimal fitness value |
| 1: | Set $t = 1$ |
| 2: | Create initial population using Equation (10) |
| 3: | While $t \le T$ Do |
| 4: | While $i \le N$ Do |
| 5: | Exploration phase: |
| 6: | Calculate the position of the $i$th solution using Equation (17) |
| 7: | Update the position of the $i$th solution using Equation (5) |
| 8: | Exploitation phase: |
| 9: | Calculate $R$ using Equation (7) |
| 10: | Calculate the position of the $i$th solution using Equation (19) |
| 11: | Update the position of the $i$th solution using Equation (8) |
| 12: | End While |
| 13: | Save best proposed solution so far |
| 14: | $t = t + 1$ |
| 15: | End While |
| 16: | Output the best solution |

3.5. Time Complexity Analysis

The time complexity of the MSINGO algorithm consists of three parts: population initialization, the exploration phase, and the exploitation phase. The primary parameters that affect time complexity are the maximum number of iterations T, the dimension to be optimized dim, and the size of the population N. First, the time complexity of population initialization is $O(N \times dim)$. Second, in each iteration, every individual is updated in both the exploration and exploitation phases, so the time complexity of each of the two phases is $O(T \times N \times dim)$. Consequently, the total time complexity of MSINGO is $O(N \times dim \times (1 + 2T))$.
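The total can be checked by summing the three contributions directly: one initialization pass, followed by T iterations that each run two phases over all N individuals. The sketch assumes that updating one individual costs $O(dim)$ and leaves the cost of evaluating the objective function aside:

$$
O(N \cdot dim) + T \cdot \big( O(N \cdot dim) + O(N \cdot dim) \big) = O\big(N \cdot dim \cdot (1 + 2T)\big) = O(T \cdot N \cdot dim).
$$

Under these assumptions the added strategies change only constant factors, so MSINGO has the same asymptotic cost as an algorithm with one initialization pass and two per-iteration phases.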

4. Experimental Results and Analysis

In this section, we evaluate the performance of the proposed MSINGO on the CEC2017 test functions. The experiments consist of the following four parts: (1) the impact of the three strategies on NGO; (2) qualitative analysis of the MSINGO algorithm; (3) comparison with 11 well-known metaheuristic algorithms; and (4) scalability analysis of the MSINGO algorithm. All experiments were run on a computer with an Intel(R) Core(TM) i7-8565U 1.80 GHz CPU (Intel Corporation, Santa Clara, CA, USA), and all algorithms were implemented in Python 3.7.

4.1. Benchmark Functions

To evaluate the performance of the MSINGO algorithm, we conducted experiments on 29 benchmark functions of IEEE CEC2017. The CEC2017 test functions contain 2 unimodal functions (C1–C2) to test the exploitation capability, 7 multimodal functions (C3–C9) to test the exploration capability, and 10 hybrid functions (C10–C19) and 10 composition functions (C20–C29) to test the algorithm’s ability to avoid local optima. The detailed descriptions of these benchmark functions, including the range (the boundary of the design variables) and the optimal value of each function, are shown in Table 2.

Table 2.

Details of the CEC2017 benchmark functions.

4.2. Competitor Algorithms and Parameters Setting

MSINGO is compared with 5 highly cited algorithms, including DE, MFO, WOA, SCA, and SOA, and 6 recently proposed metaheuristic algorithms, including SSA, DBO, POA, BWO, GJO, and NGO. The parameter settings of all algorithms are shown in Table 3. The population size and the maximum number of iterations of all algorithms are set to 30 and 500, respectively. Furthermore, in order to decrease the impact of the random factor, each algorithm was executed independently 30 times on each test function.

Table 3.

Parameter settings of the competitors and proposed MSINGO.

4.3. Influence of the Three Mechanisms

This section combines the cubic mapping strategy (C), weighted stochastic difference mutation strategy (WS), and weighted sine and cosine optimization strategy (WSC) with NGO, and analyzes their influence on improving NGO’s performance. The details of these different NGO algorithms are shown in Table 4, where ‘1’ indicates that the strategy is integrated with NGO and ‘0’ indicates the opposite.

Table 4.

Various NGO algorithms from three mechanisms.

The seven strategy-enhanced NGO variants and the original NGO are tested on the CEC2017 test functions, with the dimension to be optimized (dim) set to 30. We calculated the average (Ave) and standard deviation (Std) of the results and highlighted the best algorithm in bold. The results are presented in Table 5.

Table 5.

Experimental results of strategy comparison on CEC2017 test functions.

Based on the average fitness (Ave) of each algorithm in Table 5, the Friedman test was carried out to rank all the algorithms. The ranking of each algorithm is shown in Table 6, where average rank represents the average ranking of each algorithm, overall rank represents the final ranking of each algorithm, and +/−/= represents the number of test functions on which MSINGO performs better than, worse than, or equal to the comparison algorithm. The smaller the values of average rank and overall rank, the better the performance of the algorithm.
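The average rank used in the Friedman test can be computed as sketched below: on each function the algorithms are ranked by Ave (rank 1 for the smallest value, tied values sharing the mean of their ranks), and the ranks are then averaged over all functions. The function names are hypothetical and the small table of values is invented purely for illustration; it is not taken from Table 5.

```python
def average_ranks(values):
    """Ranks (1 = smallest); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        for q in range(pos, end + 1):
            ranks[order[q]] = (pos + end) / 2 + 1
        pos = end + 1
    return ranks

def friedman_average_rank(table):
    """table[f][a] is the Ave of algorithm a on function f; returns mean rank per algorithm."""
    per_function = [average_ranks(row) for row in table]
    return [sum(r[a] for r in per_function) / len(table)
            for a in range(len(table[0]))]

# Invented Ave values: 3 functions (rows) x 3 algorithms (columns).
toy_ave = [[1.0, 2.0, 3.0],
           [2.0, 1.0, 3.0],
           [1.0, 1.0, 5.0]]
mean_ranks = friedman_average_rank(toy_ave)
```

The overall rank is then obtained by sorting the algorithms by their average rank in ascending order.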

Table 6.

Friedman test results of different strategies.

According to the average rank in Table 6, the eight NGO variants are ranked as follows: MSINGO > WS_NGO > C_WS_NGO > WS_WSC_NGO > C_WSC_NGO > WSC_NGO > NGO > C_NGO. It can be seen that MSINGO performs better than WS_NGO on only 15 functions, indicating that the weighted stochastic difference mutation (WS) strategy plays the crucial role in improving NGO. However, MSINGO has a better average rank than WS_NGO, indicating that the other two strategies also play an auxiliary role in improving NGO. Therefore, the performance of the algorithm improves the most when the three strategies are added simultaneously.

4.4. Qualitative Analysis

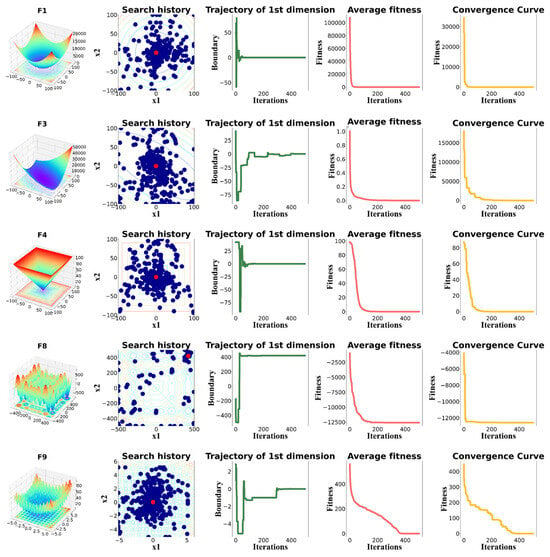

The qualitative analysis of MSINGO on common unimodal and multimodal functions is shown in Figure 2, and complete details of these functions are given in []. In the qualitative analysis, four well-known indicators are used to intuitively analyze the performance of the MSINGO algorithm: (1) the search history; (2) the trajectory of the first northern goshawk in the first dimension; (3) the average fitness of the northern goshawk population; and (4) the convergence curve of the best candidate solution.

Figure 2.

Qualitative results of MSINGO, including (a) function’s landscape; (b) search history; (c) trajectory of 1st dimension; (d) average fitness; and (e) convergence curve.

The search history shows the position of each northern goshawk in the search space during the iterations. As can be seen in Figure 2b, the red dot in the search history represents the location of the global optimum and the blue dots represent the locations of the candidate best solutions during the iterations. It can be easily seen from the search history that MSINGO can search globally and converge quickly once finding the main optimal area, indicating MSINGO has a good ability to perform global exploration and local exploitation.

The trajectory of the first dimension refers to the position changes of the first northern goshawk in the first dimension, which indicates the primary exploratory behavior of MSINGO. As shown in Figure 2c, in the trajectory diagram of the first northern goshawk, the position of the first northern goshawk experienced rapid oscillations in the initial iterations, indicating that the MSINGO algorithm can quickly identify the main optimal region. In the subsequent iterations, there were slight oscillations, indicating that MSINGO searched around the optimal position and converged to it.

The average fitness curves and convergence curves for different benchmark functions are provided in Figure 2d,e. Both curves decline rapidly in the initial iterations, indicating that MSINGO converges quickly.

4.5. Comparison with 11 Well-Known Metaheuristic Algorithms

In qualitative analysis, we intuitively demonstrated the exploitation and exploration capabilities of the proposed MSINGO algorithm. In this section, we quantitatively compared our proposed MSINGO algorithm with 11 metaheuristic algorithms (5 highly cited and 6 recently proposed) on CEC2017 test functions. The comparison algorithms are described in Section 4.2.

Comparative experiments include the following: (1) comparison of exploitation capabilities on unimodal benchmark functions (C1–C2); (2) comparison of exploration abilities on multimodal benchmark functions (C3–C9); (3) comparison of local optimal avoidance abilities on hybrid functions (C10–C19) and composition functions (C20–C29).

Furthermore, the Friedman test was used to evaluate the overall performance of the 12 metaheuristic algorithms. The Wilcoxon signed-rank test was used to verify whether the difference between two sets of solutions is statistically significant.

In these comparative experiments, the dimension to be optimized (dim) was set to 30. For a fair comparison, each algorithm was run independently 30 times on each benchmark function, and the Ave, Std, and Rank were calculated, where Ave and Std indicate the mean and standard deviation of the optimal values, and Rank gives the algorithm’s ranking. A lower Ave indicates better optimization performance, and a lower Std indicates more stable optimization performance. The algorithm with the best performance is highlighted in bold.
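The protocol of repeated independent runs can be sketched as a small harness that runs an optimizer with a different seed each time and reports Ave and Std of the best values found. Everything here is illustrative: `random_search` is a stand-in optimizer rather than any of the compared algorithms, the sphere objective is not a CEC2017 function, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import random
import statistics

def sphere(x):
    # Illustrative objective (assumption), minimized at the origin.
    return sum(v * v for v in x)

def random_search(objective, dim, lb, ub, pop_size, max_iter, rng):
    """Stand-in optimizer; any metaheuristic with this signature fits the harness."""
    best = float("inf")
    for _ in range(pop_size * max_iter):
        x = [rng.uniform(lb, ub) for _ in range(dim)]
        best = min(best, objective(x))
    return best

def summarize(optimizer, objective, dim, lb, ub, runs=30, pop_size=30, max_iter=500):
    """Run the optimizer `runs` times with distinct seeds; return (Ave, Std)."""
    results = [optimizer(objective, dim, lb, ub, pop_size, max_iter, random.Random(seed))
               for seed in range(runs)]
    return statistics.mean(results), statistics.stdev(results)

# Reduced budget so the example finishes quickly.
ave, std = summarize(random_search, sphere, dim=5, lb=-100.0, ub=100.0,
                     runs=5, pop_size=10, max_iter=20)
```

Giving every algorithm the same population size, iteration budget, and number of runs is what makes the resulting Ave and Std values comparable across algorithms.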

Below is a detailed discussion of these comparative experiments.

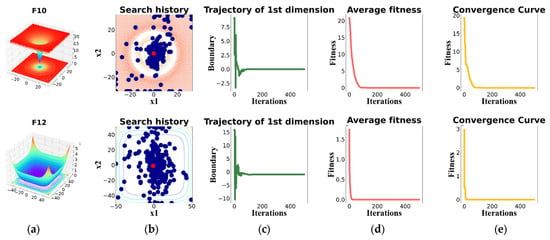

4.5.1. Exploitation Ability Analysis

To test the exploitation ability of MSINGO, we compare it with the other 11 metaheuristic algorithms on two unimodal functions (C1–C2). The quantitative results on these two unimodal functions are shown in Table 7. It can be seen from Table 7 that MSINGO ranks first on both unimodal functions, indicating that MSINGO has the best exploitation ability among all 12 algorithms.

Table 7.

Comparison results on unimodal functions (C1–C2, dimension = 30).

The convergence curves of the 12 metaheuristic algorithms on C1 and C2 are shown in Figure 3. MSINGO has the fastest convergence speed and the minimum fitness value, which shows that it is the most competitive algorithm on these unimodal functions.

Figure 3.

Convergence curves of different algorithms on the unimodal functions (C1–C2, dimension = 30).

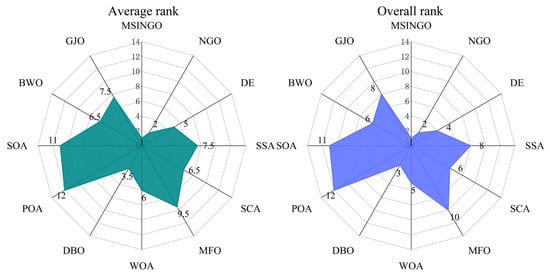

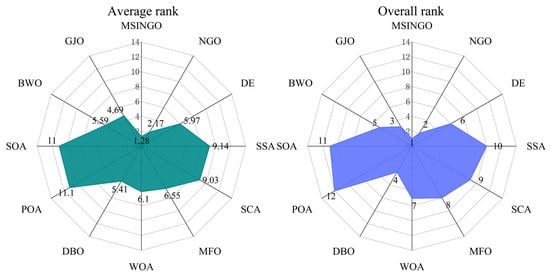

According to the Rank score in Table 7, the Friedman test was performed, and the result is shown in Figure 4, where average rank represents the average ranking of all algorithms, and overall rank represents the final ranking of all algorithms in unimodal functions. The smaller the average rank and overall rank, the better the performance of the algorithm. As can be seen from Figure 4, MSINGO ranks first, indicating that it is superior to other comparison algorithms in exploitation ability.

Figure 4.

Radar maps of Friedman ranking of different algorithms on unimodal functions.

The p-values obtained via the Wilcoxon signed-rank test between MSINGO and each of the comparison algorithms are presented in Table 8. A p-value less than 0.05 indicates a significant difference between the comparison algorithm and MSINGO; otherwise, there is no significant difference. As seen in Table 8, on the two unimodal functions, all p-values are less than 0.05, indicating that MSINGO is significantly better than the other comparison algorithms.
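For reference, a two-sided Wilcoxon signed-rank test can be sketched with the normal approximation as follows. This is a simplified illustration with no tie or continuity correction, and the function names are hypothetical. In practice a statistics library would normally be used, and how the paired samples were formed in the experiments (for example, the 30 run results of two algorithms on one function) is an assumption here.

```python
import math

def _average_ranks(values):
    """Ranks (1 = smallest); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        for q in range(pos, end + 1):
            ranks[order[q]] = (pos + end) / 2 + 1
        pos = end + 1
    return ranks

def wilcoxon_signed_rank(a, b):
    """Return (W, p) for paired samples a and b, using the normal approximation."""
    diffs = [x - y for x, y in zip(a, b) if x != y]   # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    ranks = _average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))              # two-sided p-value
    return w, p

# Invented paired results: every value in `a` exceeds its partner in `b`.
a = [float(i + 1) for i in range(30)]
b = [0.0] * 30
w, p = wilcoxon_signed_rank(a, b)
```

A p-value below 0.05 only indicates that the two samples differ; which algorithm is better must still be read from the Ave values.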

Table 8.

Results for p-value from Wilcoxon signed-rank test on unimodal functions (C1–C2, dimension = 30).

The results in this section showed that the MSINGO algorithm has the best exploitation ability among all 12 algorithms.

4.5.2. Exploration Ability Analysis

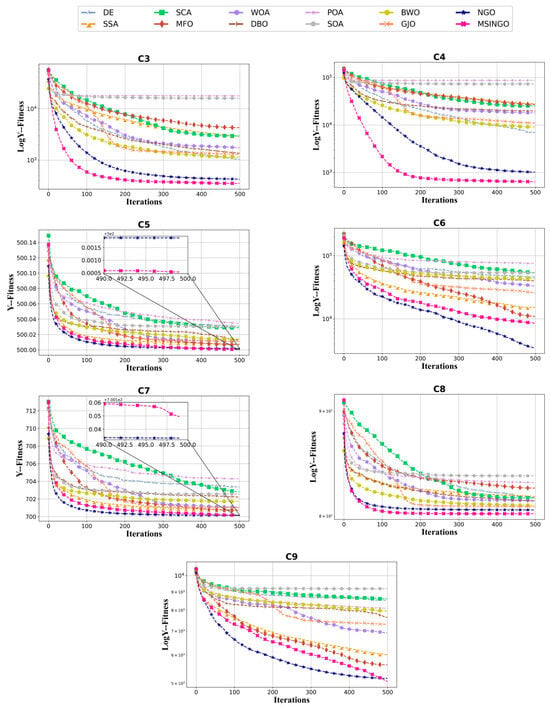

In this section, we tested the exploration ability of MSINGO on seven multimodal functions (C3–C9). Quantitative analysis is presented in Table 9, where MSINGO ranks first on five functions (C3, C4, C5, C8, and C9) and second on two functions (C6 and C7), indicating that MSINGO’s exploration ability is superior to comparative algorithms in the vast majority of cases.

Table 9.

Comparison results on multimodal functions (C3–C9, dimension = 30).

The convergence curves for C3 to C9 are shown in Figure 5. In terms of convergence speed, MSINGO ranks first on three functions (C3, C4, and C8) and second on the other four functions. In terms of the optimal solution, MSINGO attains the smallest value on five functions (C3, C4, C5, C8, and C9) and the second smallest on two functions (C6 and C7).

Figure 5.

Convergence curves of different algorithms on the multimodal functions (C3–C9, dimension = 30).

Friedman test results for these seven multimodal functions are shown in Figure 6, where MSINGO ranks first, indicating it has the best exploration ability among the 12 algorithms.

Figure 6.

Radar maps of Friedman ranking of different algorithms on multimodal functions.

Furthermore, Table 10 shows the p-values of the Wilcoxon signed-rank test between MSINGO and the 11 other algorithms. The vast majority of p-values are less than 0.05, indicating that MSINGO differs significantly from the comparison algorithms in exploration capability in most cases.

Table 10.

Results for p-value from Wilcoxon signed-rank test on multimodal functions (C3-C9, dimension = 30).

The results of this section indicate that MSINGO is superior to other comparison algorithms in exploration ability.

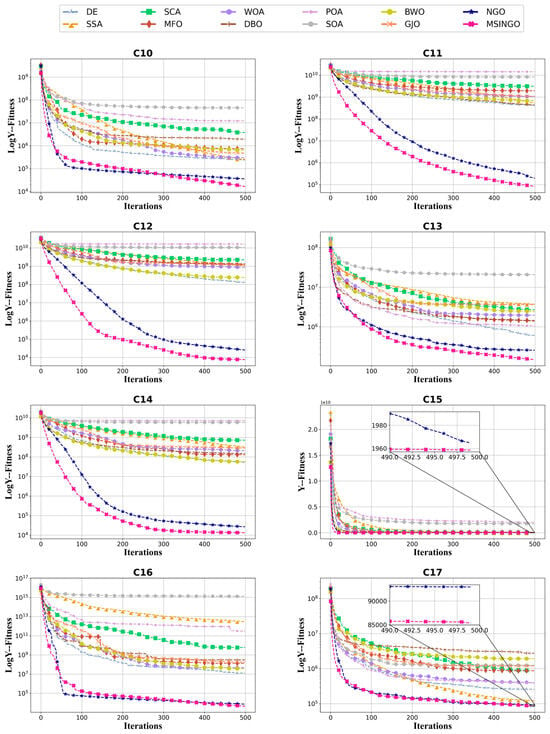

4.5.3. Local Optimal Avoidance Ability Analysis

In this section, 10 hybrid functions (C10–C19) and 10 composition functions (C20–C29) are selected to verify the ability of the 12 metaheuristic algorithms to avoid local optima. Quantitative statistical results are presented in Table 11 and Table 12. It can be seen that MSINGO ranks first on nineteen functions (C10 to C17 and C19 to C29) and second on C18. These results indicate that MSINGO has superior performance in avoiding local optima compared to the 11 other metaheuristic algorithms.

Table 11.

Comparison results on hybrid functions (C10–C19, dimension = 30).

Table 12.

Comparison results on composition functions (C20–C29, dimension = 30).

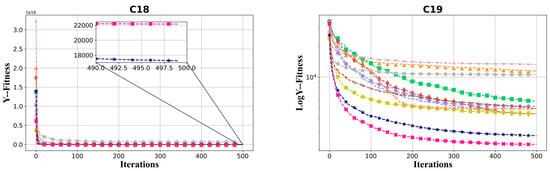

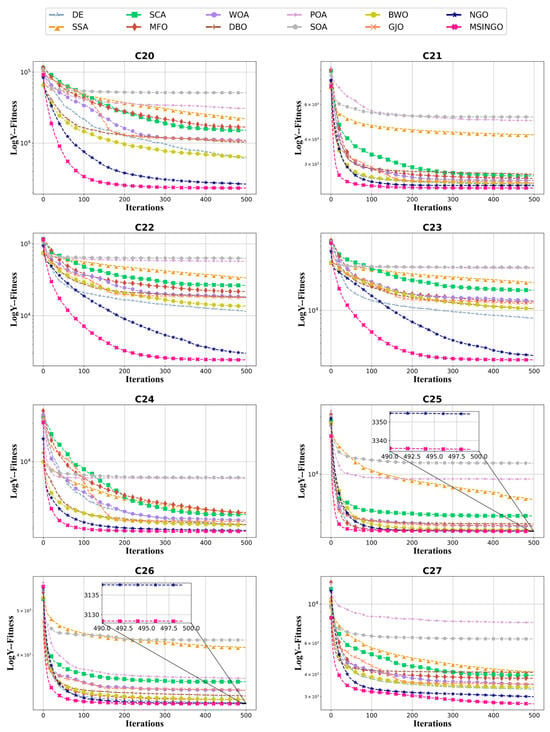

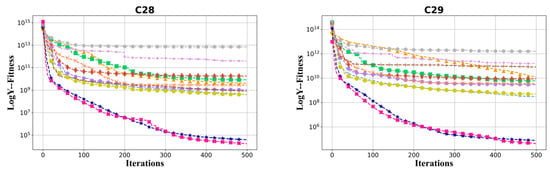

The convergence curves of hybrid functions and composition functions are shown in Figure 7 and Figure 8, respectively. For these 20 hybrid and composition functions, MSINGO ranks first on 19 functions (C10–C17 and C19–C29) and second on C18 in convergence speed and optimal solution.

Figure 7.

Convergence curves of different algorithms on hybrid functions (C10–C19, dimension = 30).

Figure 8.

Convergence curves of different algorithms on composition functions (C20–C29, dimension = 30).

The results of the Friedman test for 20 hybrid and composition functions are shown in Figure 9. It can be seen that MSINGO ranks first among 12 algorithms.

Figure 9.

Radar maps of Friedman ranking of different algorithms on hybrid functions and composition functions.

The p-values of the Wilcoxon signed-rank tests on hybrid functions (C10–C19) and composition functions (C20–C29) are shown in Table 13. The majority of the p-values are less than 0.05, indicating a significant difference between MSINGO and the majority of algorithms in terms of avoiding local optima.

Table 13.

The p-values from Wilcoxon signed-rank test on hybrid and composition functions (C10–C29, dimension = 30).
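To make the significance test concrete, here is a small Python sketch of a two-sided Wilcoxon signed-rank test on paired results of two algorithms. The paper does not state which variant was used, so the choices here are assumptions: zero differences are dropped, ties share their mean rank, and the p-value comes from the normal approximation without continuity or tie correction. The function names and sample data are hypothetical.

```python
import math


def mean_ranks(values):
    """Rank values ascending; tied values share their mean rank."""
    first_pos, count = {}, {}
    for pos, v in enumerate(sorted(values), start=1):
        first_pos.setdefault(v, pos)
        count[v] = count.get(v, 0) + 1
    return [first_pos[v] + (count[v] - 1) / 2 for v in values]


def wilcoxon_signed_rank(x, y):
    """Return (W, p) for paired samples x and y (two-sided, normal approximation)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = mean_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, p


# Illustrative paired results: algorithm A is better (lower) in every run.
results_a = [0.0] * 10
results_b = [float(i) for i in range(1, 11)]
w_stat, p_value = wilcoxon_signed_rank(results_a, results_b)
print(w_stat, p_value, p_value < 0.05)
```

A p-value below 0.05 is read, as in the text, as a significant difference between the two algorithms on that function.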

The results of this section show that MSINGO is the best in avoiding local optima among all algorithms.

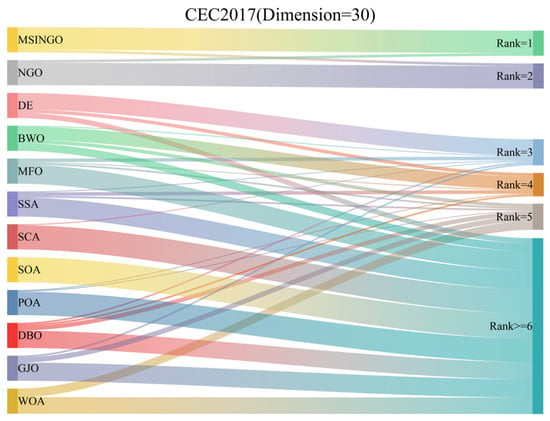

Figure 10 shows the Sankey ranking of the 12 algorithms, demonstrating that our proposed algorithm holds the first position on most test functions.

Figure 10.

The Sankey ranking of different algorithms on CEC2017 (dimension = 30).

Among all the functions, MSINGO and NGO have similar optimal values on seven functions (C5, C8, C9, C15, C6, C24, and C26), indicating that MSINGO’s advantage is not obvious on these seven functions. Meanwhile, the optimal values of MSINGO on functions C6, C7, and C18 are not as good as those of NGO. This shows that the strategies we designed are not optimal in every case, and some adjustments, such as parameter tuning, may be needed. MSINGO nevertheless has clear advantages on the other functions, so the strategies need to be evaluated from multiple aspects.

4.6. Scalability Analysis

In this section, to test the scalability of MSINGO, the dimension of the benchmark functions to be optimized (dim) is set to 100. For all algorithms, the population size is set to 30 and the maximum number of iterations is set to 500. Meanwhile, to reduce the influence of random factors, each algorithm is executed independently 30 times on each test function, and the Ave, Std, and Rank are calculated. The best result on each function is shown in bold. The results are shown in Table 14.

Table 14.

Comparison results of 12 algorithms on CEC2017 (dimension = 100).
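The protocol above (independent runs, then Ave, Std, and Rank) can be sketched in Python as follows. This is only an illustration under stated assumptions: `random_search` is a toy stand-in optimizer and not MSINGO, the sphere function stands in for a CEC2017 benchmark, Std is taken as the sample standard deviation, and ranking is by Ave. All names are hypothetical.

```python
import random
import statistics


def sphere(x):
    """Simple stand-in objective (not a CEC2017 function)."""
    return sum(v * v for v in x)


def random_search(objective, dim, pop_size, max_iter, rng, step):
    """Toy optimizer: perturb the best point and keep improvements."""
    best = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
    best_f = objective(best)
    for _ in range(max_iter):
        for _ in range(pop_size):
            cand = [v + rng.gauss(0.0, step) for v in best]
            f = objective(cand)
            if f < best_f:
                best, best_f = cand, f
    return best_f


def summarize(step_sizes, objective, dim, runs=30, pop_size=30, max_iter=500):
    """Return {name: {"Ave", "Std", "Rank"}} over independent seeded runs."""
    table = {}
    for name, step in step_sizes.items():
        finals = [
            random_search(objective, dim, pop_size, max_iter, random.Random(seed), step)
            for seed in range(runs)
        ]
        table[name] = {"Ave": statistics.mean(finals), "Std": statistics.stdev(finals)}
    for rank, name in enumerate(sorted(table, key=lambda n: table[n]["Ave"]), start=1):
        table[name]["Rank"] = rank
    return table


# Reduced budget so the sketch runs quickly; the paper uses 30 runs,
# population size 30, 500 iterations, and dim = 100.
demo = summarize({"small_step": 1.0, "large_step": 50.0}, sphere,
                 dim=5, runs=5, pop_size=5, max_iter=20)
for name, row in demo.items():
    print(name, row)
```

Seeding each run separately keeps the runs independent while making the summary reproducible.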

Among all the algorithms, MSINGO ranked first on 26 test functions (C1 to C5, C8, and C10 to C29), second on C7, fourth on C9, and fifth on C6.

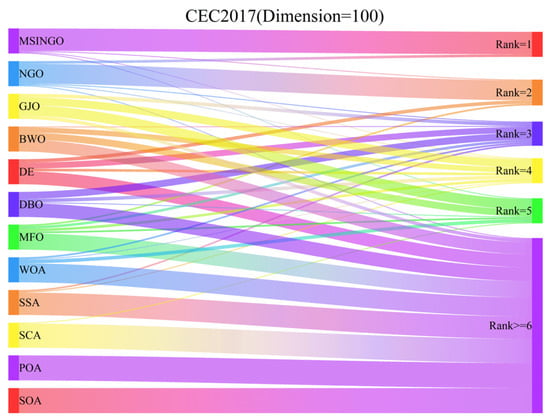

Figure 11 shows the Sankey ranking of the 12 algorithms on high-dimensional test functions, demonstrating that our proposed algorithm holds the first position on most test functions.

Figure 11.

The Sankey ranking of different algorithms on CEC2017 (dimension = 100).

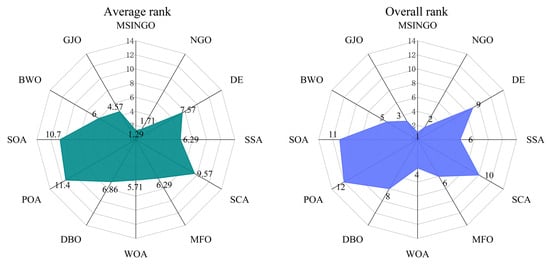

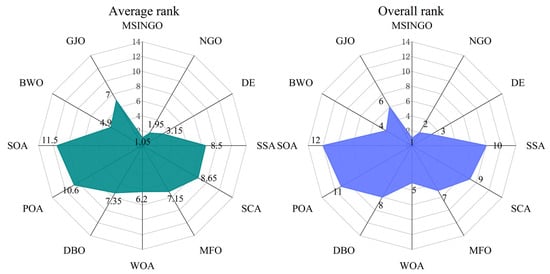

The results of the Friedman test on all 100-dimensional benchmark functions (C1–C29) are shown in Figure 12. It can be seen that MSINGO ranks first among all algorithms, indicating that MSINGO is the best of the 12 algorithms when dealing with high-dimensional problems.

Figure 12.

Radar maps of Friedman ranking of different algorithms on all functions.

4.7. Memory Occupation

We select one function from each category for this experiment: unimodal (C1), multimodal (C4), hybrid (C15), and composition (C27). The population size and the maximum number of iterations of all algorithms are set to 30 and 500, respectively, and the dimension to be optimized (dim) is set to 30. The results are shown in Table 15. The memory occupation of MSINGO is not the smallest, which indicates that the faster convergence of MSINGO comes at the cost of increased memory usage.

Table 15.

Memory usage of all algorithms.
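The text does not say how memory occupation was measured. As one possible approach, the Python sketch below uses the standard `tracemalloc` module to record the peak memory allocated while a function runs. The helper names and the `make_population` stand-in are hypothetical, and the numbers it produces are not comparable to those in Table 15.

```python
import tracemalloc


def peak_memory_kib(func, *args, **kwargs):
    """Run func and return (result, peak traced Python memory in KiB)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak_bytes / 1024.0


def make_population(pop_size, dim):
    """Hypothetical stand-in for the working set an optimizer allocates."""
    return [[0.0] * dim for _ in range(pop_size)]


# Same settings as the experiment: population size 30, dim = 30.
_, small_peak = peak_memory_kib(make_population, 30, 30)
# A larger working set, to show that the measurement grows with allocation.
_, large_peak = peak_memory_kib(make_population, 3000, 30)
print(small_peak, large_peak)
```

Wrapping each algorithm's full run in `peak_memory_kib` would give one figure per algorithm and function, which is the shape of comparison reported in Table 15.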

5. MSINGO for Engineering Optimization Problems

This section verifies the efficiency of MSINGO on real-world optimization applications using six practical engineering design problems: the tension/compression spring design problem (T/CSD) [], cantilever beam design problem (CBD) [], pressure vessel design problem (PVD) [], welded beam design problem (WBD) [], speed reducer design problem (SRD) [], and three-bar truss design problem (T-bTD) []. Parameter settings of MSINGO and the other 11 comparative metaheuristic algorithms in this section are identical to those in Section 4.2.

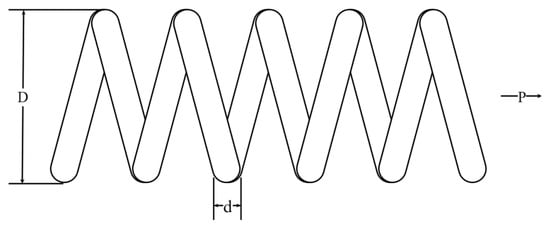

5.1. Tension/Compression Spring Design Problem (T/CSD)

The tension/compression spring design (T/CSD) is an optimization problem to minimize the weight of a tension/compression spring with constraints. Its schematic diagram is shown in Figure 13. There are three variables that require optimization: spring wire diameter, spring coil diameter, and the number of active coils. The mathematical formula of T/CSD is as follows:

Figure 13.

Schematic representation of T/CSD.
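The equations of this problem are not reproduced in the present text. As a hedged sketch, the Python below encodes the T/CSD formulation commonly used in the metaheuristics literature: a weight objective and four inequality constraints of the form g(x) ≤ 0. The constants, the variable names `d`, `D`, `N`, the reference point, and the static penalty are assumptions that should be checked against the cited source; the paper does not state how constraints were handled.

```python
def tcsd_weight(x):
    """Spring weight for x = (d, D, N): wire diameter, coil diameter, active coils."""
    d, D, N = x
    return (N + 2.0) * D * d ** 2


def tcsd_constraints(x):
    """Inequality constraints g_i(x) <= 0 of the commonly cited formulation."""
    d, D, N = x
    return [
        1.0 - (D ** 3 * N) / (71785.0 * d ** 4),
        (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,
        1.0 - 140.45 * d / (D ** 2 * N),
        (d + D) / 1.5 - 1.0,
    ]


def tcsd_penalized(x, penalty=1e6):
    """Static-penalty objective (an assumed constraint-handling scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in tcsd_constraints(x))
    return tcsd_weight(x) + penalty * violation


# A near-optimal design often reported in the literature (assumed reference point).
x_ref = (0.051689, 0.356718, 11.288966)
print(tcsd_weight(x_ref))
print(tcsd_constraints(x_ref))
```

An unconstrained optimizer such as MSINGO can then minimize `tcsd_penalized` within the usual bounds on the three variables.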

Table 16 presents the experimental results of MSINGO and 11 competitor algorithms in achieving the optimal solution for T/CSD. Based on these results, MSINGO ranks first among all the algorithms.

Table 16.

Optimization results for T/CSD.

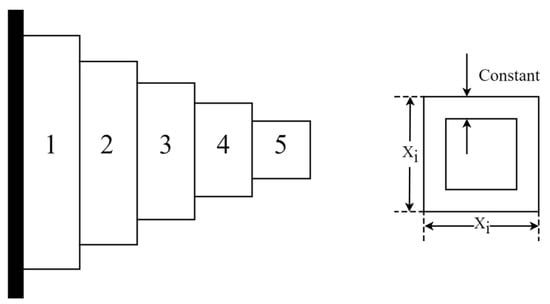

5.2. Cantilever Beam Design Problem (CBD)

The cantilever beam design (CBD) is an engineering optimization problem to minimize the beam’s weight while meeting the constraint conditions. Schematic representation of the CBD is shown in Figure 14. A cantilever beam consists of five hollow blocks, each of which is a hollow square section with a constant thickness. There are five variables to be optimized. The optimization problem of CBD can be defined as follows:

Figure 14.

Schematic representation of CBD.
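The CBD equations are likewise missing from this text. The short Python sketch below uses the formulation commonly cited in the literature, with a linear weight objective and a single inequality constraint g(x) ≤ 0. The coefficients and the reference point are assumptions to be verified against the cited source, and the function names are hypothetical.

```python
def cbd_weight(x):
    """Beam weight for the five section sizes x = (x1, ..., x5)."""
    return 0.0624 * sum(x)


def cbd_constraint(x):
    """Single inequality constraint g(x) <= 0 of the commonly cited formulation."""
    coefficients = (61.0, 37.0, 19.0, 7.0, 1.0)
    return sum(c / xi ** 3 for c, xi in zip(coefficients, x)) - 1.0


# A near-optimal design often reported in the literature (assumed reference point).
x_ref = (6.016, 5.309, 4.494, 3.502, 2.153)
print(cbd_weight(x_ref))
print(cbd_constraint(x_ref))
```

At the reference point the constraint is close to zero, which is what one expects of an active constraint at the optimum.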

Table 17 presents the optimal results. Based on Table 17, MSINGO outperforms all 11 competitor algorithms.

Table 17.

Optimization results for CBD.

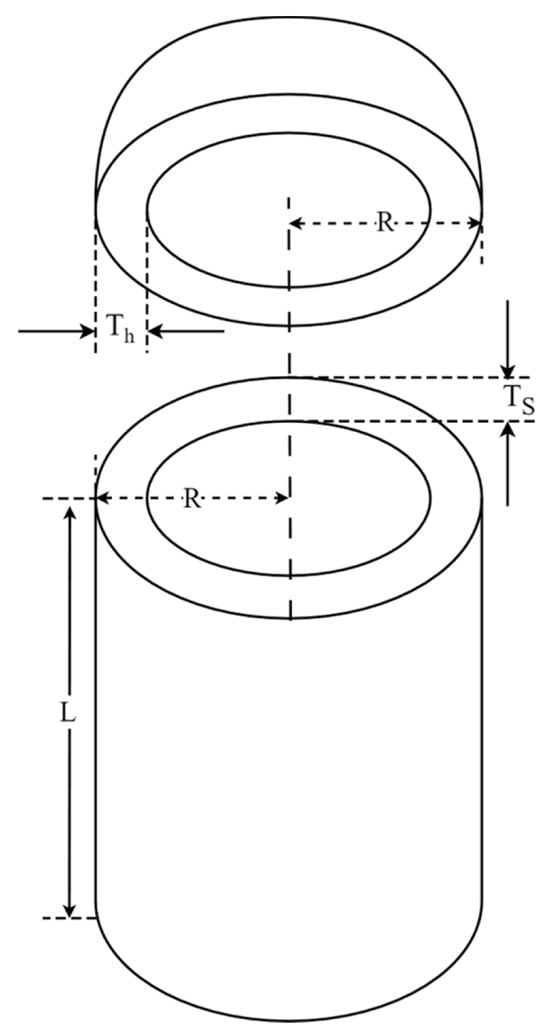

5.3. Pressure Vessel Design Problem (PVD)

The pressure vessel design (PVD) problem aims at minimizing total vessel cost while satisfying the constraint conditions, as illustrated in Figure 15. This problem contains four optimization variables: thickness of the shell, thickness of the head, inner radius, and length of the cylindrical section excluding the head. The mathematical formula of the PVD optimization problem is as follows:

Figure 15.

Schematic representation of PVD.
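Since the PVD equations do not survive in this text, the following Python sketch records the formulation commonly used in the literature: a cost objective over the four variables and four inequality constraints g(x) ≤ 0. The constants and the reference point are assumptions to be checked against the cited source, all four variables are treated as continuous for simplicity, and the names are hypothetical.

```python
import math


def pvd_cost(x):
    """Total cost for x = (ts, th, r, l): shell and head thickness, radius, length."""
    ts, th, r, l = x
    return (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)


def pvd_constraints(x):
    """Inequality constraints g_i(x) <= 0 of the commonly cited formulation."""
    ts, th, r, l = x
    return [
        -ts + 0.0193 * r,
        -th + 0.00954 * r,
        -math.pi * r ** 2 * l - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0,
        l - 240.0,
    ]


# A near-optimal design often reported in the literature (assumed reference point).
x_ref = (0.778169, 0.384649, 40.3196, 200.0)
print(pvd_cost(x_ref))
print(pvd_constraints(x_ref))
```

The first three constraints are nearly active at the reference point, so small rounding in the design variables can push them slightly above zero.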

Table 18 lists the optimal results of MSINGO and the competitor algorithms. MSINGO ranks second in solving the PVD problem, behind only DE.

Table 18.

Optimization results for PVD.

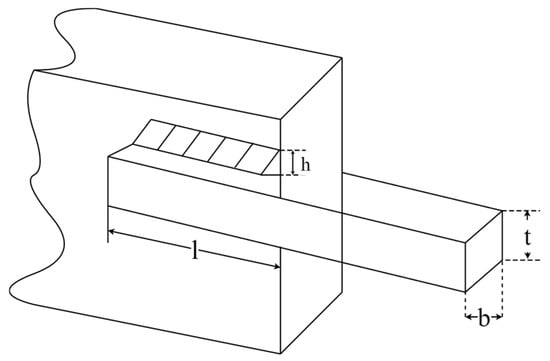

5.4. Welded Beam Design Problem (WBD)

The welded beam design (WBD) problem is to minimize the cost of a welded beam. Figure 16 shows a schematic representation of WBD, which has four optimization variables: weld thickness, clamping bar length, bar height, and bar thickness. The mathematical formula of the WBD optimization problem is as follows:

Figure 16.

Schematic representation of WBD.

The results of the WBD problem solved by MSINGO and the compared algorithms are summarized in Table 19, where MSINGO ranks first.

Table 19.

Optimization results for WBD.

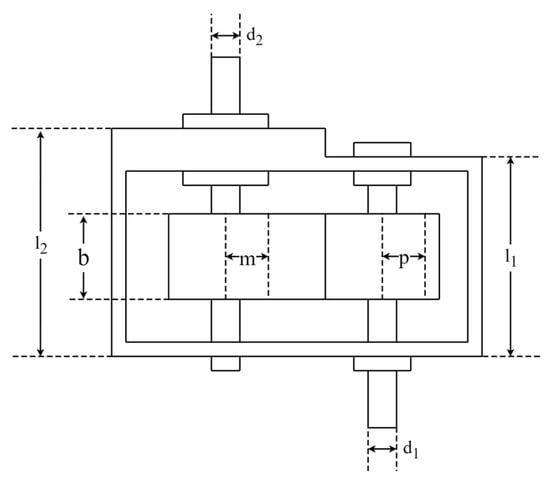

5.5. Speed Reducer Design Problem (SRD)

The speed reducer design (SRD) problem is an engineering optimization problem to minimize the weight of the reducer with constraints. A schematic representation of SRD is shown in Figure 17. It includes seven optimization variables: face width, module of teeth, pinion teeth count, length of the first shaft between bearings, length of the second shaft between bearings, diameter of the first shaft, and diameter of the second shaft. The mathematical formula of the SRD optimization problem is as follows:

Figure 17.

Schematic representation of SRD.

Table 20 displays the optimization results for SRD. MSINGO ranks first along with NGO, DE, and BWO.

Table 20.

Optimization results for SRD.

5.6. Three-Bar Truss Design Problem (T-bTD)

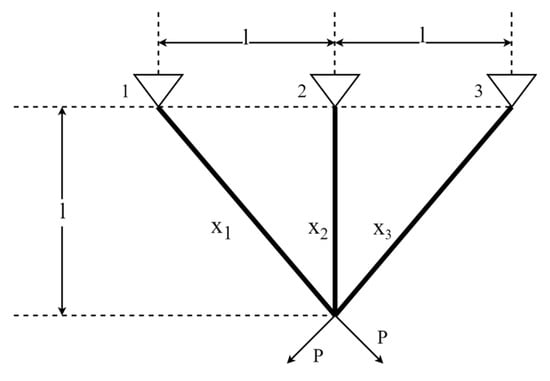

The three-bar truss design (T-bTD) problem is an optimization problem in civil engineering where the objective is to minimize the volume of the three-bar truss. The schematic representation of T-bTD is shown in Figure 18. It has two optimization variables. The mathematical formula of T-bTD is defined as follows:

Figure 18.

Schematic representation of T-bTD.
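The T-bTD equations are also absent from this text. The Python sketch below follows the formulation commonly cited in the literature, in which the two variables are the cross-sectional areas of the bars and three stress constraints g(x) ≤ 0 apply. The constants (bar length 100, load 2, allowable stress 2), the variable names, and the reference point are assumptions to be checked against the cited source.

```python
import math

BAR_LENGTH = 100.0  # assumed length l
LOAD = 2.0          # assumed load P
STRESS_MAX = 2.0    # assumed allowable stress sigma


def tbtd_volume(x):
    """Truss volume for cross-sectional areas x = (a1, a2)."""
    a1, a2 = x
    return (2.0 * math.sqrt(2.0) * a1 + a2) * BAR_LENGTH


def tbtd_constraints(x):
    """Stress constraints g_i(x) <= 0 of the commonly cited formulation."""
    a1, a2 = x
    denom = math.sqrt(2.0) * a1 ** 2 + 2.0 * a1 * a2
    return [
        (math.sqrt(2.0) * a1 + a2) / denom * LOAD - STRESS_MAX,
        a2 / denom * LOAD - STRESS_MAX,
        1.0 / (math.sqrt(2.0) * a2 + a1) * LOAD - STRESS_MAX,
    ]


# A near-optimal design often reported in the literature (assumed reference point).
x_ref = (0.788675, 0.408248)
print(tbtd_volume(x_ref))
print(tbtd_constraints(x_ref))
```

Only the first constraint is close to active at the reference point; the other two are satisfied with a clear margin.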

Table 21 presents the experimental results. MSINGO ranks second, behind DE.

Table 21.

Optimization results for T-bTD.

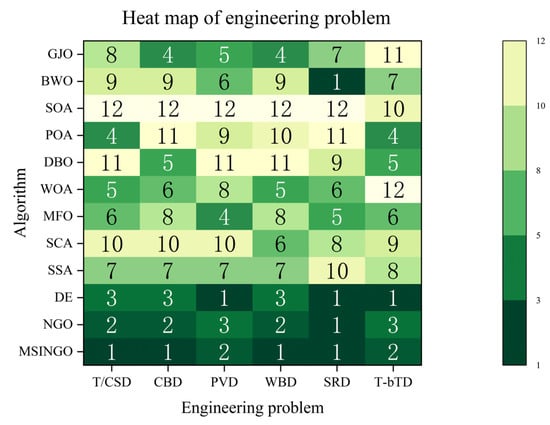

Figure 19 shows a heat map of the 12 algorithms on the six engineering applications. MSINGO has the lowest overall rank value (lower is better), indicating that MSINGO performs very well in solving engineering problems.

Figure 19.

The heat map of different algorithms on 6 engineering problems.

Different engineering applications have different conditions, so no single algorithm is applicable to all engineering applications. In most cases, MSINGO outperforms the other algorithms, but it performs worse than DE on the pressure vessel design problem (PVD) and the three-bar truss design problem (T-bTD). Therefore, for different practical problems, algorithm improvement also requires targeted analysis.

6. Conclusions and Future Work

In this paper, we propose a multi-strategy improved northern goshawk optimization algorithm named MSINGO. Firstly, cubic mapping is applied in the population initialization to improve the population diversity of the algorithm. Secondly, weighted stochastic difference mutation is added in the exploration phase to help the algorithm jump out of local optima. Finally, the weighted sine and cosine optimization strategy replaces the original exploitation formula to enhance the convergence speed of MSINGO. We analyze the impact of the three strategies on NGO and verify the effectiveness of the proposed algorithm. Then, MSINGO is compared with 11 well-known algorithms on the CEC2017 test functions. The comparative experiments cover exploitation ability, exploration ability, local optimal avoidance ability, and scalability. The results show that in the vast majority of cases, MSINGO’s exploitation ability, exploration ability, local optimal avoidance ability, and scalability are superior to those of competitive algorithms. Additionally, the application of MSINGO to six engineering design optimization problems demonstrates its high capability in real-world optimization problems.

In the future, we will verify our algorithm on large datasets and study its performance on tasks such as parameter selection for a neural network model. We will also use hybrid methods to validate the performance of the algorithm, such as combining it with faster gradient-based methods.

Author Contributions

H.L.: Methodology, Writing—Review and Editing; J.X.: Software, Writing—Original draft; Y.Y.: Resources; S.Z.: Methodology; Y.C.: Methodology; R.Z.: Supervision; Y.M.: Supervision; M.W.: Resources; K.Z.: Resources. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hebei Province (D2023512004).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Inquiries about data availability should be directed to the author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Phan, H.D.; Ellis, K.; Barca, J.C.; Dorin, A. A Survey of Dynamic Parameter Setting Methods for Nature-Inspired Swarm Intelligence Algorithms. Neural Comput. Appl. 2020, 32, 567–588.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced Whale Optimization Algorithm for Medical Feature Selection: A COVID-19 Case Study. Comput. Biol. Med. 2022, 148, 105858.

- Guo, X.; Hu, J.; Yu, H.; Wang, M.; Yang, B. A New Population Initialization of Metaheuristic Algorithms Based on Hybrid Fuzzy Rough Set for High-Dimensional Gene Data Feature Selection. Comput. Biol. Med. 2023, 166, 107538.

- Wang, X.; Choi, T.-M.; Liu, H.; Yue, X. A Novel Hybrid Ant Colony Optimization Algorithm for Emergency Transportation Problems During Post-Disaster Scenarios. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 545–556.

- Beheshtinia, M.A.; Jozi, A.; Fathi, M. Optimizing Disaster Relief Goods Distribution and Transportation: A Mathematical Model and Metaheuristic Algorithms. Appl. Math. Sci. Eng. 2023, 31, 2252980.

- Shen, Y.; Zhang, C.; Soleimanian Gharehchopogh, F.; Mirjalili, S. An Improved Whale Optimization Algorithm Based on Multi-Population Evolution for Global Optimization and Engineering Design Problems. Expert Syst. Appl. 2023, 215, 119269.

- Jiadong, Q.; Ohl, J.P.; Tran, T.-T. Predicting Clay Compressibility for Foundation Design with High Reliability and Safety: A Geotechnical Engineering Perspective Using Artificial Neural Network and Five Metaheuristic Algorithms. Reliab. Eng. Syst. Saf. 2024, 243, 109827.

- Lin, W.-Y. A Novel 3D Fruit Fly Optimization Algorithm and Its Applications in Economics. Neural Comput. Appl. 2016, 27, 1391–1413.

- Ewees, A.A.; Mostafa, R.R.; Ghoniem, R.M.; Gaheen, M.A. Improved Seagull Optimization Algorithm Using Lévy Flight and Mutation Operator for Feature Selection. Neural Comput. Appl. 2022, 34, 7437–7472.

- Dutta, D.; Rath, S. Innovative Hybrid Metaheuristic Algorithms: Exponential Mutation and Dual-Swarm Strategy for Hybrid Feature Selection Problem. Int. J. Inf. Technol. 2024, 16, 77–89.

- Yildirim, S.; Kaya, Y.; Kılıç, F. A Modified Feature Selection Method Based on Metaheuristic Algorithms for Speech Emotion Recognition. Appl. Acoust. 2021, 173, 107721.

- Nasruddin; Sholahudin; Satrio, P.; Mahlia, T.M.I.; Giannetti, N.; Saito, K. Optimization of HVAC System Energy Consumption in a Building Using Artificial Neural Network and Multi-Objective Genetic Algorithm. Sustain. Energy Technol. Assess. 2019, 35, 48–57.

- Eker, E.; Kayri, M.; Ekinci, S.; İzci, D. Comparison of Swarm-Based Metaheuristic and Gradient Descent-Based Algorithms in Artificial Neural Network Training. Adv. Distrib. Comput. Artif. Intell. J. 2023, 12, e29969.

- Mirsaeidi, S.; Shang, F.; Ghaffari, K.; He, J.; Said, D.M.; Muttaqi, K.M. An Artificial Neural Network Based Strategy for Commutation Failure Forecasting in LCC-HVDC Transmission Networks. In Proceedings of the 2023 IEEE International Conference on Energy Technologies for Future Grids (ETFG), Wollongong, Australia, 3 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Zheng, R.; Hussien, A.G.; Qaddoura, R.; Jia, H.; Abualigah, L.; Wang, S.; Saber, A. A Multi-Strategy Enhanced African Vultures Optimization Algorithm for Global Optimization Problems. J. Comput. Des. Eng. 2023, 10, 329–356.

- Hochreiter, S.; Younger, A.S.; Conwell, P.R. Learning to Learn Using Gradient Descent. In Proceedings of the Artificial Neural Networks—ICANN 2001, Vienna, Austria, 21–25 August 2001; Dorffner, G., Bischof, H., Hornik, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 87–94.

- Polyak, B.T. Newton’s Method and Its Use in Optimization. Eur. J. Oper. Res. 2007, 181, 1086–1096.

- Fletcher, R. Function Minimization by Conjugate Gradients. Comput. J. 1964, 7, 149–154.

- Zhong, C.; Li, G.; Meng, Z. Beluga Whale Optimization: A Novel Nature-Inspired Metaheuristic Algorithm. Knowl.-Based Syst. 2022, 251, 109215.

- Sahoo, S.K.; Saha, A.K.; Nama, S.; Masdari, M. An Improved Moth Flame Optimization Algorithm Based on Modified Dynamic Opposite Learning Strategy. Artif. Intell. Rev. 2023, 56, 2811–2869.

- Mirjalili, S. Moth-Flame Optimization Algorithm: A Novel Nature-Inspired Heuristic Paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Holland, J.H. Genetic Algorithms. Sci. Am. 1992, 267, 66–73.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- Yao, X.; Liu, Y.; Lin, G. Evolutionary Programming Made Faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

- Koza, J.R. Genetic Programming as a Means for Programming Computers by Natural Selection. Stat. Comput. 1994, 4, 87–112.

- Simon, D. Biogeography-Based Optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

- Moscato, P.; Cotta Porras, C. An Introduction to Memetic Algorithms. Int. Artif. 2003, 7, 360.

- Atashpaz-Gargari, E.; Lucas, C. Imperialist Competitive Algorithm: An Algorithm for Optimization Inspired by Imperialistic Competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 4661–4667.

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 4, pp. 1942–1948.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant Colony Optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Yang, X.-S.; Deb, S. Cuckoo Search via Lévy Flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 210–214.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Xue, J.; Shen, B. A Novel Swarm Intelligence Optimization Approach: Sparrow Search Algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Xue, J.; Shen, B. Dung Beetle Optimizer: A New Meta-Heuristic Algorithm for Global Optimization. J. Supercomput. 2023, 79, 7305–7336.

- Połap, D.; Woźniak, M. Red Fox Optimization Algorithm. Expert Syst. Appl. 2021, 166, 114107.

- Zhao, S.; Zhang, T.; Ma, S.; Wang, M. Sea-Horse Optimizer: A Novel Nature-Inspired Meta-Heuristic for Global Optimization Problems. Appl. Intell. 2023, 53, 11833–11860.

- Dehghani, M.; Montazeri, Z.; Trojovská, E.; Trojovský, P. Coati Optimization Algorithm: A New Bio-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2023, 259, 110011.

- Abdel-Basset, M.; Mohamed, R.; Jameel, M.; Abouhawwash, M. Spider Wasp Optimizer: A Novel Meta-Heuristic Optimization Algorithm. Artif. Intell. Rev. 2023, 56, 11675–11738.

- Zhang, W.; Zhao, J.; Liu, H.; Tu, L. Cleaner Fish Optimization Algorithm: A New Bio-Inspired Meta-Heuristic Optimization Algorithm. J. Supercomput. 2024, 80, 17338–17376.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Alatas, B. ACROA: Artificial Chemical Reaction Optimization Algorithm for Global Optimization. Expert Syst. Appl. 2011, 38, 13170–13180.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Kaveh, A.; Dadras, A. A Novel Meta-Heuristic Optimization Algorithm: Thermal Exchange Optimization. Adv. Eng. Softw. 2017, 110, 69–84.

- Abdel-Basset, M.; Mohamed, R.; Azeem, S.A.A.; Jameel, M.; Abouhawwash, M. Kepler Optimization Algorithm: A New Metaheuristic Algorithm Inspired by Kepler’s Laws of Planetary Motion. Knowl.-Based Syst. 2023, 268, 110454.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–Learning-Based Optimization: A Novel Method for Constrained Mechanical Design Optimization Problems. Comput.-Aided Des. 2011, 43, 303–315.

- Kuo, H.C.; Lin, C.H. Cultural Evolution Algorithm for Global Optimizations and Its Applications. J. Appl. Res. Technol. 2013, 11, 510–522.

- Liu, Z.; Qin, J.; Peng, W.; Chao, H. Effective Task Scheduling in Cloud Computing Based on Improved Social Learning Optimization Algorithm. Int. J. Online Eng. 2017, 13, 4.

- Kumar, M.; Kulkarni, A.J.; Satapathy, S.C. Socio Evolution & Learning Optimization Algorithm: A Socio-Inspired Optimization Methodology. Future Gener. Comput. Syst. 2018, 81, 252–272.

- Moghdani, R.; Salimifard, K. Volleyball Premier League Algorithm. Appl. Soft Comput. 2018, 64, 161–185.

- Dhiman, G.; Kumar, V. Seagull Optimization Algorithm: Theory and Its Applications for Large-Scale Industrial Engineering Problems. Knowl.-Based Syst. 2019, 165, 169–196.

- Dehghani, M.; Hubalovsky, S.; Trojovsky, P. Northern Goshawk Optimization: A New Swarm-Based Algorithm for Solving Optimization Problems. IEEE Access 2021, 9, 162059–162080.

- Trojovský, P.; Dehghani, M. Pelican Optimization Algorithm: A Novel Nature-Inspired Algorithm for Engineering Applications. Sensors 2022, 22, 855.

- Chopra, N.; Mohsin Ansari, M. Golden Jackal Optimization: A Novel Nature-Inspired Optimizer for Engineering Applications. Expert Syst. Appl. 2022, 198, 116924.

- Tayarani-N, M.H.; Akbarzadeh-T, M.R. Magnetic Optimization Algorithms a New Synthesis. In Proceedings of the 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 2659–2664.

- Shareef, H.; Ibrahim, A.A.; Mutlag, A.H. Lightning Search Algorithm. Appl. Soft Comput. 2015, 36, 315–333.

- Tanyildizi, E.; Demir, G. Golden Sine Algorithm: A Novel Math-Inspired Algorithm. Adv. Electr. Comput. Eng. 2017, 17, 71–78.

- Emami, H.; Derakhshan, F. Election Algorithm: A New Socio-Politically Inspired Strategy. AI Commun. 2015, 28, 591–603.

- Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Chang, T.; Ge, Y.; Lin, Q.; Wang, Y.; Chen, R.; Wang, J. Optimal Configuration of Hybrid Energy Storage Capacity Based on Northern Goshawk Optimization. In Proceedings of the 2023 35th Chinese Control and Decision Conference (CCDC), Yichang, China, 20 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 301–306.

- El-Dabah, M.A.; El-Sehiemy, R.A.; Hasanien, H.M.; Saad, B. Photovoltaic Model Parameters Identification Using Northern Goshawk Optimization Algorithm. Energy 2023, 262, 125522.

- Wu, X.; He, L.; Wu, G.; Liu, B.; Song, D. Optimizing CNN-LSTM Model for Short-Term PV Power Prediction Using Northern Goshawk Optimization. In Proceedings of the 2023 6th International Conference on Power and Energy Applications (ICPEA), Weihai, China, 24 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 248–252.

- Deng, H.; Liu, L.; Fang, J.; Qu, B.; Huang, Q. A Novel Improved Whale Optimization Algorithm for Optimization Problems with Multi-Strategy and Hybrid Algorithm. Math. Comput. Simul. 2023, 205, 794–817.

- Fan, J.; Li, Y.; Wang, T. An Improved African Vultures Optimization Algorithm Based on Tent Chaotic Mapping and Time-Varying Mechanism. PLoS ONE 2021, 16, e0260725.

- Rogers, T.D.; Whitley, D.C. Chaos in the Cubic Mapping. Math. Model. 1983, 4, 9–25.

- Chechkin, A.V.; Metzler, R.; Klafter, J.; Gonchar, V.Y. Introduction to the Theory of Lévy Flights. In Anomalous Transport; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2008; pp. 129–162. ISBN 978-3-527-62297-9.

- Belegundu, A.D.; Arora, J.S. A Study of Mathematical Programming Methods for Structural Optimization. Part II: Numerical Results. Int. J. Numer. Methods Eng. 1985, 21, 1601–1623.

- Chickermane, H.; Gea, H.C. Structural Optimization Using a New Local Approximation Method. Int. J. Numer. Methods Eng. 1996, 39, 829–846.

- Ghasemi, M.; Golalipour, K.; Zare, M.; Mirjalili, S.; Trojovský, P.; Abualigah, L.; Hemmati, R. Flood Algorithm (FLA): An Efficient Inspired Meta-Heuristic for Engineering Optimization. J. Supercomput. 2024, 80, 22913–23017.

- Coello Coello, C.A. Use of a Self-Adaptive Penalty Approach for Engineering Optimization Problems. Comput. Ind. 2000, 41, 113–127.

- Mezura-Montes, E.; Coello, C.A.C. Useful Infeasible Solutions in Engineering Optimization with Evolutionary Algorithms. In Proceedings of the MICAI 2005: Advances in Artificial Intelligence, Monterrey, Mexico, 14–18 November 2005; Gelbukh, A., de Albornoz, Á., Terashima-Marín, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 652–662.

- Ray, T.; Saini, P. Engineering Design Optimization Using a Swarm with an Intelligent Information Sharing among Individuals. Eng. Optim. 2001, 33, 735–748.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).